Summary

Background

Eliciting knowledge from geographically dispersed experts given their time and scheduling constraints, while maintaining anonymity among them, presents multiple challenges.

Objectives

Describe an innovative, Internet-based method to acquire knowledge from experts regarding patients who need post-acute referrals. Compare 1) the percentage of patients referred by experts with the percentage actually referred by hospital clinicians, 2) experts’ referral decisions by discipline and geographic region, and 3) the most common factors deemed important, by discipline.

Methods

De-identified case studies developed from electronic health records (EHR) contained a comprehensive description of 1,496 acute care inpatients. Working in teams of three, physicians, nurses, social workers, and physical therapists reviewed the case studies and assessed the need for post-acute care referral; Delphi rounds followed when team members did not agree. Generalized estimating equations (GEEs) compared experts’ decisions by discipline and region of the country, and with the decisions made by study hospital clinicians, adjusting for repeated observations from each expert and case. Frequencies identified the case characteristics most commonly chosen as important by the experts.

Results

The experts recommended referral for 80% of the cases; the patients’ actual discharge dispositions showed referrals for 67%. Experts from the Northeast and Midwest referred about 5% more cases than experts from the West. Physicians and nurses referred patients at similar rates, and both referred more often than social workers. The factors identified as important to the decision differed by discipline.

Conclusion

The method for eliciting expert knowledge enabled nationally dispersed expert clinicians to anonymously review case summaries and make decisions about post-acute care referrals. Having time and a comprehensive case summary may have helped the experts identify more patients in need of post-acute care than the hospital clinicians did. The methodology produced the data needed to develop an expert decision support system for discharge planning.

Keywords: Knowledge elicitation, decision support, discharge planning, nursing informatics, Delphi rounds

1. Introduction and background

Healthcare operations increasingly depend on knowledge-intensive processes organized and operated by interdisciplinary teams. Discharge planning and post-acute referral decision making is one such process, often conducted by nurse or social work case managers in collaboration with physicians, physical therapists, patients, and their caregivers. These busy, practicing clinicians often have neither the comprehensive information needed to make optimal decisions nor the time to synthesize and deliberate on each clinical detail [1]. With the growing adoption of health information technology, clinical decision support systems based on expert knowledge have become a common way to support knowledge-intensive processes. To build such systems, the knowledge of expert clinicians is often used to construct and parameterize their contents, and several methodologies for expert knowledge elicitation have emerged over the last several decades [2].

Knowledge elicitation is one of the most important steps in constructing expert-based decision support systems because it directly affects the overall quality of the system [2]. In general, knowledge elicitation is the process of acquiring information about a specific domain. It is often the first step in knowledge acquisition, in which knowledge is transformed from the forms in which it is available in the world into forms that a knowledge system can use [3]. In healthcare, a growing body of literature addresses the theoretical and methodological aspects of knowledge elicitation [4–7].

Multiple expert knowledge elicitation methods exist, including direct observation, structured and unstructured interviews, and think-aloud protocols [11]. These techniques were not appropriate for our study, because interactive knowledge elicitation with a large number of geographically dispersed, multidisciplinary experts was not feasible given distance and scheduling difficulties [8–10]. Our methods were, however, similar to other established methods such as direct sorting or picking from a list of attributes, as described by Bech-Larsen and Nielsen [12]. Using a web-based platform, we presented attributes for the experts to choose from, capturing what was important to their decisions regardless of where they were located.

2. Objective

The purpose of this paper is to describe and share the results of a four-step, innovative, Internet-based knowledge elicitation method used in our parent study to acquire interprofessional experts’ knowledge about which patients need post-acute referral and what kind of services they need (home care, inpatient rehabilitation, skilled nursing facility, nursing home, or hospice). We compare the percentage of patients referred by experts after case study review with the percentage of patients actually referred by hospital clinicians. We also compare the experts’ decisions by discipline and region of the country, and the factors deemed important by each discipline. The parent study used the results of this methodology to build a decision support system for acute care discharge planning.

3. Methods

3.1 The data sources

Six hospitals within four health systems, all using the same brand of EHR, supplied the patient data for this methodology. One site comprised three hospitals within one urban, academic health system in the Northeast; the other three were regional and rural hospitals in the Northeast and Midwest of the United States. A variety of hospital types was chosen to increase the generalizability of the findings. Most of the data were collected from the nursing admission assessment and the remainder from a snapshot of the final nursing documentation before discharge. To create a uniform dataset, the data elements of interest were chosen from the EHR based on the domains of the Orem Self-Care Deficit Theory, because, according to Orem, patients with self-care deficits in these domains need nursing care, and we were building decision support to determine the need for post-acute care [13]. Major domains of the theory include, for example, socio-demographics (age, race, gender, education level), health state, self-care actions one needs to perform, available resources, and environment. The content of the case studies was organized under headings based on these concepts. For example, the health domain contained the medical diagnosis, co-morbid conditions, self-rated health, medications, and wounds, and the resources domain contained caregiver availability, willingness, and ability. The Orem framework was used successfully in our prior work [14].

The Principal Investigator (PI) and Project Manager, along with the hospital nurse informatics leaders, educated the hospital nurses about the study either in person or with voice-over PowerPoint slide shows. The nurses learned about the purpose and goals of the parent study, which EHR data elements would be used, and the importance of accurate, complete documentation during the study period. The data collection period at each site varied with the size of the hospital and the time it took to accumulate approximately 1,000 patients per site. Data collection occurred between October 10, 2011 and July 31, 2012.

Data analysts at each hospital obtained the data for eligible patients from the hospital databases, removed personal identifiers (e.g., names, medical record numbers), and sent the data to the research team via a secure web-based service. Eligible cases were patients aged 55 and older who were discharged alive after a length of stay of at least 48 hours during the hospital-specific data collection period.
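The eligibility rules above can be expressed as a simple filter. The sketch below is hypothetical: the `Encounter` record and its field names are illustrative rather than the study's extract format, and it assumes the collection-period check applies to the discharge date.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Encounter:
    """Hypothetical de-identified encounter; field names are illustrative."""
    age: int
    discharged_alive: bool
    length_of_stay_hours: float
    discharge_date: date


def is_eligible(enc: Encounter, period_start: date, period_end: date) -> bool:
    """Apply the stated criteria: age 55+, discharged alive, stay of at least
    48 hours, and (assumed) discharge within the site's collection period."""
    return (
        enc.age >= 55
        and enc.discharged_alive
        and enc.length_of_stay_hours >= 48
        and period_start <= enc.discharge_date <= period_end
    )


if __name__ == "__main__":
    start, end = date(2011, 10, 10), date(2012, 7, 31)
    candidates = [
        Encounter(72, True, 96, date(2012, 1, 5)),    # eligible
        Encounter(50, True, 96, date(2012, 1, 5)),    # younger than 55
        Encounter(80, True, 30, date(2012, 1, 5)),    # stay shorter than 48 hours
        Encounter(66, False, 120, date(2012, 1, 5)),  # not discharged alive
    ]
    print(sum(is_eligible(e, start, end) for e in candidates))  # 1
```

Because each site had its own collection period, the period bounds are parameters rather than constants.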

The research team completed a rigorous data cleaning process that addressed out-of-range and missing values and ensured that the data were uniformly coded so that data from all six hospitals could be merged [15]. From the final cleaned and merged dataset of 5,333 cases, the statistician drew a stratified random sample of 1,496 cases. Stratification balanced the distribution of primary diagnoses to reflect the 16 most frequently reported primary diagnoses nationally, and the resulting sample size met the power analysis requirements of the parent study. The data from this sample populated the case summaries described below.
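One way to draw a sample whose primary-diagnosis mix matches target shares is stratified random sampling with proportional allocation. The sketch below assumes the target shares are supplied as input (the study's national diagnosis shares are not reproduced here) and uses a largest-remainder rule so per-stratum counts sum to the requested size; it illustrates the technique and is not the statistician's actual procedure.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def stratified_sample(cases: List[Tuple[str, str]],
                      target_shares: Dict[str, float],
                      n: int, seed: int = 0) -> List[str]:
    """Sample case IDs so that primary diagnoses follow target_shares.

    cases: (case_id, primary_diagnosis) pairs.
    target_shares: diagnosis -> desired share of the sample (summing to 1).
    """
    rng = random.Random(seed)
    strata: Dict[str, List[str]] = defaultdict(list)
    for case_id, dx in cases:
        strata[dx].append(case_id)

    # Proportional allocation with largest remainders so counts sum to n.
    raw = {dx: share * n for dx, share in target_shares.items()}
    alloc = {dx: int(value) for dx, value in raw.items()}
    leftover = n - sum(alloc.values())
    by_remainder = sorted(raw, key=lambda dx: raw[dx] - alloc[dx], reverse=True)
    for dx in by_remainder[:leftover]:
        alloc[dx] += 1

    sample: List[str] = []
    for dx, k in alloc.items():
        pool = strata.get(dx, [])
        # A stratum smaller than its allocation contributes all of its cases.
        sample.extend(rng.sample(pool, min(k, len(pool))))
    return sample


if __name__ == "__main__":
    # Hypothetical pool: three diagnoses with 100 cases each.
    pool = [(f"{dx}-{i}", dx) for dx in ("heart", "urinary", "fracture")
            for i in range(100)]
    shares = {"heart": 0.5, "urinary": 0.3, "fracture": 0.2}
    print(len(stratified_sample(pool, shares, 10, seed=1)))  # 10
```

Fixing the seed makes the draw reproducible, which matters when the same sample must later be traced back to the source records.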

The research team (the PI and trained junior and senior nursing student research assistants) reviewed every case summary for accuracy and consistency [15]. The case summaries, organized by the Orem Self-Care Deficit domains, contained socio-demographic, medical, emotional, mental, social, physical, financial, environmental, and social support patient specific data. Each case study was approximately four pages long.

3.2 The web platform for the knowledge elicitation

A contracted vendor built a web-based application with a MySQL database to display clinical case summaries of hospitalized patients and capture study data for later analysis. The application took five months to specify, build, and test, and cost approximately $30,000. The secure system resided in a password-protected hosting environment behind an encrypted connection, with logins unique to each expert, and was accessible from desktop or tablet devices.
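The text does not describe the database layout, so the following is a hypothetical sketch of tables that could hold experts, case summaries, per-round decisions, and selected factors. It uses Python's built-in `sqlite3` module as a stand-in for MySQL so that it runs without a server; all table and column names are assumptions.

```python
import sqlite3

# Hypothetical schema; sqlite3 stands in for the MySQL database described in the text.
SCHEMA = """
CREATE TABLE expert (
    expert_id    INTEGER PRIMARY KEY,
    discipline   TEXT NOT NULL,    -- medicine, nursing, physical therapy, social work
    region       TEXT NOT NULL,    -- Northeast, Midwest, South, West
    team_id      INTEGER NOT NULL
);
CREATE TABLE case_summary (
    case_id      INTEGER PRIMARY KEY,
    team_id      INTEGER NOT NULL  -- team assigned to review this case
);
CREATE TABLE decision (
    expert_id    INTEGER NOT NULL REFERENCES expert(expert_id),
    case_id      INTEGER NOT NULL REFERENCES case_summary(case_id),
    review_round INTEGER NOT NULL, -- 0 = independent review, 1 or 2 = Delphi round
    refer        INTEGER NOT NULL, -- 1 = refer, 0 = do not refer
    site         TEXT,             -- site of care; NULL when refer = 0
    comment      TEXT,             -- explanatory comment written in Delphi rounds
    PRIMARY KEY (expert_id, case_id, review_round)
);
CREATE TABLE selected_factor (
    expert_id    INTEGER NOT NULL,
    case_id      INTEGER NOT NULL,
    review_round INTEGER NOT NULL,
    factor       TEXT NOT NULL,    -- case characteristic marked as important
    PRIMARY KEY (expert_id, case_id, review_round, factor)
);
"""


def create_db(path: str = ":memory:") -> sqlite3.Connection:
    """Create the hypothetical tables in a new SQLite database."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


if __name__ == "__main__":
    conn = create_db()
    conn.execute("INSERT INTO expert VALUES (1, 'nursing', 'Midwest', 7)")
    conn.execute("INSERT INTO case_summary VALUES (101, 7)")
    conn.execute("INSERT INTO decision VALUES (1, 101, 0, 1, 'home care', NULL)")
    conn.executemany(
        "INSERT INTO selected_factor VALUES (1, 101, 0, ?)",
        [("caregiver availability",), ("fall risk",)],
    )
    count = conn.execute(
        "SELECT COUNT(*) FROM selected_factor WHERE case_id = 101").fetchone()[0]
    print(count)  # 2
```

Keying decisions by expert, case, and round keeps the independent review and each Delphi vote as separate rows, which is the shape later analyses need.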

The team completed usability testing of the web-based application with six nursing research assistants assigned to teams of three to simulate the experts’ experience. This exercise showed that the web pages were intuitive, easy to navigate, and readable, that system response time was excellent, and that the decisions and supporting characteristics were successfully captured in the database. Navigating the site helped us refine our user instructions and fine-tune some of the content. The research assistants found a few minor problems, with scrolling on one page and with sorting by a header, which were fixed.

3.3 The experts

Physicians, and master’s or doctorally prepared nurses, social workers, and physical therapists, each with at least five years of recent experience in discharge planning, transitional care, gerontology, and/or post-acute care, served as experts. They identified the characteristics of patients in need of post-acute care referral and the optimal site of care from a list of the five major types of post-acute care (skilled home care, inpatient rehabilitation, skilled nursing facility, nursing home, and hospice). We recruited a nationwide sample of 325 qualified, multidisciplinary clinical experts through professional networking, snowball sampling, and personal contact via email. We sought experts in four regions of the United States (Northeast, Midwest, West, and South) to balance any potential bias due to geographic availability of post-acute care or regional conventions. All 325 experts were invited to judge 10 practice cases to familiarize themselves with the website navigation and study procedures. The statistician examined the results of these 10 cases for the 251 experts who completed them and found no outliers or other indications that experts were not following directions or were making judgments out of line with others; no experts were eliminated as outliers.

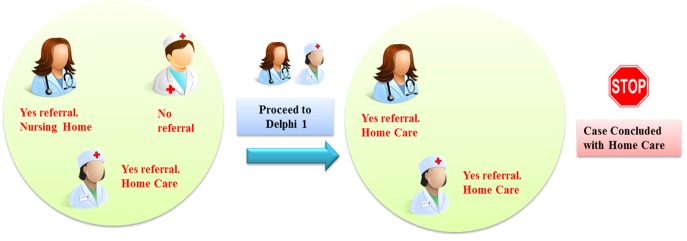

Stratified by geographic region and discipline (doctor, nurse, physical therapist, social worker), the statistician randomly assigned 171 experts to teams of three to review and judge approximately 13–30 unique case summaries each. To represent the disciplines routinely involved in making post-acute care referral decisions, each team included a representative from nursing and/or medicine as well as representation by a physical therapist and/or a social worker (► Figure 1). Further, each team of three had no overlap in geographic region. A balanced incomplete Latin square design was used to ensure each combination of expert discipline / geographic location was represented as equally as possible [16]. Experts were contracted as independent consultants and were paid $20 per completed case plus $10 per case for each Delphi review.

Fig. 1.

Expert Team Composition
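The composition rules for a team of three can be checked mechanically. The sketch below validates a proposed team against the stated rules (at least one nursing or medicine member, at least one physical therapy or social work member, and three distinct regions). The `Expert` type and discipline labels are hypothetical, and the code does not reproduce the balanced incomplete Latin square used to generate the assignments.

```python
from dataclasses import dataclass
from typing import Sequence

CLINICAL = {"medicine", "nursing"}
SUPPORT = {"physical therapy", "social work"}


@dataclass(frozen=True)
class Expert:
    """Hypothetical expert record used only for this check."""
    expert_id: int
    discipline: str  # one of CLINICAL or SUPPORT
    region: str      # Northeast, Midwest, South, or West


def valid_team(team: Sequence[Expert]) -> bool:
    """Check one team against the composition rules stated in the text."""
    if len(team) != 3:
        return False
    disciplines = {e.discipline for e in team}
    regions = {e.region for e in team}
    return (
        bool(disciplines & CLINICAL)      # nursing and/or medicine represented
        and bool(disciplines & SUPPORT)   # physical therapy and/or social work represented
        and len(regions) == 3             # no two members share a region
    )


if __name__ == "__main__":
    team = [Expert(1, "nursing", "Northeast"),
            Expert(2, "social work", "West"),
            Expert(3, "medicine", "South")]
    print(valid_team(team))  # True
```

A check like this can run over every generated assignment before cases are released to experts.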

4. Innovative knowledge elicitation method

4.1 Independent expert review of the cases

Reviews of the case summaries occurred via the following sequential steps.

Step 1: Experts independently read the case summaries.

Step 2: Experts independently decided whether to refer the patient for post-acute care. We instructed them to base their decision solely on the case characteristics and needs and to ignore insurance eligibility, geographic availability, local or regional conventions, and other barriers to care such as homebound status.

Step 3: Experts reviewed the case summary and selected the characteristics (factors) that influenced their decision. The MySQL database captured and stored each response for reporting and analysis. In the analysis phase, these selections showed which characteristics were most important and therefore guided the selection of factors for the regression analyses.

Step 4: Experts reviewed their decision and the supporting characteristics and then submitted the case as completed. Submitted cases remained editable for three days, allowing experts to change their minds, before becoming read-only.

At this point the experts worked independently and did not know their teammates’ disciplines or decisions. The software produced a report of each team member’s decisions, which identified cases lacking agreement among the experts and therefore requiring Delphi rounds.
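Steps 2 to 4 and the three-day revision window can be modeled as a small state object. This is a hypothetical sketch: the class and method names are illustrative, and it assumes the three days are counted from the first submission, which the text does not state explicitly.

```python
from datetime import datetime, timedelta
from typing import Optional, Set

# From the text: experts could revise a submitted case for three days.
EDIT_WINDOW = timedelta(days=3)


class CaseReview:
    """Hypothetical model of one expert's review of one case (Steps 2-4)."""

    def __init__(self) -> None:
        self.refer: Optional[bool] = None
        self.factors: Set[str] = set()
        self.submitted_at: Optional[datetime] = None

    def is_editable(self, now: datetime) -> bool:
        # Editable before the first submission and for three days afterwards
        # (assumption: the window starts at first submission).
        return self.submitted_at is None or now - self.submitted_at < EDIT_WINDOW

    def record(self, refer: bool, factors: Set[str], now: datetime) -> None:
        """Steps 2-3: store the referral decision and the supporting factors."""
        if not self.is_editable(now):
            raise PermissionError("case is read-only")
        self.refer = refer
        self.factors = set(factors)

    def submit(self, now: datetime) -> None:
        """Step 4: submit the reviewed case; resubmitting keeps the original time."""
        if self.refer is None:
            raise ValueError("a referral decision is required before submitting")
        if self.submitted_at is None:
            self.submitted_at = now


if __name__ == "__main__":
    t0 = datetime(2014, 4, 11, 9, 0)
    review = CaseReview()
    review.record(True, {"fall risk", "caregiver availability"}, t0)
    review.submit(t0)
    print(review.is_editable(t0 + timedelta(days=3)))  # False
```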

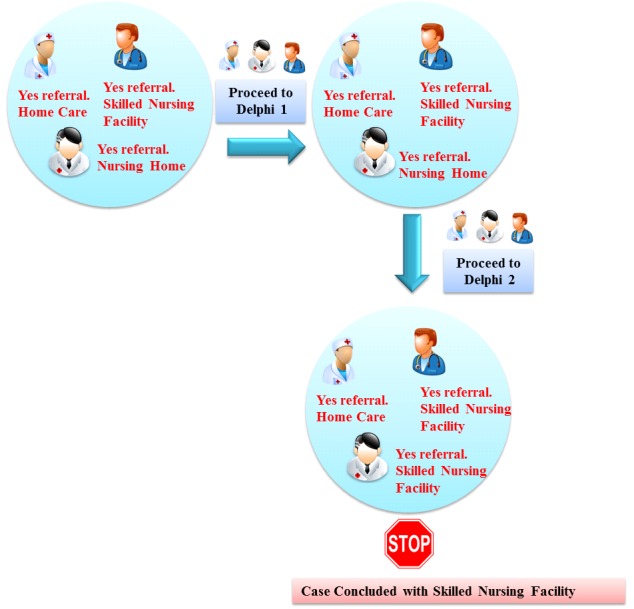

4.2 Handling Disagreement: Delphi Round One

Experts could agree or disagree at two levels: first on the decision to refer or not, and second on the site of care. A priori, the study team determined that a majority (at least two of three members) would constitute agreement on the decision to refer. For example, if at least two of the three experts said “yes, refer,” the decision was yes; if at least two said “no,” the decision was no. Because there were only two choices, this decision always had a majority. However, when the experts agreed to refer the patient, they went on to choose one of five post-acute sites of care, which allowed three-way disagreement. In that instance, the experts entered a Delphi round to reach consensus (► Figure 2). Delphi rounds provide a structured communication technique that helps a group converge toward a mutually agreed-upon decision [17]. In Delphi round one, each expert saw their own previous decision and supporting characteristics alongside the decisions and characteristics chosen by their teammates, while remaining blinded to the teammates’ identities and disciplines. After reviewing these decisions, the experts reread the case summary and voted again on the site of referral, limited to the sites previously chosen by themselves or their teammates. Each expert also wrote a comment explaining or supporting their decision to their teammates.

Fig. 2.

Expert Case Decision Flow (Delphi 1)
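The two-level agreement rule can be sketched as two small functions. This is a minimal illustration, assuming each team member who reaches the site question casts one site vote; the function names are hypothetical and the code is not the study software.

```python
from collections import Counter
from typing import Optional, Sequence


def team_refers(votes: Sequence[bool]) -> bool:
    """Majority rule on refer / do not refer: at least two of three 'yes' votes."""
    return sum(votes) >= 2


def agreed_site(sites: Sequence[str]) -> Optional[str]:
    """Return a site chosen by at least two experts, or None if a Delphi round is needed."""
    site, count = Counter(sites).most_common(1)[0]
    return site if count >= 2 else None


if __name__ == "__main__":
    print(team_refers([True, False, True]))                        # True
    print(agreed_site(["home care", "home care", "hospice"]))      # home care
    print(agreed_site(["home care", "hospice", "nursing home"]))   # None
```

With two options, three votes always produce a majority; with five sites, three votes can all differ, which is exactly the case routed to a Delphi round.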

4.3 Delphi Round Two

If there was still no majority agreement on the site of care after Delphi round one, the team entered Delphi round two. In this round, experts saw their own and their teammates’ round-one decisions and supporting characteristics, as well as the explanatory comments written in round one (► Figure 3). After reviewing this content, they voted again. If consensus was still not reached, the case was marked as uncertain.

Fig. 3.

Expert Case Decision Flow (Delphi 2)
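The full site-of-care flow, from the independent votes through at most two Delphi rounds, can be sketched as follows. The function names are hypothetical, and the restriction to previously proposed sites, which the text states for round one, is assumed here to apply in round two as well.

```python
from collections import Counter
from typing import Optional, Sequence, Tuple


def majority(sites: Sequence[str]) -> Optional[str]:
    """Site chosen by at least two team members, else None."""
    site, count = Counter(sites).most_common(1)[0]
    return site if count >= 2 else None


def resolve_site(initial: Sequence[str],
                 round1: Optional[Sequence[str]] = None,
                 round2: Optional[Sequence[str]] = None) -> Tuple[str, int]:
    """Return (site, Delphi rounds used), or ("uncertain", 2) if no majority emerges."""
    site = majority(initial)
    if site is not None:
        return site, 0
    allowed = set(initial)  # Delphi votes limited to sites already proposed
    for round_no, votes in enumerate((round1, round2), start=1):
        if votes is None:
            raise ValueError(f"votes for Delphi round {round_no} are required")
        if not set(votes) <= allowed:
            raise ValueError("Delphi votes must use a previously chosen site")
        site = majority(votes)
        if site is not None:
            return site, round_no
    return "uncertain", 2


if __name__ == "__main__":
    three_way = ["home care", "hospice", "nursing home"]
    print(resolve_site(three_way, ["hospice", "hospice", "home care"]))  # ('hospice', 1)
```

Cases returning "uncertain" correspond to those the text describes as excluded from modeling.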

4.4 Data Capture and Analysis

The MySQL database captured and stored the decisions and supporting characteristics chosen by the experts as they navigated the application. These data represented knowledge elicited directly from the interprofessional experts as they made their clinical decisions. Generalized estimating equations (GEEs) were used to compare experts’ decisions, made as individuals or as teams, by discipline and region of the country, and with the decisions made by study hospital clinicians, adjusting for repeated observations from each expert and case [18, 19]. Frequencies identified the characteristics most commonly chosen as important by the experts.
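The GEE models themselves are not reproduced here. As a hypothetical sketch of the data they start from, the code below arranges individual decisions one row per expert and case, keeping the expert and case identifiers that would serve as clustering variables, and computes unadjusted row percentages of the kind reported in Table 2. These raw rates ignore the within-expert and within-case correlation that the GEE accounts for; the `Decision` type and function name are illustrative.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class Decision(NamedTuple):
    """One individual refer/do-not-refer decision (hypothetical layout)."""
    expert_id: int   # cluster identifier: repeated decisions by the same expert
    case_id: int     # cluster identifier: repeated decisions on the same case
    region: str
    discipline: str
    refer: bool


def referral_rates(decisions: Iterable[Decision], by: str) -> Dict[str, float]:
    """Unadjusted share of 'refer' decisions per level of `by` ('region' or 'discipline')."""
    yes: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for d in decisions:
        key = getattr(d, by)
        total[key] += 1
        yes[key] += d.refer  # True counts as 1
    return {k: yes[k] / total[k] for k in total}


if __name__ == "__main__":
    demo = [Decision(1, 10, "West", "nursing", True),
            Decision(2, 10, "West", "social work", False),
            Decision(3, 10, "South", "medicine", True)]
    print(referral_rates(demo, "region"))  # {'West': 0.5, 'South': 1.0}
```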

5. Results

The case summaries contained clinical information on 1,496 de-identified patients. The patients’ average age was 74 years (range 55–103); 55.5% were female, 83% white, and 12% black. The most common primary diagnosis categories included Diseases of the Heart, Diseases of the Urinary System, Fractures, Non-traumatic Joint Disorders, and Respiratory Infections. Patients had an average of 10 co-morbid conditions.

Thirty-two physicians, 47 nurses, 44 social workers, and 48 physical therapists participated as experts. Twenty-nine percent were from the Northeast, 26% from the Midwest, 19% from the West, and 26% from the South. The experts took 5–10 minutes per case to make their decisions.

In the initial judgment period, 171 experts judged 1,496 cases between April 11 and April 22, 2014. The methodology enabled timely monitoring of case completion, and most experts finished their assigned cases on time. However, several experts did not complete all of their cases, so 70 cases (approximately 5%) were reassigned to other experts of the same discipline and region.

Only three of the 171 experts did not need to participate in any Delphi round. Of the 168 experts who did, 65% (110/168) had to be sent email reminders to complete their cases on time.

5.1 The Referral Decisions

Presenting the case studies via the website, with the database behind it, worked well to capture the experts’ decisions and the case factors they deemed important. Experts recommended referral for 1,204 cases (80%) and no referral for 292 cases (20%). Among the 1,204 cases recommended for referral, the experts disagreed on the site of care for 280 cases, which therefore required Delphi rounds: 175 (12% of all cases) required one Delphi round and 105 (7%) required two. After all Delphi rounds were completed, 37% of cases were recommended for skilled nursing facility care, 37% for home care services, 11% for inpatient rehabilitation, 9% for nursing home care, and 6% for hospice; 1.4% (n=17) did not reach agreement after two Delphi rounds and were excluded from the modeling of the decision support algorithm (► Table 1).
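The percentages in this paragraph rest on different denominators. The short check below uses only counts reported in the text; it suggests that the Delphi percentages are relative to all 1,496 cases and that the 1.4% unresolved share is relative to the 1,204 referred cases. This reading is an inference from the arithmetic, not a statement by the authors.

```python
total_cases = 1496
referred, not_referred = 1204, 292
one_round, two_rounds = 175, 105
unresolved = 17

assert referred + not_referred == total_cases
assert one_round + two_rounds == 280  # cases needing any Delphi round

print(f"referred: {referred / total_cases:.1%} of all cases")            # 80.5%
print(f"one Delphi round: {one_round / total_cases:.1%} of all cases")   # 11.7%
print(f"two Delphi rounds: {two_rounds / total_cases:.1%} of all cases")  # 7.0%
print(f"unresolved: {unresolved / referred:.1%} of referred cases")      # 1.4%
```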

Table 1.

Sites of Referral by Hospital Clinicians and Experts

| | Hospital Clinician | Study Expert |
|---|---|---|
| Percentage referred overall | 67% | 80% |
| Home care | 38% | 37% |
| Skilled nursing facility | 46% | 37% |
| Inpatient rehabilitation | 6% | 11% |
| Nursing home | 5% | 9% |
| Hospice | 5% | 6% |
| Other | 1.3% | 1.4% (unable to resolve) |

The discharge dispositions obtained from the study sites showed that 986 patients (67%) were referred to a post-acute care service in “real life”: 38% went home with home care services, 46% went to a skilled nursing facility, 5% to a nursing home, 6% to inpatient rehabilitation, 5% to hospice care, and 0.1% to long-term acute care, while 1.1% were transferred to another acute care setting (► Table 1).

The experts and hospital clinicians agreed on 76% of the cases (No/No: n=214, 14%; Yes/Yes: n=925, 62%). For the remaining cases, the study experts were significantly more likely than the hospital clinicians to refer (expert yes/clinician no: n=279, 19%; expert no/clinician yes: n=78, 5%; p<0.001).
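The paired counts above form a 2×2 table of expert versus clinician decisions. The sketch below recomputes the overall agreement from those counts and, purely for illustration, McNemar's chi-square on the discordant cells; McNemar's test is a standard paired test swapped in here, whereas the study itself reported GEE-based comparisons.

```python
# Paired referral decisions from the text (expert decision vs. hospital clinician decision).
both_no, both_yes = 214, 925
expert_yes_clinician_no, expert_no_clinician_yes = 279, 78

n = both_no + both_yes + expert_yes_clinician_no + expert_no_clinician_yes
agreement = (both_no + both_yes) / n

# McNemar's chi-square without continuity correction, using only the discordant cells.
b, c = expert_yes_clinician_no, expert_no_clinician_yes
mcnemar_chi2 = (b - c) ** 2 / (b + c)

print(n)                       # 1496
print(f"{agreement:.1%}")      # 76.1%
print(round(mcnemar_chi2, 1))  # 113.2
```

With one degree of freedom, the 0.001 critical value is about 10.83, so a statistic of this size is consistent with the reported p<0.001.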

5.2 Referrals by Geographic Region or Discipline

Placing the experts in teams stratified by discipline and region of the country worked well to balance regional differences in decision making. When the decision to refer was examined for individual experts, experts from the Northeast and Midwest referred about 5% more cases to post-acute care than experts from the West (77.6% and 75.5% versus 71.9%, p<0.001). Physician and nursing experts were more likely to refer cases than social workers (78% and 75% versus 72%, p<0.001) (► Table 2). However, referral sites (home care, etc.) did not differ by region (p=0.92) or discipline (p=0.97). When the team decision (majority rule) was examined instead of the individual decisions, the differences by region and discipline disappeared, indicating that the randomized team assignment worked as intended.

Table 2.

Decision to Refer for Post-Acute Care Services by Geographic Region and Discipline of the Experts.

| N (% within row) | Referred (n=2,819) | Not Referred (n=961) | p-value¹ | Pairwise Comparison Group² |
|---|---|---|---|---|
| Experts’ Region | | | <0.001 | |
| Northeast | 830 (77.6) | 239 (22.4) | | A |
| Midwest | 724 (75.5) | 235 (24.5) | | AB |
| South | 702 (72.4) | 267 (27.6) | | BC |
| West | 563 (71.9) | 220 (28.1) | | C |
| Experts’ Discipline | | | <0.001 | |
| Medicine | 561 (78.1) | 157 (21.9) | | A |
| Nursing | 780 (75.0) | 260 (25.0) | | AB |
| Physical Therapy | 781 (74.5) | 267 (25.5) | | BC |
| Social Work | 697 (71.6) | 277 (28.4) | | C |

¹Comparison of Referred to Not Referred across all categories via GEE.

²Categories with the same group letter were not significantly different on post-hoc pairwise comparisons with Sidak adjustment for multiple comparisons.
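The Sidak adjustment mentioned in footnote 2 controls the familywise error rate across m comparisons: an adjusted p-value is 1 − (1 − p)^m, equivalently a per-comparison threshold of 1 − (1 − α)^(1/m). The sketch below applies the formula to the six pairwise comparisons among four groups; the study's unadjusted pairwise p-values are not reported, so the p = 0.01 used below is purely illustrative.

```python
from itertools import combinations


def sidak_adjust(p: float, m: int) -> float:
    """Sidak-adjusted p-value for one of m comparisons: 1 - (1 - p)**m."""
    return 1.0 - (1.0 - p) ** m


def sidak_alpha(alpha: float, m: int) -> float:
    """Per-comparison threshold that keeps the familywise error rate at alpha."""
    return 1.0 - (1.0 - alpha) ** (1.0 / m)


regions = ["Northeast", "Midwest", "South", "West"]
m = len(list(combinations(regions, 2)))  # 6 pairwise comparisons among 4 groups

if __name__ == "__main__":
    print(m)                                # 6
    print(round(sidak_alpha(0.05, m), 5))   # 0.00851
    print(round(sidak_adjust(0.01, m), 4))  # illustrative p = 0.01 -> 0.0585
```

The same m = 6 applies to the four disciplines.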

5.3 Case Characteristics that Influenced Decisions to Refer

Experts selected an average of 22 case characteristics (factors) as important to their decision for each case (range 1–67). The frequency with which individual factors were selected ranged from 1% (race and ethnicity) to 68% (caregiver availability). Experts in the Northeast selected, on average, three fewer factors than experts in other regions (p<0.001). By discipline, medicine experts selected an average of 17 factors, physical therapy and social work experts 21, and nursing experts 23 (p<0.001). The only factor medicine experts were more likely to select was specific discharge medications such as anticoagulants; they were less likely than the other disciplines to select every other factor on which the disciplines varied. Physical therapists and social workers were more likely to indicate function in activities of daily living (ADL) as important. Nurses indicated the number and types of comorbidities and medications, past emergency department and hospital visits, marital status, living arrangement, and self-rated health as important more often than the other disciplines. Across all disciplines, primary diagnosis, fall risk, ADL function, number of co-morbid conditions, caregiver availability, and who the patient lives with were deemed important most often. Because so many factors were selected for each case, we could not identify a limited set of important factor combinations from the expert responses.
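The frequencies and per-discipline averages described above can be tallied directly from the stored selections. The sketch below is hypothetical: it assumes one record per expert review of a case and that a factor's frequency is the share of reviews in which it was selected, a denominator the text does not specify.

```python
from collections import Counter
from statistics import mean
from typing import Dict, List, Set, Tuple


def factor_summary(
    selections: List[Tuple[str, Set[str]]],
) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Summarize factor selections.

    selections: one (discipline, factors) pair per expert review of a case.
    Returns (share of reviews selecting each factor,
             mean number of factors per review by discipline).
    """
    n = len(selections)
    counts = Counter(f for _, factors in selections for f in factors)
    frequency = {factor: c / n for factor, c in counts.items()}

    per_discipline: Dict[str, List[int]] = {}
    for discipline, factors in selections:
        per_discipline.setdefault(discipline, []).append(len(factors))
    mean_by_discipline = {d: mean(v) for d, v in per_discipline.items()}
    return frequency, mean_by_discipline


if __name__ == "__main__":
    # Hypothetical selections, not study data.
    demo = [
        ("medicine", {"primary diagnosis", "anticoagulants"}),
        ("nursing", {"primary diagnosis", "caregiver availability", "fall risk"}),
        ("nursing", {"caregiver availability"}),
    ]
    freq, means = factor_summary(demo)
    print(sorted(freq.items()))
    print(means)
```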

6. Limitations

The experts’ decisions were based on the content of case studies prepared from clinical documentation in the EHR. The case studies were comprehensive, covering many health domains, but contained missing data where information was not documented in or not provided by the EHR. In real life, discharge planners making these decisions can discuss the case with others, whereas in this methodology the experts decided alone, except in cases requiring Delphi rounds, when they could see only the text of each other’s decisions and reasons. However, we purposely chose experienced clinicians to increase the likelihood that they were comfortable making independent, expert-level decisions.

7. Discussion

The web-based methodology worked well for eliciting knowledge from a large group of multidisciplinary experts dispersed across the country, and its success adds to the science of knowledge elicitation for investigators facing similar challenges. The experts were blinded to their teammates’ disciplines, which gave them the freedom to express their decisions openly, and they did vary in what they felt was important to their decisions. In addition, the web-based method provided access to a geographically diverse sample of experts, which was important for generalizability and for avoiding regional bias in decision making. The findings confirmed the importance of randomly assigning experts to teams stratified by region and discipline: we saw differences when we compared the experts’ decisions as individuals, but at the team level the regional and disciplinary differences disappeared.

The most difficult part of this study was retrieving the electronic data files from the various hospital EHRs and harmonizing the data across the six sites so that they uniformly populated the case studies. The mix of free text and structured data required laborious data cleaning and recoding, which we have described elsewhere [15].

Most of the experts finished the task on time, and the methodology worked well to capture their decisions and the reasons behind them. In hindsight, we suggest limiting the number of factors experts can choose to support their decisions: the number they chose ranged from 1 to 67, which made it difficult to reduce the data to what was most salient to the decisions.

Our study showed that physicians and advanced practice nurses did not differ significantly in the rates at which they referred patients for post-acute care services. This finding has policy implications because, unlike physicians, nurse practitioners are currently not authorized to order home care services. The Home Health Planning Improvement Act (HR2267) aims to authorize nurse practitioners, physician assistants, midwives, and clinical nurse specialists to order home health services [20]. Our findings suggest that nurses would not over-refer, as some policy makers may fear.

Nurses considered significantly more factors within the case studies as important to their decision making than the other disciplines did: on average they marked 23 factors as important, compared with 17 for physicians and 21 for physical therapists and social workers. This may reflect nurses’ holistic training to consider multiple domains when assessing patients, including socio-demographic, physical, mental, emotional, social, and environmental domains. Across all experts, the characteristics most commonly marked as important were primary diagnosis, fall risk, ADL function, number of co-morbid conditions, caregiver availability, and who the patient lives with.

The experts referred 13 percentage points more patients for post-acute care than the hospital clinicians (80% versus 67%). This may reflect having a comprehensive case study with pertinent patient information organized in one place. The experts also had time to deliberate on their decisions and were all experienced in the field. In addition, they were neither aware of nor limited by the patients’ insurance. We do not know the characteristics or decision-making methods of the actual hospital clinicians. In general, however, the content of the assessments and the information gathered to support these decisions are not standardized across the nation [21]. Similar to our approach of using uniform information in a case study, Holland and Bowles [22] showed how uniform assessments improve the discharge planning process: patients for whom uniform assessments were used during discharge planning had fewer unmet needs and fewer problems complying with discharge instructions than those receiving usual care. Further, decision-making models vary, with some settings using a team approach and others relying on nurse or social worker case managers to make recommendations and then consult with physicians and physical therapists on the final disposition [21]. Our findings indicate the importance of a team approach.

These findings have implications for EHR design: EHRs should support structured, uniform assessments that collect the data needed to make these important decisions and present those data to decision makers in a concise, organized form.

A drawback of our method of knowledge elicitation is the time and expense needed to program the case studies and build the website that hosts the case studies and captures the experts’ choices. Dexter and colleagues [4] successfully used a similar methodology, eliciting knowledge with scenarios adapted from information systems data to model operating room management decisions; the result was a database of decision support for further use by clinicians. Our technique, which uses case studies derived from electronic patient records and web-based interprofessional teams, adds to the knowledge elicitation literature: a review of knowledge elicitation methods covered interviews, questionnaires, observation, storytelling, and others, but not case studies or web-based Delphi rounds [8].

8. Conclusion

An interactive, web-based application provided a convenient, secure, and efficient way to display de-identified EHR data and capture knowledge from geographically dispersed, multidisciplinary experts using case studies. Our study demonstrates how web-based knowledge elicitation maintained anonymity, automated data collection, supported live monitoring of progress, facilitated Delphi rounds, and captured knowledge. However, the method has costs, so its efficacy and efficiency must be weighed against the cost of building and maintaining the website.

In summary, we highly recommend this innovative method to elicit decision-making knowledge for the development of expert decision support systems. The data elicited from this methodology enabled our team to build decision support algorithms for discharge referral decision making (manuscript under development). Testing of those algorithms is underway at two hospitals.

Acknowledgements

The authors wish to thank the National Institute of Nursing Research of the National Institutes of Health under Award Number R01-NR007674 and the experts who participated in this study for supporting the research reported in this publication. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. We also thank the nursing and information technology staff at the six hospitals who assisted us in collecting the electronic data for the study and MI Digital Agency for their technical expertise in building the website and data management.

Footnotes

9. Clinical Relevance

The results of this research demonstrate that researchers aiming to elicit knowledge from geographically dispersed, multidisciplinary experts can do so using case studies derived from de-identified EHR data within a web-based application. This research provides methodological guidance for eliciting decision-making knowledge from experts to support the development of discharge decision support systems. The resulting decision support algorithms are intended to give case managers and discharge planners appropriate, timely expert advice as they decide whether to refer older adults for post-acute care services, with the ultimate goal of promoting safe and optimal recovery after a hospital stay. Ongoing study will determine whether this aim is achieved.

Conflicts of Interest

The authors declare they have no conflicts of interest in the research.

Protection of Human and Animal Subjects

Human subjects were not involved in the knowledge elicitation portion of this study; rather, de-identified data were used to prepare case summaries for the experts. The study was reviewed by, and performed in compliance with the requirements of, the University of Pennsylvania Institutional Review Board.

References

1. Bowles KH, Foust JB, Naylor MD. Hospital discharge referral decision making: a multidisciplinary perspective. Appl Nurs Res 2003 Aug; 16(3): 134-143.

2. Milton NR. Knowledge acquisition in practice: a step-by-step guide. Springer; 2007.

3. Musen MA, Fagan LM, Combs DM, Shortliffe EH. Use of a domain model to drive an interactive knowledge-editing tool. Int J Human Comput Stud 1999; 51(2): 479-495.

4. Dexter F, Wachtel RE, Epstein RH. Event-based knowledge elicitation of operating room management decision-making using scenarios adapted from information systems data. BMC Med Inform Decis Mak 2011; 11: 2.

5. Gibert K, García-Alonso C, Salvador-Carulla L. Integrating clinicians, knowledge and data: expert-based cooperative analysis in healthcare decision support. Health Res Policy and Systems 2010; 8.

6. Groznik V, Guid M, Sadikov A, Mozina M, Georgiev D, Kragelj V, Ribaric S, Pirtosek Z, Bratko I. Elicitation of neurological knowledge with argument-based machine learning. Artif Intell Med 2013; 57(2): 133-144.

7. Keune H, Gutleb AC, Zimmer KE, Ravnum S, Yang A, Bartonova A, Krayer von Krauss M, Ropstad E, Eriksen GS, Saunders M, Magnati B, Forsberg B. We're only in it for the knowledge? A problem solving turn in environment and health expert elicitation. Environ Health 2012; 11(Suppl 1): S3.

8. Gavrilova T, Andreeva T. Knowledge elicitation techniques in a knowledge management context. J Knowledge Management 2012; 16(4): 523-537.

9. Ziebell D, Fiore SM, Becerra-Fernandez I. Knowledge management revisited. IEEE Intelligent Systems 2008; 23(3): 84-88.

10. Okafor EC, Osuagwu CC. The underlying issues in knowledge elicitation. Interdisc J of Information, Knowledge, and Management 2006; 1: 95-108.

11. Cooke NJ. Knowledge elicitation. In: Durso FT, Nickerson RS, Schvaneveldt RW, Dumais ST, Lindsay DS, Chi MTH, editors. Handbook of Applied Cognition. 1st ed. Chichester, New York: Wiley; 1999. p. 479-501.

12. Bech-Larsen T, Nielsen NA. A comparison of five elicitation techniques for elicitation of attributes of low involvement products. J Econ Psych 1999; 20: 315-341.

13. Orem DE. Nursing: Concepts of Practice. 5th ed. St. Louis, MO: Mosby; 1995.

14. Bowles KH, Holmes JH, Naylor MD, Liberatore M, Nydick R. Expert consensus for discharge referral decisions using online Delphi. AMIA Annu Symp Proc 2003: 106-109.

15. Bowles K, Potashnik S, Ratcliffe S, Rosenberg M, Shih N, Topaz M, Holmes J, Naylor M. Conducting research using the electronic health record across multi-hospital systems: semantic harmonization implications for administrators. J Nurs Admin 2013; 43(6): 355-360.

16. Ai MY, Li K, Lin DJK. Balanced incomplete Latin square designs. J Statistical Planning and Inference 2013; 143: 1575-1582.

17. Rowe G, Wright G. The Delphi technique as a forecasting tool: issues and analysis. Int J Forecasting 1999; 15(4): 353-375.

18. Liang KY, Zeger S. Longitudinal data analysis using generalized linear models. Biometrika 1986; 73: 13-22.

19. Zeger S, Liang KY. Longitudinal data analysis for discrete and continuous outcomes. Biometrics 1986; 42: 121-130.

20. Walden G, Schwartz A. Walden, Schwartz introduce bipartisan legislation to ensure seniors and disabled can access home health services [Internet]. Washington, DC: U.S. Representative Greg Walden; 2015 [cited January 18, 2016]. Available from: https://walden.house.gov/media-center/press-releases/walden-schwartz-introduce-bipartisan-legislation-ensure-seniors-and

21. Maramba PJ, Richards S, Larrabee JH. Discharge planning process: applying a model for evidence-based practice. J Nurs Care Qual 2004; 19(2): 123-129.

22. Holland DE, Bowles KH. Standardized discharge planning assessments: impact on patient outcomes. J Nurs Care Qual 2012; 27(3): 200-208.