Summary

Objective

Big data or population-based information has the potential to reduce uncertainty in medicine by informing clinicians about individual patient care. The objectives of this study were: 1) to explore the feasibility of extracting and displaying population-based information from an actual clinical population’s database records, 2) to explore specific design features for improving population display, 3) to explore perceptions of population information displays, and 4) to explore the impact of population information display on cognitive outcomes.

Methods

We used the Veterans Affairs (VA) database to identify patients similar to a complex patient case that we designed. Study outcome measures were 1) preferences for population information display, 2) time looking at the population display, 3) time to read the chart, and 4) appropriateness of plans before and after presentation of population data. Finally, we redesigned the population information display based on our findings from this study.

Results

The qualitative data analysis for preferences of population information display resulted in four themes: 1) trusting the big/population data can be an issue, 2) embedded analytics is necessary to explore patient similarities, 3) need for tools to control the view (overview, zoom and filter), and 4) different presentations of the population display can be beneficial to improve the display. We found that appropriateness of plans was at 60% for both groups (t9=-1.9; p=0.08), and overall time looking at the population information display was 2.3 minutes versus 3.6 minutes with experts processing information faster than non-experts (t8= -2.3, p=0.04).

Conclusion

A population database has great potential for reducing complexity and uncertainty in medicine to improve clinical care. The preferences identified for the population information display will guide future health information technology system designers for better and more intuitive display.

Keywords: Health information systems, uncertainty, big data, population decision support systems, information display

1. Background and Significance

Ordering antibiotics is complex. A physician who is deciding on the diagnosis and treatment of an infectious disease must integrate both patient-specific and population data where both may be uncertain. The widespread adoption of electronic health records (EHRs) is creating ample opportunities to leverage population-based evidence otherwise not easily retrievable from paper-based health records. However, EHRs usually provide only patient-specific views [1, 2]; population information or aggregated patient data are rarely included as decision support in EHR design [3, 4]. Providing only patient-specific views may not be sufficient to support clinical decision-making as most complex patients are unique and do not fit into available practice guidelines [5–8].

According to a current estimate, about 25% (15 million) of the annual deaths worldwide are directly related to infectious diseases (ID) [9]. For ID clinicians, emerging infections, resistant organisms, and the possibility of a global pandemic increase decision urgency and treatment difficulty [10–12]. Given the lack of evidence-based information or guidelines for clinicians in this field, population-based information has greater weight. Therefore, population-based information that can be extracted from the EHR or a population database is a critical component of ID treatment decisions.

Big data has been successfully used in astronomy, retail sales, search engines, and politics. In clinical informatics, applications such as Cloudwave can visualize large amounts of stored EEG data in real time [13]. Despite the great promise of “big data” or population data, the adoption of these resources for clinical decision support has been relatively slow [14, 15]. One reason may be that the most effective displays for this information are not yet known. Previous research on the presentation of healthcare data has shown the value of cognitive design in helping users understand the data [16–20]. Cognitive design refers to the construction of an interface or visualization that represents the user’s internal mental models or mental images [21]. Research has shown that EHR and healthcare data visualization may lead to greater efficiency and a better understanding of the patient’s situation [18, 22–24]. Therefore, it is important to design visualization displays of population information that improve cognitive support and the efficiency of clinical decision-making. However, little research offers specific guidance for designing population-based information displays [18, 25, 26]. Variables important to design considerations include expertise, patient acuity, complexity, context, and relevant patient cohort identification. Therefore, it is imperative to identify the specific features of a population information display for optimal clinical decision support.

Previous studies on using aggregated patient information from a population database or EHRs have utilized different visualization methods for information presentation [9, 27, 28]. However, these visualization methods do not differentiate between simple and complex attributes of patients. Most visualization displays address simple problems by categorizing tasks into simple or smaller steps [24, 29]. The goal is often to minimize cognitive load or minimize analytical thinking while maximizing pattern matching. However, when dealing with complex problems, displays should also provide support for more intense and deliberate thinking by providing a rich source of information that matches the needs of the decision-maker. If the information is difficult to comprehend and does not match the decision task, there is a risk of increasing cognitive load and a higher chance of diagnostic errors. Therefore, easy to understand presentations of the aggregated patient information from a population database are equally important alongside individual data for effective clinical decision-making. Some initiatives such as the “Green Button” have shown how to aggregate patient information from the EHR [30]. This kind of visualization tool can effectively demonstrate comparative outcomes in the face of treatment unpredictability. However, expertise differences often are not taken into account while developing these information displays.

Leveraging clinical experience through the secondary use of EHR data or population-based decision support can help to address the knowledge and experience gap of individual clinicians [31–33]. However, such potential has not yet been demonstrated in most clinical domains. As a result, clinicians are reluctant to adopt population data that are not actionable at the point of care [34]. Moreover, few studies have addressed the feasibility of extracting and displaying population-based information from clinical records. In this study, we designed a complex case in the ID domain and assessed the feasibility of using population-based analytical algorithms to extract similar patients from a “live” electronic patient data warehouse. To test design components for a population information display, we created a computerized visualization display that presents data on similar patient cases. Then, we performed an exploratory mixed-method study to assess the impact of population health analytics and data visualization on cognition. Finally, we proposed an improved design based on the findings of this study.

2. Objectives

The objectives of this study are:

To explore the feasibility of extracting and displaying population-based information from a large clinical database.

To explore specific design features for improving population display.

To explore perceptions for population information displays.

To explore the impact of a population information display on cognition.

3. Methods

3.1 Study design

We used both qualitative and quantitative methods in this study. The experimental design was a 2 (expertise; between subjects) × 2 (pre-/post-population information display; within subjects) mixed design.

In other words, we blocked on expertise through our selection of two defined groups and exposed all participants to both forms of the display, collecting data before and after exposure to the population-based display.

3.2 Participants

Ten volunteer physicians participated in the study (five ID experts and five non-ID experts). Expertise was defined by board certification in ID. The “experts” were selected based on ID board certification and ID faculty role. The non-experts were board certified in areas outside ID. Both groups were required to have a minimum of 5 years of clinical experience. The experts had an average experience of 15.6 years and a range of 10 to 24 years. The five non-expert participants had an average experience of 17 years with a range of 7 to 38 years. The clinicians were contacted by email and participation was voluntary. All participants provided verbal consent. The participants did not receive any compensation for this voluntary participation. The experiment was conducted in private offices and conference rooms at the University of Utah Hospital and Veterans Affairs (VA) Hospital in Salt Lake City. The study was approved by the IRB (Institutional Review Board) at the University of Utah.

3.3 Development of stimulus materials

In this study, we have used two types of materials: 1) A complex case scenario and 2) display forms. Three of the authors (YL, DR and MJ), including an ID clinician (MJ), were involved with the design of the materials.

3.3.1 Complex Case Design

A complex case was created to mimic realistic diagnostic uncertainty in the ID domain by selecting a real patient from the VA database. The real patient was selected by two of the authors, one ID expert and one clinical pharmacist (MJ and DR), and the patient’s de-identified data were used to form the backbone of the case. The main treatment uncertainty was the outcome of using Daptomycin versus other therapies. Therefore, we manually selected a patient from the VA database using the following criteria: 1) diagnosis code of VRE neutropenia, presence of fever, bacterial infection and acute myelogenous leukemia; 2) laboratory results positive for refractory VRE bacteremia; and 3) treatment with Daptomycin for a period of at least 2 consecutive days. In ChartReview, we created a mock EHR view with different tabs representing the patient’s current visit, past medical history, lab data and the information display. The complex patient case consisted of laboratory data from the patient’s current visit, past medical history data, the current medication list and chief complaints. We created a separate tab containing the population information display for the clinicians to review. We asked all participants to rate the complexity of the case as high (8–10/10), medium (5–7/10) or low (1–4/10). The case is summarized in ► Table 1.

Table 1.

Complex case summary

| Patient is a 60-year-old man with AML s/p induction with 7+3 day+35 now s/p re-induction who has had sustained neutropenia and now fever for the past 7 days. Initial blood cultures revealed Vancomycin-resistant Enterococcus. Infectious diseases was consulted the day after the fever spike and recommended Daptomycin given history of the same during previous admissions. Routine susceptibility report demonstrated susceptibility to Daptomycin but after 2 days of sustained bacteremia and worsening picture, gentamicin was added and his PICC line was discontinued. The patient remains on the floor, but has been persistently febrile. Transthoracic echocardiogram shows new tricuspid-valve regurgitation and a 3 cm vegetation. He endorses subjective fevers and chills but does not otherwise localize his symptoms. He reports feeling depressed about his outlook. VRE TV endocarditis currently failing or with delayed response to Daptomycin + Gentamicin and removal of the PICC line. Worsening on therapy. Creatinine now 1.6 from 1.3. Currently neutropenic, precluding surgical intervention. Daptomycin etest 4, Linezolid 2. Susceptibility on the VRE from 2 days ago was rechecked and was the same as the original. Instructions: Please write down a plan about how will you manage the patient therapeutically and rank the plans |

3.3.2 Display Forms

Two forms of case display were created. The first emulated the usual narrative, patient-based medical record. The second included both the case narrative and a population-based information display. The design process for the population display involved two steps. First, we used several search criteria for finding similar patients from the VA clinical data repository. Then, we designed the display based on the information of similar patients found from the database. The process is described below.

3.4 Search criteria from the population database

We first identified the most important clinical question for the complex patient (► Table 1). For our complex case, the patient’s deteriorating condition and comorbidities did not fit any evidence-based guideline. Moreover, the use of Daptomycin was recommended per clinical guidelines for VRE neutropenia patients [35]. However, using this agent did not improve the patient’s overall clinical status. On the contrary, the patient’s overall clinical and functional status declined rapidly. As a result, the clinician had to deal with the uncertainty of using other medications without knowing the consequences due to a lack of evidence.

To investigate the treatment of refractory Vancomycin-resistant Enterococcus (VRE) bacteremia, we initially focused our search within admissions with combinations of the following ICD-9-CM codes: neutropenia (204) and acute myelogenous leukemia (208, 288), the presence of fever (780.6, 790.7), bacteremia (038.0, 038.9), bacterial infection (041, 599), or other general infection codes (771.8, 785.2, 995.92, 995.91). Refractory VRE bacteremia was defined as the inpatient isolation of an enterococcal species from blood, where the first and last positives were more than 5 days apart, but positive cultures in the series were separated by no more than 14 days.
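The refractory bacteremia definition above can be sketched in a few lines of Python. This is an illustrative reading of the definition, not the query used in the study: the function name `is_refractory`, its parameters, and the interpretation of a "series" as a run of positive cultures with no gap longer than 14 days are assumptions.

```python
from datetime import date


def is_refractory(positive_dates, min_span_days=5, max_gap_days=14):
    """Return True if any series of positive blood cultures is refractory.

    Assumed reading of the definition: a series is a run of positive
    cultures in which consecutive positives are at most `max_gap_days`
    apart; the series is refractory when its first and last positives
    are more than `min_span_days` apart.
    """
    days = sorted(set(positive_dates))
    if not days:
        return False
    series_start = previous = days[0]
    for current in days[1:]:
        if (current - previous).days > max_gap_days:
            # Gap too long: close the current series and start a new one.
            if (previous - series_start).days > min_span_days:
                return True
            series_start = current
        previous = current
    # Check the last (still open) series.
    return (previous - series_start).days > min_span_days


# Hypothetical culture dates, for illustration only.
print(is_refractory([date(2010, 1, 1), date(2010, 1, 4), date(2010, 1, 8)]))
```

With these hypothetical dates the first and last positives are 7 days apart and no gap exceeds 14 days, so the series meets the definition under the stated assumptions.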

Initial examination of the potential cohort revealed that the matched group of patients was quite small; therefore, all individuals with refractory VRE bacteremia were included, regardless of their comorbidities, which resulted in a cohort of a few hundred patients. We measured the administration of antibiotics with potential activity against VRE alone or in combination with other antibiotics, i.e., Quinupristin / Dalfopristin, Daptomycin, Ampicillin, Gentamicin, Streptomycin, Linezolid or Tigecycline. We defined courses as consecutive antibiotics where gaps between doses were no longer than two hospital days apart. As a result, we found 19 patients from the database who matched the similarity profile of the complex case that we designed. A summary of these patients is described in ► Table 2.
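As an illustration of the course definition above, the following Python sketch groups the hospital days on which an antibiotic was given into courses. It is a minimal sketch, not the study's extraction code: the function name `group_into_courses`, the integer hospital-day representation, and measuring a gap as the difference between consecutive dosing days are assumptions.

```python
def group_into_courses(dose_days, max_gap_days=2):
    """Group dosing days into courses as (first_day, last_day) pairs.

    Assumption: `dose_days` are integer hospital days, and two doses
    belong to the same course when their day numbers differ by at most
    `max_gap_days`.
    """
    courses = []
    for day in sorted(set(dose_days)):
        if courses and day - courses[-1][-1] <= max_gap_days:
            courses[-1].append(day)   # continue the current course
        else:
            courses.append([day])     # gap too long: start a new course
    return [(course[0], course[-1]) for course in courses]


# Hypothetical dosing days, for illustration only.
print(group_into_courses([1, 2, 4, 8, 9]))  # [(1, 4), (8, 9)]
```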

Table 2.

Information from similar patients

| | Patients with Daptomycin and/or combination therapy including Daptomycin | Patients with any antibiotic other than Daptomycin | Total | Percentage of total patients |
|---|---|---|---|---|
| Died | 8 | 2 | 10 | 52% |
| Did not die | 6 | 3 | 9 | 48% |
| Total | 14 | 5 | 19 | |
| Percentage of total patients | 74% | 26% | | |

Note: More patients died on therapy containing Daptomycin than on other therapeutic alternatives. Also, the guideline does suggest using Daptomycin as an initial therapeutic agent.

3.5 Population display design rationale

The main goal of this display was to make clinicians aware of individual patient outcomes when using Daptomycin. Therefore, aggregated measures were not a priority for the display design. The if-then relationship in this design was aimed at improving providers’ confidence by displaying the outcome of different therapeutic regimens for each individual patient. Our initial design follows the temporal proximity principle of human-factors-based design [36]. Under temporal proximity, several tasks are typically performed in the same time frame to meet the overall goal. For example, the goal of finding the outcome with different medications for each patient involves looking at the same display artifacts for each patient. The overall goal was to help clinicians easily identify the ultimate outcome (death vs no death) through the similar task of scanning each patient in the display.

The two other goals for the design process were the presentation of similar complex patients in one display and support of an “if-then” relationship. The idea behind the information display was to show the aggregated patient information in a way that provides a better understanding of outcomes for similar patients. The “if-then” heuristic supports the mental model for simple decision logic. For example, in this display, we were interested in the outcomes of Daptomycin or a combination regimen including Daptomycin, the outcomes of other antibiotics not including Daptomycin, and the number of deaths. We laid out the outcomes (death or survival) of the therapeutic agents in a timeline view. This provided the “if-then” relationship (if the patient used drug X, then death happened or did not happen) for better cognition. We designed the display so that the time-oriented data are graphically displayed for individual patients. Previous studies have shown that multiple patient records in longitudinal views provide better understanding of the display [18]. The longitudinal view provides the patient-specific outcome and an effective representation of the timeline. The display provided outcome information for different therapeutic regimens, including Daptomycin. Guidelines and evidence from clinical trials only had information regarding Daptomycin for treating VRE. Therefore, the goal of the display was to provide a longitudinal view and to increase clinicians’ confidence in exploring therapeutic options other than Daptomycin. For example, the display had different legends to denote the start and end time of antibiotics, negative culture results and survival outcome.

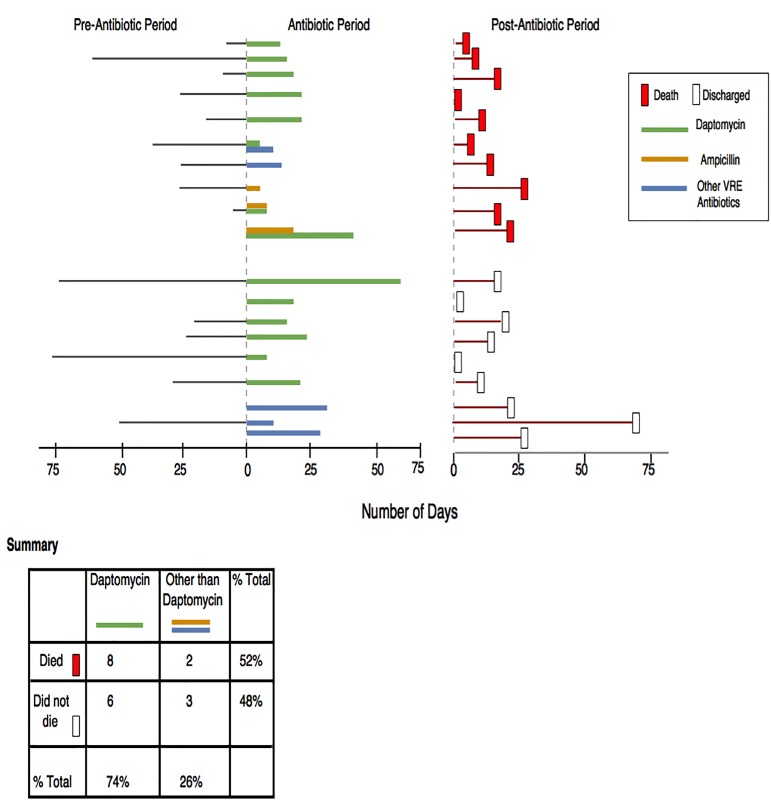

► Figure 1 depicts the different features of the display. In this initial display, each line represents an individual patient. The horizontal axis represents the number of hospital days and the vertical axis represents the total number of patients. Different colors and legends denote different therapies and the outcomes associated with them. Overall, the figure suggests that the number of deaths with Daptomycin is almost equal to that with other medications.

Fig. 1.

Population information display
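To make the layout of the display concrete, the sketch below renders a text-only approximation of a per-patient timeline: one row per patient, one column per hospital day, a letter for the antibiotic given on that day, and a final marker for the outcome. It is not the software used to produce Figure 1. The function `render_timeline`, the record fields, the symbols, and the two example patients are all hypothetical.

```python
def render_timeline(patients, total_days):
    """Render one text row per patient across `total_days` hospital days.

    Each course is (symbol, start_day, end_day) with 1-based inclusive
    days. The trailing marker is "X" for death and "o" for survival.
    """
    lines = []
    for patient in patients:
        row = ["."] * total_days          # "." means no antibiotic that day
        for symbol, start, end in patient["courses"]:
            for day in range(start, end + 1):
                row[day - 1] = symbol
        marker = "X" if patient["died"] else "o"
        lines.append(f"{patient['id']:>3} {''.join(row)} {marker}")
    return "\n".join(lines)


# Hypothetical patients (D = Daptomycin, L = Linezolid), not study data.
example = [
    {"id": 1, "courses": [("D", 1, 4)], "died": True},
    {"id": 2, "courses": [("D", 1, 2), ("L", 3, 6)], "died": False},
]
print(render_timeline(example, total_days=8))
```

Reading a row from left to right gives the “if-then” relationship for that patient: which regimen was used on which days, followed by the outcome.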

The population graph and the patient electronic information were embedded into an artificial electronic chart, “ChartReview,” developed by Duvall et al. [37].

3.6 Population information display validation

To validate the display content, we asked two ID clinicians to evaluate whether the population information display represented patients with characteristics similar to those of the complex patient described in ► Table 1. The clinicians first checked the parameters for finding similar patients and confirmed the appropriateness of the parameters based on their clinical experience. Then, they explored the visualization of the population information in depth, making sure that the legends and data points made sense cognitively. They checked whether the outcomes (death or no death) from the therapeutic agents for similar patients added any clinical value in reducing uncertainty. Both clinicians carefully examined the data to confirm that the display content matched the characteristics of the complex patient. They also confirmed the clinical utility of the population display for helping with clinical decisions for this very complex case.

3.7 Procedures and manipulation of variables

Clinicians were first shown “ChartReview” with a mock patient to understand its functionalities and become acquainted with the electronic chart. The training lasted between 7 and 10 minutes for all participants. The first author provided the training, explained the procedure and the different functionalities in the mock EHR, and gave each participant time to ask additional questions for clarification. Each participant, regardless of expertise, spent an almost equal amount of time on training. The steps are described below:

The participants were first asked to read the patient chart, including patient background information and lab data.

Then, they were asked to write down a plan for the case and to rank each item of the plan according to their priorities.

After the participants wrote down the ranked plans, they were shown the population display of similar patients. The participants reviewed patients for similarity based on the complex patient case characteristics such as diagnosis, treatment start and end date, overall inpatient stay and different therapeutic regimens outcomes. Once they examined the display, they were asked to make modifications to the plan as deemed necessary.

Finally, the first author conducted post-study in-depth interviews, probing into each pause to gauge the subject’s mental models, and asking follow-up questions.

Demographic information was collected at the end of the study.

3.8 Study outcome measures

The measured outcomes and the procedures for data collection are described in ► Table 3.

Table 3.

Measured outcomes and data collection

| Outcome measure | Data collection procedure |
|---|---|
| Preferences for information display | The first author audio recorded and transcribed the post-study in-depth interview with the participants. |
| Time looking at the population information display | QuickTime Player screen capture recorded the total time each participant spent looking at and exploring the population information display |
| Time to read the chart | QuickTime Player screen capture recorded the total time each participant spent reading the patient chart |
| Appropriateness of plans | Participants wrote down the treatment plan in word-processing software and ranked the plan. They wrote down the plan twice: once after reading the chart and then again after seeing the population information display |

3.9 Appropriateness of plan based on expert panel (EP) review

An expert panel (EP) consisting of two ID experts and a clinical pharmacist reviewed the case and constructed the criteria for an appropriate plan. All experts had clinical experience greater than 5 years. The EP developed the basis for the appropriate plan described in ► Table 4. Any plans that did not meet the criteria were rated as not appropriate. As long as the clinicians mentioned one of the plans included in the table, the plan was decided to be appropriate.

Table 4.

Criteria for appropriate plans

| Group | Appropriate plan |
|---|---|
| Infectious diseases group | 1. Start Linezolid Or 2. Start Tigecycline Or 3. Start very high dose of Ampicillin + Ceftriaxone |
| Non-infectious diseases group | 1. Consult ID Or 2. Start Linezolid Or 3. Start Tigecycline Or 4. Start very high dose of Ampicillin + Ceftriaxone |
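The rating rule (a plan is appropriate if it mentions at least one action listed for the participant's group) can be expressed as a small lookup. This is a simplified sketch: in the study the expert panel rated free-text plans, whereas the code below assumes plan items have already been normalized to the exact strings shown, and the names `APPROPRIATE_ACTIONS` and `is_appropriate` are hypothetical.

```python
# Actions from Table 4, normalized to lowercase strings (an assumption).
_SHARED = {
    "start linezolid",
    "start tigecycline",
    "start very high dose ampicillin + ceftriaxone",
}
APPROPRIATE_ACTIONS = {
    "id": _SHARED,
    "non_id": _SHARED | {"consult id"},
}


def is_appropriate(plan_items, group):
    """Return True if any plan item is an appropriate action for `group`."""
    allowed = APPROPRIATE_ACTIONS[group]
    return any(item.strip().lower() in allowed for item in plan_items)


# Hypothetical plans, for illustration only.
print(is_appropriate(["Continue Daptomycin", "Start Linezolid"], "id"))  # True
print(is_appropriate(["Consult ID"], "id"))                              # False
```

Keeping the criteria in one table-like structure makes the asymmetry between the groups explicit: consulting ID counts as appropriate only for non-ID clinicians.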

3.10 Data analysis

The data analysis involved both qualitative and quantitative analysis.

3.10.1 Qualitative analysis

Content analysis was used to generate themes for the preferences for population display. We used ATLAS.ti to code the post-study interviews regarding these preferences. Two researchers (DR and JM) independently reviewed the transcripts and then met face to face over multiple rounds to discuss their interpretations. After several iterations, themes emerged about the clinicians’ preferences for an ideal population information display. We used the RATS (Relevance of study question, Appropriateness of qualitative method, Transparency of procedure and Soundness of interpretive approach) protocol for the content analysis [38].

3.10.2 Quantitative analysis

We conducted quantitative analysis to explore the perceptions for population information displays and the impact of population display on cognition.

We used a t-test to explore the effect of expertise on the perceptions of the population information display between the two groups. The t-test is suitable for small sample sizes [39].

We operationalized cognition as the percentage of subjects who changed their treatment plans after being exposed to the population display. We used a paired-samples t-test to assess whether the appropriateness of plans (appropriate versus not appropriate) changed significantly after the population information display was shown.

We used the t-test to detect expertise effects on reading the chart. The level of significance was set at alpha=0.05 (two-tailed) a priori. A sample size of five in each group makes this analysis exploratory. Previous exploratory pilot studies successfully used 4 to 10 participants for similar study designs [40–42].

4. Results

We successfully extracted similar patient information from the VA database and designed a population information display incorporating the similar patients. The results are organized in two sections: qualitative and quantitative analysis. All clinicians rated the case as highly complex except one who rated the case as medium complex.

4.1 Qualitative analysis

The qualitative analysis included all clinicians, both ID and non-ID. The content analysis revealed four themes that emerged as preferences for population information display: 1) trusting population data can be an issue, 2) embedded analytics is necessary to explore patient similarities, because providers would like to understand more about the similarities, 3) need for tools to control the view (overview, zoom, and filter) and 4) different presentations of the population display can be beneficial. The themes are described in the following sections.

4.1.1 Theme 1: Trusting the population data can be an issue

Clinicians appeared to be concerned about the validity and trustworthiness of population data overall. In this study, the data were verified by other ID clinicians, which helped improve clinicians’ confidence in the accuracy of the data. However, clinicians expressed concern about automatically retrieving such information in real time without expert validation.

Even though patients found through the population database search are similar to a certain extent, they are not identical. Therefore, clinicians are cautious of using the information to infer cause-effect relationships. Also, the practice-based information may differ significantly due to different formulary management or culture of practice in a particular hospital, resulting in potential decision conflicts rather than reducing such conflicts. Therefore, establishing trust for the population data is crucial. For example,

“Exactly, so I would narrow it down and go this way and see how many patients we have here actually. So I would want to know that because just glancing at this, I don’t know if it really is the same patient population.”

4.1.2 Theme 2: Embedded analytics is necessary to explore patient similarities. Providers would like to understand more about the similarities.

Clinicians would like to see the similarities and differences among patients in an aggregated summarized view or through analytical functions. It is important to understand the differences among the matched similar patients with the patient at hand. For example,

“So I think a complex display is fine. There’s some learning curve for it but once I got used to it, it could be useful. But I have to think about how to show that better. The similarity profile of the match patients may help. Or you can also show the data of matched profile as percentage of similarity.”

4.1.3 Theme 3: Need for tools to control the view (overview, zoom and filter)

Features such as overview, zoom and filter embedded in the display may reduce confusion. Therefore, clinicians prefer an overview function to explore the patient profiles first for an integrated view; to zoom if necessary to look into specific details (lab results and different days of results); and filter the data based on specific patient features or outcomes, such as time of death or time for negative culture results. For example,

“And by that have a filter panel with full control over those comorbidities. I’m saying I want to include or exclude diabetics, the heart failures, the surgical abscesses, the by sight infection. So, this is the individual case review and you almost would say that I want a summary viewed first. Then, sort of overviewed, filter and zoom kind of thing. I think that’s relevant. The overview is of the, you know, the four, five antibiotics choices, some sort of heat map of how well you did and then drilling in to individual cases like this being able to filter in or out, the ones that you think are closest to the patient.”
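The overview-then-filter interaction that this participant describes could look roughly like the following sketch. It is only one possible reading of the request, not a feature of the study display: the record fields, the functions `overview` and `filter_patients`, and the three example patients are hypothetical.

```python
from collections import Counter


def overview(patients):
    """Summary view shown first: counts per (regimen, died) pair."""
    return Counter((p["regimen"], p["died"]) for p in patients)


def filter_patients(patients, include=(), exclude=()):
    """Keep patients with every `include` comorbidity and no `exclude` one."""
    required, banned = set(include), set(exclude)
    return [
        p for p in patients
        if required <= p["comorbidities"] and not (banned & p["comorbidities"])
    ]


# Hypothetical cohort, not study data.
cohort = [
    {"id": 1, "regimen": "daptomycin", "died": True, "comorbidities": {"diabetes"}},
    {"id": 2, "regimen": "linezolid", "died": False, "comorbidities": {"heart failure"}},
    {"id": 3, "regimen": "daptomycin", "died": False, "comorbidities": set()},
]

print(overview(cohort))                                    # summary first
closest = filter_patients(cohort, exclude=["diabetes"])    # then filter
print([p["id"] for p in closest])                          # [2, 3]
```

The individual records that remain after filtering are the ones a clinician would then drill into, which corresponds to the zoom step.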

4.1.4 Theme 4: Different presentations of the population display can be beneficial

Different presentations of the same information can help make sense of the data cognitively [43, 23, 44]. Depending on the question related to the problem, the searching criteria are set to find similar patients from the population database. Therefore, few patients can be found for very rare or complex cases, and many patients can be found regarding comparative outcomes of certain treatments. Therefore, different presentations depending on the number of patients available may be necessary. For example,

“I don’t know about pie chart for very small number of patients but that might work for large numbers of patients. But, yeah, I mean when you’re dealing with all those cases and you want to give the data, showing individual level patient’s data is a good way to do it I think.”

4.2 Quantitative analysis

Viewing time for the population graph differed between groups (t8= 2.3, p=0.04), with experts taking significantly less time than non-experts (2.3±0.86 minutes versus 3.6±0.91 minutes, respectively). The viewing time for the population display is shown as a box plot in ► Figure 2.

Fig. 2.

Viewing time for the population display (expert versus non-expert)
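The reported viewing-time comparison can be approximately reproduced from the group summaries alone. The sketch below computes a pooled-variance two-sample t statistic; it assumes that the ± values are standard deviations, that each group has five participants, and that equal variances were assumed (consistent with the reported 8 degrees of freedom). The function name `pooled_t` is hypothetical, and the sign of t depends only on which group is listed first.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sample t statistic with pooled variance; returns (t, df)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# Experts versus non-experts, viewing time in minutes (reported summaries).
t, df = pooled_t(2.3, 0.86, 5, 3.6, 0.91, 5)
print(f"t({df}) = {t:.2f}")  # t(8) = -2.32
```

Under these assumptions the magnitude agrees with the reported value of 2.3.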

The appropriateness of clinicians’ plans (cognition) was relatively low (60% of plans were appropriate), and the change from before to after the population display was not statistically significant (t9=-1.9; p=0.08).

For the expert group, the average time to read the chart was 4.9±0.48 minutes and for the non-expert group 5.5±0.79 minutes. This difference was also not significant (t6=-1.3, p=0.22).

5. Discussion

In this study, we have successfully used an actual clinical database to extract information from patients who are similar to the complex case and designed a population information display. Previous studies also developed similar visualizations by extracting information from EHRs or population databases [9, 27, 45]. We have used ICD-9 CM codes to find similar patients from the VA clinical database and displayed the information in a single display. The parameters chosen for extraction may be the key to finding the desired outcome from similar patients’ profiles for better cognitive support. Further work is needed to make such queries automatic and efficient, but first, we needed to know if providing that information makes a difference in decision-making and what preferences users might have. The methodologies used in this paper can also be used to test and validate the usability of different visualization techniques within healthcare settings.

Most complex patients do not fit into the evidence-based guidelines [46–50]. Therefore, clinicians need more point-of-care information, delivered without information overload, to reduce cognitive complexity. Cognitive complexity refers to the complexity that the interface or system introduces to the user through cluttered or unnecessary information. The data can also be better represented by visualization in a single display. Visualization of population information has the potential to support “if-then” heuristics for improved and informed clinical reasoning [7, 51, 52]. Most decision support systems do not take heuristics into consideration in the design due to the associated biases [7, 53]. However, intuitive design for future innovative population decision support systems should match the higher cognitive reasoning and mental models of clinicians [54–56]. Showing treatment or diagnostic outcome data leveraged from a population or EHR database may nudge the clinician positively and provide cognitive support when the clinician is dealing with unique and complex patients. The visualization design principles used in our study can help future researchers and designers with better task allocation and intuitive display features. More work is needed on visual presentations that match clinicians’ mental models and effectively show similar complex patients in a single display.

The discussion is further subdivided into the following three sections: implications for healthcare system design from the qualitative analysis, implications of the quantitative analysis, and improved design of the population information display based on the findings from the qualitative analysis.

5.1 Implications for healthcare system design from the qualitative analysis

The themes that emerged based on the preferences for information display may help future researchers and designers. Our results resonate with the results of previous studies on understanding clinicians’ preferences for information display design in healthcare [17, 20, 40, 57–60]. However, our findings that providers are concerned with data trustworthiness, their need to have more meta-information about the display, and their desire to explore the similarity profile of the patients are unique to this kind of display. In the following paragraphs, we discuss the results from the perspective of the implications for design.

5.1.1 Improving the trust in population data

Practice-based information may provide a glimpse of what can be done when the case at hand is complex and evidence is scarce. However, data pulled from a population database may reveal wide variations in clinical practice, leading to more confusion for the clinician, or at least much more uncertainty as compared to a clinical guideline [61]. Also, the snapshot view may lead to attribution errors regarding the cause and effect of different choices [62]. For example, providers may assume from the data that, because 50 patients receiving drug A had a positive outcome, the patient at hand will also benefit from it. However, the treatment outcomes of the matched patients may not be the same for the patient at hand due to the unique characteristics of each patient’s clinical and functional presentation. Many current applications for providing population data assume that real-time information can be shown without validation [27]. Our findings suggest that clinicians are worried about the validity of real-time population data and would prefer that a domain expert validate the data before it is used to guide decisions for a specific patient.

5.1.2 Analytical complexities of finding similar patients

It is sometimes difficult to explore a large number of similar patients when the patient at hand has complex and very unique characteristics [63]. Therefore, defining similarity measures for temporal categorical data is important for understanding the results from the population inquiry. For example, a similarity measure of temporal categorical data called M&M (Match and Mismatch) developed by researchers at the University of Maryland finds similar patients by ranking scores [64]. This tool has the capability of comparing different features of a patient’s characteristics by using filters as well as visualizing the similarities in a scatterplot. Tools such as M&M may be embedded in the population information display to provide a better measurement of the matched similar patients.
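The idea of ranking patients by a similarity score over temporal categorical data can be sketched as follows. This is a minimal illustration only and is not the published M&M measure: the scoring rule, the `max_gap` tolerance, the event names, and the function names `similarity` and `rank_patients` are all assumptions made for this example.

```python
from typing import Dict, List, Tuple

# An event is (day offset from an index date, event category).
Event = Tuple[int, str]


def similarity(target: List[Event], candidate: List[Event], max_gap: int = 7) -> float:
    """Return a score in [0, 1]; 1.0 means every target event is matched on the same day.

    Illustrative rule (an assumption, not the M&M algorithm): each target event
    is paired with the closest unused candidate event of the same category
    within max_gap days. Missing and extra events lower the score.
    """
    if not target:
        return 0.0
    remaining = list(candidate)
    score = 0.0
    for day, category in target:
        options = [e for e in remaining
                   if e[1] == category and abs(e[0] - day) <= max_gap]
        if not options:
            continue  # mismatch: a missing event contributes nothing
        best = min(options, key=lambda e: abs(e[0] - day))
        remaining.remove(best)
        # A match is worth less the further apart the two events are in time.
        score += 1.0 - abs(best[0] - day) / (max_gap + 1)
    # Unmatched candidate events enlarge the denominator and act as a penalty.
    return score / (len(target) + len(remaining))


def rank_patients(target: List[Event],
                  cohort: Dict[str, List[Event]]) -> List[Tuple[str, float]]:
    """Rank cohort patients from most to least similar to the target."""
    scored = [(pid, similarity(target, events)) for pid, events in cohort.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical event sequences, not study data.
    target = [(0, "admission"), (1, "vancomycin"), (5, "daptomycin")]
    cohort = {
        "A": [(0, "admission"), (1, "vancomycin"), (5, "daptomycin")],
        "B": [(0, "admission"), (3, "vancomycin")],
        "C": [(0, "admission"), (2, "linezolid")],
    }
    for pid, score in rank_patients(target, cohort):
        print(pid, round(score, 2))
```

A ranked list of this kind could feed the filters and scatterplot view described above, so that clinicians can see how closely each retrieved patient matches the case at hand.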

5.1.3 Better tools to control the display

Tools to control the view of the display can include overview, zoom and filter functions. These kinds of functionalities have worked well in many other domains as well as in medicine [65–71]. An overall view gives a better understanding and helps with the clinician’s situational awareness. Then, zoom and filter options help the clinician to focus on the important information by filtering out the unnecessary information and allowing them to pay particular attention to details [72]. For example, LifeFlow has an analytic function to show the overall view. In addition, users can zoom and filter as needed to get the relevant information from the EHR [73].
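The three view-control functions can be illustrated with a small sketch. It assumes a flat list of patient events; the `Record` type, its field names, and the helper names `overview`, `zoom`, and `filter_records` are hypothetical and are not taken from LifeFlow or from our display.

```python
from collections import Counter
from typing import Callable, List, NamedTuple


class Record(NamedTuple):
    patient_id: str
    day: int      # day offset from an index date
    event: str    # event category, e.g. a drug name


def overview(records: List[Record]) -> Counter:
    """Overview: count how often each event category occurs in the population."""
    return Counter(r.event for r in records)


def zoom(records: List[Record], start_day: int, end_day: int) -> List[Record]:
    """Zoom: keep only events inside an inclusive time window."""
    return [r for r in records if start_day <= r.day <= end_day]


def filter_records(records: List[Record],
                   keep: Callable[[Record], bool]) -> List[Record]:
    """Filter: keep only events that satisfy a user-chosen condition."""
    return [r for r in records if keep(r)]


if __name__ == "__main__":
    # Hypothetical records, not study data.
    records = [
        Record("p1", 0, "vancomycin"),
        Record("p1", 6, "daptomycin"),
        Record("p2", 1, "vancomycin"),
        Record("p3", 12, "linezolid"),
    ]
    print(overview(records))
    print(zoom(records, 0, 7))
    print(filter_records(records, lambda r: r.event == "daptomycin"))
```

Because each function returns plain data, the calls can be chained in the order a clinician would work: overview first, then zoom, then filter.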

5.1.4 Multiple representations of the population display

Different visualizations of the same information can help researchers make better sense of the data. Infographics researchers in other domains, such as information or computer science, have established design guidelines (e.g., space/time resource optimization, attention management, consistency, etc.) [74–77]. However, the particular problem of finding and displaying similar patients is new, and guidelines have not been established. A systematic review of innovative visualization of EHR data has shown that pie charts, bar charts, line graphs, and scatterplots may reveal important information in an aggregated view for representing a larger number of patients [24]. However, for a very small number of patients, a longitudinal view may be better [18]. For example, researchers from IBM Research developed an interactive clinical pattern technique that can visualize the data and change the visualization display based on the pattern of the data [78].

5.2 Implications of the quantitative analysis

The quantitative results from this exploratory study offer insights into the effect of a population display on cognitive processes. We found that the display did have a marginal effect on the quality of the plan in pre-/post-assessments. We also found that experts processed the population-based information faster than non-experts, giving validity to the display content. The initial display design provided a quick overview of therapy outcomes of individual patients. Clinical experts have years of experience that could have contributed towards selecting more appropriate patient information for reasoning in a shorter time. This finding is congruent with similar findings about experts’ ability to process information in the search, perception and reasoning components of the task faster when compared with non-experts [79–83].

5.3 Improved design of the population information display

The results of this study provided us with rich qualitative data regarding the preferences for population information display design. We redesigned the display to take the qualitative findings from this study into consideration (► Figure 3). The improved visualization will provide a better understanding of the impact of the display in future usability studies.

Fig. 3.

Redesigned Population-based information display.

In this new design, we have placed the legends on the right side of the display for convenience. Showing the antibiotics period as three different timeframes side by side provides a better picture for comparison with the patient at hand. The differentiation of the timeline can improve the comprehensibility of the display. Also, we have clustered the patients by deaths and by the types of antibiotics used. These clusters provide a better representation of the population information and help to make better cognitive sense of the display by reducing search time. Finally, we have added a summary table for a different presentation of the data through numerical variables. The summary table provides a quick overview of the patients who died after using Daptomycin versus non-Daptomycin medications.
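The clustering and summary table described above can be sketched in a few lines. This is an illustration under stated assumptions: the input format (one antibiotic name and one death flag per patient), the function names `summarize` and `format_table`, and the example records are hypothetical and do not reproduce the 19 patients in the study.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

GROUPS = ("Daptomycin", "Non-Daptomycin")


def summarize(patients: Iterable[Tuple[str, bool]]) -> Dict[str, Dict[str, int]]:
    """Cluster patients by antibiotic group and outcome, then count each cluster.

    Each patient is (antibiotic name, died). Any antibiotic other than
    Daptomycin falls into the Non-Daptomycin group.
    """
    counts: Counter = Counter()
    for antibiotic, died in patients:
        group = GROUPS[0] if antibiotic.strip().lower() == "daptomycin" else GROUPS[1]
        counts[(group, died)] += 1
    return {
        group: {
            "died": counts[(group, True)],
            "survived": counts[(group, False)],
            "total": counts[(group, True)] + counts[(group, False)],
        }
        for group in GROUPS
    }


def format_table(summary: Dict[str, Dict[str, int]]) -> str:
    """Render the summary as a fixed-width text table."""
    lines = [f"{'Group':<16}{'Died':>6}{'Survived':>10}{'Total':>7}"]
    for group, row in summary.items():
        lines.append(
            f"{group:<16}{row['died']:>6}{row['survived']:>10}{row['total']:>7}"
        )
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical records for illustration only.
    patients = [
        ("Daptomycin", True),
        ("Daptomycin", False),
        ("daptomycin", False),
        ("Vancomycin", False),
        ("Linezolid", True),
    ]
    print(format_table(summarize(patients)))
```

Keeping the counting step separate from the rendering step means the same summary could drive both the numerical table and the clustered graphical view.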

5.4 Limitations and future work

The main limitation of our study was the small sample size. As an exploratory study, the results will guide future larger studies with visualization displays showing population information. There were also biases with regard to the appropriateness of plans for experts versus non-experts. The expert panel decided that as long as the non-experts consulted ID clinicians, then the plan was appropriate. However, this was not the case for experts’ evaluations of the plans. It is difficult to judge an appropriate plan for very complex cases. Also, this is a pilot study and the results are ID domain specific. The cognitive requirements for the information display design may be different in other clinical domains. Thus, this study provides important directions for other clinical domains to design their domain-specific visualization tools.

The 19 patients found in our query shared similar features with the complex patient case. Yet, we did not conduct any additional analysis to quantitatively measure the similarity of those patients. However, all clinicians concurred that the population information was useful for clinical reasoning, even though the number of patients retrieved was small. It is possible that, when evidence-based information is nonexistent, even a small number of patients with different treatments can help reduce uncertainty and improve clinical reasoning.

In addition, there may be limitations regarding the variables that were chosen to evaluate the display for cognition. Also, we did not compare the design with other current population information displays. However, the results lay out the foundation for future larger studies that can test more variables with more participants. Finally, in this study, we have used a static population information display without analytical capabilities. Future studies can explore the final design with larger sample sizes to measure clinical decision-making.

6. Conclusion

In this study, we have successfully extracted similar complex patient information from an actual clinical database and presented the information in a population information display. Future studies may use our methodology of finding similar complex attributes of patients based on the clinical question to reduce uncertainty and cognitive complexity. In addition, the content analysis of the qualitative data on preferences for population display revealed the following four themes: 1) trusting population data can be an issue, 2) embedded analytics is necessary to explore patient similarities, 3) need for tools to control the view (overview, zoom and filter) and 4) different presentations of the population display can be beneficial. The results indicate that ID experts processed the population information visualization faster than the non-experts. Also, we have proposed a better design based on the qualitative findings of the study. Future studies with a large number of participants and a more fine-tuned population visualization display may further validate the results of this exploratory study.

Acknowledgements

This project was supported by the Agency for Healthcare Research and Quality (grant R36HS023349) and Department of Veterans Affairs Research and Development (grant CRE 12–230). Dr. Islam was supported by National Library of Medicine training grant (T15-LM07124) and partially supported by Houston Veterans Affairs Health Services Research & Development Center for Innovations in Quality and Effectiveness and Safety (IQuESt).

Footnotes

Clinical Relevance Statement

This work provides a unique look at the clinical utility of big data or population information. Clinicians may use population information once the benefits of clinical utility outweigh the time spent to find relevant information from the database. The design recommendations for population decision support systems from this study can help with innovative and intuitive design for clinical practice.

Conflict of Interest

All authors declare that there are no conflicts of interest.

Human Subject Protection

The University of Utah Institutional Review Board approved the study for both VA Salt Lake City Medical Center and University of Utah.

References

- 1.Mandl KD, Kohane IS. Escaping the ehr trap — the future of health it. New England Journal of Medicine 2012; 366(24): 2240-2242. doi:10.1056/NEJMp1203102. [DOI] [PubMed] [Google Scholar]

- 2.Mitka M. Physicians cite problems with ehrs. JAMA 2014; 311(18): 1847–1847. doi:10.1001/jama.2014.5030. [Google Scholar]

- 3.Wu HW, Davis PK, Bell DS. Advancing clinical decision support using lessons from outside of healthcare: An interdisciplinary systematic review. BMC Med Inform Decis Mak 2012; 12: 90 doi:10.1186/1472–6947–12–90. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Welch BM, Kawamoto K. Clinical decision support for genetically guided personalized medicine: A systematic review. Journal of the American Medical Informatics Association 2013; 20(2): 388–400. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Hemmerich JA, Elstein AS, Schwarze ML, Moliski EG, Dale W. Risk as feelings in the effect of patient outcomes on physicians’ future treatment decisions: A randomized trial and manipulation validation. Soc Sci Med 2012; 75(2): 367-376. doi:10.1016/j.socscimed.2012.03.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Woolley A, Kostopoulou O. Clinical intuition in family medicine: More than first impressions. Ann Fam Med 2013; 11(1): 60-66. doi:10.1370/afm.1433. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Islam R, Weir C, Fiol GD, editors. Heuristics in managing complex clinical decision tasks in experts’ decision making. Healthcare Informatics (ICHI), 2014 IEEE International Conference on; 2014. 15–17 Sept 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Redmond Oster R. Questioning protocol. JAMA Intern Med 2014; 174(5): 667-667. doi:10.1001/jamainternmed.2014.107. [DOI] [PubMed] [Google Scholar]

- 9.Sistrom CL, Dreyer K, Weilburg JB, Perloff JN, Tompkins CP, Ferris TG. Images of imaging: How to process and display imaging utilization for large populations. American Journal of Roentgenology 2015: W405-W420. [DOI] [PubMed] [Google Scholar]

- 10.Fauci AS, Morens DM. The perpetual challenge of infectious diseases. N Engl J Med 2012; 366(5): 454-461. doi:10.1056/NEJMra1108296. [DOI] [PubMed] [Google Scholar]

- 11.Fong IW. Challenges in infectious diseases. Springer; 2013. [Google Scholar]

- 12.Sullivan T. Antibiotic overuse and clostridium difficile: A teachable moment. JAMA Intern Med 2014; 174(8): 1219-1220. doi:10.1001/jamainternmed.2014.2299. [DOI] [PubMed] [Google Scholar]

- 13.Luo J, Wu M, Gopukumar D, Zhao Y. Big data application in biomedical research and health care: A literature review. Biomedical informatics insights 2016; 8: 1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Weber GM, Mandl KD, Kohane IS. Finding the missing link for big biomedical data. JAMA 2014; 311(24): 2479-2480. doi:10.1001/jama.2014.4228. [DOI] [PubMed] [Google Scholar]

- 15.Tan SSL, Gao G, Koch S. Big data and analytics in healthcare. Methods of Information in Medicine 2015; 54(6): 546-547. doi:10.3414/ME15–06–1001. [DOI] [PubMed] [Google Scholar]

- 16.Donderi DC. Visual complexity: A review. Psychol Bull 2006; 132(1): 73–97. doi:10.1037/0033–2909.132.1.73. [DOI] [PubMed] [Google Scholar]

- 17.Miller A, Scheinkestel C, Steele C. The effects of clinical information presentation on physicians’ and nurses’ decision-making in icus. Appl Ergon 2009; 40(4): 753-761. doi:10.1016/j.apergo.2008.07.004. [DOI] [PubMed] [Google Scholar]

- 18.Klimov D, Shahar Y, Taieb-Maimon M. Intelligent visualization and exploration of time-oriented data of multiple patients. Artif Intell Med 2010; 49(1): 11–31. doi:10.1016/j.artmed.2010.02.001. [DOI] [PubMed] [Google Scholar]

- 19.Koch SH, Weir C, Westenskow D, Gondan M, Agutter J, Haar M, et al. Evaluation of the effect of information integration in displays for icu nurses on situation awareness and task completion time: A prospective randomized controlled study. Int J Med Inform 2013; 82(8): 665-675. doi:10.1016/j.ijmedinf.2012.10.002. [DOI] [PubMed] [Google Scholar]

- 20.Djulbegovic B, Beckstead JW, Elqayam S, Reljic T, Hozo I, Kumar A, et al. Evaluation of physicians’ cognitive styles. Med Decis Making 2014; 34(5): 627-637. doi:10.1177/0272989X14525855. [DOI] [PubMed] [Google Scholar]

- 21.Visser W. Designing as construction of representations: A dynamic viewpoint in cognitive design research. Human–Computer Interaction 2006; 21(1): 103-152. [Google Scholar]

- 22.Mirel B, Eichinger F, Keller BJ, Kretzler M. A cognitive task analysis of a visual analytic workflow: Exploring molecular interaction networks in systems biology. J Biomed Discov Collab 2011; 6: 1–33. doi:10.5210/disco.v6i0.3410. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Shneiderman B, Plaisant C, Hesse B. Improving health and healthcare with interactive visualization methods. IEEE Computer Special Issue on Challenges in Information Visualization 2013; 1: 1–13. [Google Scholar]

- 24.West VL, Borland D, Hammond WE. Innovative information visualization of electronic health record data: A systematic review. Journal of the American Medical Informatics Association 2015; 22(2): 330–339. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Syroid ND, Agutter J, Drews FA, Westenskow DR, Albert RW, Bermudez JC, et al. Development and evaluation of a graphical anesthesia drug display. Anesthesiology 2002; 96(3): 565-575. [DOI] [PubMed] [Google Scholar]

- 26.Miller A, Sanderson P, editors. Designing an information display for clinical decision making in the intensive care unit. Proceedings of the Human Factors and Ergonomics Society Annual Meeting; 2003: SAGE Publications. [Google Scholar]

- 27.Longhurst CA, Harrington RA, Shah NH. A ‘green button’for using aggregate patient data at the point of care. Health Affairs 2014; 33(7): 1229-1235. [DOI] [PubMed] [Google Scholar]

- 28.Jing X, Cimino JJ. A complementary graphical method for reducing and analyzing large data sets. Case studies demonstrating thresholds setting and selection. Methods of Information in Medicine 2014; 53(3): 173-185. doi:10.3414/ME13–01–0075. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Kopanitsa G, Hildebrand C, Stausberg J, Englmeier KH. Visualization of medical data based on ehr standards. Methods of Information in Medicine 2013; 52(1): 43–50. doi:10.3414/ME12–01–0016. [DOI] [PubMed] [Google Scholar]

- 30.Murdoch TB, Detsky AS. The inevitable application of big data to health care. JAMA 2013; 309(13): 1351-1352. [DOI] [PubMed] [Google Scholar]

- 31.Livnat Y, Gesteland P, Benuzillo J, Pettey W, Bolton D, Drews F, et al. Epinome – a novel workbench for epidemic investigation and analysis of search strategies in public health practice. AMIA Annu Symp Proc 2010; 2010: 647-651. [PMC free article] [PubMed] [Google Scholar]

- 32.Garvin JH, Duvall SL, South BR, Bray BE, Bolton D, Heavirland J, et al. Automated extraction of ejection fraction for quality measurement using regular expressions in unstructured information management architecture (uima) for heart failure. J Am Med Inform Assoc 2012; 19(5): 859–866 doi:amiajnl-2011–000535 [pii]10.1136/amiajnl-2011–000535. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Krall MAG A.V., Samore MH. Big data and population-based decision support. Greenes RA, editor. Clinical decision support: The road to broad adoption. 2nd ed. Oxford, UK: Elsevier Inc; 2014. p. 363-378. [Google Scholar]

- 34.Wolf JR. Do it students prefer doctors who use it? Computers in Human Behavior 2014; 35(0): 287-294. doi:http://dx.doi.org/10.1016/j.chb.2014.03.020. [Google Scholar]

- 35.National Guideline C. Clinical practice guideline for the use of antimicrobial agents in neutropenic patients with cancer: 2010 update by the infectious diseases society of america. Agency for Healthcare Research and Quality (AHRQ), Rockville MD: http://www.guideline.gov/content.aspx?id=25651. Accessed 7/11/2015. [Google Scholar]

- 36.Wickens CD, Carswell CM. The proximity compatibility principle: Its psychological foundation and relevance to display design. Human Factors: The Journal of the Human Factors and Ergonomics Society 1995; 37(3): 473-494. [Google Scholar]

- 37.South BR, Shen S, Leng J, Forbush TB, DuVall SL, Chapman WW, editors. A prototype tool set to support machine-assisted annotation. Proceedings of the 2012 Workshop on Biomedical Natural Language Processing; 2012: Association for Computational Linguistics. [Google Scholar]

- 38.Clark J. How to peer review a qualitative manuscript. Peer review in health sciences 2003; 2: 219-235. [Google Scholar]

- 39.Altman DG. Practical statistics for medical research. CRC press; 1990. [Google Scholar]

- 40.Le T, Reeder B, Thompson H, Demiris G. Health providers’ perceptions of novel approaches to visualizing integrated health information. Methods Inf Med 2013; 52(3): 250-258. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Park CL, O’Neill PA, Martin DF. A pilot exploratory study of oral electrical stimulation on swallow function following stroke: An innovative technique. Dysphagia. 1997;12(3): 161–6. doi:10.1007/PL00009531. [DOI] [PubMed] [Google Scholar]

- 42.Ellis G, Dix A. An explorative analysis of user evaluation studies in information visualisation. Proceedings of the 2006 AVI workshop on BEyond time and errors: novel evaluation methods for information visualization; Venice, Italy: 1168152: ACM; 2006. p. 1–7. [Google Scholar]

- 43.Shneiderman B. Enabling visual discovery. Science 2014; 343(6171): 614–614. doi:10.1126/science.1249670. [Google Scholar]

- 44.Wang TD, Wongsuphasawat K, Plaisant C, Shneiderman B. Extracting insights from electronic health records: Case studies, a visual analytics process model, and design recommendations. Journal of Medical Systems 2011; 35(5): 1135-1152. [DOI] [PubMed] [Google Scholar]

- 45.Wongsuphasawat K, editor. Finding comparable patient histories: A temporal categorical similarity measure with an interactive visualization. IEEE Symposium on Visual Analytics Science and Technology (VAST); 2009. [Google Scholar]

- 46.Onuoha OC, Arkoosh VA, Fleisher LA. Choosing wisely in anesthesiology: The gap between evidence and practice. JAMA Intern Med 2014; 174(8): 1391-1395. doi:10.1001/jamainternmed.2014.2309. [DOI] [PubMed] [Google Scholar]

- 47.Lobach D SG, Bright TJ, et al. Enabling health care decisionmaking through clinical decision support and knowledge management. Evidence report/technology assessments,. Rockville (MD): Agency for Healthcare Research and Quality US; 2012. [PMC free article] [PubMed] [Google Scholar]

- 48.Elstein AS. Thinking about diagnostic thinking: A 30-year perspective. Adv Health Sci Educ Theory Pract 2009; 14 (Suppl. 1): 7–18. doi:10.1007/s10459–009–9184–0. [DOI] [PubMed] [Google Scholar]

- 49.Tinetti ME, Bogardus ST, Jr., Agostini JV. Potential pitfalls of disease-specific guidelines for patients with multiple conditions. N Engl J Med 2004; 351(27): 2870-2874. doi:351/27/2870 [pii]10.1056/NEJMsb042458. [DOI] [PubMed] [Google Scholar]

- 50.Elstein AS. On the origins and development of evidence-based medicine and medical decision making. Inflamm Res 2004; 53 (Suppl. 2): S184-S189. doi:10.1007/s00011–004–0357–2. [DOI] [PubMed] [Google Scholar]

- 51.Gigerenzer G, Hertwig R, Pachur T. Heuristics: The foundations of adaptive behavior. Oxford University Press, Inc.; 2011. [Google Scholar]

- 52.Wegwarth O, Gaissmaier W, Gigerenzer G. Smart strategies for doctors and doctors-in-training: Heuristics in medicine. Med Educ 2009; 43(8): 721-728. doi:10.1111/j.1365–2923.2009.03359.x. [DOI] [PubMed] [Google Scholar]

- 53.Gorini A, Pravettoni G. An overview on cognitive aspects implicated in medical decisions. Eur J Intern Med 2011; 22(6): 547-553. doi:10.1016/j.ejim.2011.06.008. [DOI] [PubMed] [Google Scholar]

- 54.Crebbin W, Beasley SW, Watters DA. Clinical decision making: How surgeons do it. ANZ J Surg 2013; 83(6): 422-428. doi:10.1111/ans.12180. [DOI] [PubMed] [Google Scholar]

- 55.Markman KD, Klein WM, Suhr JA. Handbook of imagination and mental simulation. Psychology Press; 2012. [Google Scholar]

- 56.Phansalkar S, Weir CR, Morris AH, Warner HR. Clinicians’ perceptions about use of computerized protocols: A multicenter study. Int J Med Inform 2008; 77(3): 184-193. doi:10.1016/j.ijmedinf.2007.02.002. [DOI] [PubMed] [Google Scholar]

- 57.Luker KR, Sullivan ME, Peyre SE, Sherman R, Grunwald T. The use of a cognitive task analysis-based multimedia program to teach surgical decision making in flexor tendon repair. Am J Surg 2008; 195(1): 11-15. doi:10.1016/j.amjsurg.2007.08.052. [DOI] [PubMed] [Google Scholar]

- 58.Baxter GD, Monk AF, Tan K, Dear PR, Newell SJ. Using cognitive task analysis to facilitate the integration of decision support systems into the neonatal intensive care unit. Artif Intell Med 2005; 35(3): 243-257. doi:10.1016/j.artmed.2005.01.004. [DOI] [PubMed] [Google Scholar]

- 59.Pieczkiewicz D, Finkelstein S, Hertz M, editors. The influence of display format on decision-making in a lung transplant home monitoring program-preliminary results. Engineering in Medicine and Biology Society, 2003. Proceedings of the 25th Annual International Conference of the IEEE; 2003: IEEE. [Google Scholar]

- 60.O’Hare D, Wiggins M, Williams A, Wong W. Cognitive task analyses for decision centred design and training. Ergonomics 1998; 41(11): 1698-1718. doi:10.1080/001401398186144. [DOI] [PubMed] [Google Scholar]

- 61.Winter A, Strübing A. Model-based assessment of data availability in health information systems. Methods of Information in Medicine 2008; 47(5): 417-424. doi:10.3414/ME9123. [DOI] [PubMed] [Google Scholar]

- 62.Arnott D. Cognitive biases and decision support systems development: A design science approach. Information Systems Journal 2006; 16(1): 55–78. [Google Scholar]

- 63.Islam R, Weir C, Del Fiol G. Clinical complexity in medicine: A measurement model of task and patient complexity. Methods of Information in Medicine 2016; 55(1): 14–22. doi:10.3414/ME15–01–0031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Wongsuphasawat K, Plaisant C, Taieb-Maimon M, Shneiderman B. Querying event sequences by exact match or similarity search: Design and empirical evaluation. Interacting with Computers 2012; 24(2): 55–68. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Carroll LN, Au AP, Detwiler LT, Fu TC, Painter IS, Abernethy NF. Visualization and analytics tools for infectious disease epidemiology: A systematic review. J Biomed Inform 2014; 51: 287-298. doi:10.1016/j.jbi.2014.04.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Rind A. Interactive information visualization to explore and query electronic health records. Foundations and Trends® in Human–Computer Interaction 2013; 5(3): 207-298. doi:10.1561/1100000039. [Google Scholar]

- 67.Smith A, Malik S, Shneiderman B. Visual analysis of topical evolution in unstructured text: Design and evaluation of topicflow. Applications of social media and social network analysis. Springer International Publishing; 2015; 159-175. [Google Scholar]

- 68.Monroe M, Lan R, Lee H, Plaisant C, Shneiderman B. Temporal event sequence simplification. IEEE Transactions on Visualization and Computer Graphics 2013; 19(12): 2227-2236. [DOI] [PubMed] [Google Scholar]

- 69.Shneiderman B, Plaisant C, Hesse BW. Improving healthcare with interactive visualization. Computer 2013; 46(5): 58–66. [Google Scholar]

- 70.Carroll LN, Au AP, Detwiler LT, Fu T-c, Painter IS, Abernethy NF. Visualization and analytics tools for infectious disease epidemiology: A systematic review. Journal of Biomedical Informatics 2014; 51(0): 287-298. doi:http://dx.doi.org/10.1016/j.jbi.2014.04.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Puget A, Mejino JLV, Jr, Detwiler LT, Franklin JD, Brinkley JF. Spatial-symbolic query engine in anatomy. Methods of Information in Medicine 2012; 51(6): 463-478. doi:10.3414/ME11–01–0047. [DOI] [PubMed] [Google Scholar]

- 72.Wongsuphasawat K, Guerra Gómez JA, Plaisant C, Wang TD, Taieb-Maimon M, Shneiderman B, editors. Lifeflow: Visualizing an overview of event sequences. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; 2011: ACM. [Google Scholar]

- 73.Gallego B, Walter SR, Day RO, Dunn AG, Sivaraman V, Shah N, et al. Bringing cohort studies to the bedside: Framework for a ‘green button’ to support clinical decision-making. 2015; 33(7): 1229–1235. [DOI] [PubMed] [Google Scholar]

- 74.Spinellis D, Androutsellis-Theotokis S. Software development tooling: Information, opinion, guidelines, and tools. IEEE Software 2014(6): 21-23. [Google Scholar]

- 75.Lankow J, Ritchie J, Crooks R. Infographics: The power of visual storytelling. John Wiley & Sons; 2012. [Google Scholar]

- 76.Wang Baldonado MQ, Woodruff A, Kuchinsky A, editors. Guidelines for using multiple views in information visualization. Proceedings of the working conference on Advanced visual interfaces; 2000: ACM. [Google Scholar]

- 77.Zuk T, Schlesier L, Neumann P, Hancock MS, Carpendale S, editors. Heuristics for information visualization evaluation. Proceedings of the 2006 AVI workshop on BEyond time and errors: novel evaluation methods for information visualization; 2006: ACM. [Google Scholar]

- 78.Gotz D, Wang F, Perer A. A methodology for interactive mining and visual analysis of clinical event patterns using electronic health record data. Journal of Biomedical Informatics 2014; 48: 148-159. [DOI] [PubMed] [Google Scholar]

- 79.Crowley RS, Naus GJ, Stewart Iii J, Friedman CP. Development of visual diagnostic expertise in pathology: An information-processing study. Journal of the American Medical Informatics Association 2003; 10(1): 39–51. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Schubert CC, Denmark TK, Crandall B, Grome A, Pappas J. Characterizing novice-expert differences in macrocognition: An exploratory study of cognitive work in the emergency department. Ann Emerg Med 2013; 61(1): 96–109. doi:10.1016/j.annemergmed.2012.08.034. [DOI] [PubMed] [Google Scholar]

- 81.Craig C, Klein MI, Griswold J, Gaitonde K, McGill T, Halldorsson A. Using cognitive task analysis to identify critical decisions in the laparoscopic environment. Hum Factors 2012; 54(6): 1025-1039. [DOI] [PubMed] [Google Scholar]

- 82.Christensen RE, Fetters MD, Green LA. Opening the black box: Cognitive strategies in family practice. Ann Fam Med 2005; 3(2): 144-150. doi:10.1370/afm.264. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Arocha JF, Patel VL, Patel YC. Hypothesis generation and the coordination of theory and evidence in novice diagnostic reasoning. Medical Decision Making 1993; 13(3): 198–211. [DOI] [PubMed] [Google Scholar]