Abstract

We exploit the unique phonetic properties of bilingual speech to ask how processes occurring during planning affect speech articulation, and whether listeners can use the phonetic modulations that occur in anticipation of a codeswitch to help restrict their lexical search to the appropriate language. An analysis of spontaneous bilingual codeswitching in the Bangor Miami Corpus (Deuchar et al., 2014) reveals that in anticipation of switching languages, Spanish-English bilinguals produce slowed speech rate and cross-language phonological influence on consonant voice onset time. A study of speech comprehension using the visual world paradigm demonstrates that bilingual listeners can indeed exploit these low-level phonetic cues to anticipate that a codeswitch is coming and to suppress activation of the non-target language. We discuss the implications of these results for current theories of bilingual language regulation, and situate them in terms of recent proposals relating the coupling of the production and comprehension systems more generally.

Keywords: bilingualism, codeswitching, language production, language comprehension, spontaneous speech, phonetic variation

Despite the many potential pitfalls encountered during spontaneous conversation, communication between adult native speakers is generally relatively fluid and effortless. How do speakers and listeners coordinate the numerous subgoals involved in fluent language use, and to what extent does this coordination rely on sensitivity to the distributional properties of natural speech? This paper reports three studies that exploit the unique properties of bilingual language production and comprehension to investigate the resources available to interlocutors during spontaneous communication. Specifically, we examine the processing of phonetic variation related to cross-language activation during English-Spanish codeswitching. The overarching hypothesis is that pressures on the production system can give rise to regularities in the phonetic variation present in the speech stream, and that members of the speech community can ultimately come to exploit these regularities during comprehension. In essence, this proposal is a phonetic analogue of MacDonald's (2013) Production-Distribution-Comprehension account; but where MacDonald focuses on morphosyntactic aspects of linguistic form and processing – asking how processes related to memory retrieval affect word choice and syntactic formulation – we argue that the same logic can be applied to processes involved in the production and comprehension of phonetic variation. We note here that neither the production nor comprehension sides of this proposal are entirely novel. Previous studies of language production have explored processing-related sources of phonetic variation (for monolingual speakers, cf. Bell, Brenier, Gregory, Girand, & Jurafsky, 2009; Gahl, Yao, & Johnson, 2012; Goldrick & Blumstein, 2006; for bilingual speakers, cf. Amengual, 2012; Goldrick, Runnqvist, & Costa, 2014; Jacobs, Fricke, & Kroll, in press), and studies of language comprehension have repeatedly demonstrated that listeners develop acute sensitivity to low-level phonetic regularities (for monolingual listeners, cf. Beddor, McGowan, Boland, Coetzee, & Brasher, 2013; Dahan, Magnuson, Tanenhaus, & Hogan, 2001; McMurray, Tanenhaus, & Aslin, 2002; for bilingual listeners, cf. Ju & Luce, 2004). The novel aspect of our proposal is its focus on the interplay between these processes, and on the mechanisms that support them. To better understand the processes and mechanisms involved, we take advantage of a particular type of language use that sheds light on the relationship between psycholinguistic processing and phonetic variation: codeswitching.

Why codeswitched speech?

Codeswitching offers a window into the relation between linguistic form and processing. Codeswitching is a specialized form of language use, subject to a unique set of linguistic (e.g., Myers-Scotton, 2002; Poplack, 1980, Torres Cacoullos & Travis, 2015) and psycholinguistic (e.g., Broersma & de Bot, 2006; Kootstra, van Hell, & Dijkstra, 2012; Hartsuiker & Pickering, 2008) constraints, and engaging a dedicated mode of language control processes (e.g., Green & Abutalebi, 2013). Habitual codeswitchers are able to regulate the activation of psycholinguistic representations in a way that allows them to fluidly interleave their two languages without obvious disruptions in processing. The surface form of codeswitched speech thus ultimately reflects the end of a long chain of complex processing events, including production-internal processes (Levelt, 1989) as well as interactions between the production and comprehension systems (e.g., Kootstra, van Hell, & Dijkstra, 2010; Loebell & Bock, 2003). On the flip side, listeners' responses to codeswitched speech can provide an index of their expectations given previous experience processing a particular linguistic input (Valdés Kroff, Dussias, Gerfen, Perrotti, & Bajo, in press). In sum, codeswitched speech presents a rich and relatively transparent opportunity for investigating the ways in which the members of a speech community come to produce and comprehend variation in linguistic form.

At this point, it is important to draw a distinction between the study of codeswitched speech and the study of language switching. In studies using the language switching paradigm (e.g., Costa & Santesteban, 2004; Meuter & Allport, 1999), participants are typically cued to switch between their two languages while naming digits or pictures, and the requirement to switch languages generally results in a “switch cost” to response times. It is therefore reasonable to hypothesize that the need to regulate two languages constitutes a source of pressure on the production planning system that could impact the surface form of codeswitched speech. However, experimentally manipulated language switching differs from codeswitching in a number of important ways. Language switching studies typically examine single word production, where the target language can vary at random and is determined by the experimenter (but see Gollan & Ferreira, 2009). During codeswitching, by contrast, grammatical planning mechanisms are fully engaged, the language of all lexical and morphosyntactic elements is fully under the control of the speaker, and in a normal conversational setting, production must additionally be coordinated with comprehension of the interlocutor's speech (Gullberg, Indefrey, & Muysken, 2012). A critical question, then, is whether the switch costs (and cross-language phonological influence; see below) that have previously been observed in experimental settings are particular to laboratory speech, or whether there is any evidence that language regulation has appreciable consequences during spontaneous conversation; it is in no way clear that production planning in these two settings is subject to the same set of demands.

If production patterns during spontaneous codeswitching come to reflect the language regulation processes of bilingual speakers, sensitivity to these regularities would undoubtedly prove beneficial for bilingual listeners. Bilingual comprehension, like production, is widely thought to be language non-selective: a multitude of evidence indicates that even highly proficient bilinguals continue to activate representations in the non-target language, despite the fact that such non-target activation may incur a processing cost (Thomas & Allport, 2000; Von Studnitz & Green, 2002). However, a small amount of work indicates that under some circumstances, bilinguals may take advantage of exogenous cues to language identity to minimize the influence of the non-target language during comprehension (e.g., Ju & Luce, 2004; Libben & Titone, 2009; Schwartz & Kroll, 2006), and interestingly, there may be reason to believe that such cues are relatively more accessible during auditory processing. We return to this point in further depth below. Importantly, very little work (in either the production or comprehension domains) has focused on habitual codeswitchers, arguably the group of language users most likely to develop sensitivity to any statistical regularities in the speech stream that could act as cues to language regulation. The few psycholinguistic studies of codeswitching have examined proficient bilinguals who don't normally engage in codeswitching (e.g., Kootstra, van Hell, & Dijkstra, 2010), but it is as yet largely unknown whether accumulated experience with codeswitching in particular is associated with quantitative or qualitative changes in the mechanisms involved in language regulation. A goal of the current paper is to begin to address this question: can the phonetic form of spontaneous speech provide insight into the language regulation mechanisms of habitual codeswitchers, and if so, can members of a codeswitching community capitalize on such phonetic variation during auditory comprehension?

The remainder of the paper is organized as follows. First, we present an overview of the ways in which bilingual language regulation has been hypothesized to affect the production planning process. We then describe the similarities and differences between the hypothesized pressures on the bilingual production system, and the demands placed on the comprehension system. Subsequently we present three studies: two corpus studies ask whether the phonetic form of spontaneously produced codeswitched speech reflects the processing demands specific to bilingual language production, and an eye tracking study using the visual world paradigm asks whether similarly small fluctuations in phonetic information can be perceived and exploited by habitual codeswitchers during comprehension. We conclude with a discussion of the ways in which these studies contribute to our understanding of bilingual language regulation, in particular, and of the linkages between the production and comprehension systems more generally.

Background

Pressures on the bilingual production system

As alluded to above, the activation of psycholinguistic representations during bilingual production planning is widely thought to be language non-selective; even for highly proficient bilinguals, and even when the experimental setting would be highly conducive to “turning off” the non-target language, studies have consistently provided evidence for transient activation of the non-target language (Kaushanskaya & Marian, 2007; Wu & Thierry, 2012). In certain instances, the activation of the non-target language may be beneficial to production planning processes. For example, bilingual word and picture naming studies typically find relatively faster processing of cognates, words that share both form and meaning in a bilingual's two languages, relative to non-cognates (e.g., Colomé & Miozzo, 2010; Costa, Caramazza, & Sebastián-Gallés, 2000; Hoshino & Kroll, 2008; Jacobs et al., in press). While these findings suggest that a certain amount of cross-talk between languages may be unavoidable, they also demonstrate that cross-language activation is not necessarily detrimental to language processing.

The picture becomes somewhat more complicated when processes other than lexical selection are considered. While the literature on cognate production rather unequivocally demonstrates that cross-language representational overlap can be a boon to lexical access, recent phonetic work indicates that the activation of non-target phonological representations can interfere with accurate phonetic production. Work by Amengual (2012), Goldrick et al. (2014), and Jacobs et al. (in press) demonstrates that the voice onset time (VOT; a timing parameter involved in the production of stop consonants) of cognate words is subject to stronger phonological influence from the non-target language than that of non-cognates. Importantly, the phonetic effects in these studies appear to be the result of online processing demands, rather than (or perhaps in addition to) any qualitative differences in the long-term representation of cognate words. Both Amengual and Goldrick et al. find that (unpredictable) language switching is associated with cross-language phonological influence, suggesting that given adequate time and/or resources, bilinguals can control or resist the effects of cross-language activation on phonetic production. The findings of Jacobs et al. lend credence to this hypothesis: in that study, three groups of bilinguals differing in language proficiency and immersion context all exhibited effects of cross-language activation on naming response times, but only for the non-immersed group of lower proficiency learners did cross-language activation spill over to influence phonetic production. Again, this suggests that the ability to regulate cross-language activation – an ability likely subject to changes in language proficiency and immersion status – determines the extent to which the surface form of bilingual speech overtly reflects the activation of the non-target language during planning.

While these studies indicate that phonetic variation can be used as a tool to investigate the regulation of cross-language activation during speech planning, it is not yet clear whether the results from laboratory studies of language learners and bilinguals who do not codeswitch regularly can be extended to the spontaneous speech of habitual codeswitchers. A number of proposals have hypothesized that bilingual language regulation may be subject to tuning over the lifespan (Green, 1986; 1998; Blumenfeld & Marian, 2011), a process that may yield quantitative and qualitative differences in the regulation mechanisms engaged by habitual versus non-habitual codeswitchers (Green & Abutalebi, 2013). If the presence of phonetic variation in bilingual speech is dependent on disruptions in processing and/or a lack of experience regulating cross-language influence, then it is possible that the highly tuned language regulation capabilities of habitual codeswitchers could eliminate or greatly reduce the amount of language regulation-related phonetic variation produced by these speakers. One recent study does speak against this hypothesis, however: Balukas and Koops (2015) examined the spontaneous speech of habitual codeswitchers from New Mexico and found that the VOT of voiceless English stops varied along with the distance from Spanish, such that English /ptk/ were produced with more Spanish-like VOTs the closer they were to Spanish words. This finding suggests that even for habitual codeswitchers, phonetic variation may provide an index of language regulation during spontaneous speech, although to our knowledge, the Balukas and Koops study is the only one to address this issue thus far. The goal of the production study presented here is to expand on these findings and dig more deeply into the psycholinguistic mechanisms involved in language regulation during spontaneous codeswitching.

Pressures on the bilingual comprehension system

In some respects, the pressures placed on the bilingual comprehension system are similar to pressures on the production system; in both cases, a large body of evidence supports the idea that the activation of psycholinguistic representations is largely language non-selective (see Kroll & Dussias, 2013, for a recent review) and that this non-selectivity may necessitate the recruitment of additional or more finely tuned cognitive resources relative to monolingual processing (Green, 1998). Specifically within the study of bilingual comprehension, a recurring research question concerns the types of cues bilinguals may exploit to help restrict their attention to the target language. Some studies in this vein have focused on contextual and/or syntactic cues to language identity. Schwartz and Kroll (2006), for example, compared word-naming times for cognate and non-cognate words presented in either highly constraining or relatively non-constraining sentence contexts. In their study, both groups of bilinguals (one highly proficient and one of intermediate proficiency) exhibited cognate facilitation in non-constraining sentences, but no cognate facilitation in highly constraining sentences, suggesting that given an adequately constraining context, bilinguals can use language-specific syntactic cues to restrict activation to the target language. It is important to note, however, that the number of studies reporting no mitigating effect of syntactic context on non-target language activation is quite great, perhaps especially in the written modality (cf. Duyck, Van Assche, Drieghe, & Hartsuiker, 2007; Gullifer, Kroll, & Dussias, 2013; Libben & Titone, 2009; Van Assche, Drieghe, Duyck, Welvaert, & Hartsuiker, 2011; Van Assche, Duyck, Hartsuiker, & Diependaele, 2009). Similarly negative results have been found for other potential cues, such as orthographic script (cf. Hoshino & Kroll, 2008 for evidence of persistent cross-language activation in Japanese-English bilinguals) and semantic context (cf. Van Assche et al., 2011; but see Van Hell & de Groot, 2008).

In comparison to the body of work on the written modality, however, relatively little work has examined bilingual comprehension in the spoken modality. Importantly, spoken language offers an additional, extremely rich source of potential language cuing: the phonetic form of the speech stream. A few previous studies suggest that phonetic information can modulate cross-language activation patterns during bilingual comprehension. An early study by Grosjean (1988) used a gating paradigm to investigate whether the recognition of codeswitched words was affected by their phonetic integration into the “base language”; in one condition, French-English bilinguals were asked to transcribe interlingual homophones (words with the same phonological form but different meanings in English and French) whose phonetic realization either did or did not clearly mark their membership in the “guest” language. For example, the last word in the French sentence Il faudrait qu'on [kul] (“It is necessary to [kul]”) could either be transcribed as the English word cool or the French word coule (“sink”). Grosjean found that whether the last word was produced with an English or French accent affected whether it was transcribed as an English or French word, although this is perhaps not surprising given that in this condition, phonetic detail was the only cue available to assist participants in determining the language of the target word.

Li (1996) expanded on Grosjean's findings by asking Chinese-English bilinguals to transcribe codeswitched words that were either phonetically integrated into the base language or not, but that were presented in highly constraining or less constraining sentence contexts1. Li found main effects of both phonetic integration and sentence context, but no interaction between them: codeswitched words whose phonetic production matched the language of the carrier phrase were more difficult to recognize than words that retained their language-specific phonetic cues (similarly to Grosjean, 1988), and more constraining sentence contexts were associated with improved recognition, regardless of phonetic integration. The lack of interaction between these factors indicates that, contrary to Schwartz and Kroll (2006), the constraining sentence contexts in Li's study did not wipe out cross-language effects associated with the target word's form, perhaps suggesting that a word's phonetic form provides a more robust source of language-specific cues than its orthographic form.

While language-specific phonetic information can in some cases function as a source of confusion (Grosjean, 1988; Li, 1996), under the right circumstances phonetic information can be exploited to the listener's benefit. Ju and Luce (2004), for example, demonstrated that during single word recognition, language-specific phonetic cues can mitigate the activation of lexical representations in the non-target language. Using the visual world paradigm, they found that Spanish-English bilinguals relied on VOT to restrict their attention to the lexicon of the target language; when listeners heard a Spanish word (e.g., playa, “beach”) produced with Spanish-like VOT, they were no more likely to look at an interlingual distractor picture (e.g., pliers) than at an unrelated distractor (e.g., ruler). However, when they heard Spanish words produced with English-like VOT, they spent significantly longer gazing at the interlingual (i.e., English) distractor picture, indicating that low-level cues such as stop consonant VOT are involved in the formation of initial hypotheses concerning the language membership of lexical items. Again, this may suggest that cues to language identity are more robust or more readily accessible in the auditory than in the written modality (cf. Hoshino & Kroll, 2008), although it should be noted that not all studies of bilingual auditory word recognition converge on this conclusion (cf. Lagrou, Hartsuiker, & Duyck, 2011; Spivey & Marian, 1999; Weber & Cutler, 2004).

Of course, phonetic information does not exist in a vacuum; it must be integrated with information at other levels of linguistic processing. Recent work by Lagrou, Hartsuiker, and Duyck (2013) investigated the interaction between phonetic information and sentence processing by asking whether the global accentedness of a speaker's voice impacts cross-language activation during auditory word recognition in a sentence context. Dutch-English bilinguals performed an auditory lexical decision task on interlingual homophones embedded in low- or high-constraint English sentences that were produced by either a native Dutch or native English speaker. Both the highly constraining sentence context and the English-accented speech reduced (but did not eliminate) the degree of Dutch activation during English word recognition. These findings are again consistent with the idea that bilingual comprehension is fundamentally language non-selective, but that under some circumstances, cues to language identity may allow listeners to reduce the activation of the non-target language.

Taken together, these studies suggest that the phonetic realization of speech provides an important source of language cuing information, leading to either confusion or facilitation as a function of the particular task and perhaps the group of bilinguals being tested. Interestingly, the vast majority of studies of bilingual auditory comprehension have investigated how the phonetic realization of the target word itself affects the activation of lexical representations in the non-target language. To our knowledge, no study thus far has asked whether anticipatory phonetic cues to language switching modulate the degree of cross-language activation during word recognition, likely in part because the presence of such cues is not well established in the production literature. As argued above, however, if habitual codeswitchers' phonetic production reliably reflects cross-language activation patterns experienced during speech planning, then listeners may be able to key in to these patterns as a means of predicting upcoming language switches.

Bilingual production, comprehension, and the bigger picture

The studies reviewed above are consistent with the claim that bilingual production and comprehension are largely language non-selective processes. In both domains, the majority of the available evidence indicates that the activation of representations in the non-target language is automatic and nearly unavoidable. The interesting questions currently lie in determining the particular contexts that promote reduction or suppression of the non-target language, and the degree to which speakers' past experience allows them to modulate this reduction or suppression. While the answers to these questions are obviously critical for understanding bilingual language regulation, they also provide important data on the relationships between different levels of linguistic processing, on the relation between language processing and more general cognitive processes (i.e., prediction, inhibition, learning), and on the extent to which these relationships hold for both language production and comprehension. The latter issue is particularly important for understanding the similarities and differences between production and comprehension processing: are both processes impacted by the same factors, and to the same extent?

Thus while the specific phenomena that we examine here are necessarily unique to bilingual speech, we contend that these effects provide a window into processes that are relevant for understanding the relationship between production and comprehension more generally. Bilingual phonetic variation allows us to probe the circumstances under which pressures on the production planning system affect the surface form of spontaneously produced speech, and consequently, bilingual comprehension serves as a natural testing ground for the question of listener sensitivity to naturally occurring distributional patterns. The logic of the present study was therefore to first identify the types of phonetic variation associated with language regulation in codeswitched speech, and then to ask whether listeners can exploit these potential cues to anticipate when a codeswitch is about to occur.

Corpus Study 1: Speech Rate

The first production study examined speech rate (and speech disfluency) in the Bangor Miami Corpus of spontaneous codeswitching (Deuchar et al., 2014). The Bangor Miami Corpus consists of 56 conversations, each around 30 minutes long, between 85 highly balanced Spanish-English bilinguals. In total, the corpus contains approximately 250,000 words and 35 hours of speech. Speakers typically knew each other quite well; many were family members or good friends. The age range represented is from 9 to 78, with a median age of 29.5. The analyses presented here rely on the CHAT transcripts prepared by Deuchar et al., which consist of word-level transcription and language tagging. The corpus also contains syntactic category information, which was automatically generated using the Bangor Autoglosser (http://bangortalk.org.uk/autoglosser.php).

Speech Rate Analysis

Method

The Bangor Miami Corpus is divided up into a series of “utterances” consisting of one main clause each. For the analyses described here, we used an automated script to categorize each utterance as unilingual English (n = 26,801), unilingual Spanish (n = 13,999), or codeswitched (n = 2,527). Unilingual utterances were those that contained words in only one language, while codeswitched utterances contained at least one word in English and one in Spanish. For codeswitched utterances, the script used the word-level language tags to determine the location of any language switches.

Inclusion/exclusion criteria

The speech rate analysis was restricted to codeswitches characterized by a single insertion of “other language” material; for example, English–Spanish switches and English–Spanish–English switches were included, but English–Spanish–English– Spanish switches were not. This resulted in the exclusion of 141 codeswitched utterances (5.6% of all switches). Because previous research has demonstrated that the more dominant language tends to be the most affected under language mixing conditions (e.g., Guo, Liu, Misra, & Kroll, 2011), we restricted the analysis to switches from the predominant language of the conversation to the non-predominant. For example, in a predominantly Spanish conversation, only utterances that began in Spanish and switched into English were included in the codeswitched sample, under the hypothesis that anticipation of the switch into the non-predominant language would more strongly affect the predominant language than vice versa. This resulted in the exclusion of 1,085 (45.5%) of the remaining codeswitched utterances. To reduce any influence from the language of the preceding utterance (thereby isolating anticipatory changes in articulation), we included only those codeswitched utterances that were preceded by a unilingual “matching-language” utterance; for example, utterances that switched from English to Spanish were only included if they were preceded by a unilingual English utterance. This resulted in the exclusion of 373 (28.7%) of the remaining codeswitches. Next, because we wished to examine speech rate preceding codeswitches, only utterances with switches that occurred on the third word or later were included, ensuring that all measures of preceding speech rate were based on a minimum of two words. 185 (19.9%) of the remaining codeswitches were excluded at this step. Finally, 10 additional codeswitches were excluded due to errors in language tagging, leaving a total of 733 codeswitched utterances that met the inclusion criteria.

After identifying the set of eligible codeswitched utterances, a set of “matching” unilingual utterances were identified to serve as a control comparison. Unilingual utterances were matched according to the following criteria: 1) they must begin in the same language as their matched codeswitched utterance; 2) they must occur in the same conversation, and be produced by the same speaker, as their matching counterpart; 3) they must be the same length in words; 4) they must also be preceded by a unilingual utterance in the same language, and 5) the syntactic category at the point of the language switch (plus or minus one word) must be the same. Two examples of utterance matching are given below. All codeswitched utterances lacking a match were excluded from the analysis (n = 219), and up to five matching unilingual utterances were included for each codeswitch (with an average of 2.8 unilingual matches per codeswitch). The final number of codeswitched utterances included in the analysis was 514, and the number of matched unilingual utterances was 1,436. Table 1 provides summary information for the final data set examined in Analysis 1. The final sample represented 68 different speakers, in 48 different conversations.

Table 1.

Summary information for the data set used in the analysis of speech rate and disfluency in the Bangor Miami Corpus.

| Codeswitched Utterances | Matched Unilingual Utterances | |

|---|---|---|

| n Total | 514 | 1436 |

| % Disfluent | 37.6% | 24.5% |

| n English(-to-Spanish) | 285 | 808 |

| n Spanish(-to-English) | 228 | 628 |

| mean length in syllables (SD) | 12.9 (6.4) | 10.2 (5.3) |

| mean n syllables before switch point (SD) | 6.8 (4.6) | 5.8 (3.9) |

| most common syntactic category at switch point | Noun (n = 182) | Noun (n = 618) |

| mean syllable duration (SD) | 177.6 ms (74.3 ms) | 161.4 ms (66.9 ms) |

| 1a) | Donde siempre tenemos el [Where we always have the] |

delay. (N) |

(5 words) |

| 1b) | Yo las vi en [I saw them at] |

casa. (N) [home.] |

(5 words) |

| 2a) | Did you think like projects |

en un día? (PREP) [in one day?] |

(8 words) |

| 2b) | They gave me two tickets |

to the movies. (PREP) |

(8 words) |

Measuring speech rate

The measure of speech rate examined here is the average syllable duration for the portion of the utterance leading up to the switch point (or matched non-switch point, for the unilingual utterances), excluding pauses. Previous work has sometimes drawn a distinction between speech rate, which includes pauses, and articulation rate, which excludes them from the calculation (Crystal & House, 1990; Verhoeven, De Pauw, & Kloots, 2004). According to this distinction, then, the present analysis concerns articulation rate. To derive this measure, it was necessary to determine how many syllables preceded the switch point or matched non-switch point. Since Spanish orthography is almost entirely transparent, with only a few lexical exceptions, an automated script was used to count the syllables in each Spanish word based on its orthography. For English words, syllables were counted based on the pronunciation given in the Carnegie Mellon Pronouncing Dictionary (Weide, 2007). The precise onset and offset of each utterance were hand-labeled by a research assistant using the Praat program for acoustic analysis (Boersma & Weenink, 2014), as were the onset and offset of the switch word or matched non-switch word, as well as the onset and offset of any stretches of speech that were perceived as pauses2. Pause durations were subtracted out from the portion of the utterance preceding the switch point, and this duration was divided by the number of syllables leading up to the switch point (or matched non-switch point).

In the course of labeling time points for the speech rate analysis, all utterances containing speech disfluencies (at any point, whether before or after the switch) were hand-tagged. All disfluent utterances were excluded from the speech rate analysis, but a subsequent analysis asked whether there was a significant difference in the rate of disfluency in codeswitched versus unilingual utterances (see below).

As a reliability check, a subset of 10% of the utterances (144 unilingual and 51 codeswitched) were randomly chosen for recoding by the same research assistant approximately one year after the initial measurements were taken. The correlation between mean syllable durations was 0.97 (t(164) = 52.6, p < .0001), with an average difference of 1 ms. An exact binomial test indicated that significantly more utterances were coded as disfluent in the reliability check (30%, vs. 22% for the full data set, p < .01), but this increase was roughly equivalent across utterance types: 10% more of the unilingual utterances were coded as disfluent, versus 8% more of the codeswitched utterances, perhaps indicating that generally stricter inclusion criteria were used for the reliability check.

Statistical Analysis and Results

The mean syllable durations formed a skewed distribution and were log-transformed for the purpose of statistical analysis. Mixed-effects linear regression was used to obtain the best fitting model predicting average syllable duration. A baseline model including by-speaker and by-conversation random intercepts was used as the starting point, and variables were then added in a step-wise fashion to determine which predictors significantly improved model fit; this was evaluated via chi-squared tests of model log-likelihoods, with a relaxed alpha level for control variables of 0.15. By-speaker random slopes for the predictors of interest were included wherever possible, with correlations between random effects. Following construction of the control model, leave-one-out comparisons were used to verify that all predictors remained significant with all other variables in the model. The lmerTest package in R was used to estimate p values for individual beta coefficients using MCMC sampling (Kuznetsova, Brockhoff, & Christensen, 2013); these are included for reference.

The following control variables were examined before testing for an effect of codeswitching: language (English vs. Spanish) preceding the switch point or matched non-switch point, utterance length in words, utterance length in syllables, location of the switch point within the utterance, syntactic category at the switch point, position of the utterance within the conversation, mean conditional probability of bigrams preceding the switch point (cf. Myslín & Levy, in press), conditional probability of the bigram spanning the switch point, mean conditional probability of bigrams including the switch point, and mean conditional probability of all bigrams in the utterance (cf. Jurafsky, Bell, Gregory, & Raymond, 2001). The best fitting control model contained significant effects of language, position of the switch point within the utterance, syntactic category at the switch point, and the mean conditional probability of bigrams leading up to the switch point. The average syllable duration for English was 183.0 ms, versus 144.0 ms for Spanish (χ2(1) = 17.6, p < .0001 in leave-one-out comparisons; β = -0.027, t = -4.7, pMCMC < .0001). Switch points (and matched non-switch points) that occurred later in the utterance were associated with faster speech rates (χ2(1) = 17.5, p < .0001, β = -0.016, t = -4.4, pMCMC < .0001). Note that the Switch Point predictor entered into competition with both of the utterance length predictors, but model comparisons indicated that the position of the switch point accounted for the most unique variance (neither utterance length predictor remained significant with Switch Point in the model), so it was retained. Because the vast majority of codeswitches occurred on a noun, “noun” was taken as the reference level for the Syntactic Category predictor (χ2(12) = 26.6, p < .01). Switches that occurred on pronouns and conjunctions were associated with significantly longer preceding syllable durations (β = 0.015, pMCMC < .01; β = 0.014, pMCMC < .05, respectively), while switches on proper nouns were associated with marginally shorter syllable durations (β = -0.017, pMCMC = .08). Finally, higher mean conditional probability of bigrams leading up to the switch point was associated with marginally faster speech rate (χ2(1) = 2.6, p = .11, β = -0.036, t = -1.6, pMCMC = .10).

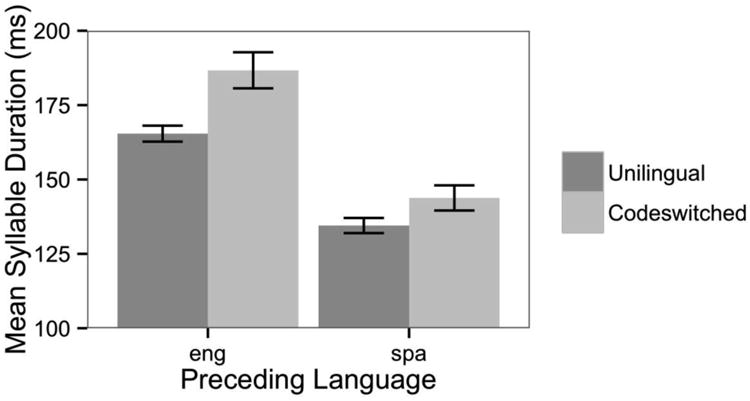

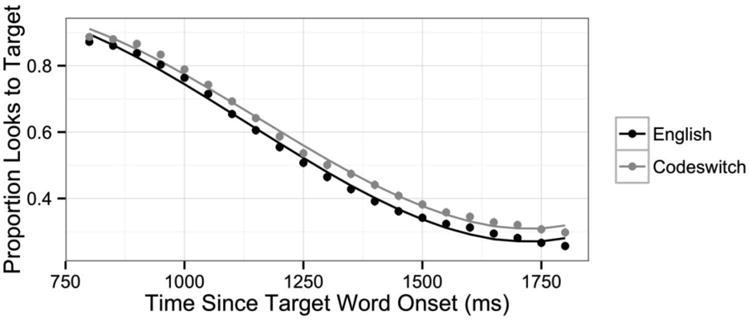

Once the best fitting control model was obtained, the effect of Utterance Type (unilingual vs. codeswitched) was examined. The addition of Utterance Type to the control model resulted in a significant gain in model log-likelihood (χ2(1) = 18.2, p < .0001): mean syllable durations preceding a codeswitch were longer than in matched unilingual utterances, on average by about 16 ms (β = 0.016, t = 3.9, pMCMC = .001). This effect is depicted in Figure 1. There was no interaction between Language and Utterance Type, and the main effect of Utterance Type remained significant when by-conversation random slopes were included in the model3. The final model is included as an appendix.

Figure 1.

Disfluency Analysis and Results

The overall rates of disfluency are given along with the other descriptive statistics for the data set in Table 1: 24.5% of unilingual utterances were disfluent, versus 37.6% of codeswitched utterances. To determine whether this difference was significant, a mixed-effects logistic regression was constructed in the same manner, and examining most of the same predictors, as in the speech rate analysis. (The exception being that the conditional probability of disfluent sequences was not considered; the only measure of bigram frequency examined in this analysis was conditional probability spanning the switch point.) The best-fitting control model contained only two predictors: the position of the switch point within the utterance and the utterance length in words. A greater probability of disfluency was observed for earlier switch points and matched non-switch points (χ2(1) = 3.1, p = .08 in leave-one-out comparisons; β = -0.321, t = -1.7, pMCMC = .09), and also for longer utterances (χ2(1) = 116.7, p < .0001, β = 2.079, t = 10.0, pMCMC <.0001). When added to this control model, Utterance Type was a significant predictor: codeswitching was reliably associated with a greater probability of speech disfluency (χ2(1) = 10.2, p = .001, β = 0.444, t = 3.2, pMCMC = .001), and this effect was significant when by-speaker random slopes for the effect of Utterance Type were included in the model. The final model is included as an appendix.

Discussion for Corpus Study 1

To our knowledge, these data provide the first evidence for a processing cost associated with switching languages in spontaneous speech. While speech rate can vary for many reasons (e.g., emphasis, clarification), some of which could also be associated with codeswitching, the cooccurrence of the speech rate and disfluency effects in this data set strongly suggest that processing-related factors are involved. Interestingly, the effect of the Syntactic Category predictor may also support this conclusion. We found that speech rate leading up to proper nouns was marginally faster than speech rate leading up to common nouns. In fact, the estimated coefficient associated with proper nouns completely canceled out the coefficient for the Utterance Type predictor (β = -0.017 vs. β = 0.016, respectively), indicating no apparent cost associated with switching into a proper noun. This is potentially consistent with the Triggering Hypothesis (Clyne, 2003; Broersma & de Bot, 2006), the idea that spontaneous codeswitching can be triggered as a result of cross-language phonological overlap. Broersma and de Bot provided the first quantitative evidence that codeswitches were significantly more likely to occur within clauses containing phonological trigger words – words with the same phonological representation across a bilingual's two language, such as proper nouns. Our findings suggest a possible extension of this hypothesis: in a new, much larger corpus, representing a different pair of languages, we find that speech rate leading up to trigger words (proper nouns) is marginally faster than speech rate leading up to common nouns; in fact, speech rate leading into codeswitched trigger words was no slower than speech rate leading into non-codeswitched common nouns, perhaps suggesting that the same cross-language phonological overlap that promotes triggered codeswitching also facilitates the production of trigger words more generally.

Of course, the missing piece of information is whether speech rate is consistently faster leading up to proper nouns than common nouns in the absence of cross-language phonological overlap, and this is not currently known. To determine whether the apparent “triggering effect” on speech rate was significantly different in unilingual versus codeswitched utterances, we added an interaction between Syntactic Category and Utterance Type to the fitted speech rate model. While this interaction term marginally improved the model (χ2(12) = 20.8, p = .05), the estimated coefficient for speech rate preceding proper nouns in codeswitched utterances was not significantly different from that of proper nouns in unilingual utterances (β = -0.002, t = -0.12, pMCMC = .9). The only syntactic categories reliably associated with different coefficients in a codeswitching versus unilingual context were pronouns (β = 0.019, t = 1.86, pMCMC = .06) and verbs (β = 0.039, t = 3.44, pMCMC < .001), which were both associated with relatively slowed speech rate leading into the switch point. These findings are clearly not conclusive, but certainly suggestive of differential processing costs associated with structurally different types of codeswitches. Future studies should explicitly address the intersection of triggered codeswitching, bilingual syntactic planning, and changes in speech rate and disfluency.

The finding that codeswitching may be associated with a processing cost even in the spontaneous speech of habitual codeswitchers is highly pertinent to the recent debate surrounding the question of how language experience could give rise to the cognitive and neural changes associated with bilingualism (Green & Abutalebi, 2013; Green & Wei, 2014). As discussed above, previous evidence suggesting that bilingual language regulation may require the recruitment of additional cognitive resources has largely been based on the language-switching paradigm. While the language-switching paradigm provides a high degree of experimental control, it is quite far-removed from typical language use in a number of ways, and its corresponding lack of ecological validity raises questions as to whether the bilingual's everyday linguistic experience engages the type of control mechanisms that could eventually foster an advantage in executive function. The finding that codeswitching appears to be associated with a processing cost in spontaneous conversation thus adds a crucial piece of information to the puzzle: even when highly balanced, proficient codeswitchers retain full control over the choice to switch languages, this switch does not come without a cost.

While this finding fits nicely into a large body of experimental literature, it is also worth considering two alternative – although not mutually exclusive – possibilities. First, in some cases, codeswitching may serve as a repair strategy for already disrupted speech planning. That is, while we think it likely that the intention to switch languages can give rise to a processing cost, it is also possible that when faced with difficulties in lexical retrieval and/or syntactic formulation, bilinguals may choose to switch languages rather than persist in the current, temporarily problematic language. The finding that most of the switches in the corpus occurred on common nouns tends to support the idea that lexical retrieval difficulty may tend to promote codeswitching, at least in some instances. Second, it is possible that the slowed speech rate preceding codeswitches reflects some difference in prosodic organization in codeswitched versus unilingual utterances4; for example, it is possible that codeswitching tends to take place at prosodic boundaries (cf. Shenk, 2006). If this is the case in the present data set, then the slowed speech rate could in part reflect phrase-final lengthening (Klatt, 1976). Both of these issues should be the subject of future research. For now we simply note that these explanations are not at odds with one another; it is certainly possible that all three factors play a role.

Corpus Study 2: Voice Onset Time

The starting point for the voice onset time (VOT) analysis was the companion comprehension study that we present below, where we ask whether proficient codeswitchers can use phonetic modulations in an English carrier phrase to anticipate codeswitches into Spanish. We accordingly restricted the VOT analysis to variation in the production of the English voiceless stops /ptk/. As a first pass, the set of utterances identified for inclusion in the speech rate analysis was searched for instances of /ptk/ that preceded a stressed vowel (and that did not follow the sound /s/). A total of 562 /ptk/ words were initially identified for analysis: 315 in unilingual English utterances, and 247 preceding codeswitches into Spanish. However, a very large number of tokens were found to be unanalyzable for various reasons: approximately 60% were excluded due to poor sound quality in the recordings, overlapping speech, speech disfluency, and /t/ flapping, the phenomenon in American English whereby /t/ sounds preceding unstressed syllables are produced similarly to /d/, as in the word butter5. This first pass analysis comprised 227 tokens in total: 133 from unilingual English utterances, and 94 from codeswitched utterances. A mixed-effects regression predicting log-transformed VOT was constructed following the same procedure as in previous analyses, but no effect of Utterance Type was found (mean VOT in both utterance types was 40 ms; χ2(1) = 0.10, p = .75).

To increase statistical power, we considerably relaxed the search criteria for tokens of /ptk/ and conducted a second analysis adopting some aspects of the approach taken in Balukas and Koops (2015). In a second pass through the corpus, we searched all codeswitched utterances (not just those from the speech rate analysis) for tokens of English /ptk/ that occurred before a stressed vowel (but not after /s/), with no restrictions on the type of codeswitch or the language of the preceding utterance. This resulted in the identification of 1,169 possible English VOT tokens, in 828 unique codeswitched utterances. To serve as a control comparison, we then identified up to five unilingual English utterances that matched each codeswitched utterance according to the speaker, conversation, and utterance length in words, where the preceding utterance was also unilingual English. These matching unilingual English utterances were then searched for tokens of English /ptk/, resulting in the identification of 1,992 possible VOT tokens in 1,277 unique unilingual utterances.

A research assistant examined all 3,161 possible VOT tokens and hand-labeled the utterances for the following time points: the onset and offset of the utterance, the onset and offset of any switches into Spanish, the onset and offset of the word containing the VOT, and the VOT itself, measured from the stop release burst to the onset of periodicity associated with the following vowel. In cases where the burst and/or vowel onset were not clearly visible on the waveform display, the token was marked as unusable: VOT could not be reliably determined for a total of 1,781 (56.3%) of the possible tokens. Of the remaining 1,380 tokens, 591 (42.8%) contained a speech disfluency that did not affect the word containing the VOT of interest; because the statistical analysis indicated that the inclusion versus exclusion of utterances containing speech disfluencies did not qualitatively alter the results, we report the results including the disfluent utterances. The final data set contained usable VOT tokens from 345 unique words, produced by 67 speakers in 49 conversations; 498 of these were in codeswitched utterances, versus 882 in unilingual utterances.

As a reliability check, the first author (blinded to the utterance type of each token) labeled the VOT of 33% of the tokens that were marked as usable. The correlation between these measurements and those of the research assistant was 0.94 (t(454) = 57.3, p < .0001), with an average measurement difference of 1 ms.

Two analyses were then conducted: the first analysis compared (log-transformed) English VOT in codeswitched versus control (unilingual) utterances, and the second examined the effect of proximity to Spanish in only the subset of codeswitched utterances. The following control predictors were examined in both analyses: consonant (/p/ vs. /t/ vs. /k/; cf. Lisker & Abramson, 1964), vowel height (high vs. low; cf. Klatt, 1975), stress of the target syllable (primary vs. secondary; cf. Klatt, 1975), speech rate (i.e., average syllable duration calculated over the entire utterance; cf. Boucher, 2002), length of the target word in syllables, utterance length in words and in syllables, position of the target word within the utterance, and language of the final word of the preceding utterance (English vs. Spanish). All models included random intercepts by speaker, conversation, and target word, and by-speaker and by-word random slopes were also included for the predictors of interest.

In the analysis comparing codeswitched to unilingual utterances, the best fitting control model included significant effects of consonant (χ2(1) = 60.6, p < .0001 in leave-one-out comparisons; see appendix for beta coefficients), number of syllables in the target word (χ2(1) = 5.4, p = .02, β = -0.040, t = -2.3, pMCMC = .02), and speech rate (χ2(1) = 58.7, p < .0001, β = 0.308, t = 7.1, pMCMC < .0001). When added to this control model, the fixed effect of Utterance Type was significant (χ2(1) = 4.7, p < .05), and this was true when by-speaker and by-word random slopes for the effect of Utterance Type were included. English VOT was overall significantly shorter in codeswitched utterances than in matched unilingual English utterances, though only very slightly (38.7 vs. 40.7 ms; β = -0.05, pMCMC = .03). The final model is included as an appendix.

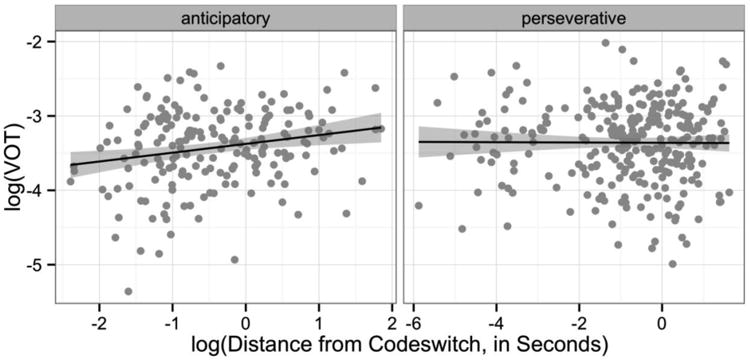

While this analysis indicates that cross-language phonological activation affects the realization of English VOT in codeswitched utterances, it does not address the timing of the effect: is it the anticipation of upcoming Spanish that affects English VOT, or residual activation of Spanish following a switch out of Spanish (or both)? To answer this question, we conducted a second analysis of only the English VOT tokens that occurred in codeswitched utterances, splitting the data set into the 348 tokens that were preceded by some amount of Spanish within the same utterance, and the 191 tokens that were followed by Spanish within the same utterance. (There were 41 tokens that were both preceded and followed by Spanish; these tokens were included in both subsets of the data.)

For the second analysis, the control model obtained from the analysis of all codeswitched tokens was used for each subset of the data, and the amount of time between the target VOT (measured from the stop burst) and either the offset or onset of the nearest Spanish word (in the Preceding versus Following Spanish analyses, respectively) was log-transformed and entered as a predictor. Tokens of /ptk/ ranged from 0.091 to 6.351 seconds preceding switches into Spanish (providing an index of anticipatory changes to articulation), and tokens ranged from 0.003 to 5.066 seconds following switches (corresponding to perseverative changes). In the analysis of Preceding Spanish, the amount of time between the offset of the nearest Spanish word and the English VOT that followed it did not improve the control model (χ2(1) = 0.3, p = .58). In the analysis of Following Spanish, however, the time between the target English VOT and the onset of the nearest following Spanish word did significantly improve the control model (χ2(1) = 5.8, p = .01, β = 0.215, t = 2.5, pMCMC = .01), which was true when random by-speaker and by-word slopes for the effect of Following Spanish were included. These analyses indicate that the effect of cross-language phonological activation on English VOT is anticipatory in nature; this effect is depicted in Figure 2, and the final model is included as an appendix.

Figure 2.

Discussion for Corpus Study 2

Consistent with the only other known study of VOT in spontaneous codeswitching (Balukas & Koops, 2015), we found that English VOT varied systematically as a function of proximity to Spanish words, such that English words produced closer to a codeswitch boundary were realized with significantly more Spanish-like VOT. However, our analyses build on Balukas and Koops' work in a number of ways. For one, Balukas and Koops did not report whether VOT in their data set was conditioned by speech rate. Given the results of our Corpus Study 1, it was crucial to demonstrate that any differences in consonant VOT in unilingual versus codeswitched speech were not a byproduct of systematic changes in speech rate associated with switching languages. Because we find an effect of cross-language activation when speech rate is taken into account, our results corroborate and strengthen the findings of Balukas and Koops.

We also build on these authors' findings by investigating the question of whether cross-language phonological influence in spontaneous speech can be considered primarily anticipatory or perseverative. When we examined English words that occurred before versus after switches into Spanish, we found that the influence of Spanish on English was significant only in the anticipatory direction. This finding provides crucial data regarding the time course of language regulation during spontaneous bilingual speech. When speaking English, habitual codeswitchers in the Bangor Miami Corpus exhibit increased Spanish activation in anticipation of switching into Spanish, but once the language switch has occurred, they show no evidence of residual Spanish activation. This observation raises numerous questions, i.e., Does a similar effect occur when these speakers speak Spanish? Does either the language that is affected or the direction of the effect (anticipatory versus perseverative) vary as a function of language dominance? Are these results specific to habitual codeswitchers, or would similar findings be observed in other populations? These questions must be left up to future research, but two main points bear discussion here: the direction of causality leading up to the codeswitch, and the lack of perseveration following it.

As in the speech rate analysis, it is not possible to determine the direction of causality from the present data. On the one hand, it is possible that speakers evince increased cross-language phonological influence leading up to a codeswitch because they intend to switch languages, and the anticipation of the language switch leads them to preemptively begin activating the language not currently in use. Under this interpretation, it is the intention to switch languages that causes Spanish influence to creep into the phonetic realization of English words (and perhaps by the same token, that necessitates the engagement of a suppression mechanism associated with the slowed speech rate in Corpus Analysis 1). On the other hand, it is also possible that the unintended activation of Spanish representations while speaking English (due to some unknown factor) in effect triggers the codeswitch. Under this interpretation, it is the change in the relative activation levels of the two languages that causes the codeswitch, and that is reflected in the low-level phonetic detail of words prior to the moment when the decision to switch languages is made.

Regardless of the causal relationship between the act of codeswitching and the changes in language activation that temporally lead up to it, what is clear from our data is that once the decision to switch languages occurs, the activation of the now-non-target language is immediately greatly reduced. Taken together, a possible interpretation of our findings is that of active anticipation and suppression on the part of the speaker; a speaker who intends to switch languages may begin preemptively increasing the activation of the language not in use (giving rise to anticipatory cross-language phonological influence) while simultaneously suppressing the activation of the language currently in use (yielding slightly slowed speech rate), allowing them to seamlessly switch into the other language (and show no signs of perseverative activation of the previously active language). It will be important to test this hypothesis in other populations of bilinguals, and to pinpoint the specific instances where this general pattern holds (or not) within the population of habitual codeswitchers. Moreover, we readily submit that this is not the only possible interpretation of our corpus findings, and it does not address the idea of “codeswitching as a recovery device” that was proposed in the discussion of the disfluency results. We maintain that the picture is likely far more complicated, and will require a more nuanced consideration of the factors involved, than we have proposed here. What is clear, however, is that phonetic variation in the speech of habitual codeswitchers reveals multiple ways in which bilingual language regulation impacts the surface form of spoken language.

Comprehension Study

Given the findings of our production studies, the goal of the comprehension study was to determine whether the types of fine-grained phonetic changes associated with cross-language activation could be used by bilingual listeners to predict when a codeswitch is about to happen, and whether such a prediction could aid in the suppression of the non-target language. Also, as mentioned in the background section on bilingual auditory comprehension, the present study was aimed not only at making the link between bilingual production and comprehension more explicit, but also at extending previous findings in several ways. First, we ask whether anticipatory changes in articulation, rather than changes in the realization of the target word itself, affect listeners' processing of the target. Second, we present the target stimuli within a mixed language experimental context, with the goal of making the experimental setting somewhat more similar to that in which bilingual codeswitchers normally find themselves than a blocked or single-language design allows. And finally, the phonetic cues present in our stimuli are explicitly modeled on (and derived from) naturally occurring low-level differences found in natural speech.

Method

Participants

A total of 36 Spanish-English bilinguals were tested. Four participants (all heritage Spanish speakers) were excluded from the analysis because they scored lower than one standard deviation below the group mean on the Spanish portion of the Boston Naming Test. Two additional participants were also excluded: one highly proficient L2 Spanish speaker who reported learning Spanish in school, and one participant who reported a childhood language disorder that resulted in involuntary language switching. Table 2 summarizes the language background information for the remaining 30 participants. The average age was 22.9 (SD = 7.1). All participants reported exposure to both Spanish and English from an early age, as well as continued use of both languages on a daily basis, including language mixing with friends and family. Twenty-three participants reported that Spanish was their more dominant language (vs. seven for English), and eight reported some knowledge of a language other than Spanish or English. Thirteen participants were Puerto Rican, with the remainder of the group composed of participants born in the U.S. (n = 8), Central America (n = 7), or elsewhere in the Caribbean (n = 2).

Table 2.

Language background information for participants in the comprehension study.

| English Mean (SD) | Spanish Mean (SD) | |

|---|---|---|

| n Correct Items, Boston Naming Test (max. 30) | 18.8 (4.9) | 15.0 (5.6) |

| % Exposure at Home | 30.3 (21.7) | 68.8 (21.2) |

| % Exposure at Penn State | 68.8 (18.1) | 29.0 (16.2) |

| Age Began Acquiring | 4.8 (3.0) | 1.0 (1.0) |

| Age Became Fluent | 9.1 (4.5) | 5.0 (2.4) |

| Self-Rated Speaking | 9.1 (0.8) | 9.5 (1.1) |

| Self-Rated Understanding | 9.4 (0.8) | 9.8 (0.8) |

| Self-Rated Reading | 9.3 (0.8) | 9.3 (1.1) |

| Self-Rated Foreign Accent | 4.7 (3.1) | 2.4 (2.9) |

Materials

Picture stimuli

All picture stimuli were drawn from two sources: half were colorized versions of the Snodgrass and Vanderwart set of line drawings (Rossion & Pourtois, 2004), and half were from the Peabody Picture Vocabulary Test (Dunn & Dunn, 2007).

Auditory stimuli

Auditory stimuli were recorded in a sound-attenuated booth using an Audix HT-5 head-mounted microphone. The speaker, a female highly balanced Spanish-English bilingual from Puerto Rico, was asked to simply read sentences as they appeared on a computer screen, in a clear but natural speaking style. All target words were recorded in the English carrier phrase, “Click on the picture of the [target].” The speaker first recorded the full set of English targets, followed by the full set of Spanish targets, followed by all of the English targets again. Each target word was presented twice within each recording block, in randomized order. The speaker's productions were subsequently examined and manipulated using the Praat software for phonetic analysis (Boersma & Weenink, 2014). When the upcoming target word was English, the speaker produced average VOTs of 68 and 22 ms in “click” and “picture”, respectively. When the upcoming word was Spanish, these shortened slightly to 64 and 20 ms, respectively. The average duration of the phrase “click on the” was 427 ms in anticipation of an English target word, and 429 ms in anticipation of switching to Spanish. The latter portion of the carrier phrase, “picture of the”, averaged 647 ms in unilingual English sentences, versus 661 ms in codeswitched sentences, a lengthening of approximately 14 ms. This “case study” corroborates previous work on cross-language effects on phonetic production (e.g., Amengual, 2012; Balukas & Koops, 2015; Goldrick et al., 2014) as well as the results of our corpus analyses. We note that the durational differences that the speaker naturally produced while recording the stimuli were quite small, on the order of 5% - 10% for VOT and around 2% for speech rate. While previous work has demonstrated that monolingual listeners are sensitive to VOT differences as small as 5 ms (McMurray, Tanenhaus, & Aslin, 2002), work on the perception of speech rate has typically examined differences on the order of 40% (Baese-Berk et al., 2014).

All stimuli were spliced and manipulated in the same way using Praat, ensuring that any effects could not be due to differences in acoustic manipulation across conditions. For each target word, a single acoustic token was selected from among the speaker's productions and spliced on to a different carrier phrase from the one in which it originally appeared. Two different carrier phrase productions were used for each target, such that participants heard 192 different carrier phrases during the experiment (each presented twice). Table 3 summarizes the distribution of trials in the experiment, along with the durational aspects of the stimuli that were explicitly controlled. A total of 24 trials (12 carrier phrases, each heard twice) contained anticipatory phonetic cues signaling an upcoming codeswitch: these carrier phrases were originally produced by the speaker during the codeswitching block, and had their VOTs and word durations scaled to fall consistently within a slightly shorter (VOT) or longer (word duration) range than the carrier phrases in the “uncued” condition. Note that other phonetic cues may well have been present in the cued carrier phrases (for example, the speaker seemed to produce a “clearer”, more Spanish-like /1/ in some codeswitched utterances), but only the durational cues were explicitly controlled. For the rest of the 168 codeswitched trials, the target Spanish target word was spliced onto a carrier phrase that originally preceded an English word; for example, the word “pot” in “Click on the picture of the [pot]” was replaced with the Spanish word “pato”. These uncued codeswitch trials also had their VOTs and speech rate adjusted to fall within the restricted range given in Table 3. The result was that all stimuli sounded extremely natural, with no noticeable coarticulatory mismatches, and that VOT and speech rate were reliable, albeit extremely subtle and only occasional, cues to codeswitching within the context of the experiment.

Table 3.

Summary of the experiment design for the comprehension study.

| Condition | n Unilingual English Trials | n Codeswitched Trials | “Click” VOT | “Picture” VOT | “Click on the” Duration | “Picture of the” Duration |

|---|---|---|---|---|---|---|

| Uncued | 192 | 168 | 65 – 75 ms | 20 – 30 ms | 410 – 430 ms | 612 – 654 ms |

| Cued | -- | 24 | 55 – 60 ms | 12 – 17 ms | 410 – 430 ms | 660 – 680 ms |

Design

On each trial of the experiment, participants were instructed to “click on the picture of the [target]”, where the target could be produced in either English or Spanish. Twelve target-interlingual distractor pairs were constructed for the purpose of gauging cross-language activation during word recognition, taking the Ju and Luce (2004) stimuli as a point of departure. These critical pairs are given in Table 4. We introduced several additional constraints to the stimulus set: all critical Spanish targets were two syllables, with stress on the first syllable, and all English interlingual distractors either followed this same pattern or were one syllable words. Additionally, none of the targets or distractors in the experiment were cognates, and only half of the critical targets began with voiceless stop consonants; because it was unclear whether or how the phonetics of the target word would interact with the phonetics of the carrier phrase, we selected six critical targets beginning with /ptk/ and six additional critical targets beginning with other sounds (liquids, nasals, and the voiceless fricative /s/)6. The experiment used a within-participant design, with each listener responding to the twelve critical target words in each of four different contexts: participants heard all critical Spanish targets twice with the English interlingual distractor (ILD) picture present on the screen (once with anticipatory phonetic cues signaling a codeswitch, and once without such cues), and twice without an ILD present (once with phonetic cuing, and once without). This design allows us to ask whether phonetic cuing has an effect on target recognition absent any strong competition from a non-target lexical item (cf. Dahan et al., 2001) versus when a non-target language competitor is immediately present in the display (e.g., Ju & Luce, 2004; Spivey & Marian, 1999).

Table 4.

Critical target word-interlingual distractor pairs in the comprehension study.

| Critical Target (English Translation) | Interlingual Distractor |

|---|---|

| pato (duck) | pot |

| perro (dog) | parrot |

| taza (cup) | tie |

| queso (cheese) | carrot |

| cama (bed) | comb |

| cola (tail) | corn |

| sapo (toad) | sock |

| libro (book) | leaf |

| lápiz (pencil) | lock |

| mano (hand) | money |

| niña (girl) | knee |

| huesos (bones) | whistle |

A critical aspect of the experiment was that the probability of a codeswitched trial be the same as the probability of a non-codeswitched (unilingual English) trial. We additionally aimed to reduce the possibility that participants would become aware of the target-ILD relationships. Given these constraints, participants identified the twelve critical items four times in Spanish with the cuing/distractor manipulations described above, but also four times in English with no such manipulations. Likewise, the interlingual distractors were identified four times in English and four times in Spanish. We also included an additional 24 filler items, each identified four times in each language, for a total of 384 trials (48 total items × 2 languages × 4 presentations in each language). Because the critical target items were presented twice in the context of their ILDs, we additionally added the constraint that all targets must occur twice with each of their potential distractors. We therefore paired each target word with 12 possible distractor pictures, ensuring that if participants were able to learn the co-occurrence restrictions on the target-distractor pairs, this would be uniform across trial types and would not allow the critical target items to be more easily predicted than any other items. The result was that each picture was presented a total of 32 times (8 times as a target, 24 times as a distractor).

The presentation of the trials was pseudo-randomized as follows. All participants responded to the same four practice trials at the beginning of the experiment, and were then asked whether they had any questions about the procedure. Participants then saw the same twelve items, in the same fixed order, none of which contained a critical target. The remainder of the experiment was organized into 31 blocks of twelve items each such that each block contained no repetitions of the same target (in either language), as well as no more than three critical trials (those containing a critical target item, whether cued or uncued, with or without an ILD) per block. The order of these 31 blocks was randomized by the experimental program used to present the stimuli (Experiment Builder; SR Research, Ottawa, Canada). Within each block, the trial order was fixed, with approximately half of the trials in unilingual English and half codeswitched, although this ratio varied up to 8:4 (or 4:8). Critical trials never occurred as the first or last item of the block, to ensure that a minimum of two non-critical trials always intervened between critical items. Additionally, critical trials always occurred immediately following a unilingual English trial. Since it was not known whether switching from unilingual English to a codeswitch would affect the results, we opted to keep this factor constant.

Procedure

Data collection took place in a sound-attenuated booth using an EyeLink 1000 eye tracker (SR Research, Ottawa, Canada) with a chin rest. Participants wore a set of professional quality headphones and were told that on each trial, they would see four pictures displayed on the computer screen, and their task was to simply click on the correct picture as quickly and accurately as possible. They were also told that they would be listening to a native speaker of Puerto Rican Spanish who would name some of the pictures in English, and some in Spanish. Calibration of the eye tracker took place at the beginning of the experimental procedure, and then throughout the experiment as needed. Participants were given the opportunity to take a short break approximately 25%, 50%, and 75% of the way through the experiment. The eye tracking task lasted approximately one hour, after which participants completed an abridged version of the Boston Naming Test (Kaplan et al., 2001) containing 30 English and 30 Spanish items (15 high and low frequency words in each language), as well as a detailed language history questionnaire (the LEAP-Q; Marian, Blumenfeld, & Kaushanskaya, 2007), which was supplemented with a set of questions concerning participants' experience with language mixing. The entire experimental procedure lasted approximately 90 minutes, and participants were paid $15 for their time.

Results

In all analyses presented here, we focus on only the critical target items. Recall that these twelve items were presented four times in Spanish (twice each in the cued vs. uncued conditions, and twice each with an ILD present vs. no ILD) and four times in English (with no cuing or ILD manipulation). In each analysis, we first ask whether participants' response to the codeswitched trials (all conditions collapsed together) differ significantly from the English trials. We then examine the effects of the ILD and cuing manipulations within the codeswitched trials only. Following Barr, Levy, Scheepers, and Tily (2013), each model uses the maximal random effects structure that would converge and statistical significance is evaluated using chi-squared comparisons of model log-likelihood. Estimated p values for individual beta coefficients were obtained using MCMC sampling via the lmerTest package in R (Kuznetsova et al., 2013) and are included for reference. The full model specifications are given in the appendix.

Mouse click data

Accuracy for the mouse click data was at ceiling; of a total of 2,880 critical trials, 7 were responded to incorrectly or timed out, yielding an overall accuracy rate of 99.8%. For the mouse click response time (RT) analysis, RT was calculated as the interval between the onset of the target word and the press of the mouse button (for accurate trials only). Table 5 gives the mean RTs for the critical items in English and the four codeswitching conditions. RTs were log-transformed for the purposes of statistical analysis. In the analysis comparing English to codeswitched trials, a mixed effects regression returned significant main effects of trial number and of language: RTs sped up over the course of the experiment (χ2(1) = 15.8, p < .0001, β = -0.0001, t = -4.0, pMCMC < .0001), and RTs were overall significantly slower to codeswitched than to English trials (χ2(1) = 22.1, p < .0001, β = 0.035, t = 4.2, pMCMC < .0001). These predictors did not interact.

Table 5.

Mean response times by trial type in the comprehension study. Response time was computed as the time of the mouse click minus the auditory onset of the target word. See text for details.

| Trial Type | Mean RT (SD), ms |

|---|---|

| Unilingual English | 1080.5 (252.3) |

| Uncued – No ILD Present | 1115.8 (239.9) |

| Cued – No ILD Present | 1084.0 (241.4) |

| Uncued – ILD Present | 1143.9 (244.4) |

| Cued – ILD Present | 1126.2 (264.3) |

In the analysis of the subset of codeswitched trials, there was still a main effect of trial number (χ2(1) = 8.1, p < 01, β = -0.0001, t = -2.8, pMCMC < .01). There was also a main effect of Distractor, such that mouse clicks were slower on trials where the ILD was present in the display (χ2(1) = 8.1, p < .01, β = 0.029, t = 2.8, pMCMC < .01), and a main effect of Cuing, such that mouse clicks were faster on trials where anticipatory phonetic cues were present (χ2(1) = 6.0, p = .01, β = -0.025, t = -2.2, pMCMC < .05). These predictors did not interact.

Eye fixation data

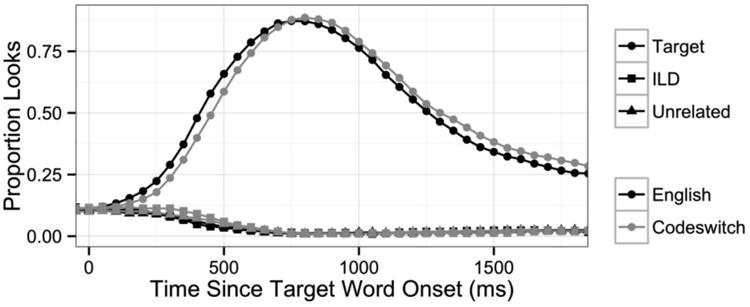

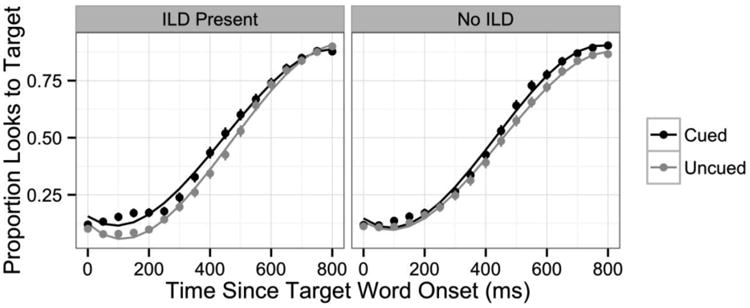

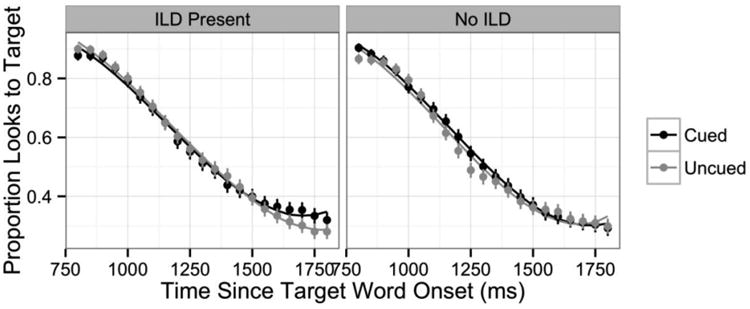

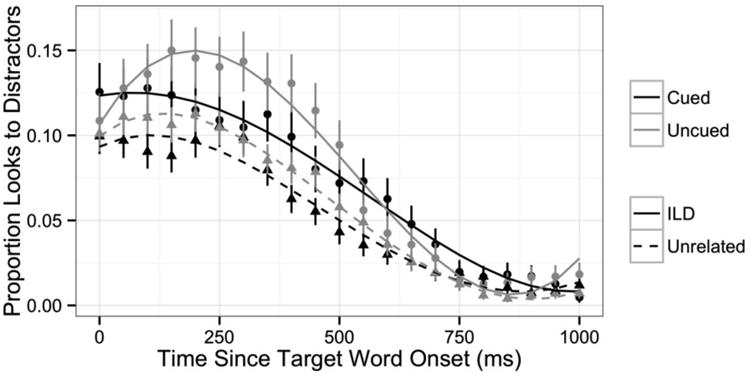

The proportion of looks to the target, interlingual distractor (ILD), and unrelated distractors (averaged together) is plotted for codeswitched versus English trials in Figure 3 (but recall that there was never an ILD present on English trials). The analysis of the eye fixation data focuses on three time windows: an early target activation window (from 0 to 800 ms following auditory onset of the target word) corresponding to the initial activation of the target, a late target activation window (from 800 to 1800 ms) corresponding to the decay in target activation, and the competitor activation window (from 0 to 1000 ms) corresponding to the activation and decay of the non-target competitors. These windows were chosen based on visual inspection of the fixation time course plots.

Figure 3.

The eye fixation data were modeled using growth curve analysis (Mirman, 2014), a statistical method that allows the use of mixed effects regression modeling, and that does not violate the independence assumption7. Following Mirman (2014), orthogonal polynomials were used to model changes in fixation proportions over time. In the analysis of looks to the target picture, the proportion of fixations within each time window was modeled using third-order orthogonal polynomial time terms, which were permitted to interact with each of the experimental variables of interest. The analysis of looks to non-target competitors was similar but used fourth-order orthogonal polynomial time terms to capture the more complex shape of the fixation functions. Under this approach, effects of the experimental variables on the model intercept reflect differences in the overall number of fixations throughout the entire time window, while interactions between experimental variables and the time terms reflect differences in the rate of change in fixation proportions.

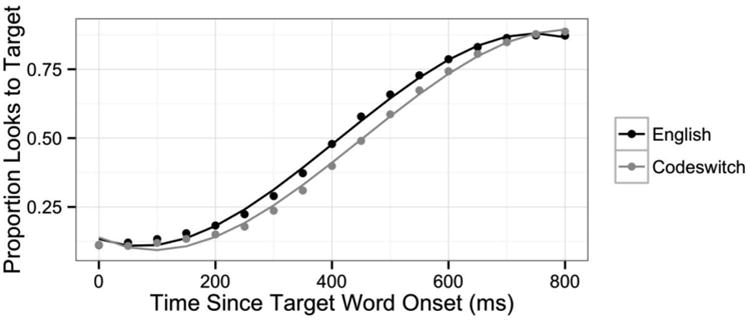

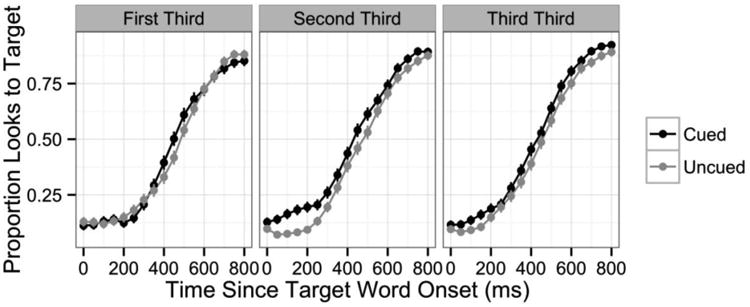

In the analysis of English versus codeswitched trials in the early target activation window (0 ms to 800 ms following target word onset), there was a main effect of Language on the intercept (χ2(1) = 116.3, p < .0001) reflecting the fact that overall, participants spent more time looking at the target on English trials than on codeswitched trials (β = -0.035, t = -4.5, pMCMC <.0001). There was also a significant interaction between Language and the quadratic time term (χ2(1) = 71.2, p < .0001), reflecting the different time course of target activation in the two language conditions. On codeswitched trials, the increase in target activation was initially delayed relative to English trials (likely due in part to the presence of interlingual distractors on some trials), but by the end of the window, the proportion of fixations to the target in the two language conditions was approximately equal (β = 0.114, t = 4.9, pMCMC < .0001). These effects are shown in Figure 4, which plots the empirical data along with smoothed lines depicting the model fit.

Figure 4.