Abstract

Policy Points:

Scarce resources, especially in population health and public health practice, underlie the importance of strategic planning.

Public health agencies’ current planning and priority setting efforts are often narrow, at times opaque, and focused on single metrics such as cost‐effectiveness.

As demonstrated by SMART Vaccines, a decision support software system developed by the Institute of Medicine and the National Academy of Engineering, new approaches to strategic planning allow the formal incorporation of multiple stakeholder views and multicriteria decision making that surpass even those sophisticated cost‐effectiveness analyses widely recommended and used for public health planning.

Institutions of higher education can and should respond by building on modern strategic planning tools as they teach their students how to improve population health and public health practice.

Context

Strategic planning in population health and public health practice often uses single indicators of success or, when using multiple indicators, provides no mechanism for coherently combining the assessments. Cost‐effectiveness analysis, the most complex strategic planning tool commonly applied in public health, uses only a single metric to evaluate programmatic choices, even though other factors often influence actual decisions.

Methods

Our work employed a multicriteria systems analysis approach—specifically, multiattribute utility theory—to assist in strategic planning and priority setting in a particular area of health care (vaccines), thereby moving beyond the traditional cost‐effectiveness analysis approach.

Findings

(1) Multicriteria systems analysis provides more flexibility, transparency, and clarity in decision support for public health issues compared with cost‐effectiveness analysis. (2) More sophisticated systems‐level analyses will become increasingly important to public health as disease burdens increase and the resources to deal with them become scarcer.

Conclusions

The teaching of strategic planning in public health must be expanded in order to fill a void in the profession's planning capabilities. Public health training should actively incorporate model building, promote the interactive use of software tools, and explore planning approaches that transcend restrictive assumptions of cost‐effectiveness analysis. The Strategic Multi‐Attribute Ranking Tool for Vaccines (SMART Vaccines), which was recently developed by the Institute of Medicine and the National Academy of Engineering to help prioritize new vaccine development, is a working example of systems analysis as a basis for decision support.

Keywords: systems analysis, population health, public health practice, cost‐effectiveness

As the costs of diagnosing, managing, and treating diseases continue to escalate—compounded by expenditures associated with the aging of populations worldwide—improving ways to reduce the burden and consequences of disease becomes critical. While efforts to treat disease may appear to be relatively well funded through governmental programs and private health insurance, disease prevention remains the unseen stepchild, generally underfunded and underappreciated unless a crisis occurs, as demonstrated by the recent Ebola panic. Even though planning and prioritization are essential to all areas of health, the scarcity of resources makes careful planning all the more important to population health and public health practice.

Many approaches have been taken to plan for resource allocation and deployment in public health. Very few analyses, however, have ventured into the realm of full cost‐benefit analysis, since health care analysts and providers hesitate to place a specific dollar value on potential health outcomes. But cost‐effectiveness analysis, a near relative of cost‐benefit analysis, has been commonly used and often is preferred because it expresses health benefits in natural units, such as quality‐adjusted life years (QALYs) gained or disability‐adjusted life years (DALYs) prevented.1

Lacking complete data or proper assessment tools, some analysts have turned to simpler measures, such as lives saved or life years saved as a proxy for specifying the importance of public health interventions. Many public health programs are evaluated on intermediate (process) goals, such as smoking cessation rates, on the premise that these achievements may lead to better population health. Moreover, preventive interventions are often evaluated according to whether they help save money, which is, of course, an unfair goal, one that almost no standard diagnostic or therapeutic intervention could (or is expected to) meet. As attractive as such simple metrics may seem, they cannot provide the value and richness that many stakeholders in the public health communities collectively desire or demand. Continuing to accept the harsh “cost savings” metric for preventive interventions can have a serious impact on strategic decisions to improve population health.

Inclusive strategic planning in public health appears to miss a key ingredient: individuals with specific training in systems analysis that can assist in decision making and priority setting. We believe that institutions of higher education can significantly augment analytical capabilities by creating graduate or advanced undergraduate courses in public health, public policy, and related professional programs that incorporate content relating to systems analysis for students to carry out and lead strategic planning efforts. Thus we issue a call to action by proposing that higher education institutions around the world arm the next generation of public health professionals with competencies in systems‐based strategic planning tools and models. These courses may not necessarily appeal to all public health students, nor would it be necessary for all public health programs to offer them, but filling this void will ultimately improve population health and public health practice. Most desirably, course work would provide an introduction to systems‐based approaches and methods that would enhance the value of public health practitioners. In addition, more robust next‐generation training would help create highly competent systems‐based analysts who could support the requisite policy decisions.

These skills differ from those found in the core strengths of most public health programs, which typically are foundational training in epidemiology and related topics. Those disciplines emphasize the proper estimation of population parameters, risk factors, characteristics of disease burden, and causality inference. In contrast, the issues in systems approaches focus on integrating various relative values of health outcomes and applying an analytical framework to comprehensively examine trade‐offs and choices among alternative approaches to improving public health. In settings in which group decisions affect policy, it may also be important to teach students about social choice and the strengths and weaknesses of various voting systems that might be used to create group consensus when multiple alternatives are available.

How Does Strategic Planning for Population Health Differ?

Strategic planning for population health and public health practice differs from strategic planning for generic business development. Most important, strategic planning for public health begins with an understanding of the needs of a specific population, their disease burdens, and the associated interventions. Relevant and accurate data are essential to strategic planning for health promotion. Public health programs generally excel in these areas, especially in sequences of epidemiologic training. A key component lacking, however, is training in systems methods of using such data in program (ie, intervention) prioritization and evaluations. Our informal review of public health course syllabi with “strategic planning” in the title revealed that these courses often focused on planning in not‐for‐profit organizations and emphasized planning in the organization's marketing or competitive advantage. Without presuming that these types of courses dominate the offerings of US public health programs, we simply note that courses with such a focus are unlikely to fulfill the goals that we set in this article.

Another issue pertains to goals of the organization conducting a strategic plan. The vast majority of businesses are devoted to a single goal: maximizing profit for the owners. A similarly single‐minded focus may apply to governmental organizations’ strategic planning. For example, in its official mission statement, the US Internal Revenue Service states its role as “help[ing] the large majority of compliant taxpayers with the tax law, while ensuring that the minority who are unwilling to comply pay their fair share.”2 Similarly, the US Strategic Command (STRATCOM) in the US Department of Defense has the statutory authority to “detect, deter and prevent strategic attacks against the United States and our Allies.”3

More often than not, an organization has an apparent single goal but a complex set of potential mechanisms to measure success. The US Environmental Protection Agency (EPA), for example, has the official mission “to protect human health and the environment.”4 This mission is subdivided into categories such as air (“to preserve and improve air quality in the United States”) and water (“to ensure that drinking water is safe, and restore and maintain oceans, watersheds, and their aquatic ecosystems”). These categories are further subdivided into various operational branches of the EPA, each with its own focus and mission. The emphasis placed on each subcategory has the effect of creating a multigoal organization. And so it is with the complex missions of population health and public health practice. One can envision a state or federal health agency with an overall goal of enhancing the health of the population for which it has responsibility. How can planners—or the legislators making funding decisions—know how well they are performing or succeeding?

To answer such questions, planners routinely develop indicators to measure a program's success. In public health, these metrics for a new or improved intervention might include premature deaths prevented; life years saved; QALYs gained; incident cases of a disease prevented; health care treatment costs saved; lost workforce productivity prevented; cost‐effectiveness of interventions employed to achieve other specified goals; rates of healthy lifestyle choices adopted (eg, smoking cessation or obesity reduction); vaccination; or medical screening test use rates (eg, Pap smears or mammography). Some of these directly measure valued outcomes (eg, prevention of premature death or disease incidence), and others are valued because they are thought to lead to better outcomes of primary importance (eg, exercise might reduce cardiovascular complications).

Sometimes public health organizations have multiple goals rather than multiple ways of measuring success toward a single goal. In either case, though, the challenge remains the same: How does one select the measures that matter (goals)? How much emphasis does one place on each of the chosen goals (attributes)? And critically, what does one do when improvement on one measure comes at the cost of reducing success on another measure (competing goals)?

As an example of competing goals, consider the publications of the Institute of Medicine of the National Academies of Sciences, Engineering, and Medicine. A pair of reports published in the mid‐1980s (one for domestic, the other for international use) prioritized new vaccines for development using the single metric of infant‐mortality equivalents prevented, or what we would now probably describe as life years saved.5, 6 A subsequent publication released in 2000 produced a domestic vaccine development priority list applying only the metric of cost‐effectiveness analysis, calculated as dollars per quality‐adjusted life year saved.7

These metrics differ greatly and sometimes lead to quite different priorities. Interventions with the greatest number of life years saved may not be the most cost‐effective, and vice versa. Yet both these studies—and indeed, almost every cost‐effectiveness analysis ever published—end (or should end) with the caveat that many other factors beyond this metric will affect the choice and performance of a particular program. Neither of these single metrics satisfies multiple stakeholders with their own and often conflicting perspectives and priorities.
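The divergence between the two metrics can be illustrated with a minimal sketch. The three interventions and all of their figures below are invented for illustration and do not come from the reports cited above; the function names are likewise hypothetical.

```python
# Hypothetical interventions. Every number here is invented for illustration.
interventions = {
    "A": {"life_years": 10_000, "cost": 500_000_000},
    "B": {"life_years": 4_000, "cost": 40_000_000},
    "C": {"life_years": 1_000, "cost": 5_000_000},
}


def rank_by_life_years(data):
    """Order interventions from most to fewest life years saved."""
    return sorted(data, key=lambda name: data[name]["life_years"], reverse=True)


def rank_by_cost_effectiveness(data):
    """Order interventions from lowest to highest cost per life year saved."""
    return sorted(data, key=lambda name: data[name]["cost"] / data[name]["life_years"])


print(rank_by_life_years(interventions))
print(rank_by_cost_effectiveness(interventions))
```

With these assumed figures the two orderings are exact opposites: the intervention that saves the most life years is also the most expensive per life year saved.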

How can strategic planning and systems analysis help resolve this dilemma? The answer is that these approaches allow the inclusion of attributes that cannot be incorporated in cost‐effectiveness analyses. Systems‐based approaches provide ways to do so clearly, formally, and transparently. They can be implemented with fewer data demands than comparable cost‐effectiveness analysis methods would require, and they allow easier and more robust sensitivity analysis to show which attributes and/or value weights most strongly affect decision recommendations.
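One simple form of such a sensitivity analysis is to sweep the weight placed on one attribute and record which option ranks first at each setting. The sketch below assumes an additive weighted score, two hypothetical candidates ("X" and "Y"), and two attributes already scaled from 0 to 100; none of these names or values come from the source.

```python
# Hypothetical candidates with attribute scores on a 0-100 scale (invented values).
candidates = {
    "X": {"health": 90, "cost": 20},
    "Y": {"health": 50, "cost": 80},
}


def score(weights, values):
    """Additive weighted score; assumes weights sum to 1."""
    return sum(weights[a] * values[a] for a in weights)


def top_candidate(weights, options):
    """Return the highest-scoring option (ties go to the alphabetically first)."""
    return max(sorted(options), key=lambda name: score(weights, options[name]))


def sweep_health_weight(options, step_pct=10):
    """Map each health weight (in percent) to the top-ranked candidate."""
    results = {}
    for pct in range(0, 101, step_pct):
        w = pct / 100
        results[pct] = top_candidate({"health": w, "cost": 1 - w}, options)
    return results


for pct, winner in sweep_health_weight(candidates).items():
    print(f"health weight {pct:3d}% -> {winner}")
```

In this invented example the recommendation switches from Y to X once the health weight rises past roughly 60%, which is the kind of threshold a sensitivity analysis is meant to expose.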

The Educational Chasm

We believe that these more broadly inclusive methods must be developed and taught in undergraduate and graduate public health programs to illuminate the many factors that influence decision making in practice and enable diverse stakeholders to reach a consensus despite differing perspectives and measures of success in public health. Much of the current specialized software that incorporates some of the unique aspects of strategic planning in public health rests in the domain of cost‐effectiveness analysis. Several well‐developed cost‐effectiveness programs readily support public health planning as long as the cost‐effectiveness paradigm suffices.

A recent publication of the World Health Organization recommends generalized cost‐effectiveness as the basis for making health policy choices.8 Similarly, a tutorial from the Centers for Disease Control and Prevention proposes either cost‐benefit or cost‐effectiveness analysis as the basis for the evaluation, planning, and prioritization of public health programs.9 Yet, as any survey of typical cost‐effectiveness analysis will reveal, practitioners of the technique invariably acknowledge the role of numerous other aspects of the decision that their approach has not taken into account. Better decision support analyses, therefore, must find ways to incorporate such issues directly and formally in systems‐based models.

Accordingly, we maintain that training public health students requires a special focus, special data, and special techniques based on systems analysis. Moreover, specialized interactive software systems may enhance students’ experience and capability. As we noted earlier, this training need not be universal among public health students but should be widely, perhaps universally, available as an online course option.

A Strategic Systems Approach: Multicriteria Decision Analysis

New approaches to decision making can help reduce or remove the limitations of cost‐effectiveness and cost‐benefit analyses. Modern analytic tools for multicriteria systems analysis allow the consideration of many complex facets of various programs’ options, and they allow decision makers to specify, using weights, the importance assigned to each program's attribute. These approaches often come at the apparent cost of greater data complexity, but they bring the advantages of clarity of thought, increased precision and transparency, and (because of the transparency) they may enable parties holding different views to reach a consensus on the desirability of policy and program strategies.

Using multiattribute utility models, one can also begin the prioritization process simply by asking decision makers to rank the various attributes being considered for analysis. A mathematical technique available in the utility model then converts these rankings of attributes into formal decision weights. This approach, created by the mathematician Pierre‐Simon Laplace in the early 1800s and now known as the rank order centroid method, calculates the average of all sets of weights (adding up to 100%) that are consistent with the rankings chosen by the decision maker. It has been shown to yield outcomes with only small losses in value when compared with more formally elicited decision weights.10
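The rank order centroid weights have a closed form: with n ranked attributes, the attribute in position i receives weight (1/n)(1/i + 1/(i+1) + ... + 1/n). The following is a minimal sketch of that formula, not the SMART Vaccines implementation; the function name is hypothetical.

```python
from fractions import Fraction


def rank_order_centroid(n):
    """Return rank order centroid weights for n attributes.

    Position 0 of the result is the weight of the top-ranked attribute.
    Exact fractions are used so that the weights sum to exactly 1.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    return [
        sum(Fraction(1, k) for k in range(i, n + 1)) / n
        for i in range(1, n + 1)
    ]


weights = rank_order_centroid(3)
print([float(w) for w in weights])
```

For three attributes the weights are 11/18, 5/18, and 1/9, so the top-ranked attribute carries about 61% of the total weight.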

An essential feature of multiattribute utility theory models is setting boundaries for evaluating each potential attribute for the worst and best possible outcomes. If a new vaccine can prevent zero deaths per year, then that is a worst‐case scenario for that candidate. But if a new vaccine can prevent N deaths (where N is the highest possible number of vaccine‐preventable deaths according to the relevant population conditions), then that is a best‐case scenario. These boundaries create a common system of measurement for all attributes, no matter what their intrinsic unit of measure is. In effect, all attributes are measured with a common yardstick with, for example, 0 representing nil success on each attribute's dimension and 100 representing maximum achievable success.
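As a rough illustration of the common yardstick, the sketch below maps a raw attribute value onto a 0 to 100 scale between its worst and best bounds. Linear scaling is an assumption made here for simplicity; the source does not state the shape of the scaling function, and the function name is hypothetical.

```python
def scale_attribute(value, worst, best):
    """Map a raw value onto 0-100, where worst -> 0 and best -> 100.

    Assumes linear scaling (an illustrative choice). Works whether higher
    raw values are better (best > worst) or lower values are better
    (best < worst). Values outside the bounds are clamped.
    """
    if best == worst:
        raise ValueError("best and worst bounds must differ")
    score = 100.0 * (value - worst) / (best - worst)
    return max(0.0, min(100.0, score))


# Deaths averted per year: 0 is worst, N = 2000 (hypothetical) is best.
print(scale_attribute(500, worst=0, best=2000))
# Cost per dose: 100 (hypothetical) is worst, 0 is best.
print(scale_attribute(25, worst=100, best=0))
```

Because every attribute lands on the same scale, deaths averted and cost per dose can be combined even though their natural units differ.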

SMART Vaccines: A Public Health Decision Support System

The Institute of Medicine, in collaboration with the National Academy of Engineering, recently developed a platform technique for vaccine prioritization known as the Strategic Multi‐Attribute Ranking Tool for Vaccines (SMART Vaccines), which uses a multiattribute utility model.11, 12, 13 We discuss this software tool here to illuminate the complexity of decision support tools that can assist with strategic planning and evaluation for public health policies, extending beyond the traditional approach of cost‐effectiveness analysis. Although the generalization of SMART Vaccines to strategic planning and prioritization for any public health interventions will be evident, we endorse the systems‐based approach that underpins the basic model. We also explore the potential of the software to be used as an interactive learning tool in professional degree courses in public health. Nearly all quantitatively based professional programs in other domains—such as engineering, architecture, urban planning, and business management—combine both hands‐on training with software tools and traditional lecture‐based approaches to learning. We believe that a practical planning tool such as SMART Vaccines can similarly enrich the understanding and preparation of students and stimulate further work in public health education.

SMART Vaccines requires diverse data to describe the population, its vaccine‐preventable disease burden, and the costs of treating those diseases. As discussed by Madhavan and colleagues, these data will likely come from national or subnational sources and may have varying levels of accuracy or completeness.14 In some cases, the relevant data on infection and death rates from disease X in country Y may be found in public health agencies serving the population or in the published literature. Ultimately, the initial estimates may be assembled by groups of experts based on their expert opinion.

As with any formal evaluation process, the quality of the data drives the quality of the analysis. Some of the requisite data (eg, demographic) in SMART Vaccines are relatively stable over time. The costs of treating various diseases may move generally with overall health system costs (in which case relative costs will not change meaningfully), but the cost of treatment data, particularly as new treatments emerge for various diseases, must be updated. If a new, low‐cost medication is developed that cures malaria, the desirability of a malaria vaccine will diminish, so the analysis must be recalibrated to accommodate this change. We cannot predict how often that will occur, only that the continuous monitoring of the medical market will show when an update is necessary. The data on disease burden typically change slowly for many diseases (eg, cancer) in a given population but may change quite rapidly for some infectious diseases (eg, Ebola, SARS), depending on the nature of outbreaks.

From the entered data, SMART Vaccines calculates the numerical values for the health and economic outcomes. The software allows the user to select up to 10 attributes for vaccination programs from among 28 attributes, as well as any personally defined attributes. (The literature on multiattribute utility theory emphasizes that the effect of lower‐ranked attributes becomes vanishingly small once the number of attributes exceeds 7 or 8; hence the limit of 10 attributes is built into this program.)

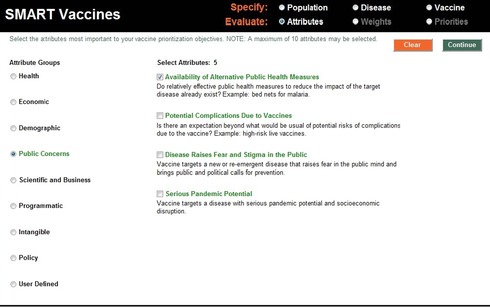

From the health benefits, the user may select premature deaths averted per year, incident cases prevented per year, QALYs created, or DALYs averted. From the economic considerations, users can choose attributes such as one‐time (development) costs, costs per QALY (or DALY), net direct costs, or workforce productivity gains. Other attributes of the program include details like how well a new vaccine might fit into a country's existing immunization program and whether it requires cold‐chain storage capability (Figure 1). These types of attributes are the essence of the many other considerations mentioned in the discussion of cost‐effectiveness analyses but typically are not factored into decisions made without formal data, analysis, or public discussion.

Figure 1.

Attribute Selection Screen of SMART Vaccines

SMART Vaccines contains clusters of health, economic, demographic, public concern, scientific and business, programmatic, intangible, policy, and user‐defined attributes for analyses. This example screen (taken from software version 1.1) shows the attribute “availability of alternative public health measures” from the listing in the public concerns category.

These other considerations are not, and in most cases cannot be, incorporated into formal cost‐effectiveness analysis. Some lie wholly beyond the realm of cost‐effectiveness (and cost‐benefit) analysis, for example, indications that an intervention specifically benefits populations of special interest to policymakers. The multicriteria approach allows ways to at least introduce these factors into the framework formally.

The current version 1.1 of SMART Vaccines contains basic population data for the 34 countries in the Organisation for Economic Co‐operation and Development (OECD) plus India and South Africa. For all available populations, users need to add information about the disease burden created by vaccine‐preventable diseases, including existing incidence, mortality rate, and costs of treatment for each category of disease. After entering the disease burden data, users enter information about hypothetical vaccine candidates that might be created to prevent any of these diseases. These vaccine attributes include factors such as development costs; cost per dose to purchase, distribute, and use; the anticipated coverage rate; efficacy (ie, the percentage of those receiving the vaccine who acquire immune status); the risk of not achieving scientific success; and even such program attributes as a vaccine fitting in an existing immunization program or its reliance on cold‐chain storage. For vaccine candidates, these data, of course, come from expert estimates, since the vaccines do not yet exist. When SMART Vaccines is used to choose among existing vaccines for deployment, the relevant parameters normally are well anchored with data derived from previous uses of other vaccines.

For each candidate vaccine being evaluated, the user must specify values for any attributes that are not calculated by SMART Vaccines. These user‐selected values arise for attributes typically falling outside the realm of standard cost‐effectiveness analysis, for example, “fit with existing vaccine programs,” “potential pandemic vaccine,” “generates fear in the population,” and “special benefit for children.” These attributes enter the analysis with specific and visible weights attached to them, so the decision process is clear. Once the user has completed the data entry, the evaluation process begins, based on up to 10 attributes, as described earlier. The software then asks the user to rank these selected attributes, beginning with their first choice, then their last choice, next their second choice, and so forth, until all options are ranked.

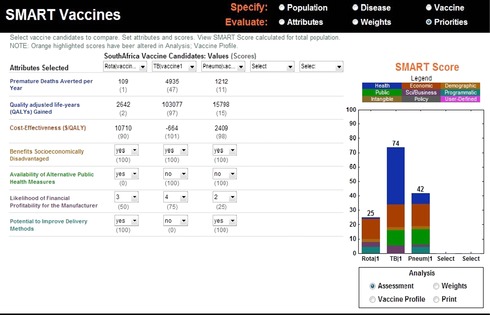

Based on the user's ranking of attributes, SMART Vaccines then suggests a set of initial weights,10 which the user can adjust. Finally, SMART Vaccines asks the user to select the vaccine candidates to be compared in this analysis. After saving these results, the user can calculate scores for a set of up to 5 vaccine candidates and compare the results directly with previously calculated results so long as the attribute list and associated weights have not changed (Figure 2).
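The scoring step can be sketched as a weighted sum of attribute values that have already been placed on a common 0 to 100 scale. The additive form, the attribute names, the weights, and the values below are all assumptions for illustration; the actual SMART Vaccines computation may differ.

```python
def weighted_score(weights, scaled_values):
    """Combine 0-100 attribute values into one score using decision weights.

    Assumes an additive multiattribute form with weights that sum to 1.
    """
    if set(weights) != set(scaled_values):
        raise ValueError("weights and values must cover the same attributes")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[name] * scaled_values[name] for name in weights)


# Hypothetical weights and scaled attribute values for one vaccine candidate.
weights = {"deaths_averted": 0.5, "cost_effectiveness": 0.3, "program_fit": 0.2}
candidate = {"deaths_averted": 80, "cost_effectiveness": 60, "program_fit": 50}

print(round(weighted_score(weights, candidate), 1))
```

Because each term of the sum belongs to one attribute, the contribution of every attribute to the final score stays visible, which is the transparency the color‐coded display in Figure 2 is meant to convey.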

Figure 2.

Priority Analysis Screen of SMART Vaccines

A screen showing the various attributes applied by a user for a comparative analysis of 3 hypothetical vaccine candidates—rotavirus, tuberculosis, and pneumococcal pneumonia—for use in South Africa. SMART Scores show the numerical merit values for these vaccine candidates along with a transparent (color‐coded) indication of the contributions from various attributes. The SMART Score for a vaccine candidate for tuberculosis (74) has gained significant contributions from health (premature deaths averted per year and quality‐adjusted life years gained) and economic (cost‐effectiveness) attributes. In this hypothetical assessment, the tuberculosis vaccine outperforms the candidates considered for pneumococcal (42) and rotavirus diseases (25).

Data from the Institute of Medicine, National Academy of Engineering, and the National Academies.

Tool‐Driven Strategic Planning Courses for Public Health Education

Given the general capabilities of SMART Vaccines and its many possible extensions to health applications beyond vaccines, we argue for a new approach to teaching students in public health programs. We base this on the following simple premises:

The paucity of resources for public health interventions underscores the need for data‐driven, analytic, and wholly transparent strategic planning and prioritization.

Virtually all professional degree–granting programs, especially in engineering and business, extensively use tool‐driven, hands‐on education to supplement and/or replace didactic, lecture‐driven learning.

The currently available analytic tools for the planning, prioritization, and evaluation of public health interventions have generally limited the analyses to single‐valued metrics such as life years saved, program costs, or some variant of cost‐effectiveness analysis. By their very design, single‐value analyses unnecessarily omit important dimensions of real‐world problem solving.

New approaches to decision‐support software tools allow consideration of a much wider array of program attributes in planning and evaluation and also allow the user to specify “what's important” in such analyses. SMART Vaccines is an operational example of such a tool. Many other existing software tools could be readily adapted to support strategic planning courses in public health. A recent survey of the software market by the International Society of Multiple Criteria Decision Making found 25 distinct software packages offering formal decision support capabilities (www.mcdmsociety.org/soft.html). Instructors may wish to survey these options to determine what fits best with their course offerings, and perhaps choose more than one option, just as biostatistics instructors select among statistical analysis packages available to students.

There is an important synergy between the educational uses of SMART Vaccines and its further development, particularly when students using the software could (as part of their educational process) develop new data sets for a centralized repository for SMART Vaccines. Learning how to assemble such key data, how to evaluate the quality of the data, and how to create estimates when none are elsewhere available is one of the principal skills of people supporting strategic planning in public health. Thus, we view learning how to assemble and evaluate data as a key strategic planning skill that public health programs must teach their students. We note again that in some cases, the types of data (and their likely sources) correspond to standard public health data demands (eg, epidemiologic estimates of disease burden) but that others may differ significantly (eg, cost of disease treatment).

Another issue goes directly to the choice among competing alternatives with voting, grading, or ranking mechanisms. In some settings, groups of decision makers or advisers (“experts”) are asked to evaluate and choose among the candidates (options). For example, in the field of vaccines in the United States, the Advisory Committee on Immunization Practices (ACIP) performs this task. In the World Health Organization (WHO), the Strategic Advisory Group of Experts (SAGE) has a similar role. If they formally employed multicriteria decision support tools, their role would change. That is, rather than evaluating options, they would evaluate the importance of attributes that describe all options. The latter approach increases transparency and reduces arbitrary decision making regarding specific public health interventions. While mechanisms exist to convert rank order lists into weights (eg, the rank order centroid approach built into SMART Vaccines), the group still needs to agree on the rank order (at a minimum) and perhaps even place actual weights on the attributes. Thus they need to assemble individual preferences into group preferences.

We describe these methods generally as voting or grading systems. Knowing the strengths and weaknesses of these various voting and grading systems within the relevant settings is important for leaders in the field of public health. Some approaches require rank order lists from individuals. Others require that each option be graded on a common scale. Various formulas synthesize these inputs into a group decision (a voting rule). Some are relatively immune to strategic manipulation, while others are easy to manipulate. Some have easy‐to‐understand rules, and others rely on complex mathematical formulas. Moreover, when given the same data, they can produce different outcomes. Voting rules matter. The growing field of social choice helps explain these choices, as the best voting process may well differ from setting to setting.
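That different voting rules can produce different outcomes from the same ballots is easy to demonstrate. The sketch below compares plurality (most first‐place votes) with the Borda count (points by rank position) on seven hypothetical ballots over three options; the ballots and function names are invented for illustration.

```python
from collections import Counter

# Seven hypothetical ballots, each listing options from most to least preferred.
ballots = (
    [["A", "B", "C"]] * 3
    + [["B", "C", "A"]] * 2
    + [["C", "B", "A"]] * 2
)


def plurality_winner(votes):
    """Option with the most first-place votes (ties go alphabetically)."""
    counts = Counter(ballot[0] for ballot in votes)
    return max(sorted(counts), key=lambda option: counts[option])


def borda_winner(votes):
    """Option with the most Borda points: n-1 for first place, down to 0 for last."""
    scores = Counter()
    for ballot in votes:
        n = len(ballot)
        for position, option in enumerate(ballot):
            scores[option] += n - 1 - position
    return max(sorted(scores), key=lambda option: scores[option])


print("plurality:", plurality_winner(ballots))
print("borda:", borda_winner(ballots))
```

Here A wins under plurality with three first‐place votes, yet B wins under the Borda count because it is ranked first or second on every ballot.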

Whether directly ranking options or creating lists of attributes (and their weights) that subsequently enter multicriteria decision support models, decision makers cannot make thoughtful choices without the proper data. Multicriteria approaches formalize the data structure and thus may appear daunting to the user. The direct‐ranking systems ask voters or judges to reach conclusions without a formal data analysis, but they implicitly presume that they have made some analysis that supports their rankings. Thus these social choice mechanisms also deserve attention, just as formal decision support tools do, whether or not multicriteria models are employed.

A Call to Action for Higher Education

The 2003 Institute of Medicine report entitled Who Will Keep the Public Healthy? Educating Public Health Professionals for the 21st Century is a guide to the transformation of education to meet the growing needs of public health.15 The report acknowledged the importance of “traditional core areas” for public health education, stating that “the following eight content areas are now and will continue to be significant to public health and public health education in programs and schools of public health for some time to come: informatics, genomics, communication, cultural competence, community‐based participatory research, global health, policy and law, and public health ethics.”11 (p 62)

To this, we would add the critical domain of systems‐based approaches to strategic planning. If we do not teach these skills to at least some public health students, we will have no leaders with these competencies as decision scenarios grow more complex. Public health officials cannot reasonably be expected to ensure that their organizations achieve their best outcomes unless systems‐based strategic planning is embedded in each organization's management and governance. Leaders must at least be aware of the principles, the core performance measures, and the basics of systems analysis if strategic planning is to achieve its objectives. Courses such as those we envision (hands‐on, tool‐driven learning focused on the most sophisticated ways to carry out strategic planning and policy analysis) may be too specialized for some degree programs to create and offer. This, however, opens the possibility of using online educational platforms and forums to accelerate the expansion of such courses.

The best outcome may be courses with varying levels of sophistication regarding the concepts, with more advanced course work preparing students to use the relevant software, develop and curate relevant data, and provide sophisticated technical support to their organizations in using these approaches. Other courses, as part of a broader training program, may offer a less intensive exposure to the concepts, with a goal of creating informed users of the approach rather than specialized practitioners.

We offer a plea and a challenge to educators in population health to develop meaningful courses, at least one academic term in length, centered on both systems analysis and strategic planning. Ideally, such courses, modeled on the active practical use of software tools in engineering and business management programs, would also involve students in gathering data to support their analytical approaches. The opportunities for students and professionals with such skills will inevitably grow as resources for public health come under duress at the same time that the US and world populations continue to age and face the consequences of chronic illnesses.

The time is now. The need is great.

Funding/Support: We thank the National Vaccine Program Office and the National Institutes of Health, Fogarty International Center of the Department of Health and Human Services, for supporting this research in part through a contract with the National Academy of Sciences.

Conflict of Interest Disclosures: All authors have completed and submitted the ICMJE Form for Disclosure of Potential Conflicts of Interest. Scott Levin reports that he was a paid consultant to the Institute of Medicine for the SMART Vaccines projects. Rino Rappuoli reports that he is an employee of GlaxoSmithKline Vaccines.

Acknowledgments: We acknowledge the contributions of Lonnie King, Kinpritma Sangha, Rose Marie Martinez, Patrick Kelley, Proctor Reid, and Harvey Fineberg, and that of the advisory members who served on the SMART Vaccines project committees of the National Academies.

The views expressed in this article are those of the authors and not necessarily of the National Academies of Sciences, Engineering, and Medicine. Charles Phelps and Guruprasad Madhavan wrote the first draft. Rino Rappuoli, Edward Shortliffe, Scott Levin, and Rita Colwell contributed to the draft and edited it.

References

- 1. Gauvreau C, Ungar W, Kohler J, Zlotkin S. The use of cost‐effectiveness analysis for pediatric immunization in developing countries. Milbank Q. 2012;90(4):762‐790.

- 2. U.S. Internal Revenue Service. The Agency, its Mission and Statutory Authority. www.irs.gov/uac/The‐Agency,‐its‐Mission‐and‐Statutory‐Authority. Accessed December 8, 2015.

- 3. U.S. Strategic Command. Mission. www.stratcom.mil/mission. Accessed January 29, 2016.

- 4. U.S. Environmental Protection Agency. About EPA. www.epa.gov/aboutepa. Accessed January 29, 2016.

- 5. IOM (Institute of Medicine). Diseases of Importance in the United States. Vol. 1 of New Vaccine Development: Establishing Priorities. Washington, DC: National Academy Press; 1985.

- 6. IOM (Institute of Medicine). Diseases of Importance in Developing Countries. Vol. 2 of New Vaccine Development: Establishing Priorities. Washington, DC: National Academy Press; 1986.

- 7. IOM (Institute of Medicine), Stratton K, et al., eds. Vaccines for the 21st Century: A Tool for Decision Making. Washington, DC: National Academy Press; 2000.

- 8. Edejer TT‐T, Baltussen R, Adam T, et al. Making Choices in Health: WHO Guide to Cost‐Effectiveness Analysis. Geneva, Switzerland: World Health Organization; 2003.

- 9. Centers for Disease Control and Prevention, Division for Heart Disease and Stroke Prevention. http://www.cdc.gov/dhdsp/programs/spha/economic_evaluation/. Accessed February 10, 2016.

- 10. Edwards W, Barron FH. SMARTS and SMARTER—improved simple methods for multiattribute utility measurement. Organ Behav Hum Decis Processes. 1994;60(3):306‐325.

- 11. IOM (Institute of Medicine), Madhavan G, et al., eds. Ranking Vaccines: A Prioritization Framework. Washington, DC: National Academies Press; 2012.

- 12. IOM (Institute of Medicine), Madhavan G, et al., eds. Ranking Vaccines: A Prioritization Software Tool. Washington, DC: National Academies Press; 2013.

- 13. IOM (Institute of Medicine) and NAE (National Academy of Engineering), Madhavan G, et al., eds. Ranking Vaccines: Applications of a Prioritization Software Tool. Washington, DC: National Academies Press; 2015.

- 14. Madhavan G, Phelps C, Sangha K, Levin S, Rappuoli R. Bridging the gap: need for a data repository to support vaccine prioritization efforts. Vaccine. 2015;33(S2):B34‐B39.

- 15. IOM (Institute of Medicine). Who Will Keep the Public Healthy? Educating Public Health Professionals for the 21st Century. Washington, DC: National Academies Press; 2003.