Abstract

Policy Points:

Communities, funding agencies, and institutions are increasingly involving community stakeholders as partners in research, to provide firsthand knowledge and insight.

Based on our systematic review of major literature databases, we recommend using a single term, community‐academic partnership (CAP), and a conceptual definition to unite multiple research disciplines and strengthen the field.

Interpersonal and operational factors that facilitate or hinder the collaborative process have been consistently identified, including “trust among partners” and “respect among partners” (facilitating interpersonal factors) and “excessive time commitment” (hindering operational factor).

Once CAP processes and characteristics are better understood, the effectiveness of collaborative partner involvement can be tested.

Context

Communities, funding agencies, and institutions are increasingly involving community stakeholders as partners in research. Community stakeholders can provide firsthand knowledge and insight, thereby increasing research relevance and feasibility. Despite the greater emphasis and use of community‐academic partnerships (CAP) across multiple disciplines, definitions of partnerships and methodologies vary greatly, and no systematic reviews consolidating this literature have been published. The purpose of this article, then, is to facilitate the continued growth of this field by examining the characteristics of CAPs and the current state of the science, identifying the facilitating and hindering influences on the collaborative process, and developing a common term and conceptual definition for use across disciplines.

Methods

Our systematic search of 6 major literature databases generated 1,332 unique articles, 50 of which met our criteria for inclusion and provided data on 54 unique CAPs. We then analyzed studies to describe CAP characteristics and to identify the terms and methods used, as well as the common influences on the CAP process and distal outcomes.

Findings

CAP research spans disciplines, involves a variety of community stakeholders, and focuses on a large range of study topics. CAP research articles, however, rarely report characteristics such as membership numbers or duration. Most studies involved case studies using qualitative methods to collect data on the collaborative process. Although various terms were used to describe collaborative partnerships, few studies provided conceptual definitions. Twenty‐three facilitating and hindering factors influencing the CAP collaboration process emerged from the literature. Outcomes from the CAPs most often included developing or refining tangible products.

Conclusions

Based on our systematic review, we recommend using a single term, community‐academic partnership, as well as a conceptual definition to unite multiple research disciplines. In addition, CAP characteristics and methods should be reported more systematically to advance the field (eg, to develop CAP evaluation tools). We have identified the most common influences that facilitate and hinder CAPs, which in turn should guide their development and sustainment.

Keywords: community‐academic partnership, collaboration, community‐based participatory research, research design

Research carried out in community settings has traditionally progressed in one direction, in which academic researchers conceptualize research projects with minimal (or perhaps without any) input from community stakeholders; implement interventions or programs, often without a plan for sustainment in the communities; obtain data and information from community members; and disseminate the newly gained knowledge and information to peers and colleagues rather than to members of the community.1, 2, 3 Consequently, research has often failed to be translated from university‐based to “real‐world” settings and program implementation, with community stakeholders reporting a lack of investment in the research and needs different from those being addressed by the researchers.4, 5, 6, 7 These challenges highlight the need for improved collaboration between academics and community stakeholders.8

Community stakeholders can provide relevant firsthand knowledge and insight that could help to identify critical public health concerns and to design and implement research projects examining evidence‐based interventions.9, 10 This insight is hypothesized to increase the relevance and feasibility of the interventions for community care11, 12 and is especially important to researchers interested in interventions for use in community settings, effectiveness studies, and studies of the dissemination and implementation of evidence‐based practices in usual care settings.13, 14 For example, collaboration with community stakeholders can provide information about the context of a community or agency, thereby allowing researchers to tailor implementation, maximize feasibility, and increase the external validity of evidence‐based practices.15 Building and sustaining community‐academic partnerships (CAPs) thus are important foundational skills for researchers and community partners hoping to disseminate and implement promising interventions and community programs.16 Moreover, involving community stakeholders is believed to help decrease the marginalization of communities that have historically received little benefit from participating in research.17, 18 Successful partnerships between community stakeholders and researchers also improve communication, cooperation, and trust between community stakeholders and researchers, generate feasible and useful innovations, and help close the gap between research and community practice that has been noted for decades.19, 20 For example, according to Lewin,

Many psychologists working today in an applied field are keenly aware of the need for close cooperation between theoretical and applied psychology. This can be accomplished in psychology, as it has been accomplished in physics, if the theorist does not look toward applied problems with highbrow aversion or with a fear of social problems, and if the applied psychologist realizes that there is nothing so practical as a good theory.21 (p169)

Given CAPs’ potential, it is not surprising that they have been used for decades in research, education, and service projects.22 Moreover, communities, funding agencies, and institutions are increasingly emphasizing the need for involving community stakeholders as partners in these projects.22, 23, 24 For example, the National Institutes of Health (NIH) and the Patient‐Centered Outcomes Research Institute emphasize involving community stakeholders in research, including studying and planning for the development, dissemination, and implementation of evidence‐based interventions.25, 26 In addition, for the past decade the NIH and the Canadian Institutes of Health Research (CIHR) have prioritized translational science, which has helped connect funded researchers and various stakeholders, including community groups.24, 27 Institutions like the US Department of Veterans Affairs and the US Department of Defense are creating specialized departments, training, and research agendas in their institutions that focus specifically on the involvement of community stakeholders in research and service projects.28, 29

Relatively few community‐academic partnership models have been described at length in the literature, however. Most notable are community‐based participatory research (CBPR)30 and participatory action research (PAR). The purpose of CBPR is to reduce the gap between research and practice through collaboration between academic researchers and community stakeholders in order to provide both benefits important to communities and interventions relevant to the community. Community members and researchers participate in the CBPR framework30 throughout the entire research process, from the conceptualization of the research to the interpretation and dissemination of its results. The literature describes 8 important principles of CBPR, including partnership in all phases of research, building on the community's resources and strengths, using an iterative colearning process, providing benefits to partners, and disseminating the information learned to all members.30 PAR is a dynamic collaborative model similar to CBPR in which researchers and participants decide on the research questions and methods, collect and analyze the data, and implement the research results in the community or group being studied.31 The purpose of PAR is to effect change in the lives of the participants involved through a method that they choose for themselves.31 While PAR and CBPR are similar, CBPR takes place in community settings and has the community participate in the design and implementation of research projects, whereas PAR is “a way of generating research about a social system while simultaneously attempting to change that system.”31, 32, 33

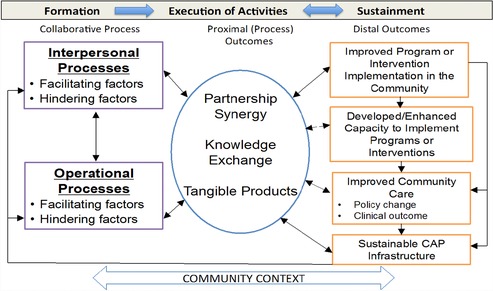

Although both CBPR and PAR are frequently cited in literature on collaborative partnerships, descriptions of these collaborative models include guiding principles rather than a formal conceptual definition of collaboration between community partners and academic researchers. This could limit the use of these collaborative models in evaluation studies of collaborative group processes. Furthermore, the specific principles guiding CBPR's development and collaborative nature, such as fully involving collaborative partners at the earliest stages of research conceptualization, may exclude many collaborative partnerships from fitting into the CBPR framework (eg, a researcher identifying the topic of interest and/or research design and then soliciting community stakeholders to participate in a collaborative partnership).34, 35 Community‐academic partnership research whose purpose is not social system change will not fit into the PAR model. Thus, a more inclusive CAP model is necessary for the continued development of this field. A recently introduced theory‐based collaborative model is the Model of Research‐Community Partnership36 (Figure 1), which can be used to guide and evaluate the development and maintenance of CAPs as well as to interpret outcomes of partnership efforts.37 This model identifies specific collaborative processes important to the development of a CAP, proximal outcomes (eg, partnership synergy, knowledge exchange, tangible products) that occur during the execution of CAP activities, and distal outcomes (eg, community improvements) that occur as a result of the CAP's proximal outcomes. Our goal was to examine the variety of collaborative interactions that might occur between research and community partners at each stage of the model, thereby increasing our understanding of how different partnerships may function. We used this flexible model to guide the evaluation of the CAPs in our systematic review.

Figure 1.

Model of Research‐Community Partnership

Adapted from Brookman‐Frazee et al. 2012.36

Objectives

Even though the amount of published literature on collaborative groups has increased dramatically in recent years, it still lacks consensus and systematic review. The current literature ranges from “lessons learned” papers outlining perceptions and anecdotes regarding factors important to the development of collaborative partnerships, to rigorous research evaluations of collaborative groups identifying those factors facilitating and hindering the development and sustainment of collaborative partnerships, and literature reviews that are often discipline specific (eg, include only education collaborations).38, 39, 40, 41, 42, 43 To our knowledge, no rigorous systematic reviews have been conducted, using the PRISMA criteria (eg, rigorous eligibility/ineligibility criteria, structured coding schemas, and evaluation of risk of bias within and across studies),44 to find the key facilitating and hindering factors of CAPs.

Given the potential for CAPs to make research in community settings more relevant and feasible, we need to better understand the characteristics and state of the science of CAP research in order to increase the use of CAPs across multiple disciplines, and to encourage collaborative partnerships of funding agencies, institutions, and communities. To that end, finding common facilitating and hindering influences on CAPs’ collaborative process and outcomes and agreeing on a common term and universal conceptual definition for use across various disciplines are critical to the continued development of this field. These contributions to the literature will allow greater examination of the relative benefit of CAPs in research, provide guidance for developing and sustaining CAPs, and support a standardized terminology to facilitate research on CAPs.

Methods

We specified and documented in advance our methods of analysis and inclusion criteria in an a priori protocol that was updated iteratively during our systematic review (the protocol is available upon request from the corresponding author).

Eligibility Criteria

To be included, an article must have (1) involved a collaborative partnership (collaboration or partnership between at least one academic and at least one community stakeholder), (2) contained a systematic evaluation of the collaborative process, (3) been written in English, and (4) been published in a peer‐reviewed journal. An article was excluded if it (1) was a review article, (2) was written in a language other than English, or (3) did not present original data. To be considered a CAP, the collaboration must have been between at least one academic partner (eg, investigator(s) in a university department, university hospital, university medical center) and at least one community organization or stakeholder (eg, community agency, church, school, policymaker), and must have shown some indication of shared control or shared decision making, as described in the collaboration's collaborative or specific actions. We adopted this broad definition in order to maximize the number of articles eligible for inclusion. Studies were considered systematically evaluative if they used a qualitative or quantitative assessment of the collaboration process among members of the CAP. We placed no restrictions on CAP participants' age, area of study, intervention, outcome, study design, or publication date.

Information Sources

We identified studies by searching the electronic databases PsycINFO (1887‐present), ProQuest (1971‐present), ERIC (1966‐present), CSA Social Services Abstracts (1971‐present), PubMed (1946‐present), and Business Source Premier (1922‐present). Our initial search was run in August 2012, with additional searches in March 2013 and May 2015 to find more recently published studies. Besides searching electronic databases, we used a snowball strategy in which we searched the references of articles included in our systematic review and of review articles of CAPs ineligible for inclusion.45, 46, 47, 48 Finally, we asked 2 experts on CAPs to suggest other relevant articles that we had not included. Duplicate articles were excluded at each stage of the search process.

Search

The following keywords were searched in various combinations: “research community partnership,” “research community collaboration,” “research practice partnership,” “research practice collaboration,” “academic community partnership,” “academic community collaboration,” “academic practice partnership,” “academic practice collaboration,” “university community collaboration,” “university community partnership,” “university practice collaboration,” and “university practice partnership.” Truncated forms of these terms (eg, “partner*,” “collaborat*”) were used in the search. These search terms were identified during a preliminary search of the literature for various terms used by authors. We used a filter in all searches to exclude articles that were not written in English or were not peer reviewed.
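As an illustration only, the 12 phrases listed above correspond to every combination of three word groups (3 academic terms, 2 setting terms, 2 relationship terms). The Python sketch below generates them; it is not the search procedure itself. The variable names and the OR‐joined query string are assumptions made for this example, and each database uses its own query syntax.

```python
from itertools import product

# Word groups inferred from the 12 search phrases listed in the text.
ACADEMIC_TERMS = ["research", "academic", "university"]
SETTING_TERMS = ["community", "practice"]
RELATION_FULL = ["partnership", "collaboration"]
# Truncated stems, as mentioned in the text ("partner*", "collaborat*").
RELATION_STEMS = ["partner*", "collaborat*"]


def build_phrases(relations):
    """Return every 'academic setting relation' phrase for the given relation terms."""
    return [" ".join(parts)
            for parts in product(ACADEMIC_TERMS, SETTING_TERMS, relations)]


full_phrases = build_phrases(RELATION_FULL)
truncated_phrases = build_phrases(RELATION_STEMS)

# Hypothetical Boolean query; the quoting and OR syntax are assumptions
# and would need adapting to each database's search interface.
query = " OR ".join(f'"{phrase}"' for phrase in full_phrases)

for phrase in full_phrases:
    print(phrase)
```

Generating the phrases from word groups makes it easy to confirm that no combination was omitted when the search is rerun.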

Study Selection

The first and second authors (Amy Drahota and Rosemary Meza) reviewed the titles and abstracts found in the searches. Articles were eligible for a full‐text review if the title or abstract referred to a collaboration or partnership between at least one academic and at least one community stakeholder (eg, community agency, governmental body, school/school district). If the project members could not determine initial eligibility from the title and abstract, they passed the article to the next stage for a full‐text review. We excluded those articles whose title and abstract did not clearly pertain to a CAP.

Two project members independently reviewed 26.5% of the 1,332 titles and abstracts screened for inclusion. The percentage of agreement for inclusion between the independent coders was 77%. The reviewers’ disagreements were resolved by discussion and coming to a consensus about eligibility, with consultation with the other project members if necessary.
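For readers unfamiliar with the statistic, percentage agreement is the share of double‐screened records on which both reviewers made the same include/exclude decision. The following Python sketch shows the calculation under that assumption. The decision lists are hypothetical and are not the review's screening data; only the 26.5% and 1,332 figures come from the text.

```python
def percent_agreement(reviewer_a, reviewer_b):
    """Percentage of items on which two reviewers made the same decision."""
    if len(reviewer_a) != len(reviewer_b):
        raise ValueError("Both reviewers must rate the same number of items.")
    if not reviewer_a:
        raise ValueError("At least one rated item is required.")
    matches = sum(a == b for a, b in zip(reviewer_a, reviewer_b))
    return 100.0 * matches / len(reviewer_a)


# Hypothetical include (True) / exclude (False) decisions for 10 abstracts.
reviewer_a = [True, True, False, False, True, False, True, False, False, True]
reviewer_b = [True, False, False, False, True, True, True, False, False, True]

agreement = percent_agreement(reviewer_a, reviewer_b)

# Approximate number of double-screened records implied by the text:
# 26.5% of the 1,332 titles and abstracts.
double_screened = round(0.265 * 1332)

print(f"Agreement: {agreement:.1f}%")
print(f"Double-screened records: {double_screened}")
```

Percentage agreement does not correct for agreement expected by chance, which is one reason disagreements were also resolved by discussion.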

After the title and abstract review, members of the coding team were randomly assigned articles to review. Sets of 2 project members independently reviewed the full text of each article to determine inclusion in or exclusion from our systematic review. Disagreements were resolved through discussion between the 2 reviewers and a third independent reviewer until consensus was reached. Additional study authors also were consulted to confirm the eligibility or ineligibility criteria for 2 CAP articles.

Data Collection

A data extraction form was developed a priori, based on research questions and preliminary conceptualizations of CAP models. The 5 reviewers of the data extraction team inductively generated additional codes.

A priori themes were derived from a review of the literature and evidence‐based training curriculum for the University of Washington's Community‐Campus Partnerships for Health.49 We then analyzed the data using a coding, consensus, and comparison method,50 which followed an iterative approach rooted in grounded theory.51 Using this approach, data extraction team members identified emergent themes, which were discussed during consensus team meetings. Emergent themes were added to the codebook after the team considered the frequency and salience (ie, importance or emphasis) with which each was discussed as a factor of facilitation or hindrance in the CAP process. The emergent themes that were considered to be frequently identified and/or salient to the process of partnerships were operationally defined by 2 team members (Amy Drahota and Rosemary Meza). Emergent themes were collectively reviewed with expert co‐authors (Aubyn Stahmer and Gregory Aarons) to identify and reconcile discrepancies. The data extraction team completed a final review of the emergent themes, their definitions, and coded text in order to reach a consensus on the codes.

The data collection team also participated in a data collection and coding training workshop lasting 4 hours over the course of 3 days. This training included didactics (eg, an introduction to the PRISMA statement for reporting systematic reviews and defining variables to be coded) and an independent practice assignment using one article. The team reviewed and discussed the responses to the practice assignment in order to address any questions related to the coding scheme. Data from each CAP were coded in an online survey format (Qualtrics, Provo, UT). The data extraction team members were divided into pairs and then independently extracted data on a single CAP from the articles. For each article, the paired team members reached a consensus on coding disagreements at weekly consensus meetings (17 meetings lasting 2 hours each) of the pair and at least one independent team member.

Data Items

The extracted data comprised 29 items focusing on (1) basic publication details about the article (eg, publication date, author), (2) terms and definitions used to describe the CAP, (3) area of study, (4) study design and methods, (5) objective evaluation of the study, (6) outcomes reported, (7) use of a model to guide the development or evaluation of the CAP, (8) factors associated with the collaboration's success or failure, (9) funding sources, (10) initiation of the CAP, (11) participants in the CAP, (12) duration of the CAP, and (13) sustainment of the CAP. Information about the data items is available in the systematic review protocol.

Reduction of Bias

To ascertain the validity of individual studies, the pairs of data extraction team members independently determined whether objective evaluation methods were used for each study, coming to a consensus as needed. The presence or absence of an objective evaluation method for each study was determined from the inclusion of an independent or objective method of data collection (ie, outside evaluator or quantitative survey). In addition, in order to reduce the risk of reporting bias, the unit of analysis for the systematic review was set at the CAP level. Therefore, data related to one CAP that were reported across multiple articles were combined (n = 5). Similarly, when an article (n = 4) presented independent data related to multiple CAPs, the data were separated. Thus, data were collected on 54 CAPs from 50 articles.
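The CAP‐level unit of analysis can be pictured as a many‐to‐many mapping between articles and CAPs. The Python sketch below is a minimal illustration, not the extraction procedure itself. It uses a small subset of CAP numbers and article labels transcribed from Table 2; the data structure and variable names are assumptions.

```python
from collections import defaultdict

# (CAP number, article) pairs: a small subset transcribed from Table 2.
records = [
    (7, "Bowen & Martens 2005"),
    (7, "Bowen & Martens 2006"),
    (33, "Metzler et al. 2003"),
    (34, "Metzler et al. 2003"),
    (35, "Metzler et al. 2003"),
    (51, "Teal et al. 2012"),
]

articles_by_cap = defaultdict(list)
caps_by_article = defaultdict(list)
for cap_id, article in records:
    articles_by_cap[cap_id].append(article)
    caps_by_article[article].append(cap_id)

# One CAP reported across several articles: the data are combined under that CAP.
combined_caps = {cap: arts for cap, arts in articles_by_cap.items() if len(arts) > 1}

# One article reporting several CAPs: the data are separated by CAP.
separated_articles = {art: caps for art, caps in caps_by_article.items() if len(caps) > 1}

print(f"{len(articles_by_cap)} CAPs from {len(caps_by_article)} articles")
print("Combined:", combined_caps)
print("Separated:", separated_articles)
```

Counting at the CAP level prevents a partnership described in several articles from being counted more than once.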

Planned Methods of Analysis

We calculated frequencies to determine the prevalence of several items reported in evaluations of CAPs (eg, terms and definitions to describe the CAP, study design and methods, and facilitating and hindering factors associated with CAPs). The mean, standard deviation, and range were calculated for quantitative measures, such as number of terms per article.
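A minimal sketch of these descriptive statistics, using Python's standard library, is shown below. All values are hypothetical and chosen only to illustrate the calculations. The use of the sample standard deviation is an assumption, because the text does not specify which form was calculated.

```python
from collections import Counter
from statistics import mean, stdev

# Hypothetical extracted values (not data from the review).
study_methods = ["Qualitative", "Qualitative", "Quantitative", "Qualitative", "Mixed"]
terms_per_article = [1, 2, 2, 3, 1, 4, 2]


def frequency_table(values):
    """Map each category to (count, percentage of all values)."""
    counts = Counter(values)
    total = len(values)
    return {category: (n, round(100 * n / total, 1))
            for category, n in counts.most_common()}


frequencies = frequency_table(study_methods)

summary = {
    "mean": mean(terms_per_article),
    "sd": stdev(terms_per_article),  # sample standard deviation (assumption)
    "range": (min(terms_per_article), max(terms_per_article)),
}

print(frequencies)
print(summary)
```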

Development of a Common Term and Conceptual Definition

We also calculated the frequency of terms and conceptual definitions used in each article to describe the collaborative partnerships between academics and community partners. For each conceptual definition provided in the CAP articles, we used a content analysis to evaluate the definitions’ similarities and differences. Amy Drahota and Rosemary Meza synthesized these data and developed a conceptual definition that would cross disciplines and topics.

Feedback on the term and conceptual definition was elicited from first authors of the articles included in this systematic review (resulting from the August 2012 and March 2013 searches). These authors were emailed a survey, using Qualtrics.com, asking for responses on a Likert‐type scale indicating whether they felt the term “community‐academic partnership” was appropriate (on a rating from “very appropriate” to “very inappropriate”) and whether they agreed (from “strongly agree” to “strongly disagree”) with the conceptual definition. Forty‐six percent of the first authors responded to the survey. Analysis of these responses as well as discussions with additional experts in the field provided the feedback necessary to reach a majority consensus on using the term “community‐academic partnership” and to refine the conceptual definition of a CAP.

Specifically, the conceptual definition for CAPs was developed in an iterative fashion involving feedback from the first authors included in the systematic review and in‐depth discussions with experts in the field. Coauthors of this paper were presented with the systematic review outcomes and then discussed possible conceptual definitions. The first draft was sent to the first authors of the articles included in the systematic review. Their feedback was incorporated into a second draft of the conceptual definition and presented for feedback from attendees at a meeting of the Implementation Science Seminar at the University of California, San Diego. Finally, the first and second authors (Amy Drahota and Rosemary Meza) reviewed the conceptual definitions from the articles in the systematic review, feedback from the first authors of the included articles, and comments from the attendees at the UCSD seminar, and then drafted the final conceptual definition used in this article.

Results

Study Selection

The searches generated 1,332 unique articles published up to January 2015 (Figure 2). After reviewing these articles’ titles and abstracts, we determined that 630 articles met the criteria for a full‐text review. After the full‐text review, 58 articles met the criteria for inclusion. During data extraction, 8 of these articles were excluded because they did not fit the criteria of being a community‐academic partnership (n = 5), did not include a systematic evaluation (n = 1), or did not include all CAP members in the systematic evaluation (n = 2), leaving 50 articles in the final review. The included articles were published between January 1993 and January 2015.

Figure 2.

PRISMA Flow Diagram

*Data were collected on 54 CAPs from 50 articles.

Study Characteristics

Table 1 summarizes the descriptive characteristics of each CAP, including the primary area of study, the reported CAP initiator, the types of partners involved, the funding at the beginning and throughout the CAP, and the duration of the CAP. Table 2 describes the studies’ characteristics, including the design and methods used, types of data collected, and type of analysis. Table 3 presents the facilitating and hindering themes identified through the systematic review, definitions, and frequencies. Additional tables presenting the facilitating and hindering factors and CAP outcomes are available in Appendices 1, 2, and 3, respectively.

Table 1.

Descriptive Characteristics of CAPs


Table 2.

CAP Research: State of the Science

| CAP # | Article | Study Design | Study Methods | Types of Data Collected |
|---|---|---|---|---|
| 1 | Abdulrahim et al. 2010<sup>52</sup> | Case Study | Qualitative | I, M |
| 2 | Anderson & Bronstein 2012<sup>53</sup> | Case Study | Qualitative | I |
| 3 | Austin & Claiborne 2011<sup>54</sup> | Case Study | Qualitative | FG |
| 4 | Baker et al. 1999<sup>55</sup> | Case Study | Qualitative | Ot |
| 5 | Benoit et al. 2005<sup>17</sup> | Case Study | Qualitative | I, O |
| 6 | Blackman et al. 2002<sup>56</sup> | Case Study | Qualitative | I |
| 7 | Bowen & Martens 2005<sup>57</sup> | Case Study | Qualitative | I, O, Ot |
| | Bowen & Martens 2006<sup>58</sup> | | | |
| 8 | Brookman‐Frazee et al. 2012<sup>36</sup> | Single Case Study | Qualitative & Quantitative | S, Ot |
| 9 | Bryan et al. 2014<sup>59</sup> | Case Study | Qualitative | I, FG |
| 10 | Cargo et al. 2011<sup>60</sup> | Single Case Study | Quantitative | S |
| 11 | Carlton et al. 2009<sup>61</sup> | Case Study | Qualitative | I, O, FN, Ot |
| 12 | Chaskin et al. 2006<sup>34</sup> | Case Study | Qualitative | I, Ot |
| 13 | Christie et al. 2007<sup>62</sup> | Case Study | Qualitative | I, D |
| 14 | Christie et al. 2007<sup>62</sup> | Case Study | Qualitative | M, D |
| 15 | Christopher et al. 2011<sup>63</sup> | Case Study | Qualitative | I, Ot |
| 16 | Cobb & Rubin 2006<sup>64</sup> | Case Study | Qualitative | I, O, M, FN, G, Ot |
| 17 | Deppeler 2006<sup>65</sup> | Case Study | Qualitative | S |
| 18 | Drabble et al. 2013<sup>66</sup> | Case Study | Qualitative | I, S |
| 19 | Ebersohn et al. 2015<sup>67</sup> | Case Study | Qualitative | D, I, O, FG, FN, Ot |
| 20 | Fook et al. 2011<sup>68</sup> | Case Study | Qualitative | O |
| 21 | Fouche & Lunt 2010<sup>69</sup> | Case Study | Qualitative | Ot |
| 22 | Friedman et al. 2013<sup>70</sup> | Case Study | Quantitative | S |
| 23 | Galinsky et al. 1993<sup>71</sup> | Case Study | Qualitative | O |
| 24 | Garland et al. 2006<sup>35</sup> | Case Study | Qualitative | I |
| 25 | Goodnough 2014<sup>72</sup> | Case Study | Qualitative | I, FN, O, Ot, S |
| 26 | Groen & Hyland‐Russell 2012<sup>73</sup> | Case Study | Qualitative | I, M, G, Ot |
| 27 | Groen & Hyland‐Russell 2012<sup>73</sup> | Case Study | Qualitative | I, M, G, Ot |
| 28 | Haire‐Joshu et al. 2001<sup>74</sup> | Case Study | Qualitative | I, Ot |
| 29 | Hoeijmakers et al. 2013<sup>75</sup> | Case Study | Mixed Methods | I, FG, M, Ot |
| 30 | Matusov & Smith 2011<sup>76</sup> | Case Study | Qualitative | I, O, FN, D |
| 31 | Mayo et al. 2009<sup>77</sup> | Case Study | Qualitative | I, D |
| 32 | McCauley et al. 2001<sup>78</sup> | Case Study | Qualitative & Quantitative | I, M |
| 33 | Metzler et al. 2003<sup>79</sup> | Case Study | Qualitative & Quantitative | I, S, M, FG, G |
| 34 | Metzler et al. 2003<sup>79</sup> | Case Study | Qualitative & Quantitative | I, S, M, FG, G |
| 35 | Metzler et al. 2003<sup>79</sup> | Case Study | Qualitative & Quantitative | I, S, M, FG, G |
| 36 | Miller & Hafner 2008<sup>80</sup> | Case Study | Qualitative | I, O, M, Ot |
| 37 | Minkler et al. 2008a<sup>81</sup> | Case Study | Qualitative | I, FG |
| 38 | Minkler et al. 2008a<sup>81</sup> | Case Study | Qualitative | I, FG |
| 39 | Minkler et al. 2008a<sup>81</sup> | Case Study | Qualitative | I, FG |
| 40 | Minkler et al. 2008a<sup>81</sup> | Case Study | Qualitative | I, O, FG, Ot |
| | Tajik & Minkler 2006–2007<sup>82</sup> | | | |
| 41 | Minkler et al. 2008b<sup>83</sup> | Case Study | Qualitative | I |
| 42 | Mosavel et al. 2010<sup>84</sup> | Case Study | Qualitative | M, Ot |
| 43 | Perrault et al. 2011<sup>85</sup> | Case Study | Qualitative & Quantitative | I, S, M, D, G, Ot |
| 44 | Postma 2008<sup>86</sup> | Case Study | Qualitative | O, M, FN |
| 45 | Richardson & Grose 2013<sup>87</sup> | Case Study | Qualitative | S, I |
| | Richardson & Grose 2014<sup>88</sup> | | | |
| 46 | Riemer et al. 2012<sup>89</sup> | Case Study | Qualitative | I, M, G |
| 47 | Solomon et al. 2001<sup>90</sup> | Case Study | Qualitative | M, Ot |
| 48 | Stahl & Shdaimah 2008<sup>91</sup> | Case Study | Qualitative | I, O |
| 49 | Stedman‐Smith et al. 2012<sup>92</sup> | Case Study | Qualitative & Quantitative | I, S |
| 50 | Szteinberg et al. 2014<sup>93</sup> | Case Study | Qualitative | S |
| 51 | Teal et al. 2012<sup>94</sup> | Case Study | Qualitative | I |
| 52 | Vandyck et al. 2012<sup>95</sup> | Case Study | Qualitative | O |
| 53 | Wong et al. 2011<sup>96</sup> | Case Study | Qualitative | I |
| 54 | Zendell et al. 2007<sup>97</sup> | Case Study | Qualitative | I, FG |

Data Types: I = Interview; O = Observation; S = Survey; M = Meeting minutes/notes/agendas; FN = Field notes; FG = Focus groups; D = Discussions; G = Grant proposals; Ot = Other.

Table 3.

Facilitating and Hindering Factors, Definitions, and Frequencies

| Factor | Definition | Frequency, n (%) |
|---|---|---|
| Facilitating Factors (n = 12) | | |
| Trust between partners | | 16 (29.6%) |
| Respect among partners | | 16 (29.6%) |
| Shared vision, goals, and/or mission | | 14 (25.9%) |
| Good relationship between partners | | 13 (24.1%) |
| Effective and/or frequent communication | | 13 (24.1%) |
| Well‐structured meetings | | 10 (18.5%) |
| Clearly differentiated roles/functions of partners | | 8 (14.8%) |
| Good quality of leadership | | 6 (11.1%) |
| Effective conflict resolution | | 5 (9.3%) |
| Good selection of partners | | 3 (5.6%) |
| Positive community impact | | 3 (5.6%) |
| Mutual benefit for all partners | | 2 (3.7%) |
| Hindering Factors (n = 11) | | |
| Excessive time commitment | | 12 (22.2%) |
| Excessive funding pressures or control struggles | | 9 (16.7%) |
| Unclear roles and/or functions of partners | | 8 (14.8%) |
| Poor communication among partners | | 7 (13.0%) |
| Inconsistent partner participation or membership | | 6 (11.1%) |
| High burden of activities/tasks | | 5 (9.3%) |
| Lack of shared vision, goals, and/or mission | | 5 (9.3%) |
| Differing expectations of partners | | 4 (7.4%) |
| Mistrust among partners | | 4 (7.4%) |
| Lack of common language or shared terms among partners | | 4 (7.4%) |
| Bad relationship | | 2 (3.7%) |
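The percentages in Table 3 are consistent with a denominator of all 54 CAPs, although the table does not state this explicitly. The short Python sketch below, which treats the denominator as an assumption, reproduces four of the reported values from their counts.

```python
TOTAL_CAPS = 54  # assumed denominator: all CAPs in the review

# Counts transcribed from Table 3 for a few factors.
factor_counts = {
    "Trust between partners": 16,
    "Shared vision, goals, and/or mission": 14,
    "Excessive time commitment": 12,
    "Poor communication among partners": 7,
}


def percent_of_caps(count, total=TOTAL_CAPS):
    """Percentage of CAPs reporting a factor, rounded to one decimal place."""
    return round(100 * count / total, 1)


factor_percentages = {name: percent_of_caps(n) for name, n in factor_counts.items()}

for name, pct in factor_percentages.items():
    print(f"{name}: {factor_counts[name]} ({pct}%)")
```

Because a single CAP could report several factors, the percentages are not expected to sum to 100.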

CAP Characteristics

The CAPs included in this systematic review covered a range of primary areas of study. The most frequently mentioned primary area of study was public health/medicine (33.3%), encompassing topics such as access to health and social services or specific interventions for medical conditions in low‐resource areas. The second most prevalent area of study was education (24.1%), focusing on education‐related collaborations such as communities of inquiry and teacher development. Slightly more than half the articles reported who initiated the CAP (53.7%), and those articles that did report this information were fairly evenly split between community stakeholders (18.5%) and academic researchers (25.9%) initiating the CAP. In some cases, a mixed or simultaneous initiation by community stakeholders and academics was reported (7.4%), and in one case a funder paired community stakeholders with academics (1.9%). The type of partners involved in the CAPs was reported in every article. All the CAPs had a member from a university department or university hospital, and most had members from community agencies, such as for‐profit or not‐for‐profit agencies, providing services to community stakeholders or identified populations (66.7%). After community agencies, the partners were most frequently from governmental offices (27.8%), schools or school districts (18.5%), religious institutions or churches (11.1%), nonuniversity hospitals (13%), or public safety agencies (1.9%). Other types of partners identified (31.5%) were teachers (5.7%), parents (5.7%), members of farmworker communities (2.9%), and Native American tribal communities (2.9%). Funding at the start of the CAP was often not reported (59.3%), and of those that did report on funding at the start, 25.9% of the CAPs had start‐up funding, and 14.8% explicitly stated that they began without funding. 
Of the CAPs with start‐up funding, most were funded by federal institutes or agencies (n = 7 CAPs); some by local or national foundations, institutes, or agencies (n = 5); and a few by universities (n = 1) or churches (n = 1). Most of the CAPs reported having obtained funding during the partnership, which many considered to be an outcome of the partnership. Specifically, 81.5% of the articles reported that the CAP had funding at some point during the partnership; 1.9% explicitly reported that the CAP had no funding throughout the partnership; and 16.7% did not report whether the CAP had funding support during the partnership. Funding during the partnership came from federal institutes or agencies for 46.3% of the CAPs (n = 25); from local or national foundations, institutes, or agencies for 38.9% (n = 21); from universities for 3.7% (n = 2); from churches for 3.7% (n = 2); and from corporate sponsors for 1.9% (n = 1).
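The funding figures above can be related back to counts out of the 54 CAPs in the review. The following Python sketch is illustrative only and was not part of the original analysis; the dictionary and function names are assumptions. It converts the reported source counts into percentages of all 54 CAPs and compares the total number of funding-source mentions with the number of CAPs that reported funding. The comparison suggests, as an inference rather than a reported finding, that some CAPs drew on more than one funding source.

```python
TOTAL_CAPS = 54  # unique CAPs covered by the review

# Number of CAPs reporting each funding source during the partnership,
# taken from the counts given in the text.
funding_sources = {
    "federal institutes or agencies": 25,
    "local or national foundations, institutes, or agencies": 21,
    "universities": 2,
    "churches": 2,
    "corporate sponsors": 1,
}


def pct_of_caps(count, total=TOTAL_CAPS):
    """Return count as a percentage of all CAPs, rounded to one decimal."""
    return round(100 * count / total, 1)


source_percentages = {name: pct_of_caps(n) for name, n in funding_sources.items()}

# 81.5% of the 54 CAPs reported funding at some point during the partnership.
funded_caps = round(0.815 * TOTAL_CAPS)
source_mentions = sum(funding_sources.values())

for name, pct in source_percentages.items():
    print(f"{name}: {pct}%")
print(f"funded CAPs: {funded_caps}, funding-source mentions: {source_mentions}")
```

Because the source mentions (51) exceed the number of funded CAPs (44), the source percentages should be read as shares of all CAPs, not as shares of total funding.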

The number of CAP members at the beginning of the partnership and at the time of publication was often not reported (70.4% and 74.1%, respectively). Where this information was reported, 14.8% of the CAPs had 2 to 10 members at the beginning of the partnership, 5.6% had 11 to 20 members, and 9.3% had more than 20 members. Slightly more than half the articles reported the duration of the CAP (57.4%); the rest did not (42.6%). The duration of the CAPs at the time of publication ranged from less than 1 year to 10 years. Because some CAPs reported exact durations while others provided approximate durations, the means and standard deviations of CAP duration could not be calculated. The categorical data, however, indicated that 27.8% of the CAPs had lasted from less than 1 year to 3 years; 20.4% had lasted from 4 to 6 years; and 9.3% had lasted from 7 to 10 years.

In summary, the articles included in our systematic review presented information about CAPs that spanned research disciplines, involved a variety of communities, and focused on a large range of study topics. However, the majority of the articles did not systematically report the CAPs’ characteristics.

CAP Research: State of the Science

All the CAP studies included in this systematic review were naturalistic, and most were case studies (96.3%).98 Two of the CAP studies had a single group design.99 The methods used to evaluate the CAPs were primarily qualitative (81.5%); a few studies used quantitative methods (3.7%) or a combination of qualitative and quantitative methods (14.8%). Seven of the eight studies that used both qualitative and quantitative methods did not integrate these methods or data and thus cannot be considered as using a mixed‐methods design.100, 101 Multiple types of data were collected to evaluate the CAP process and outcomes. On average, 2.98 types of data (SD = 2.15, range 1 to 9) were collected to analyze the CAPs. The most common type of data collected was interviews (72.2%), followed by meeting minutes or notes (29.6%), observations (25.9%), surveys or questionnaires (22.2%), field notes (16.7%), focus group data (16.7%), grant proposals or funding progress notes (14.8%), discussions (11.1%), and other methods not fitting in these categories (35.2%), such as sign‐in sheets.

Our systematic review of the state of CAP science indicates that few studies have evaluated CAPs longitudinally or compared responses from partners across time points. Fewer than half the studies used a single, consistent term for their collaborative partnerships (46.3%); 29.6% used 2 terms; 13% used 3 terms; 9.3% used 4 terms; and 1.9% used 5 terms in the same analysis of the partnership. Moreover, only a few articles (16.7%) provided a conceptual definition for the term that was used, creating significant challenges in evaluating the partnerships.102

CAP Formation

The articles in this systematic review identified both facilitating and hindering factors that affected the interpersonal and operational processes during the CAP's formation.

CAP Facilitating Factors

Twelve themes were identified as facilitating the CAP process. Table 3 provides definitions and frequencies of the facilitating factors. Fifty‐three (98.1%) of the articles reported at least one factor facilitating the CAP process. The mean number of facilitating factors identified by the CAPs was 3.76 (SD = 2.41, range 0 to 10).

Sixteen articles (29.6%) reported that trust among partners was a facilitating factor for the process of collaboration. For example, one CAP article reported, “A belief in the trustworthiness among members of the EFCP collaboration had a significant impact on their ability to work together.”85 Respect among partners was also reported as a facilitating factor by 16 articles (29.6%). One article stated that “the clinicians noted that they felt the researchers had a great deal of respect for them—for their time, their commitment, their efforts and their contributions. One senior clinician noted, ‘The researchers have gone out of their way . . . [They] have been just absolutely fabulous.’”35 Thirteen articles (24.1%) stated that a good relationship between partners facilitated the collaboration. As one article reported, “Members said that it was the sense of purpose and camaraderie that they had with each other that motivated them to be part of this group.”84 Thirteen articles (24.1%) cited effective and/or frequent communication as facilitating the partnership. One article stated, “Partners report that access to this diverse spectrum of research experiences and outcomes across partnerships through the different reporting schemes, phone calls and web conferences, face‐to‐face conferences, and informal interactions has served to strengthen the partnership.”63 Ten articles (18.5%) reported that well‐structured meetings facilitated the CAP process. As one article reported, “During in‐depth interviews, most members expressed satisfaction with the new structure because of its potential to enhance trust and maintain momentum in the partnership.”52 Other facilitating factors were shared vision, goals, and/or mission (25.9%), clearly differentiated roles/functions of partners (14.8%), good quality of leadership (11.1%), effective conflict resolution (9.3%), good selection of partners (5.6%), positive community impact (5.6%), and mutual benefit for all partners (3.7%).

CAP Hindering Factors

Besides the facilitating factors, our systematic review yielded 11 themes hindering the CAP process. Table 3 gives definitions and frequencies of the factors identified as hindering the CAP process. Thirty‐eight (70.4%) articles reported at least one hindering factor. The mean number of hindering factors identified was 1.91 (SD = 2.04, range 0 to 11).

The most frequently reported hindering factor was excessive time commitment (22.2%). An example of excessive time commitment was described in Mayo and Tsey:

[T]ime spent sitting down and talking, building relationships, developing trust and strategizing around a range of funding, personal, professional and organizational challenges may impinge upon collecting and analyzing data for publication, making it difficult to demonstrate academic productivity. . . . The issue of where to put one's energy is a constant dilemma.77

The next most common hindering factors were unclear roles and/or functions of partners (16.7%), followed by excessive funding pressures or control struggles (14.8%). An example of unclear roles and/or functions was illustrated in an article that quoted one partner as saying, “Not understanding what the process was, what the roles were, who was going to do what and when, what my role was . . . it was tough working through those obstacles.”53 An example of excessive funding pressures or control struggles was provided in an article observing that the “[community partners] expressed high levels of distrust and anxiety about the way [other partners] apportioned money, and these sentiments punctuated the Year 4 planning discussions.”64

Additional hindering factors were poor communication among partners (13%); inconsistent partner participation or membership (11.1%); a high burden of activities/tasks (9.3%); lack of shared vision, goals, and/or mission (9.3%); distrust among partners (7.4%); lack of a common language or shared terms (7.4%); differing expectations of partners (7.4%); and a bad relationship among partners (3.7%).

CAP Outcomes

Forty‐two (77.8%) of the articles reported that the CAPs had one or more proximal outcomes, such as partnership synergy (18.5%), knowledge exchange (25.9%), or tangible products (72.2%), with the most common proximal outcome reported being the development or refinement of a tangible product. Eighteen (33.3%) of the articles reported one or more distal outcomes, such as the development of or an enhanced capacity to implement programs or interventions (13%), improved community care (18.5%), sustainable community‐academic partnership infrastructure (5.6%), and changed community context (1.9%).

Development of a Common Term and Conceptual Definition

One challenging aspect of collaboration research is the lack of standardized terminology and conceptual definitions. An aim of our systematic review was to find a common term and develop a conceptual definition for collaborations between community stakeholders and academic partners that could be used consistently across a broad range of disciplines. In each article, the number of terms used to describe the partnerships ranged from 1 to 5 (mean = 1.91, SD = 1.07). Forty‐six percent of the articles used a single term to refer to the collaborative partnership. Of the terms used, “community‐academic partnership” was the most common (n = 8), and is a more inclusive term that would likely appeal most broadly across disciplines and partnership methods. Other terms used by included articles were “CBPR partnership” (n = 8), “university‐community partnership” (n = 8), “community‐university partnership” (n = 5), and “academic‐community partnership” (n = 5).
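The reported mean and standard deviation of terms per partnership can be checked against the distribution of term usage given in the state-of-the-science results (29.6% used 2 terms, 13% used 3, 9.3% used 4, and 1.9% used 5). The Python sketch below is a hedged illustration, not the authors' analysis. The counts are assumptions back-calculated from those percentages using 54 CAPs as the denominator, and the variable names are hypothetical.

```python
from statistics import mean, stdev

TOTAL_CAPS = 54  # unique CAPs covered by the review

# Assumed counts, back-calculated from the reported percentages of 54 CAPs
# (29.6% -> 16, 13% -> 7, 9.3% -> 5, 1.9% -> 1); the remainder used one term.
caps_by_term_count = {2: 16, 3: 7, 4: 5, 5: 1}
caps_by_term_count[1] = TOTAL_CAPS - sum(caps_by_term_count.values())

# Expand the frequency table into one observation per CAP.
terms_per_cap = [k for k, n in sorted(caps_by_term_count.items()) for _ in range(n)]

mean_terms = round(mean(terms_per_cap), 2)
sd_terms = round(stdev(terms_per_cap), 2)  # sample standard deviation (n - 1)

print(caps_by_term_count[1])  # 25
print(mean_terms, sd_terms)   # 1.91 1.07
```

Under these assumptions, the back-calculated distribution reproduces the reported mean of 1.91 and SD of 1.07, and 25 of the 54 CAPs (about 46%) used a single term.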

Fifty percent of the responding first authors included in this systematic review rated the term “community‐academic partnership” as an “appropriate” or “very appropriate” term. Of the 22.2% of the first authors who rated the term to be “inappropriate” or “very inappropriate,” 1 indicated that the term “community” was not concrete enough; 2 indicated that the term “academic” was not concrete enough; and 1 felt that the term CBPR was more commonly used in the literature.

Nine of the articles included in the systematic review (16.7%) provided a conceptual definition of their collaborative partnership. Of these, 4 conceptual definitions were generated by the authors themselves; 4 were derived from a single cited article; and 1 was derived from combining definitions from multiple cited articles. A review of these definitions indicated that they varied significantly from one another and lacked generalizability across disciplines and areas of study. For example, some focused on specific outcomes of the CAP (eg, “arrangements among structurally unequal groups that come together to address problems such as poverty, crime and housing”),64 while others focused on the process of partnership (eg, “a process of ongoing negotiation through which academic and community partners establish their respective expectations and responsibilities in the partnership, always taking into account changes in personal, agendas, and budget allocations, among other things”).17 Based on this iterative process, we developed the following conceptual definition for CAPs:

Community‐academic partnerships (CAPs) are characterized by equitable control, a cause(s) that is primarily relevant to the community of interest, and specific aims to achieve a goal(s), and involve community members (representatives or agencies) that have knowledge of the cause, as well as academic researchers.

Discussion

There is a growing emphasis on using CAPs to increase the relevance, feasibility, and utility of research and implementation of programs and interventions in community settings.37 Studies evaluating CAPs, including the collaboration processes and outcomes, began decades ago, and the number has grown quickly in the past several years. This literature includes studies from multiple disciplines and research areas. Given the opportunity for cross‐fertilization between disciplines by combining this literature, our article contributes to our knowledge by systematically reviewing CAP collaborative process data across disciplines and content areas.

Principal Findings

Our systematic review confirms that studies of CAPs span research disciplines, involve a variety of community populations, and focus on a large range of study topics. Some CAP characteristics, however, such as initiation, the number of partners, and funding, were not systematically reported in the majority of the articles. Moreover, the methods used to study CAPs were often not reported fully. The reported data suggest that CAPs were most often evaluated at a single time point; few studies evaluated CAPs longitudinally or compared responses from partners over time. Also, many different terms were used in and between studies to label CAPs, and few articles offered conceptual definitions for the terms they used.

Most of the articles in our systematic review described facilitating and hindering factors that affected the interpersonal and operational processes during the CAP's formation. The most frequently cited facilitating factors were trust, respect, and good relationships among partners. The most frequently cited hindering factors were excessive time commitment and unclear roles and/or functions of partners. The studies reported developing or refining tangible products as the CAP's most common proximal outcome, and relatively few studies reported distal outcomes as a result of the CAP.

Findings in Relation to Other Studies

To our knowledge, ours is the first rigorous systematic review of the CAP literature. Although reviews have been conducted,20 most have been specific to a single discipline or research area. As indicated by the results of this systematic review, the published data usually are descriptive. Much of the literature has neglected to cover important information about the CAP's characteristics. For example, most studies did not disclose who initiated the CAP, the funding sources or processes of obtaining funding for the partnership, the composition of members at the beginning of the CAP and the retention of CAP members over time, or the CAP's duration. This information would benefit the continued study of CAPs, as it would help confirm whether CAPs positively affect the relevance and feasibility of research, as has been hypothesized.11 Furthermore, this information might show when members of CAPs evaluate their collaborative process to determine whether the CAP is meeting its goals.103 Thus, standards are needed for reporting evaluations of CAPs by collaboration researchers. A few of the CAP studies contained a theory‐based model to guide the evaluation of the partnership group process. These models provide a theoretical framework for the development of CAPs and contribute standardization, science, and rigor during their evaluation. One such conceptual model, the Model of Research‐Community Partnership,36 was used to guide the evaluation of the CAPs discussed in our systematic review. This model provides guidance that may be used across stages of collaboration (ie, formation, execution of activities, and sustainment), and in combination with the results of this systematic review, collaborative group partners may be able to emphasize specific facilitating factors to promote positive collaborative processes.

Both interpersonal and operational collaborative processes are important during the formation of CAPs. Interpersonal processes are constructs pertaining to the quality of relationships or communication among partners. Seven facilitating interpersonal factors were found in this systematic review: “Trust among partners,” “Respect among partners,” “Shared vision, goals, and/or mission,” “Good relationship among partners,” “Effective and/or frequent communication,” “Clearly differentiated roles/functions of partners,” and “Effective conflict resolution.” Seven hindering interpersonal factors were identified as well: “Unclear roles and/or functions of partners,” “Poor communication among partners,” “Distrust among partners,” “Lack of shared vision, goals, and/or mission,” “Lack of common language or shared terms among partners,” “Bad relationship,” and “Differing expectations of partners.” Operational processes are constructs pertaining to the logistics and quality of partnership functioning in the CAP. Five facilitating operational factors were identified in this systematic review: “Well‐structured meetings,” “Good quality of leadership,” “Mutual benefit for all partners,” “Good selection of partners,” and “Positive community impact.” Three hindering operational factors were “Excessive time commitment,” “Excessive funding pressures or control struggles,” and “High burden of activities/tasks.” Further study of the relative influence of each factor on the development of CAPs is needed to gauge their relative impact on the CAPs’ sustainment.
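The groupings in this paragraph can be restated compactly as a small data structure. The Python sketch below is illustrative only: the variable names are assumptions, and the labels are lightly harmonized with the appendix legends. It tallies the factors by group and checks the hindering factors against the 11 listed in the Appendix 2 legend. A factor that appears in the legend but in neither hindering list above is left unclassified, not assigned by guesswork.

```python
# Factor groupings as listed in the paragraph above (labels harmonized with
# the appendix legends; all names here are illustrative).
facilitating = {
    "interpersonal": [
        "Trust among partners",
        "Respect among partners",
        "Shared vision, goals, and/or mission",
        "Good relationship among partners",
        "Effective and/or frequent communication",
        "Clearly differentiated roles/functions of partners",
        "Effective conflict resolution",
    ],
    "operational": [
        "Well-structured meetings",
        "Good quality of leadership",
        "Mutual benefit for all partners",
        "Good selection of partners",
        "Positive community impact",
    ],
}

hindering = {
    "interpersonal": [
        "Unclear roles and/or functions of partners",
        "Poor communication among partners",
        "Distrust among partners",
        "Lack of shared vision, goals, and/or mission",
        "Lack of common language or shared terms among partners",
        "Bad relationship among partners",
        "Differing expectations of partners",
    ],
    "operational": [
        "Excessive time commitment",
        "Excessive funding pressures or control struggles",
        "High burden of activities/tasks",
    ],
}

# The 11 hindering factors named in the Appendix 2 legend, in legend order.
appendix_hindering = [
    "Excessive time commitment",
    "Excessive funding pressures or control struggles",
    "Unclear roles and/or functions of partners",
    "Poor communication among partners",
    "Inconsistent partner participation or membership",
    "High burden of activities/tasks",
    "Distrust among partners",
    "Lack of shared vision, goals, and/or mission",
    "Lack of common language or shared terms among partners",
    "Bad relationship among partners",
    "Differing expectations of partners",
]


def count_factors(groups):
    """Total number of factors across the interpersonal and operational groups."""
    return sum(len(names) for names in groups.values())


classified = {name for names in hindering.values() for name in names}
unclassified_hindering = [f for f in appendix_hindering if f not in classified]

print(count_factors(facilitating))  # 12
print(count_factors(hindering))     # 10
print(unclassified_hindering)       # ['Inconsistent partner participation or membership']
```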

Strengths and Weaknesses

To our knowledge, ours is the first rigorous systematic review to identify key facilitating and hindering factors of community‐academic partnerships, using the PRISMA criteria (eg, rigorous eligibility/ineligibility criteria, structured coding schemas, and evaluation of risk of bias within and across studies).44 This study evaluated CAP characteristics, the state of the science, factors facilitating and hindering the collaborative process, and CAP outcomes. Moreover, this systematic review puts forth a common term for these kinds of collaborative partnerships and provides a universal conceptual definition to guide the continued study of this field of research.

We should note some of the limitations of our systematic review. First, only those studies of CAPs that were published in peer‐reviewed literature were eligible for inclusion. “Gray literature” and unpublished literature were not included. As a result, the review may contain publication bias, as it excludes data on CAPs that were not funded or published. Second, many CAP studies did not provide information about conflicts of interest. Last, relatively few of the studies (n = 19) in this systematic review took place outside the United States, potentially limiting the assessment of cultural variation in CAPs. Although some publications concluded that the use of CAPs increases research feasibility and relevance,12, 17, 55, 77, 81 we believe that there can be no rigorous experimentation to test this hypothesis without removing the methodological and reporting limitations we have noted.

Implications for Policy and Practice

Given the long history of, and increased emphasis on, including community stakeholders in the research process, it is important to understand the state of the science in order to determine whether community‐academic partnerships actually are beneficial, as has been hypothesized. While the state of the research is currently evaluative, this systematic review provides a foundation from which measures may be developed. Important measures include a theory‐based evaluation of the collaborative process and an assessment of both proximal outcomes (eg, presence and measurement of partnership synergy or knowledge exchange, completion of tangible goals) and distal outcomes (eg, improved program or intervention implementation in the community, an enhanced capacity to implement programs or interventions, better community care, and a sustainable CAP infrastructure). Moreover, if community‐academic partnerships are to be formed for research, evaluation, or service projects, a clear understanding of the characteristics that facilitate or hinder the collaborative group process would help with forming, developing, and sustaining the CAP, as well as promoting engagement among the partners and guiding the specific collaborative strategies that meet the CAP's goal(s).

Implications for Future Research

Given the amount of time that CAPs have been used and written about, it is noteworthy that the empirical evaluations of them are inadequate. Strengthening conceptual clarity by using standardized terminology, definitions, and methods is an important research direction for this field. It is important to note as well that the articles included in this systematic review seldom mentioned the “success” of particular community‐academic partnerships. These articles did allude to the concept of success by discussing proximal outcomes, such as the characteristics of the CAP itself (eg, duration, partner retention) and the tangible products that were produced or refined. Studies specifically singling out factors common to successful CAPs may be useful for future research. Once the characteristics of CAPs and CAP processes are better understood, the hypothesis that the involvement of collaborative partners increases the relevance, feasibility, and utility of research questions and designs can be tested. Finally, once standardized methods have been developed, comparative effectiveness trials of CAPs using various partnership models, goals, and structures can be conducted in order to test whether specific CAP characteristics lead equally to intervention or service adoption, implementation, and sustainment.

Appendix 1. Facilitating Factors Reported by CAP

| Facilitating Factors | ||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| CAP # | Article | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | Ot |

| 1 | Abdulrahim et al. 201052 | X | X | X | ||||||||||

| 2 | Anderson & Bronstein 201253 | X | X | |||||||||||

| 3 | Austin & Claiborne 201154 | X | X | X | ||||||||||

| 4 | Baker et al. 199955 | X | ||||||||||||

| 5 | Benoit et al. 200517 | X | ||||||||||||

| 6 | Blackman et al. 200256 | X | ||||||||||||

| 7 | Bowen & Martens 200557 | X | X | X | X | X | ||||||||

| Bowen & Martens 200658 | ||||||||||||||

| 8 | Brookman‐Frazee et al. 201236 | X | X | X | X | |||||||||

| 9 | Bryan et al. 201459 | |||||||||||||

| 10 | Cargo et al. 201160 | |||||||||||||

| 11 | Carlton et al. 200961 | X | X | X | X | X | ||||||||

| 12 | Chaskin et al. 200634 | X | X | |||||||||||

| 13 | Christie et al. 200762 | X | X | X | X | |||||||||

| 14 | Christie et al. 200762 | X | X | X | X | X | ||||||||

| 15 | Christopher et al. 201163 | X | X | X | ||||||||||

| 16 | Cobb & Rubin 200664 | X | ||||||||||||

| 17 | Deppeler 200665 | X | X | X | X | X | ||||||||

| 18 | Drabble et al. 201366 | |||||||||||||

| 19 | Ebersohn et al. 201567 | |||||||||||||

| 20 | Fook et al. 201168 | X | X | X | X | X | X | X | ||||||

| 21 | Fouche & Lunt 201069 | X | X | X | ||||||||||

| 22 | Friedman et al. 201370 | |||||||||||||

| 23 | Galinsky et al. 199371 | X | X | X | ||||||||||

| 24 | Garland et al. 200635 | X | X | |||||||||||

| 25 | Goodnough 201472 | |||||||||||||

| 26 | Groen & Hyland‐Russell 201273 | |||||||||||||

| 27 | Groen & Hyland‐Russell 201273 | |||||||||||||

| 28 | Haire‐Joshu et al. 200174 | |||||||||||||

| 29 | Hoeijmakers et al. 201375 | |||||||||||||

| 30 | Matusov & Smith 201176 | X | X | X | X | |||||||||

| 31 | Mayo et al. 200977 | X | X | X | ||||||||||

| 32 | McCauley et al. 200178 | X | ||||||||||||

| 33 | Metzler et al. 200379 | X | ||||||||||||

| 34 | Metzler et al. 200379 | X | X | |||||||||||

| 35 | Metzler et al. 200379 | X | ||||||||||||

| 36 | Miller & Hafner 200880 | X | X | |||||||||||

| 37 | Minkler et al. 2008a81 | X | X | X | ||||||||||

| 38 | Minkler et al. 2008a81 | X | X | X | ||||||||||

| 39 | Minkler et al. 2008a81 | X | X | X | ||||||||||

| 40 | Minkler et al. 2008a81 | X | X | X | ||||||||||

| Tajik & Minkler 2006–200782 | ||||||||||||||

| 41 | Minkler et al. 2008b83 | X | X | X | X | |||||||||

| 42 | Mosavel et al. 201084 | X | ||||||||||||

| 43 | Perrault et al. 201185 | X | X | X | X | X | ||||||||

| 44 | Postma 200886 | X | X | |||||||||||

| 45 | Richardson & Grose 201387 | |||||||||||||

| Richardson & Grose 201488 | ||||||||||||||

| 46 | Riemer et al. 201289 | X | X | X | X | |||||||||

| 47 | Solomon et al. 200190 | X | X | |||||||||||

| 48 | Stahl & Shdaimah 200891 | X | X | X | ||||||||||

| 49 | Stedman‐Smith et al. 201292 | X | X | X | X | |||||||||

| 50 | Szteinberg et al. 201493 | |||||||||||||

| 51 | Teal et al. 201294 | X | X | X | ||||||||||

| 52 | Vandyck et al. 201295 | X | X | |||||||||||

| 53 | Wong et al. 201196 | X | X | |||||||||||

| 54 | Zendell et al. 200797 | X | X | X | ||||||||||

X = Coded as present.

1 = Trust among partners; 2 = Respect among partners; 3 = Good relationship among partners; 4 = Shared vision, goals, and/or mission; 5 = Effective and/or frequent communication; 6 = Well‐structured meetings; 7 = Clearly differentiated roles and/or functions of partners; 8 = Effective conflict resolution; 9 = Good quality of leadership; 10 = Mutual benefit for all partners; 11 = Good selection of partners; 12 = Positive community impact; Ot = Other.

Appendix 2. Hindering Factors Reported by CAP

| Hindering Factors | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| CAP # | Article | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | Ot |

| 1 | Abdulrahim et al. 201052 | X | X | ||||||||||

| 2 | Anderson & Bronstein 201253 | X | X | ||||||||||

| 3 | Austin & Claiborne 201154 | ||||||||||||

| 4 | Baker et al. 199955 | X | |||||||||||

| 5 | Benoit et al. 200517 | X | X | X | X | ||||||||

| 6 | Blackman et al. 200256 | ||||||||||||

| 7 | Bowen & Martens 200557 | ||||||||||||

| Bowen & Martens 200658 | |||||||||||||

| 8 | Brookman‐Frazee et al. 201236 | ||||||||||||

| 9 | Bryan et al. 201459 | ||||||||||||

| 10 | Cargo et al. 201160 | ||||||||||||

| 11 | Carlton et al. 200961 | X | X | ||||||||||

| 12 | Chaskin et al. 200634 | ||||||||||||

| 13 | Christie et al. 200762 | X | |||||||||||

| 14 | Christie et al. 200762 | ||||||||||||

| 15 | Christopher et al. 201163 | ||||||||||||

| 16 | Cobb & Rubin 200664 | X | X | X | X | X | X | X | |||||

| 17 | Deppeler 200665 | X | X | ||||||||||

| 18 | Drabble et al. 201366 | ||||||||||||

| 19 | Ebersohn et al. 201567 | ||||||||||||

| 20 | Fook et al. 201168 | X | X | X | |||||||||

| 21 | Fouche & Lunt 201069 | ||||||||||||

| 22 | Friedman et al. 201370 | ||||||||||||

| 23 | Galinsky et al. 199371 | ||||||||||||

| 24 | Garland et al. 200635 | X | X | X | |||||||||

| 25 | Goodnough 201472 | ||||||||||||

| 26 | Groen & Hyland‐Russell 201273 | ||||||||||||

| 27 | Groen & Hyland‐Russell 201273 | X | |||||||||||

| 28 | Haire‐Joshu et al. 200174 | X | |||||||||||

| 29 | Hoeijmakers et al. 201375 | ||||||||||||

| 30 | Matusov & Smith 201176 | X | |||||||||||

| 31 | Mayo et al. 200977 | X | X | ||||||||||

| 32 | McCauley et al. 200178 | X | |||||||||||

| 33 | Metzler et al. 200379 | X | X | ||||||||||

| 34 | Metzler et al. 200379 | X | X | ||||||||||

| 35 | Metzler et al. 200379 | X | X | ||||||||||

| 36 | Miller & Hafner 200880 | X | X | ||||||||||

| 37 | Minkler et al. 2008a81 | ||||||||||||

| 38 | Minkler et al. 2008a81 | ||||||||||||

| 39 | Minkler et al. 2008a81 | ||||||||||||

| 40 | Minkler et al. 2008a81 | X | |||||||||||

| Tajik & Minkler 2006–200782 | |||||||||||||

| 41 | Minkler et al. 2008b83 | ||||||||||||

| 42 | Mosavel et al. 201084 | X | |||||||||||

| 43 | Perrault et al. 201185 | X | X | ||||||||||

| 44 | Postma 200886 | X | |||||||||||

| 45 | Richardson & Grose 201387 | ||||||||||||

| Richardson & Grose 201488 | |||||||||||||

| 46 | Riemer et al. 201289 | X | |||||||||||

| 47 | Solomon et al. 200190 | ||||||||||||

| 48 | Stahl & Shdaimah 200891 | X | X | ||||||||||

| 49 | Stedman‐Smith et al. 201292 | X | |||||||||||

| 50 | Szteinberg et al. 201493 | ||||||||||||

| 51 | Teal et al. 201294 | X | X | ||||||||||

| 52 | Vandyck et al. 201295 | X | |||||||||||

| 53 | Wong et al. 201196 | X | X | X | X | ||||||||

| 54 | Zendell et al. 200797 | X | X | X | |||||||||

X = Coded as present.

1 = Excessive time commitment; 2 = Excessive funding pressures or control struggles; 3 = Unclear roles and/or functions of partners; 4 = Poor communication among partners; 5 = Inconsistent partner participation or membership; 6 = High burden of activities/tasks; 7 = Distrust among partners; 8 = Lack of shared vision, goals, and/or mission; 9 = Lack of common language or shared terms among partners; 10 = Bad relationship among partners; 11 = Differing expectations of partners; Ot = Other.

Appendix 3. CAP Outcomes

| Proximal (Process) Outcomes | Distal Outcomes | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| CAP # | Article | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

| 1 | Abdulrahim et al. 201052 | ||||||||

| 2 | Anderson & Bronstein 201253 | X | X | X | |||||

| 3 | Austin & Claiborne 201154 | X | |||||||

| 4 | Baker et al. 199955 | X | X | ||||||

| 5 | Benoit et al. 200517 | X | X | ||||||

| 6 | Blackman et al. 200256 | X | X | X | X | ||||

| 7 | Bowen & Martens 200557 | X | X | ||||||

| Bowen & Martens 200658 | |||||||||

| 8 | Brookman‐Frazee et al. 201236 | X | |||||||

| 9 | Bryan et al. 201459 | X | |||||||

| 10 | Cargo et al. 201160 | X | X | ||||||

| 11 | Carlton et al. 200961 | ||||||||

| 12 | Chaskin et al. 200634 | X | |||||||

| 13 | Christie et al. 200762 | ||||||||

| 14 | Christie et al. 200762 | X | |||||||

| 15 | Christopher et al. 201163 | ||||||||

| 16 | Cobb & Rubin 200664 | ||||||||

| 17 | Deppeler 200665 | X | X | ||||||

| 18 | Drabble et al. 201366 | X | |||||||

| 19 | Ebersohn et al. 201567 | ||||||||

| 20 | Fook et al. 201168 | ||||||||

| 21 | Fouche & Lunt 201069 | X | X | ||||||

| 22 | Friedman et al. 201370 | ||||||||

| 23 | Galinsky et al. 199371 | X | |||||||

| 24 | Garland et al. 200635 | X | |||||||

| 25 | Goodnough 201472 | X | X | ||||||

| 26 | Groen & Hyland‐Russell 201273 | X | X | ||||||

| 27 | Groen & Hyland‐Russell 201273 | X | |||||||

| 28 | Haire‐Joshu et al. 200174 | X | |||||||

| 29 | Hoeijmakers et al. 201375 | X | X | X | |||||

| 30 | Matusov & Smith 201176 | X | |||||||

| 31 | Mayo et al. 200977 | X | X | X | |||||

| 32 | McCauley et al. 200178 | X | |||||||

| 33 | Metzler et al. 200379 | X | X | X | X | ||||

| 34 | Metzler et al. 200379 | X | X | X | X | ||||

| 35 | Metzler et al. 200379 | X | X | X | |||||

| 36 | Miller & Hafner 200880 | X | |||||||

| 37 | Minkler et al. 2008a81 | X | X | ||||||

| 38 | Minkler et al. 2008a81 | X | X | ||||||

| 39 | Minkler et al. 2008a81 | X | X | ||||||

| 40 | Minkler et al. 2008a81 | X | X | X | |||||

| Tajik & Minkler 2006–200782 | |||||||||

| 41 | Minkler et al. 2008b83 | X | X | ||||||

| 42 | Mosavel et al. 201084 | X | |||||||

| 43 | Perrault et al. 201185 | X | |||||||

| 44 | Postma 200886 | X | |||||||

| 45 | Richardson & Grose 201387 | ||||||||

| Richardson & Grose 201488 | |||||||||

| 46 | Riemer et al. 201289 | X | |||||||

| 47 | Solomon et al. 200190 | ||||||||

| 48 | Stahl & Shdaimah 200891 | X | |||||||

| 49 | Stedman‐Smith et al. 201292 | X | X | ||||||

| 50 | Szteinberg et al. 201493 | X | X | ||||||

| 51 | Teal et al. 201294 | X | X | X | |||||

| 52 | Vandyck et al. 201295 | X | |||||||

| 53 | Wong et al. 201196 | X | X | X | |||||

| 54 | Zendell et al. 200797 | X | X | X | |||||

X = Coded as present.

Proximal (Process) Outcomes: 1 = Partnership synergy; 2 = Knowledge exchange; 3 = Tangible products.

Distal Outcomes: 4 = Improved program or intervention implementation in the community; 5 = Developed or enhanced capacity to implement programs or interventions; 6 = Improved community care; 7 = Sustainable community‐academic partnership infrastructure; 8 = Changed community context.

Funding/Support: This work was supported by a grant from the National Institute of Mental Health, NIMH K01MH093477 (AD). The preparation of this article was supported in part by the Implementation Research Institute (IRI) at the George Warren Brown School of Social Work, Washington University in St. Louis, through an award from the National Institute of Mental Health (R25MH080916) and the Quality Enhancement Research Initiative (QUERI), Department of Veterans Affairs Contract, Veterans Health Administration, Office of Research and Development, Health Services Research and Development Service.

Conflict of Interest Disclosure: All authors have completed and submitted the ICMJE Form for Disclosure of Potential Conflicts of Interest. Aubyn C. Stahmer disclosed a grant from Autism Speaks for work outside of this publication. No other disclosures were reported.

Acknowledgments: We would like to thank Lauren Brookman‐Frazee, PhD; attendees of the University of California, San Diego Implementation Science Seminar; and authors of included CAP literature who took the time to provide feedback related to the development of the community‐academic partnership conceptual definition.

References

- 1. Balas EA, Boren SA. Managing clinical knowledge for health care improvement. In: Bemmel J, McCray AT, eds. Yearbook of Medical Informatics 2000: Patient‐Centered Systems. Stuttgart, Germany: Schattauer Verlagsgesellschaft; 2000.

- 2. Glasgow RE, Lichtenstein E, Marcus AC. Why don't we see more translation of health promotion research to practice? Rethinking the efficacy‐to‐effectiveness transition. Am J Public Health. 2003;93(8):1261‐1267.

- 3. Staniszewska S, Haywood KL, Brett J, Tutton L. Patient and public involvement in developing patient‐reported outcome measures: evolution not revolution. Patient. 2012;5(2):79‐87.

- 4. Altman DG. Sustaining interventions in community systems: on the relationship between researchers and communities. Health Psychol. 1995;14(6):526‐536.

- 5. Israel BA, Schulz AJ, Parker EA, Becker AB. Review of community‐based research: assessing partnership approaches to improve public health. Annu Rev Public Health. 1998;19:173‐202.

- 6. Wandersman A, Duffy J, Flaspohler P, et al. Bridging the gap between prevention research and practice: the interactive systems framework for dissemination and implementation. Am J Community Psychol. 2008;41:171‐181.

- 7. Weeks MR, Liao S, Li F, et al. Challenges, strategies, and lessons learned from a participatory community intervention study to promote female condoms among rural sex workers in southern China. AIDS Educ Prev. 2010;22:252‐271.

- 8. Lasker RD, Weiss ES, Miller R. Partnership synergy: a practical framework for studying and strengthening the collaborative advantage. Milbank Q. 2001;79(2):179‐205.

- 9. Stahmer AC, Suhrheinrich J, Reed S, Bolduc C, Schreibman L. Pivotal response teaching in the classroom setting. Prev Sch Failure. 2010;54(4):265‐274.

- 10. Sibbald SL, Tetroe J, Graham ID. Research funder required research partnerships: a qualitative inquiry. Implementation Sci. 2014;9.

- 11. Kone A, Sullivan M, Senturia KD, Chrisman NJ, Ciske SJ, Krieger JW. Improving collaboration between researchers and communities. Public Health Rep. 2000;115:243‐248.

- 12. Kobeissi L, Nakkash R, Ghantous Z, Abou Saad M, Yassin N. Evaluating a community based participatory approach to research with disadvantaged women in the southern suburbs of Beirut. J Community Health. 2011;36(5):741‐747.

- 13. Reynolds KD, Spruijt‐Metz D. Translational research in childhood obesity prevention. Eval Health Professions. 2006;29(2):219‐245.

- 14. Dearing JW, Kreuter MW. Designing for diffusion: how can we increase uptake of cancer communication innovations? Patient Educ Counseling. 2010;81(Suppl. 1):S100‐S110.

- 15. Glasgow RE. Critical measurement issues in translational research. Res Soc Work Pract. 2009;19:560‐568.

- 16. Drahota A, Aarons GA, Stahmer AC. Developing the Autism Model of Implementation for autism spectrum disorder community providers: study protocol. Implementation Sci. 2012;7(85):1‐10.

- 17. Benoit C, Jansson M, Millar A, Phillips R. Community‐academic research on hard‐to‐reach populations: benefits and challenges. Qualitative Health Res. 2005;15(2):263‐282.

- 18. Washington WN. Collaborative/participatory research. J Health Care Poor Underserved. 2004;15(1):18‐29.

- 19. Hergenrather KC, Geishecker S, McGuire‐Kuletz M, Gitlin DJ, Rhodes SD. An introduction to community‐based participatory research. Rehabil Educ. 2010;24(3‐4):225‐238.

- 20. Redman RW. The power of partnerships: a model for practice, education, and research. Res Theory Nurs Pract. 2003;17(3):187‐189.

- 21. Lewin K. Field Theory in Social Science: Selected Theoretical Papers. Cartwright D, ed. Oxford, England: Harpers; 1951.

- 22. Green L, Daniel M, Novick L. Partnerships and coalitions for community‐based research. Public Health Rep. 2001;116(Suppl. 1):20‐31.

- 23. Eisinger A, Senturia K. Doing community‐driven research: a description of Seattle Partners for Healthy Communities. J Urban Health. 2001;78(3):519‐534.

- 24. Canadian Institutes of Health Research. Guide to knowledge translation planning at CIHR: integrated and end of grant approaches. http://www.cihr‐irsc.gc.ca/e/documents/kt_lm_ktplan‐en.pdf. Updated March 19, 2015. Accessed February 10, 2016.

- 25. National Institutes of Health Office of Behavioral and Social Science Research. Dissemination and implementation. n.d. http://obssr.od.nih.gov/scientific_areas/translation/dissemination_and_implementation/index.aspx. Accessed June 1, 2014.

- 26. Patient‐Centered Outcomes Research Institute. National priorities for research and research agenda. 2012. http://www.pcori.org/assets/PCORI‐National‐Priorities‐and‐Research‐Agenda‐2012‐05‐21‐FINAL1.pdf. Accessed June 1, 2014.

- 27. National Center for Advancing Translational Sciences. Clinical and translational science awards. http://www.ncats.nih.gov/research/cts/ctsa/ctsa.html. Accessed March 12, 2015.

- 28. Stetler CB, McQueen L, Demakis J, Mittman BS. An organizational framework and strategic implementation for system‐level change to enhance research‐based practice. QUERI Series. Implementation Sci. 2008;3(30):1‐11.

- 29. Defense Business Board. Public‐private collaboration in the Department of Defense: report to the Secretary of Defense. 2012. http://dbb.defense.gov/Portals/35/Documents/Reports/2012/FY12‐4_Public_Private_Collaboration_in_the_Department_of_Defense_2012‐7.pdf. Accessed June 1, 2014.

- 30. Israel BA, Eng E, Schulz AJ, Parker EA, eds. Methods in Community‐Based Participatory Research for Health. San Francisco, CA: Jossey‐Bass; 2005.

- 31. Kidd SA, Kral MJ. Practicing participatory action research. J Counseling Psychol. 2005;52(2):187‐195.

- 32. Strand K. Community‐Based Research and Higher Education: Principles and Practices. San Francisco, CA: Jossey‐Bass; 2003.

- 33. Troppe M. Participatory Action Research: Merging the Community and Scholarly Agendas. Providence, RI: Campus Compact; 1994.

- 34. Chaskin RJ, Goerge RM, Skyles A, Guiltinan S. Measuring social capital: an exploration in community‐research partnership. J Community Psychol. 2006;34(4):489‐514.

- 35. Garland AF, Plemmons D, Koontz L. Research‐practice partnership in mental health: lessons from participants. Adm Policy Ment Health. 2006;33(5):517‐528.

- 36. Brookman‐Frazee L, Stahmer AC, Lewis K, Feder JD, Reed S. Building a research‐community collaborative to improve community care for infants and toddlers at risk for autism spectrum disorders. J Community Psychol. 2012;40:715‐734.

- 37. Garland AF, Brookman‐Frazee L. Therapists and researchers: advancing collaboration. Psychother Res. 2015;25(1):95‐107.

- 38. LaVeaux D, Christopher S. Contextualizing CBPR: key principles of CBPR meet the indigenous research context. Pimatisiwin. 2009;7(1):1‐16.

- 39. Spector AY. CBPR with service providers: arguing a case for engaging practitioners in all phases of research. Health Promotion Pract. 2012;13(2):252‐258.

- 40. Stacciarini JR, Shattell MM, Coady M, Wiens B. Review: community‐based participatory research approach to address mental health in minority populations. Community Ment Health J. 2011;47(5):489‐497.

- 41. Shalowitz MU, Isacco A, Barquin N, et al. Community‐based participatory research: a review of the literature with strategies for community engagement. J Dev Behav Pediatr. 2009;30(4):350‐361.

- 42. Sherrod LR. “Giving child development knowledge away”: using university‐community partnerships to disseminate research on children, youth, and families. Appl Dev Sci. 1999;3(4):228‐234.

- 43. Brookman‐Frazee L, Stahmer AC, Stadnick N, Chlebowski C, Herschell A, Garland AF. Characterizing the use of research‐community partnerships in studies of evidence‐based interventions in children's community services. Adm Policy Ment Health. 2016;43(1):93‐104.

- 44. Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta‐analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med. 2009;6(7):1‐28.

- 45. Butterfoss F. Process evaluation for community participation. Annu Rev Public Health. 2006;27:323‐340.

- 46. Cargo M, Mercer SL. The value and challenges of participatory research: strengthening its practice. Annu Rev Public Health. 2008;29:325‐350.

- 47. Chrisman NJ. Extending cultural competence through systems change: academic, hospital, and community partnerships. J Transcultural Nurs. 2007;18(1, Suppl.):68S‐76S.

- 48. Trickett EJ, Espino S. Collaboration and social inquiry: multiple meanings of a construct and its role in creating useful and valid knowledge. Am J Community Psychol. 2004;34(1‐2):1‐69.

- 49. The Examining Community‐Institutional Partnerships for Prevention Research Group. Developing and sustaining community‐based participatory research partnerships: a skill‐building curriculum. 2006. www.cbprcurriculum.info. Accessed March 1, 2011.

- 50. Willms D, Best AJ, Taylor DW, et al. A systematic approach for using qualitative methods in primary prevention research. Med Anthropol Q. 1990;4:391‐409.

- 51. Glaser BG, Strauss AL. The Discovery of Grounded Theory: Strategies for Qualitative Research. Chicago, IL: Aldine; 1967.

- 52. Abdulrahim S, Shareef ME, Alameddine M, Afifi RA, Hammad S. The potentials and challenges of an academic‐community partnership in a low‐trust urban context. J Urban Health. 2010;87(6):1017‐1020.

- 53. Anderson EM, Bronstein LR. Examining interdisciplinary collaboration within an expanded school mental health framework: a community–university initiative. Adv Sch Ment Health Promotion. 2012;5(1):23‐37.