Abstract

Background and Objective

Quality measures used in pay-for-performance systems are intended to address specific quality goals, such as safety, efficiency, effectiveness, timeliness, equity and patient-centeredness. Given the small number of narrowly focused measures in prostate cancer care, we sought to determine whether adherence to any of the available payer-driven quality measures influences patient-centered outcomes, including health-related quality of life (HRQOL), patient satisfaction, and treatment-related complications.

Methods

The Comparative Effectiveness Analysis of Surgery and Radiation (CEASAR) study is a population-based, prospective cohort study that enrolled 3708 men with clinically localized prostate cancer during 2011 and 2012, of whom 2601 completed the 1-year survey and underwent complete chart abstraction. Compliance with six quality indicators endorsed by national consortia was assessed. Multivariable regression was used to determine the relationship between indicator compliance and Expanded Prostate Cancer Index Composite (EPIC-26) instrument summary scores, satisfaction scale scores (SSS-CC) and treatment-related complications.

Results

Overall rates of compliance with these quality measures ranged from 64% to 88%. Three of the six measures were weakly associated with 1-year sexual function scores (β −4.6 and 2.93) or bowel function scores (β 1.69; all p ≤ 0.05), while the remaining measures had no significant relationship with patient-reported HRQOL outcomes. Satisfaction scores and treatment-related complications were not associated with quality measure compliance.

Conclusions

Compliance with available nationally-endorsed quality indicators, which were designed to incentivize effective and efficient care, was not associated with clinically important changes in patient-centered outcomes (HRQOL, satisfaction or complications) within 1 year.

Keywords: prostate cancer, quality, health-related quality of life, patient-centered, PQRS

Introduction

Quality measures establish benchmarks for high-quality care, which can hold healthcare providers accountable and make the process of healthcare delivery more transparent1. Nonetheless, quality measurement is complex and the definition of high-quality care is contingent on the perspective of stakeholders, including patients, policymakers, and payers. Given the unsustainable growth in healthcare costs, coupled with variation in the quality-of-care delivered to patients2, there has been considerable policy interest in the adoption of a value-based model designed to ensure high-quality care at reasonable cost3.

Despite the expectation that adherence to structure and process quality measures translates to improvements in patient outcome(s), there remain few data that support the current approach to quality measurement. The dimensions of quality-of-care, according to the conceptual framework proposed by Donabedian4, include structure (characteristics of the setting in which care is delivered); process (characteristics of the interaction between care provider and patient); and outcomes (effects of healthcare upon the patient). Most quality measures used in pay-for-performance systems are process measures because they are easiest to measure and are responsive to incentives. But outcomes, particularly those important to patients, may be the most salient measures, although they are difficult to measure and risk-adjust. Stakeholders have drawn increasing attention to patient-centered outcomes. Indeed, the National Quality Strategy emphasizes the importance of “making health care more patient-centered” and the Affordable Care Act established the Patient-Centered Outcomes Research Institute to foster research in this area. In an ideal value-based care system, adherence to available quality measures would result not only in improved efficiency and effective care, but also in outcomes of importance to patients, such as improved safety, satisfaction and quality of life. Whether adherence to the narrowly focused process measures that underlie current value-based care systems results in meaningful improvement in patient-centered outcomes remains an open question.

Prostate cancer is a common disease, with a prevalence of 2.71 million and an incidence of approximately 240,000 new cases per year in the US5. An initial list of twenty-two quality measures for localized prostate cancer was developed at RAND through literature review, expert opinion, and patient focus groups6,7. The American Urological Association (AUA) and the Physician Consortium for Performance Improvement (PCPI) then convened a multi-stakeholder panel and three of these measures were endorsed by the National Quality Forum (NQF) for inclusion in the Centers for Medicare and Medicaid Services (CMS) Physician Quality Reporting System (PQRS). These measures include avoidance of bone scan in low risk patients, adjuvant androgen-deprivation therapy (ADT) for high risk patients undergoing radiation (XRT), and complete pathology reporting for radical prostatectomy specimens (Table 1). While these measures are intended to address efficient care and effective care, it would be ideal if adherence to these measures also resulted in better patient-centered outcomes. In localized prostate cancer, a disease with nearly 100% 5-year survival, the most important clinical outcomes are functional outcomes of treatment, complications, and satisfaction. Therefore, the goal of our study was to determine whether adherence to nationally-endorsed quality measures was associated with patient-reported functional outcomes, patient satisfaction, and treatment-related complications.

Table 1.

Compliance with Quality Measures

| Measure | Source | # Compliant | Total | % |
|---|---|---|---|---|
| 1. Avoidance of overuse of bone scan in men with low-risk tumors | PQRS #102, PCPI #3, NQF 0389 | 881 | 1155 | 76.3% |
| 2. ADT for high-risk patients undergoing XRT | PQRS #104, PCPI #5, NQF 0390 | 160 | 210 | 76.2% |
| 3. Documentation of cT stage and biopsy Gleason in newly diagnosed patients | PCPI #2 | 1663 | 2310 | 72.0% |
| 4. Documentation of DRE, cT stage, biopsy Gleason prior to 1° therapy | PCPI #1 | 1228 | 1924 | 63.8% |
| 5. Documentation of discussion of treatment options | PCPI #4 | 1338 | 1897 | 70.5% |
| 6. Documentation of pathologic T and N stage, Gleason score, and margin status on pathology report in men undergoing RP | PQRS #250, NQF 1853 | 1096 | 1252 | 87.5% |

PQRS: Physician Quality Reporting System, PCPI: Physician Consortium for Performance Improvement, NQF: National Quality Forum

ADT: androgen deprivation therapy, DRE: digital rectal examination, RP: radical prostatectomy
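The percentages in Table 1 follow directly from the compliant and total counts. As a minimal sketch (the dictionary layout and variable names are illustrative assumptions, not part of the study's analysis code), they can be reproduced as follows:

```python
# Counts copied from Table 1: measure number -> (number compliant, total eligible).
table_1 = {
    1: (881, 1155),   # bone scan avoidance, low-risk tumors
    2: (160, 210),    # ADT for high-risk patients undergoing XRT
    3: (1663, 2310),  # documentation in newly diagnosed patients
    4: (1228, 1924),  # documentation prior to primary therapy
    5: (1338, 1897),  # documentation of discussion of treatment options
    6: (1096, 1252),  # complete pathology report after RP
}

# Compliance rate per measure, as a percentage rounded to one decimal place.
compliance_pct = {
    measure: round(100 * compliant / total, 1)
    for measure, (compliant, total) in table_1.items()
}

lowest = min(compliance_pct.values())
highest = max(compliance_pct.values())
print(compliance_pct)
print(f"range: {lowest}% to {highest}%")
```

Note that the denominators differ by measure because each measure applies only to its relevant patient population.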

Methods

Patients

The Comparative Effectiveness Analysis of Surgery and Radiation (CEASAR) study is a population-based, prospective cohort study that enrolled 3708 men with clinically localized prostate cancer from January 2011 to February 2012, of whom 2601 completed the 12-month survey and underwent complete medical chart abstraction and were therefore included in the analytic cohort (supplemental figure). The parent study design and patient characteristics have been described previously8 (supplemental table 1). Patients were accrued from five population-based Surveillance, Epidemiology and End Results (SEER) registry catchment areas (Atlanta, Los Angeles, Louisiana, New Jersey, and Utah), as well as an additional prostate cancer patient registry, the Cancer of the Prostate Strategic Urologic Research Endeavor (CaPSURE™)9.

Data Collection

Data were collected through manual chart abstraction at 1 year as well as patient surveys at baseline and at 1 year. The Expanded Prostate Cancer Index Composite (EPIC-26) characterizes disease-specific function or HRQOL domains (sexual function, urinary incontinence, urinary irritation/obstruction, and bowel function), each scored from 0–100, with higher scores indicating better HRQOL.10 EPIC-26 is widely used in prostate cancer research and practice. Evidence supports its high test-retest reliability and internal consistency reliability (each r ≥0.80 and Cronbach’s alpha ≥0.82) for most domain-specific sub-scales, and its excellent criterion validity without excessive overlap when compared to instruments that measure related but distinct domains11. Clinically meaningful differences in sub-scale scores have been quantified as 4–6 points in the bowel domain, 6–9 points in the urinary domains and 10–12 points in the sexual domain12. We also administered a satisfaction scale based on the Service Satisfaction Scale for Cancer Care (SSS-CC)13–15 at 1 year. The internal consistency of the SSS-CC has been estimated as >0.80; the scale was validated by comparing patient and spouse responses, treatment outcomes, and associations with other health measures, and it has been used in longitudinal prostate cancer cohort studies13–15. The abbreviated SSS-CC includes five questions regarding satisfaction with cancer care, relief of symptoms, and effectiveness. The SSS-CC was scored from 0–100, with 100 indicating higher satisfaction. A list of early (< 30d) and delayed (30–365d) complications (Figure 3) was agreed upon by an expert panel, and complications were collected through extensive medical chart review. These were evaluated as secondary outcomes. In addition, sociodemographic data, disease characteristics, comorbidity, and psychometric scales measuring participatory decision-making16 (PDM-7), social support17 (MOS Social Support Survey), and depression18 (CES-D) were collected and included as covariates.

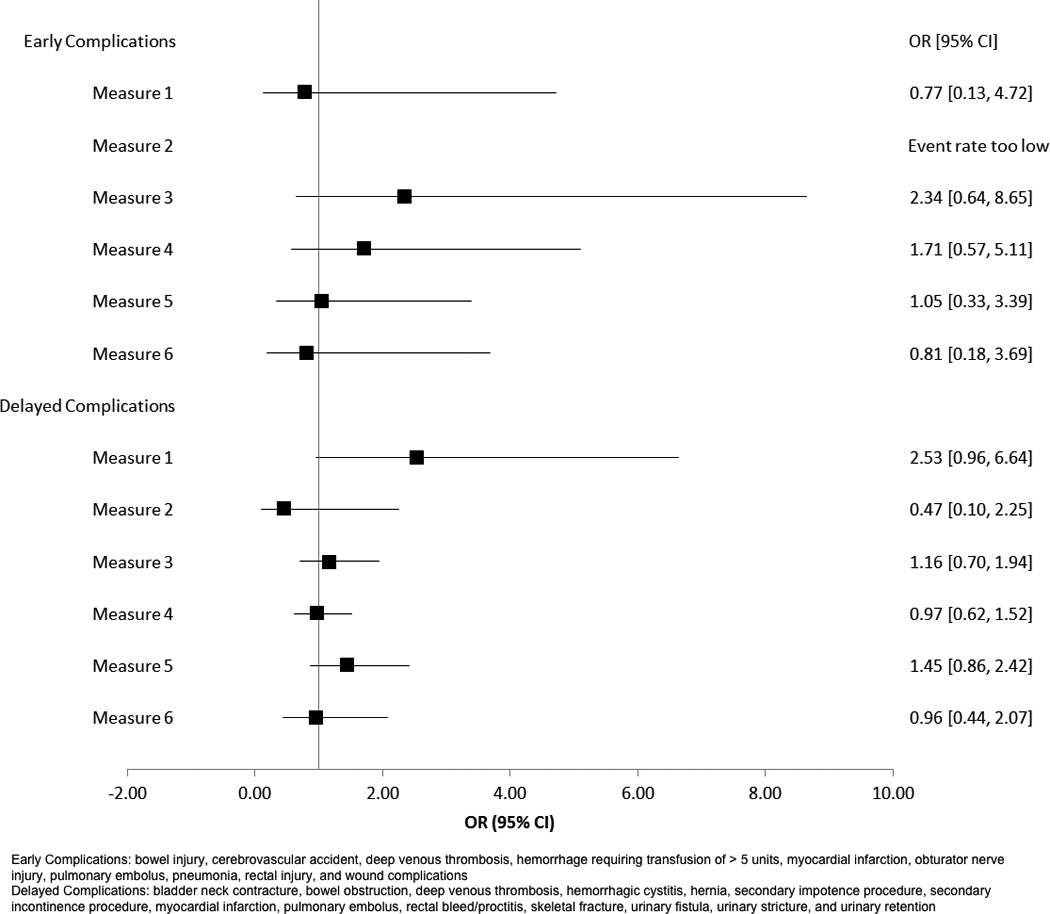

Figure 3.

Relationship between Quality Measure Compliance and Treatment-Related Complications

Quality Measures

Six quality measures were chosen based on their endorsement by the NQF, PCPI, and PQRS. Of these measures, three have been adopted for use in PQRS19.

For the measures that describe documentation at new diagnosis and prior to treatment, data on PSA documentation were not available, so this element was either omitted from the measure (documentation in newly diagnosed) or exchanged for digital rectal examination (documentation prior to primary therapy). For measures containing multiple elements (e.g. complete pathology documentation), every element had to be documented for the case to be counted as compliant with the quality measure.
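The all-or-nothing rule for multi-element measures can be expressed compactly. The sketch below uses measure 6 (complete pathology reporting) as an example; the field names and record layout are hypothetical and do not reflect the actual CEASAR abstraction form.

```python
# Hypothetical field names for the four elements required by measure 6 (Table 1).
PATHOLOGY_ELEMENTS = (
    "pathologic_t_stage",
    "pathologic_n_stage",
    "gleason_score",
    "margin_status",
)

def is_compliant(record, required=PATHOLOGY_ELEMENTS):
    """Return True only if every required element is documented in the record."""
    return all(record.get(name) is not None for name in required)

# Illustrative records (invented values, not study data).
complete_report = {
    "pathologic_t_stage": "pT2",
    "pathologic_n_stage": "pN0",
    "gleason_score": "3+4",
    "margin_status": "negative",
}
missing_margin = {
    "pathologic_t_stage": "pT2",
    "pathologic_n_stage": "pN0",
    "gleason_score": "3+4",
    "margin_status": None,
}

print(is_compliant(complete_report))
print(is_compliant(missing_margin))
```

Under this rule a report that omits a single element counts as non-compliant, which is stricter than scoring each element separately.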

Statistical Analysis

We calculated physician compliance with each measure in the relevant patient population. D’Amico risk stratification20 was used to determine the proper patient groups for guideline concordant imaging use. Pre-treatment EPIC scores were calculated for both measure compliant and non-compliant groups, and compared using appropriate parametric statistical tests. Multivariable linear regression analysis was performed to determine the effect of measure compliance on EPIC domain scores, adjusting for baseline EPIC score, treatment type (surgery vs. radiation), age (<65 vs. ≥65), race (white vs. other), household income level (≤$50k vs. >$50k), insurance status (Medicare, Private, or other), comorbidity (total illness burden index for prostate cancer21 [TIBI-CaP]), D’Amico risk classification, and SEER site. We performed planned sub-group analyses using different age, race, and socioeconomic groups to test the hypothesis that compliance with these measures may be of importance in certain vulnerable sub-groups. Similar models were constructed to determine the effect of measure compliance on composite satisfaction scale score, with additional adjustment for social support, CES-D, and PDM-7. Multivariable logistic regression was performed with adjustment for patient and disease characteristics to determine the effect of measure compliance on complication rates.

A significance level of 0.05 was used for all statistical inference. Stata v.11.2 (StataCorp, College Station, TX, USA) and R 3.0 (R Core Team, Vienna, Austria) were used for all statistical analyses.
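To illustrate what an adjusted coefficient (β) for measure compliance represents in the linear models described above, the following sketch fits an ordinary least squares model to a small synthetic dataset using only the Python standard library. It is not the study's analysis code (which used Stata and R); the covariates are reduced to baseline score and treatment type, and every number is invented for illustration.

```python
# Sketch only: ordinary least squares on synthetic, noise-free data.
# All variable names and values are hypothetical, not CEASAR data.

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def ols(rows, y):
    """Least-squares coefficients via the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)

# Synthetic cohort: 1-year score depends on baseline score, compliance, and treatment.
rows, y = [], []
for baseline in (40.0, 55.0, 70.0, 85.0):
    for compliant in (0, 1):
        for radiation in (0, 1):
            rows.append([1.0, baseline, compliant, radiation])  # 1.0 = intercept column
            y.append(10.0 + 0.8 * baseline + 2.0 * compliant - 5.0 * radiation)

intercept, b_baseline, b_compliant, b_radiation = ols(rows, y)
print(f"adjusted effect of compliance: {b_compliant:.2f} points")
```

Here `b_compliant` is the difference in 1-year score between compliant and non-compliant groups with baseline score and treatment held fixed, which is the quantity reported as β in the Results.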

Results

The study cohort included 2601 men, with a mean age of 64.4 years (median 65). Compliance with the six quality measures studied ranged from 63.8% to 87.5% (Table 1).

Baseline EPIC domain summary scores were calculated for measure compliant and non-compliant groups (supplemental table 2). For most measures, baseline scores were similar between groups. However, mean baseline scores in the EPIC sexual domain for measure 2 (ADT for high-risk patients) were markedly lower in the compliant group (mean 47 vs. 74, p=0.001). This may be expected, as patients with erectile dysfunction at baseline may be more likely to be offered or to accept ADT, without concerns about its adverse effects on libido and sexual performance. There were small but statistically significant differences in EPIC bowel for measure 1 (bone scan avoidance in low-risk patients) and in EPIC sexual for measure 5 (discussion of treatment options).

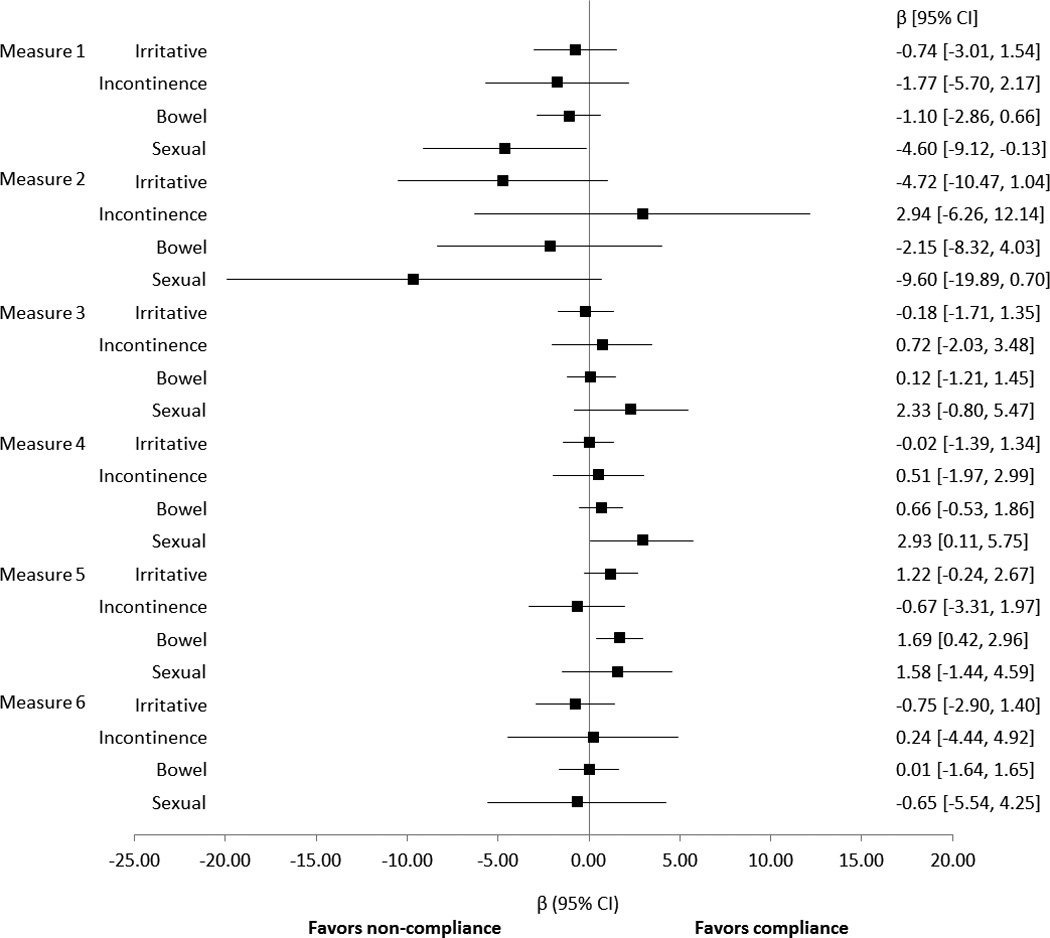

In 21 of the 24 multivariable models predicting 1-year EPIC domain scores, compliance with the quality measure was not associated with any difference in functional outcome (Figure 1). The remaining 3 models showed small but statistically significant effects. EPIC bowel scores were slightly better among patients whose physician complied with measure 5 (documentation of the discussion of treatment options; β 1.69, p=0.01). EPIC sexual scores were slightly worse among patients whose physician complied with measure 1 (avoidance of bone scan in low-risk patients; β −4.6, p=0.04) and slightly better among patients whose physician complied with measure 4 (documentation of disease characteristics prior to treatment; β 2.93, p=0.04). The forest plot (Figure 1) displays the difference in EPIC domain score by quality measure compliance, with 95% confidence intervals.

Figure 1.

Relationship between Quality Measure Compliance and EPIC-26 Instrument Summary Scores
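The three statistically significant coefficients can be set against the clinically meaningful differences quoted in the Data Collection section (4–6 points for bowel, 6–9 for urinary, 10–12 for sexual). The sketch below uses the lower bound of each range as the threshold; that choice, and the names used, are assumptions made for illustration.

```python
# Lower bounds of the clinically meaningful difference ranges (Data Collection section).
MID_LOWER_BOUND = {"bowel": 4.0, "urinary": 6.0, "sexual": 10.0}

# Statistically significant adjusted coefficients reported in the Results.
significant_effects = [
    ("measure 5", "bowel", 1.69),
    ("measure 1", "sexual", -4.6),
    ("measure 4", "sexual", 2.93),
]

def clinically_meaningful(domain, beta):
    """True if the absolute effect reaches the lower bound for its domain."""
    return abs(beta) >= MID_LOWER_BOUND[domain]

flags = {
    measure: clinically_meaningful(domain, beta)
    for measure, domain, beta in significant_effects
}
print(flags)
```

Even with the most permissive threshold in each range, none of the three effects reaches the level regarded as clinically meaningful.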

Sub-group analysis was performed using age, race, and income level, with interaction terms between these variables and measure compliance (data not shown). Among these sub-groups, patients with income <$50,000 had a significantly lower EPIC sexual domain score when the physician complied with the measure for adjuvant ADT with XRT in high-risk patients (β −23.85, p=0.001). This may be related to the known impact of ADT on sexual function, although it is not clear why the effect is less pronounced for men with higher income.

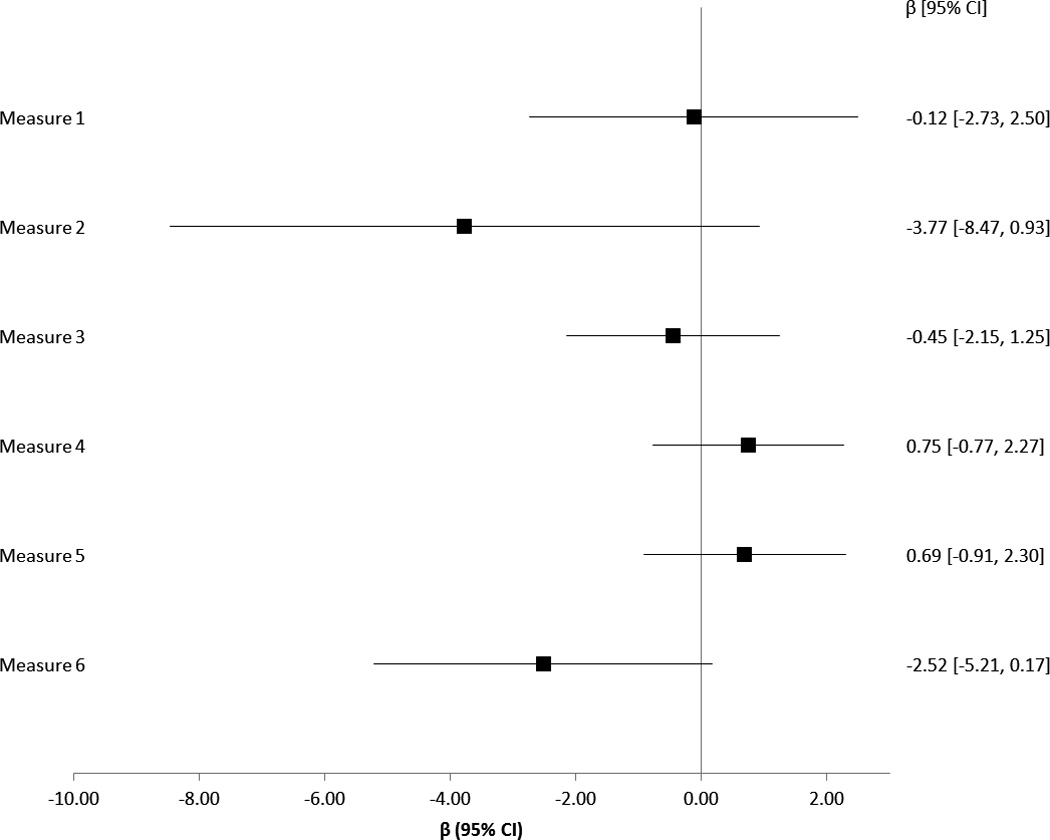

Multivariable linear regression analyses were repeated with satisfaction composite scores as the outcome measure (Figure 2). Compliance with quality measures did not have a significant effect on composite satisfaction scores at 1 year.

Figure 2.

Relationship between Quality Measure Compliance and SSS-CC Satisfaction Scale Scores

Treatment-related complication rates were calculated in the early and delayed periods. Logistic regression was performed, with odds ratios calculated to summarize the likelihood of complication with measure compliance. Documented complication rates were low overall, with 19 occurring within 30 days and 120 occurring within 1 year. There was no significant association between measure compliance and complication rates (Figure 3). This finding should be interpreted with caution because, with so few events, the maximum likelihood estimates in these models are subject to small-sample bias.
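As a simplified illustration of why few events limit the precision of an odds ratio, the sketch below computes an unadjusted odds ratio with a Wald 95% confidence interval from a 2×2 table. This is not the multivariable logistic regression used in the study, and the counts are invented for illustration only.

```python
import math

def odds_ratio_ci(a, b, c, d, z=1.96):
    """Unadjusted odds ratio and Wald 95% CI for a 2x2 table.

    a, b: compliant patients with / without a complication
    c, d: non-compliant patients with / without a complication
    If any cell is zero, 0.5 is added to every cell (Haldane-Anscombe correction).
    """
    if 0 in (a, b, c, d):
        a, b, c, d = (x + 0.5 for x in (a, b, c, d))
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper

# Hypothetical counts: 6 of 600 compliant and 4 of 200 non-compliant patients with an event.
odds_ratio, lower, upper = odds_ratio_ci(6, 594, 4, 196)
print(f"OR {odds_ratio:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

With only ten events in this hypothetical table, the interval is wide and spans 1, so neither a protective nor a harmful association could be excluded.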

Discussion

Assessment of patient-centered outcomes is essential in the evaluation of the patient experience after cancer treatment. Indeed, HRQOL and satisfaction represent two critically important outcomes that reflect healthcare quality. However, outcome measures are associated with difficulties in risk-adjustment and large-scale data collection22. Consequently, the majority of quality measures in pay-for-performance programs have been structure or process measures, most of which are intended to assess efficiency and/or effective care. In the case of prostate cancer, we found only a few weak associations between compliance with nationally-endorsed quality measures and patient-centered outcomes. While some associations met statistical significance, there was no discernible pattern of association between compliance and improved outcomes, and the magnitude of these differences is not clinically important according to published thresholds for clinically detectable change12,23.

There are several potential reasons for failing to identify an association between these measures and patient-centered outcomes, and process-outcome links have generally been elusive24,25. We may have lacked statistical power to identify a true association (a Type II error). This is unlikely, at least in the case of the patient-reported outcomes, given our sample size.

It is possible that these measures are related to patient-centered outcomes, but only in specific sub-groups. We did not identify any sub-group defined by age, race or income level in which compliance was associated with improved outcomes, perhaps because of limited statistical power in these smaller subgroups. However, additional analyses could reveal certain high-risk sub-populations that may have improvements in patient-centered outcomes with measure compliance. Recognizing that many patients achieve favorable outcomes regardless of the measured quality-of-care, identification of vulnerable sub-groups at high risk for poor outcomes may facilitate targeted application of quality measures26.

It is also conceivable that a composite quality measure comprising multiple measures would be more predictive of patient-centered outcomes. For example, it has been shown that composite measures, including process and intermediate outcome measures, account for a significant proportion of physician-level variation in diabetes outcomes27.

The most likely explanation for our null findings is that these nationally-endorsed process measures were developed to address other quality goals, namely effective clinical care, cost-effectiveness, and efficiency, rather than improved patient-reported outcomes. Conversely, there may be other processes of care that more directly influence patient-reported outcomes, such as the use of nerve sparing in low-risk surgical patients. Yet none of the nationally-endorsed measures, upon which the value of prostate cancer care may be judged and upon which reimbursement may be based, include patient-reported outcomes as their aim, despite the fact that HRQOL and satisfaction are recognized as the most salient treatment outcomes in this disease with a nearly 100% 5-year survival. Among the measures endorsed by CMS, two target effective clinical care and one targets efficiency and cost-reduction. This mirrors the overall trend in quality measurement by CMS; of the 175 measures CMS assessed for PQRS in 2015, 103 (59%) are directed at effective clinical care or cost reduction/efficiency and 11 (6%) specifically target “Patient-Centered Experience and Outcomes”19. Yet PQRS is not intended to merely enhance adherence to selected measures. Rather, its intention is to “obtain meaningful data to improve care” presuming that adherence to specific processes will serve as a proxy for more global quality-of-care. Thus, it is expected that adherence to available measures would result in better patient-centered outcomes. The remaining measures for prostate cancer do not have a clear quality-improvement domain specified. This represents an opportunity to develop measures that influence patient-reported outcomes, so that patient-centered care may be recognized as an important quality aim.

Each of the six quality measures analyzed in this study is a process measure28. Process measures are attractive as they do not require extensive risk-adjustment, are easy to benchmark, and can be collected during the clinical process22. However, they may not be associated with important outcomes. On the other hand, outcome measures have face-validity and may reflect the impact of multiple processes of care, but require careful risk-adjustment29, which may result in lower standards of care for disadvantaged populations30. Moreover, the collection of some outcome measures may be onerous (e.g. HRQOL) or take place long after the intervention (e.g. mortality), and the opportunity for quality improvement may be lost. Nonetheless, direct measurement of patient-reported outcomes with appropriate risk-adjustment is one avenue to explore for improving the assessment of quality-of-care for prostate cancer.

The findings of this study must be interpreted in light of the study design and dataset. One significant limitation is the lack of adjustment for structural measures related to the resources and qualifications of hospitals and providers. The main independent variables were process measures because these are the nationally-endorsed measures. We were also limited to specific outcome measures. The CEASAR study was designed and powered to measure differences in EPIC-26 scores 1 year after treatment. It was assumed that short-term (and possibly long-term) oncologic control is similar between groups, with respect to both treatment and guideline compliance. Therefore, by design, we set out to determine whether process measures influenced patient-reported outcomes, but we recognize that there may be alternative measures that could demonstrate a process-outcome link. Finally, we did not adjust for multiple comparisons, for two reasons. First, these were a priori analyses. Second, we were not concerned about Type I error, since we were interpreting the clinical importance of the magnitude of difference rather than p-values, and no clinically meaningful associations between guideline compliance and patient-centered outcomes emerged.

Conclusion

Quality assessment is critical to provide the best care possible to patients, and to inform comparative-effectiveness research. Furthermore, it is the backbone of value-based reimbursement initiatives that comprise the payment structure of our healthcare system. For these reasons, it is important to establish clear expectations for the intended outcomes and impact of adherence to these quality measures. This study did not find a clinically meaningful improvement in functional outcomes, satisfaction scores, or treatment-related complication rates associated with adherence to available quality measures. This represents an opportunity to identify alternative measures that may influence patient-centered outcomes.

Supplementary Material

Supplemental Figure 1 – CONSORT Diagram

Supplemental Table 1 – Patient Demographics

Supplemental Table 2 – Premorbid Disease Specific Function (EPIC-26) by Quality Indicator Compliance

Acknowledgments

This study was supported by the Agency for Healthcare Research and Quality (1R01HS019356, 1R01HS022640). The study centers were supported by NIH/NCI contracts (N01-PC-67007, N01-PC-67009, N01-PC-67010, N01-PC-67006, N01-PC-67005, and N01-PC-67000). The data management was facilitated by the use of Vanderbilt University’s Research Electronic Data Capture (REDCap) system, which is supported by the Vanderbilt Institute for Clinical and Translational Research grant (UL1TR000011 from NCATS/NIH).

The Louisiana Tumor Registry receives support from the SEER Program of the National Cancer Institute (NCI) under contract HHSN261201300016I, the National Program of Cancer Registries, Centers for Disease Control and Prevention under cooperative agreement 5U58DP003915-03, State of Louisiana and the University Medical Center Management Corporation dba Interim LSU Hospital funding PH-15-141-005.

The New Jersey State Cancer Registry receives support from the SEER Program of the NCI under contract no. HHSN 261201300021I, the National Program of Cancer Registries, Centers for Disease Control and Prevention under cooperative agreement 5U58DP003931-02, the State of New Jersey, and the Rutgers Cancer Institute of New Jersey.

The Utah Cancer Registry is funded by contract no. HHSN2612013000171 from the NCI SEER Program with additional support from the Utah State Department of Health and the University of Utah.

The Los Angeles County cancer incidence data used in this study was supported by the California Department of Public Health as part of the statewide cancer reporting program mandated by California Health and Safety Code Section 103885; the NCI SEER Program under contract HHSN261201000035C awarded to the University of Southern California; and the Centers for Disease Control and Prevention’s National Program of Cancer Registries, under agreement U58DP003862-01 awarded to the California Department of Public Health. The ideas and opinions expressed herein are those of the author(s) and endorsement by the State of California, Department of Public Health, the National Cancer Institute, and the Centers for Disease Control and Prevention or their Contractors and Subcontractors is not intended nor should be inferred.

CaPSURE is supported in part by an independent, unrestricted educational grant from Abbott and by the US Department of Defense Prostate Cancer Research Program (W81XWH-13-2-0074 and W81XWH-11-1-0489). CaPSURE/CEASAR has been funded by the Agency for Healthcare Research and Quality (AHRQ) and the Patient-Centered Outcomes Research Institute (PCORI).

References

- 1. Penson DF. Assessing the quality of prostate cancer care. Current opinion in urology. 2008;18(3):297–302. doi: 10.1097/MOU.0b013e3282f9b393.

- 2. McGlynn EA, Asch SM, Adams J, et al. The Quality of Health Care Delivered to Adults in the United States. New England Journal of Medicine. 2003;348(26):2635–2645. doi: 10.1056/NEJMsa022615.

- 3. Chien AT, Rosenthal MB. Medicare's Physician Value-Based Payment Modifier — Will the Tectonic Shift Create Waves? New England Journal of Medicine. 2013;369(22):2076–2078. doi: 10.1056/NEJMp1311957.

- 4. Donabedian A. Evaluating the Quality of Medical Care. The Milbank Quarterly. 2005;83(4):691–729. doi: 10.1111/j.1468-0009.2005.00397.x.

- 5. Howlader N, Noone AM, Krapcho M, et al. SEER Cancer Statistics Review, 1975–2011. National Cancer Institute. 2014. http://seer.cancer.gov/csr/1975_2011/

- 6. Spencer BA, Steinberg M, Malin J, Adams J, Litwin MS. Quality-of-care indicators for early-stage prostate cancer. Journal of clinical oncology : official journal of the American Society of Clinical Oncology. 2003;21(10):1928–1936. doi: 10.1200/JCO.2003.05.157.

- 7. Litwin MS, Steinberg M, Malin J, et al. Prostate Cancer Patient Outcomes and Choice of Providers: Development of an Infrastructure for Quality Assessment. Santa Monica, CA: RAND; 2000.

- 8. Barocas DA, Chen V, Cooperberg M, et al. Using a population-based observational cohort study to address difficult comparative effectiveness research questions: the CEASAR study. Journal of comparative effectiveness research. 2013;2(4):445–460. doi: 10.2217/cer.13.34.

- 9. Lubeck DP, Litwin MS, Henning JM, et al. The CaPSURE database: a methodology for clinical practice and research in prostate cancer. CaPSURE Research Panel. Cancer of the Prostate Strategic Urologic Research Endeavor. Urology. 1996;48(5):773–777. doi: 10.1016/s0090-4295(96)00226-9.

- 10. Szymanski KM, Wei JT, Dunn RL, Sanda MG. Development and validation of an abbreviated version of the expanded prostate cancer index composite instrument for measuring health-related quality of life among prostate cancer survivors. Urology. 2010;76(5):1245–1250. doi: 10.1016/j.urology.2010.01.027.

- 11. Wei JT, Dunn RL, Litwin MS, Sandler HM, Sanda MG. Development and validation of the expanded prostate cancer index composite (EPIC) for comprehensive assessment of health-related quality of life in men with prostate cancer. Urology. 2000;56(6):899–905. doi: 10.1016/s0090-4295(00)00858-x.

- 12. Skolarus TA, Dunn RL, Sanda MG, et al. Minimally important difference for the Expanded Prostate Cancer Index Composite Short Form. Urology. 2015;85(1):101–105. doi: 10.1016/j.urology.2014.08.044.

- 13. Greenfield TK, Attkisson CC. Progress toward a multifactorial satisfaction scale for primary care and mental health services. Evaluation and Program Planning. 1989;12:271–278.

- 14. Shah NL, Dunn R, Greenfield TK, et al. Development and validation of a novel instrument to measure patient satisfaction in multiple dimensions of urological cancer care quality. The Journal of Urology. 2003;169(4):11–19.

- 15. Sanda MG, Dunn RL, Michalski J, et al. Quality of Life and Satisfaction with Outcome among Prostate-Cancer Survivors. New England Journal of Medicine. 2008;358(12):1250–1261. doi: 10.1056/NEJMoa074311.

- 16. Kaplan SH, Gandek B, Greenfield S, Rogers W, Ware JE. Patient and visit characteristics related to physicians' participatory decision-making style. Results from the Medical Outcomes Study. Medical care. 1995;33(12):1176–1187. doi: 10.1097/00005650-199512000-00002.

- 17. Sherbourne CD, Stewart AL. The MOS social support survey. Social science & medicine (1982). 1991;32(6):705–714. doi: 10.1016/0277-9536(91)90150-b.

- 18. Radloff LS. The CES-D Scale: A Self-Report Depression Scale for Research in the General Population. Applied Psychological Measurement. 1977;1(3):385–401.

- 19. Centers for Medicare & Medicaid Services. 2015 Physician Quality Reporting System (PQRS) Measure Specifications Manual for Claims and Registry Reporting of Individual Measures. 9.0. 2014. pp. 12–16, 159–163, 375–377.

- 20. D'Amico AV, Whittington R, Malkowicz S, et al. Biochemical outcome after radical prostatectomy, external beam radiation therapy, or interstitial radiation therapy for clinically localized prostate cancer. JAMA. 1998;280(11):969–974. doi: 10.1001/jama.280.11.969.

- 21. Litwin MS, Greenfield S, Elkin EP, Lubeck DP, Broering JM, Kaplan SH. Assessment of prognosis with the total illness burden index for prostate cancer: aiding clinicians in treatment choice. Cancer. 2007;109(9):1777–1783. doi: 10.1002/cncr.22615.

- 22. Rubin HR, Pronovost P, Diette GB. The advantages and disadvantages of process-based measures of health care quality. International journal for quality in health care : journal of the International Society for Quality in Health Care / ISQua. 2001;13(6):469–474. doi: 10.1093/intqhc/13.6.469.

- 23. Jaeschke R, Singer J, Guyatt GH. Measurement of health status. Ascertaining the minimal clinically important difference. Controlled clinical trials. 1989;10(4):407–415. doi: 10.1016/0197-2456(89)90005-6.

- 24. Howell EA, Zeitlin J, Hebert PL, Balbierz A, Egorova N. Association between hospital-level obstetric quality indicators and maternal and neonatal morbidity. JAMA. 2014;312(15):1531–1541. doi: 10.1001/jama.2014.13381.

- 25. Neuman MD, Wirtalla C, Werner RM. Association between skilled nursing facility quality indicators and hospital readmissions. JAMA. 2014;312(15):1542–1551. doi: 10.1001/jama.2014.13513.

- 26. McKethan A, Jha AK. Designing smarter pay-for-performance programs. JAMA. 2014;312(24):2617–2618. doi: 10.1001/jama.2014.15398.

- 27. Kaplan SH, Griffith JL, Price LL, Pawlson LG, Greenfield S. Improving the reliability of physician performance assessment: identifying the "physician effect" on quality and creating composite measures. Medical care. 2009;47(4):378–387. doi: 10.1097/MLR.0b013e31818dce07.

- 28. Donabedian A. The quality of care. How can it be assessed? JAMA. 1988;260(12):1743–1748. doi: 10.1001/jama.260.12.1743.

- 29. Brook RH, McGlynn EA, Cleary PD. Quality of health care. Part 2: measuring quality of care. The New England journal of medicine. 1996;335(13):966–970. doi: 10.1056/NEJM199609263351311.

- 30. Fiscella K, Burstin HR, Nerenz DR. Quality measures and sociodemographic risk factors: To adjust or not to adjust. JAMA. 2014;312(24):2615–2616. doi: 10.1001/jama.2014.15372.
