Abstract

Background

The use of Web-based interventions to deliver mental health and behavior change programs is increasingly popular. They are cost-effective, accessible, and generally effective. Often these interventions concern psychologically sensitive and challenging issues, such as depression or anxiety. The process by which a person receives and experiences therapy is important to understanding therapeutic process and outcomes. While the experience of the patient or client in traditional face-to-face therapy has been evaluated in a number of ways, there appeared to be a gap in the evaluation of patient experiences of therapeutic interventions delivered online. Evaluation of Web-based artifacts has focused either on evaluation of experience from a computer Web-design perspective through usability testing or on evaluation of treatment effectiveness. Neither of these methods focuses on the psychological experience of the person while engaged in the therapeutic process.

Objective

This study aimed to investigate what methods, if any, have been used to evaluate the in situ psychological experience of users of Web-based self-help psychosocial interventions.

Methods

A systematic literature review was undertaken of interdisciplinary databases with a focus on health and computer sciences. Studies that met a predetermined search protocol were included.

Results

Among 21 studies identified that examined psychological experience of the user, only 1 study collected user experience in situ. The most common method of understanding users’ experience was through semistructured interviews conducted posttreatment or questionnaires administered at the end of an intervention session. The questionnaires were usually based on standardized tools used to assess user experience with traditional face-to-face treatment.

Conclusions

There is a lack of methods specified in the literature to evaluate the interface between Web-based mental health or behavior change artifacts and users. Main limitations in the research were the nascency of the topic and cross-disciplinary nature of the field. There is a need to develop and deliver methods of understanding users’ psychological experiences while using an intervention.

Keywords: eHealth; medical informatics applications; web browser; Web-based; usability; computer systems; psychology, clinical; usability testing; eHealth evaluation

Introduction

Internet-Delivered Health Care

The past 15 years have seen rapid growth in the development of Internet-delivered health care. The term eHealth has been widely adopted to describe the application of Internet and communication technology to improve the operation of the health care system and health care delivery [1]. The rationale for offering health services online is similar to that for providing any service online, be it commerce, education, or entertainment. Online delivery is accessible anytime and anywhere to an almost unlimited audience and is often cheaper than face-to-face delivery [1]. Within health care, the growth of technology means that eHealth is seen as a means of providing targeted treatment to a wide client base [2,3], and self-help Web-based interventions can also contribute to the reduction of disparities in health care internationally [4,5]. It also allows for a focus on patient-centered customized care “providing the right information, to the right person, at the right time” [6]. Moreover, people now expect to be able to use the Internet to assist in most aspects of their lives, including health care.

The range of health services offered over the Internet encompasses not only physical concerns, such as high blood pressure or diabetes, but also psychological issues such as depression, addiction, and anxiety [7-9]. The provision of eHealth for psychological issues can take many different forms. These include (1) direct human interaction, known as e-counseling or e-therapy, which involves electronic communication (via email, Skype, or messaging, for example) between a clinician and client; (2) Web-based psychological interventions such as the New Zealand government’s depression support site [10] or Sleepio [11], which have information, health assessment screening, and treatment and are targeted to specific issues; (3) Internet-based therapeutic software programs such as gaming and virtual worlds; (4) mobile apps; and (5) other activities such as support discussion groups, blogs, and podcasts [3,12,13].

It makes sense to assume that the proliferation of Web-based psychological interventions could not have occurred without strong supporting evidence, yet there is a notable gap between what is proposed by eHealth interventions and what is actually delivered [14]. As such, the continued investment in, and adoption of, Web-based interventions requires more evaluative information to ensure resources are not wasted on ineffective interventions and that the “best practices of successful programs are rapidly disseminated” [15]. Evaluative information should be relevant to policy makers, health care providers, practitioners, and clients [16,17]. This requires a multifaceted approach, coalescing aspects of traditional psychological intervention evaluations such as efficacy, effectiveness, and measures of the therapeutic relationship, client variables, and therapist feedback, with computer science developed usability testing approaches to gather an overall measure of an intervention’s utility.

Evaluating Web-Based Psychosocial Interventions

It makes sense that evaluation of a Web-based eHealth intervention should naturally follow much of the same evaluative process as a traditional intervention [18]. Effectiveness can be measured by pre- and postintervention assessments using clinical trials. However, assessments can also be made of the components of the intervention. In traditional face-to-face treatment, this would include the therapeutic relationship and session outcome measures, which gather information on the experience of the user during as well as after the intervention. In eHealth, user experience is often focused on usability testing that generally measures the degree to which the intervention is understandable and easy to use.

Effectiveness of Web-Based Psychosocial Interventions

Effectiveness refers to how well a health or psychological treatment works in a real-world setting [19]. Methods used for clinical trials of pharmacological treatments can be applied to complex nonpharmacological interventions such as eHealth initiatives [20]. Randomized clinical trials (RCTs) have found that Web-based interventions are effective in treating a range of psychological issues. For example, individually tailored Internet-delivered cognitive behavioral therapy (CBT) has been found to be an effective and cost-effective means of treating patients with anxiety disorders [9]. A recent meta-analysis of RCTs investigating the effectiveness or efficacy of Web-based psychological interventions for depression found positive results across diverse settings and populations [21]. However, as Richards and Richardson [21] note, effectiveness is only one part, albeit an important one, of an intervention that should be evaluated. For example, effectiveness research does not necessarily investigate the influence of therapist factors.

User Experience of Web-Based Psychosocial Interventions

In evaluation research of face-to-face psychosocial interventions, findings have shown that an effective therapeutic relationship in and of itself may be enough to provide successful outcomes for clients [22]. Moreover, this correlation between ratings of the therapeutic relationship and outcome seems unaffected by other variables such as outcome measure or type of intervention employed [23]. These variables are client derived, based on their subjective experiences, and can be adjusted for and evaluated throughout the treatment program or therapy session. As a result, the interaction between the client and clinician is fine-tuned and dynamic, and the clinician is able to constantly monitor the therapeutic relationship and make changes accordingly. This is possible in a dynamic person-to-person interaction but it is not possible in a one-way interaction between a person and a computer.

In the person-computer interaction, the psychological experience of the person using the therapeutic intervention is lost and cannot be responded or adjusted to (although there are developments in this area [24]). This raises three key concerns. First is the potential loss of the therapeutic relationship. Second, the psychological experience of the person may be important to determining usage and adherence to an intervention with some reports of dropout rates exceeding 98% from open-access websites dealing with psychosocial issues [25]. Third, it may be important in understanding issues of psychological safety and well-being for “if information is too complex to understand, especially under periods of duress or high cognitive load” then the intervention may not be as effective [6]. Therefore, the in situ psychological experience of the user may be an important variable to be considered; however, it is not clear how it has been or should be evaluated.

Methods of Evaluating Web-Based Psychosocial Interventions

User-Focused Evaluations

In traditional face-to-face interventions, evaluation of user experience is relatively straightforward. First, the client can provide instant and direct feedback to the therapist during a session. In addition, there are a number of psychometrically reliable and valid standardized tools such as the Helping Alliance Questionnaire [26] or the Outcome Rating Scale and Session Rating Scale [27], which directly address user experience. In e-therapy or e-counseling, when there is still a person-to-person relationship via Skype or email, the measurement of the therapeutic process is similar as only the delivery differs [12]. However, when the intervention is provided by a website and is driven by computer-programmed algorithms and automated responses, evaluation is much more complex. A cross-disciplinary approach is needed that links the psychosocial approach with user-centered design from computer science [6]. One of the most common ways of evaluating the relationship the user has with a website or Web-based intervention is through usability testing.

Intervention-Focused Evaluations

The literature on human-computer interaction yields a number of different methods of evaluating a website, be it a psychosocial intervention or a Web-based retail store. One of the most widely used and recognized means of addressing this is usability evaluation [28]. Usability testing is a means of evaluating the design and functionality elements of a website as they apply to the user. However, the end user is not always involved and, if so, any information capturing the user experience is designed to enhance the performance of the intervention in terms of content, design, and navigability of the website, rather than an investigation of the relationship between the user and computer.

Usability inquiry on the other hand is designed to gather broader and more subjective information from the user with regard to the website or intervention. Zhang [29] defined usability inquiry quite broadly as gaining “information about users’ experience with the system by talking to them, observing them using the system in real work (not for the purpose of usability testing), or letting them answer questions (verbally or in written form)” [29]. Therefore, although usability testing provides output from the person using the system, the focus is on functionality of the website. In usability inquiry, there is a subtle shift to understanding the users’ goals, context, profile, feelings, and thoughts during the process of interaction. The output extends beyond issues of website design and functionality to broader concerns of purpose and context [28].

The methods of ethnographic interviewing, contextual inquiry, cognitive interviewing, and situated co-inquiry lend themselves, somewhat naturally, to an exploration of how users may experience a Web-based psychological intervention. They all place the user as the most important piece of the process. Thus, they fit the paradigm of interpretative and participatory research with elements of phenomenological inquiry to gain an understanding of the users’ experience in a natural setting. In some ways, this would come close to understanding the nuance of the relationship between a user and an intervention in a similar way that an evaluation of the therapeutic relationship captures a client’s perception and experience of traditional therapy. However, it is unclear to what extent usability inquiry, or any other methods, have been used to address the psychological experiences of those using Web-based psychosocial interventions. Thus, a systematic literature review was undertaken to assess what methods, if any, have been applied to understanding users’ in situ psychological experience with Web-based interventions.

Methods

Systematic Literature Review Methodology

A systematic literature review was employed to determine how end users’ psychological experiences of Web-based interventions have been evaluated. A systematic review is beneficial for this relatively new topic for “identifying gaps and weaknesses in the evidence base and increasing access to credible knowledge” [30,31]. The AMSTAR (Assessment of Multiple Systematic Reviews) was selected [31] to guide the review. AMSTAR factors and how they were applied in this study are outlined below. The research question under investigation was defined as “What evaluation methods have been used to explore end-users’ in-situ psychological experiences with web-based psychosocial interventions?”

Inclusion and Exclusion Criteria

There were 4 criteria components for selecting studies. First, the health issues of interest were those with a psychosocial component for which a person may have been reasonably expected to see a clinician if the Web-based intervention was not available; for example, helping people with common mental health concerns such as stress, anxiety, and depression. Also included were psychosocial or behavior change intervention programs associated with physical ailments, such as rehabilitation and psychological well-being following surgery or living with human immunodeficiency virus infection. The psychosocial element was required to underscore the importance of the user’s psychological experience with the intervention. Thus, interventions with a purely physical component such as smoking cessation were excluded.

Second, the type of interventions included were Web-based interventions with a primarily self-help basis that required users to work their way through a series of steps. Excluded were e-counseling and e-therapy programs where the treatment was based on person-to-person interaction rather than a person-to-computer interaction. They were also excluded if they were solely psychoeducation or health promotion websites that did not require an interactive, self-guided program. Online tools (such as “Facebook”) that may be used as part of social skills therapy but not designed for the purpose of treatment or psychosocial intervention were excluded.

Third, the method of evaluation had to involve end users’ assessment of their experience, rather than expert assessments. Finally, the focus of the evaluation had to be the end users’ psychological experience interacting with the intervention. Studies that looked at the overall efficacy or treatment outcome of a Web-based intervention were excluded. Usability studies were excluded if they focused solely on functional Web-development issues (content, layout, design), rather than on the psychological experience of the user. For example, “think aloud” (also called talk aloud or cognitive interviewing) studies that did not elicit psychological insight or experience from the user but discussed use of menu options, friendliness, and ease of use were not included. In short, the study had to be user focused, rather than intervention or computer focused. Expert usability and heuristic studies were also excluded as they did not address the actual experience of the intended user of the intervention. If an evaluation of the users’ psychological experience formed part of a broader effectiveness or feasibility study, it was included. As a result of a scoping exercise, we expanded the search protocol to include end users’ psychological experience per se, whether in situ or postintervention.

Search Protocol

Keywords applied in the search were determined by an initial review of the literature and modified by feedback from psychologists, computer science usability experts, and a librarian. Sets of terms were proximally searched including experience (“experience,” “evaluation,” “usability”), Internet (“internet,” “web*,” “online,” “eHealth”), health (“mental health,” “behavio*,” “psycho*”), and interventions (“intervention,” “treatment,” “therapy”). To ensure coverage of the health, psychological, and computing components of the topic the following databases were searched: CINAHL (EBSCO), MEDLINE (EBSCO), and Psychology and Behavioral Sciences Collection (EBSCO), Computers and Applied Sciences Complete (EBSCO), ABI/INFORM Global, and IEEE Xplore (see Multimedia Appendix 1). The search was restricted to studies from 2004 to October 2015 because of the relative newness of the field of Web-based interventions. A title scan across non–peer-reviewed journals elicited no studies of interest in the initial scoping, so only scholarly journals were included in the search. A total of 2 studies came from a review of the references of a meta-synthesis of user experience of online computerized therapy for depression and anxiety [32].
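As an illustration only, the four term sets listed above can be combined into a single Boolean search string. The sketch below assumes that terms within a set are joined with OR and that the sets are joined with AND; the function name `build_query` is hypothetical, and the database-specific proximity operators implied by "proximally searched" are not reproduced because the protocol text does not specify them.

```python
# Term sets copied from the search protocol described above.
# The OR-within-set / AND-across-sets combination is an assumption for
# illustration; the actual proximity syntax varies by database.
TERM_SETS = {
    "experience": ["experience", "evaluation", "usability"],
    "internet": ["internet", "web*", "online", "eHealth"],
    "health": ["mental health", "behavio*", "psycho*"],
    "intervention": ["intervention", "treatment", "therapy"],
}


def quote(term: str) -> str:
    """Wrap multiword terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(term_sets: dict[str, list[str]]) -> str:
    """Join terms within each set with OR, then join the sets with AND."""
    groups = [
        "(" + " OR ".join(quote(t) for t in terms) + ")"
        for terms in term_sets.values()
    ]
    return " AND ".join(groups)


query = build_query(TERM_SETS)
print(query)
```

The resulting string would still need to be adapted to each database's own syntax and date limits (2004 to October 2015 in this review) before use.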

Search Results

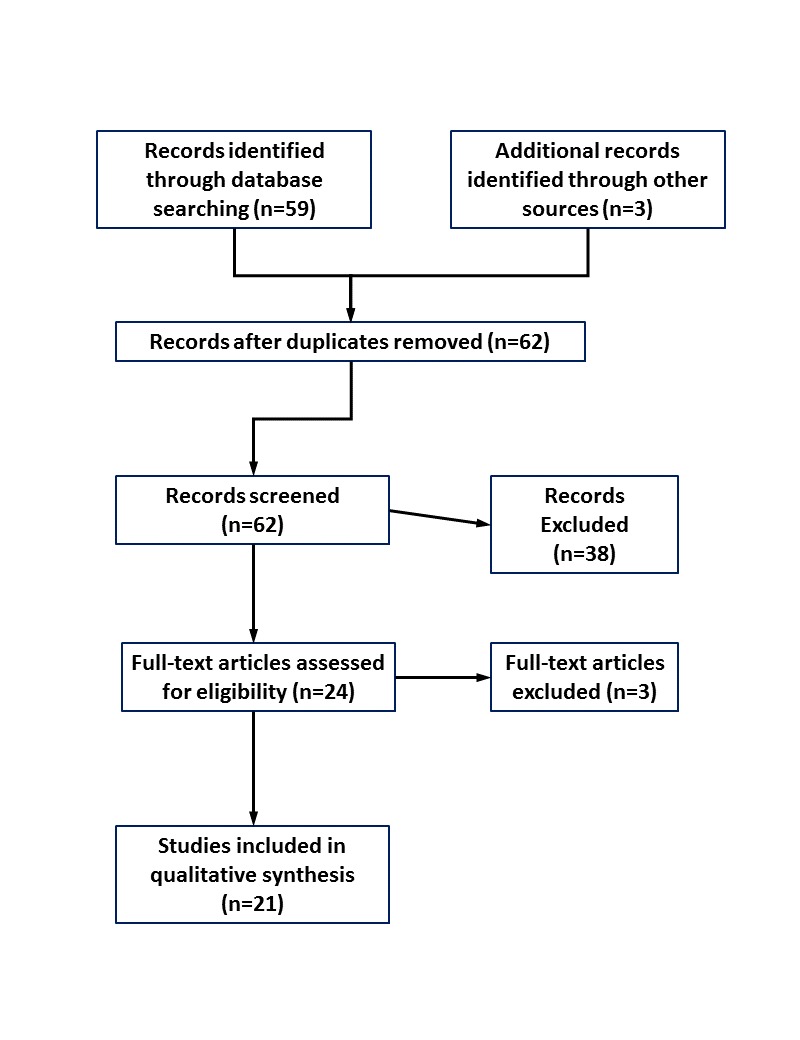

The search protocol resulted in 62 records that were screened by 2 reviewers (LM and LR), with disagreements resolved by the research team (see Figure 1). After final full-text review, 21 studies were identified as meeting the 4 inclusion criteria. Included and excluded studies are listed in Multimedia Appendices 2 and 3, respectively.

Figure 1. Flow diagram of study selection.

Quality Assessment and Data Synthesis

An assessment of the type and quality of the methods used in the 21 studies was integral to this research project, and screening studies out on the basis of methodological quality would have been counterproductive. Therefore, all the 21 selected studies were included in the synthesis phase. As the identified studies were heterogeneous, a narrative synthesis was undertaken. The a priori data extraction framework involved first, describing the studies (see Multimedia Appendix 2 for study characteristics) and second, their methods of examining user psychological experience of Web-based psychosocial interventions (see Table 1).

Table 1. Included studies: methods of examining user psychological experience.

| Reference | User experience focus | Methods^a | Tools | Time of assessment |
| --- | --- | --- | --- | --- |
| Baños et al [33] | No | Open-ended questions (online) | Researcher designed questionnaire | Postsession (6 × weekly sessions) |
| Bendelin et al [34] | Yes | Semistructured interviews (face-to-face) | On the basis of the Client Change Interview | Posttreatment (8-10 months) |
| Bradley et al [35] | Yes | Semistructured interviews (phone) | Researcher devised based on Theory of Planned Behavior | Posttreatment (1 week) |
| Cartreine et al [36] | No | Questionnaires (online) | System Usability Scale; Credibility Questionnaire; Assessment of Self-Guided Treatment | Postsession |
| de Graaf et al [37] | No | Questionnaires (online) | Credibility Expectancy Questionnaire (CEQ); customized questionnaire | CEQ at baseline; questionnaire posttreatment (3 months) |
| Devi et al [38] | Yes | Semistructured interviews (face-to-face) | Researcher devised interview guide | Posttreatment (6 weeks) |
| Fergus et al [39] | No | Questionnaire; semistructured interviews (face-to-face) | Treatment Satisfaction Questionnaire; researcher devised interview guide | Posttreatment (time unspecified) |
| Gega et al [40] | Yes | Questionnaire; semistructured interviews (face-to-face) | Session Evaluation Questionnaire; Session Impacts Scale; Helpful Aspects of Therapy form; Client Change Interview | Questionnaires postsession; Interview posttreatment (time unspecified) |
| Gerhards et al [41] | Yes | Semistructured interviews (face-to-face) | Researcher devised interview guide | Posttreatment (time unspecified) |
| Gorlick et al [42] | Yes | Semistructured interviews (phone) | Researcher devised interview guide | Posttreatment (<2 years) |
| Gulec et al [43] | No | Questionnaires (online) | Researcher designed self-report questionnaires | Online weekly during treatment and posttreatment |
| Hind et al [44] | Yes | Session evaluation forms; semistructured interviews (phone and face-to-face) | Researcher devised session evaluation forms and interview guide | Evaluation postsession; brief interview postsession 1; interview posttreatment (after completing or withdrawing) |
| Lara et al [45] | No | Questionnaire (online) | Researcher devised questionnaire | Posttreatment |
| Lederman et al [46] | No | Semistructured interviews (face-to-face) | Researcher devised interview guide | Posttreatment (time unspecified) |
| Lillevoll et al [47] | Yes | Semistructured interviews (face-to-face) | Researcher devised interview guide based on phenomenological hermeneutical approach | Posttreatment (time unspecified) |
| McClay et al [48] | Yes | Semistructured interviews (phone) | Researcher devised interview guide based on motivation, experience, and comparison with other treatments | Posttreatment (time unspecified) |
| Serowik et al [49] | No | Think aloud usability; questionnaire | Think aloud usability protocol; researcher designed questionnaire; modified Working Alliance Inventory | Usability during session; questionnaire posttreatment or at dropout (time unspecified) |
| Tonkin-Crine et al [50] | Yes | Unstructured interviews (phone) | Open-ended interview researcher devised | Posttreatment (time unspecified) |
| Topolovec-Vranic et al [51] | No | Unstructured interview (phone) | Unspecified | Weekly during 6-week program and 12 months postenrollment |
| Van Voorhees et al [52] | No | Questionnaire; diaries | Researcher designed questionnaire | Diary during and after session; questionnaire posttreatment (time unspecified) |
| Wade et al [53] | No | Semistructured interviews; survey | Unspecified | At follow-up (time unspecified) |

a Other measures may have been used in the study such as pre- and postbaseline measures of diagnosis but these were not included in the data extraction as they did not concern user experience.

Results

Overview of Included Studies

Intervention Type

The interventions were Web-based self-help interventions consisting of a number of modules (ranging from 3 to 12), most expected to be used on a weekly basis. Although all of the studies were predominantly self-help programs, some were augmented by other elements such as an online diary [37,38,41], social or peer group support in the form of discussion or chat groups [38,39,42,44,46], and some degree of therapist or tailored guidance to support the self-help component [34,38-40,43,53]. A total of 9 studies focused on an intervention in the pilot or development phase [33,34,36,39,43,46,49,52,53], whereas 13 studies focused on existing treatment programs such as “MoodGYM” or “Colour Your Life” [35,37,38,40-42,44,45,47,48,50-52]. All of the interventions contained interactive or homework components and some reported including multimedia such as audio or video in content delivery.

Most of the 21 studies focused on the treatment of psychological issues. A total of 10 studies evaluated interventions designed to treat or prevent the development of depression using principles of CBT as the modality of treatment [34,36,37,40,41,44,45,47,51,52]. One intervention used CBT to assist adolescents with stress, anxiety, and depression [35]. Of the studies, 2 focused on delivering mainstream treatment for eating disorders online [43,48] and 1 on the treatment of first psychotic episodes through the use of CBT, support, and psychoeducation [46]. One intervention used motivational interviewing principles to help veterans with war-exacerbated psychiatric issues apply for jobs and benefits [49]. Another aimed to reduce child behavior problems and parenting stress for young children with traumatic brain injury (TBI) via an online self-guided program and live coaching [53]. A total of 4 studies focused on interventions delivering psychological well-being and support to those with chronic or acute physical issues including irritable bowel syndrome [50], cancer [39,42], and angina [38]. Another study used a positive psychology approach to enhance mood in general populations [33].

Study Characteristics

Of the 21 studies, 13 were published from 2012 onward (see Table 1). A total of 10 studies were explicitly focused on the evaluation of the patient or users’ psychological experience of the intervention [34,35,38,40-42,44,47,48,50]. Of the remaining 10 studies, 8 gathered user experience as part of the development and pilot testing of the intervention [36,39,43,45,46,49,52,53]. Another study collected information on user experience to assess the acceptability and use of online CBT for depression [37], and another looked at the efficacy of using an online CBT intervention for depression with patients experiencing depression along with their TBI [51].

Evaluation of User Experience

Rationale for Evaluating User Experience

The rationale for evaluating user experience varied across the studies. Of these, 5 studies were seeking information on the users’ psychological experience of using the website to inform improvements [36,39,45,46,49]. A total of 6 studies were interested in finding out how to increase use of online interventions by understanding barriers to change [35] and acceptability in particular populations [42-44,51,52].

Process of Evaluating User Experience

The process of gathering user experience information also varied. A total of 13 studies relied on only one form of evaluation method. Of these, 10 studies used only an interview at the end of the treatment program (with a range of 1 week to 2 years posttreatment, where specified) to ask users about their experience with the intervention. Of these studies, 5 used face-to-face interviews [34,38,41,46,47] and 5 employed phone interviews [35,42,48,50,51]. The interviews were conducted by the researcher or research assistants (when specified) and their duration ranged from 19 minutes [35] to 111 minutes [34]. The other 3 studies employed only questionnaires to investigate user experience. Of these, 2 administered the questionnaire at the end of each treatment session [33,36] and the other [37] at the beginning of treatment and again 3 months posttreatment.

The remaining 8 studies relied on a mixed methods design to investigate user experience. Of these, 4 studies employed a posttreatment semistructured interview, complemented by a posttreatment questionnaire [39], a posttreatment satisfaction survey [53], postsession questionnaires (Gega and colleagues [40]), or a postsession evaluation form (Hind and colleagues [44]). One study used weekly evaluation questions and a postintervention questionnaire [43]. A further study used a during and after session diary to capture user feedback combined with a posttreatment questionnaire [52], and another used module and postintervention evaluations along with content analysis of the site’s discussion forums [45]. The final study used a think aloud usability process during the session followed by an end-of-treatment questionnaire [49]. Note that this was the only study employing a usability testing method that included a user experience focus, explicitly asking for feelings during the session, and a questionnaire to elicit user experience with the therapeutic alliance during the Web-based intervention.

Tools Used to Evaluate User Experience

Tools used to gather user experience information ranged from customized to off-the-shelf. Of the 14 studies that used some form of interview with users, 12 were semistructured with the topic guide designed by the research team. Of these, 2 studies provided a theoretical basis for the design of the interview guide. Lillevoll and colleagues [47] followed a phenomenological-hermeneutic approach to understand the lived experience of the user of the intervention in the natural setting, and Bradley and colleagues [35] drew on the Theory of Planned Behavior. A further 2 studies [34,40] based the interview guide on the Client Change Interview (CCI), which was designed as an empathic exploration of aspects of a client’s experience with traditional face-to-face counseling and therapy.
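The interview counts reported above can be cross-checked against the Methods column of Table 1. The following is a minimal sketch of that tally; the method strings are transcribed from Table 1, while the dictionary layout and the substring matching are assumptions made purely for illustration.

```python
# Methods column of Table 1, keyed by reference number.
# Strings are transcribed from the table; the matching rule below is an
# illustrative assumption, not part of the review's own procedure.
METHODS = {
    33: "Open-ended questions (online)",
    34: "Semistructured interviews (face-to-face)",
    35: "Semistructured interviews (phone)",
    36: "Questionnaires (online)",
    37: "Questionnaires (online)",
    38: "Semistructured interviews (face-to-face)",
    39: "Questionnaire; semistructured interviews (face-to-face)",
    40: "Questionnaire; semistructured interviews (face-to-face)",
    41: "Semistructured interviews (face-to-face)",
    42: "Semistructured interviews (phone)",
    43: "Questionnaires (online)",
    44: "Session evaluation forms; semistructured interviews (phone and face-to-face)",
    45: "Questionnaire (online)",
    46: "Semistructured interviews (face-to-face)",
    47: "Semistructured interviews (face-to-face)",
    48: "Semistructured interviews (phone)",
    49: "Think aloud usability; questionnaire",
    50: "Unstructured interviews (phone)",
    51: "Unstructured interview (phone)",
    52: "Questionnaire; diaries",
    53: "Semistructured interviews; survey",
}

# Collect the references whose methods mention each interview type.
semistructured = sorted(
    ref for ref, m in METHODS.items() if "semistructured interview" in m.lower()
)
unstructured = sorted(
    ref for ref, m in METHODS.items() if "unstructured interview" in m.lower()
)

print("Semistructured:", len(semistructured), semistructured)
print("Unstructured:", len(unstructured), unstructured)
print("Any interview:", len(semistructured) + len(unstructured))
```

Under this matching rule the tally agrees with the text: 12 studies with semistructured interviews and 2 with unstructured interviews, 14 in total.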

Questionnaires used to evaluate user experience were either researcher designed or existing tools. Of the studies, 7 used self-customized questionnaires or evaluation forms [33,37,43-45,52,53]. Others relied on questionnaires designed to gain feedback about the therapeutic process, sessions, expectations, and outcomes (Table 2).

Table 2. Treatment feedback questionnaires used to evaluate user experience.

| Questionnaire | Purpose | Cited in^a |
| --- | --- | --- |
| Credibility Questionnaire | Credibility of computer programs, psychotherapy, and treatment | Cartreine et al [36] |
| Assessment of Self-guided Therapy | Acceptability of treatment (eg, comfort, personal acceptance) | Cartreine et al [36] |
| Credibility Expectancy Questionnaire | Expectation and rationale for treatment | de Graaf et al [37] |
| Treatment Satisfaction Questionnaire | Satisfaction and experience (convenience, quality, value) with online treatment | Fergus et al [39] |
| Session Evaluation Questionnaire | User experience with the session in terms of depth, positivity, smoothness, and arousal | Gega et al [40] |
| Session Impacts Scale | User view of session impact on understanding, problem solving, therapeutic relationship, and hindering impact | Gega et al [40] |
| Helpful Aspects of Therapy | Identify helpful or hindering aspects of the treatment session | Gega et al [40] |
| Working Alliance Inventory | Self-report assessment of user experience of alliance with treatment (modified for online) | Serowik et al [49] |

a Further details of questionnaires can be found by consulting the references.

Analysis of User Experience Data

As this study is focused on the methods of evaluating user experience as opposed to user experience per se, the results of the studies are not presented in detail. In summary, quantitative analysis was carried out on the statistical data gathered in the questionnaires and descriptive summaries reported where appropriate. The qualitative user experience information in 19 of the studies was analyzed using content analysis to elicit emerging themes. Of these, 2 studies followed elements of grounded theory methodology to develop theories of user experience with Web-based interventions [41,52]. One study took a phenomenological-hermeneutic approach [47].

Overall, these studies reported thematic categories to describe the users’ experiences within the frame of the study purpose. Thus, the themes that evolved were varied and included user experience in terms of the therapeutic process [40,41], individual and social aspects of the intervention [33,40-42,47], as well as Web-based characteristics of the intervention [40-42,48-50]. Process issues included motivation and user profile [34], privacy and help-seeking [35], overall experience [37,46], barriers [50,52], expectations [37], personalization, solitude, and social, individual, and contextual features [40,41]. For example, in one of the grounded theory studies, Gerhards and colleagues [41] identified themes of the user experience in terms of the computer, individual, social, research, and environmental contexts.

Discussion

Key Findings

This systematic review identified a gap in examining users’ psychological experience of Web-based interventions in situ. Among studies using usability testing, only 1 study [49] explicitly explored what it felt like for the user in-session. Most were focused on the functionality of the intervention in terms of Web design, ease of use, and readability. Usability inquiry methods such as situated co-inquiry proposed by Carter [54] or ethnographic and contextual inquiry discussed by Cooper et al [28] are not yet evident in the literature. It is important that our methods of evaluating Web-based interventions include opportunities for uncovering the relationship between the user and the computer as a social agent. The studies that did focus on the therapeutic experience online tended to evaluate it postsession or posttreatment using tools designed for evaluating face-to-face interventions. For Web-based interventions, as called for by Knowles et al, “it is likely that more in-depth, observational data collection methods will be necessary to better capture user experience in [the] future” (p11) [32].

Postsession and posttreatment evaluation was generally chosen when the interest lay in social science rather than computer science and the focus was the treatment experience rather than the mode of delivery. Some of the studies sought to understand the therapeutic process at a session level [40,44], the therapeutic relationship [34,40], and how overall experience translated from traditional delivery channels of treatment into Web-based treatments [41,43]. Other studies aimed to enhance the treatment program and to increase the usage and acceptability of certain interventions among particular populations, such as adolescents with psychological distress [35] or those with psychosocial issues associated with physical health problems [38,39,42,44,50,51]. Postsession and posttreatment evaluations were also used to understand barriers to usage [52] and motivations to participate in Web-based interventions [37,48]. Most of these evaluations used semistructured interviews or questionnaires to collect information. Posttreatment semistructured interviews (face-to-face or phone based) were the key method used to understand the experience of the user.

An in-depth review of the methods was not possible because of gaps in the literature on timing, length, interviewer characteristics, and topic guide details. It is evident, however, that the problem with posttreatment evaluations is the potential time delay between treatment and recollection of experience, which potentially affects the validity and reliability of the information. The time between treatment and evaluation varied: some evaluations took place 1 week posttreatment [35], whereas another had a gap of up to 2 years [42]. Some studies did not specify how long after treatment the evaluations occurred. Some interviews were conducted by phone for 20 minutes [48], whereas others were face-to-face interviews of almost 2 hours [34]; thus the depth and quality of information varied accordingly. In addition, the characteristics of the interviewers were sometimes overlooked. When specified, the interviews were conducted by the researcher or a research assistant who may or may not have had relevant clinical training. In general, the topic guides or questions asked by the researchers were not published, so the areas discussed with the participants are unknown, although they can be inferred to some extent from the focus of the research. Some studies provided example topics or questions. For example, Devi and colleagues [38] presented a table of example interview questions that included “What were your initial thoughts and feelings regarding this web-based programme?” and “What was your overall experience of using the programme?” Having more information as to the topics covered would be useful to understand in detail what aspects of user experience were investigated.

In addition to interviews, questionnaires were also used; most commonly these were standardized tools for measuring experience with traditional treatment or therapy, modified for eHealth. For example, Gega and colleagues [40] used the Session Impact Scale, Session Evaluation Questionnaire, and Helpful Aspects of Therapy questionnaire to gather immediate feedback from users following each online session. This elicited in-depth session and overall feedback that was categorized into a number of themes, such as the experience of “learning by doing” or having no fear of being judged by a Web-based intervention. These questionnaires were designed to understand treatment experience and outcomes and were not concerned with the functionality of the website. The use of the CCI as the basis for the interview guide by Bendelin and colleagues [34] and Gega and colleagues [40] reinforced this focus on treatment rather than delivery, which was also evident in the analysis of the evaluation findings. The analysis of user feedback employed by these studies reflected an interest in understanding users’ psychological experience for its own sake rather than in relation to the intervention alone. The value of the information elicited was dependent on the purpose of the research, and each of the 21 selected studies had a slightly different focus and rationale for exploring the experience of the user.

Most of the studies that used an interview approach used inductive content analysis to interpret the findings in terms of themes and subthemes of experience. Examples of themes included user profile and motivation [34]; privacy and help-seeking [35]; solitariness and personalization [40]; and the individual, social, computer, and wider contexts of experience [41]. Interviews are widely used in information systems research and eHealth evaluation, but it is important to be clear that an interview is an artificial and additional intervention. Myers and Newman’s [55] description of the dramaturgical model, as originally described by Goffman [56], may help interviewers to move between evaluation and clinical issues.

Although functionality was not a driver in the research, themes of intervention characteristics [36,40,41] and Web engagement [47] did emerge. This suggests that users’ psychological experiences are determined in part by the psychological issue and treatment process, and in part by the channel or mode of delivery. In other words, the functionality explored in usability testing might be a piece of the experience but is by no means all of it.

The choice of methods of evaluation depends primarily on which aspect of the intervention is most critical to measure (for example, effectiveness, usability, or engagement) and on the maturity of the implementation of the intervention; multiple methods may be appropriate. For early prototypes, interviews directly after the intervention are appropriate in order to gain an understanding of all of the issues and potential benefits as seen by the users. Larger-scale interventions are more likely to be assessed by questionnaires, often modified from existing assessments. However, these should be administered as soon as possible after the intervention so that the users still have the experience fresh in their minds. Standard functional questionnaires may be preferred when Web-based treatment approaches are being compared with other interventions. However, all of these evaluation approaches must bear in mind the “emergent” nature of eHealth interventions and the degree to which the objectives and nature of the evaluation of the intervention may differ as prototypes are developed and evaluators gain experience [57]. The unique role of eHealth interventions also needs to be considered; as these inherently deal with people’s well-being, there is a need for closer evaluation of the psychological aspects of a user’s experience.

Overall, the evaluation of users’ psychological experiences with psychosocial interventions is a new yet growing area. Of the 21 studies in the final selection, only 10 were explicitly focused on understanding users’ psychological experience (as opposed to usability, outcome, or overall evaluation). Of those 10 studies, none were published before 2009 and 6 were published in the past 2 years. The nascency of the topic and the exploratory nature of this study mean that there are a number of opportunities for future research and action in the area.

Methodological Limitations

The methodological limitations of this study derived from the cross-disciplinary nature of the topic, resource constraints, and the newness of the field, any of which could have resulted in missed studies. Searching across databases from the computer science and health and social science fields precluded the use of consistent search protocols in terms of qualifiers and data fields searched. Indeed, as noted by reviewers, several studies were missed in the search process [58-61]. Examining these studies, however, revealed no additional methods of user inquiry. For example, the Helpful Aspects of Therapy questionnaire used by Gega et al [40] was also used by Richards and Timulak [61]. In addition, the search process was limited to scholarly material. In hindsight, the resource constraint could have been mitigated somewhat by limiting the search to studies published in the past 5 years.

The retrieved studies were not subjected to a quality review because this study examined the methods used rather than study outcomes. One could therefore argue that the search was semisystematic: the front end (the search process) met the requirements of a predetermined and comprehensive search, but the back end (data extraction and synthesis) was less systematized and more exploratory in nature. This reflects the challenges outlined by Jesson et al [62] and Curran et al [30] in conducting systematic reviews across disciplines.

There is a lack of consistency in the terminology used to describe Internet-delivered interventions. An effort to create and broadly ratify agreed key or subject words would make the dissemination of information much easier. “eHealth” is almost as broad a term as “health”; searching for eHealth (or mHealth) interventions is as wide in scope as searching for health interventions. Therefore, as the area grows and consolidates, categorization needs to be clearer and to differentiate these interventions from other modes of delivery such as telemedicine. Interventions need to be defined more by type (eg, CBT, mindfulness, psychoeducation) with an agreed identifier such as Web-based, Internet-based, or online.

Future Research and Implications

With regard to Web-based psychosocial interventions, there is a lack of cohesion between computer science literature, focused on the technical design, and health literature, focused on the treatment process and its impact. Combining modes of assessment prevalent in one discipline, such as usability testing from computer science, with session and treatment evaluations from psychology and social sciences could bridge this gap. This collaboration could contribute to the development of best practice protocols for understanding users’ psychological experience that might include an evaluation of the in situ experience of the participant using the system, postsession impact, and a reflective posttreatment review. For example, combining the feelings-based think-aloud usability approach of Serowik et al [49] and a posttreatment therapeutic evaluation (such as the Working Alliance Inventory or CCI) with the type of session evaluations used by Gega et al [40] would provide a comprehensive view of the user’s experience, as would a user experience–focused usability inquiry method such as situated co-inquiry [54]. Research approaches focused on capturing user experience in situ help us understand the impact of Web-based interventions moment-to-moment, as well as their overall effectiveness. There is a role for clinicians in this process along with computer usability and design experts.

Overall, it is the experience of the user that is important in delivering acceptable and useful treatment. An understanding of user experience including their expectations and responses is essential to increasing acceptability, effectiveness, and adherence to cost-effective, broadly accessible Web-based psychosocial interventions. The therapeutic process is important to treatment and the way it is assessed needs to keep up with the way in which therapy is delivered. As the delivery of health changes, there needs to be increasing collaboration among disciplines. This will contribute not only to robust best practice but also to the creation of new and agreed terminology and a cohesive body of literature to ensure broad and effective dissemination of knowledge. A critical understanding of user experience of eHealth is needed to improve outcomes for people who look to the Internet for help.

Abbreviations

- AMSTAR: Assessment of Multiple SysTemAtic Reviews

- CBT: cognitive behavioral therapy

- CCI: Client Change Interview

- RCT: randomized clinical trial

- TBI: traumatic brain injury

Database search details.

Included studies.

Excluded studies.

Footnotes

Conflicts of Interest: None declared.

References

- 1. Oh H, Rizo C, Enkin M, Jadad A. What is eHealth (3): a systematic review of published definitions. J Med Internet Res. 2005;7(1):e1. doi: 10.2196/jmir.7.1.e1. http://www.jmir.org/2005/1/e1/

- 2. Al-Shorbaji N, Geissbuhler A. Establishing an evidence base for e-health: the proof is in the pudding. Bull World Health Organ. 2012 May 1;90(5):322–322A. doi: 10.2471/BLT.12.106146. http://www.scielosp.org/scielo.php?script=sci_arttext&pid=BLT.12.106146&lng=en&nrm=iso&tlng=en

- 3. Abbott JM, Klein B, Ciechomski L. Best Practices in Online Therapy. Journal of Technology in Human Services. 2008 Jul 03;26(2-4):360–375. doi: 10.1080/15228830802097257.

- 4. Muñoz RF. Using evidence-based internet interventions to reduce health disparities worldwide. J Med Internet Res. 2010 Dec;12(5):e60. doi: 10.2196/jmir.1463. http://www.jmir.org/2010/5/e60/

- 5. Viswanath K, Kreuter MW. Health disparities, communication inequalities, and eHealth. Am J Prev Med. 2007 May;32(5 Suppl):S131–3. doi: 10.1016/j.amepre.2007.02.012. http://europepmc.org/abstract/MED/17466818

- 6. Hesse BW, Shneiderman B. eHealth research from the user's perspective. Am J Prev Med. 2007 May;32(5 Suppl):S97–103. doi: 10.1016/j.amepre.2007.01.019. http://europepmc.org/abstract/MED/17466825

- 7. Andersson G, Paxling B, Wiwe M, Vernmark K, Felix CB, Lundborg L, Furmark T, Cuijpers P, Carlbring P. Therapeutic alliance in guided internet-delivered cognitive behavioural treatment of depression, generalized anxiety disorder and social anxiety disorder. Behav Res Ther. 2012 Sep;50(9):544–50. doi: 10.1016/j.brat.2012.05.003.

- 8. Gainsbury S, Blaszczynski A. A systematic review of Internet-based therapy for the treatment of addictions. Clin Psychol Rev. 2011 Apr;31(3):490–8. doi: 10.1016/j.cpr.2010.11.007.

- 9. Nordgren LB, Hedman E, Etienne J, Bodin J, Kadowaki A, Eriksson S, Lindkvist E, Andersson G, Carlbring P. Effectiveness and cost-effectiveness of individually tailored Internet-delivered cognitive behavior therapy for anxiety disorders in a primary care population: a randomized controlled trial. Behav Res Ther. 2014 Aug;59:1–11. doi: 10.1016/j.brat.2014.05.007. http://linkinghub.elsevier.com/retrieve/pii/S0005-7967(14)00076-X

- 10. Health Promotion Agency. The Journal. [2015-10-27]. http://www.depression.org.nz

- 11. Sleepio. Let's build your sleep improvement program. [2015-10-27]. https://www.sleepio.com/

- 12. Barak A, Hen L, Boniel-Nissim M, Shapira N. A Comprehensive Review and a Meta-Analysis of the Effectiveness of Internet-Based Psychotherapeutic Interventions. Journal of Technology in Human Services. 2008 Jul 03;26(2-4):109–160. doi: 10.1080/15228830802094429.

- 13. Barak A, Klein B, Proudfoot JG. Defining internet-supported therapeutic interventions. Ann Behav Med. 2009 Aug;38(1):4–17. doi: 10.1007/s12160-009-9130-7.

- 14. Black AD, Car J, Pagliari C, Anandan C, Cresswell K, Bokun T, McKinstry B, Procter R, Majeed A, Sheikh A. The impact of eHealth on the quality and safety of health care: a systematic overview. PLoS Med. 2011 Jan;8(1):e1000387. doi: 10.1371/journal.pmed.1000387. http://dx.plos.org/10.1371/journal.pmed.1000387

- 15. van Heerden A, Tomlinson M, Swartz L. Point of care in your pocket: a research agenda for the field of m-health. Bull World Health Organ. 2012 May 1;90(5):393–4. doi: 10.2471/BLT.11.099788. http://www.scielosp.org/scielo.php?script=sci_arttext&pid=BLT.11.099788&lng=en&nrm=iso&tlng=en

- 16. Buenestado D, Elorz J, Pérez-Yarza EG, Iruetaguena A, Segundo U, Barrena R, Pikatza JM. Evaluating acceptance and user experience of a guideline-based clinical decision support system execution platform. J Med Syst. 2013 Apr;37(2):9910. doi: 10.1007/s10916-012-9910-7.

- 17. Glasgow RE. eHealth evaluation and dissemination research. Am J Prev Med. 2007 May;32(5 Suppl):S119–26. doi: 10.1016/j.amepre.2007.01.023.

- 18. Eysenbach G, CONSORT-EHEALTH Group. CONSORT-EHEALTH: improving and standardizing evaluation reports of Web-based and mobile health interventions. J Med Internet Res. 2011;13(4):e126. doi: 10.2196/jmir.1923. http://www.jmir.org/2011/4/e126/

- 19. Kring A, Johnson S, Davison G, Neale J. Abnormal Psychology. 12th edition. New York: John Wiley and Sons; 2012.

- 20. Campbell M, Fitzpatrick R, Haines A, Kinmonth AL, Sandercock P, Spiegelhalter D, Tyrer P. Framework for design and evaluation of complex interventions to improve health. BMJ. 2000 Sep 16;321(7262):694–6. doi: 10.1136/bmj.321.7262.694. http://europepmc.org/abstract/MED/10987780

- 21. Richards D, Richardson T. Computer-based psychological treatments for depression: a systematic review and meta-analysis. Clin Psychol Rev. 2012 Jun;32(4):329–42. doi: 10.1016/j.cpr.2012.02.004.

- 22. Hill C. Helping Skills: Facilitating Exploration, Insight, and Action. 3rd edition. Washington, DC: American Psychological Association (APA); 2009.

- 23. Martin DJ, Garske JP, Davis MK. Relation of the therapeutic alliance with outcome and other variables: a meta-analytic review. J Consult Clin Psychol. 2000 Jun;68(3):438–50.

- 24. Wu C, Tzeng Y, Kuo B, Tzeng GH. Integration of affective computing techniques and soft computing for developing a human affective recognition system for U-learning systems. IJMLO. 2014;8(1):50–66. doi: 10.1504/ijmlo.2014.059997.

- 25. Christensen H, Griffiths KM, Farrer L. Adherence in internet interventions for anxiety and depression. J Med Internet Res. 2009;11(2):e13. doi: 10.2196/jmir.1194. http://www.jmir.org/2009/2/e13/

- 26. Luborsky L, Barber JP, Siqueland L, Johnson S, Najavits LM, Frank A, Daley D. The Revised Helping Alliance Questionnaire (HAq-II): Psychometric Properties. J Psychother Pract Res. 1996;5(3):260–71. http://europepmc.org/abstract/MED/22700294

- 27. Miller S, Duncan B, Brown J, Sparks J, Claud D. The outcome rating scale: A preliminary study of the reliability, validity, and feasibility of a brief visual analog measure. Journal of Brief Therapy. 2003;2(2):91–100.

- 28. Cooper A, Reimann R, Cronin D. About face 3: the essentials of interaction design. 3rd edition. Indianapolis, IN: Wiley Pub; 2007.

- 29. Zhang Z. Usability evaluation. In: Zaphiris P, editor. Human Computer Interaction Research in Web Design and Evaluation. Hershey, PA: IGI Global; 2007. pp. 209–228.

- 30. Curran C, Burchardt T, Knapp M, McDaid D, Bingqin L. Challenges in Multidisciplinary Systematic Reviewing: A Study on Social Exclusion and Mental Health Policy. Social Policy & Administration. 2007;41(3):289–312. doi: 10.1111/j.1467-9515.2007.00553.x.

- 31. Shea BJ, Grimshaw JM, Wells GA, Boers M, Andersson N, Hamel C, Porter AC, Tugwell P, Moher D, Bouter LM. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7:10. doi: 10.1186/1471-2288-7-10. http://bmcmedresmethodol.biomedcentral.com/articles/10.1186/1471-2288-7-10

- 32. Knowles SE, Toms G, Sanders C, Bee P, Lovell K, Rennick-Egglestone S, Coyle D, Kennedy CM, Littlewood E, Kessler D, Gilbody S, Bower P. Qualitative meta-synthesis of user experience of computerised therapy for depression and anxiety. PLoS One. 2014;9(1):e84323. doi: 10.1371/journal.pone.0084323. http://dx.plos.org/10.1371/journal.pone.0084323

- 33. Baños RM, Etchemendy E, Farfallini L, García-Palacios A, Quero S, Botella C. EARTH of Well-Being System: A pilot study of an Information and Communication Technology-based positive psychology intervention. The Journal of Positive Psychology. 2014 Jun 23;9(6):482–488. doi: 10.1080/17439760.2014.927906.

- 34. Bendelin N, Hesser H, Dahl J, Carlbring P, Nelson KZ, Andersson G. Experiences of guided Internet-based cognitive-behavioural treatment for depression: a qualitative study. BMC Psychiatry. 2011;11:107. doi: 10.1186/1471-244X-11-107. http://www.biomedcentral.com/1471-244X/11/107

- 35. Bradley KL, Robinson LM, Brannen CL. Adolescent help-seeking for psychological distress, depression, and anxiety using an Internet program. International Journal of Mental Health Promotion. 2012 Feb;14(1):23–34. doi: 10.1080/14623730.2012.665337.

- 36. Cartreine JA, Locke SE, Buckey JC, Sandoval L, Hegel MT. Electronic problem-solving treatment: description and pilot study of an interactive media treatment for depression. JMIR Res Protoc. 2012;1(2):e11. doi: 10.2196/resprot.1925. http://www.researchprotocols.org/2012/2/e11/

- 37. de Graaf LE, Huibers MJ, Riper H, Gerhards SA, Arntz A. Use and acceptability of unsupported online computerized cognitive behavioral therapy for depression and associations with clinical outcome. J Affect Disord. 2009 Aug;116(3):227–31. doi: 10.1016/j.jad.2008.12.009.

- 38. Devi R, Carpenter C, Powell J, Singh S. Exploring the experience of using a web-based cardiac rehabilitation programme in a primary care angina population: a qualitative study. International Journal of Therapy & Rehabilitation. 2014;21(9):434–40. doi: 10.12968/ijtr.2014.21.9.434.

- 39. Fergus KD, McLeod D, Carter W, Warner E, Gardner SL, Granek L, Cullen KI. Development and pilot testing of an online intervention to support young couples' coping and adjustment to breast cancer. Eur J Cancer Care (Engl). 2014 Jul;23(4):481–92. doi: 10.1111/ecc.12162.

- 40. Gega L, Smith J, Reynolds S. Cognitive behaviour therapy (CBT) for depression by computer vs. therapist: patient experiences and therapeutic processes. Psychother Res. 2013;23(2):218–31. doi: 10.1080/10503307.2013.766941.

- 41. Gerhards SA, Abma TA, Arntz A, de Graaf LE, Evers SM, Huibers MJ, Widdershoven GA. Improving adherence and effectiveness of computerised cognitive behavioural therapy without support for depression: a qualitative study on patient experiences. J Affect Disord. 2011 Mar;129(1-3):117–25. doi: 10.1016/j.jad.2010.09.012.

- 42. Gorlick A, Bantum EO, Owen JE. Internet-based interventions for cancer-related distress: exploring the experiences of those whose needs are not met. Psychooncology. 2014 Apr;23(4):452–8. doi: 10.1002/pon.3443. http://europepmc.org/abstract/MED/24243756

- 43. Gulec H, Moessner M, Mezei A, Kohls E, Túry F, Bauer S. Internet-based maintenance treatment for patients with eating disorders. Professional Psychology: Research and Practice. 2011;42(6):479–486. doi: 10.1037/a0025806.

- 44. Hind D, O'Cathain A, Cooper CL, Parry GD, Isaac CL, Rose A, Martin L, Sharrack B. The acceptability of computerised cognitive behavioural therapy for the treatment of depression in people with chronic physical disease: a qualitative study of people with multiple sclerosis. Psychol Health. 2010 Jul;25(6):699–712. doi: 10.1080/08870440902842739.

- 45. Lara M, Tiburcio M, Aguilar Abrego A, Sánchez-Solís A. A four-year experience with a Web-based self-help intervention for depressive symptoms in Mexico. Rev Panam Salud Publica. 2014;35(5-6):399–406. http://www.scielosp.org/scielo.php?script=sci_arttext&pid=S1020-49892014000500013&lng=en&nrm=iso&tlng=en

- 46. Lederman R, Wadley G, Gleeson J, Bendall S, Álvarez-Jiménez M. Moderated online social therapy. ACM Trans. Comput.-Hum. Interact. 2014 Feb 01;21(1):1–26. doi: 10.1145/2513179.

- 47. Lillevoll KR, Wilhelmsen M, Kolstrup N, Høifødt RS, Waterloo K, Eisemann M, Risør MB. Patients' experiences of helpfulness in guided internet-based treatment for depression: qualitative study of integrated therapeutic dimensions. J Med Internet Res. 2013 Jun;15(6):e126. doi: 10.2196/jmir.2531. http://www.jmir.org/2013/6/e126/

- 48. McClay C, Waters L, McHale C, Schmidt U, Williams C. Online cognitive behavioral therapy for bulimic type disorders, delivered in the community by a nonclinician: qualitative study. J Med Internet Res. 2013 Mar;15(3):e46. doi: 10.2196/jmir.2083. http://www.jmir.org/2013/3/e46/

- 49. Serowik K, Ablondi K, Black A, Rosen M. Developing a benefits counseling website for Veterans using Motivational Interviewing techniques. Computers in Human Behavior. 2014 Aug;37:26–30. doi: 10.1016/j.chb.2014.03.019.

- 50. Tonkin-Crine S, Bishop FL, Ellis M, Moss-Morris R, Everitt H. Exploring patients' views of a cognitive behavioral therapy-based website for the self-management of irritable bowel syndrome symptoms. J Med Internet Res. 2013 Sep;15(9):e190. doi: 10.2196/jmir.2672. http://www.jmir.org/2013/9/e190/

- 51. Topolovec-Vranic J, Cullen N, Michalak A, Ouchterlony D, Bhalerao S, Masanic C, Cusimano MD. Evaluation of an online cognitive behavioural therapy program by patients with traumatic brain injury and depression. Brain Inj. 2010 Apr;24(5):762–72. doi: 10.3109/02699051003709599.

- 52. Van Voorhees BW, Ellis JM, Gollan JK, Bell CC, Stuart SS, Fogel J, Corrigan PW, Ford DE. Development and process evaluation of a primary care internet-based intervention to prevent depression in emerging adults. Prim Care Companion J Clin Psychiatry. 2007;9(5):346–55. doi: 10.4088/pcc.v09n0503. http://europepmc.org/abstract/MED/17998953

- 53. Wade SL, Oberjohn K, Burkhardt A, Greenberg I. Feasibility and preliminary efficacy of a web-based parenting skills program for young children with traumatic brain injury. J Head Trauma Rehabil. 2009;24(4):239–47. doi: 10.1097/HTR.0b013e3181ad6680.

- 54. Carter P. Liberating usability testing. Interactions. 2007 Mar 01;14(2):18–22. doi: 10.1145/1229863.1229864.

- 55. Myers MD, Newman M. The qualitative interview in IS research: Examining the craft. Information and Organization. 2007 Jan;17(1):2–26. doi: 10.1016/j.infoandorg.2006.11.001.

- 56. Goffman E. The presentation of self in everyday life. New York: Doubleday; 1959.

- 57. Greenhalgh T, Russell J. Why do evaluations of eHealth programs fail? An alternative set of guiding principles. PLoS Med. 2010;7(11):e1000360. doi: 10.1371/journal.pmed.1000360. http://dx.plos.org/10.1371/journal.pmed.1000360

- 58. Advocat J, Lindsay J. Internet-based trials and the creation of health consumers. Soc Sci Med. 2010 Feb;70(3):485–92. doi: 10.1016/j.socscimed.2009.10.051.

- 59. Iloabachie C, Wells C, Goodwin B, Baldwin M, Vanderplough-Booth K, Gladstone T, Murray M, Fogel J, Van Voorhees BW. Adolescent and parent experiences with a primary care/Internet-based depression prevention intervention (CATCH-IT). General Hospital Psychiatry. 2011 Nov;33(6):543–555. doi: 10.1016/j.genhosppsych.2011.08.004.

- 60. Mitchell N, Dunn K. Pragmatic evaluation of the viability of CCBT self-help for depression in higher education. Counselling and Psychotherapy Research. 2007 Sep;7(3):144–150. doi: 10.1080/14733140701565987.

- 61. Richards D, Timulak L. Client-identified helpful and hindering events in therapist-delivered vs. self-administered online cognitive-behavioural treatments for depression in college students. Counselling Psychology Quarterly. 2012 Sep;25(3):251–262. doi: 10.1080/09515070.2012.703129.

- 62. Jesson J, Matheson L, Lacey FM. Doing your literature review: Traditional and systematic techniques. London: SAGE Publications Ltd; 2011.
