Abstract

Objective

To compare physicians' self‐reported willingness to provide new patient appointments with the experience of research assistants posing as either a Medicaid beneficiary or privately insured person seeking a new patient appointment.

Data Sources/Study Setting

Survey administered to California physicians and telephone calls placed to a subsample of respondents.

Study Design

Cross‐sectional comparison.

Data Collection/Extraction Methods

All physicians whose California licenses were due for renewal in June or July 2013 were mailed a survey, which included questions about acceptance of new Medicaid and new privately insured patients. Subsequently, research assistants using a script called the practices of a stratified random sample of 209 primary care physician respondents in an attempt to obtain a new patient appointment. By design, half of the physicians selected for the telephone validation reported on the survey that they accepted new Medicaid patients and half indicated that they did not.

Principal Findings

The percentage of callers posing as Medicaid patients who could schedule new patient appointments was 18 percentage points lower than the percentage of physicians who self‐reported on the survey that they accept new Medicaid patients. Callers were also less likely to obtain appointments when they posed as patients with private insurance.

Conclusions

Physicians overestimate the extent to which their practices are accepting new patients, regardless of insurance status.

Keywords: Medicaid, physicians, primary care, access, new patient appointments

The Patient Protection and Affordable Care Act (ACA) has substantially increased the number of Americans enrolled in both Medicaid and private health insurance. This large growth in enrollment has heightened concern about beneficiaries' access to medical care, especially primary care and preventive services that can reduce the need for emergency department visits and hospital admissions. As expansion of access to health insurance progresses, policy makers need to monitor the capacity of primary care providers to provide timely access to care.

The Medicaid and CHIP Payment and Access Commission (MACPAC) conceptualizes access to medical care for Medicaid enrollees as a function of enrollees' characteristics, availability of care, and utilization of care (Medicaid and CHIP Payment and Access Commission 2011). Availability of care encompasses what previous conceptual frameworks have characterized as “potential access” (Andersen and Aday 1978). Measures of availability assess whether sufficient resources are available for Medicaid enrollees to obtain care. Important metrics include the overall supply of practitioners, the proportion of practitioners who participate in Medicaid, and the proportion of these practitioners who are accepting new Medicaid patients.

Surveys of practitioners are a major source of data on availability of care. The National Ambulatory Medical Care Survey (NAMCS) conducts an annual survey, which asks a national sample of office‐based physicians to indicate whether they are accepting any new patients and, if so, whether they are accepting new patients with Medicaid or other types of health insurance (Decker 2013, 2015). From 1996 to 2008, the Center for Studying Health System Change conducted five surveys of nationally representative samples of physicians that asked physicians whether they accepted new patients with Medicaid or other types of health insurance (Cunningham and Hadley 2008). Some states have conducted their own surveys on physicians' participation in Medicaid (Bindman, Chu, and Grumbach 2010; Coffman et al. 2014).

A limitation of physician surveys is that they rely on self‐reported data and, thus, are vulnerable to recall and social desirability bias. In recent years, the simulated patient approach has been used to collect information on availability of care in a manner that more closely resembles the experiences of Medicaid beneficiaries attempting to obtain care (Bisgaier and Rhodes 2011; Rhodes et al. 2014; Tiperneni et al. 2015). Recently the US Office of the Inspector General identified this type of study as the most valid and “direct method” for states to monitor access in Medicaid Managed Care organizations (United States Department of Health & Human Services, Office of Inspector General 2014). In the typical simulated patient study, trained supervised research assistants pose as patients contacting physician practices to request appointments. The script that callers use can be varied to measure whether availability of appointments varies by insurance status or other specific patient characteristics. A major advantage of simulated patient studies is that they obtain information in “real time” from office staff who book appointments in a manner that minimizes risks of recall and social desirability bias (Rhodes and Miller 2012). Staff members are not aware they are being observed and, thus, conduct “business as usual,” which might differ if they knew that their responses were being used to measure the availability of appointments. The method also reduces nonresponse bias that is prominent in many physician surveys.

This study uses findings from a simulated patient study as a gold standard by which to judge how accurately physicians report acceptance of new Medicaid and privately insured patients. If physicians' responses to surveys are consistent with their staff's responses to simulated patients, analysis of data from routinely administered surveys, such as NAMCS, may offer a relatively low‐cost means for monitoring potential access to care for Medicaid beneficiaries.

Methods

Physician Survey

The survey data come from a questionnaire that the Medical Board of California mailed, along with license renewal materials, to California physicians (MDs) whose licenses were due for renewal in June or July of 2013. Renewals are due every 2 years on the last day of a physician's birth month. Physicians may respond by mail or online through the Medical Board's website.

Physicians undergoing relicensure are required to complete a mandatory survey, which includes questions regarding their demographics, practice location, professional activities, hours worked, primary and secondary specialty, and whether they have completed residency and fellowship training. Through a research partnership with the Medical Board, we incorporated a voluntary, supplemental survey that asks physicians to report the percentage of their patients enrolled in Medicaid and whether their practice accepts new Medicaid patients. Physicians were also asked if their practices accepted new patients with private insurance. In addition, the supplemental survey includes a question about the physician's practice setting (e.g., solo practice, group practice, community/public clinic).

Physicians were eligible for inclusion in the sample if they had an active California license, practiced in California, had completed training, and provided patient care at least 20 hours per week. These inclusion criteria ensured that the analysis focused on physicians whose primary professional activity was providing patient care to Californians. Among physicians who met the eligibility criteria, the response rate for the supplemental survey was 63 percent. The demographic characteristics of respondents were similar to those of all physicians with California licenses who met the eligibility criteria (Coffman et al. 2014).

Simulated Patient Study

The simulated patient study involved the practices of randomly selected primary care physicians caring for nonelderly adults who responded to both the mandatory and supplemental surveys. The study focused on physicians who care for nonelderly adults because this is the population most likely to be newly eligible for Medicaid under the ACA expansion. Primary care physicians were defined as family physicians, general internists, and general practitioners. Primary care physicians caring for nonelderly adults were identified based on responses to a question on the supplemental survey that asked physicians to indicate whether they care for patients aged 18–64 years. Physicians employed by Kaiser Permanente were excluded because Kaiser Permanente uses a centralized telephone call center and a web portal to schedule new patient appointments with primary care physicians. Kaiser Permanente's health plan enrolls less than 1 percent of Medicaid beneficiaries in California.1

A random number generator was used to select two random samples of physicians who met the inclusion criteria: physicians who said they were willing and able to accept new Medicaid patients and physicians who said they were not willing and able to accept new Medicaid patients. Trained research assistants used a computer‐assisted telephone interviewing (CATI) system to place calls to physician practices. We aimed to contact 200 physicians (100 who indicated a willingness to accept new Medicaid patients and 100 who indicated that they were unwilling). We drew samples of 143 physicians in each group to account for the possibility that we would not have accurate telephone numbers for some physician practices and that some practices would be “out of scope” because they do not provide a full range of primary care services (e.g., an internist who practices as a hospitalist).
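The sampling step described above can be sketched as follows. This is a minimal illustration, not the study's actual procedure: the function name `draw_stratified_sample`, the seed, and the synthetic sampling frame are all hypothetical, and the only detail taken from the text is that 143 physicians were drawn at random from each of the two survey-response groups.

```python
import random

def draw_stratified_sample(respondents, per_stratum=143, seed=2013):
    """Draw an equal-sized simple random sample from each survey-response stratum.

    respondents: list of (physician_id, accepts_new_medicaid) tuples, where the
    boolean is the physician's self-report on the supplemental survey.
    """
    rng = random.Random(seed)  # hypothetical fixed seed so the draw is reproducible
    accepts = [pid for pid, says_yes in respondents if says_yes]
    declines = [pid for pid, says_yes in respondents if not says_yes]
    return {
        "accepts": rng.sample(accepts, per_stratum),    # sampling without replacement
        "declines": rng.sample(declines, per_stratum),
    }

# Synthetic frame for illustration only (NOT study data): 200 physicians per group.
frame = [(f"MD{i:04d}", i % 2 == 0) for i in range(400)]
sample = draw_stratified_sample(frame)
print(len(sample["accepts"]), len(sample["declines"]))
```

Oversampling each stratum (143 rather than the target of 100) leaves room for practices that later prove unreachable or out of scope.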

Telephone numbers for physicians' practices were obtained from publicly available sources, for example, websites, health plan directories, online physician rating services, and Google's online directory. All calls were placed between November 2013 and February 2014. The amount of time elapsed between completion of the survey and the call ranged from 3 to 11 months.

The same caller contacted each randomly selected physician's practice twice with the same scenario to reduce the likelihood that variables other than insurance type would affect the response. During one call, the caller stated that he or she was enrolled in Medicaid. In the other, the caller stated that he or she had private health insurance. The calls were spaced at least 2 weeks apart to reduce the likelihood that a scheduler would recognize the caller's voice.

During both calls, the caller presented a story of having relocated to California with untreated hypertension and of needing a primary care appointment. The hypertension scenario was chosen because hypertension is a common health problem that is routinely treated by primary care physicians. In addition, the National Heart, Lung, and Blood Institute recommends that persons with newly diagnosed hypertension have a physician visit within 1 month of diagnosis. Callers mentioned the hypertension scenario only if the scheduler asked why they were seeking the soonest possible appointment. In some cases, callers were able to schedule an appointment with their assigned insurance status without presenting this clinical scenario.

Callers used the name of a specific health plan to simulate typical interactions between schedulers and patients. For all calls in which the caller claimed to have private health insurance, the caller stated that he or she was enrolled in Anthem Blue Cross's preferred provider organization, the private health plan with the largest enrollment in California. Simulating interactions for Medicaid enrollees was more complicated because most Medicaid beneficiaries in California are enrolled in managed care plans and because the managed care plans with which California's Medicaid program contracts vary by county.2 We used the U.S. Department of Housing and Urban Development's crosswalk of zip codes and counties to map physician practices to counties (United States Department of Housing and Urban Development 2014). For each Medicaid call, the CATI system was populated with the name of the Medicaid managed care plan with the highest enrollment in that county or, if the physician's name appeared in the directory of any of the managed care plans serving that county, the name of the health plan in whose directory the physician appeared.
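The rule for choosing which Medicaid plan a caller named can be sketched in code. This is a hedged illustration only: the function `plan_for_medicaid_call`, the data layout, and the plan and physician names are hypothetical. The text does not say what was done when a physician appeared in more than one plan's directory, so the sketch assumes the highest-enrollment plan among those listing the physician.

```python
def plan_for_medicaid_call(physician, county_plans):
    """Return the plan name a caller would cite for a practice in one county.

    county_plans: list of dicts with keys "name", "enrollment", and "directory"
    (a set of physician names listed by that plan).
    """
    # Prefer plans whose directory lists the physician, as described in the text.
    listed = [plan for plan in county_plans if physician in plan["directory"]]
    # Otherwise fall back to every plan serving the county.
    candidates = listed or county_plans
    # Assumption: ties among candidates are broken by highest enrollment.
    return max(candidates, key=lambda plan: plan["enrollment"])["name"]

# Hypothetical county with two Medicaid managed care plans (not real data).
plans = [
    {"name": "Plan A", "enrollment": 500_000, "directory": {"Dr. Lee"}},
    {"name": "Plan B", "enrollment": 200_000, "directory": {"Dr. Ortiz"}},
]
print(plan_for_medicaid_call("Dr. Ortiz", plans))  # listed only by Plan B
print(plan_for_medicaid_call("Dr. Shah", plans))   # listed by neither plan
```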

Calls requesting new patient appointments were considered successful if one of the following conditions was met:

An appointment was scheduled with the sampled physician or with another physician or other primary care practitioner (e.g., nurse practitioner, physician assistant) in the sampled physician's practice;

The practice indicated that an appointment would be scheduled as soon as the caller called back with an insurance identification (ID) number (e.g., the receptionist confirmed that the practice accepted the caller's insurance type and gave a possible date and time);

The practice indicated that a “walk in” appointment was available, with the ability to have a follow‐up appointment with the primary care practitioner listed or another primary care practitioner in the office.

Calls in which an appointment was offered with a different primary care practitioner in the practice were deemed successful because we hypothesized that physicians in group practices may have answered the survey from the perspective of their practices overall as opposed to their own patient panels. In addition, for purposes of assessing access to new patient appointments, obtaining an appointment with any primary care practitioner in a practice is more important than obtaining an appointment with a specific physician.

Requests for appointments were considered unsuccessful if:

The practice was not accepting any new patients.

The practice was not accepting new patients with the caller's type of insurance.

The practice declined to grant an appointment for another reason when the scheduler was aware of the patient's insurance status.

Statistical Analysis

All analyses were conducted using SAS 9.4. In addition to descriptive statistics, McNemar's test of the significance of the difference between two correlated proportions was conducted to assess the consistency of responses to the physician survey and the simulated patient telephone calls. Use of a paired analysis provides substantial power to detect differences in the characteristic being tested. McNemar's test is appropriate because our goal is to compare responses for a single group of subjects for whom data on one variable (i.e., accepting new Medicaid patients) were collected using two different methods (survey and simulated patient calls). The null hypothesis is that the contingency table is symmetric (i.e., that the proportions of “yes” and “no” responses are the same for both methods of measuring acceptance of new Medicaid patients). If the p value for McNemar's test is not statistically significant, we cannot reject the hypothesis that the two methods yield the same proportions of “yes” and “no” responses, which is consistent with the survey method producing findings similar to those of simulated patient calls. If, on the other hand, the p value is statistically significant, the proportions of “yes” and “no” responses differ between the two methods, indicating that findings from the survey and the simulated patient calls differ. To assess the accuracy of responses to the survey, we also calculated the positive and negative predictive values of survey responses for predicting responses to the simulated patient calls.
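McNemar's test depends only on the discordant pairs, that is, the physicians for whom the survey and the calls disagree. The sketch below is a standard-library Python illustration, not the SAS code used in the study; the function names are hypothetical, and the discordant counts are the ones reported in Tables 2 and 3. It shows both the chi-square statistic (without continuity correction) and an exact two-sided binomial p value.

```python
from math import comb

def mcnemar_chi2(b, c):
    """McNemar chi-square statistic (1 df, no continuity correction)."""
    return (b - c) ** 2 / (b + c)

def mcnemar_exact_p(b, c):
    """Exact two-sided McNemar p value.

    Under the null hypothesis of symmetry, each discordant pair is equally
    likely to fall in either off-diagonal cell, so min(b, c) follows a
    Binomial(b + c, 0.5) distribution.
    """
    n = b + c
    k = min(b, c)
    lower_tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * lower_tail)

# Discordant counts: survey "accepts" but call unsuccessful (b),
# survey "does not accept" but call successful (c).
medicaid_b, medicaid_c = 48, 12   # Table 2
private_b, private_c = 43, 5      # Table 3

print(mcnemar_chi2(medicaid_b, medicaid_c), mcnemar_exact_p(medicaid_b, medicaid_c))
print(mcnemar_chi2(private_b, private_c), mcnemar_exact_p(private_b, private_c))
```

Both p values fall well below 0.0001, in line with the values reported under the tables. The concordant cells do not enter the calculation at all, which is why the test speaks to whether the two methods yield the same overall proportions rather than to how often they agree.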

Several secondary analyses were undertaken to examine whether the degree of consistency in responses varied across physicians practicing in counties with different models of Medicaid managed care and among physicians with different demographic and practice characteristics. To test whether our scenario in which a simulated patient names a particular Medicaid managed care plan was miscategorizing physicians as not accepting Medicaid patients when they participate in a different Medicaid plan, we compared the agreement between the survey responses and the simulated patient results in counties with only one Medicaid managed care plan to responses in counties with more than one Medicaid managed care plan. We hypothesized that if the name of the health plan mentioned by the simulated patient was the source of the discrepancy, we would see a higher rate of agreement between the survey response and the experience of the simulated patient in counties with a single Medicaid managed care plan.

We also estimated binary logistic regression models to assess whether the odds of agreement between responses to the physician survey and the simulated patient calls were associated with primary care physicians' demographic and practice characteristics. The models included variables for sex, age group (<46 years, 46–60 years, >60 years), specialty (family medicine, internal medicine), and practice type (community/public clinic, group practice, solo practice). Separate regressions were estimated for Medicaid and private health insurance.

In addition, we assessed whether the rate of agreement between responses to the survey and the simulated patient calls was associated with the amount of time that elapsed between a physician's completion of the survey and completion of the simulated patient call. We hypothesized that longer lags between completion of the survey and the simulated patient call would be negatively associated with the rate of agreement, because the likelihood that a physician's practice would change its policies on accepting new patients increases over time. Testing this hypothesis is important because some simulated patient calls were made in January and February 2014. Demand for new patient appointments may have increased in some physicians' practices during those months due to the large number of Californians who had recently obtained health insurance through the state's health insurance exchange or the expansion of eligibility for its Medicaid program. We estimated the Pearson correlation coefficient for the association between the number of months between completion of the surveys and the simulated calls and the rate of agreement between responses to the survey and the simulated patient calls.

Institutional Review Boards

The project was approved by the institutional review boards (IRBs) of the University of California, San Francisco and the University of Pennsylvania.

Results

Callers attempted to contact the practices of 286 primary care physicians who provided full scope primary care services for nonelderly adults. By design, the sample comprised 143 physicians whose survey responses indicated that they accept new Medicaid patients and 143 who reported that they do not accept new Medicaid patients. Seventy‐seven of the sampled physicians were subsequently omitted from the final sample because contact information for the practice was not valid (32 physicians), callers were unable to contact the practice after six attempts (7 physicians), or responses to the simulated patient indicated that the practice did not provide a full scope of primary care services for nonelderly adults (38 physicians). Calls were successfully completed with the practices of 209 primary care physicians. Table 1 presents the characteristics of these physicians along with the characteristics of all primary care physicians who completed the supplemental survey. The primary care physicians included in the validation study were somewhat older and more likely to be general internists and in solo practice than all primary care physicians who completed the supplemental survey, but the differences were not statistically significant.

Table 1.

Demographic and Practice Characteristics

| Characteristic | All Primary Care Physicians Eligible for Inclusion in Validation Study # (%) | Primary Care Physicians Included in Validation Study # (%) |
|---|---|---|
| Age | | |
| <46 years | 135 (25%) | 45 (22%) |
| 46–60 years | 237 (44%) | 99 (47%) |
| >60 years | 165 (31%) | 65 (31%) |
| Gender | | |
| Female | 201 (37%) | 82 (39%) |
| Male | 336 (63%) | 127 (61%) |
| Specialty | | |
| Family medicine | 317 (59%) | 115 (55%) |
| General internal medicine | 220 (41%) | 94 (45%) |
| Practice type | | |
| Solo practice | 175 (33%) | 80 (38%) |
| Private group practice | 234 (44%) | 93 (45%) |
| Community/public clinics | 76 (14%) | 26 (12%) |
| Other | 52 (10%) | 10 (5%) |

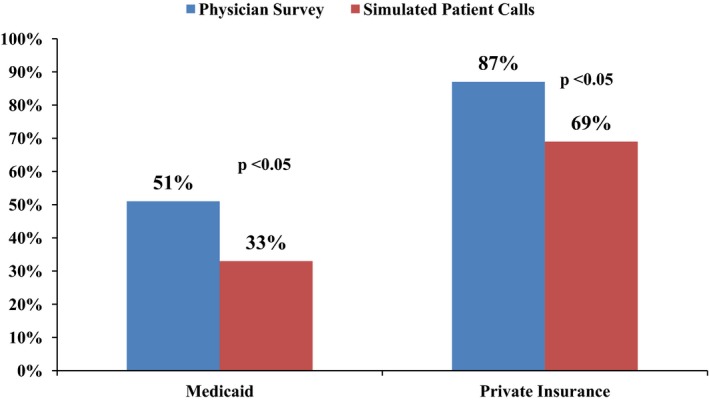

Figure 1 shows the percentages of physicians whose survey responses indicated that they are accepting new Medicaid and privately insured patients and compares these percentages to the percentages of callers who successfully obtained new patient appointments with these physicians or with other primary care practitioners in their practices. By design, 51 percent (95 percent CI: 44 percent, 58 percent) of the respondents to the physician survey indicated that they accepted new Medicaid patients.3 In contrast, callers were able to schedule new patient appointments in only 33 percent (95 percent CI: 27 percent, 40 percent) of the practices called, a difference of 18 percentage points. A similar pattern was observed for private health insurance. The survey results suggest that 87 percent (95 percent CI: 82 percent, 92 percent) of primary care physicians were accepting new patients with private insurance, whereas only 69 percent (95 percent CI: 63 percent, 75 percent) of the practices called offered the privately insured callers new patient appointments.
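The Medicaid proportions and their intervals can be approximated from the cell counts in Table 2. The sketch below assumes a normal-approximation (Wald) interval; the text does not state which interval method was used, so the function name `wald_ci` and the choice of method are illustrative assumptions, and the resulting bounds may differ from the published ones by rounding.

```python
from math import sqrt

def wald_ci(successes, n, z=1.96):
    """Return (proportion, lower, upper) using a Wald 95% interval by default."""
    p = successes / n
    half_width = z * sqrt(p * (1 - p) / n)
    return p, p - half_width, p + half_width

n_physicians = 209
survey_yes = 58 + 48   # Table 2: physicians reporting that they accept new Medicaid patients
calls_yes = 58 + 12    # Table 2: practices where the Medicaid caller obtained an appointment

for label, count in [("survey", survey_yes), ("calls", calls_yes)]:
    p, lo, hi = wald_ci(count, n_physicians)
    print(f"{label}: {p:.1%} (95% CI: {lo:.1%}, {hi:.1%})")
```

Under this assumption the bounds land within about one percentage point of the reported intervals.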

Figure 1.

Rates at Which Physicians Accept New Patients: Physician Survey versus Simulated Patient Calls

Table 2 provides information about the sources of the discrepancy between findings from the survey and the simulated patient calls for Medicaid beneficiaries. For 149 of the 209 primary care physicians (71 percent) included in the study, responses to the survey and the simulated patient telephone calls regarding Medicaid were consistent; 28 percent of calls confirmed that the physician's practice accepted new Medicaid patients and 43 percent confirmed that the practice did not accept new Medicaid patients. Among the 60 physicians (29 percent) for whom responses to the survey and telephone calls were inconsistent, four of five discrepancies occurred because the simulated patient telephone calls failed to confirm the physician's report that the practice accepted new Medicaid patients. In other words, 48 physicians (23 percent) indicated through their survey responses that their practice accepted new Medicaid patients, but callers were told that this was not the case. There were also 12 cases (6 percent) in which the physician reported that the practice did not accept new Medicaid patients, but callers were told that the practice did in fact accept them.

Table 2.

Cross‐Tab of Responses to Physician Survey and Simulated Patient Calls: Medicaid

| Physician Survey | Simulated Patient Calls: Accepts New Medicaid Patients | Simulated Patient Calls: Does Not Accept New Medicaid Patients |
|---|---|---|
| Accepts new Medicaid patients | 58 (28%) | 48 (23%) |
| Does not accept new Medicaid patients | 12 (6%) | 91 (43%) |

p value for McNemar's test <0.0001.

Positive predictive value = 58/(58 + 48) = 55%.

Negative predictive value = 91/(12 + 91) = 88%.

Among the 43 physicians included in the study who practiced in counties served by a single Medicaid managed care plan, 70 percent of responses to the physician survey and the simulated patient telephone calls were consistent, a rate that is similar to the rate for all physicians included in the study (71 percent). Thus, inconsistencies between physicians' responses to the survey and the experiences of simulated patients with their practices do not seem to result from simulated patients indicating that they were covered by health plans with which physicians are not affiliated.

Table 3 shows that the pattern of responses was similar for private health insurance. For 161 of 209 (77 percent) physicians, responses to the survey and the telephone calls were consistent; for 48 physicians (23 percent), the responses were inconsistent. As with Medicaid, most discrepancies occurred because the simulated patient telephone calls did not support the physician's assertion that the practice was accepting new patients with private insurance.

Table 3.

Cross‐Tab of Responses to Physician Survey and Simulated Patient Calls: Private Insurance

| Physician Survey | Simulated Patient Calls: Accepts New Patients with Private Insurance | Simulated Patient Calls: Does Not Accept New Patients with Private Insurance |
|---|---|---|
| Accepts new patients with private insurance | 139 (66%) | 43 (21%) |
| Does not accept new patients with private insurance | 5 (2%) | 22 (11%) |

p value for McNemar's test <0.0001.

Positive predictive value = 139/(139 + 43) = 76%.

Negative predictive value = 22/(5 + 22) = 81%.

We found that for Medicaid there were no statistically significant associations between the odds of agreement in responses to the physician survey and simulated patient calls and a physician's sex, age, primary care specialty, or practice type. For private health insurance, the odds of inconsistent responses were higher for physicians who practiced in a community/public clinic than for physicians in solo practice, but there were no statistically significant associations between the odds of agreement and other physician characteristics (results not shown).

In addition, we examined whether physicians for whom responses to the physician survey and simulated patient calls were inconsistent for Medicaid also had inconsistent responses regarding private health insurance. We found that only one in five physicians who had inconsistent responses for Medicaid or private health insurance had inconsistent responses for both insurance types (results not shown). This finding suggests that the discrepancy between responses to the survey and simulated patient calls was not due to a characteristic of physician recall that operates independently of the type of insurance on which physicians were reporting.

As we hypothesized, the rate of agreement between physicians' responses to the survey and the simulated patients' experience decreased as the number of months between completion of the survey and the calls increased. However, for both Medicaid and private insurance the Pearson correlation statistic was small and was not statistically significant. For Medicaid the correlation coefficient (rho) was −0.12 (95 percent CI: −0.80, 0.69), and for private insurance the coefficient was −0.18 (95 percent CI: −0.75, 0.55).

Positive and negative predictive values were calculated to assess the accuracy of survey responses for predicting responses to simulated patient calls (see notes below Tables 2 and 3). For Medicaid, the positive predictive value of the physician's response to the survey was 55 percent and the negative predictive value was 88 percent. For private health insurance, the positive predictive value of the physician's response to the survey was 76 percent and the negative predictive value was 81 percent. These findings suggest that the survey may be better at predicting whether a physician's practice will accept privately insured patients than Medicaid patients.
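The predictive values and overall agreement rates follow directly from the four cells of each cross‐tab. As a minimal sketch (the function name `predictive_values` and its argument names are illustrative, with the simulated patient calls treated as the reference standard), the arithmetic is:

```python
def predictive_values(yes_yes, yes_no, no_yes, no_no):
    """Compute PPV, NPV, and overall agreement from a 2x2 cross-tab.

    Arguments are named survey response first, call outcome second:
    yes_no = survey says "accepts" but the call was unsuccessful.
    """
    ppv = yes_yes / (yes_yes + yes_no)   # survey "accepts" confirmed by the call
    npv = no_no / (no_yes + no_no)       # survey "does not accept" confirmed by the call
    agreement = (yes_yes + no_no) / (yes_yes + yes_no + no_yes + no_no)
    return ppv, npv, agreement

medicaid = predictive_values(58, 48, 12, 91)   # counts from Table 2
private = predictive_values(139, 43, 5, 22)    # counts from Table 3

for label, (ppv, npv, agreement) in [("Medicaid", medicaid), ("Private", private)]:
    print(f"{label}: PPV {ppv:.0%}, NPV {npv:.0%}, agreement {agreement:.0%}")
```

The low positive predictive value for Medicaid reflects the 48 practices where the survey said "accepts" but the caller could not obtain an appointment.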

Discussion

Findings from this validation study suggest that results of physician self‐reported surveys and simulated patient studies differ regardless of a patient's type of health insurance. These differences are highly statistically significant and are due primarily to physicians' overestimation of the availability of new patient appointments in their practices. Our findings suggest that primary care physicians may overestimate the rate at which their practices accept new adult patients by 17–19 percentage points. This is particularly concerning for new Medicaid patients because in many states the number of primary care physicians willing to see them is substantially lower than the number accepting new patients with private health insurance (Decker 2013).

Our study cannot definitively explain why physicians' self‐reports of their availability to accept new patients covered by either Medicaid or private insurance are so different from the experience of simulated patients. In the case of Medicaid, we do not believe the difference is related to simulated patients naming a plan the physician does not accept, because we found similar rates of agreement between physician self‐reports and the simulated patient results in counties that offer just one Medicaid managed care plan. We also did not find any systematic bias based on physician or practice characteristics to explain our results.

The ACA required states to increase Medicaid payment rates for primary care physicians to at least Medicare rates during the period of our study. This could in theory have changed physician practices' willingness to accept Medicaid patients during the time interval between when physicians completed the survey (March 2013 to August 2013) and when we conducted the calls (November 2013 to February 2014). However, we do not think this is the case because California did not begin issuing increased payments to primary care physicians until after our simulated patient study exited the field.

Regardless, we explored whether some physicians' practices may have changed their policies on acceptance of new Medicaid patients between the time the supplemental survey was administered and the time the telephone calls were made. We found that the rate of agreement decreased as the length of time between submission of survey responses and completion of the calls increased. However, this decrease was not statistically significant for either Medicaid or private insurance and, therefore, not likely to be the primary explanation for why the simulated patient calls yielded smaller estimates of the percentages of physicians accepting new Medicaid and privately insured patients than the survey.

Physicians may have responded to the survey questions in an aspirational manner, thinking about whether they have or would ever, as a matter of routine practice, accept new Medicaid patients or new patients with private insurance. This perspective contrasts with that of the schedulers responding to the telephone calls. The schedulers were indicating not only whether the physician was potentially willing to accept new patients with Medicaid but also whether the physician or the practice had the capacity to do so at that specific point in time. The finding that primary care physicians overestimated their practice's ability to accept both new Medicaid patients and new privately insured patients adds support to this explanation of the discrepancy. Physicians may also not be as well informed as schedulers about their practice's current policies on accepting patients with specific types of health insurance. Finally, some physicians may have provided inaccurate information on the self‐reported survey, believing it is socially desirable to be perceived as accepting new patients, whether Medicaid or privately insured.

To the best of our knowledge, this is the first study to use a simulated patient methodology to validate findings from a physician survey. The only previous study that compared methods for monitoring Medicaid participation compared physician surveys to encounter data on a sample of physician visits (Kletke et al. 1985). That study found that the estimates were highly correlated (Pearson coefficient = 0.77) but that physicians overestimated their Medicaid participation by 40 percent relative to estimates derived from the sample of visits.

Our study has some important limitations. First, the findings are limited to primary care physicians. It is not known whether physicians in other specialties also overestimate their participation in Medicaid and private insurance. Second, findings may not generalize to physicians outside California, where expectations about accepting new patients and arrangements between physicians and health plans may differ from those in other states. Third, simulated patient calls have limitations as a gold standard for estimating acceptance of new patients. For example, real patients would have insurance numbers and Social Security numbers, which we did not fabricate but indicated we would bring to the appointment. This might have resulted in fewer scheduled appointments. This method also may not accurately reflect how some Medicaid beneficiaries select a primary care physician. In some states in which Medicaid managed care has been implemented, new beneficiaries are automatically assigned to a participating primary care practice if they do not select one. In addition, while rare, some primary care practices may reach out to new enrollees, as opposed to waiting for them to call for appointments (Robeznieks 2015).

Implications

We found that primary care physicians overestimate their practices' capacity to accept new patients across different types of health insurance. Accurate methods of tracking primary care capacity are critical, as millions of Americans are gaining insurance under the ACA.

Simulated patient studies offer some important advantages over other survey methodologies. Unlike provider surveys, simulated patient studies are not subject to recall bias, response bias, social desirability bias, or aspirational responses. This method also provides the most direct proxy for the actual experiences of persons seeking new patient appointments. However, simulated patient studies do not provide information about the reasons physicians are not accepting new patients.

Traditional surveys are better suited to identifying the reasons behind lack of appointment availability, which have important implications for policy making. Surveys of practitioners could also continue to play a useful role in monitoring trends in access to care for Medicaid beneficiaries, provided that any bias in the estimates generated from them is consistent. Specifically, if estimates of rates of accepting new Medicaid patients are inflated but positively correlated with estimates generated by simulated patient studies, one could still draw conclusions about trends in Medicaid participation. Estimates derived from surveys could then be adjusted for physicians' tendency to overestimate participation. Our findings suggest that this may be possible because we found no consistent statistically significant associations between the likelihood of overestimation and physicians' characteristics. However, our findings should be interpreted with caution because our sample size limited our ability to detect statistically significant differences among subgroups of physicians.

Cost may be a consideration when deciding whether to use simulated patient calls or practitioner surveys to monitor access to primary care. For our simulated patient study, the cost for each completed call was $170. For our mail survey, the cost of data collection for our research team was $0 because the questionnaire was incorporated into a mailing the Medical Board was already sending as a part of the relicensure process and because physicians either paid postage to return surveys by mail with their licensure renewal forms or completed them online through the Medical Board's website. Although traditional surveys can be expensive to administer, in cases where there is already a vehicle for data collection, such as medical relicensure, they can be an efficient means for gathering information from physicians and following trends over time. Costs separate from data collection, such as those for preparing the survey questions and caller scenarios and for analyzing the data, also need to be considered.

Finally, neither methodology can answer all of the important questions about access to care for Medicaid enrollees. Neither method lends itself well to evaluating the quality of care provided by available physicians. These methods also do not capture Medicaid enrollees' perspectives on their access to care. In other words, each method provides important information about potential access to care, but neither is very helpful for assessing realized access to care. As MACPAC's framework suggests (Medicaid and CHIP Payment and Access Commission 2011), a multimethods approach is needed to provide a complete understanding of the impact of the ACA and other policy changes on Medicaid enrollees' access to care.

Supporting information

Appendix SA1: Author Matrix.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: Funding for this project was provided by the California HealthCare Foundation and the California Department of Health Care Services. The authors thank Martha Van Haitsma, Tiana Pyer‐Pereira, David Chearo, and the field staff of the University of Chicago Survey Lab for conducting the simulated patient telephone calls for this project. We also thank Denis Hulett, MS, of the University of California, San Francisco, for his contributions to programming, data management, and statistical analysis for this project.

Disclosures: None.

Disclaimers: None.

Notes

The estimate of the percentage of California Medicaid beneficiaries enrolled in Kaiser Foundation Health Plan was calculated using data from two reports issued by the California Department of Health Care Services, California's Medicaid agency. California Department of Health Care Services. 2014. “Medi‐Cal Managed Care Enrollment Report—January 2014” [accessed August 5, 2015]. Available at http://www.dhcs.ca.gov/dataandstats/reports/Documents/MMCD_Enrollment_Reports/MMCDEnrollRptJan2014.pdf; California Department of Health Care Services. “Medi‐Cal Monthly Eligibles Trend Report for January 2014” [accessed August 5, 2015]. Available at http://www.dhcs.ca.gov/dataandstats/statistics/Documents/RASB_Issue_Brief_Medi-Cal_Eligibles_Trend_Report_for_January_2014%20(Feb%202014).pdf.

California has three different models for Medicaid managed care contracting. In 22 counties, Medicaid contracts with two or more private (i.e., commercial) health plans. Beneficiaries for whom managed care enrollment is mandatory can choose to enroll in any of the private plans offered in their counties. In 13 counties, Medicaid contracts with one private health plan and one “local initiative” health plan whose provider network consists primarily of safety net providers. In these counties, beneficiaries can choose either the private plan or the local initiative plan. Finally, in 23 counties, Medicaid contracts with a single, county‐operated health system that enrolls all Medicaid beneficiaries in managed care. Available at http://www.dhcs.ca.gov/provgovpart/Documents/MMCDModelFactSheet.pdf.

We designed the sampling procedure for the simulated patient calls such that half of the primary care physicians whose practices were called reported on the survey that they accept new Medicaid patients. The percentages of family physicians/general practitioners and general internists whose responses to the survey indicate that they accept new Medicaid patients and new privately insured patients were 54 and 52 percent, respectively (Coffman et al. 2014). (General pediatricians were excluded from the simulated patient calls because we limited our analysis to primary care physicians who care for nonelderly adults.)

References

- Andersen, R., and Aday L. A. 1978. “Access to Care in the U.S.: Realized and Potential.” Medical Care 16 (7): 533–46.

- Bindman, A. B., Chu P. W., and Grumbach K. 2010. “Physician Participation in Medi‐Cal, 2008.” California HealthCare Foundation [accessed on March 10, 2015]. Available at http://www.chcf.org/~/media/MEDIA%20LIBRARY%20Files/PDF/PDF%20P/PDF%20PhysicianParticipationMediCal2008.pdf

- Bisgaier, J., and Rhodes K. V. 2011. “Auditing Access to Specialty Care for Children with Public Insurance.” New England Journal of Medicine 364 (24): 2324–33.

- Coffman, J. M., Hulett D., Fix M., and Bindman A. B. 2014. “Physician Participation in Medi‐Cal: Ready for the Enrollment Boom?” California HealthCare Foundation [accessed on March 10, 2015]. Available at http://www.chcf.org/~/media/MEDIA%20LIBRARY%20Files/PDF/PDF%20P/PDF%20PhysicianParticipationMediCalEnrollmentBoom.pdf

- Cunningham, P. J., and Hadley J. 2008. “Effects of Changes in Income and Practice Circumstances on Physicians' Decisions to Treat Charity and Medicaid Patients.” Milbank Quarterly 86 (1): 91–123.

- Decker, S. L. 2013. “Two‐Thirds of Primary Care Physicians Accepted New Medicaid Patients in 2011‐2012: A Baseline to Measure Future Acceptance Rates.” Health Affairs 32 (7): 1183–7.

- Decker, S. L. 2015. “Acceptance of New Medicaid Patients by Primary Care Physicians and Experiences with Physician Availability Among Children on Medicaid or the Children's Health Insurance Program.” Health Services Research 50 (5): 1508–27. doi:10.1111/1475‐6773.12288.

- Kletke, P. R., Davidson S. M., Perloff J. D., Schiff D. W., and Connelly J. P. 1985. “The Extent of Physician Participation in Medicaid.” Health Services Research 20 (5): 503–23.

- Medicaid and CHIP Payment and Access Commission. 2011. “Report to Congress on Medicaid and CHIP” [accessed on March 10, 2015]. Available at https://www.macpac.gov/publication/ch-4-examining-access-to-care-in-medicaid-and-chip/

- Rhodes, K. V., and Miller F. G. 2012. “Simulated Patient Studies: An Ethical Analysis.” Milbank Quarterly 90 (4): 706–24.

- Rhodes, K. V., Kenney G. M., Friedman A. B., Saloner B., Lawson C. C., Chearo D., Wissoker D., and Polsky D. 2014. “Primary Care Access for New Patients on the Eve of Health Care Reform.” JAMA Internal Medicine 174 (6): 861–9.

- Robeznieks, A. 2015. “Oregon Medicaid Reform Meets Savings Goals as More Enroll.” Modern Healthcare; January 14 [accessed on August 13, 2015]. Available at http://www.modernhealthcare.com/article/20150114/NEWS/301149981

- Tipirneni, R., Rhodes K. V., Hayward R. A., Lichtenstein R. L., Reamer E. N., and Davis M. M. 2015. “Primary Care Appointment Availability for New Medicaid Patients Increased After Medicaid Expansion in Michigan.” Health Affairs (Millwood) 34 (8): 1399–406.

- United States Department of Health & Human Services, Office of Inspector General. 2014. “State Standards for Access to Care in Medicaid Managed Care. Report OEI‐02‐11‐00320” [accessed on March 10, 2015]. Available at http://oig.hhs.gov/oei/reports/oei-02-11-00320.asp

- United States Department of Housing and Urban Development. 2014. “HUD USPS Zip Code Crosswalk Files” [accessed on March 10, 2015]. Available at http://www.huduser.org/portal/datasets/usps_crosswalk.html
