Abstract

Objective

We examined the representativeness of the nonfederal hospital emergency department (ED) visit data in the National Syndromic Surveillance Program (NSSP).

Methods

We used the 2012 American Hospital Association Annual Survey Database, other databases, and information from state and local health departments participating in the NSSP about which hospitals submitted data to the NSSP in October 2014. We compared ED visits for hospitals submitting data with all ED visits in all 50 states and Washington, DC.

Results

Approximately 60.4 million of 134.6 million ED visits nationwide (~45%) were reported to have been submitted to the NSSP. ED visits in 5 of 10 regions and the majority of the states were substantially underrepresented in the NSSP. The NSSP ED visits were similar to national ED visits in terms of many of the characteristics of hospitals and their service areas. However, visits in hospitals with the fewest annual ED visits, in rural trauma centers, and in hospitals serving populations with high percentages of Hispanics and Asians were underrepresented.

Conclusions

NSSP nonfederal hospital ED visit data were representative for many hospital characteristics and in some geographic areas but were not very representative nationally and in many locations. Representativeness could be improved by increasing participation in more states and among specific types of hospitals.

Keywords: syndromic surveillance, situational awareness, public health preparedness

Introduction

In a number of countries, health agencies at national, regional, and local levels use data from hospital emergency departments (EDs) to identify and monitor clusters, outbreaks, and trends in infectious and chronic diseases, injuries, and adverse health effects of hazardous environmental conditions that may require public health action (1–9). Syndromic surveillance is a process by which public health agencies, hospitals, medical professionals, and other organizations share, analyze, and query health and health-related data in near real time to make information on the health of communities available to public health and other officials for situational awareness, decision-making, and enhanced responses to hazardous events and disease outbreaks (1–16).

Syndromic surveillance is distinguished from other public health surveillance systems by the combination of several characteristics, including automated exchange of data originally created for other purposes from clinical electronic health information systems and other sources, the creation of defined syndromes based on words in clinic text notes and diagnostic and treatment information when available, and automated data scans to detect and display statistical anomalies and alert users to potential adverse health events (1–17). Although users and researchers are still assessing the uses and limitations of the systems, many health agencies find syndromic surveillance to be an important addition to their other surveillance systems (1–16). Syndromic surveillance systems provide agencies with near real-time data on a broad range of health conditions for large populations, allowing them to adapt quickly to new public health surveillance needs (1–16). The Health Information Technology for Economic and Clinical Health Act (HITECH Act), enacted under Title XIII of the American Recovery and Reinvestment Act of 2009 (Pub L. 111–5), authorized the US Department of Health and Human Services (HHS) to promote the adoption and meaningful use of health information technology such as electronic health records to improve population health and strengthen public health surveillance for outbreak management or mitigation of public health disasters (17). In September 2012, HHS identified syndromic surveillance as an objective for hospitals and health professionals to receive incentives from the Centers for Medicare and Medicaid Services for submission of syndromic surveillance data to public health agencies (17).

The National Syndromic Surveillance Program (NSSP), formerly known as BioSense, is a collaboration among local, state, and national public health agencies; other federal agencies; hospitals; and health care professionals that supports the timely exchange of electronic health data and information for syndromic surveillance, situational awareness, and enhanced response to hazardous events and disease outbreaks (18). The NSSP is supported by the Centers for Disease Control and Prevention (CDC), and it includes a community of practice and a cloud-based syndromic surveillance platform (NSSP’s BioSense platform) that hosts an electronic information system and other analytic tools and services. The Association of State and Territorial Health Officials (ASTHO), through a cooperative agreement with the CDC, supports the cloud-based infrastructure for the platform and develops data-use agreements with participating state and local health departments. These health departments may submit hospital-provided data to the platform, or hospitals or health information exchanges within their jurisdictions may submit data directly. The objective of the community of practice and platform is to enable public health practitioners to share and access data, tools, and services to identify, monitor, investigate, and respond to hazardous events and disease outbreaks, including those spanning jurisdictional boundaries (18).

The ability of the NSSP to support syndromic surveillance is based in part on the representativeness of the data in the system, that is, the system’s ability to accurately describe the occurrence of health-related events over time and their distribution in the population by person and place (20, 21). One objective of the NSSP is to receive sufficient near real-time electronic data from US nonfederal hospital ED visits in all 50 states and the District of Columbia to provide public health decision-makers with representative regional- and national-level syndromic surveillance data. However, limited information has been available to date on which hospitals were submitting data to the NSSP’s BioSense platform and on the representativeness of ED visit data at regional and national levels.

We examined the representativeness of NSSP nonfederal hospital ED visits in the 50 states and the District of Columbia by using data from the 2012 American Hospital Association (AHA) Annual Survey Database (22), the most recent data available; the Dartmouth Atlas of Healthcare (23); the US Census (24); and the Health Resources and Services Administration (25), along with information from the participating state and local health departments. The purpose of this assessment was to describe the baseline representativeness of the NSSP data by identifying major differences between national and NSSP ED visits in terms of geographic location, hospital characteristics, and hospital service area characteristics. Although the US Department of Defense and the Department of Veterans Affairs currently participate in the NSSP, and in the future US territories may participate, the focus of this assessment was on the representativeness of nonfederal hospital ED visits, excluding visits in the US territories. We focused on identifying types of nonfederal hospital ED visits that were underrepresented in the NSSP to help guide future recruitment of state and local health departments and hospitals and narrow potential gaps in representativeness. Identifying geographic areas or types of hospitals that were overrepresented as well as those underrepresented may help to guide future data analyses by ensuring that the data are appropriately weighted to provide better national and regional estimates. However, that was not the focus of the present assessment.

Methods

This assessment was conducted to improve a public health surveillance practice rather than as research, and no data on human subjects were used. Therefore, institutional review board review and approval were not required. The comprehensive AHA hospital database has detailed information on characteristics of US hospitals, including the number of total annual ED visits in each hospital in 2012 (22). The AHA data were derived primarily from the AHA Annual Survey of Hospitals. The AHA surveyed more than 6300 hospitals in the United States and its territories identified by AHA, state hospital associations, the Centers for Medicare and Medicaid Services, other national organizations, or government entities. The survey response rate was about 80%. For nonresponding hospitals, the AHA used data from its AHA registration database, the US Census Bureau, hospital accrediting bodies, and other organizations. Additional missing data were then estimated by using statistical imputation procedures. Details of the AHA data collection methods, imputation, and variable definitions are available in the AHA Annual Survey Database Fiscal Year 2012 Documentation Manual (22). We excluded AHA data for federal hospitals and all hospitals in the US territories. To obtain information about characteristics of populations in each hospital’s catchment area or hospital service area, we used the Dartmouth Atlas hospital service area file (23) to link each AHA hospital by zip code or combinations of zip codes to population data from the US Census Bureau’s 2008–2012 5-year American Community Survey (24). Because the American Community Survey did not have zip-code-level data on the number of physicians or hospital beds, we used each hospital’s county location to link hospitals to the Health Resources and Services Administration (HRSA) 2013–2014 data file with its county-level information (25). Details of the methods, definitions of service areas, and variables we used are available at the Dartmouth Atlas (23), US Census Bureau (24), and HRSA websites (25).

To identify which hospitals on the 2012 AHA list (22) were submitting data to the NSSP’s BioSense platform and the total annual ED visits for those hospitals, we collaborated with ASTHO in October 2014 to provide each state and local health department participating in the NSSP with a list of the hospitals in its jurisdiction that had at least one ED visit in 2012 and the total ED visits for each hospital according to the 2012 AHA database (22). We then asked the health department representatives which hospitals were submitting data to the NSSP. Fifty-one of 57 participating jurisdictions (38 states, 14 counties, and 5 cities; 89%) responded with information about the hospitals in their jurisdictions. Forty-eight jurisdictions identified specific hospitals from the AHA list that were submitting data to the NSSP. We used the ED visit information for the hospitals identified by those jurisdictions to examine ED visits by a wide range of geographic characteristics (as shown in the figures) and hospital and service area characteristics (as shown in the table). Three jurisdictions provided the total number of hospitals and the total annual ED visits in their jurisdiction combined, based on the AHA data, instead of providing hospital-specific information. These data did not allow examination of ED visits by hospital and service area characteristics. Instead, they were combined with information from the other 48 jurisdictions in the analysis of the geographic characteristics of the ED visits (as shown in the figures). For the purposes of this assessment, we assumed that hospitals in the 6 nonresponding jurisdictions were not submitting data to the platform.

To examine geographic differences in the representativeness of the NSSP’s BioSense platform ED data among the 10 HHS regions (http://www.hhs.gov/iea/regional/), excluding US territories, and among the 50 states and Washington, DC, we calculated the percentage of all hospital ED visits that were submitted to the NSSP and compared those percentages with the percentage at the national level. To examine differences in representativeness among hospitals or hospital service areas with different characteristics, for each hospital or service area characteristic, we compared the percentage of ED visits in the NSSP with the percentage of all US ED visits. For example, we compared the percentage of NSSP ED visits that were in children’s hospitals with the percentage of all US ED visits that were in children’s hospitals. For this analysis, we used the following hospital characteristics from the AHA database: the total annual ED visits per hospital (grouped into quintiles), status of Joint Commission on Accreditation of Healthcare Organizations (JCAHO) accreditation, teaching hospital status, trauma center designation, hospital ownership, and urban or rural location. We defined the hospitals as children’s hospitals if the hospital restricted admissions primarily to children, if the majority of services were for children, or if the hospital had the word “children” in its name. Hospital service area characteristics we examined were the percentage of residents in the hospital’s service area who were African American, Asian, Hispanic, or female; the percentage of residents below the poverty level; and the median age of residents in the service area (grouped into quintiles). County characteristics we examined were the number of hospital beds per 1000 residents and the number of physicians per 1000 residents (grouped into quintiles).
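The geographic comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the hospital records, region labels, and function names below are hypothetical and are not taken from the AHA file or from the SAS programs used in the assessment.

```python
from collections import defaultdict

# Hypothetical records: (HHS region label, annual ED visits, submits to NSSP?).
# These values are invented for illustration and are not from the AHA file.
HOSPITALS = [
    ("R1", 40_000, False),
    ("R1", 10_000, True),
    ("R4", 60_000, True),
    ("R4", 20_000, False),
    ("R9", 90_000, False),
    ("R9", 10_000, True),
]


def coverage_percent(records):
    """Percentage of all ED visits that occurred in submitting hospitals."""
    total = sum(visits for _, visits, _ in records)
    submitted = sum(visits for _, visits, submits in records if submits)
    return 100.0 * submitted / total


def coverage_by_region(records):
    """Coverage percentage computed separately for each region."""
    by_region = defaultdict(list)
    for record in records:
        by_region[record[0]].append(record)
    return {region: coverage_percent(rows) for region, rows in sorted(by_region.items())}


national = coverage_percent(HOSPITALS)
regional = coverage_by_region(HOSPITALS)
for region, pct in regional.items():
    # Positive differences mean the region is covered better than the nation overall.
    print(f"{region}: {pct:.1f}% ({pct - national:+.1f} points vs national)")
print(f"National: {national:.1f}%")
```

Weighting each hospital by its annual ED visits, rather than counting hospitals, mirrors the choice in the text to express representativeness in terms of visits.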

The purpose of this initial assessment of representativeness was to identify major differences between national ED visits and NSSP ED visits by geographic area, hospital characteristics, and hospital service area characteristics to plan initial program activities to improve representativeness. We set a difference of 5 percentage points between NSSP ED visits and national ED visit data as a practically significant threshold for guiding a first round of action. With this focus on practical significance, we elected not to use statistical inference procedures in this assessment. We used SAS version 9.3 (SAS Institute Inc, Cary, NC) to compute the percentages and the differences in percentages.
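As a rough illustration of how the 5-percentage-point threshold could be applied, the sketch below classifies each category of one characteristic. The percentages are the published Table 1 values for the Hispanic service-area quintiles; the function name, the labels, and the decision to treat a difference of exactly 5.0 points as meeting the threshold are assumptions made for this example, and the code is not the SAS program used in the assessment.

```python
THRESHOLD_POINTS = 5.0  # practical-significance threshold stated in the text


def classify(all_pct, nssp_pct, threshold=THRESHOLD_POINTS):
    """Return (difference in percentage points, label) for one category."""
    # Round to one decimal so floating-point noise does not affect the comparison.
    diff = round(nssp_pct - all_pct, 1)
    if diff >= threshold:
        label = "overrepresented"
    elif diff <= -threshold:
        label = "underrepresented"
    else:
        label = "similar"
    return diff, label


# Percent of residents in service area who are Hispanic (Table 1):
# quintile range -> (% of all US ED visits, % of NSSP ED visits)
HISPANIC_QUINTILES = {
    "0 - 2.9": (20.4, 25.5),
    "3 - 5.9": (20.0, 22.1),
    "6 - 11": (19.7, 24.6),
    "11.1 - 25.6": (20.2, 15.8),
    "25.7 - 95.5": (19.8, 12.0),
}

results = {name: classify(a, n) for name, (a, n) in HISPANIC_QUINTILES.items()}
for name, (diff, label) in results.items():
    print(f"{name:>12}: {diff:+.1f} points, {label}")
```

Because no statistical inference was used, the classification depends only on the size of the difference, not on the number of visits behind each percentage.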

Results

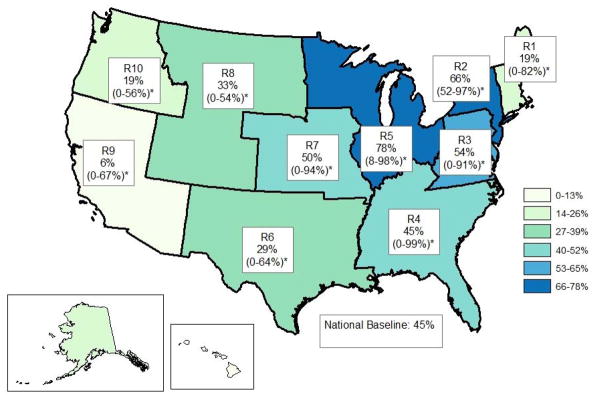

In the 50 states and Washington, DC, combined, 60,394,250 (~45%) of 134,600,959 nonfederal hospital ED visits nationwide were reported as having been submitted to the NSSP’s BioSense platform. These NSSP ED visit data were submitted from 1499 hospitals (~32%) out of a total of 4709 nonfederal hospitals that had at least one ED visit in 2012 according to the AHA file. The representativeness of the NSSP ED visits varied substantially by HHS region, with ED visits in regions 9 (6%) and 10 (19%) in the west and region 1 (19%) in New England being particularly underrepresented (Figure 1). In addition, ED visits in regions 6 and 8 (southern and northern plains) were more than 10 percentage points below the national level of 45%. Four regions were overrepresented in that they were 5 percentage points or more above the national percentage. Within each region, representativeness also varied among the states in the region, with 8 of the 10 regions having at least one state for which no ED visit data were submitted to the NSSP.
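As a check on the rounding, the two headline percentages follow directly from the counts reported in this paragraph:

$$
\frac{60{,}394{,}250}{134{,}600{,}959} \approx 0.449 \;(\approx 45\%), \qquad \frac{1499}{4709} \approx 0.318 \;(\approx 32\%)
$$

The gap between the two figures indicates that submitting hospitals had, on average, more annual ED visits than nonsubmitting hospitals.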

Figure 1.

Percentage of U.S. nonfederal hospital emergency department visits covered by the National Syndromic Surveillance Program in the 50 states and Washington, DC, by U.S. Department of Health and Human Services Region (R), October 2014

*Range among states within each HHS region is the percent of nonfederal hospital emergency department visits covered by the National Syndromic Surveillance Program. (For information about HHS regions: http://www.hhs.gov/iea/regional/)

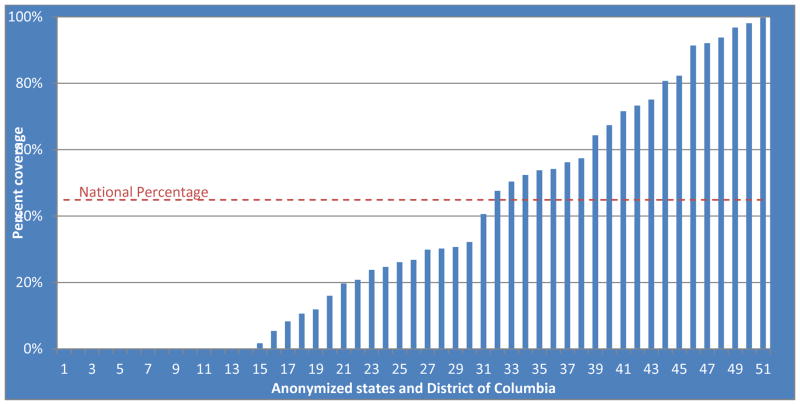

Nationally, representativeness also varied substantially by state, ranging from 0% to approximately 100% (Figure 2). Fourteen states were particularly underrepresented in that neither the state or local health department nor a hospital submitted ED data to the NSSP’s BioSense platform. ED visits in 16 additional states were more than 5 percentage points below the national level of 45%. In 6 states, more than 90% of all ED visits were submitted to the NSSP’s BioSense platform.

Figure 2.

Percentage of U.S. nonfederal hospital emergency department visits covered by the National Syndromic Surveillance Program in the 50 states, Washington, DC, and the nation, October 2014

The examination of representativeness of the NSSP nonfederal hospital ED visit data by hospital and hospital service area characteristics (Table 1) was based on 55,331,471 ED visits from 1412 hospitals identified by the 48 jurisdictions that provided hospital-specific information. For most characteristics of the hospitals and of hospital service areas, the distributions of hospital ED visits in the NSSP were similar to the distributions of all US hospital ED visits, that is, they differed by less than 5 percentage points. For example, 2.6% of all US hospital ED visits were in children’s hospitals, and 2.9% of NSSP ED visits were in children’s hospitals. Similarly, 87.7% of all US hospital ED visits were in JCAHO-accredited hospitals, and 89.1% of NSSP ED visits were in such accredited hospitals. The other hospital and service area characteristics for which distributions were similar included urban-rural location, median age of residents in the service area, percentage of residents in the service area who were female, percentage of residents in the service area below the poverty line, and number of hospital beds and physicians per 1000 residents in the county. However, NSSP ED visit data from hospitals and service areas with certain characteristics were underrepresented by 5 percentage points or more. Approximately 20% of all US hospital ED visits were in hospitals with total annual ED visits in the lowest quintile, whereas 14.6% of NSSP ED visits were in that category. NSSP ED visits also were underrepresented in hospitals with certified rural trauma centers and in hospitals with service areas that included a low percentage of residents who were African American, a high percentage of residents who were Asian American, or a high percentage of residents who were Hispanic. Private not-for-profit hospitals and hospitals with service areas with moderately high percentages of African Americans and with low percentages of Hispanics were overrepresented.

Table 1.

Nonfederal emergency department visits covered by the National Syndromic Surveillance Program compared with visits in all US nonfederal hospitals, 50 states and Washington, DC, by characteristics of the hospitals and of hospital service areas or counties, October 2014<sup>a</sup>

| Characteristics | All visits<sup>b</sup>, No. | All visits<sup>b</sup>, % | NSSP<sup>c</sup> visits, No. | NSSP<sup>c</sup> visits, % | Percentage difference<sup>d</sup> |
|---|---|---|---|---|---|
| Annual ED visits/hospital | | | | | |
| 1 – 25678 | 26,921,663 | 20.0 | 8,073,094 | 14.6 | **−5.4** |
| 25679 – 43122 | 26,936,662 | 20.0 | 11,156,119 | 20.2 | 0.2 |
| 43123 – 62329 | 26,945,089 | 20.0 | 11,348,756 | 20.5 | 0.5 |
| 62330 – 89174 | 26,936,026 | 20.0 | 12,413,248 | 22.4 | 2.4 |
| 89175 – 446581 | 26,861,519 | 20.0 | 12,340,254 | 22.3 | 2.3 |
| Children’s Hospital | | | | | |
| Yes | 3,535,650 | 2.6 | 1,589,401 | 2.9 | 0.3 |
| No | 131,065,309 | 97.4 | 53,742,070 | 97.1 | −0.3 |
| JCAHO Accreditation | | | | | |
| Yes | 118,065,720 | 87.7 | 49,285,387 | 89.1 | 1.4 |
| No | 16,535,239 | 12.3 | 6,046,084 | 10.9 | −1.4 |
| Teaching Hospital | | | | | |
| Yes | 22,487,017 | 16.7 | 10,202,898 | 18.4 | 1.7 |
| No | 112,113,942 | 83.3 | 45,128,573 | 81.6 | −1.7 |
| Trauma Center Designation | | | | | |
| Not Certified | 55,482,616 | 41.2 | 24,853,676 | 44.9 | 3.7 |
| Certified, Regional | 21,259,311 | 15.8 | 10,387,127 | 18.8 | 3.0 |
| Certified, Community | 22,765,195 | 16.9 | 9,977,857 | 18.0 | 1.1 |
| Certified, Rural | 17,051,307 | 12.7 | 4,170,790 | 7.5 | **−5.2** |
| Certified, Other | 3,026,177 | 2.2 | 501,903 | 0.9 | −1.3 |
| Unknown | 15,016,353 | 11.2 | 5,440,118 | 9.8 | −1.4 |
| Hospital Ownership | | | | | |
| Government, non-federal | 19,747,983 | 14.7 | 6,972,276 | 12.6 | −2.1 |
| Private, not-for-profit | 94,951,803 | 70.5 | 41,855,537 | 75.6 | **5.1** |
| Private, for-profit | 19,901,173 | 14.8 | 6,503,658 | 11.8 | −3.0 |
| Urban-rural Location | | | | | |
| Division Metropolitan | 32,011,646 | 23.8 | 15,557,122 | 28.1 | 4.3 |
| Metropolitan | 78,642,336 | 58.4 | 31,698,985 | 57.3 | −1.1 |
| Micropolitan | 16,143,972 | 12.0 | 5,948,143 | 10.8 | −1.2 |
| Rural | 7,803,005 | 5.8 | 2,127,221 | 3.8 | −2.0 |
| Percent of residents in service area who are African American | | | | | |
| 0 – 2 | 27,278,982 | 20.3 | 8,446,762 | 15.3 | **−5.0** |
| 2.1 – 5.7 | 26,950,515 | 20.0 | 9,774,435 | 17.7 | −2.3 |
| 5.8 – 11.7 | 26,666,787 | 19.8 | 11,035,065 | 19.9 | 0.1 |
| 11.8 – 22.5 | 27,139,130 | 20.2 | 14,255,184 | 25.8 | **5.6** |
| 22.6 – 84.3 | 26,565,545 | 19.7 | 11,820,025 | 21.4 | 1.7 |
| Percent of residents in service area who are Asian | | | | | |
| 0 – 0.8 | 27,534,083 | 20.5 | 11,292,213 | 20.4 | −0.1 |
| 0.9 – 1.7 | 26,732,962 | 19.9 | 11,363,375 | 20.5 | 0.6 |
| 1.8 – 3 | 27,385,597 | 20.3 | 13,503,947 | 24.4 | 4.1 |
| 3.1 – 5.7 | 26,351,942 | 19.6 | 10,978,950 | 19.8 | 0.2 |
| 5.8 – 63.5 | 26,596,375 | 19.8 | 8,192,986 | 14.8 | **−5.0** |
| Percent of residents in service area who are Hispanic | | | | | |
| 0 – 2.9 | 27,464,040 | 20.4 | 14,112,306 | 25.5 | **5.1** |
| 3 – 5.9 | 26,860,040 | 20.0 | 12,248,503 | 22.1 | 2.1 |
| 6 – 11 | 26,478,683 | 19.7 | 13,589,878 | 24.6 | 4.9 |
| 11.1 – 25.6 | 27,183,128 | 20.2 | 8,740,855 | 15.8 | −4.4 |
| 25.7 – 95.5 | 26,615,068 | 19.8 | 6,639,929 | 12.0 | **−7.8** |
| Median age of residents in service area (years) | | | | | |
| 21.8 – 35.9 | 28,044,028 | 20.8 | 10,540,715 | 19.1 | −1.7 |
| 36 – 38.1 | 26,070,207 | 19.4 | 10,538,087 | 19.0 | −0.4 |
| 38.2 – 40 | 26,840,174 | 19.9 | 11,213,098 | 20.3 | 0.4 |
| 40.1 – 42.4 | 26,972,186 | 20.0 | 11,925,329 | 21.6 | 1.6 |
| 42.5 – 63.5 | 26,674,364 | 19.8 | 11,114,242 | 20.1 | 0.3 |
| Percent of residents in service area who are female | | | | | |
| 26.8 – 50.2 | 29,546,942 | 22.0 | 9,527,611 | 17.2 | −4.8 |
| 50.3 – 50.8 | 30,358,304 | 22.6 | 11,448,525 | 20.7 | −1.9 |
| 50.9 – 51.2 | 21,058,636 | 15.6 | 10,054,317 | 18.2 | 2.6 |
| 51.3 – 51.8 | 27,241,376 | 20.2 | 12,502,809 | 22.6 | 2.4 |
| 51.9 – 58.7 | 26,395,701 | 19.6 | 11,798,209 | 21.3 | 1.7 |
| Percent of residents in service area below poverty line | | | | | |
| 1.1 – 10.9 | 26,925,855 | 20.0 | 11,699,602 | 21.1 | 1.1 |
| 11 – 14.1 | 27,628,191 | 20.5 | 12,850,386 | 23.2 | 2.7 |
| 14.2 – 16.4 | 26,279,282 | 19.5 | 12,221,101 | 22.1 | 2.6 |
| 16.5 – 19.2 | 27,564,933 | 20.5 | 9,773,707 | 17.7 | −2.8 |
| 19.3 – 43.4 | 26,202,698 | 19.5 | 8,786,675 | 15.9 | −3.6 |
| Number of hospital beds per 1000 residents in county | | | | | |
| 0 – 0.23 | 26,909,527 | 20.0 | 9,087,435 | 16.4 | −3.6 |
| 0.24 – 0.54 | 27,065,421 | 20.1 | 10,875,362 | 19.7 | −0.4 |
| 0.55 – 1.03 | 26,789,322 | 19.9 | 12,049,289 | 21.8 | 1.9 |
| 1.04 – 1.97 | 26,860,193 | 20.0 | 12,128,695 | 21.9 | 1.9 |
| 1.98 – 40.52 | 26,976,496 | 20.0 | 11,190,690 | 20.2 | 0.2 |
| Total number of physicians per 1000 residents in county | | | | | |
| 0 – 1.56 | 26,877,818 | 20.0 | 9,842,150 | 17.8 | −2.2 |
| 1.57 – 2.56 | 27,018,698 | 20.1 | 11,675,542 | 21.1 | 1.0 |
| 2.57 – 3.28 | 26,773,703 | 19.9 | 9,482,408 | 17.1 | −2.8 |
| 3.29 – 4.51 | 26,938,782 | 20.0 | 11,896,796 | 21.5 | 1.5 |
| 4.52 – 39.52 | 26,991,958 | 20.1 | 12,434,575 | 22.5 | 2.4 |

<sup>a</sup>Abbreviations: ED, emergency department; JCAHO, Joint Commission on Accreditation of Healthcare Organizations; NSSP, National Syndromic Surveillance Program.

<sup>b</sup>Based on 2012 American Hospital Association (AHA) list of hospitals and hospital ED visits.

<sup>c</sup>Based on jurisdictions’ reports of hospitals’ submission of data to the NSSP platform in October 2014.

<sup>d</sup>Difference between the All Visits percentage and the NSSP Visits percentage. Differences greater than 5 percentage points are in bold font.
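The percentage columns in Table 1 can be recomputed from the visit counts. The sketch below does this for the Hospital Ownership rows. The counts are copied from the table; the function name is hypothetical, and taking the difference between the rounded percentages (rather than the unrounded ones) is an assumption that happens to reproduce the published column.

```python
# Hospital Ownership rows from Table 1: category -> (all visits, NSSP visits)
OWNERSHIP = {
    "Government, non-federal": (19_747_983, 6_972_276),
    "Private, not-for-profit": (94_951_803, 41_855_537),
    "Private, for-profit": (19_901_173, 6_503_658),
}


def share_table(counts):
    """Return category -> (% of all visits, % of NSSP visits, difference)."""
    all_total = sum(all_visits for all_visits, _ in counts.values())
    nssp_total = sum(nssp_visits for _, nssp_visits in counts.values())
    table = {}
    for category, (all_visits, nssp_visits) in counts.items():
        all_pct = round(100.0 * all_visits / all_total, 1)
        nssp_pct = round(100.0 * nssp_visits / nssp_total, 1)
        # Assumption: the difference is taken between the rounded percentages.
        table[category] = (all_pct, nssp_pct, round(nssp_pct - all_pct, 1))
    return table


rows = share_table(OWNERSHIP)
for category, (all_pct, nssp_pct, diff) in rows.items():
    print(f"{category}: {all_pct}% of all visits, {nssp_pct}% of NSSP visits, {diff:+.1f} points")
```

The denominators here are the column totals for the characteristic, which equal 134,600,959 for all visits and 55,331,471 for NSSP visits with hospital-specific information.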

Discussion

The main finding of this assessment was that although nonfederal hospital ED visit data submitted to the NSSP’s BioSense platform were representative by many hospital characteristics and were likely to be representative for some states, ie, states in which more than 90% of ED data were submitted, the NSSP data were not geographically representative at the national level or for most HHS regions. Hospital ED visits from half of the regions of the United States and the majority of states were substantially underrepresented. Further, although the NSSP’s ED visit data were representative of many hospital characteristics and hospital service areas, they underrepresented ED visits in those hospitals with the fewest annual ED visits, those with rural trauma centers, and those serving populations with high percentages of Asian Americans or Hispanics. There are no published evidence-based standards on what percentages of hospital ED visits in a geographic region or population are required for a syndromic surveillance system to be representative for the broad range of public health uses of the data. Ideally, the combination of national, regional, state, and local syndromic surveillance systems would include sufficient percentages of hospital ED visits in all geographic locations and populations to be able to identify adverse health events and to monitor population health during mass gatherings or disasters no matter where they occur, including those in isolated populations. Depending on the environmental hazard, health condition, population at risk, and use of ED services in a given location, that capability might require 100% of ED visits. Without such coverage, public health response may be delayed or inadequate. On the other hand, public health monitoring for a broad range of health conditions for which people seek emergency care can be accomplished with much lower levels of coverage (1, 4, 5, 7, 8, 26, 27). 
While it is not critical that all ED visit data reside in a single national platform, it is critical that public health officials at all levels be able to share information rapidly, because outbreaks, adverse conditions, and public health events frequently cross jurisdictional boundaries. Having data on a single platform—along with shared tools, services, and other resources—can substantially improve capacity for rapidly sharing information. The NSSP currently has goals of increasing national coverage from 45% to 75% by 2017 and substantially reducing underrepresentation in the geographic areas and populations identified by this assessment. On the basis of future publications on syndromic surveillance and the experience of the NSSP, those goals may be revised.

Compared with the Agency for Healthcare Research and Quality’s 2011 National Emergency Department Sample (NEDS) (26), the NSSP has broader but less representative ED visit data. The NSSP data included approximately 60 million ED visits (about 45% of all US hospital ED visits) from 1499 hospitals in 36 states and the District of Columbia, whereas NEDS covered 29 million ED visits from hospitals in 30 states (26). However, the ED visit data in NEDS are from an approximately 20% stratified sample of US hospital-based EDs, and with weighting, the NEDS data can be used to calculate national estimates pertaining to the approximately 131 million ED visits in 2011 (26). Given the substantial variation in representativeness of NSSP data geographically, it is unclear whether NSSP nonfederal hospital ED data could be used to produce national estimates, even with the use of weighting for both under- and over-representation. NEDS, on the other hand, was not developed for syndromic surveillance purposes, and it does not collect data in near real time. When we began our assessment of the representativeness of NSSP in 2014, the most recent data from NEDS were from 2011.

The design of this assessment and the findings of this report are subject to important limitations. We used the total annual number of ED visits per hospital from the 2012 AHA database in our analyses for an assessment of hospitals’ submissions of data to the NSSP in October 2014. Between 2012 and 2014, new hospitals may have opened, and consequently, ED visits at these hospitals were not included in this assessment. During that period, the number of visits and hospital characteristics may have changed. In addition, there was variation among hospitals in the definition of their reporting year, eg, calendar year for some and fiscal year for others. Also, for 222 of the US nonfederal hospitals in our assessment, the numbers of ED visits were AHA estimates because those hospitals had not provided data to AHA. As a result of these limitations, the numbers and percentages presented in this report are estimates. Finally, no NSSP program data were used and the AHA database does not include patient record data. Therefore, we were unable to assess completeness or quality of the NSSP data or examine other aspects of representativeness in our assessment, such as the volume of ED visits for various syndromes.

The AHA and the linked databases did, however, enable comparisons of the universe of US nonfederal hospital ED visits with the hospital ED visits in the NSSP. This was done by use of the same data sources for all comparisons. No NSSP program data were used except for the information identifying which AHA hospitals contributed data to NSSP, so no potential errors were introduced by comparing AHA data with NSSP data. This assessment provides baseline estimates on the representativeness of NSSP data and identifies underrepresented geographic areas, types of hospitals, and hospital service areas. These findings may be used by NSSP stakeholders to identify state and local health departments and hospitals to recruit for participation in the NSSP in order to improve representativeness. Future assessments of NSSP’s representativeness may include examination of improvements in coverage of currently underrepresented geographic areas, hospitals, and service area populations; examination of statistical methods to assess representativeness and to weight NSSP ED visit data to account for over- and under-representation in order to calculate national and regional estimates; and comparison of actual numbers of ED visits received by the NSSP’s BioSense platform with the estimates based on AHA.
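To make the idea of weighting for over- and under-representation concrete, the following is a minimal sketch of simple post-stratification weights for a single characteristic, using the Hospital Ownership counts from Table 1. This was not part of the assessment: the function name is hypothetical, a single stratification variable is assumed, and whether such weights would yield valid national estimates is exactly the open question raised above.

```python
# Hospital Ownership counts from Table 1: stratum -> ED visits
ALL_VISITS = {
    "Government, non-federal": 19_747_983,
    "Private, not-for-profit": 94_951_803,
    "Private, for-profit": 19_901_173,
}
NSSP_VISITS = {
    "Government, non-federal": 6_972_276,
    "Private, not-for-profit": 41_855_537,
    "Private, for-profit": 6_503_658,
}


def poststratification_weights(population, sample):
    """Weight per stratum = population share divided by sample share."""
    pop_total = sum(population.values())
    sample_total = sum(sample.values())
    weights = {}
    for stratum, pop_count in population.items():
        sample_count = sample[stratum]
        if sample_count == 0:
            # A stratum with no sampled visits cannot be reweighted.
            raise ValueError(f"no sampled visits in stratum {stratum!r}")
        weights[stratum] = (pop_count / pop_total) / (sample_count / sample_total)
    return weights


weights = poststratification_weights(ALL_VISITS, NSSP_VISITS)
for stratum, weight in weights.items():
    # Weights above 1 scale up underrepresented strata; below 1 scale down.
    print(f"{stratum}: weight {weight:.2f}")
```

The error raised for an empty stratum corresponds to the situation of the states with no NSSP data, which weighting alone cannot repair.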

Conclusion

This assessment of the representativeness of NSSP nonfederal hospital ED visits found that NSSP ED visit data were representative in some locations and by many characteristics but were not very representative nationally and in many regions and states. The NSSP could improve representativeness by increasing participation by state and local health departments in specific geographic areas and among hospitals with the fewest annual ED visits, those with rural trauma centers, and those serving populations with high percentages of Asian Americans or Hispanics.

Acknowledgments

The authors thank Dr. Joseph Gibson, Marion County Public Health Department, Indianapolis, Indiana, and Dr. Paula Yoon and Mr. Peter Hicks, Division of Health Informatics and Surveillance, CDC, for their contributions to this assessment.

Footnotes

Disclaimer: The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention.

References

- 1. Baer A, Elbert Y, Burkom HS, et al. Usefulness of syndromic data sources for investigating morbidity resulting from a severe weather event. Disaster Med Public Health Prep. 2011;5(1):37–45. doi: 10.1001/dmp.2010.32.

- 2. Bellazzini MA, Minor KD. ED syndromic surveillance for novel H1N1 spring 2009. Am J Emerg Med. 2011;29(1):70–74. doi: 10.1016/j.ajem.2009.09.009.

- 3. Fan S, Blair C, Brown A, et al. A multi-function public health surveillance system and the lessons learned in its development: the Alberta Real Time Syndromic Surveillance Net. Can J Public Health. 2010;101:454–458. doi: 10.1007/BF03403963.

- 4. Josseran L, Fouillet A, Caillère N, et al. Assessment of a syndromic surveillance system based on morbidity data: results from the Oscour® Network during a heat wave. PLoS One. 2010;5(8):e11984. doi: 10.1371/journal.pone.0011984.

- 5. Hope KG, Merritt TD, Durheim DN, et al. Evaluating the utility of emergency department syndromic surveillance for a regional public health service. Commun Dis Intell. 2010;34:310–318. doi: 10.33321/cdi.2010.34.31.

- 6. O’Connell EK, Zhang G, Leguen F, et al. Innovative uses for syndromic surveillance. Emerg Infect Dis. 2010;16(4):669–671. doi: 10.3201/eid1604.090688.

- 7. Hall G, Krahn T, Majury A, et al. Emergency department surveillance as a proxy for the prediction of circulating respiratory viral disease in Eastern Ontario. Can J Infect Dis Med Microbiol. 2013;24:150–154. doi: 10.1155/2013/386018.

- 8. Hiller KM, Stoneking L, Min A, et al. Syndromic surveillance for influenza in the emergency department – a systematic review. PLoS One. 2013;8(9):e73832. doi: 10.1371/journal.pone.0073832.

- 9. Smith GE, Bawa Z, Macklin Y, et al. Using real-time syndromic surveillance systems to help explore the acute impact of the air pollution incident of March/April 2014 in England. Environ Res. 2015;136:500–504. doi: 10.1016/j.envres.2014.09.028.

- 10. Ziemann A, Rosenkötter N, Garcia-Castrillo Riesgo L, et al. A concept for routine emergency-care data-based syndromic surveillance in Europe. Epidemiol Infect. 2014;142(11):2433–2446. doi: 10.1017/S0950268813003452.

- 11.Chretien J-P, Tomich NE, Gaydos JC, et al. Real-time public health surveillance for emergency preparedness. Am J Public Health. 2009;491 99(8):1360–1363. doi: 10.2105/AJPH.2008.133926. http://dx.doi.org/10.2105/AJPH.2008.133926. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Watkins SM, Perrotta DM, Stanbury M, et al. State-level emergency preparedness and response capabilities. Disaster Med Public Health Prep. 2011;5(S1):S134–S142. doi: 10.1001/dmp.2011.26. http://dx.doi.org/10.1001/dmp.2011.26. [DOI] [PubMed] [Google Scholar]

- 13.Buehler JW, Whitney EA, Smith D, et al. Situational uses of syndromic surveillance. Biosecur Bioterror. 2009;7(2):165–177. doi: 10.1089/bsp.2009.0013. http://dx.doi.org/10.1089/bsp.2009.0013. [DOI] [PubMed] [Google Scholar]

- 14.Paterson BJ, Durrheim DN. The remarkable adaptability of syndromic surveillance to meet public health needs. J Epidemiol Glob Health. 2013;3(1):41–47. doi: 10.1016/j.jegh.2012.12.005. http://dx.doi.org/10.1016/j.jegh.2012.12.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.McCloskey B, Endericks T, Catchpole M, et al. London 2012 Olympic and Paralympic Games: public health surveillance and epidemiology. Lancet. 2014;383(9934):2083–2089. doi: 10.1016/S0140-6736(13)62342-9. http://dx.doi.org/10.1016/S0140-6736(13)62342-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Samoff E, Waller A, Fleischauer A, et al. Integration of syndromic surveillance data into public health practice at state and local levels in North Carolina. Public Health Rep. 2012;127(3):310–317. doi: 10.1177/003335491212700311. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Centers for Disease Control and Prevention. Meaningful Use. CDC website; [Accessed August 17, 2015]. http://www.cdc.gov/ehrmeaningfuluse/introduction.html. Last updated October 22, 2012. [Google Scholar]

- 18.Centers for Disease Control and Prevention. National Syndromic Surveillance Program. CDC website; [Accessed March 20, 2015]. http://www.cdc.gov/nssp/. Last updated December 4, 2015. [Google Scholar]

- 19.Wenger E, McDermott R, Snyder WM. Cultivating Communities of Practice. Boston, MA: Harvard Business School Press; 2002. [Google Scholar]

- 20.European Centre for Disease Prevention and Control. Data Quality Monitoring and Surveillance System Evaluation–A Handbook of Methods and Applications. Stockholm, Sweden: ECDC; 2014. [Google Scholar]

- 21.Centers for Disease Control and Prevention. Updated guidelines for evaluating public health surveillance systems: recommendations from the guidelines working group. MMWR Recomm Rep. 2001;50(RR-13):1–35. [PubMed] [Google Scholar]

- 22.American Hospital Association (AHA) ANNUAL SURVEY DATA-BASE™, FY2012. Copyrighted and licensed by Health Forum LLC. American Hospital Association company; Chicago: 2014. [Accessed March 2014]. Fiscal Year 2012 Documentation Manual. http://www.ahadataviewer.com/book-cd-products/AHA-Survey/ [Google Scholar]

- 23.Dartmouth Atlas of Healthcare. Geographic Crosswalks and Boundary Files. Zip Code Cross Walks; Lebanon, NH: 2012. [Accessed November 7, 2014]. http://www.dartmouthatlas.org/tools/downloads.aspx?tab=39/ [Google Scholar]

- 24.US Census Bureau. American Community Survey, 2012 American Community Survey 5-Year Estimates, Tables B01001, B01002, B01003, B03002, S1701, and S1702. generated by Alejandro Pérez using American FactFinder; [Accessed September 22, 2014]. http://factfinder2.census.gov. [Google Scholar]

- 25.Area Health Resources Files (AHRF) US Department of Health and Human Services, Health Resources and Services Administration, Bureau of Health Workforce website; 2013–2014. [Accessed September 23, 2014]. http://ahrf.hrsa.gov/download.htm. [Google Scholar]

- 26.HCUP Nationwide Emergency Department Sample (NEDS) Healthcare Cost and Utilization Project (HCUP) Agency for Healthcare Research and Quality; Rockville, MD: 2011. [Accessed January 2014]. www.hcup-us.ahrq.gov/nedsoverview.jsp/ [Google Scholar]

- 27.Wang D, Zhao W, Wheeler K, et al. Unintentional fall injuries among US children: a study based on the National Emergency Department Sample. Int J Inj Contr Saf Promot. 2013;20(1):27–35. doi: 10.1080/17457300.2012.656316. http://dx.doi.org/10.1080/17457300.2012.656316. [DOI] [PubMed] [Google Scholar]