ABSTRACT

Since the introduction of Modernising Medical Careers in 2005, the electronic portfolio (ePortfolio) and workplace-based assessments (WPBAs) have become integral, yet anecdotally controversial, components of postgraduate medical training. In this multi-centre, survey-based study, we examined the opinions of core medical trainees and their trainers in the north-west London region regarding the ePortfolio and WPBAs. Our results demonstrate mixed feelings regarding these tools, with 60% of trainees stating that their training had not benefited from the use of an ePortfolio. Of the trainers, 53% felt that feedback sessions with their trainees were useful; however, 70% cited difficulties in fitting the required number of assessments within their clinical schedule. Overall, if implemented correctly, the ePortfolio and WPBAs are potentially powerful tools in the education and development of trainee doctors. However, improvements in mentoring and feedback may be needed to experience the full benefits of this system.

KEYWORDS: ePortfolio, workplace-based assessments, feedback, core medical training

Introduction

In recent years, the assessment of doctors’ performance has become increasingly objective, with a focus on demonstrating key competencies during workplace-based assessments (WPBAs) and recording them in the form of an electronic portfolio (ePortfolio). Such assessments most commonly involve discussing a patient whom the trainee has just seen during an acute admission to the hospital or an inpatient on a ward. Other forms of WPBA include directly observing a trainee examining a patient, or carrying out a practical procedure such as a lumbar puncture or chest drain insertion, otherwise known as a ‘direct observation of procedural skill’ (DOPS). Following the introduction of ‘Modernising Medical Careers’ (MMC) in 2005, the ePortfolio and WPBAs have become integral, yet anecdotally controversial, components of postgraduate medical training. Before 2005, however, assessment of doctors in training consisted of less formal interactions with trainers, with little or no centralised control over what type of assessment or indeed how many were required of trainees. Some would argue that this approach allowed for a much more flexible, practical approach to teaching and training. Nevertheless, one of the criticisms of this system was that, in the absence of standardised assessment methods, it was increasingly difficult to ensure objectively that all trainees possessed the appropriate skills and competencies expected at their stage of training. As stated in the 2010 GMC guidance,1 the role of WPBAs is the ‘assessment of competence based on what the trainee actually does in the workplace’.

Although few would doubt the need for such assessments, their validity and the manner in which they are recorded continue to be the subject of much debate, particularly because of the lack of definitive evidence linking such assessments to improved clinical performance.2 In addition, the increasingly competitive nature of specialty training has left some individuals feeling ‘threatened’ by the current assessment and feedback process and its implications for their career progression.1

Acknowledging these concerns, the GMC has emphasised additional components of WPBAs, including stimulating formative feedback as well as self-directed learning and reflective practice. Trainee–trainer meetings are also crucial to the success of the WPBA system, providing an opportunity for direct verbal feedback and the raising of any concerns, as well as a chance to discuss long-term educational and professional objectives and whether they are being met. The ePortfolio supports this process by providing a secure record of appraisals and workplace assessments. It also provides a platform for reflective practice and facilitates the personal development plan (PDP), a record of the trainee's self-determined goals that they would aim to achieve during their period of training. Much like WPBAs, the ePortfolio polarises opinion; there is some evidence that it facilitates better understanding among learners,3 whereas other studies demonstrate that some perceive the ePortfolio to be a ‘hoop-jumping exercise’, rather than a meaningful educational tool.4 Core medical trainees (CMTs), like trainees in other specialties, are expected to demonstrate attainment of certain competencies over their 2-year training programme, before being deemed eligible to take up medical positions. On the basis of continuing feedback, the structure of their ePortfolio was recently revised in 2012,5 with the aim of improving the quality and quantity of feedback that educational supervisors are able to give to their CMTs.

An electronic portfolio of assessments and competencies is not unique to the medical profession, being commonly employed in other fields such as pharmacy and nursing. Nevertheless, trainee opinions of the ePortfolio within the literature are limited, and those of their trainers even more so. We therefore carried out a multi-centre survey across five NHS trusts in north-west London (Fig 1), aimed at assessing CMT and trainer opinions of the ePortfolio and WPBAs.

Fig 1. The five surveyed NHS trusts in north-west London.

Methods

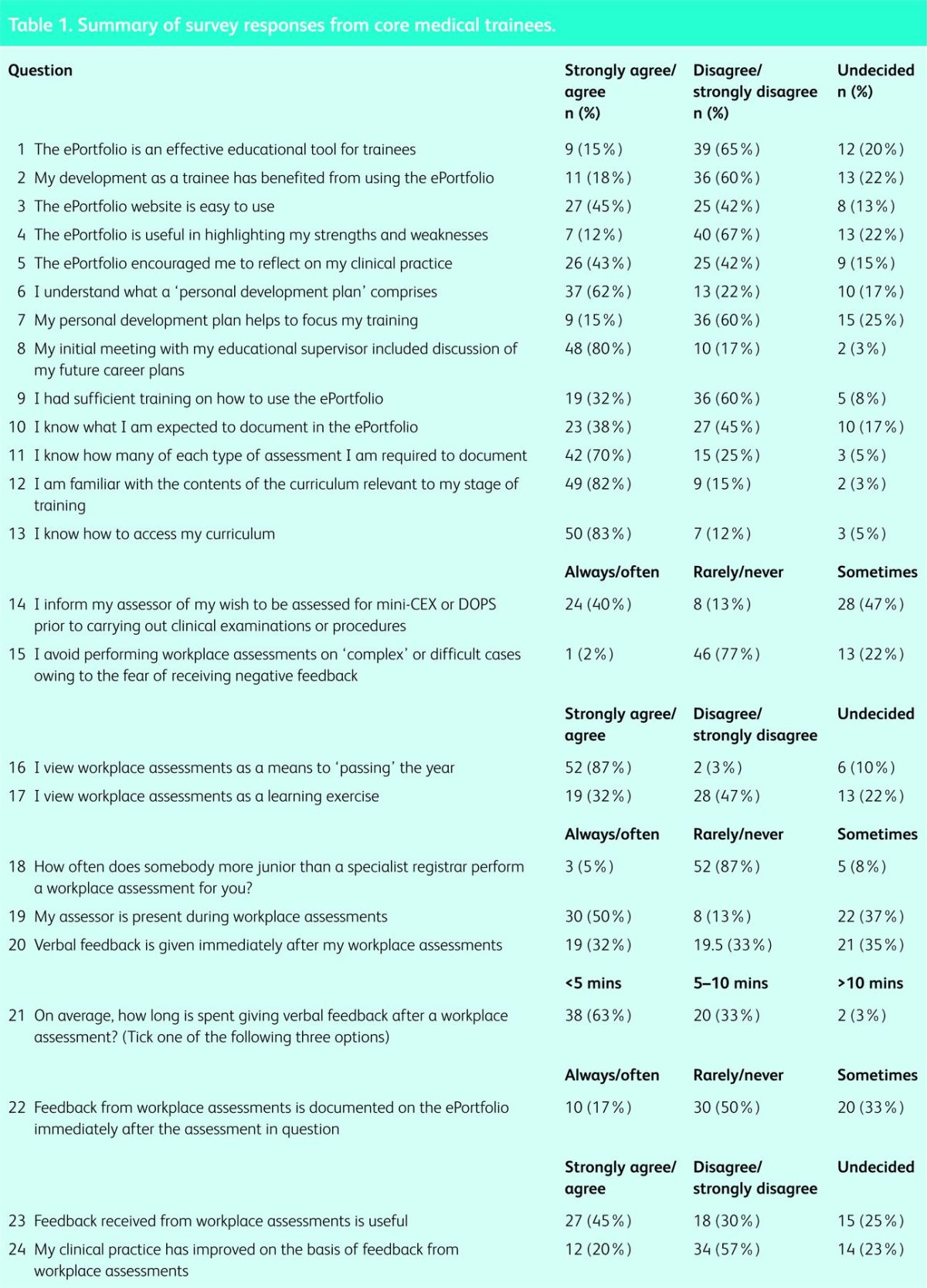

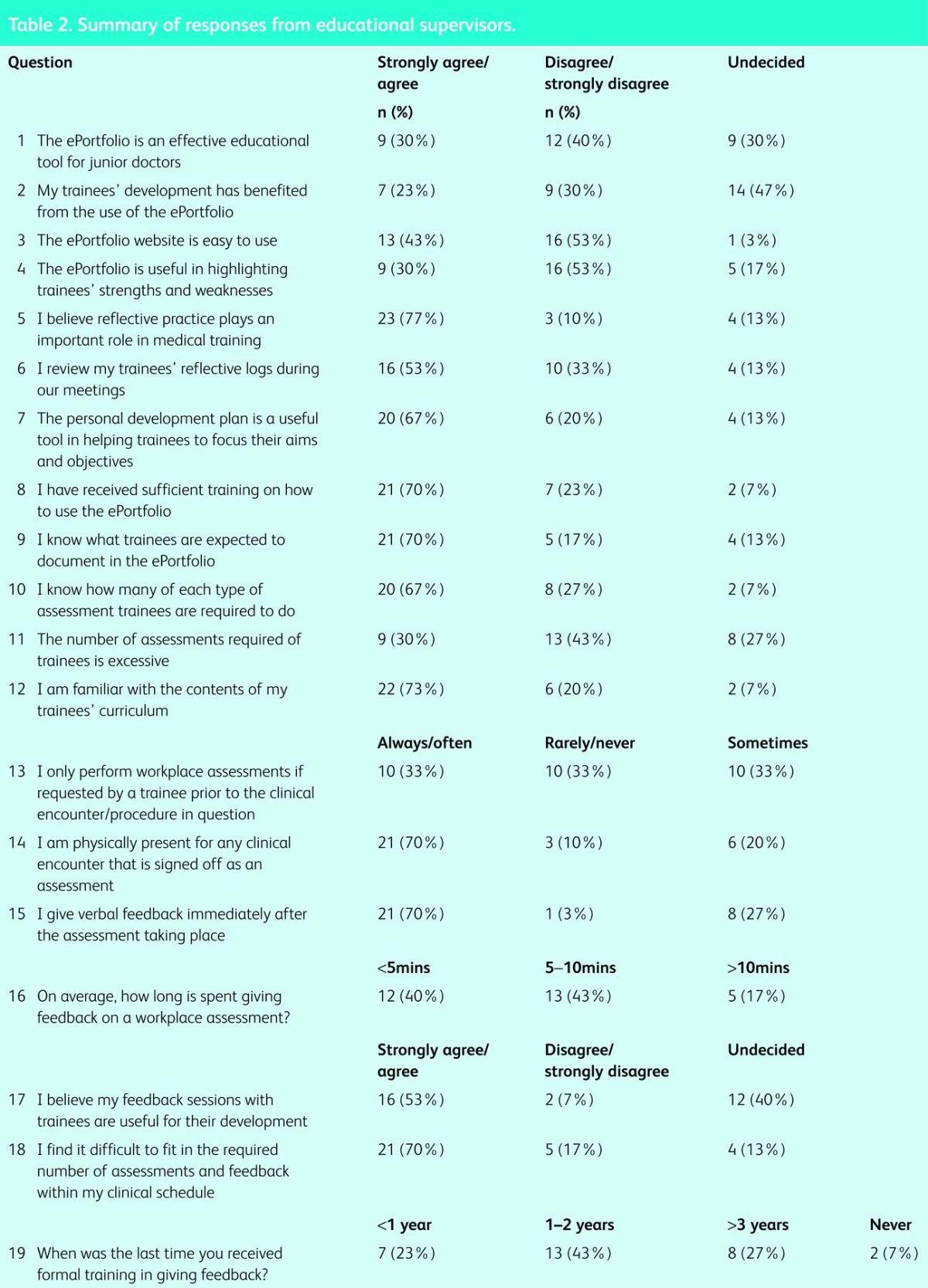

Two paper-based surveys were produced. The first (Table 1) was aimed at the trainees (CMTs) and contained 24 questions that addressed several areas: whether the ePortfolio is serving its purpose, familiarity of trainees with the objective of the ePortfolio, and the nature and perceived value of workplace assessments. The second questionnaire (Table 2) was completed by educational supervisors and comprised 19 questions covering the same domains as the CMT survey. Responses were collected from both groups over a 3-month period between February and April 2013. An initial pilot survey was conducted among 30 foundation year 2 (FY2) trainees at Hillingdon Hospital before the final questionnaire was produced, in order to highlight any necessary changes or additions to the content.

Table 1. Summary of survey responses from core medical trainees.

Table 2. Summary of responses from educational supervisors.

Hard copies of the survey were distributed by hand to CMTs (including both core training year 1 and 2) at central teaching sessions attended by the north-west London trainees. For practical reasons, the same method was not employed for the educational supervisors; instead, hard copies of the questionnaire were posted directly to the consultants at their NHS trust addresses, with replies being posted back to one of the co-authors (AT). To ensure anonymity and therefore increase the potential number of total respondents, no demographic data were requested when completing the surveys.

The collected data were qualitative, and therefore no statistical analysis was performed.

Results

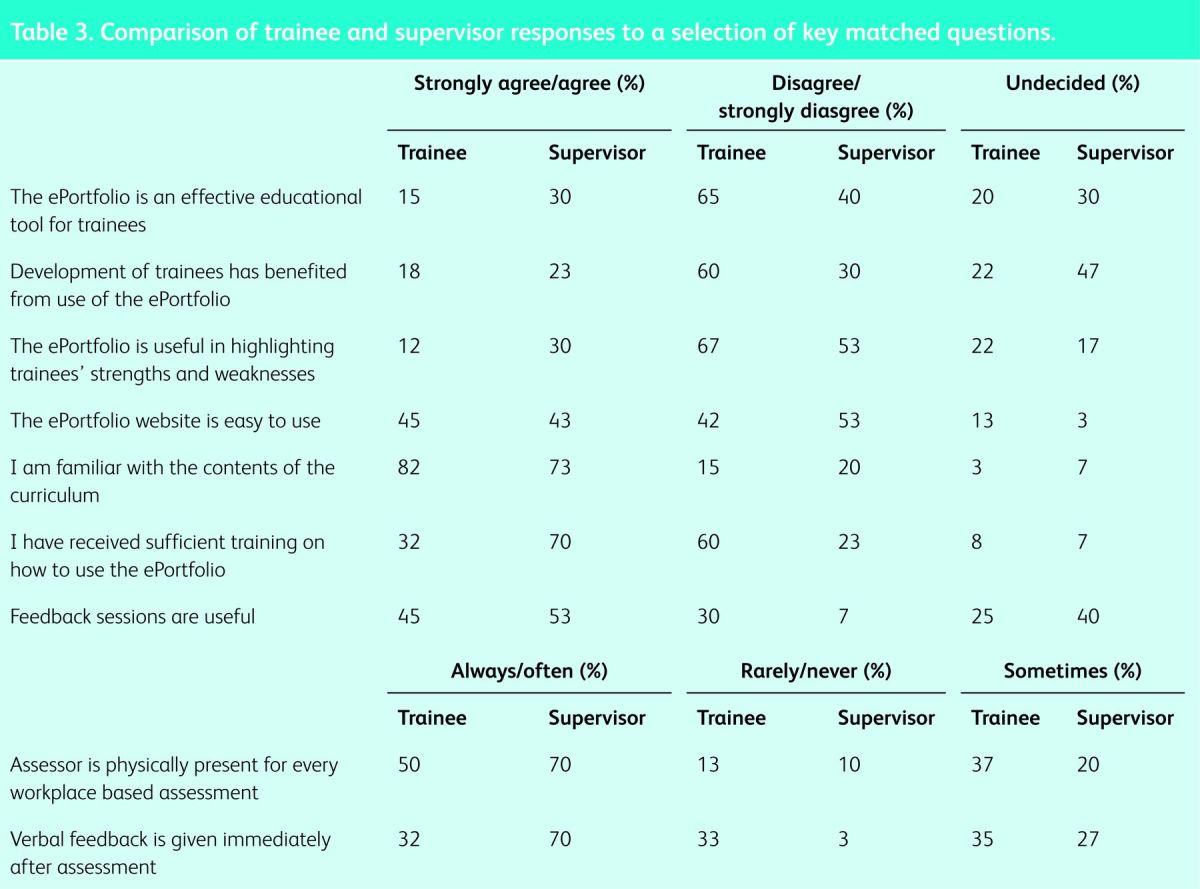

From the total of 158 CMTs in north-west London (London Deanery data), 60 responses were received (38% response rate), and of the 62 educational supervisors contacted, 30 completed and returned the questionnaire (48% response rate). The results from these two groups are summarised in Tables 1 and 2, respectively, while a comparison of trainee and supervisor responses to a selection of key matched questions is given in Table 3.

Table 3. Comparison of trainee and supervisor responses to a selection of key matched questions.

On the basis of trainee responses, 40 of the 60 respondents (67%) felt that the ePortfolio failed to highlight their strengths and weaknesses; overall, 36 (60%) did not feel that their training had benefited from its use. In addition, 39 respondents (65%) also felt that the ePortfolio was ineffective as an educational tool. Looking at workplace assessments, 52 trainees (87%) viewed workplace assessments as a means to ‘passing the year’ rather than as a meaningful educational exercise. In addition, only 12 of the 60 trainees (20%) felt that feedback from their assessments had improved their clinical practice.
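As a check on the arithmetic, the response rates and trainee percentages reported above can be re-derived from the stated counts. The following is a minimal sketch rather than part of the study's methods: the helper `percentage` and the dictionary labels are illustrative names introduced here, and rounding to the nearest whole number is an assumption about how the published figures were produced.

```python
def percentage(count: int, total: int) -> int:
    """Return count/total as a percentage rounded to the nearest whole number."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total)


# Response rates: responses received out of those eligible or contacted.
response_rates = {
    "trainees": percentage(60, 158),
    "supervisors": percentage(30, 62),
}

# Trainee counts out of 60 respondents, as stated in the text.
# The labels are shorthand for illustration, not the survey wording.
trainee_counts = {
    "did not highlight strengths and weaknesses": 40,
    "training had not benefited": 36,
    "ineffective as an educational tool": 39,
    "assessments a means of passing the year": 52,
    "feedback improved clinical practice": 12,
}
trainee_percentages = {
    label: percentage(count, 60) for label, count in trainee_counts.items()
}

for group, rate in response_rates.items():
    print(f"{group} response rate: {rate}%")
for label, pct in trainee_percentages.items():
    print(f"{label}: {pct}%")
```

Under these assumptions, the computed values agree with the percentages quoted for the trainee group and for both response rates.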

The educational supervisors surveyed were slightly less critical of the ePortfolio and workplace assessments, but they still expressed concerns. Only seven respondents (24%) agreed that the development of their trainees had benefited from use of the ePortfolio, whereas 31% felt that the ePortfolio had been useful in highlighting the strengths and weaknesses of the CMTs. The educational supervisors appear to be more positive regarding feedback sessions than their trainees, with 16 (53%) agreeing that feedback sessions are beneficial for trainee development. However, 22 of the 30 respondents (72%) felt that it was difficult to accommodate the required number of assessments within their daily clinical schedule.

Discussion

The results of this survey show that there continue to be mixed feelings regarding the ePortfolio and WPBAs. Both trainees and trainers appear to be familiar with the content of the CMT curriculum and how to access it. A large majority of the trainees also stated that their career plans were discussed at their initial educational meeting, all of which is encouraging. Nevertheless, approximately two-thirds of trainees and half of trainers questioned stated that the ePortfolio did not adequately highlight strengths and weaknesses, supporting continuing concerns over its role as a valid educational tool.

The ePortfolio: serving its purpose?

The ePortfolio was designed with the intention of supporting trainees’ development and learning by providing a platform for recording assessments, feedback and reflective practice. Through a process of continual re-evaluation, the ePortfolio has been modified to make it less of a ‘tick-box exercise’ and to allow more opportunities for reflection and constructive feedback on where trainees can potentially improve. The responses from trainees and trainers suggest that the ePortfolio is failing to serve its purpose fully. As our results show, a considerable number of CMTs and supervisors felt that the ePortfolio was not useful in highlighting the strengths and weaknesses of the trainees, which is concerning given that this is one of its key roles. The majority of CMTs and their supervisors do not feel that trainees’ development has benefited from use of the ePortfolio. To our knowledge, only one other survey-based study has looked at opinions of the ePortfolio among CMTs6 and, interestingly, its findings appear to echo our own. On the basis of a sample of CMTs in the north-west of England, Johnson et al 6 concluded that the ePortfolio failed to demonstrate the clinical competency of the trainees, nor did it facilitate rapid feedback.

Although only 15% of trainees and 30% of trainers who completed our survey felt that the ePortfolio was an effective educational tool, evidence in the literature seems to demonstrate that, if implemented well, portfolios can be effective in supporting professional, self-directed learning. A questionnaire of 95 FY2 doctors demonstrated that the ePortfolio did support educational processes,7 whereas a survey within obstetrics and gynaecology found that trainees were more likely to undertake self-directed learning if using a portfolio.8 Webb et al 3 surveyed 40 surgical trainees, among whom 30 felt that the ePortfolio improved their understanding of their curriculum, providing further support for its use in the postgraduate education of medical professionals.

Current evidence appears to support strongly the role of a mentor in trainees’ interaction with the portfolio and their engagement in reflective practice, largely through regular meetings and effective delivery of feedback.3,9 Our own data show that just under half of trainees questioned thought that the feedback received from assessments was useful and that the ePortfolio encouraged them to reflect on their practice. A study of 44 general practice trainees demonstrated poor compliance with the ePortfolio in the absence of trainer support,10 whereas another study within general practice found that portfolio users with a supportive mentor were more likely to undertake reflective practice.11 Interestingly, a survey of pharmacists indicated that some trainees had fears that the information disclosed on the portfolio might be used ‘against them’, therefore potentially inhibiting reflective practice.12 This is a concern that is occasionally voiced by trainees within medical specialties, perhaps indicating that more clarity is required on how information within the portfolio could potentially be used.

Despite the potential for portfolios to support learning and to facilitate the development of trainees, our surveys showed that certain practical issues, such as ease of use and time constraints, continue to have a negative impact on both the trainee and trainer experience. When asked whether the ePortfolio was ‘easy to use’, only 43% of trainees and 45% of trainers ‘strongly agreed’ or ‘agreed’ with this statement. When asked about training on how to use the ePortfolio, we saw a clear difference in opinion between trainees and supervisors, with just 32% of trainees stating that training had been sufficient compared with 70% of the supervisors. Although these figures may suggest better levels of training among educational supervisors, the perceived lack of training among educational supervisors is still a common complaint among trainees, with a study of foundation trainees finding that just over 50% of those surveyed felt that their educational supervisor was not sufficiently trained to use the ePortfolio.13 The practicalities of incorporating an ePortfolio system into a busy clinical schedule have long been cited as a reason for poor user compliance. A large study of 539 surgical trainees showed that nearly two-thirds felt that their online portfolio had a negative impact on their training opportunities because of the time required to complete assessments.14 Similarly, a sample of GP trainees cited the lack of sufficient protected time as a reason for poor compliance with the ePortfolio. As our results demonstrate, the trainers also struggle with time constraints, with 70% claiming difficulties in fitting the required number of assessments for their trainees into their daily clinical schedule, a finding supported by other groups.15,16

Workplace-based assessments: do they work?

WPBAs have become the core of competency assessment in modern medicine. With the advent of revalidation, they will play an ever more important role in postgraduate medical training. Despite our reliance on this method of assessment, many still question its validity, given the lack of any clear evidence linking WPBAs to improved clinical performance.2

Trainee opinions on WPBAs are difficult to interpret because their understanding of the WPBA's intended objective appears to vary greatly. Indeed many view WPBAs as a summative process that enables them to ‘pass’ through to the next level of their training, while very few see the educational benefits that such assessments may have. Our survey data support this, with only a third of trainees viewing WPBAs as a learning exercise, and a large majority seeing their role as a means of ‘passing the year’. Results from a survey of internal medicine residents showed that, despite acknowledging the potential educational impact of WPBAs, trainees felt that their use as summative assessments also limited their value as an educational tool,17 perhaps explaining some of our findings.

Despite a distinct lack of objective evidence linking any form of workplace-based assessment to an improvement in clinical performance, there are at least some observational data from surveys that indicate potential positive outcomes. An observational study of 27 pre-registration house officers found that 70% felt that DOPS helped to improve clinical skills, and 65% agreed that they would help to further their future careers.18 In contrast, when asked whether their clinical practice had improved as a result of WPBAs, only 20% of trainees questioned in our survey agreed. This could suggest that trainees are always aware of educational opportunities, and regularly benefit from them during their daily practice regardless of the existence of the WPBA system. Perhaps the practice of ‘recording’ these educational experiences and reflecting back on them is more valuable for trainees’ clinical practice than the process of carrying out the assessments themselves. This is one particular area that needs further investigation in future survey-based studies.

There is some suggestion that the real value of WPBAs comes from the multi-source feedback (MSF) that is generated, rather than the assessments themselves. The MSF process enables trainees to receive structured feedback through an online form from the wide variety of professionals, both clinical and non-clinical, with whom they work on a daily basis. A study of 113 family physicians showed that 61% had made, or were planning to make, changes to their clinical practice based on MSF,19 although results from other studies involving junior doctors20 and surgical trainees21 would suggest that these groups are perhaps less willing to make changes in their practice as a result of MSF.

When considering the effectiveness of WPBAs, we must also consider how strictly the trainees and trainers adhere to the ‘gold standard’ format for the various assessments. Results from our questionnaires demonstrate that correct procedures are not being followed in some instances. Of the trainees questioned, 13% stated that their trainer was ‘rarely’ or ‘never’ physically present during workplace assessments, raising concerns over how valid such assessments are. Indeed, as previously discussed, time constraints on the part of the trainers may well be an underlying issue here. Another factor that raises concerns over the validity of these assessments is the timing of feedback. Only one-third of trainees stated that they ‘always’ or ‘often’ received feedback immediately after an assessment, casting doubt over the accuracy of the feedback that is eventually received. As we have previously observed, there was a considerable difference in the trainers’ responses to this issue, with 70% claiming that they ‘always’ or ‘often’ gave feedback immediately after an assessment. As we will discuss, such differences may perhaps be attributable to response bias. According to best practice, if trainees wish for a clinical encounter to be recorded as an assessment, their assessor should be informed of this prior to the encounter taking place. Interestingly, results from the trainer questionnaire show that one-third of supervisors ‘rarely’ or ‘never’ adhere to this guidance.

Factors affecting engagement with the ePortfolio

In addition to the structure and content of the ePortfolio and WPBAs, other factors might affect trainee and trainer engagement with the process. Some would argue, for example, that the focus in recent years on objective assessment of specific competencies is fuelling the ‘tick-box’ mentality among many of today's trainees, preventing the more practical, self-directed learning that used to occur in the ‘pre-MMC’ era.

Trainers have to balance their increasingly busy service commitments with educational roles in mentoring and developing trainees; some may question whether trainers are able to devote enough time to assessments to make them worthwhile. At any one time, most educational supervisors are responsible for several trainees, all of whom require a multitude of assessments and several feedback meetings within their clinical rotation. With a lack of dedicated time and resources set aside for trainee interaction, it is understandable that some trainers find it difficult to complete the required number of assessments and meetings, therefore failing to engage fully in the current training system.

Limitations

While we have been able to gain some important insights into the current opinion of CMTs and their trainers, we accept certain limitations to this study. The total number of respondents for each group is low, particularly among the trainers, therefore limiting the strength of the conclusions that can be drawn. When sending questionnaires by post to the trainers, we are also exposed to responder bias whereby responses are received only from those who have very strong opinions on the particular topic in question, potentially leading to polarised results. In contrast, the CMTs physically received the surveys at their teaching sessions. They might, therefore, have discussed particular questions among themselves before completing the survey, and so we cannot be certain that the responses truly reflect individual opinion. In a broader sense, the responses that are received may be subject to response bias, whereby respondents give the ‘morally right’ answer rather than their true thoughts or feelings, leading to over-reporting of good behaviour and under-reporting of bad behaviour. We did, however, attempt to limit the likelihood of such bias by informing all respondents that no demographic data were being collected.

Conclusions

It is generally agreed that, if implemented correctly, the ePortfolio and WPBAs have the potential to serve as powerful tools in the education and development of trainee doctors. Our own results suggest that, among our study respondents, improvements in certain areas such as mentoring and feedback are still needed in order to allow them to experience the full benefits of this system. In addition, we found that the trainees within the region studied were having difficulties engaging with the ePortfolio because of time constraints and the struggle to accommodate both vital training opportunities and assessments within their busy clinical schedule.

Given the small sample size and geographical region on which this study was based, it is difficult to extrapolate our findings to the rest of the training schemes within the UK. Nevertheless, evidence from the current literature seems to suggest that a change in ethos among trainees, as well as a shift in how they view the ePortfolio and its assessments, may be required to support their long-term success. As long as trainees view the current model as a way of simply progressing to the next stage of their training, the full potential of the ePortfolio and what it can offer might not be realised. As we have seen from other studies, mentors have a great influence on trainee compliance with these tools; therefore, the stimulus for a change in perception should ideally begin with them.

References

1. General Medical Council. Workplace based assessment: a guide for implementation. London: GMC, 2010. www.gmc-uk.org/Workplace_Based_Assessment___A_guide_for_implementation_0410.pdf_48905168.pdf [Accessed 19 March 2013].

2. Miller A, Archer J. Impact of workplace based assessment on doctors’ education and performance: a systematic review. BMJ 2010;341:c5064. doi:10.1136/bmj.c5064

3. Webb TP, Aprahamian C, Weigelt JA, Brasel KJ. The Surgical Learning and Instructional Portfolio (SLIP) as a self-assessment educational tool demonstrating practice-based learning. Curr Surg 2006;63:444–7. doi:10.1016/j.cursur.2006.04.001

4. Cross M, White P. Personal development plans: the Wessex experience. Educ Prim Care 2004;15:205–12.

5. JRCPTB. CMT curriculum and eportfolio updates August 2012. www.jrcptb.org.uk/trainingandcert/Pages/ST1-ST2.aspx [Accessed 19 March 2013].

6. Johnson S, Cai A, Riley P, et al. A survey of Core Medical Trainees’ opinions on the ePortfolio record of educational activities: beneficial and cost-effective? J R Coll Physicians Edinb 2012;42:15–20. doi:10.4997/JRCPE.2012.104

7. Ryland I, Brown J, O’Brien M, et al. The portfolio: how was it for you? Views of F2 doctors from the Mersey Deanery Foundation Pilot. Clin Med 2006;6:378–80. doi:10.7861/clinmedicine.6-4-378

8. Fung MF, Walker M, Fung KF, et al. An internet-based learning portfolio in resident education: the KOALA multicentre programme. Med Educ 2000;34:474–9. doi:10.1046/j.1365-2923.2000.00571.x

9. Driessen E, van Tartwijk J, van der Vleuten C, Wass V. Portfolios in medical education: why do they meet with mixed success? A systematic review. Med Educ 2007;41:1224–33. doi:10.1111/j.1365-2923.2007.02944.x

10. Snadden D, Thomas ML, Griffin EM, Hudson H. Portfolio-based learning and general practice vocational training. Med Educ 1996;30:148–52. doi:10.1111/j.1365-2923.1996.tb00733.x

11. Pearson DJ, Heywood P. Portfolio use in general practice vocational training: a survey of GP registrars. Med Educ 2004;38:87–95. doi:10.1111/j.1365-2923.2004.01737.x

12. Swallow V. Learning in practice: but who learns from who? Nurse Educ Pract 2006;6:1–2. doi:10.1016/j.nepr.2005.11.001

13. Hrisos S, Illing JC, Burford BC. Portfolio learning for foundation doctors: early feedback on its use in the clinical workplace. Med Educ 2008;42:214–23. doi:10.1111/j.1365-2923.2007.02960.x

14. Pereira EA, Dean BJ. British surgeons’ experiences of mandatory online workplace-based assessment. J R Soc Med 2009;102:287–93. doi:10.1258/jrsm.2009.080398

15. Keim KS, Gates GE, Johnson CA. Dietetics professionals have a positive perception of professional development. J Am Diet Assoc 2001;101:820–4. doi:10.1016/S0002-8223(01)00202-4

16. Dornan T, Carroll C, Parboosingh J. An electronic learning portfolio for reflective continuing professional development. Med Educ 2002;36:767–9. doi:10.1046/j.1365-2923.2002.01278.x

17. Malhotra S, Hatala R, Courneya CA. Internal medicine residents’ perceptions of the Mini-Clinical Evaluation Exercise. Med Teach 2008;30:414–9. doi:10.1080/01421590801946962

18. Morris A, Hewitt J, Roberts CM. Practical experience of using directly observed procedures, mini clinical evaluation examinations, and peer observation in pre-registration house officer (FY1) trainees. Postgrad Med J 2006;82:285–8. doi:10.1136/pgmj.2005.040477

19. Sargeant J, Mann K, Ferrier S. Exploring family physicians’ reactions to multisource feedback: perceptions of credibility and usefulness. Med Educ 2005;39:497–504. doi:10.1111/j.1365-2929.2005.02124.x

20. Burford B, Illing J, Kergon C, Morrow G, Livingston M. User perceptions of multi-source feedback tools for junior doctors. Med Educ 2010;44:165–76. doi:10.1111/j.1365-2923.2009.03565.x

21. Lockyer J, Violato C, Fidler H. Likelihood of change: a study assessing surgeon use of multisource feedback data. Teach Learn Med 2003;15:168–74. doi:10.1207/S15328015TLM1503_04