ABSTRACT

When nobody or nothing notices an error, it may turn into patient harm. We show that medical devices ignore many errors, and therefore do not adequately support patient safety. In addition to causing preventable patient harm, errors are often reported ignoring potential flaws in medical device design, and front line staff may therefore be inappropriately blamed. We present some suggestions to improve reporting and the procurement of hospital equipment.

KEYWORDS: Healthcare IT, medical devices, safety, human error

Commercial air travel didn't get safer by exhorting pilots to please not crash. It got safer by designing planes and air travel systems that support pilots and others to succeed in a very, very complex environment. We can do that in healthcare, too – Don Berwick

Computing's central challenge, ‘How not to make a mess of it,’ has not been met. On the contrary, most of our systems are much more complicated than can be considered healthy, and are too messy and chaotic to be used in comfort and confidence – Edsger Dijkstra

Introduction

If preventable error in hospitals were a disease, it would be a big killer; recent evidence suggests that preventable error is the third biggest killer, after heart disease and cancer.1 Worse, the death rates for error are likely to be an underestimate; for example, if an error occurs while somebody is in hospital because of cancer, their death is unlikely to be recorded as ‘preventable error’ when it is far easier to say the disease took its inevitable course.

Healthcare faces rising costs and increasing expectations, and we are getting older and more obese, increasingly suffering from chronic diseases like diabetes. However we might try to interpret the evidence, it is clear that healthcare is in crisis.

One would imagine that computers (IT) would be part of the solution. IT, new technologies, and the ‘paperless NHS’ are all frequently pushed as obvious ways forward. In fact, while computers make every industry more efficient, they don't help healthcare.2 Healthcare is now widely recognised as turning into an IT problem.3 Conversely, improving IT will improve every aspect of healthcare.

By contrast, we know drug side effects are expected and unavoidable, so we would be skeptical if the latest ‘wonder drug’ could be all that it was trying to promise. We rely on a rigorous process of trials and review before drugs are approved for general use,4 and we expect a balance between the benefits of taking a drug and suffering from its side effects. The review process protects us. Yet there is no similar review or assessment process for healthcare IT systems, whether they are medical devices, patient record systems, incident reporting systems, scanners or other complex devices.

It is tempting to blame hospital staff for errors, but this is wrong because system defects almost always play a part.5 Error should be considered the unavoidable side effect of IT; therefore, IT systems should be better regulated to manage side effects.

A selection of illustrative problems

There are many reasons why it is potentially wrong to blame the healthcare practitioner following a clinical incident, especially one that involves a medical device. A first step in clearer thinking would be to call error ‘use error’ since calling it ‘user error’ prejudges the user as the cause, when all we know is that an error happened during use. Furthermore, once somebody is blamed it is tempting to look no further for other causes, and then nobody learns anything and the system won't be changed. The problem will reoccur.

Assuming the design is right

At the Beatson Oncology Centre in Glasgow, software was upgraded and the implications were not reviewed. The original forms for performing calculations continued to be used, and as a result a patient, Lisa Norris, was overdosed. Sadly, although she survived the immediate overdose, she later died. The report6 was published just after her death, and says:

Changing to the new Varis 7 introduced a specific feature that if selected by the treatment planner, changed the nature of the data in the Eclipse treatment Plan Report relative to that in similar reports prior to the May 2005 upgrade […] the outcome was that the figure entered on the planning form for one of the critical treatment delivery parameters was significantly higher than the figure that should have been used […] the error was not identified in the checking process […] the setting used for each of the first 19 treatments [of Lisa Norris] was therefore too high.

It should be noted that at no point in the investigation was it deemed necessary to discuss the incident with the suppliers of the equipment [Varis 7, Eclipse and RTChart] since there was no suggestion that these products contributed to the error.

This appears to be saying that whatever a computer system does, it is not to be blamed for error provided it did not malfunction: the revised Varis 7 had a feature that contributed to a use error, but the feature was selected by the operator. Indeed, the report dismisses examining the design of the Varis 7 (or why an active piece of medical equipment needs a software upgrade) and instead concentrates on the management, supervision and competence of the operator who made ‘the critical error’. It appears nobody evaluated the design of the new Varis 7, nor the effect of the changes to its design, despite an internal memorandum some months earlier querying unclear control of purchased software.

Blaming the user

In 2001 the radiographer Olivia Saldaña González was involved with the treatment of patients who died from radiation overdoses. Treatment involves placing metal blocks to protect sensitive parts of the patient's body, and calculating the correct radiation dose given that the blocks restrict the treatment aperture. Saldaña drew the shapes of the blocks on the computer screen, but the computer system did not perform the correct calculation because of a bug of which Saldaña was unaware. Multidata Systems, the manufacturer, was aware of the bug in 1992, and the computer should have detected and highlighted its inability to do the correct calculation.7 In spite of this, Saldaña and a colleague were sentenced to four years in prison for involuntary manslaughter.

Not exploring what happened

Kimberly Hiatt made an out-by-ten calculation error for intravenous calcium chloride for a very ill baby, Kaia Zautner, who died, though it was not obvious whether the overdose contributed to the death. Hiatt reported the error and was escorted from the hospital; she subsequently committed suicide, becoming the ‘second victim’ of the incident.8 Notably, after her death the Nursing Commission terminated their investigation, so we will never know exactly how the incident occurred,9,10 and we will not be able to learn from it how to improve safety.

How did the miscalculation occur? It is possible Hiatt made a keying slip on a calculator, such as pressing a decimal point twice, resulting in an incorrect number without the calculator reporting the possibility of error; or perhaps the pharmacy computer had printed an over-complex drug label that was too easy to misread? It is possible some of the equipment (calculator, infusion pump etc) had a bug and, despite being used correctly, gave the wrong results. In the next section we show that calculators are very different and prone to error; it is surprising that the calculation that Hiatt performed was not analysed more closely before assuming Hiatt was at fault.

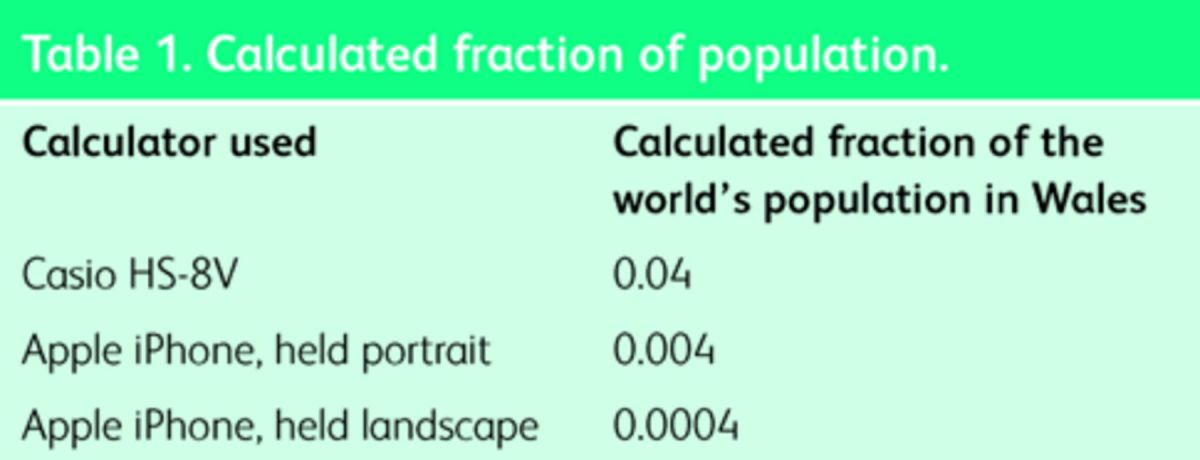

Calculators have systematic but varied faults

Handheld calculators are used throughout healthcare but are fallible. As a simple example, we live in Swansea, and we are interested in our epidemiological studies of the Welsh population. We might therefore wish to calculate what proportion of the world's population live in Wales. The calculation required is the population of Wales divided by the population of the world, namely 3,063,500÷6,973,738,433 at the time of writing. Three attempts at this calculation obtain the results presented in Table 1 (ignoring least significant digits).

Table 1. Calculated fraction of population.

Only the last answer is correct. These are market-leading products, yet none report any error. The Apple iPhone could clearly report a possible error since it provides two different answers even if it doesn't know which one is right!

The first electronic calculators appeared in the 1960s. We are no longer constrained by technology, and we’ve had some fifty years to get their designs right. It is hard to understand why calculators used in healthcare are not safer.
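As an illustration only, the calculation itself is easy to do exactly, and a calculator can refuse input it cannot represent instead of silently discarding keystrokes. The sketch below assumes a hypothetical calculator with an 8-digit display; the display width, `key_in` and `EntryError` are assumptions made for illustration and do not describe any product in Table 1.

```python
from fractions import Fraction

DISPLAY_DIGITS = 8  # assumed display capacity of a hypothetical calculator


class EntryError(Exception):
    """Raised when keyed input cannot be represented faithfully."""


def key_in(digits: str, capacity: int = DISPLAY_DIGITS) -> int:
    """Accept a keyed whole number, or report an error; never drop digits silently."""
    if not (digits.isascii() and digits.isdigit()):
        raise EntryError(f"not a whole number: {digits!r}")
    if len(digits) > capacity:
        raise EntryError(
            f"{len(digits)} digits keyed, but only {capacity} fit the display"
        )
    return int(digits)


# Exact arithmetic for the proportion of the world's population living in Wales.
wales = 3_063_500
world = 6_973_738_433
proportion = Fraction(wales, world)
print(f"{float(proportion):.6f}")  # about 0.000439

# The 10-digit world population does not fit the assumed 8-digit display,
# so the entry is rejected with a visible error rather than truncated.
try:
    key_in(str(world))
except EntryError as err:
    print("Error reported:", err)
```

The design choice is simply that an unrepresentable entry produces a visible error, so the user knows the displayed result cannot be trusted.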

Ubiquitous design faults

The Zimed AD Syringe Driver was, according to its company's now defunct website,11 ‘a ground-breaking new infusion device that takes usability and patient safety to new levels […] Simple to operate for professionals, patients, parents, or helpers.’ Its design quality is however typical of modern medical devices.

The Zimed permits error that it ignores, potentially allowing very large numerical errors.12 One cause for concern is over-run errors: a nurse entering a number such as 0.1 will move the cursor to enter the least significant digits of the intended number, but an over-run (too many move-right key presses) will silently move the cursor to the most significant digit. Thus an attempt to enter 0.1 could accidentally enter 1000.0 by just one excess keystroke with no warning to the user.
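A minimal sketch of the alternative behaviour, assuming a hypothetical cursor-based entry field (this is not the Zimed's software, and the class and attribute names are invented for illustration): a move-right at the last digit is blocked and a warning is recorded, rather than the cursor wrapping round to the most significant digit.

```python
class CursorEntry:
    """Hypothetical digit-by-digit number entry with a movable cursor."""

    def __init__(self, n_digits: int = 5) -> None:
        self.n_digits = n_digits
        self.cursor = 0  # position 0 is the most significant digit
        self.warnings: list[str] = []

    def move_right(self) -> None:
        """Move towards the least significant digit; block and warn at the end."""
        if self.cursor == self.n_digits - 1:
            # Over-run: stay put and tell the user, instead of wrapping round.
            self.warnings.append("cursor is already at the least significant digit")
            return
        self.cursor += 1


entry = CursorEntry(n_digits=5)
for _ in range(5):  # one more key press than is needed to reach the last digit
    entry.move_right()
print(entry.cursor, entry.warnings)
```

With this behaviour the excess keystroke leaves the cursor on the intended digit, and the slip becomes visible to the user instead of changing the magnitude of the number.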

The B-Braun Infusomat, a popular infusion pump, has a similar user interface to the Zimed, but has a different problem. If a user tries to enter 0.01 ml it gets silently converted to 0.1 ml, with no warning to the user that the number is not what was entered.

On the Baxter Colleague 3, another infusion pump, entering 100.5 will be silently turned into 1005; the decimal point is ignored, again with no warning to the user.
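The silent conversions described above could, in principle, be replaced by explicit rejection. The following is a sketch under stated assumptions, not any manufacturer's code: `parse_keyed_number`, `NumberEntryError` and the 0.1 ml resolution are hypothetical. It rejects a repeated decimal point and any value finer than the device can deliver, and it never discards a keyed decimal point.

```python
import re
from decimal import Decimal


class NumberEntryError(Exception):
    """Raised when keyed input cannot be accepted exactly as typed."""


def parse_keyed_number(keys: str, resolution: Decimal = Decimal("0.1")) -> Decimal:
    """Return the keyed value, or raise; never silently alter what was typed."""
    if keys.count(".") > 1:
        raise NumberEntryError("more than one decimal point keyed")
    # Require digits on both sides of any decimal point (an assumed rule).
    if not re.fullmatch(r"[0-9]+(\.[0-9]+)?", keys):
        raise NumberEntryError(f"not a valid number: {keys!r}")
    value = Decimal(keys)
    if value % resolution != 0:
        # For example, 0.01 on a device that can only deliver steps of 0.1.
        raise NumberEntryError(f"{keys} is finer than the device resolution {resolution}")
    return value


print(parse_keyed_number("100.5"))  # the decimal point is kept, not ignored
for bad in ("0.01", "1..5"):
    try:
        parse_keyed_number(bad)
    except NumberEntryError as err:
        print("Rejected:", err)
```

Using `Decimal` rather than binary floating point keeps the comparison with the device resolution exact.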

These types of design fault are all possible causes of Hiatt's overdosing; they are particularly worrying in that they are silent problems that do not warn the user. Almost all devices we have examined suffer from similar flaws of sloppy programming. It is possible that the manufacturers will read these criticisms and fix their software, which is good, except it will create the problem that for a time there will be multiple versions of the user interfaces to confuse users further. It is surprising these simple design defects were not eliminated during product development.

Calculation problems

Denise Melanson was given an overdose of intravenous chemotherapy. Her death was followed by a root cause analysis (RCA) published by the Institute for Safe Medication Practices.13 Elsewhere we have criticised the difficult calculation aspects of the infusion14 and shown how design problems can be avoided, but here we highlight two issues raised by the simple two-hour experiment performed as part of the RCA.

Three out of six nurses participating in the experiment entered incorrect data on the Abbott AIM Plus infusion pump: all were confused by some aspect of its design. Three did not choose the correct millilitre option (the infusion pump displays ml per hour as ml) – one nurse selected mg/ml instead. Some were confused by the infusion pump using a single key for both the decimal point and the mode-changing arrow key.

The drug bag label generated by the pharmacy was confusing: it had over 20 numbers printed on it, causing information overload. One of these was 28.8, the fatal dose given; this would have caused confirmation bias without making clear that this dose should be divided by 24 to calculate the correct dose of 1.2 ml per hour. Both nurses omitted to divide by 24 and both therefore obtained 28.8; the bag's presentation of this wrong number helped mislead them (confirmation bias).
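The arithmetic in this incident is small enough to check mechanically. The sketch below treats 28.8 as a per-24-hour figure, as the division by 24 implies; the function names and the wording of the warning are hypothetical, and this is not a description of how any pump or pharmacy system works.

```python
from decimal import Decimal
from typing import Optional

HOURS_PER_DAY = 24


def hourly_rate(label_figure_ml: Decimal) -> Decimal:
    """Convert a per-24-hour volume into ml per hour."""
    return label_figure_ml / HOURS_PER_DAY


def check_entered_rate(entered: Decimal, label_figure_ml: Decimal) -> Optional[str]:
    """Return None if the entered rate is as expected, else a warning message."""
    expected = hourly_rate(label_figure_ml)
    if entered == expected:
        return None
    if entered == label_figure_ml:
        return "entered rate equals the 24-hour figure: was the division by 24 omitted?"
    return f"entered rate {entered} ml/h differs from expected {expected} ml/h"


label = Decimal("28.8")
print(hourly_rate(label))                          # 1.2
print(check_entered_rate(Decimal("28.8"), label))  # warns about the missing division
print(check_entered_rate(Decimal("1.2"), label))   # None
```

A check of this kind turns an unnoticed omission into a question the user has to answer before the infusion starts.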

One wonders why the manufacturers of the pump and the programmers of the pharmacy label printing system did not perform experiments to help improve the products – the RCA experiment only took 2 hours! Indeed, such evaluation is required by international standards, such as ISO 62366 medical devices – application of usability engineering to medical devices,15 which require manufacturers to identify hazards, perform experiments and have an iterative design process.

If simple experiments had been done during manufacture of the Abbott pump, serious harm could have been designed out.

IT or embedded IT?

The design problems above are not limited to particular sorts of IT. The examples present a varied mixture of IT systems, both ones typically used on PCs and those hidden inside devices and handhelds, using so-called embedded computers. This paper therefore uses the term ‘IT’ to mean either or any sort of computer-based system, particularly ones with user interfaces that are operated by front-line staff.

Why are these problems not more widely recognised?

If preventable error is the third biggest killer, why do we know so little about it?

There is widespread ignorance of these issues for a number of reasons. It is clearly not in the interest of device manufacturers to draw attention to weaknesses in their products, but equally the system of reporting within healthcare is deficient. Error reporting systems are cumbersome and focused on overt clinical incidents, set in a culture of silence and blame, rather than transparency and learning.16,17

When programming infusion pumps, staff tend to ‘work around’ design weaknesses. When a dose error occurs it will rarely be reported if it is recognised and corrected without harm to the patient. If harm does occur, staff are fearful that the emphasis is on blame rather than learning, particularly as the blame tends to be focused on the healthcare professional and rarely the design.

Report feedback is often minimal, with no opportunity for learning. All this leads to a culture of silence,18–20 whereby reporting is minimised and tends only to occur when an incident is overt and serious: the few incidents that become visible seem all the more outrageous.

Blame loses the opportunity to synthesise and learn from near misses. A radical change is needed to create an emphasis on learning rather than blame; transparency rather than secrecy.

From problems to solutions

When you get cash from a cash machine there is a risk that you put your card in, key in your PIN code, grab your cash and walk away leaving your card behind. So instead of that disaster, cash machines now force you to take your card before they will give you any cash. You went there to get cash, and you won't go away until you have it – so you automatically pick up your card. This is a simple story of redesigning a system to avoid a common error. Notice that the design has eliminated a type of error without having to retrain anybody.
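The cash machine redesign can be sketched as an ordering constraint in code. This is an illustrative model only, with hypothetical class and method names: cash is released only after the card has been taken, so the step that is easy to forget is placed before the step that nobody forgets.

```python
class CashMachineError(Exception):
    """Raised when a step is attempted out of order."""


class CashMachine:
    """Hypothetical cash machine that releases cash only after the card is taken."""

    def __init__(self) -> None:
        self.card_inside = False
        self.pending_cash = 0

    def request_cash(self, amount: int) -> None:
        """Card and PIN accepted; cash is reserved but not yet dispensed."""
        self.card_inside = True
        self.pending_cash = amount

    def take_card(self) -> None:
        """The user removes their card."""
        self.card_inside = False

    def take_cash(self) -> int:
        """Dispense the reserved cash, but only once the card has been removed."""
        if self.card_inside:
            raise CashMachineError("please take your card first")
        amount, self.pending_cash = self.pending_cash, 0
        return amount


machine = CashMachine()
machine.request_cash(50)
try:
    machine.take_cash()  # refused: the card is still in the machine
except CashMachineError as err:
    print(err)
machine.take_card()
print(machine.take_cash())  # 50
```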

In the NHS, the UK's largest employer, any solution to problems that relies on retraining the workforce is not going to be very successful. Instead, we should redesign the systems: if IT is designed properly then it will be safer.

How can we achieve this?

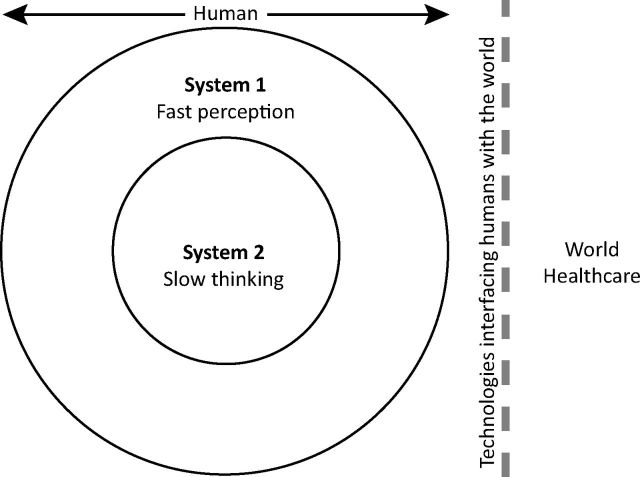

The common thread in the problems listed in this article is that errors go unnoticed, and then, because they are unnoticed, they are unmanaged, and then they may lead to harm. With no help from the system, if a user does not notice an error, they cannot think about it and avoid its consequences (except by chance). This truism is explored in detail by Kahneman, who describes the two human cognitive systems (fast perception and slow thinking) we use.21

Fig 1 demonstrates how slow thinking (Kahneman's system 2) can only know about the world through what it sees using system 1. System 1, our perception, is fast and effortless, like a reflex, whereas system 2, our conscious thinking, is logical and powerful, but requires effort. Many errors occur when system 2 delegates decisions to system 1, in so-called attribute substitution.21

Fig 1.

Schematic rings representing Kahneman's two cognitive systems – what goes on inside our heads. Our conscious thinking (system 2) can know nothing about the world apart from what our perceptual system tells it (eg our eyes and ears are in system 1), and in turn almost all of healthcare is mediated by technologies. Reproduced with permission.22

Poorly designed healthcare systems do not work because IT systems do not detect errors, so human perception (system 1) fails to warn us that we need to think logically (system 2) to fix those errors. The analogy with human teamwork is helpful: in a good team, other people help spot errors we do not notice, and thus the team as a whole is safer. IT rarely behaves like a team player helping spot errors, which leads to unrecognised errors.

Reporting errors and near misses

If learning is to occur, and problems are to be prevented at source, errors and near misses must be detected, recognised, reported and analysed. To enable this, systems must highlight potential errors, and when something goes wrong, or a near miss occurs, the event should be recorded. If this is done using a national structure,20,23,24 both local and central analysis would be simplified and learning enhanced. In turn, if we do not learn, we will have no evidence-based insights into improving healthcare.

It is important to remember that both computer and device error logs are unreliable and should never be used as evidence unless they are forensically verified (which generally is impossible). For example, in the B-Braun a nurse might enter 0.01 but a log would show they had entered 0.1.
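One way to make this kind of discrepancy visible is to record the raw keystrokes alongside the value the device acted on. The sketch below is an assumption-laden illustration (the record type and its field names are hypothetical, and it does not by itself make a log forensically reliable); it only shows that the two can be compared when both are kept.

```python
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation


@dataclass(frozen=True)
class EntryLogRecord:
    """Hypothetical log record keeping both what was keyed and what was used."""

    raw_keys: str         # exactly what the user pressed
    interpreted: Decimal  # the value the device actually acted on

    @property
    def faithful(self) -> bool:
        """True only if the interpreted value equals the keyed number."""
        try:
            return Decimal(self.raw_keys) == self.interpreted
        except InvalidOperation:
            return False  # the keystrokes were not a valid number at all


record = EntryLogRecord(raw_keys="0.01", interpreted=Decimal("0.1"))
print(record.faithful)  # False: the log itself exposes the silent conversion
```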

Making informed purchasing decisions

The Kahneman model explains why errors can go undetected, and also why bad IT systems are purchased. As individuals, we get excited about the latest technology, and easily lose a rational approach to what best meets our requirements – this is attribute substitution. Thinking (system 2 work) carefully about IT is difficult, so it is easier to substitute easier factors, such as impressive feature lists, which system 1 understands directly. Unfortunately, many people think they are skilled at deciding which IT systems best meet their needs, but this is illusory. Kahneman has termed this the illusion of skill.

This is why we all rush to buy the latest hand-held device, despite the fact that it won't necessarily meet our clinical needs. If it is cheaper than competitors or a discount is offered on the contract we are even more likely to buy it. The same irrationalities apply in the workplace, and are discussed well by Kahneman.

Makary gives a brilliant discussion of the misleading temptations of hospital robotics.25 What is perfect for consumerism is a disaster for hospital procurement.26 The latest shiny gizmo is not necessarily the best or the safest. We have to change things: what we like and what manufacturers want us to want are not necessarily what patients need.

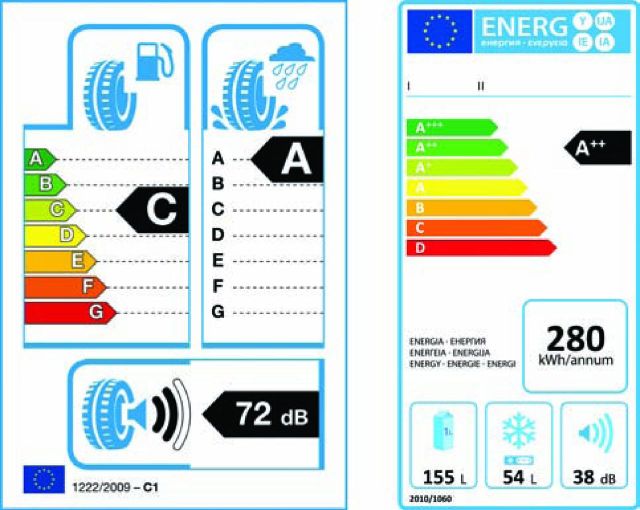

The EU found the same problem with consumers buying tyres. European Union legislation now requires tyres to show their stopping distance, noise level and fuel efficiency at the point of sale. An example is shown in Fig 2. The legislation follows on from similar schemes to show energy efficiency in white goods. Customers want to buy better products, so now when they buy tyres, they can see these factors and use them to inform their decision making.

Fig 2.

Example EU product performance labels. Tyre label (left) and energy efficiency label for white goods, like freezers (right). The A–G scale has A being best. Note how the scales combine several measures of performance. Reproduced with permission.22

The EU has not said how to improve tyres; it has just required the quality to be visible. A complex thinking process (which system 2 is bad at) has been replaced by a visible rating (which system 1 is good at).

Now manufacturers, under competition, work out how to make their products more attractive to consumers using the critical measures. Indeed, thanks to energy efficiency labelling, product efficiency has improved, in some cases so much so that the EU has extended the scales to A+, A++, A+++. The point is, normal commercial activity now leads to better products.

By analogy with tyres, medical devices should show safety ratings – ones that are critical and that can be assessed objectively. Safety ratings would stimulate market pressure to start making improvements.

It is easy to measure tyre stopping distances; clearly, stopping before an obstacle instead of hitting it is preferable. There is no similar thinking in healthcare about safety. Therefore any measurement process has to be combined with a process to improve, even create, the measurements and methodologies themselves.

It is interesting to read critiques of pharmaceutical development4 and realise that in pharmaceuticals there is a consensus that scientific methods should be used (even if the science actually done has shortcomings). By contrast, for healthcare IT and devices there isn't even any awareness that things need evaluating.

Conclusions

Today's enthusiasm for IT and exciting medical devices recalls the original enthusiasm for X-rays. Clarence Dally, an early adopter, suffered radiation damage and died from cancer only a few years after Röntgen's first publication.27 It is now obvious that X-rays carry risks and have to be used carefully.

Today's healthcare IT is badly designed; the culture blames users for errors, thus removing the need to closely examine design. Moreover, manufacturers often require users to sign ‘hold blameless’ contracts,28 which effectively make the users the only people left to blame. When errors occur, even near misses, a transparent system of reporting would enhance opportunities for learning and improvement, provided it is correct and used in a supportive culture, rather than one of blame and retribution. The current system of inaccuracy, confidentiality and blaming users means the sorts of issues discussed in this paper do not get any light shone on them.

Mortality rates in hospitals can double when computerised patient record systems are introduced:29 computers are not the unqualified ‘X-ray’ blessings they are often promoted as being. A safety-labeling scheme would raise awareness of the issues and stimulate competition for safer devices and systems. It could be done voluntarily with no regulatory burden on manufacturers. By fixing rating labels on devices for their lifetime, patients would also gain increased awareness of the issues, and they would surely help pressurise for better systems.

We need to improve. Kimberly Hiatt, Kaia Zautner, Denise Melanson and many other people might still be alive if the calculators, infusion pumps, robots, linear accelerators and so forth used in their care had been designed with more attention to the possibility of error.

Note

This article was adapted from a lecture transcript presented at Gresham College, London on 11 February 2014. The lecture and transcript can be accessed using the following link: www.gresham.ac.uk/lectures-and-events/designing-it-to-make-healthcare-safer.

Funding

The work reported was funded by the UK Engineering and Physical Sciences Research Council (EPSRC) (EP/G059063/1 and EP/L019272/1) and performed at Swansea University, Wales.

References

1. James JT. A new evidence-based estimate of patient harms associated with hospital care. J Patient Saf 2013;9:112–28.

2. Goldhill D. Catastrophic Care: How American health care killed my father – and how we can fix it. New York, NY: Knopf Doubleday Publishing Group, 2013.

3. Institute of Medicine. Health IT and patient safety: building safer systems for better care. Washington, DC: National Academies Press, 2012.

4. Goldacre B. Bad Pharma: How drug companies mislead doctors and harm patients. London: Fourth Estate, 2012.

5. Aspden P, Wolcott JA, Bootman JL, Cronenwett LR (eds). Preventing medication errors. Washington, DC: National Academies Press, 2007.

6. Johnston AM. Unintended exposure of patient Lisa Norris during radiotherapy treatment at the Beatson Oncology Centre Glasgow in January 2006. Edinburgh: Scottish Executive, 2006.

7. McCormick J. We did nothing wrong: Why software quality matters. Baseline, 4 March 2004.

8. Wu AW. Medical error: the second victim: the doctor who makes the mistake needs help too. BMJ 2000;320:726–7.

9. Levy P. I wish we were less patient, 2011. Available online at http://runningahospital.blogspot.co.uk/2011/05/i-wish-we-were-less-patient.html [Accessed 9 January 2015].

10. Ensign J. To err is human: medical errors and the consequences for nurses, 2011. Available online at https://josephineensign.wordpress.com/2011/04/24/to-err-is-human-medical-errors-and-the-consequences-for-nurses/ [Accessed 9 January 2015].

11. Zimed ADSyringeDriver. 2013. www.zimed.cncpt.co.uk/product/ad-syringe-driver.

12. Cauchi A, Curzon P, Gimblett A, Masci P, Thimbleby H. Safer “5 key” number entry user interfaces using differential formal analysis. Available online at www.eecs.qmul.ac.uk/~masci/works/hci2012.pdf [Accessed 24 February 2015].

13. Institute for Safe Medication Practices Canada. Fluorouracil incident root cause analysis. Toronto, ON: ISMP Canada, 2007.

14. Thimbleby H. Ignorance of interaction programming is killing people. ACM Interactions 2008;September + October:52–7.

15. European Commission. Medical devices – application of usability engineering to medical devices, ISO 62366. Geneva: International Electrotechnical Commission, 2008.

16. Morris S. Just culture – changing the environment of healthcare delivery. Clin Lab Sci 2010;24:120–4.

17. Leape LL. Errors in medicine. Clin Chim Acta 2009;404:2–5.

18. Jeffs L, Affonso DD, MacMillan K. Near misses: paradoxical realities in everyday clinical practice. Int J Nurs Pract 2008;14:486–94.

19. Firth-Cozens J. Cultures for improving patient safety through learning: the role of teamwork. Qual Health Care 2001;10:26–31.

20. Mahajan RP. Critical incident reporting and learning. Br J Anaesth 2010;105:69–75.

21. Kahneman D. Thinking, fast and slow. New York, NY: Farrar, Straus and Giroux, 2012.

22. Thimbleby H. Improving safety in medical devices and systems. Proceedings IEEE International Conference on Healthcare Informatics 2013. Philadelphia, PA: 2013:1–13.

23. Mann R, Williams J. Standards in medical record keeping. Clin Med 2003;3:329–32.

24. Vincent C. Incident reporting and patient safety. BMJ 2007;334:51–3.

25. Makary M, Al-Attar A, Holzmueller C, et al. Needlestick injuries among surgeons in training. N Engl J Med 2007;356:2693–9.

26. Thimbleby H. Technology and the future of healthcare. J Public Health Res 2014;2:160–7.

27. Röntgen WC. On a new kind of rays, translated into English. Br J Radiol 1931;4:32–3.

28. Koppel R, Gordon S (eds). First do less harm: confronting the inconvenient problems of patient safety. Ithaca, NY: Cornell University Press, 2012.

29. Han YY, Carcillo JA, Venkataraman ST, et al. Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics 2005;116:1506–12.