ABSTRACT

Doctors increasingly rely on medical apps running on smart phones or tablet computers to support their work. However, these apps vary widely in the quality of their data input screens, their internal data processing, the methods they use to handle sensitive patient data and how they communicate their output to the user. Inspired by Donabedian's approach to assessing quality and the principles of good user interface design, the Royal College of Physicians’ Health Informatics Unit has developed and piloted an 18-item checklist to help clinicians assess the structure, functions and impact of medical apps. Use of this checklist should help clinicians feel more confident about using medical apps themselves, recommending them to their staff and prescribing them for patients.

KEYWORDS: Medical apps, mHealth, quality assessment checklist, Donabedian's structure, process, outcome, health informatics, clinical use of technology

Analysis and problem statement

Smart phone apps are potentially very useful additions to clinical practice and are widely used by junior and senior doctors to support their work. Preliminary results of a 2015 survey of 1,104 Royal College of Physicians (RCP) members and fellows (a 42% response rate, after two reminders, from the 2,658 members of the RCP research panel) show that 586 (54%) use apps to support their clinical work and that, of these, 42% believe the apps are ‘essential’ or ‘very important’ to their work. However, the survey also revealed that 43% of respondents, nearly half, were concerned about some aspect of app quality. This concern is well founded: several studies have shown that app quality varies too widely for safe clinical use without prior assessment. For example, a study of 23 calculators for converting opioid dose equivalents1 found dangerously large variations in calculated doses. Converting a 1 mg dose of oral morphine to methadone gave doses ranging from 0.05 to 0.67 mg of methadone (a 13:1 range), and fewer than half the apps recognised that the conversion formula should depend on the actual dose as well as on the drugs concerned. Thimbleby et al2 found that the delete key on many apps does not work correctly so, ironically, when a user tries to correct an error they have noticed, the correction may introduce an error they do not notice.
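The delete-key problem described by Thimbleby et al can be illustrated with a minimal, hypothetical sketch. It is not taken from their study or from any real app: it assumes a number-entry field that records whether a decimal point has been typed in a separate flag, and shows how a delete key that forgets to update that flag silently turns an intended 10.5 into 105. The class and function names are invented for illustration.

```python
class BuggyKeypad:
    """Hypothetical number-entry field whose delete key forgets decimal-point state."""

    def __init__(self):
        self.text = ""           # characters shown on screen
        self.has_point = False   # whether a decimal point has been entered

    def press(self, key):
        if key == "DEL":
            # Flaw: the last character is removed, but has_point is never reset,
            # even when the character deleted was the decimal point itself.
            self.text = self.text[:-1]
        elif key == ".":
            if not self.has_point:
                self.text += "."
                self.has_point = True
            # Otherwise the key press is silently ignored.
        else:
            self.text += key


class FixedKeypad(BuggyKeypad):
    """Same field, but state is recomputed from what is actually on screen."""

    def press(self, key):
        if key == "DEL":
            self.text = self.text[:-1]
            self.has_point = "." in self.text
        else:
            super().press(key)


def type_keys(keypad, keys):
    """Feed a sequence of key presses to a keypad and return the entered text."""
    for key in keys:
        keypad.press(key)
    return keypad.text


# The user means to enter 10.5, types "1." by mistake, deletes the point,
# then carries on typing "0", ".", "5".
keys = ["1", ".", "DEL", "0", ".", "5"]
print(type_keys(BuggyKeypad(), keys))  # 105
print(type_keys(FixedKeypad(), keys))  # 10.5
```

The point of the sketch is that the user saw and corrected the first error, yet the second, tenfold error produced no visible warning: exactly the kind of behaviour that deliberately making and correcting mistakes during testing can expose.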

Proposed solution

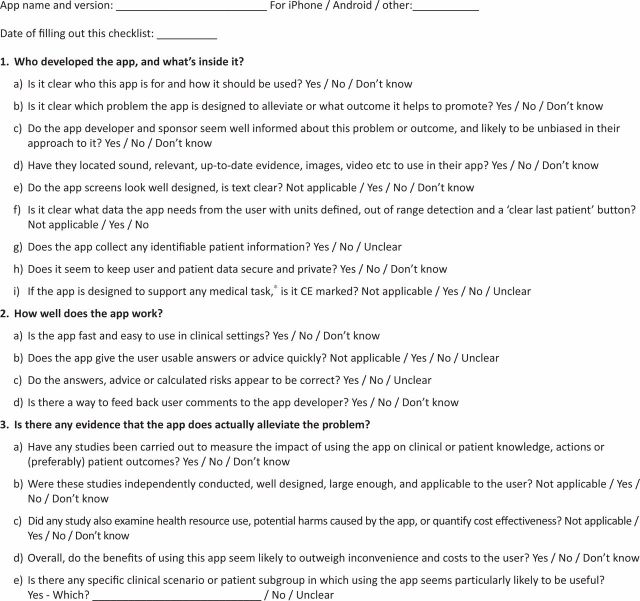

Building on Donabedian's classic 1978 analysis of the factors that determine the quality of medical care3 and with input from app developers, the RCP Health Informatics Unit (HIU) has developed a short checklist (see Fig 1) to support clinicians who wish to assess the quality of apps. We recommend starting with the app's structure: who is it for, who published it, and does it incorporate relevant, up-to-date, evidence-based content? Another key aspect is the design of the screens that communicate with the user and request data input: are they well designed, with clear and unambiguous text? When patient data are requested, are the units and the methods for collecting the data clear? Does the app capture the patient's name, NHS number or other identifiers and, if so, where are these sent, or are they stored on the device? If such data are stored on the device without encryption, they pose a privacy risk when the device is lost or borrowed. If the app collects data, does it have a ‘new patient’ button that clears all previous entries, or does it (as many do) carry over some data from the previous patient? You also need to check that the app is CE marked, which shows that it complies with the relevant medical devices and software law, recently summarised in HIU guidance for RCP members and fellows.4 CE marking is required if the app is used to support medical tasks such as diagnosis, test ordering, interpretation of test results, risk assessment or the choice or titration of therapy. If the aim of the app is not medical, for example helping the public with lifestyle decisions such as tracking exercise, weight loss or smoking cessation, CE marking is generally not needed.5
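The ‘new patient’ question can be made concrete with a minimal sketch. It assumes a hypothetical app whose form holds three fields (age, weight and diabetes status); one version clears only some fields when ‘new patient’ is pressed, the other replaces the whole record. All names here are invented for illustration and do not describe any particular app.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class PatientForm:
    # Hypothetical input fields; None means "not yet entered for this patient".
    age_years: Optional[int] = None
    weight_kg: Optional[float] = None
    has_diabetes: Optional[bool] = None


class CarryOverApp:
    """Hypothetical app whose 'new patient' button clears only some fields."""

    def __init__(self):
        self.form = PatientForm()

    def new_patient(self):
        # Flaw: only age is cleared; weight and diabetes status silently
        # carry over to the next patient.
        self.form.age_years = None


class ResettingApp(CarryOverApp):
    """Same app, but 'new patient' replaces the whole record."""

    def new_patient(self):
        self.form = PatientForm()


def leftover_fields(app):
    """Names of fields that still hold data after 'new patient' is pressed."""
    return [f.name for f in fields(app.form) if getattr(app.form, f.name) is not None]


def leftover_after_new_patient(app_cls):
    """Enter one patient's data, press 'new patient', report what survived."""
    app = app_cls()
    app.form = PatientForm(age_years=80, weight_kg=72.5, has_diabetes=True)
    app.new_patient()
    return leftover_fields(app)


print(leftover_after_new_patient(CarryOverApp))  # ['weight_kg', 'has_diabetes']
print(leftover_after_new_patient(ResettingApp))  # []
```

Replacing the whole record, rather than clearing fields one by one, means a field added to the form later cannot be forgotten by the reset. A clinician can run the equivalent manual test: enter a complete patient, press ‘new patient’ and check that every field is empty.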

Fig 1.

RCP Health Informatics Unit clinical app quality checklist. *Screening, diagnosis, test ordering or interpretation, the choice or titration of treatment, and prognosis. RCP = Royal College of Physicians.
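The CE-marking rule of thumb in the text, combined with the medical tasks listed in the Fig 1 footnote, amounts to a simple classification by intended purpose. The sketch below encodes it under loudly stated assumptions: the purpose labels are hypothetical shorthand, only the purposes named in this article are covered, and anything else is referred back to the HIU and MHRA guidance rather than guessed at. It is a screening aid, not a regulatory determination.

```python
# Simplified, hypothetical purpose labels drawn from the tasks named in this
# article; this is not a regulatory determination.
MEDICAL_PURPOSES = frozenset({
    "screening", "diagnosis", "test ordering", "test interpretation",
    "risk assessment", "treatment choice", "treatment titration", "prognosis",
})
LIFESTYLE_PURPOSES = frozenset({
    "exercise tracking", "weight loss", "smoking cessation",
})


def ce_marking_expected(purposes):
    """Return True if any stated purpose is medical, False if all are lifestyle.

    Raises ValueError when no purpose is given or a purpose is on neither list,
    so that unfamiliar uses are checked against the full guidance instead.
    """
    purposes = set(purposes)
    if not purposes:
        raise ValueError("no intended purpose stated")
    unknown = purposes - MEDICAL_PURPOSES - LIFESTYLE_PURPOSES
    if unknown:
        raise ValueError(f"unclassified purpose(s): {sorted(unknown)}")
    return bool(purposes & MEDICAL_PURPOSES)


print(ce_marking_expected({"exercise tracking"}))                  # False
print(ce_marking_expected({"weight loss", "treatment titration"}))  # True
```

An app with both lifestyle and medical functions is treated as medical, because a single medical purpose is enough to bring it within the scope described above.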

If the app passes these screening questions, the next step is to check whether it works well, the equivalent of Donabedian's process measures. How does the app handle errors? Try making a few deliberate mistakes (does the app reject them?) and then try correcting them. Does it generate sensible advice or output for a wide variety of input values? Does it flag invalid or extremely unlikely data values? Equally, does it request all the data likely to be relevant? We know of apps that ignore an age over 75 or provide no field for recording that a patient has diabetes, key information when calculating cardiac risk. Is the output presented in a usable and actionable format, avoiding jargon, undefined abbreviations or an obscure risk score that anyone would find hard to interpret at 3am? For example, risks are best communicated on a ‘1 in N patients’ scale rather than as a probability between zero and one or as a percentage.6
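Two of the process checks above, flagging implausible inputs and presenting risk as ‘1 in N patients’, can be sketched in a few lines. This is a minimal illustration, assuming a two-tier scheme in which hard limits reject values that cannot be right and soft limits ask the user to confirm values that are possible but unlikely. The age limits used are hypothetical, not clinical recommendations.

```python
def check_value(value, hard_min, hard_max, soft_min, soft_max):
    """Classify one input value as 'reject', 'confirm' or 'ok'.

    Hard limits bound values that cannot be right; soft limits bound values
    that are possible but unlikely enough that the user should confirm them.
    """
    if not hard_min <= value <= hard_max:
        return "reject"
    if not soft_min <= value <= soft_max:
        return "confirm"
    return "ok"


def one_in_n(probability):
    """Express a risk as '1 in N patients', with N rounded to a whole number."""
    if not 0 < probability <= 1:
        raise ValueError("probability must be greater than 0 and at most 1")
    return f"1 in {round(1 / probability)} patients"


# Hypothetical limits for adult age in years: reject outside 0 to 125,
# ask for confirmation outside 18 to 100.
AGE_LIMITS = (0, 125, 18, 100)
print(check_value(76, *AGE_LIMITS))   # ok
print(check_value(106, *AGE_LIMITS))  # confirm
print(check_value(760, *AGE_LIMITS))  # reject (e.g. an extra digit typed)
print(one_in_n(0.2))   # 1 in 5 patients
print(one_in_n(0.03))  # 1 in 33 patients
```

Rounding N to a whole number becomes coarse for large risks, so an app may reasonably switch to a format such as ‘N in 100 patients’ in that range.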

Assuming that the app appears to work well when tested on sample data, the final hurdle corresponds to Donabedian's outcome measures: does it actually help us manage patients? The best test is a large, well-designed randomised controlled trial (RCT),7 but so far few of these have been carried out. Trials of apps are perfectly feasible, as shown by a study8 that randomised 128 people wanting to lose weight to the MyMealMate app, a website or a paper diary. At three months, weight loss was greatest in the app group and least in the diary group. However, only 20 app RCTs have so far been published (source: PubMed clinical queries search carried out on 4 September 2015 using ‘mobile applications’ or ‘smartphone applications’), so insisting on an RCT would severely limit the number of apps you could recommend to your colleagues. As an alternative, you could test a small number of selected apps on a series of your own patients. This involves making your initial decision about each patient, then using each app in turn to see whether it changes your decision (and ideally your subsequent actions and even the patient's outcome) for the better. This process may also help you to identify the typical patients whom the app seems to help, and other patients for whom it seems less useful, which is helpful when sharing your findings with your staff. Unsafe apps may be reported to the MHRA hotline (www.mhra.gov.uk/safetyinformation/reportingsafetyproblems/devices), using the data captured on the RCP checklist.
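The do-it-yourself evaluation described above amounts to a simple before-and-after tally for each app. The sketch below assumes you keep a log with one row per patient per app, recording your decision before and after consulting the app and whether any change was later judged an improvement. The record fields, app names, decision labels and counts are all hypothetical and illustrative, not study data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Encounter:
    app: str               # hypothetical app name
    patient_id: str
    decision_before: str   # clinician's decision made before opening the app
    decision_after: str    # decision after consulting the app
    judged_better: bool    # later judgement on whether a change was an improvement
                           # (ignored when the decision did not change)


def summarise(encounters):
    """Per app: patients tested, decisions changed, and changes judged better."""
    summary = defaultdict(lambda: {"patients": 0, "changed": 0, "better": 0})
    for e in encounters:
        counts = summary[e.app]
        counts["patients"] += 1
        if e.decision_after != e.decision_before:
            counts["changed"] += 1
            if e.judged_better:
                counts["better"] += 1
    return dict(summary)


log = [
    Encounter("App A", "p1", "discharge", "admit", True),
    Encounter("App A", "p2", "admit", "admit", False),
    Encounter("App B", "p1", "discharge", "discharge", False),
    Encounter("App B", "p2", "admit", "discharge", False),
]
for app, counts in summarise(log).items():
    print(app, counts)
```

Keeping ‘changed’ and ‘better’ separate matters: an app that often changes decisions is not necessarily helpful, and reviewing the rows behind each count is one way to spot the kinds of patient for whom an app helps or misleads.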

References

- 1. Haffey F, Brady RR, Maxwell S. A comparison of the reliability of smartphone apps for opioid conversion. Drug Saf 2013;36:111–7.

- 2. Thimbleby H, Oladimeji P, Cairns P. Unreliable numbers: error and harm induced by bad design can be reduced by better design. J R Soc Interface 2015, in press.

- 3. Donabedian A. The quality of medical care. Science 1978;200:856–64.

- 4. Health Informatics Unit. Guidance on apps for physicians. London: RCP, 2015. Available online at www.rcplondon.ac.uk/resources/using-apps-clinical-practice-guidance [Accessed 8 September 2015].

- 5. MHRA. Medical devices – software applications. Available online at www.gov.uk/government/publications/medical-devices-software-applications-apps [Accessed 8 September 2015].

- 6. Hoffrage U, Lindsey S, Hertwig R, Gigerenzer G. Communicating statistical information. Science 2000;290:2261–2.

- 7. Liu JLY, Wyatt JC. The case for randomized controlled trials to assess the impact of clinical information systems. J Am Med Inform Assoc 2011;18:173–80.

- 8. Carter MC, Burley VJ, Nykjaer C, Cade JE. Adherence to a smartphone application for weight loss compared to website and paper diary: pilot randomized controlled trial. J Med Internet Res 2013;15:e32.