Abstract

Metrics for evaluating interruptive prescribing alerts have many limitations, and additional methods are needed to identify opportunities to improve alerting systems and prevent alert fatigue. In this study, the authors determined whether alert dwell time, the time elapsed from when an interruptive alert is generated to when it is dismissed, could be calculated from historical alert data stored in log files. Drug–drug interaction (DDI) alerts from 3 years of electronic health record data were queried. Alert dwell time was calculated for 25,965 alerts, representing 777 unique DDIs. The median alert dwell time was 8 s (range, 1–4913 s). Resident physicians had longer median alert dwell times than other clinicians (P < .001). The 10 most frequent DDI alerts (n = 8759 alerts) had shorter median dwell times than alerts that occurred only once (P < .001). This metric can be used in future research to evaluate the effectiveness and efficiency of interruptive prescribing alerts.

Keywords: clinical decision support, interruptive alerts, override rates, electronic health record, computerized prescriber order entry

BACKGROUND

Interruptive prescribing alerts are a common feature of clinical decision support (CDS)–enabled computerized prescriber order entry (CPOE) systems. These alerts interrupt the prescribing process and require the user to acknowledge their information by taking one or more actions. Although these alerts improve healthcare processes and may improve the quality of patient care, they must be strategically managed to avoid unintended consequences.1–4 Poorly designed interruptive alerting systems unnecessarily distract clinicians from their current thought processes, which may cause other prescribing errors and inefficiency.5 Irrelevant or redundant alerts can lead to alert fatigue, which is defined as “… the mental state that is the result of too many alerts consuming time and mental energy, which can cause important alerts to be ignored along with clinically unimportant ones.”6,7 Beyond reducing clinician satisfaction, alert fatigue can ultimately cause prescribers to disregard important alerts that are designed to prevent patient harm.6,8

Previous efforts to assess the quality of interruptive CDS have relied on prescriber surveys and on calculating alert override rates.7,9–14 Prescriber surveys, one of which has been psychometrically validated for assessing drug–drug interaction (DDI) alerts,14 have been used to evaluate opinions of alert content, design, and frequency.11,14,15 Survey results can provide insights into the perceived usefulness of alert systems but may lack the detail needed for targeted improvements. Override rates, one of the most frequently reported alert metrics, are calculated by dividing the number of alerts for which the provider did not carry out the change in care recommended by the alert (i.e., overrides) by the total number of alerts presented.9,12,13,16,17 Although override rates can be useful for identifying alerts that need additional review for clinical relevance, they are inconsistently defined and have other limitations.18–21
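As a minimal sketch of the simple override-rate definition above, the function below divides the number of overridden alerts by the number of alerts presented. It assumes each alert has already been classified as overridden (True) or accepted (False); as noted, published definitions of an override vary, and the function name is hypothetical.

```python
from typing import Iterable


def override_rate(overridden_flags: Iterable[bool]) -> float:
    """Return overridden alerts / total alerts presented.

    Each flag is True when the prescriber did NOT carry out the change in
    care recommended by the alert (an override) and False otherwise.
    """
    flags = list(overridden_flags)
    if not flags:
        raise ValueError("at least one presented alert is required")
    return sum(flags) / len(flags)


# Example using the overridden (n = 24,192) and accepted (n = 1773) counts
# reported in the Results of this study.
rate = override_rate([True] * 24_192 + [False] * 1_773)
print(f"{rate:.1%}")  # 93.2%
```

Because the classification of each alert happens before this step, the hard part in practice is deciding what counts as an override, not the division itself.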

We present preliminary research on a new measure for characterizing interruptive prescriber alerts. Alert dwell time is the time elapsed from the generation of an interruptive alert to the dismissal of the alert window, whether the clinician follows the alert's recommendation or overrides it. The need to consider clinician alert response time when evaluating interruptive CDS alert systems has been discussed previously.21 This metric can be used to describe the time clinicians require to respond to interruptive alerts. Observed differences in these times across individual alerts, alert categories, clinician roles, or other healthcare contexts may provide insights into possible improvements to alert systems. By quantifying the time clinicians spend responding to interruptive alerts, calculating alert dwell times can also underscore the need to make alerting systems more efficient. In this study, we developed data retrieval techniques to compute alert dwell time from stored CPOE DDI alerts and conducted exploratory analyses of the data. We also discuss future opportunities to use this metric to assess and improve interruptive alerts.
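The definition reduces to a difference between two timestamps. A minimal sketch, assuming each logged alert carries a presentation timestamp and a dismissal timestamp (the function name and example times are hypothetical; the study's actual query is described only in its supplemental file):

```python
from datetime import datetime


def alert_dwell_time_s(presented_at: datetime, dismissed_at: datetime) -> float:
    """Seconds from generation of an interruptive alert to dismissal of its window.

    Dismissal may follow either accepting the alert's recommendation or
    overriding it; the calculation is the same in both cases.
    """
    if dismissed_at < presented_at:
        raise ValueError("dismissal precedes presentation; check the log record")
    return (dismissed_at - presented_at).total_seconds()


# Hypothetical log entry: alert shown at 09:15:02 and dismissed at 09:15:10.
shown = datetime(2013, 5, 1, 9, 15, 2)
dismissed = datetime(2013, 5, 1, 9, 15, 10)
print(alert_dwell_time_s(shown, dismissed))  # 8.0
```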

METHODS

Setting

Since 2010, St Jude Children’s Research Hospital has fully implemented an electronic health record (EHR) with CPOE (Millennium system, Cerner Corporation, Kansas City, MO, USA) for all aspects of inpatient and outpatient care, including orders, documentation, laboratory, and pharmacy.22 Proactive efforts were taken throughout CPOE implementation to prevent CPOE-related adverse safety events and alert fatigue.22 This study was approved by the study hospital’s institutional review board.

Interruptive alert design

During the study period, clinicians were presented with DDI alerts in a window that displayed the new order and the existing medications interacting with it. The same version of the alert window (Enhanced Window) was used throughout the study period. To manage alerts and prevent alert fatigue, a team of physicians and pharmacists made proactive decisions in 2005, including limiting DDI alerts to those with severity ratings of “major” or “major contraindicated” (based on the Cerner Multum™ drug knowledge database), disabling duplicate therapy alerts, and requiring prescribers to document override reasons via prewritten or free-text responses. When a new order triggers multiple DDIs, the alert system presents them to the clinician simultaneously in one window. These simultaneously presented alerts were excluded from the primary analyses and are described separately in the supplemental file.
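Separating single alerts from simultaneously presented ones requires knowing which alerts shared a window. A sketch, assuming a hypothetical `window_id` field that identifies each alert-window instance (the actual Cerner log fields are not described in this article):

```python
from collections import Counter
from typing import List, NamedTuple, Tuple


class AlertRecord(NamedTuple):
    window_id: int               # hypothetical: one value per alert window shown
    drug_pair: Tuple[str, str]   # the two drugs triggering the alert
    dwell_s: float               # dwell time of the window, in seconds


def split_by_window(records: List[AlertRecord]):
    """Return (single, simultaneous): alerts alone in their window vs. sharing one."""
    per_window = Counter(r.window_id for r in records)
    single = [r for r in records if per_window[r.window_id] == 1]
    simultaneous = [r for r in records if per_window[r.window_id] > 1]
    return single, simultaneous


records = [
    AlertRecord(1, ("potassium chloride", "spironolactone"), 7.0),
    # Assumed: alerts shown together share one window, hence one dwell time.
    AlertRecord(2, ("acyclovir", "tacrolimus"), 12.0),
    AlertRecord(2, ("cyclosporine", "voriconazole"), 12.0),
]
single, simultaneous = split_by_window(records)
print(len(single), len(simultaneous))  # 1 2
```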

Data retrieval and analysis

All DDI alerts from July 9, 2012, to July 8, 2015, were queried, and the query returned 106,019 alerts. Details of the query and of the exclusion criteria applied to the dataset are provided in the supplemental file. Alert dwell time was calculated as the time recorded in the database between the presentation of an alert to a clinician and its dismissal. Summary dwell time statistics were calculated for each drug–drug pair (i.e., the drugs triggering the alert), for each clinician role, and for accepted and overridden alerts. Average annual dwell time was also calculated for the complete dataset before exclusions were applied. Median alert dwell times were compared by clinician role, between the 10 most frequent alerts and alerts that occurred only once, and between overridden and accepted alerts by using Mann–Whitney U tests in SPSS (version 22.0, SPSS, Chicago, IL, USA).
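The study ran its Mann–Whitney U tests in SPSS. For readers reproducing such comparisons elsewhere, the following is a minimal pure-Python sketch of the two-sided test with the large-sample normal approximation (mid-ranks for ties, tie-corrected variance, no continuity correction). Statistical packages may apply a continuity correction or an exact test, so P values can differ slightly. The function names and example values are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def midranks(values: Sequence[float]) -> Tuple[List[float], List[int]]:
    """1-based ranks with ties given their average rank, plus each tie group's size."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    tie_sizes = []
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of positions i..j, shifted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        tie_sizes.append(j - i + 1)
        i = j + 1
    return ranks, tie_sizes


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Return (U for x, z, two-sided P) using the normal approximation."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both groups need at least one observation")
    ranks, tie_sizes = midranks(list(x) + list(y))
    u_x = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    mean_u = n1 * n2 / 2
    tie_term = sum(t ** 3 - t for t in tie_sizes) / (n * (n - 1))
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term)
    if var_u <= 0:  # every observation tied: no evidence of a difference
        return u_x, 0.0, 1.0
    z = (u_x - mean_u) / math.sqrt(var_u)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return u_x, z, p_two_sided


# Hypothetical dwell times in seconds (not study data).
residents = [14, 20, 11, 30, 9, 16]
others = [7, 8, 6, 9, 10, 7, 5]
u, z, p = mann_whitney_u(residents, others)
print(f"U = {u}, z = {z:.2f}, P = {p:.3f}")
```

Ranks rather than raw seconds are compared, which suits right-skewed dwell times where a few very long values would dominate a mean-based test.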

RESULTS

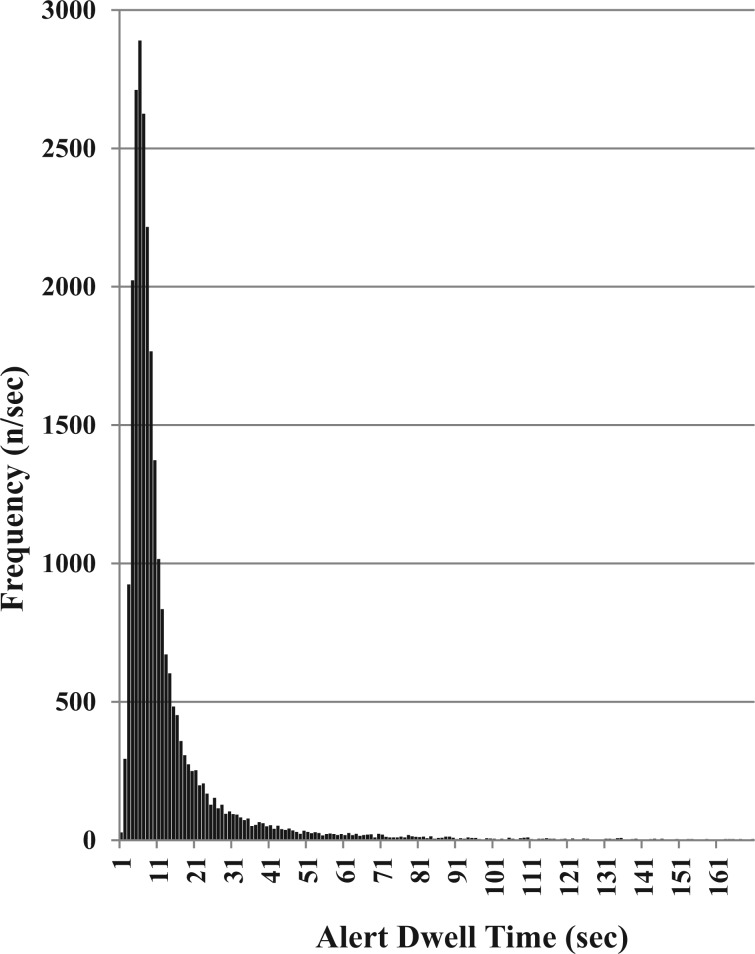

The final dataset included 25,965 alerts. Alert dwell times were not normally distributed (Kolmogorov–Smirnov test, P < .001) (Figure 1). The sample represented 777 unique DDI alerts, and the median alert dwell time was 8 s (range, 1–4913 s; interquartile range, 8 s). The average annual dwell time of the complete dataset (i.e., the final dataset plus the excluded cases) was 185.21 h (666,753 s). The total dwell time of the final dataset was 130.23 h (7813.6 min), of which the 10 most frequent alerts accounted for 2027.4 min, or 25.9% (Table 1).
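The unit conversions and the top-10 share can be checked directly from the reported figures. This sketch only reproduces the arithmetic: the share is the Table 1 total for the 10 most frequent alerts divided by the total dwell time of the final dataset (7813.6 min).

```python
# Figures as reported in the Results and Table 1.
complete_annual_s = 666_753   # average annual dwell time, complete dataset
final_total_min = 7813.6      # total dwell time, final dataset
table1_min = [390.4, 447.9, 212.8, 243.7, 117.9, 78.2, 153.9, 138.9, 158.6, 85.1]
table1_n = [1894, 1663, 1071, 918, 610, 609, 592, 521, 476, 405]

top10_min = round(sum(table1_min), 1)          # Table 1 total, in minutes
print(round(complete_annual_s / 3600, 2))      # 185.21 (h)
print(round(final_total_min / 60, 2))          # 130.23 (h)
print(sum(table1_n), top10_min)                # 8759 2027.4
print(f"{top10_min / final_total_min:.1%}")    # 25.9%
```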

Figure 1:

Distribution of alert dwell times in the study sample (n = 25,965).

Table 1:

Summary statistics for the 10 most frequent drug–drug interaction alerts.

| Drug–Drug Interaction | Number of Occurrences | Median Dwell Time (s) | Total Dwell Time for Study Period (min) |
|---|---|---|---|
| Potassium chloride + spironolactone | 1894 | 7 | 390.4 |
| Ibuprofen + ketorolac | 1663 | 8 | 447.9 |
| Acyclovir + tacrolimus | 1071 | 7 | 212.8 |
| Methotrexate + pantoprazole | 918 | 8 | 243.7 |
| Fentanyl + ondansetron | 610 | 8 | 117.9 |
| Potassium phosphate + spironolactone | 609 | 6 | 78.2 |
| Cyclosporine + voriconazole | 592 | 8 | 153.9 |
| Posaconazole + ranitidine | 521 | 7 | 138.9 |
| Lisinopril + potassium chloride | 476 | 8 | 158.6 |
| Enalapril + potassium chloride | 405 | 7 | 85.1 |
| Total | 8759 | | 2027.4 |

Resident physicians had the longest median alert dwell time (14 s), and pharmacists had the shortest (7 s) (Table 2). The median alert dwell time of resident physicians differed significantly from that of all other clinician roles (P < .001). Of the 777 unique DDI alerts, 134 occurred only once. The median dwell time of these infrequent alerts was 11 s, compared with 7 s for the 10 most frequent alerts (n = 8759) (P < .001). The median alert dwell time was 8 s for overridden alerts (n = 24,192) and 9 s for accepted alerts (n = 1773) (P = .36). Descriptive statistics of dwell times for simultaneously presented alerts and the reasons provided for overridden alerts are provided in the supplemental file.

Table 2:

Number of alerts and dwell times by clinician role.

| Clinician Role | Number of Alerts | Median Dwell Time, s (Range; Interquartile Range) |
|---|---|---|
| Pharmacist | 11,132 | 7 (1–1513; 6) |
| Attending physician | 7168 | 9 (1–4029; 10) |
| Physician fellow | 1299 | 9 (1–562; 8) |
| Nurse practitioner/physician assistant | 4232 | 10 (1–2090; 10) |
| Resident physician | 304 | 14 (2–222; 17) |
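Table 2 reports, for each clinician role, the number of alerts and the median dwell time with its range and interquartile range. The sketch below shows how such cells could be produced from (role, dwell time) pairs. The input format and function name are hypothetical, and quartiles depend on the percentile definition used, so values may differ slightly from SPSS output.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def summarize_by_group(records: Iterable[Tuple[str, float]]) -> Dict[str, Tuple[int, str]]:
    """Map each group to (n, 'median (min–max; IQR)'), the cell format of Table 2."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for group, dwell_s in records:
        groups[group].append(dwell_s)
    summary = {}
    for group, values in groups.items():
        if len(values) > 1:
            # Python's default ('exclusive') quartile method; SPSS or other tools
            # may use a different percentile definition.
            q1, _, q3 = statistics.quantiles(values, n=4)
        else:
            q1 = q3 = values[0]
        cell = (f"{statistics.median(values):g} "
                f"({min(values):g}–{max(values):g}; {q3 - q1:g})")
        summary[group] = (len(values), cell)
    return summary


# Hypothetical (role, dwell time in s) pairs; not study data.
example = [("Pharmacist", float(v)) for v in range(1, 9)] + [("Resident physician", 14.0)]
for role, (n, cell) in summarize_by_group(example).items():
    print(role, n, cell)
```

The same grouping applies to drug–drug pairs or to individual prescribers by changing the first element of each pair.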

DISCUSSION

The importance of interruptive CDS alerts in preventing patient harm and improving clinical outcomes is widely recognized.3,4 The significance of including CDS in an EHR has been emphasized by the decision of the Centers for Medicare & Medicaid Services to include CDS as a requirement for achieving Meaningful Use Stage 1.23 However, excessive and irrelevant alerts can cause alert fatigue, which can compromise the patient safety benefits of this CPOE feature.

In this proof-of-concept study, we developed data retrieval techniques to calculate a new CDS alert metric and analyzed the resulting data. By using alert dwell time, we quantified the time clinicians spent responding to interruptive alerts and identified differences among clinician roles and between frequent and infrequent alerts. We also observed that more frequently occurring alerts were acted on faster than infrequent alerts, suggesting that alert frequency may have implications for the cognitive processing of alert information and for alert fatigue. Although no single alert metric can determine the appropriateness of interruptive alerts, alert dwell time could be included in a comprehensive analysis of alerts, such as the framework described by McCoy and colleagues.21

Even though we customized our CDS system to minimize the number of alerts at the study hospital, our results revealed that clinicians spent a substantial amount of time responding to alerts. Moreover, querying our historical DDI alert data showed that more than 1 million alerts would have been presented to clinicians in each study year if no modifications had been made to our alert system settings. Therefore, at healthcare organizations that do not extensively customize their interruptive CDS settings, the time burden of responding to alerts may be much higher than that reported in this study. Alert dwell time calculations also showed that the 10 most frequent DDI alerts in our sample of 777 DDIs accounted for ∼26% of the total time clinicians spent reviewing alerts. The disproportionate demand these alerts place on clinicians warrants a detailed review of them (e.g., through focus groups or prescriber surveys).

Introducing alert dwell time as a metric may prompt researchers and practitioners to further explore its utility. Our results suggest that differences in median alert dwell times by clinician role may reflect gaps in familiarity with the CDS alert system or with the clinical content of the alert. Usability and design are important aspects of CPOE systems, and design features such as the simultaneous presentation of multiple alerts may influence dwell times.24,25 Dwell time calculated at the individual prescriber level may help identify clinicians who need assistance in responding appropriately to interruptive alerts.

Median dwell times for overridden and accepted alerts were not significantly different. Because this was an exploratory analysis to illustrate the potential use of the dwell time measure, no discrete hypotheses were proposed before data analysis. Longer dwell times for overridden alerts, had they been observed, might have suggested a more in-depth level of critical evaluation: if a clinician is going to bypass a safety alert, reaching this conclusion may demand more time than deciding to accept the alert (i.e., remove the order that initiated the DDI). However, the similar median dwell times for accepted and overridden alerts may suggest that the contents of both types of alerts received comparable consideration. Because the study hospital has made considerable efforts to suppress less clinically relevant alerts, this similarity may indicate progress in managing alert fatigue. Differences in alert dwell times between accepted and overridden alerts could provide additional insights into the efficiency of alerts and should be explored in future studies.

Examining temporal trends in dwell time may improve understanding of clinician responses to alerts. For example, dwell times for specific, frequently occurring alerts may decrease over the workday or workweek, suggesting a learning effect. Alert dwell time could also be compared with other metrics used to evaluate the quality of alert systems. For example, integrating override rates and prescriber survey results with dwell times may strengthen the validity of these metrics for identifying opportunities for improvement.
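One way to look for the within-day or within-week patterns described above is to bucket a specific alert's dwell times by time of presentation. The sketch below assumes a hypothetical record layout and function name; a declining median across buckets would be consistent with, but not proof of, a learning effect.

```python
import statistics
from collections import defaultdict
from datetime import datetime
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical record layout: (presented_at, drug_pair, dwell time in s).
Record = Tuple[datetime, Tuple[str, str], float]


def median_dwell_by_period(
    records: Iterable[Record],
    drug_pair: Tuple[str, str],
    period: Callable[[datetime], int] = lambda ts: ts.hour,
) -> Dict[int, float]:
    """Median dwell time of one alert, bucketed by a time-of-presentation key."""
    buckets = defaultdict(list)
    for presented_at, pair, dwell_s in records:
        if pair == drug_pair:
            buckets[period(presented_at)].append(dwell_s)
    return {key: statistics.median(v) for key, v in sorted(buckets.items())}


# Hypothetical data (not study data).
records = [
    (datetime(2014, 3, 3, 8, 5), ("ibuprofen", "ketorolac"), 12.0),
    (datetime(2014, 3, 3, 8, 40), ("ibuprofen", "ketorolac"), 10.0),
    (datetime(2014, 3, 3, 16, 10), ("ibuprofen", "ketorolac"), 6.0),
    (datetime(2014, 3, 4, 9, 0), ("acyclovir", "tacrolimus"), 30.0),
]
by_hour = median_dwell_by_period(records, ("ibuprofen", "ketorolac"))
by_weekday = median_dwell_by_period(
    records, ("ibuprofen", "ketorolac"), period=lambda ts: ts.weekday()
)
print(by_hour, by_weekday)
```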

Although our methods allowed us to calculate the time it took clinicians to respond to alerts, we could not verify that all of that time was spent considering the content of the alert messages. For example, clinicians may have been distracted by an unrelated task while the alert window was displayed. Such distractions from the cognitive processing of the alert might explain the non-normal distribution of the data, which is right-skewed by long dwell times. Future prospective studies can evaluate how cognitive tasks relate to dwell time and distinguish the time spent processing alert content from the time spent on distractions.

Because our hospital specializes in treating catastrophic pediatric illnesses, the generalizability of our summary dwell time statistics is limited. Generalizability is further limited by our efforts to reduce the number of alerts by customizing our CDS alert settings. However, because our CPOE vendor (Cerner Corporation) is common in US healthcare settings, it may be possible to calculate alert dwell time in other settings.

In conclusion, we demonstrated that it is possible to measure the length of time an alert is displayed before a provider dismisses it, and that alert dwell times differ among clinician roles and between frequent and infrequent alerts. Further research is needed to understand the implications of this metric and to validate its use for improving the utility of interruptive prescriber alerts.

ACKNOWLEDGEMENTS

The authors thank Vani Shanker for editing the manuscript.

COMPETING INTERESTS

The authors declare no conflicts of interest.

FUNDING

This study was supported by the Cancer Center Core Grant # NCI CA 21765 and American Lebanese Syrian Associated Charities.

REFERENCES

1. Abookire SA, Teich JM, Sandige H, et al. Improving allergy alerting in a computerized physician order entry system. Proc AMIA Symp 2000:2–6. http://www.ncbi.nlm.nih.gov/pmc/issues/161274/.
2. Bates DW, Gawande AA. Improving safety with information technology. N Engl J Med 2003;348(25):2526–2534.
3. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med 2012;157(1):29–43.
4. Jaspers MW, Smeulers M, Vermeulen H, et al. Effects of clinical decision-support systems on practitioner performance and patient outcomes: a synthesis of high-quality systematic review findings. JAMIA 2011;18(3):327–334.
5. Ratwani RM, Trafton JG. A generalized model for predicting postcompletion errors. Top Cogn Sci 2010;2(1):154–167.
6. Carspecken CW, Sharek PJ, Longhurst C, et al. A clinical case of electronic health record drug alert fatigue: consequences for patient outcome. Pediatrics 2013;131(6):e1970–e1973.
7. van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. JAMIA 2006;13(2):138–147.
8. Glassman PA, Simon B, Belperio P, et al. Improving recognition of drug interactions: benefits and barriers to using automated drug alerts. Med Care 2002;40(12):1161–1171.
9. Hsieh TC, Kuperman GJ, Jaggi T, et al. Characteristics and consequences of drug allergy alert overrides in a computerized physician order entry system. JAMIA 2004;11(6):482–491.
10. Saleem JJ, Patterson ES, Militello L, et al. Exploring barriers and facilitators to the use of computerized clinical reminders. JAMIA 2005;12(4):438–447.
11. Ko Y, Abarca J, Malone DC, et al. Practitioners' views on computerized drug-drug interaction alerts in the VA system. JAMIA 2007;14(1):56–64.
12. van der Sijs H, Aarts J, van Gelder T, et al. Turning off frequently overridden drug alerts: limited opportunities for doing it safely. JAMIA 2008;15(4):439–448.
13. Jani YH, Barber N, Wong ICK. Characteristics of clinical decision support alert overrides in an electronic prescribing system at a tertiary care paediatric hospital. Int J Pharm Pract 2011;19(5):363–366.
14. Zheng K, Fear K, Chaffee BW, et al. Development and validation of a survey instrument for assessing prescribers' perception of computerized drug-drug interaction alerts. JAMIA 2011;18(Suppl 1):i51–i61.
15. Magnus D, Rodgers S, Avery AJ. GPs' views on computerized drug interaction alerts: questionnaire survey. J Clin Pharm Ther 2002;27(5):377–382.
16. Shah NR, Seger AC, Seger DL, et al. Improving acceptance of computerized prescribing alerts in ambulatory care. JAMIA 2006;13(1):5–11.
17. Isaac T, Weissman JS, Davis RB, et al. Overrides of medication alerts in ambulatory care. Arch Intern Med 2009;169(3):305–311.
18. Ancker JS, Kern LM, Abramson E, et al. The Triangle Model for evaluating the effect of health information technology on healthcare quality and safety. JAMIA 2011;19(1):62–65.
19. Bryant A, Fletcher G, Payne T. Drug interaction alert override rates in the Meaningful Use era. Appl Clin Inform 2014;5(3):802–813.
20. McCoy AB, Thomas EJ, Krousel-Wood M, et al. Clinical decision support alert appropriateness: a review and proposal for improvement. Ochsner J 2014;14(2):195–202.
21. McCoy AB, Waitman LR, Lewis JB, et al. A framework for evaluating the appropriateness of clinical decision support alerts and responses. JAMIA 2012;19:346–352.
22. Hoffman JM, Baker DK, Howard SC, Laver JH, Shenep JL. Safe and successful implementation of CPOE for chemotherapy at a children's cancer center. J Natl Compr Canc Netw 2011;9(3):36–50.
23. Eligible Professional Meaningful Use Core Measures: Measure 2 of 14. Baltimore, MD: Centers for Medicare and Medicaid Services; 2013.
24. Payne TH, Hines LE, Chan RC, et al. Recommendations to improve the usability of drug-drug interaction clinical decision support alerts. JAMIA 2015;22(6):1243–1250. doi:10.1093/jamia/ocv011.
25. Middleton B, Bloomrosen M, Dente MA, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. JAMIA 2013;20(e1):e2–e8.