Abstract

Objective Clinicians at our institution typically respond to about half of the prompts they are given by the clinic’s computer decision support system (CDSS). We sought to examine factors associated with clinician response to CDSS prompts as part of a larger, ongoing quality improvement effort to optimize CDSS use.

Methods We examined patient, prompt, and clinician characteristics associated with clinician response to decision support prompts from the Child Health Improvement through Computer Automation (CHICA) system. We asked pediatricians who were nonusers of CHICA to rate decision support topics as “easy” or “not easy” to discuss with patients and their guardians. We analyzed these ratings and data, from July 1, 2009 to January 29, 2013, utilizing a hierarchical regression model, to determine whether factors such as comfort with the prompt topic and the length of the user’s experience with CHICA contribute to user response rates.

Results We examined 414 653 prompts from 22 260 patients. The length of time a clinician had been using CHICA was associated with an increase in their prompt response rate. Clinicians were more likely to respond to topics rated as “easy” to discuss. The position of the prompt on the page, clinician gender, and the patient’s age, race/ethnicity, and preferred language were also predictive of prompt response rate.

Conclusion This study highlights several factors associated with clinician prompt response rates that could be generalized to other health information technology applications, including the clinician’s length of exposure to the CDSS, the prompt’s position on the page, and the clinician’s comfort with the prompt topic. Incorporating continuous quality improvement efforts when designing and implementing health information technology may ensure that its use is optimized.

Keywords: computer-based decision support, pediatrics, clinical guidelines, primary care

BACKGROUND AND SIGNIFICANCE

Primary care clinicians in general, and pediatricians in particular, find themselves having to balance a variety of demands on their time during brief patient encounters with children and their guardians, such as delivering health advice, administering vaccines, screening for a variety of health risks, monitoring growth and development, and addressing parental concerns.1 Health information technology (HIT) can help clinicians handle demands on their time without sacrificing the quality of care they provide to patients. We have developed and implemented a pediatric computer decision support system (CDSS), called the Child Health Improvement through Computer Automation (CHICA) system, to provide such help to physicians at our institution. CHICA has been operational since 2004 and is currently used in seven pediatric and adolescent community health clinics affiliated with the Eskenazi Health System in Indianapolis, Indiana. The CHICA system has supported over 270 600 pediatric visits for over 42 000 patients, providing clinicians with guidance according to clinical care guidelines during the medical encounter by automating surveillance and screening activities and generating clinician reminders and educational handouts to supplement brief patient encounters.2 CHICA currently includes tailored support for autism, developmental screening, attention deficit hyperactivity disorder, maternal depression, smoking cessation, and medical-legal issues, among other health risks.3–9 Although the uptake of HIT in pediatrics has been slower compared with other fields, such as adult medicine,10 pediatricians working with CHICA have found that it fits within their busy practice workflows. Thus, pediatricians’ acceptance of and satisfaction with the system have increased over time.11

Despite our successes with the CHICA system, previously conducted studies have shown that clinicians respond to CHICA alerts just under 50% of the time.12 We examined patterns of pediatrician response and found that the patient’s age and the position of the prompt on the physician worksheet (PWS) predict whether a clinician answers or ignores a prompt.13 Experiments in which we highlighted key prompts in yellow to heighten clinicians’ awareness of them were not effective.14 Therefore, we sought to better understand what characteristics of patients, clinicians, and prompts impact the likelihood that clinicians would respond to a prompt. Specifically, we hypothesized that the clinicians’ comfort with the topical content of the prompt and how long the clinician had used CHICA would be associated with an increased prompt response rate. Understanding factors that affect clinicians’ responses to our system’s alerts might inform quality improvement and technical strategies to support clinicians using HIT on a broader scale.

METHODS

Overview of the CHICA System

The CHICA system is an innovative CDSS and electronic health record (EHR); it has been described in detail elsewhere.15–20 Briefly, CHICA provides preventive care and chronic disease management decision support based on clinical guidelines encoded in Arden Syntax rules. CHICA uses a Health Level 7 International-compliant interface to connect with an existing EHR,2 but CHICA can also operate as a standalone EHR.

Once a child is registered for a medical encounter, CHICA produces a tailored pre-screener form (PSF) that contains 20 health risk questions (in English and Spanish) for the guardian or patient (if he or she is 12 years old or older) to complete. These questions are selected based on previous information contained in the patient’s EHR and the age of the patient at the time of the visit. To select just 20 questions, CHICA uses a unique prioritization scheme that takes into account the likelihood and seriousness of each health risk as well as the evidence to support the benefit of intervening on the health risk. The PSF is completed in the waiting room (by the patient or their guardian) before the medical encounter. When CHICA was first designed, it utilized a scannable and tailored paper-based user interface.18 Two years ago, the pre-screening process was transitioned to a tablet-based interface, Child Health Improvement through Computers Leveraging Electronic Tablets (CHICLET).21

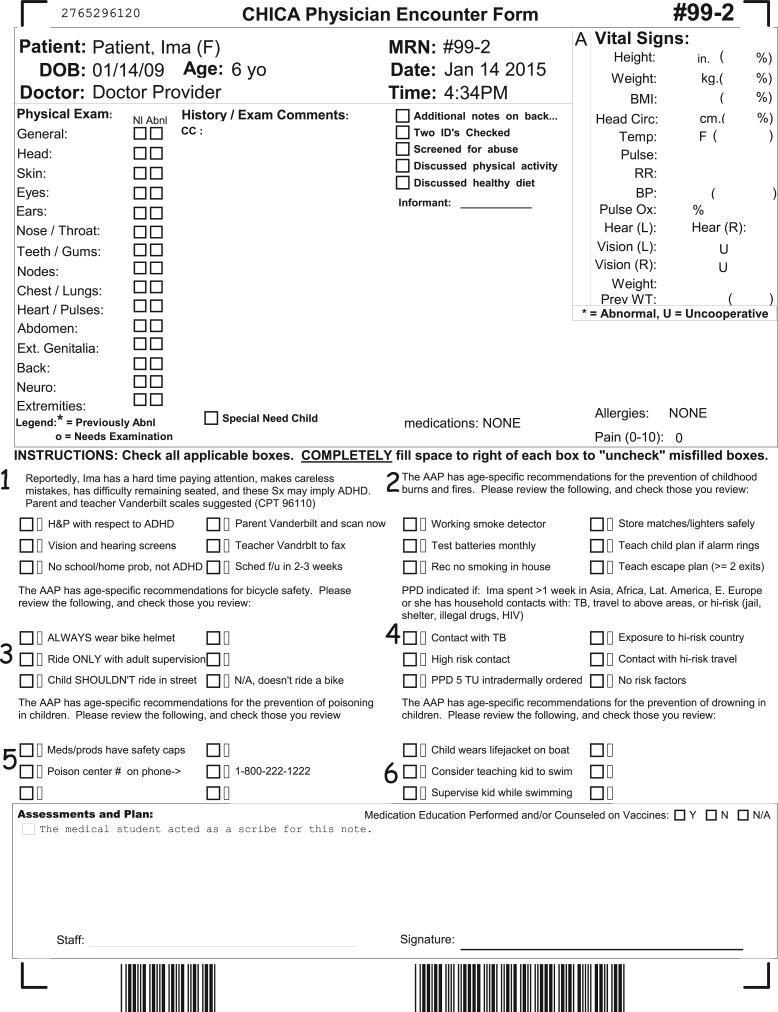

Once completed, information captured by the PSF is transmitted wirelessly to CHICA, and the collected data are integrated into the patient’s EHR. The scannable paper PWS is then generated for the clinician to use during the patient encounter. The PWS has spaces to record the patient’s medical history and notes from the physical examination. It also has six tailored prompts based on information collected from the PSF and information contained in the patient’s EHR. See Figure 1 for a sample PWS. Each prompt has up to six check boxes with which the clinician can document his or her response to each of the generated alerts. After the clinician completes the PWS, the form is scanned back into the system and the data are integrated with the information already in the patient’s EHR. CHICA also generates “just in time” (JIT) handouts, which are printed in English on one side and Spanish on the other, to supplement counseling for certain prompts or to collect additional information that can be scanned into the CHICA system. In the near future, the PWS and JITs in CHICA will transition to being completely paperless, so that clinicians can access them via their laptops, which they would bring with them into the clinic room.

Figure 1:

Sample physician worksheet (PWS) with prompt positions labeled.

Setting and Participants

Data from the PWS (whether a prompt was responded to or not) were extracted for all the patients seen at clinic sites using the updated CHICA 2.0 system from July 1, 2009 to January 29, 2013. During the study timeframe, CHICA had been implemented in five clinics in the Eskenazi Health System in Indianapolis, Indiana. This study was approved by the Institutional Review Board of the Indiana University School of Medicine.

Data Collection and Analysis

The main outcome of interest was whether or not a clinician responded to a prompt. A response was defined as the clinician checking one of the six available boxes for the prompt on the PWS. We then examined patient, prompt, and clinician characteristics to explore what factors were associated with clinicians’ responding to prompts. At the patient level, we examined the patient’s age, race/ethnicity (black, white, Hispanic, or unknown), insurance status (Medicaid, commercial, or self-pay), and preferred language (English or Spanish, based on which side of the PSF was completed by the caregiver). Insurance status and language preference often varied from visit to visit for a given patient; thus, these variables were treated as time-dependent covariates.

Clinician characteristics were also examined and included the gender of the clinician and length of time they had used CHICA (CHICA maturity). CHICA maturity was calculated as the date the prompt was printed minus the recorded date that the physician first used CHICA. This value ranged from 0-7 years. CHICA maturity was introduced in the model as a continuous covariate.
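As a minimal sketch of the calculation described above, maturity can be expressed as the elapsed time between two dates. The function name `chica_maturity_years`, the sample dates, and the 365.25-day year are illustrative assumptions, not details taken from the CHICA codebase.

```python
from datetime import date

DAYS_PER_YEAR = 365.25  # assumption: average year length, including leap years


def chica_maturity_years(prompt_printed: date, first_use: date) -> float:
    """Years between a clinician's first recorded CHICA use and the prompt print date."""
    if prompt_printed < first_use:
        raise ValueError("prompt cannot be printed before the clinician's first use")
    return (prompt_printed - first_use).days / DAYS_PER_YEAR


# Hypothetical clinician whose first use coincides with the start of the study window
# and whose prompt was printed on the last day of the window.
maturity = chica_maturity_years(date(2013, 1, 29), date(2009, 7, 1))
print(round(maturity, 2))
```

Because the value is computed per prompt, the same clinician contributes a different maturity to each prompt printed over time, which is consistent with treating it as a continuous covariate.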

Lastly, prompt-level characteristics that were examined included the position of the prompt on the PWS (first through sixth position) and the comfort rating of the prompt. To determine clinicians’ comfort level with each prompt, we asked a convenience sample of clinicians at a continuing medical education event to rate their comfort discussing the potential topics included in CHICA prompts on a 5-point, Likert-like rating scale (1 = completely uncomfortable, 3 = neither uncomfortable nor comfortable, 5 = completely comfortable). Sixteen general pediatricians, none of whom were CHICA users, completed the survey and provided topic ratings. The survey scores were averaged by topic, then categorized as either easy (≥4.0) or not easy (<4.0) to discuss with patients and their guardians.
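The averaging and dichotomization step can be sketched as follows. The function name and the example ratings are made up for illustration and are not the survey data; only the 4.0 cut point comes from the description above.

```python
from statistics import mean

EASY_CUTOFF = 4.0  # cut point stated in the text: a mean of 4.0 or higher is "easy"


def categorize_topics(ratings_by_topic: dict[str, list[int]]) -> dict[str, str]:
    """Average each topic's 1-5 ratings and label the topic using the 4.0 cut point."""
    labels = {}
    for topic, ratings in ratings_by_topic.items():
        labels[topic] = "easy" if mean(ratings) >= EASY_CUTOFF else "not easy"
    return labels


# Made-up ratings from four hypothetical raters; not the actual survey responses.
example_ratings = {
    "dental care": [5, 4, 5, 4],
    "maternal depression": [3, 4, 3, 4],
}
labels = categorize_topics(example_ratings)
print(labels)
```

Note that a topic whose mean falls exactly on 4.0 is labeled "easy" under this rule, so the label for borderline topics is sensitive to the choice of cut point.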

Univariate and bivariate statistics in relation to the primary outcome were examined. Each patient had multiple records corresponding to six prompts and multiple visits; therefore, to model the primary outcome, a repeated measure logistic regression model with generalized estimating equations (GEEs) was used, in which patients were considered nested within the clinic. First, univariate GEE models were fitted, one covariate at a time, to assess the unadjusted association of each covariate with clinician prompt response; covariates significant at P < .15 were then included in the multivariable GEE model. The odds ratios for age were computed per 5-year increment, for easier interpretation.
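For reference, the rescaling of the age odds ratio can be written out explicitly. The notation below is illustrative shorthand and assumes that age entered the model as a continuous covariate measured in years, with $Y_{ij}$ indicating a response to prompt $j$ for patient $i$; the remaining covariates are abbreviated by the ellipsis.

```latex
\operatorname{logit}\,\Pr(Y_{ij} = 1)
  = \beta_0 + \beta_{\text{age}} \cdot \text{age}_{ij} + \cdots,
\qquad
\mathrm{OR}_{\text{5-year}} = \exp\!\left(5\,\beta_{\text{age}}\right)
  = \left(\mathrm{OR}_{\text{1-year}}\right)^{5}.
```

Under that assumption, an odds ratio of 0.98 per 5-year increase corresponds to a per-year odds ratio of about $0.98^{1/5} \approx 0.996$, which is why the 5-year scale is easier to read.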

RESULTS

During the study timeframe, 80 clinicians used CHICA. Of the clinicians for whom gender data were available, 27 (63%) were female and 16 (37%) were male. The mean CHICA maturity was 1.1 years, with a standard deviation of 1.0 (range: 0–7 years). Approximately 54% of these clinicians worked full-time. Pediatricians made up the majority of the clinicians in the five clinics (77%); the remainder were physicians with a combined internal medicine-pediatrics specialty (6%) or physicians whose specialty was designated as “other,” which included those with Triple Board training or whose specialty was not reported (17%).

A total of 414 653 prompts from 22 260 pediatric patients were examined. Overall, clinicians responded to 45% of the prompts. Of the patients, 49% were female and 51% were male. Approximately half of the patients were African American (54%), a third were Hispanic (32%), and the rest were Caucasian (10%). The average patient age was 5 years old (standard deviation: 4.7 years; range: 0–20.9 years old).

The average comfort rating for all the prompt topics was 4.0, on a 5-point scale (range: 2.8–4.9). Based on our cut point of 4.0, 33 routine health topics were categorized as “easy” to discuss and 22 routine health topics were categorized as “not easy” to discuss. Examples of health topics rated as “easy” to discuss with patients and guardians included anemia, injury prevention, dental care, and identification of attention deficit hyperactivity disorder and developmental delays. Topics rated as “not easy” to discuss with patients and guardians included child abuse, maternal depression, autism spectrum disorders, health literacy, and intimate partner violence. See Table 1.

Table 1:

Patient, Prompt, and Clinician Characteristics and Clinician PWS Response

| Characteristics | Response: No, n (%) | Response: Yes, n (%) |
|---|---|---|
| Patient characteristic | | |
| Child gender | | |
| Male | 119 853 (55.3) | 96 731 (44.7) |
| Female | 109 396 (55.2) | 88 667 (44.8) |
| Child race | | |
| Black | 127 974 (60.8) | 82 558 (39.2) |
| Hispanic | 62 667 (43.9) | 79 978 (56.1) |
| White | 27 824 (63.2) | 16 173 (36.8) |
| Other/Unknown | 10 786 (61.7) | 6693 (38.3) |
| Preferred language | | |
| English | 184 894 (60.9) | 118 512 (39.1) |
| Spanish | 44 357 (39.9) | 66 890 (60.1) |
| Insurance status | | |
| Medicaid | 196 347 (55.6) | 157 058 (44.4) |
| Self-pay | 22 867 (51.7) | 21 362 (48.3) |
| Commercial | 10 037 (59.0) | 6982 (41.0) |
| Prompt characteristic per displayed prompt | | |
| Position on form | | |
| 1 | 35 179 (48.6) | 37 247 (51.4) |
| 2 | 35 776 (50.1) | 35 572 (49.9) |
| 3 | 38 120 (53.9) | 32 598 (46.1) |
| 4 | 39 810 (57.1) | 29 864 (42.9) |
| 5 | 40 886 (60.9) | 26 276 (39.1) |
| 6 | 39 480 (62.4) | 23 845 (37.6) |
| Comfort with topic content | | |
| Easy to discuss | 173 146 (53.4) | 151 274 (46.6) |
| Not easy to discuss | 56 105 (62.2) | 34 128 (37.8) |
| Clinician characteristic | | |
| Clinician gender | | |
| Male | 92 303 (48.5) | 97 990 (51.5) |
| Female | 129 770 (61.3) | 81 775 (38.7) |
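To make the position gradient in Table 1 concrete, the sketch below recomputes the response rate at each prompt position from the raw counts and derives a crude (unadjusted) odds ratio against the sixth position. The helper names are illustrative. The recomputed rates agree with the published percentages to within about a tenth of a percentage point, and the crude odds ratios are not expected to match the adjusted estimates from the multivariable model, which also accounts for patient and clinician covariates and for clustering.

```python
# (no response, response) counts per prompt position, copied from Table 1.
COUNTS = {
    1: (35179, 37247),
    2: (35776, 35572),
    3: (38120, 32598),
    4: (39810, 29864),
    5: (40886, 26276),
    6: (39480, 23845),
}


def response_rate(no: int, yes: int) -> float:
    """Share of displayed prompts that received a response."""
    return yes / (no + yes)


def odds(no: int, yes: int) -> float:
    """Odds of a response: responses divided by non-responses."""
    return yes / no


reference_odds = odds(*COUNTS[6])  # sixth position is the reference category
crude_or = {pos: odds(no, yes) / reference_odds for pos, (no, yes) in COUNTS.items()}

for pos, (no, yes) in COUNTS.items():
    print(f"position {pos}: rate={response_rate(no, yes):.1%}, crude OR vs 6th={crude_or[pos]:.2f}")
```

The example also shows why an odds ratio should not be read as a ratio of probabilities: the crude odds ratio for the first versus the sixth position is noticeably larger than the ratio of the two response rates.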

Results from the univariate GEE models indicated that all covariates, except patient gender (P = .9) and insurance status (P = .8), were significantly associated with whether a clinician responded to a prompt or not (P < .002) (data not shown). Clinicians were more likely to respond to prompts that involved topics rated as “easy” to discuss with patients and their guardians and less likely to respond to prompts that involved topics rated as “not easy” to discuss with patients and their guardians (P < .0001).

All the covariates that were significantly associated with the outcome in the univariate models were included in the multivariable models. Pediatricians were less likely to respond to prompts for older patients than those for younger patients (adjusted odds ratio [AOR]: 0.98; 95% confidence interval [CI], 0.96-0.99). Prompts were more likely to have a documented clinician response if the patient was Hispanic (AOR: 1.47; 95% CI, 1.35-1.59) but less likely if the patient was white (AOR: 0.89; 95% CI, 0.82-0.97). Clinicians were more likely to respond to prompts if the patient’s and guardian’s preferred language was Spanish than if their preferred language was English (AOR: 1.48; 95% CI, 1.41-1.55). The prompt-level characteristics examined were all significantly associated with clinician prompt response. Clinicians were more likely to respond to prompts located at the top of the PWS than those located at the bottom of the PWS (first prompt position AOR: 1.82; 95% CI, 1.78-1.86 vs fifth position AOR: 1.08; 95% CI, 1.06-1.10). Clinicians were more likely to respond to topics rated as “easy” to discuss compared with those rated as “not easy” (AOR: 1.47; 95% CI, 1.45-1.49). Both clinician-level characteristics (gender and CHICA maturity) were significantly associated with prompt response. The adjusted results of the multivariable models are presented in Table 2.

Table 2:

Multivariable GEE Models Examining Patient, Prompt, and Clinician Characteristics and Clinician Response to Prompts

| Characteristics | AOR (95% CI) |
|---|---|
| Patient characteristics | |
| Race (P < .0001) | |
| Black | 1.02 (0.95-1.11) |
| White | 0.89 (0.82-0.97) |
| Hispanic | 1.47 (1.35-1.59) |
| Other | Reference |
| Age (P = .0076) (for every 5-year increase) | 0.98 (0.96-0.99) |
| Preferred language (P < .0001) | |
| Spanish | 1.48 (1.41-1.55) |
| English | Reference |
| Prompt characteristics | |
| Position on form (P < .0001) | |
| First | 1.82 (1.78-1.86) |
| Second | 1.69 (1.66-1.73) |
| Third | 1.47 (1.44-1.50) |
| Fourth | 1.28 (1.25-1.30) |
| Fifth | 1.08 (1.06-1.10) |
| Sixth | Reference |
| Comfort rating of topic (P < .0001) | |
| Easy to discuss | 1.47 (1.45-1.49) |
| Not easy to discuss | Reference |
| Clinician characteristics | |
| Gender (P < .0001) | |
| Male | 1.71 (1.65-1.77) |
| Female | Reference |
| CHICA maturity (P < .0001) | 1.07 (1.05-1.08) |

AOR, adjusted odds ratio; CHICA, Child Health Improvement through Computer Automation; CI, confidence interval; GEE, generalized estimating equation.

DISCUSSION

Although computer-based clinical decision support can unquestionably improve clinical quality, this improvement is limited by the extent to which clinicians respond to CDSS prompts. Our experience, like those of others, has shown that clinician prompt response rates can be low.12,22,23 The present work demonstrates that a thoughtful analysis of which clinicians respond to which CDSS prompts regarding which patients can help us understand what factors influence clinicians’ responses to computer-generated alerts.

We found that characteristics of the patient, the prompt, and the clinician can all influence whether a clinician responds to a prompt. However, why each of these factors influences the likelihood of a clinician responding to a prompt deserves careful consideration. For example, physicians caring for children are less likely to respond to prompts as their patients grow older. This may suggest that patient care for younger children is more protocol-driven or that older children are more likely to present with other issues that distract the clinician from CDSS-generated reminders. Or, perhaps older children are more likely to see more experienced clinicians who rely less on CHICA. Another possibility is that the reminders for older children are less well-designed.

The findings that prompts were more likely to be answered during the visits of Hispanic children and of children and guardians who speak Spanish may be anomalous. These findings are likely confounded by the clinic setting. Two of the five clinics serve a majority of the Spanish-speaking patients who receive care. Of these two clinics, one serves almost entirely Spanish-speaking Latino immigrants. That clinic is also an effective patient-centered medical home and is especially engaged in the development and implementation of CHICA.24–26 Therefore, the association between responding to prompts and Spanish-speaking patients may be spurious. Alternatively, CHICA’s ability to assess guardians’ concerns in Spanish and prompt clinicians in English may make it especially valuable for this population.

The more actionable findings in this study relate to which characteristics of the prompts themselves increase the likelihood of clinicians responding to them. First, as we did in previous work,13 we found that the position of a prompt on the PWS page strongly influences the likelihood of a clinician responding to the prompt. There is a steady gradient of prompt response rate from the top left prompt on the PWS (Position #1) to the bottom right prompt on the PWS (Position #6), to the point that the odds of a response to the prompt in Position #1 are nearly twice those for the prompt in Position #6. This suggests that the prompts at the top of the PWS have greater salience and argues for the concept of alert fatigue coming into play.27–29 Presumably, clinicians start addressing prompts at the top of the page and run out of time, energy, or interest in completing the later prompts, perhaps becoming distracted by other pressing issues that come up during the patient encounter. One potential technical refinement to ensure that clinicians respond to all of the prompts might be to incorporate soft stops, once the PWS is no longer printed on paper and is available entirely in a web-based format.

One of the new findings in this study is that clinicians are more likely to respond to those prompts that an independent group of clinicians rated themselves as “comfortable” addressing with patients and their guardians. The fact that more of the psychosocial topics were rated as “not easy” to discuss is consistent with the findings of other studies that these types of health risks are more challenging for clinicians to address with patients and their families.30–33 It was not surprising that these topics are among those that are usually perceived as more sensitive, such as intimate partner violence and child abuse, or too complex to handle within a time-constrained primary care visit.34 In our previous study, we noted that more serious topics were more likely to be addressed.13 This was based on the priority score assigned to each prompt. In the present study, we examined clinicians’ comfort with the topic of each prompt, which is a different quality. Although this finding is, perhaps, not surprising, it points to the limitation of computerized prompting alone to affect clinician behavior. Prompts and reminders intended to promote a behavior that the physician is not comfortable with should, presumably, be accompanied by education and training that will lower the clinician’s threshold to take action. Otherwise, the prompt is unlikely to affect actual patient care.

The finding that certain types of prompts are more or less likely to be answered by clinicians suggests that alterations to the prioritization score should be considered. If a certain prompt is unlikely to elicit a response, it may be that the “effectiveness” term in the corresponding prioritization formula should be decreased, based on a decreased probability that the clinician will respond to the prompt.
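One way to picture this adjustment is as an extra multiplicative factor in an expected-value score. The sketch below is purely illustrative: the multiplicative form, the field names, and all numeric values are assumptions made for this example and are not the CHICA prioritization formula.

```python
from dataclasses import dataclass


@dataclass
class Prompt:
    topic: str
    probability: float    # assumed likelihood that the health risk is present
    seriousness: float    # assumed severity weight for the health risk
    effectiveness: float  # assumed benefit of intervening, if the clinician acts
    response_rate: float  # assumed share of prompts on this topic that get a response

    def priority(self) -> float:
        # Discount effectiveness by how often clinicians actually respond.
        return self.probability * self.seriousness * self.effectiveness * self.response_rate


# Two hypothetical prompts that differ only in how often clinicians respond to them.
prompts = [
    Prompt("hypothetical topic B", 0.10, 8.0, 0.5, 0.38),
    Prompt("hypothetical topic A", 0.10, 8.0, 0.5, 0.47),
]
ranked = sorted(prompts, key=Prompt.priority, reverse=True)
print([p.topic for p in ranked])
```

A design consideration with any such discount is that it could further deprioritize sensitive topics that clinicians already tend to skip, so it would likely need to be paired with the education and training discussed above.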

Finally, clinician characteristics can influence the likelihood of clinicians responding to prompts. For instance, we observed that male clinicians had 71% higher odds of responding to a given prompt than female clinicians. While we will resist speculating about gender stereotypes and differences between men and women in their affinity for technology,35 we will point out that our finding may be problematic considering that a growing majority of physicians entering the workforce are female.36,37 In fact, women represent about half of medical school graduates.38 If there are important design differences in the prompts to which male and female clinicians are likely to respond, it will be important to employ an adequate number of women in medical informatics to influence system designs. Larger studies, with more clinicians, would allow us to further investigate this unexpected, but interesting, finding.

It is reassuring to see that CHICA maturity, the length of time since the physician first used CHICA, was associated with a higher rate of response to prompts. This would logically be attributed to becoming used to, and facile with, the system. However, the effect is modest. Experience with a system, while helpful, therefore cannot compensate for a poorly designed system.

As with any research, limitations must be acknowledged. This is a retrospective examination of data collected by one CDSS within our institution. Moreover, our system presents alerts/prompts to clinicians all at once rather than one at a time. Additionally, because the PWS used by CHICA is still paper-based, the system differs from computer-based EHRs, which may use soft stops or red text as a way of alerting clinicians to prompts that need to be addressed. Therefore, our findings may not be generalizable to institutions that use different (computer-based) EHRs. However, we believe that considering clinicians’ comfort with prompt topics, the length of time clinicians have been exposed to the HIT application, and other patient and clinician characteristics will be of value to communities serving populations that are similar to ours.

Nonetheless, it is critical to undertake closer examinations of HIT applications after their implementation, to continually refine and improve upon the system. We undertook this study to continue to refine and improve upon the CHICA system. To ensure that any HIT application is used as intended, it is important to understand the needs of the population being served and the context in which the system will be implemented.

CONCLUSION

Understanding factors associated with clinician response or nonresponse to CDSS prompts highlights the ongoing need to critically examine HIT applications once they are implemented. This study identified several new factors associated with prompt response that point to additional directions for future CDSS refinements.

CONTRIBUTORS

N.B. and S.D. drafted the survey used to obtain topic comfort ratings from non-CHICA physicians and drafted and revised the paper. N.B. is the guarantor for the details contained in the paper. C.S. is responsible for the biostatistical analysis and worked with N.B. and S.D. on the interpretation of results. S.D. and A.C. wrote the CHICA rules, oversee continual CHICA implementation in the clinics, and work closely with the technical team. A.C. and C.S. helped to revise the draft paper.

COMPETING INTERESTS

None.

FUNDING

Funding for the ongoing development of CHICA comes from the National Library of Medicine (1R01 LM010923) and the Agency for Healthcare Research and Quality (1R01 HS020640, 1R01 HS022681, 1R01 HS017939).

ACKNOWLEDGEMENTS

The authors wish to acknowledge the technical expertise and efforts of the individual members of the Child Health Informatics and Research Development Lab (CHIRDL) team, which provides programming and technical support for CHICA; the Pediatric Research Network (PResNet) at Indiana University, for administering the CHICA satisfaction survey at the pediatric clinics that have implemented CHICA; and the clinic personnel, who constantly help us evaluate and improve CHICA.

REFERENCES

- 1. Belamarich PF, Gandica R, Stein RE, et al. Drowning in a sea of advice: pediatricians and American Academy of Pediatrics policy statements. Pediatrics. 2006;118(4):e964–e978.

- 2. Anand V, Biondich PG, Liu G, et al. Child health improvement through computer automation: the CHICA system. Stud Health Technol Inform. 2004;107(Pt 1):187–191.

- 3. Bauer NS, Sturm LA, Carroll AE, et al. Computer decision support to improve autism screening and care in community pediatric clinics. Infants Young Children. 2013;26(4):306–317.

- 4. Carroll AE, Bauer NS, Dugan TM, et al. Use of a computerized decision aid for ADHD diagnosis: a randomized controlled trial. Pediatrics. 2013;132(3):e623–e629.

- 5. Carroll AE, Bauer NS, Dugan TM, et al. Use of a computerized decision aid for developmental surveillance and screening: a randomized clinical trial. JAMA Pediatr. 2014;168(9):815–821.

- 6. Carroll AE, Biondich P, Anand V, et al. A randomized controlled trial of screening for maternal depression with a clinical decision support system. JAMIA. 2013;20(2):311–316.

- 7. Carroll AE, Biondich PG, Anand V, et al. Targeted screening for pediatric conditions with the CHICA system. JAMIA. 2011;18(4):485–490.

- 8. Downs SM, Zhu V, Anand V, et al. The CHICA smoking cessation system. AMIA Annu Symp Proc. 2008:166–170.

- 9. Anand V, Carroll AE, Downs SM. Automated primary care screening in pediatric waiting rooms. Pediatrics. 2012;129(5):e1275–e1281.

- 10. Leu MG, O'Connor KG, Marshall R, et al. Pediatricians' use of health information technology: a national survey. Pediatrics. 2012;130(6):e1441–e1446.

- 11. Bauer NS, Carroll AE, Downs SM. Understanding the acceptability of a computer decision support system in pediatric primary care. JAMIA. 2013.

- 12. Downs SM, Anand V, Dugan TM, et al. You can lead a horse to water: physicians' responses to clinical reminders. AMIA Annu Symp Proc. 2010;2010:167–171.

- 13. Carroll AE, Anand V, Downs SM. Understanding why clinicians answer or ignore clinical decision support prompts. Appl Clin Inform. 2012;3:309–317.

- 14. Hendrix KS, Downs SM, Carroll AE. Pediatricians' responses to printed clinical reminders: does highlighting prompts improve responsiveness? Acad Pediatr. 2015;15(2):158–164.

- 15. Anand V, Biondich PG, Liu G, et al. Child health improvement through computer automation: the CHICA system. Stud Health Technol Inform. 2004;107(Pt 1):187–191.

- 16. Biondich PG, Anand V, Downs SM, et al. Using adaptive turnaround documents to electronically acquire structured data in clinical settings. AMIA Annu Symp Proc. 2003:86–90.

- 17. Biondich PG, Downs SM, Anand V, et al. Automating the recognition and prioritization of needed preventive services: early results from the CHICA system. AMIA Annu Symp Proc. 2005:51–55.

- 18. Downs SM, Biondich PG, Anand V, et al. Using Arden Syntax and adaptive turnaround documents to evaluate clinical guidelines. AMIA Annu Symp Proc. 2006:214–218.

- 19. Downs SM, Carroll AE, Anand V, et al. Human and system errors, using adaptive turnaround documents to capture data in a busy practice. AMIA Annu Symp Proc. 2005:211–215.

- 20. Downs SM, Uner H. Expected value prioritization of prompts and reminders. Proceedings/AMIA Annu Symp. 2002:215–219.

- 21. Anand V, McKee SJ, Dugan TM, et al. Leveraging Electronic Tablets for General Pediatric Care: A Pilot Study. Appl Clin Inform. 2015;6(1):15.

- 22. El-Kareh RE, Gandhi TK, Poon EG, et al. Actionable reminders did not improve performance over passive reminders for overdue tests in the primary care setting. JAMIA. 2011;18(2):160–163.

- 23. McDonald CJ. Protocol-based computer reminders, the quality of care and the non-perfectability of man. N Engl J Med. 1976;295(24):1351–1355.

- 24. The medical home. Pediatrics. 2002;110(1 Pt 1):184–186.

- 25. Guerrero AD, Rodriguez MA, Flores G. Disparities in provider elicitation of parents' developmental concerns for US children. Pediatrics. 2011;128(5):901–909.

- 26. Guerrero AD, Garro N, Chang JT, et al. An update on assessing development in the pediatric office: has anything changed after two policy statements? Acad Pediatr. 2010;10(6):400–404.

- 27. Russ AL, Zillich AJ, McManus MS, et al. Prescribers' interactions with medication alerts at the point of prescribing: A multi-method, in situ investigation of the human-computer interaction. Int J Med Inform. 2012;81(4):232–243.

- 28. Isaac T, Weissman JS, Davis RB, et al. Overrides of medication alerts in ambulatory care. Arch Int Med. 2009;169(3):305–311.

- 29. Embi PJ, Leonard AC. Evaluating alert fatigue over time to EHR-based clinical trial alerts: findings from a randomized controlled study. JAMIA. 2012;19(e1):e145–e148.

- 30. Fleegler EW, Lieu TA, Wise PH, et al. Families' health-related social problems and missed referral opportunities. Pediatrics. 2007;119(6):e1332–1341.

- 31. Fremont WP, Nastasi R, Newman N, et al. Comfort level of pediatricians and family medicine physicians diagnosing and treating child and adolescent psychiatric disorders. Int J Psychiatry Med. 2008;38(2):153–168.

- 32. Galuska DA, Fulton JE, Powell KE, et al. Pediatrician counseling about preventive health topics: results from the Physicians' Practices Survey, 1998-1999. Pediatrics. 2002;109(5):E83.

- 33. Gardner W, Kelleher KJ, Wasserman R, et al. Primary care treatment of pediatric psychosocial problems: a study from pediatric research in office settings and ambulatory sentinel practice network. Pediatrics. 2000;106(4):E44.

- 34. Gabrielsen TP, Farley M, Spear L, et al. Identifying autism in a brief observation. Pediatrics. 2015;135(2).

- 35. Balka E, ed. Gender and skill in human computer interaction. CHI ‘96 Conference Companion on Human Factors in Computing Systems. Vancouver, British Columbia, Canada: ACM; 1996, pp. 93–94.

- 36. Pan RJ, Cull WL, Brotherton SE. Pediatric residents' career intentions: data from the leading edge of the pediatrician workforce. Pediatrics. 2002;109(2):182–188.

- 37. Cull WL, Mulvey HJ, O'Connor KG, et al. Pediatricians working part-time: past, present, and future. Pediatrics. 2002;109(6):1015–1020.

- 38. Roskovensky LB, Grbic D, Matthew D. The Changing Gender Composition of US Medical School Applicants and Matriculants. Washington DC: Association of American Medical Colleges; 2012.