Abstract

The weighted histogram analysis method (WHAM) for free energy calculations is a valuable tool for producing free energy differences with minimal errors. Given multiple simulations, WHAM obtains from the distribution overlaps the optimal statistical estimator of the density of states, from which the free energy differences can be computed. The WHAM equations are often solved by an iterative procedure. In this work, we use a well-known linear algebra algorithm which allows for more rapid convergence to the solution. We find that the computational complexity of the iterative solution to WHAM and the closely-related multiple Bennett acceptance ratio (MBAR) method can be improved by using the method of direct inversion in the iterative subspace. We give examples from a lattice model, a simple liquid and an aqueous protein solution.

Keywords: free energy, WHAM, MBAR, DIIS

1. Introduction

An important problem in computational physics and chemistry is to obtain the best estimate of a quantity of interest from a given set of data[1]. For free energy calculations, the multiple histogram method[2–5] or its generalisation, the weighted histogram analysis method (WHAM)[1, 6–17], is an effective tool for addressing such a problem. WHAM is statistically efficient in using the data acquired from molecular simulations, and it has become a standard free energy analysis tool, particularly popular for enhanced-sampling simulations, such as umbrella sampling[18, 19], and simulated[20, 21] and parallel[22–26] tempering.

Given several distributions collected at different thermodynamic states, WHAM obtains the optimal estimate of the free energies of the states. This problem arises in the calculation of potentials of mean force and a variety of free energy difference methods. The central idea of WHAM is to find an optimal estimate of the density of states, or the unbiased distribution, which then allows the free energies to be evaluated as weighted integrals. The optimal density of states is computed from a weighted average of the reweighted energy histograms (hence the name of the method) from different distribution realisations or trajectories. The weights, however, depend on the free energies, so that the free energies and the density of states must be determined self-consistently.

WHAM can be reformulated[1, 17] in the limit of zero histogram bin width as an extension of the Bennett acceptance ratio (BAR) method[27]. This form, adopted by the multistate BAR (MBAR) method[1], avoids the histogram dependency[1, 6, 12].

The straightforward implementation of WHAM or MBAR, in which the equations regarding the free energies are solved by direct iteration, can suffer from slow convergence in the later stages[14, 16, 28]. Several remedies have been proposed[1, 14, 16, 28, 29]. For example, one may use the Newton-Raphson method, which involves a Hessian-like matrix, although the approach sometimes can be unstable[1]. Other more advanced techniques include the trust region and Broyden-Fletcher-Goldfarb-Shanno (BFGS) methods[16].

An elegant non-iterative alternative is the statistical-temperature WHAM (ST-WHAM)[28, 29], which determines the density of states through its logarithmic derivative, or the statistical temperature. In this way, the method estimates the density of states non-iteratively with minimal approximation. ST-WHAM can be regarded as a refinement of the more approximate umbrella integration method (UIM)[30, 31]. However, the extension to multidimensional ensembles, e.g., the isothermal-isobaric ensemble, can be numerically challenging[28].

Here, we discuss a numerical improvement of the implementation of WHAM and MBAR using the method of direct inversion in the iterative subspace (DIIS)[32–36]. DIIS shares characteristics with other optimisation techniques which use a limited (non-spanning) basis of vectors which produce the most gain towards the optimum. Although still iterative in nature, this implementation can often improve the rate of convergence significantly in difficult cases.

2. Method

2.1. WHAM

WHAM is a method of estimating the free energies of multiple thermodynamic states with different parameters, such as temperatures, pressures, etc. Below, we shall first review WHAM in the particular case of a temperature scan, since it permits simpler mathematics without much abstraction. Generalisations to umbrella sampling and other ensembles are discussed afterwards.

Consider K temperatures, labelled by β = 1/(kBT), as β1, …, βK. Suppose we have performed the respective canonical (NVT) ensemble simulations at the K temperatures, and we wish to estimate the free energies at those temperatures.

In WHAM, we first estimate the density of states, g(E) = ∫ δ(ℰ(x) − E) dx, as the number density of configurations, x, with energy E, from

$$g(E) = \frac{\sum_{k=1}^{K} n_k(E)}{\sum_{k=1}^{K} N_k \exp(-\beta_k E + f_k)}, \qquad (1)$$

where nk(E) is the unnormalised energy distribution observed from trajectory k, which is usually estimated from the energy histogram as the number of independent trajectory frames whose energies fall in the interval (E − ΔE/2, E + ΔE/2) divided by ΔE (we shall omit “independent” below for simplicity); thus, nk(E)/Nk is the normalised distribution with Nk being the total number of trajectory frames from simulation k; and finally,

$$f_k \equiv -\log Z_k = -\log \int g(E) \exp(-\beta_k E) \, dE, \qquad (2)$$

with fk and Zk being the dimensionless free energy and partition function, respectively.

To understand Eq. (1), we first observe from the definition the single histogram estimate

$$g(E) \approx \frac{n_k(E)}{N_k \exp(-\beta_k E + f_k)} = \frac{n_k(E)}{d_k(E)}, \qquad (3)$$

where dk(E) ≡ Nk exp(−βkE + fk). That is, the observed distribution, nk(E)/Nk, should roughly match the exact one, g(E) wk(E), where wk(E) ≡ exp(−βkE + fk) is the normalised weight of the canonical ensemble. The values of Eq. (3) from different k can be combined to improve the precision, and Eq. (1) is the optimal combination[4, 5, 7, 12, 13]. To see this, recall that in an optimal combination, the relative weight is inversely proportional to the variance. Assuming a Poisson distribution so that var(nk ΔE) ≈ 〈nk〉 ΔE, we have var(nk/dk) ≈ 〈nk〉/(dk² ΔE) = g/(dk ΔE) ∝ 1/dk (here, 〈⋯〉 means an ensemble average). Averaging the values from Eq. (3) using (1/dk)⁻¹ = dk as the relative weight yields Eq. (1).¹ Since for a fixed E, dk(E) is proportional to 〈nk(E)〉, several variants of WHAM may be derived by using nk(E) in place of dk(E) as the relative weight for g(E)[37] or related quantities[7, 28–31, 38, 39].

From Eqs. (1) and (2), we find that fi satisfies

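$$f_i = -\log \int \frac{\sum_{k=1}^{K} n_k(E) \exp(-\beta_i E)}{\sum_{k=1}^{K} N_k \exp(-\beta_k E + f_k)} \, dE \equiv -\log \mathcal{Z}_i(\mathbf{f}), \qquad (4)$$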

where 𝒵i(f) denotes the integral on the right-hand side as a function of f = (f1, …, fK). Once all fi and g(E) are determined, the free energy at a temperature not simulated, β, can be found from Eq. (2) by substituting β for βk.

Histogram-free form

The histogram dependency of WHAM [in using nk(E)] can be avoided by noticing from definition that[12]

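$$n_k(E) = \sum_{x}^{(k)} \delta\big(\mathcal{E}(x) - E\big), \qquad (5)$$

where ℰ(x) is the energy function, and Σx^(k) denotes a sum over trajectory frames of simulation k. Using Eq. (5) in Eq. (4) yields the histogram-free, or the MBAR, form[1, 6, 12, 17]:

$$f_i = -\log \sum_{j=1}^{K} \sum_{x}^{(j)} \frac{q_i(x)}{\sum_{k=1}^{K} N_k \, q_k(x) \exp(f_k)}, \qquad (6)$$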

where qi(x) ≡ exp[−βi ℰ(x)]. The K = 2 case is the BAR result[27], and Eq. (6) also holds for a general setting, which permits, e.g., a nonlinear parameter dependence (see Appendix A for derivation). In this sense, MBAR is not only the zero-bin-width limit of WHAM[1, 17], but also a generalisation[1]. As we shall see, the structural similarity of Eqs. (4) and (6) allows our acceleration technique to be applicable to both cases. Since both Eqs. (4) and (6) are invariant under fi → fi + c for all i and an arbitrary c, fi are determined only up to a constant shift.
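As a rough illustration of the histogram-free form, the self-consistent equations, f_i = −log Σ_x q_i(x) / Σ_k N_k q_k(x) exp(f_k), can be iterated directly on the pooled frame energies. The following is a minimal NumPy sketch for a temperature scan, not the implementation used in this work; the function names `log_sum_exp` and `mbar_direct`, the stopping rule, and the convention of fixing the first free energy at zero are illustrative assumptions.

```python
import numpy as np

def log_sum_exp(a, axis):
    """Numerically stable log(sum(exp(a))) along one axis."""
    amax = np.max(a, axis=axis, keepdims=True)
    return np.squeeze(amax, axis=axis) + np.log(np.sum(np.exp(a - amax), axis=axis))

def mbar_direct(betas, energies, n_frames, tol=1e-10, max_iter=100000):
    """Iterate the histogram-free equations for a temperature scan.

    betas    : (K,) inverse temperatures
    energies : (N_tot,) energies of all frames, pooled over the K simulations
    n_frames : (K,) number of frames contributed by each simulation
    Returns (f, n_iter) with f[0] fixed at zero.
    """
    betas = np.asarray(betas, dtype=float)
    energies = np.asarray(energies, dtype=float)
    log_n = np.log(np.asarray(n_frames, dtype=float))
    log_q = -np.outer(betas, energies)      # log q_i(x) = -beta_i E(x), shape (K, N_tot)
    f = np.zeros(len(betas))
    for n_iter in range(1, max_iter + 1):
        # log of the denominator, sum_k N_k q_k(x) exp(f_k), one value per frame
        log_den = log_sum_exp(log_q + (log_n + f)[:, None], axis=0)
        f_new = -log_sum_exp(log_q - log_den[None, :], axis=1)
        f_new -= f_new[0]                   # remove the arbitrary constant shift
        converged = np.max(np.abs(f_new - f)) < tol
        f = f_new
        if converged:
            break
    return f, n_iter
```

Working with logarithms throughout avoids overflow when β E is large; the cost per iteration scales as K times the total number of frames, which is why MBAR is slower than the histogram form for long trajectories.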

Extensions to umbrella sampling

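We briefly mention a few extensions. First, for a general Hamiltonian with a linear bias,

$$\mathcal{H}(x; \lambda_i) = \mathcal{H}_0(x) + \lambda_i \, \mathcal{W}(x),$$

such that qi(x) = exp [−ℋ(x; λi)], we can show, by inserting 1 = ∫ δ(𝒲(x) − W) dW into Eq. (6), that

$$f_i = -\log \int \frac{\sum_{k=1}^{K} n_k(W) \exp(-\lambda_i W)}{\sum_{k=1}^{K} N_k \exp(-\lambda_k W + f_k)} \, dW,$$

where nk(W) is understood to be the unnormalised distribution of the bias 𝒲(x). Equation (4) is the special case of ℋ0(x) = 0, 𝒲(x) = ℰ(x), and λi = βi. Another common example is a system under a quadratic restraint (umbrella) for some reaction coordinate ξ ≡ ξ(x). In this case, ℋ(x; λi) = ℋ0(x) + ½ βA(ξ − λi)², in which the term ½ βAξ² can be absorbed into ℋ0(x), and 𝒲(x) = −βAξ. The configuration-independent term, ½ βAλi², can be added back to fi after the analysis.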

Extensions to other ensembles

Further, λi and W can be generalised to vectors as λi and W, respectively. For example, for simulations on multiple isothermal-isobaric (NpT) ensembles with different temperatures and pressures, we set λi = (βi, βipi) and W = (E, V) with pi and V being the pressure and volume, respectively. However, if the vector dimension is high, or if the Hamiltonian ℋ(x; λi) depends nonlinearly on λi, the histogram-free form, Eq. (6), is more convenient[1]. Besides, the factor exp(−βi E) can be replaced by a non-Boltzmann (e.g., the multicanonical[40–42], Tsallis[43], microcanonical[44, 45]) weight for multiple non-canonical simulations[28].

Solution by iteration

Numerically, the fi are most often determined by treating Eq. (4) as an iterative equation,

$$f_i^{(\mathrm{new})} = -\log \mathcal{Z}_i\big(\mathbf{f}^{(\mathrm{old})}\big).$$

However, this approach, referred to as direct WHAM below, can take thousands of iterations to finish[14, 16, 28] (cf. Appendix B). In Secs. 2.3 and 2.4, we give a numerical technique to accelerate the solution of Eq. (4) or (6).
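The direct iteration can be sketched in a few lines for the temperature-scan case. The code below is an illustrative NumPy version under stated assumptions, not the authors' program: histograms are passed as raw counts (the bin width cancels between the histogram and the integral), the function name `wham_direct` and its arguments are hypothetical, and the first free energy is pinned at zero to remove the arbitrary shift.

```python
import numpy as np

def wham_direct(betas, counts, e_centres, tol=1e-10, max_iter=100000):
    """Direct iteration f_i <- -log Z_i(f) for a temperature scan.

    betas     : (K,) inverse temperatures
    counts    : (K, B) histogram counts; row k holds n_k(E) * dE for simulation k
    e_centres : (B,) bin centres
    Returns (f, log_g, n_iter); log_g is log[g(E) dE] on the non-empty bins,
    evaluated with the free energies of the last iteration.
    """
    betas = np.asarray(betas, dtype=float)
    counts = np.asarray(counts, dtype=float)
    e_centres = np.asarray(e_centres, dtype=float)
    tot = counts.sum(axis=0)
    keep = tot > 0                          # empty bins contribute nothing
    log_tot = np.log(tot[keep])             # log sum_k n_k(E) dE
    log_n = np.log(counts.sum(axis=1))      # log N_k
    mbe = -np.outer(betas, e_centres[keep]) # -beta_k * E, shape (K, B')
    f = np.zeros(len(betas))
    for n_iter in range(1, max_iter + 1):
        # density of states: log g = log sum_k n_k - log sum_k N_k exp(-beta_k E + f_k)
        a = mbe + (log_n + f)[:, None]
        amax = a.max(axis=0)
        log_g = log_tot - (amax + np.log(np.exp(a - amax).sum(axis=0)))
        # free energies: f_i = -log sum_E g(E) dE exp(-beta_i E)
        b = mbe + log_g[None, :]
        bmax = b.max(axis=1)
        f_new = -(bmax + np.log(np.exp(b - bmax[:, None]).sum(axis=1)))
        f_new -= f_new[0]                   # remove the arbitrary constant shift
        converged = np.max(np.abs(f_new - f)) < tol
        f = f_new
        if converged:
            break
    return f, log_g, n_iter
```

For well-overlapping distributions this loop stops after tens of iterations; the slow cases discussed in the text arise when neighbouring distributions overlap poorly.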

2.2. ST-WHAM and UIM

For comparison, we briefly discuss two non-iterative alternatives, ST-WHAM[28, 29] and UIM[30, 31]. By taking the logarithmic derivative of the denominator of Eq. (1), we get

$$\frac{d}{dE} \log \sum_{k} d_k(E) = -\frac{\sum_{k} \beta_k \, d_k(E)}{\sum_{k} d_k(E)} \approx -\frac{\sum_{k} \beta_k \, n_k(E)}{\sum_{k} n_k(E)},$$

where we have used Eq. (3) to make the final expression independent of dk(E), hence fk. So

$$\log g(E) = \log \sum_{k} n_k(E) + \int^{E} \frac{\sum_{k} \beta_k \, n_k(E')}{\sum_{k} n_k(E')} \, dE' + \mathrm{const}.$$

This is the ST-WHAM result. In evaluating the integral, we may encounter an empty bin with Σk nk(E) = 0, which leaves the integrand indeterminate. An expedient fix is to let the integrand borrow the value from the nearest non-empty bin [note, however, setting the integrand to zero would cause a larger error in g(E)]. ST-WHAM is most convenient in one dimension, and its results usually differ only slightly from those of WHAM[28]. In UIM[30, 31], the distribution nk(E) is further approximated as a Gaussian. We note that ST-WHAM is difficult when we have more thermodynamic variables in the ensemble of interest. Below we show that WHAM with the DIIS method can readily handle such a case for the Lennard-Jones (LJ) fluid.
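A non-iterative estimate of this kind can be sketched on a uniform energy grid as follows. The code assumes the ST-WHAM relation in the form log g(E) = log Σ_k n_k(E) + ∫ [Σ_k β_k n_k / Σ_k n_k] dE' (up to a constant), uses a trapezoidal running integral, and lets empty bins borrow the integrand of the nearest non-empty bin; the function name `st_wham` and the choice of quadrature are illustrative assumptions rather than the reference implementation.

```python
import numpy as np

def st_wham(betas, counts, e_centres):
    """Non-iterative ST-WHAM estimate on a uniform energy grid.

    betas     : (K,) inverse temperatures
    counts    : (K, B) histogram counts of the K simulations
    e_centres : (B,) uniformly spaced bin centres
    Returns (f, filled, log_g): free energies with f[0] = 0, indices of the
    non-empty bins, and log g(E) (up to a constant) on those bins.
    """
    betas = np.asarray(betas, dtype=float)
    counts = np.asarray(counts, dtype=float)
    e = np.asarray(e_centres, dtype=float)
    de = e[1] - e[0]
    tot = counts.sum(axis=0)
    filled = np.flatnonzero(tot > 0)         # needs at least two non-empty bins
    # integrand sum_k beta_k n_k / sum_k n_k on the non-empty bins
    beta_bar = (betas @ counts[:, filled]) / tot[filled]
    # every bin looks up its nearest non-empty bin (itself, if non-empty)
    bins = np.arange(len(e))
    pos = np.clip(np.searchsorted(filled, bins), 1, len(filled) - 1)
    left, right = filled[pos - 1], filled[pos]
    nearest = np.where(bins - left <= right - bins, pos - 1, pos)
    integrand = beta_bar[nearest]
    # trapezoidal running integral starting from the first bin
    steps = 0.5 * (integrand[1:] + integrand[:-1]) * de
    integral = np.concatenate(([0.0], np.cumsum(steps)))
    log_g = np.log(tot[filled]) + integral[filled]
    # f_i = -log sum_E g(E) exp(-beta_i E), shifted so that f[0] = 0
    b = log_g[None, :] - np.outer(betas, e[filled])
    bmax = b.max(axis=1)
    f = -(bmax + np.log(np.exp(b - bmax[:, None]).sum(axis=1)))
    return f - f[0], filled, log_g
```

Because no self-consistent loop is involved, the cost is a single pass over the histogram bins; the price is that the running integral has no straightforward analogue on a multidimensional grid.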

2.3. DIIS

DIIS is a technique useful for solving equations[32–36], and we use it to solve Eq. (4) here. A schematic illustration is shown in Fig. 1. We first represent an approximate solution by a trial vector, f = (f1, …, fK), which is, in our case, the vector of the dimensionless free energies. The target equations can be written as

$$R_i(\mathbf{f}) = 0, \quad i = 1, \dots, K, \qquad (7)$$

where Ri(f) is −log 𝒵i (f) − fi in our case. The left-hand side of Eq. (7) also forms a K-dimensional vector, R = (R1, …, RK), which is referred to as the residual vector. The magnitude ‖R‖ represents the error, and R(f) should optimally point in a direction that reduces the error of f.

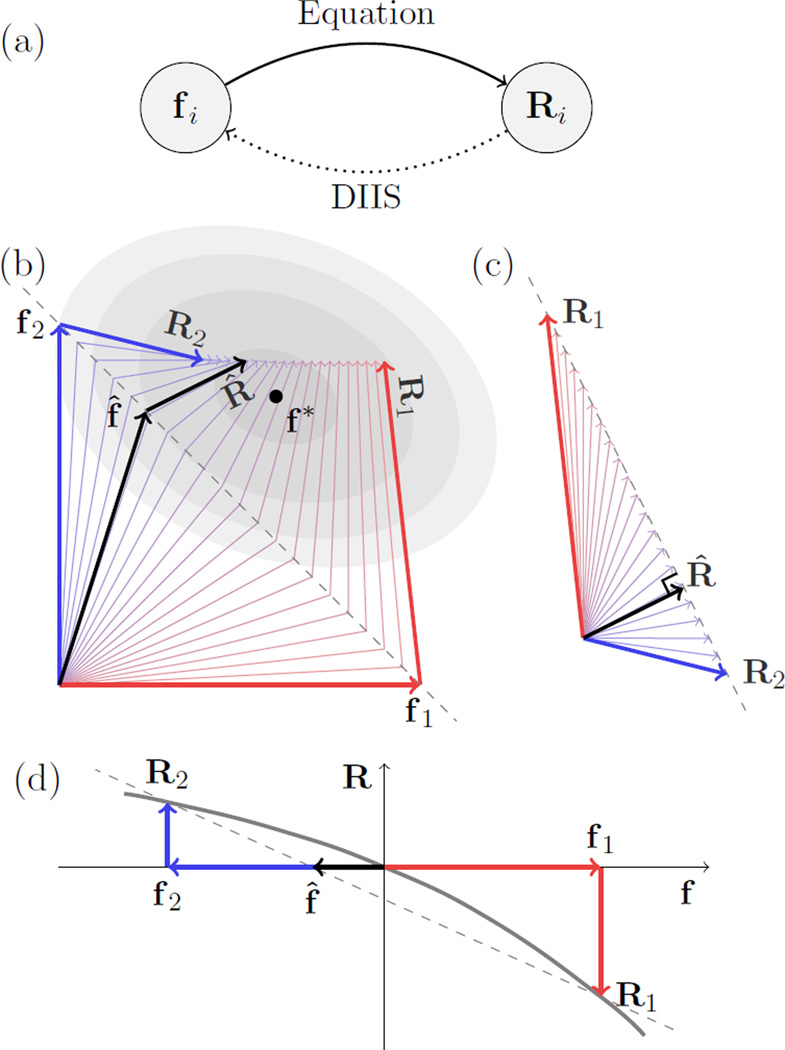

Figure 1.

Schematic illustrations of the method of direct inversion in the iterative subspace (DIIS). (a) As an iterative method, DIIS solves the equation, R(f) = 0, by a feedback loop. When the equation is applied to a trial solution, represented by vector fi, we get a residual vector Ri ≡ R(fi), whose magnitude indicates the error. Ideally, if fi were the true solution, Ri would be zero. In the feedback step, we correct fi using Ri. The task of DIIS is to construct from a few previous vectors accumulated during the iteration an optimal trial vector f̂ with hopefully the smallest error ‖R̂‖ to help the next round of iteration. (b) and (c) Given a basis of M (two here) trial vectors, DIIS seeks the combination R̂ = Σi ci Ri that minimises the magnitude ‖R̂‖ under the constraint Σi ci = 1 [panel (c)]. The corresponding combination of the trial vectors, f̂ = Σi ci fi, is expected to be close to the true solution, f*. Then, we construct the new trial vector as f(n) = f̂ + R̂ and use it to update the basis for the next round of iteration. (d) If the vectors are one-dimensional (i.e., scalars), it is possible to find a vanishing R̂, and DIIS is equivalent to the secant method.

If Eq. (7) is solved by direct iteration, f is replaced by f + R(f) in each time. This can be a slow process because the residual vector R does not always have the proper direction and/or magnitude to bring f close to the true solution f*. The magnitude of R, however, can be used as a reliable measure of the error of f. Thus, in DIIS, we try to find a vector f̂ with minimal error ‖R(f̂)‖, which would be more suitable for direct iteration.

Suppose now we have a basis of M trial vectors f1, … fM (where M can be much less than K), and the residual vectors are R1, … RM [where Rj ≡ R(fj) for j = 1, …, M], respectively. We wish to construct a vector f̂ with minimal error from a linear combination of the trial vectors.

To do so, we first find the combination of the residual vectors, R̂ = Σi ci Ri, that minimises the error ‖R̂‖ under the constraint

$$\sum_{i=1}^{M} c_i = 1. \qquad (8)$$

Mathematically, this means that we solve for the ci simultaneously from Eq. (8) and

$$\sum_{j=1}^{M} (\mathbf{R}_i \cdot \mathbf{R}_j) \, c_j = \lambda, \qquad (9)$$

for all i, with λ being an unknown Lagrange multiplier that is to be determined along with the ci. Now the corresponding combination of the trial vectors, f̂ = Σi ci fi, should be close to the desired minimal-error vector. This is because around the true solution f*, Eq. (7) should be nearly linear; so the residual vector

$$\mathbf{R}(\hat{\mathbf{f}}) = \mathbf{R}\Big(\sum_{i=1}^{M} c_i \mathbf{f}_i\Big) \approx \sum_{i=1}^{M} c_i \mathbf{R}(\mathbf{f}_i) = \hat{\mathbf{R}}$$

has the minimal magnitude. In other words, f̂, among all linear combinations of {fi}, is the closest to the true solution, under the linear approximation. Thus, an iteration based on f̂ should be efficient.
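The constrained minimisation amounts to a small linear system: the stationarity conditions Σ_j (R_i · R_j) c_j = λ for every i, together with the constraint Σ_i c_i = 1. A minimal sketch of that solve is given below; the function name `diis_coefficients` and the use of a least-squares solver (to tolerate a nearly singular overlap matrix) are assumptions made for illustration.

```python
import numpy as np

def diis_coefficients(residuals):
    """Solve for the DIIS combination coefficients.

    residuals : (M, K) array whose row j is the residual vector R_j = R(f_j)
    Returns (c, lam): the M coefficients, which sum to one, and the
    Lagrange multiplier.
    """
    R = np.asarray(residuals, dtype=float)
    M = R.shape[0]
    A = np.zeros((M + 1, M + 1))
    A[:M, :M] = R @ R.T      # overlap matrix R_i . R_j
    A[:M, M] = -1.0          # moves lambda to the left-hand side
    A[M, :M] = 1.0           # constraint sum_i c_i = 1
    b = np.zeros(M + 1)
    b[M] = 1.0
    sol = np.linalg.lstsq(A, b, rcond=None)[0]
    return sol[:M], sol[M]
```

For two orthogonal unit residuals the minimum-norm combination weights them equally, c = (1/2, 1/2); for two scalar residuals of opposite sign the coefficients make the combined residual vanish.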

With M = K + 1 independent bases, one can show that it is possible to find a combination with zero R̂, which means that f̂ would be the true solution if the equations were linear. A particularly instructive case is that of two vectors (M = 2) in one dimension (K = 1).² We then recover the secant method[46], as shown in Fig. 1(d). The number of bases, M, however, should not exceed K + 1 (or, in our case, K because of the arbitrary shift constant of fi) to keep Eqs. (9) independent, although this restriction may be relaxed by using certain numerical techniques[46].
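The reduction to the secant method can be verified in two lines. The worked equations below are a sketch for the scalar case with two trial values f_1 and f_2 and residuals R_1 and R_2 (assumed distinct), using only the normalisation of the coefficients and the requirement that the combined residual vanish.

```latex
\begin{aligned}
c_1 + c_2 &= 1, \qquad c_1 R_1 + c_2 R_2 = 0
\;\Longrightarrow\;
c_1 = \frac{R_2}{R_2 - R_1}, \quad c_2 = -\frac{R_1}{R_2 - R_1}, \\
\hat{f} &= c_1 f_1 + c_2 f_2
 = \frac{f_1 R_2 - f_2 R_1}{R_2 - R_1}
 = f_2 - R_2 \, \frac{f_2 - f_1}{R_2 - R_1}.
\end{aligned}
```

The last expression is the secant update for the root of R(f) through the points (f_1, R_1) and (f_2, R_2).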

We now construct a new trial vector f(n) as

$$\mathbf{f}^{(n)} = \hat{\mathbf{f}} + \alpha \hat{\mathbf{R}},$$

where the factor α is 1.0 in this study (although a smaller value is recommended for other applications[35, 36]). The new vector f(n) is used to update the basis as shown next.

2.4. Basis updating

In each iteration of DIIS, the basis is updated by the new trial vector f(n) from the above step. Initially, the basis contains a single vector. As we add more vectors into the basis in subsequent iterations, some old vectors may be removed to maintain a convenient and efficient maximal size of M.

A simple updating scheme[35] is to treat the basis as a queue: we add f(n) to the basis, if the latter contains fewer than M vectors, or substitute f(n) for the earliest vector in the basis. If, however, f(n) produces an error greater than Kr times the error of fmin, the least erroneous vector in the basis, we rebuild the basis from fmin. Here, the error of a vector f is defined as ‖R(f)‖, and Kr = 10.0 is recommended[35].

We used the following modification in this study. First, we find the most erroneous vector, fmax, from the basis. If the new vector, f(n), produces an error less than that of fmax, we add f(n) into the basis or, if the basis is full, substitute f(n) for fmax. Otherwise, we remove fmax from the basis, and if this empties the basis, we rebuild the basis from f(n).

Since the DIIS process is reduced to the direct iteration if M = 1, the method is effective only if multiple basis vectors are used.
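Putting the pieces together, the DIIS loop with the modified basis update described above can be sketched as follows. This is an illustrative outline under stated assumptions, not the authors' code: `residual` is any user-supplied function returning R(f), the new trial vector is taken as f̂ + α R̂, and the names `diis_coefficients` and `diis_solve` are hypothetical. Setting M = 1 reproduces direct iteration.

```python
import numpy as np

def diis_coefficients(residuals):
    """Minimise |sum_i c_i R_i| subject to sum_i c_i = 1."""
    R = np.asarray(residuals, dtype=float)
    M = R.shape[0]
    A = np.zeros((M + 1, M + 1))
    A[:M, :M] = R @ R.T
    A[:M, M] = -1.0
    A[M, :M] = 1.0
    b = np.zeros(M + 1)
    b[M] = 1.0
    return np.linalg.lstsq(A, b, rcond=None)[0][:M]

def diis_solve(residual, f0, M=3, alpha=1.0, tol=1e-10, max_iter=10000):
    """Solve R(f) = 0; returns (f, number of DIIS iterations)."""
    fs = [np.asarray(f0, dtype=float)]
    Rs = [residual(fs[0])]
    for n_iter in range(max_iter):
        errs = [np.linalg.norm(R) for R in Rs]
        best = int(np.argmin(errs))
        if errs[best] < tol:
            return fs[best], n_iter
        c = diis_coefficients(np.array(Rs))
        f_hat = c @ np.array(fs)            # combined trial vector
        R_hat = c @ np.array(Rs)            # combined residual
        f_new = f_hat + alpha * R_hat
        R_new = residual(f_new)
        worst = int(np.argmax(errs))        # most erroneous basis vector
        if np.linalg.norm(R_new) < errs[worst]:
            if len(fs) < M:                 # room left: extend the basis
                fs.append(f_new)
                Rs.append(R_new)
            else:                           # full: replace the worst vector
                fs[worst], Rs[worst] = f_new, R_new
        else:                               # no gain: drop the worst vector
            del fs[worst], Rs[worst]
            if not fs:                      # emptied: rebuild from f_new
                fs, Rs = [f_new], [R_new]
    best = int(np.argmin([np.linalg.norm(R) for R in Rs]))
    return fs[best], max_iter
```

On a linear test problem such as R(f) = b − B f with B = diag(0.05, 0.8), direct iteration (M = 1) contracts the slow component by only 5% per step, whereas a basis of M = 3 vectors can cancel the residual as soon as the basis is full.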

3. Results

We tested DIIS WHAM and MBAR on three systems: the Ising model, the LJ fluid, and the villin headpiece (a small protein) in aqueous solution (see Secs. 3.2, 3.3, and 3.4, respectively, for details). We tuned the parameters such that direct WHAM and MBAR would take thousands of iterations to finish.

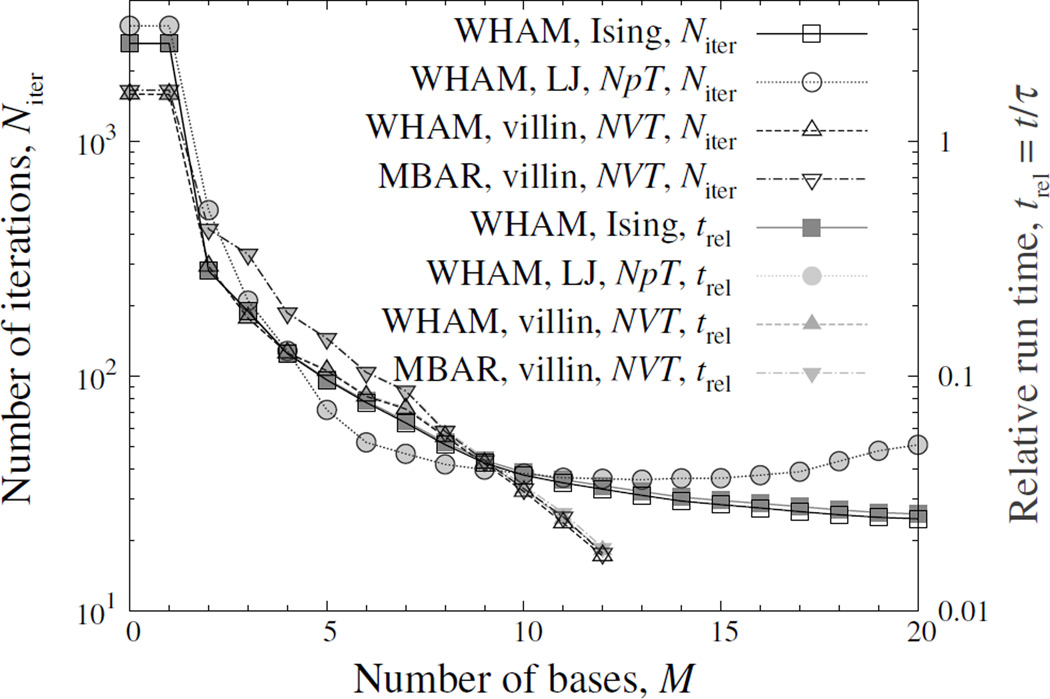

The main results are summarised in Fig. 2, from which one can see that DIIS can speed up WHAM and MBAR dramatically in these cases. The real run time roughly matched the number of iterations, suggesting a negligible overhead for using DIIS. This is unsurprising, for it is often much more expensive to compute the right-hand side of Eq. (4) or (6).

Figure 2.

Number of iterations and run time versus the number of bases, M, in DIIS. The four test cases are (1) WHAM on the Ising model, (2) WHAM on the LJ fluid in the NpT ensemble, (3) WHAM on the mini-protein villin headpiece in the NVT ensemble (with the bin width of energy histograms being 1.0), and (4) MBAR on the same protein system. The M = 0 points represent direct WHAM. The run times are inversely scaled by a factor τ for better alignment with the numbers of iterations. The scaling factors τ for cases 1–4 are 12.5, 1.87 × 10³, 14.3, and 260 seconds, respectively. The results were averaged over independent samples for the Ising model and LJ fluid. For the villin headpiece, the results were averaged over bootstrap[4, 15, 47] samples for WHAM, or over random subsamples with about 1% of the trajectory frames for MBAR. To mimic the correlation in the trajectories, each data point in the bootstrap sample is either randomly drawn from the trajectory, or the same as the previous one (if any) with probability exp(−ΔtMD/tact), where ΔtMD and tact are the time step of molecular dynamics and the autocorrelation time of the potential energy, respectively. Here, the error tolerance max{|Ri|} is 10⁻⁸. The lines are a guide for the eyes.

3.1. Set-up

For simplicity, we assumed equal autocorrelation times from different temperatures (and pressures). The approximation should not affect the convergence behaviour of the methods.

In testing WHAM and MBAR, the initial free energies were obtained from the single histogram method:

$$\Delta f_i = \log \big\langle \exp(\Delta\beta_i \, E) \big\rangle_{i+1},$$

where ΔAi ≡ Ai+1 − Ai, for any quantity A, and 〈…〉i+1 denotes an average over trajectory i + 1. Then, fi = Σj<i Δfj (taking f1 = 0). Iterations are continued until all |Ri| are reduced below a certain value.
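An initial guess of this kind can be sketched as below, assuming the single histogram estimate takes the free energy perturbation form Δf_i = log 〈exp(Δβ_i E)〉 averaged over trajectory i + 1, which follows from f = −log Z for a temperature scan. The function name `initial_free_energies` and the convention that the first free energy is zero are illustrative assumptions.

```python
import numpy as np

def initial_free_energies(betas, energy_lists):
    """Chain single-histogram estimates between neighbouring temperatures.

    betas        : (K,) inverse temperatures
    energy_lists : sequence of K arrays; entry k holds the frame energies
                   of simulation k
    Returns the (K,) initial guess with f[0] = 0.
    """
    betas = np.asarray(betas, dtype=float)
    f = np.zeros(len(betas))
    for i in range(len(betas) - 1):
        dbeta = betas[i + 1] - betas[i]
        a = dbeta * np.asarray(energy_lists[i + 1], dtype=float)
        amax = a.max()
        # Delta f_i = log < exp(dbeta * E) >_{i+1}, evaluated stably
        df = amax + np.log(np.mean(np.exp(a - amax)))
        f[i + 1] = f[i] + df
    return f
```

The estimate degrades quickly when neighbouring distributions overlap poorly, since the exponential average is then dominated by a few frames; it serves only as a starting point for the iteration.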

For comparison, we also computed fi from three approximate formulae. The first is[16, 48]

| (10) |

where Āi ≡ (Ai+1 + Ai)/2. The second is an improvement by the Euler-Maclaurin expansion[49–52]:

| (11) |

where 〈δE²〉k ≡ 〈(E − 〈E〉k)²〉k for k = i and i + 1. The third formula is derived from the same expansion but using E instead of β as the independent variable (after integration by parts, ∫ E dβ = E β − ∫ β dE):

| (12) |

3.2. Ising model

The first system is a 64 × 64 Ising model. We used parallel tempering[22–26] Monte Carlo (MC) for eighty temperatures: T = 1.5, 1.52, …, 3.08.

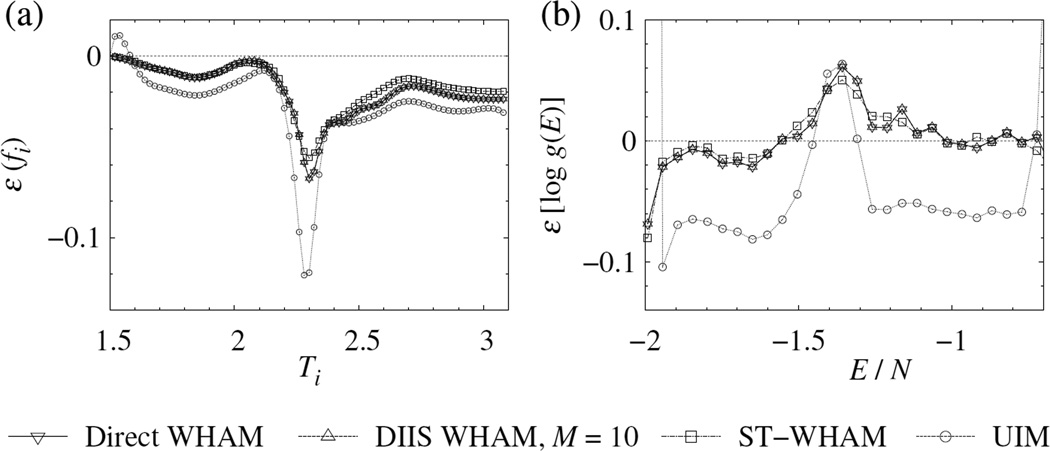

To study the accuracy, we generated a large sample with 10⁹ single-site MC steps for each temperature. Figure 3 shows that DIIS and direct WHAMs produced identical dimensionless free energies. The differences between the ST-WHAM and WHAM results were subtle, whereas the approximate UIM produced more deviation in the results, especially around the critical region.

Figure 3.

Errors of (a) the dimensionless free energies, fi, and (b) the logarithmic density of states, log g(E), for the N = 64 × 64 two-dimensional Ising model (plotted with an energy spacing of 200). Here, ε(a) ≡ a − aref, and the reference values for fi and g(E) were computed using the methods in Refs. [53] and [54], respectively. The bin size of the energy histograms is Δ E = 4. Lines are a guide for the eyes.

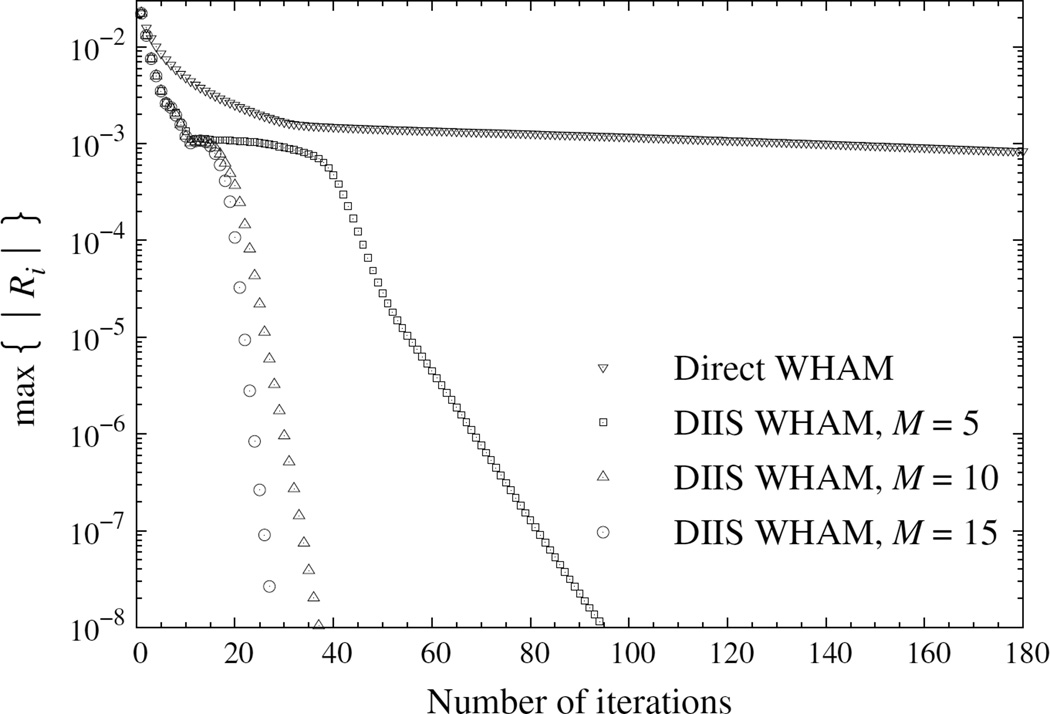

To study the rate of convergence, we generated independent samples with 10⁷ MC steps at each temperature. Figure 4 shows a faster decay of the error in DIIS WHAM than in direct WHAM.

Figure 4.

Convergence error, max{|Ri|}, versus the number of iterations in direct and DIIS WHAMs for the 64 × 64 two-dimensional Ising model. Results were geometrically averaged over independent samples.

3.3. LJ fluid

We tested the DIIS method on the 256-particle LJ fluid in the isothermal-isobaric (NpT) ensemble. This is a case for the two-dimensional (p and T) WHAM, which can be difficult for ST-WHAM[28]. The potential interaction between particles was cut off at half the box size. We simulated the system using parallel tempering MC. We considered the system at NT × Np = 6 × 3 conditions, with temperatures T = 1.2, 1.3, …, 1.7, and pressures p = 0.1, 0.15, 0.2. The bin sizes for energy and volume were 1.0 and 2.0, respectively.

As shown in Fig. 2, DIIS WHAM effectively reduced the run time, although the efficiency of DIIS does not always increase with the number of basis set members, M.

3.4. Villin headpiece

We tested the methods on a small protein, the villin headpiece (PDB ID: 1VII), in aqueous solution. This is a well-known test system[55]. The protein was immersed in a dodecahedron box with 1898 TIP3P water molecules and two chloride ions. The force field was AMBER99SB[56, 57] with the side-chain modifications[58]. Molecular dynamics (MD) simulations were performed using GROMACS 5.0[59–65], with a time step of 2 fs. Velocity rescaling[66] was used as the thermostat with the time constant being 0.1 ps. The electrostatic interactions were handled by the particle mesh Ewald method[67]. The constraints were handled by the LINCS method[68] for hydrogen-related chemical bonds on the protein and by the SETTLE method[69] for water molecules.

We simulated the system at 12 temperatures T = 300 K, 310 K, …, 410 K, each for approximately 200 ns. The energy distributions were properly overlapped, as shown in Fig. 5(e). The energies of individual trajectory frames were saved every 0.1 ps, so that there were about 2 million frames for analysis at each temperature.

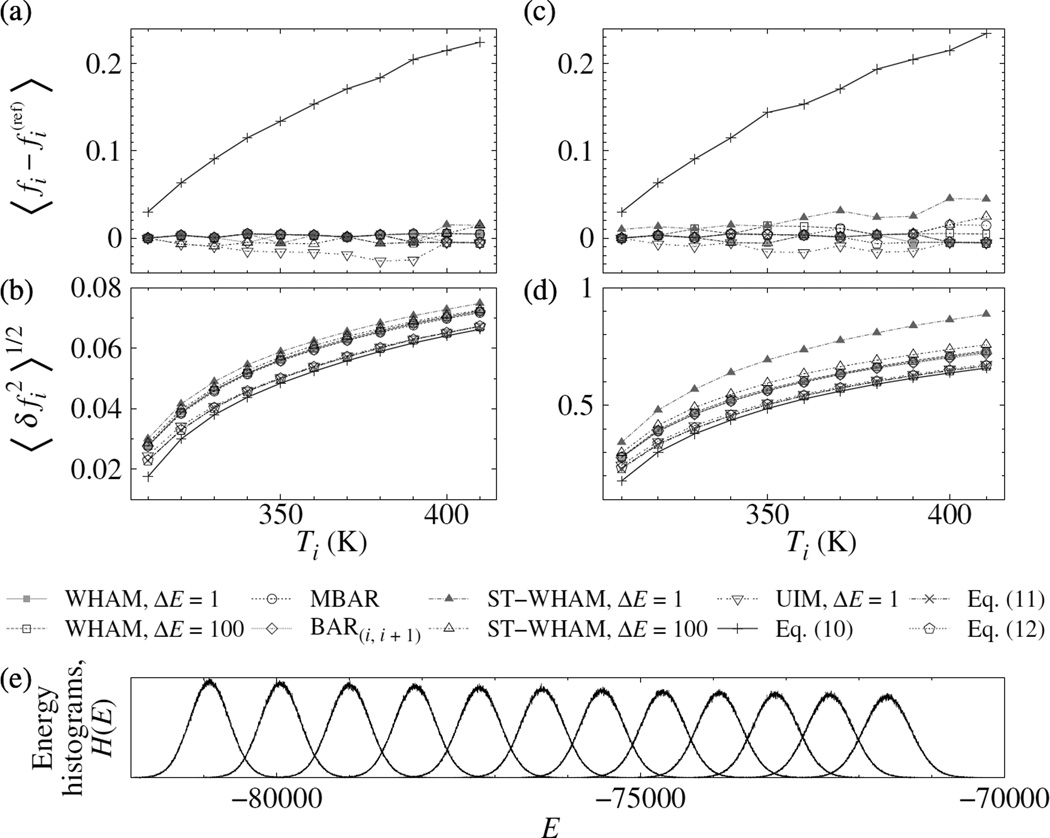

Figure 5.

Accuracy [(a) and (c)] and precision [(b) and (d)] of the dimensionless free energies, fi, from WHAM, BAR, ST-WHAM, UIM, and approximate formulae for the villin headpiece in solution. Two types of samples were used. A large sample [(a) and (b)] and a small sample [(c) and (d)] contain roughly 1% and 0.01% of all trajectory frames, respectively. The results were averaged over random samples. The MBAR results computed from all trajectory frames were used as the reference. fi at T = 300 K is fixed at zero. The lines are a guide for the eyes. (e) Energy histograms collected with bin width ΔE = 1.0.

As shown in Fig. 2, direct WHAM suffered from slow convergence, while the DIIS methodology again delivered a speed-up of two orders of magnitude, in the number of iterations or in real time. The MBAR case was similar, although MBAR was slower than WHAM as it did not use histograms to aggregate data.

To compare the errors of the methods, we prepared two types of samples of different sizes. A larger sample was randomly selected from roughly r = 1% of all trajectory frames from every temperature. In a smaller one, r was reduced to 0.01%. The reference values of fi were computed from all trajectory frames using MBAR, which is the zero-bin-width limit of WHAM[1, 17]. In terms of accuracy [Figs. 5(a) and 5(c)], WHAM, MBAR, BAR, ST-WHAM, Eqs. (11) and (12) were comparably good; UIM was slightly inferior for the larger sample; Eq. (10) was the worst. The accuracy was largely independent of the sample size. In terms of precision [Figs. 5(b) and 5(d)], the differences were small. Generally, WHAM was insensitive to the bin size, whereas ST-WHAM was slightly affected by a small bin size for the smaller samples.

4. Conclusions

In this work, we showed that the DIIS technique can often significantly accelerate WHAM and MBAR in producing free energy differences. The technique achieves rapid convergence by an optimal combination of the approximate solutions obtained during iteration. DIIS does not require computing the Hessian-like matrix, −∂R(f)/∂f, and is numerically stable with minimal run time overhead. Compared to other advanced techniques[1, 16], DIIS is relatively simple and easy to implement. However, methods based on Hessian matrices may further accelerate the solution process in the final stages. Other related free energy methods[7, 37–39] may also benefit from this technique.

There are some non-iterative alternatives to WHAM, although they may be less general and/or accurate in some aspect. The use of DIIS makes scanning more than one thermodynamic state variable or a nonlinear variable computationally convenient. This was demonstrated here in the NpT LJ fluid case. Problems with only one thermodynamic variable to scan, such as temperature, are amenable to the non-iterative ST-WHAM, or the more approximate UIM.

Acknowledgments

It is a pleasure to thank Dr. Y. Zhao, J. A. Drake, Dr. M. R. Shirts, Dr. M. W. Deem, Dr. J. Ma, Dr. J. Perkyns and Dr. G. Lynch for helpful discussions.

Funding

The authors acknowledge partial financial support from the National Science Foundation (CHE-1152876), the National Institutes of Health (GM037657), and the Robert A. Welch Foundation (H-0037). Computer time on the Lonestar supercomputer at the Texas Advanced Computing Center at the University of Texas at Austin is gratefully acknowledged.

Appendix A

Probabilistic derivations of Eq. (6)

Here we give some derivations of Eq. (6). First, we show that Eq. (6) is a generalisation of Eq. (4) from the energy space to the configuration space. We follow the probabilistic argument[8–11, 16, 17] for simplicity. We assume that the system is subject to an unknown underlying configuration-space field, g(y), such that the distribution of state k is wk(y) ≡ g(y) qk(y)/Zk[g], with

$$Z_k[g] \equiv \int g(y) \, q_k(y) \, dy. \qquad (A1)$$

We now seek the most probable g(y) from the observed trajectory. Given a certain g(y), the probability of observing the trajectories, {x}, is given by

$$p(\{x\}|g) = \prod_{k=1}^{K} \prod_{x}^{(k)} w_k(x) = \prod_{k=1}^{K} \prod_{x}^{(k)} \frac{g(x) \, q_k(x)}{Z_k[g]}.$$

This is also the likelihood of g given the observed trajectory, {x}. Thus, to find the most probable g(y), we only need to maximise log p ({x}|g) by taking the functional derivative with respect to g(y) and setting it to zero, which yields

$$g(y) = \frac{\sum_{k=1}^{K} \sum_{x}^{(k)} \delta(x - y)}{\sum_{k=1}^{K} N_k \, q_k(y) / Z_k[g]}, \qquad (A2)$$

where we have used δ log g(x)/δg(y) = δ(x − y)/g(y), and δZk[g]/δg(y) = qk(y).³ Using Eq. (A2) in Eq. (A1), and then setting g to 1.0 yields Eq. (6).

We can also show Eq. (6) without introducing the configuration-space field [thus, g(x) = 1 below]. Instead, we now assume that each trajectory frame x is free to choose the state i according to the Bayes' rule[70, 71]: since the prior probability of state i is Ni/Ntot, and the conditional probability of observing x in state i is wi(x) ≡ qi(x)/Zi, the posterior probability of x being in state i is given by[11]

Summing this over all trajectory frames x (no matter the original state j) yields the expected population of state i,

| (A3) |

where . If we demand N̂i to be equal to the Ni, Eq. (6) is recovered. Alternatively, by following the argument used in the expectation-maximisation algorithm[11, 71–74], we can view Eq. (A3) as the result of maximising the probability of observing the trajectory {x},

with respect to variations of Ni under the constraint .

Appendix B

Models for the convergence of direct WHAM

Here, we use simple models to study the convergence of direct WHAM. We shall show that slow convergence is associated with a wide temperature range, especially with a large spacing.

B.1. Linearised WHAM equation

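Consider K distributions, ρi(E), at different temperatures, βi (i = 1, …, K), normalised as ∫ ρi(E) dE = 1. For simplicity, we assume equal sample sizes, Ni. Then, Eq. (4) can be written in the iterative form as

$$f_i^{(\mathrm{new})} = -\log \int \frac{\sum_{k=1}^{K} \rho_k(E) \exp(-\beta_i E)}{\sum_{k=1}^{K} \exp\big(-\beta_k E + f_k^{(\mathrm{old})}\big)} \, dE. \qquad (B1)$$

Around the true solution, f*, the equation can be linearised as

$$\delta f_i^{(\mathrm{new})} = \sum_{j=1}^{K} A_{ij} \, \delta f_j^{(\mathrm{old})},$$

where δfi ≡ fi − fi*, and

$$A_{ij} = \int \frac{\big[\sum_{k} \rho_k(E)\big] \, w_i^*(E) \, w_j^*(E)}{\big[\sum_{k} w_k^*(E)\big]^2} \, dE, \qquad (B2)$$

with wi*(E) ≡ exp(−βi E + fi*). In matrix form, we have δf(new) = A δf(old).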

The elements of matrix A are positive, i.e., Aij > 0, and normalised, i.e.,

$$\sum_{j=1}^{K} A_{ij} = 1. \qquad (B3)$$

The latter can be seen from Eq. (B1) with f(new) = f(old) = f*. Besides, A is symmetric:

$$A_{ij} = A_{ji}. \qquad (B4)$$

Thus, A can be regarded as a transition matrix[4]. Each left eigenvector c = (c1, …, cK) of A is associated with a mode of δf and the eigenvalue λ gives the rate of error reduction during iteration. That is, Σi ci δfi decays as λⁿ asymptotically with the number of iterations, n.

The largest eigenvalue is λ0 = 1.0, and its eigenvector c = (1, …, 1) corresponds to a uniform shift of all δfi, which is unaffected by the iteration, i.e., , as a consequence of Eq. (B3). The next largest eigenvalue, λ1, determines the rate of convergence of the slowest mode, and a larger value of 1 − λ1 means faster convergence. Below, we determine λ1 in a few solvable cases.

B.2. Exact distribution approximation (EDA)

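To proceed, we assume that the observed distributions are exact, and thus the solution, f*, is also exact. Then, for any k, we have

$$\rho_k(E) = g(E) \exp(-\beta_k E + f_k^*), \qquad (B5)$$

with g(E) being the density of states. This simplifies Eq. (B2) as

$$A_{ij} = \int \frac{\rho_i(E) \, \rho_j(E)}{\sum_{k=1}^{K} \rho_k(E)} \, dE. \qquad (B6)$$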

B.3. Gaussian density of states

Further, we approximate g(E) as a Gaussian[14]. With a proper choice of the multiplicative constant of g(E), we have,

| (B7) |

It follows

| (B8) |

and

Thus, the distribution at any temperature is a Gaussian of the same width . The inverse temperature βi affects the energy distributions only as a linear shift to the distribution centre. It follows that both the origin of β and that of E, represented by Ec, can be set to any values that help calculation, without affecting the value of Aij.

B.4. Two-temperature case

For the two-temperature case, the matrix A has only one free variable because of Eqs. (B3) and (B4), and it can be written as

$$A = \begin{pmatrix} 1 - A_{12} & A_{12} \\ A_{12} & 1 - A_{12} \end{pmatrix}.$$

Thus, the second largest eigenvalue is λ1 = 1 − 2 A12.

Under EDA, we have, from Eqs. (B5) and (B6),

$$A_{12} = \int \frac{\rho_1(E) \, \rho_2(E)}{\rho_1(E) + \rho_2(E)} \, dE \le \frac{1}{2},$$

where the equality is achieved only for identical distributions. Geometrically, A12 represents the degree of overlap of the distributions, as shown in Fig. B1(a), and it decreases with the separation of the two distributions, from the maximal value, 1/2, achieved at β1 = β2.
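The overlap picture can be checked numerically. The sketch below assumes that, under EDA, the matrix elements take the overlap form A_ij = ∫ ρ_i ρ_j / Σ_k ρ_k dE, and that, for a Gaussian density of states, each ρ_i is a Gaussian of common width σ_E centred at E_c − β_i σ_E²; the function names, grid size, and integration range are illustrative choices.

```python
import numpy as np

def transition_matrix(betas, sigma_e=1.0, e_c=0.0, n_grid=20001, half_width=12.0):
    """A_ij = int rho_i rho_j / sum_k rho_k dE for equal-width Gaussians."""
    betas = np.asarray(betas, dtype=float)
    centres = e_c - betas * sigma_e**2          # distribution centres
    e = np.linspace(centres.min() - half_width * sigma_e,
                    centres.max() + half_width * sigma_e, n_grid)
    de = e[1] - e[0]
    z = (e[None, :] - centres[:, None]) / sigma_e
    rho = np.exp(-0.5 * z**2) / (np.sqrt(2.0 * np.pi) * sigma_e)
    total = rho.sum(axis=0)                     # sum_k rho_k(E), positive on the grid
    return (rho / total) @ rho.T * de           # Riemann sum over the energy grid

def convergence_gap(betas, **kwargs):
    """1 - lambda_1, with lambda_1 the second largest eigenvalue of A."""
    A = transition_matrix(betas, **kwargs)
    lam = np.sort(np.linalg.eigvalsh(0.5 * (A + A.T)))
    return 1.0 - lam[-2]
```

For two temperatures, `convergence_gap` should agree with 2 A12, and it shrinks as the two inverse temperatures move apart, which is the slowdown of direct WHAM described in the text.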

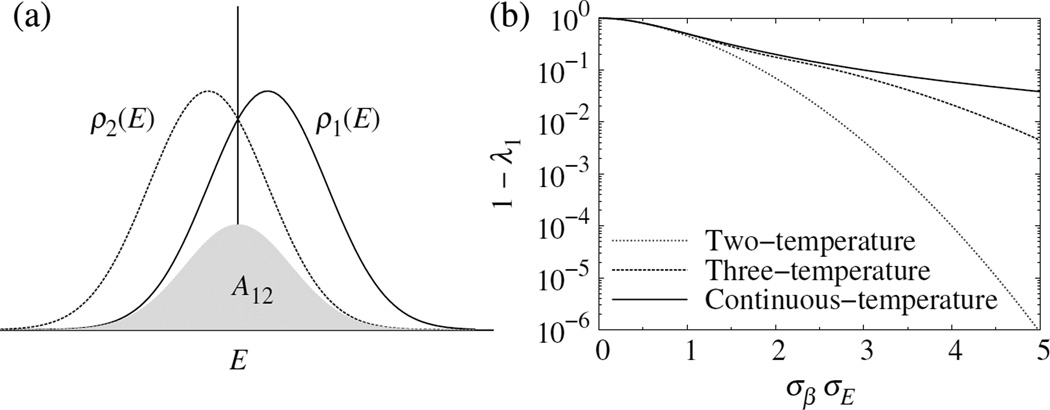

Figure B1. (a) A12, which determines the rate of convergence in the two-temperature case, as a measure of overlap of the two energy distributions. (b) Comparison of the rates of convergence in the two-, three-, and continuous-temperature cases, Eqs. (B9), (B10), and (B12), respectively. Here, σβ is the standard deviation of the β distribution; σE is that of the energy distribution at any temperature. A larger value of 1 − λ1 means faster convergence.

By further assuming a Gaussian density of states, we can, without loss of generality, set β1 = − σβ, β2 = σβ, and Ec = 0. Then, from Eqs. (B6), (B7) and (B8), we have

| (B9) |

where , and we have used 1/cosh x ≈ exp(−x²/2)(1 + x⁴/12). This model shows a rapid decrease in the rate of convergence of direct WHAM with the temperature separation.

B.5. Three-temperature case

Similarly, for three evenly-spaced temperatures, we can, without loss of generality, set β1 = −Δβ, β2 = 0, and β3 = Δ β, with . Then,4

| (B10) |

where

B.6. Continuous-temperature case

If there are a large number K of temperatures in a finite range, we can approximate them by a continuous distribution, w(β). In this case, the sum over temperatures can be replaced by an integral: .

The eigenvalue λl and eigenvector cl(β) are now determined from the integral equation

| (B11) |

where A(β, β′) is Aij with βi → β and βj → β′.

Equation (B11) can be solved in a special case, in which we assume EDA, Eq. (B6), a Gaussian density of states, Eq. (B7), and a Gaussian β distribution:

with width . The physical solution is

where Hl(x) is the Hermite polynomial[49–51], generated as . Thus, for the second largest eigenvector, we have

| (B12) |

which decreases with increasing temperature range, σβ, albeit more slowly than the two- and three-temperature values, as shown in Fig. B1(b). Thus, Eq. (B12) only gives an upper bound, and nonzero spacing between temperatures can further slow down the convergence. Besides, sampling error, which is ignored in the above calculation, may also slow down the convergence.

Footnotes

We can also view Eq. (1) as the result of applying Eq. (3) to the composite ensemble of the K canonical ensembles[13], using the following substitutions: , and . The last expression is the normalised ensemble weight in the composite ensemble. Note that the composite ensemble is closely related to the expanded ensemble that underlies the simulated tempering method[20, 21]. However, in the latter case, one can show that the Nk in Eq. (1) should be replaced by the average, 〈Nk〉, and the combination 〈Nk〉/Zk is proportional to the weight exp(ηk) in the acceptance probability of temperature transitions, A(βk → βk′) = min{1, exp[ηk′ − ηk − (βk′ − βk)E]}. This variant is thus more convenient as it depends only on the known weight, ηk, instead of on the unknown fk.

This is the case for solving the BAR equation: because the fi are determined only up to a constant shift, the virtual dimension is 1.

We can also show Eq. (A2) by considering the composite ensemble of the K states. The number of visits to a phase-space element at y is given by the product of the total sample size, , the distribution of the composite ensemble , and the volume dy. This gives , and it is expected to match the observed number of visits to the element, , regardless of the state j.

References

- 1. Shirts MR, Chodera JD. Statistically optimal analysis of samples from multiple equilibrium states. The Journal of Chemical Physics. 2008;129:124105. doi: 10.1063/1.2978177.

- 2. Ferrenberg AM, Swendsen RH. New Monte Carlo technique for studying phase transitions. Phys Rev Lett. 1988 Dec;61:2635–2638. doi: 10.1103/PhysRevLett.61.2635.

- 3. Ferrenberg AM, Swendsen RH. Optimized Monte Carlo data analysis. Phys Rev Lett. 1989 Sep;63:1195–1198. doi: 10.1103/PhysRevLett.63.1195.

- 4. Newman E, Barkema G. Monte Carlo methods in statistical physics. 1st ed. Oxford: Clarendon Press; 1999.

- 5. Frenkel D, Smit B. Understanding Molecular Simulation: From Algorithms to Applications (Computational Science). 2nd ed. Academic Press; 2001.

- 6. Kumar S, Rosenberg JM, Bouzida D, et al. The weighted histogram analysis method for free-energy calculations on biomolecules. I. The method. Journal of Computational Chemistry. 1992;13:1011–1021.

- 7. Roux B. The calculation of the potential of mean force using computer simulations. Computer Physics Communications. 1995;91:275–282.

- 8. Bartels C, Karplus M. Multidimensional adaptive umbrella sampling: Applications to main chain and side chain peptide conformations. Journal of Computational Chemistry. 1997;18:1450–1462.

- 9. Gallicchio E, Andrec M, Felts AK, et al. Temperature weighted histogram analysis method, replica exchange, and transition paths. The Journal of Physical Chemistry B. 2005;109:6722–6731. doi: 10.1021/jp045294f.

- 10. Habeck M. Bayesian reconstruction of the density of states. Phys Rev Lett. 2007 May;98:200601. doi: 10.1103/PhysRevLett.98.200601.

- 11. Habeck M. Bayesian estimation of free energies from equilibrium simulations. Phys Rev Lett. 2012 Sep;109:100601. doi: 10.1103/PhysRevLett.109.100601.

- 12. Souaille M, Roux B. Extension to the weighted histogram analysis method: combining umbrella sampling with free energy calculations. Computer Physics Communications. 2001;135:40–57.

- 13. Chodera JD, Swope WC, Pitera JW, et al. Use of the weighted histogram analysis method for the analysis of simulated and parallel tempering simulations. Journal of Chemical Theory and Computation. 2007;3:26–41. doi: 10.1021/ct0502864.

- 14. Bereau T, Swendsen RH. Optimized convergence for multiple histogram analysis. Journal of Computational Physics. 2009;228:6119–6129.

- 15. Hub JS, de Groot BL, van der Spoel D. g_wham–a free weighted histogram analysis implementation including robust error and autocorrelation estimates. Journal of Chemical Theory and Computation. 2010;6:3713–3720.

- 16. Zhu F, Hummer G. Convergence and error estimation in free energy calculations using the weighted histogram analysis method. Journal of Computational Chemistry. 2012;33:453–465. doi: 10.1002/jcc.21989.

- 17. Tan Z, Gallicchio E, Lapelosa M, et al. Theory of binless multi-state free energy estimation with applications to protein-ligand binding. The Journal of Chemical Physics. 2012;136:144102. doi: 10.1063/1.3701175.

- 18. Torrie GM, Valleau JP. Monte Carlo free energy estimates using non-Boltzmann sampling: Application to the sub-critical Lennard-Jones fluid. Chemical Physics Letters. 1974;28:578–581.

- 19. Laio A, Parrinello M. Escaping free-energy minima. Proceedings of the National Academy of Sciences. 2002;99:12562–12566. doi: 10.1073/pnas.202427399.

- 20. Marinari E, Parisi G. Simulated tempering: A new Monte Carlo scheme. EPL (Europhysics Letters). 1992;19:451.

- 21. Lyubartsev AP, Martsinovski AA, Shevkunov SV, et al. New approach to Monte Carlo calculation of the free energy: Method of expanded ensembles. The Journal of Chemical Physics. 1992;96:1776–1783.

- 22. Swendsen RH, Wang JS. Replica Monte Carlo simulation of spin-glasses. Phys Rev Lett. 1986 Nov;57:2607–2609. doi: 10.1103/PhysRevLett.57.2607.

- 23. Geyer CJ. Proceedings of the 23rd Symposium on the Interface. New York: American Statistical Association; 1991. p. 156.

- 24. Hukushima K, Nemoto K. Exchange Monte Carlo method and application to spin glass simulations. Journal of the Physical Society of Japan. 1996;65:1604–1608.

- 25. Hansmann UHE. Parallel tempering algorithm for conformational studies of biological molecules. Chemical Physics Letters. 1997;281:140–150.

- 26. Earl DJ, Deem MW. Parallel tempering: Theory, applications, and new perspectives. Phys Chem Chem Phys. 2005;7:3910–3916. doi: 10.1039/b509983h.

- 27. Bennett CH. Efficient estimation of free energy differences from Monte Carlo data. Journal of Computational Physics. 1976;22:245–268.

- 28. Kim J, Keyes T, Straub JE. Communication: Iteration-free, weighted histogram analysis method in terms of intensive variables. The Journal of Chemical Physics. 2011;135:061103. doi: 10.1063/1.3626150.

- 29. Fenwick MK. A direct multiple histogram reweighting method for optimal computation of the density of states. The Journal of Chemical Physics. 2008;129:125106. doi: 10.1063/1.2981800.

- 30. Kästner J, Thiel W. Bridging the gap between thermodynamic integration and umbrella sampling provides a novel analysis method: umbrella integration. The Journal of Chemical Physics. 2005;123:144104. doi: 10.1063/1.2052648.

- 31. Kästner J. Umbrella integration in two or more reaction coordinates. The Journal of Chemical Physics. 2009;131:034109. doi: 10.1063/1.3175798.

- 32. Pulay P. Convergence acceleration of iterative sequences. The case of SCF iteration. Chemical Physics Letters. 1980;73:393–398.

- 33. Pulay P. Improved SCF convergence acceleration. Journal of Computational Chemistry. 1982;3:556–560.

- 34. Hamilton TP, Pulay P. Direct inversion in the iterative subspace (DIIS) optimization of open-shell, excited-state, and small multiconfiguration SCF wave functions. The Journal of Chemical Physics. 1986;84:5728–5734.

- 35. Kovalenko A, Ten-no S, Hirata F. Solution of three-dimensional reference interaction site model and hypernetted chain equations for simple point charge water by modified method of direct inversion in iterative subspace. Journal of Computational Chemistry. 1999;20:928–936.

- 36. Howard J, Pettitt B. Integral equations in the study of polar and ionic interaction site fluids. Journal of Statistical Physics. 2011;145:441–466. doi: 10.1007/s10955-011-0260-5.

- 37. Shen J, McCammon J. Molecular dynamics simulation of superoxide interacting with superoxide dismutase. Chemical Physics. 1991;158:191–198.

- 38. Woolf TB, Roux B. Conformational flexibility of O-phosphorylcholine and O-phosphorylethanolamine: A molecular dynamics study of solvation effects. Journal of the American Chemical Society. 1994;116:5916–5926.

- 39. Crouzy S, Woolf T, Roux B. A molecular dynamics study of gating in dioxolane-linked gramicidin A channels. Biophysical Journal. 1994;67:1370–1386. doi: 10.1016/S0006-3495(94)80618-6.

- 40. Mezei M. Adaptive umbrella sampling: Self-consistent determination of the non-Boltzmann bias. Journal of Computational Physics. 1987;68:237–248.

- 41. Berg BA, Neuhaus T. Multicanonical ensemble: A new approach to simulate first-order phase transitions. Phys Rev Lett. 1992 Jan;68:9–12. doi: 10.1103/PhysRevLett.68.9.

- 42. Lee J. New Monte Carlo algorithm: Entropic sampling. Phys Rev Lett. 1993 Jul;71:211–214. doi: 10.1103/PhysRevLett.71.211.

- 43. Tsallis C. Possible generalization of Boltzmann-Gibbs statistics. Journal of Statistical Physics. 1988;52:479–487.

- 44. Yan Q, de Pablo JJ. Fast calculation of the density of states of a fluid by Monte Carlo simulations. Phys Rev Lett. 2003 Jan;90:035701. doi: 10.1103/PhysRevLett.90.035701.

- 45. Martin-Mayor V. Microcanonical approach to the simulation of first-order phase transitions. Phys Rev Lett. 2007 Mar;98:137207. doi: 10.1103/PhysRevLett.98.137207.

- 46. Press WH, Teukolsky SA, Vetterling WT, et al. Numerical recipes in C: The art of scientific computing. 2nd ed. Cambridge: Cambridge University Press; 1992.

- 47. Efron B. Bootstrap methods: Another look at the jackknife. The Annals of Statistics. 1979;7:1–26.

- 48. Park S, Pande VS. Choosing weights for simulated tempering. Phys Rev E. 2007 Jul;76:016703. doi: 10.1103/PhysRevE.76.016703.

- 49. Arfken GB, Weber HJ. Mathematical methods for physicists. 5th ed. Academic Press; 2001.

- 50. Abramowitz M, Stegun I. Handbook of mathematical functions: With formulas, graphs, and mathematical tables. Applied Mathematics Series. Dover Publications; 1964.

- 51. Wang Z, Guo D. Special functions. World Scientific; 1989.

- 52. Whittaker ET, Watson GN. A course of modern analysis. 4th ed. Cambridge University Press; 1927.

- 53. Ferdinand AE, Fisher ME. Bounded and inhomogeneous Ising models. I. Specific-heat anomaly of a finite lattice. Phys Rev. 1969 Sep;185:832–846.

- 54. Beale PD. Exact distribution of energies in the two-dimensional Ising model. Phys Rev Lett. 1996 Jan;76:78–81. doi: 10.1103/PhysRevLett.76.78.

- 55. Duan Y, Kollman PA. Pathways to a protein folding intermediate observed in a 1-microsecond simulation in aqueous solution. Science. 1998;282:740–744. doi: 10.1126/science.282.5389.740.

- 56. Wang J, Cieplak P, Kollman PA. How well does a restrained electrostatic potential (RESP) model perform in calculating conformational energies of organic and biological molecules? Journal of Computational Chemistry. 2000;21:1049–1074.

- 57. Hornak V, Abel R, Okur A, et al. Comparison of multiple Amber force fields and development of improved protein backbone parameters. Proteins: Structure, Function, and Bioinformatics. 2006;65:712–725. doi: 10.1002/prot.21123.

- 58. Lindorff-Larsen K, Piana S, Palmo K, et al. Improved side-chain torsion potentials for the Amber ff99SB protein force field. Proteins: Structure, Function, and Bioinformatics. 2010;78:1950–1958. doi: 10.1002/prot.22711.

- 59. Berendsen H, van der Spoel D, van Drunen R. GROMACS: A message-passing parallel molecular dynamics implementation. Computer Physics Communications. 1995;91:43–56.

- 60. Lindahl E, Hess B, van der Spoel D. GROMACS 3.0: a package for molecular simulation and trajectory analysis. Journal of Molecular Modeling. 2001;7:306–317.

- 61. Van Der Spoel D, Lindahl E, Hess B, et al. GROMACS: Fast, flexible, and free. Journal of Computational Chemistry. 2005;26:1701–1718. doi: 10.1002/jcc.20291.

- 62. Hess B, Kutzner C, van der Spoel D, et al. GROMACS 4: algorithms for highly efficient, load-balanced, and scalable molecular simulation. Journal of Chemical Theory and Computation. 2008;4:435–447. doi: 10.1021/ct700301q.

- 63. Pronk S, Páll S, Schulz R, et al. GROMACS 4.5: a high-throughput and highly parallel open source molecular simulation toolkit. Bioinformatics. 2013;29:845–854. doi: 10.1093/bioinformatics/btt055.

- 64. Páll S, Abraham M, Kutzner C, et al. Tackling exascale software challenges in molecular dynamics simulations with GROMACS. In: Markidis S, Laure E, editors. Solving software challenges for exascale. Vol. 8759 of Lecture Notes in Computer Science. Springer International Publishing; 2015. pp. 3–27.

- 65. Abraham MJ, Murtola T, Schulz R, et al. GROMACS: High performance molecular simulations through multi-level parallelism from laptops to supercomputers. SoftwareX. 2015;1–2:19–25.

- 66. Bussi G, Donadio D, Parrinello M. Canonical sampling through velocity rescaling. The Journal of Chemical Physics. 2007;126:014101. doi: 10.1063/1.2408420.

- 67. Essmann U, Perera L, Berkowitz ML, et al. A smooth particle mesh Ewald method. The Journal of Chemical Physics. 1995;103:8577–8593.

- 68. Hess B, Bekker H, Berendsen HJC, et al. LINCS: A linear constraint solver for molecular simulations. Journal of Computational Chemistry. 1997;18:1463–1472.

- 69. Miyamoto S, Kollman PA. SETTLE: An analytical version of the SHAKE and RATTLE algorithm for rigid water models. Journal of Computational Chemistry. 1992;13:952–962.

- 70. Leonard T, Hsu J. Bayesian methods: An analysis for statisticians and interdisciplinary researchers. Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press; 1999.

- 71. Duda R, Hart P, Stork D. Pattern classification. 2nd ed. Wiley; 2000.

- 72. Dempster AP, Laird NM, Rubin DB. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society, Series B. 1977;39:1–38.

- 73. Neal R, Hinton G. A view of the EM algorithm that justifies incremental, sparse, and other variants. Vol. 89 of NATO ASI Series. Springer Netherlands; 1998. pp. 355–368.

- 74. Press WH, Teukolsky SA, Vetterling WT, et al. Numerical recipes in C: The art of scientific computing. 3rd ed. Cambridge: Cambridge University Press; 2007.