Abstract

Living systems need to be highly responsive, and also to keep fluctuations low. These goals are incompatible in equilibrium systems due to the Fluctuation Dissipation Theorem (FDT). Here, we show that biological sensory systems, driven far from equilibrium by free energy consumption, can reduce their intrinsic fluctuations while maintaining high responsiveness. By developing a continuum theory of the E. coli chemotaxis pathway, we demonstrate that adaptation can be understood as a non-equilibrium phase transition controlled by free energy dissipation, and it is characterized by a breaking of the FDT. We show that the maximum response at short time is enhanced by free energy dissipation. At the same time, the low frequency fluctuations and the adaptation error decrease with the free energy dissipation algebraically and exponentially, respectively.

Living organisms need to respond to external signals with high sensitivity, and at the same time, they also need to control their internal fluctuations in the absence of signal. In equilibrium systems, the fluctuation dissipation theorem (FDT) dictates that these two desirable properties, high sensitivity and low fluctuation, cannot be satisfied simultaneously. Most sensory and regulatory functions in biology are carried out by biochemical networks that operate out of equilibrium: metabolic energy is spent to drive the dynamics of the network [1–4]. Thus, in principle they are not constrained by the FDT [5]. How fluctuations, energy dissipation, and sensitivity are related in such systems remains poorly understood. Here, we address this question by studying a negative feedback network responsible for adaptation in the bacterial chemosensory system [6–9].

A typical adaptive behavior in a small system such as a single cell is shown in Fig. 1A [10]. In response to a change of the signal S, the output y of the sensory system first changes quickly with a fast time scale τy. After the fast response, the output slowly adapts back towards its pre-stimulus level aad with an adaptation time τad ≫ τy. The new steady state (adapted) output may differ from the pre-stimulus value, and the difference is quantified by the adaptation error ε. In our previous work [11], we showed that the negative feedback network responsible for adaptation operates out of equilibrium with a finite free energy dissipation rate Ẇ. The average adaptation error 〈ε〉 was found to decrease exponentially with τad Ẇ. However, how the variance of the error behaves in an adaptive system still remains unknown. This is an important question as adaptive feedback systems are intrinsically noisy due to the slow adaptation dynamics [12].

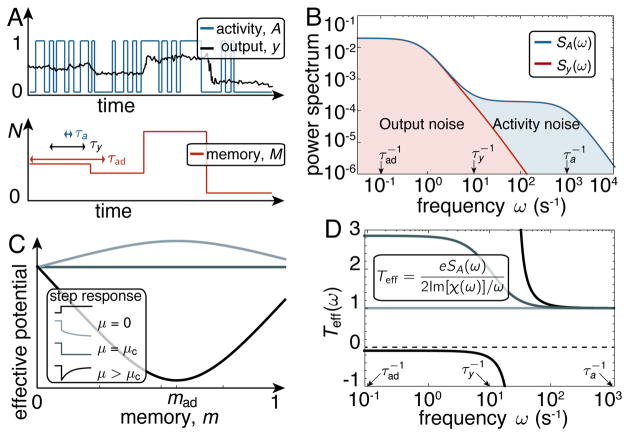

FIG. 1. Noisy response of feedback adaptation.

A) Adaptive output response to a step input signal increase at time 0. After a sharp decrease in a time τy, the output y recovers in a time τad to its adapted value aad. The adaptation error is characterized by its average 〈ε〉, as well as its variance σε. B) Schematic of the feedback adaptation model. Transitions between the active and inactive memory energy landscapes, f1 and f0, are mediated via equilibrium activity transitions with rates ω0 and ω1. An external chemical energy input μ is used to drive the memory variable uphill in both the active and inactive states. The result is a dissipative loop of probability flow around the adapted memory state mad, which keeps the output near aad.

In the linear response regime, the output response of a system to an input signal S(t) is given by R(t) = R(0) + ∫ dt′ χ(t − t′)S(t′), where χ is the response function. For equilibrium systems, under the general assumption that response and signal are conjugate variables, the FDT establishes that χ(t) = −β∂tCR(t)Θ(t), where CR(t) ≡ 〈R(t)R(0)〉 − 〈R〉² is the auto-correlation function, Θ(t) is the Heaviside function, and β = (kBT)⁻¹ is the inverse thermal energy, set to unity hereafter. For a small step stimulus S(t) = S0Θ(t), integration of the FDT leads to a relation between the response and its correlation: R(t) = R(0) − S0(CR(t) − CR(0)). Since for equilibrium systems CR(t) is a monotonically decreasing function of time [13], the response R(t) is also monotonic in time, and thus no adaptation dynamics is possible. Furthermore, because CR(t) decays to zero at long times, the long time response ΔR ≡ R(t = ∞) − R(0) is linearly proportional to the variance σR² ≡ CR(0), i.e., ΔR = S0σR².
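The step-response relation quoted above can be checked in two lines. This is a sketch assuming the linear-response convention R(t) − R(0) = ∫ dt′ χ(t − t′)S(t′), β = 1, and correlations that vanish at long times, C_R(∞) = 0; the symbol σ_R² ≡ C_R(0) for the variance is a notational assumption.

$$
R(t) - R(0) = S_0 \int_0^{t} \chi(s)\,ds = -S_0 \int_0^{t} \partial_s C_R(s)\,ds = -S_0\left[C_R(t) - C_R(0)\right],
$$

$$
\Delta R \equiv R(\infty) - R(0) = S_0\,C_R(0) = S_0\,\sigma_R^2 .
$$

Under these assumptions a large long-time response at equilibrium necessarily comes with a large variance, which is the trade-off the driven system is shown to avoid.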

In this paper, we show that in a non-equilibrium adaptive system both the average adaptation error 〈ε〉 (analogous to ΔR) and its variance σε² (analogous to σR²) are suppressed by the free energy dissipation of the system but in different ways, which results in a nonlinear (logarithmic) relationship between them. More importantly, violation of the FDT allows suppression of noise without compromising the strength of the short time response.

The continuous model of feedback adaptation

We start by introducing a discrete adaptation model motivated by the E. coli chemotaxis pathway. The system is characterized by its binary receptor activity A = 0, 1, its output y, and an internal control variable M = 0, 1, … , N, that corresponds to the chemoreceptor’s methylation level in E. coli chemotaxis [9]. For a given external input signal S, the free energy of the system can be written as:

$$
F(A, M, S) = -\left(A - \tfrac{1}{2}\right)\left[E\,(M - M_r) - (S - S_r)\right], \tag{1}
$$

where Sr is a reference signal at a methylation level Mr, and E(> 0) sets the methylation energy scale. For E. coli chemotaxis, the signal S depends on the ligand attractant concentration logarithmically [14].

The dynamics of the system is characterized by the transitions among the 2 × (N + 1) states in the A × M phase space. The receptor activity switches at a time scale τa, which is much shorter than the adaptation time scale τad at which the internal variable M is controlled. The activity A determines the output y of the signaling pathway. In the case of E. coli chemotaxis, this is carried out by the phosphorylation and dephosphorylation reactions of the response regulator CheY with an intermediate time scale τy: τad ≫ τy ≫ τa. To account for this, we express y as an average of the fast binary activity A over the time scale τy.

According to Eq. (1), a larger signal S favors the inactive state A = 0. Thus, an increase in S quickly reduces the system’s average activity, at time scale ~ τa, and output, at time scale ~ τy, as represented in Fig. 1A. After this sudden initial response, the system slowly adapts by adjusting its internal variable M to balance the effect of the increased signal. Due to its slow time scale, M effectively serves as a memory of the system. This adaptation process restores activity and output to a level near their pre-stimulus value 〈A〉 = 〈y〉 ≈ aad. Although highly precise, adaptation is imperfect, and its inaccuracies are quantified by the adaptation error ε, which we define as

$$
\epsilon \equiv \frac{y - a_{ad}}{a_{ad}}. \tag{2}
$$

For E. coli chemotaxis, the adaptive machinery consists of chemical reactions that increase M in the inactive state and decrease it in the active state. Note from Eq. (1) that such regulatory reactions are energetically unfavorable, and thus require a chemical driving force μ, see Fig. 1B.

To gain analytical insights about dynamics and energetics of adaptation, we consider the limit where N → ∞ and m = M/N ∈ [0, 1] becomes a continuous variable [15]. Note that free energy and bare rates need to be rescaled for the continuum limit to converge (see Supplementary Information, SI, for details). Proceeding in this way we obtain two coupled Fokker-Planck equations that describe the chemotaxis pathway dynamics:

| (3) |

where p1(m, t) and p0(m, t) are the probabilities of m for the active and inactive states, respectively. The probability currents are given by

| (4) |

where fA(m) = − (A − 1/2)[(m − mr)e − (S − Sr)] is the continuum limit of Eq. (1) characterized by the rescaled energy parameter e = NE. The fast transition rates between the active and inactive states, ω0 and ω1, satisfy detailed balance ω0/ω1 = exp(f0 − f1). The diffusion-like constants D1 and D0 set the time scale of m changes for the active and inactive states, and thus determine the adaptation time τad, see SI for details. Our model is analogous to that of an isothermal ratchet [16], where a chemical driving fuels directed motion. Whereas in ratchets μ drives directed motion, here it fuels currents up the energy landscapes f0 and f1 to achieve adaptation.

In the absence of external driving, i.e. μ = 0, the system relaxes to a state of thermal equilibrium with no phase-space fluxes J0 = J1 = 0. In this regime adaptation is impossible. The chemical driving μ > 0 breaks detailed balance and creates currents that increase m in the inactive state and decrease it in the active state. For large enough μ, the memory variable m can be stabilized (trapped) in a cycle around its adapted state mad, which ensures 〈y〉 ≈ aad as illustrated in Fig. 1B. The free energy dissipation rate Ẇ can be computed, and is given by Ẇ ≈ C|μ|/τad, with C a system specific constant set to unity by our parameter choice, see SI. In the following, we will use the chemical driving μ ≈ τad Ẇ to characterize the system’s energy dissipation.

The dynamics of A, y, and m are illustrated in Fig. 2A. The power spectra of A and y, given in Fig. 2B, show that the high frequency fluctuation of y is suppressed with respect to that of A by time-averaging. However, the low frequency fluctuations of y, which are caused by the slow fluctuations of m, are not affected. This low frequency noise can be suppressed by free energy dissipation, as we show later in this paper.

FIG. 2. Adaptation as a non-equilibrium transition.

A) Schematic time traces of the binary activity A (blue), the output y (black), and the memory M (red) in steady state. The slow M variations induce large fluctuations in the output y, while the fast A switching for a fixed M only produces small fluctuations in y. B) Power spectra of the activity SA and output Sy. The output noise is filtered (reduced) in the high frequency range, but it remains unfiltered in the low frequency range. C) Effective memory potential in Eq. 5 for three values of the chemical driving μ (due to the choice D1 = D0 taken here, mad = m*). At equilibrium, μ = 0, the adapted memory state mad is unstable. At the value μ = μc the system becomes critical. In the region μ > μc the adapted state mad is stable, and the system adapts output and activity to a(mad). Inset: Activity response to a step signal increase for the corresponding values of μ. D) Effective temperature Teff for three different values of the chemical driving μ. After the onset of adaptation a region with “negative friction” develops, at the end of which the effective temperature diverges. Values of μ from lighter to darker blue are μ = 0, μ = 0.65μc, and μ = 20μc (the same as in panel C). The other parameters are from [17], see SI.

Adaptation as a non-equilibrium phase transition

Given the separation of time scales τa ≪ τad, we can solve Eqs. (3) by using the adiabatic approximation [13, 17]: p1(m) = a(m)p(m), and p0(m) = (1 − a(m))p(m), with a(m) = [1 + exp(f1(m) − f0(m))]⁻¹ the average equilibrated activity for a fixed value of m. The distribution of m can be written as p(m) = exp(−h(m, S))/Z with h the effective potential and Z a normalization constant. We have determined the effective potential h analytically (see SI for derivation):

| (5) |

where we have defined the critical chemical driving as μc = e/2, and m* = mr + (S − Sr)/e.
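The role of m* can be made concrete with a short numerical check. The following is a minimal sketch that only uses the continuum free energy fA(m) and the equilibrated activity a(m) as defined in the text; the parameter values (`e`, `m_r`, `S_r`, `S`) and the function names are illustrative assumptions, not values taken from the paper.

```python
import math

# Hypothetical parameter values chosen only for illustration.
E_SCALE, M_REF, S_REF = 2.0, 0.5, 0.0

def free_energy(A, m, S, e=E_SCALE, m_r=M_REF, S_r=S_REF):
    """Continuum free energy f_A(m) = -(A - 1/2) [(m - m_r) e - (S - S_r)]."""
    return -(A - 0.5) * ((m - m_r) * e - (S - S_r))

def activity(m, S):
    """Equilibrated activity a(m) = 1 / (1 + exp(f_1(m) - f_0(m))) at fixed m."""
    return 1.0 / (1.0 + math.exp(free_energy(1, m, S) - free_energy(0, m, S)))

def m_star(S, e=E_SCALE, m_r=M_REF, S_r=S_REF):
    """Memory value m* = m_r + (S - S_r) / e at which f_1 = f_0."""
    return m_r + (S - S_r) / e

S = 0.4
ms = m_star(S)
a_at_mstar = activity(ms, S)           # both states equally likely at m*
a_more_signal = activity(ms, S + 1.0)  # a larger signal favors the inactive state
a_more_memory = activity(ms + 0.2, S)  # a larger m favors the active state

print(f"m* = {ms:.3f}, a(m*) = {a_at_mstar:.3f}")
print(f"a after signal increase = {a_more_signal:.3f}")
print(f"a after memory increase = {a_more_memory:.3f}")
```

In this sketch a(m) is a sigmoid in m centered at m*, so raising m after a signal increase is what restores the activity, which is the compensation that the driven memory dynamics has to perform.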

The analytical form of the effective potential is one of the main results of this paper. The effect of energy dissipation and the onset of adaptation can be understood intuitively with h(m, S), which contains two terms with similar shapes, see Fig. 2C. The first term (proportional to μ/μc) in the right hand side of Eq. (5) comes from chemical driving (non-equilibrium effect) and has a stable free energy minimum. The second term is the equilibrium potential in the absence of driving, and has a maximum at m*. At equilibrium the only critical point m* is unstable, so the system tends to go to the boundaries without adapting. As μ increases the first part of the potential starts to dominate. For μ > μc, the system develops a stable fixed point at mad indicating the onset of adaptive behaviors towards a(mad) [18]. As μ grows further this fixed point becomes increasingly stable, and adaptation accuracy improves. The transition of a feedback system to adaptation can thus be loosely understood as a continuous phase transition (see SI for details). Since the control parameter is the free energy dissipation, the transition to adaptation occurs far from equilibrium and a breaking of FDT is to be expected.

Breakdown of Fluctuation Dissipation Theorem

In our feedback model, the observable conjugate to the signal is eA = −∂SfA; for feedforward models this is not true [19–21]. At equilibrium the FDT leads to χ(t) = e∂tCA(t), where χ is the activity response function and CA the monotonic correlation function. In an adaptive system the integral of χ, which is just the response to a step stimulus, is non-monotonic; therefore the FDT is broken and adaptation occurs out of equilibrium.

To quantify the departure from equilibrium, we define an effective temperature Teff using the formulation of the FDT in frequency space [5, 22], see inset in Fig. 2D. The frequency-dependence of Teff for μ > 0 implies a breakdown of FDT. As shown in Fig. 2D, while for any value μ ≠ 0 we have Teff ≠ 1, after the transition to the adaptive regime μ ≥ μc a divergence occurs. This corresponds to the appearance of a frequency region where Im[χ(ω)] < 0. A negative effective viscosity indicates the dominance of the active effects that drive a net current to flow against the gradients of the equilibrium energy landscapes (fA), which was also observed in other biological systems such as collections of motors [23] or the inner ear hair bundle [5]. The breakdown of FDT means that there is no a priori connection among fluctuations, chemical driving μ (dissipation), and long-time response 〈ε〉. In the following we derive relations linking these three quantities in the adaptive feedback system studied here.
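For orientation, the frequency-space form can be derived from the time-domain FDT χ(t) = −β∂tC(t)Θ(t) quoted in the introduction. This is a sketch assuming the Fourier convention X̃(ω) = ∫ dt X(t) e^{iωt} and an even, decaying correlation function C(t); the prefactors and signs used by the authors and in Refs. [5, 22] may differ with the convention.

$$
\mathrm{Im}\,\tilde\chi(\omega) = \int_0^{\infty} \chi(t)\,\sin(\omega t)\,dt
= -\beta \int_0^{\infty} \partial_t C(t)\,\sin(\omega t)\,dt
= \beta\,\omega \int_0^{\infty} C(t)\,\cos(\omega t)\,dt
= \frac{\beta\,\omega}{2}\,\tilde C(\omega),
$$

$$
T_{\mathrm{eff}}(\omega) \equiv \frac{\omega\,\tilde C(\omega)}{2\,\mathrm{Im}\,\tilde\chi(\omega)} .
$$

With this definition Teff(ω) = 1 at equilibrium in units where kBT = 1. Because the power spectrum C̃(ω) is non-negative, Teff diverges where Im χ̃(ω) crosses zero and is negative where Im χ̃(ω) < 0.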

The free energy cost of suppressing fluctuations

As evident from the effective potential, increasing the chemical driving μ stabilizes the adapted state. In the limit μ → ∞, the system thus goes to its perfectly adapted state with average activity and output aad = D0/(D0 + D1), that is a(mad) → aad. For finite μ, the output differs from aad, which can be characterized by the average error 〈ε〉 and its variance σε².

The average adaptation error is 〈ε〉 = (〈y〉 − aad)/aad. Summing and integrating Eqs. (3) at the steady state, we have

| (6) |

Thus to obtain the adaptation error we only need to evaluate the probability at the boundaries. In the limit of μ ≫ μc, we have:

| (7) |

where k and εc are constants with only weak dependence on μ (see SI for derivation). This shows explicitly that the adaptation error goes down exponentially with energy dissipation, as found numerically in our previous work for the discrete model [11]. Here, we show this relationship analytically in the continuum limit, which is consistent with direct simulations of the discrete model, see Fig. 3A.

FIG. 3. Free energy cost of reducing error and noise.

A) Dependence of the average error on the chemical driving for several system sizes. The decay is exponential, in agreement with the infinite size limit (dashed red). Saturation of the decay for finite N is due to finite size effects. B) Adaptation noise as a function of chemical driving for several system sizes, together with the analytical estimate in dashed red. At very large driving the noise saturates to its minimum σym dictated by the intrinsic activity fluctuations. Note that at the critical driving μc the analytical estimate diverges. This divergence is smoothed for finite N.

Besides stabilizing the adapted state, Eq. (5) shows that increasing μ also reduces the m–fluctuations by making the effective potential sharper. The reduction in these fluctuations implies a decrease in the variance of the error. Taking into account the separation of time scales, the variance of the output y can be approximated as the sum of two variances. They respectively correspond to the variation of y at time scale ~ τy around its average a(m) for a fixed m, and the variation of a(m) due to the variation of m at the adaptation time ~ τad, see Fig. 2A. We thus have

| (8) |

The first variance is caused by the fast fluctuations of the binary variable A at timescale ~ τa averaged over the output timescale τy ≫ τa (see SI for derivation), and it is reduced by time-averaging [12, 24].
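The effect of time-averaging on the fast activity noise can be illustrated numerically. The sketch below is not the paper's model: it assumes a symmetric two-state (telegraph) activity in discrete time with a hypothetical switching probability `p_switch`, and takes the output y as a running mean of A over a window that plays the role of τy. All names and values are illustrative.

```python
import random

def telegraph_trace(n_steps, p_switch, rng):
    """Binary activity A(t) that flips with probability p_switch at each step."""
    a, trace = 1, []
    for _ in range(n_steps):
        if rng.random() < p_switch:
            a = 1 - a
        trace.append(a)
    return trace

def moving_average(trace, window):
    """Output y: running mean of A over `window` steps (tau_y in step units)."""
    out, acc = [], 0
    for i, a in enumerate(trace):
        acc += a
        if i >= window:
            acc -= trace[i - window]  # drop the sample that left the window
        if i >= window - 1:
            out.append(acc / window)
    return out

def variance(xs):
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / len(xs)

rng = random.Random(0)  # fixed seed so the run is reproducible
A = telegraph_trace(100_000, p_switch=0.2, rng=rng)
var_A = variance(A)
var_y = {w: variance(moving_average(A, w)) for w in (1, 10, 100)}

print(f"var(A) = {var_A:.4f}")
for w, v in var_y.items():
    print(f"window = {w:4d} steps  ->  var(y) = {v:.4f}")
```

The variance of y shrinks as the averaging window grows relative to the switching time, whereas a slow drift of the mean activity (the role played by m in the text) would pass through such a filter unchanged.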

The second variance is caused by the slow variation of m, and cannot be reduced by time averaging. To obtain an analytical expression for it, we approximate p(m) by a Gaussian, valid for μ ≫ μc. Defining now a characteristic variance, we finally have:

| (9) |

which vanishes when μ → ∞. This is a main result of the paper, which shows that energy dissipation is used to reduce error noise by suppressing slow activity fluctuations. This result is verified by direct simulations of the discrete models with increasing N, see Fig. 3B.

Discussion

Biochemical networks are non-equilibrium systems fueled by free energy dissipation to achieve their biological functions. Energy dissipation liberates the networks from constraints such as the Fluctuation Dissipation Theorem and Detailed Balance. Here, we show in a negative feedback network that the long-time output response Δ〈y〉 = aad〈ε〉 decreases exponentially with the free energy dissipation μ ≈ τad Ẇ, and its fluctuation decreases as μ⁻¹. Both these effects, especially the slower decay of the fluctuation with μ, contribute to enhance the short time response 〈y〉max, see Fig. 4.

FIG. 4. Response and correlations in systems out of equilibrium.

A) (top panel) Average output response to a signal decrease for several values of the chemical driving beyond μc, see the color-code for μ in panel B. As the chemical driving μ increases, the maximal transient response 〈y〉max increases, but the long time response Δ〈y〉 = aad〈ε〉 decreases. (bottom panel) The correlation function also decreases as the system is driven further away from equilibrium. B) The dependence of 〈y〉max, Δ〈y〉, and σy on the chemical driving μ. The long-time response (adaptation error) Δ〈y〉 decreases quickly with μ. The decrease of the output fluctuation (noise) σy with μ is more gradual, and controls the increase in the maximal response 〈y〉max for large μ. In this figure N = 15, and S = Sr.

Even though FDT is broken in the adaptive system studied here, fluctuations and long-time response of the output are linked via a non-linear (logarithmic) relation. Unlike the linear non-equilibrium FDT derived by a change of observables [25–27], our non-linear relation links observables that are conjugate at equilibrium, making it particularly appealing. Another approach is taken in [28], where near-equilibrium linear response is used to show that the dispersion of variables can be reduced by dissipation. Adaptation, however, is a far from equilibrium phenomenon which requires a critical finite amount of free energy dissipation. As a result, the energy scale is set by the intrinsic energy μc instead of the thermal energy kBT in [28]. It remains a challenging question whether these approaches can be combined to obtain a general relationship among response, fluctuation, and energy dissipation for systems far from equilibrium.
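How an exponential and an algebraic dependence on μ combine into a logarithmic link can be seen in a few lines. The sketch below assumes idealized large-μ scalings, 〈ε〉 = εc exp(−kμ) for the error and σ² = σc²/μ for the noise, as stand-ins for the exact expressions of Eqs. (7) and (9); the constants `EPS_C`, `K`, and `SIGMA_C2` are hypothetical.

```python
import math

# Hypothetical constants for the idealized scalings (not fitted to the paper).
EPS_C, K, SIGMA_C2 = 1.0, 1.0, 0.1

def mean_error(mu):
    """Idealized exponential decay of the adaptation error with driving mu."""
    return EPS_C * math.exp(-K * mu)

def noise_variance(mu):
    """Idealized algebraic (1/mu) decay of the slow noise with driving mu."""
    return SIGMA_C2 / mu

def variance_from_error(eps):
    """Eliminate mu: mu = ln(EPS_C / eps) / K, so sigma^2 = K * SIGMA_C2 / ln(EPS_C / eps)."""
    return K * SIGMA_C2 / math.log(EPS_C / eps)

for mu in (2.0, 5.0, 10.0, 20.0):
    eps = mean_error(mu)
    print(f"mu = {mu:5.1f}  error = {eps:.2e}  "
          f"noise = {noise_variance(mu):.4f}  "
          f"noise from error = {variance_from_error(eps):.4f}")
```

Under these assumptions the noise depends only on the logarithm of the error, so reducing the error by orders of magnitude lowers the noise by a modest factor.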

Acknowledgments

This work is partly supported by a NIH grant (R01GM081747 to YT). We thank Leo Granger and Jordan Horowitz for a critical reading of this manuscript.

Footnotes

PACS numbers: 87.10.Vg, 87.18.Tt, 05.70.Ln

References

- 1. Qian H. Annu Rev Phys Chem. 2007;58:113. doi: 10.1146/annurev.physchem.58.032806.104550.

- 2. Mehta P, Schwab DJ. Proceedings of the National Academy of Sciences. 2012;109:17978. doi: 10.1073/pnas.1207814109.

- 3. Niven JE, Laughlin SB. Journal of Experimental Biology. 2008;211:1792. doi: 10.1242/jeb.017574.

- 4. Bennett CH. BioSystems. 1979;11:85. doi: 10.1016/0303-2647(79)90003-0.

- 5. Martin P, Hudspeth A, Jülicher F. Proceedings of the National Academy of Sciences. 2001;98:14380. doi: 10.1073/pnas.251530598.

- 6. Berg HC, Brown DA, et al. Nature. 1972;239:500. doi: 10.1038/239500a0.

- 7. Block SM, Segall JE, Berg HC. Journal of Bacteriology. 1983;154:312. doi: 10.1128/jb.154.1.312-323.1983.

- 8. Barkai N, Leibler S. Nature. 1997;387:913. doi: 10.1038/43199.

- 9. Tu Y. Annual Review of Biophysics. 2013;42:337. doi: 10.1146/annurev-biophys-083012-130358.

- 10. Koshland DE, Goldbeter A, Stock JB. Science. 1982;217:220. doi: 10.1126/science.7089556.

- 11. Lan G, Sartori P, Neumann S, Sourjik V, Tu Y. Nature Physics. 2012. doi: 10.1038/nphys2276.

- 12. Sartori P, Tu Y. Journal of Statistical Physics. 2011;142:1206. doi: 10.1007/s10955-011-0169-z.

- 13. Van Kampen NG. Stochastic Processes in Physics and Chemistry. 3rd ed. Elsevier Ltd; New York: 2007.

- 14. Kalinin Y, Jiang L, Tu Y, Wu M. Biophysical Journal. 2009;96:2439. doi: 10.1016/j.bpj.2008.10.027.

- 15. Gardiner C. Applied Optics. 1986;25:3145.

- 16. Parmeggiani A, Jülicher F, Ajdari A, Prost J. Physical Review E. 1999;60:2127. doi: 10.1103/physreve.60.2127.

- 17. Tu Y, Shimizu TS, Berg HC. Proceedings of the National Academy of Sciences. 2008;105:14855. doi: 10.1073/pnas.0807569105.

- 18. Allahverdyan AE, Wang QA. Physical Review E. 2013;87:032139.

- 19. Buijsman W, Sheinman M. 2012. arXiv preprint arXiv:1212.5712. doi: 10.1103/PhysRevE.89.022712.

- 20. De Palo G, Endres RG. PLoS Computational Biology. 2013;9:e1003300. doi: 10.1371/journal.pcbi.1003300.

- 21. Sartori P, Granger L, Lee CF, Horowitz JM. PLoS Computational Biology. 2014;10:e1003974. doi: 10.1371/journal.pcbi.1003974.

- 22. Cugliandolo LF, Kurchan J, Peliti L. Physical Review E. 1997;55:3898.

- 23. Jülicher F, Prost J. Physical Review Letters. 1997;78:4510.

- 24. Berg HC, Purcell EM. Biophysical Journal. 1977;20:193. doi: 10.1016/S0006-3495(77)85544-6.

- 25. Bedeaux D, Milosevic S, Paul G. Journal of Statistical Physics. 1971;3:39.

- 26. Prost J, Joanny J-F, Parrondo JMR. Physical Review Letters. 2009;103:090601. doi: 10.1103/PhysRevLett.103.090601.

- 27. Seifert U, Speck T. EPL (Europhysics Letters). 2010;89:10007.

- 28. Barato AC, Seifert U. Physical Review Letters. 2015;114:158101. doi: 10.1103/PhysRevLett.114.158101.
