Abstract

Clinical Decision Support Systems (CDSS) are tools that assist healthcare personnel in the decision-making process for patient care. Although CDSSs have been successfully deployed in the clinical setting to assist physicians, few have been targeted at professional nurses, the largest group of health providers. We present our experience in designing and testing a CDSS interface embedded within a nurse care planning and documentation tool. We developed four prototypes based on different CDSS feature designs, and tested them in simulated end-of-life patient handoff sessions with a group of 40 nurse clinicians. We show how our prototypes directed nurses towards an optimal care decision that was rarely performed in unassisted practice. We also discuss the effect of CDSS layout and interface navigation on a nurse’s acceptance of suggested actions. These findings provide insights into effective nursing CDSS design that are generalizable to care scenarios other than end-of-life.

Keywords: Human Computer Interaction, Nursing, Electronic Health Records, Clinical Decision Support

Introduction

Of the $60 billion in Medicare dollars spent each year on care of the dying, $300 million is spent during the last month of life, including many millions for inappropriate treatments provided to hospitalized patients [1]. For these patients, pain and symptom relief care is most often administered by nurses on behalf of the entire health care team. Clinical decision support systems (CDSS) could assist this process, but have not been developed or tested to support nursing care decisions.

In addition to research about end-of-life care, data collected as part of routine care provide a potential “treasure trove” of information that can positively influence decision making for nurses. This knowledge needs to be delivered in a way that nurses can quickly and accurately interpret and act upon, in the routine workflow of their already temporally and cognitively demanding work.

One potential method for delivering this information to nurses is in the form of computerized CDSS that are embedded within the electronic health record (EHR). The use of CDSS has the potential to greatly improve care quality, but the adoption rate of these tools in the United States has been lower than expected. One of the main reasons for this delay is the lack of efficiency and usability of available systems [2]. Usability testing of EHR interfaces is not new and has been applied to both personal and clinical interfaces [3]. Beyond usability and the capture of practice-based and literature-based evidence for CDSS interfaces, it is also critically important to evaluate how integrating this evidence into EHRs affects clinical decision making.

We present a study on the effect of integrating clinical decision support into a nursing care planning, documentation and handoff interface. In particular, we show how evidence-based data extracted from a database of real patients can be used to guide nurses’ care for patients with a matching profile. Influencing nurses’ decisions is a two-step process. First, the nurse needs to realize there is a problem with a patient’s care plan. Second, the interface needs to convey that the problem can be solved by making appropriate changes to the plan of care. Additionally, the nurse should feel confident about the suggested changes, understanding that they are based on strong evidence derived from research and past practice.

Data source

Until recently, standardized nursing care data was not readily available, making it impossible to develop a set of CDSS benchmarks that could be used to guide nursing decisions. The health care data for this study was derived from a multi-year longitudinal study of HANDS (Hands-On Automated Nursing Documentation System). HANDS is an electronic tool that nurses use to track patient care and clinical progress throughout a hospitalization. A hospitalization includes all plans of care that nurses document at every formal handoff (admission, shift-change update, or discharge). HANDS uses standardized nomenclatures to describe diagnoses, outcomes and interventions [4]–[6].

The HANDS system was used over a two-year period on 8 acute care units in 4 Midwestern hospitals, accounting for more than 40,000 patient care episodes. Data mining and statistical analysis of those episodes identified a set of benchmarks that related to end-of-life pain management and death anxiety.

One particularly significant finding extracted from the HANDS database relates to the management of pain for end-of-life patients. In previous work we reported that a combination of Pain Management, Medication Management, and Positioning was statistically more likely to have a positive effect on pain level than other combinations of interventions [7].

Interestingly, the HANDS data shows that only 7.5% of nurses performed the most effective combination of interventions. We treat this as our control baseline: the share of professional nurses who, without CDSS guidance, chose to perform the optimal set of interventions on a patient whose pain was not controlled. A successful CDSS interface should show a significant improvement over this baseline in the choice of the optimal set of interventions.

From Finding to Feature

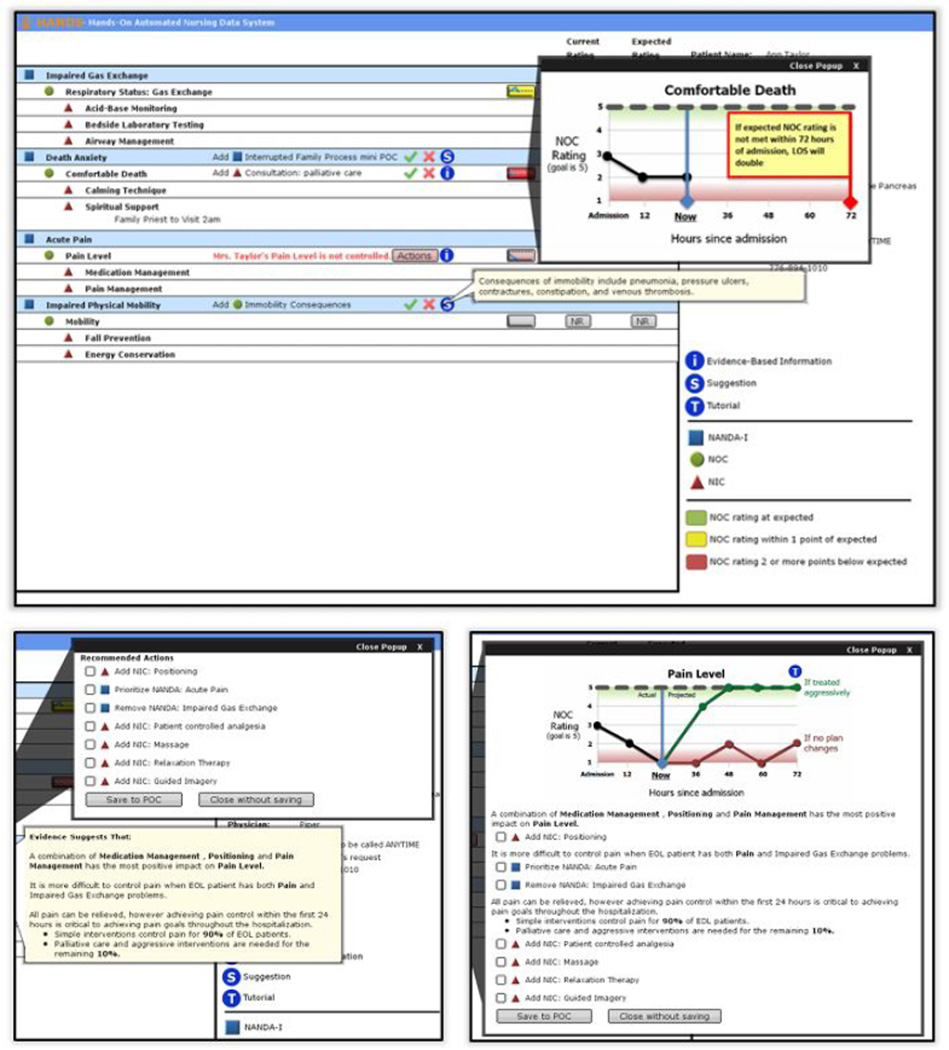

Although this work concentrates on the aforementioned pain control finding, other actionable findings were identified in the HANDS database. These findings were transformed into a set of static and dynamic CDSS features that were introduced in a redesigned prototype of the HANDS interface. Examples of these features (Figure 1) include: graphs showing the trend of patient outcomes like pain and death anxiety; tooltips containing evidence-based information; and pop-ups that include checkable suggestions for changes to the plan of care based on the patient profile. To increase our understanding of nurse interaction with our interface, we created alternative designs of several features. Specifically, we chose to evaluate two orthogonal design dimensions: feature grouping and message personalization.

Figure 1.

A screenshot of the HANDS interface enriched with clinical decision support features (top). The bottom panels show an example of ungrouped CDS features (evidence text and action checklist – left panel) and grouped CDS features (evidence text, action checklist and trend graph – right panel).

Feature grouping consists of consolidating multiple CDSS features into a single actionable item of the interface. In an ungrouped interface, evidence-based messages, checkable actions and trend graphs appear as separate windows that the user can open and close individually. In a grouped interface, all these features are presented in a single window. Grouping has the advantage of showing all relevant information and related actions at once, but may cognitively overload a user, or display information that is not considered immediately useful, forcing the user to scan and isolate relevant fragments.

Message personalization involves adjusting CDSS messages to explicitly refer to a patient name and interventions in his or her plan of care. While personalized messages (being typically longer) may increase interpretation time, they could be more persuasive as a call to action. To test the effect of each of these variations, we created four CDSS prototype interfaces, covering the four possible combinations of the aforementioned design dimensions.
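The two design dimensions can be pictured as a 2×2 grid of prototype variants. The sketch below is only an illustration under stated assumptions: the names `GROUPING`, `MESSAGE_STYLE` and `render_message`, the patient name, and the message wording are all hypothetical and are not taken from the HANDS prototypes.

```python
from itertools import product

# The two design dimensions described in the text.
GROUPING = ("grouped", "ungrouped")
MESSAGE_STYLE = ("personalized", "generic")


def render_message(style, patient_name, current_interventions):
    """Return an illustrative CDSS message (wording is hypothetical)."""
    suggestion = "adding Positioning to the plan of care"
    if style == "personalized":
        # Personalized messages name the patient and the current interventions.
        listed = " and ".join(current_interventions)
        return f"{patient_name} is currently receiving {listed}; consider {suggestion}."
    # Generic messages refer only to the matching patient profile.
    return f"For patients with a similar profile, consider {suggestion}."


# Four prototype variants: every combination of grouping and message style.
prototypes = list(product(GROUPING, MESSAGE_STYLE))

for grouping, style in prototypes:
    text = render_message(style, "Ms. Example", ["Pain Management", "Medication Management"])
    print(f"{grouping:>9} / {style:<12} -> {text}")
```

As the text anticipates, the personalized variant produces a longer string, which is the trade-off between interpretation time and persuasiveness that the study set out to test.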

Sample

To support generalizability of our findings, we used a quota sampling strategy to select 40 registered nurse subjects who possessed demographic characteristics that were broadly representative. The demographics of the nurse-users were: gender (32 [80%] female and 8 [20%] male); race/ethnicity (22 [55%] non-Hispanic Caucasian, 10 [25%] non-Hispanic American/Black, 6 [15%] Asian, and 2 [5%] Other); age (mean = 34.7 years [range = 22–62 years]); education level (26 [65%] Bachelor’s Degree, 10 [25%] Master’s Degree, 4 [10%] Associate’s Degree); experience (mean = 8.6 years [range = 0–31 years]); work setting (32 [80%] acute care, 7 [17.5%] non-acute care, 1 [2.5%] non-applicable); and experience with EHRs (Yes = 100%).

User Study

During our user study, subjects were introduced to the patient care scenario (an end-of-life patient, including pre-admission history and current status). The pain trend for this patient, along with the current plan of care, was designed to fit our recommendation for patient positioning, based on the data mining results. Subjects were then presented with one of the alternative prototype designs and instructed to “think aloud” as they interacted with it. Additional details on the interview process can be found in [8]. It is important to underscore here that subjects were not instructed to complete a specific task. Since our target users are nurses, we wanted them to take reasonable actions on the interface, depending on the patient status, history and the presented CDSS information. We therefore take task completion to correspond to users verbally ‘committing’ their actions. For instance, after reading the patient information and CDSS, and modifying the plan of care, subjects could say they had done what was needed and were ready to move to the next patient.

We logged changes to the plan of care performed by the test users. We were interested in assessing whether users chose to add the Positioning intervention to the patient plan of care. In our analysis we only include nurses who verbalized their motivation to change the care plan. We note that some users who chose to perform the positioning action without providing a rationale could still have done so for a valid (but non-verbalized) reason. Excluding users who did not provide an action rationale allows us to be more conservative in measuring the efficacy of our CDSS prototype.

We also chose not to include users who displayed exploratory behavior (clicking on buttons or actions in the interface with the sole intent of discovering how the interface worked). These users typically performed all the suggested changes to the plan of care (including positioning), and including them would lead to an overestimation of the effect of CDSS on nurses’ decisions in an actual clinical setting.
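The two exclusion criteria above amount to a simple filter over the logged sessions. The following sketch assumes a hypothetical `Session` record and made-up toy data. The field names and values are illustrative only and do not reflect the study's actual logging format or results.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One logged user session (hypothetical schema)."""
    subject_id: int
    added_positioning: bool      # did the nurse add the Positioning intervention?
    verbalized_rationale: bool   # did the nurse state why the plan was changed?
    exploratory: bool            # was the nurse clicking only to explore the interface?


def valid_sessions(sessions, require_rationale=True, exclude_exploratory=True):
    """Apply the exclusion criteria; either one can be relaxed independently."""
    kept = []
    for s in sessions:
        if exclude_exploratory and s.exploratory:
            continue
        if require_rationale and not s.verbalized_rationale:
            continue
        kept.append(s)
    return kept


def positioning_rate(sessions):
    """Share of sessions in which Positioning was added."""
    if not sessions:
        raise ValueError("no sessions to summarize")
    return sum(s.added_positioning for s in sessions) / len(sessions)


# Toy data, made up for illustration only.
toy = [
    Session(1, True, True, False),
    Session(2, False, True, False),
    Session(3, True, False, False),   # no rationale: excluded by default
    Session(4, True, True, True),     # exploratory: excluded by default
]

strict = valid_sessions(toy)
relaxed = valid_sessions(toy, require_rationale=False)
print(len(strict), positioning_rate(strict))
print(len(relaxed), positioning_rate(relaxed))
```

Keeping the two criteria as separate flags makes it straightforward to rerun an analysis on a relaxed sample and check how sensitive a result is to each exclusion rule.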

Findings

After excluding subjects who either displayed exploratory behavior or did not provide a rationale, the number of valid users was 24. The control group, extracted from the HANDS database, was considerably larger (N=333) than our test subject base. Compared to the 7.5% of the control group that added positioning, 21 of the 24 test subjects (87.5%) using our CDSS prototype chose to perform the positioning intervention. A two-tailed Fisher exact test shows this result is statistically significant (p < 0.001).
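The comparison above can be checked with a small standard-library implementation of the two-tailed Fisher exact test. This is a sketch under one stated assumption: the paper reports the control baseline only as 7.5% of N=333, so the control count of 25 used here is inferred by rounding and is not a figure given in the text. The test-group count (21 of 24) follows from the navigation results reported later (17 of 17 plus 4 of 7).

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total_tables = comb(row1 + row2, col1)

    def table_probability(x: int) -> float:
        # Hypergeometric probability of x first-column counts in row 1,
        # with all row and column totals held fixed.
        return comb(row1, x) * comb(row2, col1 - x) / total_tables

    observed = table_probability(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Sum the probabilities of every table no more likely than the observed one.
    return sum(
        p
        for p in (table_probability(x) for x in range(lowest, highest + 1))
        if p <= observed * (1 + 1e-9)  # small tolerance for floating-point ties
    )


# CDSS test group: 21 of 24 valid subjects added positioning.
cdss_added, cdss_total = 21, 24
# Control group: ASSUMED count, inferred from "7.5% of N=333".
control_total = 333
control_added = round(0.075 * control_total)

p_value = fisher_exact_two_sided(
    cdss_added,
    cdss_total - cdss_added,
    control_added,
    control_total - control_added,
)
print(f"two-sided p = {p_value:.3g}")
```

Under this assumption the computed p-value falls far below the 0.001 threshold reported in the text.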

Effect of Information Organization

Our second analysis involved comparing the effectiveness of the four prototype variants, to evaluate the effect of feature grouping and message personalization on the nurse’s positioning intervention. Table 1 shows the percentage of subjects that chose to add the positioning intervention for each prototype variation. While message personalization does not display a meaningful effect, feature grouping has a considerable impact. Specifically, presenting multiple CDSS features in a single window appears more effective than letting users “cherry pick” the information they want to visualize. Although this result is not statistically significant (p = 0.073), it does hint at a possible effect of mutually-reinforcing, grouped CDSS features in driving nurses towards a desired intervention. We plan to further explore this effect in a larger user study involving a multiple-patient handoff and the introduction of time constraints and distractors matching a real hospital setting.

Table 1.

The percentage of subjects that followed the positioning suggestion has considerable variation between feature-grouped and feature-ungrouped prototypes.

| | grouped | ungrouped |
|---|---|---|
| personalized | 83% | 60% |
| generic | 86% | 57% |

Navigation Styles

A further analysis was based on the observed patterns of access to the available CDSS features. As mentioned previously and shown in Figure 1, the initial screen of our prototype showed several CDSS features at once. In particular, several buttons were color-coded based on the importance of the alert or actions they contained (using green, yellow and red). Furthermore, red-level alert buttons would present a color animation that remained active until users acknowledged the alert in some way (for instance, opening the pain alert popup and adding the positioning intervention to the plan of care).

Of the 24 valid subjects, 17 (71%) decided to handle the red-level alerts first, and then proceeded in order of importance based on alert color (color-based navigation); 6 users (25%) started with the alert at the top of the interface and proceeded in order moving down (layout-based navigation); 1 user (4%) started elsewhere. The alerts at the top of the interface were medium priority in all prototypes, while the most important ones were close to the center of the layout. These percentages did not change significantly when considering the entire 40-subject test group. Interestingly, 17 out of 17 subjects who used color-based navigation performed the suggested positioning action, compared to 4 out of 7 subjects who used another navigation style. This result is statistically significant (p < 0.02), and remains so even if we relax the rationale and exploratory behavior criteria to extend our sample (rationale-relaxed: N=30, p < 0.02; exploratory-relaxed: N=40, p < 0.015).
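The p-value for the 24 valid subjects can be checked by hand, assuming it comes from a two-tailed Fisher exact test (the text names that test only for the earlier comparison, so this is an assumption). With the margins fixed at 24 subjects, 3 of whom did not add positioning, and 7 of whom did not use color-based navigation, the observed table places all 3 non-adopters among those 7:

```latex
P(\text{observed})
  = \frac{\binom{7}{3}\binom{17}{0}}{\binom{24}{3}}
  = \frac{35 \cdot 1}{2024}
  \approx 0.0173
```

The other tables with the same margins (2, 1 or 0 non-adopters among the 7) have probabilities 357/2024, 952/2024 and 680/2024, all larger than the observed one, so the two-sided p-value equals 0.0173, consistent with the reported p < 0.02.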

We observed that all users would eventually access the CDSS feature that allowed them to add positioning to the plan of care, so this difference in outcome is not due to a group of users simply skipping that specific feature during navigation. This finding suggests that, although the use of color and flashing interface elements helped in conveying the importance of certain specific actions, it was not enough to immediately direct all users towards that action. We explored the relation between navigation styles and user demographics and found no emerging correlation with nurses’ age or professional experience, which leads us to believe this finding may be related to the sense-making process each nurse goes through while building a picture of the patient’s status and needs. Although this process can be guided by appropriate training, it is fundamental for a CDSS interface to provide effective guidance to nursing personnel with widely different backgrounds.

Conclusion

The findings of this study have important implications for CDSS implementation and interface design that likely apply to patient care situations beyond end-of-life patients. First, well-designed CDSS interfaces that are available to nurses at the point of care and displayed during the decision-making workflow can influence care plan changes that may yield better patient outcomes. Second, the use of mutually-reinforcing, grouped CDSS features appears to affect nurses’ acceptance of care suggestions. Finally, personal navigation style predicts whether nurses will make changes recommended within the CDSS. We expect it is possible to increase the number of nurses who make recommended changes by designing an interface that steers users toward relevant CDSS features regardless of their navigation styles. We are planning a follow-up study that will simulate a more complex scenario with multiple patients, evolving plans of care and additional CDSS features.

Footnotes

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for third-party components of this work must be honored.

Contributor Information

Alessandro Febretti, Email: afebre2@uic.edu.

Janet Stifter, Email: jstift2@uic.edu.

Gail M Keenan, Email: gmkeenan@uic.edu.

Karen D Lopez, Email: kdunnl2@uic.edu.

Andrew Johnson, Email: aej@evl.uic.edu.

Diana J Wilkie, Email: diwilkie@uic.edu.

References

- 1. Zhang B, Wright A. Health care costs in the last week of life: associations with end-of-life conversations. Archives of Internal Medicine. 2009. doi: 10.1001/archinternmed.2008.587.

- 2. Belden J, Grayson R, Barnes J. Defining and testing EMR usability: Principles and proposed methods of EMR usability evaluation and rating. Healthcare Information and Management Systems Society (HIMSS); 2009.

- 3. Haggstrom DA, Saleem JJ, Russ AL, Jones J, Russell SA, Chumbler NR. Lessons learned from usability testing of the VA’s personal health record. Journal of the American Medical Informatics Association. 2011 Dec;18(Suppl 1):i13–i17. doi: 10.1136/amiajnl-2010-000082.

- 4. NANDA International. Nursing diagnoses: Definition and classification.

- 5. Moorhead S MMI, Johnson M. Outcomes Project. Nursing outcomes classification (NOC). Mosby; 2004.

- 6. Dochterman JM BG. Nursing interventions classification (NIC). Mosby; 2004.

- 7. Almasalha F, Xu D, Keenan GM, Khokhar A, Yao Y, Chen Y-C, Johnson A, Ansari R, Wilkie DJ. Data mining nursing care plans of end-of-life patients: a study to improve healthcare decision making. International Journal of Nursing Knowledge. 2013 Feb;24(1):15–24. doi: 10.1111/j.2047-3095.2012.01217.x.

- 8. Febretti A, Lopez K, Stifter J. A Component-Based Evaluation Protocol for Clinical Decision Support Interfaces. HCI International. 2013. doi: 10.1007/978-3-642-39229-0_26.