Abstract

Several stimulus factors are important in multisensory integration, including the spatial and temporal relationships of the paired stimuli as well as their effectiveness. Changes in these factors have been shown to dramatically change the nature and magnitude of multisensory interactions. Typically, these factors are considered in isolation, although there is a growing appreciation for the fact that they are likely to be strongly interrelated. Here, we examined interactions between two of these factors, spatial location and effectiveness, in dictating performance in the localization of an audiovisual target. A psychophysical experiment was conducted in which participants reported the perceived location of visual flashes and auditory noise bursts presented alone and in combination. Stimuli were presented at four spatial locations relative to fixation (0°, 30°, 60°, 90°) and at two intensity levels (high, low). Multisensory combinations were always spatially coincident and of matching intensity (high-high or low-low). In responding to visual stimuli alone, localization accuracy decreased and response times (RTs) increased as stimuli were presented at more eccentric locations. In responding to auditory stimuli, performance was poorest at the 30° and 60° locations. For both visual and auditory stimuli, accuracy was greater and RTs were faster for more intense stimuli. For responses to visual-auditory stimulus combinations, performance enhancements were found at locations in which the unisensory performance was lowest, results concordant with the concept of inverse effectiveness. RTs for these multisensory presentations frequently violated race-model predictions, implying integration of these inputs, and a significant location-by-intensity interaction was observed. Performance gains under multisensory conditions were larger as stimuli were positioned at more peripheral locations, and this increase was most pronounced for the low-intensity conditions. These results provide strong support that the effects of stimulus location and effectiveness on multisensory integration are interdependent, with both contributing to the overall effectiveness of the stimuli in driving the resultant multisensory response.

1. Introduction

Our brains are continually receiving sensory information from the environment. Each sensory system is tasked with receiving and processing this information and each accomplishes this task in different ways. Often, information from one modality is accompanied by corresponding information in another, particularly when this information is derived from the same event. To process such stimuli more efficiently, our brains integrate this information, often in ways that result in substantial changes in behavior and perception (G. A. Calvert, Spence, & Stein, 2004; Murray & Wallace, 2012; B.E. Stein & Meredith, 1993). Several of the more familiar and compelling examples of these multisensory-mediated changes in behavior include improvements in target detection (Frassinetti, Bolognini, & Ladavas, 2002; Lovelace, Stein, & Wallace, 2003), improvements in target localization and orientation (Ohshiro, Angelaki, & DeAngelis, 2011; B. E. Stein, Huneycutt, & Meredith, 1988), and speeding of reaction or response times (RTs; Amlot, Walker, Driver, & Spence, 2003; G. A. Calvert & Thesen, 2004; Corneil, Van Wanrooij, Munoz, & Van Opstal, 2002; Diederich, Colonius, Bockhorst, & Tabeling, 2003; Forster, Cavina-Pratesi, Aglioti, & Berlucchi, 2002; Frens, Van Opstal, & Van der Willigen, 1995; Harrington & Peck, 1998; Hershenson, 1962; Hughes, Reuter-Lorenz, Nozawa, & Fendrich, 1994; Molholm, et al., 2002).

In an effort to “decide” what should be integrated (and what should not be integrated), the brain capitalizes on the statistical regularities of cues from the different senses that provide important information as to the probability that they are related (Alais & Burr, 2004; Altieri, Stevenson, Wallace, & Wenger, 2015; Baier, Kleinschmidt, & Muller, 2006; den Ouden, Friston, Daw, McIntosh, & Stephan, 2009; Massaro, 1984; McIntosh & Gonzalez-Lima, 1998; Polley, et al., 2008; Shinn-Cunningham, 2008; Wallace & Stein, 2007). Thus, multisensory integration (and its resultant behavioral and perceptual benefits) is in part determined by physical factors associated with the stimuli to be paired. Several stimulus factors have been identified as being integral to this process. Preeminent among these are the spatial and temporal relationships of the stimuli and their relative effectiveness. As a general rule, the more spatially and temporally proximate two signals are, the more likely they are to influence one another’s processing. Furthermore, weakly effective sensory signals typically result in the largest multisensory gains when they are paired, a phenomenon known as inverse effectiveness.

These stimulus-dependent factors and their influence on multisensory processing have proven to be remarkably robust across a wide array of experimental measures. These include: the activity of individual neurons in animal models [space (Meredith & Stein, 1986a), time (Meredith, Nemitz, & Stein, 1987), effectiveness (Meredith & Stein, 1986b)], neural responses in humans as measured by fMRI and PET [space (Macaluso, George, Dolan, Spence, & Driver, 2004), time (Macaluso, et al., 2004; L. M. Miller & D'Esposito, 2005; Stevenson, Altieri, Kim, Pisoni, & James, 2010; Stevenson, VanDerKlok, Pisoni, & James, 2011), effectiveness (James & Stevenson, 2012; James, Stevenson, & Kim, 2009, 2012; Nath & Beauchamp, 2011; Stevenson & James, 2009; Stevenson, Kim, & James, 2009; Werner & Noppeney, 2009)] and EEG [space (Zhou, Zhang, Tan, & Han, 2004), time (Schall, Quigley, Onat, & Konig, 2009; Senkowski, Talsma, Grigutsch, Herrmann, & Woldorff, 2007; Talsma, Senkowski, & Woldorff, 2009), effectiveness (Stevenson, Bushmakin, et al., 2012)], as well as human behavior and perception [space (Bolognini, Frassinetti, Serino, & Ladavas, 2005; Frassinetti, et al., 2002) but see (Murray, et al., 2005), time (Conrey & Pisoni, 2006; Dixon & Spitz, 1980; Hillock, Powers, & Wallace, 2011; Keetels & Vroomen, 2005; Stevenson & Wallace, 2013; Stevenson, Zemtsov, & Wallace, 2012a; van Atteveldt, Formisano, Blomert, & Goebel, 2007; van Wassenhove, Grant, & Poeppel, 2007; Wallace, Roberson, et al., 2004), effectiveness (Stevenson, Zemtsov, & Wallace, 2012b; Sumby & Pollack, 1954) but see (Chandrasekaran, Lemus, Trubanova, Gondan, & Ghazanfar, 2011; Ross, Saint-Amour, Leavitt, Javitt, & Foxe, 2007)]. 
It should also be noted here that, aside from these bottom-up factors, other higher-level factors such as task, semantic congruence, and context are likely to also be very important in dictating the final response (Foxe, 2008; Otto, Dassy, & Mamassian, 2013; Stevenson, Wallace, & Altieri, 2014; Ten Oever, Sack, Wheat, Bien, & Van Atteveldt, 2013).

Although these factors have largely been studied independently in this prior work (e.g., exclusive manipulation of the spatial relationship of the paired stimuli), there is an intuitive interdependency between them that has not been thoroughly explored. For example, manipulating the absolute spatial location of multisensory stimuli impacts the relative effectiveness of these stimuli because of factors such as changes in the sensory acuity of the peripheral organs. Indeed, recent neurophysiological (Carriere, Royal, & Wallace, 2008; Krueger, Royal, Fister, & Wallace, 2009; Royal, Carriere, & Wallace, 2009) and psychophysical (Cappe, Thelen, Romei, Thut, & Murray, 2012; Macaluso, et al., 2004; Stevenson, Fister, Barnett, Nidiffer, & Wallace, 2012) studies have begun to shed light on the nature of these interdependencies.

These studies serve as motivation for the current study, which seeks to examine the interdependency of spatial location and stimulus effectiveness in dictating one aspect of human performance: target localization. The work is predicated on the evidence that manipulations of the location of visual or auditory stimuli result in changes in the accuracy of detecting the location, or changes in the location, of the stimuli (Bock, 1993; Carlile, Leong, & Hyams, 1997; Mills, 1958, 1960; Yost, 1974). Therefore, our hypothesis was that changing the location of a stimulus should result in changes in the effectiveness of that stimulus. In turn, the magnitude of behavioral gains from multisensory presentations should reflect this change of effectiveness across space in a manner mirroring inverse effectiveness, providing insights into how space and effectiveness interact to dictate multisensory responses. To explore this hypothesis, we tested individuals’ ability to localize visual, auditory, and paired audiovisual targets as a function of both stimulus location and stimulus intensity. By examining localization accuracy and RTs, we then characterized the multisensory gains seen in responses to these different stimulus combinations (Stevenson, Ghose, et al., 2014). Testing the principles of multisensory integration together and investigating interactions between them would lend support to the notion that the principles are strongly interrelated, and provide novel mechanistic insights into the nature of such interactions.

2. Methods

2.1. Participants

Participants included fifty-one Vanderbilt undergraduate students (21 male, mean age = 18.9, SD = 1, age range = 18-21) who were compensated with class credit. All recruitment and experimental procedures were approved by the Vanderbilt University Institutional Review Board. Exclusionary criteria, applied prior to in-depth data analysis, included a failure to detect foveal stimuli (at 0°) at a rate above 80% (N = 5), or a failure to report foveal, synchronous stimuli as synchronous at a 50% rate (N = 5). Finally, one subject was excluded for repeatedly pressing a single button on the response box for the entirety of the experiment. This study is part of a larger study investigating the interaction of spatial, temporal, and effectiveness factors on multisensory processing (Krueger Fister, Stevenson, Nidiffer, Barnett, & Wallace, in revision; Stevenson, Fister, et al., 2012).

2.2. Stimuli

Visual and auditory stimuli were presented using E-Prime version 2.0.8.79 (Psychology Software Tools, Inc; PST). Visual stimuli were presented on two Samsung Sync Master 2233RZ monitors at 100 Hz, arranged so that each monitor crossed the circumference of a circle centered on the participant’s nasion at a distance of 46 cm at 0°, 30°, 60°, and 90° azimuth, with all presentations in the right visual field (Figure 1a, b). All visual stimuli were white circles measuring 7 mm in diameter, or approximately 1° of visual angle. Visual stimulus durations were 10 ms, with timing confirmed using a Hameg 507 oscilloscope with a photovoltaic cell. Visual stimuli were presented at two luminance levels, 7.1 cd/m² (low) and 215 cd/m² (high), on a black background of 0.28 cd/m², measured with a Minolta Chroma Meter CS-100. Visual stimuli were presented at each spatial location (4) and each salience level (2), for a total of eight visual-only conditions.

Figure 1. Stimulus Apparatus and Trial Structure.

(A, B) Auditory and visual stimuli were presented on a two-monitor array such that stimulus locations at 0°, 30°, 60°, and 90° were equidistant from the nasion. (C) Trial structure for the task. After fixating a cross for 500 – 1000 ms, subjects were presented with an auditory, visual, or multisensory stimulus. The subjects then had 2000 ms to respond with the location of the stimulus or indicate that there was no stimulus.

Auditory stimuli were presented via four separate speakers mounted on the top of the two monitors at 0°, 30°, 60°, and 90° azimuths and angled toward the participant, matching the visual presentations. Speakers were mounted 2 cm, or 2.5°, above their respective visual presentation. Auditory stimuli consisted of a frozen white-noise burst generated at 44100 Hz with the MATLAB rand function with a 5 ms rise/fall cosine gate. Auditory stimulus duration was held constant at 10 ms, with timing confirmed using a Hameg 507 oscilloscope. Auditory stimuli were presented at two intensity levels, 46 dB SPL (low) and 64 dB SPL (high), with a background noise at 41 dB SPL, measured with a Larson Davis sound level meter, Model 814. Auditory stimuli were presented at each spatial location (4) and each salience level (2), for a total of eight auditory-only conditions.
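The angular sizes quoted for the stimuli follow from the 46 cm viewing distance. The short sketch below reproduces that arithmetic; the function names are introduced here for illustration only, and the calculation assumes a flat target viewed straight on.

```python
import math

VIEWING_DISTANCE_CM = 46.0  # distance from the participant to each stimulus location


def visual_angle_deg(size_cm, distance_cm):
    """Full angle subtended by a target of the given size centered on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def offset_angle_deg(offset_cm, distance_cm):
    """Angle between the line of sight and a point displaced by offset_cm."""
    return math.degrees(math.atan(offset_cm / distance_cm))


# 7 mm visual target: a little under 1 degree, i.e. "approximately 1 degree".
print(round(visual_angle_deg(0.7, VIEWING_DISTANCE_CM), 2))
# Speaker mounted 2 cm above the visual target: about 2.5 degrees of elevation.
print(round(offset_angle_deg(2.0, VIEWING_DISTANCE_CM), 2))
```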

Audiovisual (AV) conditions consisted of pairs of the auditory and visual stimuli described above. Presentations were always spatially coincident, and salience levels were always matched (high-high and low-low). AV conditions were presented at each spatial location (4) and each salience level (2) for a total of eight AV conditions. Additionally, a “blank” no stimulus condition was also included in which no auditory or visual stimulus was presented while all aspects of the trial remained consistent. In total 25 unique conditions were presented, eight visual only, eight auditory only, eight AV, and one blank. In addition to these trials that are relevant to this report, additional presentations including temporal synchrony variations of these stimuli were also included. These modulations are reported elsewhere (Stevenson, Fister, et al., 2012) and are incorporated in these analyses only in the exclusionary criteria listed above.

2.3. Procedure

Participants were seated inside an unlit WhisperRoom™ (SE 2000 Series) with their forehead placed against a Headspot (University of Houston Optometry) forehead rest locked in place, with a chinrest and chair height adjusted to the forehead rest. Participants were asked to fixate on a cross at all times, and were monitored by closed-circuit infrared cameras throughout the experiment to ensure fixation. Participants were instructed to make all responses as quickly and as accurately as possible. Each trial began with a fixation screen for 1 s with a fixation cross in visual center at 0° elevation, 0° azimuth (Figure 1c). Fixation was followed by a blank screen with a randomly jittered duration between 500 and 1000 ms, with stimulus presentation immediately after. Following stimulus presentation, a response screen appeared including the prompt, “Where was it?” below a fixation cross, with the response options 1-4 displayed on screen at each spatial location coincident with stimulus presentation, and a fifth option (5) for no stimulus detected. Participants completed the spatial location task by responding via a five-button PST serial response box where 1 = 0°, 2 = 30°, 3 = 60°, 4 = 90°, and 5 = no stimulus. Hence, the chance of a correct response from a random guess was 20%. Following the participant’s response, the fixation cross appeared and the subsequent trial began.

Participants completed four sessions, each lasting approximately 20 minutes. Each session included five stimulus presentations with each of the 25 conditions in a randomized order for a total of 125 trials per session. Across the four sessions, participants completed 20 trials per condition, for a total of 600 trials per participant. Participants were given breaks in between sessions as needed. Including breaks, total experiment time was approximately 90 minutes.

2.4. Analysis

An α-level of 0.05 was used for all analyses. Response accuracies were measured for the spatial localization task over each spatial location and stimulus intensity. Accuracies were measured as the percentage of total trials per condition in which the subject judged the location of the stimulus correctly. A three-way ANOVA (location × intensity × sensory modality) was used as an omnibus test with two-way ANOVAs (location × intensity) used as follow-up tests. Multisensory enhancement was quantified by comparing multisensory performance against the greater performance of the unisensory components. Enhancements were compared across spatial location and stimulus intensity using a three-way, repeated-measures ANOVA. Within each significant main effect, significance of gain of each condition was determined by a protected, one-sample t-test.
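The enhancement measure described above can be sketched as a simple difference score: multisensory accuracy minus the better of the two unisensory accuracies. The function name and the accuracy values below are hypothetical and serve only to illustrate the computation.

```python
def multisensory_gain(acc_av, acc_a, acc_v):
    """Multisensory accuracy minus the better unisensory accuracy (same units as the inputs)."""
    return acc_av - max(acc_a, acc_v)


# Hypothetical proportions correct for one subject in one location-by-intensity cell.
gain = multisensory_gain(acc_av=0.80, acc_a=0.65, acc_v=0.70)
print(f"gain = {gain:+.2f}")  # positive values indicate multisensory enhancement
```

Referencing the best unisensory condition, rather than the mean of the two, makes the measure conservative: a positive value cannot be produced simply by the multisensory response matching the stronger modality.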

Only trials in which subjects correctly localized stimuli and only responses made within 2000 ms were considered in RT analysis. RT cumulative distribution functions (CDFs), specifying at each time point the proportion of total responses that were made, were computed for each condition (i.e., auditory, visual and multisensory; high and low intensity; and 0, 30, 60, and 90°) and subject. In order to account for stimulus redundancy in multisensory stimuli when assessing multisensory gain, multisensory CDFs were compared to an independent race-model computed from a distribution comprised of the minima of randomly selected auditory and visual RTs without replacement (Raab, 1962). In this model, for all reaction times t > 0:
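Assuming independent auditory and visual processes, the race-model prediction takes the standard probability-summation form. The notation here is introduced for illustration: $F_A(t)$ and $F_V(t)$ are the auditory and visual RT CDFs, and $F_{race}(t)$ is the predicted CDF of the minima.

$$
F_{race}(t) = P\big(\min(RT_A, RT_V) \le t\big) = F_A(t) + F_V(t) - F_A(t)\,F_V(t)
$$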

This race-model prediction represents a statistical threshold (viz. probability summation) such that probabilities along the multisensory distribution that are greater than the race-model prediction are said to violate the race model and thus are indicative of integration of unisensory components. The race model relies on an assumption of context invariance. That is, it implies that the processing of one signal is independent of the processing of another (Ashby & Townsend, 1986; Luce, 1986; Townsend & Wenger, 2004). Significant race-model violations were determined using the Kolmogorov-Smirnov (KS) test for each CDF for each condition on an individual-subject basis.

To assess the extent of race-model violations [VAV(t)] across space and intensity, the predicted CDF was subtracted from the multisensory CDF for each subject and condition in which
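With $F_{AV}(t)$ denoting the multisensory CDF and $F_{race}(t)$ the race-model prediction (symbols assumed here for illustration), the subtraction described above can be written as:

$$
V_{AV}(t) = F_{AV}(t) - F_{race}(t)
$$

Positive values of $V_{AV}(t)$ mark time points at which multisensory responses were faster than the race model predicts.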

Group race-model inequality distributions were computed by averaging V_AV(t) across all subjects at each RT, t. These resulting distributions are known as the race-model inequalities (J. Miller, 1982). From these distributions, race-model violation distributions were computed by averaging only positive portions of individual subjects’ race-model inequalities (i.e., V_AV > 0). These distributions were compared across stimulus location and intensity in two ways. First, following Colonius and Diederich (2006), the area under the race-model violation distributions was computed for each subject and condition and then analyzed using repeated-measures ANOVA. Comparisons between intensity levels or stimulus location were carried out via two-sample t-tests. Second, for individual subjects, race-model inequalities were compared across intensity level (high - low) and spatial location (0° − 30°, etc.). This difference-of-difference measure is a powerful means to highlight interaction effects, and has been used previously in a number of domains, including fMRI (James, Kim, & Stevenson, 2009; James & Stevenson, 2012; Stevenson, et al., 2009), ERPs (Stevenson, Bushmakin, et al., 2012) and in examining RT distributions (Stevenson, Fister, et al., 2012). In addition, such difference-of-difference measures were used to initially reveal simple interaction effects with RT measures (Sternberg, 1969a, 1969b, 1975, 1998). For the resulting contrasts, probability differences were placed in 100 ms bins and bins were compared using two-sample t-tests (for example, high - low at 0° was compared to high - low at 30°).
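The per-subject steps described above (empirical CDFs, a race-model distribution built from minima of randomly paired unisensory RTs, the inequality curve, and the area under its positive portion) can be sketched as follows. This is a minimal illustration, not the analysis code used in the study: the function names, the 10 ms time grid, the fixed random seed, and the RT values are all assumptions made for the example.

```python
import random
from bisect import bisect_right


def ecdf(rts, grid):
    """Empirical CDF: proportion of RTs at or below each time point in grid."""
    ordered = sorted(rts)
    return [bisect_right(ordered, t) / len(ordered) for t in grid]


def race_model_rts(a_rts, v_rts, rng):
    """Pair auditory and visual RTs at random, without replacement, and keep the minimum."""
    n = min(len(a_rts), len(v_rts))
    return [min(a, v) for a, v in zip(rng.sample(a_rts, n), rng.sample(v_rts, n))]


def race_model_violation(av_rts, a_rts, v_rts, grid, rng):
    """Return the inequality curve and the area under its positive part."""
    step = grid[1] - grid[0]
    f_av = ecdf(av_rts, grid)
    f_race = ecdf(race_model_rts(a_rts, v_rts, rng), grid)
    inequality = [m - r for m, r in zip(f_av, f_race)]
    area = sum(max(d, 0.0) for d in inequality) * step
    return inequality, area


# Made-up RTs (ms) for one hypothetical subject and condition.
rng = random.Random(0)
auditory = [420, 455, 470, 510, 530]
visual = [400, 440, 465, 495, 560]
audiovisual = [350, 380, 395, 430, 450]
grid = list(range(0, 2001, 10))  # 10 ms steps up to the 2000 ms response window

inequality, area = race_model_violation(audiovisual, auditory, visual, grid, rng)
print(f"violation area: {area:.1f} (proportion x ms)")
```

Areas computed this way for each subject and condition would then enter the repeated-measures ANOVA. Because the pairing of unisensory RTs is random, the race-model CDF varies slightly from run to run unless the seed is fixed.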

3. Results

3.1. Changes in localization accuracy as a function of stimulus location and intensity

In measures of accuracy, where subjects were asked to identify stimulus location, an omnibus three-way ANOVA (factors of modality [i.e., visual alone, auditory alone, visual-auditory], location and intensity) showed significant main effects, significant two-way interactions, and a significant three-way interaction. These statistics are summarized in Table 1. Follow-up, two-way ANOVAs (factors of stimulus location and intensity) revealed significant main effects of spatial location for the auditory (F(3,117) = 14.306, p = 5.2 × 10⁻⁸, partial η² = 0.27), visual (F(3,117) = 286.961, p = 1.7 × 10⁻⁵⁴, partial η² = 0.88), and multisensory (F(3,117) = 20.041, p = 1.5 × 10⁻¹⁰, partial η² = 0.34) conditions (Figure 2a, b). For these spatial manipulations, subjects were less accurate at judging the location of visual stimuli at peripheral locations compared to stimuli that were centrally located (0° vs. 90°; t = 26.36, p = 1.84 × 10⁻²⁶, d = 8.44). A similar pattern of decreasing accuracy with increasing eccentricity held for auditory stimuli presented from center to 60° (t = 5.50, p = 2.6 × 10⁻⁶, d = 1.76), albeit at a slower rate than in the visual condition. However, auditory localization accuracy increased for the most peripheral (90°) location (t = 3.37, p = 0.002, d = 1.08).

Table 1.

Results from three-way ANOVA

| Effect | df (numerator) | df (denominator) | F | p |
|---|---|---|---|---|
| A – Sensory Modality | 2 | 78 | 417.771 | 2.11 × 10⁻⁴² |
| B – Intensity Level | 1 | 39 | 104.786 | 1.31 × 10⁻¹² |
| C – Spatial Location | 3 | 117 | 124.385 | 3.06 × 10⁻³⁶ |
| A × B | 2 | 78 | 87.767 | 1.08 × 10⁻²⁰ |
| A × C | 6 | 234 | 120.268 | 1.29 × 10⁻⁶⁸ |
| B × C | 3 | 117 | 11.155 | 1.71 × 10⁻⁶ |
| A × B × C | 6 | 234 | 30.42 | 6.92 × 10⁻²⁷ |

Figure 2. Accuracy Performance Across Space and Intensity.

(A, B) Localization performance declined for auditory and visual stimuli for high (A) and low (B) intensity conditions. (C) To assess multisensory enhancement, the greatest unisensory performance was subtracted from the multisensory performance. Enhancements across space follow the pattern of inverse effectiveness.

Main effects of intensity (Figure 2a, b) were highly significant in the visual and multisensory conditions (F(1,39) = 195.076, p = 1.1 × 10⁻¹⁶, partial η² = 0.83 and F(1,39) = 14.553, p = 4.7 × 10⁻⁴, partial η² = 0.27, respectively). In contrast, the auditory intensity effect failed to reach significance (F(1,39) = 2.162, p = 0.15). Thus, for both the visual and audiovisual conditions, high-intensity stimuli were more accurately localized than low-intensity stimuli. In addition, significant interactions between location and intensity were found in both the auditory and visual modalities (F(3,117) = 9.47, p = 1.2 × 10⁻⁵, partial η² = 0.20 and F(3,117) = 38.31, p = 1.9 × 10⁻¹⁷, partial η² = 0.50, respectively), but not in the multisensory condition (F(3,117) = 2.08, p = 0.11).
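The partial η² values reported with these F statistics can be recovered from the F value and its degrees of freedom using the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies that identity to several of the statistics above; the function name is introduced here for illustration.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error) taken from the accuracy results in the text.
reported = [
    ("auditory, location", 14.306, 3, 117),
    ("multisensory, location", 20.041, 3, 117),
    ("visual, intensity", 195.076, 1, 39),
    ("multisensory, intensity", 14.553, 1, 39),
]
for label, f_value, df_effect, df_error in reported:
    print(f"{label}: {partial_eta_squared(f_value, df_effect, df_error):.2f}")
```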

In summarizing these results, localization accuracy was strongly influenced by the location of auditory, visual, and multisensory stimuli. Accuracy was found to decrease for the localization of visual and multisensory targets as intensity was decreased. The effects of changing intensity and location were found to interact for unisensory auditory and visual conditions, but not under multisensory conditions.

Along with examining absolute performance as a function of stimulus location and intensity, we also assessed the amount of multisensory gain, indexed as a comparison between multisensory accuracy and the better of the two unisensory accuracies. This measure yielded an effect of spatial location (F(3,117) = 3.663, p = 0.014, partial η² = 0.09), which was driven by increased multisensory gain at 30° at both high (t = 3.033, p = 0.004, d = 0.97) and low (t = 3.499, p = 0.001, d = 1.12) intensities and in the low-intensity condition at 60° (t = 2.448, p = 0.02, d = 0.79). In contrast, no significant interaction between location and intensity was observed (F(3,117) = 1.76, p = 0.16).

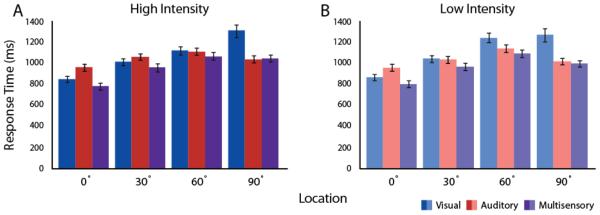

3.2. Changes in mean response times as a function of stimulus location and intensity

As with localization accuracy, RTs showed a main effect of spatial location in the auditory (F(3,117) = 22.178, p = 1.9 × 10⁻¹¹, partial η² = 0.36), visual (F(3,117) = 102.758, p = 4.9 × 10⁻³³, partial η² = 0.72), and multisensory (F(3,117) = 75.847, p = 1.3 × 10⁻²⁷, partial η² = 0.66) conditions (Figure 3, bottom). For the visual condition, subjects were slower to respond to stimuli located in the periphery compared to central stimuli (0° vs. 90°; t = 10.80, p = 2.8 × 10⁻¹³, d = 3.46). The auditory spatial effect followed a pattern similar to that seen for accuracy. RTs first increased as stimuli were located at more peripheral locations, but with a rate of increase slower than that seen for vision. This effect reached its maximum at 60° (0° vs. 60°; t = 7.19, p = 1.2 × 10⁻⁸, d = 2.30), after which RTs were faster at 90° (60° vs. 90°; t = 4.47, p = 6.6 × 10⁻⁵, d = 1.43). A main effect of intensity on reaction times was only significant for visual stimuli (F(1,39) = 5.463, p = 2.9 × 10⁻⁶, partial η² = 0.12; Figure 3). We found significant interactions in RTs between spatial location and intensity in the visual and multisensory conditions (F(3,117) = 5.754, p = 0.001, partial η² = 0.13 and F(3,117) = 3.476, p = 0.018, partial η² = 0.08, respectively), but not in the auditory condition (Figure 3).

Figure 3. Mean Response Times Across Space and Intensity.

(A, B) Mean RTs generally increased (i.e., responses slowed) with more peripheral presentations for high (A) and low (B) intensity conditions. Likewise, mean RTs were slower for low- than high-intensity stimulus presentations.

3.3. Changes in multisensory response times as a function of stimulus location and intensity: race-model analyses

From performance for the two unisensory conditions, we calculated a race-model prediction to which we compared the multisensory cumulative distribution function (CDF, Figure 4a-b). When the multisensory CDF falls significantly to the left of the modeled CDF, it is considered a violation of the race model, and thus multisensory facilitation (J. Miller, 1982). There were significant race-model violations present in all subjects but one, with just over half of subjects (N = 21) having violations in 5 or more of the multisensory conditions.

Figure 4. Response Time CDFs and Race-Model Inequalities.

(A – D) Step-by-step analysis of response time CDFs for high and low intensities at 0° location. (A and B) Group average multisensory (purple lines) and race-model (black line) CDF for high (A) and low (B) intensity stimuli at the central location. (C) Group average race-model inequality for high and low intensities at 0° location, computed by subtracting race-model CDFs from multisensory CDFs for each subject. (D) Group average race-model violations for high and low intensities at 0° location. Race-model violations were calculated by averaging only positive portions of each subject’s race-model inequality. (E – H) CDF analyses shown in (C) and (D) for all stimulus conditions and average areas under these curves. (E) Race-model inequalities for all spatial locations and intensity levels averaged across subjects. (F) Average area under the curve for race-model inequalities for all conditions across subjects. (G) Race-model violation curves for all spatial locations and intensity levels averaged across subjects. Curves decrease in amplitude across space for high-intensity stimuli while low-intensity curves increase in amplitude across space. (H) Average area under the curve for race-model violations, as in (F), showed a significant interaction driven by differences primarily at 60°. Central locations showed no difference for intensity.

A comprehensive comparison of multisensory performance with race-model predictions revealed that for all conditions (8) and all individuals (40), there were 200 significant race-model violations out of 320 possible (p = 4.5 × 10−6). Across conditions, the number of subjects with significant violations was between 23 and 29 (out of the 40 possible). In general, more race-model violations were found at peripheral locations and lower intensities (Table 2).

Table 2.

Race Model Violations per Condition

| Intensity | Location | Violations | p |
|---|---|---|---|
| High | 0° | 25 | 0.036585 |
| High | 30° | 23 | 0.080702 |
| High | 60° | 27 | 0.010944 |
| High | 90° | 23 | 0.080702 |
| Low | 0° | 23 | 0.080702 |
| Low | 30° | 23 | 0.080702 |
| Low | 60° | 29 | 0.002103 |
| Low | 90° | 27 | 0.010944 |
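The text does not state how the per-condition p values in Table 2 were obtained. As an observation only, the tabulated values coincide numerically with the binomial point probability of exactly k of the 40 subjects showing a violation when each subject has a 50% chance of doing so. The sketch below reproduces that arithmetic; it should not be read as the authors' stated procedure, and the function name is hypothetical.

```python
from math import comb


def binomial_point_probability(k, n, p=0.5):
    """Probability of exactly k successes in n independent trials with success rate p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


N_SUBJECTS = 40
for violations in (23, 25, 27, 29):  # violation counts appearing in Table 2
    prob = binomial_point_probability(violations, N_SUBJECTS)
    print(f"{violations} of {N_SUBJECTS}: {prob:.6f}")
```

A point probability is smaller than the corresponding one-tailed probability of observing k or more violations, so a tail-based test on the same counts would give larger p values.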

In an additional test for multisensory facilitation, we also calculated the race-model inequality by subtracting the race-model CDF from the multisensory CDF (Figure 4c,e). In this measure, all positive values indicate race-model violations, clear evidence for a lack of independence between visual and auditory processes. Race-model violation distributions are shown for all subjects in Figure 4d,g. As a general rule, race-model violations occurred in the earliest epochs of the response distributions. To quantitatively assess these multisensory gains in RT, we calculated the area under the curve for individual race-model violations (Figure 4h). The main effect of location was significant in these analyses (F(3,117) = 2.905, p = 0.038, partial η² = 0.07), with race-model violations increasing as stimuli moved more peripherally. The main effect of intensity was also significant (F(1,39) = 5.265, p = 0.027, partial η² = 0.12), with violations being greater for low- vs. high-intensity stimuli. Area-under-the-curve calculations also yielded a significant interaction between location and intensity (F(3,117) = 2.788, p = 0.044, partial η² = 0.07). Thus, as stimuli were presented at increasingly peripheral locations, there was greater multisensory facilitation in the low-intensity condition than in the high-intensity condition. Pair-wise comparisons revealed a significant difference between the two intensity conditions at the 60° location (t = 2.609, p = 0.012, d = 0.84). In contrast, the areas under the race-model inequality curves were not statistically different between the high- and low-intensity conditions at 0°, 30°, and 90° (t = 1.104, p = 0.28; t = 0.25, p = 0.8; and t = 1.402, p = 0.17, respectively).

Finally, analyses were also carried out to identify points along the CDFs that showed significant enhancement in an interaction of location and intensity. First, race-model violations for low-intensity stimuli were contrasted with their high-intensity counterparts (Figure 5a). Positive values represent greater violation in the high intensity condition. These distributions tended to become more negative for peripheral stimuli (Table 3). To test this, we then made comparisons of these CDF contrasts between the central location and each of the peripheral locations, which revealed an interesting pattern of interactions (Figure 5b, c, and d). Here, positive values represent greater race-model violations for low-intensity and peripheral stimuli. There were no significant bins when comparing between 0° and 30°. In contrast, for the 0° − 60° and 0° − 90° contrasts there were a number of significant bins, as denoted by the asterisks (p < 0.05, Table 3). These analyses thus reveal that for increasingly peripheral locations, there was a greater multisensory facilitation of RT for low-intensity stimuli when compared to high-intensity stimuli, indicating an interaction of location and intensity in multisensory facilitation of RTs.
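The difference-of-difference contrast described above can be sketched for a single subject as follows: the high − low contrast at a peripheral location is subtracted from the high − low contrast at the central location, and the resulting curve is averaged within fixed-width bins. The function names, the coarse 50 ms sampling, and the curve values are assumptions for illustration; the statistical comparison of bins across subjects is not shown.

```python
def bin_means(curve, samples_per_bin):
    """Average consecutive samples of a curve within fixed-width bins."""
    bins = [curve[i:i + samples_per_bin] for i in range(0, len(curve), samples_per_bin)]
    return [sum(b) / len(b) for b in bins]


def interaction_contrast(high_central, low_central, high_peripheral, low_peripheral):
    """(high - low) at the central location minus (high - low) at a peripheral location."""
    return [
        (hc - lc) - (hp - lp)
        for hc, lc, hp, lp in zip(high_central, low_central, high_peripheral, low_peripheral)
    ]


# Made-up race-model violation curves sampled every 50 ms (two samples per 100 ms bin).
high_0 = [0.02, 0.04, 0.05, 0.03]
low_0 = [0.02, 0.03, 0.05, 0.03]
high_60 = [0.01, 0.02, 0.03, 0.02]
low_60 = [0.03, 0.06, 0.08, 0.04]

contrast = interaction_contrast(high_0, low_0, high_60, low_60)
print([round(x, 3) for x in bin_means(contrast, samples_per_bin=2)])
```

With this sign convention, positive bins indicate relatively greater violations for low-intensity stimuli at the peripheral location, matching the interpretation given in the text.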

Figure 5. Response Time CDF Interactions.

(A) CDF contrasts for main effect of intensity were calculated by subtracting each subject’s low-intensity race-model violation curve from their high-intensity curve and averaging across subjects. Positive values represent greater enhancement in high-intensity conditions. There is a general trend for the CDF contrasts to be more negative in the periphery. (B,C,D) CDFs from (A) were subtracted to produce interaction CDFs. CDFs were divided into 100 ms bins for statistical analysis. Shaded regions represent the 95% confidence intervals. (B) The 0° − 30° interaction yielded no significant bins. (C,D) Significant bins were present in the 0° − 60° and 0° − 90° interactions.

Table 3.

Bin-by-bin CDF comparisons

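| Contrast | Bin (ms) | t | p |
|---|---|---|---|
| 0° high - low | 1500 | 0.932 | 0.0858 |
| 60° high - low | 1000 | 0.943 | 0.0717 |
| 60° high - low | 1100 | 0.984 | 0.0205 |
| 60° high - low | 1200 | 0.99 | 0.0128 |
| 60° high - low | 1300 | 0.99 | 0.0164 |
| 60° high - low | 1400 | 0.937 | 0.0802 |
| 60° high - low | 1700 | 0.955 | 0.0566 |
| 90° high - low | 500 | 0.935 | 0.0821 |
| 90° high - low | 600 | 0.93 | 0.0879 |
| 90° high - low | 700 | 0.974 | 0.0328 |
| 90° high - low | 800 | 0.981 | 0.0242 |
| 90° high - low | 900 | 0.923 | 0.0979 |
| 90° high - low | 1800 | 0.953 | 0.0595 |
| 90° high - low | 1900 | 0.935 | 0.0826 |
| 0° - 60° × high - low interaction | 800 | 0.958 | 0.0529 |
| 0° - 60° × high - low interaction | 900 | 0.988 | 0.0156 |
| 0° - 60° × high - low interaction | 1000 | 0.985 | 0.0188 |
| 0° - 60° × high - low interaction | 1100 | 0.992 | 0.0103 |
| 0° - 60° × high - low interaction | 1200 | 0.992 | 0.0104 |
| 0° - 60° × high - low interaction | 1300 | 0.992 | 0.0106 |
| 0° - 60° × high - low interaction | 1700 | 0.972 | 0.0351 |
| 0° - 90° × high - low interaction | 600 | 0.962 | 0.0477 |
| 0° - 90° × high - low interaction | 700 | 0.970 | 0.0375 |
| 0° - 90° × high - low interaction | 800 | 0.985 | 0.019 |
| 0° - 90° × high - low interaction | 900 | 0.98 | 0.0251 |
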

4. Discussion

Here, we present a novel finding showing an interactive effect between the location and intensity of paired audiovisual stimuli in dictating multisensory gains in human performance. Consistent with prior work (Bock, 1993; Carlile, et al., 1997; Green & Swets, 1966; Hecht, Shlaer, & Pirenne, 1942), localization performance suffers when visual and auditory stimuli are presented more peripherally or at lower intensity. In contrast, pairing these stimuli yields larger multisensory gains as the stimuli become less effective. Indeed, the greatest effect is seen for low-intensity stimuli presented at peripheral locations. A similar pattern occurs for response times: subjects were slower to respond to peripheral and lower-intensity stimuli, and the greatest multisensory speeding of RTs occurred when these two manipulations were combined. Collectively, these results are consistent with the principle of inverse effectiveness, and suggest that in addition to being linked to stimulus intensity, effectiveness can also be modulated by changing the location of the stimuli, with comparable effects on the multisensory interactive product.

Although accuracy in localizing visual stimuli decreased monotonically across space, auditory stimuli produced a response minimum at 60°. This pattern of performance is likely explained by changes in interaural level differences as a function of azimuth; the poorest auditory localization accuracy has been found at intermediate locations (i.e., not at 90°) (Moore, 2004). Unlike interaural time differences, which increase monotonically from 0° to 90° (Feddersen, Sandel, Teas, & Jeffress, 1957), interaural level differences reach their maximum between 45° and 70°, depending on frequency (Shaw, 1974). Despite the lack of an interaction effect for multisensory gains in localization accuracy, the results support the notion that multisensory gains across space follow the principle of inverse effectiveness. Another possible account for the apparent increase in accuracy at 90° is an edge effect (Durlach & Braida, 1969; Weber, Green, & Luce, 1977). Such an effect would artificially increase auditory accuracy at 90° because subjects use this extreme as an “anchor.” If this is the case, our results may underestimate the multisensory effects on localization accuracy and therefore mask a potential interaction in the accuracy domain. Further work should be conducted to better account for the possibility of such an effect.
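
The monotonic growth of interaural time differences with azimuth can be illustrated with Woodworth's spherical-head approximation, ITD = (r / c)(θ + sin θ). The sketch below is only an illustration of that textbook formula; it is not part of the analyses reported here, and the head radius and speed of sound are assumed default values.

```python
from math import radians, sin


def woodworth_itd(azimuth_deg, head_radius_m=0.0875, speed_of_sound=343.0):
    """Approximate interaural time difference (seconds) for a far-field source.

    Uses Woodworth's spherical-head formula, valid for azimuths from 0 to 90
    degrees. The default radius and sound speed are assumptions.
    """
    theta = radians(azimuth_deg)
    return (head_radius_m / speed_of_sound) * (theta + sin(theta))


# ITDs at the four azimuths used in this experiment
itds = [woodworth_itd(az) for az in (0, 30, 60, 90)]
```

Under this approximation the ITD grows at every step from 0° to 90°, whereas no such simple monotonic relation holds for interaural level differences.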

Another consideration relates to differences in how the stimuli are perceived. Most of the incorrect judgments of visual stimuli were misses, whereas auditory errors were largely mislocalizations (Supplementary Figure 1). This provides some interesting insights into the ways stimulus effectiveness can be manipulated. Due to the spatial acuity of the visual system, it is likely that multisensory enhancement during localization is not observed under conditions where a visual stimulus is readily detected (Hairston, Laurienti, Mishra, Burdette, & Wallace, 2003). However, reducing the intensity of the visual signal (and shifting its location into the periphery) makes it less detectable (i.e., less “effective”) and thus less informative about the location of a multisensory stimulus. In contrast, decreasing the effectiveness of the auditory stimulus results in its mislocalization rather than a miss. It is possible that a sub-threshold visual signal can still be integrated (Aller, Giani, Conrad, Watanabe, & Noppeney, 2015) during a localization task to cause multisensory enhancement without capturing the behavior. This poses an interesting question concerning how various types of information (e.g., presence or location) can be integrated in different tasks (e.g., detection or localization).

In addition to our analysis of response accuracy, we also characterized changes in response times as a function of varying stimulus location and intensity. These results, assessed relative to a race-model predictive framework (which accounts for non-interactive statistical facilitation), revealed three principal findings. First, there were more race-model violations for lower-intensity stimuli, as expected from the principle of inverse effectiveness. Second, as was seen with response accuracies, stimuli located at more peripheral locations also resulted in more race-model violations, providing a convergent measure relating stimulus location to stimulus effectiveness (and the principle of inverse effectiveness). Third, consistent with these patterns, the greatest multisensory-mediated gains in performance were seen for low-intensity stimuli positioned at peripheral locations.
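
For readers unfamiliar with the race-model framework, the sketch below shows one common way to compute a violation curve from raw response times. It assumes Miller's (1982) inequality, in which the audiovisual CDF is compared against the sum of the two unisensory CDFs capped at 1; the exact variant used in this study may differ, and the function names are hypothetical.

```python
def ecdf(rts, t):
    """Proportion of response times at or below time t."""
    return sum(rt <= t for rt in rts) / len(rts)


def race_model_violation(rt_a, rt_v, rt_av, time_points):
    """Observed audiovisual CDF minus the race-model bound at each time point.

    Positive values indicate responses faster than statistical facilitation
    alone would predict (a race-model violation).
    """
    violations = []
    for t in time_points:
        # Miller's bound: sum of the unisensory CDFs, capped at 1
        bound = min(1.0, ecdf(rt_a, t) + ecdf(rt_v, t))
        violations.append(ecdf(rt_av, t) - bound)
    return violations
```

A curve of this kind, computed per subject and condition, is the quantity that the intensity and location contrasts operate on.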

The similarity in the effects of changing stimulus location and intensity on multisensory performance, coupled with the clear interaction between these factors, strongly suggests a unifying mechanism that dictates the final multisensory product. This novel finding supports the hypothesis that a number of stimulus-driven modulations of multisensory enhancement may be manifestations of one larger principle, possibly guided by the relative effectiveness of the stimuli. This does not contradict previous research, but instead highlights the limited scope of past studies in which the principles of integration were studied in isolation. Inverse effectiveness seems to be a central mechanism dictating multisensory enhancements across stimulus-level manipulations such as, in the current study, location and intensity. While inverse effectiveness is most often associated with manipulations of stimulus intensity, it is important to note that effectiveness refers to the general ability of a stimulus to drive a response (B. E. Stein, Stanford, Ramachandran, Perrault, & Rowland, 2009), a concept that is not restricted to stimulus intensity. Indeed, relationships following the pattern of inverse effectiveness have been found with alternative manipulations such as task difficulty (S. Kim, Stevenson, & James, 2012), cue reliability (Ohshiro, et al., 2011), and motion coherence level (R. Kim, Peters, & Shams, 2012).
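
As a simple illustration of inverse effectiveness, multisensory gain is often expressed as the percent improvement of the multisensory response over the best unisensory response. The sketch below assumes that index and a measure for which larger values are better (e.g., proportion correct); it is not necessarily the gain measure used in this study, and the function name and any inputs are hypothetical.

```python
def multisensory_gain(unisensory_a, unisensory_v, multisensory):
    """Percent gain of the multisensory response over the best unisensory one.

    Assumes a measure where larger is better (e.g., proportion correct) and
    a nonzero best unisensory value.
    """
    best = max(unisensory_a, unisensory_v)
    return 100.0 * (multisensory - best) / best
```

With this index, the same absolute improvement produces a larger proportional gain when the unisensory responses are weak, which is the pattern that inverse effectiveness describes.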

The main findings of the current work clearly illustrate the interaction between space and intensity in dictating multisensory localization. However, the results do not indicate whether the effects of space and intensity are equal. It is likely that modulations in space and intensity carry different weights per unit of modulation (e.g., degrees and decibels) and do not scale linearly. Future work will be aimed at determining the contribution that each of these stimulus parameters makes to effectiveness and at equating their units of modulation. These data could be very instructive in informing models of multisensory integration such as the Time-Window of Integration model (TWIN) (Colonius & Diederich, 2004, 2011; Colonius, Diederich, & Steenken, 2009; Diederich & Colonius, 2009), where the termination of unisensory processes within a given window of time is likely affected by manipulations of location in addition to intensity, thus affecting the final interactive product.

Ongoing research related to this study suggests that the interdependence of the principles of multisensory integration is not restricted to space and intensity. Interactions have been observed, for example, between temporal and spatial properties of audiovisual stimuli (Macaluso, et al., 2004; Slutsky & Recanzone, 2001; Spence, Baddeley, Zampini, James, & Shore, 2003; Stevenson, Fister, et al., 2012) and between timing and effectiveness (Krueger Fister, et al., in revision). Recent evidence from animal physiology suggests that, even on a neural level, stimulus factors such as location and intensity are not independent (Carriere, et al., 2008; Krueger, et al., 2009; Royal, et al., 2009). It is perhaps not surprising that the impacts that space, timing and effectiveness have on multisensory integration are all interlinked.

That space, time and effectiveness are all interlinked makes sense given that these stimulus parameters are not dissociable in real environments. Changing the location of a multisensory stimulus, which frequently includes a change in its distance from the observer, often leads to changes in its effective intensity and temporal structure. Multisensory integration is established early in life (Bremner, Lewkowicz, & Spence, 2012; Lewkowicz & Ghazanfar, 2009; Massaro, 1984; Massaro, Thompson, Barron, & Laren, 1986; Neil, Chee-Ruiter, Scheier, Lewkowicz, & Shimojo, 2006; Wallace, Carriere, Perrault, Vaughan, & Stein, 2006; Wallace & Stein, 1997) and is highly susceptible to the statistics of our perceptual environment (Baier, et al., 2006; den Ouden, et al., 2009; McIntosh & Gonzalez-Lima, 1998) throughout development (Carriere, et al., 2007; Polley, et al., 2008; Wallace, Perrault, Hairston, & Stein, 2004; Wallace & Stein, 2007) and even in adulthood (A. R. Powers, 3rd, Hevey, & Wallace, 2012; A. R. Powers, Hillock, & Wallace, 2009; Schlesinger, Stevenson, Shotwell, & Wallace, 2014; Stevenson, Wilson, Powers, & Wallace, 2013). Hence, in the real world, multisensory stimuli are specified by their spatial and temporal correlations, which interact with (and are partly derived from) the relative effectiveness of the stimuli in determining the degree of multisensory facilitation that will be generated.

Supplementary Material

Highlights.

- Changing location or intensity reduces stimulus effectiveness.

- Reduced stimulus effectiveness is associated with poorer performance.

- Decreasing stimulus effectiveness causes an increase in multisensory gains.

- Space and intensity interact, causing more gains for low level, peripheral stimuli.

Acknowledgements

We would like to acknowledge Daniel Ashmead, Wes Grantham, and two anonymous reviewers for helpful comments on the manuscript. This research was funded in part through NIH grants MH063861, CA183492, DC014114, DC010927, and DC011993, and through the generous support of the Vanderbilt Kennedy Center and the Vanderbilt Brain Institute.


References Cited

- Alais D, Burr D. The ventriloquist effect results from near-optimal bimodal integration. Current Biology. 2004;14:257–262. doi: 10.1016/j.cub.2004.01.029.

- Aller M, Giani A, Conrad V, Watanabe M, Noppeney U. A spatially collocated sound thrusts a flash into awareness. Front Integr Neurosci. 2015;9:16. doi: 10.3389/fnint.2015.00016.

- Altieri N, Stevenson RA, Wallace MT, Wenger MJ. Learning to associate auditory and visual stimuli: behavioral and neural mechanisms. Brain Topogr. 2015;28:479–493. doi: 10.1007/s10548-013-0333-7.

- Amlot R, Walker R, Driver J, Spence C. Multimodal visual-somatosensory integration in saccade generation. Neuropsychologia. 2003;41:1–15. doi: 10.1016/s0028-3932(02)00139-2.

- Ashby FG, Townsend JT. Varieties of perceptual independence. Psychol Rev. 1986;93:154–179.

- Baier B, Kleinschmidt A, Muller NG. Cross-modal processing in early visual and auditory cortices depends on expected statistical relationship of multisensory information. Journal of Neuroscience. 2006;26:12260–12265. doi: 10.1523/JNEUROSCI.1457-06.2006.

- Bock O. Localization of objects in the peripheral visual field. Behavioural Brain Research. 1993;56:77–84. doi: 10.1016/0166-4328(93)90023-j.

- Bolognini N, Frassinetti F, Serino A, Ladavas E. "Acoustical vision" of below threshold stimuli: interaction among spatially converging audiovisual inputs. Exp Brain Res. 2005;160:273–282. doi: 10.1007/s00221-004-2005-z.

- Bremner A, Lewkowicz M, Spence C. Multisensory Development. Oxford University Press; Oxford: 2012.

- Calvert GA, Spence C, Stein BE. The Handbook of Multisensory Processes. The MIT Press; Cambridge, MA: 2004.

- Calvert GA, Thesen T. Multisensory integration: methodological approaches and emerging principles in the human brain. J Physiology-Paris. 2004;98:191–205. doi: 10.1016/j.jphysparis.2004.03.018.

- Cappe C, Thelen A, Romei V, Thut G, Murray MM. Looming signals reveal synergistic principles of multisensory integration. Journal of Neuroscience. 2012;32:1171–1182. doi: 10.1523/JNEUROSCI.5517-11.2012.

- Carlile S, Leong P, Hyams S. The nature and distribution of errors in sound localization by human listeners. Hearing Research. 1997;114:179–196. doi: 10.1016/s0378-5955(97)00161-5.

- Carriere BN, Royal DW, Perrault TJ, Morrison SP, Vaughan JW, Stein BE, Wallace MT. Visual deprivation alters the development of cortical multisensory integration. J Neurophysiol. 2007;98:2858–2867. doi: 10.1152/jn.00587.2007.

- Carriere BN, Royal DW, Wallace MT. Spatial Heterogeneity of Cortical Receptive Fields and Its Impact on Multisensory Interactions. J Neurophysiol. 2008 doi: 10.1152/jn.01386.2007.

- Chandrasekaran C, Lemus L, Trubanova A, Gondan M, Ghazanfar AA. Monkeys and humans share a common computation for face/voice integration. PLoS Comput Biol. 2011;7:e1002165. doi: 10.1371/journal.pcbi.1002165.

- Colonius H, Diederich A. Multisensory interaction in saccadic reaction time: a time-window-of-integration model. J Cogn Neurosci. 2004;16:1000–1009. doi: 10.1162/0898929041502733.

- Colonius H, Diederich A. The race model inequality: interpreting a geometric measure of the amount of violation. Psychol Rev. 2006;113:148–154. doi: 10.1037/0033-295X.113.1.148.

- Colonius H, Diederich A. Computing an optimal time window of audiovisual integration in focused attention tasks: illustrated by studies on effect of age and prior knowledge. Experimental Brain Research. 2011;212:327–337. doi: 10.1007/s00221-011-2732-x.

- Colonius H, Diederich A, Steenken R. Time-window-of-integration (TWIN) model for saccadic reaction time: effect of auditory masker level on visual-auditory spatial interaction in elevation. Brain Topogr. 2009;21:177–184. doi: 10.1007/s10548-009-0091-8.

- Conrey B, Pisoni DB. Auditory-visual speech perception and synchrony detection for speech and nonspeech signals. J Acoust Soc Am. 2006;119:4065–4073. doi: 10.1121/1.2195091.

- Corneil BD, Van Wanrooij M, Munoz DP, Van Opstal AJ. Auditory-visual interactions subserving goal-directed saccades in a complex scene. J Neurophysiol. 2002;88:438–454. doi: 10.1152/jn.2002.88.1.438.

- den Ouden HE, Friston KJ, Daw ND, McIntosh AR, Stephan KE. A dual role for prediction error in associative learning. Cerebral Cortex. 2009;19:1175–1185. doi: 10.1093/cercor/bhn161.

- Diederich A, Colonius H. Crossmodal interaction in speeded responses: time window of integration model. Progress in Brain Research. 2009;174:119–135. doi: 10.1016/S0079-6123(09)01311-9.

- Diederich A, Colonius H, Bockhorst D, Tabeling S. Visual-tactile spatial interaction in saccade generation. Exp Brain Res. 2003;148:328–337. doi: 10.1007/s00221-002-1302-7.

- Dixon NF, Spitz L. The detection of auditory visual desynchrony. Perception. 1980;9:719–721. doi: 10.1068/p090719.

- Durlach NI, Braida LD. Intensity perception. I. Preliminary theory of intensity resolution. J Acoust Soc Am. 1969;46:372–383. doi: 10.1121/1.1911699.

- Feddersen WE, Sandel TT, Teas DC, Jeffress LA. Localization of high-frequency tones. Journal of the Acoustical Society of America. 1957;29:988–991.

- Forster B, Cavina-Pratesi C, Aglioti SM, Berlucchi G. Redundant target effect and intersensory facilitation from visual-tactile interactions in simple reaction time. Experimental Brain Research. 2002;143:480–487. doi: 10.1007/s00221-002-1017-9.

- Foxe JJ. Toward the end of a "principled" era in multisensory science. Brain Res. 2008;1242:1–3. doi: 10.1016/j.brainres.2008.10.037.

- Frassinetti F, Bolognini N, Ladavas E. Enhancement of visual perception by crossmodal visuo-auditory interaction. Experimental Brain Research. 2002;147:332–343. doi: 10.1007/s00221-002-1262-y.

- Frens MA, Van Opstal AJ, Van der Willigen RF. Spatial and temporal factors determine auditory-visual interactions in human saccadic eye movements. Percept Psychophys. 1995;57:802–816. doi: 10.3758/bf03206796.

- Green DM, Swets JA. Signal Detection Theory and Psychophysics. Peninsula Publishing; Los Altos: 1966.

- Hairston WD, Laurienti PJ, Mishra G, Burdette JH, Wallace MT. Multisensory enhancement of localization under conditions of induced myopia. Experimental Brain Research. 2003;152:404–408. doi: 10.1007/s00221-003-1646-7.

- Harrington LK, Peck CK. Spatial disparity affects visual-auditory interactions in human sensorimotor processing. Experimental Brain Research. 1998;122:247–252. doi: 10.1007/s002210050512.

- Hecht S, Shlaer S, Pirenne MH. Energy, Quanta, and Vision. Journal of General Physiology. 1942;25:819–840. doi: 10.1085/jgp.25.6.819.

- Hershenson M. Reaction time as a measure of intersensory facilitation. Journal of Experimental Psychology. 1962;63:289–293. doi: 10.1037/h0039516.

- Hillock AR, Powers AR, Wallace MT. Binding of sights and sounds: age-related changes in multisensory temporal processing. Neuropsychologia. 2011;49:461–467. doi: 10.1016/j.neuropsychologia.2010.11.041.

- Hughes HC, Reuter-Lorenz PA, Nozawa G, Fendrich R. Visual-auditory interactions in sensorimotor processing: saccades versus manual responses. J Exp Psychol Hum Percept Perform. 1994;20:131–153. doi: 10.1037//0096-1523.20.1.131.

- James TW, Kim S, Stevenson RA. The International Society for Psychophysics. Galway, Ireland: 2009. Assessing multisensory interaction with additive factors and functional MRI.

- James TW, Stevenson RA. The Use of fMRI to Assess Multisensory Integration. In: Murray MM, Wallace MT, editors. The Neural Bases of Multisensory Processes. Boca Raton (FL): 2012.

- James TW, Stevenson RA, Kim S. The International Society for Psychophysics. Dublin, Ireland: 2009. Assessing multisensory integration with additive factors and functional MRI.

- James TW, Stevenson RA, Kim S. Inverse effectiveness in multisensory processing. In: Stein BE, editor. The New Handbook of Multisensory Processes. MIT Press; Cambridge, MA: 2012.

- Keetels M, Vroomen J. The role of spatial disparity and hemifields in audio-visual temporal order judgments. Exp Brain Res. 2005;167:635–640. doi: 10.1007/s00221-005-0067-1.

- Kim R, Peters MA, Shams L. 0 + 1 > 1: How adding noninformative sound improves performance on a visual task. Psychological Science. 2012;23:6–12. doi: 10.1177/0956797611420662.

- Kim S, Stevenson RA, James TW. Visuo-haptic neuronal convergence demonstrated with an inversely effective pattern of BOLD activation. Journal of Cognitive Neuroscience. 2012;24:830–842. doi: 10.1162/jocn_a_00176.

- Krueger Fister J, Stevenson RA, Nidiffer AR, Barnett ZP, Wallace MT. Stimulus intensity modulates multisensory temporal processing. Neuropsychologia (this issue) doi: 10.1016/j.neuropsychologia.2016.02.016. (in revision)

- Krueger J, Royal DW, Fister MC, Wallace MT. Spatial receptive field organization of multisensory neurons and its impact on multisensory interactions. Hearing Research. 2009;258:47–54. doi: 10.1016/j.heares.2009.08.003.

- Lewkowicz DJ, Ghazanfar AA. The emergence of multisensory systems through perceptual narrowing. Trends Cogn Sci. 2009;13:470–478. doi: 10.1016/j.tics.2009.08.004.

- Lovelace CT, Stein BE, Wallace MT. An irrelevant light enhances auditory detection in humans: a psychophysical analysis of multisensory integration in stimulus detection. Cognitive Brain Research. 2003;17:447–453. doi: 10.1016/s0926-6410(03)00160-5.

- Luce RD. Response Times: Their Role in Inferring Elementary Mental Organization. Oxford University Press; New York: 1986.

- Macaluso E, George N, Dolan R, Spence C, Driver J. Spatial and temporal factors during processing of audiovisual speech: a PET study. Neuroimage. 2004;21:725–732. doi: 10.1016/j.neuroimage.2003.09.049.

- Massaro DW. Children's perception of visual and auditory speech. Child Dev. 1984;55:1777–1788.

- Massaro DW, Thompson LA, Barron B, Laren E. Developmental changes in visual and auditory contributions to speech perception. Journal of Experimental Child Psychology. 1986;41:93–113. doi: 10.1016/0022-0965(86)90053-6.

- McIntosh AR, Gonzalez-Lima F. Large-scale functional connectivity in associative learning: interrelations of the rat auditory, visual, and limbic systems. J Neurophysiol. 1998;80:3148–3162. doi: 10.1152/jn.1998.80.6.3148.

- Meredith MA, Nemitz JW, Stein BE. Determinants of multisensory integration in superior colliculus neurons. I. Temporal factors. J Neurosci. 1987;7:3215–3229. doi: 10.1523/JNEUROSCI.07-10-03215.1987.

- Meredith MA, Stein BE. Spatial factors determine the activity of multisensory neurons in cat superior colliculus. Brain Res. 1986a;365:350–354. doi: 10.1016/0006-8993(86)91648-3.

- Meredith MA, Stein BE. Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J Neurophysiol. 1986b;56:640–662. doi: 10.1152/jn.1986.56.3.640.

- Miller J. Divided attention: evidence for coactivation with redundant signals. Cognitive Psychology. 1982;14:247–279. doi: 10.1016/0010-0285(82)90010-x.

- Miller LM, D'Esposito M. Perceptual fusion and stimulus coincidence in the cross-modal integration of speech. J Neurosci. 2005;25:5884–5893. doi: 10.1523/JNEUROSCI.0896-05.2005.

- Mills A. On the minimum audible angle. Journal of the Acoustical Society of America. 1958;30:237–246.

- Mills A. Lateralization of high-frequency tones. Journal of the Acoustical Society of America. 1960;32:132–134.

- Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ. Multisensory auditory-visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res Cogn Brain Res. 2002;14:115–128. doi: 10.1016/s0926-6410(02)00066-6.

- Moore BCJ. An Introduction to the Psychology of Hearing. Elsevier; London: 2004. Space Perception.

- Murray MM, Molholm S, Michel CM, Heslenfeld DJ, Ritter W, Javitt DC, Schroeder CE, Foxe JJ. Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb Cortex. 2005;15:963–974. doi: 10.1093/cercor/bhh197.

- Murray MM, Wallace MT. The Neural Bases of Multisensory Processes. CRC Press; Boca Raton: 2012.

- Nath AR, Beauchamp MS. Dynamic changes in superior temporal sulcus connectivity during perception of noisy audiovisual speech. Journal of Neuroscience. 2011;31:1704–1714. doi: 10.1523/JNEUROSCI.4853-10.2011.

- Neil PA, Chee-Ruiter C, Scheier C, Lewkowicz DJ, Shimojo S. Development of multisensory spatial integration and perception in humans. Developmental Science. 2006;9:454–464. doi: 10.1111/j.1467-7687.2006.00512.x.

- Ohshiro T, Angelaki DE, DeAngelis GC. A normalization model of multisensory integration. Nat Neurosci. 2011;14:775–782. doi: 10.1038/nn.2815.

- Otto TU, Dassy B, Mamassian P. Principles of multisensory behavior. J Neurosci. 2013;33:7463–7474. doi: 10.1523/JNEUROSCI.4678-12.2013.

- Polley DB, Hillock AR, Spankovich C, Popescu MV, Royal DW, Wallace MT. Development and plasticity of intra- and intersensory information processing. Journal of the American Academy of Audiology. 2008;19:780–798. doi: 10.3766/jaaa.19.10.6.

- Powers AR, 3rd, Hevey MA, Wallace MT. Neural correlates of multisensory perceptual learning. Journal of Neuroscience. 2012;32:6263–6274. doi: 10.1523/JNEUROSCI.6138-11.2012.

- Powers AR, Hillock AR, Wallace MT. Perceptual Training Narrows the Temporal Window of Multisensory Binding. Journal of Neuroscience. 2009;29:12265–12274. doi: 10.1523/JNEUROSCI.3501-09.2009.

- Raab DH. Statistical facilitation of simple reaction times. Transactions of the New York Academy of Sciences. 1962;24:574–598. doi: 10.1111/j.2164-0947.1962.tb01433.x.

- Ross LA, Saint-Amour D, Leavitt VM, Javitt DC, Foxe JJ. Do you see what I am saying? Exploring visual enhancement of speech comprehension in noisy environments. Cereb Cortex. 2007;17:1147–1153. doi: 10.1093/cercor/bhl024.

- Royal DW, Carriere BN, Wallace MT. Spatiotemporal architecture of cortical receptive fields and its impact on multisensory interactions. Experimental Brain Research. 2009;198:127–136. doi: 10.1007/s00221-009-1772-y.

- Schall S, Quigley C, Onat S, Konig P. Visual stimulus locking of EEG is modulated by temporal congruency of auditory stimuli. Exp Brain Res. 2009;198:137–151. doi: 10.1007/s00221-009-1867-5.

- Schlesinger JJ, Stevenson RA, Shotwell MS, Wallace MT. Improving pulse oximetry pitch perception with multisensory perceptual training. Anesth Analg. 2014;118:1249–1253. doi: 10.1213/ANE.0000000000000222.

- Senkowski D, Talsma D, Grigutsch M, Herrmann CS, Woldorff MG. Good times for multisensory integration: Effects of the precision of temporal synchrony as revealed by gamma-band oscillations. Neuropsychologia. 2007;45:561–571. doi: 10.1016/j.neuropsychologia.2006.01.013.

- Shaw EAG. Transformation of sound pressure level from the free field to the eardrum in the horizontal plane. Journal of the Acoustical Society of America. 1974;56:1848–1861. doi: 10.1121/1.1903522.

- Shinn-Cunningham BG. Object-based auditory and visual attention. Trends in Cognitive Sciences. 2008;12:182–186. doi: 10.1016/j.tics.2008.02.003.

- Slutsky DA, Recanzone GH. Temporal and spatial dependency of the ventriloquism effect. Neuroreport. 2001;12:7–10. doi: 10.1097/00001756-200101220-00009.

- Spence C, Baddeley R, Zampini M, James R, Shore DI. Multisensory temporal order judgments: when two locations are better than one. Perception & Psychophysics. 2003;65:318–328. doi: 10.3758/bf03194803.

- Stein BE, Huneycutt WS, Meredith MA. Neurons and behavior: the same rules of multisensory integration apply. Brain Res. 1988;448:355–358. doi: 10.1016/0006-8993(88)91276-0.

- Stein BE, Meredith MA. The Merging of the Senses. MIT Press; Cambridge, MA: 1993.

- Stein BE, Stanford TR, Ramachandran R, Perrault TJ, Jr., Rowland BA. Challenges in quantifying multisensory integration: alternative criteria, models, and inverse effectiveness. Experimental Brain Research. 2009;198:113–126. doi: 10.1007/s00221-009-1880-8.

- Sternberg S. The discovery of processing stages: Extensions of Donders' method. Acta Psychol (Amst). 1969a;30:276–315.

- Sternberg S. Memory-scanning: mental processes revealed by reaction-time experiments. Am Sci. 1969b;57:421–457.

- Sternberg S. Memory scanning: New findings and current controversies. Quarterly Journal of Experimental Psychology. 1975;27:1–32.

- Sternberg S. Discovering mental processing stages: The method of additive factors. In: Scarborough D, Sternberg S, editors. An Invitation to Cognitive Science: Volume 4, Methods, Models, and Conceptual Issues. Vol. 4. MIT Press; Cambridge, MA: 1998. pp. 739–811.

- Stevenson RA, Altieri NA, Kim S, Pisoni DB, James TW. Neural processing of asynchronous audiovisual speech perception. Neuroimage. 2010;49:3308–3318. doi: 10.1016/j.neuroimage.2009.12.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stevenson RA, Bushmakin M, Kim S, Wallace MT, Puce A, James TW. Inverse effectiveness and multisensory interactions in visual event-related potentials with audiovisual speech. Brain Topogr. 2012;25:308–326. doi: 10.1007/s10548-012-0220-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stevenson RA, Fister JK, Barnett ZP, Nidiffer AR, Wallace MT. Interactions between the spatial and temporal stimulus factors that influence multisensory integration in human performance. Experimental Brain Research. 2012;219:121–137. doi: 10.1007/s00221-012-3072-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stevenson RA, Ghose D, Fister JK, Sarko DK, Altieri NA, Nidiffer AR, Kurela LR, Siemann JK, James TW, Wallace MT. Identifying and Quantifying Multisensory Integration: A Tutorial Review. Brain Topogr. 2014 doi: 10.1007/s10548-014-0365-7. [DOI] [PubMed] [Google Scholar]

- Stevenson RA, James TW. Audiovisual integration in human superior temporal sulcus: Inverse effectiveness and the neural processing of speech and object recognition. Neuroimage. 2009;44:1210–1223. doi: 10.1016/j.neuroimage.2008.09.034.

- Stevenson RA, Kim S, James TW. An additive-factors design to disambiguate neuronal and areal convergence: measuring multisensory interactions between audio, visual, and haptic sensory streams using fMRI. Experimental Brain Research. 2009;198:183–194. doi: 10.1007/s00221-009-1783-8.

- Stevenson RA, VanDerKlok RM, Pisoni DB, James TW. Discrete neural substrates underlie complementary audiovisual speech integration processes. Neuroimage. 2011;55:1339–1345. doi: 10.1016/j.neuroimage.2010.12.063.

- Stevenson RA, Wallace MT. Multisensory temporal integration: task and stimulus dependencies. Exp Brain Res. 2013;227:249–261. doi: 10.1007/s00221-013-3507-3.

- Stevenson RA, Wallace MT, Altieri N. The interaction between stimulus factors and cognitive factors during multisensory integration of audiovisual speech. Front Psychol. 2014;5:352. doi: 10.3389/fpsyg.2014.00352.

- Stevenson RA, Wilson MM, Powers AR, Wallace MT. The effects of visual training on multisensory temporal processing. Experimental Brain Research. 2013. doi: 10.1007/s00221-012-3387-y.

- Stevenson RA, Zemtsov RK, Wallace MT. Individual differences in the multisensory temporal binding window predict susceptibility to audiovisual illusions. J Exp Psychol Hum Percept Perform. 2012a;38:1517–1529. doi: 10.1037/a0027339.

- Stevenson RA, Zemtsov RK, Wallace MT. Individual Differences in the Multisensory Temporal Binding Window Predict Susceptibility to Audiovisual Illusions. Journal of Experimental Psychology Human Perception and Performance. 2012b. doi: 10.1037/a0027339.

- Sumby WH, Pollack I. Visual contribution to speech intelligibility in noise. Journal of the Acoustical Society of America. 1954;26:212–215.

- Talsma D, Senkowski D, Woldorff MG. Intermodal attention affects the processing of the temporal alignment of audiovisual stimuli. Exp Brain Res. 2009;198:313–328. doi: 10.1007/s00221-009-1858-6.

- Ten Oever S, Sack AT, Wheat KL, Bien N, Van Atteveldt N. Audio-visual onset differences are used to determine syllable identity for ambiguous audio-visual stimulus pairs. Frontiers in Psychology. 2013;4. doi: 10.3389/fpsyg.2013.00331.

- Townsend JT, Wenger MJ. A theory of interactive parallel processing: new capacity measures and predictions for a response time inequality series. Psychol Rev. 2004;111:1003–1035. doi: 10.1037/0033-295X.111.4.1003.

- van Atteveldt NM, Formisano E, Blomert L, Goebel R. The effect of temporal asynchrony on the multisensory integration of letters and speech sounds. Cereb Cortex. 2007;17:962–974. doi: 10.1093/cercor/bhl007.

- van Wassenhove V, Grant KW, Poeppel D. Temporal window of integration in auditory-visual speech perception. Neuropsychologia. 2007;45:598–607. doi: 10.1016/j.neuropsychologia.2006.01.001.

- Wallace MT, Carriere BN, Perrault TJ, Jr., Vaughan JW, Stein BE. The development of cortical multisensory integration. J Neurosci. 2006;26:11844–11849. doi: 10.1523/JNEUROSCI.3295-06.2006.

- Wallace MT, Perrault TJ, Jr., Hairston WD, Stein BE. Visual experience is necessary for the development of multisensory integration. J Neurosci. 2004;24:9580–9584. doi: 10.1523/JNEUROSCI.2535-04.2004.

- Wallace MT, Roberson GE, Hairston WD, Stein BE, Vaughan JW, Schirillo JA. Unifying multisensory signals across time and space. Exp Brain Res. 2004;158:252–258. doi: 10.1007/s00221-004-1899-9.

- Wallace MT, Stein BE. Development of multisensory neurons and multisensory integration in cat superior colliculus. J Neurosci. 1997;17:2429–2444. doi: 10.1523/JNEUROSCI.17-07-02429.1997.

- Wallace MT, Stein BE. Early experience determines how the senses will interact. J Neurophysiol. 2007;97:921–926. doi: 10.1152/jn.00497.2006.

- Weber DL, Green DM, Luce RD. Effects of practice and distribution of auditory signals on absolute identification. Perception and Psychophysics. 1977;22:223–231.

- Werner S, Noppeney U. Superadditive Responses in Superior Temporal Sulcus Predict Audiovisual Benefits in Object Categorization. Cerebral Cortex. 2009. doi: 10.1093/cercor/bhp248.

- Yost W. Discrimination of inter-aural phase differences. Journal of the Acoustical Society of America. 1974;55:1299–1303. doi: 10.1121/1.1914701.

- Zhou B, Zhang JX, Tan LH, Han S. Spatial congruence in working memory: an ERP study. Neuroreport. 2004;15:2795–2799.
