Abstract

One of the more challenging feats that multisensory systems must perform is to determine which sensory signals originate from the same external event, and thus should be integrated or “bound” into a singular perceptual object or event, and which signals should be segregated. Two important stimulus properties affecting this process are the timing and effectiveness of the paired stimuli. It is well established that the more temporally aligned two stimuli are, the more strongly they influence one another's processing. In addition, the less effective the individual unisensory stimuli are in eliciting a response, the greater the benefit when they are combined. However, the interaction between stimulus timing and stimulus effectiveness in driving multisensory-mediated behaviors has not previously been explored; examining it was the purpose of the current study. Participants were presented with either high- or low-intensity audiovisual stimuli in which stimulus onset asynchronies (SOAs) were parametrically varied, and were asked to report whether the paired stimuli were synchronous or asynchronous. Our results revealed an interaction between the temporal relationship (SOA) and the intensity of the stimuli. Specifically, individuals were more tolerant of larger temporal offsets (i.e., more likely to judge them as synchronous) when the paired stimuli were less effective. This interaction was also seen in response time (RT) distributions: RTs were faster with synchronous than with asynchronous presentations, but this effect was more pronounced with high-intensity stimuli. These data suggest that stimulus effectiveness plays an underappreciated role in the perception of the timing of multisensory events, and they reinforce the interdependency of the principles of multisensory integration in determining behavior and shaping perception.

Keywords: Audiovisual, inverse effectiveness, multisensory, cross-modal, synchrony, perception

1. Introduction

Our daily environment is filled with an abundance of information that our different sensory systems use to allow us to navigate the world successfully. Although many of the objects and events in our world are specified by information carried by multiple senses, we perceive them as singular and unified. To create such a unified percept, the brain must be able to “bind” information that belongs together and segregate information that should be kept separate. The binding process by which multisensory cues are actively synthesized, a component of multisensory integration, has been the subject of much study. Collectively, this work has revealed dramatic changes associated with combining information across multiple senses, changes that frequently result in substantial benefits to behavior (Amlot, Walker, Driver, & Spence, 2003; Frassinetti, Bolognini, & Ladavas, 2002; Lovelace, Stein, & Wallace, 2003) and striking alterations in perception (McGurk & MacDonald, 1976; The Neural Bases of Multisensory Processes, 2012; Shams, Kamitani, & Shimojo, 2002).

To solve this “binding or causal source problem,” sensory systems rely upon the statistical properties of the different sensory signals, two of the most important of which are space and time. Multisensory (e.g., visual-auditory) stimuli that are spatially and temporally concordant tend to influence one another's processing, and may ultimately be integrated or bound, whereas those that are discordant in space and/or time tend not to influence one another's processing (Conrey & Pisoni, 2006; Hairston, Burdette, Flowers, Wood, & Wallace, 2005a; Keetels & Vroomen, 2005; Kording, et al., 2007; Macaluso, George, Dolan, Spence, & Driver, 2004; Powers, Hillock, & Wallace, 2009; Sato, Toyoizumi, & Aihara, 2007; Stevenson, Fister, Barnett, Nidiffer, & Wallace, 2012; Stevenson, Zemtsov, & Wallace, 2012b; van Atteveldt, Formisano, Blomert, & Goebel, 2007; van Wassenhove, Grant, & Poeppel, 2007; Vroomen & de Gelder, 2004; Vroomen & Keetels, 2010; Wallace & Stevenson, 2014). Furthermore, it has been shown that stimuli that are weakly effective on their own tend to give rise to the largest gains when combined (James, Kim, & Stevenson, 2009; James & Stevenson, 2012b; James, Stevenson, & Kim, 2012; Ross, Saint-Amour, Leavitt, Javitt, & Foxe, 2007; Senkowski, Saint-Amour, Hofle, & Foxe, 2011; Stein, Stanford, Ramachandran, Perrault, & Rowland, 2009; Stevenson, Bushmakin, et al., 2012; Stevenson & James, 2009). Collectively, these integrative principles make a great deal of ethological sense: spatial and temporal proximity typically signal a common source, and it is highly adaptive to accentuate multisensory gain when each of the sensory signals is weak or ambiguous when presented alone.

Recently, a number of studies have focused on how temporal factors influence the nature of human multisensory perceptual judgments (Billock & Tsou, 2014; Conrey & Pisoni, 2006; Grant, Van Wassenhove, & Poeppel, 2004; Macaluso, et al., 2004; van Wassenhove, et al., 2007; Vroomen & de Gelder, 2004; Vroomen & Keetels, 2010). One useful construct associated with this work is the multisensory temporal binding window, defined as the epoch of time within which multisensory stimuli can influence one another's processing. This window appears to be surprisingly broad, spanning several hundred milliseconds (Hillock, Powers, & Wallace, 2011; Hillock-Dunn & Wallace, 2012; Powers, Hevey, & Wallace, 2012; Powers, et al., 2009; Sarko, et al., 2012; Stevenson, Wilson, Powers, & Wallace, 2013b). In addition, these and other studies have revealed several other salient characteristics of multisensory temporal acuity. Specifically, multisensory temporal acuity 1) shows a great deal of individual variability (Stevenson, Zemtsov, & Wallace, 2012a; van Eijk, Kohlrausch, Juola, & van de Par, 2008), 2) differs depending upon stimulus type and task (Kasper, Cecotti, Touryan, Eckstein, & Giesbrecht, 2014; Megevand, Molholm, Nayak, & Foxe, 2013; Stevenson & Wallace, 2013; van Eijk, et al., 2008; van Eijk, Kohlrausch, Juola, & van de Par, 2010; Vroomen & Stekelenburg, 2011), and 3) is malleable, both in response to perceptual training (Keetels & Vroomen, 2008; Powers, et al., 2012; Powers, et al., 2009; Schlesinger, Stevenson, Shotwell, & Wallace, 2014; Stevenson, Fister, et al., 2012; Stevenson, Wilson, Powers, & Wallace, 2013a; Stevenson, et al., 2013b; Vroomen, Keetels, de Gelder, & Bertelson, 2004) and across development (Hillock, et al., 2011; Hillock-Dunn & Wallace, 2012; Joanne Jao, James, & Harman James, 2014; Johannsen & Roder, 2014; Lewkowicz, 2012; Polley, et al., 2008; Shi & Muller, 2013) and aging (Bates & Wolbers, 2014; DeLoss, Pierce, & Andersen, 2013; Diaconescu, Hasher, & McIntosh, 2013; Freiherr, Lundstrom, Habel, & Reetz, 2013; Hugenschmidt, Mozolic, & Laurienti, 2009; Mahoney, Verghese, Dumas, Wang, & Holtzer, 2012; Mahoney, Wang, Dumas, & Holtzer, 2014; Mozolic, Hugenschmidt, Peiffer, & Laurienti, 2012; Stevenson, et al., 2015).

Although these studies have illustrated the central importance of time in dictating human multisensory interactions, other studies have focused on the roles of space (Bertelson & Radeau, 1981; Ghose & Wallace, 2014; Kadunce, Vaughan, Wallace, & Stein, 2001; Krueger, Royal, Fister, & Wallace, 2009; Macaluso, et al., 2004; Mahoney, et al., 2015; Meredith & Stein, 1986, 1996; Radeau & Bertelson, 1974; Royal, Carriere, & Wallace, 2009; Royal, Krueger, Fister, & Wallace, 2010; Sarko, et al., 2012; Vroomen, Bertelson, & de Gelder, 2001; Wallace, et al., 2004) and effectiveness (James & Stevenson, 2012a; James, et al., 2012; Kim & James, 2010; Kim, Stevenson, & James, 2012; Leone & McCourt, 2013; Liu, Lin, Gao, & Dang, 2013; Nath & Beauchamp, 2011; Stevenson & James, 2009; Werner & Noppeney, 2010; Yalachkov, Kaiser, Doehrmann, & Naumer, 2015). Collectively, these studies have taught us a great deal about how stimulus-related factors shape the multisensory process, but most have treated time, space, and effectiveness as independent contributors to the final multisensory product. In fact, these stimulus factors are intricately intertwined, such that manipulating one affects the others. For example, simply changing the spatial location of an identical stimulus will alter the effectiveness of that stimulus, given the differences in spatial acuity across regions of space (Nidiffer, Stevenson, Krueger Fister, Barnett, & Wallace, 2015 (in this issue); Stein, Meredith, Huneycutt, & McDade, 1989). Reinforcing the importance of examining these interactions in more detail, recent neurophysiological studies in animal models have shown that manipulating one aspect of a multisensory stimulus (e.g., spatial location) has consequent effects in both the temporal and effectiveness dimensions (Carriere, Royal, & Wallace, 2008; Ghose & Wallace, 2014; Krueger, et al., 2009; Royal, et al., 2009). Indeed, this work has suggested that stimulus effectiveness may play a more prominent role than space and time in dictating multisensory interactions at the neural level. Extending this work into the domain of human performance, recent studies have shown a strong interdependency between time and space (Keetels & Vroomen, 2005; Krueger, et al., 2009; Stevenson, Fister, et al., 2012). For example, Keetels and Vroomen (2005) showed that judgments of the temporal order of auditory and visual stimuli were more precise when the stimuli were presented at disparate spatial locations, and Stevenson and colleagues (2012) showed that individuals were more likely to perceive auditory and visual stimuli as synchronous when they were presented at peripheral rather than foveal locations.

The present study seeks to expand upon these previous findings by examining, for the first time, the interaction between the temporal relationship of paired audiovisual stimuli and their relative effectiveness. Specifically, we tested the impact that manipulations of stimulus effectiveness (accomplished via changes in stimulus intensity) have on an individual's ability to report audiovisual stimulus asynchrony, measured as the rate of perceived synchrony. Our results illustrate that the relative effectiveness of the paired stimuli does in fact modulate how they are perceived in time. Furthermore, this study revealed complex interactions between time and effectiveness in dictating the final behavioral outcome.

2. Methods

2.1 Participants

Participants included 51 Vanderbilt undergraduate students (21 male; mean age = 18.9 years, SD = 1; age range = 18–21) who were compensated with class credit. All recruitment and experimental procedures were approved by the Vanderbilt University Institutional Review Board (IRB). Data from participants who reported synchrony on fewer than 50% of trials in which the auditory and visual stimuli were objectively simultaneous (0 ms stimulus onset asynchrony; SOA) were excluded from further analysis (N = 5). Data from one additional participant were excluded because that participant responded “synchronous” on all trials irrespective of SOA, leaving 45 participants in all analyses. The present study is part of a larger study investigating the interrelationships among stimulus effectiveness and stimulus spatial and temporal factors (Nidiffer, et al., 2015 (in this issue); Stevenson, Fister, et al., 2012).

2.2 Stimuli

Visual and auditory stimuli were presented using E-Prime version 2.0.8.79 (Psychology Software Tools, Inc.; PST). Visual stimuli were presented on a 120 Hz Samsung SyncMaster 2233RZ monitor, with participants seated at a distance of 46 cm. All visual stimuli were white circles 7 mm in diameter, subtending approximately 1° of visual angle. Visual stimuli were presented at 0° azimuth (directly in front of the participant), slightly above a fixation cross, at two luminance levels, 7.1 cd/m² (low) and 215 cd/m² (high), on a black background of 0.28 cd/m². Luminance values were verified with a Minolta Chroma Meter CS-100. Visual stimulus duration was 10 ms, with timing confirmed using a Hameg 507 oscilloscope with a photovoltaic cell.
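The “approximately 1°” figure follows from the standard visual-angle formula, θ = 2·arctan(s / 2d), for an object of size s viewed at distance d. The minimal Python sketch below assumes the circle is centred on the line of sight; the function name is illustrative and not part of the original materials.

```python
import math


def visual_angle_deg(size_mm: float, distance_mm: float) -> float:
    """Full visual angle, in degrees, subtended by an object of width size_mm
    centred on the line of sight at viewing distance distance_mm."""
    return math.degrees(2 * math.atan(size_mm / (2 * distance_mm)))


# 7 mm circle viewed from 46 cm (460 mm), as in the stimulus description
angle = visual_angle_deg(7, 460)
print(f"{angle:.2f} deg")  # prints 0.87 deg, i.e. approximately 1 deg
```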

Auditory stimuli were presented via a speaker mounted on top of the monitor at 0° azimuth and angled toward the participant. The speaker was mounted 2 cm (2.5°) above the visual stimulus. Auditory stimuli consisted of a frozen white-noise burst generated at a 44,100 Hz sampling rate with the Matlab rand function, with a 5 ms rise and 5 ms fall cosine gate (Figure 1b). Auditory stimulus duration was held constant at 10 ms, with timing confirmed using a Hameg 507 oscilloscope. Auditory stimuli were presented at two intensity levels, 46 dB SPL (low) and 64 dB SPL (high), against background noise of 41 dB SPL. All sound levels were verified with a Larson Davis sound-level meter, Model 814.
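The gated noise burst can be sketched as follows. This is a minimal Python illustration, not the authors' Matlab code: it assumes the uniform `rand` samples were rescaled to the range [-1, 1) and that the cosine gate is a raised-cosine ramp, neither of which the text specifies. A fixed random seed makes the burst “frozen”, so the identical waveform is replayed on every trial.

```python
import math
import random

FS_HZ = 44_100   # sampling rate from the text
DUR_MS = 10      # total burst duration
RAMP_MS = 5      # cosine rise time (the fall time is the same)


def cosine_gate(n: int, n_ramp: int) -> list[float]:
    """Raised-cosine onset and offset ramps (assumed shape) with a flat middle."""
    gate = [1.0] * n
    for i in range(n_ramp):
        w = 0.5 * (1.0 - math.cos(math.pi * i / n_ramp))  # rises from 0 toward 1
        gate[i] = w              # onset ramp
        gate[n - 1 - i] = w      # mirrored offset ramp
    return gate


def frozen_noise_burst(seed: int = 0) -> list[float]:
    """Gated white-noise burst; the same seed always yields the same samples."""
    rng = random.Random(seed)
    n = FS_HZ * DUR_MS // 1000        # 441 samples
    n_ramp = FS_HZ * RAMP_MS // 1000  # 220 samples per ramp
    noise = [2.0 * rng.random() - 1.0 for _ in range(n)]  # uniform in [-1, 1)
    gate = cosine_gate(n, n_ramp)
    return [s * g for s, g in zip(noise, gate)]


burst = frozen_noise_burst()
```

With a 10 ms duration and two 5 ms ramps, the burst has essentially no flat portion: it rises and immediately falls again.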

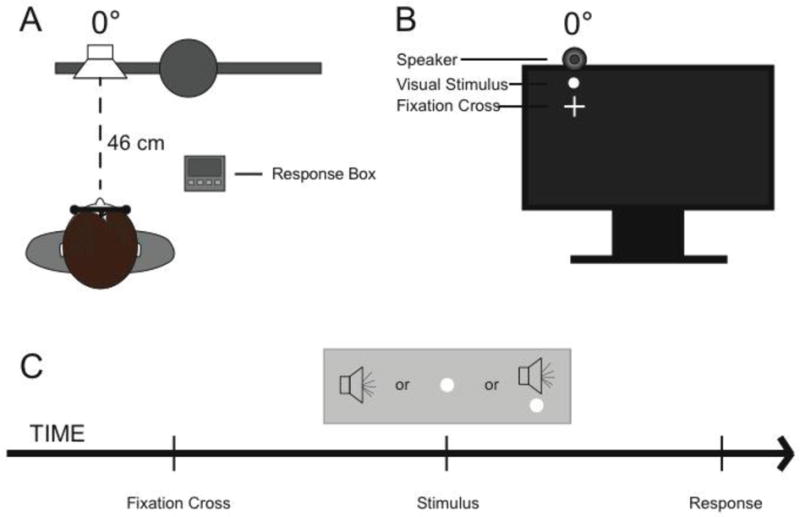

Figure 1.

A – Participant setup, showing the participant's location in relation to the apparatus. B – Location of the auditory and visual stimuli relative to fixation. C – Timeline of stimulus presentation.

Audiovisual (AV) conditions consisted of pairs of the auditory and visual stimuli described above. Paired stimuli were always spatially coincident at 0° azimuth, and their intensity levels were always matched (high-high and low-low). The temporal offset between the auditory and visual stimuli was parametrically varied, with stimulus onset asynchronies (SOAs) of 0, 50, 100, and 200 ms, and timing was confirmed using a Hameg 507 oscilloscope. In all asynchronous cases, visual onset preceded auditory onset.

2.3 Procedure

Participants were seated inside an unlit WhisperRoom™ (SE 2000 Series) with their forehead placed against a Headspot (University of Houston Optometry) forehead rest locked in place. The chinrest and chair height could be adjusted relative to the forehead rest to ensure a comfortable seating position. Participants were asked to fixate a cross located at 0° elevation and 0° azimuth at all times and were monitored by closed-circuit infrared cameras throughout the experiment to ensure compliance (for the experimental setup, see Figure 1a and b).

The experiment began with detailed instructions informing participants that they would be presented with audiovisual stimuli and that their task was to judge whether or not the stimuli were synchronous. If participants perceived only one of the two stimuli, or neither, they were instructed to report that instead. Participants responded via a five-button PST serial response box, where 1 = synchronous, 2 = asynchronous, 3 = visual only, 4 = audio only, and 5 = no stimulus detected. Understanding of the instructions was then confirmed, and participants were given the opportunity to ask any questions they had about the instructions. Participants were instructed to respond as quickly and as accurately as possible.

Each trial began with a 1 s fixation screen displaying the fixation cross in the center of the visual field. The fixation cross then disappeared and was followed by a blank screen whose duration was jittered between 500 and 1000 ms, followed by the stimulus presentation. Between 250 and 284 ms after stimulus presentation, participants were presented with the response prompt, “Was it synchronous?” Following the participant's response and a 500 ms delay, the fixation cross reappeared and the subsequent trial began. For a visual depiction of a trial, see Figure 1c. Twenty trials of each of 12 stimulus conditions (audiovisual: 4 SOAs × 2 intensity levels; unisensory visual: 2 intensity levels; unisensory auditory: 2 intensity levels) were presented, for a total of 240 trials.
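The 12-condition design and the 240-trial total can be checked with a short sketch that builds one participant's trial list. It assumes trials were presented in random order and that the 500–1000 ms blank was drawn uniformly in whole milliseconds; the text states neither, and the names below are hypothetical.

```python
import itertools
import random
from dataclasses import dataclass
from typing import Optional

SOAS_MS = (0, 50, 100, 200)   # visual-leading SOAs used for audiovisual trials
INTENSITIES = ("high", "low")
REPS_PER_CONDITION = 20


@dataclass(frozen=True)
class Trial:
    modality: str            # "AV", "V" (visual only) or "A" (auditory only)
    intensity: str           # "high" or "low"
    soa_ms: Optional[int]    # None for unisensory trials
    blank_ms: int            # jittered blank before stimulus onset


def build_trials(seed: int = 0) -> list:
    rng = random.Random(seed)
    conditions = [("AV", i, s) for i, s in itertools.product(INTENSITIES, SOAS_MS)]
    conditions += [(m, i, None) for m in ("V", "A") for i in INTENSITIES]
    trials = [
        Trial(m, i, s, rng.randint(500, 1000))  # assumed uniform jitter, inclusive
        for m, i, s in conditions
        for _ in range(REPS_PER_CONDITION)
    ]
    rng.shuffle(trials)  # assumed randomized presentation order
    return trials


trials = build_trials()
print(len(trials))  # 240 = (8 AV + 2 V + 2 A conditions) x 20
```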

2.4 Analysis

For each trial in which the participant perceived an audiovisual stimulus, both the response and the response time (RT) were recorded. Trials with RTs shorter than 250 ms or longer than 2000 ms were excluded as outliers. Our primary analysis focused on rates of perceived synchrony. Within each condition, the rate of perceived synchrony was calculated as the proportion of trials on which the participant reported a synchronous percept, out of all trials in that condition on which they perceived both stimuli, or:

$$\text{Rate of perceived synchrony} = \frac{N_{\text{synchronous}}}{N_{\text{synchronous}} + N_{\text{asynchronous}}}$$

Note that this explicitly excludes trials in which the participant did not perceive both unisensory components of the stimulus. Rates of perceived synchrony were compared across intensity levels and SOAs (for a review of methods, see Stevenson, Ghose, et al., 2014).
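The exclusion rules and the synchrony-rate formula can be sketched in Python for a single participant. The tuple layout and function name are hypothetical; the response codes follow the five-button mapping described in the Procedure, and keeping RTs from 250 to 2000 ms inclusive follows the wording “less than 250 ms” and “over 2000 ms”.

```python
from collections import defaultdict

SYNC, ASYNC = 1, 2  # response-box codes; 3-5 indicate that a component was missed


def synchrony_rates(trials, rt_min=250, rt_max=2000):
    """Per-condition rate of perceived synchrony for one participant.

    trials: iterable of (condition, response_code, rt_ms) tuples, where
    condition is any hashable label such as ("high", 200).
    """
    counts = defaultdict(lambda: [0, 0])  # condition -> [n_synchronous, n_both_perceived]
    for condition, response, rt_ms in trials:
        if not rt_min <= rt_ms <= rt_max:
            continue  # RT outlier: shorter than 250 ms or longer than 2000 ms
        if response not in (SYNC, ASYNC):
            continue  # only one or neither stimulus perceived
        counts[condition][1] += 1
        if response == SYNC:
            counts[condition][0] += 1
    # Conditions with no usable trials never enter `counts`, so no division by zero.
    return {c: n_sync / n_both for c, (n_sync, n_both) in counts.items()}


example = [
    (("high", 0), SYNC, 400),
    (("high", 0), SYNC, 420),
    (("high", 0), ASYNC, 500),
    (("high", 0), 3, 450),     # visual-only report: excluded
    (("high", 0), SYNC, 200),  # RT below 250 ms: excluded
]
print(synchrony_rates(example))  # 2 synchronous out of 3 usable trials
```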

A follow-up, exploratory analysis was conducted on RTs. First, mean RTs were calculated across trials of each condition for each individual, and mean RTs on perceived-synchronous trials were compared across intensity levels and SOAs. A second exploratory RT analysis used a more fine-grained cumulative distribution function (CDF) analysis. CDFs of RTs on perceived-synchronous trials were calculated for each condition, first within each participant and then averaged across participants. Interactions between SOA and stimulus intensity in these CDFs were then assessed using a difference-of-difference calculation:

$$\Delta\Delta(t) = \left[F_{\text{high},0}(t) - F_{\text{high},200}(t)\right] - \left[F_{\text{low},0}(t) - F_{\text{low},200}(t)\right]$$

where $F_{i,s}(t)$ is the CDF of RTs at intensity level $i$ and SOA $s$ (in ms), evaluated at time $t$.
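The difference-of-difference calculation can be sketched in Python with empirical CDFs evaluated on a common time grid. This is an illustration under assumptions: each participant's perceived-synchronous RTs are stored in a dictionary keyed by (intensity, SOA), the grid spacing is a free choice, and all names are hypothetical.

```python
from bisect import bisect_right


def ecdf(rts_ms, grid_ms):
    """Empirical CDF of one set of RTs, evaluated at each time in grid_ms."""
    ordered = sorted(rts_ms)
    n = len(ordered)
    return [bisect_right(ordered, t) / n for t in grid_ms]


def soa_effect(rts, intensity, grid_ms):
    """F_{intensity,0}(t) - F_{intensity,200}(t): positive where 0 ms SOA RTs are faster."""
    sync = ecdf(rts[(intensity, 0)], grid_ms)
    async_ = ecdf(rts[(intensity, 200)], grid_ms)
    return [a - b for a, b in zip(sync, async_)]


def diff_of_diff(rts, grid_ms):
    """SOA effect at high intensity minus SOA effect at low intensity."""
    high = soa_effect(rts, "high", grid_ms)
    low = soa_effect(rts, "low", grid_ms)
    return [h - l for h, l in zip(high, low)]


def mean_curve(curves):
    """Average per-participant curves point by point (group-level curve)."""
    return [sum(points) / len(points) for points in zip(*curves)]


# Toy data for one hypothetical participant (not data from this study)
toy = {
    ("high", 0): [300, 400], ("high", 200): [500, 600],
    ("low", 0): [300, 400], ("low", 200): [300, 400],
}
grid = [350, 450, 550, 650]
print(diff_of_diff(toy, grid))  # [0.5, 1.0, 0.5, 0.0]
```

In the toy data, SOA shifts RTs only at high intensity, so the difference of differences is positive wherever the high-intensity SOA effect appears.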

3. Results

3.1 Intensity effects on unisensory performance

To ensure that changes in stimulus intensity actually manipulated stimulus effectiveness, a two-way repeated-measures ANOVA (intensity × modality) was performed on unisensory accuracy; it confirmed that high-intensity presentations were more effective than low-intensity presentations (F(1,39) = 8.26, p = 0.0065). Paired-samples t-tests also revealed that accuracy decreased for both modalities from the high-saliency to the low-saliency condition (visual: high = 97.17%, low = 91.67%, t = 0.965, p = 0.045, d = 0.014; auditory: high = 97.08%, low = 93.75%, t = 0.96, p = 0.051, d = 0.307).

3.2 Effects of stimulus effectiveness and temporal factors on judgments of audiovisual synchrony

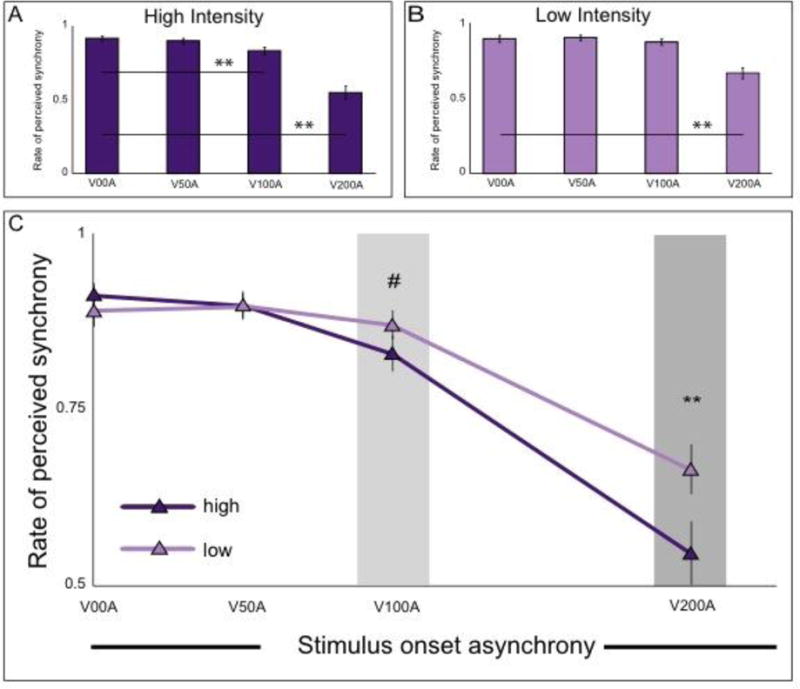

Rates of perceived synchrony were measured for high- and low-intensity audiovisual stimuli presented at temporal offsets ranging from 0 ms (synchronous) to 200 ms (visual-leading asynchronies). A two-way repeated-measures ANOVA (intensity × SOA) revealed a significant main effect of SOA (F(3,44) = 22.411, p = 8.03 × 10⁻⁹, partial η² = 0.559). As expected, rates of perceived synchrony decreased with increasing SOA (Figure 2a and b). The main effect of intensity was marginally significant (F(1,44) = 3.171, p = 0.082, partial η² = 0.067). Importantly, a significant interaction between SOA and intensity was also observed (F(3,44) = 13.219, p = 3.24 × 10⁻⁶, partial η² = 0.270).

Figure 2.

A and B – Bar graphs present the changes across SOA within each level of stimulus intensity. For both saliencies, perceived synchrony decreases with increasing SOA, with faster changes occurring in the high-saliency condition. C – Line graphs present the changes in perception of synchrony across stimulus intensity levels. Most notably, subjects perceived audiovisual stimuli as synchronous more often at the largest SOA in the low-saliency condition. For all panels, ** indicates p < 0.0001 and # indicates p < 0.06.

To explore the interaction between SOA and intensity on these synchrony judgments, two sets of protected follow-up paired-samples t-tests were performed. First, rates of perceived synchrony at each asynchronous SOA were compared with those at 0 ms SOA (see Table 1 for detailed statistics). Rates of perceived synchrony were significantly reduced relative to synchronous presentations at the 100 and 200 ms SOAs for the high-intensity stimuli, but only at the 200 ms SOA for the low-intensity stimuli (Figure 2a and b). Second, rates of perceived synchrony were compared across intensity levels at each SOA. A significant difference between high- and low-intensity stimuli was observed only at the 200 ms SOA (t = 3.5507, p = 8.48 × 10⁻⁴, d = 1.071), where participants were more likely to report low-intensity stimuli as synchronous relative to high-intensity stimuli. No other SOA showed a significant difference between the two intensities (Figure 2c). Thus, the interaction reflected a greater tendency to report asynchronous presentations as synchronous at the lower level of stimulus intensity.
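Each follow-up comparison above is a paired-samples t-test on per-participant values (for example, each participant's rate of perceived synchrony at the 200 ms SOA under high versus low intensity). A minimal standard-library sketch of the test statistic follows; the p-value would come from a t distribution with n − 1 degrees of freedom (for instance via `scipy.stats.ttest_rel`). The effect size shown is d_z (mean difference divided by the standard deviation of the differences), which is only one of several conventions; the text does not state which convention produced the reported d values.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic, Cohen's d_z, and degrees of freedom."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    sd = stdev(diffs)  # sample SD (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = mean(diffs) / (sd / math.sqrt(n))
    d_z = mean(diffs) / sd
    return t, d_z, n - 1


# Hypothetical per-participant synchrony rates (not data from this study)
low_200 = [0.55, 0.40, 0.62, 0.35, 0.50]
high_200 = [0.30, 0.25, 0.45, 0.20, 0.40]
t, d_z, df = paired_t(low_200, high_200)
```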

Table 1. Rates of perceived synchrony relative to synchronous presentations.

| SOA (ms) | High intensity: t | High intensity: p | Low intensity: t | Low intensity: p |
|---|---|---|---|---|
| 50 | 1.39 | n.s. | 0.56 | n.s. |
| 100 | 4.05 | 1.93e-4 | 1.10 | n.s. |
| 200 | 7.60 | 5.61e-7 | 5.74 | 5.61e-7 |

SOA – stimulus onset asynchrony

3.3 Effects of stimulus intensity and temporal factors on response times

In addition to analyzing participants' synchrony judgments, we conducted a follow-up, supplementary analysis of response times to test whether they converged with the accuracy-based findings. Mean RTs on perceived-synchronous trials were determined for each SOA and intensity level and averaged across participants (Table 2). A repeated-measures ANOVA showed a main effect of SOA (F(3,43) = 2.630, p = 0.049, partial η² = 0.039). In contrast, there was no significant difference in mean RTs across intensities (F(1,43) = 1.260, p = 0.268, partial η² = 0.028) and no interaction between intensity and SOA (F(3,43) = 0.686, p = 0.566, partial η² = 0.019).

Table 2. Mean response times in ms.

| SOA (ms) | High intensity: mean | High intensity: St. Err. | Low intensity: mean | Low intensity: St. Err. |
|---|---|---|---|---|
| 0 | 421.5 | 29.7 | 429.1 | 27.9 |
| 50 | 420.1 | 26.1 | 401.6 | 26.0 |
| 100 | 433.2 | 29.6 | 434.5 | 25.8 |
| 200 | 457.6 | 28.0 | 427.8 | 23.9 |

SOA: stimulus onset asynchrony; St. Err.: standard error

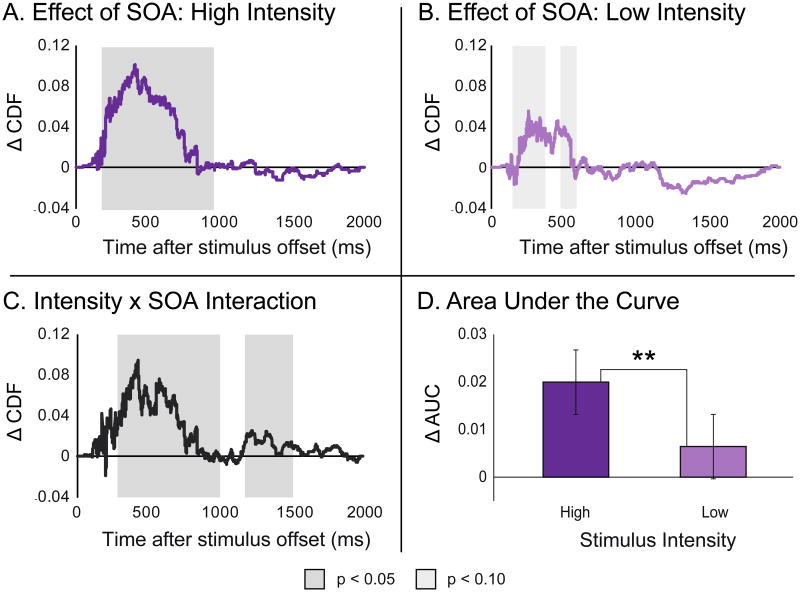

In addition to the analysis of mean RTs, a cumulative distribution function (CDF) analysis was performed. CDFs were calculated for each participant and then averaged across participants for each condition. To explore the impact of timing on these CDFs, the difference between the CDFs at 0 ms SOA and 200 ms SOA was computed for each individual at both intensity levels (difference curves plotted in Figure 3a and b) and binned into 100 ms intervals for comparison. In the high-intensity condition, there was a significant effect of SOA in the RT bins spanning 200–1000 ms, where the 0 ms SOA was associated with significantly faster RTs than the 200 ms SOA. A similar pattern was seen in the low-intensity condition, where the RT bins between 200–400 ms and 500–600 ms showed marginally faster RTs at the 0 ms SOA. Regardless of intensity level, the greatest difference invariably occurred during the early portion of the response distribution, as seen in Figure 3c. Here, the differences between these response distributions were segmented into 100 ms bins for comparison, revealing an interaction in which SOA had a stronger impact on RT when stimuli were presented at high rather than low intensity. Finally, to assess this interaction overall, the area under the curve was computed for each individual's difference in response distributions across SOAs (Figure 3d). A direct comparison of these areas under the curve confirmed the results of the binned analysis, showing that SOA had a greater impact on stimuli presented at high intensity (t = 28.32, p = 1.29e-14, d = 8.539).
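The 100 ms binning and the area-under-the-curve summary can be sketched as below. The sketch assumes that each bin value is the mean of the difference curve over grid points falling in that bin and that the area is computed with the trapezoidal rule; the text does not specify either choice, and the function names are hypothetical. Per-participant areas for the high- and low-intensity difference curves would then be compared with a paired t-test.

```python
def bin_means(grid_ms, values, width_ms=100):
    """Mean of a curve within consecutive bins of width_ms (keyed by bin start)."""
    bins = {}
    for t, v in zip(grid_ms, values):
        start = int(t // width_ms) * width_ms
        bins.setdefault(start, []).append(v)
    return {start: sum(vs) / len(vs) for start, vs in sorted(bins.items())}


def auc_trapezoid(grid_ms, values):
    """Area under a curve sampled at increasing grid_ms, by the trapezoidal rule."""
    return sum(
        (grid_ms[i + 1] - grid_ms[i]) * (values[i] + values[i + 1]) / 2
        for i in range(len(grid_ms) - 1)
    )


# Hypothetical CDF-difference curve for one participant
grid = [200, 250, 300, 350]
curve = [0.0, 0.2, 0.2, 0.0]
print(bin_means(grid, curve))  # {200: 0.1, 300: 0.1}
area = auc_trapezoid(grid, curve)
```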

Figure 3.

A and B – Differences in cumulative distribution functions measured with synchronous (0 ms SOA) and asynchronous (200 ms SOA) presentations with both high-intensity (Panel A) and low-intensity (Panel B) presentations. C – An interaction between SOA and stimulus intensity was observed using a difference-of-difference measure. The effect of SOA was significantly more pronounced with high- than with low-intensity stimuli. D – Area-under-the-curve measures were extracted from the CDF differences displayed in Panels A and B, providing a secondary measure of interactions between SOA and stimulus intensity. ** denotes a significant difference at an α-level of 0.05.

4. Discussion

Previous studies have established that manipulating space, time, and stimulus intensity can greatly alter multisensory integration and the associated neural, behavioral, and perceptual responses. The present study extends this work by examining how stimulus timing and intensity interact to affect perceptual and behavioral performance, revealing a strong interdependency between timing and intensity in judgments of whether the paired stimuli were synchronous. Specifically, participants were significantly more likely to report asynchronous audiovisual stimuli as synchronous when stimulus intensity was lower. This novel finding illustrates that stimulus intensity plays an important and previously unreported role in the perception of the timing of multisensory events.

The results suggest that the processing of weakly effective multisensory stimuli is less temporally precise than that of more effective stimuli. Multisensory systems thus appear to compensate for increasing levels of stimulus ambiguity by increasing their tolerance for asynchronies, resulting in perceptual binding over a greater degree of temporal disparity. Adjusting the width of the temporal binding window in this way would affect the overall magnitude of multisensory integration, a notion supported by the time-window-of-integration model (Colonius & Diederich, 2004). Ecologically, this result makes a good deal of sense: in natural environments, sensory inputs arriving from a proximal source are likely to be more intense and to reach their respective sensory organs in a more temporally congruent manner. In contrast, sensory information from an identical event occurring farther from the individual will be of lower intensity, and, because sound travels far more slowly than light, the temporal disparity at the respective sensory organs will be greater. Thus, a greater tolerance for temporal offsets with low-intensity sensory signals is necessary to properly reflect the natural statistics of the environment. Furthermore, the nervous system may also expand its temporal filter for less effective stimuli to compensate for the need to acquire more information before reaching a behavioral judgment. These results parallel previous work, and work in this issue, showing that more peripherally presented audiovisual stimuli are more likely to be perceptually bound at wider temporal offsets (Nidiffer, et al., 2015 (in this issue); Stevenson, Fister, et al., 2012).
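The distance argument can be made concrete with simple arithmetic on physical arrival times. Light crosses everyday distances almost instantaneously, whereas sound travels at roughly 343 m/s in air (a value assumed here for about 20 °C), so the acoustic lag behind the visual signal grows by about 2.9 ms per metre. The sketch below ignores differences in neural transduction and conduction times between the senses; the function name is illustrative.

```python
SPEED_OF_SOUND_M_S = 343.0          # dry air at about 20 °C (assumed)
SPEED_OF_LIGHT_M_S = 299_792_458.0  # in vacuum; the difference in air is negligible here


def audio_lag_ms(distance_m: float) -> float:
    """How much later sound arrives than light from a source distance_m away."""
    return (distance_m / SPEED_OF_SOUND_M_S - distance_m / SPEED_OF_LIGHT_M_S) * 1000.0


for d in (1, 10, 34.3, 68.6):
    print(f"{d:>5} m -> {audio_lag_ms(d):6.1f} ms")
```

At about 69 m, the physical lag alone matches the largest visual-leading SOA (200 ms) used in this study.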

One hallmark of the brain's processing of information from external and internal events is its capacity to continuously recalibrate and update ongoing neural processes in a dynamic fashion. This occurs particularly frequently when signals are noisy or less reliable, as could be the case with low-intensity stimuli. Indeed, previous research has shown that the manner in which the nervous system integrates sensory information adapts to the reliability of the information in each sensory modality (Andersen, Tiippana, & Sams, 2004; Beauchamp, Pasalar, & Ro, 2010; Beierholm, Quartz, & Shams, 2009; Besson, et al., 2010; Charbonneau, Veronneau, Boudrias-Fournier, Lepore, & Collignon, 2013; Clemens, De Vrijer, Selen, Van Gisbergen, & Medendorp, 2011; Deneve & Pouget, 2004; Ernst & Banks, 2002; Fetsch, Deangelis, & Angelaki, 2010; Fetsch, Pouget, DeAngelis, & Angelaki, 2012; Fetsch, Turner, DeAngelis, & Angelaki, 2009; Helbig, et al., 2012; Ma & Pouget, 2008; Nath & Beauchamp, 2011; Noppeney, Ostwald, & Werner, 2010; Rohe & Noppeney, 2015).

Furthermore, multisensory systems are characterized by a great deal of plastic capacity, a feature that has been explored extensively in the temporal realm. For example, through recalibration (Fujisaki, Shimojo, Kashino, & Nishida, 2004; Vroomen, et al., 2004) and perceptual learning (Powers, et al., 2012; Powers, et al., 2009; Schlesinger, et al., 2014; Stevenson, et al., 2013b), it has been demonstrated that an individual's perception of synchrony, and the window of time within which that individual will perceptually bind paired stimuli, can be modified. Given the malleability of multisensory processing, it seems plausible that these processes may adopt a more liberal window of time within which they perceptually bind incoming sensory information, based upon its reliability or intensity. Indeed, such real-time recalibration of sensory integration has been reported previously in relation to stimulus reliability as determined by signal-to-noise ratio (Nath & Beauchamp, 2011). In that study, fMRI showed that re-weighting of stimulus inputs based on reliability affected the magnitude of multisensory integration in the superior temporal sulcus (STS). While the current data set cannot directly assess this hypothesis, this possible explanation is intriguing and warrants direct testing in future research.

Consistent with the results of the current study, one line of previous research has provided evidence that another aspect of multisensory temporal processing, the point of subjective simultaneity (PSS), can also be modulated by stimulus intensity. The PSS, the temporal offset at which the perception of synchrony is maximal, is generally found when the visual component of a multisensory stimulus slightly precedes the auditory component. Studies investigating the effect of stimulus intensity on the PSS have generally shown that as stimulus intensity decreases, the PSS shifts to an SOA with a greater auditory lag (Boenke, Deliano, & Ohl, 2009; Neumann, Koch, Niepel, & Tappe, 1992). Similarly, stimuli presented in the periphery have been shown to have a PSS associated with a greater visual-first SOA (Arden & Weale, 1954; Zampini, Shore, & Spence, 2003), a result that may derive from changes in stimulus effectiveness. In addition, prior work from our lab has shown that the window within which an individual perceives temporal synchrony is in fact broader in the periphery (Stevenson, Fister, et al., 2012). This extension beyond measures of the PSS is vital, as manipulations of other stimulus factors, such as spatial disparity, have been shown to affect the window of integration without affecting the PSS (Keetels & Vroomen, 2005). The current study, however, provides the first evidence that direct manipulations of stimulus intensity alter the temporal tolerance for perceived simultaneity.

In accord with the changes in rates of perceived synchrony across levels of stimulus intensity, exploratory analyses of response time CDFs also revealed changes across SOAs and intensity levels. Individuals were slower to respond to stimuli presented at longer SOAs, but this effect was smaller with low-intensity stimuli. This interaction in RTs provides converging evidence with the simultaneity judgment data and reinforces the conclusion that at lower intensity levels (i.e., weaker effectiveness), multisensory systems are more tolerant of temporal offsets and thus able to bind audiovisual stimuli over larger temporal intervals.

The roles of effectiveness and timing are also important in the study of atypical sensory and multisensory perception. Specifically, effectiveness and timing play a role in dysfunctional multisensory integration in a number of clinical populations. For example, the benefit of seeing a speaker's face while conversing in a noisy environment depends upon the effectiveness of the auditory signal, but individuals with schizophrenia (Ross, Saint-Amour, Leavitt, Molholm, et al., 2007) and autism (Foxe, et al., 2013) show deficits in the amount of perceptual benefit they gain at low levels of stimulus effectiveness. Furthermore, dysfunction in the temporal processing of multisensory stimuli has also been shown in individuals with autism (Baum, Stevenson, & Wallace, 2015; Bebko, Weiss, Demark, & Gomez, 2006; de Boer-Schellekens, Eussen, & Vroomen, 2013; Foss-Feig, et al., 2010; Kwakye, Foss-Feig, Cascio, Stone, & Wallace, 2011; Stevenson, Segers, Ferber, Barense, & Wallace, 2014; Stevenson, Siemann, Schneider, et al., 2014; Stevenson, Siemann, Woynaroski, et al., 2014a, 2014b; Wallace & Stevenson, 2014; Woynaroski, et al., 2013), schizophrenia (Martin, Giersch, Huron, & van Wassenhove, 2013), and dyslexia (Froyen, Willems, & Blomert, 2011; Hairston, Burdette, Flowers, Wood, & Wallace, 2005b; Virsu, Lahti-Nuuttila, & Laasonen, 2003). Since an appropriately sized temporal binding window supports proper multisensory integration, one avenue of future research should investigate whether clinical populations with enlarged temporal windows show a corresponding widening of these windows with changing stimulus properties (i.e., stimulus intensities as described in the current study) or with increasing levels of stimulus complexity (Stevenson & Wallace, 2013; Vroomen & Stekelenburg, 2011).
Furthermore, previous studies have shown that multisensory temporal precision can be improved through perceptual learning (Powers, et al., 2012; Powers, et al., 2009; Schlesinger, et al., 2014; Stevenson, et al., 2013b). Given this, future studies applying such training to clinical populations as a possible therapeutic tool may prove fruitful. Emerging evidence suggests enormous developmental plasticity in multisensory temporal function (Hillock, et al., 2011; Hillock-Dunn & Wallace, 2012; Stevenson, et al., 2013b). Such developmental malleability could potentially be harnessed in clinical populations to ameliorate some of the changes in sensory function. Indeed, audiovisual training has been used as a tool to improve reading comprehension in children with reading disabilities (Kujala, et al., 2001; Veuillet, Magnan, Ecalle, Thai-Van, & Collet, 2007). Delineating the temporal factors and constraints for multisensory binding and integration in normative populations thus builds the foundation for comparisons in clinical populations. Such comparisons may provide key insights into the design of effective interventional measures.

5. Conclusions

The study presented here provides novel evidence that the perception of multisensory stimuli depends on both their stimulus effectiveness and their temporal synchrony. Specifically, these data suggest that the effectiveness of a stimulus presentation affects how its temporal dynamics are perceived: tolerance for stimulus asynchronies increases as stimulus effectiveness decreases. The present study clearly indicates stimulus-intensity-dependent changes in the window of temporal integration, but it was limited to two saliency levels. To provide a full picture of how the temporal principle and inverse effectiveness interact, this effect should be explored further. Such work should use a broader range of SOAs and saliency levels that span the dynamic range of behavioral responses.
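A wider window with less effective stimuli can be expressed as a larger SOA at which simultaneity reports drop to some criterion level. The sketch below estimates that point by linear interpolation between tested SOAs. The function name `window_edge`, the 0.5 criterion, and the interpolation approach are illustrative assumptions, not necessarily the procedure used in this study. Fitting a psychometric function to the simultaneity data is a common alternative. The example proportions are invented.

```python
def window_edge(soas, p_sync, criterion=0.5):
    """SOA (ms) at which the proportion of "synchronous" reports first
    drops to `criterion`, by linear interpolation between the two
    bracketing SOAs.

    `soas` must be ascending and on one side of synchrony. Returns None
    if no adjacent pair of SOAs brackets the criterion.
    """
    points = list(zip(soas, p_sync))
    for (s0, p0), (s1, p1) in zip(points, points[1:]):
        if p0 >= criterion >= p1 and p0 != p1:
            return s0 + (p0 - criterion) * (s1 - s0) / (p0 - p1)
    return None


# Invented proportions for illustration only; not data from this study.
soas = [0, 100, 200, 300]
high = [0.95, 0.80, 0.40, 0.10]   # falls off quickly with SOA
low = [0.95, 0.85, 0.70, 0.30]    # falls off more slowly
print(window_edge(soas, high), window_edge(soas, low))  # approximately 175 and 250
```

With only a few tested SOAs, such interpolated estimates are coarse. This is one practical reason a broader and denser range of SOAs would help characterize the interaction.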

Highlights.

We tested the interaction between timing and effectiveness of audiovisual stimuli and the effects of that interaction on perception of multisensory stimuli.

Participants completed an audiovisual simultaneity judgment task where stimulus onset asynchronies (SOAs) and stimulus saliencies were parametrically varied (4 SOAs, 2 saliency levels).

Our results show that perceived simultaneity decreases with SOA for both stimulus intensities.

The rate of perceived simultaneity decreases more quickly with increasing SOA for high-saliency conditions than for low-saliency conditions. This suggests a widening of the temporal window of integration with less effective stimuli.

This increased tolerance of larger temporal offsets with decreasing stimulus effectiveness indicates an interdependence between stimulus factors during multisensory integration. This interdependence can determine behavior and shape perception.

Acknowledgments

This research was funded in part through NIH grants DC011993, DC010927, MH063861, CA183492, and DC014114. Support for R.S. came from three sources: a Banting Postdoctoral Fellowship granted by the Natural Sciences and Engineering Research Council of Canada (NSERC), the Autism Research Training Program funded by the Canadian Institutes of Health Research (CIHR), and an NRSA fellowship from the NIH's National Institute on Deafness and Other Communication Disorders (1F32 DC011993). We would also like to acknowledge the support of the Vanderbilt Kennedy Center, the Vanderbilt Brain Institute, and the Bill Wilkerson Center collaborative grant mechanism.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Amlot R, Walker R, Driver J, Spence C. Multimodal visual-somatosensory integration in saccade generation. Neuropsychologia. 2003;41:1–15. doi: 10.1016/s0028-3932(02)00139-2.

- Andersen TS, Tiippana K, Sams M. Factors influencing audiovisual fission and fusion illusions. Brain Res Cogn Brain Res. 2004;21:301–308. doi: 10.1016/j.cogbrainres.2004.06.004.

- Arden GB, Weale RA. Variations of latent period of vision. Proc R Soc Lond B Biol Sci. 1954;142:258–267. doi: 10.1098/rspb.1954.0025.

- Bates SL, Wolbers T. How cognitive aging affects multisensory integration of navigational cues. Neurobiology of Aging. 2014;35:2761–2769. doi: 10.1016/j.neurobiolaging.2014.04.003.

- Baum SH, Stevenson RA, Wallace MT. Testing sensory and multisensory function in children with autism spectrum disorder. J Vis Exp. 2015:e52677. doi: 10.3791/52677.

- Beauchamp MS, Pasalar S, Ro T. Neural substrates of reliability-weighted visual-tactile multisensory integration. Front Syst Neurosci. 2010;4:25. doi: 10.3389/fnsys.2010.00025.

- Bebko JM, Weiss JA, Demark JL, Gomez P. Discrimination of temporal synchrony in intermodal events by children with autism and children with developmental disabilities without autism. J Child Psychol Psychiatry. 2006;47:88–98. doi: 10.1111/j.1469-7610.2005.01443.x.

- Beierholm UR, Quartz SR, Shams L. Bayesian priors are encoded independently from likelihoods in human multisensory perception. J Vis. 2009;9:23.1–9. doi: 10.1167/9.5.23.

- Bertelson P, Radeau M. Cross-modal bias and perceptual fusion with auditory-visual spatial discordance. Percept Psychophys. 1981;29:578–584. doi: 10.3758/bf03207374.

- Besson P, Richiardi J, Bourdin C, Bringoux L, Mestre DR, Vercher JL. Bayesian networks and information theory for audio-visual perception modeling. Biol Cybern. 2010;103:213–226. doi: 10.1007/s00422-010-0392-8.

- Billock VA, Tsou BH. Bridging the divide between sensory integration and binding theory: Using a binding-like neural synchronization mechanism to model sensory enhancements during multisensory interactions. J Cogn Neurosci. 2014;26:1587–1599. doi: 10.1162/jocn_a_00574.

- Boenke LT, Deliano M, Ohl FW. Stimulus duration influences perceived simultaneity in audiovisual temporal-order judgment. Exp Brain Res. 2009;198:233–244. doi: 10.1007/s00221-009-1917-z.

- Carriere BN, Royal DW, Wallace MT. Spatial heterogeneity of cortical receptive fields and its impact on multisensory interactions. J Neurophysiol. 2008;99:2357–2368. doi: 10.1152/jn.01386.2007.

- Charbonneau G, Veronneau M, Boudrias-Fournier C, Lepore F, Collignon O. The ventriloquist in periphery: impact of eccentricity-related reliability on audio-visual localization. J Vis. 2013;13:20. doi: 10.1167/13.12.20.

- Clemens IA, De Vrijer M, Selen LP, Van Gisbergen JA, Medendorp WP. Multisensory processing in spatial orientation: an inverse probabilistic approach. J Neurosci. 2011;31:5365–5377. doi: 10.1523/JNEUROSCI.6472-10.2011.

- Colonius H, Diederich A. Multisensory interaction in saccadic reaction time: a time-window-of-integration model. J Cogn Neurosci. 2004;16:1000–1009. doi: 10.1162/0898929041502733.

- Conrey B, Pisoni DB. Auditory-visual speech perception and synchrony detection for speech and nonspeech signals. J Acoust Soc Am. 2006;119:4065–4073. doi: 10.1121/1.2195091.

- de Boer-Schellekens L, Eussen M, Vroomen J. Diminished sensitivity of audiovisual temporal order in autism spectrum disorder. Front Integr Neurosci. 2013;7:8. doi: 10.3389/fnint.2013.00008.

- DeLoss DJ, Pierce RS, Andersen GJ. Multisensory integration, aging, and the sound-induced flash illusion. Psychol Aging. 2013;28:802–812. doi: 10.1037/a0033289.

- Deneve S, Pouget A. Bayesian multisensory integration and cross-modal spatial links. J Physiol Paris. 2004;98:249–258. doi: 10.1016/j.jphysparis.2004.03.011.

- Diaconescu AO, Hasher L, McIntosh AR. Visual dominance and multisensory integration changes with age. Neuroimage. 2013;65:152–166. doi: 10.1016/j.neuroimage.2012.09.057.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.

- Fetsch CR, Deangelis GC, Angelaki DE. Visual-vestibular cue integration for heading perception: applications of optimal cue integration theory. Eur J Neurosci. 2010;31:1721–1729. doi: 10.1111/j.1460-9568.2010.07207.x.

- Fetsch CR, Pouget A, DeAngelis GC, Angelaki DE. Neural correlates of reliability-based cue weighting during multisensory integration. Nat Neurosci. 2012;15:146–154. doi: 10.1038/nn.2983.

- Fetsch CR, Turner AH, DeAngelis GC, Angelaki DE. Dynamic reweighting of visual and vestibular cues during self-motion perception. J Neurosci. 2009;29:15601–15612. doi: 10.1523/JNEUROSCI.2574-09.2009.

- Foss-Feig JH, Kwakye LD, Cascio CJ, Burnette CP, Kadivar H, Stone WL, Wallace MT. An extended multisensory temporal binding window in autism spectrum disorders. Experimental Brain Research. 2010;203:381–389. doi: 10.1007/s00221-010-2240-4.

- Foxe JJ, Molholm S, Del Bene VA, Frey HP, Russo NN, Blanco D, Saint-Amour D, Ross LA. Severe multisensory speech integration deficits in high-functioning school-aged children with autism spectrum disorder (ASD) and their resolution during early adolescence. Cerebral Cortex. 2013:bht213. doi: 10.1093/cercor/bht213.

- Frassinetti F, Bolognini N, Ladavas E. Enhancement of visual perception by crossmodal visuo-auditory interaction. Exp Brain Res. 2002;147:332–343. doi: 10.1007/s00221-002-1262-y.

- Freiherr J, Lundstrom JN, Habel U, Reetz K. Multisensory integration mechanisms during aging. Front Hum Neurosci. 2013;7:863. doi: 10.3389/fnhum.2013.00863.

- Froyen D, Willems G, Blomert L. Evidence for a specific cross-modal association deficit in dyslexia: an electrophysiological study of letter-speech sound processing. Dev Sci. 2011;14:635–648. doi: 10.1111/j.1467-7687.2010.01007.x.

- Fujisaki W, Shimojo S, Kashino M, Nishida S. Recalibration of audiovisual simultaneity. Nat Neurosci. 2004;7:773–778. doi: 10.1038/nn1268.

- Ghose D, Wallace MT. Heterogeneity in the spatial receptive field architecture of multisensory neurons of the superior colliculus and its effects on multisensory integration. Neuroscience. 2014;256:147–162. doi: 10.1016/j.neuroscience.2013.10.044.

- Grant KW, Van Wassenhove V, Poeppel D. Detection of auditory (cross-spectral) and auditory-visual (cross-modal) synchrony. Speech Communication. 2004;44:43–53.

- Hairston WD, Burdette JH, Flowers DL, Wood FB, Wallace MT. Altered temporal profile of visual-auditory multisensory interactions in dyslexia. Experimental Brain Research. 2005a;166:474–480. doi: 10.1007/s00221-005-2387-6.

- Hairston WD, Burdette JH, Flowers DL, Wood FB, Wallace MT. Altered temporal profile of visual-auditory multisensory interactions in dyslexia. Exp Brain Res. 2005b;166:474–480. doi: 10.1007/s00221-005-2387-6.

- Helbig HB, Ernst MO, Ricciardi E, Pietrini P, Thielscher A, Mayer KM, Schultz J, Noppeney U. The neural mechanisms of reliability weighted integration of shape information from vision and touch. Neuroimage. 2012;60:1063–1072. doi: 10.1016/j.neuroimage.2011.09.072.

- Hillock AR, Powers AR, Wallace MT. Binding of sights and sounds: age-related changes in multisensory temporal processing. Neuropsychologia. 2011;49:461–467. doi: 10.1016/j.neuropsychologia.2010.11.041.

- Hillock-Dunn A, Wallace MT. Developmental changes in the multisensory temporal binding window persist into adolescence. Dev Sci. 2012;15:688–696. doi: 10.1111/j.1467-7687.2012.01171.x.

- Hugenschmidt CE, Mozolic JL, Laurienti PJ. Suppression of multisensory integration by modality-specific attention in aging. Neuroreport. 2009;20:349–353. doi: 10.1097/WNR.0b013e328323ab07.

- James TW, Kim S, Stevenson RA. Assessing multisensory interaction with additive factors and functional MRI. In: The International Society for Psychophysics. Galway, Ireland; 2009.

- James TW, Stevenson RA. The use of fMRI to assess multisensory integration. In: Wallace MH, Murray MM, editors. Frontiers in the Neural Basis of Multisensory Processes. London: Taylor & Francis; 2012a.

- James TW, Stevenson RA. The Use of fMRI to Assess Multisensory Integration. In: Murray MM, Wallace MT, editors. The Neural Bases of Multisensory Processes. Boca Raton (FL): 2012b.

- James TW, Stevenson RA, Kim S. Inverse effectiveness in multisensory processing. In: Stein BE, editor. The New Handbook of Multisensory Processes. Cambridge, MA: MIT Press; 2012.

- Joanne Jao R, James TW, Harman James K. Multisensory convergence of visual and haptic object preference across development. Neuropsychologia. 2014;56:381–392. doi: 10.1016/j.neuropsychologia.2014.02.009.

- Johannsen J, Roder B. Uni- and crossmodal refractory period effects of event-related potentials provide insights into the development of multisensory processing. Front Hum Neurosci. 2014;8:552. doi: 10.3389/fnhum.2014.00552.

- Kadunce DC, Vaughan JW, Wallace MT, Stein BE. The influence of visual and auditory receptive field organization on multisensory integration in the superior colliculus. Exp Brain Res. 2001;139:303–310. doi: 10.1007/s002210100772.

- Kasper RW, Cecotti H, Touryan J, Eckstein MP, Giesbrecht B. Isolating the neural mechanisms of interference during continuous multisensory dual-task performance. J Cogn Neurosci. 2014;26:476–489. doi: 10.1162/jocn_a_00480.

- Keetels M, Vroomen J. The role of spatial disparity and hemifields in audio-visual temporal order judgments. Exp Brain Res. 2005;167:635–640. doi: 10.1007/s00221-005-0067-1.

- Keetels M, Vroomen J. Temporal recalibration to tactile-visual asynchronous stimuli. Neurosci Lett. 2008;430:130–134. doi: 10.1016/j.neulet.2007.10.044.

- Kim S, James TW. Enhanced effectiveness in visuo-haptic object-selective brain regions with increasing stimulus salience. Hum Brain Mapp. 2010;31:678–693. doi: 10.1002/hbm.20897.

- Kim S, Stevenson RA, James TW. Visuo-haptic neuronal convergence demonstrated with an inversely effective pattern of BOLD activation. J Cogn Neurosci. 2012;24:830–842. doi: 10.1162/jocn_a_00176.

- Kording KP, Beierholm U, Ma WJ, Quartz S, Tenenbaum JB, Shams L. Causal inference in multisensory perception. PLoS ONE. 2007;2:e943. doi: 10.1371/journal.pone.0000943.

- Krueger J, Royal DW, Fister MC, Wallace MT. Spatial receptive field organization of multisensory neurons and its impact on multisensory interactions. Hear Res. 2009;258:47–54. doi: 10.1016/j.heares.2009.08.003.

- Kujala T, Karma K, Ceponiene R, Belitz S, Turkkila P, Tervaniemi M, Naatanen R. Plastic neural changes and reading improvement caused by audiovisual training in reading-impaired children. Proc Natl Acad Sci U S A. 2001;98:10509–10514. doi: 10.1073/pnas.181589198.

- Kwakye LD, Foss-Feig JH, Cascio CJ, Stone WL, Wallace MT. Altered auditory and multisensory temporal processing in autism spectrum disorders. Front Integr Neurosci. 2011;4:129. doi: 10.3389/fnint.2010.00129.

- Leone LM, McCourt ME. The roles of physical and physiological simultaneity in audiovisual multisensory facilitation. Iperception. 2013;4:213–228. doi: 10.1068/i0532.

- Lewkowicz DJ. Development of Multisensory Temporal Perception. In: Murray MM, Wallace MT, editors. The Neural Bases of Multisensory Processes. Boca Raton (FL): 2012.

- Liu B, Lin Y, Gao X, Dang J. Correlation between audio-visual enhancement of speech in different noise environments and SNR: a combined behavioral and electrophysiological study. Neuroscience. 2013;247:145–151. doi: 10.1016/j.neuroscience.2013.05.007.

- Lovelace CT, Stein BE, Wallace MT. An irrelevant light enhances auditory detection in humans: a psychophysical analysis of multisensory integration in stimulus detection. Cognitive Brain Research. 2003;17:447–453. doi: 10.1016/s0926-6410(03)00160-5.

- Ma WJ, Pouget A. Linking neurons to behavior in multisensory perception: a computational review. Brain Res. 2008;1242:4–12. doi: 10.1016/j.brainres.2008.04.082.

- Macaluso E, George N, Dolan R, Spence C, Driver J. Spatial and temporal factors during processing of audiovisual speech: a PET study. Neuroimage. 2004;21:725–732. doi: 10.1016/j.neuroimage.2003.09.049.

- Mahoney JR, Molholm S, Butler JS, Sehatpour P, Gomez-Ramirez M, Ritter W, Foxe JJ. Keeping in touch with the visual system: spatial alignment and multisensory integration of visual-somatosensory inputs. Front Psychol. 2015;6:1068. doi: 10.3389/fpsyg.2015.01068.

- Mahoney JR, Verghese J, Dumas K, Wang C, Holtzer R. The effect of multisensory cues on attention in aging. Brain Res. 2012;1472:63–73. doi: 10.1016/j.brainres.2012.07.014.

- Mahoney JR, Wang C, Dumas K, Holtzer R. Visual-somatosensory integration in aging: does stimulus location really matter? Vis Neurosci. 2014;31:275–283. doi: 10.1017/S0952523814000078.

- Martin B, Giersch A, Huron C, van Wassenhove V. Temporal event structure and timing in schizophrenia: preserved binding in a longer “now”. Neuropsychologia. 2013;51:358–371. doi: 10.1016/j.neuropsychologia.2012.07.002.

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264:746–748. doi: 10.1038/264746a0.

- Megevand P, Molholm S, Nayak A, Foxe JJ. Recalibration of the multisensory temporal window of integration results from changing task demands. PLoS ONE. 2013;8:e71608. doi: 10.1371/journal.pone.0071608.

- Meredith MA, Stein BE. Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J Neurophysiol. 1986;56:640–662. doi: 10.1152/jn.1986.56.3.640.

- Meredith MA, Stein BE. Spatial determinants of multisensory integration in cat superior colliculus neurons. J Neurophysiol. 1996;75:1843–1857. doi: 10.1152/jn.1996.75.5.1843.

- Mozolic JL, Hugenschmidt CE, Peiffer AM, Laurienti PJ. Multisensory Integration and Aging. In: Murray MM, Wallace MT, editors. The Neural Bases of Multisensory Processes. Boca Raton (FL): 2012.

- Nath AR, Beauchamp MS. Dynamic changes in superior temporal sulcus connectivity during perception of noisy audiovisual speech. J Neurosci. 2011;31:1704–1714. doi: 10.1523/JNEUROSCI.4853-10.2011.

- Neumann O, Koch R, Niepel M, Tappe T. Reaction time and temporal serial judgment: corroboration or dissociation? Z Exp Angew Psychol. 1992;39:621–645.

- The Neural Bases of Multisensory Processes. Boca Raton (FL): 2012.

- Nidiffer AR, Stevenson RA, Krueger Fister J, Barnett ZP, Wallace MT. Interactions between space and effectiveness in human multisensory performance. Neuropsychologia. 2015; in this issue. doi: 10.1016/j.neuropsychologia.2016.01.031.

- Noppeney U, Ostwald D, Werner S. Perceptual decisions formed by accumulation of audiovisual evidence in prefrontal cortex. J Neurosci. 2010;30:7434–7446. doi: 10.1523/JNEUROSCI.0455-10.2010.

- Polley DB, Hillock AR, Spankovich C, Popescu MV, Royal DW, Wallace MT. Development and plasticity of intra- and intersensory information processing. J Am Acad Audiol. 2008;19:780–798. doi: 10.3766/jaaa.19.10.6.

- Powers AR, 3rd, Hevey MA, Wallace MT. Neural correlates of multisensory perceptual learning. J Neurosci. 2012;32:6263–6274. doi: 10.1523/JNEUROSCI.6138-11.2012.

- Powers AR, 3rd, Hillock AR, Wallace MT. Perceptual training narrows the temporal window of multisensory binding. J Neurosci. 2009;29:12265–12274. doi: 10.1523/JNEUROSCI.3501-09.2009.

- Radeau M, Bertelson P. The after-effects of ventriloquism. Q J Exp Psychol. 1974;26:63–71. doi: 10.1080/14640747408400388.

- Rohe T, Noppeney U. Sensory reliability shapes perceptual inference via two mechanisms. J Vis. 2015;15:22. doi: 10.1167/15.5.22.

- Ross LA, Saint-Amour D, Leavitt VM, Javitt DC, Foxe JJ. Do you see what I am saying? Exploring visual enhancement of speech comprehension in noisy environments. Cereb Cortex. 2007;17:1147–1153. doi: 10.1093/cercor/bhl024.

- Ross LA, Saint-Amour D, Leavitt VM, Molholm S, Javitt DC, Foxe JJ. Impaired multisensory processing in schizophrenia: deficits in the visual enhancement of speech comprehension under noisy environmental conditions. Schizophr Res. 2007;97:173–183. doi: 10.1016/j.schres.2007.08.008.

- Royal DW, Carriere BN, Wallace MT. Spatiotemporal architecture of cortical receptive fields and its impact on multisensory interactions. Exp Brain Res. 2009;198:127–136. doi: 10.1007/s00221-009-1772-y.

- Royal DW, Krueger J, Fister MC, Wallace MT. Adult plasticity of spatiotemporal receptive fields of multisensory superior colliculus neurons following early visual deprivation. Restor Neurol Neurosci. 2010;28:259–270. doi: 10.3233/RNN-2010-0488.

- Sarko DK, Nidiffer AR, Powers IA, Ghose D, Hillock-Dunn A, Fister MC, Krueger J, Wallace MT. Spatial and Temporal Features of Multisensory Processes: Bridging Animal and Human Studies. In: Murray MM, Wallace MT, editors. The Neural Bases of Multisensory Processes. Boca Raton (FL): 2012.

- Sato Y, Toyoizumi T, Aihara K. Bayesian inference explains perception of unity and ventriloquism aftereffect: identification of common sources of audiovisual stimuli. Neural Comput. 2007;19:3335–3355. doi: 10.1162/neco.2007.19.12.3335.

- Schlesinger JJ, Stevenson RA, Shotwell MS, Wallace MT. Improving pulse oximetry pitch perception with multisensory perceptual training. Anesth Analg. 2014;118:1249–1253. doi: 10.1213/ANE.0000000000000222.

- Senkowski D, Saint-Amour D, Hofle M, Foxe JJ. Multisensory interactions in early evoked brain activity follow the principle of inverse effectiveness. Neuroimage. 2011;56:2200–2208. doi: 10.1016/j.neuroimage.2011.03.075.

- Shams L, Kamitani Y, Shimojo S. Visual illusion induced by sound. Brain Res Cogn Brain Res. 2002;14:147–152. doi: 10.1016/s0926-6410(02)00069-1.

- Shi Z, Muller HJ. Multisensory perception and action: development, decision-making, and neural mechanisms. Front Integr Neurosci. 2013;7:81. doi: 10.3389/fnint.2013.00081.

- Stein BE, Meredith MA, Huneycutt WS, McDade L. Behavioral Indices of Multisensory Integration: Orientation to Visual Cues is Affected by Auditory Stimuli. Journal of Cognitive Neuroscience. 1989;1:12–24. doi: 10.1162/jocn.1989.1.1.12.

- Stein BE, Stanford TR, Ramachandran R, Perrault TJ, Jr, Rowland BA. Challenges in quantifying multisensory integration: alternative criteria, models, and inverse effectiveness. Exp Brain Res. 2009;198:113–126. doi: 10.1007/s00221-009-1880-8.

- Stevenson RA, Bushmakin M, Kim S, Wallace MT, Puce A, James TW. Inverse effectiveness and multisensory interactions in visual event-related potentials with audiovisual speech. Brain Topogr. 2012;25:308–326. doi: 10.1007/s10548-012-0220-7.

- Stevenson RA, Fister JK, Barnett ZP, Nidiffer AR, Wallace MT. Interactions between the spatial and temporal stimulus factors that influence multisensory integration in human performance. Exp Brain Res. 2012;219:121–137. doi: 10.1007/s00221-012-3072-1.

- Stevenson RA, Ghose D, Fister JK, Sarko DK, Altieri NA, Nidiffer AR, Kurela LR, Siemann JK, James TW, Wallace MT. Identifying and quantifying multisensory integration: a tutorial review. Brain Topogr. 2014;27:707–730. doi: 10.1007/s10548-014-0365-7.

- Stevenson RA, James TW. Audiovisual integration in human superior temporal sulcus: Inverse effectiveness and the neural processing of speech and object recognition. Neuroimage. 2009;44:1210–1223. doi: 10.1016/j.neuroimage.2008.09.034.

- Stevenson RA, Nelms CE, Baum SH, Zurkovsky L, Barense MD, Newhouse PA, Wallace MT. Deficits in audiovisual speech perception in normal aging emerge at the level of whole-word recognition. Neurobiology of Aging. 2015;36:283–291. doi: 10.1016/j.neurobiolaging.2014.08.003.

- Stevenson RA, Segers M, Ferber S, Barense MD, Wallace MT. The impact of multisensory integration deficits on speech perception in children with autism spectrum disorders. Front Psychol. 2014;5:379. doi: 10.3389/fpsyg.2014.00379.

- Stevenson RA, Siemann JK, Schneider BC, Eberly HE, Woynaroski TG, Camarata SM, Wallace MT. Multisensory temporal integration in autism spectrum disorders. J Neurosci. 2014;34:691–697. doi: 10.1523/JNEUROSCI.3615-13.2014.

- Stevenson RA, Siemann JK, Woynaroski TG, Schneider BC, Eberly HE, Camarata SM, Wallace MT. Brief report: Arrested development of audiovisual speech perception in autism spectrum disorders. J Autism Dev Disord. 2014a;44:1470–1477. doi: 10.1007/s10803-013-1992-7.

- Stevenson RA, Siemann JK, Woynaroski TG, Schneider BC, Eberly HE, Camarata SM, Wallace MT. Evidence for diminished multisensory integration in autism spectrum disorders. J Autism Dev Disord. 2014b;44:3161–3167. doi: 10.1007/s10803-014-2179-6.

- Stevenson RA, Wallace MT. Multisensory temporal integration: task and stimulus dependencies. Exp Brain Res. 2013;227:249–261. doi: 10.1007/s00221-013-3507-3.

- Stevenson RA, Wilson MM, Powers AR, Wallace MT. The effects of visual training on multisensory temporal processing. Exp Brain Res. 2013a;225:479–489. doi: 10.1007/s00221-012-3387-y.

- Stevenson RA, Wilson MM, Powers AR, Wallace MT. The effects of visual training on multisensory temporal processing. Exp Brain Res. 2013b. doi: 10.1007/s00221-012-3387-y.

- Stevenson RA, Zemtsov RK, Wallace MT. Individual differences in the multisensory temporal binding window predict susceptibility to audiovisual illusions. J Exp Psychol Hum Percept Perform. 2012a;38:1517–1529. doi: 10.1037/a0027339.

- Stevenson RA, Zemtsov RK, Wallace MT. Individual Differences in the Multisensory Temporal Binding Window Predict Susceptibility to Audiovisual Illusions. J Exp Psychol Hum Percept Perform. 2012b. doi: 10.1037/a0027339.

- van Atteveldt NM, Formisano E, Blomert L, Goebel R. The effect of temporal asynchrony on the multisensory integration of letters and speech sounds. Cereb Cortex. 2007;17:962–974. doi: 10.1093/cercor/bhl007.

- van Eijk RL, Kohlrausch A, Juola JF, van de Par S. Audiovisual synchrony and temporal order judgments: effects of experimental method and stimulus type. Percept Psychophys. 2008;70:955–968. doi: 10.3758/pp.70.6.955.

- van Eijk RL, Kohlrausch A, Juola JF, van de Par S. Temporal order judgment criteria are affected by synchrony judgment sensitivity. Atten Percept Psychophys. 2010;72:2227–2235. doi: 10.3758/bf03196697.

- van Wassenhove V, Grant KW, Poeppel D. Temporal window of integration in auditory-visual speech perception. Neuropsychologia. 2007;45:598–607. doi: 10.1016/j.neuropsychologia.2006.01.001.

- Veuillet E, Magnan A, Ecalle J, Thai-Van H, Collet L. Auditory processing disorder in children with reading disabilities: effect of audiovisual training. Brain. 2007;130:2915–2928. doi: 10.1093/brain/awm235.

- Virsu V, Lahti-Nuuttila P, Laasonen M. Crossmodal temporal processing acuity impairment aggravates with age in developmental dyslexia. Neurosci Lett. 2003;336:151–154. doi: 10.1016/s0304-3940(02)01253-3.

- Vroomen J, Bertelson P, de Gelder B. Directing spatial attention towards the illusory location of a ventriloquized sound. Acta Psychol (Amst) 2001;108:21–33. doi: 10.1016/s0001-6918(00)00068-8.

- Vroomen J, de Gelder B. Temporal ventriloquism: sound modulates the flash-lag effect. J Exp Psychol Hum Percept Perform. 2004;30:513–518. doi: 10.1037/0096-1523.30.3.513.

- Vroomen J, Keetels M. Perception of intersensory synchrony: a tutorial review. Atten Percept Psychophys. 2010;72:871–884. doi: 10.3758/APP.72.4.871.

- Vroomen J, Keetels M, de Gelder B, Bertelson P. Recalibration of temporal order perception by exposure to audio-visual asynchrony. Brain Res Cogn Brain Res. 2004;22:32–35. doi: 10.1016/j.cogbrainres.2004.07.003.

- Vroomen J, Stekelenburg JJ. Perception of intersensory synchrony in audiovisual speech: not that special. Cognition. 2011;118:75–83. doi: 10.1016/j.cognition.2010.10.002.

- Wallace MT, Roberson GE, Hairston WD, Stein BE, Vaughan JW, Schirillo JA. Unifying multisensory signals across time and space. Exp Brain Res. 2004;158:252–258. doi: 10.1007/s00221-004-1899-9.

- Wallace MT, Stevenson RA. The construct of the multisensory temporal binding window and its dysregulation in developmental disabilities. Neuropsychologia. 2014;64C:105–123. doi: 10.1016/j.neuropsychologia.2014.08.005.

- Werner S, Noppeney U. Superadditive responses in superior temporal sulcus predict audiovisual benefits in object categorization. Cereb Cortex. 2010;20:1829–1842. doi: 10.1093/cercor/bhp248.

- Woynaroski TG, Kwakye LD, Foss-Feig JH, Stevenson RA, Stone WL, Wallace MT. Multisensory Speech Perception in Children with Autism Spectrum Disorders. J Autism Dev Disord. 2013. doi: 10.1007/s10803-013-1836-5.

- Yalachkov Y, Kaiser J, Doehrmann O, Naumer MJ. Enhanced visuo-haptic integration for the non-dominant hand. Brain Res. 2015;1614:75–85. doi: 10.1016/j.brainres.2015.04.020.

- Zampini M, Shore DI, Spence C. Audiovisual temporal order judgments. Exp Brain Res. 2003;152:198–210. doi: 10.1007/s00221-003-1536-z.