Abstract

Viewing images of manipulable objects elicits differential blood oxygen level-dependent (BOLD) contrast across parietal and dorsal occipital areas of the human brain that support object-directed reaching, grasping, and complex object manipulation. However, it is unknown which object-selective regions of parietal cortex receive their principal inputs from the ventral object-processing pathway and which receive their inputs from the dorsal object-processing pathway. Parietal areas that receive their inputs from the ventral visual pathway, rather than from the dorsal stream, will have inputs that are already filtered through object categorization and identification processes. This predicts that parietal regions that receive inputs from the ventral visual pathway should exhibit object-selective responses that are resilient to contralateral visual field biases. To test this hypothesis, adult participants viewed images of tools and animals that were presented to the left or right visual fields during functional magnetic resonance imaging (fMRI). We found that the left inferior parietal lobule showed robust tool preferences independently of the visual field in which tool stimuli were presented. In contrast, a region in posterior parietal/dorsal occipital cortex in the right hemisphere exhibited an interaction between visual field and category: tool preferences were strongest for stimuli presented in the contralateral (left) visual field. These findings suggest that action knowledge accessed in the left inferior parietal lobule operates over inputs that are abstracted from the visual input and contingent on analysis by the ventral visual pathway, consistent with its putative role in supporting object manipulation knowledge.

Keywords: functional MRI, dorsal stream, ventral stream, manipulable objects, visual processing

Introduction

One overarching feature of the human visual system is the contralateral representation of the visual fields across the two hemispheres. Another feature is that subregions of high-level visual processing areas in the brain exhibit category preferences for a limited number of distinct categories (e.g., faces, places, animals, body parts, tools; Allison, McCarthy, Nobre, Puce, & Belger, 1994; Kanwisher, McDermott, & Chun, 1997; Chao, Haxby, & Martin, 1999; Epstein, Harris, Stanley, & Kanwisher, 1999; Ishai, Ungerleider, Martin, Schouten, & Haxby, 1999; Downing, Jiang, Shuman, & Kanwisher, 2001; for reviews, see Grill-Spector & Malach, 2004; Martin, 2007; Peelen & Downing, 2007; Op de Beeck, Haushofer, & Kanwisher, 2008; Mahon & Caramazza, 2009; Martin, 2009). A third broad characteristic of high-level vision is the distinction between a ventral and a dorsal visual object-processing pathway: the ventral pathway supports object identification while the dorsal pathway supports online control of object-directed actions (Ungerleider & Mishkin, 1982; Goodale, Milner, Jakobsen, & Carey, 1991; Goodale & Milner, 1992; Milner & Goodale, 2008). Here we study the confluence of these three macroscopic properties of the visual system.

The ability to identify, grasp, and then use objects correctly according to their function requires coordinated processing across the dorsal and ventral visual pathways, and in reference frames that are both invariant to the location of the target object (identification) and highly dependent on the object’s location (grasping). A key issue is how and where information from the dorsal and ventral streams is integrated (e.g., see Gallivan et al., 2014; Garcea, Almeida, & Mahon, 2012; Garcea & Mahon, 2014). While such integration likely occurs in multiple areas, one candidate structure well suited to integrate information from the two visual pathways is the left inferior parietal lobule. Prior work has shown that the left parietal lobule exhibits neural specificity for manipulable objects (Chao and Martin, 2000; Noppeney, Price, Penny, & Friston, 2006; Mahon, Milleville, Negri, Rumiati, Caramazza, et al., 2007; Mruczek, von Loga, & Kastner, 2013; Peeters, Rizzolatti, & Orban, 2013; for reviews, see Lewis, 2006; Martin, 2007), supports complex object manipulation (Liepmann, 1905; Johnson-Frey, 2004; Rumiati, Weiss, Shallice, Ottoboni, Noth, et al., 2004), and has the requisite functional and anatomical connectivity that could, in principle, support the integration of multiple streams of information (Rizzolatti & Matelli, 2003; Rushworth, Behrens, & Johansen-Berg, 2006; Kravitz, Saleem, Baker, & Mishkin, 2011; Garcea & Mahon, 2014; Hutchinson et al., 2014; Stevens et al., 2015; for discussion, see Binkofski & Buxbaum, 2013).

Here we sought to test which regions of tool-selective parietal cortex receive functional inputs from the ventral visual pathway, and which receive inputs from the dorsal object-processing pathway. We reasoned that parietal regions that receive inputs from the ventral visual pathway should exhibit object-selectivity that largely abstracts away from the visual field location in which the stimuli were presented. The motivation for this prediction is that inputs that come via a ventral visual analysis of the input will have already been filtered through object categorization and identification processes. In contrast, regions of parietal cortex or dorsal occipital cortex in which stimulus processing is not mediated by analysis in the ventral visual pathway, and which support volumetric analysis in the service of visuomotor actions, would be predicted to show preferences for tools with a strong bias toward the contralateral visual field (e.g., Handy, Grafton, Shroff, Ketay, & Gazzaniga, 2003). To test these hypotheses, we designed an fMRI experiment in which tool and animal stimuli were presented in the right and left visual fields while participants were required to maintain fixation on a central fixation point. This design allows us to determine brain regions that exhibit tool preferences regardless of whether the stimuli were presented in the left or right visual fields, as well as regions that exhibit tool preferences that are strongly modulated by the side of presentation.

Methods

Participants

Sixteen University of Rochester students (9 females; mean age = 22.4 years, SD = 2.7 years) participated in the study in exchange for payment. All participants were strongly right-handed (as established with the Edinburgh Handedness Inventory), had normal or corrected-to-normal vision, were native English speakers, and had no history of neurological disorders. All participants gave written informed consent in accordance with the University of Rochester Research Subjects Review Board.

General Procedure

Stimulus presentation was controlled with ‘A Simple Framework’ (ASF; Schwarzbach, 2011) using the Psychophysics Toolbox (Pelli, 1997) in MATLAB running on a Mac Pro. All participants viewed the stimuli binocularly through a mirror attached to the head coil, adjusted to allow foveal viewing of a back-projected monitor (refresh rate = 120 Hz). Each participant took part in two scanning sessions. The first scanning session included a T1 anatomical scan (6 minutes) and 8 three-minute runs of a category localizer (91 volumes per run; see below for materials and design). The second session included 6 seven-minute runs of the lateralized picture viewing experiment (204 volumes per run; see below for materials and design).

Experiment 1: Tool, Animal, Face, Place (TAFP) Localizer: Materials and Design

Grayscale photographs of twelve items from each of four categories (tools, animals, famous faces, and famous places) were used; each item had 8 exemplars, for a total of 96 images per category (384 images total). Phase-scrambled versions of the stimuli were created to serve as a baseline condition (see Fintzi and Mahon, 2014, for details on materials). Participants passively viewed images of tools, animals, faces, and places in a miniblock design. Within each 6-second miniblock, 12 stimuli were presented for 500 ms each (ISI = 0 ms), and 6-second fixation periods were presented between miniblocks; within each run, 8 miniblocks of intact images and 4 miniblocks of phase-scrambled images were presented. The order of stimulus presentation was balanced such that, upon completion of 8 runs, participants had viewed all stimuli from the four categories (i.e., a different exemplar of each item was used in each run). All participants completed 8 runs of the TAFP experiment (91 volumes per run).
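As a quick consistency check on the localizer design, the arithmetic below recomputes image counts and timing from the values stated above. The TR of 2 s is taken from the MRI acquisition section; the variable names are illustrative only.

```python
# Values stated in the Methods; TR = 2 s comes from the MRI acquisition section.
ITEMS_PER_CATEGORY = 12
EXEMPLARS_PER_ITEM = 8
N_CATEGORIES = 4           # tools, animals, faces, places
N_RUNS = 8
STIMS_PER_MINIBLOCK = 12
STIM_DURATION_S = 0.5      # 500 ms with ISI = 0 ms
VOLUMES_PER_RUN = 91
TR_S = 2.0

images_per_category = ITEMS_PER_CATEGORY * EXEMPLARS_PER_ITEM   # 96
images_total = images_per_category * N_CATEGORIES               # 384
exemplars_per_item_per_run = EXEMPLARS_PER_ITEM // N_RUNS       # one new exemplar per run
miniblock_s = STIMS_PER_MINIBLOCK * STIM_DURATION_S             # 6.0 s
run_s = VOLUMES_PER_RUN * TR_S                                  # 182.0 s, about 3 minutes

print(images_per_category, images_total, exemplars_per_item_per_run, miniblock_s, run_s)
```

The 182 s run length is consistent with the "three-minute runs" described in the General Procedure.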

Experiment 2: Lateralized Picture Viewing: Materials and Design

Sixteen pictures of tools and 16 pictures of animals were used. Scrambled versions of the items were created to serve as a baseline condition (groups of pixels were randomly displaced). Participants maintained fixation on a centrally presented black dot while tool and animal stimuli were presented in a miniblock design (participants’ gaze was monitored online with a video feed inside the bore to ensure task compliance). Within each 8-second miniblock, 16 intact or scrambled tools or animals were presented for 500 ms each (ISI = 0 ms) in either the left or the right visual field (the center of each lateralized stimulus was approximately 5 degrees of visual angle from fixation). Miniblocks were separated by 8 seconds of fixation, during which a black cross was presented in the center of the screen.

There were 3 factors in the experiment: Visual Field (2 levels; right, left), Category (2 levels; animals, tools), and Stimulus Identity (2 levels; intact, scrambled). Within a run, each intact image was presented eight times (four times within each visual field), and each scrambled image was presented four times (two times within each visual field); condition order was randomized, with the constraint that the same cell of the design did not repeat across two successive miniblocks within a run. Fifteen of the 16 participants completed 6 runs of the lateralized picture viewing experiment; due to technical errors, 1 participant completed 4 runs (always 204 volumes per run). As part of a separate research question, the tool and animal images were presented on a red or green background; as this factor is not germane to the goals of the current report, it is not analyzed.
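The no-immediate-repeat constraint on condition order can be illustrated with a small rejection-sampling sketch. It assumes, which is not stated explicitly above, that each miniblock presents all 16 images of one category once; under that assumption each intact cell (visual field × category) contributes 4 miniblocks and each scrambled cell 2, for 24 miniblocks per run. The function name and the shuffle-and-reject strategy are hypothetical; the randomization routine actually used in ASF is not described here.

```python
import random
from collections import Counter
from itertools import product

# Assumed miniblocks per design cell and run (hypothetical reconstruction, see above).
MINIBLOCKS_PER_CELL = {"intact": 4, "scrambled": 2}


def make_run_order(seed=None, max_tries=10_000):
    """Shuffle the run's miniblocks until no design cell occurs twice in a row."""
    rng = random.Random(seed)
    blocks = [
        (field, category, identity)
        for field, category, identity in product(
            ("left", "right"), ("tool", "animal"), ("intact", "scrambled"))
        for _ in range(MINIBLOCKS_PER_CELL[identity])
    ]
    for _ in range(max_tries):
        rng.shuffle(blocks)
        if all(a != b for a, b in zip(blocks, blocks[1:])):
            return blocks
    raise RuntimeError("no valid order found; increase max_tries")


order = make_run_order(seed=1)
counts = Counter(order)  # 4 miniblocks per intact cell, 2 per scrambled cell
```

Rejection sampling is the simplest way to enforce the constraint; with 8 cells and 24 miniblocks, a valid order is typically found within a few dozen shuffles.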

MRI acquisition and preprocessing

Whole-brain blood oxygen level-dependent (BOLD) imaging was conducted on a 3-Tesla Siemens MAGNETOM Trio scanner with a 32-channel head coil located at the Rochester Center for Brain Imaging. High-resolution structural T1 contrast images were acquired using a magnetization prepared rapid gradient echo (MP-RAGE) pulse sequence at the start of each session (TR = 2530 ms, TE = 3.44 ms, flip angle = 7 degrees, FOV = 256 mm, matrix = 256 × 256, 1×1×1 mm sagittal left-to-right slices). An echo-planar imaging pulse sequence was used for T2* contrast (TR = 2000 ms, TE = 30 ms, flip angle = 90 degrees, FOV = 256 × 256 mm, matrix = 64 × 64, 30 sagittal left-to-right slices, voxel size = 4×4×4 mm). The first two volumes of each run were discarded to allow for signal equilibration.

MRI data were analyzed with the BrainVoyager software package (Version 2.8) and in-house MATLAB scripts drawing on the BVQX toolbox (http://support.brainvoyager.com/available-tools/52-matlab-tools-bvxqtools.html). Preprocessing of the functional data included, in the following order, slice scan time correction (sinc interpolation), motion correction with respect to the first volume of the first functional run, and linear trend removal in the temporal domain (cutoff: 2 cycles within the run). Functional data were registered (after contrast inversion of the first volume) to high-resolution deskulled anatomy on a participant-by-participant basis in native space. For each participant, echo-planar and anatomical volumes were transformed into standardized space (Talairach and Tournoux, 1988). All functional data were smoothed with a 6 mm FWHM Gaussian kernel (1.5 voxels) and interpolated to 3 mm isotropic voxels.
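The "1.5 voxels" figure refers to the 4 mm acquisition voxels (FOV 256 mm divided by a 64-voxel matrix). Tools that parameterize Gaussian smoothing by its standard deviation rather than its FWHM need the standard conversion sigma = FWHM / (2 sqrt(2 ln 2)); a minimal sketch, with illustrative names:

```python
import math


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian full width at half maximum to its standard deviation."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


fov_mm, matrix = 256.0, 64
acq_voxel_mm = fov_mm / matrix                              # 4.0 mm in-plane
fwhm_mm = 6.0
fwhm_in_voxels = fwhm_mm / acq_voxel_mm                     # 1.5 acquisition voxels
sigma_mm = fwhm_to_sigma(fwhm_mm)                           # about 2.55 mm
sigma_in_acq_voxels = fwhm_to_sigma(fwhm_in_voxels)         # about 0.64 voxels
```

By construction, a Gaussian with this sigma falls to half its peak at a distance of FWHM / 2 (3 mm) from the center.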

Results

Independent Definition of Tool Preferring Regions

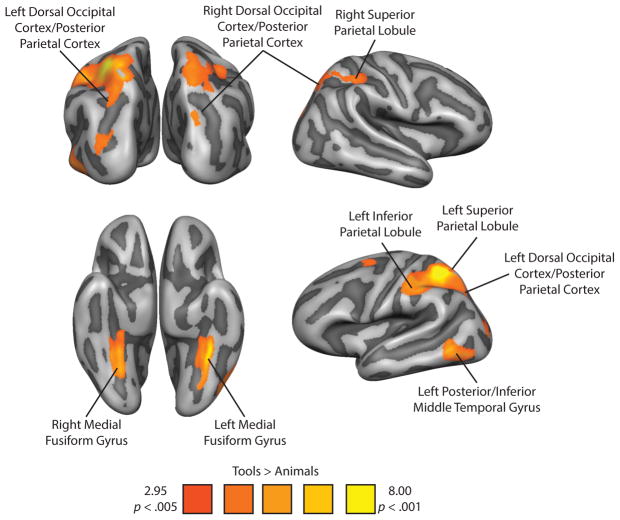

Tool-preferring voxels were identified in a whole-brain analysis (random effects general linear model; p < .005, cluster corrected) with the contrast of Tools > Animals using the independent category-localizer experiment (for details, see Experiment 1 in Methods). Replicating previous studies (Chao et al., 1999; Chao and Martin, 2000; Noppeney et al., 2006; Mahon et al., 2007; Mahon, Kumar, and Almeida, 2013; Garcea & Mahon, 2014), viewing images of tools led to increased BOLD contrast in the left inferior and superior parietal lobule, the left posterior/inferior middle temporal gyrus, the left posterior parietal/dorsal occipital cortex, and bilateral medial fusiform gyrus. In addition, there was a significant increase in BOLD contrast for tool stimuli in the right superior parietal lobule and the right posterior parietal/dorsal occipital cortex. The effect in the right posterior parietal/dorsal occipital tool-preferring area was weaker than in the other areas and was defined with a more lenient threshold, p < .01, cluster corrected. We note, however, that the location of this tool-preferring region is in very good agreement with prior work from our lab (e.g., Garcea and Mahon, 2014) and others (e.g., Fang and He, 2005). Figure 1 shows all voxels in the brain that exhibited increased BOLD contrast for tool compared to animal stimuli, and Table 1 lists the coordinates and statistical values associated with the peak voxels for each region of interest (ROI).

Figure 1.

Tool Preferences in the Right and Left Dorsal and Ventral Visual Pathways. Tool preferences were identified with the contrast of ‘Tools > Animals’ (p < .005). The whole-brain contrast map was corrected for multiple comparisons with a Monte Carlo simulation (AlphaSim, 1000 iterations) that sets a minimum cluster size to maintain a family-wise Type I error rate of 5%. This contrast identifies bilateral superior parietal lobules, bilateral medial fusiform gyri, bilateral posterior parietal/dorsal occipital cortices (identified at a more lenient threshold of p < .01), the left inferior parietal lobule, and the left posterior/inferior middle temporal gyrus.
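The logic of a Monte Carlo cluster-size correction of the kind AlphaSim performs can be sketched as follows: simulate smoothed Gaussian noise volumes, threshold each at the voxelwise p value, record the largest surviving cluster, and choose the smallest cluster size that chance alone reaches in no more than 5% of iterations. This is a simplified illustration, not AlphaSim itself: it assumes a one-tailed voxelwise threshold, face-connected (6-neighbour) clusters, a rectangular volume with no brain mask, zero-padded edges, and a known smoothness in voxel units. All function names are hypothetical.

```python
from collections import deque
from statistics import NormalDist

import numpy as np


def gaussian_kernel(fwhm_vox):
    sigma = fwhm_vox / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def smooth(vol, kernel):
    # Separable Gaussian smoothing, one axis at a time (edges are zero-padded).
    for axis in range(vol.ndim):
        vol = np.apply_along_axis(np.convolve, axis, vol, kernel, mode="same")
    return vol


def max_cluster_size(mask):
    """Size of the largest face-connected (6-neighbour) cluster in a boolean 3-D mask."""
    remaining = set(map(tuple, np.argwhere(mask)))
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    best = 0
    while remaining:
        queue, size = deque([remaining.pop()]), 0
        while queue:
            x, y, z = queue.popleft()
            size += 1
            for dx, dy, dz in offsets:
                nb = (x + dx, y + dy, z + dz)
                if nb in remaining:
                    remaining.remove(nb)
                    queue.append(nb)
        best = max(best, size)
    return best


def cluster_size_threshold(shape, fwhm_vox, p_voxel=0.005, alpha=0.05, n_iter=1000, seed=0):
    rng = np.random.default_rng(seed)
    z_thresh = NormalDist().inv_cdf(1.0 - p_voxel)  # one-tailed voxelwise threshold (assumption)
    kernel = gaussian_kernel(fwhm_vox)
    max_sizes = np.empty(n_iter, dtype=int)
    for i in range(n_iter):
        noise = smooth(rng.standard_normal(shape), kernel)
        noise = (noise - noise.mean()) / noise.std()  # re-standardize after smoothing
        max_sizes[i] = max_cluster_size(noise > z_thresh)
    # Smallest k such that a chance cluster of >= k voxels occurs in <= alpha of iterations.
    k = 1
    while np.mean(max_sizes >= k) > alpha:
        k += 1
    return k
```

Clusters in the real statistical map smaller than the returned size would then be discarded. The pure-Python cluster search keeps the sketch dependency-light; a production version would use a dedicated labeling routine.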

Table 1.

Talairach coordinates for peak voxels from regions showing differential BOLD contrast for Tool stimuli in the TAFP Localizer.

| Region | Peak X | Peak Y | Peak Z | Statistical value for peak voxel | Volume (mm³) |
|---|---|---|---|---|---|
| Left Parietal Cortex | −34 | −50 | 51 | t(15) = 8.94, p < 0.001 | 15328 |
| Left Medial Fusiform Gyrus | −25 | −35 | −24 | t(15) = 7.43, p < 0.001 | 4803 |
| Left Posterior/Inferior Middle Temporal Gyrus | −49 | −56 | −6 | t(15) = 5.49, p < 0.001 | 4196 |
| Left Posterior Parietal/Dorsal Occipital Cortex | −25 | −71 | 36 | t(15) = 4.82, p < 0.001 | 918 |
| Right Superior Parietal Lobule | 17 | −62 | 48 | t(15) = 4.42, p < 0.001 | 3499 |
| Right Medial Fusiform Gyrus | 29 | −56 | −6 | t(15) = 8.39, p < 0.001 | 2884 |
| Right Posterior Parietal/Dorsal Occipital Cortex | 35 | −83 | 19 | t(15) = 4.45, p < 0.001 | 4978 |

Tool Preferences Robust to Lateralized Presentation

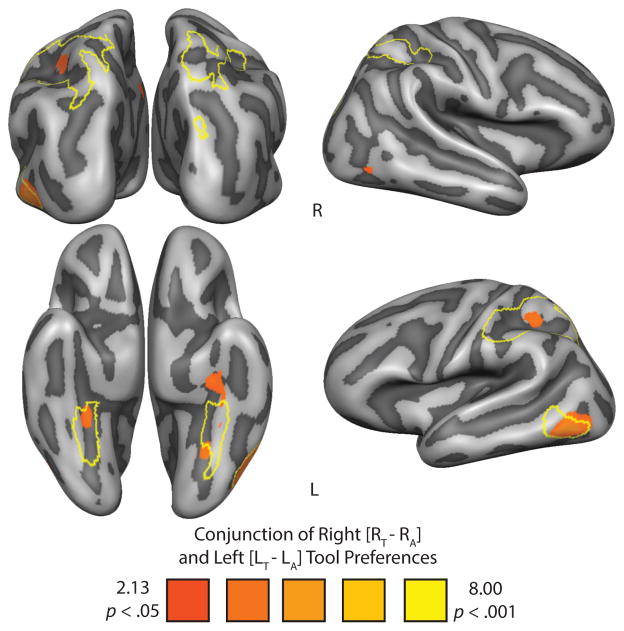

We next evaluated in which regions tool preferences were robust to the visual field in which the stimuli were presented. The most stringent test is a whole-brain analysis identifying all voxels that survive the conjunction of [right tools] > [right animals] and [left tools] > [left animals]: any voxel that survives this conjunction exhibits tool preferences regardless of whether the stimuli are presented in the left or the right visual field (see Nichols et al., 2004). The resulting whole-brain contrast map is plotted in Figure 2. The conjunction analysis identified the left posterior/inferior middle temporal gyrus, bilateral medial fusiform gyri, and, critically, the left inferior parietal lobule. Notably, there was no significant BOLD contrast in the left superior parietal lobule or in the right hemisphere parietal and dorsal occipital regions (right superior parietal lobule, right posterior parietal/dorsal occipital cortex) that expressed significant BOLD contrast for tool stimuli in the independent category localizer. This can be seen in Figure 2, in which the borders of the independently defined tool-preferring regions from the functional localizer experiment are outlined in yellow.
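A conjunction of this kind is commonly implemented with the minimum statistic: a voxel is retained only if the smaller of the two t values exceeds the threshold, which is equivalent to requiring that both contrasts be individually significant at that threshold. The sketch below uses toy t values and a hypothetical threshold of 3.0 purely for illustration; the function name is hypothetical.

```python
import numpy as np


def conjunction_min_t(t_maps, t_threshold):
    """Minimum-statistic conjunction over several t maps of the same shape."""
    min_t = np.min(np.stack(t_maps), axis=0)
    return min_t, min_t > t_threshold


# Toy t values for four voxels (not data from this study).
t_right = np.array([4.1, 3.5, 1.2, 5.0])  # [right tools] > [right animals]
t_left = np.array([3.9, 0.8, 4.4, 4.6])   # [left tools] > [left animals]
min_t, survives = conjunction_min_t([t_right, t_left], t_threshold=3.0)
```

Only the first and last toy voxels survive: the second and third show a tool preference in one visual field only.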

Figure 2.

Whole-brain increase in BOLD contrast for the conjunction of right [Right Tool − Right Animal] and left [Left Tool − Left Animal] tool preferences in the lateralized picture viewing experiment. The ROIs from the independent functional category localizer (Figure 1) are outlined in yellow. All whole-brain maps were cluster-corrected.

Tool Preferences Modulated by Visual Field of Presentation

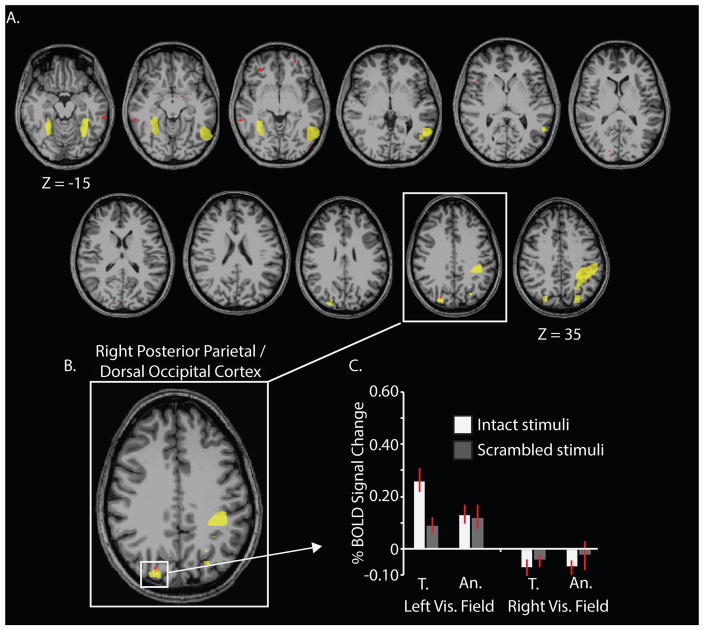

We next sought positive evidence that more posterior parietal or dorsal occipital regions would exhibit tool preferences that are driven by contralateral stimulus presentation. To that end, we carried out a whole-brain repeated measures ANOVA over the data from Experiment 2, the experiment in which participants maintained central fixation while images of tools and animals were presented in the left and right visual fields. The ANOVA modeled the main effects of category (two levels; tool, animal), visual field location (two levels; left visual field, right visual field), stimulus identity (two levels; intact, scrambled), and the interaction among the three factors. A second goal was to confirm that any regions that exhibit a significant 3-way interaction were showing modulation of category by location only for intact stimuli: to that end, a separate whole-brain 2-way ANOVA between category (tools, animals) and location (left, right) was run over only the intact stimuli (i.e., excluding the scrambled images).
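In a 2 × 2 × 2 within-subject design, every main effect and interaction has one degree of freedom and can be written as a contrast whose weights are products of ±1 codes. The sketch below (all names hypothetical) builds those weights and shows that the 3-way contrast is exactly the Category × Visual Field interaction for intact stimuli minus the same interaction for scrambled stimuli. A significant 3-way interaction alone therefore does not establish an effect for intact stimuli, which motivates the separate 2-way analysis over intact stimuli.

```python
import itertools

import numpy as np

# Levels are listed with the "+1" level first for each factor (hypothetical coding).
FACTORS = {
    "field": ("left", "right"),
    "category": ("tool", "animal"),
    "identity": ("intact", "scrambled"),
}
NAMES = list(FACTORS)
CONDITIONS = list(itertools.product(*FACTORS.values()))  # the 8 design cells


def effect_weights(*factor_names):
    """Contrast weights for a main effect or interaction (product of +/-1 codes)."""
    weights = []
    for cond in CONDITIONS:
        sign = 1
        for name in factor_names:
            level = cond[NAMES.index(name)]
            sign *= 1 if level == FACTORS[name][0] else -1
        weights.append(sign)
    return np.array(weights)


def contrast_scores(betas, weights):
    """Per-participant scores from an (n_participants, 8) array ordered like CONDITIONS."""
    return np.asarray(betas) @ weights


three_way = effect_weights("field", "category", "identity")
field_by_category = effect_weights("field", "category")

# Field x Category interaction restricted to intact or to scrambled cells.
is_intact = np.array([cond[2] == "intact" for cond in CONDITIONS])
two_way_intact = field_by_category * is_intact
two_way_scrambled = field_by_category * ~is_intact

# The 3-way contrast equals the intact 2-way contrast minus the scrambled one.
assert np.array_equal(three_way, two_way_intact - two_way_scrambled)
```

Each participant's contrast score would then be tested against zero across participants.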

The most conservative test to identify regions in which tool preferences are modulated by side of presentation is to take the intersection of two maps: the 3-way category*visual field location*stimulus identity interaction, and the 2-way category*visual field location interaction computed over intact stimuli only. Any voxel in the overlap of those two interaction tests exhibits modulation of category preferences by side of presentation for intact stimuli only. The resulting intersection map is plotted in Figure 3A. The only region in this intersection map that had also been independently identified as tool-preferring in the category localizer session was the right posterior parietal/dorsal occipital region (see Figure 3B). Plotting BOLD contrast by condition for those intersecting voxels confirmed that responses were driven by contralaterally presented tool stimuli (see Figure 3C).
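The intersection step amounts to a voxelwise logical AND of the two thresholded interaction maps, which can then be restricted to the localizer-defined ROIs. A minimal sketch with toy p values and a hypothetical common threshold; it omits the cluster correction applied to the actual whole-brain maps, and the function name is hypothetical.

```python
import numpy as np


def intersect_interaction_maps(p_three_way, p_two_way_intact, roi_mask, p_thresh):
    """Voxels significant in both interaction maps, and the subset inside the ROI mask."""
    both = (np.asarray(p_three_way) < p_thresh) & (np.asarray(p_two_way_intact) < p_thresh)
    return both, both & np.asarray(roi_mask, dtype=bool)


# Toy example: five voxels (not data from this study).
p3 = np.array([0.001, 0.020, 0.003, 0.004, 0.200])
p2 = np.array([0.002, 0.001, 0.030, 0.001, 0.001])
roi = np.array([True, True, True, False, False])
overlap, overlap_in_roi = intersect_interaction_maps(p3, p2, roi, p_thresh=0.005)
```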

Figure 3.

Whole-brain overlap between the Category*Visual Field Location*Stimulus Identity interaction and the Category*Visual Field Location interaction for intact stimuli identifies the right posterior parietal/dorsal occipital cortex. The whole-brain results are plotted as axial slices (panel A). A gray-matter mask was used to indicate which cortical voxels showed robust category preferences that were modulated by the side of presentation for intact stimuli only. The ROIs from the independent category localizer (Figure 1) are outlined in yellow; the overlap between the 3-way interaction map and the 2-way interaction map is marked in red. The only region that emerges in common to the two whole-brain ANOVAs and which was also identified by the category-localizer experiment as exhibiting tool preferences was the right posterior parietal/dorsal occipital cortex (panel B). Responses in the right posterior parietal/dorsal occipital region were driven by contralaterally presented tool stimuli (panel C). T., tools; An., animals.

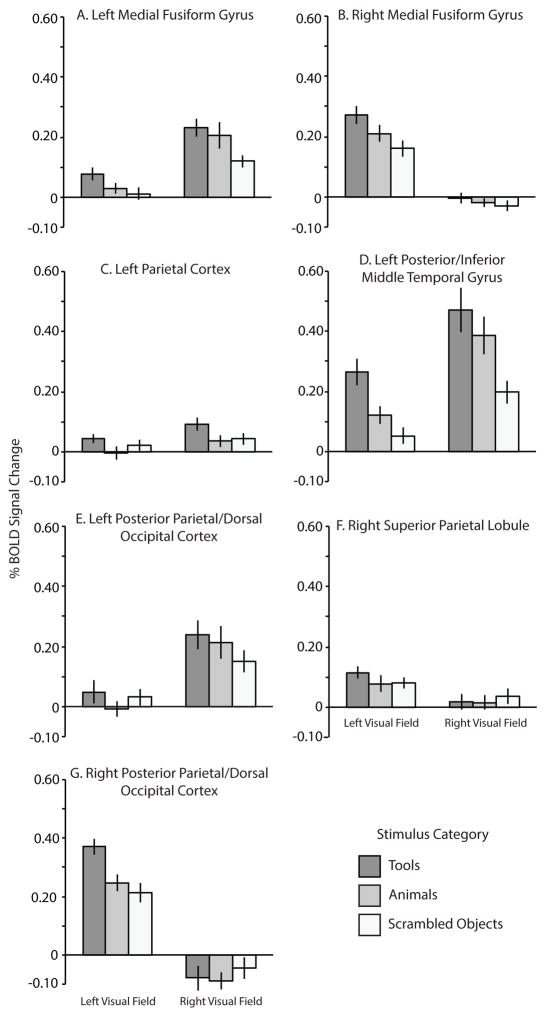

ROI Analysis

As a final test, we carried out an ROI analysis testing for an interaction between ‘Category’ and ‘Visual Field Location’ using data from the lateralized viewing experiment (Experiment 2, intact stimuli only). This analysis was carried out in ROIs that were independently defined with the category-localizer experiment (Experiment 1). BOLD contrast values were extracted from the lateralized viewing experiment for all of the independently defined ROIs (see Figure 4). As expected, there was a significant interaction between Visual Field Location and Category in only one ROI: the right posterior parietal/dorsal occipital cortex (F(1, 15) = 5.60, p < .05; see Figure 4G). BOLD contrast in that region during the lateralized viewing experiment was maximal for tools presented in the left (i.e., contralateral) visual field. The planned contrast of (Left Tool − Left Animal) > (Right Tool − Right Animal) was significant (t(15) = 2.37, p < .05). There was no interaction between Visual Field Location and Category in any of the other regions identified by the independent functional localizer (all p > .17).
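With one numerator degree of freedom, the repeated measures F for a 2 × 2 interaction equals the square of a one-sample t on each participant's difference of differences, (LT − LA) − (RT − RA). This is consistent with the values reported above: 2.37² ≈ 5.62, which matches F(1, 15) = 5.60 up to rounding of t. The sketch below uses hypothetical toy values, not data from this study, and a hypothetical function name.

```python
import math

import numpy as np


def interaction_t(lt, la, rt, ra):
    """One-sample t test of per-participant (LT - LA) - (RT - RA) against zero.

    Returns t, its degrees of freedom, and the equivalent F(1, df) = t ** 2.
    """
    d = (np.asarray(lt, float) - np.asarray(la, float)) - (
        np.asarray(rt, float) - np.asarray(ra, float))
    n = d.size
    t = d.mean() / (d.std(ddof=1) / math.sqrt(n))
    return t, n - 1, t ** 2


# Hypothetical percent-signal-change values for three participants.
t, df, F = interaction_t(lt=[1.0, 1.2, 0.9], la=[0.5, 0.6, 0.4],
                         rt=[0.8, 0.7, 0.9], ra=[0.6, 0.5, 0.6])

# Reported values: t(15) = 2.37 squares to about 5.62, i.e., F(1, 15) = 5.60 up to rounding.
print(round(2.37 ** 2, 2))
```

A positive t here corresponds to a stronger tool preference for left visual field presentation, the direction of the planned contrast.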

Figure 4.

Percent Signal Change (BOLD) for the functionally defined ROIs as a function of stimulus category and visual field location. BOLD contrast from the scrambled tool and animal miniblocks was averaged into one scrambled condition and is included for reference.

Discussion

The goal of the current investigation was to advance our understanding of how three fundamental properties of high-level vision interact: category preferences, contralateral visual processing, and the distinction between the dorsal and ventral visual pathways. We reasoned that tool-preferring regions of parietal cortex whose inputs have been filtered through object recognition processes in ventral temporal-occipital cortex would exhibit a higher degree of tolerance to the visual field location of the stimuli. In contrast, tool-preferring regions of parietal cortex whose inputs come by way of the dorsal visual pathway (i.e., independent of processing in ventral temporal-occipital cortex) would exhibit a strong interaction between the side of presentation of the visual stimulus and any category preferences. A conjunction analysis for tool preferences that held regardless of whether the stimuli were presented in the left or right visual fields identified the left inferior parietal lobule, the left medial fusiform gyrus, and the left posterior/inferior middle temporal gyrus (Figure 2). By comparison, a whole-brain repeated measures ANOVA testing for category preferences that were modulated by the side of presentation only for intact stimuli identified the right dorsal occipital cortex bordering on posterior parietal cortex. In a final test, we found that of all of the regions identified as exhibiting tool preferences using an independent functional localizer, the only region that showed a significant interaction between category and side of presentation was the right posterior parietal/dorsal occipital cortex.

There is a long history of lesion work showing that limb apraxia, a neuropsychological deficit in using objects correctly according to their function, is associated with lesions to the left inferior parietal lobule (see, e.g., Ochipa, Rothi, and Heilman, 1989; Buxbaum, Veramonti, and Schwartz, 2000; Mahon et al., 2007; Negri, Rumiati, Zadini, Ukmar, Mahon, and Caramazza, 2007; Garcea, Dombovy, and Mahon, 2013; for reviews, see Rothi, Ochipa, and Heilman, 1991; Cubelli, Marchetti, Boscolo, and Della Sala, 2000; Johnson-Frey, 2004; Mahon and Caramazza, 2005; Goldenberg, 2009; Binkofski and Buxbaum, 2013; Osiurak, 2014). On the basis of those patient data and additional neuroimaging findings (Kellenbach, Brett, & Patterson, 2003; Rumiati et al., 2004; Boronat, Buxbaum, Coslett, Tang, Saffran, et al., 2005; Mahon et al., 2007; Canessa, Borgo, Cappa, Perani, Falini, et al., 2008; Garcea and Mahon, 2014; Chen, Garcea, & Mahon, in press), it has been suggested that complex object-associated manipulation knowledge is represented in the left inferior parietal lobule. Our findings and interpretation are in line with that proposal, as complex object manipulation knowledge can only be accessed after the identity of the object has been accessed. In other words, the knowledge that a hammer is manipulated with a pounding motion presupposes that the object has been identified as a hammer, and possibly also that the function of the object has been accessed (for discussion, see Binkofski and Buxbaum, 2013; Mahon et al., 2013; Almeida et al., 2013; Bruffaerts et al., 2014; Gallivan et al., 2014; Gallivan, Johnsrude, & Flanagan, 2015; Garcea & Mahon, 2014). It is difficult to envision how those types of information could be extracted bottom-up from the volumetric properties of the object, which is the information that the dorsal visual pathway has available.

The conclusion that tool preferences in the left inferior parietal lobule are contingent on analysis of the visual input by the ventral visual pathway is not incompatible with the view that inferior parietal BOLD responses likely reflect an aggregation of processing that occurs at varying time scales and across multiple brain regions. For instance, Bar, Kassam, Ghuman, Boshyan, Schmid, et al. (2006) found that object recognition-associated responses in orbitofrontal cortex preceded activity in ventral temporal cortex (see also Fintzi and Mahon, 2014). On the basis of those and other findings, Bar and colleagues argued that prefrontal processing of object information provides a first-pass analysis of the visual input that can then be used to bias slower, more detailed visual processing in the ventral stream. However, such top-down facilitation does not change the conclusion that the retrieval of object-associated manipulation knowledge is contingent upon the retrieval of object identity via the ventral visual pathway. This contingency could explain why responses in the left inferior parietal lobule are robust to changes in the visual field location of the stimuli. It is also consistent with observations that psychophysical manipulations of stimuli that bias processing away from the dorsal visual pathway result in tool preferences that are restricted to the left inferior parietal lobule (Almeida et al., 2013; Mahon et al., 2013).

It is also important to note that our findings are not incompatible with the fact that higher-order object-responsive areas in the ventral stream exhibit robust modulation by the visual field location of stimuli (Kravitz, Kriegeskorte, and Baker, 2010; Levy, Hassan, Avidan, Hendler, and Malach, 2001; Orban, Zhu, and Vanduffel, 2014). For instance, we observed a clear and strong contralateral bias in the strength of neural activity induced by lateralized stimuli throughout the ventral visual pathway (see Figure 4). However, there was no statistical interaction between visual field and category in the medial fusiform gyri (Figure 3), suggesting that tool preferences in the fusiform gyri were not detectably modulated by visual hemifield location (see also Figure 2). The proposal that representations of objects that result from processing in the ventral visual pathway are tolerant to changes in the visual field location of the stimulus is not incompatible with the view that some representations in the ventral stream may be category- and visual-field specific (e.g., see Kravitz, Vinson, and Baker, 2008; Kravitz, Peng, and Baker, 2011).

A second aspect of our findings worth noting is that tool preferences in dorsal occipital/posterior parietal regions were significantly modulated by visual field location only in the right hemisphere. The lack of an interaction in the left hemisphere is notable given that there were robust responses for tools in dorsal occipital regions of that hemisphere when contrasting the BOLD signal for contralaterally presented tools with all other conditions (see Supplemental Figure 1). It remains an open question for future research how tool representations in posterior dorsal structures may be influenced by handedness, language dominance, and the interaction of those factors during visual object recognition of tools (see, e.g., Vingerhoets, 2014).

More generally, our results are consistent with the broader theoretical framework that the left inferior parietal lobule (left supramarginal gyrus, anterior intraparietal sulcus) integrates complex object-associated manipulation knowledge with volumetric information relevant for shaping the hand when grasping objects. While the retrieval of complex object-associated manipulation knowledge is contingent on the computation of object identity by the ventral visual pathway (e.g., see Gallivan et al., 2014; Cant and Goodale, 2007), hand shaping for grasping can plausibly be driven bottom-up by volumetric information communicated by the dorsal stream. For instance, Culham and colleagues (2003) found that reaching actions maximally activate posterior/superior parietal regions, in the vicinity of the dorsal occipital region we identified herein (i.e., in the vicinity of V6/V6A; see also Fang & He, 2005; Pitzalis et al., 2006; Cavina-Pratesi, Goodale, & Culham, 2007; Gallivan, Cavina-Pratesi, & Culham, 2009; Konen, Mruczek, Montoya, & Kastner, 2013; Rossit, McAdam, McLean, Goodale, and Culham, 2013); in contrast, reach-to-grasp actions maximally activate the anterior intraparietal sulcus (for patient evidence, see Binkofski, Dohle, Posse, Stephan, et al., 1998). It is important to underline, however, that object grasping in the service of object manipulation presupposes a functionally appropriate grasp. Thus, while basic grasp points may be derivable bottom-up on the basis of information propagated through the dorsal stream (e.g., Goodale et al., 1991), functionally appropriate grasps likely require inputs from computations that are supported by the ventral visual pathway (Carey, Harvey, & Milner, 1996; Valyear & Culham, 2010). Our findings suggest that the left inferior parietal lobule may be at least one of the brain regions that supports the integration of information communicated by the ventral visual pathway with information communicated via the dorsal visual pathway.

Supplementary Material

Acknowledgments

Preparation of this manuscript was supported by NIH grants R01 NS089069 to BZM, by a Foundation for Science and Technology of Portugal Project Grant PTDC/MHC-PCN/3575/2012 and programa COMPETE to JA, and by a University of Rochester Center for Visual Science pre-doctoral training fellowship (NIH training grant 5T32EY007125-24) to FEG. SK was supported by a research initiation grant from the Foundation for Science and Technology of Portugal Project Grant PTDC/MHC-PCN/3575/2012. The authors are grateful to Melvyn Goodale for initial discussion of the ideas behind the predictions that were tested herein.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Allison T, McCarthy G, Nobre A, Puce A, Belger A. Human extrastriate visual cortex and the perception of faces, words, numbers, and colors. Cerebral Cortex. 1994;4(5):544–554. doi: 10.1093/cercor/4.5.544.

- Almeida J, Fintzi AR, Mahon BZ. Tool manipulation knowledge is retrieved by way of the ventral visual object processing pathway. Cortex. 2013;49:2334–2344. doi: 10.1016/j.cortex.2013.05.004.

- Bar M, Kassam KS, Ghuman AS, Boshyan J, Schmid AM, et al. Top-down facilitation of visual recognition. Proceedings of the National Academy of Sciences of the United States of America. 2006;103(2):449–454. doi: 10.1073/pnas.0507062103.

- Binkofski F, Buxbaum LJ. Two action systems in the human brain. Brain and Language. 2013;127(2):222–229. doi: 10.1016/j.bandl.2012.07.007.

- Binkofski F, Dohle C, Posse S, Stephan KM, et al. Human anterior intraparietal area subserves prehension. A combined lesion and functional MRI activation study. Neurology. 1998;50:1253–1259. doi: 10.1212/wnl.50.5.1253.

- Boronat CB, Buxbaum LJ, Coslett HB, Tang K, Saffran EM, Kimberg DY, Detre JA. Distinctions between manipulation and function knowledge of objects: evidence from functional magnetic resonance imaging. Cognitive Brain Research. 2005;23(2):361–373. doi: 10.1016/j.cogbrainres.2004.11.001.

- Bruffaerts R, De Weer A, De Grauwe S, Thys M, Dries E, et al. Noun and knowledge retrieval for biological and non-biological entities following right occipitotemporal lesions. Neuropsychologia. 2014;62:163–174. doi: 10.1016/j.neuropsychologia.2014.07.021.

- Buxbaum LJ, Veramonti T, Schwartz MF. Function and manipulation tool knowledge in apraxia: Knowing “what for” but not “how”. Neurocase. 2000;6:83–97.

- Canessa N, Borgo F, Cappa SF, Perani D, Falini A, Buccino G, … Shallice T. The different neural correlates of action and functional knowledge in semantic memory: an FMRI study. Cerebral Cortex. 2008;18(4):740–751. doi: 10.1093/cercor/bhm110.

- Cant JS, Goodale MA. Attention to form or surface properties modulates different regions of human occipitotemporal cortex. Cerebral Cortex. 2007;17:713–731. doi: 10.1093/cercor/bhk022.

- Carey DP, Harvey M, Milner AD. Visuomotor sensitivity for shape and orientation in a patient with visual form agnosia. Neuropsychologia. 1996;34(5):329–337. doi: 10.1016/0028-3932(95)00169-7.

- Cavina-Pratesi C, Goodale MA, Culham JC. FMRI reveals a dissociation between grasping and perceiving the size of real 3D objects. PLoS ONE. 2007;2:1–14. doi: 10.1371/journal.pone.0000424.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nature Neuroscience. 1999;2:913–919. doi: 10.1038/13217.

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. NeuroImage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Chen Q, Garcea FE, Mahon BZ. The representation of object-directed action and function knowledge in the human brain. Cerebral Cortex. In press. doi: 10.1093/cercor/bhu328.

- Cubelli R, Marchetti C, Boscolo G, Della Sala S. Cognition in action: Testing a model of limb apraxia. Brain and Cognition. 2000;44:144–165. doi: 10.1006/brcg.2000.1226.

- Culham JC, Danckert SL, DeSouza JFX, Gati JS, et al. Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Experimental Brain Research. 2003;153:180–189. doi: 10.1007/s00221-003-1591-5.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293(5539):2470–2473. doi: 10.1126/science.1063414.

- Epstein R, Harris A, Stanley D, Kanwisher N. The parahippocampal place area: recognition, navigation, or encoding? Neuron. 1999;23(1):115–125. doi: 10.1016/s0896-6273(00)80758-8.

- Fang F, He S. Cortical responses to invisible objects in the human dorsal and ventral pathways. Nature Neuroscience. 2005;8:1380–1385. doi: 10.1038/nn1537.

- Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate cerebral cortex. Cerebral Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a.

- Fintzi AR, Mahon BZ. A bimodal tuning curve for spatial frequency across left and right human orbital frontal cortex during object recognition. Cerebral Cortex. 2014;24:1311–1318. doi: 10.1093/cercor/bhs419.

- Garcea FE, Dombovy M, Mahon BZ. Preserved tool knowledge in the context of impaired action knowledge: Implications for models of semantic memory. Frontiers in Human Neuroscience. 2013;7:1–18. doi: 10.3389/fnhum.2013.00120.

- Garcea FE, Mahon BZ. Parcellation of left parietal tool representations by functional connectivity. Neuropsychologia. 2014;60:131–143. doi: 10.1016/j.neuropsychologia.2014.05.018.

- Garcea FE, Almeida J, Mahon BZ. A right visual field advantage for visual recognition of manipulable objects. Cognitive, Affective, and Behavioral Neuroscience. 2012;12:813–825. doi: 10.3758/s13415-012-0106-x.

- Gallivan JP, Cavina-Pratesi C, Culham JC. Is that within reach? fMRI reveals that the human superior parieto-occipital cortex encodes objects reachable by the hand. The Journal of Neuroscience. 2009;29(14):4381–4391. doi: 10.1523/JNEUROSCI.0377-09.2009.

- Gallivan JP, Cant JS, Goodale MA, Flanagan JR. Representation of object weight in human ventral visual cortex. Current Biology. 2014;24:1–8. doi: 10.1016/j.cub.2014.06.046.

- Gallivan JP, Johnsrude IS, Flanagan JR. Planning ahead: Object-directed sequential actions decoded from human frontoparietal and occipitotemporal networks. Cerebral Cortex. 2016;26:708–730. doi: 10.1093/cercor/bhu302.

- Goldenberg G. Apraxia and the parietal lobes. Neuropsychologia. 2009;47:1449–1459. doi: 10.1016/j.neuropsychologia.2008.07.014.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends in Neurosciences. 1992;15(1):20–25. doi: 10.1016/0166-2236(92)90344-8.

- Goodale MA, Milner AD, Jakobson LS, Carey DP. A neurological dissociation between perceiving objects and grasping them. Nature. 1991;349(6305):154–156. doi: 10.1038/349154a0.

- Grill-Spector K, Malach R. The human visual cortex. Annual Review of Neuroscience. 2004;27:649–677. doi: 10.1146/annurev.neuro.27.070203.144220.

- Handy TC, Grafton ST, Shroff NM, Ketay S, Gazzaniga MS. Graspable objects grab attention when the potential for action is recognized. Nature Neuroscience. 2003;6(4):421–427. doi: 10.1038/nn1031.

- Ishai A, Ungerleider LG, Martin A, Schouten JL, Haxby JV. Distributed representation of objects in the human ventral visual pathway. Proceedings of the National Academy of Sciences of the United States of America. 1999;96(16):9379–9384. doi: 10.1073/pnas.96.16.9379.

- Johnson-Frey S. The neural bases of complex tool use in humans. Trends in Cognitive Sciences. 2004;8:71–78. doi: 10.1016/j.tics.2003.12.002.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience. 1997;17(11):4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kellenbach M, Brett M, Patterson K. Actions speak louder than functions: the importance of manipulability and action in tool representation. Journal of Cognitive Neuroscience. 2003;15(1):30–46. doi: 10.1162/089892903321107800.

- Konen CS, Mruczek REB, Montoya JL, Kastner S. Functional organization of human posterior parietal cortex: grasping- and reaching-related activations relative to topographically organized cortex. Journal of Neurophysiology. 2013;109:2897–2908. doi: 10.1152/jn.00657.2012.

- Kravitz DJ, Vinson LD, Baker CI. How position dependent is visual object recognition? Trends in Cognitive Sciences. 2008;12(3):114–122. doi: 10.1016/j.tics.2007.12.006.

- Kravitz DJ, Saleem KS, Baker CI, Mishkin M. A new neural framework for visuospatial processing. Nature Reviews Neuroscience. 2011;12(4):217–230. doi: 10.1038/nrn3008.

- Kravitz DJ, Peng CS, Baker CI. Real-world scene representations in high-level visual cortex: it’s the spaces more than the places. The Journal of Neuroscience. 2011;31(20):7322–7333. doi: 10.1523/JNEUROSCI.4588-10.2011.

- Kravitz DJ, Kriegeskorte N, Baker CI. High-level visual object representations are constrained by position. Cerebral Cortex. 2010;20(12):2916–2925. doi: 10.1093/cercor/bhq042.

- Levy I, Hasson U, Avidan G, Hendler T, Malach R. Center–periphery organization of human object areas. Nature Neuroscience. 2001;4(5):533–539. doi: 10.1038/87490.

- Lewis J. Cortical networks related to human use of tools. The Neuroscientist. 2006;12:211–231. doi: 10.1177/1073858406288327.

- Liepmann H. The left hemisphere and action. 1905. (Translation from Münch. Med. Wschr. 48–49.) In: Translations from Liepmann’s essays on apraxia. Research Bulletin Vol. 506. London, Ont.: Department of Psychology, University of Western Ontario; 1980.

- Mahon BZ, Caramazza A. The orchestration of the sensory-motor systems: Clues from neuropsychology. Cognitive Neuropsychology. 2005;22:480–494. doi: 10.1080/02643290442000446.

- Mahon BZ, Milleville S, Negri GAL, Rumiati RI, et al. Action-related properties of objects shape object representations in the ventral stream. Neuron. 2007;55:507–520. doi: 10.1016/j.neuron.2007.07.011.

- Mahon BZ, Kumar N, Almeida J. Spatial frequency tuning reveals interactions between the dorsal and ventral visual systems. Journal of Cognitive Neuroscience. 2013;25:862–871. doi: 10.1162/jocn_a_00370.

- Martin A. The representation of object concepts in the brain. Annual Review of Psychology. 2007;58:25–45. doi: 10.1146/annurev.psych.57.102904.190143.

- Martin A. Circuits in mind: The neural foundations for object concepts. In: Gazzaniga MS, editor. The Cognitive Neurosciences. 4th ed. Cambridge, MA: MIT Press; 2009. pp. 1031–1046.

- Milner AD, Goodale MA. Two visual systems re-viewed. Neuropsychologia. 2008;46(3):774–785. doi: 10.1016/j.neuropsychologia.2007.10.005.

- Negri GAL, Rumiati RI, Zadini A, Ukmar M, Mahon BZ, Caramazza A. What is the role of motor simulation in action and object recognition? Evidence from apraxia. Cognitive Neuropsychology. 2007;24:795–816. doi: 10.1080/02643290701707412.

- Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. NeuroImage. 2004;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- Noppeney U, Price CJ, Penny WD, Friston KJ. Two distinct neural mechanisms for category-selective responses. Cerebral Cortex. 2006;16:437–445. doi: 10.1093/cercor/bhi123.

- Ochipa C, Rothi LJG, Heilman KM. Ideational apraxia: A deficit in tool selection and use. Annals of Neurology. 1989;25:190–193. doi: 10.1002/ana.410250214.

- Op de Beeck HP, Haushofer J, Kanwisher NG. Interpreting fMRI data: maps, modules and dimensions. Nature Reviews Neuroscience. 2008;9(2):123–135. doi: 10.1038/nrn2314.

- Orban GA, Zhu Q, Vanduffel W. The transition in the ventral stream from feature to real-world entity representations. Frontiers in Psychology. 2014;5:695. doi: 10.3389/fpsyg.2014.00695.

- Osiurak F. What neuropsychology tells us about human tool use? The four constraints theory (4CT): mechanics, space, time, and effort. Neuropsychology Review. 2014;24(2):88–115. doi: 10.1007/s11065-014-9260-y.

- Peelen MV, Downing PE. The neural basis of visual body perception. Nature Reviews Neuroscience. 2007;8(8):636–648. doi: 10.1038/nrn2195.

- Peeters RR, Rizzolatti G, Orban GA. Functional properties of the left parietal tool use region. NeuroImage. 2013;78:83–93. doi: 10.1016/j.neuroimage.2013.04.023.

- Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. 1997;10(4):437–442.

- Pitzalis S, Galletti C, Huang RS, Patria F, Committeri G, Galati G, et al. Wide-field retinotopy defines human cortical visual area V6. The Journal of Neuroscience. 2006;26:7962–7973. doi: 10.1523/JNEUROSCI.0178-06.2006.

- Rizzolatti G, Matelli M. Two different streams form the dorsal visual system: anatomy and functions. Experimental Brain Research. 2003;153:146–157. doi: 10.1007/s00221-003-1588-0.

- Rossit S, McAdam T, McLean DA, Goodale MA, Culham JC. fMRI reveals a lower visual field preference for hand actions in human superior parieto-occipital cortex (SPOC) and precuneus. Cortex. 2013;49:2525–2541. doi: 10.1016/j.cortex.2012.12.014.

- Rothi LJG, Ochipa C, Heilman KM. A cognitive neuropsychological model of limb praxis. Cognitive Neuropsychology. 1991;8:443–458.

- Rumiati RI, Weiss PH, Shallice T, Ottoboni G, Noth J, Zilles K, Fink GR. Neural basis of pantomiming the use of visually presented objects. NeuroImage. 2004;21(4):1224–1231. doi: 10.1016/j.neuroimage.2003.11.017.

- Rushworth MFS, Behrens TEJ, Johansen-Berg H. Connection patterns distinguish 3 regions of human parietal cortex. Cerebral Cortex. 2006;16:1418–1430. doi: 10.1093/cercor/bhj079.

- Schwarzbach J. A simple framework (ASF) for behavioral and neuroimaging experiments based on psychophysics toolbox for MATLAB. Behavior Research Methods. 2011;43(4):1194–1201. doi: 10.3758/s13428-011-0106-8.

- Stevens WD, Tessler MH, Peng CS, Martin A. Functional connectivity constrains the category-related organization of human ventral occipitotemporal cortex. Human Brain Mapping. 2015;36(6):2187–2206. doi: 10.1002/hbm.22764.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme; 1988.

- Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of visual behavior. Cambridge: MIT Press; 1982. pp. 549–586.

- Valyear KF, Culham JC. Observing learned object-specific functional grasps preferentially activates the ventral stream. Journal of Cognitive Neuroscience. 2010;22(5):970–984. doi: 10.1162/jocn.2009.21256.

- Vingerhoets G. Praxis, language, and handedness: A tricky triad. Cortex. 2014;57:294–296. doi: 10.1016/j.cortex.2014.01.019.
