Abstract

Three experiments explored the impact of different reinforcer rates for alternative behavior (DRA) on the suppression and post-DRA relapse of target behavior, and the persistence of alternative behavior. All experiments arranged baseline, intervention with extinction of target behavior concurrently with DRA, and post-treatment tests of resurgence or reinstatement, in two- or three-component multiple schedules. Experiment 1, with pigeons, arranged high or low baseline reinforcer rates; both rich and lean DRA schedules reduced target behavior to low levels. When DRA was discontinued, the magnitude of relapse depended on both baseline reinforcer rate and the rate of DRA. Experiment 2, with children exhibiting problem behaviors, arranged an intermediate baseline reinforcer rate and rich or lean signaled DRA. During treatment, both rich and lean DRA rapidly reduced problem behavior to low levels, but post-treatment relapse was generally greater in the DRA-rich than the DRA-lean component. Experiment 3, with pigeons, repeated the low-baseline condition of Experiment 1 with signaled DRA as in Experiment 2. Target behavior decreased to intermediate levels in both DRA-rich and DRA-lean components. Relapse, when it occurred, was directly related to DRA reinforcer rate as in Experiment 2. The post-treatment persistence of alternative behavior was greater in the DRA-rich component in Experiment 1, whereas it was the same or greater in the signaled-DRA-lean component in Experiments 2 and 3. Thus, infrequent signaled DRA may be optimal for effective clinical treatment.

Keywords: alternative reinforcement, signaled DRA, persistence, relapse, children, pigeons

Treatments of severe problem behavior often arrange differential reinforcement of alternative, socially desirable behavior (DRA). A frequently implemented and highly successful version of DRA is functional communication training (FCT), whereby a patient can gain access to reinforcers that have been identified as maintaining problem behavior by requesting them directly (manding), without engaging in the problem behavior itself. For example, if a functional analysis determines that destructive behavior is maintained by social consequences that follow its occurrence (e.g., attention, perhaps in the form of reprimands), FCT provides a patient with a socially acceptable way to obtain attention that encourages further prosocial behavior. FCT can be especially effective if arranged concurrently with extinction of the problem behavior itself (Hagopian, Fisher, Sullivan, Acquisto, & LeBlanc, 1998; Shirley, Iwata, Kahng, Mazaleski, & Lerman, 1997).

Paradoxically, alternative reinforcers obtained by functional communication responses (FCR) can also have countertherapeutic effects. When those alternative reinforcers are presented within the treatment context, they may summate with the reinforcers that maintained problem behavior before treatment was introduced and thereby increase its future resistance to change (e.g., Mace et al., 2010). Moreover, when treatment is discontinued, interrupted, or changed in some way, problem behavior is likely to relapse. Here we consider two forms of relapse: resurgence of problem behavior when alternative reinforcement is discontinued, and reinstatement when reinforcers that had been identified earlier as maintaining problem behavior are reintroduced, either independent of or dependent upon that behavior (Bouton, Winterbauer, & Todd, 2012; Podlesnik & Shahan, 2009).

The effects of alternative reinforcers on persistence and resurgence of problem behavior are predicted by an algebraic model proposed by Shahan and Sweeney (2011) based on behavioral momentum theory (BMT; Nevin & Grace, 2000; see also Nevin & Shahan, 2011, for explanation and predictive equations). In ordinal terms, their resurgence model makes two explicit predictions. First, more-frequent DRA reinforcers will reduce problem behavior more rapidly than will less-frequent DRA reinforcers; and second, when DRA reinforcers are no longer provided, either inadvertently or because treatment ends, resurgence of problem behavior will be greater after more-frequent than less-frequent DRA reinforcers. Ordinally similar predictions hold for reinstatement by occasional presentation of reinforcers for problem behavior.
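The two ordinal predictions can be illustrated numerically. The sketch below assumes the resurgence equation takes the form in which it is commonly stated for this model, B_t/B_0 = 10^(-t(kR_a + c + dr)/(r + R_a)^b), and should be checked against Shahan and Sweeney (2011) before any serious use. The function name, the parameter values, and the choice of session numbers are hypothetical placeholders chosen only for illustration; they are not fitted values from any experiment.

```python
def predicted_proportion_of_baseline(t, r, ra_context, ra_current,
                                     k=0.05, c=1.0, d=0.001, b=0.5):
    """Predicted target response rate at time t as a proportion of baseline.

    Assumed form: B_t / B_0 = 10 ** (-t * (k*Ra + c + d*r) / (r + Ra) ** b)

    t          -- sessions since extinction of target behavior began
    r          -- baseline reinforcer rate for target behavior (per hour)
    ra_context -- alternative reinforcer rate experienced in the context
                  (stays in the denominator after DRA is removed)
    ra_current -- alternative reinforcer rate currently delivered
                  (0 once DRA is discontinued)
    k, c, d, b -- placeholder parameter values, NOT fitted estimates
    """
    disruption = k * ra_current + c + d * r
    return 10 ** (-t * disruption / (r + ra_context) ** b)


# Hypothetical scenario: VI 30-s baseline (120 reinforcers/hr), with rich
# (120/hr) or lean (30/hr) DRA; session 10 is the last treatment session
# and session 11 is the first session without DRA.
BASELINE = 120.0
rich_treatment = predicted_proportion_of_baseline(10, BASELINE, 120.0, 120.0)
lean_treatment = predicted_proportion_of_baseline(10, BASELINE, 30.0, 30.0)

rich_resurgence = predicted_proportion_of_baseline(11, BASELINE, 120.0, 0.0) - rich_treatment
lean_resurgence = predicted_proportion_of_baseline(11, BASELINE, 30.0, 0.0) - lean_treatment
```

With these placeholder values, the sketch yields greater suppression during treatment and a larger increase after DRA removal for the richer alternative schedule, which matches the two ordinal predictions described above.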

An equally important goal of therapy is to replace problem behavior with desirable alternative behavior that persists in the face of various challenges such as distraction or nonreinforcement outside the treatment setting. Many studies have demonstrated that resistance to change is an increasing function of reinforcer rate, so high rates of DRA reinforcement may be clinically useful with respect to alternative behavior despite the predicted increase in magnitude of relapse of problem behavior. Thus, according to these extensions of BMT, there is a tradeoff between effectiveness of treatment and magnitude of relapse.

Experiment 1 used pigeons as subjects to examine the effects of both the baseline reinforcer rate maintaining analog problem behavior and the reinforcer rate for alternative behavior on treatment effectiveness, resurgence, and reinstatement in free-operant multiple schedules; the procedure is similar to many previous studies that have arranged DRA for free-operant alternative behavior (e.g., Bouton et al., 2012; Leitenberg, Rawson, & Mulick, 1975; Podlesnik & Shahan, 2009). We employed different rates of alternative reinforcement, both greater than and smaller than that for problem behavior, because in clinical applications the historical rate of reinforcement for problem behavior may be unknown.

Experiment 2 evaluated the effects of different reinforcer rates within a multiple-schedule arrangement for functional communication responses (FCR) in children exhibiting severe problem behavior. The procedure differed from Experiment 1 in that opportunities to obtain alternative reinforcers were explicitly signaled; the rationale is explained below.

Because the results in one condition of Experiment 1 differed substantially from prediction, and from the results of Experiment 2, Experiment 3 repeated that condition of Experiment 1 with signaled alternative reinforcers—in effect, a reverse translation of the procedure employed in Experiment 2, with pigeons as subjects.

Experiment 1

Pigeons pecked keys in a three-component multiple schedule with components signaled by different key-light colors. In Phase 1, target responding produced food reinforcement at either a high rate or low rate in all components, depending on the condition. Next, during treatment (Phase 2), a DRA-based intervention for target responding was introduced in two components while target responding in the third component remained untreated. In the DRA components, food for target responding was suspended, an alternative-response key was illuminated, and pecks to this key produced food reinforcement at either a high rate in one component or at a low rate in the other. Reinforcement for target responding (in the untreated component) and for alternative responding (in the treatment components) was suspended in Phase 3. The purpose of this manipulation was to evaluate persistence of target responding in the untreated component and resurgence of target responding in the treatment components. Finally, in Phase 4, response-independent food was delivered in all components to examine reinstatement of target responding. Analog sensory consequences for target responding were available at all times and in all components during the experiment to simulate potential, extraneous sources of reinforcement for problem behavior in clinical settings. See Table 1 for a summary of conditions and the number of sessions arranged per condition.

Table 1.

Condition Summary for Experiment 1

| Phase | Component | High-Rate Baseline: Target | High-Rate Baseline: Alternative | Low-Rate Baseline: Target | Low-Rate Baseline: Alternative |
|---|---|---|---|---|---|
| Phase 1 (Baseline), 30 Sessions | No Treatment | VI 30 RT+VI 30 rT | - | VI 120 RT+VI 30 rT | - |
| | DRA High | VI 30 RT+VI 30 rT | - | VI 120 RT+VI 30 rT | - |
| | DRA Low | VI 30 RT+VI 30 rT | - | VI 120 RT+VI 30 rT | - |
| Phase 2 (Treatment), 30 Sessions | No Treatment | VI 30 rT | - | VI 30 rT | - |
| | DRA High | VI 30 rT | VI 30 RA | VI 30 rT | VI 30 RA |
| | DRA Low | VI 30 rT | VI 120 RA | VI 30 rT | VI 120 RA |
| Phase 3 (Extinction), 20 Sessions | No Treatment | VI 30 rT | - | VI 30 rT | - |
| | DRA High | VI 30 rT | Ext | VI 30 rT | Ext |
| | DRA Low | VI 30 rT | Ext | VI 30 rT | Ext |
| Phase 4 (Reinstatement), 10 Sessions | No Treatment | VI 30 rT+FT RT | - | VI 30 rT+FT RT | - |
| | DRA High | VI 30 rT+FT RT | Ext | VI 30 rT+FT RT | Ext |
| | DRA Low | VI 30 rT+FT RT | Ext | VI 30 rT+FT RT | Ext |

Note: Target = target response (left or right, counterbalanced), Alternative = alternative response (right or left, counterbalanced), RT = food deliveries for target responses, rT = sensory-consequence deliveries for target responses, RA = food deliveries for alternative responses, FT = fixed-time food deliveries.

Method

Subjects

Seven unsexed homing pigeons with experience responding under various schedules of positive reinforcement (see Sweeney et al., 2014) served. Pigeons were housed individually in a temperature- and humidity-controlled colony room with a 12:12 hr light/dark cycle (lights on at 7:00 AM). Each pigeon had unlimited access to water in its home cage and was maintained at 80% of its free-feeding body weight by the use of supplementary postsession feedings when necessary. Animal care and all procedures reported below were conducted in accordance with guidelines set forth by Utah State University's Institutional Animal Care and Use Committee.

Apparatus

Four Lehigh Valley Electronics operant chambers for pigeons (35 cm long, 35 cm high, and 30 cm wide) were used. Each chamber was constructed of painted aluminum and had a brushed-aluminum work panel on the front wall. Each work panel was equipped with three equally spaced response keys that could be transilluminated with various colors. A force of at least 0.1 N was required to operate the keys. A rectangular food aperture (5 cm wide by 5.5 cm tall, with its center 10 cm above the floor of the chamber) also was located on the work panel. Purina Pigeon Checkers could be collected from a solenoid-operated hopper in this aperture, and, during food deliveries, a 28-V DC bulb illuminated the aperture. A 28-V DC house light was centered 4.5 cm above the center response key and provided general illumination at all times except during blackout periods and reinforcer deliveries. A ventilation fan and a white-noise generator masked extraneous sounds at all times. The timing and recording of experimental events were controlled by Med PC software running on a PC in an adjoining control room.

Procedure

Phase 1 (baseline)

In Phase 1 (and all phases listed below), pigeons pecked keys in a three-component multiple schedule with the following specifications. Components lasted for 3 min per presentation and were separated from one another by 1-min intercomponent intervals. Blocks of components were arranged such that, in each block, all components were presented, but the order in which they were arranged within blocks was determined randomly. Sessions began with a 1-min blackout of the chamber and consisted of four 3-component blocks (36 min of session time, excluding intercomponent intervals and reinforcement time; see below). Only the target-response key (either the left or right key, counterbalanced across pigeons) was available in each component and was transilluminated either red, blue, purple, yellow, or green (counterbalanced across pigeons).
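The block structure described above can be sketched in a few lines. This is only an illustration under stated assumptions: the component labels are borrowed from Table 1, and the function and constant names are hypothetical rather than taken from the Med PC program that actually controlled sessions.

```python
import random

# Labels follow Table 1; in Phase 1 the three components differ only in key color.
COMPONENTS = ("No Treatment", "DRA High", "DRA Low")
COMPONENT_MIN = 3      # component duration (min), from the text
BLOCKS_PER_SESSION = 4  # blocks per session, from the text


def session_order(n_blocks=BLOCKS_PER_SESSION, rng=None):
    """Return the component order for one session.

    Every block contains each component exactly once, and the order
    within each block is shuffled independently.
    """
    rng = rng or random.Random()
    order = []
    for _ in range(n_blocks):
        block = list(COMPONENTS)
        rng.shuffle(block)
        order.extend(block)
    return order


order = session_order(rng=random.Random(0))
# Session time excluding intercomponent intervals and reinforcement time.
session_minutes = len(order) * COMPONENT_MIN
```

Shuffling within blocks, rather than across the whole session, guarantees that each component is presented equally often and that no component can occur more than twice in succession.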

Pecks to the target-response key in each component produced analog sensory consequences according to a variable-interval (VI) 30-s schedule (i.e., the first key peck to occur after a variable interval averaging 30 s produced these consequences as in Sweeney et al., 2014). These consequences consisted of a 1-s darkening of the chamber accompanied by flashing of the target-response key, transilluminated white, and were available in all subsequent phases of the experiment. Pecks to this key also produced 2-s hopper presentations according to separate VI schedules. During all analog sensory and food presentations noted here or below, the session timer and all VI timers stopped. If the VI schedules for both sensory consequences and food elapsed at the same time, the next peck to the target-response key produced sensory consequences first, followed by a 0.5-s delay and delivery of food.

For four pigeons, food was made available according to a VI 30-s schedule (hereafter High-Rate BL); for the other three pigeons, a VI 120-s schedule operated (Low-Rate BL). After completion of Phase 1 and all of the remaining phases of the experiment detailed below, those pigeons that responded under the High-Rate baseline condition completed the Low-Rate baseline condition (plus all subsequent phases) and vice versa.

Phase 2 (treatment)

In Phase 2, the treatment differed between multiple-schedule components. In one (No-Treatment) component, reinforcement contingencies for target responding were the same as in Phase 1. In the other two components, food for target responding was suspended, an alternative-response key (either left or right, with color counterbalanced across pigeons) was illuminated concurrently with the target-response key, and pecks to this key produced an alternative source of food reinforcement according to either a VI 30-s schedule (hereafter the DRA-Rich component) or a VI 120-s schedule (the DRA-Lean component). In both DRA components, a 3-s changeover delay (COD) was enforced such that food reinforcers for alternative responding or sensory consequences for target responding were delivered only if (1) the VI schedule for the reinforcer had elapsed and (2) at least 3 s had elapsed since the pigeon switched to pecking the key associated with the available reinforcer.
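The two COD conditions can be expressed as a small state tracker. The sketch below is a hypothetical illustration, not the control program used in the experiment; in particular, treating the first peck of a component as a changeover is an assumption made here for simplicity.

```python
class ChangeoverDelay:
    """Track key switches and decide whether a peck may be reinforced."""

    def __init__(self, cod_s=3.0):
        self.cod_s = cod_s
        self.current_key = None
        self.switch_time = None

    def peck(self, key, time_s, vi_elapsed):
        """Return True if this peck should produce its consequence.

        key        -- "target" or "alternative" (hypothetical labels)
        time_s     -- time of the peck within the component (s)
        vi_elapsed -- whether the VI schedule for this key has timed out
        """
        if key != self.current_key:
            # Assumption: a peck on a new key (including the very first
            # peck) starts the changeover delay.
            self.current_key = key
            self.switch_time = time_s
        return vi_elapsed and (time_s - self.switch_time) >= self.cod_s


cod = ChangeoverDelay()
results = [
    cod.peck("target", 0.0, False),       # VI not elapsed
    cod.peck("alternative", 1.0, True),   # just switched: blocked by COD
    cod.peck("alternative", 3.9, True),   # 2.9 s since switch: still blocked
    cod.peck("alternative", 4.0, True),   # 3.0 s since switch: reinforced
]
```

A delay of this kind prevents rapid switching between keys from being adventitiously reinforced by a consequence arranged on the other key.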

Phase 3 (extinction + resurgence test)

The stimuli arranged in Phase 3 were the same as in Phase 2, with sensory consequences remaining available for target responding. However, all food in the No-Treatment component and alternative reinforcers in the DRA components were suspended.

Phase 4 (reinstatement test)

Contingencies for responding and the stimuli arranged in Phase 4 were the same as in Phase 3. Response-independent food deliveries occurred 2 and 8 s into each component presentation (for a total of 24 food deliveries, 8 in each component).

Results and Discussion

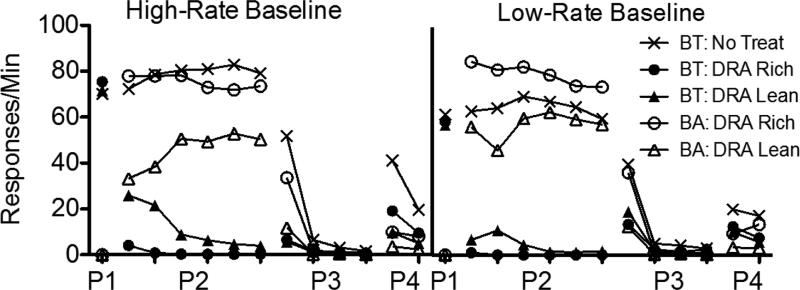

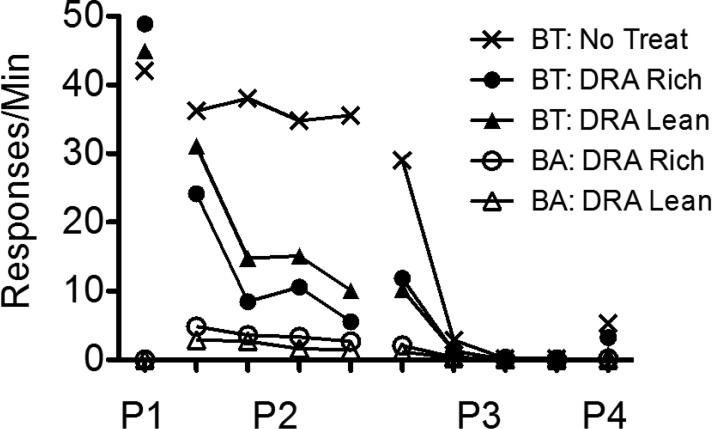

Figure 1 presents a summary of response rates across the various phases of Experiment 1, averaged across pigeons and aggregated across five-session blocks to show relevant trends in the data. The left panel shows data obtained from the High-Rate BL condition, and the right panel shows data obtained from the Low-Rate BL condition. Note that rates of responding only from the last five sessions of Phase 1 are shown. Figures A1-A2 in the Appendix present the data for individual pigeons.

Fig. 1.

Target (BT) and alternative (BA) response rates across phases (P1-P4) of Experiment 1. Data are averaged across pigeons and aggregated into five-session blocks (response rates only from the last five sessions of Phase 1 are shown). The left panel includes data from the High-Rate baseline condition, and the right panel includes data from the Low-Rate baseline condition. Figures for individual subjects are in Appendices A1 and A2.

Target-key responding occurred at roughly equal rates in the three multiple-schedule components during the terminal sessions of Phase 1 in both baseline conditions. When reinforcers for target behavior were suspended and food was made available in the DRA components in Phase 2, target-response rates in these components decreased to near-zero levels across sessions. Two factors affected the speed of these decreases in rate. First, target responding persisted to a greater degree in the High-Rate BL than the Low-Rate BL conditions. Second, target responding occurred less frequently in DRA-Rich than DRA-Lean components.

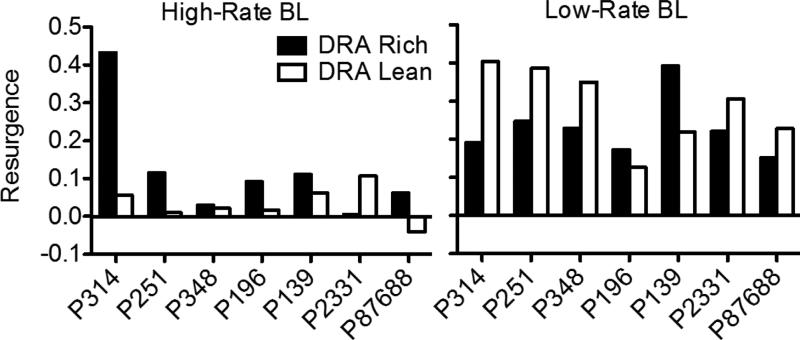

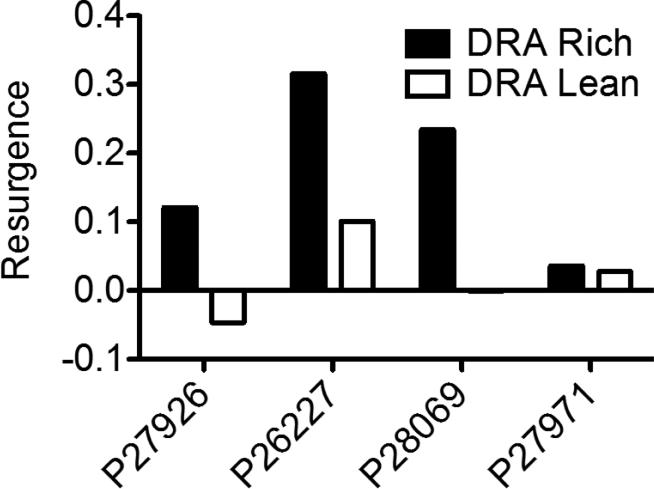

When all food reinforcement for target responding in the No-Treatment component and for alternative responding in the DRA components was suspended in Phase 3, target responding in the No-Treatment component decreased across sessions, and it decreased more slowly in High-Rate BL than Low-Rate BL conditions. In the DRA components, an increase in target responding relative to terminal Phase-2 responding (i.e., resurgence) was observed. Figure 2 presents obtained resurgence, expressed as the difference between mean target proportion-of-baseline response rates from the first five sessions of Phase 3 and the last five sessions of Phase 2, for individual pigeons (proportion of baseline was calculated by dividing response rates during sessions of Phases 2 and 3 by the mean rate of responding obtained in the last five sessions of Phase 1). Values of 0 represent no change in target response rates between phases, values greater than 0 represent resurgence of target responding, and values less than 0 represent a decrease in rate between Phases 2 and 3. In the High-Rate BL condition, target responding resurged for most pigeons in both components. Further, resurgence was greater in the DRA-Rich than in the DRA-Lean component for six of seven pigeons. In the Low-Rate BL condition, target responding resurged in both components for all pigeons. Overall, resurgence here was greater than in the High-Rate BL condition, and was greater in the DRA-Rich than in the DRA-Lean component for only two of seven pigeons.
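The relapse measure described above is a difference between two proportion-of-baseline means. A minimal sketch of that arithmetic follows; the function name is hypothetical and the response rates are made-up numbers used only to show the calculation, not data from any pigeon.

```python
from statistics import mean


def relapse_index(baseline_last5, prior_phase_last5, test_phase_first5):
    """Difference in mean proportion-of-baseline response rate.

    Each session's rate is divided by the mean rate from the last five
    baseline (Phase 1) sessions; the index is the mean of the first five
    test-phase sessions minus the mean of the last five sessions of the
    preceding phase. Values above 0 indicate relapse.
    """
    base = mean(baseline_last5)
    test = mean(rate / base for rate in test_phase_first5)
    prior = mean(rate / base for rate in prior_phase_last5)
    return test - prior


# Made-up response rates (responses per min) for illustration only.
phase1_last5 = [60, 62, 58, 61, 59]
phase2_last5 = [3, 2, 4, 3, 3]
phase3_first5 = [10, 14, 12, 13, 11]

resurgence = relapse_index(phase1_last5, phase2_last5, phase3_first5)
```

Applying the same function to the last five sessions of Phase 3 and the first five sessions of Phase 4 gives the corresponding reinstatement measure.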

Fig. 2.

Resurgence of target responding in the DRA-Rich and DRA-Lean components during both baseline conditions of Experiment 1, expressed as the difference in mean proportion-of-baseline response rates from the first five sessions of Phase 3 and the last five sessions of Phase 2. Data for all pigeons are shown. Positive values represent an increase in target responding between these conditions.

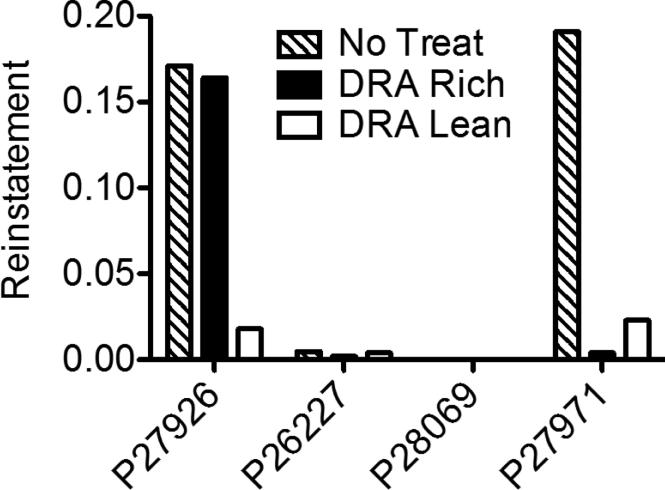

Reinstatement of target responding, expressed as the difference between mean target proportion-of-baseline response rates from the first five sessions of Phase 4 and the last five sessions of Phase 3, is shown in Figure 3 for individual pigeons. Again, values of 0 represent no change in target response rates between phases, values greater than 0 represent reinstatement of target responding, and values less than 0 represent a decrease in rate between Phases 3 and 4. In the High-Rate BL condition, six of seven pigeons exhibited greater reinstatement in the DRA-Rich than the DRA-Lean component (the difference was small for P314). In the Low-Rate BL condition, the magnitude of reinstatement was generally smaller than in the High-Rate condition and was not clearly differentiated between the DRA-Rich and DRA-Lean components. In the No-Treatment component, reinstatement in both High-Rate and Low-Rate baseline conditions was generally greater than in either DRA component, providing some evidence for the efficacy of treatment with DRA plus extinction.

Fig. 3.

Reinstatement of target responding in all components during both baseline conditions of Experiment 1, expressed as the difference in mean proportion-of-baseline response rates from the first five sessions of Phase 4 and the last five sessions of Phase 3. Data for all pigeons are shown. Positive values represent an increase in target responding between these conditions.

During Phase 3, alternative behavior was more resistant to extinction in the DRA-Rich than the DRA-Lean component during the first five-session block (see Fig. 1); the average data were essentially identical for the High-Rate BL and Low-Rate BL conditions. After the first block, alternative response rates fell to near zero in both components and conditions.

Several of these findings provide support for a momentum-based approach to understanding alternative-reinforcer effects on response persistence and relapse in DRA-based treatments. First, during Phase 2, target responding in the DRA components tended to persist to a greater degree in High-Rate BL than in Low-Rate BL conditions; similarly, in the No-Treatment component, target responding tended to persist to a greater degree and reinstate more substantially in the High-Rate BL than in the Low-Rate BL condition. Second, target-response suppression was greater in DRA-Rich than in DRA-Lean components in both baseline conditions, consistent with Shahan and Sweeney's (2011) characterization of alternative reinforcement as a source of disruption of target responding. Third, Phase-3 resurgence of target responding in the DRA components was positively related to alternative reinforcement rates in the High-Rate BL condition, also consistent with Shahan and Sweeney's model.

In the Low-Rate BL condition, however, target responding resurged to a greater degree in the DRA-Lean than in the DRA-Rich component. Moreover, resurgence, in general, was greater in the Low-Rate BL than in the High-Rate BL condition. These findings are contrary to the predictions of Shahan and Sweeney's (2011) model of resurgence based on momentum theory, and raise challenges for future research and theory.

Experiment 2

Experiment 2 translated procedures of Experiment 1 into clinical treatments and tests of resurgence and reinstatement with four children with severe behavior disorders and autism spectrum disorder (ASD). Pretreatment functional analyses showed that each participant's problem behavior was maintained, at least in part, by tangible reinforcers. In each case, the DRA treatment involved training and reinforcing communicative behavior (i.e., a mand, defined as a verbal operant that specifies its consequence) as an alternative response. Two therapists wearing different-colored teeshirts were correlated with rich and lean rates of alternative reinforcement during DRA + EXT, which was presented in a multiple schedule. So that the alternative response could be reinforced on a continuous or near-continuous schedule, the availability of alternative reinforcement was signaled with a countdown timer. Mands for alternative reinforcement after the timer sounded were reinforced with brief access to the reinforcer. Following treatment, relapse was evaluated by a test of resurgence in which the alternative response and problem behavior were placed on extinction in both schedule components, and a test of reinstatement in which baseline schedules of reinforcement were reintroduced. Some conditions and tests were replicated with individual participants, as described below in conjunction with their data.

Method

Participants and Setting

Four boys admitted to university-based programs for the treatment of severe behavior disorders participated with informed consent in Experiment 2. Jose (all participant names are pseudonyms) was a 10-year-old male diagnosed with autism spectrum disorder (ASD). He spoke in two- to five-word phrases, followed one-step instructions, and sight-read at the first grade level. Norman was an 11-year-old male diagnosed with autism. He spoke in complete sentences and could engage in conversation on multiple topics. Norman had academic skills at the third to fifth grade level and was stronger in mathematics than reading and writing, and could select among several on-line sites accessed via iPad. Jeremy was an 8-year-old male diagnosed with ASD, moderate intellectual disability (ID), attention deficit hyperactivity disorder (ADHD), and stereotypic movement disorder with self-injury. George was a 14-year-old male diagnosed with ASD, severe ID, and stereotypic movement disorder with self-injury. Neither Jeremy nor George communicated vocally; however, both participants demonstrated understanding of simple one-step directions and communicated using simple gestures and picture cards. To evaluate generality across clinical settings, therapists, and details of method, participants were studied in two clinical programs with some variations in procedural particulars dictated by institutional practices and clients’ behavior patterns.

Jose and Norman were admitted to Nova Southeastern University's (NSU) Severe Behavior Disorders Program; Jeremy and George were admitted to the Neurobehavioral Unit at the Kennedy Krieger Institute (KKI). Sessions for Jose and Norman were conducted in treatment rooms measuring 3.5 m by 4.0 m. Sessions for Jeremy and George were conducted in a room that measured approximately 2.4 m by 2.6 m (Jeremy) or 2.4 m by 3 m (George). Each room had adjacent audio-equipped observation rooms with one-way windows and contained the materials necessary to conduct sessions.

Target Behaviors, Measurement, and Interobserver Agreement

Target problem behaviors included aggression (Jose, Norman, and Jeremy), disruption (Jose, Norman, and Jeremy), and self-injurious behavior (SIB; Jose, Jeremy, and George). Aggression included one or more of the following: hitting, kicking, pushing/pulling, grabbing others, hair pulling, biting, throwing objects at therapists, spitting at therapists, head butting, scratching, and foot stomping. Disruption included one or more of the following: screaming, throwing objects, dumping objects out of containers, kicking/hitting objects, ripping objects, turning over furniture, and swiping objects from table tops. Jose's SIB consisted of head hitting (hand to head) and body hitting (hand to body). Jeremy's SIB included biting and scratching any part of his body, hitting any part of his body (including his head, face, and chin) with an open or closed fist or with an object from a distance of 15 cm or more, banging his head against surfaces, and hitting any part of his head or face with his knee. Jeremy wore a helmet with protective padding during all sessions. George's SIB included biting and scratching any part of his body, attempting to or successfully hitting or punching any part of his body (including his head and face) with his hand or an object from a distance of 15 cm or more, hitting his chin to his shoulder, and hitting any part of his head or face with his knee.

During treatment, Jose and Norman vocally manded for access to a video or iPad. For Jeremy and George, manding was defined as handing a functional communication card, depicting a generic food item for Jeremy and an iPad for George, to the therapist.

Interobserver agreement data were calculated using exact agreement for Jose and Norman (Page & Iwata, 1986) and a modified block-by-block agreement method for Jeremy and George (see Mudford, Martin, Hui, & Taylor, 2009). Mean agreement coefficients for problem behavior were 94%, 95%, 100%, and 95% for Jose, Norman, Jeremy and George, respectively. Mean agreement coefficients for prompted functional communication were 96%, 96%, 97%, and 100% for Jose, Norman, Jeremy and George, respectively. Mean agreement coefficients for unprompted functional communication were 99%, 99%, 100%, and 99% for Jose, Norman, Jeremy and George, respectively. In summary, interobserver agreement percentages for all measures of behavior ranged from 94% to 100%.
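For readers unfamiliar with these agreement indices, the sketch below shows one common way to compute them from two observers' interval-by-interval response counts. It is an illustration only: the interval size, the function names, and the sample counts are assumptions, and the specific modification of the block-by-block method used for Jeremy and George is not described here, so the standard version is shown instead.

```python
def exact_agreement(obs1, obs2):
    """Percentage of intervals in which both observers recorded
    exactly the same count."""
    if len(obs1) != len(obs2) or not obs1:
        raise ValueError("observer records must be non-empty and equal length")
    agreements = sum(a == b for a, b in zip(obs1, obs2))
    return 100.0 * agreements / len(obs1)


def block_by_block_agreement(obs1, obs2):
    """Mean of per-interval (smaller count / larger count), as a percentage.

    Intervals in which both observers agree (including 0 and 0) score 1.
    This is the standard block-by-block calculation, not the modified
    variant mentioned in the text.
    """
    if len(obs1) != len(obs2) or not obs1:
        raise ValueError("observer records must be non-empty and equal length")
    scores = [1.0 if a == b else min(a, b) / max(a, b)
              for a, b in zip(obs1, obs2)]
    return 100.0 * sum(scores) / len(scores)


# Made-up counts per interval for two observers (illustration only).
observer_a = [0, 2, 1, 0]
observer_b = [0, 1, 1, 0]

exact = exact_agreement(observer_a, observer_b)
block = block_by_block_agreement(observer_a, observer_b)
```

Exact agreement is the stricter index because any discrepancy within an interval scores zero, whereas the block-by-block method gives partial credit for near agreement.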

Procedure

Pre-study functional analysis

Before Experiment 2 began, functional analyses were conducted for all participants to identify the behavioral function of each participant's target problem behaviors. Brief functional analyses were conducted for Jose and Norman (Derby et al., 1992) and lengthier analyses were conducted for Jeremy and George (Iwata, Dorsey, Slifer, Bauman & Richman, 1994), per clinical convention at the respective treatment sites. These analyses indicated that Jose, Norman, and George's problem behaviors were maintained by access to restricted videos/iPad. Jeremy's problem behavior was maintained by access to edible reinforcers. We confirmed these functional relations for all four participants via comparison of test and control conditions in Phase 1.

Functional analysis baseline (Phase 1)

In the FA baseline, contingent access to the reinforcer that maintained problem behavior and control conditions alternated as schedule components; sessions were composed of a single schedule component. For Jose and Norman, session duration was 5 min excluding video access intervals; four to six sessions were conducted per day. For Jeremy and George, sessions were 10 min in duration and between one and seven sessions were conducted per day. Contingent access sessions were immediately preceded by free access to the video/iPad for 4 min for Jose and Norman, for 30 s for George, or 30 s access to gummy bears for Jeremy, in order to establish removal of the iPad or gummy bears as a motivating condition. Presession access was terminated by the therapist saying, “we have to put this away now” and removing the video/iPad or gummy bears from the participant, at which point session timing began. Access to the video/iPad or gummy bears was provided for occurrences of problem behavior according to a VI 60-s schedule of reinforcement with no limited hold.

Interval values for the VI schedules for Jose and Norman were generated with the Fleshler and Hoffman (1962) formula and selected randomly without replacement; however, short interreinforcement intervals were excluded because they were impractical for clinical purposes, making the schedule quasirandom. VI schedules for Jeremy and George were arranged such that all values were in 5-s increments and within ±50% of the programmed average (e.g., a VI 30-s schedule of reinforcement would have included the following intervals: 15 s, 20 s, 25 s, 30 s, 35 s, 40 s, and 45 s). For each VI schedule, three lists were generated that contained values starting from the lower bound, with the increments averaging to the programmed VI. Prior to beginning each session, the therapist pseudorandomly selected one of the three lists for the VI schedule in effect such that each list was used during a session before any list could be repeated.
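Both ways of building interval lists can be sketched briefly. The first function uses the Fleshler and Hoffman (1962) progression as it is usually written, t_n = T[1 + ln N + (N - n) ln(N - n) - (N - n + 1) ln(N - n + 1)], whose N values average to the programmed mean T. The number of intervals, the function names, and the list-rotation helper are assumptions introduced for illustration; the exclusion of short intervals used clinically is not reproduced because its cutoff is not stated.

```python
import math
import random


def fleshler_hoffman(mean_s, n):
    """Return n intervals (s), in ascending order, averaging mean_s."""
    def xlogx(x):
        return x * math.log(x) if x > 0 else 0.0  # convention: 0 * ln 0 = 0

    return [mean_s * (1 + math.log(n) + xlogx(n - i) - xlogx(n - i + 1))
            for i in range(1, n + 1)]


def bounded_intervals(mean_s, step=5):
    """Values in step-s increments within +/-50% of the programmed mean."""
    if mean_s % 2 or mean_s % step:
        raise ValueError("mean_s must be even and a multiple of step")
    low = mean_s // 2
    high = mean_s + mean_s // 2
    return list(range(low, high + 1, step))


def list_rotation(n_sessions, rng=None, n_lists=3):
    """Pick a list index per session so that every list is used once
    before any list is repeated."""
    rng = rng or random.Random()
    picks = []
    while len(picks) < n_sessions:
        cycle = list(range(n_lists))
        rng.shuffle(cycle)
        picks.extend(cycle)
    return picks[:n_sessions]


fh_values = fleshler_hoffman(60, 12)   # e.g., a VI 60-s schedule, 12 intervals
vi30_values = bounded_intervals(30)    # matches the VI 30-s example in the text
rotation = list_rotation(6, rng=random.Random(1))
```

The Fleshler-Hoffman list contains some very short intervals at its low end, which is consistent with the stated need to exclude them for clinical use, whereas the bounded list never falls below half the programmed mean.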

Control sessions for Jose, Norman, and George consisted of providing the participant continuous access to the iPad. For Jeremy, the therapist placed a gummy bear on a plate. The plate was replenished with another gummy bear as soon as Jeremy consumed the previous piece of food. For Jose and Norman, the therapist interacted with the participant by making comments on the content of the video. For Jeremy and George, the therapist provided attention in the form of a neutral statement every 30 s. No instructions were provided and no consequences were arranged for problem behavior.

There were two contingent tangible components and one control component. Contingent tangible components were correlated with two different therapists, each wearing a different color shirt that was held constant throughout the study. A third therapist conducted control sessions and wore a distinctively different shirt. In addition, for Jeremy and George, different colored poster boards were placed on the door outside the session room and on the walls inside the session room. The color of the poster board corresponded with the color of the shirt worn by each therapist. Components for Jose alternated with three or four successive control sessions followed by a two-component multiple schedule arrangement in which the two therapists alternated in strict order (see Fig. 4). The three schedule components alternated in a multiple schedule for Norman, Jeremy, and George.

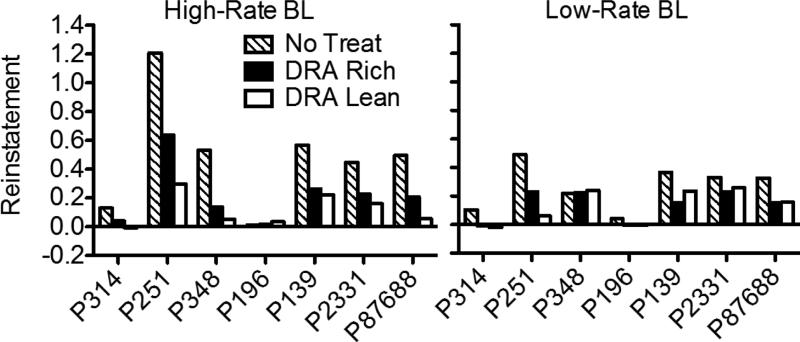

Fig. 4.

Frequency of problem behavior displayed by Jose over successive experimental sessions. See text for description of successive conditions.

DRA Treatment (Phase 2)

Problem behavior was treated with DRA that was signaled on a rich or a lean schedule (hereafter DRA-Rich or DRA-Lean). The schedule values were FI 30-s and FI 120-s for Jose, VI 30-s and VI 120-s for Jeremy and George, and VI 50-s and VI 200-s for Norman; note that rich and lean schedules differed by a factor of 4 for all participants. Norman also was required to satisfy a differential-reinforcement-of-other-behavior (DRO) contingency for the entire interreinforcement interval due to the severity of his aggression; no changeover contingencies were needed for the other participants because their rates of problem behavior were so low. The interreinforcer intervals for Jose, Jeremy, and George (30 s vs. 120 s) were borrowed from the animal model in Experiment 1. The interreinforcer intervals for Norman were chosen to allow longer access to the iPad to maintain its reinforcing value.

Immediately prior to treatment sessions, participants had 30 s to 4 min access to the video/iPad or gummy bears. For Jose and Norman, this access period was timed with an iPhone countdown timer that was held 0.5 m from the participant and within his field of vision. Sessions began when the timer alarm sounded. As the alarm sounded, the therapist said, “What's it time for?” prompting Jose to say, “Bye bye, DVD/iPad”. For Norman, therapists asked him to return the iPad. For Jeremy and George, the therapist told the participant that his preferred item (gummy bear for Jeremy and iPad for George) was no longer available and removed the item from the participant. After the reinforcer was removed, the therapist set the countdown timer to the rich or lean DRA schedule value in effect and held the timer within the participant's field of vision, except for Norman, for whom the countdown timer remained nearby but outside his field of vision. No consequences were provided for problem behavior or mands for reinforcer access.

When the alarm sounded, the therapist asked Jose and Norman, “What do you want?” Jose and Norman reliably responded with a mand for the video/iPad. This prompt was only necessary for the first two to three sessions of treatment. Afterwards, the alarm reliably occasioned the video/iPad mand. For Jeremy and George, the functional communication (FC) card was placed on a table by the participant and the therapist held out his or her hand. If the participant did not immediately hand the card to the therapist, the therapist used a three-step prompting procedure (verbal, gestural, and physical prompts) to prompt the participant to hand over the FC card. The reinforcer was delivered following both prompted and unprompted communication, but prompts were rarely required beyond the 13th DRA treatment session for Jeremy and in only 4 DRA treatment sessions for George. For Jose and Norman, the DRA-Rich and DRA-Lean schedules were correlated with red and green therapist teeshirts, respectively. For Jeremy and George, the DRA-Rich schedules were correlated with blue and green poster board and teeshirts, respectively; the DRA-Lean schedules were associated with yellow poster board and teeshirts for both Jeremy and George.

Resurgence/extinction test (Phase 3)

Procedures were identical to the preceding phase with the following exceptions. When the count-down timer alarm sounded, all reinforcers for problem and alternative behaviors were withheld. That is, mands for video/iPad access or gummy bear were placed on extinction (i.e., video/iPad or gummy bear access was not provided) and no consequences were provided for problem behavior. For Norman and Jose, vocal mands were simply ignored. For Jeremy and George, when the timer sounded, the FC card was placed on a table by the participant for 10 s then removed. The therapist did not initiate the three-step prompting procedure. If the participant handed the FC card to the therapist within the 10 s in which it was present, the therapist placed the card back on the table by the participant and did not provide access to the reinforcer. This was done to maintain consistency with how the cards were presented in Phase 2 DRA. In addition, the schedule of card availability was part of what made the contexts different during DRA. Because this was a key aspect of the stimulus conditions for DRA-Rich and DRA-Lean, the rate of presentation was maintained to keep this aspect of the context unchanged. For all participants, the countdown timers continued to be set to the DRA-Rich and DRA-Lean schedules of reinforcement used during Phase 2. However, as noted above, mands that occurred after the VI elapsed were placed on EXT.

For Jeremy, Phases 2 and 3 were repeated after the initial implementation of each. We repeated these conditions because Jeremy's medical regimen changed at the same time we introduced Phase 2. The medication change resulted in a general dampening of his behavior across the day. Thus, although we observed marked decreases in problem behavior when we introduced treatment, it was impossible for us to separate the effects of the DRA intervention from the effects of the medication—an effect that continued into the first resurgence test (sessions 51-61; see Fig. 6 below). After session 61, we suspended the analysis until Jeremy's medication regimen had been stabilized and normal activity levels resumed. At this point, it seemed experimentally prudent to reexamine the treatment effects, which we did beginning with session 62. It should be noted that although we observed an overall decrease in Jeremy's problem behavior that seemed to coincide with changes in his medication regimen, we did not (and could not) observe an overall decrease in his manding. If Jeremy did not independently mand when the FC card was presented, he was prompted and then physically guided to hand over the card. This was done to ensure differences in the obtained rates of reinforcement across the VI 30 s and VI 120 s conditions. Therefore, we would not expect to see decreases in manding that coincided with an overall decrease in his behavior as his medications were changed.

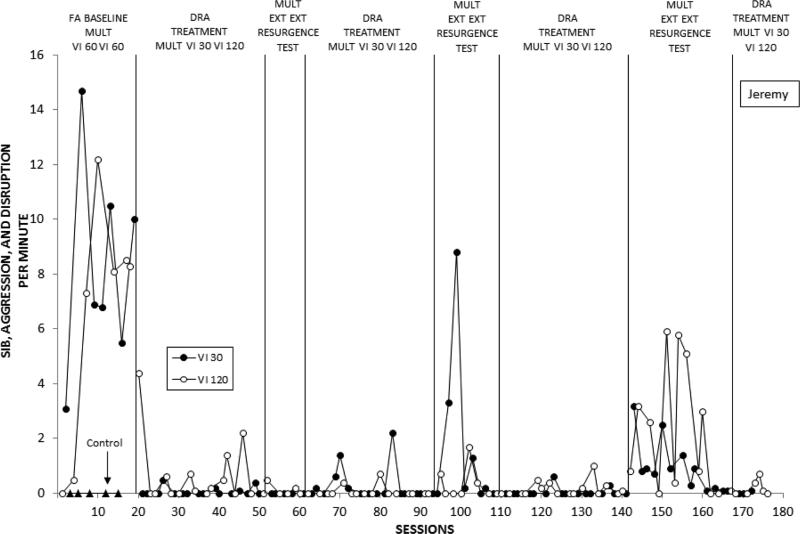

Fig. 6.

Frequency of problem behavior displayed by Jeremy over successive experimental sessions. See text for description of successive conditions.

The resurgence test was in effect for a minimum of five sessions per condition. For the third (Jeremy) and fourth (George) participants, we decided to examine whether there were any differences in the number of sessions before the behavior decreased to low rates. For Jeremy, this took more than five sessions per condition in the second and third exposures to EXT.

Repeat DRA Treatment (Phase 2R)

Jose and Jeremy were exposed again to the DRA treatment conditions. Jose experienced this phase to reestablish treatment conditions prior to his reinstatement test. For Jeremy, this phase was conducted prior to another resurgence test, followed by a final exposure to the DRA treatment to end his analysis.

Reinstatement test (Phase 4, Jose and George)

Phase 1 baseline conditions of reinforcement for problem behavior were reestablished in both multiple schedule components. This constitutes a response-dependent form of reinstatement (Podlesnik & Shahan, 2009) and represents treatment failure by resuming baseline reinforcement of problem behavior. For George, the reinstatement test followed EXT. The reinstatement test was not conducted with Jeremy because he was scheduled for discharge from the hospital and we therefore thought it prudent to end the analysis in a treatment phase.

Results and Discussion

Figure 4 presents data on Jose's combined SIB, aggression and disruption per minute by experimental phase and condition. In the baseline functional analysis (Phase 1), there were clear differences between problem behavior rates in the control versus the two test conditions in which problem behavior was reinforced by contingent video access on equal VI schedules by the red and green therapists. This further confirmed the behavioral function of Jose's problem behavior. Although problem behavior occurred more often with the therapist correlated with green, there was considerable within-component variability for both therapists. Introduction of DRA treatment (Phase 2) resulted in rapid, sustained and comparable reductions in problem behavior for both therapists despite the 4-to-1 difference in rates of alternative reinforcement during DRA. In the extinction test (Phase 3), resurgence of problem behavior was evident in extinction bursts that occurred immediately in both components. However, rates of problem behavior were consistently higher in the DRA-Rich than in the DRA-Lean component. Return to treatment with unequal reinforcer rates (Phase 2R) resulted in marked reductions in problem behavior for 12 sessions for both therapists. For the remaining 14 sessions of DRA treatment, problem behavior gradually increased for no apparent reason. Anecdotally, Jose was generally agitated for several days and did not return to a completely calm state between sessions. Despite this, problem-behavior rates were substantially lower than in the FA baseline and the extinction/resurgence test sessions. Reestablishing Phase 1 baseline conditions (Phase 4) resulted in an increase in problem behavior in both DRA components relative to all Phase 2R sessions. During this reinstatement test, rates of problem behavior were notably higher in the component correlated with rich DRA in four of the five reinstatement sessions.

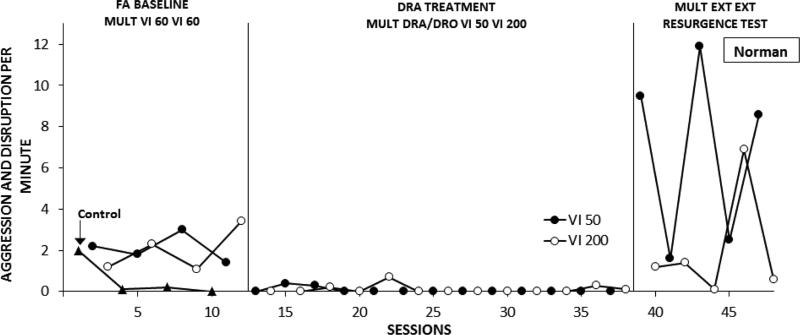

Figure 5 shows Norman's combined aggression and disruption across phases and conditions. In the FA Baseline (Phase 1), rates of problem behavior were clearly differentiated between control and the two test conditions in which it was reinforced by iPad access, while only small differences were found between the red and green teeshirt components. Introduction of treatment with combined DRA and DRO (Phase 2) resulted in an immediate, sustained reduction of problem behavior to zero or near-zero rates in both DRA-Rich and DRA-Lean components. Discontinuing reinforcement of communication in the resurgence test (Phase 3) resulted in increased rates of problem behavior in both components. Resurgence of problem behavior was substantially greater in the DRA-Rich component.

Fig. 5.

Frequency of problem behavior displayed by Norman over successive experimental sessions. See text for description of successive conditions.

Figure 6 depicts Jeremy's combined SIB, aggression, and disruption across phases and conditions. In the FA baseline (Phase 1), rates of problem behavior were clearly differentiated between control and the two test conditions in which it was reinforced by gummy bear access, while no consistent differences were found between the two VI 60-s components. Introduction of DRA treatment (Phase 2) resulted in immediate decreases of problem behavior to near-zero rates in both rich and lean components that were sustained throughout treatment and resurgence testing (Phase 3), but again this effect was complicated by the changes in medication regimen. When we resumed the DRA treatment conditions (Phase 2R) at session 62, rates of problem behavior remained markedly lower than baseline levels in both rich and lean treatment conditions. Discontinuing reinforcement of communication in the subsequent resurgence test (Phase 3R) did not immediately result in returns to high levels of problem behavior. Rather, it re-emerged variably during the first half of the phase, but with markedly higher rates in the DRA-Rich than in the DRA-Lean component. After several sessions, rates of problem behavior returned to treatment levels. A return to the DRA treatment conditions (Phase 2R’) resulted in a return to low levels of problem behavior, but results during a third resurgence test (Phase 3R’) were unlike the previous test. That is, problem behavior resurged in both components, with an overall higher phase mean in the DRA-Lean component, followed by a decrease to near-zero rates in both components at the end of the phase. In the final phase, another return to the DRA treatment conditions, problem behavior remained low under both stimulus conditions.

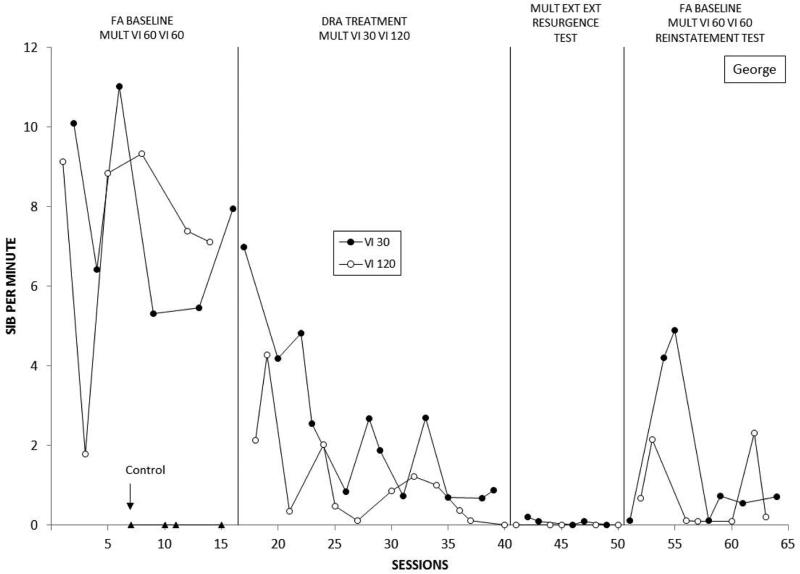

Figure 7 depicts George's SIB across phases and conditions. Again, rates of problem behavior were clearly differentiated between control and the two test conditions in FA baseline (Phase 1) and there was no consistent difference in their rates between the two VI 60-s conditions. Introduction of the DRA intervention (Phase 2) resulted in gradual decreases in problem behavior during both the rich and lean components. Interestingly, this decrease was more rapid, and sustained at lower levels, in the DRA-Lean (VI 120-s) than the DRA-Rich (VI 30-s) component. In contrast to the other participants, terminating reinforcement for appropriate responding (Phase 3) did not produce marked resurgence of problem behavior; instead, it remained low during stimulus conditions associated with both prior treatment schedules. However, when the initial VI 60-s VI 60-s baseline schedules were restored (Phase 4), problem behavior was reinstated; in general, reinstatement was stronger in the DRA-Rich component. This outcome replicated what we observed with Jose, the only other participant exposed to the reinstatement phase.

Fig. 7.

Frequency of problem behavior displayed by George over successive experimental sessions. See text for description of successive conditions.

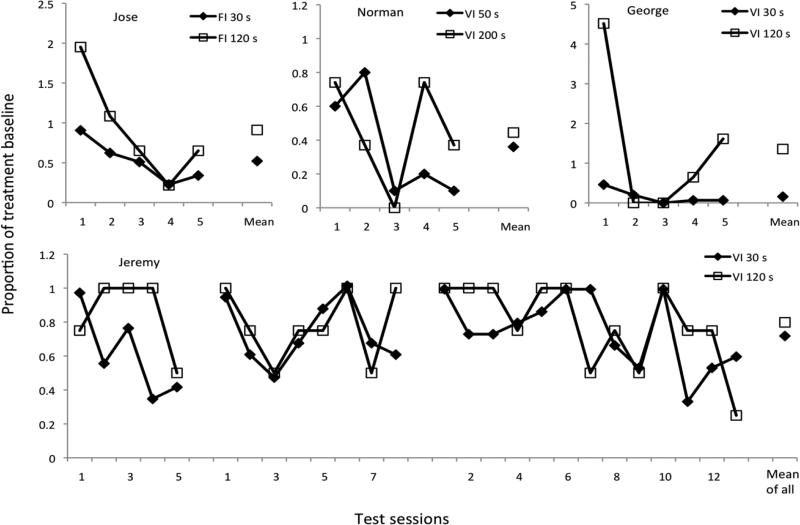

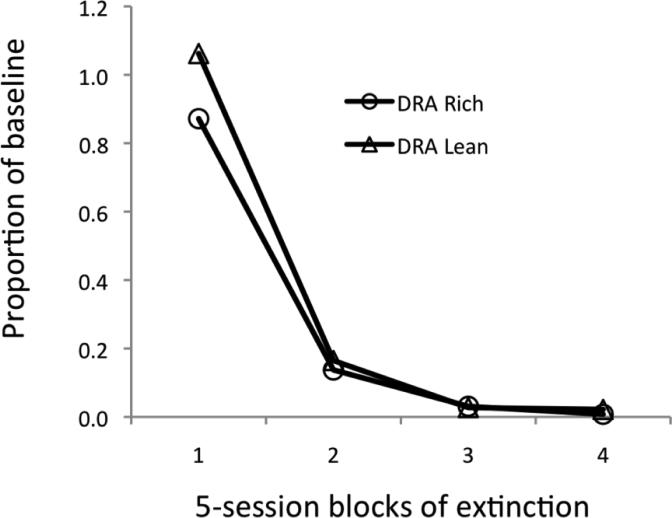

Mands occurred at roughly constant rates during treatment. Table 2 shows the averages for the final five sessions of Phase 2 as well as the rates of signaled reinforcement in DRA-Rich and DRA-Lean components for all participants. Manding rates were slightly lower than the rates of signal presentation, reflecting stimulus control by the signals plus response latencies (not recorded). Figure 8 shows that manding rates during extinction, expressed relative to their Phase 2 values (Table 2), were generally more persistent in the DRA-Lean component for all four participants, albeit with considerable variation between sessions. The difference is particularly clear for Jeremy's first test, during which he exhibited virtually no problem behavior (see Fig. 6). In sum, problem behavior was generally more prone to relapse in the DRA-Rich component, whereas manding was generally more persistent in the DRA-Lean component.

Table 2.

Average rates of manding in the DRA-Rich and DRA-Lean components compared with scheduled rates of signaled opportunities for reinforcement.

| Participant | DRA Rich: Scheduled | DRA Rich: Mands/min | DRA Lean: Scheduled | DRA Lean: Mands/min |
|---|---|---|---|---|
| Jose | FI 30s (2.0/min) | 1.77 | FI 120s (0.5/min) | 0.46 |
| Norman | VI 50s (1.2/min) | 1.00 | VI 200s (0.3/min) | 0.27 |
| Jeremy (1) | VI 30s (2.0/min) | 1.50 | VI 120s (0.5/min) | 0.40 |
| Jeremy (2) | VI 30s (2.0/min) | 1.52 | VI 120s (0.5/min) | 0.40 |
| Jeremy (3) | VI 30s (2.0/min) | 1.48 | VI 120s (0.5/min) | 0.40 |
| George | VI 30s (2.0/min) | 1.46 | VI 120s (0.5/min) | 0.36 |
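To make the comparison in Table 2 concrete, the sketch below expresses each observed manding rate as a fraction of the scheduled rate of signaled opportunities. The values are transcribed from Table 2 (only Jeremy's first exposure is included); the dictionary layout and function name are illustrative assumptions.

```python
# (scheduled signals/min, observed mands/min), transcribed from Table 2.
# Layout and names are illustrative; they are not part of the original analysis.
TABLE_2 = {
    "Jose": {"rich": (2.0, 1.77), "lean": (0.5, 0.46)},
    "Norman": {"rich": (1.2, 1.00), "lean": (0.3, 0.27)},
    "Jeremy (1)": {"rich": (2.0, 1.50), "lean": (0.5, 0.40)},
    "George": {"rich": (2.0, 1.46), "lean": (0.5, 0.36)},
}


def mand_fraction(scheduled: float, observed: float) -> float:
    """Observed manding rate as a fraction of the scheduled signal rate."""
    return observed / scheduled


for participant, components in TABLE_2.items():
    for component, (scheduled, observed) in components.items():
        print(participant, component, mand_fraction(scheduled, observed))
```

Every fraction falls below 1, in line with the statement that manding rates were slightly lower than the rates of signal presentation.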

Fig. 8.

Persistence of manding during Phase 3 extinction expressed as proportions of overall rate of manding during the final five sessions of Phase 2 treatment. Treatment and extinction were replicated twice for Jeremy (see Fig. 6).

Experiment 2 was an attempted translation of Experiment 1 procedures with pigeons to a clinical population of children with severe behavior disorders occurring secondary to ASD. Procedural parallels include: (a) a 4-to-1 ratio of reinforcement for alternative behavior between rich and lean components during DRA treatment; (b) resurgence tests that withheld all reinforcers; and (c) reinstatement of reinforcers that maintained baseline response rates. These commonalities resulted in some similar findings between Experiment 2 and Experiment 1 (High-BL condition). During DRA treatment, target behavior was reduced to similar low levels in both components, immediately in Experiment 2 and near the end of treatment in Experiment 1. Subsequent tests of resurgence showed that target responding during extinction was usually higher in DRA-Rich than DRA-Lean components. Similarly, tests of reinstatement or return to equal-reinforcement baseline conditions usually resulted in higher rates of target responding in DRA-Rich than DRA-Lean components. Experiment 1 (Low-BL condition) gave different results, in that resurgence was greater in the DRA-Lean component, contrary to the High-BL condition and to most results in Experiment 2.

There was one important difference between the procedures of Experiment 1 and the clinical translation in Experiment 2. DRA treatment in Experiment 1 provided reinforcers for free operant responding on VI schedules. In Experiment 2, the availability of reinforcers during DRA was signaled by an audible or visible timer. This adaptation to clinical application was made for a number of reasons. First, this method ensured that rates of obtained reinforcement approximated programmed rates, which is necessary to answer questions regarding applicability of BMT. Second, use of an unsignaled intermittent schedule of reinforcement would result in communication responses being ignored. An intermittent schedule may be insufficient to maintain communication and may result in higher rates of problem behavior. Third, frequent mands for reinforcers may be impractical to honor and are clinically contraindicated. Signaling the availability of reinforcement for communication teaches participants to wait between mands to maximize the probability of reinforcement. This procedure approximates natural conditions that necessitate waiting and is a variant of waiting protocols used in FCT research (e.g., Betz, Fisher, Roan, Mintz, & Owen, 2013). Finally, it is socially unacceptable to be unresponsive to the communication of others, rendering the intermittent reinforcement of free operant communication untenable for clinical treatment (although this is exactly what we did in order to evaluate resurgence).

This difference between free operant and signaled DRA treatment may account for the differences between resurgence outcomes in Experiment 1, Low-BL condition, and Experiment 2. Experiment 3 was a bi-directional translation that modeled the signaled DRA treatment used in Experiment 2.

Experiment 3

As noted above, the low-rate baseline VI condition of Experiment 1 yielded greater resurgence in the DRA-lean than the DRA-rich component, contrary to expectation based on the Shahan-Sweeney model (2011) and to most resurgence findings in Experiment 2. To evaluate the effects of signaled DRA reinforcers in comparable conditions, we trained pigeons on a close analog to Experiment 2 with the Low-BL, DRA-Rich, and DRA-Lean schedules employed in Experiment 1.

Subjects

Four experimentally naïve, unsexed homing pigeons served. Animal care and housing were the same as in Experiment 1.

Apparatus

The apparatus for Experiment 3 was the same as for Experiment 1.

Procedure

Pretraining

Pecking the center key (transilluminated white) was shaped by successive approximations. Then, all pigeons completed three sessions (one each for center, right, and left keys) that ended after fifty 2-s hopper presentations delivered according to a fixed-ratio (FR) 1 schedule of reinforcement.

Phase 1 (baseline)

In Phase 1 (and all phases listed below), pigeons pecked keys in a three-component multiple schedule with the same specifications as in Experiment 1 (i.e., 3-min components separated by 1-min ICIs, and randomly arranged in four 3-component blocks). Sessions began with a 1-min blackout of the chamber. Pecks to the target-response key (position [left or right] and color [red, blue, purple, yellow, or green] counterbalanced across pigeons) produced sensory consequences according to a VI 30-s schedule and food according to a VI 120-s schedule. If the VI schedules for both sensory consequences and food elapsed at the same time, the next peck to the target-response key produced sensory consequences first, followed by a 0.5-s delay and delivery of food.

Phase 2 (treatment)

As in Experiment 1, no change in contingencies between Phases 1 and 2 was arranged in one (No-Treatment) component. In the other components, food reinforcers for target behavior were suspended, and pecks to an alternative-response key (either left or right) produced food according to either a VI 30-s (in the DRA-High component) or a VI 120-s (in the DRA-Low component) schedule. The major difference from Experiment 1, Low-BL condition, was that the alternative response key in the DRA components was transilluminated (color counterbalanced across pigeons) only when the VI schedule for alternative responding elapsed; it remained transilluminated until the available reinforcer was collected. In both DRA components, a 3-s COD was enforced such that food for alternative responses or sensory consequences for target responses were delivered only if: 1) the VI schedule for the reinforcer had elapsed, and 2) at least 3 s had elapsed since the pigeon switched to pecking the key associated with the available reinforcer.
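The two-part COD rule described above can be sketched as a simple predicate. This is an illustrative reading of the rule, not the control program used in the experiment; the function and parameter names are assumptions.

```python
def consequence_deliverable(now_s: float,
                            schedule_elapsed: bool,
                            last_switch_s: float,
                            cod_s: float = 3.0) -> bool:
    """Return True if a peck at time now_s may produce its scheduled consequence.

    Both conditions from the text must hold:
      1) the VI schedule for that consequence has elapsed, and
      2) at least cod_s seconds have passed since the pigeon switched
         to the key associated with the available consequence.
    """
    time_since_switch = now_s - last_switch_s
    return bool(schedule_elapsed) and time_since_switch >= cod_s


# Hypothetical example: the VI has elapsed, but the pigeon switched keys
# only 2 s ago, so the peck goes unreinforced until the COD is satisfied.
print(consequence_deliverable(now_s=10.0, schedule_elapsed=True, last_switch_s=8.0))
print(consequence_deliverable(now_s=11.0, schedule_elapsed=True, last_switch_s=8.0))
```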

Phase 3 (extinction + resurgence test)

In Phase 3, all food reinforcers in the No-Treatment component and both DRA components were suspended. The alternative-response key in the DRA-High and DRA-Low components continued to be illuminated according to variable-time (VT) 30-s and VT 120-s schedules, respectively. This key remained illuminated until either the first peck to the key or 5 s had elapsed, whichever occurred first.

Phase 4 (reinstatement test)

Contingencies for responding and the stimulus conditions in Phase 4 were the same as in Phase 3, with the exception that the alternative-response key no longer was illuminated in either DRA component. Response-independent food deliveries occurred 2 and 8 s into each component presentation (for a total of 24 food deliveries, 8 in each component). See Table 3 for a summary of conditions and the number of sessions arranged per condition.

Table 3.

Condition Summary for Experiment 3

| Phase | Component | Target | Alternative |
|---|---|---|---|
| Phase 1 (Baseline), 30 sessions | No Treatment | VI 120 RT+VI 30 rT | - |
| | DRA High | VI 120 RT+VI 30 rT | - |
| | DRA Low | VI 120 RT+VI 30 rT | - |
| Phase 2 (Treatment), 20 sessions | No Treatment | VI 30 rT | - |
| | DRA High | VI 30 rT | Signaled VI 30 RA |
| | DRA Low | VI 30 rT | Signaled VI 120 RA |
| Phase 3 (Extinction), 20 sessions | No Treatment | VI 30 rT | - |
| | DRA High | VI 30 rT | VT 30 Signal + Ext |
| | DRA Low | VI 30 rT | VT 120 Signal + Ext |
| Phase 4 (Reinstatement), 10 sessions | No Treatment | VI 30 rT+FT RT | - |
| | DRA High | VI 30 rT+FT RT | - |
| | DRA Low | VI 30 rT+FT RT | - |

Note: Target = target response (left or right, counterbalanced), Alternative = alternative response (right or left, counterbalanced), RT = food deliveries for target responses, rT = sensory-consequence deliveries for target responses, RA = food deliveries for alternative responses, FT = fixed-time food deliveries.
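The Phase 4 food-delivery count given in the procedure (24 per session, 8 per component) follows from the session structure: four blocks of three components, with two response-independent deliveries per component presentation. A minimal arithmetic sketch, with constant and function names chosen here for illustration:

```python
# Session structure as described in the Procedure; names are illustrative.
BLOCKS_PER_SESSION = 4
COMPONENTS = ("No-Treatment", "DRA-High", "DRA-Low")
DELIVERY_TIMES_S = (2, 8)  # seconds into each component presentation


def phase4_food_deliveries():
    """Return (deliveries per component, total deliveries) for one session."""
    per_component = {
        component: BLOCKS_PER_SESSION * len(DELIVERY_TIMES_S)
        for component in COMPONENTS
    }
    return per_component, sum(per_component.values())


per_component, total = phase4_food_deliveries()
print(per_component, total)
```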

Results and Discussion

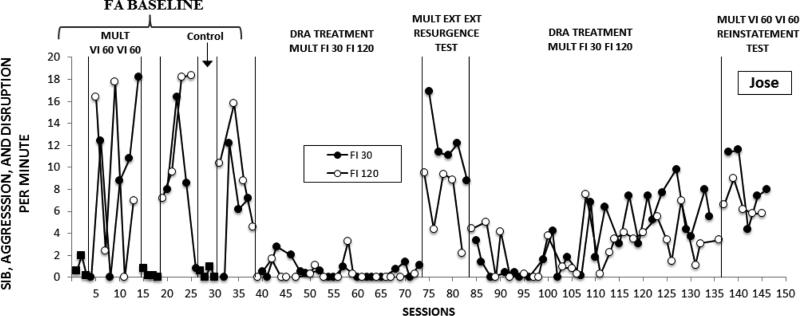

Figure 9 presents a summary of response rates across the various phases of Experiment 3. As in Experiment 1, response rates were averaged across pigeons and aggregated across five-session blocks to show relevant trends in the data. Note that rates of responding only from the last five sessions of Phase 1 are shown. Figure A3 in the Appendix presents the data for individual pigeons.

Fig. 9.

Target (BT) and alternative (BA) response rates across phases (P1-P4) of Experiment 3. Data are averaged across pigeons and aggregated into five-session blocks (response rates only from the last five sessions of Phase 1 are shown). Figures for individual subjects are in Appendix A3.

Target-key responding occurred at roughly equal rates across multiple-schedule components during the terminal sessions of Phase 1. When food reinforcers were suspended and alternative reinforcers were made available in the DRA components in Phase 2, target-response rates in these components decreased across sessions. Decreases in target-key responding occurred more quickly in the DRA-High component than in the DRA-Low component.

When all food reinforcement for target and alternative behavior was suspended in Phase 3, target responding in the No-Treatment component decreased to near-zero levels across sessions. In the DRA components, resurgence of target responding occurred for most pigeons. Figure 10 presents obtained resurgence, expressed as the difference between mean target proportion-of-baseline response rates (calculated here as in Experiment 1) from the first five sessions of Phase 3 and the last five sessions of Phase 2, for each individual pigeon. Values of 0 represent no change in target response rates between phases, values greater than 0 represent resurgence of target responding, and values less than 0 represent a decrease in rate between Phases 2 and 3. Following removal of high-rate alternative reinforcement, resurgence occurred for all four pigeons. Following removal of low-rate alternative reinforcement, an increase in target responding occurred for two pigeons. For three of four pigeons, resurgence in the DRA-High component was substantially greater than in the DRA-Low component; for the fourth, the difference was small but in the same direction.
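The resurgence measure described above can be sketched as follows. The exact proportion-of-baseline calculation follows Experiment 1 and is not restated here, so this sketch assumes that each session's rate is divided by a single baseline rate (for example, the mean of the last five Phase 1 sessions); the function names and the example rates are hypothetical.

```python
from statistics import mean


def proportion_of_baseline(rates, baseline_rate):
    """Express each session's response rate as a proportion of baseline.

    Assumption: a single baseline rate per component and subject.
    """
    return [rate / baseline_rate for rate in rates]


def resurgence_index(phase2_rates, phase3_rates, baseline_rate, n=5):
    """Mean proportion of baseline in the first n Phase 3 sessions minus
    the mean proportion of baseline in the last n Phase 2 sessions."""
    last_phase2 = proportion_of_baseline(phase2_rates[-n:], baseline_rate)
    first_phase3 = proportion_of_baseline(phase3_rates[:n], baseline_rate)
    return mean(first_phase3) - mean(last_phase2)


# Hypothetical responses/min for one pigeon in one component.
baseline = 40.0
phase2 = [20.0, 12.0, 8.0, 4.0, 4.0, 4.0, 4.0, 4.0]
phase3 = [8.0, 12.0, 10.0, 6.0, 4.0, 2.0, 1.0]
print(resurgence_index(phase2, phase3, baseline))
```

A positive value indicates resurgence, zero indicates no change, and a negative value indicates a further decrease. The same function could be applied to reinstatement by passing Phase 3 and Phase 4 rates in place of Phase 2 and Phase 3.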

Fig. 10.

Resurgence of target responding in the DRA-High and DRA-Low components during Experiment 3, expressed as the difference in mean proportion-of-baseline response rates from the first five sessions of Phase 3 and the last five sessions of Phase 2. Data for all pigeons are shown. Positive values represent an increase in target responding between these conditions.

Reinstatement of target responding, expressed as the difference between mean target proportion-of-baseline response rates from the first five sessions of Phase 4 and the last five sessions of Phase 3, for each individual pigeon, is shown in Figure 11. Again, values of 0 represent no change in target response rates between phases, values greater than 0 represent reinstatement of target responding, and values less than 0 represent a decrease in rate between Phases 3 and 4. For one pigeon, reinstatement was lowest in the DRA-Low component and highest in the No-Treatment component. For another, reinstatement was lowest in the DRA-High component and highest in the No-Treatment component. For two pigeons, no noticeable reinstatement of target responding occurred in any component.

Fig. 11.

Reinstatement of target responding in all components during Experiment 3, expressed as the difference in mean proportion-of-baseline response rates from the first five sessions of Phase 4 and the last five sessions of Phase 3. Data for all pigeons are shown. Positive values represent an increase in target responding between these conditions.

During Phase 2, overall rates of alternative responding were closely controlled by the rates of signal presentation in the DRA-rich and DRA-lean components. During Phase 3, extinction, overall response rates expressed relative to the last five sessions of Phase 2 decreased similarly in both components. For two of the four pigeons, the alternative response was more resistant to extinction in the DRA-lean than the DRA-rich component; average data are presented in Figure 12.

Fig. 12.

Rates of alternative behavior during Phase 3, extinction, expressed as proportions of the final five sessions of Phase 2, treatment, in the DRA-Rich and DRA-Lean components.

In general, the results of Experiment 3 accord with those of Experiment 2. Pigeons’ target behavior decreased similarly in DRA-high and DRA-low components, although not to as low a level as for the children in Experiment 2. Likewise, resurgence of target behavior was substantially greater in the DRA-rich than the DRA-lean component for three of the four pigeons, as for the children. Reinstatement of target behavior was greater in the DRA-rich component for only one pigeon, not unlike the results for Experiment 2. Finally, the results for extinction of alternative behavior were comparable to those in Experiment 2.

General Discussion

Review of Methods and Results

Experiment 1, with pigeons as subjects, arranged either rich or lean baseline schedules of reinforcement for analog problem (target) behavior between conditions designated High-BL and Low-BL, respectively. In Phase 2 of both conditions (treatment), rates of reinforcement for free-operant alternative behavior differed between multiple-schedule components designated DRA-Rich and DRA-Lean, respectively. In both High-BL and Low-BL conditions, the decrease of target responding to near-zero levels was more rapid in the DRA-Rich than in the DRA-Lean component. When DRA was discontinued in Phase 3, resurgence was greater in the DRA-Rich than in the DRA-Lean component in the High-BL condition. By contrast, in the Low-BL condition, resurgence was greater overall than in the High-BL condition, and was greater in the DRA-Lean than in the DRA-Rich component for most pigeons. In Phase 4, reinstatement of target behavior was greater in the DRA-Rich than in the DRA-Lean component in the High-BL condition, but was overall lower and less clearly differentiated in the Low-BL condition.

Importantly for clinical application, alternative behavior was more resistant to extinction in the DRA-Rich than in the DRA-Lean component in Phase 3, consistent with many results of research on resistance to change (e.g., Nevin, 2012). Moreover, when extinction data for alternative behavior were expressed as average proportions of their Phase 2 levels, the means were essentially identical for the High-BL and Low-BL conditions, suggesting that the rate of reinforcement for target behavior in baseline, prior to Phase 2 when DRA was introduced, had no effect on subsequent resistance to extinction of alternative behavior.

In Experiment 2, we used a similar method to address severe problem behavior in four children with intellectual disabilities, where DRA was implemented by signaling opportunities for reinforcement of functional communication responses. Experiment 2 was similar to Experiment 1 in that reinforcer rates in DRA-Rich and DRA-Lean components differed by a factor of 4, but differed in that a single intermediate schedule of reinforcement for problem behavior was arranged during Phase 1 (baseline) sessions, and there was no untreated (control) component.

In Phase 2, problem behavior decreased almost immediately to near-zero levels in both components. In Phase 3, when DRA was discontinued, resurgence of problem behavior was greater in the DRA-Rich component for all except Jeremy's third resurgence test (he did not respond in the first test). Likewise, for the two participants who experienced reconditioning (Jose and George), recovery was greater in the DRA-Rich component. In Phase 3, when signals were presented as in Phase 2 but without reinforcement, manding was relatively more persistent in the DRA-Lean than in the DRA-Rich component, contrary to the results for free-operant alternative behavior in Experiment 1.

Because the results of Experiment 2 differed from those in Experiment 1, especially for resurgence of target behavior in the Low-BL condition, Experiment 3 repeated that condition in pigeons with signaled DRA. The results confirmed the ordinal difference obtained in the first resurgence test with three participants in Experiment 2—that is, greater resurgence of target behavior in the component with the richer schedule of alternative reinforcement. In the reinstatement test, one pigeon responded more in the DRA-Rich component; the others emitted too few responses to evaluate. Finally, resistance to extinction of alternative behavior was similar between components, although somewhat greater on average in the DRA-Lean component. Thus, the persistence of the alternative response was either the same or greater in the signaled-DRA-Lean component in both Experiments 2 and 3.

To summarize the resurgence data for Experiment 2, we calculated the mean rate of problem behavior during the first five sessions of Phase 3 (posttreatment extinction) and expressed that mean as a proportion of the mean rate of problem behavior during the pretreatment functional analysis, separately for each component and participant. For Experiment 3, we calculated the difference between the mean rate of target behavior during the first five sessions of Phase 3 (posttreatment extinction) and the mean of the last five sessions of Phase 2, both expressed as proportions of Phase 1 baseline. If the rate of problem behavior in Phase 2 is close to zero (as in Experiment 2), these calculations are essentially the same. The results are presented in Table 4 as proportions of baseline, showing that except for Jeremy's third exposure to extinction, any measurable resurgence was greater in the DRA-Rich than the DRA-Lean component.

Table 4.

Individual measures of resurgence for Experiments 2 and 3

Experiment 2 (values are proportions of baseline)

| Participant | DRA Rich | DRA Lean |
|---|---|---|
| Jose | 1.55 | 0.66 |
| Norman | 3.24 | 1.00 |
| Jeremy (1) | 0.00 | 0.02 |
| Jeremy (2) | 0.33 | 0.07 |
| Jeremy (3) | 0.20 | 0.39 |
| George | No measurable resurgence | |

Relations to Basic Research

Shahan and Sweeney (2011) developed an algebraic model of extinction plus disruption by DRA (see also Nevin & Shahan, 2011). In ordinal terms, the model predicts more rapid decreases in target behavior during treatment with extinction plus DRA in Low-BL than in High-BL conditions, and in DRA-Rich than in DRA-Lean components. The data of Experiment 1, Phase 2 are entirely consistent with these predictions. The model also predicts that resurgence will be greater in DRA-Rich than in DRA-Lean components if baseline reinforcer rates are the same for both components. Although the prediction held for the High-BL condition, the reverse was obtained in the Low-BL condition. To add to the model's difficulties, reinstatement was greater in the DRA-Rich than in the DRA-Lean component in both conditions, albeit not reliably so in the Low-BL condition. The potential dissociation of resurgence and reinstatement is not anticipated by any account of relapse that we are aware of.
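The ordinal predictions described above can be illustrated numerically. The sketch below assumes the commonly cited form of the Shahan and Sweeney (2011) equation, log10(B_t/B_0) = -t(kR_a + c + dr)/(r + R_a)^b, which is not reproduced in this section and should be checked against the original article. All parameter values, reinforcer rates, and function names are hypothetical and chosen only for illustration; they are not fits to the present data.

```python
def proportion_of_baseline(t, r, ra_history, ra_current,
                           k=0.05, c=1.0, d=0.001, b=0.5):
    """Target response rate after t sessions of extinction, as a proportion
    of baseline, under the ASSUMED model form

        log10(B_t / B_0) = -t * (k*Ra + c + d*r) / (r + Ra)**b

    r          -- baseline (Phase 1) reinforcer rate for target behavior
    ra_history -- DRA reinforcer rate experienced in the component (denominator)
    ra_current -- DRA reinforcer rate currently acting as a disruptor
                  (numerator); set to 0 once DRA is discontinued
    k, c, d, b -- hypothetical parameter values, for illustration only
    """
    disruption = k * ra_current + c + d * r
    strength = (r + ra_history) ** b
    return 10 ** (-t * disruption / strength)


def predicted_resurgence(r, ra, last_treatment_session=5):
    """Increase from the last Phase 2 session to the first Phase 3 session."""
    t2 = last_treatment_session
    phase2 = proportion_of_baseline(t2, r, ra, ra)
    phase3 = proportion_of_baseline(t2 + 1, r, ra, 0.0)
    return phase3 - phase2


# Hypothetical reinforcer rates per hour (not the schedules of Experiment 1).
LOW_BL, HIGH_BL = 60.0, 240.0
DRA_LEAN, DRA_RICH = 30.0, 120.0

for bl_label, r in (("Low-BL", LOW_BL), ("High-BL", HIGH_BL)):
    for dra_label, ra in (("DRA-Lean", DRA_LEAN), ("DRA-Rich", DRA_RICH)):
        treated = proportion_of_baseline(5, r, ra, ra)
        print(bl_label, dra_label, round(treated, 4),
              round(predicted_resurgence(r, ra), 4))
```

Under these assumed values, the sketch reproduces the ordinal predictions stated in the text: faster suppression in Low-BL than High-BL and in DRA-Rich than DRA-Lean, and greater predicted resurgence in DRA-Rich at either baseline rate. It is the last of these predictions that the Low-BL data of Experiment 1 contradicted.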

Experiment 3 differed from the Low-BL condition of Experiment 1 in that alternative reinforcers were signaled during Phase 2. The momentum-based model for disruption by alternative reinforcers (Nevin & Shahan, 2011) does not distinguish signaled and unsignaled cases. However, the data of Experiments 1 and 3 show that signaled DRA was less effective than unsignaled DRA as a disruptor of target behavior during Phase 2 even though reinforcer rates were the same (compare Fig. 1, Low-BL, and Fig. 9). The reduced effectiveness of signaled DRA reinforcers in Phase 2 may be attributed to the absence of competing responses. Relatedly, Sweeney et al. (2014) compared the disruptive effects of contingent versus noncontingent alternative reinforcers (i.e., DRA vs. NCR) with pigeons and human participants, and found that NCR was less effective than DRA despite equated reinforcer rates. These results suggest that competing alternative behavior (which is not specified in NCR) may serve as a disruptor in addition to alternative reinforcement. However, it is not clear how to integrate competing behavior into a momentum-based model.

As noted above, the persistence of alternative behavior is important for clinical application. Research on behavioral persistence has repeatedly found that, in multiple schedules, resistance to change is greater in the component with more frequent reinforcement. However, when the average rates of alternative behavior during Phase 3 were expressed as proportions of the rates in the DRA-Rich and DRA-Lean components during the final five sessions of Phase 2, alternative behavior was more persistent in the DRA-Lean component for all four participants in Experiment 2, and for two of four pigeons in Experiment 3, contrary to expectations based on the overall rates of reinforcement obtained in the DRA components.
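The persistence comparison described above reduces to a ratio per component. The following minimal sketch assumes hypothetical rates of alternative behavior (they are not data from Experiments 2 or 3), and the function name is illustrative only.

```python
from statistics import mean


def persistence(phase3_alt_rates, phase2_alt_rates, n_final=5):
    """Mean Phase 3 rate of alternative behavior as a proportion of the mean
    rate over the final n_final sessions of Phase 2."""
    return mean(phase3_alt_rates) / mean(phase2_alt_rates[-n_final:])


# Hypothetical alternative-response rates per session (illustration only).
rich = persistence(phase3_alt_rates=[10, 6, 4, 2, 3],
                   phase2_alt_rates=[25, 20, 22, 18, 20, 20])
lean = persistence(phase3_alt_rates=[8, 6, 5, 3, 3],
                   phase2_alt_rates=[9, 10, 10, 12, 8, 10])

# A larger proportion means greater persistence relative to treatment levels.
print("DRA-Rich:", rich, "DRA-Lean:", lean)
```

Expressing Phase 3 responding relative to each component's own Phase 2 level is what allows persistence to be compared across components whose absolute response rates differ.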

These mixed outcomes may result from within-component signal–reinforcer relations. Although stimuli present throughout a component signal overall richer or leaner DRA schedules, within-component signals are paired with relatively immediate reinforcement and may overshadow component stimuli. Moreover, signals within the DRA-Lean component are better predictors of reinforcers because interreinforcer intervals are longer than in the DRA-Rich component (see Gibbon & Balsam, 1981, for rationale). Thus, signal–reinforcer relations are stronger in DRA-Lean, whereas overall component–reinforcer relations are stronger in DRA-Rich. The competition between these two potential determiners of persistence during extinction may be responsible for the individual differences observed.

Implications for Clinical Practice

Extinction of target behavior arranged concurrently with DRA, the procedure used in the treatment phase (Phase 2) of all three experiments, has been used successfully to decrease problem behavior in many clinical applications. For example, Rooker, Jessel, Kurtz, and Hagopian (2013) summarized outcomes for 58 cases that arranged reinforcement for mands established by FCT concurrently with extinction of problem behavior, and reported substantial decreases in problem behavior in most cases treated with FCT. Likewise, we obtained decreases to near zero in both the DRA-Rich and DRA-Lean components for all participants in Experiment 2.

Our signaled-DRA procedure is related to the “schedule-thinning” procedure used in several clinical studies, largely for practical reasons. Hanley, Iwata, and Thompson (2001) pointed out that if manding is reinforced every time it occurs, caregivers may not be able to comply with the resulting high frequency of requests, and problem behavior may relapse as a result of nonreinforcement. One of their solutions to this problem was to establish a successive discrimination in which periods of SD, signaling reinforcement for manding, alternated with SΔ, signaling extinction. When SD durations were progressively reduced while SΔ durations were lengthened, problem behavior generally remained at a low level (see also Betz, Fisher, Roane, Mintz, & Owen, 2013; Greer, Fisher, Saini, Owen, & Jones, 2016; Rooker et al., 2013). Our signaled DRA procedure is like the end result of such a thinning procedure, with a short SD signaling availability of a single reinforcer and a long variable SΔ duration given by the DRA-Rich or DRA-Lean schedule in effect, and it appears to be similarly effective.

Summary

At the beginning of this article, we suggested that there are three desired outcomes of reinforcement-based treatment for problem behavior: 1) rapid reduction of problem behavior during treatment; 2) little or no relapse when treatment ends; and 3) establishment of persistent alternative behavior. We also suggested that these may not all be achievable in a single treatment package because high rates of reinforcement for alternative behavior tend to promote outcomes 1 and 3 but work against outcome 2. We may have been unduly pessimistic: The data of Experiment 2 suggest that less frequent, signaled DRA, coupled with extinction of problem behavior, is as effective as more frequent, signaled DRA in achieving outcome 1. Both Experiments 2 and 3 suggest that less frequent DRA reduces relapse (outcome 2) and promotes (or at least does not oppose) outcome 3. Accordingly, infrequent, signaled DRA is a candidate for best-practice treatment of severe problem behavior.

Acknowledgments

This research was supported by Grant Number R01 HD064576 from the Eunice K. Shriver National Institute of Child Health and Human Development (NICHD). Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the NICHD. Authors are listed in descending age order. Kenneth Shamlian is now at the University of Rochester School of Medicine. Mary M. Sweeney is now at the Johns Hopkins University School of Medicine.

Appendix

Individual data for Experiments 1 and 3 are presented in Figures A1, A2, and A3.

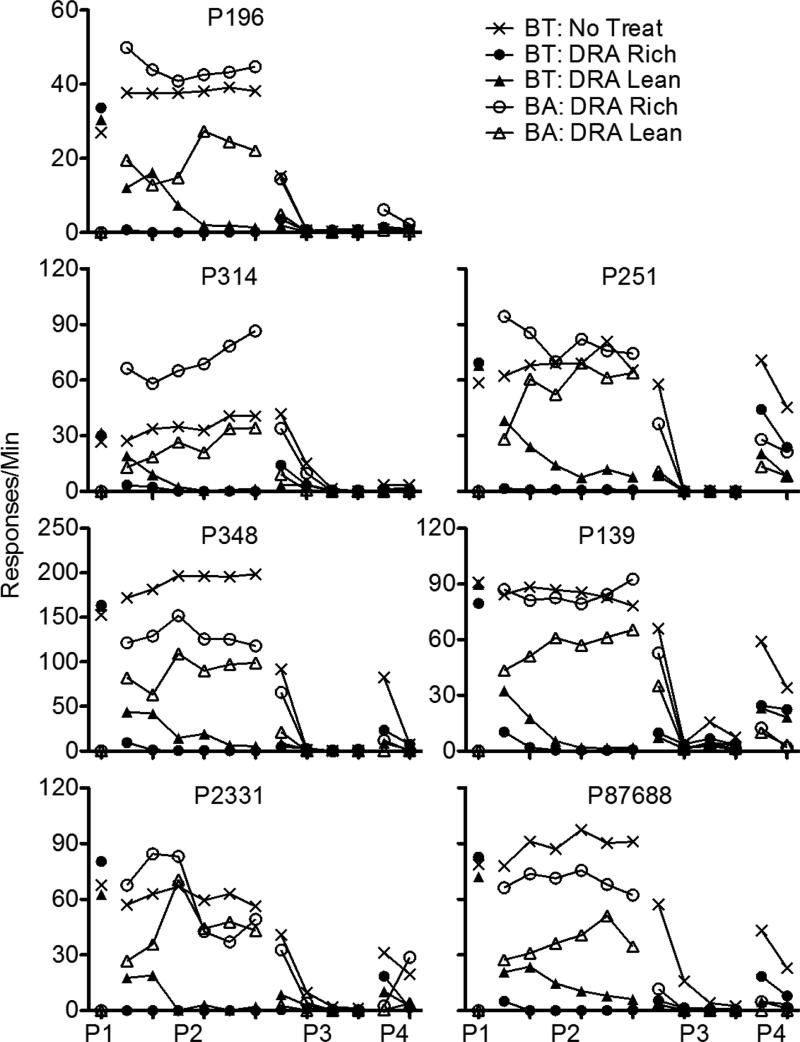

Fig. A1.

Target (BT) and alternative (BA) response rates across phases (P1-P4) of Experiment 1 following high-rate Phase-1 reinforcement for each individual pigeon. Data are aggregated into five-session blocks (response rates only from the last five sessions of Phase 1 are shown).

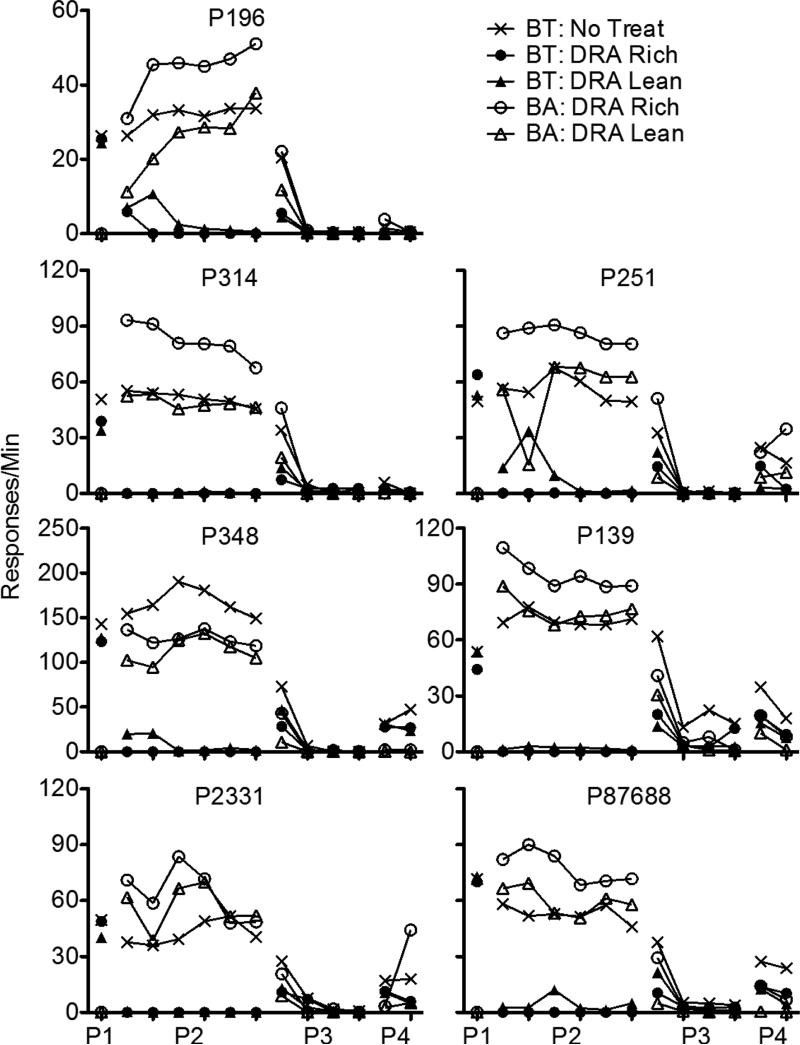

Fig. A2.

Target (BT) and alternative (BA) response rates across phases (P1-P4) of Experiment 1 following low-rate Phase-1 reinforcement for each individual pigeon. Data are aggregated into five-session blocks (response rates only from the last five sessions of Phase 1 are shown).

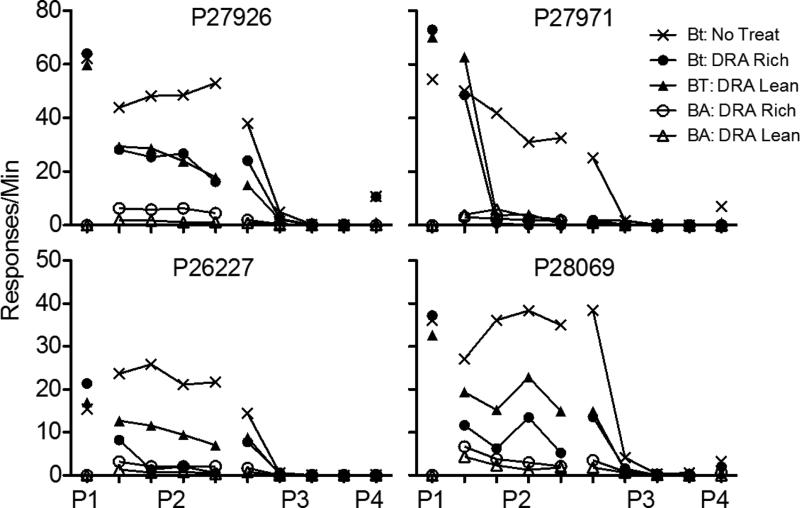

Fig. A3.

Target (BT) and alternative (BA) response rates across phases (P1-P4) of Experiment 3 for each individual pigeon. Data are aggregated into five-session blocks (response rates only from the last five sessions of Phase 1 are shown).

References

- Betz AM, Fisher WW, Roane HS, Mintz JC, Owen TM. A component analysis of schedule thinning during functional communication training. Journal of Applied Behavior Analysis. 2013;46:219–241. doi:10.1002/jaba.23.

- Bouton ME, Winterbauer NE, Todd TP. Relapse processes after the extinction of instrumental learning: Renewal, resurgence, and reacquisition. Behavioural Processes. 2012;90(1):130–141. doi:10.1016/j.beproc.2012.03.004.

- Derby KM, Wacker DP, Sasso G, Steege M, Northup J, Cigrand K, Asmus J. Brief functional assessment techniques to evaluate aberrant behavior in an outpatient setting: A summary of 79 cases. Journal of Applied Behavior Analysis. 1992;25(3):713–721. doi:10.1901/jaba.1992.25-713.

- Gibbon J, Balsam P. Spreading association in time. In: Locurto CM, Terrace HS, Gibbon J, editors. Autoshaping and conditioning theory. Academic Press; New York: 1981. pp. 219–253.

- Greer BD, Fisher WW, Saini V, Owen TM, Jones JK. Functional communication training during reinforcement schedule thinning: An analysis of 25 applications. Journal of Applied Behavior Analysis. 2016;49:1–17. doi:10.1002/jaba.265.

- Hagopian LP, Fisher WW, Sullivan MT, Acquisto J, LeBlanc LA. Effectiveness of functional communication training with and without punishment: A summary of 21 inpatient cases. Journal of Applied Behavior Analysis. 1998;31:211–235. doi:10.1901/jaba.1998.31-211.

- Hanley GP, Iwata BA, Thompson RH. Reinforcement schedule thinning following treatment with functional communication training. Journal of Applied Behavior Analysis. 2001;34:17–38. doi:10.1901/jaba.2001.34-17.

- Iwata BA, Dorsey MF, Slifer KJ, Bauman KE, Richman GS. Toward a functional analysis of self-injury. Journal of Applied Behavior Analysis. 1994;27:197–209. doi:10.1901/jaba.1994.27-197. (Reprinted from Analysis and Intervention in Developmental Disabilities, 2, 3-20, 1982)

- Leitenberg H, Rawson RA, Mulick JA. Extinction and reinforcement of alternative behavior. Journal of Comparative and Physiological Psychology. 1975;88:640–652.

- Mace FC, McComas JJ, Mauro BC, Progar PR, Ervin R, Zangrillo AN. Differential reinforcement of alternative behavior increases resistance to extinction: Clinical demonstration, animal modeling, and clinical test of one solution. Journal of the Experimental Analysis of Behavior. 2010;93:349–367. doi:10.1901/jeab.2010.93-349.

- Mudford OC, Martin NT, Hui JK, Taylor SA. Assessing observer accuracy in continuous recording of rate and duration: Three algorithms compared. Journal of Applied Behavior Analysis. 2009;42(3):527–539. doi:10.1901/jaba.2009.42-527.

- Nevin JA, Grace RC. Behavioral momentum and the law of effect. Behavioral and Brain Sciences. 2000;23:73–130. doi:10.1017/S0140525X00002405.

- Nevin JA, Shahan TA. Behavioral momentum theory: Equations and applications. Journal of Applied Behavior Analysis. 2011;44:877–895. doi:10.1901/jaba.2011.44-877.

- Page TJ, Iwata BA. Interobserver agreement: History, theory and current methods. In: Poling A, Fuqua RW, editors. Research methods in applied behavior analysis: Issues and advances. Plenum; New York: 1986. pp. 99–126.

- Podlesnik CA, Shahan TA. Behavioral momentum and relapse of extinguished operant responding. Learning & Behavior. 2009;37(4):357–364. doi:10.3758/LB.37.4.357.

- Rooker GW, Jessel J, Kurtz PF, Hagopian LP. Functional communication training with and without alternative reinforcement and punishment: An analysis of 58 applications. Journal of Applied Behavior Analysis. 2013;46:708–722. doi:10.1002/jaba.76.

- Shahan TA, Sweeney MM. A model of resurgence based on behavioral momentum. Journal of the Experimental Analysis of Behavior. 2011;95:91–108. doi:10.1901/jeab.2011.95-91.

- Shirley MJ, Iwata BA, Kahng S, Mazaleski JL, Lerman DC. Does functional communication training compete with ongoing contingencies of reinforcement? An analysis during response acquisition and maintenance. Journal of Applied Behavior Analysis. 1997;30:93–104. doi:10.1901/jaba.1997.30-93.

- Sweeney MM, Moore K, Shahan TA, Ahearn WH, Dube WV, Nevin JA. Modeling the effects of sensory reinforcers on behavioral persistence with alternative reinforcement. Journal of the Experimental Analysis of Behavior. 2014;102:252–266. doi:10.1002/jeab.103.