Significance

In a dynamic world humans not only have to decide where to look but also when to direct their gaze to potentially informative locations in the visual scene. Little is known about how timing of eye movements is related to environmental regularities and how gaze strategies are learned. Here we present behavioral data establishing that humans learn to adjust their temporal eye movements efficiently. Our computational model shows how established properties of the visual system determine the timing of gaze. Surprisingly, a Bayesian learner only incorporating the scalar law of biological timing can fully explain the course of learning these strategies. Thus, humans use temporal regularities learned from observations to adjust the scheduling of eye movements in a nearly optimal way.

Keywords: eye movements, computational modeling, decision making, learning, visual attention

Abstract

During active behavior humans redirect their gaze several times every second within the visual environment. Where we look within static images is highly efficient, as quantified by computational models of human gaze shifts in visual search and face recognition tasks. However, when we shift gaze is mostly unknown despite its fundamental importance for survival in a dynamic world. It has been suggested that during naturalistic visuomotor behavior gaze deployment is coordinated with task-relevant events, often predictive of future events, and studies in sportsmen suggest that timing of eye movements is learned. Here we establish that humans efficiently learn to adjust the timing of eye movements in response to environmental regularities when monitoring locations in the visual scene to detect probabilistically occurring events. To detect the events humans adopt strategies that can be understood through a computational model that includes perceptual and acting uncertainties, a minimal processing time, and, crucially, the intrinsic costs of gaze behavior. Thus, subjects traded off event detection rate with behavioral costs of carrying out eye movements. Remarkably, based on this rational bounded actor model the time course of learning the gaze strategies is fully explained by an optimal Bayesian learner with humans’ characteristic uncertainty in time estimation, the well-known scalar law of biological timing. Taken together, these findings establish that the human visual system is highly efficient in learning temporal regularities in the environment and that it can use these regularities to control the timing of eye movements to detect behaviorally relevant events.

We live in a dynamic and ever-changing environment. To avoid missing crucial events, we need to constantly use new sensory information to monitor our surroundings. However, the fraction of the visual environment that can be perceived at a given moment is limited by the placement of the eyes and the arrangement of the receptor cells within the eyes (1). Thus, continuously monitoring environmental locations, even when we know which regions in space contain relevant information, is unfeasible. Instead, we actively explore by targeting the visual apparatus toward regions of interest using proper movements of the eyes, head, and body (2–5). This constitutes a fundamental computational problem requiring humans to decide sequentially when to look where. Solving this problem arguably has been crucial to our survival from the early human hunters pursuing a herd of prey and avoiding predators to the modern human navigating a crowded sidewalk and crossing a busy road.

So far, the emphasis of most studies on eye movements has been on spatial gaze selection. Its exquisite adaptability can be appreciated by considering the different factors influencing gaze, including low-level image features (6), scene gist (7) and scene semantics (8), task constraints (9), and extrinsic rewards (10); also, combinations of factors have been investigated (11). Studies have cast doubt on the optimality of spatial gaze selection (12–14), but computational models of the spatial selection have in part established that humans are close to optimal in targeting locations in the visual scene, as in visual search for visible (15) and invisible targets (16) as well as face recognition (17) and pattern classification (18). Although these studies provide insights into where humans look in a visual scene, the ability of managing complex tasks cannot be explained solely by optimal spatial gaze selection. Even if relevant spatial locations in the scene have been determined these regions must be monitored over time to detect behaviorally relevant changes and thus keep information updated for action selection in accordance with the current state of the environment. Successfully dealing with the dynamic environment therefore requires intelligently distributing the limited visual resources over time. For example, where should we look if the task is to detect an event occurring at one of several possible locations? In the beginning, if the temporal regularities in a new environment are unknown, it may be best to look quickly at all relevant locations equally long. However, with more and more observations of events and their durations, we may be able to use the learned temporal regularities. To keep the overall uncertainty as low as possible, fast-changing regions (e.g., streets with cars) should be attended more frequently than slow-changing regions (e.g., walkways with pedestrians).

Although little is known about how the dynamics in the environment influence human gaze behavior, empirical evidence suggests sophisticated temporal control of eye movements, as in object manipulation (19, 20) and natural behavior (4, 5). A common observation is that gaze is often predictive both of physical events (21) and events caused by other people (22), and studies comparing novices’ and experienced sportsmen’s gaze coordination suggest that temporal gaze selection is learned (23). However, the temporal course of these eye movements and how they are influenced by the event durations in the environment has not been investigated so far. We argue that understanding human eye movements in natural dynamic settings can only be achieved by incorporating the temporal control of the visual apparatus. Also, modeling of temporal eye movement allocation is scarce, with notable exceptions (24), but whereas ideal observers (15–17) model behavior after learning has completed and usually exclude intrinsic costs, reinforcement learning models based on Markov decision processes (16, 24) can be applied to situations involving perceptual uncertainty at best only approximately.

Here we present behavioral data and results from a computational model establishing several properties of human active visual strategies specific to temporally varying stimuli. In particular, we address the question of what factors specifically need to be accounted for to predict human gaze timing behavior in response to environmental events, and how learning proceeds. To this end, we created a temporal event detection (TED) task to investigate how humans adjust their eye movement strategies when learning temporal regularities. Our task enables investigating the unknown temporal aspects of eye movement strategies by isolating them from spatial selection and the complexity that arises from natural images. Our results are the first to our knowledge to show that human eye movements are consistently related to temporal regularities in the environment. We further provide a computational model explaining the observed gaze behavior as an interaction of perceptual and action uncertainties as well as intrinsic costs and biological constraints. Surprisingly, based on this model alone, the learning progress is accounted for without any additional assumptions only based on the scalar law of biological timing, allowing crucial insights into how observers alter their behavior due to task demands and environmental regularities.

Results

Behavioral Results.

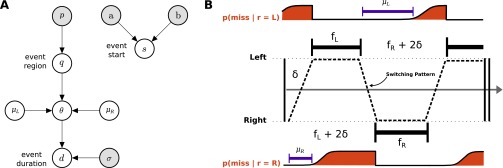

The TED task required participants to complete 30 experimental blocks, each consisting of 20 consecutive trials. Subjects were instructed to detect a single event on each trial and press a button to indicate the detection of the event, which could occur at one of two possible locations with equal probability (Fig. 1A). This event consisted of a little dot at the center of the two locations that disappeared for a certain duration. The visual separation of the two locations was chosen such that subjects could not detect an event extrafoveally (i.e., when fixating the other location). In each block, event durations were constant but unknown to the subjects in the beginning. They were generated probabilistically from three distributions over event durations, termed short (S), medium (M), and long (L). The rationale of this experimental design was that there is no single optimal strategy for all blocks in the experiment, but rather subjects have to adapt their strategies to the event durations in the respective block (Fig. 1B). Thus, over the course of 20 consecutive trials per block, subjects could learn the event durations by observing events.

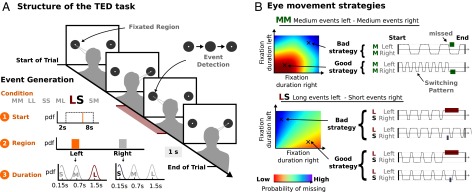

Fig. 1.

Experimental procedure for the TED task and implications for eye movement strategies. (A) Single trial structure and stimulus generation. Participants switched their gaze between two regions marked by gray circles to detect a single event. The event consisted of the little dot within one of the circles disappearing (depicted as occurring on the left side in this trial). Participants confirmed the detection of an event by indicating the region (left or right) through a button press. The event duration (how long the dot was invisible) was drawn from one of three probability distributions (short, medium, and long). Overall, six different conditions resulting from the combination of three event durations and two spatial locations were used in the experiment (SS, MM, LL, SM, SL, and ML). A single block consisted of 20 consecutive trials with event durations drawn from the same distributions. For example, in a block LS all events occurring at the left region were long events with a mean duration of 1.5 s, and all events occurring at the right region were short events with a mean duration of 0.15 s. A single event for the condition LS was generated as follows (lower left). First, the start time for the event was drawn from a uniform distribution between 2 and 8 s. Next, the region where the event occurred was either left or right with equal probability. Finally, the duration of the event was drawn dependent on where the event occurred. Given that in the example the condition was LS and the event was determined to occur at the left region, the event duration was drawn from a Gaussian distribution with mean 1.5 s (long event). (B) Eye movement strategies for different conditions. The relationship between SPs and the probability of missing the event in a single trial is shown for two conditions. For condition MM (Upper) the probability of missing an event is lower for faster switching (short fixation durations at both regions). For condition LS (long events at the left region and short events at the right region) the probability of missing an event can be decreased by fixating the region with short events (i.e., the right region) for longer.

We investigated how participants changed their switching behavior over trials within individual blocks as a consequence of learning the constant event durations within a single block. Fig. 2A shows two example trials for participant MK, both from the same block (block LS: long event duration at the left region and short event duration at the right region). In the first trial, when event durations were unknown (Fig. 2A, Upper), MK switched evenly between the regions to detect the event. However, at the end of this block in trial 20 we observed a clear change in the switching pattern (SP) (Fig. 2A, Lower). There was a shift in the mean fixation duration over the course of the block (Fig. 2A, Right). The region associated with the short event durations was fixated longer in the last trial of the block. Hence, the subject changed the gaze SP over trials in this particular block in response to the event durations at the two locations.

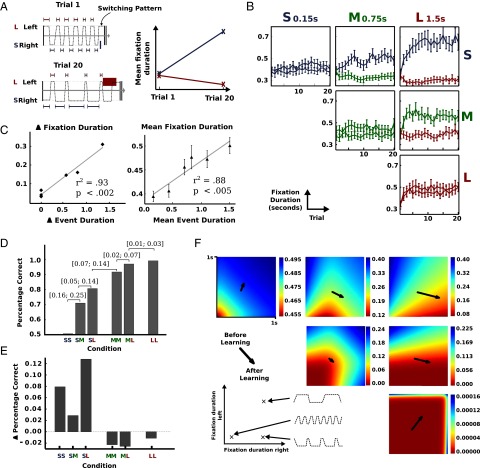

Fig. 2.

Behavioral results. (A) Sample SP for participant MK in a single block (Left) and mean fixation duration for each region (Right). SPs are shown for two trials: before learning the event durations (trial 1) and after learning the event duration (trial 20). (B) Mean fixation duration aggregated for all participants and all blocks grouped by condition (error bars correspond to SEM). Mean fixation duration is shown in seconds (y axis) and is the same scale for all conditions (see also Fig. S1). Trial number within the block is shown on the x axis. Colors of the lines refer to the event duration used in that condition. (C) Mean fixation duration difference as a function of event duration difference (Left). Each data point corresponds to a specific condition. Mean fixation duration as a function of mean event duration (Right). Again, each data point is associated with one of the conditions. (D) Percentage of detected events for all conditions. Values in square brackets correspond to the 95% credibility interval for the differences in proportion. Different conditions are shown on the x axis. (E) Difference in detection performance between the first and last trial in each block. Different conditions are shown on the x axis. (F) Behavioral changes from trial 1 to trial 20 (arrows) for each condition. Color density depicts the probability of missing an event for every possible SP (see also Fig. S2). Each plot corresponds to a single condition. Conditions are arranged as in B.

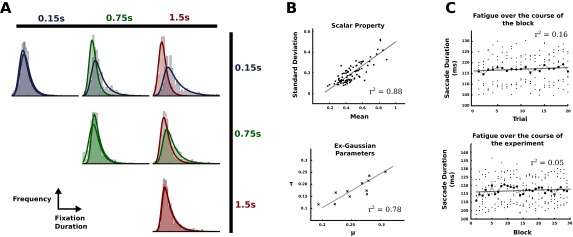

Fig. S1.

Variability of fixation durations. (A) Distributions of fixation durations across conditions. For each condition histograms and fitted exponential Gaussian distributions are shown for both regions. (B) Scalar law of biological timing. (Upper) The relationship between the mean fixation durations in a trial and the respective SD observed in our experiment. The solid line refers to the result of a fitted linear regression. (Lower) The linear relationship of the parameters of the exponential Gaussian distribution, where μ denotes the mean of the Gaussian and τ denotes the rate of the exponential distribution. Each point represents the best parameter estimates for a single distribution shown in A. (C) Quantifying oculomotor fatigue. Saccade durations over the course of trials aggregated over all blocks (Upper). Saccade durations over the course of blocks aggregated over all trials within the respective blocks (Lower).

Mean fixation durations aggregated over all participants are shown in Fig. 2B. Steady-state behavior across subjects was reached within the first 5 to 10 trials within a block, suggesting that participants were able to quickly learn the event durations. Behavioral changes were consistent across conditions. In conditions with different event durations (SM, SL, and ML), regions with shorter event durations were fixated longer in later trials of the block (all differences were highly significant). Participants’ gaze behavior was not only influenced by whether the event durations were different but was also guided by the size of the difference (Fig. 2C, Left). Greater differences in event durations led to greater differences in the fixation durations (linear regression). Moreover, the overall mean fixation duration aggregated over both regions was affected by the overall mean event durations (Fig. 2C, Right). Participants, in general, switched faster between the regions if the mean event duration was small (linear regression). Blocks with long overall event durations (e.g., LL) yielded longer mean fixation durations compared with blocks with short event durations (e.g., SS). Taken together, these results establish that participants quickly adapted their eye movement strategies to the task requirements given by the timing of events.

However, further inspection of the behavioral data suggests nontrivial aspects of the gaze-switching strategies. The conditions in our TED task differed in task difficulty depending on the event durations (Fig. 2D). The shorter the event durations in a specific condition, the more difficult was the task. Improvement over the course of a block differed between conditions (Fig. 2E) and participants only improved in detection accuracy in conditions associated with a high task difficulty. In contrast, participants showed a slight decrease in performance in the other conditions. This can be represented as arrows pointing from the observed switching behavior in the first trial to the behavior in the last trial within blocks in the action space (Fig. 2F). Overall, several aspects remain unclear: the different rates of change in switching frequencies across trials in a block as well as the different directions and end points of behavioral changes observed in the performance space.

Bounded Actor Model.

We hypothesized that multiple processes are involved in generating the observed behavior, based on the behavioral results in the TED task and taking into account known facts about human time constraints in visual processing, time perception, eye movement variability, and intrinsic costs. Starting from an ideal observer (25) we developed a bounded actor model by augmenting it with computational components modeling these processes (Fig. 3A and see Supporting Information for a derivation of the ideal observer and further model components).

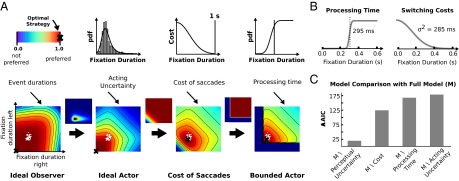

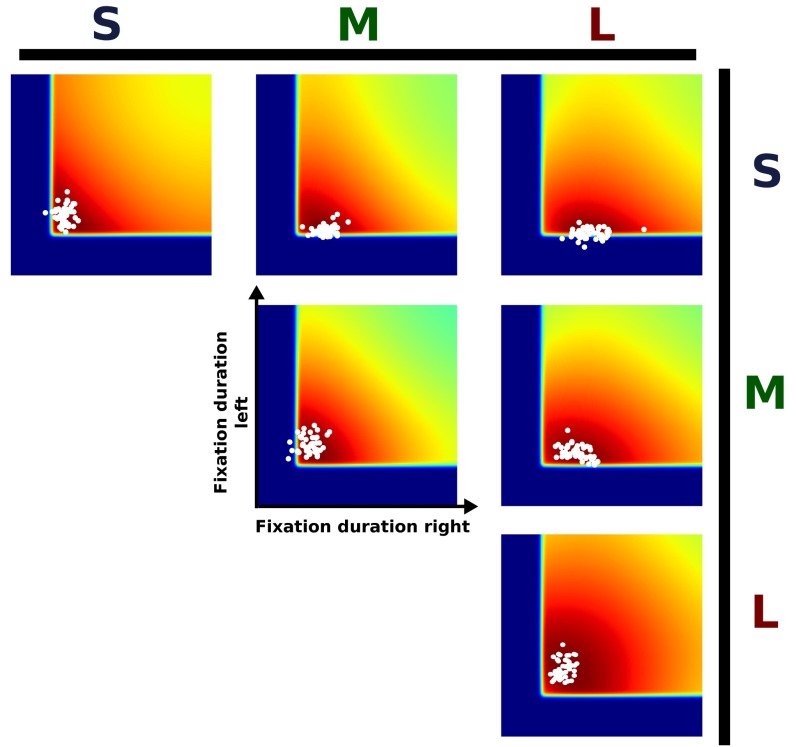

Fig. 3.

Computational model. (A) Schematic visualization describing how the different model components influence the preferred SPs for a single condition (MM). Images show which regions in the action space are preferred by the respective models. Each location in the 2D space corresponds to a single SP (see also Fig. 1B). White dots are the mean fixation durations of our subjects aggregated over individual blocks. The optimal strategy is marked with a black cross for each model. The Ideal Observer only takes the probability of missing an event into account. The Ideal Actor also accounts for variability in the execution of eye movement plans, thus suppressing long fixations (because they increase the risk of missing the event). Therefore, strategies using shorter fixation durations are favored. Next, additive costs for faster switching yield a preference for longer fixation durations. Hence, less value is given to very fast SPs. Finally, the Bounded Actor model excludes very short fixation durations, which are biologically impossible, because they do not allow sufficient time for processing visual information. (B) Model components for processing time and switching costs after parameter estimation. Parameters were estimated through least squares using the last 10 trials of each block. (C) Model comparison. For the absence of each component the model was fitted by least squares. Differences in AIC with respect to the full model are shown.

The ideal observer chooses SPs that maximize performance in the task. The gaze behavior suggested by the ideal observer corresponds to the SP with the lowest probability of missing the event (Fig. 2F). We extend this model by including perceptual uncertainty, which limits the accuracy of estimating event durations from observations. Extensive literature has reported how time intervals are perceived and reproduced (26–28). The central finding is the so-called scalar law of biological timing (26, 29), a linear relationship between the mean and the SD of estimates in the interval timing task. We included perceptual uncertainty in the ideal observer model based on the values found in the literature.
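The ideal observer's objective can be illustrated with a minimal sketch. It is not the derivation given in the Supporting Information, and it rests on simplifying assumptions that are ours: gaze alternates strictly periodically between the two regions, saccades are instantaneous, the event onset falls at a uniformly random phase of the switching cycle, an event is detected as soon as gaze overlaps it at any instant, and every event lasts exactly its mean duration. The function name `miss_probability` is hypothetical.

```python
# Mean event durations in seconds for short, medium, and long events
# (values taken from the task description).
MEAN_DURATION = {"S": 0.15, "M": 0.75, "L": 1.5}


def miss_probability(condition, fix_left, fix_right):
    """Probability of missing the event for a periodic switching pattern.

    condition: two letters, left region first (e.g. "LS").
    fix_left, fix_right: fixation durations at each region in seconds.
    Assumes instantaneous saccades and a uniformly random onset phase.
    """
    d_left = MEAN_DURATION[condition[0]]
    d_right = MEAN_DURATION[condition[1]]
    cycle = fix_left + fix_right

    # An event at the left region is missed only if it starts and ends
    # while gaze rests on the right region, so its onset must fall within
    # the first (fix_right - d_left) seconds of a right fixation.
    p_miss_left = max(0.0, fix_right - d_left) / cycle
    p_miss_right = max(0.0, fix_left - d_right) / cycle

    # Events occur at either region with equal probability.
    return 0.5 * p_miss_left + 0.5 * p_miss_right


# Faster switching helps in condition MM; in condition LS it pays to
# fixate the region with short events (right) for longer.
mm_slow = miss_probability("MM", 1.5, 1.5)
mm_fast = miss_probability("MM", 0.5, 0.5)
ls_even = miss_probability("LS", 0.5, 0.5)
ls_skewed = miss_probability("LS", 0.2, 0.8)
```

Under these assumptions the sketch reproduces the qualitative pattern of Fig. 1B: `mm_fast` is lower than `mm_slow`, and `ls_skewed` is lower than `ls_even`.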

The second extension of the model accounts for eye movement variability, which limits the ability of humans to accurately execute planned eye movements and has been reported frequently in the literature. Although many factors may contribute to this variability in action outcomes, humans have been shown to take this variability into account (30). Further evidence for this comes from experiments that have shown that in a reproduction task the impact of prior knowledge on behavior increased according to the uncertainty in time estimation (31) and studies that extended an ideal observer to an ideal actor by including behavioral timing variability (32). To describe the variability of fixation durations we used an exponential Gaussian distribution (33) because it is a common choice for describing fixation durations (34–37). The parameters were estimated from our data using maximum likelihood.
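As an illustration of the exponential Gaussian distribution, the following sketch draws fixation durations as the sum of a Gaussian and an independent exponential component and compares the sample moments with their theoretical values. The parameter values are hypothetical and are not the maximum-likelihood estimates from our data; the exponential component is parameterized here by its mean (`exp_mean`), which is an assumption of this sketch.

```python
import random
import statistics


def sample_fixation_duration(mu, sigma, exp_mean, rng):
    """Draw one ex-Gaussian sample: Gaussian(mu, sigma) plus an exponential."""
    # random.expovariate takes the rate, i.e. 1 / mean.
    return rng.gauss(mu, sigma) + rng.expovariate(1.0 / exp_mean)


rng = random.Random(1)

# Hypothetical parameter values in seconds (not fitted to any data).
mu, sigma, exp_mean = 0.30, 0.05, 0.20
samples = [sample_fixation_duration(mu, sigma, exp_mean, rng)
           for _ in range(20000)]

sample_mean = statistics.mean(samples)
sample_sd = statistics.pstdev(samples)

# For independent components the means add and the variances add.
theory_mean = mu + exp_mean
theory_sd = (sigma ** 2 + exp_mean ** 2) ** 0.5
```

The exponential component produces the long right tail that is typical of fixation duration histograms.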

Although behavioral costs in biological systems are a fundamental fact, very little is known about such costs for eye movements, because research has so far focused on extrinsic costs (10, 11, 24). We included costs in our model by hypothesizing that switching more frequently is associated with increased effort. In a similar task that did not reward faster switching participants showed fixation durations of about 1 s (38). To our knowledge a concrete shape of these costs has not been investigated yet, and in the present study we used a sigmoid function (Fig. 3B, Right) for which the parameters were estimated using least squares from the measured data.

Finally, the model has so far neglected that all neural and motor processes involved take time. The time required for visual processing is highly dependent on the task at hand. It can be very fast (39); however, in the context of an alternative choice task processing is done within the first 150 ms (40) and does not improve with familiarity of the stimulus (41). In our study, the demands for the decision were much lower because discriminating between the two states of a region (ongoing event or no event) is rather simple. Still, there are some additional constraints that limit the velocity of switching: eye–brain lag and time of planning and initiating a saccade (42). In addition, prior research suggests that in general saccades are triggered after processing the current fixation has reached some degree of completeness (43). We estimated the mean processing time (Fig. 3B, Left) from our behavioral data using least squares.

To investigate which model should be favored in explaining the observed gaze-switching behavior we fitted the model leaving out one component at a time and computed the Akaike information criterion (AIC) for each of these models. The results are shown in Fig. 3C. The conditional probability for each model was calculated by computing the Akaike weights from the AIC values as suggested in ref. 44. We found that gaze switches were more than 10,000 times more probable under the full model compared with the second-best model (Table S1 and Fig. S3). In addition, models lacking a single component deviate severely from the observed behavior (Fig. S4). This strongly suggests that the temporal course of eye movements cannot be explained solely on the basis of an ideal observer. Instead, multiple characteristics of perceptual uncertainty, behavioral variability, intrinsic costs, and biological constraints have to be included.

Table S1.

Model comparison for all fitted models

| Model | p | SSE | AIC | ΔAIC | w(AIC) | w(FM)/w |
|---|---|---|---|---|---|---|
| FM | 6 | 0.15 | −561.56 | 0 | 0.99 | 1.0 |
| FM without perceptual uncertainty | 6 | 0.18 | −543.11 | 18.44 | | |
| FM without cost | 4 | 0.65 | −434.06 | 127.50 | | |
| FM without processing time | 5 | 1.04 | −389.14 | 172.42 | | |
| FM without acting uncertainty | 4 | 1.21 | −377.95 | 183.61 | | |

Differences in AIC (ΔAIC), Akaike weights [w(AIC)], and the probability ratios (w(FM)/w) were computed with respect to the full model (FM). The number of data points for all models was 90. p, number of fitted parameters.
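The Akaike weights and probability ratios can be recomputed from the AIC values in Table S1. The sketch below uses the standard definition of Akaike weights, in which each model's relative likelihood is exp(−ΔAIC/2) normalized over all candidate models; the variable and function names are hypothetical.

```python
import math

# AIC values as listed in Table S1.
aic = {
    "FM": -561.56,
    "FM without perceptual uncertainty": -543.11,
    "FM without cost": -434.06,
    "FM without processing time": -389.14,
    "FM without acting uncertainty": -377.95,
}


def akaike_weights(aic_values):
    """Convert AIC values into normalized Akaike weights."""
    best = min(aic_values.values())
    # Relative likelihood of each model given its AIC difference.
    relative = {m: math.exp(-0.5 * (a - best)) for m, a in aic_values.items()}
    total = sum(relative.values())
    return {m: r / total for m, r in relative.items()}


weights = akaike_weights(aic)

# Probability ratio of the full model relative to each candidate model.
ratios = {m: weights["FM"] / w for m, w in weights.items()}
```

With these inputs the ratio for the second-best model (FM without perceptual uncertainty) exceeds 10,000, in line with the comparison reported in the text.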

Fig. S3.

SPs (color map) predicted by the fitted model and observed human data for steady-state behavior (trials 15–20 of each block).

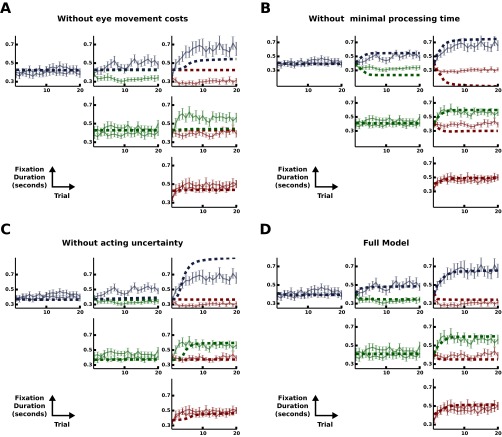

Fig. S4.

Learning of gaze switching over blocks for all conditions according to different models. For each model (except D), a single component was removed from the full model. The remaining parameters were estimated from the data using only the steady-state behavior (trials 10–20). (A) Eye movement costs. (B) Processing time. (C) Acting uncertainty. (D) Full model with all parameters fitted.

Bayesian Learner Model.

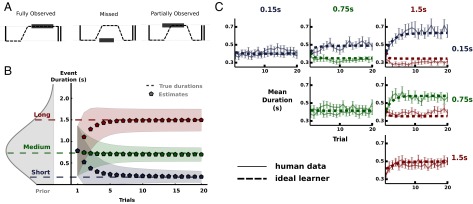

All models so far have assumed full knowledge of the event durations, as is common in ideal observer models. This neglects the fact that event durations were a priori unknown to the participants at the beginning of each block of trials and needed to be inferred from observations. In our TED task information about the event durations cannot be accessed directly but must be inferred from different types of censored and uncensored observations (Fig. 4A). Using a Bayesian learner we simulated the subjects’ mean estimate and uncertainty of the event duration for each trial in a block using Markov chain Monte Carlo techniques (Fig. 4B). We used a single Gaussian distribution fitted to the true event durations for the three conditions to describe subjects’ initial belief before the first observation within a block. Our Bayesian learner enables us to simulate the bounded actor model for every trial of a block using the mean estimates (Fig. 4C). The results show that our bounded actor together with the ideal Bayesian learner is sufficient to recover the main characteristics of the behavioral data. Crucially, the proposed learner does not introduce any further assumptions or parameters. This means that the behavioral changes we observed can be explained solely by changes in the estimates of the event durations. As with the steady-state case, all included factors contributed uniquely to the model. Omitting a single component led to severe deviation from the human data (Fig. S4).
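The logic of the learner can be sketched with a simple grid approximation in place of the Markov chain Monte Carlo techniques we used. This is a simplified illustration, not our implementation, and several ingredients are assumptions of the sketch: the Weber fraction `WEBER` is a hypothetical value, the prior parameters (mean 0.8 s, SD 0.55 s) are chosen to roughly span the three mean event durations, and censored observations are reduced to the single case "the event lasted at least x seconds".

```python
import math

GRID = [0.01 * i for i in range(1, 301)]  # candidate mean durations, 0.01 to 3.00 s
SIGMA_EVENT = 0.1   # SD of the generated event durations (100 ms, from the task)
WEBER = 0.15        # hypothetical Weber fraction for the scalar law


def normal_pdf(x, mean, sd):
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def normal_sf(x, mean, sd):
    """P(X >= x) for a Gaussian, via the complementary error function."""
    return 0.5 * math.erfc((x - mean) / (sd * math.sqrt(2.0)))


def normalize(weights):
    total = sum(weights)
    return [w / total for w in weights]


def observation_sd(mu):
    """Generative SD combined with timing noise that grows linearly with mu."""
    return math.sqrt(SIGMA_EVENT ** 2 + (WEBER * mu) ** 2)


def initial_belief(mean=0.8, sd=0.55):
    """Gaussian prior over the mean event duration, evaluated on the grid."""
    return normalize([normal_pdf(mu, mean, sd) for mu in GRID])


def update(belief, x, censored=False):
    """Bayes update with a fully observed or a censored duration x."""
    if censored:
        likelihood = [normal_sf(x, mu, observation_sd(mu)) for mu in GRID]
    else:
        likelihood = [normal_pdf(x, mu, observation_sd(mu)) for mu in GRID]
    return normalize([b * l for b, l in zip(belief, likelihood)])


def summarize(belief):
    """Posterior mean and SD of the mean event duration."""
    mean = sum(mu * b for mu, b in zip(GRID, belief))
    var = sum((mu - mean) ** 2 * b for mu, b in zip(GRID, belief))
    return mean, math.sqrt(var)


# One censored and two fully observed durations from a long-event region.
belief = initial_belief()
prior_mean, prior_sd = summarize(belief)
for x, censored in [(1.2, True), (1.45, False), (1.6, False)]:
    belief = update(belief, x, censored)
post_mean, post_sd = summarize(belief)
```

After only three observations the posterior mean moves from the prior toward the long event duration and the posterior SD shrinks, which mirrors the rapid learning seen in Fig. 4B.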

Fig. 4.

Bayesian learner model. (A) Different types of observations in the TED task. Dashed lines depict SPs and rectangles denote events. (B) Mean estimates and uncertainty (SD) for the event durations over the course of a block. Prior belief over event durations before the first observation is shown on the left side. (C) Human data and model predictions for the Bayesian learner model. Fitted parameters from the full model were used. Dashed lines show the mean fixation duration of the bounded actor model over the course of the blocks. For each trial the mean estimate suggested by the ideal learner was used.

Discussion

The current study investigated how humans learn to adjust their eye movement strategies to detect relevant events in their environment. The minimal computational model matching key properties of observed gaze behavior includes perceptual uncertainty about duration, action variability in generated gaze switches, a minimal visual processing time, and, finally, intrinsic costs for switching gaze. The bounded actor model explains seemingly suboptimal behavior such as the decrease in performance in easier task conditions as a trade-off between detection probability and faster but more costly eye movement switching. With respect to this bounded actor model, the experimentally measured switching times at the end of the blocks were close to optimal. Whereas ideal observer models (15–17) assume that the behaviorally relevant uncertainties are all known to the observer, here we also modeled the learning progress. The speed at which learning proceeded was remarkable; subjects were close to their final gaze-switching frequencies after only 5 to 10 trials on average, suggesting that the observed gaze behavior was not idiosyncratic to the TED task. Based on the bounded actor model we proposed a Bayesian optimal learner, which only incorporates the inherent perceptual uncertainty about time passage on the basis of the scalar law of biological timing (26, 28, 29). Remarkably, this source of uncertainty was sufficient to fully account for the learning progress. This suggests that the human visual system is highly efficient in learning temporal regularities of events in the environment and that it can use these to direct gaze to locations in which behaviorally relevant events will occur.

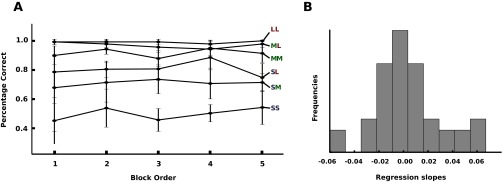

Taken together, this study provides further evidence for the importance of the behavioral goal in perceptual strategies (2, 9, 24), because low-level visual feature saliency cannot account for the gaze switches (45). Instead, cognitive inferences on the basis of successive observations and detections of visual events lead to changes in gaze strategies. At the beginning of a block, participants spent equal observation times between targets to learn about the two event durations and progressively looked longer at the location with the shorter event duration. This implies that internal representations of event durations were likely used to adjust the perceptual strategy of looking for future events. A further important feature of the TED task and the presented model is that, contrary to previous studies (15–17), the informativeness of spatial locations changes moment by moment and not fixation by fixation, which corresponds more to naturalistic settings. Under such circumstances, optimality of behavior is not exclusively evaluated with respect to a single, next gaze shift, but instead is driven by a complex sequence of behavioral decisions based on uncertain and noisy sensory measurements. In this respect, our TED task is much closer to natural vision than experiments with static displays. However, the spatial locations in our task were fixed and the visual stimuli were simple geometric shapes. Naturalistic vision involves stimuli of rich spatiotemporal statistics (46) and semantic content across the entire visual field. How the results observed in this study transfer to subjects learning to attend in more naturalistic scenarios will require further research. The challenge under such circumstances is to quantify relative uncertainties as well as costs and benefits of all contributing factors. There is nothing that in principle precludes applying the framework developed here to such situations because of its generality. 
Clearly, several factors indicate that participants did not depart dramatically from eye movement strategies in more normal viewing environments, and thus that natural behavior transferred well to the TED task. First, subjects did not receive extensive training (Materials and Methods). Second, they showed only minimal changes in learning behavior across blocks over the duration of the entire experiment (Fig. S5). Third, intersaccadic interval statistics were very close to the ones observed in naturalistic settings according to previous literature (34–37). Overall, these findings contribute uniquely to our understanding of human visual behavior and should inform our ideas about neuronal mechanisms underlying attentional shifts (47), conceptualized as sequential decisions. The results of this study may similarly be relevant for the understanding of learning optimal sensory strategies in other modalities and the methodologies can fruitfully be applied there, too.

Fig. S5.

Learning effects over the course of the experiments. (A) Mean performance aggregated over all participants grouped by the position of the block within the experiment. Error bars correspond to the sample SD. Different conditions are shown as separate lines. (B) Histogram of regression slopes. For each participant and each condition a linear regression between the mean performance in the blocks and the position of the respective blocks in the experiment was computed. The distribution of regression slopes indicates no improvement in the task over the course of the experiment.

Materials and Methods

Subjects.

Ten undergraduate subjects (four females) took part in the experiment in exchange for course credit. The participants’ ages ranged from 18 to 30 y. All subjects had normal or corrected-to-normal vision. Data from two subjects were excluded from the analysis due to insufficient quality of the eye movement data. Participants completed two to four shorter training blocks (10 trials per block) before the experiment to get used to the setting and the task (on average 5 min). The number of training blocks, however, had no influence on task performance. All experimental procedures were approved by the ethics committee of the Darmstadt University of Technology. Informed consent was obtained from all participants.

Stimuli and Analysis.

The two regions (distance ) were presented on a Flatron W2242TE monitor (1,680 × 1,050 resolution and 60-Hz refresh rate). Each region was marked with a gray circle ( in diameter). The contrast of the target dot was adjusted to prevent extrafoveal vision. Eye movement data were collected using SMI Eye Tracking Glasses (60-Hz sample rate). Calibration was performed using a three-point procedure. Saccades, fixations, and blinks were detected using dispersion-threshold identification.

Statistics of the TED Task

Events for the TED task were generated according to the graphical model presented in Fig. S2A. An event was defined as a tuple with the generative process

| [S1] |

| [S2] |

| [S3] |

| [S4] |

where the random variable s denotes the event’s starting time within a trial, which was distributed uniformly between and . The region q where the event occurs (: left; : right) was drawn from a binomial distribution and the event’s duration d from a Gaussian distribution. The SD of the event durations σ was fixed to a small value (100 ms). Events were equally probable at both regions (). The mean of the event duration depended on the region where the event occurred. The mean event durations for the right region () and for the left region () were fixed over the course of a block according to the respective condition. For example, in a block belonging to condition LM (long–medium) and were set to 1.5 s and 0.75 s, respectively. Because the goal in each trial was to detect the event we established the relationship between SPs and detection probability.
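The event-generating process described above can be illustrated with a minimal simulation sketch. The bounds of the uniform start-time distribution (`s_min`, `s_max`) are placeholder assumptions, because their values are not given in the text; the function name `sample_event` and the region labels are likewise illustrative. The mean durations correspond to the LM example (1.5 s at the left region, 0.75 s at the right region).

```python
import random

def sample_event(mu_left, mu_right, sigma=0.1, s_min=0.0, s_max=10.0,
                 p_right=0.5, rng=random):
    """Draw one event (s, q, d) from the generative process.

    s_min and s_max are assumed placeholder bounds, not values from the study.
    """
    s = rng.uniform(s_min, s_max)                # start time, uniform within the trial
    q = "R" if rng.random() < p_right else "L"   # region, equally probable by default
    mu = mu_right if q == "R" else mu_left       # mean duration depends on the region
    d = rng.gauss(mu, sigma)                     # duration, Gaussian with small SD
    return s, q, d

# Condition LM: long events (1.5 s) at the left, medium events (0.75 s) at the right.
rng = random.Random(0)
events = [sample_event(1.5, 0.75, rng=rng) for _ in range(20000)]
```

Averaging the sampled durations per region should recover the two means, which is a quick check that the region variable selects the correct duration distribution.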

Fig. S2.

Derivation of the statistics in the TED task. (A) Probabilistic graphical model of the event-generating process. (B) Probability of missing an event. The condition for the shown segment is LM (i.e., long event durations at the left region and medium event durations at the right region). The probability of missing the event (orange area) is shown for an arbitrary SP (dashed line), where the left region is fixated for a duration and the right region for a duration . Events are missed if the event’s region is not fixated for the entire duration of the event. For long event durations the offset of the probability of missing an event (purple lines) is greater than for short event durations. As a consequence, the time between two fixations can be greater if the event duration is long without missing events.

Therefore, we first derived the probability that an event ends at time e at region q based on the generative process. This corresponds to the joint distribution , which can be factorized into . For any event the ending time is the sum of the starting time and the duration. The probability of an event’s ending at a specific time e given the region q follows from the distribution of the sum of s and d, which is the convolution of the respective probability density functions

| [S5] |

The last identity follows from the convolution of a Gaussian and a uniform distribution, where denotes the cumulative distribution of a normally distributed variable centered at θ.
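As a hedged illustration of this identity, the sketch below evaluates the standard closed form for the density of the sum of a uniform and a Gaussian variable, which is a difference of two normal cumulative distribution functions scaled by the width of the uniform interval. The start-time bounds `s_min` and `s_max` are assumed placeholder values, and the function names are illustrative rather than taken from the study.

```python
import math

def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def end_time_density(e, mu, sigma, s_min, s_max):
    """Density of e = s + d with s ~ Uniform(s_min, s_max), d ~ Normal(mu, sigma^2)."""
    upper = normal_cdf((e - s_min - mu) / sigma)
    lower = normal_cdf((e - s_max - mu) / sigma)
    return (upper - lower) / (s_max - s_min)

# Assumed example values: medium events, start times uniform on [0, 10] s.
mu, sigma, s_min, s_max = 0.75, 0.1, 0.0, 10.0

# Sanity checks: the density should integrate to one (Riemann sum over [-1, 12] s)
# and equal 1 / (s_max - s_min) far away from both edges.
step = 0.001
n_steps = 13000
area = sum(end_time_density(-1.0 + i * step, mu, sigma, s_min, s_max)
           for i in range(n_steps + 1)) * step
plateau = end_time_density(5.0, mu, sigma, s_min, s_max)
```

The smooth rise and fall at the edges of the density reflect the Gaussian variability of the duration, whereas the plateau reflects the uniform start time.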

We formalized SPs as a tuple , where and are the fixation durations for the left and right region, respectively, and δ is the time needed to move focus from one position to the other (i.e., the duration of a saccade). Using our results for the distribution of event ending times we derived the probability of missing an event when performing an SP (Fig. S2B). For a single region (e.g., R), the time between two consecutive fixations is . Events both starting and ending within this time range at region R are regarded as missed. The probability of missing an event at region R while fixating L can therefore be computed as

| [S6] |

The probability of missing an event at region L can be computed analogously. The event-generating process implies that events are mutually exclusive at the two regions. Therefore, we can compute the probability of missing an event when performing the SP (, δ, ) by marginalizing over the regions

| [S7] |

The derived probability of missing an event only covers the sequence of two fixations. Thus, the total time covered is . To compute the probability of missing an event over the course of the entire trial and to compare different SPs, we divided by the total time covered by the SP. As a result we get the expected probability of missing an event in a single time step associated with a certain SP:

| [S8] |
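The relationship between an SP and the probability of missing an event can also be approximated by simulation. The following sketch is a Monte Carlo analogue, not the analytic derivation above: it assumes a strictly periodic SP that starts with a fixation of the left region, a trial length `s_max` chosen for illustration, and the convention from the text that an event is missed only if it starts and ends while its region is not fixated. All function and parameter names are hypothetical.

```python
import random

def missed(s, d, region, f_left, f_right, delta):
    """True if an event starting at s with duration d falls entirely between fixations."""
    period = f_left + f_right + 2 * delta
    onset = 0.0 if region == "L" else f_left + delta   # first fixation onset at the region
    length = f_left if region == "L" else f_right
    phase = (s - onset) % period        # time since the latest fixation onset at the region
    if phase < length:                  # event starts while the region is fixated
        return False
    return d < period - phase           # event ends before the next fixation begins

def miss_probability(f_left, f_right, delta, mu_left, mu_right,
                     sigma=0.1, s_max=10.0, n=50000, seed=0):
    """Monte Carlo estimate of the probability of missing an event under a periodic SP."""
    rng = random.Random(seed)
    misses = 0
    for _ in range(n):
        s = rng.uniform(0.0, s_max)
        region = "L" if rng.random() < 0.5 else "R"
        d = rng.gauss(mu_left if region == "L" else mu_right, sigma)
        misses += missed(s, d, region, f_left, f_right, delta)
    return misses / n

# Condition LM: dwelling on the left region leaves long gaps at the right region.
p_unbalanced = miss_probability(3.0, 0.3, 0.05, mu_left=1.5, mu_right=0.75)
p_fast = miss_probability(0.4, 0.4, 0.05, mu_left=1.5, mu_right=0.75)
```

In this example, rapid alternation almost never misses an event because both gaps are shorter than the event durations, whereas long dwelling on the left region misses a substantial fraction of the shorter events at the right region.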

Computational Models of Gaze Switching Behavior

Based on the statistical relationships derived from the event-generating process we now propose different models for determining the optimal SP assuming that the event durations are learned. The time needed to perform a saccade is small compared with the fixation durations and by and large determined by its amplitude as reflected by the main sequence relation. Thus, δ was treated as a fixed value and omitted from the equations. Further, the probability of missing the event when performing a certain SP can be expressed as a task-related cost function .

We started by deriving an ideal observer that chooses SPs solely by minimizing :

| [S9] |

Ideal observer models are frequently used to investigate human behavior involving perceptual inferences. However, a perceptual problem may have multiple ideal observer models depending on which quantities are assumed to be unknown. Ideal observers usually do not consider costs but provide a general solution to an inference problem. Therefore, we extended our ideal observer to obtain more realistic models.

First, by using the true event durations d the ideal observer assumes perfect knowledge about the generative process. In particular, the mean durations for both regions ( and ) are assumed to be known. This is clearly not the case at the beginning of the blocks, and even after learning, perceptual uncertainty and, crucially, the scalar property prevent complete knowledge. Although the former is irrelevant for the case of learned event durations, the latter is not. Building on findings regarding the scalar property we augmented the ideal observer by including signal-dependent Gaussian noise on the true variance of event durations . As a result the distribution becomes , where is the Weber fraction.

Second, the ideal observer only considers task performance for determining optimal SPs. It implicitly assumes that SPs do not differ with respect to other relevant metrics. However, switching at a very high frequency consumes more metabolic energy and can be associated with further internal costs. Therefore, we hypothesized that human behavior is the result of trading off task performance and intrinsic costs:

| [S10] |

where α quantifies eye movement costs in units of . To our knowledge the exact shape of these costs is unknown. We hypothesize that eye movement costs increase with the switching frequency and therefore decrease as the sum of the fixation durations grows. We used the positive range of a bell curve as a cost function:

| [S11] |

where τ controls how quickly costs increase with switching frequency.
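One possible reading of this cost function is sketched below. The exact functional form is an assumption: the sketch uses an unnormalized Gaussian of the summed fixation durations, restricted to nonnegative values, so that costs are highest for rapid switching and decay for slower patterns. The function names, the additive combination with the task cost, and all parameter values are illustrative only.

```python
import math

def eye_movement_cost(f_left, f_right, tau):
    """Assumed half-bell cost: maximal for very short fixations, decaying for long ones."""
    total = f_left + f_right
    return math.exp(-total ** 2 / (2.0 * tau ** 2))

def total_cost(miss_prob, f_left, f_right, alpha, tau):
    """Assumed trade-off: task cost plus eye movement cost weighted by alpha."""
    return miss_prob + alpha * eye_movement_cost(f_left, f_right, tau)

# Illustrative values: rapid switching is costlier than slow switching.
cost_fast = eye_movement_cost(0.2, 0.2, tau=1.0)
cost_slow = eye_movement_cost(1.0, 1.0, tau=1.0)
```

Under this assumed form, a smaller τ confines the cost to very rapid switching, whereas a larger τ penalizes a wider range of SPs.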

So far, all models have assumed the SPs to be deterministic. However, humans clearly are not capable of performing an eye movement pattern without variability. In addition, they have been shown to take motor variability into account when choosing actions, and hence they can be described as ideal actors. The ideal actor model takes into account that there is variation when targeting a certain SP . This property can be considered by no longer treating fixation durations as fixed but as samples drawn from some distribution. We chose an exponential Gaussian, centered at the targeted durations and :

| [S12] |

for X in (L,R), whose expected value is equal to the respective target fixation duration . Given the shape parameters of the distribution were determined using together with . The regression parameters and as well as ω were estimated from the data. The expected value of is then computed by marginalizing out the variability of :

| [S13] |

Finally, SPs are constrained by the abilities of the motor system and the speed of information processing. As a consequence, targeted fixation durations have a lower bound. To account for this constraint, we propose a threshold using a cumulative Gaussian:

| [S14] |

where the mean β was estimated from the data and was fixed to a small value. Combining all components yields the bounded actor:

| [S15] |

Parameter Estimation

Parameters were estimated by least squares using the aggregated data from trials 10–20, because switching behavior was stable across subjects during this period. AIC values for the models were computed according to ref. 48 as

| $\mathrm{AIC} = n \ln\left(\dfrac{\mathrm{SSE}}{n}\right) + 2p + C$ | [S16] |

where n is the number of data points, p the number of free parameters, and SSE is the sum of squared errors. The constant C contains all additive components that are shared between the models. Because we use only differences in AIC between models for model comparison, these constants cancel out and can be ignored. Akaike weights were computed according to ref. 44 as

| $w_i(\mathrm{AIC}) = \dfrac{\exp\left(-\tfrac{1}{2}\Delta_i(\mathrm{AIC})\right)}{\sum_{k} \exp\left(-\tfrac{1}{2}\Delta_k(\mathrm{AIC})\right)}$ | [S17] |

where i refers to the model the weight is computed for and $\Delta_i(\mathrm{AIC})$ is the difference in AIC between the ith model and the model with the minimum AIC. The Akaike weight of a model is a measure for how likely it is that the respective model is the best among the set of models. The probability ratio between two competing models i and j was computed as the quotient of their respective weights, $w_i / w_j$.
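The model comparison procedure can be sketched as follows, using the least-squares form of the AIC (n ln(SSE/n) + 2p, with the shared constant dropped) and the standard definition of Akaike weights. The SSE values and parameter counts in the example are invented for illustration and are not results from this study; the function names are likewise illustrative.

```python
import math

def aic_least_squares(sse, n, p):
    """AIC for a least-squares fit, omitting the constant shared by all models."""
    return n * math.log(sse / n) + 2 * p

def akaike_weights(aic_values):
    """Akaike weights from AIC differences relative to the best (minimum-AIC) model."""
    best = min(aic_values)
    rel = [math.exp(-0.5 * (a - best)) for a in aic_values]
    total = sum(rel)
    return [r / total for r in rel]

# Hypothetical example: three models fitted to n = 90 data points.
n = 90
models = [("model A", 0.50, 1), ("model B", 0.20, 3), ("model C", 0.19, 5)]
aics = [aic_least_squares(sse, n, p) for _, sse, p in models]
weights = akaike_weights(aics)

# Probability ratio between models B and C as the quotient of their weights.
ratio_b_c = weights[1] / weights[2]
```

Subtracting the minimum AIC before exponentiating leaves the weights unchanged but avoids numerical overflow or underflow when the raw AIC values are large in magnitude.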

Lower Bound Model Fit

Our model predicted a single optimal fixation duration for each aggregated line in Fig. S3D (in the range of trials 10–20). The SSE was computed as the variation around this predicted value and then used to obtain the AIC. Differences in AIC were used to compare different models. However, we used the AIC solely for the purpose of model comparison, not as an absolute measure of model fit. The reason for this is that the AIC is not well-suited for an evaluation of absolute model fit because there is no fixed lower bound. If a model were so good that it perfectly predicted the dependent variable, the error-sum-of-squares would approach zero, and the natural logarithm of this value and thereby the AIC would approach negative infinity (Eq. S16). Further, the AIC by itself is highly dependent on the units of measurement, which influence the error-sum-of-squares.

Here we present an absolute lower bound on AIC for the data presented in our experiment. We computed the SSE for a model that estimates the best-fitting parameter for each aggregated line in Fig. S3D. For each line the parameter that minimizes the SSE is the mean. The lower bound for the data we collected is SSE = 0.09 and AIC = −603.15, respectively. We used n = 90 and p = 9 parameters (one for each line separately).

Note that this is an overly optimistic bound for two reasons. First, the estimates for fixation durations underlying the lower bound are independently chosen to best represent each individual optimal strategy. For the full model, however, these predictions are not independent because each estimated parameter simultaneously affects the optimal fixation durations for all conditions. This dependency puts a constraint on the full model that is not considered by this lower bound. Second, the lower bound assumes the sample mean of the data to be equal to the optimal fixation duration and only quantifies the variation around that mean. This neglects the variability across blocks and participants. In contrast, the full model uses all available data to estimate biologically plausible parameters. Using these parameters the optimal fixation duration is predicted by the dynamics of the full model rather than directly estimated from the data. Although this is necessary to provide generalizability and indispensable to prevent overfitting, it naturally introduces a further source for variability. This is reflected in a higher SSE of the full model compared with the lower bound.

Bayesian Learner

All models so far have assumed perfect knowledge of the event durations despite perceptual uncertainty. They are therefore only applicable to the steady-state case because event durations were unknown at the beginning of a block and had to be learned over the course of the block. Learning was formulated as updating the model’s belief about the event durations through observations (Fig. 4A). From a Bayesian perspective this can be seen as using the observation to transform a prior distribution to a posterior distribution:

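| $p(\theta \mid o) = \dfrac{p(o \mid \theta)\, p(\theta)}{\int p(o \mid \theta')\, p(\theta')\, d\theta'}$ | [S18] |

where θ denotes the mean of the event duration and o a sequence of observations. The updated belief, the posterior distribution $p(\theta \mid o)$, is computed as the product of the prior $p(\theta)$ and the likelihood $p(o \mid \theta)$. The denominator normalizes the posterior to integrate to one.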

In the TED task there are three types of observations. First, if an event starts at a region while the respective region is fixated and is perceived for the whole event duration the observation is defined as fully observed. Second, if the region is not fixated at all while the event occurs the observation is defined as missed. Third, if only a fraction of the event is observed (event is already occurring when the region is fixated) the observation is defined as partially observed. Here, we assume that if a region is fixated while an event occurs, participants continue fixating the respective region until the event has finished. Our data show that this is indeed the case. The different types of observations lead to different types of censoring. The resulting likelihood can be computed by

| [S19] |

| [S20] |

where is Gaussian and F is the cumulative distribution function of θ. The starting and ending times for the event are denoted as and , respectively. The time when the region of interest is fixated is denoted by .
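To make the role of censoring concrete, the sketch below implements a grid-based Bayesian update of the belief about the mean event duration θ. It is an illustration under stated assumptions, not the procedure used in the study, which relied on Markov chain Monte Carlo sampling. The assumptions are: fully observed events contribute the Gaussian density, partially observed events contribute the probability that the duration is at least the observed portion, missed events contribute the probability that the duration is shorter than the unfixated gap, and scalar timing noise is added as a variance term proportional to θ² with a hypothetical Weber fraction of 0.1. The Gaussian prior parameters and the observations are invented for illustration.

```python
import math

def normal_pdf(x, mu, sd):
    z = (x - mu) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))

def normal_cdf(x, mu, sd):
    return 0.5 * (1.0 + math.erf((x - mu) / (sd * math.sqrt(2.0))))

def likelihood(obs, theta, sigma=0.1, weber=0.1):
    """Likelihood of one observation given the mean duration theta (assumed forms)."""
    kind, value = obs
    sd = math.sqrt(sigma ** 2 + (weber * theta) ** 2)   # assumed scalar-noise form
    if kind == "full":       # whole event seen: density at the observed duration
        return normal_pdf(value, theta, sd)
    if kind == "partial":    # only the end seen: duration was at least `value`
        return 1.0 - normal_cdf(value, theta, sd)
    if kind == "missed":     # event fit inside an unfixated gap of length `value`
        return normal_cdf(value, theta, sd)
    raise ValueError(f"unknown observation type: {kind}")

def update(prior, grid, obs):
    """One Bayesian update on a discrete grid of candidate mean durations."""
    unnorm = [p * likelihood(obs, th) for p, th in zip(prior, grid)]
    z = sum(unnorm)
    return [u / z for u in unnorm]

# Candidate mean durations from 0.05 s to 3.0 s and a hypothetical Gaussian prior.
grid = [0.05 + 0.01 * i for i in range(296)]
prior = [normal_pdf(th, 1.0, 0.5) for th in grid]
prior = [p / sum(prior) for p in prior]

# Invented observations for a region with medium event durations (0.75 s).
observations = [("full", 0.78), ("partial", 0.50), ("full", 0.72),
                ("missed", 1.20), ("full", 0.76)]
posterior = prior
for obs in observations:
    posterior = update(posterior, grid, obs)   # posterior becomes the next prior

post_mean = sum(p * th for p, th in zip(posterior, grid))
post_sd = math.sqrt(sum(p * (th - post_mean) ** 2 for p, th in zip(posterior, grid)))
```

In this sketch, fully observed events sharpen the belief most strongly, whereas partially observed and missed events only bound the duration from below or above and therefore reduce uncertainty more slowly.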

To extend our models from the steady-state case to all trials the progression of uncertainty over the course of the blocks was simulated using the proposed Bayesian learner (Fig. 4B). We computed the mean estimate and the uncertainty of the event duration for each trial in a block as follows. We repeatedly simulated events and determined the type of the resulting observation using the mean SP for each stage of a block. We then used Markov chain Monte Carlo methods to draw samples from the posterior belief about the true event durations for each trial. For each trial, the prior distribution for the event duration is equivalent to the posterior distribution in the trial before. To model the initial belief before the first observation in the first trial, we used a Gaussian distribution fitted to the means of the three true event durations used across conditions. We controlled the subjects’ prior belief by having them perform a few training trials during which they became familiar with the overall range of event durations.

Acknowledgments

We thank R. Fleming, M. Hayhoe, F. Jäkel, and M. Lengyel for useful comments on the manuscript.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1601305113/-/DCSupplemental.

References

- 1. Land MF, Nilsson D. Animal Eyes. Oxford Univ Press; Oxford: 2002.

- 2. Yarbus A. Eye Movements and Vision. Plenum; New York: 1967.

- 3. Findlay JM, Gilchrist ID. Active Vision: The Psychology of Looking and Seeing. Oxford Univ Press; Oxford: 2003.

- 4. Hayhoe M, Ballard D. Eye movements in natural behavior. Trends Cogn Sci. 2005;9(4):188–194. doi: 10.1016/j.tics.2005.02.009.

- 5. Land M, Tatler B. Looking and Acting: Vision and Eye Movements in Natural Behaviour. Oxford Univ Press; Oxford: 2009.

- 6. Borji A, Sihite DN, Itti L. What stands out in a scene? A study of human explicit saliency judgment. Vision Res. 2013;91(0):62–77. doi: 10.1016/j.visres.2013.07.016.

- 7. Torralba A, Oliva A, Castelhano MS, Henderson JM. Contextual guidance of eye movements and attention in real-world scenes: The role of global features in object search. Psychol Rev. 2006;113(4):766–786. doi: 10.1037/0033-295X.113.4.766.

- 8. Henderson JM. Human gaze control during real-world scene perception. Trends Cogn Sci. 2003;7(11):498–504. doi: 10.1016/j.tics.2003.09.006.

- 9. Rothkopf CA, Ballard DH, Hayhoe MM. Task and context determine where you look. J Vis. 2007;7(14):1–20. doi: 10.1167/7.14.16.

- 10. Navalpakkam V, Koch C, Rangel A, Perona P. Optimal reward harvesting in complex perceptual environments. Proc Natl Acad Sci USA. 2010;107(11):5232–5237. doi: 10.1073/pnas.0911972107.

- 11. Schütz AC, Trommershäuser J, Gegenfurtner KR. Dynamic integration of information about salience and value for saccadic eye movements. Proc Natl Acad Sci USA. 2012;109(19):7547–7552. doi: 10.1073/pnas.1115638109.

- 12. Morvan C, Maloney LT. Human visual search does not maximize the post-saccadic probability of identifying targets. PLOS Comput Biol. 2012;8(2):e1002342. doi: 10.1371/journal.pcbi.1002342.

- 13. Morvan C, Maloney L. Suboptimal selection of initial saccade in a visual search task. J Vis. 2009;9(8):444–444.

- 14. Clarke AD, Hunt AR. Failure of intuition when choosing whether to invest in a single goal or split resources between two goals. Psychol Sci. 2016;27(1):64–74. doi: 10.1177/0956797615611933.

- 15. Najemnik J, Geisler WS. Optimal eye movement strategies in visual search. Nature. 2005;434(7031):387–391. doi: 10.1038/nature03390.

- 16. Chukoskie L, Snider J, Mozer MC, Krauzlis RJ, Sejnowski TJ. Learning where to look for a hidden target. Proc Natl Acad Sci USA. 2013;110(Suppl 2):10438–10445. doi: 10.1073/pnas.1301216110.

- 17. Peterson MF, Eckstein MP. Looking just below the eyes is optimal across face recognition tasks. Proc Natl Acad Sci USA. 2012;109(48):E3314–E3323. doi: 10.1073/pnas.1214269109.

- 18. Yang SC, Lengyel M, Wolpert DM. Active sensing in the categorization of visual patterns. eLife. 2016;5:e12215.

- 19. Ballard DH, Hayhoe MM, Pelz JB. Memory representations in natural tasks. J Cogn Neurosci. 1995;7(1):66–80. doi: 10.1162/jocn.1995.7.1.66.

- 20. Johansson RS, Westling G, Bäckström A, Flanagan JR. Eye-hand coordination in object manipulation. J Neurosci. 2001;21(17):6917–6932. doi: 10.1523/JNEUROSCI.21-17-06917.2001.

- 21. Diaz G, Cooper J, Rothkopf C, Hayhoe M. Saccades to future ball location reveal memory-based prediction in a virtual-reality interception task. J Vis. 2013;13(1):20. doi: 10.1167/13.1.20.

- 22. Flanagan JR, Johansson RS. Action plans used in action observation. Nature. 2003;424(6950):769–771. doi: 10.1038/nature01861.

- 23. Land MF, McLeod P. From eye movements to actions: How batsmen hit the ball. Nat Neurosci. 2000;3(12):1340–1345. doi: 10.1038/81887.

- 24. Hayhoe M, Ballard D. Modeling task control of eye movements. Curr Biol. 2014;24(13):R622–R628. doi: 10.1016/j.cub.2014.05.020.

- 25. Geisler WS. Sequential ideal-observer analysis of visual discriminations. Psychol Rev. 1989;96(2):267–314. doi: 10.1037/0033-295x.96.2.267.

- 26. Merchant H, Harrington DL, Meck WH. Neural basis of the perception and estimation of time. Annu Rev Neurosci. 2013;36:313–336. doi: 10.1146/annurev-neuro-062012-170349.

- 27. Ivry RB, Schlerf JE. Dedicated and intrinsic models of time perception. Trends Cogn Sci. 2008;12(7):273–280. doi: 10.1016/j.tics.2008.04.002.

- 28. Wittmann M. The inner sense of time: How the brain creates a representation of duration. Nat Rev Neurosci. 2013;14(3):217–223. doi: 10.1038/nrn3452.

- 29. Gibbon J. Scalar expectancy theory and Weber’s law in animal timing. Psychol Rev. 1977;84(3):279.

- 30. Hudson TE, Maloney LT, Landy MS. Optimal compensation for temporal uncertainty in movement planning. PLOS Comput Biol. 2008;4(7):e1000130. doi: 10.1371/journal.pcbi.1000130.

- 31. Jazayeri M, Shadlen MN. Temporal context calibrates interval timing. Nat Neurosci. 2010;13(8):1020–1026. doi: 10.1038/nn.2590.

- 32. Sims CR, Jacobs RA, Knill DC. Adaptive allocation of vision under competing task demands. J Neurosci. 2011;31(3):928–943. doi: 10.1523/JNEUROSCI.4240-10.2011.

- 33. Ratcliff R. Group reaction time distributions and an analysis of distribution statistics. Psychol Bull. 1979;86(3):446–461.

- 34. Laubrock J, Cajar A, Engbert R. Control of fixation duration during scene viewing by interaction of foveal and peripheral processing. J Vis. 2013;13(12):11. doi: 10.1167/13.12.11.

- 35. Staub A. The effect of lexical predictability on distributions of eye fixation durations. Psychon Bull Rev. 2011;18(2):371–376. doi: 10.3758/s13423-010-0046-9.

- 36. Luke SG, Nuthmann A, Henderson JM. Eye movement control in scene viewing and reading: Evidence from the stimulus onset delay paradigm. J Exp Psychol Hum Percept Perform. 2013;39(1):10–15. doi: 10.1037/a0030392.

- 37. Palmer EM, Horowitz TS, Torralba A, Wolfe JM. What are the shapes of response time distributions in visual search? J Exp Psychol Hum Percept Perform. 2011;37(1):58–71. doi: 10.1037/a0020747.

- 38. Schütz AC, Lossin F, Kerzel D. Temporal stimulus properties that attract gaze to the periphery and repel gaze from fixation. J Vis. 2013;13(5):6. doi: 10.1167/13.5.6.

- 39. Stanford TR, Shankar S, Massoglia DP, Costello MG, Salinas E. Perceptual decision making in less than 30 milliseconds. Nat Neurosci. 2010;13(3):379–385. doi: 10.1038/nn.2485.

- 40. Thorpe S, Fize D, Marlot C. Speed of processing in the human visual system. Nature. 1996;381(6582):520–522. doi: 10.1038/381520a0.

- 41. Fabre-Thorpe M, Delorme A, Marlot C, Thorpe S. A limit to the speed of processing in ultra-rapid visual categorization of novel natural scenes. J Cogn Neurosci. 2001;13(2):171–180. doi: 10.1162/089892901564234.

- 42. Trukenbrod HA, Engbert R. ICAT: A computational model for the adaptive control of fixation durations. Psychon Bull Rev. 2014;21(4):907–934. doi: 10.3758/s13423-013-0575-0.

- 43. Remington RW, Wu SC, Pashler H. What determines saccade timing in sequences of coordinated eye and hand movements? Psychon Bull Rev. 2011;18(3):538–543. doi: 10.3758/s13423-011-0066-0.

- 44. Wagenmakers EJ, Farrell S. AIC model selection using Akaike weights. Psychon Bull Rev. 2004;11(1):192–196. doi: 10.3758/bf03206482.

- 45. Itti L, Koch C. Computational modelling of visual attention. Nat Rev Neurosci. 2001;2(3):194–203. doi: 10.1038/35058500.

- 46. Simoncelli EP, Olshausen BA. Natural image statistics and neural representation. Annu Rev Neurosci. 2001;24(1):1193–1216. doi: 10.1146/annurev.neuro.24.1.1193.

- 47. Gottlieb J. Attention, learning, and the value of information. Neuron. 2012;76(2):281–295. doi: 10.1016/j.neuron.2012.09.034.

- 48. Burnham KP, Anderson DR. Multimodel inference. Sociol Methods Res. 2004;33(2):261–304.