Abstract

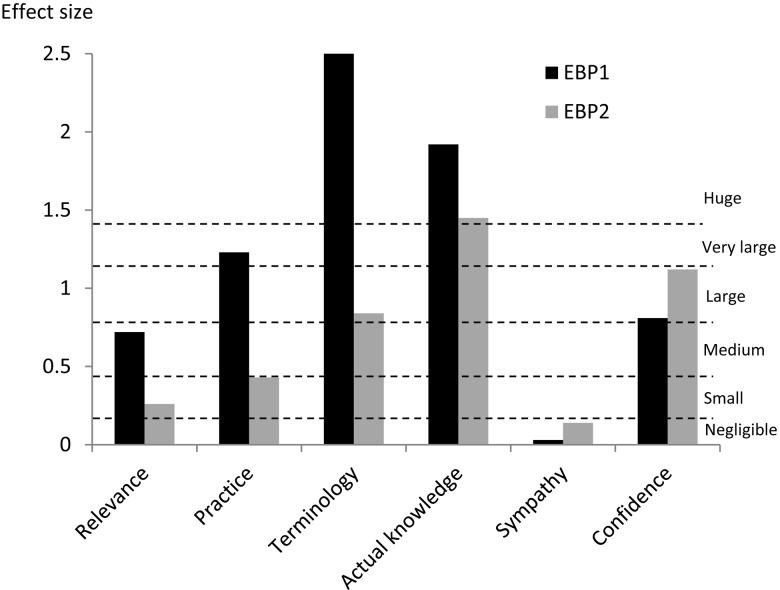

Purpose: To investigate the magnitude of change in outcomes after repeated exposure to evidence-based practice (EBP) training in entry-level health professional students. Method: Using an observational cross-sectional analytic design, the study tracked 78 students in physiotherapy, podiatry, health science, medical radiations, and human movement before and after two sequential EBP courses. The first EBP course was aimed at developing foundational knowledge of and skills in the five steps of EBP; the second was designed to teach students to apply these steps. Two EBP instruments were used to collect objective (actual knowledge) and self-reported (terminology, confidence, practice, relevance, sympathy) data. Participants completed both instruments before and after each course. Results: Effect sizes were larger after the first course than after the second for relevance (0.72 and 0.26, respectively), practice (1.23 and 0.43), terminology (2.73 and 0.84), and actual knowledge (1.92 and 1.45); effect sizes were larger after the second course for sympathy (0.03 and 0.14) and confidence (0.81 and 1.12). Conclusions: Knowledge and relevance changed most meaningfully (i.e., showed the largest effect size) for participants with minimal prior exposure to training. Changes in participants' confidence and attitudes may require a longer time frame and repeated training exposure.

Key Words: education, evidence-based practice, students


Health practitioners need to understand, recognize, and apply evidence-based practice (EBP) to provide optimal health care and therefore must have the skills to seek, appraise, and integrate new knowledge throughout their careers.1 If clinicians are to possess these skills and become proficient at applying EBP in the health care setting, current graduates must be equipped with EBP knowledge and skills (the five-step EBP model) during their entry-level training.1,2

Although many studies have established improved student outcomes after EBP training, few studies have reported the magnitude of these improvements.3 Of particular interest is the effect size, which considers the effectiveness of an intervention rather than whether the change was statistically significant and allows for comparison of findings across studies that use different scales.4 Previous studies investigating the effectiveness of EBP training have reported statistical significance, which is the likelihood that the difference could be an accident of sampling. The main problem with this approach is that the p value depends on both the size of the effect and the size of the sample; it is therefore possible to get a statistically significant result if the sample was very large, even if the actual effect size was very small. Although it is important to know the statistical significance of a result, statistical significance gives no information on the size of the effect. One way to overcome this confusion is to report the effect size and a corresponding estimate of its confidence interval.4
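The dependence of the p value on sample size can be illustrated with a short sketch. It assumes a paired (one-sample) design and a normal approximation to the test statistic; neither assumption is taken from the studies cited, and the function name and example sample sizes are hypothetical.

```python
import math


def two_sided_p(effect_size: float, n: int) -> float:
    """Approximate two-sided p value for a standardized mean change.

    Assumes a one-sample (paired) design and a normal approximation,
    so the test statistic is z = d * sqrt(n).
    """
    z = abs(effect_size) * math.sqrt(n)
    # P(|Z| > z) for a standard normal variable
    return math.erfc(z / math.sqrt(2))


# The same small effect (d = 0.10) at two hypothetical sample sizes
for n in (20, 2000):
    print(f"n={n:5d}  d=0.10  p={two_sided_p(0.10, n):.6f}")
```

Under these assumptions the same small effect is far from significant with 20 participants and highly significant with 2,000, which is why the effect size needs to be reported alongside the p value.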

A recent systematic review3 of studies investigating the impact of EBP training in entry-level (undergraduate) students found that only two studies reported effect sizes,5,6 and a further six studies reported sufficient data (mean, sample size, and standard deviation or standard error) to allow the effect size to be calculated from their results.7–12 The systematic review found that across the EBP outcomes (knowledge, attitudes, behaviours, skills, and confidence), there was little commonality in terms of effect sizes, which could be explained by differences in the educational content and contexts, modes of delivery, settings, participants, disciplines, instructors, and assessment tools used in the included studies.3,13

Undergraduate health professional training commonly begins with foundation courses (e.g., anatomy and physiology) in students' formative years, which provide a sound theoretical framework to build on in more advanced and clinically based courses in their final years. It is reasonable to assume that this iterative building of knowledge and skill also applies to entry-level EBP training, in which students' initial training focuses on learning foundational knowledge and skills and subsequent courses present more complex theory, application, and clinical integration. We were able to identify only one previous study that investigated EBP outcomes across two successive training courses, and because of its small sample size, effect sizes were calculated over each participant's widest exposure to training rather than before and after each course.6 Understanding the pattern of change in outcomes over multiple EBP training courses could be integral to coordinating effective educational curricula. The aim of our study, therefore, was to explore the pattern of change in self-reported and actual EBP outcomes after one or two EBP courses among entry-level students and to consider the size of the change.

Methods

Study design

Our study used an observational, cross-sectional (analytic) design, tracking the same cohort of health professional students before and after exposure to two EBP training courses. Ethical approval for the study was granted by the University of South Australia Human Research Ethics Committee (protocol number 0000021077). All participants provided written informed consent, and the study conforms to the Human and Animal Rights requirements of the February 2006 International Committee of Medical Journal Editors' Uniform Requirements for Manuscripts Submitted to Biomedical Journals.

Participants

A total of 280 entry-level health professional students from the physiotherapy, podiatry, health science, medical radiations, and human movement programmes at the University of South Australia were invited to participate.

Sampling and sample size

Because our goal was to evaluate the effects of exposure to two successive EBP courses, Evidence-Based Practice 1 (EBP1) and Evidence-Based Practice 2 (EBP2; see “EBP Interventions” section), our potential sample size was limited to those students enrolled in these courses at the time of the study (EBP1 in 2010, EBP2 in 2011). The maximum possible number of participants, therefore, was 280: 125 students in the physiotherapy programme, 32 in podiatry, 23 in health science, 81 in medical radiations, and 19 in human movement. To detect a medium effect size with 80% power with an α level of 0.05 (adjusted for multiple dependent variables), we would need a minimum sample size of 33 participants over four test occasions.

Evidence-based practice interventions

The interventions in this study were two EBP theory courses delivered to entry-level health professional students at the University of South Australia. For details on the EBP courses, see Box 1. The first course, EBP1, was a prerequisite for enrolment in the second course, EBP2. Both were 4.5-unit courses in degree programmes in which 1 unit is equivalent to 40 hours of student effort.

Box 1.

Summary of the Content, Delivery and Assessment of the Evidence-Based Practice (EBP) Interventions

| Course | Duration or timeframe | Mode of delivery and contact hours | Content (5 EBP steps) | Assessment |
|---|---|---|---|---|
| EBP1 | 13 weeks; July 2010–November 2010 | 2×1 h didactic lectures | Step 1: Ask | Written quiz (15%) |
| | | 12×1 h tutorials | Step 2: Access | Open book written test (35%) |
| | | 36 h of online learning modules | Step 5: Assess | Written exam (50%) |
| EBP2 | 13 weeks; March 2011–June 2011 | 13×2 h didactic lectures and discussions | Step 1: Ask | Multiple-choice and short-answer test (15%) |
| | | 11×1 h tutorials | Step 2: Access | 2 tutorial presentations (2×5%) |
| | | 1 h computer workshop | Step 3: Appraise | 2 A4 summaries of presentation material (2×12.5%) |
| | | | Step 4: Apply | Written exam (50%) |
| | | | Step 5: Assess | |

EBP1 was compulsory for all 1st-year physiotherapy, podiatry, health science, and medical radiations students and was offered as an elective to all 1st- and 2nd-year human movement students. This course aimed to develop foundation knowledge and skills in EBP, with emphasis on three of the five EBP steps outlined in the Sicily Statement.1 Learning objectives included being able to describe and critique EBP philosophy; to discuss the key principles of quantitative and qualitative research approaches and concepts of research evidence hierarchies; to frame a research question; to access and search library databases and other resources; and to reflect on the processes associated with this approach.

EBP2 was mandatory for all 2nd-year students in health-related entry-level programmes (physiotherapy, podiatry, and health science) and 3rd-year medical radiations students and was offered as an elective for 3rd-year human movement students. This course aimed to reinforce content from EBP1 with additional training in appraising methodological bias, as well as teaching students how to apply each of the five EBP steps. Learning outcomes included the ability to recognize different types of research questions; develop an evidence-based question to guide a systematic search; construct search strategies; recognize different study methodologies; identify potential threats to internal validity; and perform, interpret, and summarize the processes used for critical appraisal of the literature.

Data collection

Four test occasions were scheduled: pre-EBP1 (Time [T] 1) and post-EBP1 (T2) in 2010, and pre-EBP2 (T3) and post-EBP2 (T4) in 2011. Students were invited to complete two assessment tools during the first and last face-to-face classes for each course and were asked to write their university student identification numbers on the questionnaires to allow for longitudinal tracking of responses. Student identification numbers were removed and replaced with a code number for data entry, analysis, and reporting purposes.

Assessment tools

Two pre-existing instruments developed for the health professional disciplines were used: the K-REC instrument14 and the EBP2 questionnaire15 (Table 1). The K-REC instrument aims to assess participants' actual knowledge of EBP by asking respondents a series of questions relating to a clinical scenario; responses are scored using a set marking template. The K-REC is based on the Fresno test for Evidence-Based Medicine16 and was developed specifically for entry-level health professional students.14 The EBP2 questionnaire consists of 58 Likert-type items that measure five self-reported EBP outcomes (relevance, sympathy, terminology, practice, and confidence). The questionnaire also includes 13 demographic items relating to age, gender, working position and discipline, work settings, and highest qualifications. These two EBP instruments cover all five of the EBP steps (Table 1) delivered as content in EBP1 and EBP2 (Box 1) and were therefore considered suitable to detect changes after these courses.

Table 1.

Summary of the Instruments Used in This Study

| Instrument | Items | EBP outcomes | Scoring | Psychometric properties |
|---|---|---|---|---|
| K-REC14 | Clinical scenario and 9 items relating to the scenario (short answer, multiple choice, and true–false questions) | Actual EBP knowledge (PICO questions, search strategies, research designs, critical appraisal, evidence hierarchies, and statistics) | Set scoring template with highest possible mark of 12 | Test–retest reliability: moderate to excellent (Cohen's κ 0.62–1.0 for items, ICC 0.88 for total score; n=24 physiotherapy students).14 Inter-rater reliability: very good to excellent (Cohen's κ 0.83–1.0 for items, ICC 0.97 for total score; n=24 physiotherapy students).14 Discriminative validity: significant difference (p<0.0001) in total scores between novice and experts (n=24 physiotherapy students exposed, n=76 human movement students non-exposed).14 Responsive validity: able to detect impact of EBP training (p<0.001, ES 1.13; n=77 physiotherapy students).6 |
| EBP2 questionnaire15 | 58 5-point Likert-scale items in 5 self-reported outcomes (domains) and 13 demographic items | Relevance (values, emphasis, and importance of EBP); Sympathy (compatibility of EBP with professional work); Terminology (understanding of common research terms); Practice (use of EBP); Confidence (perception of ability) | Minimum–maximum item score of 1–5. Outcome scores (aggregate item scores) calculated for each outcome. | Test–retest reliability: moderate to good (ICC 0.53–0.86 for items; ICC 0.77–0.94 for outcomes; n=106 allied health students and professionals*).15 Internal consistency: very good (Cronbach's α 0.96; n=106 allied health students and professionals*).15 Convergent validity: good for the 3 outcomes (Pearson's rs: confidence, 0.80; practice, 0.66; sympathy, 0.54; n=106 allied health students and professionals*).15 Discriminative validity: significant difference (p≤0.004) for relevance, terminology, and confidence for levels of EBP training (n=106 allied health students and professionals*).15 Response validity: able to detect impact of EBP training (relevance, p<0.001, ES 0.49; sympathy, p=0.005, ES 0.30; terminology, p<0.001, ES 1.07; practice, p<0.001, ES 1.34; confidence, p<0.001, ES 0.89; n=77 physiotherapy students).6 |

*Physiotherapy, podiatry, occupational therapy, medical radiation, human movement, nursing and commerce students, and professionals.

EBP=evidence-based practice; PICO=population intervention comparison outcomes; ICC=intra-class correlation coefficient; ES=effect size.

Data analysis

Data were analyzed using Predictive Analytics Software (PASW) Statistics 17.0 (SPSS Inc., Chicago, IL). Missing or illegible answers were recorded as missing data. Although the K-REC instrument has excellent interrater reliability and can feasibly be scored by more than one rater (Table 1),14 only one rater completed the scoring in the current study, using the K-REC marking guidelines. Because there is no precedent in the literature, we set an arbitrary minimum of 70% of items completed for inclusion in the data analysis. Items were given a score of zero if the participant gave no response or more than one response. In the EBP2 questionnaire, missing values were imputed if at least 75% of the non-demographic items had been completed.6,15,17 The imputation process involved assigning missing values a value closest to the most similar completely answered EBP2 questionnaire. If more than 25% of the non-demographic items were missing, the questionnaire was excluded from the analysis.
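The imputation rule for the EBP2 questionnaire can be sketched as a nearest-neighbour ("hot-deck") fill. This is a minimal illustration, not the authors' procedure: the similarity measure (sum of absolute differences over answered items), the function name, and the toy responses are all assumptions, because the text does not specify how the most similar questionnaire was identified.

```python
from typing import List, Optional, Sequence


def impute_nearest(partial: Sequence[Optional[int]],
                   complete: Sequence[Sequence[int]],
                   min_completed: float = 0.75) -> Optional[List[int]]:
    """Fill missing items (None) from the most similar complete questionnaire.

    Returns None when fewer than `min_completed` of the items were answered,
    meaning the questionnaire would be excluded from the analysis.
    """
    answered = [i for i, value in enumerate(partial) if value is not None]
    if len(answered) < min_completed * len(partial):
        return None

    # Assumed similarity metric: total absolute difference on answered items.
    def distance(donor: Sequence[int]) -> int:
        return sum(abs(donor[i] - partial[i]) for i in answered)

    donor = min(complete, key=distance)
    return [donor[i] if value is None else value
            for i, value in enumerate(partial)]


# Hypothetical 4-item example on a 1-5 Likert scale
complete_sets = [[5, 4, 4, 5], [1, 2, 2, 1]]
print(impute_nearest([5, 4, None, 5], complete_sets))     # 75% answered: imputed
print(impute_nearest([5, None, None, 5], complete_sets))  # 50% answered: excluded
```

A donor-based fill of this kind keeps imputed values on the original response scale, which a mean substitution would not guarantee.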

Only data from participants who completed both instruments on all four test occasions were included in the analysis. We calculated descriptive statistics for the six primary outcomes: actual knowledge from the K-REC total score and self-reported relevance, sympathy, terminology, practice, and confidence scores from the EBP2 questionnaire. To analyze data from all participants in the study across the four test occasions, we used random effects mixed modelling, with significance set at p<0.05. To limit the risk of a type I error due to multiple comparisons, a sequential Bonferroni adjustment was undertaken. To examine differences between test occasions (T1 to T2 for EBP1 and T3 to T4 for EBP2) for the physiotherapy students only, we performed paired t-tests (two-tailed). We then calculated effect sizes with 95% CIs for each of the six outcomes to assess the magnitude of change in outcomes pre–post EBP1 (T1 to T2) and pre–post EBP2 (T3 to T4). Effect sizes were classified as negligible (≥−0.15 to <0.15), small (≥0.15 to <0.40), medium (≥0.40 to <0.75), large (≥0.75 to <1.10), very large (≥1.10 to <1.45), or huge (≥1.45).18
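The classification bands can be expressed as a small lookup, which also makes it easy to count how many bands an outcome moves between the first and second course. The sketch below uses the thresholds quoted above; the function names are hypothetical, and the example values are the effect sizes reported for all participants in the Results.

```python
# Lower bound and label of each band, largest first (thresholds as quoted
# in the text from reference 18).
BANDS = [
    (1.45, "huge"),
    (1.10, "very large"),
    (0.75, "large"),
    (0.40, "medium"),
    (0.15, "small"),
    (-0.15, "negligible"),
]
LABELS_ASCENDING = [label for _, label in reversed(BANDS)]


def classify(es: float) -> str:
    """Return the descriptor of the band that contains `es`."""
    for lower, label in BANDS:
        if es >= lower:
            return label
    raise ValueError("effect size below -0.15 is outside the quoted bands")


def category_shift(es_first: float, es_second: float) -> int:
    """Bands moved from first to second exposure (negative means a drop)."""
    return (LABELS_ASCENDING.index(classify(es_second))
            - LABELS_ASCENDING.index(classify(es_first)))


# Effect sizes (EBP1, EBP2) for all participants, as reported in the Results
reported = {
    "actual knowledge": (1.92, 1.45),
    "confidence": (0.81, 1.12),
    "practice": (1.23, 0.43),
    "relevance": (0.72, 0.26),
    "sympathy": (0.03, 0.14),
    "terminology": (2.73, 0.84),
}
for outcome, (first, second) in reported.items():
    print(f"{outcome:17s} {classify(first):>10s} -> {classify(second):<10s} "
          f"shift={category_shift(first, second):+d}")
```

With the reported values, practice and terminology each move down two bands, relevance moves down one, confidence moves up one, and actual knowledge stays in the top band.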

Results

Data were collected before and after EBP1 in 2010 and before and after EBP2 in 2011. There were 430 students enrolled in EBP1 and 280 in EBP2; therefore, the maximum number of potential participants was 280 (total number of students enrolled in both courses at the time of the study). The number of participants who returned questionnaires on each of the four test occasions and the questionnaire response rate are shown in Table 2. Students who did not return questionnaires chose not to participate on that test occasion.

Table 2.

Number of Participants and Response Rate at Each Test Occasion

| Test occasion | No. of students enrolled | No. of students who returned questionnaires | Response rate, % |
|---|---|---|---|
| T1 | 430 | 310 | 72.1 |
| T2 | 430 | 229 | 53.3 |
| T3 | 280 | 249 | 88.9 |
| T4 | 280 | 173 | 61.8 |
| Total | 1420 | 961 | 67.7 |

Of the 280 potential participants, 94 (34%) completed questionnaires on all four test occasions; of these, 78 (83%) provided complete data and were included in the final analysis: 46 students in physiotherapy, 22 in medical radiations, 5 in human movement, 4 in health science, and 1 in podiatry. The majority of participants were female (n=60, 77%), and participants ranged from 18 to 36 years old, with a mean age of 19.6 (SD 3.0) years.
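The response rates in Table 2 and the retention percentages above follow directly from the counts. The short check below recomputes them; the variable and function names are hypothetical, and the counts are those reported in the text and Table 2.

```python
def percent(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, to one decimal place."""
    return round(100 * part / whole, 1)


# (returned, enrolled) at each test occasion, from Table 2
occasions = {"T1": (310, 430), "T2": (229, 430), "T3": (249, 280), "T4": (173, 280)}
for label, (returned, enrolled) in occasions.items():
    print(label, percent(returned, enrolled))

total_returned = sum(r for r, _ in occasions.values())
total_enrolled = sum(e for _, e in occasions.values())
print("Total", percent(total_returned, total_enrolled))

# Retention across all four occasions, from the paragraph above
print("Completed all four occasions:", percent(94, 280))
print("Complete data among those:", percent(78, 94))
```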

Table 3 shows the pre–post change in each of the primary outcomes for both courses across the health professions (n=78). Effect sizes were greater after EBP1 than after EBP2 for four outcomes: relevance, practice, terminology, and actual knowledge; for the other two outcomes, sympathy and confidence, the effect size was greater after EBP2.

Table 3.

Magnitude of Change in Outcomes for the Two EBP Training Courses for All Health Professional Students (n=78)

| EBP outcomes (max. score) | EBP1 (T1–T2): mean (SD) difference | EBP1: ES | EBP1: 95% CI (ES) | EBP1: p-value* | EBP2 (T3–T4): mean (SD) difference | EBP2: ES | EBP2: 95% CI (ES) | EBP2: p-value* | Mean difference, (T1–T2) to (T3–T4)‡ | Difference in ES, (T1–T2) to (T3–T4)‡ |
|---|---|---|---|---|---|---|---|---|---|---|
| Actual knowledge (12) | 2.6 (1.9) | 1.92 | 1.54 to 2.30 | <0.001† | 2.0 (1.9) | 1.45 | 1.10 to 1.81 | <0.001† | −0.6 | −0.47 |
| Confidence (55) | 3.9 (6.8) | 0.81 | 0.49 to 1.14 | <0.001† | 5.4 (6.8) | 1.12 | 0.78 to 1.46 | <0.001† | 1.5 | 0.31 |
| Practice (45) | 5.8 (6.7) | 1.23 | 0.89 to 1.57 | <0.001† | 2.1 (6.7) | 0.43 | 0.11 to 0.75 | 0.015† | −3.7 | −0.80 |
| Relevance (70) | 3.9 (7.7) | 0.72 | 0.39 to 1.04 | <0.001† | 1.4 (7.7) | 0.26 | −0.06 to 0.57 | 0.22 | −2.5 | −0.46 |
| Sympathy (35) | 0.1 (3.9) | 0.03 | −0.29 to 0.34 | 0.86 | 0.4 (3.9) | 0.14 | −0.17 to 0.46 | 0.75 | 0.3 | 0.11 |
| Terminology (85) | 21.1 (10.9) | 2.73 | 2.29 to 3.16 | <0.001† | 6.5 (10.9) | 0.84 | 0.51 to 1.17 | <0.001† | −14.6 | −1.89 |

*Random effects mixed modelling with Bonferroni adjustment.

†Significant at p<0.05.

‡Negative value indicates a greater change in the first EBP course.

EBP=evidence-based practice; ES=effect size.

Changes in the primary outcomes for the physiotherapy participants only (n=46) are shown in Table 4. Effect sizes were greater after EBP1 than after EBP2 for all outcomes except confidence, for which the change was greater after EBP2. Although the change in sympathy was not greater after EBP2, the decrease was minimal (from ES 0.05 to ES 0.00).

Table 4.

Magnitude of Change in Outcomes for the Two EBP Training Courses for the Physiotherapy Students (n=46)

| EBP outcomes (max. score) | EBP1 (T1–T2): mean (SD) difference | EBP1: ES | EBP1: 95% CI (ES) | EBP1: p-value* | EBP2 (T3–T4): mean (SD) difference | EBP2: ES | EBP2: 95% CI (ES) | EBP2: p-value* | Mean difference, (T1–T2) to (T3–T4)‡ | Difference in ES, (T1–T2) to (T3–T4)‡ |
|---|---|---|---|---|---|---|---|---|---|---|
| Actual knowledge (12) | 3.11 (1.52) | 2.05 | 1.53 to 2.54 | <0.001† | 1.89 (1.58) | 1.20 | 0.75 to 1.63 | <0.001† | −1.22 | −0.85 |
| Confidence (55) | 4.37 (7.11) | 0.61 | 0.19 to 1.03 | 0.004† | 4.79 (6.08) | 0.79 | 0.36 to 1.21 | <0.001† | 0.42 | 0.18 |
| Practice (45) | 8.08 (4.58) | 1.76 | 1.27 to 2.23 | <0.001† | 1.76 (5.62) | 0.31 | −0.10 to 0.72 | 0.137 | −6.32 | −1.45 |
| Relevance (70) | 7.17 (6.13) | 1.17 | 0.72 to 1.60 | <0.001† | 1.20 (7.14) | 0.17 | −0.24 to 0.58 | 0.422 | −5.97 | −1.00 |
| Sympathy (35) | 0.15 (3.25) | 0.05 | −0.36 to 0.45 | 0.83 | 0.00 (3.81) | 0.00 | −0.41 to 0.41 | 1.000 | −0.15 | −0.05 |
| Terminology (85) | 21.39 (10.26) | 2.08 | 1.56 to 2.57 | <0.001† | 6.17 (8.17) | 0.76 | 0.33 to 1.17 | <0.001† | −15.22 | −1.32 |

*p-value for the mean difference (2-tailed t-test).

†Significant at p<0.05.

‡Negative values indicate a greater change in the first EBP course.

EBP=evidence-based practice; ES=effect size.
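The effect sizes in Table 4 are numerically consistent with dividing each mean difference by its standard deviation, and the "Difference in ES" column with subtracting the EBP1 value from the EBP2 value. The sketch below recomputes both from the tabulated values. The formula is an assumption inferred from the table, not a method stated by the authors, and because the published inputs are rounded, the recomputed values can differ from the table in the second decimal place.

```python
# (outcome, EBP1 mean diff, EBP1 SD, EBP2 mean diff, EBP2 SD), from Table 4
TABLE_4 = [
    ("Actual knowledge", 3.11, 1.52, 1.89, 1.58),
    ("Confidence", 4.37, 7.11, 4.79, 6.08),
    ("Practice", 8.08, 4.58, 1.76, 5.62),
    ("Relevance", 7.17, 6.13, 1.20, 7.14),
    ("Sympathy", 0.15, 3.25, 0.00, 3.81),
    ("Terminology", 21.39, 10.26, 6.17, 8.17),
]


def standardized_change(mean_diff: float, sd: float) -> float:
    """Assumed effect size: mean pre-post difference divided by its SD."""
    return mean_diff / sd


for name, m1, s1, m2, s2 in TABLE_4:
    es1 = standardized_change(m1, s1)
    es2 = standardized_change(m2, s2)
    print(f"{name:17s} ES(EBP1)={es1:5.2f}  ES(EBP2)={es2:5.2f}  "
          f"difference={es2 - es1:+.2f}")
```

The same ratio does not reproduce the effect sizes in Table 3, which were obtained from the mixed model, so the sketch applies to the physiotherapy subgroup only.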

Discussion

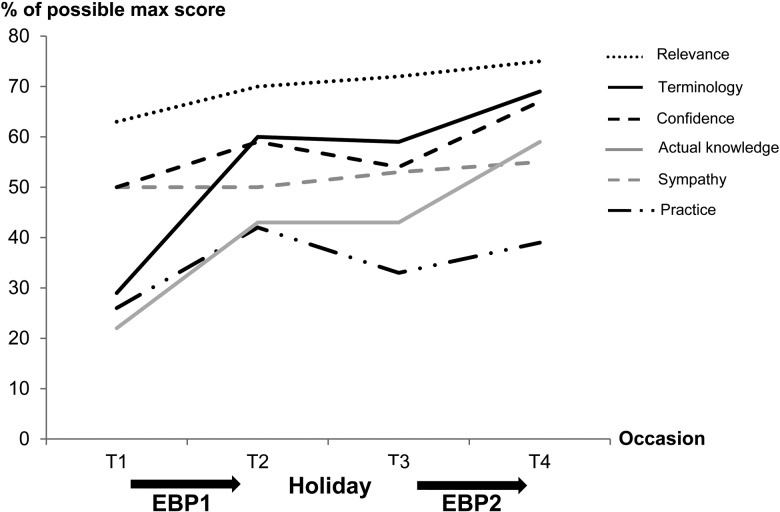

Our study sought to explore the magnitude of change in EBP learning outcomes among students after repeated exposure to EBP training. When the effect sizes are classified according to Thalheimer and Cook's18 definitions, for the majority of outcomes the difference between first and second exposure was at least one category—for example, a shift from medium to small effect size (see Figure 1). The greatest change was in the outcomes of self-reported practice and terminology, which both dropped two categories from first to second exposure. In contrast, although the effect size decreased for actual knowledge (from 1.92 to 1.45), the category stayed the same (huge). It is important to note that although effect sizes diminished for the majority of EBP outcomes over the study period (see Figure 1), percentages of possible maximum scores followed an upward trajectory (see Figure 2), meaning students continued to show improvements in their raw scores across most EBP outcomes, albeit at a slower rate.

Figure 1.

Classification of evidence-based practice (EBP) outcomes by effect size descriptor18 (n=78).

Figure 2.

Percentage of possible maximum score for all outcomes over four test occasions (n=78). EBP=evidence-based practice.

Our findings suggest that a greater change in EBP outcomes such as knowledge (actual knowledge and terminology) may occur at the beginning of a cumulative exposure. It is possible that participants' minimal previous exposure to EBP principles resulted in large improvements in outcomes in the first course, which then appear to have carried over, leading to smaller improvements from subsequent training. Several previous studies have reported that participants with low baseline levels of knowledge and skills make greater gains from EBP training.6,8 Aronoff and colleagues8 investigated the impact of an online course on EBP knowledge and skills among 3rd-year medical students and reported that more than one-third of participants did not improve after the intervention. Interestingly, the pre-intervention scores of these students were significantly higher than those of the students who did improve, which suggests that fewer gains were made from the EBP training when students already had a higher level of EBP proficiency. This finding is consistent with a previous systematic review that found small changes in EBP outcomes among medical residents (previously exposed) and large changes among undergraduate medical students (relatively non-exposed) after EBP training.19

Conversely, we saw greater improvement in students' sympathy toward and confidence in using EBP after longer cumulative exposure (see Figure 1). There are two possible reasons for this: First, the content of EBP2, while reinforcing the theory taught in EBP1, was directed toward applying EBP to clinically relevant research questions, which students may perceive to be more meaningful; second, sympathy and confidence may take longer to develop than the improvements seen in some of the other EBP outcomes (e.g., terminology).

In this study, the improvements after training were greater than those reported in a recent systematic review for the outcomes of knowledge (actual knowledge and terminology) and relevance, and equal or slightly lower for the outcomes of sympathy, practice, and confidence.3 Only three previous studies identified in this review reported prior exposure to EBP training or principles: two reported minimal prior exposure,6,12 and the third reported prior exposure to a research methods course.11 The limited detail reported in these studies makes it difficult to determine the association between prior exposure and effect of training. There is a clear need to develop reporting guidelines to help researchers provide consistent details of educational interventions, thus allowing for meaningful comparison of studies.13

One could make an argument for implementing only stand-alone foundation courses in EBP (similar to the EBP1 course), given the diminishing effect sizes seen for some outcomes after the subsequent course. However, although the effect sizes in our study were smaller after the second exposure for actual knowledge, terminology, practice, and relevance, the raw scores improved overall; that is, students' scores after completing EBP2 (T4) were higher than their scores after completing EBP1 (T2) for all study outcomes except practice, which decreased slightly (see Figure 2). For example, despite the decrease in effect size for actual knowledge in the second exposure relative to the first, participants continued to make consistent gains, as evidenced by the higher K-REC scores (pre-EBP1 mean=2.6 [SD 1.6]; post-EBP1 mean=5.2 [SD 1.6]; pre-EBP2 mean=5.1 [SD 1.6]; post-EBP2 mean=7.1 [SD 1.6]). It is also important to note that the two EBP courses in this study had different aims; the first course focused on introductory knowledge and skills and the second introduced critical appraisal and taught students how to apply evidence to clinical practice. The application of EBP is extremely important to include in health professional curricula.20 Our finding that practice had a lower effect size from repeated training could be due to several factors, including other, often theoretical, courses in which students were concurrently enrolled (which limited their opportunities to practice EBP in the clinical environment) and difficulty in measuring actual application via a self-reported instrument such as the EBP2 questionnaire.
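The K-REC means quoted above can be converted to percentages of the maximum score (12) to show the trajectory across the four test occasions. This is a small arithmetic sketch using the reported means; the names are hypothetical.

```python
KREC_MAX = 12  # highest possible K-REC mark

# Mean K-REC scores at each test occasion, as reported in the text
krec_means = {
    "T1 (pre-EBP1)": 2.6,
    "T2 (post-EBP1)": 5.2,
    "T3 (pre-EBP2)": 5.1,
    "T4 (post-EBP2)": 7.1,
}


def percent_of_max(score: float, maximum: float) -> float:
    """Score expressed as a percentage of the maximum possible score."""
    return 100 * score / maximum


for occasion, mean in krec_means.items():
    print(f"{occasion:15s} mean={mean:.1f}  {percent_of_max(mean, KREC_MAX):.1f}% of maximum")

# Raw gains during each course
gain_ebp1 = krec_means["T2 (post-EBP1)"] - krec_means["T1 (pre-EBP1)"]
gain_ebp2 = krec_means["T4 (post-EBP2)"] - krec_means["T3 (pre-EBP2)"]
print(f"Gain during EBP1: {gain_ebp1:.1f}; gain during EBP2: {gain_ebp2:.1f}")
```

The mean rises from roughly 22% to 59% of the maximum over the study, with a raw gain of 2.6 marks during EBP1 and 2.0 marks during EBP2, so scores continued to improve even though the second gain was smaller.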

It is important for both researchers and educators to be aware that despite likely improvement in the raw scores of student learning outcomes, effect sizes are likely to be higher from the first exposure and reduced thereafter. Educational researchers should collect information on participants' prior exposure and report it together with findings on the effectiveness of educational interventions. Educators should also recognize the likely implications for learning outcomes with repeated training exposure and take into account the reported prior exposure (or lack thereof) in studies investigating EBP interventions before applying the findings to their own teaching context.

Although we observed a somewhat expected drop in scores for practice and confidence over the holiday break (T2 to T3; see Figure 2), the scores remained relatively stable for actual knowledge, terminology, relevance, and sympathy, which suggests that participants carried over knowledge of theoretical principles and an awareness of the importance and sense of compatibility with EBP from their first to their second exposure. Changes over holiday breaks are well recognized in secondary school education21 but, to our knowledge, have not been reported in entry-level university courses.

Our study investigated the magnitude of change in EBP learning outcomes among health professional students after repeated exposure to EBP training. Future research should evaluate where EBP education might be best placed in health professional curricula and how EBP education could be specifically tailored to the different health professions.

Limitations

Our study has several limitations. First, our findings represent the EBP outcomes of only those students who chose to participate in this study. Investigating the effect size for outcomes after exposure to two training courses meant including data only from those participants who completed questionnaires on all four test occasions. Although this limited our sample, the final sample size of 78 participants meant that the study was more than adequately powered to detect a medium effect size. Although we were able to conduct separate analyses on the physiotherapy students in the sample (n=46), there were not enough entry-level health professional students in any of the other programmes to conduct meaningful sub-group analyses, and therefore we are unable to comment on any differences between the various participating health professions. Findings in the physiotherapy group, however, were similar to those found in the overall health professional group.

Second, because our study did not include a control group, we do not know whether the improvements in EBP outcomes might be due to the effects of time and other concurrent courses. However, the relatively stable scores for self-reported and actual knowledge seen over the holiday break suggest that the interventions did have an effect. The increase in actual knowledge scores after training could also be due to learning effects over each of the test occasions with the use of the same instrument; however, participants were not given feedback from the K-REC and at least 4 months elapsed between test occasions, limiting recall bias.

Third, it is possible that the smaller effect sizes seen in the majority of outcomes from the second course were due to a possible ceiling effect in the instruments. However, the overall upward trend we observed in the percentage of maximum scores (see Figure 2) seems to indicate that the instruments were sensitive enough to pick up changes in the outcomes even after two successive courses.

Conclusions

This study explored the magnitude and pattern of change in learning outcomes of entry-level students after repeated exposure to EBP training. The findings suggest that effect sizes are likely to be larger in outcomes such as knowledge and relevance for participants with minimal prior exposure to EBP concepts or research principles. Conversely, changes in participants' perception of their ability to use EBP (confidence) and sense of compatibility of EBP with professional work (sympathy) are likely to require a longer time frame, repeated exposures, and possibly integration of EBP training with the clinical environment. Prior exposure to EBP foundation knowledge and skills is likely to affect the magnitude of change in learning outcomes reflected in assessment tools. This issue needs to be considered by both researchers designing educational studies and educators considering applying the results of such studies in their own educational environments.

Key Messages

What is already known on this topic?

Although many studies have shown that evidence-based practice (EBP) training improves outcomes for entry-level health professional students, few have reported the magnitude of these improvements. We know that a single EBP training course improves student outcomes such as knowledge and skills, but we do not currently know the impact of repeated exposure to training on student outcomes, nor do we know the size of these changes. This may have important implications for curriculum development, evaluation, and modification in entry-level health professional programmes such as physiotherapy.

What this study adds

This study explored the pattern and size of change in EBP outcomes of entry-level students after two successive EBP training courses. To the best of our knowledge, this is the first study to use matched data to report on the impact of two successive EBP training courses on outcomes in a range of entry-level health professional students. We found that students' knowledge and sense of the relevance of EBP changed most meaningfully for those with minimal prior exposure to training. Changes in confidence and attitudes toward EBP may require a longer time frame and repeated training exposure.

References

- 1. Dawes M, Summerskill W, Glasziou P, et al. Sicily statement on evidence-based practice. BMC Med Educ. 2005;5(1):1–7. http://dx.doi.org/10.1186/1472-6920-5-1. Medline:15634359

- 2. Glasziou P, Burls A, Gilbert R. Evidence based medicine and the medical curriculum. BMJ. 2008;337:a1253. http://dx.doi.org/10.1136/bmj.a1253. Medline:18815165

- 3. Wong S, McEvoy M, Wiles L, et al. Magnitude of change in outcomes following entry-level evidence-based practice training: a systematic review. Int J Med Educ. 2013;4:107–14. http://dx.doi.org/10.5116/ijme.51a0.fd25

- 4. Coe R. It's the effect size, stupid: what effect size is and why it is important. Paper presented at the Annual Conference of British Educational Research Association; 2002 Sept; University of Essex, Colchester (UK)

- 5. Johnston JM, Leung GM, Fielding R, et al. The development and validation of a knowledge, attitude and behaviour questionnaire to assess undergraduate evidence-based practice teaching and learning. Med Educ. 2003;37(11):992–1000. http://dx.doi.org/10.1046/j.1365-2923.2003.01678.x. Medline:14629412

- 6. Long K, McEvoy M, Lewis L, et al. Entry level evidence-based practice training in physiotherapy students: does it change knowledge, attitudes and behaviours? A longitudinal study. Internet J Allied Health Sci Pract. 2011;9(3):1–11

- 7. Bennett S, Hoffmann T, Arkins M. A multi-professional evidence-based practice course improved allied health students' confidence and knowledge. J Eval Clin Pract. 2011;17(4):635–9. http://dx.doi.org/10.1111/j.1365-2753.2010.01602.x. Medline:21114802

- 8. Aronoff SC, Evans B, Fleece D, et al. Integrating evidence based medicine into undergraduate medical education: combining online instruction with clinical clerkships. Teach Learn Med. 2010;22(3):219–23. http://dx.doi.org/10.1080/10401334.2010.488460. Medline:20563945

- 9. Kim SC, Brown CE, Fields W, et al. Evidence-based practice-focused interactive teaching strategy: a controlled study. J Adv Nurs. 2009;65(6):1218–27. http://dx.doi.org/10.1111/j.1365-2648.2009.04975.x. Medline:19445064

- 10. Lai NM, Teng CL. Competence in evidence-based medicine of senior medical students following a clinically integrated training programme. Hong Kong Med J. 2009;15(5):332–8. Medline:19801689

- 11. Taheri H, Mirmohamadsadeghi M, Adibi I, et al. Evidence-based medicine (EBM) for undergraduate medical students. Ann Acad Med Singapore. 2008;37(9):764–8. Medline:18989493

- 12. Akl EA, Izuchukwu IS, El-Dika S, et al. Integrating an evidence-based medicine rotation into an internal medicine residency program. Acad Med. 2004;79(9):897–904. http://dx.doi.org/10.1097/00001888-200409000-00018. Medline:15326018

- 13. Phillips AC, Lewis LK, McEvoy MP, et al. Protocol for development of the guideline for reporting evidence based practice educational interventions and teaching (GREET) statement. BMC Med Educ. 2013;13(1):9. http://dx.doi.org/10.1186/1472-6920-13-9. Medline:23347417

- 14. Lewis LK, Williams MT, Olds TS. Development and psychometric testing of an instrument to evaluate cognitive skills of evidence based practice in student health professionals. BMC Med Educ. 2011;11(1):77–87. http://dx.doi.org/10.1186/1472-6920-11-77. Medline:21967728

- 15. McEvoy MP, Williams MT, Olds TS. Development and psychometric testing of a trans-professional evidence-based practice profile questionnaire. Med Teach. 2010;32(9):e373–80. http://dx.doi.org/10.3109/0142159X.2010.494741. Medline:20795796

- 16. Ramos KD, Schafer S, Tracz SM. Validation of the Fresno test of competence in evidence based medicine. BMJ. 2003;326(7384):319–21. http://dx.doi.org/10.1136/bmj.326.7384.319. Medline:12574047

- 17. McEvoy MP, Williams MT, Olds TS. Evidence based practice profiles: differences among allied health professions. BMC Med Educ. 2010;10(1):69–76. http://dx.doi.org/10.1186/1472-6920-10-69. Medline:20937140

- 18. Thalheimer W, Cook S. How to calculate effect sizes from published research: A simplified methodology [Internet]. Somerville (MA): Work–Learning Research; 2002. [updated 2002 Aug; cited 2012 May 30]. Available from: http://www.bwgriffin.com/gsu/courses/edur9131/content/Effect_Sizes_pdf5.pdf

- 19. Norman GR, Shannon SI. Effectiveness of instruction in critical appraisal (evidence-based medicine) skills: a critical appraisal. CMAJ. 1998;158(2):177–81. Medline:9469138

- 20. Ubbink DT, Guyatt GH, Vermeulen H. Framework of policy recommendations for implementation of evidence-based practice: a systematic scoping review. BMJ Open. 2013;3(1):e001881. http://dx.doi.org/10.1136/bmjopen-2012-001881. Medline:23355664

- 21. Cooper H, Nye B, Charlton K, et al. The effects of summer vacation on achievement test scores: a narrative and meta-analytic review. Rev Educ Res. 1996;66(3):227–68. http://dx.doi.org/10.3102/00346543066003227