Abstract

Background

Cognitive tasks are typically viewed as effortful, frustrating, and repetitive, which often leads to participant disengagement. This, in turn, may negatively impact data quality and/or reduce intervention effects. Gamification may offer a solution: if game design features can be incorporated into cognitive tasks without undermining their scientific value, then data quality, intervention effects, and participant engagement may be improved.

Objectives

This systematic review aims to explore and evaluate the ways in which gamification has already been used for cognitive training and assessment purposes. We hope to answer 3 questions: (1) Why have researchers opted to use gamification? (2) What domains has gamification been applied in? (3) How successful has gamification been in cognitive research thus far?

Methods

We systematically searched several Web-based databases, searching the titles, abstracts, and keywords of database entries using the search strategy (gamif* OR game OR games) AND (cognit* OR engag* OR behavi* OR health* OR attention OR motiv*). Searches included papers published in English between January 2007 and October 2015.

Results

Our review identified 33 relevant studies, covering 31 gamified cognitive tasks used across a range of disorders and cognitive domains. We identified 7 reasons for researchers opting to gamify their cognitive training and testing. We found that working memory and general executive functions were common targets for both gamified assessment and training. Gamified tests were typically validated successfully, although mixed-domain measurement was a problem. Gamified training appears to be highly engaging and does boost participant motivation, but mixed effects of gamification on task performance were reported.

Conclusions

Heterogeneous study designs and typically small sample sizes highlight the need for further research in both gamified training and testing. Nevertheless, careful application of gamification can provide a way to develop engaging and yet scientifically valid cognitive assessments, and it is likely worthwhile to continue to develop gamified cognitive tasks in the future.

Keywords: gamification, gamelike, cognition, computer games, review

Introduction

Every day, millions of people play games on computers, consoles, and mobile devices [1]. One explanation for the massive popularity of games is that they can provide easy access to a sense of engagement and self-efficacy that reality may not deliver [2]. By their nature, games present users with difficult challenges to overcome and use narrative structure, complex graphics, strategic elements, and intuitive rules to engage the user [3]. This ability to engross users has recently begun to be leveraged for purposes beyond entertainment in the form of gamification. The aim of gamification is to use gamelike features (competition, narrative, leaderboards, graphics, and other game design elements) to transform an otherwise mundane task into something engaging and even fun.

In cognitive science, whether we are gathering data or trying to encourage behavior change, successfully engaging participants is vital. There is evidence that a lack of participant motivation has a negative impact on the quality of data collected [4], with tasks commonly being viewed as too boring and repetitive for participant attention to be sustained. This is a particular problem for Web-based studies where participants can simply close the browser window and drop out of the study the moment they decide it is not worth their time [5].

If we are attempting to alter behavior (for example, cognitive bias modification or working memory training), then it is vital that our interventions are engaging. Gamification may help in this regard. If we can successfully import game design elements into cognitive tasks without undermining their scientific value, then we may be able to improve the quality of our data, increase the effectiveness of our interventions, and improve the experience for participants. Furthermore, using games as a vehicle to deliver cognitive training may also be advantageous simply because video games appear to have positive effects on a number of outcomes, including working memory, attentional capacity, problem solving, motivation, emotional control, and prosocial behaviors [6]. In essence, delivering targeted cognitive training through a video game medium might provide a range of benefits.

Much of the literature on gamification is relatively recent, cross-disciplinary, and heterogeneous in nature. This lack of coherence in the field is partly due to poor definition of terms; for example, the gamelike tasks covered in this review could be described as “serious games,” “gamelike,” “gamified,” “games with a purpose,” “gamed-up,” or simply “computer based” [7-10]. To our knowledge, there have been no systematic reviews of gamification in cognitive research. There have, however, been several reviews of the concepts core to gamification and how they can be applied successfully (see [11-13]); a detailed discussion of these reviews is beyond the scope of this paper. Also related but beyond our scope is the body of work on the effects of video games on behavior (see [14,15] for reviews).

This paper aims to systematically review the ways in which gamification has already been used for the purposes of cognitive assessment and training. We were specifically interested in the following questions: (1) Why have researchers opted to use gamification? (2) What domains has gamification been applied in? (3) How successful has gamification been in cognitive research thus far? We deliberately used a broad search strategy so as not to miss any relevant papers. We reviewed only the peer-reviewed literature and have therefore deliberately excluded cognitive training games available on iTunes or the Google Play Store (such as Lumosity and Peak) unless they were supported by peer-reviewed research.

Methods

Databases and Search Strategy

The following databases were searched electronically: PsycInfo, Medline, ETHOS, EMBASE, PubMed, IBSS, Francis, Web of Science, and Scopus. We searched the titles, abstracts, and keywords of database entries using the search strategy (gamif* OR game OR games) AND (cognit* OR engag* OR behavi* OR health* OR attention OR motiv*), where * represents a wildcard to allow for alternative suffixes. Searches included papers published in English between January 2007 and October 2015. We also searched the bibliographies of included papers to locate further relevant material not discovered in the database search.
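To make the logic of this search string concrete, the Python sketch below evaluates it against a single record: terms are ORed within each bracketed block, the two blocks are ANDed, and a trailing * is treated as right truncation. This is an illustrative approximation, not how any of the listed databases actually executes queries. It assumes case-insensitive, whole-word matching over the concatenated title, abstract, and keywords; real databases apply their own syntax, field tags, and stemming rules. The names `BLOCK_A`, `BLOCK_B`, `term_to_regex`, `block_matches`, and `record_matches` are hypothetical.

```python
import re

# Hypothetical encoding of the two concept blocks in the search string.
# A trailing "*" is a truncation wildcard: "cognit*" matches "cognition", "cognitive", ...
BLOCK_A = ["gamif*", "game", "games"]
BLOCK_B = ["cognit*", "engag*", "behavi*", "health*", "attention", "motiv*"]


def term_to_regex(term: str) -> str:
    """Translate one search term into a word-boundary regular expression (an assumption)."""
    if term.endswith("*"):
        # Truncated term: the stem at a word start, followed by any further word characters.
        return r"\b" + re.escape(term[:-1]) + r"\w*"
    # Untruncated term: must match as a whole word.
    return r"\b" + re.escape(term) + r"\b"


def block_matches(text: str, block: list[str]) -> bool:
    """OR within a block: at least one term must occur in the text."""
    return any(re.search(term_to_regex(t), text, re.IGNORECASE) for t in block)


def record_matches(title: str, abstract: str, keywords: str) -> bool:
    """AND across blocks, searched over title, abstract, and keywords together."""
    text = " ".join([title, abstract, keywords])
    return block_matches(text, BLOCK_A) and block_matches(text, BLOCK_B)
```

Under these assumptions, a title such as “Video game training enhances cognitive control in older adults” satisfies both blocks (“game” and “cognit*”), whereas a record that mentions only “board games” and no term from the second block is not retrieved. Whole-word matching also means that “gamelike” alone would not match the untruncated term “game.”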

Inclusion Criteria

Primary Research Paper

Included papers were empirical research studies, not literature reviews, opinion pieces, or design documents.

Novel Gamelike Task

Included papers focussed on newly developed gamelike tasks, created specifically for the study in question. We excluded commercially available video games (ie, “off-the-shelf” games) as well as gamelike tasks that have been in use for many years, such as Space Fortress (see [16] for a review).

Measure or Train Cognition

Included papers focussed on tasks designed to assess or train cognition. For scoping purposes, we took a narrow definition of cognition: those processes involved in memory, attention, decision making, impulse control, executive functioning, processing speed, and visual perception.

Validated or Piloted

Included papers had to involve an empirical study, either validating the task as a measure of cognition or piloting the intervention. Papers regarding usability testing alone were excluded.

Exclusion Criteria

Non–Peer-Reviewed Papers

We excluded non–peer-reviewed papers such as abstracts or conference posters.

Gamification in the Behavioral Sciences but not Involving Cognition

We excluded papers on gamification for education purposes, disease management, health promotion, exposure therapy, or rehabilitation.

Game Engines/Three-Dimensional (3D) Environments

We excluded papers that made use of virtual reality or a 3D environment without any game mechanics or gamelike framing.

Screening

We did not select papers based on whether they included the term “gamification.” Rather, papers were selected if they were captured by our search strategy and met our inclusion/exclusion criteria. We intentionally did not strictly define gamification: as Wittgenstein famously discussed, and as Deterding [13] alludes to, trying to precisely define what elements make a game is both difficult and limiting. We therefore treated a task as gamified if its stated purpose was to increase participant motivation. Where there was insufficient detail to determine whether a paper met our inclusion criteria, we erred on the side of caution and excluded it, to increase our confidence in the relevance of the studies reviewed.

After screening, data were extracted from each paper using a standardized data extraction form. The extracted data related to our questions of interest: application of gamification, approach taken, and efficacy. Application of gamification refers to the field of psychology in which the gamelike task was used and why a gamelike task was used. Approach taken refers to the specific game mechanics used in the task and the themes and scaffolding applied. Finally, efficacy refers to the findings produced by the task in practice, as well as details on the participants, methods used for evaluation, and limitations of the study. Concepts (such as the cognitive domains measured) were categorized using the original authors’ own words where possible; otherwise, we mapped extracted concepts closely to existing categories.

All papers identified by our search strategy were screened for relevance against our inclusion/exclusion criteria by one reviewer (JL) in 3 stages: title, abstract, and full text. A second reviewer (EE) rescreened 20% of the papers from the title stage onward to ensure that no relevant papers were missed. Papers were included in the review only if both JL and EE agreed.

Results

Search Results

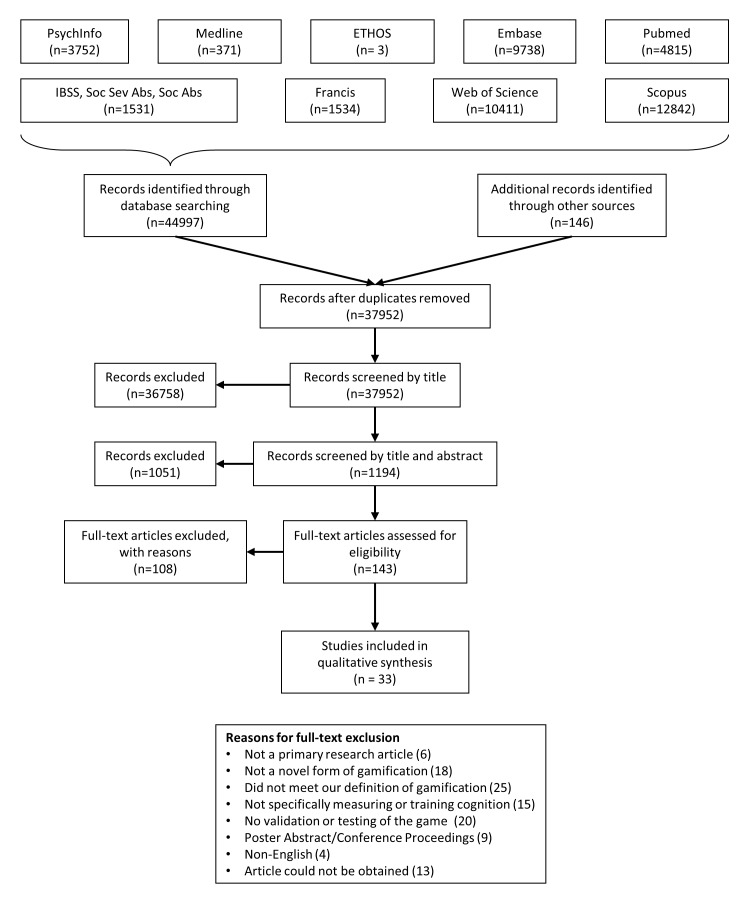

Our initial search yielded 33,445 papers (excluding duplicates). From this search, 23 papers were included in the review, along with 4 papers identified through a manual search of reference lists. We repeated the search in October 2015, covering papers published from January 2015 to October 2015. This search produced 4448 papers (excluding duplicates) and resulted in another 4 papers being included in the review, with a further 2 added following peer review. The total number of papers included in the review was therefore 33. See Figure 1 for a flowchart of the combined searches and Table 1 for details of all included studies. We used Cohen κ to assess inter-rater reliability of paper inclusion at the 20% data check stage (7590 papers checked). There was moderate agreement between the 2 reviewers (κ=.526, 95% CI 0.416 to 0.633, P<.001). All supplementary data referenced in this paper can be found in Multimedia Appendix 1, whereas Multimedia Appendix 2 contains more detailed information on the games and game mechanics used by studies in the review.

Figure 1.

Flowchart detailing the paper discovery and screening process.
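For readers less familiar with the agreement statistic reported in the search results, Cohen κ compares the observed agreement between 2 raters, p_o, with the agreement expected by chance from each rater's marginal totals, p_e: κ = (p_o − p_e)/(1 − p_e). The Python sketch below computes κ from a square agreement table and maps it onto the widely used Landis and Koch verbal benchmarks, under which a value of .526 falls in the 0.41 to 0.60 “moderate” band. The function names are hypothetical, the counts in the usage example are invented for illustration and are not this review's screening data, and the 95% CI reported above would require an additional standard-error calculation that is not shown here.

```python
def cohen_kappa(table: list[list[int]]) -> float:
    """Cohen's kappa for two raters, from a square agreement table.

    table[i][j] = number of items rater 1 placed in category i and rater 2 in category j
    (for example, category 0 = "include" and category 1 = "exclude").
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    # Observed agreement: proportion of items on the diagonal.
    p_o = sum(table[i][i] for i in range(k)) / n
    # Chance agreement: sum over categories of the product of both raters' marginal proportions.
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(row_totals[c] * col_totals[c] for c in range(k)) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


def landis_koch_label(kappa: float) -> str:
    """Verbal benchmark for kappa following Landis and Koch (1977)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial"), (1.00, "almost perfect")]:
        if kappa <= upper:
            return label
    return "almost perfect"
```

For example, with hypothetical counts `cohen_kappa([[20, 5], [10, 15]])`, the raters agree on 35 of 50 items (p_o = 0.70), chance agreement is p_e = 0.50, and κ is therefore 0.40.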

Table 1.

Details of included papers.ᵃ

| Author, year | Full title | Game | Category |
| --- | --- | --- | --- |
| McPherson and Burns, 2007 [17] | Gs invaders: Assessing a computer gamelike test of processing speed | Space Code | Testing |
| McPherson and Burns, 2008 [18] | Assessing the validity of computer gamelike tests of processing speed and working memory | Space Matrix/Space Code | Testing |
| Trapp et al, 2008 [19] | Cognitive remediation improves cognition and good cognitive performance increases time to relapse—results of a 5-year catamnestic study in schizophrenia patients | Xcog | Training |
| Gamberini et al, 2009 [20] | Eldergames project: An innovative mixed reality table-top solution to preserve cognitive functions in elderly people | Eldergames | Testing |
| Gamberini, Cardullo, Seraglia, and Bordin, 2010 [21] | Neuropsychological testing through a Nintendo Wii console | Wii Tests | Testing |
| Dovis, van der Oord, Wiers, and Prins, 2011 [22] | Can motivation normalize working memory and task persistence in children with attention-deficit/hyperactivity disorder? The effects of money and computer-gaming | Megabot | Testing |
| Delisle and Braun, 2011 [23] | A context for normalizing impulsiveness at work for adults with attention deficit/hyperactivity disorder (combined type) | Retirement Party | Testing |
| Prins, Dovis, Ponsioen, ten Brink, and van der Oord, 2011 [24] | Does computerized working memory training with game elements enhance motivation and training efficacy in children with ADHD? | Supermecha | Training |
| Lim et al, 2012 [25] | A brain-computer interface based attention training program for treating attention deficit hyperactivity disorder | Cogoland | Training |
| Heller et al, 2013 [26] | A machine learning-based analysis of game data for attention deficit hyperactivity disorder assessment | Groundskeeper | Testing |
| Hawkins et al, 2013 [9] | Gamelike features might not improve data | EM-Ants and Ghost Trap | Testing |
| Verhaegh, Fontijn, Aarts, and Resing, 2013 [27] | In-game assessment and training of nonverbal cognitive skills using TagTiles | Tap the Hedgehog | Both |
| Aalbers, Baars, Rikkert, and Kessels, 2013 [28] | Puzzling with online games (BAM-COG): reliability, validity, and feasibility of an online self-monitor for cognitive performance in aging adults | BAM-COG | Testing |
| Fagundo et al, 2013 [29] | Video game therapy for emotional regulation and impulsivity control in a series of treated cases with bulimia nervosa | Playmancer | Training |
| Anguera et al, 2013 [30] | Video game training enhances cognitive control in older adults | Neuroracer | Training |
| van der Oord, Ponsioen, Geurts, Ten Brink, and Prins, 2014 [31] | A pilot study of the efficacy of a computerized executive functioning remediation training with game elements for children with ADHD in an outpatient setting | Braingame Brian | Training |
| Brown et al, 2014 [32] | Crowdsourcing for cognitive science—the utility of smartphones | The Great Brain Experiment | Testing |
| Tong and Chignell, 2014 [33] | Developing a serious game for cognitive assessment: choosing settings and measuring performance | Whack-a-mole | Testing |
| Katz, Jaeggi, Buschkuehl, Stegman, and Shah, 2014 [34] | Differential effect of motivational features on training improvements in school-based cognitive training | WMTrainer | Training |
| Dunbar et al, 2013 [8] | Implicit and explicit training in the mitigation of cognitive bias through the use of a serious game | MACBETH | Training |
| Lee et al, 2013 [35] | A brain-computer interface based cognitive training system for healthy elderly: A randomised control pilot study for usability and preliminary efficacy | Card-Pairing | Training |
| Miranda and Palmer, 2013 [36] | Intrinsic motivation and attentional capture from gamelike features in a visual search task | Visual Search | Testing |
| Atkins et al, 2014 [37] | Measuring working memory is all fun and games: A four-dimensional spatial game predicts cognitive task performance | Shapebuilder | Testing |
| Dörrenbächer et al, 2014 [38] | Dissociable effects of game elements on motivation and cognition in a task switching training in middle childhood | Watermons | Training |
| McNab and Dolan, 2014 [39] | Dissociating distractor-filtering at encoding and during maintenance | The Great Brain Experiment | Testing |
| O’Toole and Dennis, 2014 [40] | Mental health on the go: Effects of a gamified attention-bias modification mobile application in trait-anxious adults | ABMTApp | Training |
| Tenorio Delgado, Arango Uribe, Aparicio Alonso, and Rosas Diaz, 2014 [41] | TENI: A comprehensive battery for cognitive assessment based on games and technology | TENI | Testing |
| De Vries, Prins, Schmand, and Geurts, 2015 [42] | Working memory and cognitive flexibility-training for children with an autism spectrum disorder: a randomized controlled trial | Braingame Brian | Training |
| Dovis, van der Oord, Wiers, and Prins, 2015 [43] | Improving executive functioning in children with ADHD: Training multiple executive functions within the context of a computer game. A randomized double-blind placebo controlled trial | Braingame Brian | Training |
| Kim et al, 2015 [44] | Effects of a serious game training on cognitive functions in older adults | Smart Harmony | Training |
| Manera et al, 2015 [45] | “Kitchen and cooking,” a serious game for mild cognitive impairment and Alzheimer’s disease: a pilot study | Kitchen and Cooking | Both |
| Ninaus et al, 2015 [46] | Game elements improve performance in a working memory training task | GAME | Training |
| Tarnanas et al, 2015 [47] | On the comparison of a novel serious game and electroencephalography biomarkers for early dementia screening | VAP-M | Testing |

ᵃIn cases where the game was not named, we assigned a descriptive name.

Why Do Researchers Use Gamification?

We searched each paper for explanations as to why a gamelike task had been used and identified reasons for researchers opting to gamify their cognitive training and testing. These reasons were then coalesced into 7 categories. Some authors listed multiple reasons for gamifying their approach, whereas others gave no motivations at all. Supplementary Table 1 in Multimedia Appendix 1 provides details of which games fell into each category.

To Increase Participant Motivation

Although we assume that increasing participant motivation was a goal of every study in this review, 16 studies explicitly used gamified tasks to measure or train cognition in a more motivating manner, and most of these (10 of 16) were assessment studies. Cognitive tests are typically a one-off measure, so replayability is not a requirement. As such, these games tended to build simple game archetypes, such as Space Invaders or whack-a-mole, around an existing cognitive task, with the goal of encouraging self-motivation, improving participant enjoyment, and even reducing test anxiety [17]. Gamification aims to increase engagement with tasks that might otherwise be perceived as demotivating [48], and the highly repetitive nature of cognitive tasks makes them ripe for improvement.

To Increase Usability/Intuitiveness for the Target Age Group

Eleven tasks were gamified specifically to enhance their appeal to a given age group (see Supplementary Table 2 in Multimedia Appendix 1). The authors of these studies hypothesized that a more intuitive interface could prevent boredom and anxiety in the target age group, both of which might damage motivation and concentration on the tasks at hand. Six games were designed to be suitable for the elderly, who may not be familiar with using a mouse and keyboard [49]. The other 5 games were aimed at young children and reframed the cognitive assessment as a game in order to test the children under optimal mental conditions [41].

To Increase Long-Term Engagement

Commercial gamification is often used to create long-term interest around a user experience, product, or event [13]. Similarly, we found 11 studies (8 games) that used gamification to reduce participant dropout rates over a protracted testing or training programme. Many of these games were used in an unobserved, nonlaboratory setting, so the tasks made use of motivational features that made the training and testing intrinsically more appealing and less of a burden to perform on a regular basis. A common feature was that task sessions were kept as short as possible to make them more convenient and to increase the likelihood of completion [28,32]. For example, The Great Brain Experiment aimed to keep task sessions below 5 minutes in duration and found that the shortest task, the Stop Signal Task, was the most popular minigame.

To Investigate the Effects of Gamelike Tasks

Many of the studies in this review investigated the effects of gamelike tasks; however, only 5 studies (covering 6 games) explicitly stated that their aim was to assess the motivational and cognitive effects of gamelike features. Three of the 6 games were very simple, with only a few specific game mechanics and carefully designed nongame controls, to make the effects of the game mechanics on the data as apparent as possible. One game, Watermons [38], was designed using Self-Determination Theory [50,51] to maximize participants’ intrinsic motivation and make any motivational effects of game mechanics as apparent as possible.

To Stimulate the Brain

Six studies cited evidence that playing video games can be cognitively beneficial and/or stimulating as a key factor in their decision to gamify. The past decade has seen rapid growth in research on the cognitive effects of video gaming, and findings have typically been positive. There is good evidence that video game players outperform nongamers on tests of working memory [52], visual attention [53,54], and processing speed [55,56]. The “active ingredient” of this cognitive enhancement effect is not yet known, but it is unsurprising that researchers interested in training cognition are keen to include gamelike features in their training tasks.

To Increase Ecological Validity

Cognitive training has often suffered from a lack of transferability [57-59]: although participants may improve at the training task, these improvements often do not generalize to the real world. In a similar vein, cognitive assessment has been criticized as ecologically invalid [60]. A potential solution is to make tasks more realistic, and such tasks inevitably become gamelike as 3D graphics, sounds, and narrative are added. We identified 6 studies that used gamified tasks to test cognition in an engaging, close-to-life environment or to enhance the transfer of learned skills. As Dunbar et al explain [8], games are uniquely suited to some forms of cognitive training because they give the player freedom to make choices and experience feedback on the effects of those choices; in other words, they provide opportunities for experiential learning [61].

To Increase Suitability for the Target Disorder

Gamified tasks may also be more appealing to patients with certain clinical conditions. Specifically, we found 6 studies (covering 4 games) designed for people with attention deficit/hyperactivity disorder (ADHD). It is commonly reported that ADHD patients are compulsive computer game players [23]. Furthermore, patients with ADHD react differently from controls to rewards and feedback: they prefer strong reinforcement and immediate feedback, as well as clear goals and objectives, all of which can easily be delivered in a gamelike environment [22,24,62].

What Are the Application Areas of Gamification?

We found comparable numbers of games used for cognitive testing (17) and training (13), with one game that can be used for both testing and training (see Supplementary Table 3 in Multimedia Appendix 1). It is also worth noting that the numbers of studies investigating cognitive testing (17) and training (15) were very similar, and the slightly smaller proportion of games used to deliver cognitive training is likely explained by the relative recency of the field.

Supplementary Table 4 in Multimedia Appendix 1 shows the cognitive domains assessed. Working memory was the most commonly tested domain, likely because it is easy to test and because working memory deficits are common in many disorders. General executive function (EF), attention, and inhibition were also commonly tested. Many games tested several cognitive domains and/or general EF, highlighting the difficulty of examining gamification effects on specific cognitive domains (and therefore of comparing performance with standard cognitive tasks). Supplementary Table 5 in Multimedia Appendix 1 shows a breakdown of training tasks by the cognitive domains they addressed. Again, working memory was a popular target, closely followed by EF and inhibitory control training. Individual training tasks span fewer domains than tests: test batteries tend to assess a wide range of domains, whereas training tasks typically focus exclusively on 1 or 2.

Does Gamification Work?

The studies we reviewed were generally enthusiastic about their use of gamified tasks, although given the diversity of study aims, this does not mean that all games worked as expected. Where reported, subjective and objective measures of participant engagement were positive. All studies that measured intrinsic motivation reported that the use of gamelike tasks improved motivation, compared with nongamified versions. We identified 21 of 33 studies that compared a gamelike task directly against a nongamified counterpart, and these studies can shed light on the specific effects of gamification on testing and training tasks.

Most gamified assessments were validated successfully. Wii Tests [21], Shapebuilder [37], The Great Brain Experiment [32,39], BAM-COG [28], and Tap the Hedgehog [27] were all found to produce output measures/scores that correlated fairly well with their non–gamelike counterparts, though mixed-domain measures were an issue (see Supplementary Table 1 in Multimedia Appendix 2 for full details of all games). Validation studies varied in their design, and some studies reported complex correlations between gamified and nongamified tasks with multiple outputs. However, sample correlations from some of the simpler validation studies suggest intertask correlations of 0.45-0.60 [28,37,39,63]. Broadly speaking, these were well-designed and well-powered studies, and together, they provide encouraging evidence that cognitive tests can be gamified and still be useful as a research tool.
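Correlations in this range can also be read in terms of the proportion of variance that the gamified and standard versions of a task share, r². As a simple worked illustration (our own arithmetic, not a figure reported by the cited studies):

$$
r = .45 \;\Rightarrow\; r^2 \approx .20, \qquad r = .60 \;\Rightarrow\; r^2 = .36
$$

That is, the two versions share roughly 20% to 36% of their variance. The remainder reflects measurement error in either task as well as any variance specific to one version, so correlations of this size are encouraging but do not by themselves establish that the two versions measure the same construct.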

Some gamified tests correlated with measures of multiple cognitive domains; in other words, they were mixed-domain measures. For example, an exploratory factor analysis showed that Whack-a-Mole’s primary output measure was correlated with 2 of the 3 EFs of interest: inhibition (r(22)=.60, P<.001) and updating (r(22)=.35, P<.05) [33]. Space Code [17,18] had similar problems. The initial study was successful, with Space Code’s output measure correlating well with a conventional measure of processing speed (r(58)=.55, P<.001). However, a second paper, detailing 2 experiments that aimed to replicate this finding, found that Space Code’s correlations with measures of working memory, visuospatial ability, and processing speed were not stable [18]. That Space Code appeared to be a fairly pure measure in one study and then proved to be mixed-domain in the next highlights how difficult it is to design gamified cognitive tasks: multiple, well-powered validation studies may be required to ensure that a task measures what is intended.

Gamification also has the potential to invalidate a task. For example, Retirement Party was compared against the Continuous Performance Test II (CPT-II) in healthy controls and adults with ADHD. As expected, the CPT-II detected more commission errors in the adults with ADHD (mean [M]=56, standard deviation [SD]=13 vs M=46, SD=10), but Retirement Party did not (M=14.4, SD=5.8 vs M=13.2, SD=4.3), which likely invalidates the game as a diagnostic tool for ADHD. However, Delisle and Braun [23] suggest that Retirement Party may have detected no deficit because the task itself removed it: the highly structured and feedback-rich multitask environment may have normalized the ADHD patients’ usual inattention. Such a gamelike performance boost is a disadvantage for cognitive assessment but is likely to be desirable in cognitive training.
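To put the contrast between the standard test and the game in standardized terms, the Python sketch below computes a Cohen d effect size from the means and SDs reported in the preceding paragraph. Group sizes are not given there, so the sketch assumes equal group sizes, in which case the pooled SD is the square root of the mean of the 2 variances. This is an illustrative back-of-envelope calculation under that assumption, not an analysis reported by Delisle and Braun [23]; the function name `cohens_d_equal_n` is hypothetical.

```python
import math


def cohens_d_equal_n(m1: float, sd1: float, m2: float, sd2: float) -> float:
    """Standardized mean difference using a pooled SD that ASSUMES equal group sizes.

    With equal n, the pooled variance reduces to the mean of the two group variances.
    """
    pooled_sd = math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return (m1 - m2) / pooled_sd


# Commission errors, ADHD group vs controls, as reported in the text.
d_cpt = cohens_d_equal_n(56, 13, 46, 10)         # CPT-II
d_game = cohens_d_equal_n(14.4, 5.8, 13.2, 4.3)  # Retirement Party
print(f"CPT-II d = {d_cpt:.2f}; Retirement Party d = {d_game:.2f}")
```

Under the equal-n assumption, the CPT-II difference corresponds to d of roughly 0.86, conventionally a large effect, whereas the Retirement Party difference is roughly 0.24, a small one. This is consistent with the game having attenuated the group difference rather than merely adding noise. Effect sizes say nothing about statistical significance, which would require the actual group sizes.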

Several studies in this review focused specifically on adding game mechanics to cognitive tasks to investigate the resulting changes in data, enjoyment, and motivation. Dovis et al [22] studied whether different types of incentive could normalize the working memory performance of children with ADHD. They found that, regardless of incentive, children with ADHD did not perform as well as healthy controls. However, children with ADHD also showed a decrease in performance over time, and both the €10 condition and the gaming condition (Megabot) prevented this decrease. These results indicate that performance problems in ADHD training might be somewhat alleviated through the use of gamelike tasks. This is further supported by Prins et al (Supermecha [24]), who found that children with ADHD completed more training trials (M=199.48, SD=47.46 vs M=134.43, SD=34.18), with higher accuracy (69% vs 51%), when trained using a gamified working memory training task rather than a non–gamelike one. Children in the gamelike condition were also more engaged and enjoyed the training more, as measured by “absence time.” In a similar vein, The Great Brain Experiment [39] and GAME [46] both showed gamelike tasks to be appropriate for measuring and training working memory: Ninaus et al [46] presented evidence that gamification can improve overall participant performance in a working memory training task, and McNab and Dolan [39] showed that data collected from 2 very different gamified and nongamified tasks could fit similar models of working memory capacity.

In contrast, WMTrainer was assessed across 7 different conditions containing different combinations of game mechanics [34]. Katz et al compared training improvement across conditions and found that the greatest training effect came from versions with minimal motivational features. The fully gamified condition had one of the shallowest improvement slopes, and none of the conditions made any difference to subjective motivation scores. However, even the minimally gamelike version still featured simple graphics and displayed a player score at the end of the block. It is possible that even this minimal gamification was enough to increase motivation, and that adding “distracting” game elements, such as a persistent score display, may have harmed performance by inducing unneeded stress or new cognitive demands [34].

One of the most theoretically driven games in our review was Watermons [38], which included many motivational features aimed at delivering a sense of player autonomy and competence. The task-switching training was embedded within a rich storyline and graphically enhanced theme. Dörrenbächer et al found that these gamelike features increased the effect of training, reducing reaction times and switch costs compared with a non–gamelike version of the training. Participants were also more willing to perform the training in the gamelike condition.

Miranda and Palmer [36] used a visual search task with 2 forms of reward for fast and accurate responses: sound effects and points. They found that sound effects increased reaction times, potentially due to attentional capture, and did not improve subjective engagement scores. Points, by contrast, appeared to have no effect on the data but boosted subjective engagement scores. These results highlight the delicate nature of designing gamified cognitive tests: something as innocuous as a few sound effects had deleterious effects on participant performance.

Finally, Hawkins et al [9] compared gamelike versions of 2 decision-making tasks against nongame counterparts. No difference was found between the data collected by the gamified and nongame versions. Subjective ratings indicated that both versions of both tasks were equally boring and repetitive, but that the gamelike versions were more interesting and enjoyable. Given the relatively large combined sample size of these studies (N=200), they provide good evidence that game mechanics can be included in cognitive tests without invalidating the data, while still achieving the desired increase in motivation.

Discussion

Principal Results

We identified 7 reasons why researchers use gamification in cognitive research. These include not only the “traditional” applications of gamification, such as increasing long- and short-term engagement with a task, but also more clinically related reasons, such as making tasks more interactive to enhance the effect of cognitive training. Several studies aimed to reduce test anxiety and optimize performance in groups that traditionally dislike being tested, particularly electronically, such as elderly people and children. Hiding the test behind a novel interface and gameplay may help the target audience feel more comfortable.

We saw several games aimed at training and testing people with ADHD, and overall, these games appear highly engaging to users, in some cases even increasing the time spent training. Gamified tasks may be valuable for assessing ADHD patients because computer games are particularly appealing to them: rapid rewards, immediate feedback, and time pressure are exactly the type of stimulation the ADHD brain craves [64,65]. The dopaminergic system is thought to be abnormal in ADHD [66,67], and playing video games is thought to facilitate the release of extrastriatal dopamine, which plays a role in focusing attention and heightening arousal [68,69]; this may improve player performance and motivation. Nevertheless, as Delisle and Braun discuss [23], we must be cautious that liberal use of game mechanics does not reverse the very deficit we are hoping to measure.

One of the primary reasons that psychologists are keen to use gamification is to increase performance and motivation in research populations. The results of several studies [9,17,18,38] show that gamified tasks can improve motivation while still remaining scientifically valid. However, Katz et al [34] and Miranda and Palmer [36] highlight that this can be a difficult balancing act, with several game mechanics having unforeseen deleterious effects on performance. If gamified psychological tasks are to become common in the future, further research is required to disentangle the impact of specific game mechanics on task performance, as these studies have already begun to do.

Differences Between Training and Assessment Tasks

Training games typically contained many features and were similar in appearance to commercial video games. Cognitive training normally requires several sessions to be effective, so training tasks need to be engaging enough to play for many hours. 3D graphics were quite prevalent, as were avatars, points, levels, and dynamically growing game worlds (see Supplementary Figure 1 in Multimedia Appendix 2). Long-term goals that had to be completed over repeated sessions were also common and served to sustain engagement over a long period. In contrast, assessment games were simpler, predominantly using 2D graphics, sound effects, score, and theme to create the appearance of a game. Several games simply presented themselves as “puzzles” for the participant to complete. Tasks of this nature represent gamification at its simplest, but they were well received by users, suggesting that minimal gamification is better than no gamification. The simplicity of the gamification employed is likely due to the constant tension between creating an engaging task and the risk of undermining the task’s scientific validity: including game mechanics with unknown effects might compromise the data collected.

Validating Gamified Tasks

We found heterogeneous standards for validating gamified tasks. Cognitive assessment games were typically validated rigorously, using correlation with similar cognitive tasks and factor analysis to determine whether they were performing as expected. Many training studies used only a gamified task, meaning the effect of gamification cannot be dissociated from the effect of the intervention. Sample sizes were small in nearly all of the studies we reviewed, and they were rarely justified by statistical power: only 5 of 33 papers described a power calculation. Gamified cognitive tests are novel scientific instruments and must be validated as such: small pilot studies are needed, followed by larger validation studies that assess the test–retest reliability and the internal and external validity of the measures taken by the game [70]. Gamified cognitive training should ideally be treated as an intervention, so the current gold standard of a blinded randomized controlled trial is appropriate [71]. In both testing and training, we recommend the use of at least 2 controls: (1) a standard cognitive task designed to produce the same output measures or training effect as the newly gamified task and (2) a nongamified version of the gamified task, built on the same software platform and identical in function and interaction, with all game mechanics removed.
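To illustrate what an a priori power calculation involves, the sketch below estimates the participants needed per group when comparing 2 independent groups (for example, a gamified task against its nongamified control) with a two-sided test. It assumes the standard normal-approximation formula n = 2((z₁₋α/₂ + z₁₋β)/d)², where d is the expected standardized effect size (Cohen's d); the function name and the effect sizes looped over are illustrative choices, not values taken from the reviewed studies.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants per group for a two-sided, two-sample comparison.

    Uses the normal approximation n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2,
    where d is the standardized effect size (Cohen's d). Exact t-test
    calculations give slightly larger numbers at small n.
    """
    z = NormalDist().inv_cdf  # quantile function of the standard normal
    n = 2 * ((z(1 - alpha / 2) + z(power)) / d) ** 2
    return math.ceil(n)  # round up: participants come in whole numbers


for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: about {n_per_group(d)} per group")
```

Under these assumptions a medium effect (d=0.5) needs about 63 participants per group (an exact t-test calculation gives about 64), and a small effect (d=0.2) needs about 393, far more than most of the samples in the studies reviewed here.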

Limitations

One limitation of this review is its necessarily narrow scope. Gamification in psychology and psychiatry is a rapidly growing field, so we decided to focus specifically on “cognitive training and testing.” As a result, some papers were excluded on the subjective basis of not being “cognitive research.” To counteract this subjectivity, papers were only included in the review by consensus of both reviewers (JL and EE), and EE performed a 20% selection check on papers from the title-screening stage onward. In addition, many of the studies reviewed were preliminary in nature, so the findings reported here should be considered tentative.

Conclusions

As discussed by Hawkins et al [9], it has often been suggested that gamified cognitive tasks may yield higher quality data and more effective training simply by virtue of heightened engagement. Our review found no evidence that gamified tests improve data quality, either by reducing between-subject noise or by improving participant performance, and there were some indications that gamification may actually worsen it [17,18,34,36]. We did, however, find some evidence that gamification may enhance cognitive training, but these positive training findings should be interpreted tentatively because of numerous methodological problems in the studies we reviewed.

Irrespective of whether gamification can improve data or enhance training, there are still many reasons why gamelike tasks may play an important role in the future of cognitive research. Gamified cognitive tasks are more engaging than traditional tasks, making the participant experience less effortful and potentially reducing drop-outs in longitudinal studies. Furthermore, despite concerns that some commonly used game mechanics might reduce participant motivation [72] (such as a visibly low score [73]), we saw no evidence that this was the case. Indeed, study authors and participants alike reported gamification as both motivating and positive. Gamelike tasks may also reduce test anxiety and provide alternative interfaces for cognitive tests that would otherwise be difficult to deliver in certain populations. The results of this review also show that it is possible to design gamified cognitive assessments that validate well against more traditional measures, provided that care is taken to avoid developing mixed-domain measures or masking deficits of interest.

As cognitive research begins to move out of the laboratory and onto personal computers and mobile devices, engagement will be key to collecting high-quality data. Gamification is likely to play an important role in enabling this change, but further research and more rigorous validation are needed to understand the delicate interplay between game mechanics and cognitive processes.

Acknowledgments

The authors are members of the United Kingdom Centre for Tobacco and Alcohol Studies, a UKCRC Public Health Research: Centre of Excellence. Funding from British Heart Foundation, Cancer Research UK, Economic and Social Research Council, Medical Research Council, and the National Institute for Health Research, under the auspices of the UK Clinical Research Collaboration, is gratefully acknowledged. The UK Medical Research Council and the Wellcome Trust (092731) and the University of Bristol provide core support for ALSPAC. This work was supported by the Medical Research Council (MC_UU_12013/6), a PhD studentship to JL funded by the Economic and Social Research Council and Cambridge Cognition Limited, and an NIHR-funded academic clinical fellowship to EE. The funders had no role in review design, data extraction and analysis, decision to publish, or preparation of the manuscript.

Abbreviations

- ADHD: attention deficit/hyperactivity disorder

- EF: executive function


Footnotes

Conflicts of Interest: Dr Coyle is a Director of Handaxe CIC, a not-for-profit company that develops technology, including computer games, to support mental health interventions for children and adolescents.

References

- 1. State of Online Gaming Report. Spilgames; 2013. [2014-11-27]. http://auth-83051f68-ec6c-44e0-afe5-bd8902acff57.cdn.spilcloud.com/v1/archives/1384952861.25_State_of_Gaming_2013_US_FINAL.pdf

- 2. Cowley B, Charles D, Black M, Hickey R. Toward an understanding of flow in video games. Computers in Entertainment. 2008;6(2):Article No. 20. doi: 10.1145/1371216.1371223.

- 3. McGonigal J. Reality is Broken: Why games make us better and how they can change the world. New York: Vintage; 2012.

- 4. DeRight J, Jorgensen R. I just want my research credit: frequency of suboptimal effort in a non-clinical healthy undergraduate sample. Clin Neuropsychol. 2015;29(1):101–17. doi: 10.1080/13854046.2014.989267.

- 5. Eysenbach G. The law of attrition. J Med Internet Res. 2005;7(1):e11. doi: 10.2196/jmir.7.1.e11.

- 6. Boyle E, Hainey T, Connolly T, Gray G, Earp J, Ott M, Lim T, Ninaus M, Ribeiro C, Pereira J. An update to the systematic literature review of empirical evidence of the impacts and outcomes of computer games and serious games. Computers & Education. 2016 Mar;94:178–192. doi: 10.1016/j.compedu.2015.11.003.

- 7. Boendermaker WJ, Boffo M, Wiers RW. Exploring Elements of Fun to Motivate Youth to Do Cognitive Bias Modification. Games Health J. 2015 Dec;4(6):434–43. doi: 10.1089/g4h.2015.0053.

- 8. Dunbar N, Miller C, Adame B, Elizondo J, Wilson S, Lane B, Kauffman A, Bessarabova E, Jensen M, Straub S, Lee Y, Burgoon J, Valacich J, Jenkins J, Zhang J. Implicit and explicit training in the mitigation of cognitive bias through the use of a serious game. Computers in Human Behavior. 2014;37:307–318. doi: 10.1016/j.chb.2014.04.053.

- 9. Hawkins GE, Rae B, Nesbitt KV, Brown SD. Gamelike features might not improve data. Behav Res Methods. 2013 Jun;45(2):301–18. doi: 10.3758/s13428-012-0264-3.

- 10. Dubbels B. Gamification, Serious Games, Ludic Simulation, and Other Contentious Categories. International Journal of Gaming and Computer-Mediated Simulations. 2013 Apr;5(2):1–19. doi: 10.4018/jgcms.2013040101.

- 11. Aparicio A, Vela F, Sánchez J, Montes J. Analysis and application of gamification. Proceedings of the 13th International Conference on Interacción Persona-Ordenador; Interacción 2012; October 03-05, 2012; Elche, Spain. 2012.

- 12. Cugelman B. Gamification: what it is and why it matters to digital health behavior change developers. JMIR Serious Games. 2013;1(1):e3. doi: 10.2196/games.3139.

- 13. Deterding S, Björk S, Nacke L, Dixon D, Lawley E. Designing gamification: creating gameful and playful experiences. Extended Abstracts on Human Factors in Computing Systems; CHI '13; April 27-May 02, 2013; Paris, France. USA: ACM; 2013. pp. 3263–3266.

- 14. Biddiss E, Irwin J. Active video games to promote physical activity in children and youth: a systematic review. Arch Pediatr Adolesc Med. 2010 Jul;164(7):664–672. doi: 10.1001/archpediatrics.2010.104.

- 15. Primack B, Carroll M, McNamara M, Klem M, King B, Rich M, Chan C, Nayak S. Role of video games in improving health-related outcomes: a systematic review. Am J Prev Med. 2012 Jun;42(6):630–638. doi: 10.1016/j.amepre.2012.02.023.

- 16. Donchin E. Video games as research tools: The Space Fortress game. Behavior Research Methods, Instruments, & Computers. 1995 Jun;27(2):217–223. doi: 10.3758/BF03204735.

- 17. McPherson J, Burns NR. Gs invaders: assessing a computer game-like test of processing speed. Behav Res Methods. 2007 Nov;39(4):876–83. doi: 10.3758/bf03192982.

- 18. McPherson J, Burns NR. Assessing the validity of computer-game-like tests of processing speed and working memory. Behav Res Methods. 2008 Nov;40(4):969–81. doi: 10.3758/BRM.40.4.969.

- 19. Trapp W, Landgrebe M, Hoesl K, Lautenbacher S, Gallhofer B, Günther W, Hajak G. Cognitive remediation improves cognition and good cognitive performance increases time to relapse--results of a 5 year catamnestic study in schizophrenia patients. BMC Psychiatry. 2013;13:184. doi: 10.1186/1471-244X-13-184.

- 20. Gamberini L, Martino F, Seraglia B, Spagnolli A, Fabregat M, Ibanez F, Alcaniz M, Andres J. Eldergames project: An innovative mixed reality table-top solution to preserve cognitive functions in elderly people. 2nd Conference on Human System Interactions; May 21-23, 2009; Catania. 2009. pp. 164–169.

- 21. Gamberini L, Cardullo S, Seraglia B, Bordin A. Neuropsychological testing through a Nintendo Wii console. Stud Health Technol Inform. 2010;154:29–33.

- 22. Dovis S, Van der Oord S, Wiers R, Prins PJM. Can motivation normalize working memory and task persistence in children with attention-deficit/hyperactivity disorder? The effects of money and computer-gaming. J Abnorm Child Psychol. 2012 Jul;40(5):669–81. doi: 10.1007/s10802-011-9601-8.

- 23. Delisle J, Braun CMJ. A context for normalizing impulsiveness at work for adults with attention deficit/hyperactivity disorder (combined type). Arch Clin Neuropsychol. 2011 Nov;26(7):602–13. doi: 10.1093/arclin/acr043.

- 24. Prins PJ, Dovis S, Ponsioen A, ten Brink E, van der Oord S. Does computerized working memory training with game elements enhance motivation and training efficacy in children with ADHD? Cyberpsychol Behav Soc Netw. 2011 Mar;14(3):115–22. doi: 10.1089/cyber.2009.0206.

- 25. Lim, Lee, Guan, Fung DSS, Zhao, Teng SSW, Zhang, Krishnan KRR. A brain-computer interface based attention training program for treating attention deficit hyperactivity disorder. PLoS One. 2012;7(10). doi: 10.1371/journal.pone.0046692.

- 26. Heller M, Roots K, Srivastava S, Schumann J, Srivastava J, Hale T. A Machine Learning-Based Analysis of Game Data for Attention Deficit Hyperactivity Disorder Assessment. Games Health J. 2013 Oct;2(5):291–8. doi: 10.1089/g4h.2013.0058.

- 27. Verhaegh J, Fontijn W, Aarts E, Resing W. In-game assessment and training of nonverbal cognitive skills using TagTiles. Pers Ubiquit Comput. 2012 Apr 22;17(8):1637–1646. doi: 10.1007/s00779-012-0527-0.

- 28. Aalbers T, Baars MAE, Olde Rikkert MGM, Kessels RPC. Puzzling with online games (BAM-COG): reliability, validity, and feasibility of an online self-monitor for cognitive performance in aging adults. J Med Internet Res. 2013;15(12):e270. doi: 10.2196/jmir.2860.

- 29. Fagundo, Santamaría, Forcano, Giner-Bartolomé, Jiménez-Murcia, Sánchez, Granero, Ben-Moussa, Magnenat-Thalmann, Konstantas, Lam, Lucas, Nielsen, Bults, Tarrega, Menchón, de la Torre, Cardi, Treasure, Fernández-Aranda. Video game therapy for emotional regulation and impulsivity control in a series of treated cases with bulimia nervosa. European Eating Disorders Review. 2013 Nov;21(6):493–499. doi: 10.1002/erv.2259.

- 30. Anguera J, Boccanfuso J, Rintoul J, Al-Hashimi O, Faraji F, Janowich J, Kong E, Larraburo Y, Rolle C, Johnston E, Gazzaley A. Video game training enhances cognitive control in older adults. Nature. 2013 Sep 5;501(7465):97–101. doi: 10.1038/nature12486.

- 31. van der Oord S, Ponsioen AJ, Geurts HM, Ten Brink EL, Prins PJ. A pilot study of the efficacy of a computerized executive functioning remediation training with game elements for children with ADHD in an outpatient setting: outcome on parent- and teacher-rated executive functioning and ADHD behavior. J Atten Disord. 2014 Nov;18(8):699–712. doi: 10.1177/1087054712453167.

- 32. Brown H, Zeidman P, Smittenaar P, Adams R, McNab F, Rutledge R, Dolan R. Crowdsourcing for Cognitive Science - The Utility of Smartphones. PLoS One. 2014 Jul 15;9. doi: 10.1371/journal.pone.0100662.

- 33. Tong T, Chignell M. Developing a Serious Game for Cognitive Assessment: Choosing Settings and Measuring Performance. Proceedings of the Second International Symposium of Chinese CHI; Chinese CHI 2014; April 26-27, 2014; Toronto, Canada. 2014. pp. 70–79.

- 34. Katz B, Jaeggi S, Buschkuehl M, Stegman A, Shah P. Differential effect of motivational features on training improvements in school-based cognitive training. Front Hum Neurosci. 2014;8:242. doi: 10.3389/fnhum.2014.00242.

- 35. Lee T, Goh SJ, Quek S, Phillips R, Guan C, Cheung Y, Feng L, Teng SS, Wang C, Chin Z, Zhang H, Ng T, Lee J, Keefe R, Krishnan KR. A brain-computer interface based cognitive training system for healthy elderly: a randomized control pilot study for usability and preliminary efficacy. PLoS One. 2013 Nov 18;8(11):e79419. doi: 10.1371/journal.pone.0079419.

- 36. Miranda A, Palmer E. Intrinsic motivation and attentional capture from gamelike features in a visual search task. Behav Res Methods. 2014 Mar;46(1):159–72. doi: 10.3758/s13428-013-0357-7.

- 37. Atkins S, Sprenger A, Colflesh G, Briner T, Buchanan J, Chavis S, Chen S, Iannuzzi G, Kashtelyan V, Dowling E, Harbison J, Bolger D, Bunting M, Dougherty M. Measuring working memory is all fun and games: a four-dimensional spatial game predicts cognitive task performance. Exp Psychol. 2014;61(6):417–438. doi: 10.1027/1618-3169/a000262.

- 38. Dörrenbächer S, Müller P, Tröger J, Kray J. Dissociable effects of game elements on motivation and cognition in a task-switching training in middle childhood. Front Psychol. 2014;5:1275. doi: 10.3389/fpsyg.2014.01275.

- 39. McNab F, Dolan R. Dissociating distractor-filtering at encoding and during maintenance. J Exp Psychol Hum Percept Perform. 2014 Jun;40(3):960–7. doi: 10.1037/a0036013.

- 40. Dennis TA, O'Toole L. Mental Health on the Go: Effects of a Gamified Attention Bias Modification Mobile Application in Trait Anxious Adults. Clin Psychol Sci. 2014 Sep 1;2(5):576–590. doi: 10.1177/2167702614522228.

- 41. Delgado MT, Uribe PA, Alonso AA, Díaz RR. TENI: A comprehensive battery for cognitive assessment based on games and technology. Child Neuropsychol. 2016;22(3):276–91. doi: 10.1080/09297049.2014.977241.

- 42. de Vries M, Prins PJ, Schmand B, Geurts H. Working memory and cognitive flexibility-training for children with an autism spectrum disorder: a randomized controlled trial. J Child Psychol Psychiatry. 2015 May;56(5):566–76. doi: 10.1111/jcpp.12324.

- 43. Dovis S, Van der Oord S, Wiers RW, Prins PJ. Improving executive functioning in children with ADHD: training multiple executive functions within the context of a computer game. a randomized double-blind placebo controlled trial. PLoS One. 2015;10(4):e0121651. doi: 10.1371/journal.pone.0121651.

- 44. Kim K, Choi Y, You H, Na D, Yoh MS, Park JK, Seo JH, Ko MH. Effects of a serious game training on cognitive functions in older adults. J Am Geriatr Soc. 2015 Mar;63(3):603–5. doi: 10.1111/jgs.13304.

- 45. Manera V, Petit P, Derreumaux A, Orvieto I, Romagnoli M, Lyttle G, David R, Robert P. 'Kitchen and cooking,' a serious game for mild cognitive impairment and Alzheimer's disease: a pilot study. Front Aging Neurosci. 2015;7:24. doi: 10.3389/fnagi.2015.00024.

- 46. Ninaus M, Pereira G, Stefitz R, Prada R, Paiva A, Neuper C, Wood G. Game elements improve performance in a working memory training task. IJSG. 2015 Feb 10;2(1):3–16. doi: 10.17083/ijsg.v2i1.60.

- 47. Tarnanas I, Laskaris N, Tsolaki M, Muri R, Nef T, Mosimann U. On the comparison of a novel serious game and electroencephalography biomarkers for early dementia screening. Adv Exp Med Biol. 2015;821:63–77. doi: 10.1007/978-3-319-08939-3_11.

- 48. Eriksson B, Musialik M, Wagner J. Gamification - Engaging the Future. Student essay. 2012 Aug 6. [2016-07-08]. https://gupea.ub.gu.se/handle/2077/30037

- 49. Sirály E, Szabó Á, Szita B, Kovács V, Fodor Z, Marosi C, Salacz P, Hidasi Z, Maros V, Hanák P, Csibri E, Csukly G. Monitoring the early signs of cognitive decline in elderly by computer games: an MRI study. PLoS One. 2015 Feb 23;10(2):e0117918. doi: 10.1371/journal.pone.0117918.

- 50. Ryan R, Deci E. Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. American Psychologist. 2000;55(1):68–78. doi: 10.1037/0003-066X.55.1.68.

- 51. Ryan R, Rigby C, Przybylski A. The Motivational Pull of Video Games: A Self-Determination Theory Approach. Motiv Emot. 2006 Nov 29;30(4):344–360. doi: 10.1007/s11031-006-9051-8.

- 52. Colzato LS, van den Wildenberg M, Zmigrod S, Hommel B. Action video gaming and cognitive control: playing first person shooter games is associated with improvement in working memory but not action inhibition. Psychol Res. 2013 Mar;77(2):234–9. doi: 10.1007/s00426-012-0415-2.

- 53. Durlach P, Kring J, Bowens L. Effects of action video game experience on change detection. Mil Psychol. 2009;21(1):24–39.

- 54. Green C, Bavelier D. Enumeration versus multiple object tracking: the case of action video game players. Cognition. 2006 Aug;101(1):217–45. doi: 10.1016/j.cognition.2005.10.004.

- 55. Dobrowolski P, Hanusz K, Sobczyk B, Skorko M, Wiatrow A. Cognitive enhancement in video game players: The role of video game genre. Computers in Human Behavior. 2015 Mar;44:59–63. doi: 10.1016/j.chb.2014.11.051.

- 56. Dye MWG, Green CS, Bavelier D. Increasing Speed of Processing With Action Video Games. Curr Dir Psychol Sci. 2009;18(6):321–326. doi: 10.1111/j.1467-8721.2009.01660.x.

- 57. Abikoff H. Efficacy of cognitive training interventions in hyperactive children: A critical review. Clinical Psychology Review. 1985;5(5):479–512. doi: 10.1016/0272-7358(85)90005-4.

- 58. Jaeggi S, Buschkuehl M, Jonides J, Shah P. Short- and long-term benefits of cognitive training. PNAS. 2011 Jun 13;108(25):10081–10086. doi: 10.1073/pnas.1103228108.

- 59. Melby-Lervåg M, Hulme C. Is working memory training effective? A meta-analytic review. Dev Psychol. 2013 Feb;49(2):270–91. doi: 10.1037/a0028228.

- 60. Burgess P, Alderman N, Forbes C, Costello A, Coates L, Dawson D, Anderson N, Gilbert S, Dumontheil I, Channon S. The case for the development and use of “ecologically valid” measures of executive function in experimental and clinical neuropsychology. J Int Neuropsychol Soc. 2006 Mar;12(2):194–209. doi: 10.1017/S1355617706060310.

- 61. Kolb D. Experiential learning: experience as the source of learning and development. Englewood Cliffs, NJ: Prentice-Hall; 1984.

- 62. Prins PJ, Brink E, Dovis S, Ponsioen A, Geurts H, de Vries M, van der Oord S. “Braingame Brian”: Toward an Executive Function Training Program with Game Elements for Children with ADHD and Cognitive Control Problems. Games Health J. 2013 Feb;2(1):44–9. doi: 10.1089/g4h.2013.0004.

- 63. Verhaegh J, Fontijn W, Resing W. On the correlation between children's performances on electronic board tasks and nonverbal intelligence test measures. Computers & Education. 2013 Nov;69:419–430. doi: 10.1016/j.compedu.2013.07.026.

- 64. Kok A, Kong T, Bernard-Opitz V. A comparison of the effects of structured play and facilitated play approaches on preschoolers with autism. A case study. Autism. 2002 Jun;6(2):181–96. doi: 10.1177/1362361302006002005.

- 65. WebMD. Video Games and ADHD: What's the Link? 2012. [2016-04-19]. http://www.webmd.com/add-adhd/childhood-adhd/features/adhd-and-video-games-is-there-a-link?print=true

- 66. Dougherty D, Bonab A, Spencer T, Rauch S, Madras B, Fischman A. Dopamine transporter density in patients with attention deficit hyperactivity disorder. Lancet. 1999;354(9196):2132–3. doi: 10.1016/S0140-6736(99)04030-1.

- 67. LaHoste, Swanson, Wigal, Glabe, Wigal, King, Kennedy. Dopamine D4 receptor gene polymorphism is associated with attention deficit hyperactivity disorder. Mol Psychiatry. 1996 May;1(2):121–4.

- 68. Nieoullon A. Dopamine and the regulation of cognition and attention. Prog Neurobiol. 2002 May;67(1):53–83. doi: 10.1016/s0301-0082(02)00011-4.

- 69. Nieoullon A, Coquerel A. Dopamine: a key regulator to adapt action, emotion, motivation and cognition. Curr Opin Neurol. 2003 Dec;16 Suppl 2:S3–9.

- 70. Kato P. What do you mean when you say your serious game has been validated? Experimental vs. Test Validity. Pam Kato's Blog. 2013 Apr 05. https://pamkato.com/2013/04/25/what-do-you-mean-when-you-say-your-serious-game-has-been-validated-experimental-vs-test-validty/

- 71. Kato PM. Evaluating Efficacy and Validating Games for Health. Games Health J. 2012 Feb;1(1):74–6. doi: 10.1089/g4h.2012.1017.

- 72. Mekler E, Brühlmann F, Opwis K, Tuch A. Do Points, Levels & Leaderboards Harm Intrinsic Motivation?: An Empirical Analysis of Common Gamification Elements. Proceedings of the First International Conference on Gameful Design, Research, and Applications; Gamification 2013; October 02-04, 2013; Stratford, ON, Canada. 2013. pp. 66–73.

- 73. Preist C, Massung E, Coyle D. Competing or Aiming to Be Average?: Normification As a Means of Engaging Digital Volunteers. Proceedings of the 17th ACM conference on Computer supported cooperative work & social computing; February 15-19, 2014; Baltimore, MD. 2014. pp. 1222–33.


Supplementary Materials

Five tables detailing coding and categorization in the main manuscript. Table 1 lists the reasons for using gamification in cognitive training and testing. Table 2 categorizes games by the age group they were aimed at. Table 3 lists games by training or testing category. Tables 4 and 5 list games by the cognitive domains which they targeted.

Additional information on the papers covered by this review that is highly detailed and intended for further analysis in future papers or for other researchers to assess themselves. It contains short text-based descriptions of all 31 games and a screenshot where the paper included one. There is also a detailed breakdown of the game mechanics in each task.