Abstract

The neural basis of emotion perception has mostly been investigated with single face or body stimuli. However, in daily life one may also encounter affective expressions by groups, e.g. an angry mob or an exhilarated concert crowd. In what way is brain activity modulated when several individuals express similar rather than different emotions? We investigated this question using an experimental design in which we presented two stimuli simultaneously, with same or different emotional expressions. We hypothesized that, in the case of two same-emotion stimuli, brain activity would be enhanced, while in the case of two different emotions, one emotion would interfere with the effect of the other. The results showed that the simultaneous perception of different affective body expressions leads to a deactivation of the amygdala and a reduction of cortical activity. The processing of fearful bodies, compared with different-emotion bodies, relied more strongly on saliency and action-triggering regions in inferior parietal lobe and insula, while happy bodies drove the occipito-temporal cortex more strongly. We showed that this design could be used to uncover important differences between brain networks underlying fearful and happy emotions. The enhancement of brain activity for unambiguous affective signals expressed by several people simultaneously supports adaptive behaviour in critical situations.

Keywords: emotion, body perception, fMRI, amygdala, parietal lobe, occipito-temporal cortex

Introduction

The ability to detect social and affective signals is paramount to human cognitive functioning. In daily life, these signals are often conveyed by several people simultaneously, in a group or crowd. When these social signals convey a threat (anger) or indicate a threatening environment (fear), rapid detection has obvious adaptive benefits. Survival in dangerous situations relies not only on the rapid detection of emotions; detection also needs to be followed immediately by action. Therefore, the cognitive system seems to be wired so that perceptual and attentional processes are biased to detect face and body expressions that signal threat (Fox et al., 2000, 2001; Calvo and Esteves, 2005; Tamietto et al., 2007). For example, an angry facial expression is detected more quickly than a happy face in a crowd of emotionally neutral faces (Fox et al., 2000).

Emotion perception relies on wide-spread, functional brain networks that are largely overlapping across the different emotions (Lindquist et al., 2012). Relevant subcortical and cortical regions include the insula, the amygdala, the anterior cingulate cortex, and the prefrontal cortex. Neuroimaging studies on emotion perception have shown that the affective content of stimuli, such as faces or bodies, modulates activity in the ventral and dorsal object processing streams (Mishkin et al., 1983). Conscious perception of affective faces and bodies, compared with neutral ones, enhances activity in the object processing regions in the ventral stream, such as the middle temporal gyrus (MTG), around the extrastriate body area (EBA) and the middle temporal (MT) area, the fusiform gyrus (FG) and the superior temporal sulcus (Peelen et al., 2007; Kret et al., 2011). Each emotion seems to put emphasis on different nodes of the cortical network. For example, happiness seems to activate superior temporal gyrus (STG) and FG strongly (Vytal and Hamann, 2010), while fearful faces and bodies activate the amygdala to a greater extent (Hadjikhani and de Gelder, 2003; Vuilleumier and Pourtois, 2007). Nevertheless, this distinction does not always hold as, for example, fearful stimuli also modulate FG (Vuilleumier and Pourtois, 2007). Moreover, the action system seems to be closely linked to the perception of affective stimuli. For example, de Gelder et al. (2004) showed that fearful bodies activated a number of regions involved in action representation, such as the pre-central gyrus, the supplementary motor area, the inferior parietal lobe (IPL) and the intraparietal sulcus (IPS). Additionally, a series of electromyography studies showed that during the perception of angry or fearful body postures most muscles that were activated for the expression of the emotion automatically re-activated (Huis In ’t Veld et al., 2014a,b). 
This suggests that seeing static photos of people expressing emotions through their body posture automatically activates one’s own action system at the level of the muscles. These findings are in line with evidence that the observation of actions activates one’s own cortical motor system (Gallese et al., 1996) and that the observation of emotions increases cortico-spinal motor tract excitability (Hajcak et al., 2007; Schutter et al., 2008).

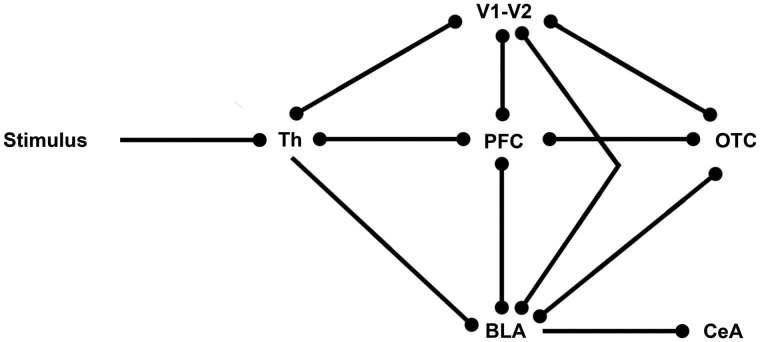

A neurobiological model has been proposed that supports the rapid processing of affective signals and links emotion-related structures in prefrontal cortex with the ventral stream object processing areas (Rudrauf et al., 2008). This two-pathway model, which is based on magnetoencephalography data, consists of one feedforward pathway travelling from the thalamus to the visual cortex (or auditory cortex—depending on the stimulation modality), and along the ventral stream in inferior temporal cortex (ITC). The other pathway is suggested to consist of the subcortical retino-tectal pathway that links from the thalamus directly to the affective system, and long-range fasciculi that range from V1 to the affective system, from which feedback is sent to the other pathway and vice versa (Rudrauf et al., 2008; Garrido et al., 2012). The cortical pathway would support conscious processing of affective stimuli, while the subcortical pathway, through the thalamus and amygdala, could account for subconscious processing. This two-pathway model shows close links to the conceptual model of emotional body language, which also includes one cortical and one subcortical network, as proposed by de Gelder (2006). In Figure 1, we provide an elementary model of how these two pathways could be interconnected.

Fig. 1.

Elementary model of visual emotion processing. Th, thalamus; V1–V2, primary and secondary visual cortex; PFC, prefrontal cortex; BLA, basolateral complex of the amygdala; CeA, central amygdala; OTC, occipito-temporal cortex.

These models are supported by empirical evidence from neuroimaging studies. As discussed previously, conscious perception of affective faces and bodies activates regions in the ventral and dorsal processing streams more strongly than the perception of neutral stimuli. In the ventral stream affective content modulates the object processing regions, while in the dorsal stream it stimulates action representation (de Gelder et al., 2004). These findings support the presence of a feedforward pathway of emotion perception, along the regular visual object processing streams, toward the anterior affective system and the prefrontal regions. However, other studies have indicated that affective stimuli may also be processed subconsciously, and that these stimuli are more salient. For example, in a study by Calvo and Esteves (2005), participants had higher sensitivity and accuracy for affective faces that were masked than for neutral masked faces. Also, in flash suppression paradigms (a technique where an image presented to one eye is suppressed by presenting a rapidly flashing pattern to the other eye) fearful expressions often emerge from suppression more quickly than other stimuli (for a review, see Yang et al., 2014). These results point to a preference for rapid processing of affective stimuli. Specific support for the existence of the subcortical pathway comes from studies which show the modulatory influence of the amygdala on ventral stream regions, such as the FG. For example, Vuilleumier et al. (2004) demonstrated that patients with a structurally impaired amygdala had reduced brain activity in FG and other cortical regions in response to fearful stimuli.

The amygdala may play an important role in the modulation of cortical responses to emotional stimuli and subsequent behavioural adaptations. Neurophysiological research on associative learning in animals demonstrated the existence of neural populations in the amygdala which are specifically responsive to stimuli predicting reward or fear (e.g. Zhang et al., 2013). Janak and Tye (2015) proposed a circuit of mutual inhibition through interneurons between fear-encoding and reward-encoding neurons in the basolateral complex of the amygdala (BLA). These different populations of neurons in turn connect to populations of neurons in the central nucleus of the amygdala, which promote avoidance or approach behaviour (see also Steimer, 2002; Sah et al., 2003). This circuit would allow for quick adaptive responses to different emotional stimuli. The amygdala could not only, as suggested in the model by Rudrauf et al. (2008), modulate activity in the ventral stream regions, but it could also, as illustrated in de Gelder (2006), directly link to reflex-like behaviour and motor planning. The two affective pathways seem to operate in parallel, but do not seem to be segregated. If one of the two pathways is disabled, information may still be partially processed by the other pathway. For example, subconscious processing of emotional stimuli may also occur without the amygdala (Tsuchiya et al., 2009). Furthermore, interactions between regions in the two pathways do seem to take place, especially between the ITC, the amygdala and the orbito-frontal cortex (Steimer, 2002; Rudrauf et al., 2008).

The mechanisms of emotion recognition and adaptive behavioural responses described earlier are in place when the expressed emotions are clear-cut. However, emotions conveyed by several people simultaneously are not always unambiguous. In some situations, e.g. during a soccer match, crowds may express a variety of emotions such as fear, anger and joy. So far, it remains unclear how the brain processes this type of incongruent affective information. In this study, using a novel experimental design, we seek to further disentangle emotion-specific nodes in the affective processing networks and investigate the effects of perceiving multiple emotions simultaneously on the two affective pathways. Although the presence of a behavioural task is essential to take attentional effects into account, explicit emotion identification often dampens activations that are characteristic of specific emotions (de Gelder et al., 2012). Here we used a novel approach, where participants were shown stimulus pairs rather than single stimuli. The stimulus pairs consisted of two affective stimuli of different identities, with either the same or a different affective expression. Participants covertly judged whether the emotions in the stimulus pairs were identical or different. As we were particularly interested in the modulation of regions in the action network, participants viewed bodily expressions of emotion as well as affective faces. We hypothesized that the same-emotion stimulus pairs would lead to increased activation for the specific emotion shown, while in the case of different-emotion pairs, each emotion would interfere with the effect of the other. As a possible underlying mechanism, the conflicting incoming information of the different-emotion pairs could lead to simultaneous mutual inhibition in the BLA, reducing its output. 
This in turn (see Figure 1) would further diminish the activity in cortical emotion-processing regions and the preparation of affective behaviour (Rosenkranz and Grace, 2001; Janak and Tye, 2015). The design of this study made the distinction between the same-emotion and different-emotion trials more pronounced than with a single stimulus presentation. Moreover, the results could be a starting point to better understand emotional expression effects of groups rather than individuals (Huis In ’t Veld and de Gelder, 2015).

Materials and methods

Participants

Twenty-six healthy right-handed volunteers (12 males, mean age 29.5 years, range 18–68) participated in the fMRI experiment. All participants had normal or corrected-to-normal visual acuity and gave their informed consent. The study was approved by the local ethical committee.

Stimuli

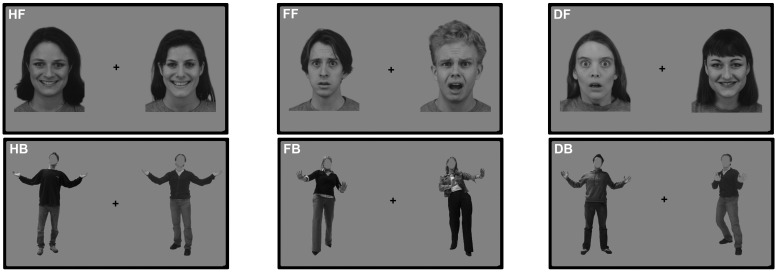

The stimuli were black and white photographs of faces and bodies. The face stimuli were taken from the Karolinska Directed Emotional Faces database (Lundqvist et al., 1998), while the body stimuli came from the Bodily Expressive Action Stimulus Test (de Gelder and Van den Stock, 2011). The face stimuli consisted of 10 happy faces and 10 fearful faces of different identities. The body stimuli consisted of 10 happy bodies and 10 fearful bodies of different identities. In the body stimuli, the faces were masked by a grey oval the size of the face. An equal number of male and female stimuli were used, and they were equally divided between the stimulus categories. For examples, see Figure 2.

Fig. 2.

Task design. An illustration of the six different conditions is displayed (not true size). HF, happy faces; HB, happy bodies; FF, fearful faces; FB, fearful bodies; DF, different faces; DB, different bodies.

Design and task

A blocked design was used for this experiment. A total of 32 blocks were presented to the participants in one functional run. The run started with 12 s of fixation and ended with 32 s of fixation. The duration of each block was 12 s with a 12 s inter-block interval. Within each block, 12 stimulus pairs were presented consecutively. Each stimulus pair consisted of two same-sex stimuli that were presented simultaneously on the left and right side of a central fixation cross for 800 milliseconds (ms), each at a 4.8° visual angle (from fixation cross to centre of the stimulus). The inter-stimulus interval was 200 ms. The participants were instructed to fixate on the cross and to check whether the emotion displayed in the two stimuli was the same or different without making any overt response. Within a block, the stimuli were either all faces or all bodies. In half of the blocks the emotions were different (‘Different’ blocks), while in the other half the emotions were the same. This led to six different experimental conditions: ‘Fearful Bodies’ (FB), ‘Happy Bodies’ (HB), ‘Different Bodies’ (DB), ‘Fearful Faces’ (FF), ‘Happy Faces’ (HF) and ‘Different Faces’ (DF). The location of the different emotions was counterbalanced.

fMRI parameters

A 3T Siemens Prisma MR head scanner (Siemens Medical Systems, Erlangen, Germany) was used for imaging. Functional scans were acquired with a Gradient Echo Echo-Planar Imaging sequence with a Repetition Time (TR) of 2000 ms and an Echo Time (TE) of 30 ms. For the functional run, 400 volumes were acquired comprising 35 slices (matrix = 78 × 78, voxel size = 3 mm isotropic, interslice time = 57 ms, flip angle = 90°). High-resolution T1-weighted structural images of the whole brain were acquired using an MPRAGE sequence with 192 slices, matrix = 256 × 256, voxel dimensions = 1 mm isotropic, TR = 2250 ms, TE = 2.17 ms, flip angle = 9°.
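The block timing and the acquisition parameters reported above can be cross-checked with simple arithmetic. The sketch below is only an illustration: the function names are hypothetical, and it assumes that the 12 s inter-block interval separates consecutive blocks only (31 intervals for 32 blocks), which the text does not state explicitly.

```python
TR_S = 2          # repetition time in seconds (from the text)
N_VOLUMES = 400   # volumes acquired in the functional run (from the text)
N_SLICES = 35     # slices per volume (from the text)


def run_duration_s(n_blocks=32, block_s=12, gap_s=12, lead_in_s=12, lead_out_s=32):
    """Designed run length in seconds.

    Assumption: the inter-block interval occurs only between consecutive
    blocks, so there are n_blocks - 1 intervals.
    """
    return lead_in_s + n_blocks * block_s + (n_blocks - 1) * gap_s + lead_out_s


def pairs_per_block(block_s=12, stim_ms=800, isi_ms=200):
    """Number of stimulus pairs that fit into one block."""
    return (block_s * 1000) // (stim_ms + isi_ms)


def interslice_time_ms(tr_ms=TR_S * 1000, n_slices=N_SLICES):
    """Time between successive slice acquisitions within one volume."""
    return tr_ms / n_slices


if __name__ == "__main__":
    print("designed run length (s):", run_duration_s())
    print("scanned duration (s):   ", N_VOLUMES * TR_S)
    print("pairs per block:        ", pairs_per_block())
    print("interslice time (ms):   ", round(interslice_time_ms()))
```

Under this reading, the designed run length of 800 s equals the scanned duration of 400 volumes at a TR of 2 s, and the 800 ms presentation plus 200 ms interval yields the reported 12 pairs per 12 s block.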

fMRI pre-processing and data analyses

All functional MRI data were analysed using the fMRI analysis and visualisation software BrainVoyager QX (Brain Innovation B.V., Maastricht, The Netherlands). Functional data were 3D motion corrected (trilinear interpolation), corrected for slice scan time differences, temporally filtered [General Linear Model (GLM) with Fourier basis set using two cycles] and spatially smoothed with a 4-mm Gaussian filter. The anatomical data were corrected for intensity inhomogeneity (Goebel et al., 2006) and transformed into Talairach space (Talairach and Tournoux, 1988). The functional data were aligned with the anatomical data and transformed into the same space, to create 4D volume time-courses.

The functional runs were analysed using voxel-wise multiple linear regression (GLM) of the blood-oxygenation-level-dependent (BOLD) response time courses. The GLM analyses were performed at single-subject and group level. All six experimental conditions were modelled as predictors that assumed the value of ‘1’ for the volumes during which the block was presented and ‘0’ for all other volumes. These predictor boxcar functions were convolved with a two-gamma hemodynamic response function. Individual activation maps were calculated using a single-subject GLM and corrected using a False Discovery Rate of 0.05 (Genovese et al., 2002). Group activation maps (Figures 3–5) were calculated with a random effects GLM analysis. Linear contrasts served to implement comparisons of interest. The correction for multiple comparisons was performed for each linear contrast separately by using cluster threshold estimation as implemented in BrainVoyager QX (Forman et al., 1995; Goebel et al., 2006), based on permutations with an initial uncorrected threshold of α = 0.01 at t(25) = 2.79. Cluster thresholding was obtained through Monte Carlo simulation (n = 1000) of the random process of image generation, followed by the injection of spatial correlations between neighbouring voxels, voxel intensity thresholding and cluster identification, yielding a correction for multiple comparisons at the cluster level for α = 0.05. The mean time courses in each region of interest (ROI) (Supplementary Figure S1) were calculated by averaging the time course segments belonging to the same condition across subjects.
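To make the construction of a block predictor concrete, the following minimal sketch builds a boxcar for one condition and convolves it with a two-gamma response function. It is not the BrainVoyager QX implementation: the gamma shape parameters (6 and 16) and the undershoot ratio (1/6) are commonly used illustrative values assumed here, the block indices are hypothetical because the block order is not reported, and all function names are made up for this example.

```python
import math

TR_S = 2.0         # repetition time in seconds (from the text)
N_VOLUMES = 400    # volumes in the functional run (from the text)
BLOCK_S = 12.0     # block duration in seconds (from the text)
GAP_S = 12.0       # inter-block interval in seconds (from the text)
LEAD_IN_S = 12.0   # initial fixation in seconds (from the text)


def boxcar(block_indices):
    """Return a predictor that is 1 during the given blocks and 0 elsewhere."""
    x = [0.0] * N_VOLUMES
    for b in block_indices:
        onset_s = LEAD_IN_S + b * (BLOCK_S + GAP_S)
        first = int(round(onset_s / TR_S))
        for v in range(first, first + int(BLOCK_S / TR_S)):
            x[v] = 1.0
    return x


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return math.exp((shape - 1) * math.log(t) - t - math.lgamma(shape))


def two_gamma_hrf(duration_s=30.0, peak_shape=6.0, under_shape=16.0, ratio=1 / 6):
    """Two-gamma HRF sampled at the TR (parameter values are assumptions)."""
    n = int(duration_s / TR_S)
    h = [gamma_pdf(i * TR_S, peak_shape) - ratio * gamma_pdf(i * TR_S, under_shape)
         for i in range(n)]
    total = sum(h)
    return [v / total for v in h]  # normalise to unit sum


def convolve(x, h):
    """Causal discrete convolution, truncated to the length of x."""
    y = [0.0] * len(x)
    for i in range(len(x)):
        y[i] = sum(x[i - j] * h[j] for j in range(len(h)) if i - j >= 0)
    return y


if __name__ == "__main__":
    hypothetical_blocks = [0, 6, 12]  # placeholder positions of one condition
    predictor = convolve(boxcar(hypothetical_blocks), two_gamma_hrf())
    print([round(v, 3) for v in predictor[4:20]])
```

In a full design matrix, one such convolved predictor per condition (six here) would be entered into the regression together with a constant term.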

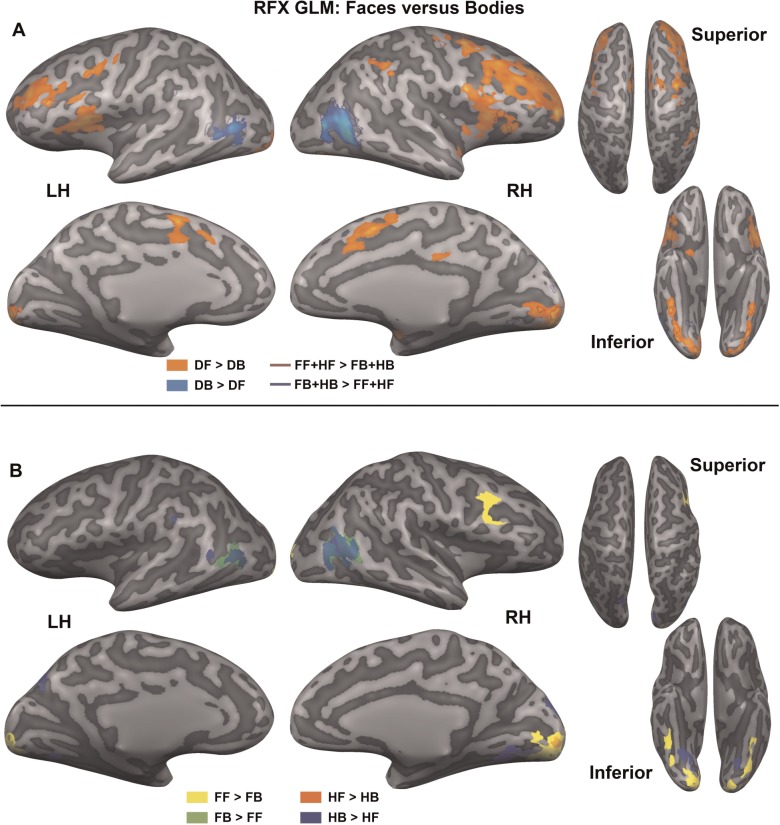

Fig. 3.

Same-emotion vs different-emotion body pairs. The maps show significantly activated voxels [Pcorr < 0.05] for three different linear contrasts, superimposed on an inflated representation of the cortical sheet: HB > DB (yellow), FB > DB (blue) and FB + HB > DB (red). The functional cluster in the amygdala (bottom right, in red) is shown in a coronal slice in isolation (left) or superimposed on the SF (in green) and BLA (in purple) subdivisions of the amygdala as defined by the anatomical probability maps (right). HB, happy bodies; FB, fearful bodies; DB, different bodies; SF, superficial group amygdala; BLA, basolateral complex amygdala.

Fig. 4.

Faces vs bodies. The top (A) shows significantly activated voxels (Pcorr < 0.05) for four linear contrasts that compare object category over all emotions, superimposed on an inflated representation of the cortical sheet: DF > DB (orange), FF + HF > FB + HB (red outline), DB > DF (blue), FB + HB > FF + HF (dark blue outline). The bottom (B) shows four linear contrasts that compare object category for each emotion separately: FF > FB (yellow), FB > FF (green), HF > HB (orange), HB > HF (blue). DF, different faces; DB, different bodies; FF, fearful faces; HF, happy faces; FB, fearful bodies; HB, happy bodies.

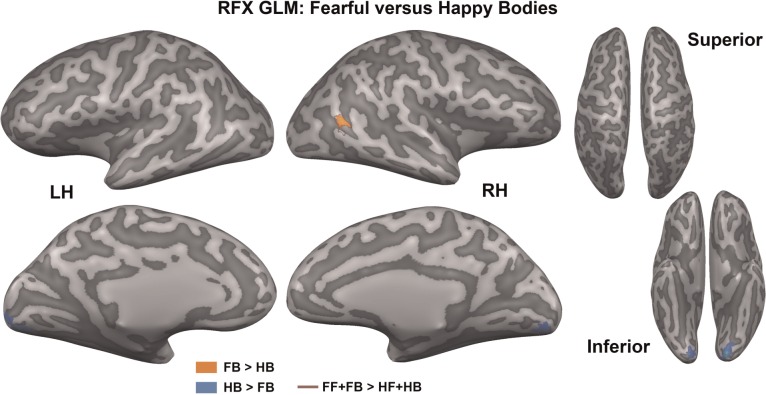

Fig. 5.

Fearful vs happy bodies. The map shows significantly activated voxels (Pcorr < 0.05) for three linear contrasts that compare emotion conditions, superimposed on an inflated representation of the cortical sheet: FB > HB (orange), HB > FB (blue) and FF + FB > HF + HB (red outline). FB, fearful bodies; HB, happy bodies; FF, fearful faces; HF, happy faces.

The activity in the amygdala (see Figure 3) was localized to a specific subdivision using cytoarchitectonic maps of the SPM Anatomy Toolbox (Version 2.1, Forschungszentrum Jülich GmbH; Eickhoff et al., 2005). This toolbox contains the anatomical probability maps of several subdivisions of the amygdala, including the superficial, latero-basal and centro-medial complex (Amunts et al., 2005). The toolbox gives the option to enter a set of stereotaxic coordinates, which can be in MNI space or Talairach space. The toolbox shows the location of the voxel, gives information about the cytoarchitectonic region found at that position, and gives the corresponding probability of that voxel belonging to the reported region. We entered the Talairach coordinates of the peak voxel of our functional cluster of interest into the toolbox and the resulting subdivision (BLA) and accompanying probability (76%) were reported. To verify whether not only the peak voxel but the entire functional cluster was located in the BLA and to display the results (Figure 3), we extracted all available sub-regions of the amygdala from the SPM Anatomy Toolbox. Each voxel in a probabilistic region reflects the cytoarchitectonic probability (10–100%) of belonging to that region. We followed the procedure of obtaining maximum probability maps as described in Eickhoff et al. (2006), as these are thought to provide ROIs that best reflect the anatomical hypotheses. This meant that all voxels in the ROI that were assigned to a certain area were set to ‘1’ and the rest of the voxels were set to ‘0’. We also extracted the Colin27 anatomical data to help verify the subsequent transformations. 
In order to transform the Colin27 anatomical data and the amygdala sub-regions from MNI space to Talairach space (as used for the other analyses), we imported the ANALYZE files in BrainVoyager, flipped the x-axis to set the data to radiological format, and rotated the data −90° in the x-axis and +90° in the y-axis to get a sagittal orientation. Subsequently, we transformed the Colin27 anatomical data to Talairach space and applied the same transformations to the cytoarchitectonic ROIs. We then projected the ROIs and functional cluster together and verified that the functional ROI was localized to the BLA.
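The binarisation step behind a maximum probability map can be illustrated with a toy example. The sketch below is a simplification under stated assumptions: it assigns each voxel to the area with the highest probability and ignores the additional thresholding rules of the published procedure; the function names and the probability values are hypothetical and are not taken from the SPM Anatomy Toolbox.

```python
def maximum_probability_masks(prob_maps, min_prob=0.0):
    """Binarise probability maps by assigning each voxel to its most likely area.

    prob_maps: dict mapping an area name to a list of per-voxel probabilities
    (all lists have the same length). Voxels whose highest probability does
    not exceed min_prob stay unassigned. Ties go to the first area listed.
    """
    areas = list(prob_maps)
    n_voxels = len(prob_maps[areas[0]])
    masks = {area: [0] * n_voxels for area in areas}
    for v in range(n_voxels):
        best = max(areas, key=lambda area: prob_maps[area][v])
        if prob_maps[best][v] > min_prob:
            masks[best][v] = 1
    return masks


def overlap_fraction(cluster, mask):
    """Fraction of functional-cluster voxels that fall inside a binary mask."""
    total = sum(1 for c in cluster if c)
    inside = sum(1 for c, m in zip(cluster, mask) if c and m)
    return inside / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical probabilities for four voxels in three amygdala subdivisions.
    toy_maps = {
        "BLA": [0.8, 0.6, 0.1, 0.0],
        "SF": [0.1, 0.3, 0.7, 0.0],
        "CM": [0.0, 0.1, 0.2, 0.0],
    }
    masks = maximum_probability_masks(toy_maps)
    toy_cluster = [1, 1, 1, 0]  # hypothetical functional cluster
    print(masks)
    print(overlap_fraction(toy_cluster, masks["BLA"]))
```

An overlap measure of this kind is one way to express how much of a functional cluster lies within a subdivision; the study itself reports a visual verification of the projected ROIs.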

Results

The goal of this study was to investigate whether there are neural changes that underlie simultaneous viewing of two faces or bodies with the same emotional expressions vs different emotional expressions. Our results showed significant differences for emotion congruency when participants were viewing affective bodies.

Same vs different: conflicting bodily emotions

When comparing the perception of same-emotion body pairs vs different-emotion body pairs (FB + HB > DB), we found significantly increased activation (Pcorr < 0.05; see ‘Materials and Methods’ section) for same-emotion bodies in amygdala, occipitotemporal gyrus (OTG), lingual gyrus (LG), IPL, superior frontal gyrus (SFG) and supramarginal gyrus (SMG) (see Figure 3 and Table 1). The amygdala activation (peak voxel) was located with 76% probability in the left BLA, on the basis of a probabilistic map of the subdivisions of the amygdala (SPM Anatomy Toolbox; Amunts et al., 2005). When the comparison was split by emotion, significantly increased activation (Pcorr < 0.05) for fearful bodies compared with different-emotion bodies (FB > DB) was found in right IPL, SMG and insula (see Figure 3 and Table 1). For happy bodies vs different-emotion bodies (HB > DB), activity was significantly larger (Pcorr < 0.05) in parieto-occipital sulcus (POS), inferior frontal gyrus (IFG), OTG and LG (see Figure 3 and Table 1). For the contrasts and regions in Figure 3, mean time courses for every condition were calculated and are displayed in Supplementary Figure S1. The different-emotion body pairs did not show significantly greater activity than the same-emotion body pairs in any of the above-mentioned comparisons. For the equivalent comparisons with the face conditions there were no significant differences at all.

Table 1.

Peak voxel Talairach coordinates for significant clusters, and their corresponding T- and P-values, in several same-emotion vs different-emotion body pair contrasts

| Region (n = 26, Pcorr < 0.05) | Hemisphere | No. voxels | Talairach x | Talairach y | Talairach z | T-value | P-value |
|---|---|---|---|---|---|---|---|
| Happy Bodies > Different Bodies | | | | | | | |
| Occipitotemporal Gyrus | R | 1230 | 27 | −43 | −11 | 5.12 | 0.000027 |
| Lingual Gyrus | R | 4625 | 24 | −70 | −8 | 4.79 | 0.000064 |
| Parieto-Occipital Sulcus | L | 967 | −15 | −73 | 34 | 4.09 | 0.000392 |
| Inferior Frontal Gyrus | L | 663 | −42 | 50 | 4 | 4.77 | 0.000068 |
| Fearful Bodies > Different Bodies | | | | | | | |
| Supramarginal Gyrus | R | 936 | 54 | −43 | 25 | 4.61 | 0.000101 |
| Inferior Parietal Lobe | R | 3325 | 48 | −43 | 49 | 4.59 | 0.000108 |
| Insula | R | 846 | 36 | 11 | 16 | 4.46 | 0.000153 |
| Happy + Fearful Bodies > Different Bodies | | | | | | | |
| Supramarginal Gyrus | R | 1026 | 51 | −40 | 25 | 4.13 | 0.000356 |
| Inferior Parietal Lobe | R | 903 | 33 | −52 | 40 | 4.10 | 0.000384 |
| Inferior Parietal Lobe | L | 638 | −33 | −64 | 40 | 4.39 | 0.000179 |
| Occipitotemporal Gyrus | R | 973 | 29 | −43 | −11 | 4.33 | 0.000210 |
| Lingual Gyrus | m | 717 | 3 | −70 | 4 | 4.29 | 0.000236 |
| Superior Frontal Gyrus | R | 776 | 42 | 20 | 37 | 3.91 | 0.000623 |
| Amygdala | L | 663 | −21 | −1 | −17 | 4.11 | 0.000372 |

Hemisphere is indicated by R, right; L, left; or m, medial.

Complementary to our main research question, we compared conditions related to stimulus type (faces, bodies) and emotion type (fear, happy) in order to further investigate the underlying mechanisms of conflicting bodily emotions.

Faces vs bodies: stimulus type

Faces and bodies were either compared for all emotions together (Figure 4A), or compared per emotion (Figure 4B). For all emotions together, two types of comparisons were made. The two same-emotion face conditions were compared with both same-emotion body conditions (HF + FF vs HB + FB) and the different-emotion face condition was compared with the different-emotion body condition (DF vs DB). The latter comparison yielded an extensive network of significant regions (Pcorr < 0.05; see Figure 4A and Table 2), while the former significantly activated a subset of these voxels (Pcorr < 0.05), indicated by the red and dark blue outlines in Figure 4A. The comparisons of faces and bodies for specific emotions were split into fearful (FF vs FB) and happy (HF vs HB) emotions (Pcorr < 0.05; see Figure 4B).

Table 2.

Peak voxel Talairach coordinates for significant clusters, and their corresponding T- and P-values, in several face pair vs body pair contrasts

| Region [n = 26, Pcorr < 0.05] | Hemisphere | No. voxels | Talairach x | Talairach y | Talairach z | T-value | P-value |
|---|---|---|---|---|---|---|---|
| Different Faces > Different Bodies | | | | | | | |
| Superior Temporal Gyrus | R | 584 | 39 | 2 | −11 | 5.79 | 0.000005 |
| Occipitotemporal Gyrus | R | 3910 | 36 | −64 | −17 | 5.76 | 0.000005 |
| Occipitotemporal Gyrus | L | 4777 | −42 | −67 | −17 | 7.51 | 0.000000 |
| Lingual Gyrus | R | 5975 | 9 | −91 | −5 | 6.51 | 0.000001 |
| Lingual Gyrus | L | 901 | −6 | −89 | −14 | 4.95 | 0.000042 |
| Inferior Occipital Gyrus | R | 2298 | 21 | −76 | −20 | 4.45 | 0.000157 |
| Inferior Occipital Gyrus | L | 4525 | −33 | −83 | −20 | 6.50 | 0.000001 |
| Postcentral Sulcus to Intraparietal Sulcus | R | 1705 | 45 | −40 | 37 | 5.18 | 0.000024 |
| Insula | R | 8059 | 33 | 11 | 13 | 6.03 | 0.000003 |
| Insula | L | 7517 | −39 | 14 | 16 | 5.73 | 0.000006 |
| Precentral Sulcus | R | 2363 | 33 | −4 | 49 | 6.20 | 0.000002 |
| Precentral Sulcus | L | 841 | −42 | 2 | 37 | 5.00 | 0.000037 |
| Inferior Frontal Gyrus | R | 939 | 54 | 5 | 16 | 6.78 | 0.000000 |
| Inferior Frontal Sulcus | L | 1283 | −39 | 8 | 31 | 4.96 | 0.000041 |
| Middle Frontal Gyrus | R | 14428 | 42 | 32 | 25 | 6.03 | 0.000003 |
| Middle Frontal Gyrus | R | 3934 | 27 | 47 | 10 | 7.67 | 0.000000 |
| Middle Frontal Gyrus | L | 6985 | −30 | 38 | 19 | 6.33 | 0.000001 |
| Superior Frontal Gyrus | m | 8408 | 3 | 8 | 52 | 6.97 | 0.000000 |
| Superior Frontal Gyrus | R | 2101 | 39 | −4 | 55 | 5.65 | 0.000007 |
| Caudate Nucleus | R | 701 | 15 | 17 | 19 | 4.20 | 0.000299 |
| Caudate Nucleus | L | 646 | −12 | 14 | 16 | 5.51 | 0.000010 |
| Putamen | R | 881 | 21 | 8 | 4 | 4.93 | 0.000045 |
| Thalamus | R | 100 | 15 | −16 | 1 | 5.15 | 0.000025 |
| Thalamus | R | 122 | 18 | −19 | 10 | 5.52 | 0.000010 |
| Different Bodies > Different Faces | | | | | | | |
| Inferior Temporal Sulcus | R | 6208 | 39 | −61 | 7 | 6.68 | 0.000001 |
| Inferior Temporal Sulcus | L | 3207 | −51 | −68 | 7 | 6.60 | 0.000001 |
| Fearful Faces > Fearful Bodies | | | | | | | |
| Lateral Occipitotemporal Gyrus | R | 562 | 36 | −49 | −17 | 5.15 | 0.000026 |
| Lateral Occipitotemporal Gyrus | L | 423 | −36 | −43 | −17 | 4.31 | 0.000223 |
| Lingual Gyrus | R | 5721 | 12 | −91 | −2 | 7.02 | 0.000000 |
| Lingual Gyrus | L | 4739 | −18 | −85 | −5 | 5.52 | 0.000010 |
| Inferior Occipital Gyrus | L | 1385 | −39 | −70 | −14 | 5.07 | 0.000031 |
| Inferior Frontal Sulcus | R | 1520 | 42 | 14 | 28 | 4.07 | 0.000414 |
| Fearful Bodies > Fearful Faces | | | | | | | |
| Inferior Temporal Sulcus | R | 5450 | 45 | −70 | 7 | 6.67 | 0.000001 |
| Inferior Temporal Sulcus | L | 2599 | −54 | −64 | 5 | 4.79 | 0.000063 |
| Happy Faces > Happy Bodies | | | | | | | |
| Lingual Gyrus | R | 725 | 12 | −91 | −5 | 5.65 | 0.000007 |
| Happy Bodies > Happy Faces | | | | | | | |
| Inferior Temporal Sulcus | R | 4733 | 48 | −70 | 7 | 9.10 | 0.000000 |
| Inferior Temporal Sulcus | L | 2203 | −48 | −71 | 7 | 4.93 | 0.000045 |
| Lingual Gyrus | R | 1855 | 24 | −61 | −5 | 4.81 | 0.000061 |
| Lingual Gyrus | L | 759 | −27 | −61 | −5 | 5.15 | 0.000025 |
| Cuneus | R | 988 | 9 | −89 | 25 | 4.02 | 0.000474 |
| Superior Occipital Gyrus | R | 634 | 21 | −88 | 22 | 4.08 | 0.000404 |
| Parieto-Occipital Sulcus | L | 1190 | −15 | −73 | 31 | 4.20 | 0.000295 |
| Subparietal Sulcus | L | 234 | −21 | −64 | 25 | 3.30 | 0.002922 |
| Inferior Parietal Lobe | L | 259 | −45 | −61 | 13 | 3.43 | 0.002127 |
| Postcentral Sulcus | L | 361 | −51 | −40 | 19 | 4.15 | 0.000340 |

Hemisphere is indicated by R, right; L, left; or m, medial.

The comparison of different-emotion face pairs vs body pairs (DF > DB; orange colour in Figure 4A) significantly activated (Pcorr < 0.05) frontal regions, including inferior frontal sulcus (IFS), middle frontal gyrus (MFG), medial SFG, insula, and pre- and post-central sulcus; occipito-temporal regions, namely inferior occipital gyrus (IOG), LG and OTG; and several subcortical regions, namely thalamus, caudate nucleus and putamen. The opposite comparison (DB > DF; blue colour in Figure 4A) showed enhanced activation (Pcorr < 0.05) around the bilateral inferior temporal sulcus (ITS), which corresponds to the EBA and the MT area. As we did not functionally localize EBA, we refer to it by its anatomical location, ITS, in the results and tables. A subset of these regions was activated for the emotionally congruent face vs body comparisons. Right insula, left IFS and bilateral IOG, LG and OTG were more strongly activated (Pcorr < 0.05) for same-emotion faces (HF + FF > HB + FB; red outline in Figure 4A), while bilateral ITS was more strongly activated (Pcorr < 0.05) for same-emotion bodies (HB + FB > HF + FF; dark blue outline in Figure 4A).

When looking into stimulus type effects for specific emotions, fearful faces compared with fearful bodies showed enhanced activity (Pcorr < 0.05) in right IFS, bilateral OTG, IOG and LG (FF > FB, yellow colour Figure 4B). Fearful bodies showed stronger activity (Pcorr < 0.05) in bilateral ITS (FB > FF, green colour Figure 4B). For the happy emotion, faces evoked enhanced activity (Pcorr < 0.05) in right LG (HF > HB, orange colour Figure 4B), while happy bodies more strongly activated (Pcorr < 0.05) bilateral ITS and LG, right SOG and cuneus, and left POS, IPL and post-central gyrus (HB > HF, blue colour Figure 4B). For an overview of the significant regions, see Table 2.

Fear vs happy: emotion type

Finally, the type of emotion (fear or happy) was compared between the same-emotion conditions. Stimulus-type independent modulation of emotion (FF + FB vs HF + HB) as well as stimulus-type specific modulation of emotion (FB vs HB and FF vs HF) were investigated. The stimulus-type independent modulation of emotion only showed a significant activation in right ITS for fear (Pcorr < 0.05; FF + FB > HF + HB; red outline Figure 5). Additionally, fearful bodies also showed enhanced activity in right ITS compared with happy bodies (Pcorr < 0.05; FB > HB; orange colour Figure 5), while happy bodies showed enhanced activity in bilateral LG compared with fearful bodies (Pcorr < 0.05; HB > FB; blue colour Figure 5). For an overview of the regions, see Table 3. No significant effects were found for fearful faces vs happy faces.

Table 3.

Peak voxel Talairach coordinates for significant clusters, and their corresponding T- and P-values, in fearful vs happy body pair contrasts

| Region [n = 26, Pcorr < 0.05] | Hemisphere | No. voxels | Peak x | Peak y | Peak z | T-value | P-value |
|---|---|---|---|---|---|---|---|
| Fearful Bodies > Happy Bodies | | | | | | | |
| Inferior Temporal Sulcus | R | 656 | 42 | −55 | 10 | 4.20 | 0.000294 |
| Happy Bodies > Fearful Bodies | | | | | | | |
| Lingual Gyrus | R | 1927 | 18 | −79 | −8 | 4.78 | 0.000065 |
| Lingual Gyrus | L | 3537 | −15 | −82 | −8 | 7.20 | 0.000000 |

Hemisphere is indicated by R, right; L, left; or m, medial.
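
As a reading aid for the T-value and P-value columns, the sketch below converts a t statistic into a two-tailed p-value. It assumes a group-level test with n − 1 = 25 degrees of freedom (taken from n = 26 in the table header) and a two-tailed test; neither assumption is stated explicitly in the table, and the function names are hypothetical.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=20000):
    """Two-tailed p-value via Simpson integration of the density over [0, |t|].

    steps must be even for Simpson's rule.
    """
    t = abs(t)
    h = t / steps
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3  # P(0 <= T <= |t|)
    return 1.0 - 2.0 * central_half


# Peak of the right ITS cluster (Fearful Bodies > Happy Bodies), assumed df = 25.
p_right_its = two_tailed_p(4.20, 25)
```

Under these assumptions, `p_right_its` can be compared with the P-value listed for the same row.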

Discussion

Based on the models of Rudrauf et al. (2008) and Janak and Tye (2015), we hypothesized that same-emotion pairs and different-emotion pairs would drive the affective pathways differently. The conflicting information of the different-emotion pairs could lead to simultaneous mutual inhibition in the BLA, reducing its output. This in turn, as suggested by the models of Barbas (2000), Steimer (2002) and Rudrauf et al. (2008), could lead to a modulation of processing in the occipito-temporal cortex (see Figure 1), prefrontal cortex, and, possibly, action processing and preparation circuits. We conjectured that mutual inhibition from contrary emotions would lead to a reduction of cortical activity. The same-emotion pairs, on the other hand, could each drive the neuronal populations that encode fear or reward and increase their output to the cortical regions, as well as drive the cortical regions themselves more strongly.

The results showed a deactivation of the BLA for different-emotion body pairs compared to same-emotion body pairs. Concurrently, the different-emotion body pairs induced a reduction of BOLD responses in cortical regions, compared to the stronger activation for same-emotion body pairs. For happy body pairs these effects were mostly localized to occipito-temporal cortex, while for fearful body pairs the insula and right parietal regions were more strongly activated than for the different-emotion pairs. These results suggest that the display of contrary emotions disrupts the effects each separate affective stimulus may have. We could conjecture that diminished excitatory output from the BLA might have reduced BOLD responses in those regions that were most relevant for each emotion. However, given the limited temporal resolution and inherent properties of the fMRI data, no definite conclusions can be drawn about the direction of inhibitory and excitatory processes, or the flow of information.

Although we found that brain activity is reduced for different-emotion body pairs, the results do not indicate that the fearful and happy emotion categories drive the amygdala-cortical circuits maximally in opposite directions. The differences between fearful bodies and happy bodies (Figure 5) were smaller and localized to fewer regions than the differences between same-emotion body pairs vs different-emotion body pairs (Figure 3). Instead, the different-emotion body pairs showed lower BOLD responses in the regions indicated in Figure 3 than the same-emotion body pairs of the opposite valence. Also, in line with Fitzgerald et al. (2006), Winston et al. (2003) and other research, we found no differences in amygdala activation between fearful and happy emotions. In contrast to earlier findings on fear-specific modulation of the amygdala (among others, Adolphs et al., 1995), several neuroimaging studies now propose that the amygdala might be involved in the processing of all emotionally salient stimuli (Winston et al., 2003; Fitzgerald et al., 2006; Zhang et al., 2013). Our results support this notion. The underlying mechanisms might be further investigated using measures other than fMRI, such as single-cell recordings. As stated by Janak and Tye (2015), behaviours of opposite valence do not necessarily arise from activity in different pathways, but may be modulated by connections between the same regions. Because inhibitory and excitatory processes are hard to disentangle with fMRI measurements, as both processes consume energy and may give rise to increases of BOLD signal (Viswanathan and Freeman, 2007; Logothetis, 2008), positive and negative valence stimuli may not show such strong differences. This notion is further supported by several analyses of emotion perception in the brain, where fearful and happy stimuli compared with neutral ones seem to largely activate the same brain regions (Fitzgerald et al., 2006; Lindquist et al., 2012).

Nevertheless, the results in Figure 3 highlight that although fearful and happy bodies activate many of the same regions (Figure 5), they do not do so to the same extent. By comparing the same-emotion body pairs with the different-emotion body pairs, subtle differences between the two networks are revealed.

For fearful body pairs compared with different-emotion body pairs (Figure 3), BOLD responses are larger in the IPL and the insula. The right IPL seems to respond to salient environmental events, such as target detection (Linden et al., 1999; Corbetta and Shulman, 2002) and might play a role in action organization (Rizzolatti and Matelli, 2003). Singh-Curry and Husain (2009) suggest that, next to responding to salient information, the right IPL is also involved in maintaining attention. They suggest that these two functions may make the IPL particularly suitable for a role in phasic alerting, where behavioural goals need to be adapted in response to a salient stimulus. These ideas are in line with evidence from monkey research showing that the superior colliculus, hippocampus and cerebellum project to specific subregions of the IPL (Clower et al., 2001). The eyes especially seem to play a determining role in the activation of IPL for fearful faces (Radua et al., 2010). Although in our case the faces of the fearful bodies were masked, other salient features of the body that indicate the fearful emotion might have driven the IPL and caused an initiation of the action system.

The right insula was also activated more for fearful bodies than for different-emotion body pairs. Generally, the insula has been associated with the experience of a wide variety of emotions, such as fear, anger, happiness and pain (Damasio et al., 2000), as well as social emotions, empathy, and interoception (Singer, 2006; Craig, 2009). However, these functions seem to be mostly localized to the anterior insular cortex, while in this study the activity seems confined to the mid-posterior insula. The whole of the insular cortex, including posterior insula, has been suggested as a site where internally generated emotions and emotional context are evaluated and the expression of responses is initiated (Reiman et al., 1997). Together with the mid-cingulate cortex, the insula has been proposed to form a salience and action system (Taylor et al., 2009), as the posterior insula is also functionally connected to the somatomotor cortex (Deen et al., 2011). The finding of more extensive activation in the IPL and insula for fearful bodies would be in line with the idea that fearful bodies would induce and require more action than happy bodies or affective faces.

Happy body pairs compared with different-emotion body pairs (Figure 3), on the other hand, showed increased activity in occipital and temporal cortex, including POS and regions in the LG and OTC, which may correspond to the fusiform face area/fusiform body area (FBA). Stronger activation in LG (Fitzgerald et al., 2006) and FG (Surguladze et al., 2003) was previously found for happy faces vs neutral objects and neutral faces, respectively. It may be that in our study the intensity of the happy emotion was experienced as being higher than that of the fearful emotion, which could lead to more significant activation (Surguladze et al., 2003). This would be in line with the finding that overall the happy bodies activated the LG and OTC more strongly than fearful bodies (Figure 5). Also, the happy bodies extended the arms slightly further across the visual field in the horizontal dimension (Figure 2) than the fearful bodies, where the arms were closer to the body. The FBA has not only been shown to respond strongly to whole bodies, but also has selectivity for limbs (Taylor et al., 2007; Weiner and Grill-Spector, 2011). Therefore, the increased visibility of the arms might have driven FBA more strongly.

Surprisingly, we did not find any emotion effects for the affective faces. Although the affective faces did drive cortical processing (see Figure 4), they did so to the same extent for both emotions. Perhaps, because bodily expressions of emotion can trigger higher activation than affective faces (Kret et al., 2011), the modulation with respect to the different-emotion pairs was also higher for bodies. In general, facial expressions compared with bodily expressions did show stronger activity in a large number of regions, including superior frontal sulcus, insula, OTC and LG. For the bodies, enhanced activity was localized to the ITS, around EBA/MT. Because the stimulus pairs always consisted of two different identities, identity may have been more strongly represented for the facial expressions than for the bodies, leading to a larger activation of prefrontal cortex and OTC (Scalaidhe et al., 1999; Haxby et al., 2000). This identity effect may have been stronger than the neuronal differences between facial emotions.

Conclusions

Using a novel approach to investigate affective processing beyond the individual level, this study showed that the perception of different emotional expressions leads to a deactivation of the amygdala and a reduction of cortical activity. Comparing same-emotion body pairs with different-emotion body pairs revealed that the processing of fearful body expressions relied more strongly on saliency and action regions in IPL and insula, while happy bodies drove OTC more strongly. This result adds significantly to the literature, which hitherto has mostly used single individual stimuli. Although perception of different emotions may drive many of the same brain regions, our experimental design uncovered important differences between the networks underlying the different emotions. The enhancement of brain activity for unambiguous affective signals expressed by multiple people simultaneously supports adaptive behaviour in critical situations, such as mass panic.

Funding

A.d.B. and B.d.G. were supported by the European Union's Seventh Framework Programme for Research and Technological Development (FP7, 2007-2013) under ERC grant agreement number 295673.

Acknowledgements

The authors thank Rebecca Watson and Minye Zhan for help with data collection and Lisanne Huis in ’t Veld and Ruud Hortensius for fruitful discussions on the topic.

Supplementary data

Supplementary data are available at SCAN online.

Conflict of interest. None declared.

References

- Adolphs R., Tranel D., Damasio H., Damasio A.R. (1995). Fear and the human amygdala. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience, 15(9), 5879–91.

- Amunts K., Kedo O., Kindler M., et al. (2005). Cytoarchitectonic mapping of the human amygdala, hippocampal region and entorhinal cortex: intersubject variability and probability maps. Anatomy and Embryology, 210(5–6), 343–52.

- Barbas H. (2000). Connections underlying the synthesis of cognition, memory, and emotion in primate prefrontal cortices. Brain Research Bulletin, 52(5), 319–30.

- Calvo M.G., Esteves F. (2005). Detection of emotional faces: low perceptual threshold and wide attentional span. Visual Cognition, 12(1), 13–27.

- Clower D.M., West R.A., Lynch J.C., Strick P.L. (2001). The inferior parietal lobule is the target of output from the superior colliculus, hippocampus, and cerebellum. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience, 21(16), 6283–91.

- Corbetta M., Shulman G.L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews. Neuroscience, 3(3), 201–15.

- Craig A.D.B. (2009). How do you feel–now? The anterior insula and human awareness. Nature Reviews Neuroscience, 10(1), 59–70.

- Damasio A.R., Grabowski T.J., Bechara A., et al. (2000). Subcortical and cortical brain activity during the feeling of self-generated emotions. Nature Neuroscience, 3(10), 1049–56.

- de Gelder B. (2006). Towards the neurobiology of emotional body language. Nature Reviews. Neuroscience, 7(3), 242–9.

- de Gelder B., Hortensius R., Tamietto M. (2012). Attention and awareness each influence amygdala activity for dynamic bodily expressions-a short review. Frontiers in Integrative Neuroscience, 6, 54.

- de Gelder B., Snyder J., Greve D., Gerard G., Hadjikhani N. (2004). Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proceedings of the National Academy of Sciences of the United States of America, 101(47), 16701–6.

- de Gelder B., Van den Stock J. (2011). The Bodily Expressive Action Stimulus Test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions. Frontiers in Psychology, 2, 181.

- Deen B., Pitskel N.B., Pelphrey K.A. (2011). Three systems of insular functional connectivity identified with cluster analysis. Cerebral Cortex, 21(7), 1498–506.

- Eickhoff S.B., Heim S., Zilles K., Amunts K. (2006). Testing anatomically specified hypotheses in functional imaging using cytoarchitectonic maps. NeuroImage, 32(2), 570–82.

- Eickhoff S.B., Stephan K.E., Mohlberg H., et al. (2005). A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage, 25(4), 1325–35.

- Fitzgerald D.A., Angstadt M., Jelsone L.M., Nathan P.J., Phan K.L. (2006). Beyond threat: amygdala reactivity across multiple expressions of facial affect. NeuroImage, 30(4), 1441–8.

- Forman S.D., Cohen J.D., Fitzgerald M., Eddy W.F., Mintun M.A., Noll D.C. (1995). Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): use of a cluster-size threshold. Magnetic Resonance in Medicine, 33(5), 636–47.

- Fox E., Lester V., Russo R., Bowles R.J., Pichler A., Dutton K. (2000). Facial expressions of emotion: are angry faces detected more efficiently? Cognition and Emotion, 14(1), 61–92.

- Fox E., Russo R., Bowles R., Dutton K. (2001). Do threatening stimuli draw or hold visual attention in subclinical anxiety? Journal of Experimental Psychology. General, 130(4), 681–700.

- Gallese V., Fadiga L., Fogassi L., Rizzolatti G. (1996). Action recognition in the premotor cortex. Brain: A Journal of Neurology, 119(Pt 2), 593–609.

- Garrido M.I., Barnes G.R., Sahani M., Dolan R.J. (2012). Functional evidence for a dual route to amygdala. Current Biology, 22(2), 129–34.

- Genovese C.R., Lazar N.A., Nichols T. (2002). Thresholding of statistical maps in functional neuroimaging using the false discovery rate. NeuroImage, 15(4), 870–8.

- Goebel R., Esposito F., Formisano E. (2006). Analysis of functional image analysis contest (FIAC) data with brainvoyager QX: from single-subject to cortically aligned group general linear model analysis and self-organizing group independent component analysis. Human Brain Mapping, 27(5), 392–401.

- Hadjikhani N., de Gelder B. (2003). Seeing fearful body expressions activates the fusiform cortex and amygdala. Current Biology, 13(24), 2201–5.

- Hajcak G., Molnar C., George M.S., Bolger K., Koola J., Nahas Z. (2007). Emotion facilitates action: a transcranial magnetic stimulation study of motor cortex excitability during picture viewing. Psychophysiology, 44(1), 91–7.

- Haxby J.V., Hoffman E.A., Gobbini M.I. (2000). The distributed human neural system for face perception. Trends in Cognitive Sciences, 4(6), 223–33.

- Huis In ’t Veld E.M., de Gelder B. (2015). From personal fear to mass panic: the neurological basis of crowd perception. Human Brain Mapping, 36(6), 2338–51.

- Huis In ’t Veld E.M., Van Boxtel G.J., de Gelder B. (2014a). The Body Action Coding System I: muscle activations during the perception and expression of emotion. Social Neuroscience, 9(3), 249–64.

- Huis In ’t Veld E.M., van Boxtel G.J., de Gelder B. (2014b). The Body Action Coding System II: muscle activations during the perception and expression of emotion. Frontiers in Behavioral Neuroscience, 8, 330.

- Janak P.H., Tye K.M. (2015). From circuits to behaviour in the amygdala. Nature, 517(7534), 284–92.

- Kret M.E., Pichon S., Grezes J., de Gelder B. (2011). Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. NeuroImage, 54(2), 1755–62.

- Linden D.E., Prvulovic D., Formisano E., et al. (1999). The functional neuroanatomy of target detection: an fMRI study of visual and auditory oddball tasks. Cerebral Cortex, 9(8), 815–23.

- Lindquist K.A., Wager T.D., Kober H., Bliss-Moreau E., Barrett L.F. (2012). The brain basis of emotion: a meta-analytic review. The Behavioral and Brain Sciences, 35(3), 121–43.

- Logothetis N.K. (2008). What we can do and what we cannot do with fMRI. Nature, 453(7197), 869–78.

- Lundqvist D., Flykt A., Öhman A. (1998). The Karolinska Directed Emotional Faces—KDEF. CD ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet, ISBN 91-630-7164-9.

- Mishkin M., Ungerleider L.G., Macko K.A. (1983). Object vision and spatial vision: two cortical pathways. Trends in Neurosciences, 6, 414–7.

- Peelen M.V., Atkinson A.P., Andersson F., Vuilleumier P. (2007). Emotional modulation of body-selective visual areas. Social Cognitive and Affective Neuroscience, 2(4), 274–83.

- Radua J., Phillips M.L., Russell T., et al. (2010). Neural response to specific components of fearful faces in healthy and schizophrenic adults. NeuroImage, 49(1), 939–46.

- Reiman E.M., Lane R.D., Ahern G.L., et al. (1997). Neuroanatomical correlates of externally and internally generated human emotion. The American Journal of Psychiatry, 154(7), 918–25.

- Rizzolatti G., Matelli M. (2003). Two different streams form the dorsal visual system: anatomy and functions. Experimental Brain Research, 153(2), 146–57.

- Rosenkranz J.A., Grace A.A. (2001). Dopamine attenuates prefrontal cortical suppression of sensory inputs to the basolateral amygdala of rats. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience, 21(11), 4090–103.

- Rudrauf D., David O., Lachaux J.P., et al. (2008). Rapid interactions between the ventral visual stream and emotion-related structures rely on a two-pathway architecture. The Journal of Neuroscience, 28(11), 2793–803.

- Sah P., Faber E.S., Lopez De Armentia M., Power J. (2003). The amygdaloid complex: anatomy and physiology. Physiological Reviews, 83(3), 803–34.

- Scalaidhe S.P., Wilson F.A., Goldman-Rakic P.S. (1999). Face-selective neurons during passive viewing and working memory performance of rhesus monkeys: evidence for intrinsic specialization of neuronal coding. Cerebral Cortex, 9(5), 459–75.

- Schutter D.J., Hofman D., Van Honk J. (2008). Fearful faces selectively increase corticospinal motor tract excitability: a transcranial magnetic stimulation study. Psychophysiology, 45(3), 345–8.

- Singer T. (2006). The neuronal basis and ontogeny of empathy and mind reading: review of literature and implications for future research. Neuroscience and Biobehavioral Reviews, 30(6), 855–63.

- Singh-Curry V., Husain M. (2009). The functional role of the inferior parietal lobe in the dorsal and ventral stream dichotomy. Neuropsychologia, 47(6), 1434–48.

- Steimer T. (2002). The biology of fear- and anxiety-related behaviors. Dialogues in Clinical Neuroscience, 4(3), 231–49.

- Surguladze S.A., Brammer M.J., Young A.W., et al. (2003). A preferential increase in the extrastriate response to signals of danger. NeuroImage, 19(4), 1317–28.

- Talairach J., Tournoux P. (1988). Co-Planar Stereotaxic Atlas of the Human Brain: 3-D Proportional System: An Approach to Cerebral Imaging. New York: Thieme.

- Tamietto M., Geminiani G., Genero R., de Gelder B. (2007). Seeing fearful body language overcomes attentional deficits in patients with neglect. Journal of Cognitive Neuroscience, 19(3), 445–54.

- Taylor J.C., Wiggett A.J., Downing P.E. (2007). Functional MRI analysis of body and body part representations in the extrastriate and fusiform body areas. Journal of Neurophysiology, 98(3), 1626–33.

- Taylor K.S., Seminowicz D.A., Davis K.D. (2009). Two systems of resting state connectivity between the insula and cingulate cortex. Human Brain Mapping, 30(9), 2731–45.

- Tsuchiya N., Moradi F., Felsen C., Yamazaki M., Adolphs R. (2009). Intact rapid detection of fearful faces in the absence of the amygdala. Nature Neuroscience, 12(10), 1224–5.

- Viswanathan A., Freeman R.D. (2007). Neurometabolic coupling in cerebral cortex reflects synaptic more than spiking activity. Nature Neuroscience, 10(10), 1308–12.

- Vuilleumier P., Pourtois G. (2007). Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia, 45(1), 174–94.

- Vuilleumier P., Richardson M.P., Armony J.L., Driver J., Dolan R.J. (2004). Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature Neuroscience, 7(11), 1271–8.

- Vytal K., Hamann S. (2010). Neuroimaging support for discrete neural correlates of basic emotions: a voxel-based meta-analysis. Journal of Cognitive Neuroscience, 22(12), 2864–85.

- Weiner K.S., Grill-Spector K. (2011). Not one extrastriate body area: using anatomical landmarks, hMT+, and visual field maps to parcellate limb-selective activations in human lateral occipitotemporal cortex. NeuroImage, 56(4), 2183–99.

- Winston J.S., O’Doherty J., Dolan R.J. (2003). Common and distinct neural responses during direct and incidental processing of multiple facial emotions. NeuroImage, 20(1), 84–97.

- Yang E., Brascamp J., Kang M.S., Blake R. (2014). On the use of continuous flash suppression for the study of visual processing outside of awareness. Frontiers in Psychology, 5, 724.

- Zhang W., Schneider D.M., Belova M.A., Morrison S.E., Paton J.J., Salzman C.D. (2013). Functional circuits and anatomical distribution of response properties in the primate amygdala. The Journal of Neuroscience, 33(2), 722–33.
