Abstract

Neuroscientific evidence points towards atypical auditory processing in individuals with Autism Spectrum Disorders (ASD), yet the consequences of this for receptive language remain unclear. Using magnetoencephalography (MEG) and a passive listening task, we test for cascading effects on speech sound processing. Children with ASD and age-matched control participants (8-12 y.o.) listened to nonce linguistic stimuli that either did or did not conform to the phonological rules that govern consonant sequences in English (e.g. legal “vimp” vs. illegal “vimk”). Beamformer source analysis was used to isolate evoked responses (0.1 – 30 Hz) to these stimuli in left and right auditory cortex. Right auditory responses from participants with ASD, but not control participants, showed an attenuated response to illegal sequences relative to legal sequences that emerged around 330 ms after the onset of the critical phoneme. These results suggest that phonological processing is impacted in ASD, perhaps due to cascading effects from disrupted initial acoustic processing.

Keywords: Language, Children, MEG, Auditory, Phonology

1 Introduction

Electrophysiological and neuroimaging studies have revealed intriguing differences in early auditory processing in Autism Spectrum Disorders (ASD). For instance, magnetoencephalography (MEG) research suggests that children with ASD show a delayed right auditory cortex response to tones, as well as a delayed mismatch response to infrequent tones and speech sounds that are interspersed between more frequent stimuli (“oddball” paradigm) (e.g. [1, 2]; see [3] for a review). Moreover, children with ASD show abnormal auditory gamma-band (30-80 Hz) oscillatory responses during tone perception [4]. While gamma-band power correlates with clinical measures of language impairment [4], research has not yet tested whether auditory differences have a cascading impact on speech processing in ASD.

Current neurobiological models of speech perception make a compelling case for such a link at the level of phonological (speech sound) processing. These models highlight the role of gamma oscillations in sampling the acoustic input at a rate tuned for speech [5]. Well-regulated gamma oscillations optimally sample the speech stream to isolate the distinctive features of speech (e.g. the timing of vocal cord vibrations that distinguish the phonemes /p/ and /b/). This theory has been tested in healthy individuals (e.g. [6, 7]), but research has not tested the prediction that pathological auditory gamma oscillations in ASD will impact downstream phonological processing.

Behavioral language deficits are well documented in ASD. While research has focused largely on social and pragmatic expression (see [8] for a review), there is also evidence for impairments in the lexicon [9], morphosyntax [10], and phonology [11]. Impairments in phonological expression have, in particular, been linked with distinct sub-types of language disorders in ASD [12]. Comprehension deficits have also been noted at the lexical and sentence levels, though patterns of deficit are variable [8, 10]. Disordered speech perception is not a typical symptom of ASD [13, p. 125], although impairments in repeating novel words have been linked with deficits in phonological memory, which may have perceptual consequences (e.g. [14, 15]). However, Whitehouse & Bishop [16], using Event-Related Potentials (ERP) and an oddball paradigm, observe that altered neural responses to speech sounds reflect disrupted top-down modulation and not bottom-up sensory effects. This limited evidence for impaired bottom-up phonological processing is puzzling in light of the above-reviewed evidence for abnormal auditory processing.

We seek to reconcile these findings by testing for abnormal phonological perception at the neural level using MEG. We use beamformer source imaging to test for sensitivity to the difference between possible words and non-words that violate the phonotactic (sound sequence) rules of English. Our speech tokens are either phonotactically legal pseudo-words (e.g. “vimp”) or phonotactically illegal non-words (e.g. “vimk” or “vinp”). The latter sequences violate a phonotactic constraint of English that requires a consonant cluster comprising a nasal followed by a stop to be homorganic, that is, articulated at the same place within the vocal tract. For example, the sounds indicated by the letters “m” and “p” are both articulated by closing the lips, while “k” is articulated by raising the tongue to the velum at the back of the vocal tract. This homorganic constraint is highly salient to speakers because it is never violated within words in English.
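The homorganic constraint can be made concrete with a short sketch. The place-of-articulation table below is a simplified assumption covering only English nasals and stops, and the names `PLACE`, `is_homorganic`, and `STIMULI` are hypothetical labels introduced for illustration; they are not part of the study materials.

```python
# Simplified place-of-articulation table (an assumption for illustration).
PLACE = {
    "m": "labial", "p": "labial", "b": "labial",
    "n": "alveolar", "t": "alveolar", "d": "alveolar",
    "ŋ": "velar", "k": "velar", "g": "velar",
}
NASALS = {"m", "n", "ŋ"}
STOPS = {"p", "b", "t", "d", "k", "g"}


def is_homorganic(nasal: str, stop: str) -> bool:
    """Return True when a nasal + stop cluster shares one place of articulation."""
    if nasal not in NASALS or stop not in STOPS:
        raise ValueError("expected a nasal followed by a stop")
    return PLACE[nasal] == PLACE[stop]


# The four stimuli as (item, final nasal, final stop) in phonemic form.
STIMULI = [
    ("vimp", "m", "p"),
    ("vink", "ŋ", "k"),
    ("vimk", "m", "k"),
    ("vinp", "ŋ", "p"),
]

# Map each item to True (phonotactically legal) or False (illegal).
legality = {item: is_homorganic(nasal, stop) for item, nasal, stop in STIMULI}
```

Under these assumptions, “vimp” and “vink” come out as legal while “vimk” and “vinp” come out as illegal, matching the design described above.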

Prior research indicates that unexpected syllable-final phoneme sequences modulate evoked responses beginning about 700 ms after speech onset [17].¹ Accordingly, we predict that phonological processing differences will be evident in altered evoked responses to legal and illegal phonotactic sequences in children with ASD in comparison to control participants.

2 Methods

2.1 Participants

Sixteen children who received clinical diagnoses of Autism Spectrum Disorder (high-functioning) and were between the ages of 8 and 12 years old participated in the study along with sixteen age- and gender-matched control participants (see Table 1). Participants were recruited from clinics and communities in southeastern Michigan and their families were compensated with $100. Institutional review committees at all participating institutions approved this study.

Table 1. Demographic and behavioral information.

| | Control M (SD) | ASD M (SD) | t(24) |
|---|---|---|---|
| N, m/f | 12/1 | 11/1 | |
| Age, y | 9.7 (1.4) | 9.3 (1.4) | 0.64 |
| SCQ | 1.5 (2.0) | 19.1 (6.8) | -8.97*** |
| BASC | 60 (3.6) | 33.7 (5.2) | 15.32*** |
| Full-scale IQ | 116.0 (7.4) | 98.4 (15.2) | 3.51** |

Note: SCQ, Social Communication Questionnaire; BASC, Adaptive Skills T-score from the Behavior Assessment System for Children; IQ measures from the Wechsler Abbreviated Scale of Intelligence-2.

Significance: * p < 0.05, ** p < 0.01, *** p < 0.001.

Potential participants were initially screened with the Social Communication Questionnaire (SCQ) [19]. The Behavior Assessment System for Children (BASC) [20] and the Wechsler Abbreviated Scale of Intelligence-2 (WASI-2) [21] were administered to rule out adaptive and intellectual deficits consistent with intellectual disability. ASD-likely participants were subsequently diagnosed with Autism Spectrum Disorder based on the Diagnostic and Statistical Manual of Mental Disorders-Fifth Edition diagnostic criteria. Diagnoses were confirmed with the Autism Diagnostic Observation Schedule-2 [22].²

Exclusion criteria for all candidates included WASI-2 full-scale IQ < 70, history of other neurological disorders including active epilepsy, anxiety disorders or head injury with loss of consciousness, and contraindications for MEG scanning (e.g. dental braces). Inclusion criteria for ASD candidates included SCQ scores ≥ 11. Inclusion criteria for control participants included SCQ scores < 11, no known developmental delay or learning disability, and no first-degree relatives with an ASD diagnosis.

One participant with ASD who began the study was subsequently excluded due to low full-scale IQ (< 70). Due to fatigue, two participants with ASD did not complete the phonotactic listening protocol. In addition, data from two control participants and two participants with ASD were discarded due to excessive noise or equipment error. Demographic information and selected test scores for the remaining 25 participants are shown in Table 1.

2.2 Experimental Design

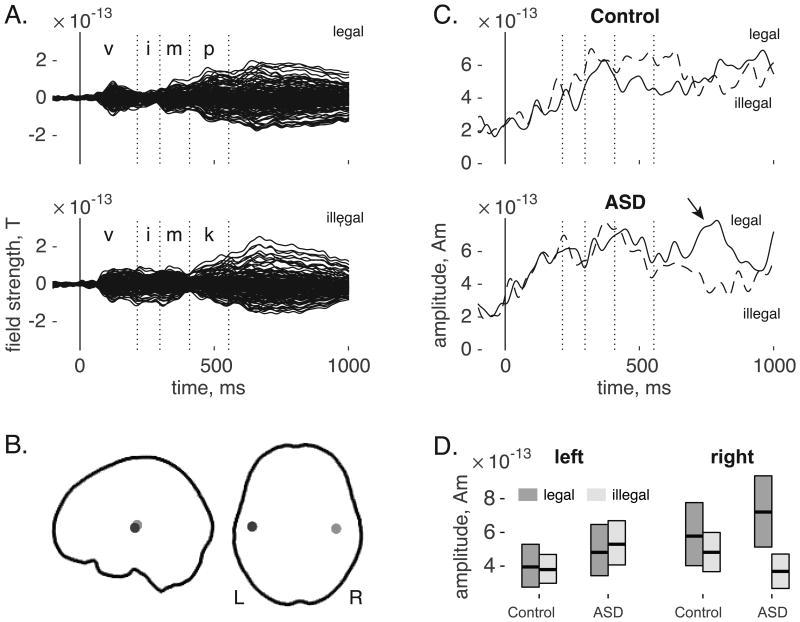

A female English speaker recorded tokens of two items with a phonotactically legal consonant sequence (“vimp” /vɪmp/, “vink” /vɪŋk/) and two with a phonotactically illegal sequence (“vimk” /vɪmk/, “vinp” /vɪŋp/) created by switching only the final two consonants [cf. 23]. Sound files were spliced so that the stimuli shared the same /v/ onset. Co-articulation may influence the pronunciation of the vowel and nasal segments; we did not splice between these segments so as to avoid artificial distortion. This means that information about the phonological sequence may have been available to the listener prior to the onset of the final segment at M = 408 ms (SD = 11).³ The average stimulus duration was 554 ms (SD = 19). Figure 1A shows phoneme boundaries overlaid on the grand-averaged evoked MEG response for phonotactically legal and illegal stimuli.

Figure 1.

(A) Grand-averaged sensor waveforms for legal (top) and illegal (bottom) phonotactic sequences. Dotted vertical lines indicate the average latency of phoneme boundaries. (B) Source locations for left (dark grey) and right (light grey) auditory cortex projected on to the cortical envelope of a template brain. (C) Right auditory source waveforms for control participants (top) and children with ASD (bottom). The arrow indicates an effect for phonotactic legality. Dotted vertical lines indicate average latency of phoneme boundaries. (D) Average activity between 750 and 850 ms after stimulus onset, separated by group, source hemisphere, and phonotactic legality. Boxes indicate bootstrap-based 95% confidence intervals.

Sound files were normalized to 70 dB using Praat software and presented to participants interspersed between 192 complex sinusoidal tones. Each participant heard 24 repetitions of each word, yielding 48 phonotactically legal trials and 48 illegal trials.⁴ Trials were separated by an inter-stimulus interval of 770 ms.

2.3 Procedure & Data Acquisition

MEG was recorded at 504.63 Hz from 148 neuro-magnetometers (4D Neuroimaging, San Diego CA) while participants lay prone in a dimly-lit magnetically shielded room. Data were band-pass filtered online from 0.1 to 100 Hz. Stimuli were delivered via computer speakers placed at an aperture in the shielded room; loudness was set for each individual's comfort. Coils placed on the forehead and ears were used to determine the location of each participant's head within the MEG array. Participants were asked to passively listen to the sounds with their eyes open. The protocol lasted about 4 min and was part of a larger set of experiments that lasted 1 h. Following MEG scanning, participants completed a series of behavioral tests of language and cognition as part of a related project.

2.4 MEG Data Analysis

MEG data were processed with the FieldTrip toolbox in MATLAB. Data were cleaned of environmental noise by subtracting signals recorded at 12 reference sensors. The data were subject to a high-pass filter at 0.1 Hz and a notch filter at 60 Hz. The continuous data were divided into epochs from -300 to 1000 ms around stimulus onset. Eye-movement and cardiac artifacts were removed using Independent Component Analysis (ICA), and epochs containing other artifacts were removed based on visual inspection. Across both targets and fillers, marginally more epochs were rejected for control participants (M = 37, SD = 22) than for participants with ASD (M = 23, SD = 13), t(23) = 1.82, p = 0.08. On average, 43 epochs were retained in each of the target phonotactic conditions.
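As a rough illustration of the epoching arithmetic, the sketch below converts the reported epoch limits into sample offsets at the reported sampling rate. The function names and the onset sample of 10,000 are hypothetical, and rounding to the nearest sample is an assumption; the toolbox used in the study may handle sample boundaries differently.

```python
SAMPLING_RATE_HZ = 504.63  # acquisition rate reported in the text


def latency_to_sample(latency_s, rate_hz=SAMPLING_RATE_HZ):
    """Nearest sample offset from stimulus onset for a latency in seconds."""
    return round(latency_s * rate_hz)


def epoch_bounds(onset_sample, start_s=-0.300, end_s=1.000):
    """First and last sample index (inclusive) of one epoch around an onset."""
    first = onset_sample + latency_to_sample(start_s)
    last = onset_sample + latency_to_sample(end_s)
    return first, last


# Hypothetical stimulus onset at sample 10,000 of the continuous recording.
first, last = epoch_bounds(onset_sample=10_000)
n_samples = last - first + 1
```

With these assumptions, each epoch spans a little over 650 samples, which gives a sense of how many time points enter the later cluster-based statistics.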

Source time-courses in two “virtual sensors” were estimated using a Linearly Constrained Minimum Variance (LCMV) beamformer. Regional sources estimating current amplitude in three dimensions were placed in the left and right auditory cortex based on the anatomy of an age-matched template brain (7 to 11 y.o. [24]). Source locations are shown in Figure 1B. The cortical envelope of the same template was used to define a single-compartment boundary-element model (BEM) and the beamformer filter was estimated with a sensor covariance matrix calculated from all target and filler epochs. Source time-courses were low-pass filtered at 30 Hz, baseline corrected against a 100 ms pre-stimulus interval, averaged by condition, and a root mean square was calculated to yield one event-related evoked average per source for each subject and condition.
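The last two steps of this pipeline, baseline correction and the root mean square, can be sketched as follows. This is a minimal illustration on toy values, assuming that the root mean square is taken across the three orientation components of a regional source at each time point; the text does not spell this out, and the function names are hypothetical.

```python
import math


def baseline_correct(signal, times, start=-0.100, end=0.0):
    """Subtract the mean of the pre-stimulus window from every sample."""
    window = [x for x, t in zip(signal, times) if start <= t < end]
    if not window:
        raise ValueError("no samples fall inside the baseline window")
    mean = sum(window) / len(window)
    return [x - mean for x in signal]


def rms_across_orientations(components):
    """Combine equal-length orientation time-courses into one RMS waveform."""
    n = len(components)
    return [math.sqrt(sum(v * v for v in sample) / n) for sample in zip(*components)]


# Toy condition averages: 5 samples at 50 ms steps, three orientations.
times = [-0.10, -0.05, 0.0, 0.05, 0.10]
xyz = [
    [1.0, 1.0, 1.0, 4.0, 1.0],
    [2.0, 2.0, 2.0, 2.0, 6.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]
corrected = [baseline_correct(component, times) for component in xyz]
evoked = rms_across_orientations(corrected)
```

Because the root mean square is non-negative, the resulting waveform reflects response magnitude and discards the direction of the source current.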

To evaluate phonological processing, left and right auditory responses to phonotactically legal and illegal syllables were analyzed using a non-parametric cluster-based permutation test to correct for multiple comparisons across time [25]. Under this procedure (i) temporally adjacent samples were clustered based on a two-tailed t-test with p < 0.05, (ii) t-values within each cluster were summed to form a cluster test statistic, and (iii) a reference distribution was created by randomly permuting condition labels 10,000 times and recalculating simulated cluster statistics. Observed cluster statistics with values greater than those from 95% of these simulations were considered statistically significant at α = 0.05. Main effects of phonotactic legality, diagnostic group, and their interaction were tested across the interval spanning 297 ms to 1000 ms, which begins at the offset of the matched initial segments. Our principal analysis tested evoked signals based on prior literature; however, we also conducted an exploratory analysis of event-related changes in spectral power that is reported in the Supplementary Materials.
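Steps (i) to (iii) can be sketched for the within-subject legality contrast as follows. This is a simplified illustration, not the FieldTrip implementation used in the study: it works on per-subject difference waveforms (legal minus illegal), permutes condition labels by flipping the sign of each subject's difference, and takes the critical t-value as a caller-supplied parameter. All names and the toy data are hypothetical, and the group and interaction tests are not covered.

```python
import math
import random


def t_values(diffs):
    """One-sample t-value at each time point for per-subject difference waves."""
    n = len(diffs)
    out = []
    for sample in zip(*diffs):
        mean = sum(sample) / n
        var = sum((x - mean) ** 2 for x in sample) / (n - 1)
        # Zero variance is treated as "no evidence" to keep the sketch simple.
        out.append(mean / math.sqrt(var / n) if var > 0 else 0.0)
    return out


def max_cluster_stat(tvals, t_crit):
    """Largest absolute summed t over runs of adjacent supra-threshold samples."""
    best, run, sign = 0.0, 0.0, 0
    for t in tvals:
        s = (t > t_crit) - (t < -t_crit)  # +1, -1, or 0 below threshold
        if s != 0 and s == sign:
            run += t  # extend the current cluster
        elif s != 0:
            run, sign = t, s  # start a new cluster
        else:
            run, sign = 0.0, 0  # sample below threshold ends the cluster
        best = max(best, abs(run))
    return best


def cluster_permutation_p(diffs, t_crit, n_perm=1000, seed=0):
    """Observed max cluster statistic and its permutation p-value."""
    rng = random.Random(seed)
    observed = max_cluster_stat(t_values(diffs), t_crit)
    exceed = 0
    for _ in range(n_perm):
        flipped = []
        for subject in diffs:
            flip = rng.choice((-1, 1))  # swap condition labels for this subject
            flipped.append([flip * x for x in subject])
        if max_cluster_stat(t_values(flipped), t_crit) >= observed:
            exceed += 1
    return observed, exceed / n_perm


# Toy data: 8 subjects, 6 time points, with an effect at samples 2 to 4.
diffs = []
for i in range(8):
    jitter = (i - 3.5) / 10
    diffs.append([jitter, -jitter, 1.0 + jitter, 1.0 - jitter, 1.0 + jitter, jitter])

# 2.365 is the two-tailed 5% critical t-value for 7 degrees of freedom.
observed, p_value = cluster_permutation_p(diffs, t_crit=2.365)
```

Only the largest cluster statistic from each permutation enters the reference distribution, which is what controls the family-wise error rate across time points.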

To evaluate early auditory processing, we also conducted a peak latency analysis of the auditory M100 response to 144 repetitions of a complex tone that were included as filler trials. These epochs were processed according to the same procedures just described. Based on prior work, we predict delayed right hemisphere M100 peaks in ASD participants [2].

The grand-average evoked responses for legal and illegal phonotactic sequences are shown in Figure 1A. Early transient responses, including the M100, are followed by a sustained activation pattern after about 400 ms. Vertical lines indicate the average latency for each phoneme boundary; the final phoneme, beginning at 408 ms, was the locus of the manipulation. Left and right auditory cortex locations targeted for beamforming are shown in Figure 1B.

Right auditory responses are shown in Figure 1C. There was not a significant main effect between groups (p = 0.115), but there was a significant main effect for phonotactics (p = 0.024): legal sequences evoked a stronger response than illegal sequences in a cluster spanning 778 – 837 ms. This main effect was modulated by a marginally significant interaction, p = 0.058, such that participants with ASD showed a differential response to phonotactic legality at 747 – 786 ms, but control participants did not. Paired comparisons showed no significant differences for control participants, p > 0.5 (top panel), while participants with ASD showed a greater response (p = 0.006) to legal sequences from 733 to 816 ms (bottom panel).

In the left auditory source, there was a main effect for group (p = 0.011) such that ASD participants showed a stronger response from 433 – 531 ms (see Supplementary Figure S1). There was no effect for phonotactics and no interaction (p-values ≥ 0.15).

Hemispheric specificity was tested with a 2 (phonotactics) × 2 (group) × 2 (hemisphere) ANOVA conducted over signals averaged from 750 to 850 ms (Figure 1D). This test showed a significant main effect of phonotactic legality (F(1,23) = 4.52, p = 0.044, ηp² = 0.036), a marginal effect for hemisphere (F(1,23) = 3.43, p = 0.077, ηp² = 0.028), and a significant interaction between phonotactic legality and hemisphere (F(1,23) = 6.23, p = 0.020, ηp² = 0.048), confirming that the effect for phonotactic legality was right lateralized. Note that the three-way interaction between hemisphere, group, and phonotactic legality did not reach significance, F(1,23) = 2.75, p = 0.111, ηp² = 0.022.
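The window averaging that feeds this ANOVA, and the bootstrap confidence intervals drawn in Figure 1D, can be illustrated with a short sketch. The percentile bootstrap of the mean used here is an assumption, since the text does not state which bootstrap variant was applied, and the amplitudes and function names are hypothetical.

```python
import random


def window_mean(signal, times, start=0.750, end=0.850):
    """Average a waveform over the samples inside a latency window (seconds)."""
    values = [x for x, t in zip(signal, times) if start <= t <= end]
    if not values:
        raise ValueError("no samples fall inside the window")
    return sum(values) / len(values)


def bootstrap_ci(values, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean of `values`."""
    rng = random.Random(seed)
    n = len(values)
    # Resample subjects with replacement and record each resampled mean.
    means = sorted(sum(rng.choices(values, k=n)) / n for _ in range(n_boot))
    low = means[int((alpha / 2) * n_boot)]
    high = means[int((1 - alpha / 2) * n_boot) - 1]
    return low, high


# Hypothetical per-subject window averages for one group and condition.
amplitudes = [1.2, 0.9, 1.5, 1.1, 0.7, 1.3, 1.0, 1.4]
ci_low, ci_high = bootstrap_ci(amplitudes)
```

In practice, `window_mean` would be applied to each subject's evoked waveform per condition and hemisphere before the resulting values are passed to `bootstrap_ci` or to the ANOVA.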

Analysis of the right auditory M100 response to tones replicated prior reports of a peak latency delay in ASD (M = 142 ms, SD = 32 ms) compared to controls (M = 118 ms, SD = 35 ms), t(23) = 1.70, p = 0.051 one-tailed.⁵

3 Discussion

Evidence that early neural responses to acoustic stimuli are disrupted or delayed in ASD (e.g. [1,2]) suggests that there may be a downstream impact on phonological processing. To test this hypothesis we asked school-aged children with and without ASD to listen to speech sound sequences that violate English phonotactic rules during MEG scanning. MEG responses localized to the right auditory cortex show sensitivity to legal and illegal phonotactic sequences for ASD participants by about 330 ms after the onset of the target phonological segment. Control participants did not show any such effect, although the statistical interaction was only marginally significant (p = 0.058). These results are consistent with the prediction that early auditory processing abnormalities have cascading effects on phonological processing.

Differences between conditions for the ASD participants emerge late relative to the stimulus (about 730 ms after stimulus onset; 330 ms after target phoneme onset; 180 ms after stimulus offset). The difference is driven by an attenuated response to illegal segments compared to legal segments in this late window. In contrast, control participants show no difference in responding to the two types of stimuli in this time window. However, control participants do show a non-significant trend towards increased responses for illegal segments earlier, starting at the onset of the target phoneme at 408 ms after stimulus onset. The general pattern is one in which sensitivity to phonotactic patterns is present in participants with ASD but processing is atypically slow or inefficient. These findings are consistent with proposals related to impaired phonological memory in ASD based on non-word repetition effects (e.g. [15]). While the data indicate that phonological processing is atypical, they do not license conclusions about behavioral consequences of these differences. One further limitation is that our sample size does not permit testing for sub-divisions in terms of phonological ability within our ASD participants (cf. [12]).

Statistically reliable differences emerged only in the right auditory cortex, though note that the three-way interaction between hemisphere, group, and phonotactics did not reach significance. While we hesitate to draw strong conclusions about laterality from these limited data, the results are consistent with previous observations of right-lateralized early auditory delays [2], an effect that was observed in our participants.

4 Conclusion

Using MEG, we find evidence for sensitivity to illegal sequences of speech sounds in participants with ASD but not in age- and gender-matched controls. These right-hemisphere responses are consistent with cascading effects from atypical early auditory stages that impact downstream phonological processing. Further work with a greater number of participants is necessary to trace the generality and behavioral consequences of these effects.

Supplementary Material

Acknowledgments

This work was funded in part by a grant from the University of Michigan M-Cubed initiative (JB, IK, RLO). We thank Margaret Ugolini and Stefanie Younce for assistance with data collection. Dr. Kovelman thanks Departments of Psychology & CHGD at the U of Michigan, Harrington Foundation at UT Austin, and NIH R01HD078351 (PI: Hoeft).

Footnotes

1. Cf. [18] for earlier effects in an oddball paradigm.

2. ADOS-2 domain scores include: Social, M = 12.57 (SD = 5.22); Repetitive, M = 3.00 (SD = 2.65).

3. For example, the nasal segment for “vinp” was articulated more anteriorly than the equivalent segment for “vink”. However, phonotactic illegality was not indicated until the final segment.

4. Two participants heard 75 stimuli in each condition before the protocol was shortened to reduce fatigue.

5. Data from one participant were excluded from this analysis due to lack of a clear M100 component.

Contributor Information

Jonathan R Brennan, Email: jobrenn@umich.edu, University of Michigan, Department of Linguistics, 440 Lorch Hall, 611 Tappan St., Ann Arbor, MI 48109, 734-764-8692.

Neelima Wagley, University of Michigan, Department of Psychology, 2032 East Hall, 530 Church St., Ann Arbor, MI 48109.

Ioulia Kovelman, University of Michigan, Department of Psychology, 2038 East Hall, 530 Church St., Ann Arbor, MI 48109

Susan M. Bowyer, Henry Ford Hospital, Department of Neurology, 2799 West Grand Blvd., Detroit, MI 48202

Annette E. Richard, University of Michigan, Department of Neuropsychology, 2215 Fuller Rd., Ann Arbor, MI 48104

Renee Lajiness-O'Neill, Email: rlajines@emich.edu, Eastern Michigan University, Department of Psychology, 301C Science Complex, Ypsilanti, MI 48197, 734.487.6713.

References

1. Roberts TPL, Cannon KM, Tavabi K, Blaskey L, Khan SY, Monroe JF, Qasmieh S, Levy SE, Edgar JC. Auditory magnetic mismatch field latency: A biomarker for language impairment in Autism. Biol Psychiat. 2011;70(3):263–269. doi: 10.1016/j.biopsych.2011.01.015.

2. Roberts TPL, Khan SY, Rey M, Monroe JF, Cannon K, Blaskey L, Woldoff S, Qasmieh S, Gandal M, Schmidt GL, Zarnow DM, Levy SE, Edgar JC. MEG detection of delayed auditory evoked responses in autism spectrum disorders: towards an imaging biomarker for Autism. Autism Res. 2010;3(1):8–18. doi: 10.1002/aur.111.

3. Kujala T, Lepistö T, Näätänen R. The neural basis of aberrant speech and audition in Autism Spectrum Disorders. Neurosci Biobehav Rev. 2013;37(4):697–704. doi: 10.1016/j.neubiorev.2013.01.006.

4. Edgar JC, Khan SY, Blaskey L, Chow VY, Rey M, Gaetz W, Cannon KM, Monroe JF, Cornew L, Qasmieh S, Liu S, Welsh JP, Levy SE, Roberts TPL. Neuromagnetic oscillations predict evoked-response latency delays and core language deficits in Autism Spectrum Disorders. J Autism Dev Disord. 2013. doi: 10.1007/s10803-013-1904-x.

5. Giraud AL, Poeppel D. Cortical oscillations and speech processing: emerging computational principles and operations. Nat Neurosci. 2012;15(4):511–517. doi: 10.1038/nn.3063.

6. Luo H, Poeppel D. Cortical oscillations in auditory perception and speech: Evidence for two temporal windows in human auditory cortex. Front Psychol. 2012;3:170. doi: 10.3389/fpsyg.2012.00170.

7. Saoud H, Josse G, Bertasi E, Truy E, Chait M, Giraud AL. Brain-speech alignment enhances auditory cortical responses and speech perception. J Neurosci. 2012;32(1):275–281. doi: 10.1523/JNEUROSCI.3970-11.2012.

8. Groen WB, Zwiers MP, van der Gaag RJ, Buitelaar JK. The phenotype and neural correlates of language in autism: An integrative review. Neurosci Biobehav Rev. 2008;32(8):1416–25. doi: 10.1016/j.neubiorev.2008.05.008.

9. Kjelgaard MM, Tager-Flusberg H. An investigation of language impairment in Autism: Implications for genetic subgroups. Lang Cognitive Proc. 2001;16(2-3):287–308. doi: 10.1080/01690960042000058.

10. Eigsti IM, Bennetto L, Dadlani MB. Beyond pragmatics: Morphosyntactic development in Autism. J Autism Dev Disord. 2007;37(6):1007–23. doi: 10.1007/s10803-006-0239-2.

11. Bishop DVM, Maybery M, Wong D, Maley A, Hill W, Hallmayer J. Are phonological processing deficits part of the broad Autism phenotype? Am J Med Genet B Neuropsychiatr Genet. 2004;128B(1):54–60. doi: 10.1002/ajmg.b.30039.

12. Rapin I, Dunn MA, Allen DA, Stevens MC, Fein D. Subtypes of language disorders in school-age children with Autism. Dev Neuropsychol. 2009;34(1):66–84. doi: 10.1080/87565640802564648.

13. Kelley E. Language in ASD. In: Fein DA, editor. The Neuropsychology of Autism. Oxford: Oxford University Press; 2011.

14. Gathercole SE, Baddeley AD. Phonological memory deficits in language disordered children: Is there a causal connection? J Mem Lang. 1990;29(3):336–360.

15. Hill AP, van Santen J, Gorman K, Langhorst BH, Fombonne E. Memory in language-impaired children with and without autism. J Neurodev Disord. 2015;7(1):19. doi: 10.1186/s11689-015-9111-z.

16. Whitehouse AJO, Bishop DVM. Do children with Autism ‘switch off’ to speech sounds? An investigation using event-related potentials. Dev Sci. 2008;11(4):516–24. doi: 10.1111/j.1467-7687.2008.00697.x.

17. Domahs U, Kehrein W, Knaus J, Wiesel R, Schlesewsky M. Event-related potentials reflecting the processing of phonological constraint violations. Lang Speech. 2009;52(Pt 4):415–35. doi: 10.1177/0023830909336581.

18. Dehaene-Lambertz G, Dupoux E, Gout A. Electrophysiological correlates of phonological processing: a cross-linguistic study. J Cogn Neurosci. 2000;12(4):635–647. doi: 10.1162/089892900562390.

19. Rutter M, Bailey A, Lord C. Social Communication Questionnaire (SCQ). San Antonio, TX: Pearson, Inc.; 2008.

20. Reynolds CR, Kamphaus RW. Behavioral Assessment System for Children. 2nd ed. Pearson; 2004.

21. Wechsler D. Wechsler Abbreviated Scale of Intelligence-II. Pearson; 2011.

22. Lord C, Rutter M, DiLavore PC, Risi S, Gotham K. Autism Diagnostic Observation Schedule-2. Los Angeles, CA: Western Psychological Services; 2012.

23. Shtyrov Y, Smith ML, Horner AJ, Henson R, Nathan PJ, Bullmore ET, Pulvermüller F. Attention to language: Novel MEG paradigm for registering involuntary language processing in the brain. Neuropsychol. 2012;50(11):2605–16. doi: 10.1016/j.neuropsychologia.2012.07.012.

24. Fonov V, Evans AC, Botteron K, Almli CR, McKinstry RC, Collins DL, Brain Development Cooperative Group. Unbiased average age-appropriate atlases for pediatric studies. NeuroImage. 2011;54(1):313–27. doi: 10.1016/j.neuroimage.2010.07.033.

25. Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Meth. 2007;164(1):177–190. doi: 10.1016/j.jneumeth.2007.03.024.
