Abstract

It is known that the functional properties of an object can interact with perceptual, cognitive, and motor processes. We previously found that a between-subjects manipulation of judgment instructions resulted in different manipulability-related memory biases in an incidental memory test. To better understand this effect, we recorded electroencephalography (EEG) while participants made judgments about images of objects that were either high or low in functional manipulability (e.g., hammer vs. ladder). Using a between-subjects design, participants judged whether they had seen the object recently (Personal Experience) or could manipulate the object using their hand (Functionality). We focused on the P300 and slow-wave event-related potentials (ERPs) as reflections of attentional allocation. In both groups, we observed higher P300 and slow-wave amplitudes for high-manipulability objects at electrodes Pz and C3. As the P300 is thought to reflect bottom-up attentional processes, this may suggest that the processing of high-manipulability objects recruited more attentional resources. Additionally, the P300 effect was greater in the Personal Experience group. A more complex pattern was observed at electrode C3 during the slow wave: processing the high-manipulability objects under the Functionality instruction evoked a more positive slow wave than in the other three conditions, likely related to motor simulation processes. These data provide neural evidence that effects of manipulability on stimulus processing are further mediated by automatic vs. deliberate motor-related processing.

Keywords: manipulability, motor processing, embodied cognition, tool use, semantic knowledge

Introduction

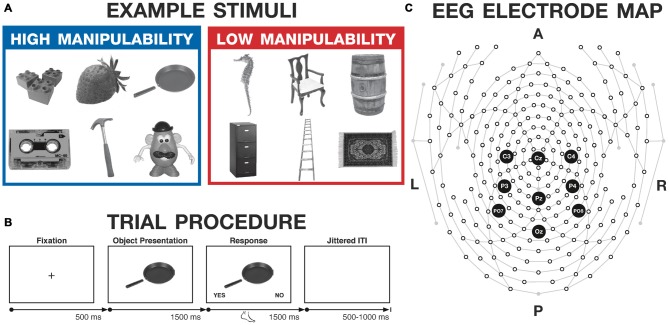

Interacting with objects using our hands is a fundamental facet of daily life. We eat fruits and vegetables using our hands, and cook and eat other foods with the aid of pans and utensils. Children play with toys; musicians become skilled with instruments; most adults have some experience with the tools used for household maintenance and do-it-yourself projects. All of these objects can be used for an intended functional purpose; that is, they are tools. Other objects, such as a chair, a carpet, or a ladder, can only be volumetrically manipulated (i.e., moved or rotated) but not used functionally (see Figure 1A). Here we refer to these two types of objects as high- and low-manipulability, respectively (see Madan and Singhal, 2012a,b). In the current study, we used event-related potentials (ERPs) to further investigate how these two types of objects are differentially processed within the brain, and how attending to the motor features of these objects either automatically or deliberately may modulate the underlying neural processes.

Figure 1.

Experimental methods. (A) Example stimuli, selected from the Salmon et al. (2010) database. (B) Trial procedure for the judgment task used with both groups. (C) High-density electroencephalography (EEG) electrode map, with the electrodes of interest highlighted (Cz, Pz, Oz, C3, C4, P3, P4, PO7, PO8). A, P, L, and R denote anterior, posterior, left, and right, respectively.

A number of studies have observed differences in brain activity associated with the processing of high- vs. low-manipulability objects, often using instructions that do not explicitly require the motor-related features of the objects to be evoked. These studies have used both verbal and pictorial stimuli, with tasks including object naming (Saccuman et al., 2006), lexical decision (Rueschemeyer et al., 2010), and go/no-go (Proverbio et al., 2011). Importantly, these studies reported greater activation in motor regions when participants were processing the high-manipulability stimuli¹. This finding supports the notion that manipulability is a semantic property of words and images that is automatically evoked as part of processing the meaning of the stimuli (Chao and Martin, 2000; Borghi et al., 2007; Campanella and Shallice, 2011; Madan and Singhal, 2012a,b). While it is possible that this incidental activation of motor cortex is a spectator process rather than directly related to the processing of the high-manipulability stimuli, researchers have found that processing high-manipulability stimuli can interfere with overt motor movements, as measured by grip aperture and response time (Gentilucci and Gangitano, 1998; Glover et al., 2004; Witt et al., 2010; Marino et al., 2014; but see Matheson et al., 2014a). Convergently, there is evidence that activation of motor cortices, either artificially (e.g., via TMS) or as a result of co-occurring overt motor movements, can interfere with the processing of high-manipulability stimuli (Pulvermüller et al., 2005; Buccino et al., 2009; Shebani and Pulvermüller, 2013; Papeo et al., 2015; but see Matheson et al., 2014b).

While most studies investigating effects of manipulability used instructions that only elicited automatic motor processing, a few studies instead asked participants to directly evaluate the functional properties of the objects (Kellenbach et al., 2003; Boronat et al., 2005; Righi et al., 2014). As with the studies that elicited automatic motor processing, these studies found greater activity in motor regions for the high-manipulability stimuli. However, among the functional magnetic resonance imaging (fMRI) studies that reported a contrast vs. baseline, an interesting difference became apparent: Boronat et al. (2005) and Kellenbach et al. (2003) found that the low-manipulability stimuli also significantly differed from baseline in motor regions, while Rueschemeyer et al. (2010) did not observe a significant difference for the low-manipulability stimuli relative to baseline. While these studies differ on a number of dimensions, such as the use of word vs. image stimuli², they also differ in whether their instructions would elicit automatic or deliberate motor processing. However, none of these studies directly compared automatic and deliberate motor processing. In a behavioral study, Madan and Singhal (2012b) manipulated motor-processing instructions across three participant groups and followed the judgment task with a free recall task. Participants who were given instructions that did not require deliberate processing of the motor features of the stimuli recalled more high- than low-manipulability words. In one such group, participants were asked to judge whether the word had an odd or even number of letters (Word Length group); in another group, participants were asked to judge whether the word represented an object that they had seen within the past 3 days (Personal Experience group).
Since these participants did not deliberately attend to the motoric properties of the objects, any differences between the two word types are inherently driven by automatic motor processing, similar to most prior studies of manipulability. The opposite was true in the deliberate motor-processing instruction group, where recall rates were higher for the low-manipulability words. Specifically, participants in this group were asked to judge whether the word represented an object that they could manipulate with their hands, such as a screwdriver or computer keyboard (Functionality group). This interaction, in conjunction with the fMRI studies outlined above, suggests that while low-manipulability stimuli generally do not evoke much motor-related processing, effortful and deliberate processing of motor-related features can modulate the effect of manipulability. Other recent studies have also observed interactions between manipulability and task demands, e.g., object categorization vs. object naming (Salmon et al., 2014a,b). Here, as in Madan and Singhal (2012b), we use the term “manipulability” when referring to the stimulus property, and “functionality” when referring to the instruction manipulation.

Here we investigated the relationship between automatic vs. deliberate motor-processing instructions and object manipulability using electroencephalography (EEG), which additionally allowed us to examine the neural correlates of this relationship. Specifically, we used the high temporal resolution of EEG to disentangle motor-related processing differences that were automatic from those that were more effortful. We focused our analyses on two attention-related ERP components: the P300 and the slow wave.

The P300 is a positive-going waveform typically peaking 270–650 ms after stimulus onset, with the precise peak latency varying with the experimental procedure. Research has shown that the P300 consists of two sub-components: the novelty P3a at frontal electrode sites and the P3b at posterior sites (Squires et al., 1975; Soltani and Knight, 2000; Polich, 2012). The P3b (P300) is typically observed when participants attend to a stimulus sequence containing both frequent and infrequent (oddball) trials. The peak latency of the P300 increases when categorization of a target stimulus becomes more difficult, suggesting that it also reflects lower-level perceptual processing (Kutas et al., 1977; Coles et al., 1995). Armstrong and Singhal (2011) conducted a dual-task experiment that paired a primary Fitts’ aiming task, with varying memory requirements, with a secondary dichotic listening task. The P300 elicited by the auditory task was reduced in amplitude in both Fitts’ conditions, but its latency was affected only in the memory-guided condition. These results were interpreted to suggest that P300 amplitude reflects attention-for-action processes, whereas its latency reflects more perception-based processes required to briefly maintain an image of a target for delayed action planning. It is well established that the amplitude of the P300 reflects both bottom-up and top-down attentional processes, specifically perceptual-central resource allocation related to workload, task difficulty, or involuntary attention (Donchin et al., 1973; Kok, 2001; Prinzel et al., 2003; Polich, 2012).

Slow-wave amplitude, on the other hand, reflects more sustained and effortful attentional allocation, perhaps involving conscious awareness and motivational states (Williams et al., 2007). The slow wave generally begins about 400 ms after stimulus onset, is in many ways similar to the P300, and also varies in amplitude with task demands (McCarthy and Donchin, 1976; Ruchkin et al., 1980). However, in contrast to the P300, the slow wave is considered to indicate deliberate attention to higher-order object features, as well as processes related to elaborative memory encoding and effects of emotion on attention (Karis et al., 1984; Fabiani et al., 1990; Codispoti et al., 2006; Schupp et al., 2006). Like the P300, the slow wave is thought to have multiple neural generators and is typically maximal over centro-parietal electrode sites (Codispoti et al., 2006).

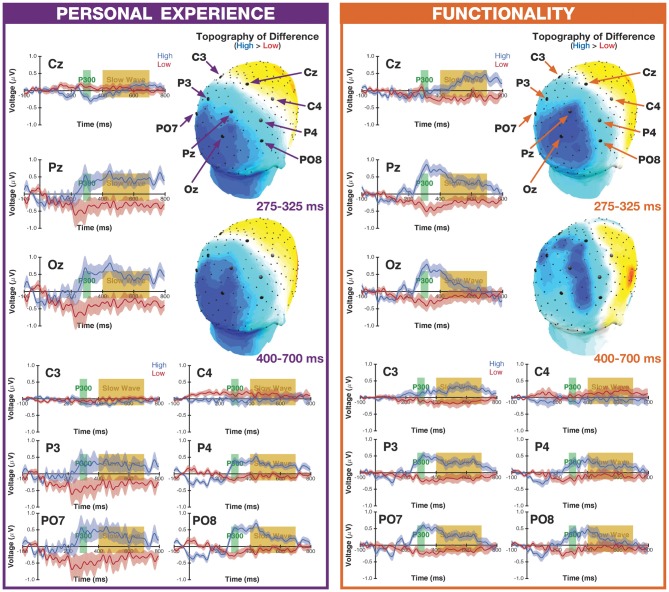

These two ERP components were primarily evaluated at four electrodes. Cz and Pz were examined because they have previously been shown to exhibit robust P300 and slow-wave components (Ruchkin et al., 1980, 1988; Karis et al., 1984). Additionally, effects at C3 and C4 were investigated in order to evaluate whether the ERPs of interest were sensitive to activity in the primary motor cortex of the left and right hemispheres, respectively (as EEG is not a localization technique, the source is only inferred). The use of C3 and C4 is supported by the extensive literature on the lateralized readiness potential (LRP), an ERP observed over the motor cortex (electrodes C3 and C4) prior to movement of the contralateral hand (Kutas and Donchin, 1974; Coles, 1989; Smulders and Miller, 2012). Of particular relevance, researchers have observed robust ERPs at C3 related to both actual and imagined motor movements (Green et al., 1997; Ramoser et al., 2000). Given that we were interested in the processing of motor-related semantic features, rather than overt motor movements, and that fMRI studies have observed differential activity in motor cortices as a function of high- vs. low-manipulability (Kellenbach et al., 2003; Saccuman et al., 2006; Rueschemeyer et al., 2010), we predicted that relevant motor processing might be observed at C3 rather than C4: all of our participants were right-handed, and C3 overlies the left motor cortex, contralateral to the dominant hand. We additionally examined the P300 and slow-wave ERPs at Oz, P3, P4, PO7, and PO8 to further characterize the effects of manipulability and motor-processing instruction (see Figures 1C, 2 for the locations of all electrodes of interest).

Figure 2.

Event-related potentials (ERP) waveforms and topographic maps. ERP waveforms for all of the electrodes of interest, for the high- and low-manipulability objects, for both the Personal Experience and Functionality groups. The shaded band for the ERP waveforms corresponds to the SEM, corrected for inter-individual differences and after object familiarity variability had been accounted for. Topographic maps are based on the difference between high- and low-manipulability objects, for both the P300 and slow-wave ERPs. Black markers along the scalp surface correspond to electrode locations, with the electrodes of interest highlighted as larger markers. See Appendix C in Supplementary Material for mean voltages.

In the current study, we investigated the effects of automatic and deliberate motor processing on the P300 and slow-wave ERPs. Using the P300 as an index of attention, we tested whether it is sensitive to object manipulability and to automatic vs. deliberate motor-processing instructions. We predicted that effects of manipulability would be observed primarily as differences in P300 amplitude, most prominently at Pz, with high-manipulability objects eliciting a larger P300 because they are more readily processed. We did not predict a main effect of instruction (i.e., automatic vs. deliberate motor processing), but instead predicted an interaction. Specifically, we predicted that instruction effects would be most prominent in the slow wave and would produce differential effects of manipulability only in the Functionality group: in that group, high-manipulability objects would evoke motor simulations reflected in the ERP at C3, whereas no such effect would appear in the other three conditions. For clarity, we use “manipulability” to describe the stimulus manipulation and “functionality” to refer to the instructional manipulation.

Materials and Methods

Participants

A total of 80 introductory psychology students (age (M ± SD) = 19.43 ± 2.62 years; 58 females) at the University of Alberta participated for course credit. All participants had normal or corrected-to-normal vision. Participants gave written informed consent prior to the beginning of the study, which was approved by the University of Alberta Research Ethics Board.

Materials

Stimuli were grayscale images selected from the Salmon et al. (2010) database of 320 images. Stimuli were divided into two pools: high- and low-manipulability (see Figure 1A for example stimuli). In the norming study, Salmon et al. had participants (N = 57) “rate the manipulability of the object according to how easy it is to grasp and use the object with one hand” on a 5-point Likert scale (referred to as Manip1 within the dataset). As such, this is a rating of an object’s graspability and functional properties (referred to here as “manipulability”), and is related to the object’s structural properties. Images were selected as the 120 highest- and 120 lowest-rated images based on these manipulability ratings, after two independent raters had excluded images that might evoke consistent emotional responses (e.g., spider, snake, cake), depicted locations specific to the city where the database was developed (Halifax), or depicted natural scenes rather than individual objects (e.g., staircase, mountain, bank). Thus, the final stimulus set consisted of 240 images. Based on the normative ratings reported in Salmon et al. (2010), manipulability for the high-manipulability objects ranged from 4.56 to 4.98 (M = 4.80), and the low-manipulability objects ranged from 1.00 to 3.19 (M = 1.70). The stimuli strongly differed in manipulability (t(238) = 33.03, p < 0.001, Cohen’s d = 1.91). The specific stimuli used are listed in Supplementary Material, Appendix A.

Procedure

Participants were randomly assigned to one of two experimental groups: Personal Experience (N = 40) and Functionality (N = 40). In both groups, participants were presented with a randomly selected subset of 120 images (60 each of high- and low-manipulability). In the Personal Experience group, participants were asked to judge whether the pictured object was one they had seen within the past 3 days (yes/no). In the Functionality group, participants were presented with instructions defining the concept of functionality and were then asked to judge whether the object was easy to functionally interact with using their hands (yes/no). The exact instructions used are provided in Supplementary Material, Appendix B. A between-subjects design was necessary to prevent potential carry-over effects. The instructions were the same as the Personal Experience and Functionality instructions previously used in Madan and Singhal (2012b).

The trial procedure is illustrated in Figure 1B. Each trial began with a fixation cross presented for 500 ms. Object images were then presented in the center of the screen for 1500 ms, during which responses were not permitted. Images were resized to 300 × 300 pixels, subtending a visual angle of approximately 10°. Subsequently, the words “YES” and “NO” appeared in the bottom corners of the screen (with side counterbalanced across participants) for 1500 ms, prompting the participant to make the judgment, while the image remained in the center. Participants made their response during this 1500 ms period by pressing buttons on a response pad with their feet. Delaying the response and using foot rather than hand responses were intended to attenuate possible interactions between hand movements and the processing of manipulable objects, and thus to minimize effects of response-related motor activity on the ERPs of interest. After participants responded, the “YES” and “NO” text disappeared, indicating that the response had been registered. Thus, the image remained on the screen for a total of 3000 ms after its onset, regardless of the participant’s response time, though responses were not permitted during the first 1500 ms. A jittered inter-trial interval, ranging from 500 to 1000 ms, followed the image presentation. A block of six practice trials was presented at the beginning of the judgment task. The judgment task was followed by additional motor-processing tasks; performance on those tasks is not discussed here.
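The trial timing described above can be summarized as a schedule of events per trial. The sketch below is illustrative only: it is not the presentation script used in the study, the names `TrialTiming` and `build_schedule` are hypothetical, and it assumes the 500–1000 ms inter-trial interval was drawn uniformly in whole milliseconds, which the text does not specify.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TrialTiming:
    """Durations (ms) of one judgment-task trial, as described in the Procedure."""
    fixation_ms: int = 500           # fixation cross
    image_only_ms: int = 1500        # image alone; responses not permitted
    response_window_ms: int = 1500   # "YES"/"NO" prompts shown; image stays on screen
    iti_min_ms: int = 500            # jittered inter-trial interval, lower bound
    iti_max_ms: int = 1000           # jittered inter-trial interval, upper bound


def build_schedule(n_trials, timing=TrialTiming(), seed=0):
    """Return (event, onset_ms, duration_ms) tuples for a run of trials.

    Assumption: the ITI is drawn uniformly (whole ms) from [iti_min_ms, iti_max_ms].
    The prompt duration is the maximum window; in the task it ended at the response.
    """
    rng = random.Random(seed)
    events, t = [], 0
    for _ in range(n_trials):
        events.append(("fixation", t, timing.fixation_ms))
        t += timing.fixation_ms
        # The image stays on screen through the response window: 3000 ms in total.
        image_total = timing.image_only_ms + timing.response_window_ms
        events.append(("image", t, image_total))
        events.append(("response_prompt", t + timing.image_only_ms, timing.response_window_ms))
        t += image_total
        iti = rng.randint(timing.iti_min_ms, timing.iti_max_ms)  # inclusive bounds
        events.append(("iti", t, iti))
        t += iti
    return events


for event in build_schedule(n_trials=2):
    print(event)
```

Keeping the image onset and the response-prompt onset as separate events mirrors the delayed-response design: ERP epochs are time-locked to the image, while responses can only occur after the prompt.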

Electroencephalography (EEG) Acquisition and Analyses

The experimental session was conducted in an electrically shielded, sound-attenuated chamber. EEG activity was recorded using a high-density 256-channel Geodesic Sensor Net (Electrical Geodesics Inc., Eugene, OR, USA), amplified with a gain of 1000, and sampled at 250 Hz. Impedances were kept below 50 kΩ, and EEG was initially referenced to Cz.

Data were analyzed using in-house MATLAB (The MathWorks Inc., Natick, MA, USA) scripts in conjunction with the EEGLAB toolbox (Delorme and Makeig, 2004³). The EEG signal was re-referenced to the average reference and digitally band-pass filtered at 0.5–30 Hz (Kappenman and Luck, 2010; Chen et al., 2014). Artifacts were corrected via Independent Component Analysis (ICA), implemented in EEGLAB (Jung et al., 2000). Components were selected based on visual inspection of the spatial topographies, time courses, and power spectral characteristics of all components. Components accounting for stereotyped artifacts, including eye blinks, eye movements, and muscle movements, were removed from the data. Bad channels were identified using automatic channel rejection prior to artifact rejection, and the missing channels were interpolated after artifact component removal using the spherical interpolation method implemented in EEGLAB. Trials were epoched at image onset and baseline-corrected using the 100 ms pre-stimulus interval.
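Two of the preprocessing steps above, average re-referencing and epoching with a 100 ms pre-stimulus baseline, can be sketched in NumPy as follows. This is not the authors' MATLAB/EEGLAB code: filtering, ICA, and channel interpolation are omitted, the function names are hypothetical, and the sketch assumes a continuous (channels × samples) array sampled at 250 Hz in which the recording reference (Cz) is present as a channel, with image onsets given as sample indices. The 1000 ms epoch end is also an assumption, chosen only to cover the analysis windows.

```python
import numpy as np


def average_reference(data):
    """Re-reference continuous EEG (channels x samples) to the common average."""
    return data - data.mean(axis=0, keepdims=True)


def epoch_and_baseline(data, onsets, sfreq=250.0, tmin=-0.1, tmax=1.0):
    """Cut epochs around image onsets and subtract the pre-stimulus mean.

    Assumes every onset leaves room for the full epoch inside `data`.
    Returns (epochs, times_ms); epochs has shape (n_epochs, n_channels, n_samples).
    """
    start = int(round(tmin * sfreq))  # -25 samples = -100 ms at 250 Hz
    stop = int(round(tmax * sfreq))   # 250 samples = 1000 ms at 250 Hz
    epochs = np.stack([data[:, onset + start:onset + stop] for onset in onsets])
    times_ms = np.arange(start, stop) * (1000.0 / sfreq)  # 4 ms steps at 250 Hz
    # Baseline: mean over all pre-stimulus samples, per epoch and channel.
    baseline = epochs[:, :, times_ms < 0].mean(axis=2, keepdims=True)
    return epochs - baseline, times_ms


rng = np.random.default_rng(0)
raw = rng.normal(size=(4, 1000))  # placeholder data, not study recordings
epochs, times_ms = epoch_and_baseline(average_reference(raw), onsets=[200, 500])
print(epochs.shape, times_ms[0], times_ms[-1])
```

Subtracting the mean across channels at every sample is what makes the reference "average"; the baseline step then removes any remaining per-epoch offset so that post-stimulus voltages are expressed relative to the 100 ms before image onset.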

We had two ERPs of interest, the P300 and the slow wave. P300 peak amplitude was quantified as the amplitude of the local maximum (averaged with one time point before and after it) within a time window of 275–325 ms. Slow-wave mean amplitude was quantified as the mean amplitude over the time window of 400–700 ms. P300 and slow-wave ERPs are often reported at midline electrodes; as such, we selected electrodes Cz and Pz as our primary recording sites. We additionally analyzed the ERPs at electrodes C3 and C4 to investigate potential hand-related motor activity. Figure 1C highlights the positions of the electrodes of interest on the high-density electrode map, with the mapping of each electrode position to the international 10–10 system determined based on the conversion detailed in Luu and Ferree (2005). Statistical analyses were carried out on voltage differences at the corresponding electrodes and time windows in MATLAB and SPSS (IBM Inc., Chicago, IL, USA). Topographic maps were constructed using all 257 electrodes with mean voltages over two time windows, 275–325 ms and 400–700 ms, to visualize the spatial topography of the P300 and slow-wave ERPs, respectively.
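The two amplitude measures can be sketched for a single averaged waveform as shown below. This is an illustration under stated assumptions, not the authors' scripts: the "local maximum" is taken here as the largest sample inside the 275–325 ms window, window edges are treated as inclusive, and the function names are hypothetical.

```python
import numpy as np


def p300_peak(erp, times_ms, window=(275, 325)):
    """Largest sample in the window, averaged with its two neighbouring samples."""
    idx = np.flatnonzero((times_ms >= window[0]) & (times_ms <= window[1]))
    peak = idx[np.argmax(erp[idx])]
    # One time point before and after the peak (may fall just outside the window).
    return float(erp[peak - 1:peak + 2].mean())


def slow_wave_mean(erp, times_ms, window=(400, 700)):
    """Mean amplitude over the slow-wave window."""
    mask = (times_ms >= window[0]) & (times_ms <= window[1])
    return float(erp[mask].mean())


# Placeholder waveform sampled every 4 ms (250 Hz) from -100 ms.
times_ms = np.arange(-25, 250) * 4.0
erp = np.exp(-((times_ms - 300.0) / 30.0) ** 2) * 3.0
print(p300_peak(erp, times_ms), slow_wave_mean(erp, times_ms))
```

At 250 Hz the 275–325 ms window contains only about a dozen samples, so averaging the peak with its neighbours reduces the influence of a single noisy sample on the peak estimate.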

The Salmon et al. (2010) database also includes normative ratings of object familiarity and age of acquisition. In a post hoc analysis, we noticed a small but significant difference in the familiarity ratings (t(238) = 3.84, p < 0.001, d = 0.49; High: M = 3.00; Low: M = 2.40). The stimuli did not differ in age of acquisition (t(238) = 0.65, p = 0.52, d = 0.09; High: M = 2.76; Low: M = 2.83). Based on this, to improve the specificity of our findings to manipulability per se, variability that could be explained by the object familiarity ratings was first regressed out of the trial-wise mean voltages for both the P300 and the slow wave, and the residuals were used as the input for the 2 × 2 analyses of variance (ANOVAs). For the stimuli used here, object manipulability and familiarity were only weakly correlated (r(318) = 0.12, p = 0.033). Preliminary analyses that did not account for variability in object familiarity yielded a largely consistent pattern of results, though statistical power was markedly greater after familiarity was accounted for.
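One way to implement the familiarity adjustment is an ordinary least-squares fit of trial-wise voltage on the familiarity rating of each trial's object, keeping the residuals. In this sketch (a hypothetical helper, not the authors' code), the overall mean is added back so that condition means stay on the original microvolt scale; whether the original analysis did this, and whether the regression was fit per participant or across all trials, is not stated.

```python
import numpy as np


def remove_familiarity(voltages, familiarity):
    """Remove the part of trial-wise voltages linearly predicted by familiarity.

    Fits voltage = b0 + b1 * familiarity by least squares and returns the
    residuals plus the overall mean (an assumption made to keep the scale).
    """
    voltages = np.asarray(voltages, dtype=float)
    familiarity = np.asarray(familiarity, dtype=float)
    X = np.column_stack([np.ones_like(familiarity), familiarity])  # intercept + slope
    beta, *_ = np.linalg.lstsq(X, voltages, rcond=None)
    residuals = voltages - X @ beta  # mean zero because an intercept is fitted
    return residuals + voltages.mean()


# Placeholder trials: familiarity ratings and P300 amplitudes (not study data).
familiarity = [1.0, 2.0, 3.0, 4.0, 5.0]
p300 = [1.0, 3.0, 2.0, 5.0, 4.0]
print(remove_familiarity(p300, familiarity))
```

After this step the adjusted voltages are uncorrelated with familiarity, so any remaining high- vs. low-manipulability difference cannot be explained by a linear familiarity effect.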

Data from 19 participants were excluded from analyses. In the Personal Experience group, eight participants were excluded due to equipment malfunction and two due to handedness (ambidextrous or left-handed), as measured using the Edinburgh Handedness Inventory (Oldfield, 1971). In the Functionality group, six participants were excluded due to equipment malfunction and three due to excessive artifacts in the EEG recording. After exclusions, the final sample sizes were N = 30 and N = 31 for the Personal Experience and Functionality groups, respectively. All participants in the final sample were right-handed.

Behavioral Results

In the Personal Experience group, participants reported having seen the high-manipulability objects in the last 3 days more often than the low-manipulability objects (t(29) = 7.82, p < 0.001, d = 0.87; proportion of “yes” responses, High: M = 0.45; Low: M = 0.31). These differences may relate to differences in the familiarity ratings of the two stimulus pools. In the Functionality group, participants judged the high-manipulability objects to be higher in functionality than the low-manipulability objects (t(30) = 9.56, p < 0.001, d = 2.22; High: M = 0.68; Low: M = 0.25).

ERP Results

ERP waveforms and the representative topographic distributions of the two ERP components of interest are shown in Figure 2. Data for each ERP and electrode site were analyzed with a separate ANOVA, as is commonly done (see McCarthy and Wood, 1985); each ANOVA used a mixed 2 × 2 design with the within-subject factor of Manipulability (high, low) and the between-subjects factor of Group (Personal Experience, Functionality). The mean voltages used in these ANOVAs are reported in Supplementary Material, Appendix C.
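Because Manipulability has only two levels, each F test in this mixed design can be computed from per-participant scores: the Manipulability main effect and the Group × Manipulability interaction from the high-minus-low difference scores, and the Group main effect from each participant's average over the two levels. The pure-Python sketch below illustrates that equivalence; it is not the SPSS procedure used in the study, the function name is hypothetical, and it assumes one value per participant and condition and unweighted group means (as in Type III sums of squares). Each F has df = (1, N - 2), which gives F(1,59) for the final sample of N = 61.

```python
from statistics import mean, variance


def mixed_2x2_anova(group_a, group_b):
    """2 (within: high/low) x 2 (between: group) mixed ANOVA.

    group_a, group_b: lists of (high, low) values, one pair per participant.
    Returns F values for both main effects and the interaction, plus df_error.
    """
    def pooled_var(x, y):
        # Within-group variance pooled across the two groups (n - 1 denominators).
        return ((len(x) - 1) * variance(x) + (len(y) - 1) * variance(y)) / (len(x) + len(y) - 2)

    diff_a = [h - l for h, l in group_a]      # within-subject contrast
    diff_b = [h - l for h, l in group_b]
    avg_a = [(h + l) / 2 for h, l in group_a]  # between-subject score
    avg_b = [(h + l) / 2 for h, l in group_b]
    inv_n = 1 / len(group_a) + 1 / len(group_b)

    s2_diff = pooled_var(diff_a, diff_b)
    grand_diff = (mean(diff_a) + mean(diff_b)) / 2  # unweighted mean difference
    f_within = grand_diff ** 2 / (s2_diff * inv_n / 4)
    f_interaction = (mean(diff_a) - mean(diff_b)) ** 2 / (s2_diff * inv_n)

    s2_avg = pooled_var(avg_a, avg_b)
    f_group = (mean(avg_a) - mean(avg_b)) ** 2 / (s2_avg * inv_n)

    return {"manipulability": f_within, "group": f_group,
            "interaction": f_interaction,
            "df_error": len(group_a) + len(group_b) - 2}


# Placeholder (high, low) pairs, not study data.
print(mixed_2x2_anova([(1, 0), (2, 0), (3, 0)], [(3, 0), (4, 0), (5, 0)]))
```

With equal group sizes the unweighted and weighted group means coincide; with the unequal groups here (30 vs. 31) the unweighted version matches the Type III default of most statistics packages.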

Regarding the general form of the waveforms (see Figure 2), the most prominent ERP components are the P300 and the slow wave, with early visual deflections less pronounced. The use of a delayed-response procedure, in which participants could not respond during the first 1500 ms of object presentation, may have increased the temporal variability of early ERPs such as the P1. Nonetheless, there is some evidence of a P1 effect at some electrodes in the Functionality group, particularly at electrode Oz.

P300 Peak Amplitude

Midline Electrodes

At electrode Cz, we observed a main effect of Manipulability (F(1,59) = 25.34, p < 0.001, ηp² = 0.30), which was qualified by a significant interaction (F(1,59) = 4.49, p = 0.038, ηp² = 0.07): the P300 was larger for high- than low-manipulability objects, and this difference was more pronounced in the Personal Experience group (High: M = 1.267, SD = 0.913; Low: M = 0.280, SD = 0.722) than in the Functionality group (High: M = 0.884, SD = 0.764; Low: M = 0.482, SD = 0.618). Note that Figure 2 shows the mean voltages over time, whereas the P300 was quantified as the peak amplitude within the 275–325 ms time window. At electrode Pz, there was a main effect of Manipulability (F(1,59) = 18.70, p < 0.001, ηp² = 0.24), with a larger amplitude elicited by high- than low-manipulability objects. At the same electrode, a main effect of Group was also observed (F(1,59) = 8.14, p = 0.006, ηp² = 0.12), with larger P300 amplitudes in the Personal Experience group. A similar pattern was found at electrode Oz, where both main effects were significant (Manipulability: F(1,59) = 18.78, p < 0.001, ηp² = 0.24; Group: F(1,59) = 4.98, p = 0.029, ηp² = 0.08).
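Partial eta squared (ηp²), the effect size reported alongside each F test, can be recovered from the F statistic and its degrees of freedom, because ηp² = SS_effect / (SS_effect + SS_error) = F·df_effect / (F·df_effect + df_error). The helper below is a hypothetical convenience function, used here only as a consistency check against the values reported for electrode Cz.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# P300 at electrode Cz, F(1, 59).
for label, f in [("Manipulability", 25.34), ("Group x Manipulability", 4.49)]:
    print(f"{label}: {partial_eta_squared(f, 1, 59):.2f}")
# Manipulability: 0.30
# Group x Manipulability: 0.07
```

The same identity applies to every F(1,59) reported in this section, since each test has one numerator degree of freedom and 59 error degrees of freedom.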

Lateralized Electrodes

A significant main effect of Manipulability was also observed at electrode C3 (F(1,59) = 26.18, p < 0.001, ηp² = 0.31), with higher peak P300 amplitudes for high-manipulability objects (High: M = 0.808, SD = 0.964; Low: M = 0.250, SD = 0.384). The same pattern of a larger P300 for high-manipulability objects was observed at electrode C4 (High: M = 0.726, SD = 0.680; Low: M = 0.425, SD = 1.015), albeit greatly attenuated (Manipulability: F(1,59) = 4.19, p = 0.045, ηp² = 0.07).

In the left parietal electrodes, we only observed a main effect of Manipulability, where the P300 was larger in amplitude for high-manipulability objects at electrodes P3 (F(1,59) = 10.82, p = 0.002, ηp² = 0.16) and PO7 (F(1,59) = 13.66, p < 0.001, ηp² = 0.19). In the right parietal electrodes, only the main effect of Manipulability was significant, with higher amplitude P300 for the high-manipulability objects, at both electrodes P4 (F(1,59) = 33.15, p < 0.001, ηp² = 0.36) and PO8 (F(1,59) = 35.75, p < 0.001, ηp² = 0.38). All other main effects and interactions were not significant (all ps > 0.05).

Slow-Wave Mean Amplitude

Midline Electrodes

At electrode Pz, there was a main effect of Manipulability (F(1,59) = 9.23, p = 0.004, ηp² = 0.14), with a larger slow-wave amplitude elicited by high- than low-manipulability objects (High: M = 0.423, SD = 0.843; Low: M = −0.279, SD = 0.628). A similar pattern was also observed at electrode Oz (F(1,59) = 5.60, p = 0.021, ηp² = 0.09). All other main effects and interactions were not significant (all ps > 0.05), including no significant effects at electrode Cz.

Lateralized Electrodes

At electrode C3, we observed a significant interaction of Group and Manipulability (F(1,59) = 5.17, p = 0.027, ηp² = 0.08), though no significant effects were found at electrode C4. As with the midline electrodes, only a main effect of Manipulability was significant across all of the lateralized parietal electrodes, with greater slow-wave amplitude for the high-manipulability objects: P3 (F(1,59) = 5.52, p = 0.022, ηp² = 0.09), PO7 (F(1,59) = 5.74, p = 0.020, ηp² = 0.09), P4 (F(1,59) = 5.17, p = 0.027, ηp² = 0.08), PO8 (F(1,59) = 4.84, p = 0.032, ηp² = 0.08).

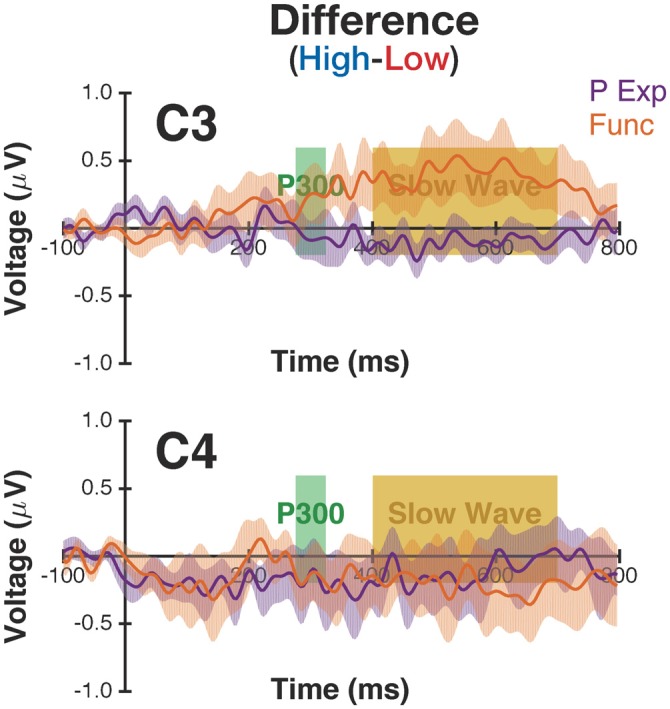

To understand the interaction observed at electrode C3, we followed up with post hoc t-tests. As shown in Figure 3, slow-wave amplitudes for the high-manipulability objects differed significantly between groups (t(59) = 2.75, p = 0.008, d = 0.72; Personal Experience: M = 0.196, SD = 1.224; Functionality: M = 0.519, SD = 0.718), but there was no evidence of a group difference for the low-manipulability objects (t(59) = 1.03, p = 0.30, d = 0.27; Personal Experience: M = −0.141, SD = 0.740; Functionality: M = −0.326, SD = 1.585). Thus, the interaction appears to be driven by a larger slow-wave potential for the high-manipulability objects in the Functionality group than in the three other conditions (of high/low × Personal Experience/Functionality).
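These follow-up comparisons are between-group tests at a single manipulability level. Assuming they are independent-samples Student's t-tests (pooled variance), consistent with the reported t(59) for groups of 30 and 31, and that Cohen's d uses the pooled standard deviation, the computation can be sketched in plain Python as below. The helper name and input lists are placeholders, not study data, and p-values would additionally require the t distribution, e.g., `scipy.stats.ttest_ind`, whose default `equal_var=True` gives the same pooled-variance t.

```python
from math import sqrt
from statistics import mean, variance


def student_t_and_d(x, y):
    """Independent-samples Student's t (pooled variance), its df, and Cohen's d."""
    n1, n2 = len(x), len(y)
    df = n1 + n2 - 2
    pooled_sd = sqrt(((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / df)
    diff = mean(x) - mean(y)
    t = diff / (pooled_sd * sqrt(1 / n1 + 1 / n2))
    d = diff / pooled_sd  # standardized mean difference
    return t, df, d


# Placeholder slow-wave amplitudes for two groups (not study data).
print(student_t_and_d([1.0, 2.0, 3.0], [3.0, 4.0, 5.0]))
```

Because t and d share the same pooled standard deviation, they are related by d = t·√(1/n₁ + 1/n₂), which makes it easy to check one against the other.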

Figure 3.

ERP waveforms for the difference between high- and low-manipulability objects. Difference waveforms are shown for both Personal Experience (P Exp) and Functionality (Func) groups at electrodes C3 and C4. The shaded band for the ERP waveforms corresponds to the SEM. See Figure 2 for the original waveforms.

Discussion

We report two distinct findings. First, both the P300 and slow-wave components were larger in amplitude during the presentation of high-manipulability stimuli. On the face of it, these results suggest that processing images of high-manipulability objects recruits more attentional resources than processing low-manipulability objects. Second, we observed a larger slow wave at electrode C3 during the processing of high-manipulability objects compared to low, but only in the Functionality group. This could thus be described as an effect of automatic vs. deliberate motor processing interacting with the manipulability of the object at some putative level of motor-simulation processing, rather than merely reflecting the allocation of attentional resources.

Regarding the first finding, the traditional approach to examining the P300 in cognitive tasks is to employ an oddball paradigm with varied stimulus probability. In our experiment, we did not vary probability: high- and low-manipulability objects were presented equally often in both groups, and variability in object familiarity was controlled for. Thus, differences in P300 amplitude between object types likely reflect an important distinction in attentional processing during our task. Moreover, as the slow wave has been associated with further and more elaborate processing (Karis et al., 1984; Ruchkin et al., 1988; Fabiani et al., 1990), our results suggest that this effect extends into later, more elaborative processing. Critically, these effects were observed under both motor-processing instructions. Thus, we argue that high-manipulability objects likely receive more automatic prioritization of attentional resources than low-manipulability objects. That is, we observed a basic attentional phenomenon associated with object functionality. Along the same lines, Handy et al. (2003) showed that an early ERP marker of attention, the P1, was larger for images of objects that could be manually interacted with (e.g., tools), suggesting a low-level advantage for these items in the attention system. Our study was not designed to examine the P1, but our later-occurring P300 and slow-wave effects could very well be related to a subsequent stage of the same overall process. Furthermore, in light of recent theories suggesting that the P300 is a composite of two distinct subcomponents, P3a and P3b (e.g., Soltani and Knight, 2000; Polich, 2012), our manipulability effect is likely a difference in P3b amplitude (see Figure 2). While the P3a is most closely associated with working memory and generated more frontally, the P3b is related to temporal-parietal activity, including processes such as perception, episodic memory, and inhibitory control.
Additionally, the role of superior parietal cortex, e.g., the intraparietal sulcus, in processing tool-related manipulation and action semantics (Kalénine et al., 2010; Schwartz et al., 2011; Tsagkaridis et al., 2014; see Johnson-Frey, 2004 for a review) could serve as the neural basis of this effect. However, as our goal was to investigate the role of object functionality, both as a stimulus property (high- vs. low-manipulability) and in terms of whether the object’s motor features were directly attended to (automatic vs. deliberate motor processing), we are unable to evaluate how our effects may be driven by processing the function- vs. action-related knowledge of objects (e.g., Kellenbach et al., 2003; Canessa et al., 2008; Spunt et al., 2011; Wamain et al., 2014; Chen et al., 2016). Nonetheless, both of these properties would be more pronounced for our high-manipulability objects and processed to a greater extent in the Functionality group.

The interaction of manipulability with motor processing instruction is particularly intriguing, as no prior studies have evaluated differences in brain activity related to automatic vs. deliberate motor processing of object manipulability. While we did not observe an interaction at the midline electrodes, we did find such an effect at C3, the electrode overlying the hand region of the primary motor cortex contralateral to the dominant hand. The site of this effect suggests that the interaction is less directly related to attentional allocation per se and more closely resembles sub-threshold motor activity, i.e., motor simulations (see Madan and Singhal, 2012a). Furthermore, given that the interaction was observed in the slow-wave time window, it is likely a product of effortful and sustained processes (i.e., a deeper level of processing), rather than an incidental process related to the processing of image stimuli (Karis et al., 1984; Fabiani et al., 1990). As such, it appears that processing the object stimuli, regardless of manipulability, evokes a positive-going ERP at C3, as is clearly observable in the Functionality group (Figure 2). When the motor-related features of the objects are deliberately evaluated, i.e., in the Functionality group, high-manipulability objects evoke greater activity in this region, likely associated with motor simulations. However, this is not true of the low-manipulability objects, nor when motor features were processed only incidentally. Figure 3 further emphasizes this result, showing that there is effectively no difference in activity at C3 due to manipulability in the Personal Experience group, but meaningful deviations in the Functionality group, especially during the slow-wave time window. Importantly, no differences are present at electrode C4, indicating that the effect is lateralized to the hemisphere contralateral to the participants’ dominant hand.
Madan and Singhal (2012b) used the same instructions as here, but with word stimuli, and found better memory for high- than low-manipulability objects in the Personal Experience group, but the reverse effect in the Functionality group. Slow wave has been shown to be indicative of elaborative memory encoding (Karis et al., 1984; Fabiani et al., 1990). Considering that pattern of behavioral results alongside the present findings, it is plausible that the differential processing at C3 is related to the interaction of manipulability and motor processing instruction observed on episodic memory (also see Palombo and Madan, 2015).
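The C3 pattern described above can be thought of as a two-by-two contrast: a high- minus low-manipulability difference wave is computed for each instruction group (as plotted in Figure 3), averaged within a time window, and then compared between groups. The minimal Python/NumPy sketch below illustrates only that arithmetic. The window boundaries, the 500 Hz sampling rate, the function names, and the synthetic waveforms are all hypothetical placeholders rather than the study's parameters or data, and this is not the authors' analysis pipeline.

```python
import numpy as np

# Hypothetical analysis windows in seconds, as half-open intervals [start, end).
# These are placeholders, not the windows used in the study.
P300_WINDOW = (0.3, 0.6)
SLOW_WAVE_WINDOW = (0.6, 1.0)


def window_mask(times, window):
    """Boolean mask selecting samples with start <= t < end."""
    start, end = window
    return (times >= start) & (times < end)


def mean_amplitude(erp, times, window):
    """Mean voltage of a single-channel ERP within the analysis window."""
    mask = window_mask(times, window)
    if not mask.any():
        raise ValueError("analysis window contains no samples")
    return float(erp[mask].mean())


def manipulability_effect(high, low, times, window):
    """Mean amplitude of the high- minus low-manipulability difference wave."""
    return mean_amplitude(high - low, times, window)


def interaction_contrast(effect_functionality, effect_personal):
    """Difference between the two groups' manipulability effects."""
    return effect_functionality - effect_personal


if __name__ == "__main__":
    # Synthetic C3 averages (arbitrary microvolt values), 500 Hz from -0.2 to 1.0 s.
    times = np.linspace(-0.2, 1.0, 601)
    base = 3.0 * np.exp(-((times - 0.5) ** 2) / 0.05)  # shared positive-going ERP
    p300 = window_mask(times, P300_WINDOW)
    slow = window_mask(times, SLOW_WAVE_WINDOW)

    # Both groups: a high > low difference confined to the P300 window.
    pe_high, pe_low = base + 1.0 * p300, base.copy()
    # Functionality only: additional high > low positivity in the slow-wave window.
    fu_high, fu_low = base + 1.0 * p300 + 2.0 * slow, base.copy()

    for name, window in [("P300", P300_WINDOW), ("slow wave", SLOW_WAVE_WINDOW)]:
        pe = manipulability_effect(pe_high, pe_low, times, window)
        fu = manipulability_effect(fu_high, fu_low, times, window)
        print(f"{name}: Personal Experience={pe:.2f}, Functionality={fu:.2f}, "
              f"interaction={interaction_contrast(fu, pe):.2f}")
```

With these synthetic inputs, both groups show the same manipulability effect in the P300 window, and a group difference (the interaction) appears only in the slow-wave window. Half-open windows are used so that adjacent windows never share a sample.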

An extensive network of brain regions underlies our ability to use and understand tool function (Binkofski et al., 1999; Johnson-Frey, 2004; Bi et al., 2015). Even with respect to object manipulability specifically, much of our current knowledge is derived from patient studies, particularly involving apraxia, but also aphasia and agnosia (e.g., Buxbaum and Saffran, 2002; Wolk et al., 2005; Arévalo et al., 2007; Garcea et al., 2013; Mengotti et al., 2013; Reilly et al., 2014; also see Capitani et al., 2003; Mahon and Caramazza, 2009; and Osiurak, 2014 for related reviews). Patient studies are informative in establishing that a brain region is meaningfully related to a behavior rather than merely reflecting a spectator process; however, they cannot reveal the extent to which a cognitive process is supported by that region, a question that cognitive neuroscience approaches are clearly better suited to address. Prior studies support the finding that both high- and low-manipulability objects robustly evoke attentional processing and motor simulations. Nonetheless, we observed differences in activity that indicate manipulability-related variability. Furthermore, by investigating the precise temporal dynamics of these processes, we were also able to disentangle automatic vs. deliberate cognitive processes.

More generally, at a coarse level, our findings show that object manipulability influences the ERP waveform evoked when participants process an image of that object. While we have referred to our stimuli as high- and low-manipulability objects, the fact that our stimuli were images of objects rather than actual objects is likely an important distinction, especially when considering our results with respect to Gibson’s theory of affordances (Gibson, 1977, 1979). Specifically, “affordance” refers to properties of the stimulus, and a picture of a pan simply does not have the same physical properties (e.g., grip aperture of the handle) as a physical pan (Gibson, 1971, 1979; Wilson and Golonka, 2013). As such, physical objects should be used as stimuli when studying affordances (e.g., Tucker and Ellis, 1998; Mon-Williams and Bingham, 2011). As we used image stimuli, we refrain from directly connecting our work to the literature on affordances, as the affordances of an image of an object are unclear (Kennedy, 1974; Snow et al., 2011). For instance, affordances may not be involved in the effects observed here at all; these effects may instead reflect an influence of manipulability on bottom-up attentional processes (Makris, 2015). However, recent findings indicate that images of tools can nonetheless prime movement-related actions as effectively as real tool objects (Squires et al., 2016). Independent of this issue, as detailed throughout the Introduction, a substantial literature has reliably observed differences in behavior and brain activity corresponding to object manipulability, and it is this literature that the current study serves to advance.

An object’s functional properties can influence cognitive processes and resulting behaviors. To better understand this effect, we recorded EEG while participants made judgments about images of objects that were either high or low in functional manipulability (e.g., hammer vs. ladder). In a between-subjects design, participants judged whether they had seen the object recently (Personal Experience) or could manipulate the object using their hand (Functionality). Our first main finding was that processing high-manipulability objects recruited more attentional resources than processing low-manipulability objects, suggesting a relative prioritization of processing for high-manipulability objects. Our second main finding was that automatic vs. deliberate motor instructions interacted with manipulability only at an electrode overlying motor cortex (C3), suggesting that these differences may arise at the level of motor simulations rather than attentional allocation. While it is generally understood that the motor features of an object influence how it is processed and interacted with, our results suggest that these processes are more nuanced than previously thought, particularly with respect to how we intend to process the object given current task demands.

Author Contributions

Conceptualization: all authors; Data collection: YYC; Data analysis: CRM and YYC; Manuscript writing: all authors. All authors reviewed and approved the final version of the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This research was partly funded by a Discovery grant and a Canada Graduate Scholarship, both from the Natural Sciences and Engineering Research Council of Canada, held by AS and CRM, respectively. The authors declare no competing financial interests.

Footnotes

1 Studies comparing images of tools vs. non-tools, such as animals or faces, have also reached similar conclusions (Chao and Martin, 2000; Lewis et al., 2005; Sim and Kiefer, 2005; Proverbio et al., 2007; Just et al., 2010; Almeida et al., 2013; Amsel et al., 2013), but such comparisons are inherently less specific than the one addressed by the present research question.

Supplementary Material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fnhum.2016.00360

References

- Almeida J., Fintzi A. R., Mahon B. Z. (2013). Tool manipulation knowledge is retrieved by way of the ventral visual object processing pathway. Cortex 49, 2334–2344. 10.1016/j.cortex.2013.05.004

- Amsel B. D., Urbach T. P., Kutas M. (2013). Alive and grasping: stable and rapid semantic access to an object category but not object graspability. Neuroimage 77, 1–13. 10.1016/j.neuroimage.2013.03.058

- Arévalo A., Perani D., Cappa S. F., Butler A., Bates E., Dronkers N. (2007). Action and object processing in aphasia: from nouns and verbs to the effect of manipulability. Brain Lang. 100, 79–94. 10.1016/j.bandl.2006.06.012

- Armstrong G. A. B., Singhal A. (2011). Neural markers of automatic and controlled attention during immediate and delayed action. Exp. Brain Res. 213, 35–48. 10.1007/s00221-011-2774-0

- Bi Y., Han Z., Zhong S., Ma Y., Gong G., Huang R., et al. (2015). The white matter structural network underlying human tool use and tool understanding. J. Neurosci. 35, 6822–6835. 10.1523/JNEUROSCI.3709-14.2015

- Binkofski F., Buccino G., Posse S., Seitz R. J., Rizzolatti G., Freund H. J. (1999). A fronto-parietal circuit for object manipulation in man: evidence from an fMRI-study. Eur. J. Neurosci. 11, 3276–3286. 10.1046/j.1460-9568.1999.00753.x

- Borghi A. M., Bonfiglioli C., Ricciardelli P., Rubichi S., Nicoletti R. (2007). “Do we access object manipulability while we categorize? Evidence from reaction times studies,” in Mental States: Evolution, Function, Nature, eds Schalley A. C., Khlentzos D. (Philadelphia: John Benjamins), 153–170.

- Boronat C. B., Buxbaum L. J., Coslett H. B., Tang K., Saffran E. M., Kimberg D. Y., et al. (2005). Distinctions between manipulation and function knowledge of objects: evidence from functional magnetic resonance imaging. Brain Res. Cogn. Brain Res. 23, 361–373. 10.1016/j.cogbrainres.2004.11.001

- Buccino G., Sato M., Cattaneo L., Rodà F., Riggio L. (2009). Broken affordances, broken objects: a TMS study. Neuropsychologia 47, 3074–3078. 10.1016/j.neuropsychologia.2009.07.003

- Buxbaum L. J., Saffran E. M. (2002). Knowledge of object manipulation and object function: dissociations in apraxic and nonapraxic subjects. Brain Lang. 82, 179–199. 10.1016/s0093-934x(02)00014-7

- Campanella F., Shallice T. (2011). Manipulability and object recognition: is manipulability a semantic feature? Exp. Brain Res. 208, 369–383. 10.1007/s00221-010-2489-7

- Canessa N., Borgo F., Cappa S. F., Perani D., Falini A., Buccino G., et al. (2008). The different neural correlates of action and functional knowledge in semantic memory: an fMRI study. Cereb. Cortex 18, 740–751. 10.1093/cercor/bhm110

- Capitani E., Laiacona M., Mahon B., Caramazza A. (2003). What are the facts of semantic category-specific deficits? A critical review of the clinical evidence. Cogn. Neuropsychol. 20, 213–261. 10.1080/02643290244000266

- Chao L. L., Martin A. (2000). Representation of manipulable man-made objects in the dorsal stream. Neuroimage 12, 478–484. 10.1006/nimg.2000.0635

- Chen Q., Garcea F. E., Mahon B. Z. (2016). The representation of object-directed action and function knowledge in the human brain. Cereb. Cortex 26, 1609–1618. 10.1093/cercor/bhu328

- Chen Y. Y., Lithgow K., Hemmerich J. A., Caplan J. B. (2014). Is what goes in what comes out? Encoding and retrieval event-related potentials together determine memory outcome. Exp. Brain Res. 232, 3175–3190. 10.1007/s00221-014-4002-1

- Codispoti M., Ferrari V., De Cesarei A., Cardinale R. (2006). Implicit and explicit categorization of natural scenes. Prog. Brain Res. 156, 53–65. 10.1016/s0079-6123(06)56003-0

- Coles M. G. (1989). Modern mind-brain reading: psychophysiology, physiology and cognition. Psychophysiology 26, 251–269. 10.1111/j.1469-8986.1989.tb01916.x

- Coles M. G. H., Smid H. G. O. M., Scheffers M. K., Otten L. J. (1995). “Mental chronometry and the study of human information processing,” in Electrophysiology of Mind: Event-Related Brain Potentials and Cognition, eds Rugg M. D., Coles M. G. H. (New York, NY: Oxford University Press), 86–131.

- Delorme A., Makeig S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. 10.1016/j.jneumeth.2003.10.009

- Donchin E., Kubovy M., Kutas M., Johnson R., Herning R. I. (1973). Graded changes in evoked response (P300) amplitude as a function of cognitive activity. Percept. Psychophys. 14, 319–324. 10.3758/bf03212398

- Fabiani M., Karis D., Donchin E. (1990). Effects of mnemonic strategy manipulation in a Von Restorff paradigm. Electroencephalogr. Clin. Neurophysiol. 75, 22–35. 10.1016/0013-4694(90)90149-e

- Garcea F. E., Dombovy M., Mahon B. Z. (2013). Preserved tool knowledge in the context of impaired action knowledge: implications for models of semantic memory. Front. Hum. Neurosci. 7:120. 10.3389/fnhum.2013.00120

- Gentilucci M., Gangitano M. (1998). Influence of automatic word reading on motor control. Eur. J. Neurosci. 10, 752–756. 10.1046/j.1460-9568.1998.00060.x

- Gibson J. J. (1971). The information available in pictures. Leonardo 4, 27–35. 10.2307/1572228

- Gibson J. J. (1977). “The theory of affordances,” in Perceiving, Acting and Knowing: Toward an Ecological Psychology, eds Shaw R., Bransford J. (Hillsdale, NJ: Lawrence Erlbaum Associates), 67–82.

- Gibson J. J. (1979). The Ecological Approach to Visual Perception. Boston, MA: Houghton Mifflin.

- Glover S., Rosenbaum D. A., Graham J., Dixon P. (2004). Grasping the meaning of words. Exp. Brain Res. 154, 103–108. 10.1007/s00221-003-1659-2

- Green J. B., Bialy Y., Sora E., Thatcher R. W. (1997). An electroencephalographic study of imagined movement. Arch. Phys. Med. Rehabil. 78, 578–581. 10.1016/s0003-9993(97)90421-4

- Handy T. C., Grafton S. T., Shroff N. M., Ketay S., Gazzaniga M. S. (2003). Graspable objects grab attention when the potential for action is recognized. Nat. Neurosci. 6, 421–427. 10.1038/nn1031

- Johnson-Frey S. H. (2004). The neural bases of complex tool use in humans. Trends Cogn. Sci. 8, 71–78. 10.1016/j.tics.2003.12.002

- Jung T.-P., Makeig S., Humphries C., Lee T.-W., McKeown M. J., Iragui V., et al. (2000). Removing electroencephalographic artifacts by blind source separation. Psychophysiology 37, 163–178. 10.1111/1469-8986.3720163

- Just M. A., Cherkassky V. L., Aryal S., Mitchell T. M. (2010). The neurosemantic theory of concrete noun representations based on the underlying brain codes. PLoS One 5:e8622. 10.1371/journal.pone.0008622

- Kalénine S., Buxbaum L. J., Coslett H. B. (2010). Critical brain regions for action recognition: lesion-symptom mapping in left hemisphere stroke. Brain 133, 3269–3280. 10.1093/brain/awq210

- Kappenman E. S., Luck S. J. (2010). The effects of electrode impedance on data quality and statistical significance in ERP recordings. Psychophysiology 47, 888–904. 10.1111/j.1469-8986.2010.01009.x

- Karis D., Fabiani M., Donchin E. (1984). “P300” and memory: individual differences in the von Restorff effect. Cogn. Psychol. 16, 177–216. 10.1016/0010-0285(84)90007-0

- Kellenbach M. L., Brett M., Patterson K. (2003). Actions speak louder than functions: the importance of manipulability and action in tool representation. J. Cogn. Neurosci. 15, 30–46. 10.1162/089892903321107800

- Kennedy J. M. (1974). A Psychology of Picture Perception. San Francisco, CA: Jossey-Bass Publishers.

- Kok A. (2001). On the utility of P3 amplitude as a measure of processing capacity. Psychophysiology 38, 557–577. 10.1017/s0048577201990559

- Kutas M., Donchin E. (1974). Studies of squeezing: handedness, responding hand, response force and asymmetry of readiness potential. Science 186, 545–548. 10.1126/science.186.4163.545

- Kutas M., McCarthy G., Donchin E. (1977). Augmenting mental chronometry: the P300 as a measure of stimulus evaluation time. Science 197, 792–795. 10.1126/science.887923

- Lewis J. W., Brefczynski J. A., Phinney R. E., Janik J. J., DeYoe E. A. (2005). Distinct cortical pathways for processing tool versus animal sounds. J. Neurosci. 25, 5148–5158. 10.1523/JNEUROSCI.0419-05.2005

- Luu P., Ferree T. (2005). Determination of the Hydrocel Geodesic Sensor Nets’ Average Electrode Positions and their 10–10 International Equivalents (Technical Report). Eugene, OR: Electrical Geodesics, Inc.

- Madan C. R., Singhal A. (2012a). Motor imagery and higher-level cognition: four hurdles before research can sprint forward. Cogn. Process. 13, 211–229. 10.1007/s10339-012-0438-z

- Madan C. R., Singhal A. (2012b). Encoding the world around us: motor-related processing influences verbal memory. Conscious. Cogn. 21, 1563–1570. 10.1016/j.concog.2012.07.006

- Mahon B. Z., Caramazza A. (2009). Concepts and categories: a cognitive neuropsychological perspective. Annu. Rev. Psychol. 60, 27–51. 10.1146/annurev.psych.60.110707.163532

- Makris S. (2015). A commentary: viewing photos and reading nouns of natural graspable objects similarly modulate motor responses. Front. Hum. Neurosci. 9:337. 10.3389/fnhum.2015.00337

- Marino B. F., Sirianni M., Dalla Volta R., Magliocco F., Silipo F., Quattrone A., et al. (2014). Viewing photos and reading nouns of natural graspable objects similarly modulate motor responses. Front. Hum. Neurosci. 8:968. 10.3389/fnhum.2014.00968

- Matheson H. E., White N. C., McMullen P. A. (2014a). A test of the embodied simulation theory of object perception: potentiation of responses to artifacts and animals. Psychol. Res. 78, 465–482. 10.1007/s00426-013-0502-z

- Matheson H. E., White N., McMullen P. A. (2014b). Testing the embodied account of object naming: a concurrent motor task affects naming artifacts and animals. Acta Psychol. (Amst) 145, 33–43. 10.1016/j.actpsy.2013.10.012

- McCarthy G., Donchin E. (1976). The effects of temporal and event uncertainty in determining the waveforms of the auditory event related potential (ERP). Psychophysiology 13, 581–590. 10.1111/j.1469-8986.1976.tb00885.x

- McCarthy G., Wood C. C. (1985). Scalp distributions of event-related potentials: an ambiguity associated with analysis of variance models. Electroencephalogr. Clin. Neurophysiol. 62, 203–208. 10.1016/0168-5597(85)90015-2

- Mengotti P., Corradi-Dell’Acqua C., Negri G. A., Ukmar M., Pesavento V., Rumiati R. I. (2013). Selective imitation impairments differentially interact with language processing. Brain 136, 2602–2618. 10.1093/brain/awt194

- Mon-Williams M., Bingham G. P. (2011). Discovering affordances that determine the spatial structure of reach-to-grasp movements. Exp. Brain Res. 211, 145–160. 10.1007/s00221-011-2659-2

- Oldfield R. C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113. 10.1016/0028-3932(71)90067-4

- Osiurak F. (2014). What neuropsychology tells us about human tool use? The four constraints theory (4CT): mechanics, space, time and effort. Neuropsychol. Rev. 24, 88–115. 10.1007/s11065-014-9260-y

- Palombo D. J., Madan C. R. (2015). Making memories that last. J. Neurosci. 35, 10643–10644. 10.1523/JNEUROSCI.1882-15.2015

- Papeo L., Lingnau A., Agosta S., Pascual-Leone A., Battelli L., Caramazza A. (2015). The origin of word-related motor activity. Cereb. Cortex 25, 1668–1675. 10.1093/cercor/bht423

- Polich J. (2012). “Neuropsychology of P300,” in Oxford Handbook of Event-Related Potential Components, eds Luck S. J., Kappenman E. S. (New York, NY: Oxford University Press), 159–188.

- Prinzel L. J., Freeman F. G., Scerbo M. W., Mikulka P. J., Pope A. T. (2003). Effects of a psychophysiological system for adaptive automation on performance, workload and the event-related potential P300 component. Hum. Factors 45, 601–613. 10.1518/hfes.45.4.601.27092

- Proverbio A. M., Adorni R., D’Aniello G. E. (2011). 250 ms to code for action affordance during observation of manipulable objects. Neuropsychologia 49, 2711–2717. 10.1016/j.neuropsychologia.2011.05.019

- Proverbio A. M., Del Zotto M., Zani A. (2007). The emergence of semantic categorization in early visual processing: ERP indices of animal vs. artifact recognition. BMC Neurosci. 8:24. 10.1186/1471-2202-8-24

- Pulvermüller F., Hauk O., Nikulin V. V., Ilmoniemi R. J. (2005). Functional links between motor and language systems. Eur. J. Neurosci. 21, 793–797. 10.1111/j.1460-9568.2005.03900.x

- Ramoser H., Müller-Gerking J., Pfurtscheller G. (2000). Optimal spatial filtering of single trial EEG during imagined hand movement. IEEE Trans. Rehabil. Eng. 8, 441–446. 10.1109/86.895946

- Reilly J., Harnish S., Garcia A., Hung J., Rodriguez A. D., Crosson B. (2014). Lesion symptom mapping of manipulable object naming in nonfluent aphasia: can a brain be both embodied and disembodied? Cogn. Neuropsychol. 31, 287–312. 10.1080/02643294.2014.914022

- Righi S., Orlando V., Marzi T. (2014). Attractiveness and affordance shape tools neural coding: insight from ERPs. Int. J. Psychophysiol. 91, 240–253. 10.1016/j.ijpsycho.2014.01.003

- Ruchkin D. S., Johnson R., Jr., Mahaffey D., Sutton S. (1988). Towards a functional categorization of slow waves. Psychophysiology 25, 339–353. 10.1111/j.1469-8986.1988.tb01253.x

- Ruchkin D. S., Sutton S., Kietzman M. L., Silver K. (1980). Slow wave and P300 in signal detection. Electroencephalogr. Clin. Neurophysiol. 50, 35–47. 10.1016/0013-4694(80)90321-1

- Rueschemeyer S.-A., van Rooij D., Lindemann O., Willems R. M., Bekkering H. (2010). The function of words: distinct neural correlates for words denoting differently manipulable objects. J. Cogn. Neurosci. 22, 1844–1851. 10.1162/jocn.2009.21310

- Saccuman M. C., Cappa S. F., Bates E. A., Arévalo A., Della Rosa P., Danna M., et al. (2006). The impact of semantic reference on word class: an fMRI study of action and object naming. Neuroimage 32, 1865–1878. 10.1016/j.neuroimage.2006.04.179

- Salmon J. P., Matheson H. E., McMullen P. A. (2014a). Slow categorization but fast naming for photographs of manipulable objects. Vis. Cogn. 22, 141–172. 10.1080/13506285.2014.887042

- Salmon J. P., Matheson H. E., McMullen P. A. (2014b). Photographs of manipulable objects are named more quickly than the same objects depicted as line-drawings: evidence that photographs engage embodiment more than line-drawings. Front. Psychol. 5:1187. 10.3389/fpsyg.2014.01187

- Salmon J. P., McMullen P. A., Filliter J. H. (2010). Norms for two types of manipulability (graspability and functional usage), familiarity and age of acquisition for 320 photographs of objects. Behav. Res. Methods 42, 82–95. 10.3758/brm.42.1.82

- Schupp H. T., Flaisch T., Stockburger J., Junghöfer M. (2006). Emotion and attention: event-related brain potential studies. Prog. Brain Res. 156, 31–51. 10.1016/s0079-6123(06)56002-9

- Schwartz M. F., Kimberg D. Y., Walker G. M., Brecher A., Faseyitan O. K., Dell G. S., et al. (2011). Neuroanatomical dissociation for taxonomic and thematic knowledge in the human brain. Proc. Natl. Acad. Sci. U S A 108, 8520–8524. 10.1073/pnas.1014935108

- Shebani Z., Pulvermüller F. (2013). Moving the hands and feet specifically impairs working memory for arm- and leg-related action words. Cortex 49, 222–231. 10.1016/j.cortex.2011.10.005

- Sim E. J., Kiefer M. (2005). Category-related brain activity to natural categories is associated with the retrieval of visual features: evidence from repetition effects during visual and functional judgments. Brain Res. Cogn. Brain Res. 24, 260–273. 10.1016/j.cogbrainres.2005.02.006

- Smulders F. T., Miller J. O. (2012). “The lateralized readiness potential,” in Oxford Handbook of Event-Related Potential Components, eds Luck S. J., Kappenman E. S. (New York, NY: Oxford University Press), 209–229.

- Snow J. C., Pettypiece C. E., McAdam T. D., McLean A. D., Stroman P. W., Goodale M. A., et al. (2011). Bringing the real world into the fMRI scanner: repetition effects for pictures versus real objects. Sci. Rep. 1:130. 10.1038/srep00130

- Soltani M., Knight R. T. (2000). Neural origins of the P300. Crit. Rev. Neurobiol. 14, 199–224. 10.1615/CritRevNeurobiol.v14.i3-4.20

- Spunt R. P., Satpute A. B., Lieberman M. D. (2011). Identifying the what, why and how of an observed action: an fMRI study of mentalizing and mechanizing during action observation. J. Cogn. Neurosci. 23, 63–74. 10.1162/jocn.2010.21446

- Squires S. D., Macdonald S. N., Culham J. C., Snow J. C. (2016). Priming tool actions: are real objects more effective primes than pictures? Exp. Brain Res. 234, 963–976. 10.1007/s00221-015-4518-z

- Squires N. K., Squires K. C., Hillyard S. A. (1975). Two varieties of long-latency positive waves evoked by unpredictable auditory stimuli in man. Electroencephalogr. Clin. Neurophysiol. 38, 387–401. 10.1016/0013-4694(75)90263-1

- Tsagkaridis K., Watson C., Jax S., Buxbaum L. (2014). The role of action representations in thematic object relations. Front. Hum. Neurosci. 8:140. 10.3389/fnhum.2014.00140

- Tucker M., Ellis R. (1998). On the relations between seen objects and components of potential actions. J. Exp. Psychol. Hum. Percept. Perform. 24, 830–846. 10.1037/0096-1523.24.3.830

- Wamain Y., Pluciennicka E., Kalénine S. (2014). Temporal dynamics of action perception: differences on ERP evoked by object-related and non-object-related actions. Neuropsychologia 63, 249–258. 10.1016/j.neuropsychologia.2014.08.034

- Williams L. M., Kemp A. H., Felmingham K., Liddell B. J., Palmer D. M., Bryant R. A. (2007). Neural biases to covert and overt signals of fear: dissociation by trait anxiety and depression. J. Cogn. Neurosci. 19, 1595–1608. 10.1162/jocn.2007.19.10.1595

- Wilson A. D., Golonka S. (2013). Embodied cognition is not what you think it is. Front. Psychol. 4:58. 10.3389/fpsyg.2013.00058

- Witt J. K., Kemmerer D., Linkenauger S. A., Culham J. (2010). A functional role for motor simulation in identifying tools. Psychol. Sci. 21, 1215–1219. 10.1177/0956797610378307

- Wolk D. A., Coslett H. B., Glosser G. (2005). The role of sensory-motor information in object recognition: evidence from category-specific visual agnosia. Brain Lang. 94, 131–146. 10.1016/j.bandl.2004.10.015
