Abstract

During the development of new therapies, it is not uncommon to test whether a new treatment works better than the existing treatment for all patients who suffer from a condition (full population) or for a subset of the full population (subpopulation). One approach that may be used for this objective is to have two separate trials, where in the first trial, data are collected to determine if the new treatment benefits the full population or the subpopulation. The second trial is a confirmatory trial to test the new treatment in the population selected in the first trial. In this paper, we consider the more efficient two‐stage adaptive seamless designs (ASDs), where in stage 1, data are collected to select the population to test in stage 2. In stage 2, additional data are collected to perform confirmatory analysis for the selected population. Unlike the approach that uses two separate trials, for ASDs, stage 1 data are also used in the confirmatory analysis. Although ASDs are efficient, using stage 1 data both for selection and confirmatory analysis introduces selection bias and consequently statistical challenges in making inference. We will focus on point estimation for such trials. In this paper, we describe the extent of bias for estimators that ignore multiple hypotheses and selecting the population that is most likely to give positive trial results based on observed stage 1 data. We then derive conditionally unbiased estimators and examine their mean squared errors for different scenarios.©2015 The Authors. Statistics in Medicine Published by JohnWiley & Sons Ltd.

Keywords: adaptive seamless designs, phase II/III clinical trials, multi‐arm multi‐stage trials, subpopulation, subgroup analysis

1. Introduction

In drug development, it is not uncommon to have a hypothesis selection stage followed by a confirmatory analysis stage. In the hypothesis selection stage, data are collected to test multiple hypotheses, with the hypothesis that is most likely to give positive trial results selected to be tested in the confirmatory analysis stage. In this paper, we will consider two‐stage adaptive seamless designs (ASDs) in which the hypothesis selection stage (stage 1) and the confirmatory analysis stage (stage 2) are two parts of a single trial, with hypothesis selection performed at an interim analysis. An alternative to an ASD is to have two separate trials, separate in the sense that stage 1 data are only used for hypothesis selection and the confirmatory analysis uses stage 2 data only. However, an ASD is more efficient than having two separate trials because, as data from both stages of an adaptive seamless trial are used in the final confirmatory analysis, for the same power, fewer patients would be required in stage 2 of an adaptive seamless trial than in the setting with two separate trials hence saving resources. The two‐stage adaptive seamless trial can also be designed so that it is more efficient than having a trial with a single stage, where a single analysis is used to select and test the best hypothesis, for example using the Bonferroni test or the Dunnett test 1.

Much work has been undertaken on ASDs where the multiple hypotheses arise as a result of comparing a control to several experimental treatments in stage 1. Based on stage 1 data, the most promising experimental treatment is selected to continue to stage 2 together with the control. We refer to this as treatment selection. In stage 1, available patients are randomly allocated to the control and all the experimental treatments while in stage 2, patients are randomly allocated to the control and the most promising experimental treatment. The experimental treatments may be distinct treatments or different doses of a single experimental treatment. Treatment selection in ASDs is described in more detail in 2, 3, 4, 5, 6, 7, 8, 9, 10 among others. A challenge with such adaptive seamless trials is that selecting the most promising experimental treatment in stage 1 introduces selection bias because the superiority of the selected experimental treatment may be by chance. Consequently, appropriate confirmatory analysis needs to account for using biased stage 1 data. Hypothesis testing methods that control type I error rate have been developed or described in 2, 3, 4, 5, 6, 7, 8. Point estimators that adjust for treatment selection have been developed in 11, 12, 13, 14, 15 while confidence intervals that adjust for treatment selection have been considered in 7, 13, 16, 17, 18, 19.

In this paper, we consider the case where multiple hypotheses arise because in stage 1, a control is compared with a single experimental treatment in several subpopulations. Based on stage 1 data, the subpopulation in which the experimental treatment shows most benefit over the control is selected to be tested further in stage 2. We refer to this as subpopulation selection. In stage 1, patients are recruited from all subpopulations while in stage 2, patients are recruited from the selected subpopulation only and randomly allocated to the control and the experimental treatment. Subpopulation (subgroup) analysis has been considered in many trials, encompassing many disease areas such as Alzheimer's 20, epilepsy 21 and cancer 22. Most of these trials are single stage but investigators are beginning to design two‐stage adaptive seamless trials for subpopulation selection such as the trial described in 23. The subpopulation may be defined based on baseline disease severity 20, 21, age group 24 or a genetic biomarker 22 among other criteria. As in 23, we will assume that the subpopulations are pre‐specified. The case of subpopulation selection in ASDs is described in more detail in 23, 25, 26, 27, 28.

As in the case of treatment selection, subpopulation selection introduces selection bias because the most promising subpopulation is selected to be tested in stage 2. Methods for hypothesis testing in two‐stage adaptive seamless trials with subpopulation selection that control type I error rate have been developed 5, 23, 26. Some of these methods were initially developed for hypothesis testing following treatment selection. It has been possible to test hypotheses after subpopulation selection using some hypothesis testing methods developed for treatment selection because these methods are not fully parametric. For example, Brannath et al. 23 have shown that the method described in 5, 8 can be used for hypothesis testing in the case of subpopulation selection. Estimation after adaptive seamless trials with subpopulation selection has not been considered. However, for confidence intervals, it is possible to use the duality between hypothesis testing and confidence intervals as described for the case of treatment selection in 7, 18, 19. For point estimation, the methods proposed for treatment selection 11, 12, 13, 14, 15 are based on explicit distributions and so their extension for use in subpopulation selection testing is not straightforward.

In this paper, we will consider point estimation after two‐stage ASDs where stage 1 data are used to perform subpopulation selection. Spiessens and Debois 25 have described the possible scenarios for subgroup analysis based on how the subpopulations are nested within each other and about which subpopulations the investigators want to draw inference. We will consider the scenario where the effect is considered in the full population and in a single subpopulation. This scenario seems to be of most practical importance having been considered in methodological work related to actual trial designs 23, 26. In the discussion, we will describe how the estimators we develop can be extended to some of the other scenarios in 25.

We organise the rest of the paper as follows. In Section 2, we first describe the setting of interest while introducing notation and then define the naive estimator, which ignores subpopulation selection before deriving a conditionally unbiased estimator. Section 3 gives an example that is used to demonstrate how to compute the naive and unbiased estimators and compare the two estimators for specific cases. We assess the mean squared error of the unbiased estimator in relation to the naive estimator in Section 4. The findings in the paper are discussed in Section 5.

2. Estimation in adaptive seamless designs for subpopulation selection

2.1. Setting and notation

As described in Section 1, we will consider an ASD in which a control is compared with an experimental treatment in a population of patients that consists of a subpopulation that may benefit from the experimental treatment more than the full population. In stage 1, patients are recruited from the full population but it is expected that a subpopulation may benefit more so that the focus at the end of the trial may be in the subpopulation only. Figure 1 shows how the patients in stage 1 are partitioned. The subpopulation, defined by some characteristics such as a biomarker and which we refer to as S, is part of the full population. We refer to the full population as F and the part of F that is not part of S as S c. We assume S comprises a proportion p S of F. At first, we focus on the case of known p S before considering the case of unknown p S in Section 2.4. We will use subscripts S, S c and F to indicate notation that corresponds to populations S, S c and F, respectively. The patients are randomised to the control treatment and the experimental treatment. We assume randomisation is stratified such that in each of S and S c, the number of patients randomised to the control is equal to the number of patients randomised to the experimental treatment. Based on stage 1 data, the trial continues to stage 2 either with F or with S.

Figure 1.

Partitioning of the full population.

We assume outcomes from patients are normally distributed with unknown means and known common variance σ 2. We are interested in the unknown treatment difference between means for the control and the experimental treatment. Table 1 shows the key notation that we will use in this paper. We denote the unknown true treatment differences in S and S c by θ S and , respectively. We denote stage 1 sample mean differences for S and S c by X and Y, respectively and the stage 1 sample mean difference for F by Z, which can be expressed by , where . We assume that a total of n 1 patients are recruited in stage 1 so that S X=p S n 1 patients are from S with S X/2 randomly allocated each to the control and the experimental treatment. The remaining (n 1−S X) patients are from S c with (n 1−S X)/2 randomly allocated each to the control and the experimental treatment. Note that , where and , where .

Table 1.

Summary of notation.

| Stage 1 | Stage 2 | Stages 1 and 2 | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Selected | Sub‐ | True parameter | Sample | Variance of | Sample | Variance of | Naive | Sufficient | Unbiased | ||||||

| population | population | value | mean | sample mean | mean | sample mean | estimator | statistic | estimator | ||||||

| S | S | θ S | X |

|

U |

|

D S,N | Z S | D S,U | ||||||

| S c |

|

Y |

|

— | — | — | — | — | |||||||

| F | S | θ S | X |

|

V |

|

|

|

|

||||||

| S c |

|

Y |

|

W |

|

|

|

|

|||||||

| S + S c | θ F | Z | — | — | — | D F,N | — | D F,U | |||||||

The observed values for X, Y and Z are denoted by x, y and z, respectively. The trial continues to stage 2 with S if x > (z + b), which is equivalent to x > y + b/(1 − p S), where b is a number chosen such that the trial continues with S if the effect of the new treatment is sufficiently larger in S than in F. The trial continues to stage 2 with F if , which is equivalent to . In stage 2, a total of n 2 patients are recruited. If S is selected, all the n 2 patients will be from S with n 2/2 patients randomly allocated each to the control and the experimental treatment. If F is selected, S V=p S n 2 patients will be from S and (n 2−S V) patients will be from S c.

If S is selected to continue to stage 2, the objective is to estimate θ S while if F is selected to continue to stage 2, the objective is to estimate . Therefore, the parameter of interest at the end of the two‐stage trial, which we denote by θ, is random and is defined by

| (1) |

We will consider two estimators for θ, namely, the naive and the unbiased estimators. As shown in Table 1, when S is selected, we denote the naive estimator for θ S by D S,N. When F is selected, we denote the naive estimators for θ S, and θ F by , and D F,N, respectively. We define the naive estimator for θ as

| (2) |

We give the expressions for the naive estimators D S,N, , and D F,N and derive their bias functions in Section 2.2. In the following, we derive uniformly minimum variance unbiased estimators (UMVUEs) for θ S and . As indicated in Table 1, when S is selected, we denote the UMVUE for θ S by D S,U. When F is selected, we denote the UMVUEs for θ S and by and , respectively. Note that is an unbiased estimator for θ F. We define the unbiased estimator for θ as

| (3) |

We derive the expressions for UMVUEs D S,U, and in Section 2.3.

We will compare the naive (D N) and the unbiased (D U) estimators for θ by evaluating the bias for D N and the mean squared errors (MSEs) for D N and D U. We will evaluate biases and MSEs conditional on the selection made and so for the naive estimator, we will derive expressions for biases for D S,N and D F,N separately. Similarly, the MSEs for D N and D U will be evaluated conditional on the selection made. Note that if an estimator is unbiased conditional on selection, it is also unconditionally unbiased.

2.2. The naive estimator

In this section, we describe the naive estimator for θ defined by equation (2) and derive simple expressions for its bias function. When S is selected, a possible naive estimator for θ S, which we denote by D S,N in expression (2), is the two‐stage sample mean given by

| (4) |

where U denotes the stage 2 sample mean for patients in S and t S=S X/(S X+n 2) is the proportion of patients in S who are in stage 1. The expected value for D S,N can be expressed as E(D S,N) = t S E(X|X > Y *) + (1 − t S)θ S, where Y *=Y + b/(1 − p S) so that the bias for D S,N is given by

| (5) |

where denotes the indicator function for X > Y *. Following the last expression in Appendix C.1 in 15, Pr(X > Y *) can be expressed as follows

| (6) |

where σ X and σ Y are as defined in Section 2.1, and φ and Φ denote the density and distribution functions of the standard normal, respectively. Also, following Appendix C.1 in 15, can be expressed as

| (7) |

The expressions for Pr(X > Y *)and are substituted in expression (5) to obtain the bias function for D S,N.

If F is selected to continue to stage 2, we are seeking an estimator for θ F. Let and denote the proportion of patients recruited in stage 1 from S and S c, respectively, and as indicated in Table 1, let V and W denote the stage 2 sample means for S and S c, respectively. If F is selected to continue to stage 2, possible naive estimators for θ S and , which we denote by and , respectively in Section 2.1, are the two‐stage sample means and . Consequently, a naive estimator for θ F, which we denote by D F,N in expression (2), could be

| (8) |

The bias for D F,N can be expressed as

| (9) |

where and are given by

| (10) |

where denotes the indicator function for . As for the expressions for Pr(X > Y *) and , and in the earlier expressions can be respectively expressed as

where and

For the case we consider here where the population has two partitions, a simple expression for is . Appendix C.2 in 15 has expressions with a single integral that can be modified when the partitioning of the population is more complex.

The aforementioned expressions for , and are used to obtain the bias functions for , and D F,N. We will use the bias functions for D S,N, , and D F,N that we have derived in this section to show the extent of the bias for the naive estimator in Section 4.1, which necessitates the need for an unbiased estimator for θ such as the one we derive in the following section.

2.3. Conditionally unbiased estimator for θ when the prevalence of the subpopulation is known

In this section, we derive an estimator for θ that is unbiased conditional on the selection made. To do this, we need the densities of the stage 2 means. The notation for the variances for the stage 2 sample means is given in Table 1. If S is selected to continue to stage 2, U is normally distributed with variance . If F is selected to continue to stage 2, V and W are normally distributed with variances and , respectively. Also, to derive the unbiased estimators, we need sufficient statistics and these will be vectors that include the weighted sums of stages 1 and 2 means. The notation for the weighted means for the two alternative choices of population is given in the second last column in Table 1.

To obtain the unbiased estimator, we use the Rao–Blackwell theorem (for example, 29). This states that, to obtain the UMVUE for a parameter, one identifies an unbiased estimator for the parameter of interest and then derives its expectation conditional on a complete and sufficient statistic. Let Q S denote the event X > Y + b/(1 − p S). Conditional on Q S, U is an unbiased estimator for θ S so that if we can identify a sufficient and complete statistic for estimating θ S, we can use the Rao–Blackwell theorem to derive the UMVUE for θ S. Define Z S=(τ U/σ X)X + (σ X/τ U)U. We describe in Appendix A that conditional on Q S, (Y,Z S) is the sufficient and complete statistic for θ S and that the UMVUE for θ S, E[U|Y,Z S,Q S], which we denote by D S,U in Section 2.1, is given by

| (11) |

where, after substituting p S with S X/n 1 in the expression for f U(x,y) given in Appendix A,

We have substituted p S with S X/n 1 in the expression for f U(X,Y) and also in the expressions for f V(X,Y) and f W(X,Y) defined in the following so that estimators in this section and corresponding estimators in Section 2.4 have the same expressions.

Let Q F denote the event . Conditional on Q F, V and W are unbiased estimators for θ S and , respectively so that if appropriate sufficient and complete statistics for θ S and can be identified, the UMVUEs for θ S and can be obtained using the Rao–Blackwell theorem. Define and . We show in Appendix B that conditional on Q F, and are sufficient and complete statistics for θ S and , respectively and that the UMVUE for θ S, , which we denote by in Section 2.1, is given by

| (12) |

where, after substituting p S with S X/n 1 in the expression for f V(x,y) given in Appendix B,

and that the UMVUE for , which we denote by in Section 2.1, is given by

| (13) |

where, after substituting p S with S X/n 1 in the expression for f W(x,y) given in Appendix B,

Consequently, an unbiased estimator for θ F is , where and are given by expressions (12) and (13), respectively.

2.4. Conditionally unbiased estimator for θ when the prevalence of the subpopulation is unknown

In the previous sections, we have assumed that p S, the true proportion of patients in S, is known. In some instances, this may not be a reasonable assumption. In this section, we derive conditionally unbiased estimator for θ when p S is unknown. Unlike in Sections 2.2 and 2.3, for this case, S X and S V are random. We will assume S X, the number of patients from S in stage 1, is Binomial(n 1,p S) so that consequently and are now random. Define , where s X is the observed value for S X and . We assume that the trial continues to stage 2 with S if x > (z *+b), which is equivalent to and with F if , which is equivalent to . Note that when S is selected, if we derive an estimator for θ S that is unbiased conditional on S X=s X, then the estimator is unconditionally unbiased. We show in Appendix C that the UMVUE for θ S when S X is random is given by expression (11).

For the case where F is selected, we assume S V, the number of patients in S in stage 2, is Binomial(n 2,p S) so that consequently and are now random. We show in Appendix D that the UMVUEs for θ S and are given by expressions (12) and (13), respectively. Let

| (14) |

As

and similarly , is an unbiased estimator for θ F.

3. Worked example

In this section, we use an example to demonstrate how the various estimates described in Sections 2.2 and 2.3 are computed and how they compare. Computation of most estimates described in Section 2.4 would be similar to the computation of estimates in Section 2.3. Several trials for Alzheimer's disease (AD) consider continuous outcomes. In some AD trials, the primary outcome is continuous 30 so that our methodology can be used. Also, subgroup analysis has been considered in AD trials 20. Therefore, to construct the example, we use the AD trial reported in 31. This trial recruited patients with moderate or severe AD, with subgroup analysis performed later for patients with severe AD 20. We take the full population to consist of the patients with moderate or severe AD and the subpopulation to be the patients with severe AD that are thought to potentially benefit more from the new treatment. The primary outcome in 31 is not continuous and so for our example, we imagine that the primary outcome is Severe Impairment Battery (SIB) score, a 51‐item scale with scores ranging from 0 to 100. This was a secondary outcome in the original trial. For the AD trial in 31, the observed mean differences in SIB scores for patients with severe AD and the full population are 7.42 20 and 5.62 31, respectively. Based on these values, the observed mean difference for patients with moderate AD is approximately 3.82. Using the results for the severe AD patients, we will assume σ = 13.2. The AD trial 20 is single stage with approximately 290 patients. In the examples constructed here, we will assume a two‐stage ASD with n 1=n 2=200.

Using the definitions of Section 2.1, patients with severe AD form subpopulation S. Therefore, we denote the proportion and the true mean difference in SIB scores for patients with severe AD by p S and θ S, respectively. In stage 1, the observed mean difference in SIB scores for patients with severe AD is denoted by x, and in stage 2, the observed mean difference in SIB scores for patients with severe AD is denoted by u if testing is only conducted for patients with severe AD and by v if the full population is tested. Also, from the definitions in Section 2.1, patients with moderate AD would form S c so that we denote the proportion and the true mean difference in SIB scores for patients with moderate AD by and , respectively. In stage 1, the observed mean difference in SIB scores for patients with moderate AD is denoted by y, and in stage 2, if the full population is tested, we denote the observed mean difference in SIB scores for patients with moderate AD by w.

The proportion of patients with severe AD in 31 is approximately 0.5 so that for the example we take . Because n 1=n 2=200 and p S=0.5 so that S X=p S n 1=100 and S V=p S n 2=100, using the definitions of Section 2.2, t S=S X/(S X+n 2) = 1/3, and . We assume the trial continues with S if the effect for patients with severe AD is greater than the effect for all patients so that b = 0 and b/(1 − p S) = 0. To compute the various unbiased estimates, we need and . If stage 2 data are only available for patients with severe AD, to compute an unbiased estimate for θ S, we need . If the full population is tested in stage 2, to obtain the unbiased estimates for θ S and , we need and .

We will compute estimates for four scenarios. In the first two scenarios, S (patients with severe AD) is selected to continue to stage 2. In both scenarios, we suppose that u = 7.42. For Scenario 1, we suppose x = 6.5 and y = 5.6 and so the naive estimate for the mean difference for patients with severe AD, d S,N=t S x + (1 − t S)u = 7.11. For the unbiased estimate, we use equation (11), with the unbiased estimate . The values for and have been evaluated earlier and so that d S,U=6.67. In the second scenario, we suppose x = 6.5 and y = 3.8 and using similar computation, d S,N=7.11 and d S,U=6.97. The naive estimates for Scenarios 1 and 2 are equal while the unbiased estimates are not equal, with the unbiased estimate for Scenario 2 closer to the naive estimate. Scenarios 1 and 2 differ in the values for y only and this is why the naive estimates are equal because, conditional on selecting S, the naive estimates depend on x and u only. However, the unbiased estimates depend on y, and as can be deduced from the expression for f V(x,y), acquire further from the naive estimate as the difference between the naive estimate and y decreases. This is reasonable because when data suggest that treatment effects for patients with moderate and severe AD are similar, selection bias is likely to be high. Same naive estimates and different unbiased estimates for Scenarios 1 and 2 may indicate more variability for the unbiased estimator for θ S (D S,U) developed in Section 2.3 compared with the naive estimator D S,N.

The other two scenarios are for the case where the full population is tested in stage 2 and in both scenarios, we suppose that v = 7.42 and w = 3.48. For the third scenario, we suppose that x = 5.4 and y = 6.0 so that the naive estimates for θ S, and θ F are , and , respectively. Using equations (12) and (13), the unbiased estimates for θ S and are given by and , respectively, where and . Consequently, , and the unbiased estimate for θ F, . The corresponding naive and unbiased estimates are not equal. In the fourth scenario, we suppose x = 5.7 and y = 5.7 and using similar formulae as in Scenario 3, , , d F,N=5.66, , , d F,U=5.63. In both scenarios, the naive estimates for θ F are equal. The unbiased estimates for θ F in Scenarios 3 and 4 are also equal. However, the corresponding naive estimates for θ S and in Scenarios 3 and 4 are different. The corresponding unbiased estimates for θ S and in Scenarios 3 and 4 are also different. For both θ S and , the difference between the naive estimates in Scenarios 3 and 4 is greater than the difference between the unbiased estimates in Scenarios 3 and 4. This is because the unbiased estimators for θ S and are functions of all stage 1 data while the naive estimators for θ S and only use data from populations S and S c, respectively. The larger differences between the unbiased estimates in Scenarios 3 and 4 than the differences between the naive estimates, may indicate more variability for the unbiased estimator for θ F (D F,U) developed in Section 2.3 than the naive estimator D F,N. In Scenario 4, compared with Scenario 3, and are further from and , respectively. This is reasonable because although in both scenarios is smaller than so that the observed data suggest a correct decision for both scenarios would be to continue to stage 2 with patients with severe AD only, in Scenario 4, is much smaller than providing more evidence that the correct decision would have been to continue with patients with severe AD only, and hence more adjustments to the naive estimates are required.

4. Comparison of the estimators

In this section, we assess the bias of the naive estimator and use a simulation study to compare the mean squared errors for the naive and unbiased estimators for several scenarios.

4.1. Characteristics of the calculated bias for the naive estimator

From the bias functions derived in Section 2.2, we note that the bias for the naive estimator depends on θ S, and p S and so we will vary the values of these parameters. The bias also depends on t F=n 1/(n 1+n 2) but we will only present results for the scenario where n 1=n 2=200 so that t F=0.5. From the expressions for biases, one can demonstrate that biases increase as one makes selection later in the trial, that is, as t F increases. The other parameters that bias depends on are b, σ 2 and n 1+n 2. In this section, we will take σ 2=1. For a given value of t F, to make the results approximately invariant of σ 2 and n 1+n 2, we will divide D S,N by , which is the approximate standard error (SE) for D S,N and we will divide estimators , and D F,N by , which is the approximate SE for D F,N. We will comment how b influences bias after describing results in Figure 2 for which b = 0. The top row in Figure 2 explores the bias for the naive estimator and how the naive estimators for treatment effects in S and S c contribute to the bias. From left to right, the plots correspond to p S=0.3, p S=0.5 and p S=0.7. The y‐axes give the biases. The x‐axes correspond to different values for θ S and in all plots, . We have taken a fixed value for because bias depends on θ S and only through . This can be observed by noting that if we add some value δ to θ S and , the expressions for bias in Section 2.2 change by having t − δ − θ S, , and in place of t − θ S, , and , respectively. If we let r = t − δ and integrate with respect to r and use subscript δ for the new expressions used to obtain bias, these can be expressed as Prδ(X > Y *) = Pr(X > Y *), , and . Substituting the new expressions in equations (5) and (10), we obtain the same forms for bias and hence the same bias when δ is added to both θ S and .

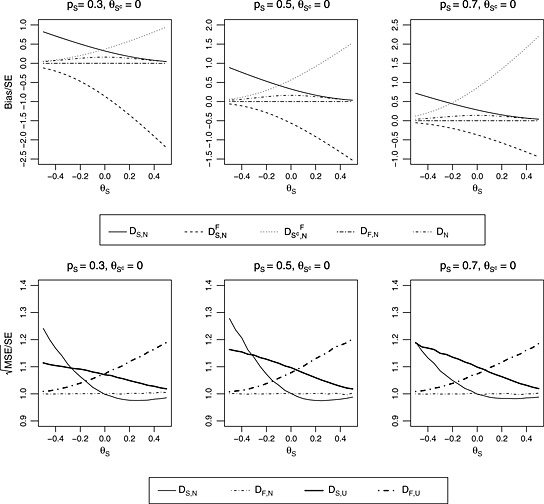

Figure 2.

Plots showing bias (top row) and mean squared error (bottom row) for the case where n 1=n 2=200, σ 2=1 and . The x‐axes correspond to the values for θ S. Each column corresponds to a different value for p S. MSE, mean squared error; SE, standard error.

In Figure 2, the legends at the bottom of the plots describe the line types for each estimator. In the first legend, the continuous lines (—) correspond to the case where S is selected to continue to stage 2 and hence gives the bias for D S,N as an estimator for θ S. The bias for D S,N decreases as increases. This is reasonable because as θ S becomes larger than , Pr(X > Y *) approaches 1 so that the density of X conditional on X > Y * approaches the unconditional density of X and consequently the bias for D S,N approaches zero. The decrease of bias for D S,N can also be explained by the expressions for Pr(X > Y *) and that are given by equations (6) and (7), respectively. As θ S becomes larger than , the density for the sample mean difference in S becomes stochastically larger than the density for the sample mean difference in S c and consequently, for the values of t, where the term with φ in Pr(X > Y *) and is non‐zero, the term with Φ approaches 1. Hence, Pr(X > Y *) and approach one and E(X), respectively so that the bias approaches zero. The dotted lines (···) show the bias for , the naive estimator for when F is selected. The bias is positive and increases as increases. The dashed lines (‐ ‐ ‐) show the bias for , the naive estimator for θ S when F is selected. The bias is negative and increases as increases. The explanation for the behaviours of the biases for and is similar to the explanation for the behaviour of the bias for D S,N. When p S=0.5 (middle panel), except for the sign, the bias for is equal to the bias for . For the other values for p S (other panels in Figure 2), except for the sign, we note that multiplied by p S is equal to multiplied by . Therefore, if F is selected, although the naive components and are biased, as can be seen from the short and long dashed lines (– ‐ – ‐ –), the naive estimator D F,N is unbiased. The dashed and dotted line (·−·−·) shows the bias for D N, the naive estimator for θ. The bias is maximal when . Based on results not presented here, for b ≠ 0, the lines in Figure 2 shift by b/(1 − p S) so that bias is maximal when . Noting that the selection is based on max{X,Y + b/(1 − p S)}, the proof that the bias is maximal when is given by Carreras and Brannath 32.

From the aforementioned assessment of the bias for the naive estimator, we note that, when S is selected, the bias for the naive estimator for θ S is substantial. If F is selected, the naive estimator for θ F is unbiased. However, the naive estimators for θ S and are substantially biased. It is our view that we need unbiased estimators for θ S and such as those developed in Section 2.3 when F is selected because we believe investigators would still want to learn about θ S and .

4.2. Simulation of mean squared errors

In this section, we perform a simulation study to compare the MSEs for the naive and unbiased estimators. In Section 4.1, for the case where F is selected, as well as exploring the bias for the naive estimator for θ F, we have also explored the bias for the naive estimators for θ S and , which are the components for θ F. In this section, we will only focus on the estimators that will be used for inference after stage 2. Hence, when F is selected, we will only compare MSEs for the naive and unbiased estimators for θ F, and when S is selected, we will only compare MSEs for the naive and unbiased estimators for θ S. For each combination of θ S, , t F and p S, we run 1,000,000 simulation runs. As in Section 4.1, we will only present the MSE results for the case where b = 0. For simulations with b = 0, in each simulation run, we simulate stage 1 data (x and y) and if x > y in which case S would be selected to continue to stage 2, we simulate u and if in which case F would be selected to continue to stage 2, we simulate v and w.

The bottom row in Figure 2 gives the square root of the MSEs divided by approximate SE. As indicated earlier, these plots are for the cases where b = 0. Based on results not presented here, for b ≠ 0, the lines in Figure 2 shift by b/(1 − p S). For both the naive and unbiased estimators, we take when S is selected to continue to stage 2 and when F is selected to continue to stage 2. From left to right, p S=0.3, p S=0.5 and p S=0.7, respectively. The second legend at the bottom of the plots describes the line types for each estimator. The continuous lines (—) correspond to D S,N, the naive estimator for θ S when S is selected to continue to stage 2. The MSE for D S,N decreases as increases and varies with the values for p S but not monotonically. The thick continuous lines (—) correspond to D S,U, the UMVUE for θ S when S is selected to continue to stage 2. The MSE for D S,U decreases as increases and seems to increase as the values for p S increase. For most scenarios, the MSE for D S,U is larger than the MSE for D S,N. As for bias, the dashed and dotted lines (·−·−·) correspond to D F,N, the naive estimator for θ F when F is selected to continue to stage 2 and for all scenarios, the is approximately 1. The thick dashed and dotted lines (‐ · ‐·‐) correspond to D F,U, the unbiased estimator for θ F when F is selected to continue to stage 2. The MSE for D F,U increases with and p S.

For the case where S is selected to continue to stage 2, comparing the biases and MSEs for D S,N and D S,U, we would recommend using D S,U. This is because although for most scenarios in Figure 2, the MSE for D S,U is greater than the MSE for D S,N, the gain achieved by D S,U being an unbiased estimator outweighs the loss of precision by using D S,U. For example, from the results in the top left and bottom left plots, when θ S=0, (Bias(D S,N))/S E is 0.32 while is 0.07 less than so that D S,U removes substantial bias at the expense of a slight loss of precision around the true treatment effect. Similar results are observed in the other plots. For the case where F is selected to continue to stage 2, from the results in Figure 2, D F,N seems a better estimator for θ F than the estimator D F,U because both are mean unbiased but D F,N has smaller MSE.

The summary findings from the simulation study is that bias for the naive estimators can be substantial but the naive estimators have lower MSEs than the unbiased estimators we derived in Section 2.3. Balancing between the gain of having an unbiased estimator and the loss of precision, when S is selected, we recommend using the unbiased estimator for θ S given by expression (11). When F is selected, both the naive estimator D F,N and the unbiased estimator D F,U are mean unbiased but D F,N has better precision than D F,U and so for the case when F is selected, we recommend using the naive estimator for θ F (D F,N) given by expression (8).

4.3. Properties of the estimators when the prevalence of the subpopulation is unknown

The results in Sections 4.1 and 4.2 are for the case of known p S. In this section, we assess the performance of the various estimators when p S is unknown. To do this, we use the true value p S to simulate the number of patients in S in stage 1 (s X) as Binomial(n 1,p S) and calculate . After simulating s X, we then simulate stage 1 sample mean differences x and y for populations S and S c, respectively. As in Sections 4.1 and 4.2, we only present results for the case where b = 0. For this case, because , we select S if x > y and select F if . If S is selected, we simulate stage 2 sample mean difference u from a sample consisting of n 2/2 patients in each of the control and experimental arms. The naive estimate for θ S is d S,N=(s X x + n 2 u)/(s X+n 2). The unbiased estimate for θ S, d S,U, is obtained using expression (11). If F is selected, we use the true value p S to simulate the number of patients in S in stage 2 (s V) as Binomial(n 2,p S). For each of S and S c, we assume the number of patients are equally allocated to the control and the experimental treatments. Based on s V patients from S and (n 2−s V) patients from S c, we simulate stage 2 sample mean differences v and w for S and S c, respectively. Let , we compute the naive estimates for θ S, and θ F as , and , respectively. Note that when p S is known so that the estimator can be reasonably compared with the estimator D F,N given by expression (8). The unbiased estimates for and are obtained using expressions (12) and (13), respectively. The unbiased estimate for θ F is calculated as .

Figure 3 gives the simulation results for the configurations considered in Figure 2. The form of the SEs used in Figure 3 are the same as those used in Figure 2. We have not presented bias plots because the estimators obtained assuming that p S is known, have very similar biases to the estimators obtained assuming that p S is unknown. When S is selected, for the naive estimator for θ S, there is no noticeable difference in MSEs between the case when p S is assumed known and the case when p S is assumed unknown so that S X is random. Similar results are observed for D S,U, the unbiased estimator for θ S when S is selected. For the case where F is selected, for both the naive estimator and the unbiased estimator for θ F, the MSEs for the case where p S is assumed known and the case where p s is estimated are approximately equal when . When , the MSEs for and for the case where p S is estimated are slightly higher than MSEs for D F,N and D F,U, respectively, which are the estimators for the case where p S is assumed known. Based on results not presented here, when b ≠ 0 so that we select S if and select F if , as in the case where p s is assumed known, we noted that the lines in Figure 3 shift by b/(1 − p S).

Figure 3.

Mean squared error for the case where n 1=n 2=200, σ 2=1 and . The x‐axes correspond to the values for θ S. Each column corresponds to a different value for p S. MSE, mean squared error; SE, standard error.

To summarise, the results obtained when p S is estimated are very similar to results when p S is assumed known. The biases for the different estimators for θ S and θ F are almost identical and MSEs are only slightly higher. The reason that the increases in MSEs are not substantial for the case when p S is estimated may be as a result of adequate sample size in stage 1 and hence good precision for the estimator for p S. An estimator for p S with good precision would not add a great deal of variability to the estimators for θ S and θ F. Thus, if stage 1 data are adequate to estimate p S, the estimators developed in this paper perform almost as good as when p S is known.

5. Discussion

In order to make testing of new interventions more efficient, ASDs have been proposed. Such designs have been used for trials with subpopulation selection. This is the case we consider in this paper. Specifically, we have considered a design that has two stages, with data collected from stage 1 used to select the population to test in stage 2. In stage 2, additional data for a sample drawn from the selected population are collected. The final confirmatory analysis uses data from both stages. Statistical methods that have previously been developed to adjust for selection bias that arise from using stage 1 data have addressed hypothesis testing without inflating type I error. In this work, we have focussed on point estimation. We have derived formulae for obtaining unbiased point estimators. We have derived the formulae for the case where the prevalence of the subpopulation is considered known and also for the case where the prevalence of the subpopulation is unknown. To acquire unbiased estimators when the prevalence of the subpopulation is unknown, we have derived formulae for unbiased estimators when the proportion of patients from the subpopulation does not have to be equal to the prevalence of the subpopulation. This means that the estimators we have derived can be used to obtain unbiased estimates for trials that use enrichment designs, where proportion of the subpopulation in the trial is not equal to the prevalence of the subpopulation. The rest of this discussion focusses on the case where the prevalence of the subpopulation is assumed known but most points also hold for the case where the prevalence is unknown.

The unbiased estimators we have developed have higher MSEs compared with the naive estimators. Balancing between unbiasedness and precision, when the subpopulation is selected, we recommend using the unbiased estimator we have derived and when the full population is selected, we recommend using the naive estimator. The unbiased estimator for θ F that we derived conditional on continuing to stage 2 with the full population, although based on UMVUEs for θ S and , may not be a UMVUE among estimators for θ F that are functions of unbiased estimators for θ S and and so more research is required to check whether it is an UMVUE and if not, seek an UMVUE.

The estimators we have developed in this paper are unbiased conditional on the selection made. For the case where the full population is selected, we have derived separate unbiased estimators for the treatment effects for the subpopulation and its complement. These estimators are unbiased only if we do not make a selection after stage 2. That is, for the case where the full population continues to stage 2, if we use the observed separate estimates to make a claim that the treatment effect is larger in the subpopulation or in the full population, then the estimators developed in this paper are no longer unbiased. In this case, the same data are used both for selection and estimation, and Stallard et al. 33 have shown that there is no unbiased estimator.

We have considered the case whether the selection rule is pre‐defined and based on the efficacy outcome. In terms of estimation, a pre‐defined selection rule makes it possible to derive point estimators and evaluate their biases because bias is an expectation, and it is not clear what all possible outcomes are when the selection rule is not pre‐defined. The Food and Drug Administration draft guidance also acknowledges the difficulty in interpreting trial results when adaptation is not pre‐defined 34. A compromise between a pre‐defined selection rule used in this paper and a setting where the selection rule is not pre‐defined is a pre‐defined selection rule that includes additional aspects such as safety. More work is required to develop point estimators for such settings.

The unbiased estimators we have developed are for the case where the subpopulations are pre‐specified and they cannot be assumed to be unbiased in trials where subpopulations are not pre‐specified. It is flexible not to pre‐specify subpopulations but it is hard to evaluate bias of point estimators because bias is an expectation, and it is not clear what all possible outcomes are when the subpopulations are not pre‐specified. Hence, it is not possible to quantify the bias of the estimators developed here when the subpopulations are not pre‐specified 35.

Depending on the number of subpopulations and how they are defined, there are several configurations on how the subpopulations can be nested within each other 25. We have focussed on a simple and common configuration where a single subpopulation is thought to benefit more, so that based on stage 1 data, the investigators want to choose between continuing with the full population or the subpopulation. By noting that to obtain unbiased estimators we have partitioned the full population into distinct parts, the formulae we have developed for this configuration can be extended to other configurations. If the full population is not of interest and the other subpopulations are not nested with each other, the subpopulations already form distinct parts and the formulae derived for treatment selection such as in 15 can be used directly. If the full population is of interest or some subpopulations are nested within each other, it is possible to partition the full population into distinct parts and use our methodology to obtain unbiased estimators for the distinct parts. However, following our findings that for some selections the naive estimator is unbiased and has better precision than an estimator that combines unbiased estimators for the distinct parts, in order to make a recommendation on the best estimator for the case where there is nesting of subpopulations, we suggest comparing the characteristics of the naive estimators to the estimator that combines unbiased estimators for the distinct parts in the population.

We have assumed that whether the subpopulation or the full population is selected, the total sample size in stage 2 is fixed. The results also hold for the case where stage 2 sample sizes for continuing with the subpopulation and the full population are different but prefixed for each selection made. The results may not hold when the stage 2 sample size depends on the observed data in some other way.

Finally, if there is a futility rule that requires the trial to continue to stage 2 only if the mean difference for the selected population exceeds some pre‐specified value and as in 15 estimation is conditional on continuing to stage 2, the unbiased estimators developed in Section 2.3 can be extended to account for this. If we denote the pre‐specified futility value by B so that the trial stops if max{x,z}<B, the expression for f U(X,Y) in equation (11) becomes and the expression for f W(X,Y) in equation (13) becomes . The expression given by equation (12) changes to

where f V(X,Y) is as given before, and the expression for is . Note that with a futility rule, the naive estimator for θ F defined in this paper is no longer mean unbiased.

Acknowledgements

The authors thank the associate editor and two anonymous reviewers for comments that greatly improved the paper. Nigel Stallard and Susan Todd are grateful for funding from UK Medical Research Council (grant number G1001344).

Appendix A. Uniformly minimum variance unbiased estimator for θ S when the subpopulation is selected and p S is known

A.1.

Here, we derive the UMVUE for θ S conditional on selecting S. We will skip several steps that are similar to the steps used in 11, 14, 15. The notation used in 15 is very similar to the notation we have used. Let Q S denote the event X > Y + b/(1 − p S). Conditional on the event Q S, the density f(u,x,y) is given by

where denotes the indicator function for the event Q S and K S(θ) the probability for event Q S given the true parameter vector . The density f(u,x,y) can be re‐expressed to

where

Let z s=(τ U/σ X)x + (σ X/τ U)u; from the preceding density, conditional on Q S, (Y,Z S) is sufficient and complete statistic for estimating θ S. Therefore, because conditional on Q S, U is an unbiased estimator for θ S, the UMVUE for θ S is E[U|Y,Z S,Q S]. To obtain the expression for E[U|Y,Z S,Q S], we derive the density f(u|y,z S,Q S) = f(u,y,z S|Q S)/f(y,z S|Q S).

The aforementioned density can be transformed to obtain the density f(x,y,z S|Q S) given by

and to obtain the density f(u,y,z S|Q S) given by

The density f(y,z S|Q S) is obtained from f(x,y,z S|Q S) by integrating out x as follows

| (A.1) |

where

whered S,Ndenotes the observed value for D S,N. We are seeking f(u|y,z S,Q S), which is given by

| (A.2) |

We want to obtain the expression for E[U|Y,Z S,Q S] and so we derive . Using the standard result that 36 and steps in 15,

so that conditional on continuing to stage 2 with S, expression (11) gives the UMVUE for θ S.

Appendix B. Uniformly minimum variance unbiased estimators for θ S and when the full population is selected and p S is known

B.1.

Here we derive the UMVUEs for θ S and conditional on continuing with F in stage 2. We derive the UMVUEs for θ S and separately. Let Q F denote the event . Conditional on Q F, the density f(v,x,y,w|Q F) is given by

| (B1) |

where denotes the indicator function for the event Q F and K F(θ) the probability for event Q F given the true parameter vector . The aforementioned density can be re‐expressed as

where

Let ; from the preceding density, conditional on Q F, is sufficient and complete statistic for estimating θ S. Therefore, because conditional on Q F, V is an unbiased estimator for θ S, the UMVUE for θ S is . To obtain the expression for , we derive the density .

The aforementioned density can be transformed to obtain the density , which is given by

and the density , which is given by

As in Appendix A, the density is obtained by integrating out x in the as follows

where

where is the observed value for . We are seeking the density

We want to obtain the expression for and so we derive . As in Appendix A, using the standard result that and similar steps as in 15, we can show that

so that expression (12) gives the UMVUE for θ S conditional on continuing with F.

To obtain the unbiased estimator for , we note that the density given by expression (B1) can be re‐expressed as

where

Let ; from the preceding density, conditional on Q F, is sufficient and complete statistic for estimating . Therefore, because conditional on Q F, W is an unbiased estimator for , the UMVUE for is . To obtain the expression for , we derive the density . The aforementioned density can be transformed to obtain the density , which is given by

and to obtain the density , which is given by

The density is obtained by integrating out y as follows

where

where is the observed value for . We are seeking which, following previous derivation, is given by

We are seeking the expression for and so we need to obtain . Obtaining this is similar to obtaining in Appendix A and so

so that expression (13) gives the UMVUE for conditional on continuing with F in stage 2.

Appendix C. Uniformly minimum variance unbiased estimator for θ S when the subpopulation is selected and p S is unknown

C.1.

Here, we derive the UMVUE for θ S when S is selected and p S is unknown. The derivation is similar to that in Appendix A, and so we will skip some steps. Let denote the event that S X=s X and that . Conditional on , the density for f(u,x,y) is given by

where denotes the indicator function for the event , and K S(θ,p S) the probability for event given the true parameter vector (θ,p S). The density f(u,x,y) can be re‐expressed to

where α 1 and α 2 are as defined in Appendix A. Let z s=(τ U/σ X)x + (σ X/τ U)u; from the preceding density, conditional on , (Y,Z S) is sufficient and complete statistic for estimating θ S. Therefore, because conditional on , U is an unbiased estimator for θ S, the UMVUE for θ S is . To obtain the expression for , we derive the density .

The aforementioned density can be transformed to obtain the density given by

and to obtain the density given by

Similar to the integration performed to obtain the density given by expression (A.1), the density is obtained from by integrating out x to obtain

where and d S,N is the observed value for D S,N. We are seeking . Using the property that 1 A ∩ B=1 A 1 B and noting that cannot be non‐zero if and following the steps used to obtain the density given by expression (A.2), then it can be shown that is given by

The aim is to obtain the expression for , which requires derivation of . This can be carried out similar to how is derived in Appendix A, which leads to expression (11) being a UMVUE for θ S when p S is unknown and S is selected to continue to stage 2.

Appendix D. Uniformly minimum variance unbiased estimators for θ S and when the full population is selected and p S is unknown

D.1.

Here we derive the UMVUEs for θ S and conditional on continuing with F in stage 2 for the case where p S is unknown. The derivations are similar to those in Appendix B, and so we will skip several steps. Let denote the event that S X=s X, and S V=s V. Conditional on , the density is given by

| (D1) |

where denotes the indicator function for the event , and K F(θ,p S) the probability for event given the true parameter vector (θ,p S). The aforementioned density can be re‐expressed as

where γ 1, γ 2 and ψ(y,w) are as defined in Appendix B. Let ; from the preceding density, conditional on , is sufficient and complete statistic for estimating θ S. Therefore, because conditional on , V is an unbiased estimator for θ S, the UMVUE for θ S is . To obtain the expression for , we derive the density .

The aforementioned density can be transformed to obtain the density , which is given by

and the density , which is given by

Similar to integration performed to obtain the density given by expression (A.1), the density is obtained by integrating out x in to obtain

where and is the observed value for . We are seeking the density . Using the property that 1 A ∩ B=1 A 1 B and noting that cannot be non‐zero if or and following steps used to obtain the density given by expression (A.2), then it can be shown that is given by

We want to obtain the expression for , which requires derivation of . This can be carried out similar to how is derived in Appendix B, which leads to expression (12) giving a UMVUE for θ S when p S is unknown and F is selected to continue to stage 2.

Combining the derivation for the UMVUE for when p S is known and derivation for the UMVUE for θ S when p S is unknown, one can show that expression (13) is a UMVUE for when p S is unknown and F is selected to continue to stage 2.

Kimani, P. K. , Todd, S. , and Stallard, N. (2015), Estimation after subpopulation selection in adaptive seamless trials. Statist. Med., 34, 2581–2601. doi: 10.1002/sim.6506.

The copyright line for this article was changed on 12 May 2015 after original online publication.

References

- 1. Dunnett CW. A multiple comparison procedure for comparing several treatments with a control. Journal of the American Statistical Association 1955; 50:1096–1121. [Google Scholar]

- 2. Thall PF, Simon R, Ellenberg SS. Two‐stage selection and testing designs for comparative clinical trials. Biometrika 1988; 75:303–310. [Google Scholar]

- 3. Schaid DJ, Wieand S, Therneau TM. Optimal two‐stage screening designs for survival comparison. Biometrika 1990; 77:507–513. [Google Scholar]

- 4. Bauer P, Kieser M. Combining different phases in the development of medical treatments within a single trial. Statistics in Medicine 1999; 18:1833–1848. [DOI] [PubMed] [Google Scholar]

- 5. Hommel G. Adaptive modifications of hypotheses after an interim analysis. Biometrical Journal 2001; 43:581–589. [Google Scholar]

- 6. Stallard N, Todd S. Sequential designs for phase iii clinical trials incorporating treatment selection. Statistics in Medicine 2003; 22:689–703. [DOI] [PubMed] [Google Scholar]

- 7. Posch M, Koenig F, Branson M, Brannath W, Dunger‐Baldauf C, Bauer P. Testing and estimation in flexible group sequential designs with adaptive treatment selection. Statistics in Medicine 2005; 24:3697–3714. [DOI] [PubMed] [Google Scholar]

- 8. Bretz F, König F, Racine A, Maurer W. Confirmatory seamless phase ii/iii clinical trials with hypotheses selection at interim: general concepts. Biometrical Journal 2006; 48:623–634. [DOI] [PubMed] [Google Scholar]

- 9. Kimani PK, Stallard N, Hutton JL. Dose selection in seamless phase ii/iii clinical trials based on efficacy and safety. Statistics in Medicine 2009; 28:917–936. [DOI] [PubMed] [Google Scholar]

- 10. Kimani PK, Glimm E, Maurer W, Hutton JL, Stallard N. Practical guidelines for adaptive seamless phase ii/iii clinical trials that use Bayesian methods. Statistics in Medicine 2012; 31:2068–2085. [DOI] [PubMed] [Google Scholar]

- 11. Cohen A, Sackrowitz HB. Two stage conditionally unbiased estimators of the selected mean. Statistics and Probability Letters 1989; 8:273–278. [Google Scholar]

- 12. Shen L. Estimation following selection of the largest of two normal means. Statistics in Medicine 2001; 20:1913–1929. [DOI] [PubMed] [Google Scholar]

- 13. Stallard N, Todd S. Point estimates and confidence regions for sequential trials involving selection. Journal of Statistical Planning and Inference 2005; 135:402–419. [Google Scholar]

- 14. Bowden J, Glimm E. Unbiased estimation of selected treatment means in two‐stage trials. Biometrical Journal 2008; 50(4): 515–527. [DOI] [PubMed] [Google Scholar]

- 15. Kimani PK, Todd S, Stallard N. Conditionally unbiased estimation in phase ii/iii clinical trials with early stopping for futility. Statistics in Medicine 2013; 32(17): 2893–2910. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16. Wu SS, Wang W, Yang MCK. Interval estimation for drop‐the‐losers design. Biometrika 2010; 97(2): 405–418. [Google Scholar]

- 17. Neal D, Casella G, Yang MCK, Wu SS. Interval estimation in two‐stage drop‐the‐losers clinical trials with flexible treatment selection. Statistics in Medicine 2011; 30:2804–2814. [DOI] [PubMed] [Google Scholar]

- 18. Magirr D, Jaki T, Posch M, Klinglmueller F. Simultaneous confidence intervals that are compatible with closed testing in adaptive designs. Biometrika 2013; 100:985–996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Kimani PK, Todd S, Stallard N. A comparison of methods for constructing confidence intervals after phase ii/iii clinical trials. Biometrical Journal 2014; 56(1): 107–128. [DOI] [PubMed] [Google Scholar]

- 20. Feldman H, Gauthier S, Hecker J, Vellas B, Xu Y, Ieni JR, Schwam EM, DONEPEZIL MSAD STUDY INVESTIGATORS GROUP. Efficacy and safety of donepezil in patients with more severe Alzheimer's disease: a subgroup analysis from a randomized, placebo‐controlled trial.. International Journal of Geriatric Psychiatry 2005; 20(6): 559–569. [DOI] [PubMed] [Google Scholar]

- 21. Wheeler M, De‐Herdt V, Vonck K, Gilbert K, Manem S, Mackenzie T, Jobst B, Roberts D, Williamson P, Van‐Roost D, Boon P, Thadani V. Efficacy of vagus nerve stimulation for refractory epilepsy among patient subgroups: a re‐analysis using the engel classification. Seizure 2011; 20:331–335. [DOI] [PubMed] [Google Scholar]

- 22. Amado RG, Wolf M, Peeters M, Van‐Cutsem E, Siena S, Freeman DJ, Juan T, Sikorski R, Suggs S, Radinsky R, Patterson SD, Chang DD. Wild‐type kras is required for panitumumab efficacy in patients with metastatic colorectal cancer. Journal of Clinical Oncology 2008; 26(10): 1626–1634. [DOI] [PubMed] [Google Scholar]

- 23. Brannath W, Zuber E, Branson M, Bretz F, Gallo P, Posch M, Racine‐Poon A. Confirmatory adaptive designs with bayesian decision tools for a targeted therapy in oncology. Statistics in Medicine 2009; 28:1445–1463. [DOI] [PubMed] [Google Scholar]

- 24. Mäkelä KT, Eskelinen A, Paavolainen P, Pulkkinen P, Remes V. Cementless total hip arthroplasty for primary osteoarthritis in patients aged 55years and older. Acta Orthopaedica 2010; 81(1): 42–52. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Spiessens B, Debois M. Adjusted significance levels for subgroup analyses in clinical trials. Contemporary Clinical Trials 2010; 31:647–656. [DOI] [PubMed] [Google Scholar]

- 26. Jenkins M, Stone A, Jennison C. An adaptive seamless phase ii/iii design for oncology trials with subpopulation selection using correlated survival endpoints. Pharmaceutical Statistics 2011; 10:1356–1364. [DOI] [PubMed] [Google Scholar]

- 27. Friede T, Parsons N, Stallard N. A conditional error function approach for subgroup selection in adaptive clinical trials. Statistics in Medicine 2012; 31:4309–4320. [DOI] [PubMed] [Google Scholar]

- 28. Stallard N, Hamborg T, Parsons N, Friede T. Adaptive designs for confirmatory clinical trials with subgroup selection. Journal of Biopharmaceutical Statistics 2014; 24:168–187. [DOI] [PubMed] [Google Scholar]

- 29. Lindgren BW. Statistical Theory 3rd edn. Collier Macmillan International Editions, London, 1976. [Google Scholar]

- 30. Wilkinson D, Murray J. Galantamine: a randomized, double‐blind, dose comparison in patients with Alzheimer's disease. International Journal of Geriatric Psychiatry 2001; 16:852–857. [DOI] [PubMed] [Google Scholar]

- 31. Feldman H, Gauthier S, Hecker J, Vellas B, Subbiah P, Schwam EM, DONEPEZIL MSAD STUDY INVESTIGATORS GROUP. Efficacy and safety of donepezil in patients with more severe Alzheimer's disease: a subgroup analysis from a randomized, placebo‐controlled trial. International Journal of Geriatric Psychiatry 2001; 57:613–620. [DOI] [PubMed] [Google Scholar]

- 32. Carreras M, Brannath W. Shrinkage estimation in two‐stage adaptive designs with mid‐trial treatment selection. Statistics in Medicine 2013; 32(10): 1677–1690. [DOI] [PubMed] [Google Scholar]

- 33. Stallard N, Todd S, Whitehead J. Estimation following selection of the largest of two normal means. Journal of Statistical Planning and Inference 2008; 138:1629–1638. [Google Scholar]

- 34. Food and drug administration. Guidance for industry: adaptive design clinical trials for drugs and biologics @ONLINE, 2014. Available from: http://www.fda.gov/downloads/Drugs/GuidanceComplianceRegulatoryInformation/Guidances/ UCM201790.pdf [Accessed on 5 April 2015].

- 35. Bauer P, Koenig F, Brannath W, Posch M. Selection and bias ‐ two hostile brothers. Statistics in Medicine 2010; 29:1–13. [DOI] [PubMed] [Google Scholar]

- 36. Todd S, Whitehead J, Facey KM. Point and interval estimation following a sequential clinical trial. Biometrika 1996; 83(2): 453–461. [Google Scholar]