Abstract

A tuning-free procedure is proposed to estimate the covariate-adjusted Gaussian graphical model. For each finite subgraph, this estimator is asymptotically normal and efficient. As a consequence, a confidence interval can be obtained for each edge. The procedure is easy to implement and computationally efficient, since estimation can be carried out in parallel on subgraphs or edges. We further apply the asymptotic normality result to perform support recovery through edge-wise adaptive thresholding. This support recovery procedure is called ANTAC, standing for Asymptotically Normal estimation with Thresholding after Adjusting Covariates. ANTAC outperforms other methodologies in the literature in a range of simulation studies. We apply ANTAC to identify gene-gene interactions using an eQTL dataset. Our result achieves better interpretability and accuracy in comparison with CAPME.

Keywords: Sparsity, Precision matrix estimation, Support recovery, High-dimensional statistics, Gene regulatory network, eQTL

1. Introduction

Graphical models have been successfully applied to a broad range of studies that investigate the relationships among variables in a complex system. With the advancement of high-throughput technologies, an unprecedented number of features can be collected for a given system, which makes inference with graphical models more challenging. To better understand such complex systems, novel methods for the high-dimensional setting are greatly needed. Among graphical models, Gaussian graphical models have recently received considerable attention for their applications in the analysis of gene expression data. They provide an approach to discover and analyze gene relationships, which offers insights into gene regulatory mechanisms. However, gene expression data alone are not enough to fully capture the complexity of gene regulation. Genome-wide expression quantitative trait loci (eQTL) studies, which simultaneously measure genetic variation and gene expression levels, reveal that genetic variants account for a large proportion of the variability of gene expression across different individuals (Rockman & Kruglyak 2006). Some genetic variants may confound the genetic network analysis, so ignoring their influence may lead to false discoveries. Adjusting for the effect of genetic variants is therefore important for accurate inference of the genetic network at the expression level. A few papers in the literature have considered accommodating covariates in graphical models. For example, Li, Chun & Zhao (2012), Yin & Li (2013) and Cai, Li, Liu & Xie (2013) introduced Gaussian graphical models with adjusted covariates, and Cheng, Levina, Wang & Zhu (2012) introduced additional covariates to Ising models.

This problem has been naturally formulated as joint estimation of the multiple regression coefficients and the precision matrix in Gaussian settings. Since it is widely believed that genes operate in biological pathways, the graph for gene expression data is expected to be sparse. Many regularization-based approaches have been proposed in the literature. Some use a joint regularization penalty for both the multiple regression coefficients and the precision matrix and solve iteratively (Obozinski, Wainwright & Jordan 2011; Yin & Li 2011; Peng, Zhu, Bergamaschi, Han, Noh, Pollack & Wang 2010). Others apply a two-stage strategy: estimating the regression coefficients in the first stage and then estimating the precision matrix based on the residuals from the first stage. For all these methods, the thresholding level for support recovery depends on the unknown matrix l1 norm of the precision matrix or an irrepresentable condition on the Hessian tensor operator, so those theoretically justified procedures cannot be implemented in practice. Instead, the thresholding level is often selected through cross-validation. When the dimension p of the precision matrix is relatively high, cross-validation is computationally intensive, and there is a risk that the selected thresholding level is very different from the optimal one. As we show in the simulation studies presented in Section 5, the thresholding levels selected by cross-validation tend to be too small, leading to undesirably dense graph estimates in practice. In addition, for current methods in the literature, the thresholding level for support recovery is set to be the same for all entries of the precision matrix, which makes the procedure non-adaptive.

In this paper, we propose a tuning-free methodology for the joint estimation of the regression coefficients and the precision matrix. The estimator for each entry of the precision matrix or each partial correlation is asymptotically normal and efficient. Thus a P-value can be obtained for each edge to reflect the statistical significance of each entry. In gene expression analysis, the P-value can be interpreted as the significance of the regulatory relationships among genes. This method is easy to implement and is attractive in two aspects. First, it scales to large datasets. Estimation of each entry is independent and can thus be computed in parallel. As long as sufficient computing resources are available, those steps can be distributed to accommodate the analysis of high-dimensional data. Second, it is modular, in that it can estimate any subgraph of special interest. For example, biologists may be interested in the interactions among genes that play essential roles in certain biological processes. This method allows them to specifically target the estimation on those genes. An R package implementing our method has been developed and is available on the CRAN website.

We apply the asymptotic normality and efficiency result to perform support recovery by edge-wise adaptive thresholding. This rate-optimal support recovery procedure is called ANTAC, standing for Asymptotically Normal estimation with Thresholding after Adjusting Covariates. This work is closely connected to a growing literature on optimal estimation of large covariance and precision matrices. Many regularization methods have been proposed and studied. For example, Bickel & Levina (2008a, 2008b) proposed banding and thresholding estimators for estimating bandable and sparse covariance matrices respectively and obtained rates of convergence for the two estimators. See also El Karoui (2008) and Lam & Fan (2009). Cai, Zhang & Zhou (2010) established the optimal rates of convergence for estimating bandable covariance matrices. Cai & Zhou (2012) and Cai, Liu & Zhou (2012) obtained the minimax rate of convergence for estimating sparse covariance and precision matrices under a range of losses including the spectral norm loss. Most closely related to this paper is the work in Ren, Sun, Zhang & Zhou (2013), where fundamental limits were given for asymptotically normal and efficient estimation of sparse precision matrices. Due to the complication of the covariates, the analysis in this paper is more involved.

We organize the rest of the paper as follows. Section 2 describes the covariate-adjusted Gaussian graphical model and introduces our novel two-step procedure. Corresponding theoretical studies on asymptotic normality and adaptive support recovery are presented in Sections 3 and 4. Simulation studies are carried out in Section 5. Section 6 presents the analysis of eQTL data. Proofs for theoretical results are collected in Section 7. We collect a key lemma and auxiliary results for proving the main results in Section 8 and Appendix 9.

2. Covariate-Adjusted Gaussian Graphical Model and Methodology

In this section we first formally introduce the covariate-adjusted Gaussian graphical model, and then propose a two-step procedure for estimation of the model.

2.1 Covariate-adjusted Gaussian Graphical Model

Let (X(i),Y(i)), i = 1, …, n, be i.i.d. with

$$Y^{(i)} = \Gamma X^{(i)} + Z^{(i)}, \tag{1}$$

where Γ is a p × q unknown coefficient matrix, and Z(i) is a p-dimensional random vector following a multivariate Gaussian distribution N (0, Ω−1) and is independent of X(i). For genome-wide expression quantitative trait studies, Y(i) contains the observed expression levels of p genes for the i-th subject and X(i) contains the corresponding values of q genetic markers. We will assume that Ω and Γ are sparse. The precision matrix Ω is assumed to be sparse partly due to the belief that genes operate in biological pathways, and the sparseness structure of Γ reflects the sensitivity of confounding of genetic variants in the genetic network analysis.

We are particularly interested in the graph structure of random vector Z(i), which represents the genetic networks after removing the effect of genetic markers. Let G = (V, E) be an undirected graph representing the conditional independence relations between the components of a random vector Z(1) = (Z11, …, Z1p)T. The vertex set V = {V1, …, Vp} represents the components of Z. The edge set E consists of pairs (i, j) indicating the conditional dependence between Z1i and Z1j given all other components. In the genetic network analysis, the following question is fundamental: Is there an edge between Vi and Vj? It is well known that recovering the structure of an undirected Gaussian graph G = (V, E) is equivalent to recovering the support of the population precision matrix Ω = (ωij) of the data in the Gaussian graphical model. There is an edge between Vi and Vj, i.e., (i, j) ∈ E, if and only if ωij ≠ 0. See, for example, Lauritzen (1996). Consequently, the support recovery of the precision matrix Ω yields the recovery of the structure of the graph G.
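As a small illustration of the equivalence between edges and nonzero entries of Ω, the following sketch builds a hypothetical 4-node chain graph (the matrix values are illustrative and not taken from the paper) and reads the edge set off the precision matrix. The helper name `edges_from_precision` is an assumption made for this example. Note that the covariance matrix of the same model is dense, which is why the graph has to be read from Ω rather than from Ω−1.

```python
import numpy as np

# Hypothetical chain graph V1 - V2 - V3 - V4 (illustrative values only).
omega = np.array([
    [2.0, -0.8, 0.0, 0.0],
    [-0.8, 2.0, -0.8, 0.0],
    [0.0, -0.8, 2.0, -0.8],
    [0.0, 0.0, -0.8, 2.0],
])
sigma = np.linalg.inv(omega)  # covariance matrix of the same Gaussian model


def edges_from_precision(prec, tol=1e-10):
    """Return {(i, j): i < j and omega_ij != 0}, using 0-based indices."""
    p = prec.shape[0]
    return {(i, j) for i in range(p) for j in range(i + 1, p)
            if abs(prec[i, j]) > tol}


edges = edges_from_precision(omega)
# Every entry of the covariance matrix is nonzero even though the graph is sparse.
dense_cov = bool(np.all(np.abs(sigma) > 1e-10))
print(sorted(edges), dense_cov)
```
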

Motivated by biological applications, we consider the high-dimensional case in this paper, allowing the dimension to exceed or even be far greater than the sample size, min {p, q} ≥ n. The main goal of this work is not only to provide a fully data driven and easily implementable procedure to estimate the network for the covariate-adjusted Gaussian graphical model, but also to provide a confidence interval for estimation of each entry of the precision matrix Ω.

2.2 A Two-step Procedure

In this section, we propose a two-step procedure to estimate Ω. In the first step, we apply a scaled lasso method to obtain an estimator Γ̂ = (γ̂1, …, γ̂p)T of Γ. This step is tuning free, in contrast to other procedures in the literature for sparse linear regression, such as the standard lasso and the Dantzig selector, which select tuning parameters by cross-validation and can be computationally very intensive for high dimensional data. In the second step, we approximate each Z(i) by Ẑ(i) = Y(i) – Γ̂X(i), then apply the tuning-free methodology proposed in Ren et al. (2013) for the standard Gaussian graphical model to estimate each entry ωij of Ω, treating each Ẑ(i) as if it were Z(i). As a by-product, we have an estimator Γ̂ of Γ, which is shown to be rate optimal under different matrix norms. However, our main goal is not to estimate Γ, but to make inference on Ω.

Step 1 Denote the n by q dimensional explanatory matrix by X = (X(1), …, X(n))T, where the i-th row of the matrix is from the i-th sample X(i). Similarly denote the n by p dimensional response matrix by Y = (Y(1), …, Y(n))T and the noise matrix by Z = (Z(1), …, Z(n))T. Let Yj and Zj be the j-th columns of Y and Z respectively. For each j = 1, …, p, we apply a scaled lasso penalization to the univariate linear regression of Yj against X as follows,

| (2) |

where the weighted penalties are chosen to be adaptive to each variance Var (X1k) such that an explicit value can be given for the parameter λ1, for example, one of the theoretically justified choices is . The scaled lasso (2) is jointly convex in b and θ. The global optimum can be obtained by alternately updating b and θ. The computational cost is nearly the same as that of the standard lasso. For more details about the algorithm, please refer to Sun & Zhang (2012).
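The alternating scheme can be sketched as follows. This is a minimal illustration and not the authors' implementation: it assumes the scaled lasso objective has the usual form ‖y − Xb‖²/(2nσ) + σ/2 + λ Σk wk|bk| with weights wk = ‖Xk‖/√n, it writes the scale variable as `sigma`, and the function name `scaled_lasso`, the iteration limits, and the value of `lam` in the usage lines are all assumptions made for the example. For fixed scale, the update in b is a weighted lasso solved by coordinate descent; for fixed b, the scale has a closed-form update.

```python
import numpy as np


def scaled_lasso(X, y, lam, n_outer=50, n_inner=100, tol=1e-8):
    """Alternate a weighted-lasso step in b with a closed-form step in sigma.

    Assumed objective (a sketch, see the lead-in):
        ||y - X b||^2 / (2 n sigma) + sigma / 2 + lam * sum_k w_k |b_k|,
    where w_k = ||X_k|| / sqrt(n).
    """
    n, q = X.shape
    w = np.linalg.norm(X, axis=0) / np.sqrt(n)   # column-wise penalty weights
    b = np.zeros(q)
    sigma = np.linalg.norm(y) / np.sqrt(n)        # initial noise level
    for _outer in range(n_outer):
        # b-step: coordinate descent with effective penalty lam * sigma * w_k
        resid = y - X @ b
        for _inner in range(n_inner):
            max_change = 0.0
            for k in range(q):
                if w[k] == 0.0:
                    continue
                resid += X[:, k] * b[k]           # remove coordinate k from the fit
                rho = X[:, k] @ resid / n
                shrunk = max(abs(rho) - lam * sigma * w[k], 0.0)
                new_bk = np.sign(rho) * shrunk / w[k] ** 2
                resid -= X[:, k] * new_bk         # add the updated coordinate back
                max_change = max(max_change, abs(new_bk - b[k]))
                b[k] = new_bk
            if max_change < tol:
                break
        # sigma-step: minimiser of the objective in sigma for fixed b
        new_sigma = np.linalg.norm(y - X @ b) / np.sqrt(n)
        converged = abs(new_sigma - sigma) < tol
        sigma = new_sigma
        if converged:
            break
    return b, sigma


# Usage on synthetic data (all values hypothetical).
rng = np.random.default_rng(0)
n, q = 200, 50
X = rng.standard_normal((n, q))
b_true = np.zeros(q)
b_true[:3] = [2.0, -1.5, 1.0]
y = X @ b_true + rng.standard_normal(n)           # true noise level is 1
lam = np.sqrt(2 * np.log(q) / n)                  # illustrative penalty level
b_hat, sigma_hat = scaled_lasso(X, y, lam)
```

Because the penalty level in the b-step is rescaled by the current noise estimate, no cross-validation is needed once `lam` is fixed, which is the property the procedure relies on.
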

Define the estimate of the “noise” Zj as the residual of the scaled lasso regression by

$$\hat{Z}_j = Y_j - X\hat{\gamma}_j, \tag{3}$$

which will be used in the second step to make inference for Ω.

Step 2 In the second step, we propose a tuning-free regression approach to estimate Ω based on Ẑ defined in Equation (3), which is different from other methods proposed in the literature, including Cai et al. (2013) or Yin & Li (2013). An advantage of our approach is the ability to provide an asymptotically normal and efficient estimation of each entry of the precision matrix Ω.

We first introduce some convenient notation for a subvector or a submatrix. For any index subset A of {1, 2,…,p} and a vector W of length p, we use WA to denote a vector of length |A| with elements indexed by A, and we write Ac for the complement of A in {1, 2,…,p}. Similarly for a matrix U and two index subsets A and B of {1, 2,…,p}, we can define a submatrix UA,B of size |A| × |B| with rows and columns of U indexed by A and B, respectively. Let W = (W1, …, Wp)T, representing each Z(i), follow a Gaussian distribution N (0, Ω−1). It is well known that

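$$W_A \mid W_{A^c} \sim N\left(-\Omega_{A,A}^{-1}\Omega_{A,A^c}W_{A^c},\ \Omega_{A,A}^{-1}\right). \tag{4}$$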

For A = {i, j}, equivalently we may write

$$(W_i, W_j) = W_{A^c}^T\beta + (\eta_i, \eta_j), \tag{5}$$

where the coefficients and error distributions are

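$$\beta = -\Omega_{A^c,A}\Omega_{A,A}^{-1}, \qquad (\eta_i, \eta_j)^T \sim N\left(0,\ \Omega_{A,A}^{-1}\right). \tag{6}$$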
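The regression interpretation rests on the standard Gaussian conditioning identity: regressing WA on the remaining coordinates gives coefficients −ΩAc,A(ΩA,A)−1 and a residual covariance equal to (ΩA,A)−1. The following sketch checks this numerically at the population level. The precision matrix is a hypothetical random positive-definite matrix and the index pair is arbitrary; neither comes from the paper.

```python
import numpy as np

rng = np.random.default_rng(1)
p = 6
# Hypothetical positive-definite precision matrix (illustrative only).
G = rng.standard_normal((p, p))
omega = G @ G.T + p * np.eye(p)
sigma = np.linalg.inv(omega)

A = [0, 3]                                   # the pair {i, j}, 0-based
Ac = [k for k in range(p) if k not in A]     # complement of A

# Population regression of W_A on W_{A^c}: coefficients and residual covariance.
beta = np.linalg.solve(sigma[np.ix_(Ac, Ac)], sigma[np.ix_(Ac, A)])   # (p-2) x 2
resid_cov = sigma[np.ix_(A, A)] - sigma[np.ix_(A, Ac)] @ beta          # 2 x 2

# The same quantities expressed through the precision matrix.
omega_AA = omega[np.ix_(A, A)]
beta_from_omega = -omega[np.ix_(Ac, A)] @ np.linalg.inv(omega_AA)

print(np.allclose(beta, beta_from_omega))
print(np.allclose(np.linalg.inv(resid_cov), omega_AA))
```

Inverting the 2 × 2 residual covariance therefore recovers ωii, ωjj and ωij directly, which is what makes a pairwise, edge-by-edge estimation strategy possible.
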

Based on the regression interpretation (5), we have the following data version of the multivariate regression model

$$Z_A = Z_{A^c}\beta + \epsilon_A, \tag{7}$$

where β is a (p − 2) by 2 dimensional coefficient matrix. If we know ZA and β, an asymptotically normal and efficient estimator of ΩA,A is .

But of course β is unknown and we only have access to the estimated observations Ẑ from Equation (3). We replace ZA and ZAc by ẐA and ẐAc respectively in the regression (7) to estimate β as follows. For each m ∈ A = {i, j}, we apply a scaled lasso penalization to the univariate linear regression of Ẑm against ẐAc,

| (8) |

where the vector b is indexed by Ac, and one of the theoretically justified choices of λ2 is . Denote the residuals of the scaled lasso regression by

| (9) |

and then define

$$\hat{\Omega}_{A,A} = \left(\hat{\epsilon}_A^T\hat{\epsilon}_A / n\right)^{-1}. \tag{10}$$

This extends the methodology proposed in Ren et al. (2013) for the Gaussian graphical model to corrupted observations. The approximation error Ẑ – Z affects inference for Ω. Later we show that if Γ is sufficiently sparse, ΓX can be well estimated so that the approximation error is negligible. When both Ω and Γ are sufficiently sparse, Ω̂A,A can be shown to be asymptotically normal and efficient. An immediate application of the asymptotic normality result is to perform adaptive graphical model selection by explicit entry-wise thresholding, which yields a rate-optimal adaptive estimation of the precision matrix Ω under various matrix lw norms. See Theorems 2, 3 and 4 in Sections 3 and 4 for more details.

3. Asymptotic Normal Distribution of the Estimator

In this section we first give theoretical properties of the estimator Ẑ as well as Γ̂, then present the asymptotic normality and efficiency result for estimation of Ω.

We assume the coefficient matrix Γ is sparse, and entries of X with mean zero are bounded since the gene marker is usually bounded.

1. The coefficient matrix Γ satisfies the following sparsity condition,

(11)

where in this paper λ1 is at an order of . Note that s1 ≤ maxi Σj≠iI {γij ≠ 0}, the maximum of the exact row sparseness among all rows of Γ.

2. There exist positive constants M1 and M2 such that 1/M1 ≤ λmin (Cov(X(1))) and 1/M2 ≤ λmin (Ω) ≤ λmax (Ω) ≤ M2.

3. There is a constant B > 0 such that

(12)

It is worthwhile to note that the boundedness assumption (12) does not imply that X(1) is jointly sub-gaussian; that is, X(1) need not be jointly sub-gaussian as long as the above conditions are satisfied. In the high dimensional regression literature, it is common to assume the joint sub-gaussian condition on the design matrix as follows,

- 3′. We shall assume that the distribution of X(1) is jointly sub-gaussian with parameter (M1)1/2 > 0 in the sense that

(13)

We analyze Step 1 of the procedure in Equation (2) under Conditions 1-3 as well as under Conditions 1-2 and 3′. The optimal rates of convergence are obtained under the matrix l∞ norm and Frobenius norm for estimation of Γ, which yield a rate of convergence for estimation of each Zj under the l2 norm.

Theorem 1

Let for any δ1 ≥ 1 and ε1 > 0 in Equation (2). Assume that

Under Conditions 1-3 we have

| (14) |

| (15) |

| (16) |

Moreover, if we replace Condition 3 by the weaker version Condition 3′, all results above still hold under a weaker assumption on s1,

| (17) |

The proof of Theorem 1 is provided in Section 7.1.

Remark 1

Under the assumption that the lr norm of each row of Γ is bounded by , an immediate application of Theorem 1 yields corresponding results for lr ball sparseness. For example,

provided that and Conditions 1-2, 3′ hold.

Remark 2

Cai et al. (2013) assume that the matrix l1 norm of (Cov (X(1)))−1, the inverse of the covariance matrix of X(1), is bounded, and their tuning parameter depends on the unknown l1 norm. In Theorem 1 we do not need the assumption on the l1 norm of (Cov (X(1)))−1, and the tuning parameter λ1 is given explicitly.

To analyze Step 2 of the procedure in Equation (8), we need the following assumptions for Ω.

4. The precision matrix Ω = (ωij)p×p has the following sparsity condition

(18) where λ2 is at an order of .

5. There exists a positive constant M2 such that ‖Ω‖l∞ ≤ M2.

It is convenient to introduce a notation for the covariance matrix of (ηi, ηj)T in Equation (5). Let

We will estimate ΨA,A first and show that an efficient estimator of ΨA,A yields an efficient estimation of entries of ΩA,A by inverting the estimator of ΨA,A. Denote a sample version of ΨA,A by

| (19) |

which is an oracle MLE of ΨA,A, assuming that we know β, and

| (20) |

Let

| (21) |

where ε̂A is defined in Equation (9). Note that Ω̂A,A defined in Equation (10) is simply the inverse of the estimator Ψ̂A,A. The following result shows that Ω̂A,A is asymptotically normal and efficient when both Γ and Ω are sufficiently sparse.

Theorem 2

Let λ1 be defined as in Theorem 1 with and for any δ2 ≥ 1 and ε2 > 0 in Equation (8). Assume that

| (22) |

Under Conditions 1-2 and 4-5, and Condition 3 or 3′, we have

| (23) |

| (24) |

for some positive constants C5 and C6. Furthermore, ω̂ij is asymptotically efficient

| (25) |

when and , where

Remark 3

The asymptotic normality result can be obtained for estimation of the partial correlation. Let rij = −ωij/(ωiiωjj)1/2 be the partial correlation between Zi and Zj. Define r̂ij = −ω̂ij/(ω̂iiω̂jj)1/2. Under the same assumptions in Theorem 2, the estimator r̂ij is asymptotically efficient, i.e., , when and .
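To make the inferential use of these results concrete, the sketch below computes the plug-in partial correlation r̂ij and a Wald-type confidence interval and two-sided P-value for a single entry ωij. It assumes that the asymptotic variance of ω̂ij is (ωiiωjj + ωij²)/n, the standard inverse Fisher information for an entry of a Gaussian precision matrix; the function names and the numerical inputs are hypothetical and serve only as an illustration.

```python
from math import sqrt
from statistics import NormalDist


def partial_correlation(w_ii, w_jj, w_ij):
    """r_ij = -omega_ij / sqrt(omega_ii * omega_jj)."""
    return -w_ij / sqrt(w_ii * w_jj)


def standard_error(w_ii, w_jj, w_ij, n):
    """Plug-in standard error, assuming variance (w_ii * w_jj + w_ij^2) / n."""
    return sqrt((w_ii * w_jj + w_ij ** 2) / n)


def wald_interval(w_ii, w_jj, w_ij, n, level=0.95):
    """Two-sided normal-approximation interval for omega_ij."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    se = standard_error(w_ii, w_jj, w_ij, n)
    return w_ij - z * se, w_ij + z * se


def two_sided_p_value(w_ii, w_jj, w_ij, n):
    """P-value for H0: omega_ij = 0 using the same plug-in standard error."""
    z = abs(w_ij) / standard_error(w_ii, w_jj, w_ij, n)
    return 2 * (1 - NormalDist().cdf(z))


# Hypothetical estimates for one edge with n = 400 samples.
r_hat = partial_correlation(4.0, 4.0, 0.6)
lo, hi = wald_interval(4.0, 4.0, 0.6, n=400)
p_val = two_sided_p_value(4.0, 4.0, 0.6, n=400)
print(r_hat, (lo, hi), p_val)
```
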

Remark 4

In Equations (4) and (7), we can replace A = {i, j} by a bounded size subset B ⊂ [1 : p] with cardinality more than 2. Similar to the analysis of Theorem 2, we can show the estimator for any smooth functional of is asymptotically normal, as shown in Ren et al. (2013) for the Gaussian graphical model.

Remark 5

A stronger result can be obtained for the choice of λ1 and λ2. Theorems 1 and 2 still hold, when and , where and . Another alternative choice of λ1 and λ2 will be introduced in Section 5.

4. Adaptive Support Recovery and Estimation of Ω Under Matrix Norms

In this section, the asymptotic normality result obtained in Theorem 2 is applied to perform adaptive support recovery and to obtain rate-optimal estimation of the precision matrix under various matrix lw norms. The two-step procedure for support recovery first removes the effect of the covariate X, then applies the ANT (Asymptotically Normal estimation with Thresholding) procedure. We thus call it ANTAC, which stands for ANT after Adjusting Covariates.

4.1 ANTAC for Support Recovery of Ω

Support recovery for the covariate-adjusted Gaussian graphical model has been studied in several papers, for example, Yin & Li (2013) and Cai et al. (2013). Denote the support of Ω by Supp(Ω). In this literature, theoretical properties of support recovery were obtained, but they all assumed that , where Mn,p is either the matrix l∞ norm or related to the irrepresentable condition on Ω, which is unknown. The ANTAC procedure, based on the asymptotically normal estimation in Equation (25), performs entry-wise thresholding adaptively to recover the graph with explicit thresholding levels.

Recall that in Theorem 2 we have

where is the Fisher information of estimating ωij. If this Fisher information were known, we could apply a thresholding level with any ξ ≥ 2 for ω̂ij to correctly distinguish zero and nonzero entries, noting the total number of edges is p (p − 1) /2. When the variance is unknown, all we need is to plug in a consistent estimator. The ANTAC procedure is defined as follows

| (26) |

| (27) |

where ω̂kl is the consistent estimator of ωkl defined in (10) and ξ0 is a tuning parameter which can be taken as fixed at any ξ0 > 2.
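The entry-wise adaptive thresholding step can be sketched as below. This is an illustration under stated assumptions rather than a transcription of (26) and (27): it assumes the threshold for entry (i, j) is √(2ξ0(ω̂iiω̂jj + ω̂ij²) log p / n), i.e. the plug-in inverse Fisher information for a Gaussian precision entry scaled by log p / n, and it leaves the diagonal entries unthresholded. The function name `antac_threshold` and the input matrix are hypothetical.

```python
from math import log, sqrt


def antac_threshold(omega_hat, n, xi0=2.0):
    """Keep omega_hat[i][j] only if it exceeds its own entry-specific threshold.

    Assumed threshold (see lead-in):
        tau_ij = sqrt(2 * xi0 * (w_ii * w_jj + w_ij^2) * log(p) / n).
    Diagonal entries are copied unchanged in this sketch.
    """
    p = len(omega_hat)
    out = [[0.0] * p for _ in range(p)]
    for i in range(p):
        out[i][i] = omega_hat[i][i]
        for j in range(i + 1, p):
            w_ij = omega_hat[i][j]
            var = omega_hat[i][i] * omega_hat[j][j] + w_ij ** 2
            tau = sqrt(2 * xi0 * var * log(p) / n)
            if abs(w_ij) >= tau:
                out[i][j] = out[j][i] = w_ij
    return out


# Hypothetical entry-wise estimates for p = 3 variables and n = 400 samples.
omega_hat = [
    [4.0, 0.9, 0.1],
    [0.9, 4.0, -0.05],
    [0.1, -0.05, 4.0],
]
omega_thr = antac_threshold(omega_hat, n=400)
print(omega_thr)
```

Because the threshold depends on ω̂ii, ω̂jj and ω̂ij, entries with larger estimated variance face a higher bar, which is what distinguishes this rule from a single universal threshold.
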

The following sufficient condition for support recovery is assumed in Theorem 3 below. Define the sign of Ω by

(Ω) = {sgn(ωij), 1 ≤ i, j ≤ p}. Assume that


| (28) |

The following result shows that not only the support of Ω but also the signs of the nonzero entries can be recovered exactly by Ω̂thr.

Theorem 3

Assume that Conditions 1-2 and 4-5, and Condition 3 or 3′ hold. Let λ1 be defined as in Theorem 1 with

and

with any δ2 ≥ 3 and ε2 > 0 in Equation (8). Also let ξ0 > 2 in the thresholding level (27). Under the assumptions (22) and (28), we have that the ANTAC defined in (26) recovers the support

(Ω) consistently, i.e.,


| (29) |

Remark 6

If the assumption (28) does not hold, the procedure recovers part of the true graph with high partial correlation.

The proof of Theorem 3 depends on the oracle inequality (24) in Theorem 2, a moderate deviation result of the oracle ω̂ij and a union bound. The proof is in spirit the same as that of Theorem 6 in Ren et al. (2013), and is thus omitted due to space limitations.

4.2 ANTAC for Estimation under the Matrix lw Norm

In this section, we consider the rate of convergence of a thresholding estimator of Ω under the matrix lw norm, including the spectral norm. The convergence under the spectral norm leads to the consistency of eigenvalues and eigenvectors estimation. Define Ω̆thr, a modification of Ω̂thr defined in (26), as follows

| (30) |

From the idea of the proof of Theorem 3 (see also the proof of Theorem 6 in Ren et al. (2013)), we see that with high probability ‖Ω̂−Ω‖∞ is dominated by under the sparsity assumptions (22). The key step in the proof of Theorem 4 is to derive the upper bound under the matrix l1 norm based on the entry-wise supnorm ‖Ω̆thr− Ω‖∞. The theorem then follows immediately from the inequality ‖M‖lw ≤ ‖M‖l1 for any symmetric matrix M and 1 ≤ w ≤ ∞, which can be proved by applying the Riesz-Thorin interpolation theorem. The proof is similar to that of Theorem 3 in Cai & Zhou (2012). We omit the proof due to space limitations.
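The norm inequality for symmetric matrices can be checked numerically for the three most commonly used cases, w = 1, 2 and ∞. The sketch below does this on a hypothetical random symmetric matrix. It is a sanity check of the inequality for one instance and not a proof.

```python
import numpy as np

rng = np.random.default_rng(2)
B = rng.standard_normal((8, 8))
M = (B + B.T) / 2                      # hypothetical symmetric matrix

l1 = np.linalg.norm(M, 1)              # maximum absolute column sum
linf = np.linalg.norm(M, np.inf)       # maximum absolute row sum
spec = np.linalg.norm(M, 2)            # spectral norm (largest singular value)

# For symmetric M the l1 and l-infinity operator norms coincide,
# and the spectral norm is bounded by both.
print(l1, linf, spec)
```
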

Theorem 4

Assume that Conditions 1-2 and 4-5, and Condition 3 or 3′ hold. Under the assumptions (22) and n = max {O (p^ξ1), O (q^ξ2)} with some ξ1, ξ2 > 0, the Ω̆thr defined in (30) with sufficiently large δ1 and δ2 satisfies, for all 1 ≤ w ≤ ∞,

| (31) |

Remark 7

The rate of convergence result in Theorem 4 can also be easily extended to the parameter space in which each row of Ω is in an lr ball of radius . See, e.g., Theorem 3 in Cai & Zhou (2012). Under the same assumptions of Theorem 4 except replacing by , we have

| (32) |

Remark 8

For the Gaussian graphical model without covariate variables, Cai et al. (2012) showed the rates obtained in Equations (31) and (32) are optimal when p ≥ cn^γ for some γ > 1 and kn,p = o (n^{1/2} (log p)^{−3/2}) for the corresponding parameter spaces of Ω. This implies that our estimator is rate optimal.

5. Simulation Studies

5.1 Asymptotic Normal Estimation

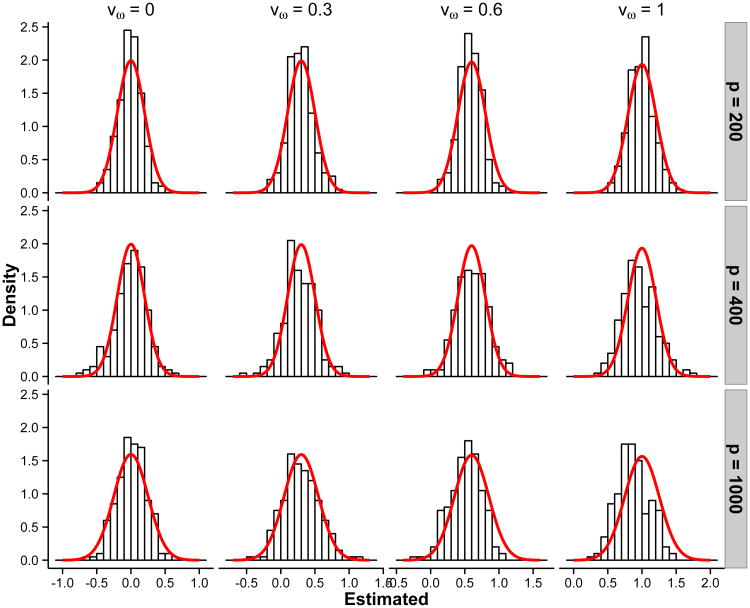

In this section, we compare the sample distribution of the proposed estimator for each edge ωij with the normal distribution in Equation (25). Three models are considered with corresponding {p, q, n} listed in Table 1. Based on 200 replicates, the distributions of the estimators match the asymptotic distributions very well.

Table 1.

Model parameters and simulation results: mean and standard deviation (in parentheses) of the proposed estimator for the randomly selected entry with value vω based on 200 replicates.

| (p, q, n) | π = P(ωij ≠ 0) | vω = 0 | vω = 0.3 | vω = 0.6 | vω = 1 |
|---|---|---|---|---|---|
| (200, 100, 400) | 0.025 | -0.015 (0.168) | 0.289 (0.184) | 0.574 (0.165) | 0.986 (0.182) |
| (400, 100, 400) | 0.010 | -0.003 (0.24) | 0.268 (0.23) | 0.606 (0.23) | 0.954 (0.244) |
| (1000, 100, 400) | 0.005 | 0.011 (0.21) | 0.292 (0.26) | 0.507 (0.232) | 0.862 (0.236) |

Three sparse models are generated in a similar way to those in Cai et al. (2013). The p × q coefficient matrix Γ is generated as follows for all three models,

where the Bernoulli random variable is independent of the standard normal variable, taking the value one with probability 0.025 and zero otherwise. We then generate the p × p precision matrix Ω with identical diagonal entries ωii = 4 for the models with p = 200 or 400 and ωii = 5 for the model with p = 1000. The off-diagonal entries of Ω are generated i.i.d. as follows for each model,

where the probability of being nonzero π = P(ωij ≠ 0) for three models is shown in Table 1. Once both Γ and Ω are chosen for each model, the n × p outcome matrix Y is simulated from Y = XΓT + Z where rows of Z are i.i.d. N(0, Ω−1) and rows of X are i.i.d. N(0, Iq×q). We generate 200 replicates of X and Y for each model.
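A data-generating routine along these lines might look as follows. It is a sketch under assumptions and not the simulation code used for the reported results. The entries of Γ are taken to be a standard normal times an independent Bernoulli(0.025) variable. The law of the nonzero off-diagonal entries of Ω is not reproduced in this section, so the sketch assumes, purely for illustration, that a nonzero entry is drawn uniformly from {0.3, 0.6, 1}. The function name `simulate` and the small dimensions in the usage line are hypothetical.

```python
import numpy as np


def simulate(p, q, n, pi_gamma=0.025, pi_omega=0.025, diag=4.0, seed=0):
    """Draw (X, Y) from Y = X Gamma^T + Z with rows of Z ~ N(0, Omega^{-1})."""
    rng = np.random.default_rng(seed)

    # Gamma_ij = N(0, 1) * Bernoulli(pi_gamma), independently for each entry.
    gamma = rng.standard_normal((p, q)) * rng.binomial(1, pi_gamma, size=(p, q))

    # ASSUMPTION: nonzero off-diagonal entries take values in {0.3, 0.6, 1.0}.
    values = rng.choice([0.3, 0.6, 1.0], size=(p, p))
    mask = rng.binomial(1, pi_omega, size=(p, p))
    upper = np.triu(values * mask, k=1)
    omega = upper + upper.T + diag * np.eye(p)
    if np.linalg.eigvalsh(omega).min() <= 0:
        raise ValueError("generated Omega is not positive definite")

    # Rows of Z are N(0, Omega^{-1}): if Sigma = L L^T then z = L g has cov Sigma.
    sigma = np.linalg.inv(omega)
    L = np.linalg.cholesky(sigma)
    Z = rng.standard_normal((n, p)) @ L.T

    X = rng.standard_normal((n, q))          # rows are N(0, I_q)
    Y = X @ gamma.T + Z
    return X, Y, gamma, omega


# Small illustrative call (dimensions far smaller than in Table 1).
X, Y, gamma, omega = simulate(p=20, q=10, n=50, seed=0)
print(X.shape, Y.shape)
```
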

We randomly select four entries of Ω with values vω of 0, 0.3, 0.6 and 1 in each model and draw histograms of our estimators for those four entries based on the 200 replicates. The penalty parameter λ1, which controls the weight of penalty in the regression of the first step (2), is set to be , where , and qt(·, n) is the quantile function of t distribution with degrees of freedom n. This parameter λ1 is a finite sample version of the asymptotic level we proposed in Theorem 2 and Remark 5. Here we pick smax, 1 = √n/ log q. The penalty parameter λ2, which controls the weight of penalty in the second step (8), is set to be where B2 = qt(1 − smax,2/ (2p), n − 1), which is asymptotically equivalent to . The smax,2 is set to be √n/ log p.

In Figure 1, we show the histograms of the estimators with the theoretical normal density superimposed for the four randomly selected entries with values vω of 0, 0.3, 0.6 and 1 in each of the three models. The distributions of our estimators match the theoretical normal distributions well.

Figure 1.

The histograms of the estimators for randomly selected entries with values vω = 0, 0.3,0.6 and 1 in three models listed in Table 1. The theoretical normal density curves are shown as solid curves. The variance for each curve is , the inverse of the Fisher information.

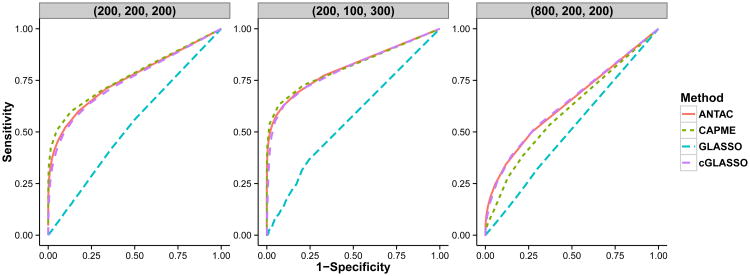

5.2 Support recovery

In this section, we evaluate the performance of the proposed ANTAC method and competing methods in support recovery under different simulation settings. ANTAC always performs among the best under all model settings. Under the Heterogeneous Model setting, ANTAC achieves superior precision and recall rates and performs significantly better than the others. In addition, ANTAC is computationally more efficient than the state-of-the-art method CAPME because it is tuning free.

Homogeneous Model

We consider three models with corresponding {p, q, n} listed in Table 2, which are similar to the models used in Cai et al. (2013). Since every model has identical values along the diagonal, we call them “Homogeneous Model”. In terms of support recovery, ANTAC performs among the best in all three models, although the performance of all procedures is not satisfactory due to the intrinsic difficulty of the support recovery problem for the models considered.

Table 2.

Model parameters used in the simulation of support recovery.

| Model | (p, q, n) | P(Γij ≠ 0) | π = P(ωij ≠ 0), i ≠ j |
|---|---|---|---|
| Model 1 | (200, 200, 200) | 0.025 | 0.025 |
| Model 2 | (200, 100, 300) | 0.025 | 0.025 |
| Model 3 | (800, 200, 200) | 0.025 | 0.010 |

We generate the p × q coefficient matrix Γ in the same way as Section 5.1,

The off-diagonal entries of the p × p precision matrix Ω are generated as follows,

where the probability of being nonzero π = P(ωij ≠ 0) is shown in Table 2 for three models respectively. We generate 50 replicates of X and Y for each of the three models.

We compare our method with the graphical lasso (GLASSO) (Friedman, Hastie & Tibshirani 2008), the state-of-the-art method CAPME (Cai et al. 2013), and a conditional GLASSO procedure (cGLASSO for short), in which we apply the same scaled lasso procedure as in the first stage of the proposed method and then estimate the precision matrix by GLASSO. This cGLASSO procedure is similar to that considered in Yin & Li (2013), except that in the first stage Yin & Li (2013) apply the ordinary lasso rather than the scaled lasso, which requires another cross-validation for this step. For GLASSO, the precision matrix is estimated directly from the sample covariance matrix without taking into account the effects of X. The tuning parameter for the l1 penalty is selected using five-fold cross-validation by maximizing the log-likelihood function. For CAPME, the tuning parameters λ1 and λ2, which control the penalty in the two stages of regression, are chosen using five-fold cross-validation by maximizing the log-likelihood function. The optimum is found via a grid search on {(λ1, λ2)}. For Models 1 and 2, a 10 × 10 grid is used, and for Model 3, a 5 × 5 grid is used because of the computational burden. Specifically, we use the CAPME package implemented by the authors of Cai et al. (2013). For Model 3, each run with the 5 × 5 grid search and five-fold cross-validation takes 160 CPU hours using one core from PowerEdge M600 nodes (2.33 GHz, 16 – 48 GB RAM), whereas ANTAC takes 46 CPU hours. For ANTAC, the parameter λ1 is set to be , where , qt(·, n) is the quantile function of the t distribution with n degrees of freedom and smax,1 = √n/ log q. The parameter λ2 is set to be where B2 = qt(1 − (smax,2/p)3 /2, n − 1). For cGLASSO, the first step is the same as ANTAC. In the second step, the precision matrix is estimated by applying GLASSO to the estimated Z, where the tuning parameter is selected using five-fold cross-validation by maximizing the log-likelihood function.

We evaluate the performance of the estimators for the support recovery problem in terms of the misspecification rate, specificity, sensitivity, precision and Matthews correlation coefficient, which are defined as,

Here, TP, TN, FP, FN are the numbers of true positives, true negatives, false positives and false negatives respectively. True positives are defined as the correctly identified nonzero entries of the off-diagonal entries of Ω. For GLASSO and CAPME, nonzero entries of Ω̂ are selected as edges with no extra thresholding applied. For ANTAC, edges are selected by the theoretical bound with ξ0 = 2. The results are summarized in Table 3. It can be seen that ANTAC achieves superior specificity and precision. Besides, ANTAC has the best overall performance in terms of the Matthews correlation coefficient.
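The five criteria can be computed from the confusion counts as in the sketch below. The formulas are the standard confusion-matrix definitions and are assumed here to coincide with the ones used in this section. The function name `support_metrics`, the convention of returning 0 when a denominator vanishes, and the counts in the usage lines are choices made for this example.

```python
from math import sqrt


def support_metrics(tp, tn, fp, fn):
    """Return MISR, SPE, SEN, PRE and MCC from confusion counts.

    A ratio whose denominator is zero is reported as 0.0 (a convention of
    this sketch, not necessarily the one used for the reported tables).
    """
    def ratio(num, den):
        return num / den if den else 0.0

    mcc_den = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "MISR": ratio(fp + fn, tp + tn + fp + fn),   # misspecification rate
        "SPE": ratio(tn, tn + fp),                   # specificity
        "SEN": ratio(tp, tp + fn),                   # sensitivity (recall)
        "PRE": ratio(tp, tp + fp),                   # precision
        "MCC": ratio(tp * tn - fp * fn, mcc_den),    # Matthews correlation
    }


# Hypothetical counts over the off-diagonal entries of an estimated graph.
metrics = support_metrics(tp=40, tn=900, fp=10, fn=50)
print({name: round(100 * value, 1) for name, value in metrics.items()})
```
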

Table 3.

Simulation results of the support recovery for homogeneous models based on 50 replications. Specifically, the performance is measured by misspecification rate, specificity, sensitivity (recall rate), precision and the Matthews correlation coefficient with all the values multiplied by 100. Numbers in parentheses are the simulation standard deviations.

| Model | (p, q, n) | Method | MISR | SPE | SEN | PRE | MCC |
|---|---|---|---|---|---|---|---|
| Model 1 | (200, 200, 200) | GLASSO | 35(1) | 65(1) | 37(2) | 2(0) | 1(1) |
| | | cGLASSO | 25(6) | 76(6) | 64(7) | 6(1) | 8(1) |
| | | CAPME | 2(0) | 100(0) | 4(1) | 96(1) | 21(1) |
| | | ANTAC | 2(0) | 100(0) | 4(0) | 88(8) | 18(2) |
| Model 2 | (200, 100, 300) | GLASSO | 43(0) | 57(0) | 51(2) | 3(0) | 3(1) |
| | | cGLASSO | 5(0) | 97(0) | 47(1) | 25(1) | 32(1) |
| | | CAPME | 4(0) | 97(0) | 56(1) | 29(1) | 39(1) |
| | | ANTAC | 2(0) | 100(0) | 22(1) | 97(2) | 46(1) |
| Model 3 | (800, 200, 200) | GLASSO | 19(1) | 81(1) | 19(1) | 1(0) | 0(0) |
| | | cGLASSO | 1(0) | 100(0) | 0(0) | 100(0) | 2(0) |
| | | CAPME | 1(0) | 100(0) | 0(0) | 0(0) | 0(0) |
| | | ANTAC | 1(0) | 100(0) | 7(0) | 71(2) | 22(1) |

We further construct ROC curves to check how this result would vary by changing the tuning parameters. For GLASSO or cGLASSO, the ROC curve is obtained by varying the tuning parameter. For CAPME, λ1 is fixed as the value selected by the cross validation and the ROC curve is obtained by varying λ2. For the proposed ANTAC method, the ROC curve is obtained by varying the thresholding level ξ0. When p is small, CAPME, cGLASSO and ANTAC have comparable performance. As p grows, both ANTAC and cGLASSO outperform CAPME.

The purpose of simulating “Homogeneous Model” is to compare the performance of ANTAC and other procedures under models with settings similar to those used in Cai et al. (2013). Overall, the performance of all procedures is not satisfactory due to the difficulty of the support recovery problem. All nonzero entries are sampled from a standard normal distribution. Hence, most signals are very weak and hard to recover by any method, although ANTAC performs among the best in all three models.

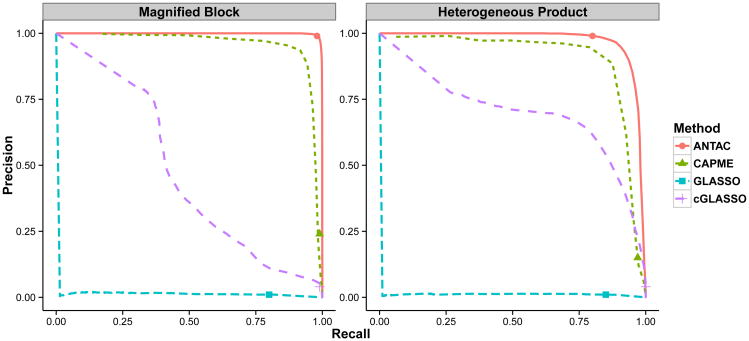

Heterogeneous Model

We consider some models where the diagonal entries of the precision matrix have different values. These models are different from “Homogeneous Model” and we call them “Heterogeneous Model”. The performance of ANTAC and other procedures is explored under the “Magnified Block” model and the “Heterogeneous Product” model, respectively. ANTAC performs significantly better than GLASSO, cGLASSO and CAPME in both settings.

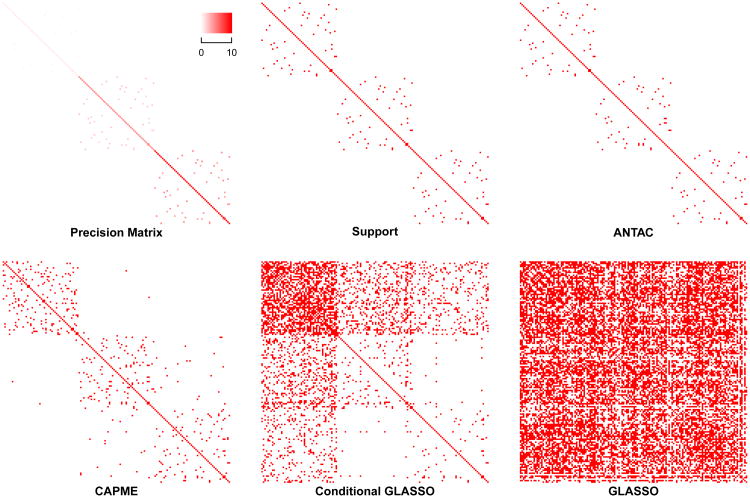

In the “Magnified Block” model, we apply the following randomized procedure to choose Ω and Γ. We first simulate a 50 × 50 matrix ΩB with diagonal entries being 1 and each non-diagonal entry i.i.d. being nonzero with P(ωij ≠ 0) = 0.02. If ωij ≠ 0, we sample ωij from {0.4, 0.5}. Then we generate two more matrices by multiplying ΩB by 5 and 10, respectively. Then we align the three matrices along the diagonal, resulting in a block diagonal matrix Ω with sequentially magnified signals. A visualization of the simulated precision matrix is shown in Figure 3. The 150 × 100 matrix Γ is simulated with each entry i.i.d. being nonzero with P(Γij ≠ 0) = 0.05, and each nonzero entry follows N(0,1). Once the matrices Ω and Γ are chosen, 50 replicates of X and Y are generated.
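The construction of Ω and Γ described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the off-diagonal entries are drawn on the upper triangle and mirrored so that the matrix is symmetric, the two values 0.4 and 0.5 are drawn with equal probability, and positive definiteness is not enforced, since the text does not say how (or whether) it is checked. The function name `simulate_magnified_block` and the random seed are hypothetical.

```python
import numpy as np

def simulate_magnified_block(rng, block_size=50, prob=0.02, scales=(1, 5, 10)):
    """Block-diagonal precision matrix made of magnified copies of one base block."""
    base = np.zeros((block_size, block_size))
    iu = np.triu_indices(block_size, k=1)
    nonzero = rng.random(iu[0].size) < prob            # P(omega_ij != 0) = prob
    values = rng.choice([0.4, 0.5], size=iu[0].size)   # assumed equal probability
    base[iu] = np.where(nonzero, values, 0.0)
    base = base + base.T                               # assumption: symmetrize
    np.fill_diagonal(base, 1.0)

    p = block_size * len(scales)
    omega = np.zeros((p, p))
    for k, scale in enumerate(scales):
        sl = slice(k * block_size, (k + 1) * block_size)
        omega[sl, sl] = scale * base                   # magnified copy on the diagonal
    return omega

rng = np.random.default_rng(0)
omega = simulate_magnified_block(rng)

# Sparse coefficient matrix: each entry nonzero w.p. 0.05, nonzero values N(0, 1).
gamma = rng.standard_normal((150, 100)) * (rng.random((150, 100)) < 0.05)
```

Because the second and third blocks are exact multiples of the first, the diagonal entries of Ω take the values 1, 5 and 10, which is the heterogeneity this model is designed to produce.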

Figure 3.

Heatmap of support recovery using different methods for a “Magnified Block” model.

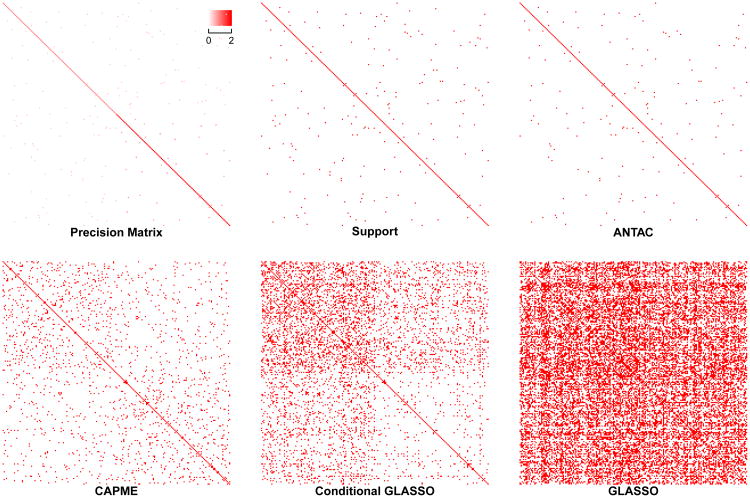

In the “Heterogeneous Product” model, the matrices Ω and Γ are chosen in the following randomized way. We first simulate a 200 × 200 matrix Ω with diagonal entries being 1 and each non-diagonal entry i.i.d. being nonzero with P(ωij ≠ 0) = 0.005. If ωij ≠ 0, we sample ωij from {0.4, 0.5}. Then we replace the 100 × 100 submatrix at the bottom-right corner by the 100 × 100 submatrix at the upper-left corner multiplied by 2, which results in a precision matrix with possibly many different product values ωiiσjj over all i,j pairs, where σjj is the jth diagonal entry of the covariance matrix Σ = (σkl)p × p = Ω−1. Thus we call it the “Heterogeneous Product” model. A visualization of the simulated precision matrix is shown in Figure 4. The 200 × 100 matrix Γ is simulated with each entry i.i.d. being nonzero with P(Γij ≠ 0) = 0.05, and each nonzero entry follows N(0,1). Once Ω and Γ are chosen, 50 replicates of X and Y are generated.

Figure 4.

Heatmap of support recovery using different methods for a “Heterogeneous Product” model.

We compare our method with the GLASSO, CAPME and cGLASSO procedures in “Heterogeneous Model”. We first compare the performance of support recovery when a single procedure from each method is applied. The tuning parameters for each procedure are set in the same way as in “Homogeneous Model” except that for CAPME, the optimal tuning parameter is achieved via a 10 × 10 grid search on {(λ1, λ2)} by five-fold cross validation. We summarize the support recovery results in Table 4. Visualizations of the support recovery result for a replicate of the “Magnified Block” model and a replicate of the “Heterogeneous Product” model are shown in Figures 3 and 4, respectively. In both models, ANTAC significantly outperforms the others and achieves high precision and recall rate. Specifically, ANTAC has a precision of 0.99 in both models, while no other procedure achieves precision rate higher than 0.21 in either model. Besides, ANTAC returns the true sparse graph structure while the others report much denser results.

Table 4.

Simulation results of the support recovery for heterogeneous models based on 50 replications. The performance is measured by overall error rate, specificity, sensitivity (recall rate), precision and the Matthews correlation coefficient with all the values multiplied by 100. Numbers in parentheses are the simulation standard deviations.

| Model | (p, q, n) | Method | MISR | SPE | SEN | PRE | MCC |
|---|---|---|---|---|---|---|---|
| Magnified Block | (150, 100, 300) | GLASSO | 54(0) | 46(0) | 80(4) | 1(0) | 4(1) |
| | | cGLASSO | 14(0) | 86(0) | 99(1) | 4(0) | 19(0) |
| | | CAPME | 1(0) | 99(0) | 99(0) | 24(2) | 58(1) |
| | | ANTAC | 0(0) | 100(0) | 98(1) | 99(1) | 99(1) |
| Heterogeneous Product | (200, 100, 300) | GLASSO | 42(0) | 58(0) | 85(3) | 1(0) | 6(0) |
| | | cGLASSO | 12(0) | 88(0) | 100(0) | 4(0) | 18(0) |
| | | CAPME | 4(0) | 96(0) | 97(0) | 15(0) | 33(0) |
| | | ANTAC | 0(0) | 100(0) | 80(3) | 99(1) | 89(2) |

Moreover, we construct precision-recall curves to compare a sequence of procedures from the different methods. In terms of the precision-recall curve, CAPME performs closest to the proposed method in the “Magnified Block” model, whereas cGLASSO performs closest to the proposed method in the “Heterogeneous Product” model, which indicates that the proposed method performs comparably to the better of CAPME and cGLASSO. Another implication of the precision-recall curves is that, while the tuning-free ANTAC method is always close to the best point along the curve created by using different values of the threshold ξ0, CAPME and cGLASSO with cross validation cannot select the optimal parameters on their corresponding precision-recall curves, even though one of them has potentially good performance when appropriate tuning parameters are used.

Here is an explanation of why the ANTAC procedure is better than CAPME and cGLASSO in the “Magnified Block” and “Heterogeneous Product” model settings. Recall that in the second stage, CAPME applies the same penalty level λ to each entry of the difference ΩΣ̂ − I, where Σ̂ denotes the sample covariance matrix, but the i,j entry has variance ωiiσjj after scaling. Thus CAPME may not recover the support well in the “Heterogeneous Product” model settings, where the variances of different entries may be very different. As for cGLASSO, we notice that essentially the same level of penalty is put on each entry ωij, while the variance of each entry in the ith row Ωi. depends on ωii. Hence we cannot expect cGLASSO to perform very well in the “Magnified Block” model settings, where the diagonal entries ωii vary a lot. In contrast, the ANTAC method adaptively applies the right penalty level (determined by the asymptotic variance) to each estimate of ωij, and therefore it works well in either setting.

Overall, the simulation results on heterogeneous models reveal the appealing practical properties of the ANTAC procedure. Our procedure is tuning-free and has superior performance. In particular, it achieves better precision and recall rate than the results from CAPME and cGLASSO using cross validation. Although in terms of the precision-recall curve the better of CAPME and cGLASSO is comparable with our procedure, the optimal sensitivity and specificity generally cannot be obtained through cross-validation.
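To make the contrast with a single global penalty concrete, the following Python sketch applies an entry-wise threshold whose level scales with a plug-in estimate of the asymptotic variance of each ω̂ij. The threshold form used here, the square root of 2ξ0(ω̂iiω̂jj + ω̂ij²) log p / n, is an assumption for illustration (it is not restated in this section), and the function name `adaptive_threshold` is hypothetical.

```python
import numpy as np

def adaptive_threshold(omega_hat, n, xi0=2.0):
    """Entry-wise thresholding with a cutoff proportional to each entry's standard error."""
    p = omega_hat.shape[0]
    d = np.diag(omega_hat)
    # Assumed plug-in variance (times n) of the estimate of omega_ij.
    var = np.outer(d, d) + omega_hat ** 2
    cutoff = np.sqrt(2.0 * xi0 * var * np.log(p) / n)
    support = np.abs(omega_hat) > cutoff
    np.fill_diagonal(support, True)                # always keep the diagonal
    return np.where(support, omega_hat, 0.0), cutoff

# Two entries of equal magnitude 0.5, sitting in blocks with diagonals 1 and 10.
omega_hat = np.zeros((4, 4))
omega_hat[:2, :2] = [[1.0, 0.5], [0.5, 1.0]]
omega_hat[2:, 2:] = [[10.0, 0.5], [0.5, 10.0]]
thresholded, cutoff = adaptive_threshold(omega_hat, n=300)
```

In this toy input the entry 0.5 in the first block clears its cutoff and is kept, while the same value in the second block falls below its much larger cutoff and is set to zero. A single global penalty level could not treat the two entries differently.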

6. Application to an eQTL Study

We apply the ANTAC procedure to a yeast dataset from Smith & Kruglyak (2008) (GEO accession number GSE9376), which consists of 5,493 gene expression probes and 2,956 genotyped markers measured in 109 segregants derived from a cross between BY and RM treated with glucose. We find the proposed method achieves both better interpretability and accuracy in this example.

There are many mechanisms leading to the dependency of genes at the expression level. Among those, the dependency between transcription factors (TFs) and their regulated genes has been intensively investigated. Thus the gene-TF binding information can be used as external biological evidence to validate and interpret the estimation results. Specifically, we used the high-confidence TF binding site (TFBS) profiles from m:Explorer, a database recently compiled using perturbation microarray data, TF-DNA binding profiles and nucleosome positioning measurements (Reimand, Aun, Vilo, Vaquerizas, Sedman & Luscombe 2012).

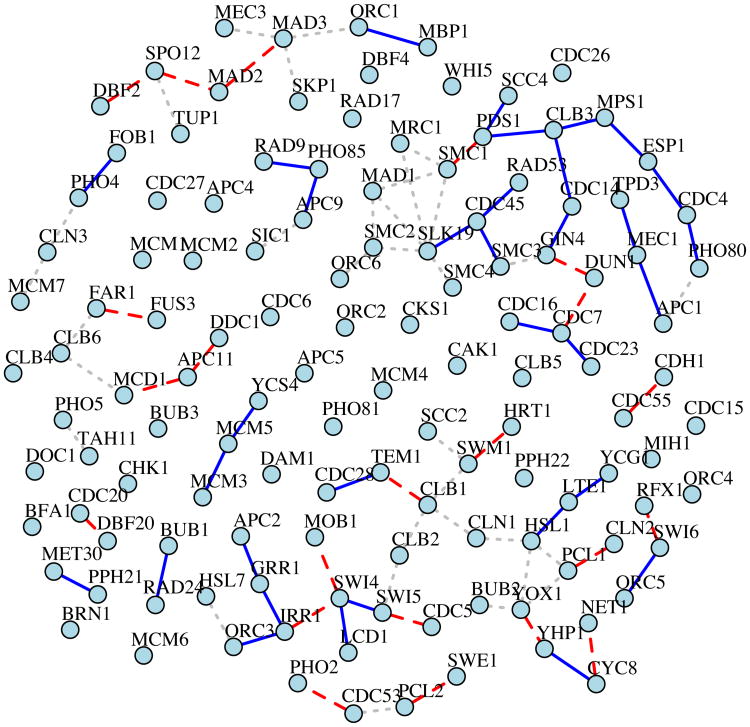

We first focus our analysis on a medium-sized dataset that consists of 121 genes on the yeast cell cycle signaling pathway (from the Kyoto Encyclopedia of Genes and Genomes database (Kanehisa & Goto 2000)). There are 119 markers marginally associated with at least 3 of those 121 genes with a Bonferroni corrected P-value less than 0.01. The parameters λ1 and λ2 for the ANTAC method are set as described in Theorem 3. Using a cutoff of 0.01 on the FDR controlled P-values, 55 edges are identified; the number of edges rises to 200 with a cutoff of 0.05 and to 375 with a cutoff of 0.1. For the purpose of visualization and interpretation, we further focus on the 55 edges resulting from the cutoff of 0.01.

We then check how many of these edges involve at least one TF and how many TF-gene pairs are documented in the m:Explorer database. Among the 55 detected edges, 12 edges involve at least one TF and 2 edges are documented. In addition, we obtain the estimate of the precision matrix from CAPME, where the tuning parameters λ1 = 0.356 and λ2 = 0.5 are chosen by five-fold cross validation. To compare with the results from ANTAC, we select the top 55 edges from the CAPME solution based on the magnitude of partial correlation. Within these 55 edges, 13 edges involve at least one TF and 2 edges are documented. As shown in Figure 6, 22 edges are detected by both methods.

Our method identifies a promising cell cycle related subnetwork featuring CDC14, PDS1, ESP1 and DUN1, connected through GIN4, CLB3 and MPS1. In the budding yeast, CDC14 is a phosphatase that functions essentially in late mitosis. It enables cells to exit mitosis through dephosphorylation and activation of the enemies of CDKs (Wurzenberger & Gerlich 2011). Throughout G1, S/G2 and early mitosis, CDC14 is inactive. The inactivation is partially achieved by PDS1 via its inhibition of an activator, ESP1 (Stegmeier, Visintin, Amon et al. 2002). Moreover, DUN1 is required for the nucleolar localization of CDC14 in DNA damage-arrested yeast cells (Liang & Wang 2007).
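The step from edge estimates to FDR controlled P-values can be sketched as follows. Two assumptions are made for this illustration and are not taken from the text: each edge is tested with a two-sided normal test using the plug-in standard error sqrt((ω̂iiω̂jj + ω̂ij²)/n), and the FDR adjustment is the Benjamini-Hochberg procedure (the text does not name the procedure used). The function names `edge_pvalues` and `bh_adjust` are hypothetical.

```python
import math
import numpy as np

def edge_pvalues(omega_hat, n):
    """Two-sided normal p-values for the off-diagonal entries (upper triangle)."""
    d = np.diag(omega_hat)
    se = np.sqrt((np.outer(d, d) + omega_hat ** 2) / n)   # assumed standard error
    z = omega_hat / se
    iu = np.triu_indices(omega_hat.shape[0], k=1)
    # P(|N(0,1)| > |z|) = erfc(|z| / sqrt(2))
    pvals = np.array([math.erfc(abs(v) / math.sqrt(2.0)) for v in z[iu]])
    return iu, pvals

def bh_adjust(pvals):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = pvals.size
    order = np.argsort(pvals)
    ranked = pvals[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity, starting from the largest p-value.
    ranked = np.minimum.accumulate(ranked[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.minimum(ranked, 1.0)
    return adjusted
```

Under these assumptions, the edges reported at a given cutoff would be those whose adjusted value is at most 0.01, 0.05 or 0.1.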

Figure 6.

Visualization of the network constructed from yeast cell cycle expression data by CAPME and the proposed ANTAC method. For ANTAC, 55 edges are identified using a cutoff of 0.01 on the FDR controlled p-values. For CAPME, top 55 edges are selected based on the magnitude of partial correlation. 22 common edges detected by both methods are shown in dashed lines. Edges only detected by the proposed method are shown in solid lines. CAPME-specific edges are shown in dotted lines.

We then extend the analysis to a larger dataset constructed from GSE9376. Of the 285 TFs documented in the m:Explorer database, expression levels of 20 TFs are measured in GSE9376 with variances greater than 0.25. For these 20 TFs, 875 TF-gene interactions with 377 genes with variances greater than 0.25 are documented in m:Explorer. Applying the same screening strategy as in the previous example, we select 644 genetic markers marginally associated with at least 5 of the 377 genes with a Bonferroni corrected P-value less than 0.01. We apply the proposed ANTAC method and CAPME to this new dataset. For ANTAC, the parameters λ1 and λ2 are set as described in Theorem 3. For CAPME, the tuning parameters λ1 = 0.089 and λ2 = 0.281 are chosen by five-fold cross validation. We use TF-gene interactions documented in m:Explorer as external biological evidence to validate the results.

The results are summarized in Table 5. For ANTAC, 540 edges are identified using a cutoff of 0.05 on the FDR controlled P-values. Within these edges, 67 edges are TF-gene interactions and 44 out of 67 are documented in m:Explorer. In comparison, 8499 nonzero edges are detected by CAPME, where 915 edges are TF-gene interactions and 503 out of 915 are documented. This result is hard to interpret biologically. We further ask which method achieves higher accuracy, measured by concordance with m:Explorer, when the same number of TF-gene interactions is identified. Based on the magnitude of partial correlation, we select the top 771 edges from the CAPME solution, which capture 67 TF-gene interactions. Within these interactions, 38 are documented in m:Explorer. Thus in this example, the proposed ANTAC method achieves both better interpretability and accuracy.

Table 5.

Results for a dataset consisting of 644 markers and 377 genes, which was constructed from GSE9376.

| Method | Solution Criteria | Total # of gene-gene interactions | # of TF-gene interactions | # of TF-gene interactions documented | Documented Proportion |
|---|---|---|---|---|---|
| ANTAC | FDR controlled P-values ≤0.05 | 540 | 67 | 44 | 65% |
| CAPME | Magnitude of partial correlation | 771 | 67 | 38 | 57% |
| CAPME | Nonzero entries | 8499 | 915 | 503 | 55% |

7. Proof of Main Theorems

In this section, we prove the main results, Theorems 1 and 2.

7.1 Proof of Theorem 1

This proof is based on the key lemma, Lemma 5, which is deterministic in nature. We apply Lemma 5 with R = Dbtrue + E replaced by Yj= Xγj + Zj, λ replaced by λ1 and sparsity s replaced by s1. The following lemma is the key to the proof.

Lemma 1

There exist some constants such that for each 1 ≤ j ≤ p,

| (33) |

| (34) |

| (35) |

with probability 1 − o(q−δ1+1).

With the help of the lemma above, it's trivial to finish our proof. In fact, ‖Ẑj − Zj‖2 = ‖X(γj − γ̂j)‖2 and hence Equation (14) immediately follows from result (35). Equations (15) and (16) are obtained by the union bound and Equations (33) and (34) because of the following relationship,

It is then enough to prove Lemma 1 to complete the proof of Theorem 1.

Proof of Lemma 1

The Lemma is an immediate consequence of Lemma 5 applied to Yj= Xγj + Zj with tuning parameter λ1 and sparsity s1. To show the union of Equations (33)-(35) holds with high probability 1 − o(q−δ1+1), we only need to check the following conditions of the Lemma 5 hold with probability at least 1 − o(q−δ1+1),

where we set ξ = 3/ε1 + 1 for the current setting, under Condition 3 and under Condition 3′ for some universal constant CA1 > 0, , and ζ (n, q) = o(1/s1). Let us still define as the standardized X in Lemma 5 of Section 8.

We will show that

which implies

where Ej is the union of I1 to I4. We will first consider and , then , and leave to the last, which relies on the bounds for , 2 ≤ i ≤ 4.

(1). To study and , we need the following Bernstein-type inequality (See e.g. (Vershynin 2010), Section 5.2.4 for the inequality and definitions of norms ‖·‖φ2 and ‖·‖φ1) to control the tail bound for sum of i.i.d. sub-exponential variables,

| (36) |

for t > 0, where Ui are i.i.d. centered sub-exponential variables with parameter ‖Ui‖φ1 ≤ K and c is some universal constant. Notice that θora = ‖Zj‖ /√n with , and is sub-gaussian with parameter by Conditions 2 and 3 with some universal constants cφ2 and Cφ2 (all formulas involving φ1 or φ2 parameter of replace “B” by under Condition 3′ hereafter). The fact that sub-exponential is sub-gaussian squared implies that there exists some universal constant C1 > 0 such that

Note that σjj ∈ [1/M2,M2] by Condition 2 and with some universal constants and . Let and for some large constant CA1. Equation (36) with a sufficiently small constant c0 implies

| (37) |

and

| (38) |
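For reference, a standard form of the Bernstein-type inequality for sub-exponential variables, as found in Vershynin (2010), is reproduced below in the notation of step (1). It is offered as a reading aid for Equation (36); the exact normalization and constants of the paper's display may differ.

```latex
\[
  \mathbb{P}\left( \left| \sum_{i=1}^{n} U_i \right| \ge t n \right)
  \le 2 \exp\left( -c\, n \min\left\{ \frac{t^2}{K^2}, \frac{t}{K} \right\} \right),
  \qquad t > 0 .
\]
```

Here the Ui are independent centered sub-exponential variables with ‖Ui‖φ1 ≤ K and c > 0 is a universal constant. For small t the quadratic term governs the bound, which is the regime used when t is of order the square root of log q / n.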

(2). To study the lower-RE condition of , we essentially need to study . On the event I2, it's easy to see that if satisfies lower-RE with (αx, ζx (n, q)), then satisfies lower-RE with , since and on event I2. Moreover, to study the lower-RE condition, the following lemma implies that we only need to consider the behavior of on sparse vectors.

Lemma 2

For any symmetric matrix Δq×q, suppose |vT Δv| ≤ δ for any unit 2s sparse vector v ∈ ℝq, i.e. ‖v‖ = 1 and , then we have

See Supplementary Lemma 12 in (Loh & Wainwright 2012) for the proof. With a slight abuse of notation, we define Σx = Cov (X(1)). By applying Lemma 2 on and Condition 2, , we know that satisfies lower-RE (αx, ζx (n, q)) with

| (39) |

Provided

| (40) |

which implies that the population covariance matrix Σx and its sample version behave similarly on all 2s1 sparse vectors.

Now we show Equation (40) holds under Conditions 3 and 3′ respectively for a sufficiently large constant L such that the inequality in the event I4 holds. Under Condition 3′, X(1) is jointly sub-gaussian with parameter (M1)1/2 . A routine one-step chaining (or δ-net) argument implies that there exists some constant csg > 0 such that

| (41) |

See e.g. Supplementary Lemma 15 in (Loh & Wainwright 2012) for the proof. Hence by picking small with any fixed but arbitrary large L, the sparsity condition (17) and Equation (41) imply that Equation (40) holds with probability 1 − o (p−δ1).

Under Condition 3, if , Hoeffding's inequality and a union bound imply that

where the norm ‖·‖∞ denotes the entry-wise supnorm and ‖X(1)‖∞ ≤ B (see, e.g. (Massart 2007) Proposition 2.7). Thus with probability 1 − o (q−δ1), we have for any 2s1-sparse vector v with ‖v‖ = 1,

where the last inequality follows from

for any 2s1-sparse vector v. Therefore Equation (40) holds with probability 1 − o (q−δ1) for any arbitrarily large L. If , an involved argument using Dudley's entropy integral and Talagrand's concentration theorem for empirical processes implies (see (Rudelson & Zhou 2013), Theorem 23 and its proof) that Equation (40) holds with probability 1 − o (q−δ1) for any fixed but arbitrarily large L.

Therefore we showed that under Condition 3 or 3′, Equation (40) holds with probability 1 − o (q−δ1) for any arbitrary large L. Consequently, Equation (39) with on event I2 implies that we can pick and sufficiently small ζ such that event I4 holds with probability 1 − o (q−δ1).

(3). Finally we study the probability of event I1. The following tail probability of t distribution is helpful in the analysis.

Proposition 1

Let Tn follow a t distribution with n degrees of freedom. Then there exists εn → 0 as n → ∞ such that for all t > 0

Please refer to (Sun & Zhang 2012) Lemma 1 for the proof. Recall that , where τ defined in Equation (67) satisfies that on . From the definition of ν in Equation (66) we have

where each column of W has norm ‖Wk‖ =√n. Given X, equivalently W, it's not hard to check that we have by the normality of Zj, where t(n−1) is the t distribution with (n − 1) degrees of freedom. From Proposition 1 we have

where the first inequality holds when t2 > 2, and the second inequality follows from the fact for 0 < x < 2. Now let t2 = δ1 log q > 2, and with ξ = 3/ε1 + 1, then we have and

which immediately implies .

7.2 Proof of Theorem 2

The whole proof is based on the results in Theorem 1. In particular with probability 1 − o (p·q−δ1+1) the following events hold,

| (42) |

| (43) |

From now on the analysis is conditioned on the two events above. This proof is also based on the key lemma, Lemma 5. We apply Lemma 5 with R = Dbtrue + E replaced by Ẑm= ẐAcβm + Em for each m ∈ A = {i, j}, λ replaced by λ2 and sparsity s replaced by Cβs2, where Em is defined by the regression model Equation (7) as

| (44) |

and Cβ = 2M2 because the definition of βm in Equation (6) implies it is a weighted sum of two columns of Ω with weight bounded by M2.

To obtain our desired result for each pair k,l ∈ A= {i,j}, it's sufficient for us to bound and separately and then to apply the triangle inequality. The following two lemmas are useful to establish those two bounds.

Lemma 3

There exists some constant Cin > 0 such that with probability 1 − o (q−δ1).

Lemma 4

There exist some constants , 1 ≤ k ≤ 3 such that for each m ∈ A = {i, j},

with probability 1 − o (q−δ2+1).

Before moving on, we point out a fact we will use several times in the proof,

| (45) |

which follows from Equation (6) and Condition 5. Hence we have

| (46) |

for some constant C3 > 0.

To bound the term , we note that for any k,l ∈ A= {i,j},

| (47) |

where we applied Lemma 4 in the last inequality.

Lemma 3, together with Equation (47), immediately implies the desired result (23),

for some constant with probability 1 − o (p · q −δ1+1 + p−δ2+1). Since the spectrum of ΨA,A is bounded below by and above by M2 and the functional is Lipschitz in a neighborhood of ΨA,A for k,l ∈ A, we obtain that Equation (24) is an immediate consequence of Equation (23). Note that is the MLE of ωij in the model with three parameters given n samples. Whenever and , we have . Therefore we have , which immediately implies Equation (25) in Theorem 2,

where Fij is the Fisher information of ωij.

It is then enough to prove Lemma 3 and Lemma 4 to complete the proof of Theorem 2.

Proof of Lemma 3

We show that with probability 1 − o (q−δ1) in this section. By Equation (46), we have

| (48) |

To bound the term , we note that by the definition of ΔZt in Equation (44) there exists some constant C4 such that,

| (49) |

where the last inequality follows from Equations (43) and (45). Since and X are independent, it can be seen that each coordinate of is a sum of n i.i.d. sub-exponential variables with bounded parameter under either Condition 3 or 3′. A union bound with q coordinates and another application of Bernstein inequality in Equation (36) with imply that with probability 1 − o (q−δ1) for some large constant C5 > 0. This fact, together with Equation (49), implies that with probability 1 − o (q−δ1). Similar result holds for . Together with Equation (48), this result completes our claim on and finishes the proof of Lemma 3.

Proof of Lemma 4

Lemma 4 is an immediate consequence of Lemma 5 applied to Ẑm= ẐAcβm+ Em for each m ∈ A = {i,j} with parameter λ2 and sparsity Cβs2. To finish our proof, we check that the following conditions I1 − I4 in Lemma 5 hold with probability 1 − o (p−δ2+1). This part of the proof is similar to the proof of Lemma 1. We thus directly apply the facts already shown in the proof of Lemma 1 whenever possible. Let

where we can set ξ = 3/ε2 +1 for the current setting, and ζ (n, p) = o(1/s2). Let us still define as the standardized ẐAc in the Lemma 5 of Section 8. The strategy is to show that and for i = 2, 3 and 4, which completes our proof.

(1). To study and , we note that , where Zk ∼ N (0, σkkI) and according to Equation (42). Similarly where εm ∼ N (0, ψmmI) and from Equation (46). Noting that ψmm, , we use the same argument as that for in the proof of Lemma 1 to obtain

(2). To study the lower-RE condition of , as in the proof of Lemma 1 and Lemma 2, we essentially need to study and to show the following fact

where λmin (ΣAc,Ac) ≥ 1/M2. Following the same line of the proof in Lemma 1 for the lower-RE condition of with normality assumption on Z and sparsity assumption , we can obtain that with probability 1 − o (p−δ2),

| (50) |

Therefore all we need to show in the current setting is that with probability 1 − o (p−δ2),

| (51) |

To show Equation (51), we notice

To control D2, we find

| (52) |

by Equation (42), and sparsity assumptions (22).

To control D1, we find , which is o(1) with probability 1 − o (p−δ2) by Equation (52) and the following result . Equation (50) implies that with probability 1 − o (p−δ2),

(3). Finally we study the probability of event I1. In the current setting,

Following the same line of the proof for event I1 in Lemma 1, we obtain that with probability 1 − o (p−δ2+1),

Thus to prove event holds with desired probability, we only need to show with probability 1 − o (p−δ2+1),

| (53) |

Equation (46) immediately implies by the sparsity assumptions (22). To study ‖WTΔZm/n‖∞, we obtain that there exists some constant C6 > 0 such that,

| (54) |

where we used for all k on I2 in the first inequality and Equations (42) and (46) in the last inequality. By the sparsity assumptions (22), we have . Thus it's sufficient to show with probability 1 − o (p−δ2+1). In fact,

| (55) |

where the last inequality follows from Equations (43) and (45).

Since each and is independent of X, it can be seen that each entry of is a sum of n i.i.d. sub-exponential variables with finite parameter under either Condition 3 or 3′. A union bound with pq entries and an application of Bernstein inequality in Equation (36) with imply that with probability 1 − o ((pq)−δ1) for some large constant C7 > 0. This result, together with Equation (55), implies that with probability 1 − o (p−δ2+1). Now we finish the proof of ‖WTΔZm/n‖∞ = o(λ2) by Equation (54) and hence the proof of the Equation (53) with probability 1 − o (p−δ2+1). This completes our proof of Lemma 4.

8. A Key Lemma

The scaled lasso lemma introduced in this section is deterministic in nature. It can be applied in different settings in which the assumptions on the design matrix, response variables and noise distribution may vary, as long as the conditions of the lemma are satisfied. Therefore it is a very useful building block in the analysis of many different problems. In particular, the main theorems of both steps are based on this key lemma. The proof of this lemma is similar to that in (Sun & Zhang 2012) and (Ren et al. 2013), but we use the restricted eigenvalue condition for the Gram matrix instead of the CIF condition to easily adapt to different settings of the design matrix in our probabilistic analysis.

Consider the following general scaled l1 penalized regression problem. Denote the n by p0 dimensional design matrix by D = (D1, …, Dp0), the n dimensional response variable R = (R1, …, Rn)T and the noise variable E = (E1, …, En)T. The scaled lasso estimator with tuning parameter λ of the regression

| (56) |

is defined as

| (57) |

where the sparsity s of the true coefficient btrue is defined as follows,

| (58) |

which is a generalization of exact sparseness (the number of nonzero entries).
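To give a computational picture of the estimator defined above, here is a minimal Python sketch of one common way to compute a scaled lasso fit: alternate between updating the noise level and solving an ordinary lasso with penalty λθ. The objective is assumed to be the Sun & Zhang (2012) form, ‖R − Db‖²/(2nθ) + θ/2 + λ Σk (‖Dk‖/√n)|bk|; this is an assumption about Equation (57), and the alternating scheme is not claimed to be the implementation used in this paper. The function names are hypothetical.

```python
import numpy as np

def soft_threshold(x, t):
    return np.sign(x) * max(abs(x) - t, 0.0)

def lasso_cd(W, R, mu, d0, n_sweeps=200, tol=1e-8):
    """Coordinate descent for ||R - W d||^2 / (2n) + mu * ||d||_1, with ||W_k||^2 = n."""
    n, p = W.shape
    d = d0.copy()
    resid = R - W @ d
    for _ in range(n_sweeps):
        max_change = 0.0
        for k in range(p):
            old = d[k]
            rho = W[:, k] @ resid / n + old      # uses the column norm sqrt(n)
            new = soft_threshold(rho, mu)
            if new != old:
                resid -= W[:, k] * (new - old)
                d[k] = new
                max_change = max(max_change, abs(new - old))
        if max_change < tol:
            break
    return d

def scaled_lasso(D, R, lam, n_iter=50, tol=1e-6):
    """Alternate theta = ||R - W d|| / sqrt(n) and a lasso step with penalty lam * theta."""
    n, p = D.shape
    col_norms = np.linalg.norm(D, axis=0)
    W = D * (np.sqrt(n) / col_norms)             # standardized design
    d = np.zeros(p)
    theta = np.linalg.norm(R) / np.sqrt(n)       # initial noise level
    for _ in range(n_iter):
        d = lasso_cd(W, R, lam * theta, d)
        theta_new = np.linalg.norm(R - W @ d) / np.sqrt(n)
        converged = abs(theta_new - theta) < tol * theta
        theta = theta_new
        if converged:
            break
    b = d * np.sqrt(n) / col_norms               # back to the original scale
    return b, theta
```

The noise level and the coefficients are estimated jointly, so no cross validation is needed once λ is fixed by a formula. This is the property that makes the overall procedure tuning-free.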

We first normalize each column of the design matrix D to make the analysis cleaner by setting

| (59) |

and then rewrite the model (56) and the penalized procedure (57) as follows,

| (60) |

and

| (61) |

| (62) |

where the true coefficients and the estimator of the standardized scaled lasso regression (61) are and respectively.

For this standardized scaled lasso regression, we introduce some important notation, including the lower-RE condition on the gram matrix . The oracle estimator θora of the noise level can be defined as

| (63) |

Let |K| be the cardinality of an index set K. Define T as the index set of those large coefficients of dtrue,

| (64) |

We say the gram matrix satisfies a lower-RE condition with curvature α1 > 0 and tolerance ζ (n, p0) > 0 if

| (65) |

Moreover, we define

| (66) |

| (67) |

with constants ξ > 1, A1 and A2 introduced in Lemma 5. C1( , 4ξ A1, α1) is a constant depending on , ξA1 and α1 with its definition in Equation (76). It is bounded above if and 4ξA1 are bounded above and α1 is bounded below by some universal constants, respectively. With the notation we can state the key lemma as follows.

Lemma 5

Consider the scaled l1 penalized regression procedure (57). Whenever there exist constants ξ > 1, A1 and A2 such that the following conditions are satisfied

for some ξ > 1 and τ ≤ 1/2 defined in (67);

for all k;

θora ∈ [1/A2,A2];

satisfies the lower-RE condition with α1 and ζ (n, p0) such that

| (68) |

we have the following deterministic bounds

| (69) |

| (70) |

| (71) |

| (72) |

| (73) |

where constants Ci (i = 1, …, 5) only depend on A1, A2, α1 and ξ.

Figure 2.

The ROC curves for different methods. For GLASSO or cGLASSO, the ROC curve is obtained by varying its tuning parameter. For CAPME, λ1 is fixed as the value selected by the cross validation and the ROC curve is obtained by varying λ2. For ANTAC, the ROC curve is obtained by varying the cut-off on P-values.

Figure 5.

The precision-recall curves for the “Magnified Block” model and the “Heterogeneous Product” model using different methods. For GLASSO or cGLASSO, the curve is obtained by varying its tuning parameter. For CAPME, λ1 is fixed as the value selected by the cross validation and the curve is obtained by varying λ2. For ANTAC, the precision-recall curve is obtained by varying the threshold level ξ0. The points on the curves correspond to the results obtained by cross-validation for GLASSO, cGLASSO and CAPME and by using the theoretical threshold level ξ0 = 2 for the tuning-free ANTAC.

Acknowledgments

We thank Yale University Biomedical High Performance Computing Center for computing resources, and NIH grant RR19895 and RR029676-01, which funded the instrumentation. The research of Zhao Ren and Harrison Zhou was supported in part by NSF Career Award DMS-0645676, NSF FRG Grant DMS-0854975 and NSF Grant DMS-1209191.

9. Appendix

9.1 Proof of Lemma 5

The function Lλ (d, θ) in Equation (61) is jointly convex in (d, θ). For fixed θ > 0, denote the minimizer of Lλ (d, θ) over all d ∈ ℝpo by d̂ (θλ), a function of θλ, i.e.,

| (74) |

then if we knew θ̂ in the solution of Equation (62), the solution for the equation is {d̂ (θ̂λ), θ̂}. We recognize that d̂ (θ̂λ) is just the standard lasso with the penalty θ̂λ; however, we do not know the estimator θ̂. The strategy of our analysis is to first show that θ̂ is very close to its oracle estimator θora; the standard lasso analysis then implies the desired results, Equations (70)-(73), under the assumption that θ̂/θora = 1 + O(λ²s). For the standard lasso analysis, some kind of regularity condition is assumed on the design matrix WTW/n in the regression literature. In this paper we use the lower-RE condition, which is one of the most general conditions.

Let μ = λθ. From the Karush-Kuhn-Tucker condition, d̂ (μ) is the solution to the Equation (74) if and only if

| (75) |
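For orientation, the Karush-Kuhn-Tucker conditions of a lasso problem with penalty μ take the following standard form. This assumes that the objective in Equation (74), for fixed θ, is equivalent to ‖R − Wd‖²/(2n) + μ‖d‖₁ with μ = λθ; the paper's Equation (75) may be written differently.

```latex
\[
  \frac{1}{n} W_k^{T}\bigl(R - W \hat d(\mu)\bigr)
    = \mu \,\operatorname{sgn}\bigl(\hat d_k(\mu)\bigr)
  \quad \text{if } \hat d_k(\mu) \neq 0,
  \qquad
  \frac{1}{n} W_k^{T}\bigl(R - W \hat d(\mu)\bigr) \in \mu\,[-1, 1]
  \quad \text{if } \hat d_k(\mu) = 0 .
\]
```

In words, the correlation between each standardized column and the residual equals μ in absolute value on the active set and is at most μ elsewhere. This is the property used to bound the cross term in the proof of Proposition 2.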

Let C2 (a1, a2) and C1 (a11, a12, a2) be constants depending on a1, a2 and a11, a12, a2, respectively. The constant C2 is bounded above if a1 is bounded above and a2 is bounded below by constants, respectively. The constant C1 is bounded above whenever a11 and a12 are bounded above and a2 is bounded below by constants. The explicit formulas of C1 and C2 are given as follows,

| (76) |

The following propositions are helpful to establish our result. The proof is given in Sections 9.2 and 9.3.

Proposition 2

The sparsity s is defined in Equation (58). For any ξ > 1, assuming and conditions 2, 4 in Lemma 5 hold, we have

| (77) |

| (78) |

| (79) |

Proposition 3

Let {d̂, θ̂} be the solution of the scaled lasso (62). For any ξ > 1, assuming conditions 1 − 4 in Lemma 5 hold, then we have

| (80) |

Now we finish our proof with these two propositions. According to Conditions 1 − 4 in Lemma 5, Proposition 3 implies with μ = λθ̂ and Proposition 2 further implies that there exist some constants c1, c2 and c3 such that

Note that . Thus the constants c1, c2 and c3 depend only on A1, A2, α1 and ξ. We now transfer the results above for the standardized scaled lasso (62) back to the general scaled lasso (57) through the bounded scaling constants, and immediately obtain the desired results (70)-(72). Result (69) is an immediate consequence of Proposition 3, and Result (73) is an immediate consequence of assumptions 1-3.

9.2 Proof of Proposition 2

Notice that

| (81) |

| (82) |

where the first inequality follows from the KKT conditions (75).

Now define Δ = d̂(μ) − dtrue. Equation (81) also implies the desired inequality (79)

| (83) |

We will first show that

| (84) |

then we are able to apply the lower-RE condition (65) to derive the desired results.

To show Equation (84), we note that our assumption with Equation (82) implies

| (85) |

Suppose that

| (86) |

then the inequality (85) becomes

which implies

| (87) |

Therefore the complement of inequality (86) and Equation (87) together finish our proof of Equation (84).

Before proceeding, we point out two facts that will be used several times below. Note that the sparsity s is defined in terms of the true coefficients btrue in Equation (58) before standardization, whereas the index set T is defined in terms of dtrue in Equation (64) after standardization. Condition 2 implies that this standardization step changes the sparsity by at most a factor A1. Hence it is not hard to see that |T| ≤ A1s and ‖(dtrue)Tc‖1 ≤ A1λs.

Now we are able to apply the lower-RE condition of to Equation (83) and obtain that

where in the second, third and last inequalities we applied the facts (84), , |T| ≤ A1s, ‖(dtrue)Tc‖1 ≤ A1λs and . Moreover, by applying the same facts again, we have

The above two inequalities together with Equation (83) imply that

Define . Some algebra on this quadratic inequality yields the bound on Δ in the l2 norm

Combining this fact with Equation (84), we finally obtain the bound (77) in the l1 norm

9.3 Proof of Proposition 3

For τ defined in Equation (67), we need to show that θ̂ ≥ θora (1 − τ) and θ̂ ≤ θora (1 + τ) on the event . Let d̂ (θλ) be the solution of (74) as a function of θ, then

| (88) |

since for all d̂k (θλ) ≠ 0, and for all d̂k (θλ) = 0, which follows from the fact that {k : d̂k (θλ) = 0 } is unchanged in a neighborhood of θ for almost all θ. Equation (88) plays a key role in the proof.
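The chain-rule step behind this kind of identity can be written out as follows. This is a sketch under the assumption that the objective has the usual scaled lasso form $L_\lambda(d,\theta) = \|Y - Wd\|^2/(2n\theta) + \theta/2 + \lambda\|d\|_1$, with $\|\cdot\|$ the Euclidean norm. Each term of the sum vanishes: for an active coordinate the partial derivative of $L_\lambda$ in $d_k$ is zero at the minimizer, and for an inactive coordinate $\hat d_k(\theta\lambda)$ stays at zero in a neighborhood of $\theta$.

```latex
% Assumed objective (standard scaled lasso form, an assumption here):
%   L_\lambda(d,\theta) = \|Y - Wd\|^2/(2n\theta) + \theta/2 + \lambda\|d\|_1
\begin{align*}
\frac{\partial}{\partial\theta}\,
  L_\lambda\bigl(\hat d(\theta\lambda),\theta\bigr)
&= \frac{\partial L_\lambda}{\partial\theta}(d,\theta)
   \Big|_{d=\hat d(\theta\lambda)}
 + \sum_{k}
   \frac{\partial L_\lambda}{\partial d_k}(d,\theta)
   \Big|_{d=\hat d(\theta\lambda)}
   \cdot
   \frac{\partial \hat d_k(\theta\lambda)}{\partial\theta} \\
&= \frac{1}{2}
 - \frac{\bigl\|Y - W\hat d(\theta\lambda)\bigr\|^{2}}{2n\theta^{2}}
 + 0 .
\end{align*}
```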

(1). To show that θ̂ ≥ θora (1 − τ) it's enough to show

where t1 = θora (1 − τ), due to the strict convexity of the objective function Lλ (d, θ) in θ. Equation (88) implies that

| (89) |

On the event we have

The last inequality follows from the definition of τ and the l1 error bound in Equation (77) of Proposition 2. Note that for λ²s sufficiently small, we have τ < 1/2. In fact, although ‖dtrue − d̂ (t1λ)‖1 also depends on τ, our choice of τ is well-defined and is larger than .

(2). Let t2 = θora (1 + τ). To show the other side θ̂ ≤ θora (1 + τ) it is enough to show

Equation (88) implies that on the event we have

where the second-to-last inequality is due to the fact that τ < 1/2, and the last inequality follows from the definition of τ and the l1 error bound in Equation (77) of Proposition 2. Again, our choice of τ is well-defined and is larger than .

Contributor Information

Mengjie Chen, Program of Computational Biology and Bioinformatics, Yale University, New Haven, CT 06520, USA.

Zhao Ren, Department of Statistics, Yale University, New Haven, CT 06520, USA.

Hongyu Zhao, Department of Biostatistics, School of Public Health, Yale University, New Haven, CT 06520, USA.

Harrison Zhou, Email: harrison.zhou@yale.edu, Department of Statistics, Yale University, New Haven, CT 06520, USA.

References

- Bickel PJ, Levina E. Regularized estimation of large covariance matrices. Ann Statist. 2008a;36(1):199–227.

- Bickel PJ, Levina E. Covariance regularization by thresholding. Ann Statist. 2008b;36(6):2577–2604.

- Cai TT, Li H, Liu W, Xie J. Covariate-adjusted precision matrix estimation with an application in genetical genomics. Biometrika. 2013;100(1):139–156. doi: 10.1093/biomet/ass058.

- Cai TT, Liu W, Zhou HH. Estimating Sparse Precision Matrix: Optimal Rates of Convergence and Adaptive Estimation. Manuscript. 2012.

- Cai TT, Zhang CH, Zhou HH. Optimal rates of convergence for covariance matrix estimation. Ann Statist. 2010;38(4):2118–2144.

- Cai TT, Zhou HH. Optimal rates of convergence for sparse covariance matrix estimation. Ann Statist. 2012;40(5):2389–2420.

- Cheng J, Levina E, Wang P, Zhu J. Sparse Ising models with covariates. arXiv preprint arXiv:1209.6342. 2012.

- El Karoui N. Operator norm consistent estimation of large dimensional sparse covariance matrices. Ann Statist. 2008;36(6):2717–2756.

- Friedman J, Hastie T, Tibshirani R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics. 2008;9(3):432–441. doi: 10.1093/biostatistics/kxm045.

- Kanehisa M, Goto S. KEGG: Kyoto encyclopedia of genes and genomes. Nucleic Acids Research. 2000;28(1):27–30. doi: 10.1093/nar/28.1.27.

- Lam C, Fan J. Sparsistency and rates of convergence in large covariance matrix estimation. Ann Statist. 2009;37(6B):4254–4278. doi: 10.1214/09-AOS720.

- Lauritzen SL. Graphical Models. Oxford University Press; 1996.

- Li B, Chun H, Zhao H. Sparse estimation of conditional graphical models with application to gene networks. Journal of the American Statistical Association. 2012;107(497):152–167. doi: 10.1080/01621459.2011.644498.

- Liang F, Wang Y. DNA damage checkpoints inhibit mitotic exit by two different mechanisms. Molecular and Cellular Biology. 2007;27(14):5067–5078. doi: 10.1128/MCB.00095-07.

- Loh P, Wainwright M. High-dimensional regression with noisy and missing data: Provable guarantees with nonconvexity. Ann Statist. 2012;40(3):1637–1664.

- Massart P. Concentration inequalities and model selection. Springer Verlag; 2007.

- Obozinski G, Wainwright MJ, Jordan MI. Support union recovery in high-dimensional multivariate regression. The Annals of Statistics. 2011;39(1):1–47.

- Peng J, Zhu J, Bergamaschi A, Han W, Noh DY, Pollack JR, Wang P. Regularized multivariate regression for identifying master predictors with application to integrative genomics study of breast cancer. The Annals of Applied Statistics. 2010;4(1):53–77. doi: 10.1214/09-AOAS271SUPP.

- Reimand J, Aun A, Vilo J, Vaquerizas JM, Sedman J, Luscombe NM. m:Explorer: multinomial regression models reveal positive and negative regulators of longevity in yeast quiescence. Genome Biology. 2012;13(6):R55. doi: 10.1186/gb-2012-13-6-r55.

- Ren Z, Sun T, Zhang CH, Zhou HH. Asymptotic Normality and Optimalities in Estimation of Large Gaussian Graphical Model. Manuscript. 2013.

- Rockman MV, Kruglyak L. Genetics of global gene expression. Nature Reviews Genetics. 2006;7(11):862–872. doi: 10.1038/nrg1964.

- Rudelson M, Zhou S. Reconstruction from anisotropic random measurements. IEEE Trans Inf Theory. 2013;59(6):3434–3447.

- Smith EN, Kruglyak L. Gene–environment interaction in yeast gene expression. PLoS Biology. 2008;6(4):e83. doi: 10.1371/journal.pbio.0060083.

- Stegmeier F, Visintin R, Amon A, et al. Separase, polo kinase, the kinetochore protein Slk19, and Spo12 function in a network that controls Cdc14 localization during early anaphase. Cell. 2002;108(2):207. doi: 10.1016/s0092-8674(02)00618-9.

- Sun T, Zhang CH. Scaled Sparse Linear Regression. Manuscript. 2012.

- Vershynin R. Introduction to the non-asymptotic analysis of random matrices. arXiv preprint arXiv:1011.3027. 2010.

- Wurzenberger C, Gerlich DW. Phosphatases: providing safe passage through mitotic exit. Nature Reviews Molecular Cell Biology. 2011;12(8):469–482. doi: 10.1038/nrm3149.

- Yin J, Li H. A sparse conditional Gaussian graphical model for analysis of genetical genomics data. The Annals of Applied Statistics. 2011;5(4):2630. doi: 10.1214/11-AOAS494.

- Yin J, Li H. Adjusting for high-dimensional covariates in sparse precision matrix estimation by l1-penalization. Journal of Multivariate Analysis. 2013;116:365–381. doi: 10.1016/j.jmva.2013.01.005.