Abstract

Left ventrolateral prefrontal cortex (VLPFC) has been implicated in both integration and conflict resolution in sentence comprehension. Most evidence in favor of the integration account comes from processing ambiguous or anomalous sentences, which also poses a demand for conflict resolution. In two eye-tracking experiments, we studied the role of VLPFC in integration when demands for conflict resolution were minimal. Two closely matched groups of individuals with chronic post-stroke aphasia were tested: the Anterior group had damage to left VLPFC, whereas the Posterior group had left temporo-parietal damage. In Experiment 1, a semantic cue (e.g., “She will eat the apple”) uniquely marked the target (apple) among three distractors that were incompatible with the verb. In Experiment 2, phonological cues (e.g., “She will see an eagle.” / “She will see a bear.”) uniquely marked the target among three distractors whose onsets were incompatible with the cue (e.g., all beginning with consonants when the target started with a vowel). In both experiments, control conditions had a similar format but contained no semantic or phonological contextual information useful for target integration (e.g., the verb “see” and the determiner “the”). All individuals in the Anterior group were slower than individuals in the Posterior group in using both types of contextual information to locate the target. These results suggest a role for VLPFC in integration beyond conflict resolution. We discuss a framework that accommodates both integration and conflict resolution.

Keywords: Sentence comprehension, eye-tracking, ventrolateral prefrontal cortex, inferior frontal gyrus

Introduction

Left ventrolateral prefrontal cortex (VLPFC) has been implicated in numerous processes, such as semantic processing (e.g., Buckner et al., 1995; Démonet et al., 1992; Fiez, 1997; Martin, Haxby, Lalonde, Wiggs, & Ungerleider, 1995; Petersen, Fox, Snyder, & Raichle, 1990; Raichle et al., 1994), syntactic processing (Ben-Shachar, Hendler, Kahn, Ben-Bashat, & Grodzinsky, 2003; Embick, Marantz, Miyashita, O’Neil, & Sakai, 2000; Grodzinsky, 2000), phonological segmentation and sequencing (Démonet et al., 1992; Newman, Twieg, & Carpenter, 2001; Price et al., 1994; Zatorre, Evans, Meyer, & Gjedde, 1992), and phoneme-to-grapheme conversion processes (e.g., Fiebach, Friederici, Müller, & Von Cramon, 2002), among others. Some have also suggested a domain-general role for this region in processes such as temporal sequencing, regardless of the specific stimulus type (Gelfand & Bookheimer, 2003).

The present work investigates the role of VLPFC in sentence comprehension, which has most often been characterized as either semantic integration (e.g., Hagoort, 2005) or conflict resolution (e.g., Novick, Trueswell, & Thompson-Schill, 2005; Nozari & Thompson-Schill, 2015). Semantic integration refers to a process whereby the representation of the incoming word is bound to the representation constructed from previous words in the sentence (e.g., Hagoort, 2005). Conflict resolution refers to an executive operation through which processing is biased towards relevant and away from irrelevant information (e.g., Nozari & Thompson-Schill, 2013). These two proposals are by no means mutually exclusive. If at any point during the integration process there are competing representations (e.g., when one meaning of a homophone must be selected; Bedny, McGill, & Thompson-Schill, 2008; Hagoort, 2005; Lau, Phillips, & Poeppel, 2008), conflict resolution is required. However, integration would still be needed for sentence comprehension even in the absence of strong competition. This study examines whether VLPFC has a role in semantic integration when competition is controlled for.

VLPFC and conflict resolution

As discussed above, the conflict resolution account proposes a domain-general role for VLPFC in resolving competition between multiple incompatible representations of a stimulus by biasing processing toward task- or context-appropriate information (Thothathiri, Kim, Trueswell, & Thompson-Schill, 2012). Such biasing may be needed as part of semantic integration during sentence comprehension. For example, sentences containing ambiguous words, which can activate conflicting meanings, elicit VLPFC activation (e.g., Rodd, Davis, & Johnsrude, 2005; Rodd, Johnsrude, & Davis, 2012; Rodd, Longe, Randall, & Tyler, 2010; Zempleni, Renken, Hoeks, Hoogduin, & Stowe, 2007). Similarly, VLPFC is activated by garden-path sentences (January, Trueswell, & Thompson-Schill, 2009; Novick, Kan, Trueswell, & Thompson-Schill, 2009; Novick et al., 2005), which can activate conflicting syntactic trees. The idea is that VLPFC starts to bias processing at the moment the parser encounters an ambiguity and continues to update the bias as more information accumulates. If the parser is biased towards the incorrect interpretation, the later the disambiguating information arrives, the more work is needed to shift the competition in favor of the alternative meaning, and the greater the VLPFC activation should be. Thus, VLPFC activation should increase with the distance between the point of ambiguity and the point of disambiguation. In agreement with this prediction, VLPFC activation is greater when disambiguating information comes later rather than earlier in a sentence (Fiebach, Schlesewsky, Lohmann, Von Cramon, & Friederici, 2005). Also, when the relative timing of an ambiguous word and the disambiguating information is manipulated, VLPFC activation is induced both by the ambiguous word and by the disambiguating information, the two points in the sentence where biasing competition is necessary (Rodd, Johnsrude, & Davis, 2012).

Nozari and Thompson-Schill (2015) reviewed a large body of literature linking VLPFC to comprehension of sentences with syntactic complexity, ambiguity, and anomaly, and reasoned that all such cases require resolution of conflict between competing representations (see also Kaan & Swaab, 2002). However, all of these cases fit the semantic integration account as well: the goal of selecting the relevant information is to construct a coherent representation that conveys an unambiguous message. Thus, the two proposals cannot be distinguished using experiments in which sentence comprehension requires conflict resolution. Validating the conflict resolution proposal requires demonstrating that VLPFC is involved in cases where integration into a sentential context is not relevant. There are numerous examples of this in the literature, some of which we review below.

An early demonstration of VLPFC activation outside of sentence comprehension was provided by three experiments reported by Thompson-Schill, D’Esposito, Aguirre, and Farah (1997). VLPFC was more strongly activated (1) when a picture (e.g., car) had to be matched to an attribute (e.g., “expensive”) than to its name (“car”), (2) when the similarity of items had to be judged on a specific feature (e.g., the feature “white” for judging the similarity between tooth, bone, and tongue) while ignoring other features, than when judgments were based on global similarity, and (3) when verbs had to be generated in response to items associated with many possible verbs (e.g., cat → eat, meow, play, etc.) than to items strongly associated with a single verb (e.g., scissors → cut). Numerous other studies have also shown VLPFC activation outside the domain of sentence comprehension, including living/nonliving classification (Demb et al., 1995; Gabrieli et al., 1996; Kapur et al., 1994), feature-based similarity judgment (e.g., Whitney, Kirk, O’Sullivan, Lambon Ralph, & Jefferies, 2011), category-based verbal fluency (Basho, Palmer, Rubio, Wulfeck, & Müller, 2007; Birn et al., 2010), and Stroop and working memory tasks (Milham, Banich, & Barad, 2003; Nelson, Reuter-Lorenz, Sylvester, Jonides, & Smith, 2003). Moreover, while certain regions of lateral PFC (LPFC) are sensitive to stimulus type, the pattern of activity for spatial and verbal information is indistinguishable along LPFC’s rostro-caudal axis (e.g., Bahlmann, Blumenfeld, & D’Esposito, 2014), pointing to some degree of domain-generality of this area in carrying out executive control (e.g., Fedorenko & Thompson-Schill, 2014).

In summary, the activation of VLPFC during a variety of tasks and across various modalities builds a strong case for its involvement in a domain-general executive function, one that we have argued is biasing competition. This naturally extends to processing sentences in which conflict resolution is frequently required, hence explaining why this region would be activated when individuals attempt to comprehend sentences with semantic or syntactic anomaly or ambiguity. The critical question is whether VLPFC has any role beyond this in sentence comprehension.

VLPFC and integration

The bulk of evidence for the role of VLPFC in integration comes from studies showing the region’s increased activity when a sentence contains an anomaly (Hagoort, Hald, Bastiaansen, & Petersson, 2004; Kiehl, Laurens, & Liddle, 2002; Kuperberg, 2007; Kuperberg et al., 2000; Kuperberg, Caplan, Sitnikova, Eddy, & Holcomb, 2006; Kuperberg, Sitnikova, & Lakshmanan, 2008; Kuperberg, Holcomb, et al., 2003; Kuperberg, Sitnikova, Caplan, & Holcomb, 2003; Newman et al., 2001; Ni et al., 2000). The type of anomaly does not seem to be critical for VLPFC activation. Although they differ in their EEG signatures, syntactic violations (e.g., “at breakfast the boys would eats…”), semantic violations in the absence of syntactic violations (e.g., “at breakfast the eggs would eat…”), violations of world knowledge (e.g., “The Dutch trains are white…”), and unexpected events (e.g., “…at breakfast the boys would plant…”) have all been shown to activate VLPFC (e.g., Kuperberg et al., 2008).

Anomalies need not be confined to the linguistic system to recruit VLPFC. Willems, Özyürek, and Hagoort (2007) showed that VLPFC responded to mismatch not only within the linguistic domain (e.g., the Dutch version of “He should not forget the items he hit on the shopping list.”), but also to a mismatch between the linguistic and gestural information (e.g., watching the hitting action while listening to a linguistically sound sentence such as “He should not forget the items he wrote on the shopping list.”). In the same vein, Tesink et al. (2009) showed bilateral activation of VLPFC when the semantic content of the sentence did not match the speaker’s characteristics such as age, sex and social background implied by the speaker’s voice. For example, although “Every evening I drink a glass of wine before going to bed” is semantically and syntactically sound, it is unexpected from a child.

As discussed earlier, the increased activation of VLPFC in anomalous vs. correct sentences is also compatible with a conflict resolution account, because when the expected and the actual outcomes clash, two representations compete for selection. A few studies claim that VLPFC activation is not limited to cases with conflicting information. For example, VLPFC activation was also observed when the speaker’s characteristics matched the content of the sentence (Tesink et al., 2009, Fig. 1). Note, however, that this claim is based on a comparison between processing non-anomalous sentences and rest. It is therefore hard to argue that VLPFC activation during processing of such sentences reflects integration specifically, as opposed to any number of other processes involved in sentence comprehension.

Figure 1.

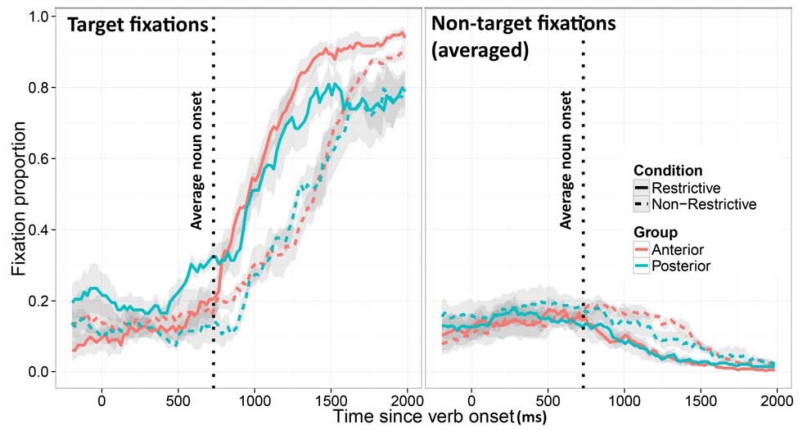

Average fixation proportions (±SE) to the target (left panel) and the three distractors (right panel) in the Restrictive and Non-restrictive conditions for the Anterior and Posterior groups in Experiment 1.

Current study

The current study evaluated whether VLPFC has a role in semantic integration beyond conflict resolution. The first step is to operationalize integration. As stated earlier, integration has usually been defined as binding of the representation of the incoming word to the representation constructed from previous words in the sentence (e.g., Hagoort, 2005). This definition can be interpreted in two ways. (a) The definition is literal, in which case “binding” really means attaching two representations by rapidly establishing a connection between them, something PFC is known for (e.g., Dehaene, Kerszberg, & Changeux, 1998; Stokes, 2015). In this sense, anticipation would be the gold-standard test for binding: if a word’s representation is activated before the word itself reaches sensory processing, it must have been activated through binding with prior information. (b) The definition is metaphorical, and “binding” stands for a host of processes that facilitate extraction of a complete message from the parts of the sentence. This interpretation, too, requires rapid retrieval of lexical items and access to their semantics, so anticipation would again be an excellent test of this ability.

More generally, while comprehension is not limited to anticipatory processing, anticipation contributes substantially to rapid integration and comprehension, at least under circumstances where anticipated outcomes match the actual outcomes (e.g., Altmann & Kamide, 1999). Thus, we investigated whether VLPFC has a role in using contextual information to more quickly activate the representations compatible with such information, which would in turn lead to facilitated integration. We identified four individuals who had damage to left VLPFC following left hemisphere stroke, mostly sparing other frontal regions and fully sparing temporo-parietal regions (the Anterior group), and compared them to three individuals with lesions to the temporo-parietal cortex that spared the prefrontal regions (the Posterior group). The participants heard a short simple sentence and looked at four pictures on the four corners of the screen, one of which was the target of the sentence. Their eyes were tracked during each trial in order to obtain an implicit, temporally-sensitive measure of online sentence comprehension. In two experiments, we manipulated two types of contextual cues and tested the difference between the two participant groups in their ability to use these cues to rapidly locate the target picture.

Experiment 1 manipulated semantic cues. Participants heard a sentence like “She will eat the apple.” In the experimental (Restrictive) condition, the verb was compatible with only one referent (e.g., “eat”, when the four pictures were apple, pen, shirt, igloo). Earlier findings showed that neurologically-intact adults can use the information contained in the restrictive verbs to locate the target before the noun is spoken (e.g., Altmann & Kamide, 1999; Kamide, Altmann, & Haywood, 2003). We tested whether the Anterior and Posterior groups differed in this ability. In order to rule out differences in other processes, such as simultaneous processing of the sentence and the visual scene, focusing on the visual display, or selecting one of the four pictures, we included a control (Non-restrictive) condition. This condition was similar to the Restrictive condition, except that the verb was equally compatible with all four alternatives (e.g., “see”). Therefore, while identical in all other aspects to the Restrictive condition, the control condition did not provide contextual information that could be used to make predictions. We compared performance in the two patient groups after correction for the baseline abilities captured by the control condition, which left us with a pure measure of context-based prediction/integration, uncontaminated by conflict-resolution demands of sentence comprehension.

In Experiment 2, we tested the same hypothesis using phonological cues. Past research has shown that participants quickly use phonological information to locate the correct target. For example, upon hearing a certain onset (e.g., /k/), multiple words that start with that onset compete for selection (Allopenna, Magnuson, & Tanenhaus, 1998; Zwitserlood & Schriefers, 1995) until further cues (e.g., the next phoneme) constrain competition by eliminating some of the options. Salverda, Dahan, and McQueen (2003) showed that even subtle prosodic cues constrained competition: listeners looked preferentially at bisyllabic words when the vowel duration was consistent with such words. Similarly, Dahan, Magnuson, Tanenhaus, and Hogan (2001) showed that misleading coarticulatory cues to the final consonant (created by cross-splicing the initial segments of words like NECK and NET) reliably affected competition.

All sentences in Experiment 2 had the verb “see”, so no contextual information was conveyed by the verb. The manipulation consisted of using either (1) targets that started with a vowel among distractors that started with consonants (e.g., eagle, shirt, rake, cherry), or (2) targets that started with a consonant among distractors that started with a vowel (e.g., shirt, eagle, orange, anchor). In the experimental (Restrictive) condition, the sentence had an indefinite article (a or an), which unambiguously cued the target because the onset of the target differed from those of the three distractors (e.g., when hearing “an”, the only possible referent from the set {eagle, shirt, rake, cherry} is “eagle”). To account for baseline differences, a control (Non-restrictive) condition was used in which the sentence was produced with the definite article “the”. As in Experiment 1, we were interested in the difference between the Anterior and Posterior groups in their efficient use of the contextual cues, after accounting for possible differences in other abilities through the control conditions. To further minimize task demands, which can confound individual differences (e.g., due to differences in motor control impairment), the participants were simply asked to look at the screen while listening to sentences and were not required to make any overt response.
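The logic of the article cue can be made concrete with a small filter over the four-picture display. This is a minimal sketch, not the authors' materials code: the `Picture` type and its `vowel_onset` field are hypothetical, and the field is assumed to encode the spoken (phonological) onset rather than the spelling, since “a”/“an” depend on pronunciation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Picture:
    name: str
    vowel_onset: bool  # hypothetical field: spoken onset is a vowel (not spelling)


def compatible_referents(article, display):
    """Return the pictures whose onset is compatible with the heard article.

    "an" requires a vowel onset, "a" a consonant onset, and "the" is
    compatible with every picture (the Non-restrictive control).
    """
    if article == "an":
        return [p for p in display if p.vowel_onset]
    if article == "a":
        return [p for p in display if not p.vowel_onset]
    if article == "the":
        return list(display)
    raise ValueError(f"unexpected article: {article!r}")


display = [Picture("eagle", True), Picture("shirt", False),
           Picture("rake", False), Picture("cherry", False)]
print([p.name for p in compatible_referents("an", display)])  # ['eagle']
print(len(compatible_referents("the", display)))  # 4
```

In the Restrictive condition the filter leaves exactly one candidate, whereas “the” leaves all four, which is why the control condition carries no usable phonological context.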

Critically, in both experiments, the sentences were not ambiguous or anomalous either semantically or syntactically and the visual display did not contain any related distractors, so there was neither linguistic nor visual conflict that required resolution. The combination of these two experiments allows us to answer two questions: (1) Does VLPFC play a role in contextual integration when demands for conflict resolution are minimal? (2) Is VLPFC’s role in integration specific to the use of semantic cues in the context or does it have a general role in facilitating processing of all types of cues?

Experiment 1

Methods

Participants

Seven individuals with chronic aphasia were recruited via the Moss Neurocognitive Rehabilitation Research Registry (Schwartz, Brecher, Whyte, & Klein, 2005). All had exclusively left-hemisphere lesions after a single episode of stroke between 2002 and 2010. Of these, four had Anterior lesions (the experimental group) and three had Posterior lesions (the control group). Lesion sites in the two groups were mutually exclusive: all Anterior group participants had >40% damage to at least one of the VLPFC Brodmann Areas (BA) 44, 45, or 47 (average damage to the three areas ranged between 23% and 50%), and <1% damage to the Posterior regions BA 20, 21, 22, 40, 41, and 42. Their average lesion extents in BA46, BA9, and BA10 were 5%, 10%, and <1%, respectively, limiting the lesion mostly to the ventral part of the PFC. The Posterior group, on the other hand, all had lesions occupying at least 40% of at least one of the temporal/parietal regions listed above (average damage across all six areas ranged between 14% and 24%) and <1% damage to the lesion sites of the four Anterior group participants. The two groups did not differ reliably in mean age (M = 63.5, SE = 6.01 in the Anterior group and M = 53.3, SE = 2.03 in the Posterior group; z = 1.06, p = 0.291), education (M = 14.0, SD = 2.82 for the Anterior and M = 14.7, SD = 1.76 for the Posterior group; z = 0.58, p = 0.56), or total lesion volume, measured as the number of damaged voxels (M = 41268, SE = 8331 for the Anterior and M = 59380, SE = 16662 for the Posterior group; z = 1.10, p = 0.28).

Table 1 provides a summary of the behavioral profiles of each participant on standardized language tests (see Mirman et al., 2010; Nozari & Dell, 2013, for more details on the background assessments). Apart from the Western Aphasia Battery (WAB) overall comprehension measure, three measures of semantic comprehension are reported. Pyramids & Palm Trees (PPT) assesses semantic comprehension. The Picture Name Verification Test (PNVT) is derived from the Philadelphia Naming Test (PNT) and measures the individual’s ability to match a spoken word to a picture in the presence of foils; it is therefore a good measure of the participant’s ability to match auditory to visual information. The synonym judgment task assesses the participant’s ability to compare words on their meaning; it therefore measures activation of word meaning without visual-semantic cues. We report accuracy on the verb component of this task, as our semantic cues were all verbs. As can be seen from the table, performance is quite similar between individuals from the two groups. Importantly, participants in the Anterior group do not show inferior comprehension compared to those in the Posterior group. A combined measure of semantic comprehension, averaging all four comprehension scores, shows a mean of 68.05 (SE = 1.7) for the Anterior and 65.6 (SE = 3.5) for the Posterior group (z = .35, p = 0.72).
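The combined semantic comprehension score can be recomputed from the values in Table 1. The following is a minimal Python sketch, assuming (as an inference from the table, not a documented procedure) that the score is the unweighted mean of the four values exactly as listed, with WAB comprehension on its 0-10 scale alongside the three percent-correct scores, and that SE is the sample SD divided by √n. Under these assumptions it reproduces the group means and SEs reported above; the function names are illustrative.

```python
from math import sqrt
from statistics import mean, stdev

# (WAB comprehension, PPT, PNVT, Synonym-verbs) per patient, copied from Table 1.
SCORES = {
    "Anterior": {"A1": (7.2, 85, 88, 73), "A2": (8.85, 94, 97, 73),
                 "A3": (7.75, 96, 91, 87), "A4": (10, 90, 94, 87)},
    "Posterior": {"P1": (7.05, 90, 96, 73), "P2": (9.4, 98, 97, 80),
                  "P3": (8.8, 85, 83, 60)},
}


def combined_score(scores):
    """Unweighted mean of one patient's four comprehension scores (assumption)."""
    return mean(scores)


def group_mean_se(patients):
    """Group mean of the combined scores and its standard error (SD / sqrt(n))."""
    values = [combined_score(s) for s in patients.values()]
    return mean(values), stdev(values) / sqrt(len(values))


for group, patients in SCORES.items():
    m, se = group_mean_se(patients)
    print(f"{group}: M = {m:.2f}, SE = {se:.1f}")
```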

Table 1.

Patients’ test scores on standardized tests.

| Group | Patient | Gender | WAB AQ | WAB fluency | WAB comprehension | PPT | PNVT | Synonym (verbs) | PNT | PRT | Auditory discrimination | Category probe | Rhyme probe |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Anterior | A1 | F | 59.8 | 2 | 7.2 | 85 | 88 | 73 | 62 | 96 | 85 | 1.347 | 2 |
| Anterior | A2 | F | 91.5 | 9 | 8.85 | 94 | 97 | 73 | 95 | 97 | 95 | 3.5 | 5 |
| Anterior | A3 | M | 74.5 | 5 | 7.75 | 96 | 91 | 87 | 86 | 97 | 85 | 3.52 | 6 |
| Anterior | A4 | F | 90.2 | 8 | 10 | 90 | 94 | 87 | 87 | 95 | 90 | 2.45 | 6.55 |
| Posterior | P1 | M | 65.3 | 6 | 7.05 | 90 | 96 | 73 | 53 | 65 | 90 | 1.61 | 4.67 |
| Posterior | P2 | F | 68 | 5 | 9.4 | 98 | 97 | 80 | 87 | 97 | 95 | 2 | 5.2 |
| Posterior | P3 | F | 78.8 | 8 | 8.8 | 85 | 83 | 60 | 86 | 100 | 88 | 1.25 | 3 |

Table 1 also contains information about participants’ production performance on a variety of measures, none of which showed reliable differences between groups at α = 0.05. Accuracy (mean ± SE) on the PNT was 82.5 ± 7.1 for the Anterior vs. 75.3 ± 11.2 for the Posterior group, and accuracy for auditory word repetition, measured by repetition of the target words from the PNT (PRT), was 96.2 ± 0.48 for the Anterior vs. 87.3 ± 11.2 for the Posterior group. Finally, Table 1 shows that the two groups were comparable in their ability to perceive and retain phonological and semantic information (values below are mean ± SD). The Auditory discrimination test required comparison of phonological strings differing in one phoneme. We report accuracy on this comparison after a 5-second delay to account for information retention during processing of a sentence, which unfolds over time; however, the pattern of scores was similar in the non-delayed version of the task. Performance was not significantly different between the two groups (88.7 ± 4.8 for the Anterior vs. 91.0 ± 3.6 for the Posterior; z = 0.73, p = 0.47). The Rhyme probe task (based on Freedman & Martin, 2001) also measures the ability to retain phonological information, by requiring participants to determine whether any of the items in a list rhymes with a target item. The task becomes more difficult as the number of list items increases, and the scores in Table 1 report the average maximum number of list items with which performance was accurate. Again, performance did not differ (4.9 ± 2.0 for the Anterior vs. 4.3 ± 1.1 for the Posterior; z = 0.71, p = 0.48). The Semantic (category) probe task (Freedman & Martin, 2001) is similar in nature, except that a semantic comparison is required instead of a rhyme comparison, thus measuring the participant’s ability to process and retain semantic information. Here too, we found no reliable differences between participant groups (2.7 ± 1.0 for the Anterior vs. 1.6 ± 0.38 for the Posterior; z = 1.41, p = 0.16).
In summary, there were no reliable behavioral differences between the two groups, and patients in the Anterior group had slightly superior abilities on a number of tasks.

Materials

Targets were 30 common nouns, each presented once with a restrictive verb and once with a non-restrictive verb (Appendix, Table A1), and six times as a distractor alongside other target pictures. We validated our choice of restrictive and non-restrictive verbs by norming the experimental materials on Amazon Mechanical Turk. Sixty-three native speakers of American English were presented with the written form of the incomplete experimental sentence (e.g., “She will drive the …”) and the four options (car, hat, banana, flashlight) to choose from. For the restrictive trials, the target was chosen >95% of the time, whereas for the non-restrictive trials, none of the alternatives was chosen >50% of the time across participants.
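The two norming criteria can be stated as a check on one sentence frame’s response distribution. This is a minimal sketch of the criteria as described, not the authors’ analysis script; the function name is hypothetical, and the rater counts in the usage lines are invented for illustration only (they are not norming data).

```python
from collections import Counter

RESTRICTIVE_MIN_SHARE = 0.95     # target must be chosen on >95% of responses
NONRESTRICTIVE_MAX_SHARE = 0.50  # no option may be chosen on >50% of responses


def passes_norming(choices, target, condition):
    """Check one sentence frame against the norming criteria.

    choices: the picture names selected by individual raters for this frame.
    """
    if not choices:
        raise ValueError("no norming responses for this frame")
    counts = Counter(choices)
    shares = {item: n / len(choices) for item, n in counts.items()}
    if condition == "restrictive":
        return shares.get(target, 0.0) > RESTRICTIVE_MIN_SHARE
    if condition == "non-restrictive":
        return all(share <= NONRESTRICTIVE_MAX_SHARE for share in shares.values())
    raise ValueError(f"unknown condition: {condition!r}")


# Hypothetical response counts from 63 raters, for illustration only.
restrictive_frame = ["car"] * 62 + ["hat"]
nonrestrictive_frame = ["car"] * 20 + ["hat"] * 15 + ["banana"] * 14 + ["flashlight"] * 14
print(passes_norming(restrictive_frame, "car", "restrictive"))        # True
print(passes_norming(nonrestrictive_frame, "car", "non-restrictive"))  # True
```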

Materials were presented as 300×300 pixel black-and-white line drawings taken from either the IPNP corpus (Szekely et al., 2004) or Snodgrass & Vanderwart (1980). Sixty sentences of the form “She will [verb] the [noun]” were recorded by a native English speaker at 44.1 kHz and digitally edited to remove silence, so that all sentences had the same duration from the beginning of the sentence to the beginning of the verb. There was no reliable difference between the durations of restrictive and non-restrictive verbs that were paired with the same target noun (t(29) = 0.71, p = 0.48).

Apparatus

Participants were seated approximately 25 inches from a 17-inch monitor with the resolution set to 1024×768 pixels. Stimuli were presented using E-Prime Professional, Version 2.0 software (Psychology Software Tools, Inc., www.pstnet.com). A remote Eyelink 1000 eye-tracker recorded participants’ monocular gaze position at 250 Hz.

Procedure

Participants were instructed to “listen and look at the pictures” (no response was required). Each trial began with a 1375 ms preview: for the first 1000 ms, the four line drawings were presented in the four corners of the screen, and for the last 375 ms a shrinking red dot appeared at the center to draw the gaze back to the central location. After the preview, the sentence was presented through speakers at a comfortable listening volume. Trials were presented in a pseudorandomized order, such that each word appeared as the target once in each half of the experiment (once in the Restrictive and once in the Non-restrictive condition); within each half, the order of trials was randomized for each participant. The positions of the four pictures were randomized on every trial. Participants first completed six practice trials (three Restrictive and three Non-restrictive) and then moved on to the experimental trials.
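The pseudorandomization constraint can be sketched as follows. This is an illustrative Python sketch, not the E-Prime script used in the study: the trial dictionary layout, the corner labels, and the decision to randomize per target which condition falls in the first half are all assumptions. It guarantees that each target appears once in each half, shuffles trial order within each half, and draws a fresh corner assignment for the four pictures on every trial.

```python
import random

CORNERS = ("top-left", "top-right", "bottom-left", "bottom-right")


def pseudorandomize(trials, seed=None):
    """Order trials so each target occurs once in each half of the session.

    trials: list of dicts with keys "target", "condition" and "pictures"
    (the four pictures shown on that trial), two trials per target.
    """
    rng = random.Random(seed)
    by_target = {}
    for trial in trials:
        by_target.setdefault(trial["target"], []).append(trial)
    first, second = [], []
    for target, pair in by_target.items():
        if len(pair) != 2:
            raise ValueError(f"{target!r} needs exactly two trials")
        pair = pair[:]      # do not reorder the caller's lists
        rng.shuffle(pair)   # assumption: which condition comes first is random per target
        first.append(pair[0])
        second.append(pair[1])
    ordered = []
    for half in (first, second):
        rng.shuffle(half)   # random order within each half
        for trial in half:
            pictures = list(trial["pictures"])
            rng.shuffle(pictures)  # fresh corner assignment on every trial
            ordered.append({**trial, "layout": dict(zip(CORNERS, pictures))})
    return ordered
```

Seeding the generator per participant (e.g., with a participant ID) would make each session’s order reproducible while still differing across participants.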

Offline comprehension test

The results of the standard language tests did not reveal any reliable differences between the two groups. To ensure that neither group had difficulty comprehending sentences of the type used in this study, participants completed a short offline test after the eye-tracking experiment. They listened to 20 sentences and judged whether each sentence was semantically plausible. The sentence structure was the same as that used in the eye-tracking study. All sentences were syntactically correct, but half of them contained a semantic anomaly (e.g., “She will read the strawberry.”). None of the participants in the Anterior group made any mistakes on this test. Two participants in the Posterior group each made one mistake. Thus, offline measures of sentence comprehension indicate that our participants could properly hear and understand the semantics of sentences similar to those used in the study.

Results and Discussion

The stringent criteria we used for participant selection yielded a small sample in each group. To ensure that the results we report are not driven by an idiosyncratic individual, we first report measures of central tendency, and analyses pertaining to those, but also report effect sizes at the level of individuals. The latter allows for inspection of the consistency of the effect among participants belonging to each group. Figure 1 shows the proportion of fixations (±SE) to the target (left panel) and the three unrelated items averaged together (right panel) in the two patient groups. For each group, the Restrictive and Non-restrictive conditions are plotted separately. As seen in the graph, divergence of looks to the target as a function of restrictiveness of the verb happens earlier in the Posterior than in the Anterior group.
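Fixation-proportion curves like those in Figure 1 are computed per time bin as the number of trials on which the participant was fixating an object, divided by the number of trials in that condition. A minimal Python sketch follows; the data layout (one gaze label per 4 ms sample, i.e. 250 Hz, with `None` for center, off-screen, saccade, or track loss) is hypothetical, and so is the rule that a trial counts for an object in a 20 ms bin when the gaze sample at bin onset is on it. Because samples labeled `None` count toward no object, proportions within a bin need not sum to 1.

```python
SAMPLE_MS = 4   # 250 Hz sampling
BIN_MS = 20     # analysis bin width


def fixation_proportions(trials, objects, sample_ms=SAMPLE_MS, bin_ms=BIN_MS):
    """Per-bin fixation proportions for one participant and condition.

    trials: one gaze record per trial; each record is a list with one label per
    sample (an object name, or None when no object is fixated), all starting
    at a common time zero such as verb onset.
    Returns {object: [proportion in bin 0, bin 1, ...]}.
    """
    if bin_ms % sample_ms:
        raise ValueError("bin width must be a multiple of the sample period")
    step = bin_ms // sample_ms
    n_bins = min(len(record) for record in trials) // step  # complete bins only
    proportions = {obj: [] for obj in objects}
    for b in range(n_bins):
        # Assumption: a trial counts for an object if the sample at bin onset is on it.
        labels = [record[b * step] for record in trials]
        for obj in objects:
            hits = sum(1 for label in labels if label == obj)
            proportions[obj].append(hits / len(trials))
    return proportions
```

For the right panel of Figure 1, the three distractor curves computed this way would then be averaged.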

We tested the reliability of this difference using the Growth Curve Analysis method (Mirman, 2014), a variant of multilevel regression (or hierarchical linear modeling) that uses orthogonal polynomials to capture the curvilinear pattern of fixation proportions over time. Effects of the variables of interest on the polynomial terms provide a way to quantify and evaluate those effects on statistically independent (i.e., orthogonal) aspects of the fixation proportion trajectory. Fixation proportions were computed for each time bin (20 ms) as the number of trials on which the participant was fixating each object divided by the number of trials in that condition. These proportions do not add to 1.0 because, at any given time, participants need not be fixating one of the objects: they may be looking elsewhere (e.g., screen center or off-screen), moving their eyes (e.g., a saccade), or there may be track loss (e.g., a blink). This also means that target fixations can increase without an arithmetically equivalent decrease in distractor fixations, particularly early in the time course, when participants are likely to be looking at screen center until they have information that drives target fixation. The overall target fixation trajectory was modeled with a cubic polynomial as in previous studies (e.g., Chen & Mirman, 2015; Magnuson, Dixon, Tanenhaus, & Aslin, 2007). The hypotheses regarding anticipation were tested by adding effects of Condition (Restrictive vs. Non-restrictive) and Group (Anterior vs. Posterior) on the intercept term, which captures the mean fixation proportion during the analysis time window (analogous to a repeated measures ANOVA), and on the linear term, which captures the linear increase in fixation proportions during the analysis time window. These terms capture differences between conditions and groups in these aspects of the target fixation trajectory.
Critical for our purpose is the interaction between Condition and Group, which tests whether the within-subject Condition manipulation had a different effect in the two groups while taking into account any overall (condition-independent) differences between the groups. In addition to these fixed effects, the model included random effects of participant and participant-by-condition on all time terms to capture individual variability in target fixation trajectories. All analyses were conducted in R (version 3.2.1) using the lmerTest package.
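The orthogonal polynomial time terms used in the growth curve model can be sketched without R. The following pure-Python function (name and signature hypothetical) builds orthonormal linear, quadratic, and cubic time codes by Gram-Schmidt orthogonalization of powers of the centered bin index; we expect this to agree with the codes R’s `poly()` returns up to floating-point error, but treat that as an assumption. The mixed-effects model itself, fit with lmerTest in R, is not reproduced here.

```python
from math import sqrt


def orthogonal_time_codes(n_bins, degree=3):
    """Orthonormal linear, quadratic, ... time codes over n_bins time bins."""
    if not 0 < degree < n_bins:
        raise ValueError("degree must be between 1 and n_bins - 1")
    center = (n_bins - 1) / 2
    x = [t - center for t in range(n_bins)]   # centering improves conditioning
    basis = [[1 / sqrt(n_bins)] * n_bins]      # unit-length constant vector
    for k in range(1, degree + 1):
        v = [xi ** k for xi in x]
        for b in basis:                        # modified Gram-Schmidt
            dot = sum(vi * bi for vi, bi in zip(v, b))
            v = [vi - dot * bi for vi, bi in zip(v, b)]
        norm = sqrt(sum(vi * vi for vi in v))
        basis.append([vi / norm for vi in v])
    return basis[1:]  # the model's own intercept replaces the constant column
```

Because each code is orthogonal to the others and to the constant, the Condition-by-Group effect on the intercept can be read as a difference in mean fixation over the window, independently of differences in the linear slope.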

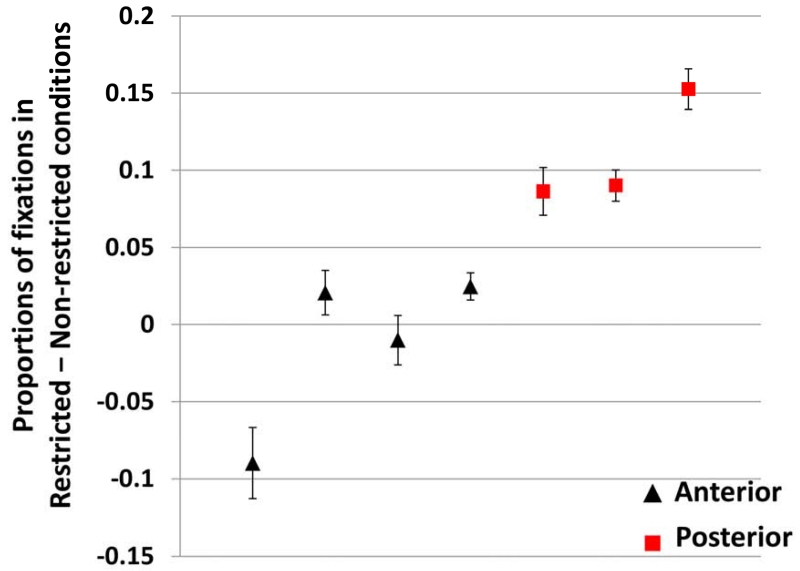

The analysis window ran from 200 ms after verb onset (to allow time for planning and executing an eye movement; e.g., Hallett, 1986) until the average noun onset for sentences in our experiment, which was 731 ms after verb onset. This time window captures the anticipatory looks to the target before participants have a chance to use the noun information to locate the target. Table 2 shows the full results of this analysis. Most critical for our hypothesis is the difference between looks to the target in the Restrictive and Non-restrictive conditions in the Anterior and Posterior groups. This difference emerges later in the Anterior group (Figure 1), and its reliability can be tested using the Condition-by-Group interaction, which had a significant effect on the intercept term (Estimate = −0.031, SE = 0.007, t = −4.13, p = 0.004) and a marginal effect on the linear term (Estimate = −0.055, SE = 0.027, t = −2.06, p = 0.072). Figure 2 plots the difference between mean target fixation proportions in the Restrictive and Non-restrictive conditions for each individual in the time window specified above (i.e., the individual participant anticipation effect sizes). The figure shows that the two groups are linearly separable: all patients with Anterior damage had lower values than all patients with Posterior damage.²
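The individual anticipation effect sizes and the linear-separability observation can be expressed as a short Python sketch. It assumes per-bin target fixation proportions with bin onsets measured in ms from verb onset, and counts a bin as inside the analysis window when its onset falls in [200, 731) ms; that boundary rule and all names are assumptions for illustration, not the authors’ code. The values in the usage line are invented, not study data.

```python
from statistics import mean

WINDOW_START_MS = 200  # from verb onset: time to program and launch a saccade
WINDOW_END_MS = 731    # average noun onset, relative to verb onset


def window_mean(bin_times, proportions, start=WINDOW_START_MS, end=WINDOW_END_MS):
    """Mean target fixation proportion over bins whose onset lies in [start, end)."""
    values = [p for t, p in zip(bin_times, proportions) if start <= t < end]
    if not values:
        raise ValueError("no bins fall inside the analysis window")
    return mean(values)


def anticipation_effect(bin_times, restrictive, non_restrictive):
    """Restrictive minus Non-restrictive window mean for one participant."""
    return (window_mean(bin_times, restrictive)
            - window_mean(bin_times, non_restrictive))


def linearly_separable(anterior_effects, posterior_effects):
    """True if every Anterior effect is below every Posterior effect."""
    return max(anterior_effects) < min(posterior_effects)


# Toy illustration with invented values (not study data):
print(linearly_separable([0.01, 0.03, -0.02, 0.00], [0.08, 0.12, 0.06]))  # True
```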

Table 2.

Results of the growth curve analysis (GCA) for Experiment 1 (semantic restriction)

| Fixed effects | Coefficient | SE | t | p-value |
|---|---|---|---|---|
| intercept | 0.160 | 0.021 | 7.782 | <0.001 |
| linear term | 0.118 | 0.033 | 3.613 | 0.008 |
| quadratic term | 0.053 | 0.020 | 2.610 | 0.027 |
| cubic term | −0.014 | 0.015 | −0.927 | 0.379 |
| condition*intercept | −0.024 | 0.007 | −3.219 | 0.013 |
| condition*linear term | −0.085 | 0.027 | −3.179 | 0.012 |
| condition*quadratic term | −0.021 | 0.018 | −1.203 | 0.250 |
| condition*cubic term | 0.008 | 0.013 | 0.646 | 0.530 |
| group*intercept | 0.011 | 0.021 | 0.544 | 0.603 |
| group*linear term | 0.015 | 0.033 | 0.449 | 0.666 |
| group*quadratic term | 0.015 | 0.020 | 0.758 | 0.467 |
| group*cubic term | −0.014 | 0.015 | −0.903 | 0.391 |
| condition*group*intercept | −0.031 | 0.007 | −4.132 | 0.004 |
| condition*group*linear term | −0.055 | 0.027 | −2.056 | 0.072 |
| condition*group*quadratic term | 0.018 | 0.018 | 1.015 | 0.328 |
| condition*group*cubic term | 0.016 | 0.013 | 1.31 | 0.214 |

| Random effects | Term | Variance |
|---|---|---|
| Subject | intercept | 0.0025 |
| Subject | linear term | 0.0023 |
| Subject | quadratic term | 0.0007 |
| Subject | cubic term | 0.0005 |
| Subject-by-Condition | intercept | 0.0007 |
| Subject-by-Condition | linear term | 0.0095 |
| Subject-by-Condition | quadratic term | 0.0038 |
| Subject-by-Condition | cubic term | 0.0017 |

Figure 2.

The individual effect sizes (±SE) in Experiment 1. Effect size is calculated as the mean proportion of target fixations in the Restrictive condition minus that in the Non-restrictive condition within the analysis time window, thus capturing the degree to which contextual information was used for quicker integration. Black triangles are patients in the Anterior group; red squares are patients in the Posterior group. The data are spread out horizontally (i.e., each column is a patient) to avoid overlapping points for patients with similar effect sizes.

In summary, both the group analysis and inspection of individual effect sizes show that patients with VLPFC damage exhibited reduced use of semantic contextual cues to locate the target. Experiment 2 tests whether this is also true for phonological contextual cues.

Experiment 2

Methods

Participants

The participants from Experiment 1 also completed Experiment 2, except for one participant from the Anterior group (A2) who was no longer available for testing.

Materials

Targets were pictures of 60 common nouns (taken from the same corpora as in Experiment 1), half beginning with a vowel and half with a consonant (Appendix, Table A2). Each item appeared once as the target in the experimental (Restrictive) condition (“an eagle”), once as the target in the control (Non-restrictive) condition (“the eagle”), and six more times as a distractor in trials with other target nouns. One hundred and twenty sentences (60 Restrictive with “a”/“an” and 60 Non-restrictive with “the”) with the structure “She will see [determiner] [target].” were recorded by the same speaker as in Experiment 1 and with the same specifications. All sentences were recorded naturally, without word splicing. This was necessary because each word serves as its own control in the “a/an” vs. “the” comparison, and the pronunciation of “the” changes depending on whether the following noun starts with a vowel or a consonant. Splicing would have provided an unnatural comparison baseline for a subset of trials and could have contaminated the results. In the recorded materials, the durations of the determiners did not differ significantly from those of their paired “the” controls (t(29) = .58, p = .56 for “a” vs. “the”; t(29) = 1.15, p = .23 for “an” vs. “the”).
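The duration check above is a paired comparison (each determiner matched with its “the” control, 30 pairs, hence 29 degrees of freedom). A minimal sketch of the paired-samples t statistic is shown below; the function name `paired_t` and the durations are hypothetical, not the recorded materials. It returns only t and the degrees of freedom, because the p-value requires the t distribution, which the Python standard library does not provide (a library routine such as SciPy's `scipy.stats.ttest_rel` computes both).

```python
import math
import statistics


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for matched lists x, y.

    Raises ValueError for unequal or too-short inputs; if all differences are
    identical the standard error is zero and t is undefined.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # sample SD (n - 1 denominator)
    return statistics.mean(diffs) / se, n - 1


# Hypothetical determiner durations in ms (not the study's recordings).
a_durations = [120, 135, 128, 140]
the_durations = [118, 138, 125, 141]
t, df = paired_t(a_durations, the_durations)
```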

Procedure

Procedures were similar to Experiment 1. Because this experiment was longer, it was divided into two blocks, and participants were allowed to take a break (as long as they wished) between the blocks. All participants finished the study within an hour.

Results and Discussion

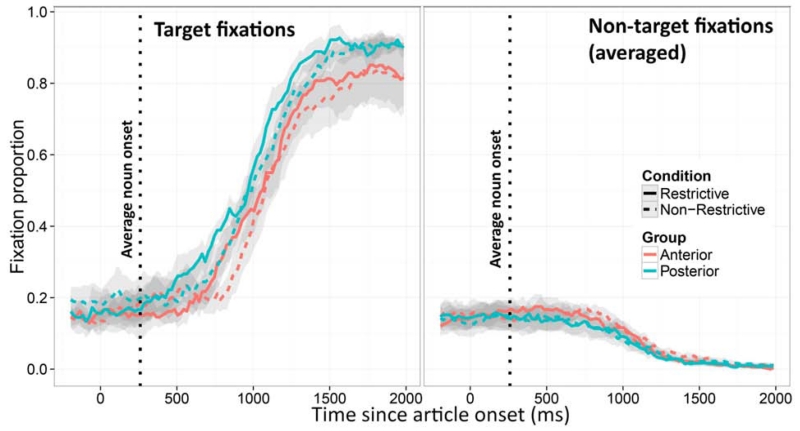

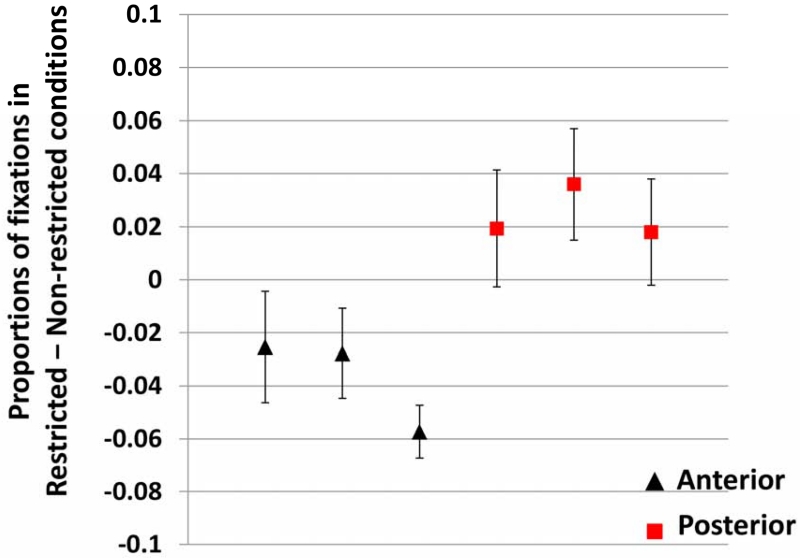

Figure 3 shows the results, formatted as in Experiment 1. The analysis method was the same as in Experiment 1, and Table 3 shows the full results. As before, we focus on the effects of the variables of interest on the intercept term. Because the articles are very short (average time from article onset to noun onset = 260 ms), and planning an eye movement takes about 200 ms, the analysis window could not be confined to the pre-noun region. We therefore selected a window comparable to that of Experiment 1: starting 200 ms after article onset and lasting 500 ms (which covers, on average, two thirds of the noun utterance). Although this window no longer captures strictly “anticipatory” looks, it still captures the use of prior information for integrating the noun and is, in a sense, a more direct measure of “integration” of already-available information. Just as in Experiment 1, the Condition-by-Group interaction had a significant effect on the intercept term (Estimate = 0.061, SE = 0.015, t = 3.957, p = 0.005). Figure 4 shows individual effect sizes, computed as the mean proportion of target fixations in the Restrictive minus the Non-restrictive condition within the analysis window. The figure shows that all individuals in the Anterior group had smaller effect sizes than all individuals in the Posterior group.³

Figure 3.

Average fixation proportions (±SE) to the target (left panel) and the three distractors (right panel) in the Restrictive and Non-Restrictive conditions for the Anterior and Posterior groups in Experiment 2.

Table 3.

Results of the growth curve analysis (GCA) for Experiment 2 (phonological restriction)

| Fixed effects | Coefficient | SE | t | p-value |
|---|---|---|---|---|
| intercept | 0.203 | 0.061 | 3.342 | 0.028 |
| linear term | 0.064 | 0.063 | 1.011 | 0.345 |
| quadratic term | −0.045 | 0.046 | −0.979 | 0.356 |
| cubic term | −0.016 | 0.043 | −0.365 | 0.723 |
| condition*intercept | −0.037 | 0.011 | −3.362 | 0.012 |
| condition*linear term | 0.021 | 0.071 | 0.298 | 0.776 |
| condition*quadratic term | 0.097 | 0.055 | 1.780 | 0.112 |
| condition*cubic term | 0.029 | 0.048 | 0.605 | 0.553 |
| group*intercept | 0.003 | 0.086 | 0.038 | 0.971 |
| group*linear term | 0.006 | 0.089 | 0.066 | 0.949 |
| group*quadratic term | 0.095 | 0.065 | 1.455 | 0.183 |
| group*cubic term | 0.033 | 0.060 | 0.548 | 0.597 |
| condition*group*intercept | 0.061 | 0.015 | 3.957 | 0.005 |
| condition*group*linear term | 0.149 | 0.100 | 1.489 | 0.186 |
| condition*group*quadratic term | −0.124 | 0.077 | −1.608 | 0.145 |
| condition*group*cubic term | −0.059 | 0.068 | −0.863 | 0.399 |

| Random effects | Term | Variance |
|---|---|---|
| Subject | intercept | 0.0109 |
| Subject | linear term | 0.0044 |
| Subject | quadratic term | 0.0020 |
| Subject | cubic term | 0.0020 |
| Subject-by-Condition | intercept | <0.0001 |
| Subject-by-Condition | linear term | 0.0045 |
| Subject-by-Condition | quadratic term | 0.0015 |
| Subject-by-Condition | cubic term | 0.0005 |

Figure 4.

The individual effect sizes (±SE) in Experiment 2. Black triangles are patients in the Anterior group. Red squares are patients in the Posterior group.

In summary, the results of Experiment 2 replicated the key findings of Experiment 1, but in the phonological domain.

General Discussion

In two experiments we investigated the role of the left VLPFC in comprehension of simple sentences with minimal syntactic complexity or ambiguity. Experiment 1 tested the time course of using verb cue information to locate the target in the absence of strong competitors in individuals with VLPFC lesions compared to a matched group of individuals with lesions that spared VLPFC. If VLPFC has a role in integrating information (words) into contexts (sentences), its damage should manifest as slower target fixations in the Anterior group. This prediction was supported: all individuals in the Anterior group were slower than all individuals in the Posterior group in using the verb cue to anticipate the target. Importantly, this slowness was relative to a baseline that required the same basic processes of matching a sentence to a visual scene and choosing a target picture among four options, so the difference between the two groups appears to be specific to how quickly they were able to link the restrictive verb to the target.

Why does the difference manifest in the timing and not the magnitude of fixations to the target? This is similar to the question of speed-accuracy trade-off in behavioral experiments, and can be framed in terms of the Drift Diffusion Model (DDM), described in more detail below. Participants can adopt one of two strategies. (1) They can keep their accuracy criterion high. In DDM terms, this means keeping the response boundaries in the same place as the control group, so that the time needed to reach a boundary becomes the main index of performance. A difficult condition (e.g., when VLPFC’s boost is absent) would then manifest as a random walk that simply reaches the boundary later, but once it does, the response is as strong as that of the control group. (2) They can lower their selection criterion by bringing the response boundaries closer to the midline, i.e., the starting point of evidence accumulation. In that case, speed would be preserved at the cost of accuracy, because responses are chosen on the basis of less evidence. Thus, according to a DDM, looks can either diverge later with preserved accuracy after divergence (if decision boundaries are unchanged), or diverge sooner with lower accuracy. Participants in the current study appear to have adopted the former strategy.

Although we have shown a robust difference between the two groups, this should not be taken to mean that participants in the Anterior group were unable to anticipate the target. In fact, the timeline of the effect suggests that they most likely did. Figure 1 shows that in the Anterior group, looks to the target in the Restrictive and Non-restrictive conditions start to diverge right around the noun onset. If the Anterior group had been using only the noun information to locate the target, they would have needed another 200 ms to plan and execute a saccade towards it. Thus, in all likelihood, they were able to use the verb information to pick the target, albeit later than the Posterior group. One might argue that participants were using coarticulatory cues to locate the target: because such cues become available before the noun onset, they may have allowed enough time to execute an eye movement that coincided with the onset of the noun. However, if participants were using coarticulatory cues, it is unclear why their fixations would have differed between the Restrictive and Non-restrictive conditions, as both conditions provide such cues. At least two of the Anterior group participants had a greater proportion of looks to the target in the pre-noun region in the Restrictive than in the Non-restrictive condition, suggesting some anticipatory use of the verb cue. This finding is compatible with the absence of deficits in the comprehension of simple sentences in individuals with VLPFC damage. The eye-tracking paradigm is, however, sensitive enough to pick up subtle differences that may not be detectable with overt behavioral measures. In summary, the results of Experiment 1 showed that in the absence of strong competitors, the Anterior group was able to locate the target, but was significantly slower than the Posterior group to use the semantic constraints of the sentence context.

Experiment 2’s results mirrored those of Experiment 1, but in the phonological domain. Unlike in Experiment 1, the analysis window in this experiment was not limited to prediction, but included integration after the noun information became available. Here too, the Anterior group was delayed in using the contextual information to locate the target noun. Thus, we can confidently answer question (1) posed in the Introduction: VLPFC does have a role in integration even when the task poses no explicit demands for competition resolution. This role can manifest as using context to predict the target before direct information about its identity becomes available, or as using context to activate the target more quickly once some information about its identity is available. Both cases boil down to more efficient integration of information with context. Together, the two experiments also answer question (2): VLPFC does not seem to be exclusively sensitive to semantic information, since both semantic and phonological contextual cues were used more quickly by participants whose lesions did not include left VLPFC. It is possible, however, that different but neighboring subregions of VLPFC are sensitive to semantic vs. phonological information.

Before concluding that the current findings support integration of information in sentences for the purpose of referent localization, we consider an alternative explanation: the results may simply reflect a mechanical impairment in the Anterior group in programming saccades in a timely fashion. Several arguments speak against this interpretation. First, none of the terms in the statistical model that captured group differences independent of condition (the Group effects on the polynomial terms) approached significance, implying no overall difference in target fixations between the two groups when conditions are collapsed. This is in keeping with past reports of normal visually-guided and memory-guided saccades in patients with PFC lesions that spare the frontal eye field (Pierrot-Deseilligny et al., 2003). Second, in an eye-tracking task with four pictures and a single auditory word, Mirman and Graziano (2012) showed that the time course of target fixation did not differ between patients with anterior lesions and those with posterior lesions (their Fig. 2). Together, these findings show that patients with anterior lesions sparing the frontal eye field do not have a general impairment in planning and executing eye movements, and that their activation of thematic relations is normal when it is not anticipatory and does not occur in a context requiring fast integration. Thus, the current results suggest a specific problem with the integration of semantic information in patients with VLPFC damage when efficient processing requires anticipatory activation of the upcoming word.

These findings align well with previous reports on the role of PFC in using contextual cues for prediction (e.g., Fogelson et al., 2009). For example, compared to healthy controls, patients with PFC lesions (not limited to VLPFC) show smaller performance gains for predictable than for random targets. This finding implies a general impairment in the use of predictive local context for fast processing, an essential component of integration in sentence comprehension. We also acknowledge that other parts of PFC, such as the dorsolateral region, might also be involved in anticipation and integration of information (e.g., Pierrot-Deseilligny et al., 2003).

In the Introduction, we reviewed evidence for the role of VLPFC in conflict resolution even when no integration was necessary. Current results provide evidence for its role in integration when no conflict resolution seems necessary. Does this mean that VLPFC has (at least) two functions, conflict resolution and integration? Many have proposed functional and anatomical heterogeneity of the VLPFC (e.g., Amunts et al., 2004; Badre & Wagner, 2007; Hagoort, 2005; Huang et al., 2012; Lau et al., 2008; Zhu et al., 2013). For example, in their dual account of VLPFC, Badre and Wagner (2007) proposed two roles for VLPFC in lexical-semantic processing, (a) accessing the stored representations, which is specific to semantics and is carried out by anterior VLPFC, and (b) selection among competitors, which is domain-general and is carried out by middle VLPFC (Badre, Poldrack, Paré-Blagoev, Insler, & Wagner, 2005). In a recent study, Zhu et al. (2013) reported that both anterior and posterior parts of VLPFC showed increased activation during semantic integration, but only the posterior part showed sensitivity to congruency in the Stroop task (see also Fedorenko, Behr, & Kanwisher, 2011). Another study found that while BA45 responded to conflict in both semantic and syntactic domains, BA47’s activity was associated only with semantic conflict (Glaser et al., 2013). Finally, Hagoort (2005) proposed that posterior VLPFC supports phonological and syntactic processing. While we do not have enough variability in our sample to test the anatomical heterogeneity of VLPFC, we now consider whether a single computational framework can accommodate both conflict resolution and semantic integration.

A single framework for integration and competition resolution

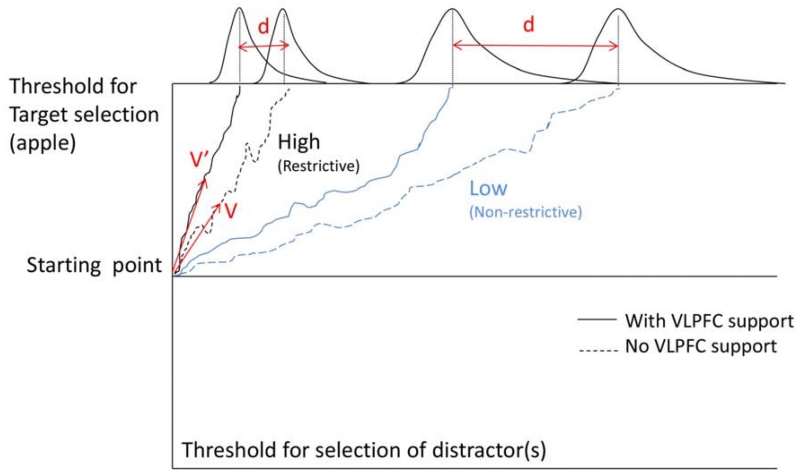

Selection of a referent is a process of evidence accumulation, whereby different pieces of often noisy information are gathered over time until a decision threshold is reached and a target is chosen. This general idea can be, and has been, implemented in a variety of computational frameworks. The Drift Diffusion Model (DDM; Laming, 1968; Ratcliff, 1978; Stone, 1960; see also Wagenmakers, Ratcliff, Gomez, & McKoon, 2008; Wagenmakers, Van Der Maas, & Grasman, 2007, for specific application to linguistic forced-choice tasks) was initially constructed to explain how individuals choose between two alternatives, but extensions of the model can explain situations where more than two alternatives are available (e.g., Ratcliff & McKoon, 1997). The DDM accumulates information in favor of one response or another over repeated samples, moving towards one response boundary or the other at each step (Figure 5). The drift rate (v, v’ in Figure 5) is the average rate of this movement and therefore determines how quickly a response boundary is reached. In parallel distributed processing (PDP) or connectionist computational frameworks, an analogous function is served by activation gain (e.g., Kello & Plaut, 2003; Mirman, Yee, Blumstein, & Magnuson, 2011). We propose that VLPFC’s operation affects this drift rate or activation gain parameter. In what follows, our focus on the DDM is not meant as an endorsement or test of the model; rather, the DDM simply provides a straightforward way to describe the effect of drift rate (or gain) on processing.
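The role of the drift rate can be illustrated with a toy simulation. The sketch below is a minimal discrete-time (Euler) approximation of a symmetric two-boundary diffusion process, not the authors' model: evidence starts at 0, moves by `drift * dt` plus Gaussian noise at each step, and stops at +boundary (the target) or −boundary. The function name and all parameter values are hypothetical; `v_prime` merely stands in for a boosted drift rate like v′ in Figure 5.

```python
import math
import random


def simulate_ddm(drift, boundary=1.0, noise=1.0, dt=0.005, n_trials=1000,
                 seed=0, max_steps=100_000):
    """Simulate a symmetric two-boundary drift diffusion process.

    Evidence starts at 0 and accumulates in steps of drift*dt plus Gaussian
    noise with standard deviation noise*sqrt(dt) until it reaches +boundary
    (target response) or -boundary (alternative). Returns the mean decision
    time and the proportion of trials ending at +boundary.
    """
    rng = random.Random(seed)
    step_sd = noise * math.sqrt(dt)
    total_time, n_upper = 0.0, 0
    for _ in range(n_trials):
        x, steps = 0.0, 0
        while abs(x) < boundary and steps < max_steps:
            x += drift * dt + rng.gauss(0.0, step_sd)
            steps += 1
        total_time += steps * dt
        n_upper += x >= boundary  # True counts as 1
    return total_time / n_trials, n_upper / n_trials


# Hypothetical drift rates: v without and v_prime with a VLPFC-like boost.
v, v_prime = 0.5, 1.5
slow_time, slow_acc = simulate_ddm(v)
fast_time, fast_acc = simulate_ddm(v_prime)
```

With the boundaries held fixed, the higher drift rate reaches a boundary sooner on average and ends at the target boundary more often, which is the sense in which the text treats the drift rate as the speed of target activation.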

Figure 5.

A schema of the Drift Diffusion Model. Blue lines show the process of locating a target with low association to the input (e.g., the Non-restrictive condition). Black lines show the process of locating a target with high association to the input (e.g., the Restrictive condition). Dotted lines show the accumulation of evidence in the absence of VLPFC contribution; solid lines show this process facilitated by VLPFC. Parameters v and v’ are drift rates (see text), and the difference between them marks the difference in the speed of target activation with and without VLPFC contribution. The top part of the figure shows hypothetical response latency distributions. Parameter d is related to the distance between the means of the two distributions and quantifies the effect of VLPFC in facilitating the process of locating the target; the stronger the association, the smaller d and hence the smaller the possible contribution of VLPFC.

Before discussing the implications of VLPFC’s effect on the drift rate, let us review the DDM’s other parameters to see whether any of them may explain the difference in performance between the two participant groups. The main parameters are the starting bias and the boundary position (along with their variability measures). Starting bias reflects a tendency to make a certain type of response more often than another (this could correspond to resting activation levels in a PDP model). In the present study, this could manifest as a greater tendency to look at a certain location, say, the top-right corner. Such a bias would predict a difference between the groups in their looks to the target picture regardless of the condition’s restrictiveness. However, the analyses showed no such overall difference between the groups (the non-significant effects of Group on the polynomial terms in Tables 2 and 3). The other parameter, the boundary position, reflects a speed-accuracy trade-off (this could correspond to a response threshold in a PDP model). By shifting the response boundary closer to the starting point, participants can select a response more quickly, at the expense of accuracy. This is unlikely to have been the case in our task, because (1) no response was required, hence there was no pressure to choose quickly, and (2) the overall looks to the target did not differ between the two groups. If the Posterior group had adopted a lower selection criterion, their probability of fixating the correct target should have decreased. Because these two parameters are unlikely to explain the differences observed in our study, we instead focus on the drift rate.
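The speed-accuracy trade-off produced by moving the boundaries can be checked with the standard closed-form results for a symmetric two-boundary diffusion started midway between boundaries at +a and −a: accuracy is 1 / (1 + exp(−2va/s²)) and mean decision time is (a/v)·tanh(va/s²). The sketch below assumes these textbook formulas (a is the distance from the start point to each boundary); the function name and parameter values are hypothetical.

```python
import math


def ddm_accuracy_and_time(drift, boundary, noise=1.0):
    """Closed-form accuracy and mean decision time for a symmetric DDM.

    The process starts at 0, midway between +boundary and -boundary; positive
    drift favors the upper (correct) boundary. Requires drift != 0.
    """
    u = drift * boundary / noise ** 2
    accuracy = 1.0 / (1.0 + math.exp(-2.0 * u))
    mean_time = (boundary / drift) * math.tanh(u)
    return accuracy, mean_time


wide = ddm_accuracy_and_time(drift=1.0, boundary=1.0)    # stricter criterion
narrow = ddm_accuracy_and_time(drift=1.0, boundary=0.5)  # lowered criterion
```

With the same drift rate, the narrower boundaries yield faster but less accurate decisions. This is why a lowered criterion in one group would be expected to reduce overall target fixations, a pattern the analyses did not show.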

In general, differences in stimulus properties are best captured by changes to the drift rate (Ratcliff, Gomez, & McKoon, 2004). The drift rate reflects the strength of the bottom-up information contained in the input, for example, the strength of association between memorized items in a retrieval task (Ratcliff, 1978), or the “word-likeness” of a word in a lexical decision task (Ratcliff et al., 2004). In our case, because “eat” signals “apple” more strongly than “see” signals “apple”, the drift rate will be inherently higher on an “eat” trial than on a “see” trial, even without any intervention from VLPFC (Figure 5, dotted black vs. dotted blue line). Thus, bottom-up association exerts its effect even when VLPFC is not strongly involved. However, when VLPFC is intact, the same bottom-up cues are mapped onto the target more quickly (i.e., in Figures 1 and 3 the solid lines are above the dotted lines for both groups). This is compatible with VLPFC activity affecting the drift rate by boosting the associations between the bottom-up cues and the target. Note that variations in the drift rate may also reflect “top-down attention” (e.g., Bogacz et al., 2006, p. 731), but the implementation of top-down attention seems to be more closely aligned with the role of dorsolateral PFC (e.g., Snyder et al., 2014).

The proposal that VLPFC boosts associations is not new. Wagner et al. (2001) reported that during a global similarity judgment task, VLPFC was more activated when judging the similarity of low-association items such as “candle” and “halo” than when judging the similarity of high-association items such as “candle” and “flame”. Similarly, Martin and Cheng (2006) showed that patients with VLPFC damage were not impaired in generating a verb in response to a noun probe as long as the two were highly associated, even if multiple answers were possible. For example, both “apple” → eat and “door” → close/open were easier for VLPFC patients than “rug” → roll, lay, walk, etc., even though “apple” was strongly associated with only one verb and “door” with more than one (see also Snyder, Banich, & Munakata, 2014). The authors argued that these results pointed to VLPFC’s role in strengthening associations, as opposed to selection among alternatives. Our results also suggest that VLPFC might facilitate the processing of associations when no overt selection demands are posed, compatible with a role of this region in integration. Our data also show that the involvement of VLPFC in boosting associations is not binary (i.e., present for weak associations and absent for strong ones): even relatively strong associations (e.g., “eat” and “apple”) can benefit from the boost. This effect, which is difficult to capture with overt measures of performance such as accuracy and even RTs, can be captured by tracking fixations. Note again that even patients with VLPFC damage were able to establish a link between restrictive verbs and their corresponding nouns around the time of noun onset (Fig. 1), showing that potent associations can be established in the absence of an intact VLPFC, albeit with a small but reliable delay. Thus, it is not unexpected that such patients would show few or no clinical deficits on tasks that involve strong bottom-up associations.
This is another reason why discussing VLPFC’s role in terms of a DDM is appealing: it provides a natural explanation for why associations of different strengths benefit to different degrees from VLPFC’s boost (the stronger the association, the less room for a boost to contribute; Fig. 5), without the need for a binary cutoff between strong and weak associations. One question remains: Is this process fundamentally different from competition resolution?

As we have noted throughout the preceding discussion, the drift rate parameter in the DDM and the gain parameter in PDP models have very similar computational properties. The DDM does not have an explicit competition resolution parameter, because the drift rate inherently contains this information: any movement towards the boundary of one response implies a movement away from the boundary of the other. This also explains why situations with high association between a bottom-up cue and the target rely less strongly on VLPFC activation than low-association situations. Parameter d in Figure 5 provides an intuitive demonstration: when association is high, movement towards one response boundary is quick (dotted black line), so the room for speeding up this process (solid black line) is limited. This leads to a smaller difference between the means of the VLPFC-facilitated and VLPFC-independent distributions of response selection times than when the original association, and hence the drift rate, is low.
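The shrinking of d with stronger associations can be made concrete with the closed-form mean decision time of a symmetric diffusion process started midway between its boundaries, E[T] = (a/v)·tanh(va/s²). The sketch below assumes that VLPFC adds a fixed, additive boost to the drift rate; this additive assumption, the function names, and all parameter values are hypothetical illustrations, and other ways of modeling the boost (e.g., multiplicative) can behave differently for some parameter settings.

```python
import math


def mean_decision_time(drift, boundary=1.0, noise=1.0):
    """Mean first-passage time of a symmetric DDM started midway (drift != 0)."""
    return (boundary / drift) * math.tanh(drift * boundary / noise ** 2)


def boost_effect(base_drift, boost=0.5, **kwargs):
    """Hypothetical d: mean-time saving when a fixed boost is added to the drift."""
    return (mean_decision_time(base_drift, **kwargs)
            - mean_decision_time(base_drift + boost, **kwargs))


d_low = boost_effect(0.5)   # weak association, e.g., a non-restrictive context
d_high = boost_effect(2.0)  # strong association, e.g., a restrictive verb
```

With these illustrative values, the same boost saves roughly 0.16 time units when the baseline drift is low but only about 0.09 when it is high, mirroring the smaller d for high-association trials described above.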

In PDP models, the efficiency of competition resolution is often captured by the mutual inhibition⁴ parameter. Because mutual inhibition is typically a monotonically increasing function of activation, a representation that is more active than its competitors sends a stronger inhibitory signal to those competitors, until it eventually becomes the only active representation (i.e., until competition is resolved). An increase in gain would increase the inhibitory effect on competitors and therefore speed up competition resolution. The asymmetry demonstrated above, between the benefit of boosting high and low associations, also falls out of the activation dynamics of PDP models. For biologically motivated reasons, the output of a node in these models is a nonlinear (sigmoidal) function of its input. If the input to a node is already large, extra input makes little difference; if the input is around zero, extra input has a substantial effect on the node’s output. If we view the input received from the stimulus (e.g., the verb) as the original input and VLPFC’s contribution as additional input to the output nodes, the original input will be much stronger in the high- than in the low-association case. An output node is therefore closer to its maximum response in the high-association case and benefits less from the additional input provided by VLPFC. Nevertheless, this small extra input can still influence target selection, even if its effect is not behaviorally tangible.
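The sigmoid argument can be verified numerically. The sketch below assumes a standard logistic output function and treats VLPFC’s contribution as a fixed extra input of 0.5 added to a node’s net input; the function names and input values are hypothetical. The same extra input changes the output far more when the baseline input is near the crossover point (0) than when it is already large (4).

```python
import math


def logistic(x):
    """Sigmoidal output function of a node's net input."""
    return 1.0 / (1.0 + math.exp(-x))


def extra_input_gain(base_input, extra=0.5):
    """Change in a node's output when a fixed extra input is added."""
    return logistic(base_input + extra) - logistic(base_input)


low_assoc = extra_input_gain(0.0)   # weak bottom-up input, near the crossover point
high_assoc = extra_input_gain(4.0)  # strong bottom-up input, near the asymptote
```

Here the output change is about 0.12 for the weak-input node and under 0.01 for the strong-input node, a small but nonzero effect, consistent with the claim that the boost still matters for strong associations.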

This computational approach offers a path for reconciling the integration and competition resolution accounts of VLPFC, and explains the classic finding that VLPFC is more involved in low-association than in high-association conditions. Thus, VLPFC’s role can be viewed as increasing the drift rate (integration) or gain (competition resolution), with the same behavioral outcomes. This perspective implies that differences in drift rate or gain should influence processing even when no “integration” is required (e.g., single-word processing) or when there are no strong competitors (e.g., the present experiments). However, the influence of VLPFC should be larger when either integration is difficult (e.g., semantically or syntactically anomalous sentences, or garden-path sentences) or competition resolution is difficult (e.g., multiple strongly competing responses).

To put this all together, VLPFC seems to facilitate the process of mapping an input to an output by affecting how quickly that mapping is established. This could be accomplished by a neuromodulatory function that increases neural responsiveness, as illustrated by the higher drift rate v’ in Figure 5 (for a detailed discussion and a neurobiologically plausible implementation, see Gotts & Plaut, 2002; for a related but different view, see Munakata et al., 2011). The nonlinear dynamics of neural processing (i.e., threshold or sigmoidal) also constrain the impact of drift rate or gain modulation: there is an intermediate “sensitive range” of activation (i.e., near the threshold or the cross-over point of the sigmoid curve) where changes in gain have a large impact on neural activation, whereas neurons that are very weakly or very strongly active (far from the threshold, or in the asymptotic sections of the sigmoid curve) show very little response to gain modulation. That is, an equivalent (VLPFC-based) modulation of gain should have a much larger influence on hard input-output mappings (e.g., when bottom-up cues only weakly signal a response) than on easy input-output mappings (e.g., when bottom-up cues strongly suggest a response). It is important to note that while this computational framework provides a unified account of integration and conflict resolution in cases of indeterminacy (i.e., when several responses are associated with the probe with equal strength), additional mechanisms are required to resolve conflict in favor of a less potent response that competes with a response more strongly associated with the probe (e.g., naming the ink color in the Stroop task). A recent study suggests that resolution of conflict in the face of prepotent responses might be critically dependent on DLPFC, a region that is hypothesized to selectively support task-relevant responses (Snyder et al., 2014).
Regardless of whether VLPFC has a role in boosting associations in cases where overriding a prepotent response is required (e.g., January et al., 2009; Novick et al., 2005, 2009; Thompson-Schill et al., 1997; Thothathiri et al., 2012) or not (Snyder et al., 2014), the contribution of a region that links task goals to the to-be-selected representations seems necessary in those conditions.

Conclusion

This study demonstrated the role of VLPFC in facilitating target activation in response to contextual cues (i.e., integration), when demands for resolving conflict were minimal. Furthermore, both semantic and phonological cues evoked similar patterns of behavior, pointing to some degree of domain-generality in VLPFC’s involvement in integration. These results call for models in which VLPFC’s activation facilitates the process of input-output mapping. While we are agnostic with regard to the possible specialization of different parts of VLPFC for processing different kinds of input, we suggested that a unitary computational framework can accommodate findings on both integration and conflict resolution. Future work must test whether integration and conflict resolution can be doubly dissociated in individuals with brain damage, in which case, the proposed unified framework would need to be revised.

Highlights.

We studied the role of VLPFC in auditory sentence comprehension.

Patients with and without VLPFC lesions were tested in an eye-tracking paradigm.

Semantic or phonological cues were used less efficiently by the VLPFC patients.

This finding supports the integration account of VLPFC in sentence comprehension.

A common framework for integration and conflict resolution in VLPFC is proposed.

Acknowledgment

This work was supported by R01-DC009209 to STS and R01DC010805 to DM. We would like to thank the participants and staff at Moss Rehabilitation Research Institute, Allison Britt and Kristen Graziano for their help with data collection, and Drs. Randi Martin and Vitória Piai for their comments on an earlier version of this manuscript.

Appendix: Materials for Experiments 1 and 2

Table A1.

Target nouns, each paired with a restrictive and a non-restrictive verb in Experiment 1.

| Target noun | Restrictive verb | Non-restrictive verb |
|---|---|---|
| baby | nurse | spot |
| banana | peel | get |
| boat | sail | hate |
| book | read | see |
| bow | tie | eye |
| bus | ride | draw |
| candle | light | bring |
| car | drive | study |
| deer | hunt | examine |
| dog | walk | paint |
| doll | cradle | remember |
| fish | fry | stare at |
| flashlight | turn off | leave |
| flower | pluck | describe |
| guitar | play | picture |
| gun | fire | imagine |
| hat | take off | hold |
| horse | saddle | point to |
| kite | fly | sketch |
| ladder | climb | forget |
| pear | eat | look at |
| pie | taste | take |
| pipe | smoke | notice |
| pool | swim in | gaze at |
| present | unwrap | keep |
| shirt | button | need |
| towel | fold | move |
| watch | wind | like |
| whistle | blow | recognize |
| window | close | observe |

Table A2.

Target nouns for the “a” and “an” conditions in Experiment 2.

| Targets for “an” | Targets for “a” |
|---|---|
| accordion | barrel |
| acorn | bathtub |
| alligator | carrot |
| ambulance | church |
| anchor | crown |
| angel | desk |
| ant | fence |
| apple | fish |
| apron | helicopter |
| arm | horse |
| arrow | lion |
| artichoke | lipstick |
| ashtray | mosquito |
| asparagus | mountain |
| axe | nose |
| eagle | pitcher |
| ear | radish |
| egg | rocket |
| elephant | strawberry |
| envelope | swan |
| Eskimo | telescope |
| eye | train |
| ice cream | vacuum |
| igloo | volcano |
| iron | wagon |
| octopus | wheelchair |
| onion | wolf |
| ostrich | wrench |
| owl | yoyo |
| umbrella | zipper |
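The Experiment 2 manipulation relies on the English article alternation: "an" precedes a vowel-initial word and "a" precedes a consonant-initial word. A quick consistency check of Table A2 can use each word's first letter as a rough stand-in for its spoken onset. This orthographic proxy is our assumption, not the authors' procedure. It happens to fit these items, but it would misclassify words such as "hour" (silent h) or "unicorn" (/j/ onset). The helper names below are hypothetical.

```python
# Targets transcribed from Table A2.
AN_TARGETS = [
    "accordion", "acorn", "alligator", "ambulance", "anchor", "angel", "ant",
    "apple", "apron", "arm", "arrow", "artichoke", "ashtray", "asparagus", "axe",
    "eagle", "ear", "egg", "elephant", "envelope", "Eskimo", "eye", "ice cream",
    "igloo", "iron", "octopus", "onion", "ostrich", "owl", "umbrella",
]
A_TARGETS = [
    "barrel", "bathtub", "carrot", "church", "crown", "desk", "fence", "fish",
    "helicopter", "horse", "lion", "lipstick", "mosquito", "mountain", "nose",
    "pitcher", "radish", "rocket", "strawberry", "swan", "telescope", "train",
    "vacuum", "volcano", "wagon", "wheelchair", "wolf", "wrench", "yoyo", "zipper",
]

VOWEL_LETTERS = set("aeiou")


def onset_is_vowel_letter(word: str) -> bool:
    """Orthographic proxy (an assumption): True if the first letter is a vowel letter."""
    return word.strip().lower()[0] in VOWEL_LETTERS


def check_article_split(an_targets, a_targets):
    """Return the items whose first letter does not fit their article condition."""
    misfits_an = [w for w in an_targets if not onset_is_vowel_letter(w)]
    misfits_a = [w for w in a_targets if onset_is_vowel_letter(w)]
    return misfits_an, misfits_a


print(check_article_split(AN_TARGETS, A_TARGETS))  # ([], [])
```

Both lists come back empty, so under this proxy every "an" target is vowel-initial and every "a" target is consonant-initial.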

Footnotes

For this and all subsequent tests on the small sample, results are reported from the non-parametric Mann-Whitney test.

The results of the GCA were double-checked using the non-parametric Mann-Whitney test, which showed that the two groups differed significantly in the difference in looks to the target between the restrictive-verb and non-restrictive-verb conditions (z = 2.12, p = 0.034).
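As a minimal sketch of how a Mann-Whitney z of this kind can be obtained from per-participant difference scores (looks to the target after a restrictive verb minus looks after a non-restrictive verb), the hypothetical function below computes U by pairwise comparison and standardizes it with the normal approximation. It omits the continuity and tie corrections that statistical packages often apply, so its z may differ slightly from a package's output. The example scores are invented for illustration and are not the study's data.

```python
import math


def mann_whitney_z(x, y):
    """Normal-approximation z for the Mann-Whitney U test.

    Simplification: no continuity correction and no tie correction to the variance.
    """
    n1, n2 = len(x), len(y)
    # U counts the (x, y) pairs in which the x value is larger; ties count one half.
    u = sum(1.0 if xi > yi else 0.5 if xi == yi else 0.0 for xi in x for yi in y)
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (u - mean_u) / sd_u


# Hypothetical difference scores (restrictive minus non-restrictive), NOT the study's data.
posterior = [0.30, 0.25, 0.41, 0.22]
anterior = [0.05, 0.12, 0.18, -0.02]
print(round(mann_whitney_z(posterior, anterior), 2))  # 2.31 (all 16 pairs favor posterior)
```

With groups this small, exact U distributions are often preferred over the normal approximation; the sketch only shows where a reported z comes from.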

A comparison between the two groups using the more conservative non-parametric Mann-Whitney test yields z = 1.96 (p = 0.05).
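The z and p pairs reported in these footnotes can be cross-checked against the standard-normal two-tailed tail probability, p = erfc(|z|/√2) = 2(1 − Φ(|z|)). This is a minimal sketch, assuming (as the reported pairs suggest) two-tailed tests under the normal approximation; the function name is ours.

```python
import math


def two_tailed_p(z: float) -> float:
    """Two-tailed p-value for a standard-normal statistic: P(|Z| >= |z|)."""
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), where Phi is the normal CDF.
    return math.erfc(abs(z) / math.sqrt(2))


print(round(two_tailed_p(2.12), 3))  # 0.034
print(round(two_tailed_p(1.96), 3))  # 0.05
```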

Bogacz et al. (2006) compared two architecturally similar models – one without mutual inhibition (Vickers, 1970) and one that had mutual inhibition (Usher & McClelland, 2001; simplified version) – and found that the mutual inhibition model was reducible in its essentials to the DDM, but the inhibition-free model was not.
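To illustrate what "reducible to the DDM" means here, the following sketches the reduction for a linear, two-unit version of the mutual-inhibition model, following our reading of Bogacz et al. (2006). The notation is ours: $y_1, y_2$ are the activities of two accumulators, $k$ the decay rate, $w$ the mutual-inhibition weight, $I_1, I_2$ the inputs, and $c$ the noise amplitude, with $dW_1, dW_2$ independent Wiener increments. Rotating to the difference and sum coordinates decouples the system:

```latex
\begin{aligned}
dy_1 &= (-k\,y_1 - w\,y_2 + I_1)\,dt + c\,dW_1, \\
dy_2 &= (-k\,y_2 - w\,y_1 + I_2)\,dt + c\,dW_2, \\[4pt]
x_1 &= \tfrac{1}{\sqrt{2}}\,(y_1 - y_2), \qquad x_2 = \tfrac{1}{\sqrt{2}}\,(y_1 + y_2), \\[4pt]
dx_1 &= \Big[(w - k)\,x_1 + \tfrac{I_1 - I_2}{\sqrt{2}}\Big]\,dt + c\,dW, \qquad dW = \tfrac{1}{\sqrt{2}}\,(dW_1 - dW_2), \\
dx_2 &= \Big[-(k + w)\,x_2 + \tfrac{I_1 + I_2}{\sqrt{2}}\Big]\,dt + c\,dW', \qquad dW' = \tfrac{1}{\sqrt{2}}\,(dW_1 + dW_2).
\end{aligned}
```

When inhibition balances decay ($w = k$), $x_1$ has constant drift $(I_1 - I_2)/\sqrt{2}$ and noise amplitude $c$, which is a drift-diffusion process. Meanwhile $x_2$ relaxes toward $(I_1 + I_2)/\big(\sqrt{2}\,(k + w)\big)$ at rate $k + w$, so with strong decay and inhibition the choice is governed by $x_1$ alone.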


References

- Allopenna PD, Magnuson JS, Tanenhaus MK. Tracking the time course of spoken word recognition using eye movements: Evidence for continuous mapping models. Journal of Memory and Language. 1998;38(4):419–439.

- Altmann G, Kamide Y. Incremental interpretation at verbs: Restricting the domain of subsequent reference. Cognition. 1999;73(3):247–264. doi: 10.1016/s0010-0277(99)00059-1.

- Amunts K, Weiss PH, Mohlberg H, Pieperhoff P, Eickhoff S, Gurd JM, Zilles K. Analysis of neural mechanisms underlying verbal fluency in cytoarchitectonically defined stereotaxic space—the roles of Brodmann areas 44 and 45. Neuroimage. 2004;22(1):42–56. doi: 10.1016/j.neuroimage.2003.12.031.

- Badre D, Poldrack RA, Paré-Blagoev EJ, Insler RZ, Wagner AD. Dissociable controlled retrieval and generalized selection mechanisms in ventrolateral prefrontal cortex. Neuron. 2005;47(6):907–918. doi: 10.1016/j.neuron.2005.07.023.

- Badre D, Wagner AD. Left ventrolateral prefrontal cortex and the cognitive control of memory. Neuropsychologia. 2007;45(13):2883–2901. doi: 10.1016/j.neuropsychologia.2007.06.015.

- Basho S, Palmer ED, Rubio MA, Wulfeck B, Müller R-A. Effects of generation mode in fMRI adaptations of semantic fluency: paced production and overt speech. Neuropsychologia. 2007;45(8):1697–1706. doi: 10.1016/j.neuropsychologia.2007.01.007.

- Bedny M, McGill M, Thompson-Schill SL. Semantic adaptation and competition during word comprehension. Cerebral Cortex. 2008;18(11):2574–2585. doi: 10.1093/cercor/bhn018.

- Ben-Shachar M, Hendler T, Kahn I, Ben-Bashat D, Grodzinsky Y. The neural reality of syntactic transformations: Evidence from functional magnetic resonance imaging. Psychological Science. 2003;14(5):433–440. doi: 10.1111/1467-9280.01459.

- Birn RM, Kenworthy L, Case L, Caravella R, Jones TB, Bandettini PA, Martin A. Neural systems supporting lexical search guided by letter and semantic category cues: a self-paced overt response fMRI study of verbal fluency. Neuroimage. 2010;49(1):1099–1107. doi: 10.1016/j.neuroimage.2009.07.036.

- Bogacz R, Brown E, Moehlis J, Holmes P, Cohen JD. The physics of optimal decision making: a formal analysis of models of performance in two-alternative forced-choice tasks. Psychological Review. 2006;113(4):700. doi: 10.1037/0033-295X.113.4.700.

- Buckner RL, Petersen SE, Ojemann JG, Miezin FM, Squire LR, Raichle ME. Functional anatomical studies of explicit and implicit memory retrieval tasks. The Journal of Neuroscience. 1995;15(1):12–29. doi: 10.1523/JNEUROSCI.15-01-00012.1995.

- Chen Q, Mirman D. Interaction between phonological and semantic representations: Time matters. Cognitive Science. 2015;39(3):538–558. doi: 10.1111/cogs.12156.

- Dahan D, Magnuson JS, Tanenhaus MK, Hogan EM. Subcategorical mismatches and the time course of lexical access: Evidence for lexical competition. Language and Cognitive Processes. 2001;16(5-6):507–534.

- Dehaene S, Kerszberg M, Changeux JP. A neuronal model of a global workspace in effortful cognitive tasks. Proceedings of the National Academy of Sciences. 1998;95(24):14529–14534. doi: 10.1073/pnas.95.24.14529.

- Démonet J-F, Chollet F, Ramsay S, Cardebat D, Nespoulous J-L, Wise R, Frackowiak R. The anatomy of phonological and semantic processing in normal subjects. Brain. 1992;115(6):1753–1768. doi: 10.1093/brain/115.6.1753.

- Fedorenko E, Behr MK, Kanwisher N. Functional specificity for high-level linguistic processing in the human brain. Proceedings of the National Academy of Sciences. 2011;108(39):16428–16433. doi: 10.1073/pnas.1112937108.

- Fedorenko E, Thompson-Schill SL. Reworking the language network. Trends in Cognitive Sciences. 2014;18(3):120–126. doi: 10.1016/j.tics.2013.12.006.

- Fiebach CJ, Friederici AD, Müller K, Von Cramon DY. fMRI evidence for dual routes to the mental lexicon in visual word recognition. Journal of Cognitive Neuroscience. 2002;14(1):11–23. doi: 10.1162/089892902317205285.

- Fiebach CJ, Schlesewsky M, Lohmann G, Von Cramon DY, Friederici AD. Revisiting the role of Broca’s area in sentence processing: syntactic integration versus syntactic working memory. Human Brain Mapping. 2005;24(2):79–91. doi: 10.1002/hbm.20070.

- Fiez JA. Phonology, semantics, and the role of the left inferior prefrontal cortex. Human Brain Mapping. 1997;5(2):79–83.

- Fogelson N, Shah M, Scabini D, Knight RT. Prefrontal cortex is critical for contextual processing: evidence from brain lesions. Brain. 2009;132:3002–3010. doi: 10.1093/brain/awp230.

- Freedman M, Martin R. Dissociable components of short-term memory and their relation to long-term learning. Cognitive Neuropsychology. 2001;18(3):193–226. doi: 10.1080/02643290126002.

- Gabrieli JD, Desmond JE, Demb JB, Wagner AD, Stone MV, Vaidya CJ, Glover GH. Functional magnetic resonance imaging of semantic memory processes in the frontal lobes. Psychological Science. 1996;7(5):278–283.

- Gelfand JR, Bookheimer SY. Dissociating neural mechanisms of temporal sequencing and processing phonemes. Neuron. 2003;38(5):831–842. doi: 10.1016/s0896-6273(03)00285-x.

- Glaser YG, Martin RC, Van Dyke JA, Hamilton AC, Tan Y. Neural basis of semantic and syntactic interference in sentence comprehension. Brain and Language. 2013;126(3):314–326. doi: 10.1016/j.bandl.2013.06.006.

- Grodzinsky Y. The neurology of syntax: Language use without Broca’s area. Behavioral and Brain Sciences. 2000;23(1):1–21. doi: 10.1017/s0140525x00002399.

- Hagoort P. On Broca, brain, and binding: a new framework. Trends in Cognitive Sciences. 2005;9(9):416–423. doi: 10.1016/j.tics.2005.07.004.

- Hagoort P, Hald L, Bastiaansen M, Petersson KM. Integration of word meaning and world knowledge in language comprehension. Science. 2004;304(5669):438–441. doi: 10.1126/science.1095455.

- Hallett P. Eye movements (and human visual perception). Handbook of Perception and Human Performance. 1986;1:10–1.

- Huang J, Zhu Z, Zhang JX, Wu M, Chen H-C, Wang S. The role of left inferior frontal gyrus in explicit and implicit semantic processing. Brain Research. 2012;1440:56–64. doi: 10.1016/j.brainres.2011.11.060.

- January D, Trueswell JC, Thompson-Schill SL. Co-localization of Stroop and syntactic ambiguity resolution in Broca’s area: Implications for the neural basis of sentence processing. Journal of Cognitive Neuroscience. 2009;21(12):2434–2444. doi: 10.1162/jocn.2008.21179.

- Kaan E, Swaab TY. The brain circuitry of syntactic comprehension. Trends in Cognitive Sciences. 2002;6(8):350–356. doi: 10.1016/s1364-6613(02)01947-2.

- Kamide Y, Altmann G, Haywood SL. The time-course of prediction in incremental sentence processing: Evidence from anticipatory eye movements. Journal of Memory and Language. 2003;49(1):133–156.

- Kello CT, Plaut DC. Strategic control over rate of processing in word reading: A computational investigation. Journal of Memory and Language. 2003;48(1):207–232.

- Kiehl KA, Laurens KR, Liddle PF. Reading anomalous sentences: an event-related fMRI study of semantic processing. Neuroimage. 2002;17(2):842–850.

- Kuperberg GR. Neural mechanisms of language comprehension: Challenges to syntax. Brain Research. 2007;1146:23–49. doi: 10.1016/j.brainres.2006.12.063.

- Kuperberg GR, Holcomb PJ, Sitnikova T, Greve D, Dale AM, Caplan D. Distinct patterns of neural modulation during the processing of conceptual and syntactic anomalies. Journal of Cognitive Neuroscience. 2003;15(2):272–293. doi: 10.1162/089892903321208204.

- Kuperberg GR, McGuire PK, Bullmore ET, Brammer MJ, Rabe-Hesketh S, Wright IC, David AS. Common and distinct neural substrates for pragmatic, semantic, and syntactic processing of spoken sentences: an fMRI study. Journal of Cognitive Neuroscience. 2000;12(2):321–341. doi: 10.1162/089892900562138.

- Kuperberg GR, Sitnikova T, Lakshmanan BM. Neuroanatomical distinctions within the semantic system during sentence comprehension: evidence from functional magnetic resonance imaging. Neuroimage. 2008;40(1):367–388. doi: 10.1016/j.neuroimage.2007.10.009.

- Magnuson JS, Dixon JA, Tanenhaus MK, Aslin RN. The dynamics of lexical competition during spoken word recognition. Cognitive Science. 2007;31(1):133–156. doi: 10.1080/03640210709336987.

- Martin A, Haxby JV, Lalonde FM, Wiggs CL, Ungerleider LG. Discrete cortical regions associated with knowledge of color and knowledge of action. Science. 1995;270(5233):102–105. doi: 10.1126/science.270.5233.102.

- Martin RC, Cheng Y. Selection demands versus association strength in the verb generation task. Psychonomic Bulletin & Review. 2006;13(3):396–401. doi: 10.3758/bf03193859.