ABSTRACT

Touch is bound to the skin – that is, to the boundaries of the body. Yet, the activity of neurons in primary somatosensory cortex just mirrors the spatial distribution of the sensors across the skin. To determine the location of a tactile stimulus on the body, the body's spatial layout must be considered. Moreover, to relate touch to the external world, body posture has to be evaluated. In this review, we argue that posture is incorporated, by default, for any tactile stimulus. However, the relevance of the external location and, thus, its expression in behaviour, depends on various sensory and cognitive factors. Together, these factors imply that an external representation of touch dominates over the skin-based, anatomical one when our focus is on the world rather than on our own body. We conclude that touch localization is a reconstructive process that is adjusted to the context while maintaining all available spatial information.

KEYWORDS: Tactile, reference frames, localization, body posture, multisensory

Introduction

One of psychology’s central objects of investigation is the self. A number of findings in recent years have stressed the importance of sensory information for ongoing construction of “the self” (e.g., Blanke, 2012; Blanke, Slater, & Serino, 2015; Lenggenhager, Tadi, Metzinger, & Blanke, 2007). These findings suggest that, as humans, we define our self through our body. However, the conception of our own body, too, is a construction. For instance, where we perceive a body part to be located, or where we perceive tactile information to have occurred, is instrumental for deciding what belongs to our body (Botvinick & Cohen, 1998), where it ends (Graziano & Botvinick, 2002), and even for where we are located as a whole (Ehrsson, 2007; Lenggenhager et al., 2007). These examples demonstrate that perceptions and conceptions that are most central to us as humans, such as who and what we are, depend on our brains’ interpretation of basic sensory perceptual processing. The main focus of this review is on one aspect of such basic processing – the spatial processing of tactile information. Yet, before we delve into these basic processes, we first expand on body processing as a whole, to make explicit the context and relevance of tactile spatial processing for many complex, body-related cognitive abilities.

If the perceived location of a touch on the body and the boundaries of the body do not coincide, the brain sometimes simply adjusts the perceived configuration or shape of the body to re-incorporate the touch into the body. A configural change is illustrated by the well-known rubber hand illusion: Vision of a simultaneously stroked rubber hand induces a shift in the perceived location of the stroking on the real hand towards the rubber hand, and participants frequently perceive the artificial hand as their own (Botvinick & Cohen, 1998; Ehrsson, 2007); doing the same while the real hand is visible, lying next to the rubber hand, can even create the impression of owning a third arm, thus, reconfiguring the brain’s inventory of the parts that make up the body (Guterstam, Petkova, & Ehrsson, 2011). The brain’s flexibility in perceiving body shape is strikingly evident in the Pinocchio illusion (Lackner, 1988). In this illusion, participants close their eyes and place their finger on their nose. Then, the tendon of the biceps is stimulated with strong vibration; this manipulation creates the illusion of the arm moving outward. Because the finger is touching the nose, the brain creates the impression of the nose, finger, or both, being elongated, in some participants by up to 30 cm. These phenomena show how highly flexible our perception of the body is, and how much it depends on the current tactile input – a surprising feature of cognition, given that our bodies are actually quite stable entities, and we do not usually obtain additional arms, or grow massively long noses. The brain’s disposition for such perceptual flexibility may stem from the fact that we do not possess any direct sensory input about the shape of the body (Longo & Haggard, 2010). Instead, the inventory of body parts and their shape must be inferred from the sensory information we receive from touch, proprioception, audition, and vision.
Because our tactile sensors are aligned along the skin – that is, the border between body and world – tactile input may appear to be the most valid sensory information available for perceiving and defining the body (Medina & Coslett, 2010). However, touch is by no means organized in a manner that makes the outcome of tactile perception easily predictable from the input. Already at the first stage of cortical tactile processing, the primary somatosensory cortex, we observe strong distortions: Tactile receptors are distributed with varying density across the skin and, accordingly, target varying numbers of neurons in the primary somatosensory cortex’s tactile homunculus (Penfield & Boldrey, 1937). This anatomical imbalance affects the perceived size of tactile objects, such that objects are perceived as bigger on more densely innervated regions of the skin (Green, 1982; Weber, 1978). Thus, already at early processing stages, tactile input does not reflect the objectively measurable physical state of the world and, in turn, of the body. Although the brain partly compensates for this distortion, non-matching sensory input on body shape that stems from vision (Taylor-Clarke, Jacobsen, & Haggard, 2004) and proprioception (de Vignemont, Ehrsson, & Haggard, 2005) induces erroneous perceptual adjustments: It is possible to generate the illusion that a body part has increased in size by presenting distorted visual images of the body part or incorrect proprioceptive information as in the Pinocchio illusion; such manipulations alter the perception of tactile distance at the affected body part (de Vignemont et al., 2005; Taylor-Clarke et al., 2004). At first glance, it may seem counterintuitive that skin-based, tactile information is combined with visual and proprioceptive information in tasks for which tactile input alone would seem sufficient.
This is especially true when the end result is less precise than if the additional sensory information had been ignored, as in the case of the aforementioned illusions. But a closer look reveals that vision and proprioception usually add critical information that is not available from skin-based tactile information alone, such as information about body posture. Only with posture information is it possible to infer where the part of the skin that felt a touch is located in space, allowing, for instance, goal-directed actions towards the identified location. Even when localization of the touched skin in space would not be necessary, such as when the size of the tactile stimulus on the skin must be determined, the use of sensory information besides touch may be advantageous. For instance, it may be convenient to judge object size visually rather than just inferring it from tactile input (Ernst & Banks, 2002). Thus, the brain’s default strategy to integrate many different sensory inputs usually grants improved perception. Yet, this strategy will nonetheless sometimes lead to errors and suboptimal inference, which in turn can be exploited to make inferences about the brain’s processing principles.

Note, however, that the strategy of using information from another sensory modality requires that the location be translated from a purely tactile location into one usable by the added sense. In the case of looking at the touched location to judge object size, stimulus location must be available to the visual system, and to make the saccade towards the touched object, the brain must first integrate the skin location of the touch with body posture. This transformation of spatial information from the initial skin-based reference frame into another reference frame is termed tactile remapping (Driver & Spence, 1998b). The reference frame that results from remapping is regularly called an “external” reference frame, maybe owing to the notion that the transformation has abstracted from a location on the body into one that can be treated like that of any other non-body – that is, external – object. The term external reference frame is commonly used as a surrogate for several possible egocentric reference frames, such as gaze-centred or trunk-centred ones. Note that “external” should not be understood as implying independence of the body. Rather, the resulting external reference frame is usually understood as egocentric (that is, body-related), and not as allocentric (that is, purely world-related; Heed, Buchholz, Engel, & Röder, 2015). Notably, the external coordinates of touch might not necessarily be bound to the body, because under certain circumstances tactile stimuli are perceived to be located outside the body (L. M. Chen, Friedman, & Roe, 2003). For this reason, we prefer the term external to body-centred reference frame.

Remapping appears to be an automatic process (Azañón, Longo, Soto-Faraco, & Haggard, 2010; Badde, Heed, & Röder, 2014; Kitazawa, 2002; Röder, Rösler, & Spence, 2004), implying that every touch to our skin is remapped into an external reference frame, whether this transformation is currently necessary or not. Consequently, it is not at all clear whether the spatial information about touch that is used in constructing the body, as, for instance, to define the body’s borders and shape or to assess its parts, is coded with respect to the skin or with respect to the external space.

In this review, we argue that in fact tactile localization often relies on a combination of several spatial reference frames, rather than just referring to locations either on the skin or in external space. Even though using multiple pieces of spatial information is advantageous for tactile localization in most situations, it presents an additional challenge to the brain when establishing the relation between touch and the body. To elucidate the resulting uncertainty about the information incorporated in tactile spatial estimates, we identify factors that modulate the weighting of the different spatial representations of touch. From these multiple factors emerges the view that the different sources of tactile information are weighted according to the context: If the focus is on the body, skin-based information will be used as the dominant source of spatial information. In contrast, if the focus is on interacting with the external world, externally coded information will dominate the perception of touch location. Thus, tactile information does not define “the body” – that is, a single representational entity. Instead, tactile information creates multiple views of the body, depending on the situation at hand.

Experimental approaches to investigating reference frames

To explore the role of postural and visual information in tactile localization, and to test which reference frames are used for coding the tactile location estimate, participants are usually asked to localize touch while adopting different postures. For example, while judging the location of stimuli on the hand or on the torso, participants might be asked to turn their eyes or their head in different directions. The aim of such posture changes is to manipulate the location of the tactile stimulus relative to the axes of the reference frame anchored to the manipulated body part. Spatial relations between a stimulus’s skin location and the rest of the body should have no effect if processing relied exclusively on a skin-based reference frame; accordingly, manipulations of posture should not change task performance. In turn, if a posture manipulation did induce changes in task performance, this would be an indication that the manipulated reference frame has been used to code tactile stimulus location. For example, the identity of a touched body part could theoretically be reported by relying on skin-based information alone. Yet, localization responses are modulated by posture, indicating an influence of external reference frames.

Two types of posture manipulation have been especially popular in tactile research: limb crossing and gaze shifts. Limb crossing induces a conflict between the anatomical and external left–right location of a touch. For instance, a touch to the right hand (anatomical reference frame) is located on the left side (external reference frame) in the crossed posture (Driver & Spence, 1998b; Yamamoto & Kitazawa, 2001a). Although crossing effects indicate the use of an external reference frame, the specific anchor of this reference frame, such as the eyes, head, or trunk, is often not further specified (Riggio, de Gonzaga Gawryszewski, & Umilta, 1986). In contrast, gaze shifts explicitly test for tactile localization within an external reference frame anchored to the line of gaze – that is, the direction of the eyes as a sum of eye position in the head and head position on the trunk. Some studies have also investigated the separate relevance of eye (Harrar & Harris, 2009, 2010) and head position (Ho & Spence, 2007; Pritchett & Harris, 2011). A manipulation of eye, head, or gaze should influence tactile localization only if the location of the touch is encoded with respect to these body parts.
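The logic of the limb-crossing manipulation can be made concrete with a minimal sketch. It is only an illustration of the left–right conflict described above, not a model taken from any of the cited studies; the names `Touch`, `external_side`, and `codes_conflict` are hypothetical, and space is reduced to a binary left/right code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Touch:
    """A touch on one hand (illustrative, binary left/right coding)."""
    hand: str      # anatomical code: "left" or "right" hand
    crossed: bool  # True if the hands are crossed over the body midline


def external_side(touch: Touch) -> str:
    """Side of space in which the touched hand currently lies."""
    if not touch.crossed:
        return touch.hand
    # With crossed hands, each hand lies in the opposite hemispace.
    return "left" if touch.hand == "right" else "right"


def codes_conflict(touch: Touch) -> bool:
    """True if the anatomical and external left-right codes disagree."""
    return external_side(touch) != touch.hand


# A touch to the right hand in the crossed posture lies on the left side.
example = Touch(hand="right", crossed=True)
print(external_side(example), codes_conflict(example))
```

In this simplified coding, the two codes agree for every uncrossed touch and disagree for every crossed touch, which is why any performance difference between postures points to the use of an external code.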

Demonstrations of tactile remapping and external coding

Initial reports of tactile remapping from skin into external space described the effects of hand crossing in patients suffering from hemispatial neglect and tactile extinction (Aglioti, Smania, & Peru, 1999; Smania & Aglioti, 1995). These patients do not detect tactile stimuli on the side contralateral to their lesion, either generally (neglect) or when the stimulus is presented together with a stimulus on the other body side (extinction). Importantly, the deficit can affect the side of space rather than the body side contralateral to the lesion: When some patients crossed their hands, stimuli were neglected when they were presented to the ipsilesional rather than the contralesional hand – that is, the hand that lay in contralesional space (Aglioti et al., 1999). This finding implies that these patients based their detection on the remapped, external representations of the tactile stimuli. Similarly, cross-modal extinction – that is, failure to report a tactile stimulus when a visual stimulus is presented concurrently – was shown to depend on the proximity of visual information and tactile events in space (Làdavas, di Pellegrino, Farnè, & Zeloni, 1998).

Experiments in healthy participants further explored remapping effects in the context of cross-modal exogenous cueing of spatial attention. In one line of experiments, a cue is presented abruptly on one side. The cue draws attention to its side even if it is entirely task-irrelevant. This effect can be demonstrated with speeded elevation judgments (up versus down) of a target that is presented on the same side as, or on the other side than, the cue. Tactile cues facilitate visual elevation judgments on the same side and vice versa. Crucially, cues facilitate judgments on the same side of space: When the hands are crossed, visual judgments are sped up by tactile cues on the hand opposite to the one that speeds them up when the hands are uncrossed – that is, by cues on the hand that lies on the same side of space as the visual target (Driver & Spence, 1998b, 1998a; Kennett, Eimer, Spence, & Driver, 2001; Kennett, Spence, & Driver, 2002). Thus, cross-modal cueing of attention operates in external space. Analogous hand-crossing effects have been demonstrated for spatial and non-spatial (e.g., temporal) tactile–visual interactions (Holmes & Spence, 2006; Spence, Pavani, & Driver, 2004; Spence, Pavani, Maravita, & Holmes, 2004; Spence & Walton, 2005) as well as for tactile–auditory interactions (Bruns & Röder, 2010a, 2010b). Thus, various results indicate that cross-modal interactions rely on the remapped, external representation of touch.

In the cross-modal cueing paradigm, attention effects were used as a window into the spatial representation of touch. A similar approach of measuring spatial attention to investigate tactile remapping has been used in combination with event-related potentials (ERPs) in the electroencephalogram (EEG; e.g., Eimer, Cockburn, Smedley, & Driver, 2001). In such studies, participants direct attention to one side; tactile stimuli delivered to the hand of the attended side usually elicit enhanced ERP amplitudes in the range of 80–300 ms, such as the P100 and N140 ERP deflections (Eimer & Forster, 2003). When participants crossed their hands, these attentional effects were regularly reduced or not observed at all (Eimer, Forster, & Velzen, 2003). These effects of posture on ERP correlates of spatial attention were interpreted to indicate that spatial attention is not guided by a skin-based reference frame alone (Eimer, Forster, Fieger, & Harbich, 2004; Eimer et al., 2003; Gillmeister & Forster, 2012; Heed & Röder, 2010).

The use of attention-based experimental paradigms, though fruitful, has also been criticized. Because the approach relies on the modulation of attention-related ERP activity, any conclusions drawn from such experiments may not be generalizable to situations in which attention is not a critical factor. Furthermore, activity common to all conditions independent of attention cannot be detected, and, thus, experiments using attentional manipulations as an investigative tool may render an incomplete picture of the processes involved in spatial processing. Therefore, some studies have addressed these limitations by implementing experimental paradigms that assess the effect of hand crossing on tactile ERPs independent of attention. These studies, too, have reported ERP effects of hand posture, indicating that different spatial reference frames are relevant in tactile processing independent of the need to direct spatial attention. Furthermore, these reference frame effects were evident in similar time intervals to those based on manipulating spatial attention (Rigato et al., 2013; Soto-Faraco & Azañón, 2013).

Probably the most widely used experimental paradigm that has been combined with limb crossing is the tactile temporal order judgment (TOJ) task (Shore, Spry, & Spence, 2002; Yamamoto & Kitazawa, 2001a; for a review see Heed & Azañón, 2014). In the TOJ task, participants indicate the temporal order of two tactile stimuli that are applied in rapid succession to different locations, usually one to each hand. TOJ performance is impaired when the hands are crossed rather than uncrossed (Azañón & Soto-Faraco, 2007; Azañón, Stenner, Cardini, & Haggard, 2015; Badde, Heed, et al., 2014; Badde, Röder, & Heed, 2014; Badde, Röder, & Heed, 2015; Begum Ali, Cowie, & Bremner, 2014; Cadieux, Barnett-Cowan, & Shore, 2010; Craig, 2003; Craig & Belser, 2006; Heed, Backhaus, & Röder, 2012; Kitazawa et al., 2008; Kóbor, Füredi, Kovács, Spence, & Vidnyánszky, 2006; Ley, Bottari, Shenoy, Kekunnaya, & Röder, 2013; Moseley, Gallace, & Spence, 2009; Nishikawa, Shimo, Wada, Hattori, & Kitazawa, 2015; Pagel, Heed, & Röder, 2009; Roberts & Humphreys, 2008; Röder et al., 2004; Sambo et al., 2013; Schicke & Röder, 2006; Shore et al., 2002; Soto-Faraco & Azañón, 2013; Studenka, Eliasz, Shore, & Balasubramaniam, 2014; Takahashi, Kansaku, Wada, Shibuya, & Kitazawa, 2012; Wada et al., 2012; Wada, Yamamoto, & Kitazawa, 2004; Yamamoto & Kitazawa, 2001a). This performance deficit is presumed to originate from the conflict between anatomical and external left–right coordinates in the crossed posture (the right hand lying in left hemispace). Additionally, tactile TOJs with uncrossed hands have been reported to be modulated by the distance between stimulated body parts (Gallace & Spence, 2005; Roberts, Wing, Durkin, & Humphreys, 2003; Shore, Gray, Spry, & Spence, 2005). Crucially, the TOJ task could theoretically be solved with the participant relying entirely on skin-based information; posture of the hands can (in theory) be discounted when deciding which of the two stimuli occurred first.
The fact that posture, instead, strongly influences TOJ performance is commonly interpreted to indicate that tactile remapping is performed automatically (Badde, Heed, et al., 2014; Badde, Heed, & Röder, 2016; Badde, Röder, et al., 2016; Kitazawa, 2002; Röder, Heed, & Badde, 2014; Röder et al., 2004; Yamamoto & Kitazawa, 2001a).

In the TOJ task, localization is tested indirectly through the requirement of ordering stimuli in time and of then reporting the location of the first stimulus. Yet, posture influences performance also in some tasks that do not require any spatial processing. For example, the pattern of masking effects leading to impaired touch detection in the presence of additional tactile stimuli varies with the posture of the stimulated hands (Tamè, Farnè, & Pavani, 2011). Similarly, tactile stimuli that ease non-spatial visual discrimination at the same position cue locations in an external rather than anatomical reference frame (Azañón, Camacho, & Soto-Faraco, 2010).

However, tactile remapping becomes evident also when posture manipulations are combined with direct localization of tactile targets. For instance, participants’ localization of a tactile stimulus to one of the hands was found to be slower in crossed than in uncrossed postures (Bradshaw, Nathan, Nettleton, Pierson, & Wilson, 1983), and participants reported a single tactile stimulus on the wrong hand in 5% of trials when the hands were crossed (Badde, Heed, et al., 2016; Figure 1C). In line with these reports, saccades to tactile stimuli on crossed hands sometimes show curved trajectories (Groh & Sparks, 1996; Overvliet, Azañón, & Soto-Faraco, 2011), suggesting that participants first aim their saccade to the incorrect hand and then correct in flight. In another experimental approach, gaze-centred reference frames were investigated by asking participants to indicate the locations of tactile stimuli to the arm by referring to a visual reference. Location estimates of tactile stimuli on the arm were shifted towards the location of gaze (Harrar & Harris, 2009, 2010, see also Figure 2 adapted from Mueller & Fiehler, 2014a), indicating the use of a gaze-centred, external reference frame.

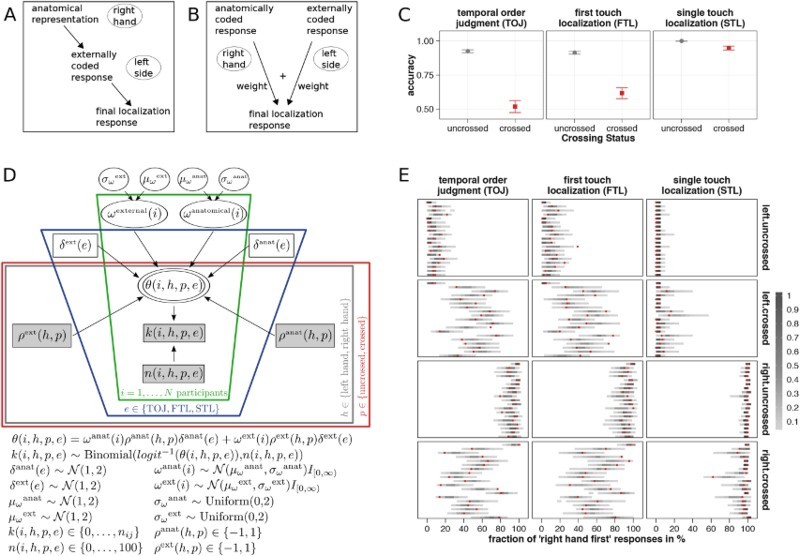

Figure 1.

Integration account of crossing effects in touch localization. (A) Serial account of touch localization. Tactile locations are remapped from a skin-based anatomical reference frame into an external reference frame. Tactile location estimates are exclusively based on these externally coded representations. (B) Integration account of touch localization. Anatomical and external tactile codes exist in parallel. Tactile location estimates are based on the weighted integration of both response codes. (C) Crossing effects on accuracy in three tactile localization tasks. In the temporal order judgment (TOJ) and first touch localization (FTL) tasks, two stimuli were successively applied, one to each hand. Participants were instructed to judge the temporal order of the stimuli and to press the button underneath the hand that received the first touch (TOJ), or to indicate the location of the first touch and ignore the second touch (FTL). In the single touch localization task (STL), participants pressed the button underneath the hand that received a single stimulus. All three tasks were executed with crossed (red) and uncrossed (grey) hands by the same participants. Crossing effects in all three tasks were pairwise correlated. Error bars show standard errors of the mean. (D) Graphical description of the integration model. The probability of localizing a touch to the right hand in a single trial, $\mathrm{logit}^{-1}(\theta)$, is derived from the stimulus’s weighted anatomical and external left–right response codes ($\rho_{\mathrm{anat}}$ and $\rho_{\mathrm{ext}}$). For each participant ($i$), the weight parameters ($\omega_{\mathrm{anat}}$ and $\omega_{\mathrm{ext}}$) were drawn from population distributions $\mathcal{N}(\mu_{\mathrm{anat}}, \sigma_{\mathrm{anat}})$ and $\mathcal{N}(\mu_{\mathrm{ext}}, \sigma_{\mathrm{ext}})$, the parameters of which were concurrently estimated by the model. The individual weights were adjusted to the three different tasks (TOJ, FTL, and STL) by non-individual task context parameters ($\delta_{\mathrm{anat}}$ and $\delta_{\mathrm{ext}}$). Weights varied across individuals (green frame), but the task context parameters did not (blue frame). Crucially, in the integration model none of the free parameters (non-shaded boxes) varied across postures (red frame); that is, performance was explained by common weighting for all postures. (E) Goodness of fit of the integration model’s predictions of each participant’s performance. Posterior predictive distributions (grey bars) – that is, frequency distributions of the fraction of “right hand” responses as predicted by the model – are plotted with the observed fractions (red dots). Data from three tactile localization tasks are shown separately for each hand posture and stimulated hand. Each line represents one participant. Figures were adapted from Badde, Heed, et al. (2016). With permission of Springer.

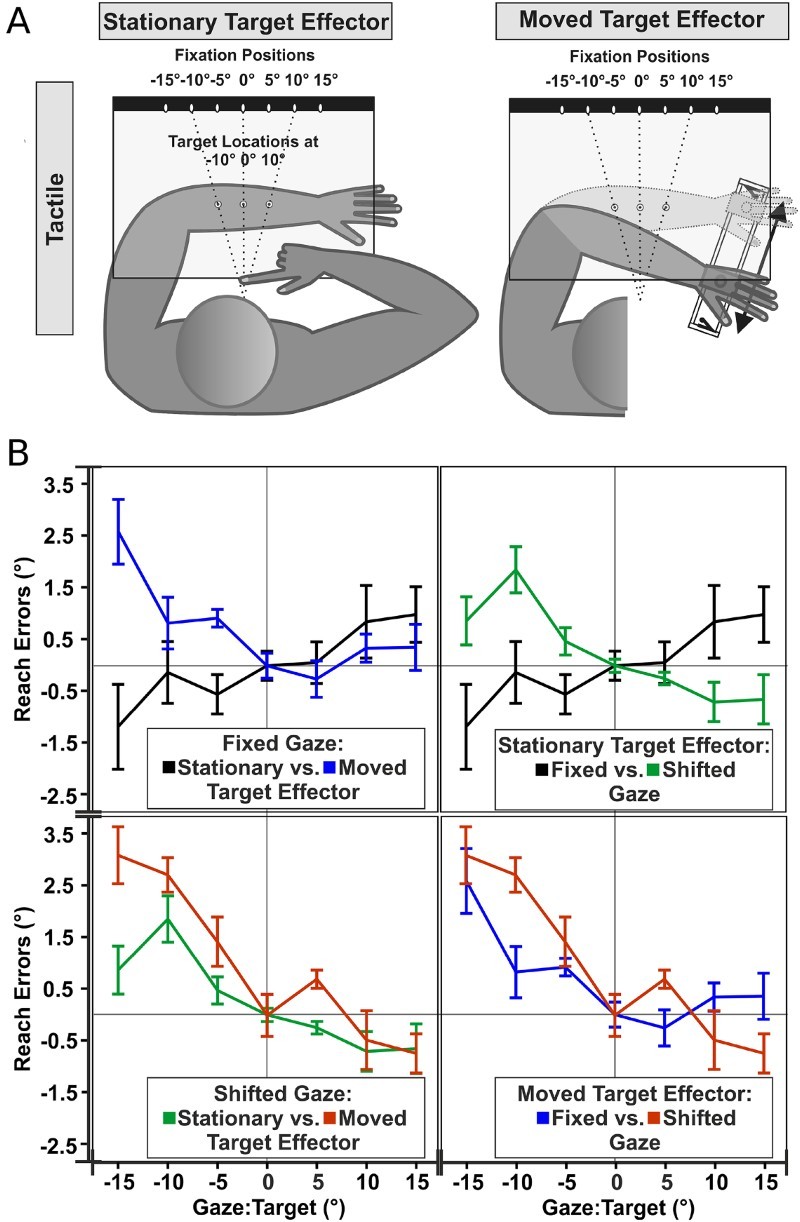

Figure 2.

Motor effects on gaze-dependent coding of tactile targets. (A) Conditions adapted from Mueller and Fiehler (2014a). Participants fixated one of the fixation lights (white ovals). In the stationary condition, tactile stimuli were applied to one of three possible locations on the left forearm (grey circles), which remained stationary at the target position. In the movement condition, the arm was always moved before and after tactile stimulation, guided by a slider on a rail. Responses were given by pointing with the right hand. (B) Mean horizontal reach errors of the 2 gaze conditions (fixed vs. shifted) and the 2 modes of target presentation (stationary vs. moved) as a function of gaze relative to target. Reach errors were collapsed for the 3 target locations and were averaged across subjects.

In sum, evidence for the external coding of touch, established by remapping, has been found in various paradigms and even for very simple localization tasks. Yet, posture manipulations usually induced an (often large) change of the response pattern, rather than a complete shift or reversal. Thus, these studies suggest that external tactile coding influences rather than determines performance in many tasks.

Demonstrations of anatomical, skin-based tactile coding

The large number of phenomena demonstrating tactile remapping could lead to the impression that touch is always coded with respect to external space (Kitazawa, 2002; Röder et al., 2004), and, in fact, some experimental findings support this conclusion (Azañón, Camacho, et al., 2010). Yet, other studies have found that participants’ task performance depended on skin location but not on posture. Such results suggest that, in these contexts, touch location was coded exclusively in an anatomical reference frame.

Tactile cues inhibit, rather than facilitate, tactile detection at the cued location, when the cue–target interval is quite long (some 300–1500 ms; Posner & Cohen, 1984; Tassinari & Campara, 1996). Similarly, detection of tactile targets on the fingers was impaired when a preceding tactile cue had occurred on an adjacent finger. However, this inhibitory effect was absent when the fingers of the two hands were intertwined: A cue on the finger of the other hand that was now a direct neighbour of the tactually stimulated one did not influence detection at the finger now adjacent in space (Röder, Spence, & Rösler, 2002). Thus, inhibition appears to have depended on anatomical coding alone.

Similarly, a tactile variant of the Simon effect was unaffected by posture (Medina, McCloskey, Coslett, & Rapp, 2014). The Simon effect describes the finding that spatially defined responses to non-spatial target characteristics (e.g., whether a target is red or blue) are affected by the congruence of target location and the side of the response. In the tactile version of these experiments, participants discriminated between high- and low-intensity stimuli. Each intensity was assigned to one of the feet; stimuli were presented to the left and right hands, so that the side of stimulation and the side of the response foot could be congruent or incongruent. Responses were modulated by the irrelevant location of the tactile stimuli: Participants tended to respond faster with the right foot if the stimulus had been applied to the right hand. Critically, this mapping between right hand and right foot was independent of whether the hands were uncrossed or crossed (Medina et al., 2014).

Finally, different types of temporal judgments about two spatially distinct, successive tactile stimuli were affected by the somatotopic distance between the stimuli (same hand vs. different hands), but not by the spatiotopic distance between them (Kuroki, Watanabe, Kawakami, Tachi, & Nishida, 2010).

In sum, although an external reference frame is relevant for many tasks that involve tactile stimuli, human participants appear to rely solely on a skin-based reference frame in some experimental contexts. Thus, the use of an external reference frame in tactile processing may not be universal and, accordingly, requires explanation.

Concurrent use of skin-based and external reference frames

Touch is originally organized in a homuncular, skin-based fashion in primary somatosensory cortex and must then be transformed into external space. As a consequence, tactile localization has often been conceptualized as a serial process (see Figure 1A). According to this idea, touch is first coded with respect to the skin, then remapped, and subsequently stored only in external coordinates. Thus, in the serial logic, the anatomical code is just an interim stage that is abandoned after remapping is complete. One key result that could lend support to the serial viewpoint has been that a tactile cue stimulus on the hand attracts attention in anatomical space for short cue–target time intervals, but in external space for long cue–target intervals (Azañón & Soto-Faraco, 2008). However, this result can equally well be explained by assuming that the weighting of anatomical and external reference frames, rather than their availability, varied over time (Azañón & Soto-Faraco, 2008; see also Ley, Steinberg, Hanganu-Opatz, & Röder, 2015).

Electrophysiological studies speak in favour of the latter idea. In one study, participants placed their uncrossed or crossed hands near the feet (Heed & Röder, 2010). In each block, they attended one limb and received a train of stimuli, one at a time, on all four limbs. Participants reported deviant stimuli on the attended limb. When participants attended, say, the right foot, stimuli on the right hand evoked a higher ERP amplitude in the time range of 100–140 ms post-stimulus than stimuli on the left hand, presumably because right hand and right foot are anatomically closer to each other than left hand and right foot. Additionally, the stimulus evoked a higher ERP amplitude in the same time interval when the right hand was held near the attended foot than when it was crossed away to the other foot. This latter effect reflected an influence of the spatial distance between attended and stimulated locations. Thus, both anatomical and external reference frames affected the amplitude of tactile ERPs in this study.

In another set of studies, participants had to fixate one of their ring fingers; they then received a tactile stimulus on an index or a little finger, not necessarily of the same hand. Thus, stimuli could occur either at the left or right side of the body while being to the left or to the right with respect to gaze. After a short delay, participants made a saccade (Buchholz, Jensen, & Medendorp, 2011) or a pointing movement (Buchholz, Jensen, & Medendorp, 2013) towards the touched location. Oscillatory brain activity, measured with magnetoencephalography (MEG), reflected anatomical spatial coding in the beta frequency range and external spatial coding in the alpha frequency range. Both types of activity were observed in parallel, again suggesting that anatomical and external reference frames are active concurrently, and that the skin-based spatial location of touch is not abandoned after the transformation into an external reference frame. Additionally, parallel coding of anatomical and external spatial information in beta and alpha band activity, respectively, was also observed in a tactile attention task that did not require any movements towards the tactile stimuli (Schubert et al., 2015).

In sum, electrophysiological evidence further corroborates the suggestion that spatial information is maintained in different reference frames in parallel.

Integration of anatomically and externally coded information

The finding that tactile information is concurrently available in different spatial formats provides the basis for an alternative interpretation of remapping effects. In the serial view, only the external representation of touch is available after remapping has been accomplished. Consequently, performance impairments associated with posture changes are thought to reflect errors in the remapping process (Yamamoto & Kitazawa, 2001a), and variations in task performance are associated with variations in the quality of remapping (Gallace, Tan, Haggard, & Spence, 2008). If, however, the external representation of touch is not the only available representation, tactile localization might as well be based on both anatomically and externally coded information. In this alternative view, localization errors such as those that create the crossing effect occur when conflicting anatomical and external codes are integrated (Badde, Heed, et al., 2014; Badde, Heed, et al., 2016; Badde, Röder, et al., 2014; Badde, Röder, et al., 2015; Shore et al., 2002). Variations in the size of posture effects are then interpreted as indicative of the weighting of anatomical and external information (Badde, Heed, et al., 2016; Buchholz, Goonetilleke, Medendorp, & Corneil, 2012; Eardley & van Velzen, 2011). The two accounts differ in their implicit and explicit assumptions. Whereas performance variations due to “improved remapping” can only occur if remapping was incorrect to start with, it is only useful to integrate anatomical and external coordinates if, in general, both provide valid information towards the estimate of touch location. The two accounts consequently reflect different views of the brain: Errors in tactile localization occur either because routine processes like remapping deliver results of strongly varying quality, or because the brain integrates all available information, resulting in occasional, non-optimal results. We have compared these two accounts by modelling the data from three different crossing experiments.

Integration model

Participants localized tactile stimuli on their left and right hands in three slightly different task settings: (a) report the TOJ of two tactile stimuli by a button press with the hand that received the first stimulus; (b) report the location of the first of two stimuli by a button press with the hand that received this stimulus, while the second stimulus had to be ignored (note that the only difference to the TOJ task is that the latter explicitly asks participants to compare the two stimuli in time); and (c) report the location of a single tactile stimulus by a button press with the hand that received it. In all three localization tasks, localization accuracy declined in crossed compared to uncrossed hand postures. The size of the individual crossing effects was correlated across tasks (Badde, Heed, et al., 2016; Figure 1C), supporting the assumption that crossing effects were caused by a common underlying mechanism in all three tasks. Notably, no crossing effect was observed in a fourth task that required a stereotyped response to report the detection (but not location) of a tactile stimulus, indicating that this common mechanism was indeed related to stimulus localization. We developed a probabilistic model that describes localization responses based on the integration of anatomical (left or right hand) and external (left or right side of space) localization responses. The resulting model accounts for tactile localization errors with crossed hands exclusively by weighted integration of the incongruent anatomical and external localization responses (Badde, Heed, et al., 2016; see Figure 1B), which are assumed to be available with sufficient precision in uncrossed and crossed postures. In the uncrossed posture, both reference frames favour the same response, so that using both kinds of spatial information provides redundant information for the correct location and consequently improves performance.
In the model, the probability of the correct response accordingly depends on the added weight of anatomical and external response codes. In the crossed posture, tactile stimuli to the right hand (anatomically coded response) are located in the left hemispace (externally coded response), so that both potential responses are in conflict. Accordingly, in the model the difference between the anatomical and external weights determines the final response rates in the crossed posture. In different variants of the model, these weights were allowed to vary in various theoretically plausible ways across individuals, tasks, and posture. Each of these integration model variants was fitted to the experimental data from the three tactile localization tasks. Models were then compared using a Bayesian information criterion that relates the amount of explained variance to the number of free parameters. Model comparisons suggested that individual participants used the same reference frame weights for crossed and uncrossed postures. Further, individual differences in weighting across the three tasks were systematic across participants – that is, they could be explained by fitting a variable on the group level rather than having to assume subject-specific parameters. Despite these reductions of the parameter space, the model achieved a very good fit of the data (R² = .96; Figure 1C), supporting the notion that spatial integration can nicely explain performance changes of tactile localization across different body postures (Badde, Heed, et al., 2016).
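The core logic of the integration account can be illustrated with a minimal sketch. It is not the published model: the function name `p_correct`, the logistic link, and the example weights are assumptions made here for illustration only. The sketch only encodes the two statements above, namely that the weights add in the uncrossed posture and that their difference determines responses in the crossed posture.

```python
import math


def p_correct(w_anat: float, w_ext: float, crossed: bool) -> float:
    """Probability of responding with the stimulated hand.

    Assumption (not from the source): the weights are combined on a
    log-odds scale and mapped to a probability with a logistic function.
    """
    if crossed:
        # Anatomical and external codes favour opposite responses,
        # so only the difference between the weights counts.
        evidence = w_anat - w_ext
    else:
        # Both codes favour the same response, so their weights add.
        evidence = w_anat + w_ext
    return 1.0 / (1.0 + math.exp(-evidence))


# Hypothetical weights, identical in both postures.
w_anat, w_ext = 1.0, 0.5
crossing_effect = p_correct(w_anat, w_ext, False) - p_correct(w_anat, w_ext, True)
```

With identical weights in both postures, accuracy is lower with crossed hands whenever the external weight is positive, so a crossing effect emerges without assuming any loss of precision in either code.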

To contrast our integration account with serial, remapping-based interpretations of crossed-hand performance deficits, we designed a model that incorporated assumptions often made in the literature on crossing effects. In this alternative model, responses were based on the external representation of touch alone, but the reliability of these representations varied between crossed and uncrossed postures. Thus, according to this model, crossing effects did not arise because conflicting anatomical information was used for the response. Instead, the external coordinates of touch were assumed to be less reliable in crossed than in uncrossed postures. This alternative model was also fitted to the data from the three tactile localization tasks. Model comparisons performed in the same way as described above confirmed that the integration model was superior to the alternative model in explaining participants’ behaviour in our three tasks. Thus, modelling supported the notion that localization errors in crossed postures arise from the integration of conflicting spatial representations, and not from a quality reduction in the remapping process.
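As an illustration of this kind of model comparison, the following sketch computes the Bayesian information criterion in its standard likelihood-based form. The exact variant used in the original analysis is not specified here, and the log-likelihoods, parameter counts, and number of observations below are hypothetical placeholders, not values from the study.

```python
import math


def bic(log_likelihood: float, n_params: int, n_obs: int) -> float:
    """Standard BIC: goodness of fit penalized by model complexity.

    Lower values indicate a better trade-off between fit and the
    number of free parameters.
    """
    return n_params * math.log(n_obs) - 2.0 * log_likelihood


# Hypothetical fits of two competing models to the same n_obs data points.
n_obs = 60
bic_integration = bic(log_likelihood=-120.0, n_params=4, n_obs=n_obs)
bic_alternative = bic(log_likelihood=-135.0, n_params=4, n_obs=n_obs)

# The model with the lower BIC is preferred.
preferred = "integration" if bic_integration < bic_alternative else "alternative"
```

Because the penalty grows with the number of free parameters, a model variant with fewer weights can be preferred even if it fits slightly worse, which is the rationale for reducing the parameter space as described above.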

Behavioural evidence for the integration of anatomically and externally coded information

Probabilistic modelling favoured the integration view over the non-integration, serial view. Indeed, integrating all available information is advantageous in most situations outside the laboratory, as anatomical and external reference frames will usually be aligned at least roughly, for example with respect to left and right. Thus, reliance on more than one spatial code leads to a gain of redundant information that can usually be employed to reduce the variance of the combined sensory estimate (Attneave, 1954). Consequently, the integration view predicts that performance should be better in situations that do not contain spatial conflict, as, for instance, when the hands are uncrossed. Yet, if the beneficial integration of information fails, or is prevented, performance should decline.

Evidence for this idea that integration should be beneficial in situations in which anatomical and external reference frames are aligned comes from studies on the development of tactile remapping. Children aged 5.5 years and older showed a TOJ crossing effect; children younger than 5.5 did not (Pagel et al., 2009). At first glance, this finding is surprising, because it has been demonstrated that infants can access external tactile coordinates already at the age of about 10 months (Bremner, Holmes, & Spence, 2008; Rigato, Begum Ali, van Velzen, & Bremner, 2014). Strikingly, the performance difference between younger and older children originated from a performance improvement in the uncrossed posture in the older children. The combination of these results can be explained by assuming that young children based their responses in the TOJ task in both postures solely on anatomical information. This account implies that infants and young children do not yet automatically integrate anatomical and external information (Röder et al., 2014; Röder, Pagel, & Heed, 2013), although both types of information are generally available to them (Begum Ali et al., 2014). When integrated processing has been established – suggested to begin at about 6 years of age by the TOJ results – performance in non-conflict situations improves through the additional, redundant, external spatial information. Thus, these developmental findings corroborate the key assumption of the integration model, namely that tactile localization weighs different kinds of information in order to improve localization performance in most normal (i.e., non-experimental) situations.

This key assumption is further supported by experiments that introduce several crossings at once. For instance, TOJ crossing effects arise not just when stimuli are applied to the hands, but also when touch is applied to the tips of hand-held sticks (Yamamoto & Kitazawa, 2001b). Performance is impaired when either the sticks, or the hands that hold the sticks, are crossed. When both hands and sticks are crossed at the same time, such that the tips of the sticks again lie in their regular spatial hemifield, performance is comparable to that for uncrossed conditions (Yamamoto & Kitazawa, 2001b), despite the unusual doubly crossed posture. A similar effect of recovered performance with double crossing was shown in TOJ of tactile stimuli on the fingers. Here, TOJ performance was impaired if either the hands were crossed as a whole, or the hands were uncrossed but the stimulated fingers were crossed. When, however, the hands were crossed, and, at the same time, the fingers were crossed back into their regular hemifield, TOJ performance was markedly improved (Heed et al., 2012). If crossing impaired tactile remapping, performance in doubly crossed postures should be even worse than that in the regular crossed posture that involves just a single crossing. In contrast, the integration model predicts the observed performance recovery because anatomical and external codes agree in the final position and can, thus, be integrated without conflict.

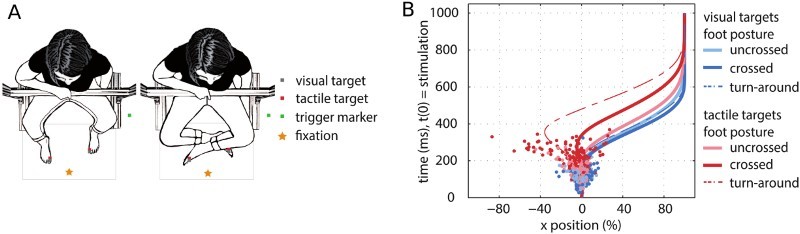

Finally, a recent study explicitly contrasted the non-integration, serial account and the integration account experimentally to scrutinize the results obtained by probabilistic modelling. Participants received a tactile stimulus on one of their feet after they had initiated a straight reach and then redirected the reach to the touch (Brandes & Heed, 2015, see Figure 3). If crossing effects reflected serial processing, then reaches to crossed feet should deviate towards the anatomical side of the stimulated foot immediately following stimulation, and until remapping is completed. At this time, the reach should then turn around towards the correct touch location. In contrast, the integration account predicts that the target coordinate of the reach is undefined until incongruent information of anatomical and external reference frames is successfully integrated. During this time, reaches to crossed feet should continue straight, and they should be corrected towards the correct target thereafter. The majority of reaches to crossed feet continued straight and turned late, favouring the integration account. Nevertheless, a smaller number of reaches initially deviated towards the anatomical side of stimulation. A parsimonious explanation of the findings is that reaches reflected an evidence accumulation process in which evidence must reach a bound before a response is initiated (Ratcliff & Rouder, 1998; Wolpert & Landy, 2012). In most cases, this bound is reached at the end of the decision process, and the reach will be corrected late. However, occasionally, the bound of the target on the anatomical side will be reached, leading to deviation of the reach towards the incorrect side; evidence accumulation continues after this initial decision (Resulaj, Kiani, Wolpert, & Shadlen, 2009; Murphy, Robertson, Harty, & O'Connell, 2015) and elicits a correction at a later time.
In sum, these experiments provide evidence against the idea that crossing effects originate from failures in tactile remapping and instead suggest that they reflect integration of multiple spatial codes, well in line with the results of probabilistic modelling.
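The bounded evidence accumulation idea invoked above can be sketched as a simple random walk between two bounds. This is a generic illustration, not the analysis of Brandes and Heed (2015): the function name `first_bound_hit`, the Gaussian noise, the symmetric bounds, and all parameter values are assumptions chosen for the example.

```python
import random


def first_bound_hit(drift, bound, noise_sd, max_steps, rng):
    """Accumulate noisy evidence until one of two bounds is reached.

    Positive evidence favours the correct target, negative evidence the
    target on the anatomical (incorrect) side. Returns the label of the
    bound that was hit first and the step at which it was hit, or
    (None, max_steps) if no bound was reached.
    """
    evidence = 0.0
    for step in range(1, max_steps + 1):
        evidence += drift + rng.gauss(0.0, noise_sd)
        if evidence >= bound:
            return "correct", step
        if evidence <= -bound:
            return "anatomical", step
    return None, max_steps


# Hypothetical parameters: weak drift towards the correct target plus noise.
rng = random.Random(1)
outcomes = [first_bound_hit(0.05, 1.0, 0.3, 500, rng)[0] for _ in range(200)]
share_anatomical = outcomes.count("anatomical") / len(outcomes)
```

In such a scheme, most simulated trials terminate at the correct bound, while noise occasionally carries the accumulated evidence to the anatomical bound first, which corresponds to the turn-around reaches described above.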

Figure 3.

Pointing to tactile targets. (A) Conditions from Brandes and Heed (2015). In each trial, participants initiated a straight reach. When the hand passed a trigger location (ca. 10 cm into the reach), the participant received a visual or tactile stimulus on their uncrossed or crossed feet and had to redirect the reach to this stimulus. (B) Spatial characteristics of the resulting reach trajectories. Single-subject example of mean trajectories; reaches to the left target were flipped to be analysed together with reaches to the right target. Points display single-trial turn points towards the correct goal location for reaches to visual and tactile targets located at uncrossed (light blue/red) or crossed feet (dark blue/red). Dashed line indicates the mean of a subset of reaches that first deviated towards the incorrect side of space (about 15% of reaches, termed “turn-around trajectories”). When turn-around reaches were excluded, the remaining 85% of trials showed trajectories that continued straight and then immediately turned to the correct target. Figure was adapted from Brandes and Heed (2015).

Factors that influence the weighting of spatial information

The idea that sensory information is combined in a weighted fashion to generate optimal perceptual judgments has been developed in the field of visual perception (Landy, Maloney, Johnston, & Young, 1995; for an overview see Trommershäuser, Körding, & Landy, 2011) and multisensory integration (Alais & Burr, 2004; Ernst & Banks, 2002; for reviews see Ernst & Bülthoff, 2004; Ernst & Di Luca, 2011). The cue integration approach posits that sensory information is weighted according to its reliability, and reliability is conceptualized as the inverse of variance. These principles of cue integration may also be at the heart of the weighting of anatomically and externally coded information on touch. However, it is not trivial to validate the concept of optimal integration in the present context; skin-based reliabilities are hardly measurable without the potential confound of proprioceptive information, and they cannot be varied without influencing external reliabilities as well. Nevertheless, a number of factors that influence the weighting of spatial information from the different reference frames have been identified experimentally. These results show that weighting of tactile spatial information is not determined just by bottom-up processed sensory information, but depends on top-down regulated cognitive factors as well. We discuss each of these factors in turn.
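The reliability-weighting principle of the cue integration approach can be written out as a short sketch. It shows the generic textbook scheme for two independent estimates; it is not a model of tactile reference frames, and the function name `integrate_cues` and the example values are hypothetical.

```python
def integrate_cues(est_a, var_a, est_b, var_b):
    """Combine two independent estimates weighted by their reliability.

    Reliability is the inverse of variance. Returns the combined
    estimate, its variance, and the weight given to the first cue.
    """
    rel_a = 1.0 / var_a
    rel_b = 1.0 / var_b
    w_a = rel_a / (rel_a + rel_b)
    combined = w_a * est_a + (1.0 - w_a) * est_b
    combined_var = 1.0 / (rel_a + rel_b)
    return combined, combined_var, w_a


# Hypothetical example: cue A is four times as reliable as cue B,
# so the combined estimate lies closer to cue A.
combined, combined_var, w_a = integrate_cues(0.0, 1.0, 10.0, 4.0)
```

The combined variance is never larger than the variance of the more reliable cue, which is why integrating redundant information is beneficial whenever the two estimates do not conflict.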

Visual information

Some studies have investigated whether the availability of vision per se affects tactile localization. For instance, non-informative visual input has been shown to influence the weighting of external and anatomical reference frames: In a haptic alignment task (Kappers, 2004) that required participants to align turnable bars parallel to each other, providing vision of the surroundings while hiding bars and hands biased responses towards an external reference frame in comparison to blindfolded conditions (Newport, Rabb, & Jackson, 2002). Because the visual content available in this experimental set-up is not related to the tactile input, the most probable mechanism behind the effect on haptic processing is the change of the relative weighting of reference frames in the tactile modality.

Similarly, visual information about the posture of the hands can influence the weighting of anatomical and external information. Crossing effects in tactile TOJ were reduced when participants performed the task with eyes closed as compared to with open eyes (Cadieux & Shore, 2013). Furthermore, visual input that provided relevant, but false, information has been shown to affect tactile localization (Azañón & Soto-Faraco, 2007). In this experiment, uncrossed rubber hands were placed over participants’ covered, crossed real hands. Compared to participants just viewing crossed rubber hands, this manipulation significantly reduced the TOJ crossing effect.

Studies of blind humans further corroborate the relevance of the visual system for reference frame weighting. In contrast to sighted humans, congenitally blind participants showed neither crossing effects in the TOJ task (Röder et al., 2004) nor a modulation of ERP components that reflect an employment of external coding for tactile attention (Röder, Föcker, Hötting, & Spence, 2008). In contrast, participants who lost vision later in life showed TOJ hand-crossing effects just like sighted participants. Consequently, vision seems to be necessary to develop the automatic integration of externally coded tactile information into the spatial processing of touch. Similar to congenitally blind participants, a participant who was born blind, but whose vision was restored at the age of 2 years, showed no crossing effect in tactile TOJ. Yet, in a tactile–visual task the participant showed an effect of external touch location (Ley et al., 2013). Thus, even though the participant did not automatically integrate the external response code, the additional visual input in the second task appears to have changed the weights given to anatomically and externally coded information.

The suggested association between visual stimulation and external coding of touch is in line with the finding that some purely tactile non-spatial attention effects, such as inhibition of return and the Simon effect, unfolded entirely in anatomical space (Medina et al., 2014; Röder et al., 2002; see above), whereas cueing effects of touch on non-spatial visual decisions were mediated in an external reference frame (Azañón, Camacho, et al., 2010).

Together, these findings suggest that the task relevance of vision raises the weight of the external information for tactile spatial coding.

Movement

Movements enable us to interact with the external world. Thus, if tactile reference frames were weighted to best fit the current situation, using the external representation of touch within a movement context would seem most appropriate. Indeed, several recent results have demonstrated that externally coded information is weighted higher when directed movement (e.g., pointing or simply a change of posture) is required. One study compared the reference frames applied by participants to localize tactile stimuli on the forearm when the arm was kept still, versus when it was moved before and after the stimulation (Mueller & Fiehler, 2014a, 2014b; Figure 2A). An influence of gaze-dependent location codes was evident only if a movement was executed before the localization response. Moreover, this effect was observed after any kind of movement – that is, target or effector arm as well as gaze shifts – as long as this movement occurred before the localization response (Mueller & Fiehler, 2014a, 2014b; Figure 2B). Similarly, rotations of the head by 90 degrees executed between the presentation of a tactile target and the localization response modulated the gaze-dependent error for touch localization on the abdomen (Pritchett, Carnevale, & Harris, 2012). Further evidence for the association between movement and the external reference frame comes from studies in congenitally blind individuals. Recall that congenitally blind individuals did not exhibit a TOJ crossing effect (Röder et al., 2004). However, when participants had to execute hand movements in every trial to cross or uncross the hands, then congenitally blind participants showed a crossing effect in tactile TOJ as well (Heed, Röder, & Möller, 2015). In sum, several experiments suggest that movement context biases spatial integration towards an external reference frame.

Task and response requirements

The specific requirements of the task and the responses in an experiment may directly or indirectly set an external or anatomical context. For instance, if tactile locations on the arm were reported with respect to a ruler, localization errors were affected by gaze location (Harrar & Harris, 2009). This effect of eye posture was considerably reduced when localization judgments were given with respect to imagined spatial segments, even though the segments might still rely on visual imagery (Harrar, Pritchett, & Harris, 2013). Thus, the required comparison to the concrete visual reference might have induced a higher weighting of the external, here gaze-centred, reference frame.

As another example, no evidence for external spatial coding was observed in ERPs of congenitally blind participants in a task that required the detection of deviant tactile stimuli at a pre-cued hand (Röder et al., 2008). In contrast, a study employing the same experimental paradigm did report such external coding in blind participants (Eardley & van Velzen, 2011). The difference between the two studies was the way participants had been instructed about the cued hand – anatomically in the first, and externally in the second study. Thus, even seemingly small details in the response set-up can have significant consequences on spatial coding.

Behavioural evidence from the TOJ task further corroborates these conclusions. Recall the study in which we tested hand-crossing effects in three distinct, though similar, tactile localization paradigms (Badde, Heed, et al., 2016). The second task in this study employed identical stimulation to that in the TOJ task; furthermore, in both tasks participants had to judge the location of the first stimulus. The only difference between the experiments was that participants were asked to monitor the temporal order of both stimuli in the first task and to “ignore the second stimulus” in the second task. This latter instruction was associated with better crossed hands performance than the classical TOJ instruction, suggesting that participants used a different weighting scheme for integrating anatomical and external reference frames in the two versions of the task (Badde, Heed, et al., 2016).

Further evidence that the weighting of anatomical and external reference frames can even be changed by the wording of the task instructions comes from a tactile congruency task in which the elevation of a tactile target stimulus had to be reported while ignoring a congruent or incongruent tactile distractor on the other hand (Soto-Faraco, Ronald, & Spence, 2004). Formulation of the task instructions in anatomical versus in external terms produced different experimental results: When verbal elevation judgments were instructed referring to external space (up or down), congruency effects were determined by external space in contrast to the same task with anatomically coded instruction (thumb or finger; Gallace et al., 2008).

In sum, these experimental results suggest that the weighting of sensory information is biased towards the reference frame of the required output.

Task context

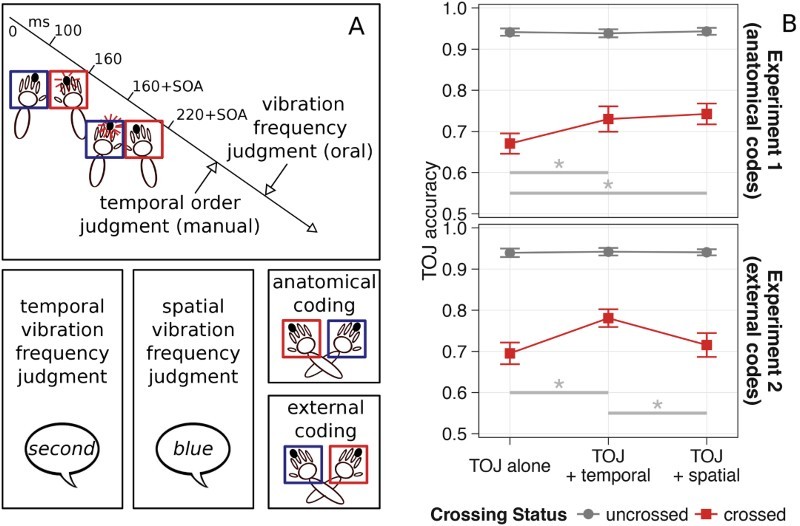

In the previous paragraphs, we have presented evidence that the weighting of information from anatomical and external reference frames is adjusted to the task demands. However, we wondered whether weighting would also adapt to task-irrelevant context parameters. To explore this question, we used a dual-task paradigm: In each trial, participants first conducted a TOJ task and then a secondary task, based on the same stimuli. One tactile stimulus was presented to each hand; each stimulus varied with respect to vibration frequency (fast vs. slow), independent of stimulus location. For the primary TOJ task, participants pressed a button with the hand that was stimulated first. The secondary task was to indicate where the stimulus with faster vibration had occurred. To prevent responses in the secondary task from confounding performance in the primary TOJ task, responses of the secondary task were colour rather than location coded. Participants had their hands in coloured boxes and indicated the location of the faster stimulus by naming the colour of the box in which the faster stimulus had occurred (Badde, Röder, et al., 2015; Figure 4). For one group of participants, the coloured boxes were moved along with the hands when the hands were crossed, so that one specific colour was associated with a hand. For another group of participants, the boxes were attached to the table, and the hands thus switched colours between crossed and uncrossed postures. Effectively, then, response coding was anatomical for the first, but external for the second group. Notably, this manipulation of response framing in the secondary task affected participants’ error rates in the primary task, the tactile TOJ task. Note that responses in the two tasks were clearly distinct (hand press vs. verbal response) and that the relevant stimulus features (location vs. vibration frequency) of the two tasks were completely independent.
Compared to performing the TOJ task without the second task, crossed hand performance was improved when the secondary task accentuated an anatomical reference frame, but not when the secondary task accentuated an external reference frame (Badde, Röder, et al., 2015). In sum, the irrelevant context of the secondary task influenced the weighting of anatomically and externally coded tactile information in the primary task.

Figure 4.

Task context effects on the crossing effect in tactile temporal order judgment (TOJ). (A) Procedure and conditions from Badde, Röder, et al. (2015). In each trial, participants perceived two successive tactile stimuli, one to each hand. The vibration frequency of the stimuli varied independently of their location. First, participants performed the TOJ task – that is, they indicated the location of the first stimulus by a button press with the respective hand. Second, participants verbally indicated either the temporal order of the two vibration frequencies or their spatial arrangement. To avoid confounds between left–right responses in the TOJ task and left–right responses in the secondary spatial task, participants reported the colour of the box that the faster stimulus was located in. In Experiment 1, the colours were associated with the hands, accentuating anatomical coding. In Experiment 2, the coloured boxes were attached to one side of the table and, thus, were associated with a side of space, accentuating external coding. (B) Accuracy in the primary TOJ task. Error rates with uncrossed hands (grey circles) were unaffected by the additional judgments. In contrast, error rates with crossed hands (red squares) decreased with temporal additional judgments and when the anatomical reference frame was accentuated, but not when the external reference frame was accentuated. Error bars depict standard errors of the mean. Figure adapted with permission from Badde, Röder, et al. (2015).

Another modulation of re-weighting due to the task context has been demonstrated in the context of tactile spatial attention. Tactile elevation judgments are usually improved at the location of upcoming saccades, indicating the use of a visual reference frame for touch. However, this benefit diminished when the tactile target occurred only infrequently at the location of the subsequent saccade (Rorden, Greene, Sasine, & Baylis, 2002). Thus, the visual reference frame was employed to code tactile locations as long as the task context made it advantageous for tactile processing, but when the advantage was withdrawn, the influence of the visual reference frame diminished. These results demonstrate that adapting reference frame weighting to context parameters can be an efficient strategy, even if, at first glance, these parameters appear irrelevant to the task.

Working memory load

The sensitivity of reference frame weighting to the task instructions implies that cognitive control processes – that is, processes that exert modulation in a top-down manner – are involved in weighting information coded in different reference frames. To explicitly test for such top-down processing in reference frame weighting, we combined the TOJ task with a working memory task that loaded either verbal or spatial working memory (Badde, Heed, et al., 2014). TOJ crossing effects were reduced under concurrent working memory load; more specifically, performance improved in crossed conditions and declined in uncrossed conditions under high as compared to low load. The performance improvement in the crossed posture rules out the possibility that the observed effects of the secondary task simply resulted from increased difficulty in responding to the TOJ task when load was high. Instead, the result pattern implies that the processes underlying tactile localization required cognitive resources.

The reduction of the crossing effect under high load in the above study was independent of the kind of working memory load, verbal or spatial (Badde, Heed, et al., 2014). The process of tactile remapping is spatial in nature; accordingly, if the transformation between reference frames were affected by load, the spatial, but not the verbal, task should have changed TOJ performance. That both types of load material affected performance, instead, implies that the weighting of anatomical and external reference frames during spatial integration for tactile localization is under top-down control. The result pattern is, thus, well in line with the evidence favouring the integration account we have presented so far.

Previous and future postures

Tactile localization is influenced not only by current, but also by previous postures (Azañón et al., 2015). Errors in tactile TOJ declined after repeated exposure to tactile stimuli presented to crossed hands, but rose again when tactile stimuli had been applied to uncrossed hands in the preceding trial. Such order effects can be explained by conflict-induced cognitive control (Botvinick, Braver, Barch, Carter, & Cohen, 2001) that might induce a re-weighting of anatomical and external information (Azañón et al., 2015).

Furthermore, TOJ performance is affected not only by the recent history of posture and stimulation, but also by the imminently planned posture (Heed, Röder, et al., 2015; Hermosillo, Ritterband-Rosenbaum, & van Donkelaar, 2011). In these studies, participants were instructed to place their hands either uncrossed or crossed; then, they received the instruction to move both hands forward, again ending in either an uncrossed or a crossed posture. Stimuli for the TOJ were presented before the movement was executed. TOJ performance was impaired when the planned movement brought the hands into a crossed posture, and it improved when the movement brought the hands into an uncrossed posture. As with the dual-task setting, the improved performance for some experimental conditions argues against the observed effects being due to changes of task difficulty. As the stimuli were presented before movement, an anticipated reference frame conflict (in the case of a planned movement into a crossed posture) and the anticipated removal of such a conflict (in the case of a planned movement into an uncrossed posture) presumably led to a re-weighting of anatomical and external information.

Both of these lines of study suggest that cognitive processes monitor for previous and potentially arising conflicts between tactile coding in different reference frames.

Implications of the identified factors

Our proposed integration scheme also accommodates some findings from studies that were originally designed to investigate tactile remapping – that is, the transformation rather than integration of tactile spatial information (e.g., Gallace et al., 2008). However, whereas the results of some studies may be interpreted within both a transformation and an integration framework, some experimental findings are difficult to account for without recourse to our proposed integration scheme. For instance, in the study that assessed the relevance of the task context by introducing secondary tasks (Badde, Röder, et al., 2015), participants’ performance markedly improved with the secondary task when it accentuated an anatomical reference frame. Such selectivity should not be observed if crossing effects were due to difficulties with tactile remapping, and it is not straightforward to posit that remapping improves when an additional task has to be executed. Nevertheless, crossing effects clearly indicate that remapping must have taken place, because without remapping, the external spatial information would not be available. In our view, however, manipulations of the crossing effect probably do not reveal characteristics of the remapping process, but rather speak to the integration of spatial information.

All of the findings we have presented can be funnelled into a coherent picture: The external reference frame is accentuated whenever the participant’s focus is on their surroundings, rather than on the body. This focus might be induced by task demands as well as by environmental cues. Such a weighting scheme is ecologically plausible: Remapping spatial information into an external reference frame is thought to serve interactions with objects around the body – for instance, by allowing actions like saccades and reaches towards the source of the tactile sensation. However, even if the external information is not needed, it is usually still used, though to a lesser extent. Consequently, all information, including potential information about a lingering conflict, is preserved, while the current task can still be adequately pursued.
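The logic of such a weighting scheme can be made concrete with a deliberately simple toy sketch. It is not the probabilistic model of Badde, Heed, et al. (2016), and none of its ingredients are taken from the reviewed studies: the function names, the coding of locations as -1 (left) and +1 (right), the logistic read-out, and the `noise` parameter are all assumptions made purely for illustration. The sketch shows only that, when the anatomical and external codes of a touch disagree (crossed hands), a larger weight on the external code lowers the probability of choosing the correct hand, whereas it has no effect when the codes agree (uncrossed hands).

```python
import math


def integrate_codes(anatomical: float, external: float, w_external: float) -> float:
    """Weighted sum of two location codes (-1 = left, +1 = right).

    w_external is the (hypothetical) weight of the external code; the
    anatomical code receives the remaining weight 1 - w_external.
    """
    if not 0.0 <= w_external <= 1.0:
        raise ValueError("w_external must lie in [0, 1]")
    return (1.0 - w_external) * anatomical + w_external * external


def p_correct(integrated: float, noise: float = 0.5) -> float:
    """Assumed logistic read-out: probability of reporting the right hand
    for a touch that was in fact delivered to the right hand."""
    return 1.0 / (1.0 + math.exp(-integrated / noise))


def crossing_effect(w_external: float, noise: float = 0.5) -> float:
    """Difference in accuracy between uncrossed and crossed postures
    for a touch on the right hand (anatomical code = +1)."""
    anatomical = 1.0
    # Uncrossed: the right hand lies in right space, so the codes agree.
    uncrossed = p_correct(integrate_codes(anatomical, +1.0, w_external), noise)
    # Crossed: the right hand lies in left space, so the codes conflict.
    crossed = p_correct(integrate_codes(anatomical, -1.0, w_external), noise)
    return uncrossed - crossed


if __name__ == "__main__":
    # A larger external weight, as assumed for a focus on the surroundings,
    # yields a larger crossing effect in this toy model.
    for w in (0.0, 0.2, 0.4):
        print(f"w_external = {w:.1f}: crossing effect = {crossing_effect(w):.3f}")
```

In this sketch, the external code still contributes whenever its weight is above zero, which mirrors the idea that external information is used even when it is not needed, only to a lesser extent.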

Spatial integration in the brain

One important strategy of cognitive science is to attempt to link psychological concepts with processing in the brain, either on the level of neurons or on the level of brain regions and brain networks. We briefly review how tactile remapping and spatial integration have been investigated in this regard. Tactile remapping, and coordinate transformations more generally, have been associated with regions in the posterior parietal cortex (PPC). The crucial role of the PPC in the spatial representation of touch has been corroborated using transcranial magnetic stimulation (TMS) over the putative human homologue of the macaque ventral intraparietal region (hVIP). TMS over hVIP disrupted tactile–visual facilitation in external space (Bolognini & Maravita, 2007), comparisons of the external alignment of two tactile stimuli (Azañón, Longo, et al., 2010), and the external–spatial mediation of tactile–auditory interactions (Renzi et al., 2013). These studies are often taken as evidence that hVIP is responsible for tactile remapping. However, the results are also compatible with the notion that tactile remapping occurs in SII (Heed, 2010; Soto-Faraco & Azañón, 2013; Schubert et al., 2015), whereas the spatial information is maintained and integrated in hVIP. It is equally plausible that PPC performs both coordinate transformation and weighted integration. Monkey neurophysiology and imaging in humans have demonstrated reference frames anchored to the eye (Batista, Buneo, Snyder, & Andersen, 1999; Bernier & Grafton, 2010; X. Chen, DeAngelis, & Angelaki, 2013; Medendorp, Goltz, Vilis, & Crawford, 2003; Sereno, Pitzalis, & Martinez, 2001), the head (Bremmer, Schlack, Duhamel, Graf, & Fink, 2001; Brotchie, Andersen, Snyder, & Goodman, 1995), and the body (Bernier & Grafton, 2010; Snyder, Grieve, Brotchie, & Andersen, 1998), as well as world-centred (Snyder et al., 1998) and idiosyncratic (Chang & Snyder, 2010) ones in PPC.
Importantly, different reference frames can co-exist in one brain region; for instance, tactile and visual receptive fields are not necessarily aligned in monkey VIP (Avillac, Denève, Olivier, Pouget, & Duhamel, 2005). In agreement with these data, computational models (Beck, Latham, & Pouget, 2011; De Meyer & Spratling, 2013; Deneve, Latham, & Pouget, 2001; Makin, Fellows, & Sabes, 2013; Pouget, Ducom, Torri, & Bavelier, 2002) have been derived that are able to integrate sensory information coded in different reference frames without assuming a common superordinate reference frame of the kind that has been suggested previously (e.g., Schlack, Sterbing-D’Angelo, Hartung, Hoffmann, & Bremmer, 2005; Stein & Stanford, 2008). Additionally, it has been suggested that the different reference frames can be individuated through oscillatory activity – that is, recurring rhythmic activity of neuronal ensembles (Heed, Buchholz, et al., 2015). It has been demonstrated that different regions in parietal cortex exhibit a bias for one or another reference frame; these biases may depend on the position of the respective region in the sensory processing hierarchy, with a region hierarchically close to the tactile system preferring a body-centred code, a region close to the vestibular cortex using a mixture of body- and head-centred coding, and a region along the visual pathway preferring eye- and head-centred, but not body-centred, coding (X. Chen, DeAngelis, & Angelaki, 2013). Additionally, it has recently been proposed that these regions may indeed perform Bayes-optimal (multi-)sensory integration, based on neuronal population responses (Fetsch, Pouget, DeAngelis, & Angelaki, 2012; Ma, Beck, Latham, & Pouget, 2006; Ohshiro, Angelaki, & DeAngelis, 2011; Seilheimer, Rosenberg, & Angelaki, 2014).
It would seem promising to connect the coordinate transformations and sensory integration observed in neuronal activity recorded in macaques with the results from human psychophysics and psychophysiology reviewed in this paper, as such a chain of evidence could inform theoretical accounts and experimental approaches in both directions. However, a direct link between these two fields is currently lacking (Carandini, 2012; Fetsch, DeAngelis, & Angelaki, 2013).
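As a point of reference for the Bayes-optimal integration mentioned above, the textbook reliability-weighted combination of two cues can be written out explicitly. This is the standard maximum-likelihood formulation under the assumptions of independent Gaussian noise and a flat prior (the form used, for instance, by Alais & Burr, 2004); it is not a model fitted in the studies reviewed here. The labelling of the two cues as anatomical (A) and external (E), and all symbols, are introduced only for illustration.

```latex
\hat{x} = w_A \,\hat{x}_A + w_E \,\hat{x}_E ,
\qquad
w_A = \frac{1/\sigma_A^{2}}{1/\sigma_A^{2} + 1/\sigma_E^{2}} ,
\qquad
w_E = 1 - w_A ,
\qquad
\sigma_{\hat{x}}^{2} = \frac{\sigma_A^{2}\,\sigma_E^{2}}{\sigma_A^{2} + \sigma_E^{2}}
```

Here, $\hat{x}_A$ and $\hat{x}_E$ denote the two location estimates and $\sigma_A^{2}$ and $\sigma_E^{2}$ their variances. The combined estimate $\hat{x}$ is never less reliable than the better single cue. In this purely reliability-based scheme, the weights follow from sensory noise alone, whereas the account developed in this review holds that the weighting is additionally under top-down control.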

Touch localization is a constructive process

We began this review with the notion that perceptual phenomena like the rubber hand or the Pinocchio illusion show that the perception of our body is not necessarily veridical or bound to our long-term experience of body shape and configuration. These phenomena vividly illustrate that the concept of our body, while decisively relying on tactile location estimates, is constructed from second to second based on numerous sensory inputs. This construction process is inevitable because no direct sensory information about body shape and configuration is available to our brain. In contrast, tactile signals provide direct information about the location of tactile stimuli. However, this information is direct only with respect to where the stimulus has occurred on the skin. Where the event happened on the body must be inferred by combining skin location with information about the layout of the body, and to infer its location in space, this estimate further has to be combined with posture information. Accordingly, estimates of tactile location in space have to be constructed as well, rather than merely being the consequence of simplistic, straightforward processing of sensory information. In this review we have argued that tactile localization is not bound to one reference frame, either a skin-based or an external one, but always relies on information coded with respect to several reference frames. Consequently, remapping of tactile locations from a skin-based into an external reference frame is necessary, but not sufficient, for tactile localization. Rather, the result of integrating the potentially conflicting pieces of information determines tactile location estimates. We have identified several factors that bias weighted integration towards either anatomical or external reference frames.
These mediating factors range from sensory inputs to cognitive processes, demonstrating that tactile localization is ideally suited for the systematic investigation of the principles according to which the brain constructs our percept not only of the world but also of ourselves.

Funding Statement

S.B. is supported by a research fellowship from the German Research Foundation (DFG) [grant number BA 5600/1-1]; T.H. is supported by an Emmy Noether grant from the German Research Foundation (DFG) [grant number HE 6368/1-1].

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Aglioti S., Smania N., Peru A. Frames of reference for mapping tactile stimuli in brain-damaged patients. Journal of Cognitive Neuroscience. 1999:67–79. doi: 10.1162/089892999563256.

- Alais D., Burr D. The ventriloquist effect results from near-optimal bimodal integration. Current Biology. 2004:257–262. doi: 10.1016/j.cub.2004.01.029.

- Attneave F. Some informational aspects of visual perception. Psychological Review. 1954:183–193. doi: 10.1037/h0054663.

- Avillac M., Denève S., Olivier E., Pouget A., Duhamel J.-R. Reference frames for representing visual and tactile locations in parietal cortex. Nature Neuroscience. 2005:941–949. doi: 10.1038/nn1480.

- Azañón E., Camacho K., Soto-Faraco S. Tactile remapping beyond space. European Journal of Neuroscience. 2010:1858–1867. doi: 10.1111/j.1460-9568.2010.07233.x.

- Azañón E., Longo M. R., Soto-Faraco S., Haggard P. The posterior parietal cortex remaps touch into external space. Current Biology. 2010:1304–1309. doi: 10.1016/j.cub.2010.05.063.

- Azañón E., Soto-Faraco S. Alleviating the ‘crossed-hands’ deficit by seeing uncrossed rubber hands. Experimental Brain Research. 2007:537–548. doi: 10.1007/s00221-007-1011-3.

- Azañón E., Soto-Faraco S. Changing reference frames during the encoding of tactile events. Current Biology. 2008:1044–1049. doi: 10.1016/j.cub.2008.06.045.

- Azañón E., Stenner M.-P., Cardini F., Haggard P. Dynamic tuning of tactile localization to body posture. Current Biology. 2015. doi: 10.1016/j.cub.2014.12.038.

- Badde S., Heed T., Röder B. Processing load impairs coordinate integration for the localization of touch. Attention, Perception, & Psychophysics. 2014:1136–1150. doi: 10.3758/s13414-013-0590-2.

- Badde S., Heed T., Röder B. Integration of anatomical and external response mappings explains crossing effects in tactile localization: A probabilistic modeling approach. Psychonomic Bulletin & Review. 2016:387–404. doi: 10.3758/s13423-015-0918-0.

- Badde S., Röder B., Heed T. Multiple spatial representations determine touch localization on the fingers. Journal of Experimental Psychology: Human Perception and Performance. 2014:784–801. doi: 10.1037/a0034690.

- Badde S., Röder B., Heed T. Flexibly weighted integration of tactile reference frames. Neuropsychologia. 2016:367–374. doi: 10.1016/j.neuropsychologia.2014.10.001.

- Batista A. P., Buneo C. A., Snyder L. H., Andersen R. A. Reach plans in eye-centered coordinates. Science. 1999:257–260. doi: 10.1126/science.285.5425.257.

- Beck J. M., Latham P. E., Pouget A. Marginalization in neural circuits with divisive normalization. Journal of Neuroscience. 2011:15310–15319. doi: 10.1523/JNEUROSCI.1706-11.2011.

- Begum Ali J., Cowie D., Bremner A. J. Effects of posture on tactile localization by 4 years of age are modulated by sight of the hands: Evidence for an early acquired external spatial frame of reference for touch. Developmental Science. 2014:935–943. doi: 10.1111/desc.12184.

- Bernier P.-M., Grafton S. T. Human posterior parietal cortex flexibly determines reference frames for reaching based on sensory context. Neuron. 2010:776–788. doi: 10.1016/j.neuron.2010.11.002.

- Blanke O. Multisensory brain mechanisms of bodily self-consciousness. Nature Reviews Neuroscience. 2012:556–571. doi: 10.1038/nrn3292.

- Blanke O., Slater M., Serino A. Behavioral, neural, and computational principles of bodily self-consciousness. Neuron. 2015:145–166. doi: 10.1016/j.neuron.2015.09.029.

- Bolognini N., Maravita A. Proprioceptive alignment of visual and somatosensory maps in the posterior parietal cortex. Current Biology. 2007:1890–1895. doi: 10.1016/j.cub.2007.09.057.

- Botvinick M. M., Braver T. S., Barch D. M., Carter C. S., Cohen J. D. Conflict monitoring and cognitive control. Psychological Review. 2001:624–652. doi: 10.1037/0033-295X.108.3.624.

- Botvinick M. M., Cohen J. Rubber hands ‘feel’ touch that eyes see. Nature. 1998:756. doi: 10.1038/35784.

- Bradshaw J., Nathan G., Nettleton N., Pierson J., Wilson L. Head and body hemispace to left and right iii: Vibrotactile stimulation and sensory and motor components. Perception. 1983:651–661. doi: 10.1068/p120651.