Abstract

Accurate motor control is required when walking around obstacles in order to avoid collisions. When vision is unavailable, sensory substitution can be used to improve locomotion through the environment. Tactile sensory substitution devices (SSDs) are electronic travel aids, some of which indicate the distance of an obstacle using the rate of vibration of a transducer on the skin. We investigated how accurately such an SSD guided navigation in an obstacle circumvention task. Using an SSD, 12 blindfolded participants navigated around a single flat 0.6 × 2 m obstacle. A 3-dimensional Vicon motion capture system was used to quantify various kinematic indices of human movement. Navigation performance under full vision was used as a baseline for comparison. The obstacle position was varied from trial to trial relative to the participant: it was placed at one of two approach distances, either directly ahead or 25 cm to the left or right. Under SSD guidance, participants navigated without collision in 93% of trials. No collisions occurred under visual guidance. Buffer space (clearance between the obstacle and shoulder) was larger by a factor of 2.1 with SSD guidance than with visual guidance, movement times were longer by a factor of 9.4, and numbers of velocity corrections were larger by a factor of 5 (all p<0.05). Participants passed the obstacle on the side affording the most space in the majority of trials for both SSD and visual guidance conditions. The results are consistent with the idea that SSD information can be used to generate a protective envelope during locomotion in order to avoid collisions when navigating around obstacles, and to pass on the side of the obstacle affording the most space in the majority of trials.

Introduction

A requirement of travelling through urban environments is the ability to safely navigate around obstacles [1]. Visual loss substantially reduces the spatial information available for safe locomotion, although aids such as canes and guide dogs can partially compensate for this. Echoes from self-generated sound (for reviews, see [2–4]), or from electronic travel aids called sensory substitution devices (SSDs), which convey spatial information via an unimpaired modality [5], can also help visually impaired individuals to circumvent obstacles. The current study addressed three questions: 1) Can SSD information be used to create a ‘protective-envelope’, or buffer space, to help protect people during visionless navigation? 2) What are the exact kinematics of obstacle circumvention using an echoic SSD, and how do they compare to those for echolocation-guided locomotion, as measured previously [6]? 3) Do blindfolded participants using an SSD pass on the side of an obstacle affording the most space, as they typically do when visual information is available?

Previous work has investigated obstacle avoidance under visual guidance [7], and using sensory substitution information when vision is absent [8–11]. Some SSDs convert visual information into an auditory or a haptic signal using a predetermined transformation algorithm [12]. Such SSDs, referred to here as visual pattern SSDs, include the vOICe (the central letters stand for “oh I see,” [13–15]), and the “Prosthesis Substituting Vision with Audition” (PSVA, [16, 17]). Other SSDs, referred to here as echoic SSDs, work on an echolocation principle, and include Kay’s Advanced Spatial Perception Aid (KASPA, [18]) and the Miniguide [19, 20]. Using an ultrasound source and a receiver, an echoic SSD detects signal echoes, which are used to calculate the distance to an obstacle using the time delay between the emission and echo. This information is then converted into a haptic or auditory signal.
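
The time-of-flight principle described above can be sketched as follows. This is an illustrative calculation only, not the processing used in any particular device; the function name and the assumed speed of sound in air (343 m/s, roughly the value at 20 °C) are introduced here for the example.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed value for air at about 20 °C


def echo_distance_m(delay_s: float, speed: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Estimate the distance to a reflecting surface from the emission-to-echo delay.

    The signal travels to the obstacle and back, so the one-way distance
    is half of the total path length covered during the delay.
    """
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    return speed * delay_s / 2.0


# Hypothetical example: an echo arriving 10 ms after emission.
print(echo_distance_m(0.010))
```

Under these assumptions, an obstacle 1 m away would return an echo after roughly 5.8 ms.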

A number of studies have demonstrated that echoes from self-generated sounds can be used to perceive the spatial location of an obstacle, and to approach it as closely as possible without touching it, for both blind [21–24] and blindfolded sighted participants [22, 25, 26]. Spatiotemporal flow fields from echoic SSDs can also provide information regarding the layout of the environment to inform safe locomotion. Maidenbaum et al. [8] showed that blindfolded-sighted participants were able to use an echoic SSD (Eyecane) for distance estimation, navigation and obstacle detection. Chebat et al. [10] showed that blind participants and blindfolded-sighted controls could use the Eyecane to navigate through real and virtual mazes. In virtual environments, the increased sensory range of 5 m provided by a virtual Eyecane allowed participants to take shorter navigation paths and make fewer collisions than when a virtual white cane was used [9].

Although SSDs can be highly useful as a navigation aid, collisions do sometimes occur. Levy-Tzedek et al. [27] reported that the rate of collisions was higher using the Eyecane SSD than using vision to navigate in virtual mazes. Veraart et al. [28] reported that binocularly blinded cats were able to use echoic SSD information to assess depth in a jumping task and to avoid obstacles when moving through a maze. Veraart and Wanet-Defalque [29] showed that use of an echoic SSD by early-blind humans increased performance for judging the distance and direction of obstacles located along various routes. Hughes [30] reported that echoic SSD information allowed blindfolded normally sighted participants to judge whether narrow apertures were passable in the majority of trials. Kolarik et al. [20] showed that echoic SSD information allowed blindfolded participants to make the shoulder rotations needed to move through narrow apertures. As expected, compared to visual guidance, shoulder rotations made under SSD guidance were greater, movement times were longer, and collisions sometimes occurred. Hicks et al. [11] developed a depth-based visual display as an assistive device for people with residual vision. Sighted participants were able to use the device to navigate an obstacle course, and partially sighted individuals were able to respond to illuminated objects presented to their residual visual fields using the device.

Chebat et al. [31] showed that blind and normally sighted blindfolded participants were able to navigate an obstacle course using a visual pattern SSD called the Tongue Display Unit (TDU). Blind and sighted participants successfully avoided large obstacles on approximately 78% and 71% of trials, respectively. Kolarik et al. [6] showed that echoes from sounds that were self-generated by sighted blindfolded participants could effectively guide locomotion around an obstacle in the majority of trials. Performance was evaluated by successful avoidance as well as using 3-dimensional motion capture to quantify various indices including buffer space (the clearance between the shoulders and the obstacle, following Franchak et al. [32], who used the term buffer space to indicate the margin between the body and sides of an aperture during locomotion). Compared to visual guidance, buffer space, movement times, and the number of velocity corrections were greater when using echolocation.

Under visual navigation, people appear to generate a protective envelope during locomotion that allows sufficient time and distance to perceive hazards in the local environment, and plan gait adaptations to avoid collisions [33]. If SSD information can be used to generate a protective envelope during visionless navigation, then under SSD guidance participants should be able to navigate around an obstacle on the majority of trials without collision and they should do this in an efficient manner once the obstacle has been detected. We assessed whether this was the case. Locomotion under visual guidance was measured to provide a baseline for comparison. The task, procedure, and kinematic measures were similar to those used by Kolarik et al. [6], so that the results could be compared with those of that study, which tested guidance by echoes from self-generated mouth clicks as well as a no-click/no-vision condition in which participants wore blindfolds and did not produce mouth clicks.

In their review of the effectiveness of electronic mobility devices, Roentgen et al. [34] noted that although existing studies showed that the use of SSDs by those with visual impairment was generally beneficial, no standardized methods or existing measurement instruments were used in the studies that were reviewed (apart from one study that reported preferred walking speed). Kinematic measures of SSD-guided obstacle circumvention have not been obtained in previous studies, and an examination of the kinematics would be relevant to blindness and rehabilitation training, providing objective assessments of how practical and efficient SSD information is for everyday navigation. Although previous work has shown that SSDs can help prevent collision with obstacles [31], other important practical information is lacking, including an assessment of the time needed to scan and safely navigate around an obstacle using an SSD and the size of the buffer space. Reduced walking speed, indicated by increased movement time, is also an important safety mechanism, since if a collision does occur it is less likely to result in injury. Previous research [35] demonstrated that participants with substantially reduced visual fields slowed down and increased the clearance between the obstacle and body when navigating around multiple obstacles. The participants in that study appeared to optimize safety (collision avoidance) at the cost of spending more energy (greater clearance).

The measure “velocity corrections” was chosen for the present study to characterize the fluidity of movement and navigation around an obstacle. If participants navigating under SSD guidance frequently stop and start, this would have implications for the practicality of use in non-laboratory settings, and may also affect energy consumption. In addition, we investigated whether participants passed obstacles on the side affording the most space under SSD guidance, as has been previously shown for vision [7]. We also measured initial path deviations (the distance from the obstacle where participants first deviated from moving in a straight line), indicative of path planning. Kinematic information may highlight possible limitations in the practicality of SSDs outside a research setting [36]. In summary, the current study measured for the first time the kinematics of SSD-guided single-obstacle circumvention. The kinematics were directly compared to those for echolocation-guided obstacle circumvention, as measured in a previous study [6].

We hypothesized that echoic SSD information would enable blindfolded normally sighted participants to safely navigate around a single obstacle in the majority of trials, passing on the side affording the most space. Navigation under SSD guidance was hypothesized to be less accurate than in a visual baseline condition, indicated by a greater number of collisions and velocity corrections, larger buffer space, and longer movement times.

Methods

Participants

Twelve participants took part (8 males and 4 females, mean age 31 yrs, range 21–42 yrs). All had normal or near-normal hearing, defined as better-ear average (BEA) hearing thresholds across the frequencies 500, 1000, 2000 and 4000 Hz ≤25 dB HL, as measured using an Interacoustics AS608 audiometer. All participants reported normal or corrected-to-normal vision. None of the participants reported having any prior experience with SSDs. The experiments followed the tenets of the Declaration of Helsinki. Written informed consent was obtained from all participants following an explanation of the nature and possible consequences of the study. The experiments were approved by the Anglia Ruskin University Ethics committee.

Apparatus and data acquisition

Testing took place in a quiet room measuring 5.7 × 3.5 m with a ceiling height of 2.8 m, with an ambient sound level of approximately 36 dBA. The floor was carpeted, the walls were painted, and the ceiling was tiled. The obstacle measured 0.6 (width) × 2 m (height). It was constructed of wood and was covered by smooth aluminium foil, following Arnott et al. [37], to achieve high reflectivity. It was movable, flat and rectangular with a thickness of 0.6 cm, with a small plastic triangular frame at the bottom on the side away from the participant, mounted on castors. See Fig 1 for a schematic of the experimental layout. The obstacle was positioned in the approximate center of the room to minimize SSD reflections from surfaces other than the obstacle. The experimenter and participants maintained silence during testing.

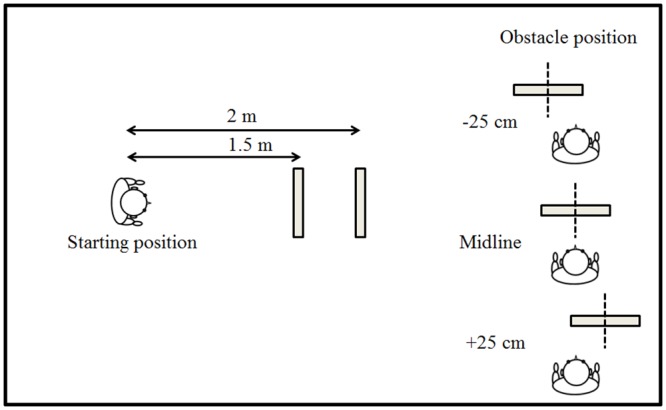

Fig 1. Two views of the layout for obstacle circumvention.

The 0.6-m wide obstacle was either straight ahead on the midline relative to the participant, or ±25 cm to the left or right (right part of figure), at an approach distance of either 1.5 or 2 m (left part of figure).

The SSD used in the study was the Miniguide, manufactured by GDP Research [19, 20]. The device emitted an ultrasound signal. The distance between the device and the obstacle was estimated from the time taken for the emitted signal to reflect back from the obstacle to a receiver on the device. Spatial information was provided via a tactile vibration signal; the rate of vibration increased as the distance between the device and the obstacle decreased. The range of the device (the distance over which an obstacle could be detected) was set to 1 m [20]. The device was held in the participant’s dominant hand. Participants were instructed to tuck their elbow against their side when using the SSD to prevent arm movements in an anterior/posterior direction and to ensure that the hand holding the device was perpendicular to the body, in order to standardize the SSD feedback received across the trials without any influence from the movements of the arm [20].

The experimenter recorded the trials on which a collision occurred between any part of the participant’s body or the SSD and the obstacle, and whether the participant passed the obstacle on the left or right side. An 8-camera motion capture system (Vicon Bonita; Oxford Metrics Ltd) collected 3-D kinematic data at 50 Hz. Retro-reflective spherical markers were attached bilaterally to the participant at the following anatomical locations: the antero-lateral and postero-lateral aspects of the head, the most distal, superior aspect of the 1st toe, the most distal, superior aspect of the 5th toe, the posterior aspect of the calcanei, and the acromio-clavicular joint. A marker was also placed on the posterior aspect of the dominant hand and another on the sternum. In order to define the obstacle within the Vicon coordinate system, three markers were attached to the front aspect of the obstacle. Marker trajectory data were filtered using the cross-validatory quintic spline smoothing routine, with ‘smoothing’ options set at a predicted mean-squared error value of 10, and processed using the Plug-in Gait software (Oxford Metrics). Although reflections from the aluminium foil covering the obstacle were sometimes recorded by the Vicon system, care was taken to label each marker individually for each trial to exclude these erroneous reflections from the analyses.

Kinematic variables for the obstacle circumvention task were assessed using custom-written Visual Basic scripts (Table 1). A velocity correction was deemed to occur when the participant accelerated or decelerated in the anterior/posterior direction. To avoid including very small fluctuations in velocity, the acceleration or deceleration was required to last for 50 frames (1 s). Stopping and starting were counted as velocity corrections, as were slowing down and speeding up. A change in trajectory was not deemed to be a velocity correction unless it involved a forward acceleration/deceleration. If the participant took a side step without slowing down in the anterior/posterior direction, then this was not counted. However, if they stopped, took a side step and then continued forward again, this would count as two velocity corrections (one for decelerating prior to the side step, and one for accelerating after the side step).
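
One way to read the velocity-correction criterion is sketched below in Python. This is not the authors' Visual Basic script: the function name, the finite-difference estimate of acceleration, and the `accel_threshold` parameter are assumptions made for illustration. Each sustained run of acceleration or deceleration in the anterior/posterior direction that lasts at least `min_frames` frames is counted once.

```python
from typing import Sequence


def count_velocity_corrections(
    ap_position_mm: Sequence[float],
    frame_rate_hz: float = 50.0,
    min_frames: int = 50,
    accel_threshold: float = 0.0,
) -> int:
    """Count sustained acceleration or deceleration phases along the A/P axis."""
    dt = 1.0 / frame_rate_hz
    # Finite-difference estimates of velocity and acceleration (assumed method).
    velocity = [(b - a) / dt for a, b in zip(ap_position_mm, ap_position_mm[1:])]
    accel = [(b - a) / dt for a, b in zip(velocity, velocity[1:])]

    corrections = 0
    run_sign = 0    # +1 accelerating, -1 decelerating, 0 neither
    run_length = 0
    for a in accel:
        sign = 1 if a > accel_threshold else -1 if a < -accel_threshold else 0
        if sign == run_sign:
            run_length += 1
        else:
            run_sign, run_length = sign, 1
        # Count each phase once, at the frame where it reaches the minimum duration.
        if run_sign != 0 and run_length == min_frames:
            corrections += 1
    return corrections
```

With real marker data a non-zero `accel_threshold` would probably be needed, since numerical noise otherwise registers as tiny accelerations.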

Table 1. Kinematic variables assessed for the single obstacle circumvention task.

| Variable | Definition |
|---|---|
| Buffer space | The medio-lateral distance between the shoulder marker and obstacle, at the point of passing the obstacle, specified as the point where the shoulder marker passed the marker attached to the front aspect of the obstacle. |
| Movement time | The time taken to complete the movement, measured from when the sternum marker was 1 m from the obstacle in the anterior-posterior direction, until the point of passing the obstacle marker (or the spatial position of the virtual obstacle marker for ‘no obstacle’ catch trials). |
| Velocity corrections | The number of changes in velocity i.e. changes from acceleration to deceleration or vice versa, measured from when the sternum was 1 m away from the obstacle until the point of passing the obstacle marker (or the spatial position of the virtual obstacle marker for ‘no obstacle’ catch trials). See main text for additional information. |
| Initial path deviation | The distance between the obstacle marker and the sternum marker when the final lateral change of direction occurred prior to the trajectory of the sternum marker departing from that for the average of the obstacle-absent trials by more than ±1 standard deviation (SD, measured within participants). |
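
As an illustration of how the movement-time definition in Table 1 might be applied to marker data, the sketch below uses a single anterior-posterior trace. It is a simplified reading, not the authors' script: the function name is hypothetical, the participant is assumed to walk in the positive anterior-posterior direction, and the sternum marker is used for both the start and the passing event.

```python
from typing import Optional, Sequence


def movement_time_s(
    sternum_ap_mm: Sequence[float],
    obstacle_ap_mm: float,
    frame_rate_hz: float = 50.0,
    start_distance_mm: float = 1000.0,
) -> Optional[float]:
    """Time from first being within 1 m of the obstacle until passing it.

    Returns None if the marker never reaches the obstacle position
    (for example, on a trial terminated by a collision).
    """
    start_frame = None
    for frame, position in enumerate(sternum_ap_mm):
        remaining = obstacle_ap_mm - position
        if start_frame is None and remaining <= start_distance_mm:
            start_frame = frame  # first frame within the 1 m window
        if start_frame is not None and remaining <= 0.0:
            return (frame - start_frame) / frame_rate_hz
    return None
```

For catch trials, `obstacle_ap_mm` would be the position of the virtual obstacle marker.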

Procedures

There were two locomotion guidance conditions: SSD and full vision. Following previous studies [6, 20], the vision condition was performed second to avoid ‘training’ the participant with regard to the range of distances and obstacle positions tested.

The SSD condition consisted of a training phase followed by a testing phase. Previous studies that investigated the use of echoic SSDs for passing through apertures trained participants for approximately 5 minutes [30, 38]. We provided more extensive training than used in these studies; participants practiced navigating around the obstacle using the SSD from an approach distance of 1.75 m for a minimum of 15 minutes. For the first 5 minutes the participants were allowed to keep their eyes open, for the next five minutes they were encouraged to close their eyes, and for the last five minutes they were blindfolded [6, 20]. In the first 10 minutes, the obstacle was placed straight ahead in front of the participant, and for the last 5 minutes the location of the obstacle was varied randomly among three positions: straight ahead and 25 cm to the participants’ left or right.

In the testing phase, participants were blindfolded and instructed to maintain a straight line of travel until the obstacle was detected using the device and then to circumnavigate the obstacle without collision. Participants were told that the obstacle would sometimes be absent, and in this case they should maintain a straight line of travel until the experimenter instructed them to stop. Trials were terminated when the participant had successfully moved past the obstacle, when a collision occurred, or, for trials where the obstacle was absent, when they moved more than 2 m forward from the starting position. Participants commenced each trial from the same starting position, and the obstacle was initially 1.5 m from the participant for half of the trials and 2 m away for the other half, selected randomly. These distances were shorter than typically used for visual obstacle circumvention tasks (e.g. 5 m [1, 7]), as pilot data showed that participants often stopped a short distance in front of the obstacle and then explored it using the SSD, so a longer distance was not required. This has also been shown for locomotion through apertures using an SSD [20], and for obstacle avoidance using echolocation [6]. The obstacle was randomly varied in lateral location relative to the participant (midline, or 25 cm to the right or left), with 3 repetitions for each combination of lateral location and approach distance, and 6 ‘no obstacle’ catch trials. In total, each participant completed 24 trials.
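
The trial structure described above (3 lateral locations × 2 approach distances × 3 repetitions, plus 6 catch trials) can be sketched as follows. The randomization procedure is not specified in the text, so the simple shuffle, the function name, and the dictionary keys are assumptions made for illustration.

```python
import random
from itertools import product


def build_trial_list(seed=None):
    """Return a shuffled list of 18 obstacle-present and 6 catch trials."""
    rng = random.Random(seed)
    lateral_locations = ["left", "midline", "right"]  # 25 cm left, straight ahead, 25 cm right
    approach_distances_m = [1.5, 2.0]
    repetitions = 3

    trials = [
        {"obstacle": True, "lateral": lateral, "distance_m": distance}
        for lateral, distance in product(lateral_locations, approach_distances_m)
        for _ in range(repetitions)
    ]
    # 'No obstacle' catch trials.
    trials += [{"obstacle": False, "lateral": None, "distance_m": None} for _ in range(6)]
    rng.shuffle(trials)  # assumed: fully randomized order
    return trials


trials = build_trial_list(seed=1)
print(len(trials))
```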

The full vision condition consisted of a testing phase only, similar to previous studies of visually guided navigation around an obstacle [6, 7]. This was the same as for the SSD testing phase, except that participants were blindfolded between trials only and they removed the blindfold at the start of each trial when signaled by the experimenter.

For both conditions, before each trial, the participants’ feet were aligned against a removable plastic box so that they faced directly forward, to ensure that they would walk straight ahead (this was checked by the experimenter). A shoulder tap from the experimenter signaled the beginning of the trial. Once the trial was completed, the experimenter led participants back to the starting point, and stood in the same place to the side for the duration of each trial. Except when navigating under visual guidance, participants wore close-fitting blindfolds, and their ears were occluded using headphones with sound-attenuating muffs. Participants were not allowed to use their hands to touch the obstacle and no feedback was provided during the testing phase. The sound-attenuating headphones prevented participants from hearing the obstacle being moved. This was checked during pilot testing: blindfolded participants were asked at the start of each trial to report whether the obstacle was present or had been moved, and none reported being able to do this. The experiment took approximately 1.5 hours to complete for each participant.

Statistical analyses

Unless otherwise stated, repeated-measures analyses of variance (ANOVAs) were used to analyze how the buffer space, the side of obstacle chosen (right or left), the overall movement time, and the number of velocity corrections were affected by guidance condition (SSD and vision), obstacle lateral location (left, midline, or right relative to the participant), and repetition (trial 1–3). The significance level was chosen as p<0.05. As preliminary analyses indicated that scores for all measures were not significantly different for the two approach distances (p>0.05), the results for these were pooled. Proportional data for side of avoidance were subjected to arcsine transformation prior to analysis, as recommended by Howell [39]. Post hoc analyses were performed using Bonferroni correction.
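
A minimal sketch of an arcsine transformation for proportional data is given below. The exact variant used is not stated in the text; the form arcsin(√p) is assumed here, and some texts instead use 2·arcsin(√p), which differs only by a constant scale factor.

```python
import math


def arcsine_transform(p: float) -> float:
    """Arcsine square-root transform of a proportion in [0, 1] (result in radians)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("proportion must lie between 0 and 1")
    return math.asin(math.sqrt(p))


# Hypothetical proportions of right-side passes for three obstacle locations.
for proportion in (0.9, 0.5, 0.1):
    print(proportion, arcsine_transform(proportion))
```

The transform stretches values near 0 and 1, which tends to stabilize the variance of proportions before an ANOVA is applied.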

Results

The proportions of obstacle-present trials for which no collisions occurred under SSD guidance were 0.90, 0.96, and 0.92 for obstacles located to the left, midline and right, respectively. No collisions occurred under visual guidance. See S1 File for participant data. The proportion of successful obstacle circumventions under SSD and visual guidance was substantially greater than for the no-click/no-vision condition of Kolarik et al. [6], for which the proportion of obstacle-present trials when no collisions occurred was 0.22, 0.22 and 0.06, for obstacles located to the left, midline and right, respectively. The results of Kolarik et al. [6] suggest that under conditions of no-click/no-vision guidance participants had severe difficulty even detecting the presence of the obstacle, and were not able to pass the obstacle on the side affording the most space or to generate a protective envelope to avoid collision in the majority of trials.

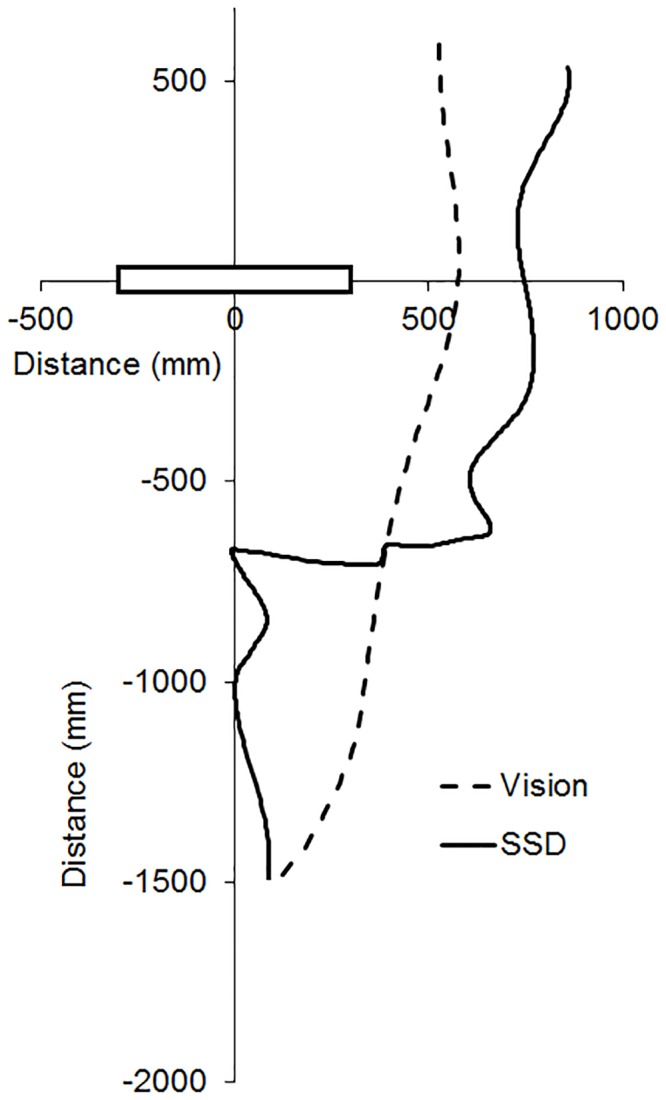

Fig 2 shows trajectories for a representative participant under visual guidance (dashed line) and SSD guidance (solid line). The obstacle was 25 cm to the left (black rectangle). Under SSD guidance, participants showed distinct deviations from the straight-ahead direction only when the obstacle was within the operating range of the SSD (1 m from the obstacle), whereas under visual guidance, participants deviated from straight ahead immediately upon initiating their movement. Under SSD guidance, the mean distances from the obstacle at which participants deviated by more than ±1 SD from the mean obstacle-absent trajectory (with standard errors in parentheses) were 752 (46), 776 (51), and 782 (59) mm for the left, midline, and right obstacles, respectively. Under both visual and SSD guidance participants moved roughly straight ahead in no-obstacle catch trials, indicating that they only deviated markedly from straight ahead when an obstacle was present.

Fig 2. Representative participant trajectories under visual guidance (dashed line) and SSD guidance (solid line) when circumventing an obstacle (black rectangle).

Data are shown for the left shoulder marker, for a participant approaching the obstacle from a distance of 1.5 m. The obstacle’s lateral location was 25 cm to the left of the participant.

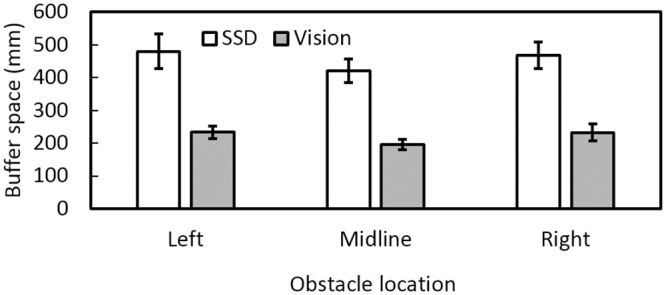

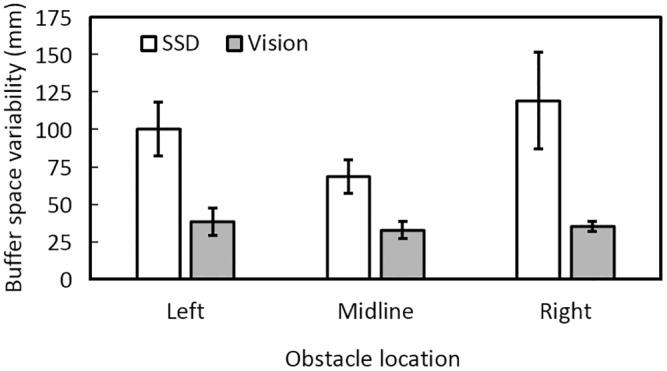

Fig 3 shows that for obstacle-present trials on which collisions did not occur, buffer space was greater under SSD guidance than under visual guidance for all obstacle positions. The mean buffer spaces under SSD guidance were 480, 422, and 468 mm for the left, midline, and right obstacles, respectively, while those under visual guidance were 233, 196, and 233 mm, respectively. There was a main effect of guidance condition [F(1, 11) = 42.4, p = 0.001] but no effect of obstacle location. To assess the variability of the buffer space, the SD of the buffer space across trials was calculated for each participant and each condition, and then the SDs were averaged across participants for each condition. Fig 4 shows the variability estimated in this way under SSD and visual guidance. Variability under SSD guidance (100, 69, and 119 mm for the left, midline, and right obstacles, respectively) was greater than under vision (39, 33, and 35 mm, respectively), suggesting that participants were less accurate in judging the position of the nearest edge of the obstacle under SSD guidance than under vision.
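
The variability measure described above (an across-trial SD per participant, then a mean across participants) can be sketched as follows. The function name and the example values are hypothetical, and the use of the sample SD (n − 1 denominator) is an assumption, since the text does not state which form was used.

```python
from statistics import mean, stdev


def mean_within_participant_sd(buffer_mm_by_participant):
    """Average, across participants, of each participant's across-trial SD.

    `buffer_mm_by_participant` maps a participant ID to that participant's
    buffer-space values (mm) for one condition and obstacle location.
    """
    sds = [
        stdev(values)  # sample SD (assumed)
        for values in buffer_mm_by_participant.values()
        if len(values) >= 2  # an SD needs at least two trials
    ]
    return mean(sds)


# Hypothetical buffer-space values for two participants.
example = {"P01": [450.0, 480.0, 510.0], "P02": [400.0, 420.0, 440.0]}
print(mean_within_participant_sd(example))
```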

Fig 3. Mean buffer space between the shoulder and obstacle at the point of passing the obstacle, for each obstacle location.

Open bars show buffer space under SSD guidance and grey bars show buffer space using vision. Here and in subsequent figures error bars denote ±1 standard error.

Fig 4. Mean buffer space variability under SSD guidance (open bars) and visual guidance (grey bars) for each obstacle location.

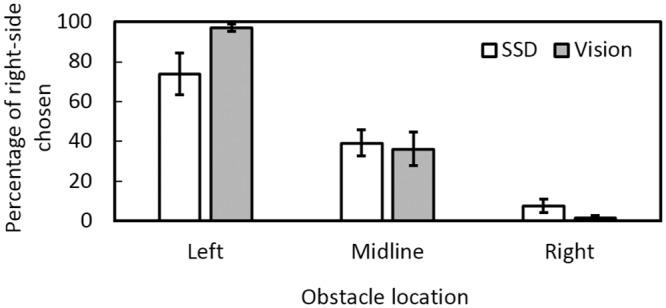

Under both SSD and visual guidance, participants generally passed the obstacle on the side that afforded the most space. In other words, participants mostly passed on the right side when the obstacle was positioned to the left, and passed on the left side when the obstacle was positioned to the right (Fig 5). The data for the side that was chosen showed a main effect of obstacle position (F(2, 22) = 75.01, p = 0.001), but not guidance condition (F(1, 11) = 0.58, p = 0.46), and a significant interaction between obstacle position and guidance condition (F(2, 22) = 7.95, p = 0.003). In both conditions, the right side was chosen significantly more often when the obstacle was on the left than when it was on the midline or the right, and when the obstacle was on the midline than when it was on the right (all p < 0.01).

Fig 5. Percentage of times the right side was chosen under SSD guidance (open bars) and visual guidance (grey bars) for each obstacle location.

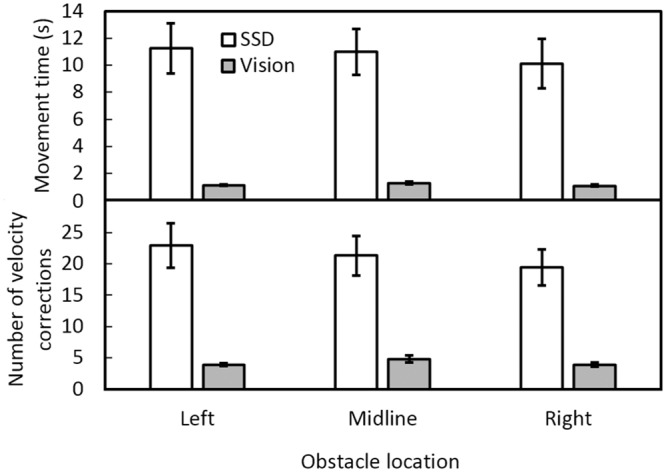

The mean movement times to circumvent the obstacle, and the mean number of velocity corrections (Fig 6, upper and lower panels respectively) were larger with the SSD than with vision. When navigating using the SSD, the mean movement times were 11, 11 and 10 s for obstacles located to the left, midline and right, respectively, compared to 1.1, 1.2, and 1.1 s, respectively, for vision. There was a main effect of guidance condition [F(1, 11) = 28.96, p = 0.001], but no effect of obstacle location. When navigating using the SSD, the mean numbers of velocity corrections were 23, 21, and 20 for obstacles that were located to the left, midline and right, respectively, while using vision they were 4, 5, and 4, respectively. There was a significant main effect of guidance condition [F(1, 11) = 31.05, p = 0.001], but not of obstacle location.

Fig 6. Mean movement times (upper panel) and numbers of velocity corrections (lower panel) under SSD guidance (open bars), and visual guidance (grey bars) for each obstacle location.

To investigate whether there was any reduction in movement speed simply due to being blindfolded, regardless of obstacle circumvention, movement times were assessed for no-obstacle catch trials. The mean movement times were 1, 5, and 8 s when navigating using vision, SSD guidance, and in the no-click/no-vision condition of Kolarik et al. [6], respectively. The mean numbers of velocity corrections were 4, 9, and 20 using vision, the SSD and in the no-click/no-vision condition, respectively. These findings suggest that participants moved more slowly when using the SSD than when using vision mainly because of the absence of vision, rather than because of SSD use per se.

Discussion

The results show that: 1) echoic SSD information guided locomotion by blindfolded participants in a single-obstacle circumvention task with 93% accuracy; 2) buffer space using the SSD was larger by a factor of 2.1 than for visual guidance; 3) movement times were longer by a factor of 9.4, and the number of velocity corrections was larger by a factor of 5 for SSD than for visual guidance; 4) using SSD information, participants generally passed the obstacle on the side affording the most space. These results can be compared to those for a previous study in which blindfolded, normally sighted, non-expert echolocators performed a similar task using echolocation to guide their movements around an obstacle [6]. In that study, participants circumvented the obstacle without collision in 67% of the trials. Compared to visual guidance, the buffer space was larger by a factor of 1.8, movement times were longer by a factor of 27, and the number of velocity corrections was larger by a factor of 14. Also, in that study participants did not generally pass on the side of the obstacle affording the most space. The results suggest that for blindfolded participants, locomotion using an echoic SSD is more accurate and faster than when using echolocation based on self-generated sounds. Further investigation is needed to test the magnitude of deviation from the midline required for participants to choose the path affording most space when using SSD guidance.
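
As a rough consistency check, the SSD-to-vision factors quoted above can be recovered from the condition means reported in the Results. The sketch assumes that each factor is the ratio of the means averaged over the three obstacle locations; the exact computation is not stated in the text, so this is an assumption, and the variable and function names are illustrative.

```python
from statistics import mean

# Means for the left, midline, and right obstacle locations, as reported in the Results.
reported = {
    "buffer space (mm)": ([480, 422, 468], [233, 196, 233]),
    "movement time (s)": ([11, 11, 10], [1.1, 1.2, 1.1]),
    "velocity corrections": ([23, 21, 20], [4, 5, 4]),
}
collision_free_ssd = [0.90, 0.96, 0.92]


def ssd_to_vision_ratio(ssd, vision):
    """Ratio of the SSD mean to the vision mean, averaged over obstacle locations."""
    return mean(ssd) / mean(vision)


for name, (ssd, vision) in reported.items():
    print(f"{name}: {ssd_to_vision_ratio(ssd, vision):.1f}")
print(f"collision-free trials: {100 * mean(collision_free_ssd):.0f}%")
```

Under this assumption the ratios come out at 2.1, 9.4, and 4.9 (quoted as 5), and the collision-free rate at 93%.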

The current findings are consistent with the idea that echoic SSD information enables a protective envelope to be generated during locomotion, similar to that generated using visual information. The buffer space was larger under SSD guidance than under visual guidance. One possible reason for this is that the wavelength of ultrasound imposes a limit on the spatial detail available from echoic SSDs [40, 41]. The distance and location information available with the SSD were less precise than provided by vision, and participants may have allowed for this (perhaps without conscious awareness) by increasing the size of the protective envelope under SSD guidance. Consistent with this, the measure of variability of the buffer space was greater under SSD guidance than under vision. Another possibility is that the size of the protective envelope was not altered by the perceptual system, but buffer space increased due to misjudging the obstacle location or overestimating the obstacle width. A third possibility is that the increased buffer space under SSD guidance was partly due to increased postural sway, since the participants were blindfolded. Franchak et al. [32] described how a larger buffer space may be produced either when the participant is exercising greater caution to avoid high speed collision or when lateral sway increases, requiring larger spatial buffers for passage.

One potential limitation of our study arises from the possible obstacle locations being fixed. Participants may have learned these locations during the last part of the training or early in the main experiment and might then have required only minimal cues from the SSD to move in an appropriate way. However, we think that this is unlikely, for the following reasons. First, seven possible predetermined configurations were used (left, right, or directly ahead, at closer and further distances, plus obstacle-absent catch trials). Second, during training only a single distance was used (different from the distances used in the testing phase) and each lateral position was presented only once or twice. Third, during the testing phase, each location was used only three times (plus six catch trials) and participants were not informed about the number of possible locations, minimizing the opportunity for learning. Consistent with this, there was no significant effect of repetition, indicating that performance did not improve over trials. Thus, we believe it is highly unlikely that participants learned the possible locations of the obstacle.

The present results are consistent with previous work suggesting that the representation of space is amodal [42], although they are also consistent with other interpretations; see [43] for a review. Blindfolded participants were able to generate a representation of space using SSD vibration information and adopt a strategy that is similar to the one used during visual navigation, as has been shown in previous work [10]. The finding that movement times and velocity corrections were greater under SSD guidance than under vision is consistent with results from a recent study [27] which showed that the number of pauses and time taken to complete a trial when navigating through virtual mazes were greater when using an SSD than when using vision. Next, we discuss possible interpretations of how participants use sensory information from a substituted modality to navigate around an obstacle.

According to representation-based control approaches [44, 45] to path planning (see [46] for a review of visually guided obstacle avoidance), participants may use the SSD to gather information about the spatial positions of the boundaries of the obstacle as they move, thus generating an internal perceptual representation similar to that obtained over a series of saccadic eye movements [47] or when combining information from a series of echolocation clicks made while moving the head [48]. Such internal representations are then used to make locomotor adjustments, similar to using visual information during locomotor actions, where representation of the body may also play a critical role [46]. The findings of the current study and of our previous study [20] suggest that, across different tasks (single obstacle circumvention and aperture navigation) and substituted modalities (haptic and auditory), internal representations based on the SSD and echoes from self-generated sound are less accurate than those based on vision. In the current study, less accurate internal representations with the SSD may have led to increased buffer space, velocity corrections and movement times. However, internal representations derived from the SSD may have been more accurate than those derived by non-expert echolocators using self-generated sounds [6].

According to the representation-based control approach, an action path is planned based on the internal representation, prior to obstacle circumvention. The greater the effective range over which the obstacle’s position can be detected, the further in advance the path can be planned, potentially allowing minimization of energy expenditure and reducing the chance of collision. In our study, vision provided an effective range covering the full length of the room (5.7 m). Thus, participants could plan their path from the start of each trial. The effective range for non-expert echolocators performing a similar obstacle circumvention task was approximately 60 cm [6]. In the current study the range of the SSD was set to 1 m, following a previous study of locomotion through apertures [20]. Under SSD guidance, participants deviated from a straight-ahead path approximately 77 cm from the obstacle. Overall, these results indicate that participants were able to plan their movements from the onset of the trial under visual guidance, whereas under SSD guidance they planned their movement once they were within the effective range of the SSD (1 m). In terms of the representation-based control approach, increasing the range of the SSD may allow participants to plan their movements further in advance, improving circumvention performance. A previous study using the EyeCane SSD with a 5 m range showed that participants were indeed able to plan their movements in a maze accurately and represent the configuration of the maze [10].

An alternative, information-based control approach [49, 50] is based on the assumption that the perceptual system uses some law of control to guide navigation on a moment-by-moment basis, using information from one or more relevant variables, such as optic flow in the case of vision [51]. Advance path planning or internal models of space are not needed to avoid an obstacle, and the variable used for control of movement may not even provide information regarding spatial layout. In our experiment, participants may have avoided the obstacle by using SSD information to inform local steering dynamics, rather than by planning the route in advance using an internal representation of space. The variable used under SSD guidance may have been less informative than the variable used under visual guidance, leading to increased buffer space, velocity corrections and movement times for the former. On the other hand, the variable used under SSD guidance may have been more informative than the variable used by the non-expert echolocating participants tested in a previous study [6], leading to faster and more accurate performance for the former.

The representation-based and information-based control approaches are not mutually exclusive, and both approaches can provide time-to-contact information. Information regarding time-to-contact to an obstacle can be obtained using visual or auditory information [52]. By monitoring the rate of change of a relevant variable such as echoic intensity when approaching an obstacle, accuracy in locating its position may increase [25, 53]. In the current study, participants could have monitored the rate of change in SSD vibration to judge their position relative to the obstacle and to avoid contacting the obstacle.
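As a rough illustration of the rate-of-change idea described above, the following Python sketch estimates time-to-contact as the current distance divided by the closing speed, using two successive distance samples. This is only a first-order approximation under stated assumptions: the function name, the sampling interval, and the example distances are hypothetical and are not taken from the study, and the sketch assumes that distance estimates are directly available, whereas the SSD signalled distance through vibration rate.

```python
def time_to_contact(distances_m, dt_s):
    """Estimate time-to-contact (s) from the last two distance samples.

    First-order approximation: TTC = current distance / closing speed.
    Returns None when the observer is not approaching the obstacle.
    """
    if len(distances_m) < 2:
        raise ValueError("need at least two distance samples")
    previous, current = distances_m[-2], distances_m[-1]
    # Closing speed in m/s; positive when the distance is shrinking.
    closing_speed = (previous - current) / dt_s
    if closing_speed <= 0:
        return None
    return current / closing_speed


# Hypothetical samples: distance to the obstacle (m) taken every 0.5 s.
samples = [1.00, 0.95, 0.90]
ttc = time_to_contact(samples, dt_s=0.5)
print(ttc)  # roughly 9 s at this (illustrative) approach speed
```

A shrinking time-to-contact estimate would indicate that the obstacle is being approached faster relative to the remaining distance, which is the kind of cue a participant could in principle use to slow down or steer away.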

It is possible that echoic SSDs provide an aspect of vision (depth) more efficiently than echolocation alone. This may explain why the participants in the current study were able to pass on the side affording the most space in the majority of trials, whereas non-expert echolocators in a previous study [6], who did not have access to this information, did not. The blindfolded sighted participants moved more slowly under SSD guidance than under visual guidance, but this was probably caused by the absence of vision rather than by SSD use per se. Congenitally blind participants might not take longer to navigate around an obstacle with an SSD than without one, as they are used to navigating without vision.

Chebat et al. [31] showed that blind participants were better than blindfolded sighted controls at detecting and avoiding obstacles using the TDU. The better performance of blind participants relative to blindfolded sighted participants when using SSDs was shown to reflect cortical reorganization by Kupers et al. [54], who used the TDU to guide navigation in a virtual environment in an fMRI scanner and demonstrated that the occipital (visual) cortex was activated in congenitally blind participants but not in sighted controls. Activation of the occipital cortex in blind participants but not in sighted controls during a distance evaluation task using an echoic SSD was also reported by De Volder et al. [55]. Such cortical reorganization may result in different movement kinematics under SSD guidance between blind and sighted participants. However, this requires testing.

Peripersonal space describes space near the individual, e.g. within reaching and grasping distance, and extrapersonal space is space farther from the body [4, 10, 56–58]. Sensory events in peripersonal space often require rapid motor responses such as avoidance in response to a perceived threat [56], and multisensory information may be processed differently depending on whether it is presented in peripersonal or extrapersonal space [57]. Peripersonal space can be extended or projected by altering the reaching distance [57, 58], and Chebat et al. [10] previously suggested that use of the EyeCane extended peripersonal space, contributing to success in navigating through real or virtual mazes. Given the strong adaptive value of peripersonal space in detecting stimuli in close proximity to the body prior to contact [58] and the similar role of the protective envelope in obstacle avoidance during locomotion, the spatial boundary of peripersonal space may be similar to the protective envelope, and/or the buffer space measured in the current study. However, this requires further investigation.

Finally, we turn to some practical aspects of the use of SSDs. In the present study, participants took approximately 11 s to move 1 m, equivalent to a walking speed of only about 0.33 km/h. However, further training with an SSD may lead to improved performance, decreasing collisions and resulting in smoother and faster movements. Benefits of training have been shown previously. For example, Kim and Zatorre [59] showed that long-term training with the vOICe SSD improved shape and pattern identification of visual images using visual-to-auditory substitution with sighted participants.
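The walking-speed figure quoted above follows from a simple unit conversion, which can be checked with the short Python sketch below. The function name is hypothetical and introduced only for illustration; the inputs (1 m in approximately 11 s) are the values stated in the text.

```python
def speed_km_per_h(distance_m, time_s):
    """Convert a distance (m) covered in a given time (s) to km/h."""
    metres_per_second = distance_m / time_s
    return metres_per_second * 3.6  # 1 m/s = 3.6 km/h


speed = speed_km_per_h(distance_m=1.0, time_s=11.0)
print(round(speed, 2))  # prints 0.33
```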

Chebat et al. [31] pointed out that the visual pattern SSD that they used to assess navigation through a maze was tested under optimal experimental conditions, and that the findings may not generalize to less ideal real-world conditions, such as when low-contrast obstacles are encountered in varied light conditions. The echoic SSD tested here was also tested under optimal experimental conditions, and caution is needed in generalizing the findings to real-world conditions, where, for example, noisy environments could reduce its effectiveness. Echoic SSDs are as effective in dark and low-level light conditions as in well-lit conditions (as are self-generated echoes used for human echolocation). However, less acoustically reflective objects may lead to lower locomotion performance based on sound echoes, while visual pattern SSDs would not be affected by acoustic reflectivity. Further investigation comparing the two classes of SSD across various environmental conditions may serve to provide greater insight regarding their applicability for practical visual rehabilitation of visually impaired individuals.

Supporting Information

(XLSX)

Acknowledgments

We thank the Editor Michael Proulx, Daniel-Robert Chebat, and two anonymous reviewers, whose extensive comments were most helpful. We also thank Silvia Cirstea for valuable early discussions regarding an earlier experiment, and John Peters and George Oluwafemi for their assistance in collecting data.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

This research was supported by the Vision and Eye Research Unit, Postgraduate Medical Institute at Anglia Ruskin University (awarded to SP), and the Medical Research Council (awarded to BCJM, Grant number G0701870), http://www.anglia.ac.uk/postgraduate-medical-institute/groups/vision-and-eyeresearch-unit; http://www.mrc.ac.uk. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Gérin-Lajoie M, Richards CL, Fung J, McFadyen BJ. Characteristics of personal space during obstacle circumvention in physical and virtual environments. Gait Posture. 2008;27:239–47.

- 2. Stoffregen TA, Pittenger JB. Human echolocation as a basic form of perception and action. Ecol Psychol. 1995;7:181–216.

- 3. Kolarik AJ, Cirstea S, Pardhan S, Moore BCJ. A summary of research investigating echolocation abilities of blind and sighted humans. Hear Res. 2014;310:60–8. doi:10.1016/j.heares.2014.01.010

- 4. Kolarik AJ, Moore BCJ, Zahorik P, Cirstea S, Pardhan S. Auditory distance perception in humans: A review of cues, development, neuronal bases and effects of sensory loss. Atten Percept Psychophys. 2016;78:373–95. doi:10.3758/s13414-015-1015-1

- 5. Bach-y-Rita P, Collins CC, Saunders FA, White B, Scadden L. Vision substitution by tactile image projection. Nature. 1969;221:963–4.

- 6. Kolarik AJ, Scarfe AC, Moore BCJ, Pardhan S. An assessment of auditory-guided locomotion in an obstacle circumvention task. Exp Brain Res. 2016;234:1725–35. doi:10.1007/s00221-016-4567-y

- 7. Hackney AL, Van Ruymbeke N, Bryden PJ, Cinelli ME. Direction of single obstacle circumvention in middle-aged children. Gait Posture. 2014;40:113–7. doi:10.1016/j.gaitpost.2014.03.005

- 8. Maidenbaum S, Hanassy S, Abboud S, Buchs G, Chebat DR, Levy-Tzedek S, et al. The “EyeCane”, a new electronic travel aid for the blind: Technology, behavior & swift learning. Restor Neurol Neurosci. 2014;32:813–24. doi:10.3233/RNN-130351

- 9. Maidenbaum S, Levy-Tzedek S, Chebat DR, Namer-Furstenberg R, Amedi A. The effect of extended sensory range via the EyeCane sensory substitution device on the characteristics of visionless virtual navigation. Multisens Res. 2014;27:379–97.

- 10. Chebat DR, Maidenbaum S, Amedi A. Navigation using sensory substitution in real and virtual mazes. PLoS One. 2015;10:e0126307. doi:10.1371/journal.pone.0126307

- 11. Hicks SL, Wilson I, Muhammed L, Worsfold J, Downes SM, Kennard C. A depth-based head-mounted visual display to aid navigation in partially sighted individuals. PLoS One. 2013;8:e67695. doi:10.1371/journal.pone.0067695

- 12. Maidenbaum S, Levy-Tzedek S, Chebat DR, Amedi A. Increasing accessibility to the blind of virtual environments, using a virtual mobility aid based on the "EyeCane": Feasibility study. PLoS One. 2013;8:e72555. doi:10.1371/journal.pone.0072555

- 13. Meijer PBL. An experimental system for auditory image representations. IEEE Transactions on Biomedical Engineering. 1992;39:112–21.

- 14. Striem-Amit E, Guendelman M, Amedi A. 'Visual' acuity of the congenitally blind using visual-to-auditory sensory substitution. PLoS One. 2012;7:e33136. doi:10.1371/journal.pone.0033136

- 15. Striem-Amit E, Cohen L, Dehaene S, Amedi A. Reading with sounds: Sensory substitution selectively activates the visual word form area in the blind. Neuron. 2012;76:640–52. doi:10.1016/j.neuron.2012.08.026

- 16. Renier L, Collignon O, Poirier C, Tranduy D, Vanlierde A, Bol A, et al. Cross-modal activation of visual cortex during depth perception using auditory substitution of vision. NeuroImage. 2005;26:573–80.

- 17. Renier L, Laloyaux C, Collignon O, Tranduy D, Vanlierde A, Bruyer R, et al. The Ponzo illusion with auditory substitution of vision in sighted and early-blind subjects. Perception. 2005;34:857–67.

- 18. Kay L. A sonar aid to enhance spatial perception of the blind: Engineering design and evaluation. Radio and Electronic Engineer. 1974;44:605–27.

- 19. Vincent C, Routhier F, Martel V, Mottard M-È, Dumont F, Côté L, et al. Field testing of two electronic mobility aid devices for persons who are deaf-blind. Disabil Rehabil Assist Technol. 2014;9:414–20. doi:10.3109/17483107.2013.825929

- 20. Kolarik AJ, Timmis MA, Cirstea S, Pardhan S. Sensory substitution information informs locomotor adjustments when walking through apertures. Exp Brain Res. 2014;232:975–84. doi:10.1007/s00221-013-3809-5

- 21. Worchel P, Mauney J, Andrew JG. The perception of obstacles by the blind. J Exp Psychol. 1950;40:746–51.

- 22. Supa M, Cotzin M, Dallenbach KM. "Facial vision": The perception of obstacles by the blind. Am J Psychol. 1944;57:133–83.

- 23. Worchel P, Mauney J. The effect of practice on the perception of obstacles by the blind. J Exp Psychol. 1951;41:170–6.

- 24. Ammons CH, Worchel P, Dallenbach KM. "Facial vision": The perception of obstacles out of doors by blindfolded and blindfolded-deafened subjects. Am J Psychol. 1953;66:519–53.

- 25. Rosenblum LD, Gordon MS, Jarquin L. Echolocating distance by moving and stationary listeners. Ecol Psychol. 2000;12:181–206.

- 26. Carlson-Smith C, Weiner WR. The auditory skills necessary for echolocation: A new explanation. J Vis Impair Blind. 1996;90:21–35.

- 27. Levy-Tzedek S, Maidenbaum S, Amedi A, Lackner J. Aging and Sensory Substitution in a Virtual Navigation Task. PLoS One. 2016;11(3):e0151593. doi:10.1371/journal.pone.0151593

- 28. Veraart C, Crémieux J, Wanet-Defalque MC. Use of an ultrasonic echolocation prosthesis by early visually deprived cats. Behav Neurosci. 1992;106:203–16.

- 29. Veraart C, Wanet-Defalque MC. Representation of locomotor space by the blind. Percept Psychophys. 1987;42:132–9.

- 30. Hughes B. Active artificial echolocation and the nonvisual perception of aperture passability. Hum Mov Sci. 2001;20:371–400.

- 31. Chebat DR, Schneider FC, Kupers R, Ptito M. Navigation with a sensory substitution device in congenitally blind individuals. NeuroReport. 2011;22:342–7. doi:10.1097/WNR.0b013e3283462def

- 32. Franchak JM, Celano EC, Adolph KE. Perception of passage through openings depends on the size of the body in motion. Exp Brain Res. 2012;223:301–10. doi:10.1007/s00221-012-3261-y

- 33. Gérin-Lajoie M, Richards CL, McFadyen BJ. The negotiation of stationary and moving obstructions during walking: anticipatory locomotor adaptations and preservation of personal space. Motor Control. 2005;9:242–69.

- 34. Roentgen UR, Gelderblom GJ, Soede M, de Witte LP. The impact of electronic mobility devices for persons who are visually impaired: A systematic review of effects and effectiveness. J Vis Impair Blind. 2009;103:743–53.

- 35. Jansen SE, Toet A, Werkhoven PJ. Human locomotion through a multiple obstacle environment: Strategy changes as a result of visual field limitation. Exp Brain Res. 2011;212:449–56. doi:10.1007/s00221-011-2757-1

- 36. Maidenbaum S, Abboud S, Amedi A. Sensory substitution: Closing the gap between basic research and widespread practical visual rehabilitation. Neurosci Biobehav Rev. 2014;41:3–15. doi:10.1016/j.neubiorev.2013.11.007

- 37. Arnott SR, Thaler L, Milne JL, Kish D, Goodale MA. Shape-specific activation of occipital cortex in an early blind echolocation expert. Neuropsychologia. 2013;51:938–49. doi:10.1016/j.neuropsychologia.2013.01.024

- 38. Davies TC, Pinder SD, Burns CM. What's that sound? Distance determination and aperture passage from ultrasound echoes. Disabil Rehabil Assist Technol. 2011;6:500–10. doi:10.3109/17483107.2010.542569

- 39. Howell DC. Statistical Methods for Psychology. Belmont, CA: Duxbury Press; 1997.

- 40. Lee DN. Body-environment coupling. In: Neisser U, editor. The perceived self: Ecological and interpersonal sources of self-knowledge. Cambridge: Cambridge University Press; 1993. p. 43–67.

- 41. Easton RD. Inherent problems of attempts to apply sonar and vibrotactile sensory aid technology to the perceptual needs of the blind. Optom Vis Sci. 1992;69:3–14.

- 42. Levy-Tzedek S, Novick I, Arbel R, Abboud S, Maidenbaum S, Vaadia E, et al. Cross-sensory transfer of sensory-motor information: Visuomotor learning affects performance on an audiomotor task, using sensory-substitution. Sci Rep. 2012;2:949. doi:10.1038/srep00949

- 43. Schinazi VR, Thrash T, Chebat DR. Spatial navigation by congenitally blind individuals. Wiley Interdiscip Rev Cogn Sci. 2016;7:37–58. doi:10.1002/wcs.1375

- 44. Frenz H, Lappe M. Absolute travel distance from optic flow. Vision Res. 2005;45:1679–92.

- 45. Turano KA, Yu D, Hao L, Hicks JC. Optic-flow and egocentric-direction strategies in walking: Central vs peripheral visual field. Vision Res. 2005;45:3117–32.

- 46. Higuchi T, Imanaka K, Patla AE. Action-oriented representation of peripersonal and extrapersonal space: Insights from manual and locomotor actions. Jpn Psychol Res. 2006;48:126–40.

- 47. Prime SL, Vesia M, Crawford JD. Cortical mechanisms for trans-saccadic memory and integration of multiple object features. Phil Trans R Soc B. 2011;366:540–53. doi:10.1098/rstb.2010.0184

- 48. Milne JL, Goodale MA, Thaler L. The role of head movements in the discrimination of 2-D shape by blind echolocation experts. Atten Percept Psychophys. 2014;76:1828–37. doi:10.3758/s13414-014-0695-2

- 49. Fajen BR, Warren WH. Behavioral dynamics of steering, obstacle avoidance, and route selection. J Exp Psychol Hum Percept Perform. 2003;29:343–62.

- 50. Warren WH. Visually controlled locomotion: 40 years later. Ecol Psychol. 1998;10:177–219.

- 51. Fajen BR. Affordance-based control of visually guided action. Ecol Psychol. 2007;19:383–410.

- 52. Schiff W, Oldak R. Accuracy of judging time to arrival: Effects of modality, trajectory, and gender. J Exp Psychol Hum Percept Perform. 1990;16:303–16.

- 53. Lee DN, van der Weel FR, Hitchcock T, Matejowsky E, Pettigrew JD. Common principle of guidance by echolocation and vision. J Comp Physiol A. 1992;171:563–71.

- 54. Kupers R, Chebat DR, Madsen KH, Paulson OB, Ptito M. Neural correlates of virtual route recognition in congenital blindness. Proc Natl Acad Sci USA. 2010;107:12716–21. doi:10.1073/pnas.1006199107

- 55. De Volder AG, Catalan-Ahumada M, Robert A, Bol A, Labar D, Coppens A, et al. Changes in occipital cortex activity in early blind humans using a sensory substitution device. Brain Res. 1999;826:128–34.

- 56. Serino A, Canzoneri E, Avenanti A. Fronto-parietal areas necessary for a multisensory representation of peripersonal space in humans: An rTMS study. J Cognitive Neurosci. 2011;23:2956–67.

- 57. Van der Stoep N, Nijboer T, Van der Stigchel S, Spence C. Multisensory interactions in the depth plane in front and rear space: A review. Neuropsychologia. 2015;70:335–49. doi:10.1016/j.neuropsychologia.2014.12.007

- 58. Serino A, Bassolino M, Farnè A, Làdavas E. Extended multisensory space in blind cane users. Psych Sci. 2007;18:642–8.

- 59. Kim JK, Zatorre RJ. Generalized learning of visual-to-auditory substitution in sighted individuals. Brain Res. 2008;1242:263–75. doi:10.1016/j.brainres.2008.06.038
