Abstract

Purpose

The decision by journals to append protocols to published reports of randomized trials was a landmark event in clinical trial reporting. However, limited information is available on how this initiative affected transparency and selective reporting of clinical trial data.

Methods

We analyzed 74 oncology-based randomized trials published in Journal of Clinical Oncology, the New England Journal of Medicine, and The Lancet in 2012. To ascertain integrity of reporting, we compared published reports with their respective appended protocols with regard to primary end points, nonprimary end points, unplanned end points, and unplanned analyses.

Results

A total of 86 primary end points were reported in 74 randomized trials; nine trials had more than one primary end point. Nine trials (12.2%) had some discrepancy between their planned and published primary end points. A total of 579 nonprimary end points (median, seven per trial) were planned, of which 373 (64.4%; median, five per trial) were reported. A significant positive correlation was found between the number of planned and nonreported nonprimary end points (Spearman r = 0.66; P < .001). Twenty-eight studies (37.8%) reported a total of 65 unplanned end points, 52 (80.0%) of which were not identified as unplanned. Thirty-one (41.9%) and 19 (25.7%) of 74 trials reported a total of 52 unplanned analyses involving primary end points and 33 unplanned analyses involving nonprimary end points, respectively. Studies reported positive unplanned end points and unplanned analyses more frequently than negative outcomes in abstracts (unplanned end points odds ratio, 6.8; P = .002; unplanned analyses odds ratio, 8.4; P = .007).

Conclusion

Despite public and reviewer access to protocols, selective outcome reporting persists and is a major concern in the reporting of randomized clinical trials. To foster credible evidence-based medicine, additional initiatives are needed to minimize selective reporting.

INTRODUCTION

Evidence-based clinical practice is a fundamental dogma of modern day medicine. For this evidence-based paradigm to accurately inform clinical practice, the reported data must be complete, accurate, and unbiased. However, empiric evidence suggests the presence of substantial publication and outcome reporting bias in the published literature that pertains to randomized trials.1–3 Publication and outcome reporting bias occur when the decision to selectively publish a study or selectively report an outcome is made on the basis of the direction and statistical significance of the data.4 The term selective reporting as it pertains to outcomes encompasses a diverse group of practices that include under-reporting (nonreporting of planned outcomes), over-reporting (reporting of unplanned outcomes), or misreporting (changing definitions and measures of outcomes), all of which are intended to make the results seem more exciting and to increase the likelihood of publication. These publication practices hamper the reproducibility of research and result in the dissemination of potentially misleading scientific data, including overestimation of effect size, which thereby threatens the validity of the evidence-based medicine model.3,5

Randomized clinical trials reflect the highest level of scientific evidence and should be held to the highest standards of reporting. Initiatives such as development of the CONSORT statement in 1996 and the requirement for mandatory registration of all clinical trials in 2005 were substantial efforts made toward improving the transparency and reporting of randomized studies.6–8 Despite these efforts, selective reporting of outcomes remained a problem in randomized trials.2,9 To address this issue and to improve the credibility of results reported from randomized trials, major journals began requiring the disclosure of protocols for published randomized trials to journal editors, reviewers, and readers.10 This requirement introduced greater transparency in reporting; however, the degree to which transparency has improved the accuracy of reporting is unknown. To evaluate the incidence of selective reporting of outcomes in the era of public access to protocols, we designed a systematic review of published randomized trials in oncology in three high-impact journals. The outcomes and analyses reported in published reports were compared with the appended protocols to determine the consistency between planned and published outcomes.

METHODS

Study Inclusion Criteria and Search Strategy

We performed a PubMed search on December 1, 2013, with the key words randomized, randomly, or random in either the title or the abstract. Results were limited to reports published in Journal of Clinical Oncology (JCO), the New England Journal of Medicine (NEJM), or The Lancet between March 1, 2012, and December 31, 2012, and were filtered with the subject of cancer. These journals were selected because they had an impact factor of 15 or greater and published randomized trials in oncology. The Lancet Oncology and The Journal of the American Medical Association were not included, because they did not provide protocols for randomized trials during this time period. Because major journals mandated appended protocols beginning in March 2011, the March 2012 time point for study inception was chosen to allow a 1-year interval for practice incorporation.10 The search was cross-checked against each journal's own search engine and by an independent review of the tables of contents for all archived issues during this period (Fig 1). Nonrandomized studies, secondary reports of randomized trials, and articles focused on the screening or prevention of cancer were excluded. Primary publications of 74 cancer-related randomized clinical trials with available appended protocols were included in the study (Data Supplement).

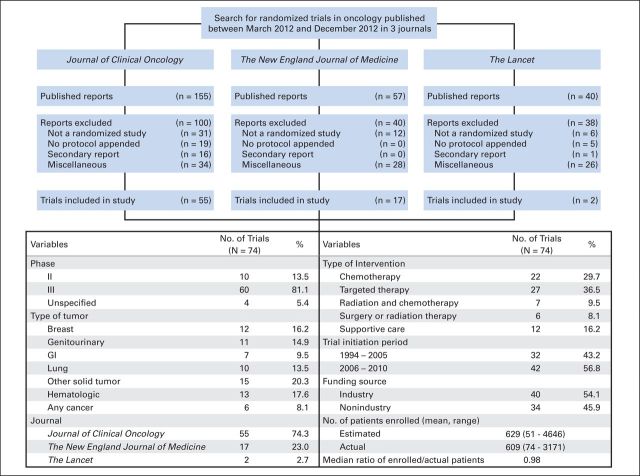

Fig 1.

Search strategy for and baseline characteristics of randomized trials included in the study. A search for randomized trials returned 252 published trials, of which 74 were included in the current analysis. Reports excluded for miscellaneous reasons were those that were reviews, were published outside the specified time period, or were not oncology related.

For each randomized trial, two study documents—the appended protocol and the published report—were reviewed and compared for objectives, end points, and a statistical analysis plan. Baseline characteristics, such as study size, phase, funding source, year of initiation, type of intervention, and disease site, were recorded. Data elements extracted from study documents included the number and type of planned primary end points, planned nonprimary end points, nonreported nonprimary end points, unplanned end points, and unplanned analyses (Data Supplement). End points were determined from the protocol, and preference was given to sections in this order: end points, objectives, and statistical plan. Secondary, exploratory, correlative, or translational end points were considered nonprimary end points. Because clinical trials invariably report adverse events, these were not considered unplanned, even if they were unspecified in the protocol. Specific toxicities (eg, incidence of secondary malignancies) were considered planned nonprimary end points only when specifically identified as outcome measures in the protocol, or they were considered unplanned end points if they were statistically compared in the report but not listed in the protocol. An unplanned analysis represented a novel analysis that had not been specified or implied in the protocol. Unplanned analyses did not include analyses of unplanned end points or instances in which a change in the statistical test for an analysis occurred. Data were collected by one investigator, verified by another investigator, and confirmed by the principal investigator before final analysis. All discrepancies were resolved through reappraisal of original documents.
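The comparison rules described above can be sketched as set operations. This is only an illustration: in the study the matching was done by investigators reading the documents, whereas the sketch assumes end point names have already been harmonized by hand. The class, field names, and example end points are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TrialOutcomes:
    """End points extracted from one trial (hypothetical structure)."""
    planned_primary: set[str]
    planned_nonprimary: set[str]
    reported: set[str]
    # Adverse events are never counted as unplanned, per the stated rule.
    adverse_events: set[str] = field(default_factory=set)


def classify(trial: TrialOutcomes) -> dict[str, set[str]]:
    """Split end points into nonreported and unplanned groups."""
    planned = trial.planned_primary | trial.planned_nonprimary
    return {
        "nonreported_primary": trial.planned_primary - trial.reported,
        "nonreported_nonprimary": trial.planned_nonprimary - trial.reported,
        "unplanned": trial.reported - planned - trial.adverse_events,
    }


# Hypothetical trial, for illustration only.
example = TrialOutcomes(
    planned_primary={"overall survival"},
    planned_nonprimary={"progression-free survival", "quality of life", "biomarker X"},
    reported={"overall survival", "progression-free survival", "response rate", "neutropenia"},
    adverse_events={"neutropenia"},
)
result = classify(example)
```

In this hypothetical trial, quality of life and biomarker X are planned but nonreported, and response rate is an unplanned end point; neutropenia is exempt because it is an adverse event.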

Statistical Methods

The objective of the study was to assess selective reporting in randomized trials by determining discrepancies in planned primary end points, quantifying the incidence of nonreported nonprimary end points, and assessing reporting frequency and labeling of unplanned end points and unplanned analyses. Fisher's exact test was used for determining the significance of association between dichotomized and categoric variables. The Wilcoxon Mann-Whitney U test was used for the comparison of distributions between two groups, and Spearman's rank correlation coefficient was used as the measure of statistical dependence between two variables. All reported P values were two sided, and P < .05 was considered statistically significant. We have used P values as descriptive measures of discrepancy, not as inferential tests of null hypotheses. Because of the descriptive nature of our analyses, no adjustments were made for multiple comparisons. Statistical software R (R Development Core Team, www.r-project.org) version 3.1.2 was used.
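For readers unfamiliar with Fisher's exact test, a minimal standard-library sketch of the two-sided version for a 2 × 2 table follows. It assumes the common convention of summing the probabilities of all tables that are no more likely than the observed one; the analyses in this study were run in R, so this Python function is an illustration and not the code that was used.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact P value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x in the top-left cell,
        # with all margins held fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum tables that are as likely as, or less likely than, the observed one.
    # The small tolerance guards against floating-point ties.
    total = sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))
    return min(1.0, total)


# Hypothetical 2x2 table, for illustration only.
p_value = fisher_exact_two_sided(3, 1, 1, 3)
```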

RESULTS

Trial Characteristics

We identified a total of 74 oncology-based randomized trials published in The Lancet (n = 2), NEJM (n = 17), and JCO (n = 55; Fig 1). These trials enrolled an average of 609 patients (range, 74 to 3,171 patients). A majority of the trials were phase III (81.1%), were industry sponsored (54.1%), and involved systemic therapy as an intervention (66.2%; Fig 1).

Reporting of Primary End Points

A total of 86 primary end points were reported across 74 trials, and nine trials (12.2%) had more than one reported primary end point (Table 1). Nine trials (12.2%) had some discrepancy between their planned and published primary end points. Sources of disagreement included introduction of a new primary end point (n = 1), failure to report a planned primary end point (n = 1), change in the reporting of planned primary end point (n = 3), and change in the terminology of planned primary end point (n = 4; Table 1; Data Supplement). Trials with discrepant primary end points were more likely to have been initiated before 2005 (P = .03; Data Supplement).

Table 1.

Reporting and Analyses of PEPs in Randomized Trials (N = 74)

| Variable | Trials, No. | Trials, % |
|---|---|---|
| Total No. of PEPs reported* | 86 | |
| No. of PEPs in the trial | | |
| Single | 65 | 87.8 |
| Multiple | 9 | 12.2 |
| Type of PEP in the trial* | | |
| Overall survival | 25 | 33.8 |
| Time to event (excluding overall survival)† | 35 | 47.3 |
| Response rate | 7 | 9.5 |
| Symptom scale | 10 | 13.5 |
| Other | 9 | 12.2 |
| Outcome of the trial‡ | | |
| Positive | 35 | 47.3 |
| Negative | 39 | 52.7 |
| Labeling of PEPs in the reports | | |
| PEP | 63 | 85.1 |
| Primary outcome or primary objective | 10 | 13.5 |
| Aim | 1 | 1.4 |
| Discrepancy of PEPs between protocols and reports | | |
| Total | 9 | 12.2 |
| Reporting of new PEP | 1 | 1.4 |
| Omission of planned PEP | 1 | 1.4 |
| Changed PEP | 3 | 4.1 |
| Changed terminology of PEP§ | 4 | 5.4 |
| Unplanned analyses involving PEPs | | |
| Total No. of reports with unplanned analyses | 31 | 41.9 |
| Total No. of unplanned analyses | 52 | |
| Labeling of unplanned analyses in the reports (n = 31)‖ | | |
| Unplanned, post hoc, or ad hoc | 8 | 25.8 |
| Labeling other than unplanned/post hoc/ad hoc¶ | 8 | 25.8 |
| No specific labeling | 19 | 61.3 |

Abbreviation: PEP, primary end point.

*Some studies had multiple planned PEPs.

†Time-to-event end points included colostomy-free survival, disease-free survival, disease-free interval, event-free survival, progression-free survival, relapse-free survival, and time to progression.

‡Outcome was positive if the study reported to have met its PEP and negative if the study failed to meet its PEP.

§Two different terms were used for the end point in the protocol and the report, but the definition of the end points was similar (eg, disease-free survival in protocol, progression-free survival in report).

‖Some studies had more than one unplanned analysis, labeled under the same or different categories.

¶Labeled exploratory, planned, secondary, additional, correlative, or retrospective.

Reporting of Nonprimary End Points

Of the 579 nonprimary end points that were planned and specified in protocols (median, seven per trial; range, 0 to 23 per trial), only 373 (64.4%) were reported (median, five per trial; range, 0 to 16 per trial; Table 2). Only 19 studies (25.7%) reported all of their planned nonprimary end points. The most common planned nonprimary end points that were not reported were related to biomarkers (19.4%), quality-of-life measures (17.4%), and time-to-event end points (16.0%; Data Supplement).
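The proportions quoted above follow directly from the counts. The short check below uses only figures stated in the text (579 planned, 373 reported, 74 trials, 19 trials with complete reporting); the variable names are illustrative.

```python
planned = 579          # planned nonprimary end points across all protocols
reported = 373         # planned nonprimary end points that were reported
trials = 74            # trials in the cohort
fully_reported = 19    # trials that reported every planned nonprimary end point

not_reported = planned - reported
pct_reported = round(100 * reported / planned, 1)
pct_not_reported = round(100 * not_reported / planned, 1)
pct_trials_fully_reported = round(100 * fully_reported / trials, 1)
mean_planned_per_trial = round(planned / trials, 1)
mean_not_reported_per_trial = round(not_reported / trials, 1)
```

The 206 nonreported end points correspond to roughly one in three planned nonprimary end points, or about 2.8 per trial on average.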

Table 2.

Reporting and Analyses of NPEPs in Randomized Trials

| Variable | Protocol (N = 74) | Report (N = 74) | % |
|---|---|---|---|
| Planned NPEPs | | | |
| Total No. of planned NPEPs | 579 | 373 | |
| Median (range) | 7 (0-23) | 5 (0-16) | |
| Mean planned NPEPs per study | 7.8 | 5.1 | |
| Planned NPEPs not reported | | | |
| Total No. of planned NPEPs not in reports | | 206 | |
| Median (range) | | 2 (0-12) | |
| Mean planned NPEPs not reported per study | | 2.8 | |
| Studies with all planned NPEPs in report | | 19 | 25.7 |
| Studies with > 3 planned NPEPs not in report | | 25 | 33.8 |
| Studies with > 5 planned NPEPs not in report | | 12 | 16.2 |
| Unplanned end points in the reports | | | |
| Total No. of reports with unplanned end points | | 28 | 37.8 |
| Total No. of unplanned end points | | 65 | |
| Mean unplanned end points per trial | | 2.3 | |
| Labeling of unplanned end points (n = 65) | | | |
| Unplanned, post hoc, or ad hoc | | 2 | 3.1 |
| Labeling other than unplanned/post hoc/ad hoc* | | 11 | 16.9 |
| No specific labeling | | 52 | 80.0 |
| Unplanned analyses involving planned NPEPs† | | | |
| Total No. of reports with unplanned analyses | | 19 | 25.7 |
| Total No. of unplanned analyses | | 33 | |
| Labeling of unplanned analyses in the reports (n = 19)‡ | | | |
| Unplanned, post hoc, or ad hoc | | 5 | 26.3 |
| Labeling other than unplanned/post hoc/ad hoc* | | 5 | 31.6 |
| No specific labeling | | 9 | 47.4 |

Abbreviation: NPEP, nonprimary end point.

*Labeled exploratory, planned, secondary, additional, correlative, or retrospective.

†Unplanned analyses included subgroup, multivariable, and adjusted population analyses.

‡Some studies had more than one unplanned analysis, labeled under the same or different categories.

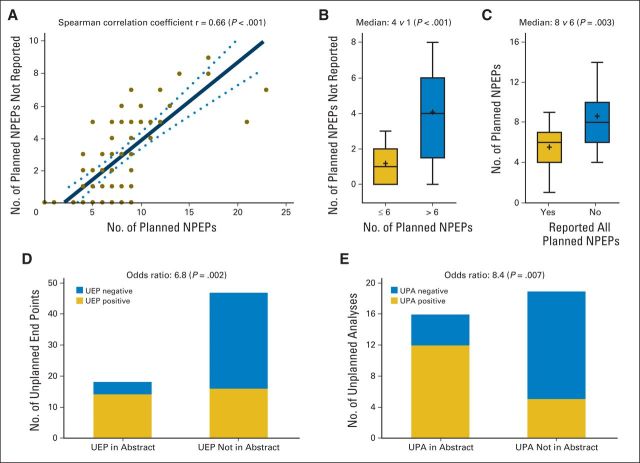

There was a significant positive correlation between the number of planned and the number of nonreported nonprimary end points (Spearman r = 0.66; Fig 2A), such that trials with greater than six planned nonprimary end points had more nonreported nonprimary end points than trials with six or fewer planned nonprimary end points (median, 4 v 1; P < .001; Fig 2B). Conversely, trials with nonreported nonprimary end points had a larger number of planned nonprimary end points than trials that reported all of their planned nonprimary end points (median, 8 v 6; P = .003; Fig 2C). Studies that reported all planned nonprimary end points did not differ significantly with regard to study size, study outcome, study sponsor, or study year from studies that did not report one or more planned nonprimary end points (Data Supplement).

Fig 2.

Reporting of planned nonprimary end points (NPEPs), unplanned end points (UEPs), and unplanned analyses (UPAs) in randomized trials. (A) Positive correlation between the number of planned NPEPs and unreported NPEPs. (B) Studies with greater than six NPEPs had more unreported NPEPs than studies with six or fewer NPEPs. (C) Conversely, studies with unreported NPEPs had larger numbers of planned NPEPs than studies that reported all of their NPEPs. (D and E) A higher number of positive UEPs and UPAs than negative UEPs and UPAs were reported in abstracts.
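The rank correlation in panel A can be illustrated with a short standard-library sketch of Spearman's coefficient, computed as the Pearson correlation of average ranks so that ties are handled. The per-trial counts below are hypothetical, because the trial-level data are in the Data Supplement and are not reproduced here.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks, assigning tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Hypothetical counts for six trials, for illustration only.
planned_npeps = [2, 5, 7, 7, 10, 15]
nonreported_npeps = [0, 1, 1, 3, 4, 9]
rho = spearman(planned_npeps, nonreported_npeps)
```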

Reporting of Unplanned End Points and Unplanned Analyses

Twenty-eight studies (37.8%) reported a total of 65 unplanned end points (Table 2). The most common unplanned end points reported were related to tumor response (40.0%), time-to-event end points (26.0%), and symptoms or toxicity (12.0%; Data Supplement). Only two (3.1%) of these 65 unplanned end points were actually clearly labeled as unplanned, post hoc, or ad hoc, whereas 52 unplanned end points (80.0%) had no specific labeling to allow recognition as unplanned (Table 2). Studies with positive unplanned end points alluded to these end points in the abstract more frequently than studies with negative unplanned end points (odds ratio, 6.8; P = .002; Fig 2D).

Thirty-one trials (41.9%) reported a total of 52 unplanned analyses that involved planned primary end points, and 19 of these trials (61.3%) did not label the analyses as unplanned (Table 1). Similarly, 19 trials (25.7%) reported a total of 33 unplanned analyses that involved planned nonprimary end points, and nine of these trials (47.4%) did not label the analyses as unplanned (Table 2). The most common of these unplanned analyses reported were subgroup analyses (49.4%) and adjusted population analyses (27.1%; Data Supplement). Studies with positive unplanned analyses were more likely than studies with negative unplanned analyses to mention these in abstracts (odds ratio, 8.4; P = .007). Studies that reported unplanned end points or unplanned analyses did not differ substantially from studies that did not report unplanned end points or analyses with regard to study size, study outcome, study sponsor, or study year (Data Supplement).
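An odds ratio of this kind comes from a 2 × 2 table that crosses the direction of the result (positive or negative) with whether the result was mentioned in the abstract. The sketch below computes the simple cross-product ratio. The counts are hypothetical, because the underlying tables are not given in the text, and the text does not say which estimator was used (statistical packages sometimes report a conditional estimate that differs slightly from the cross-product ratio).

```python
def odds_ratio(a: int, b: int, c: int, d: int) -> float:
    """Cross-product odds ratio for the 2x2 table [[a, b], [c, d]].

    a: positive result, mentioned in abstract
    b: positive result, not mentioned in abstract
    c: negative result, mentioned in abstract
    d: negative result, not mentioned in abstract
    """
    if b == 0 or c == 0:
        raise ValueError("odds ratio is undefined when b or c is zero")
    return (a * d) / (b * c)


# Hypothetical counts, for illustration only.
example_or = odds_ratio(12, 8, 3, 12)
```

With these made-up counts, the odds of appearing in the abstract are 12/8 for positive results and 3/12 for negative results, which gives a ratio of 6.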

DISCUSSION

The decision by major journals to provide access to the protocols for published reports of randomized trials promoted transparency of clinical trial reporting and was expected to prevent selective reporting of outcomes. Our results indicate that a small fraction of randomized trials reported primary end points different from those specified in the protocol. A third of all planned nonprimary end points in randomized trials were not reported, and only a quarter of all trials reported all of the planned nonprimary end points. We also found that many trials reported unplanned end points (38%) and unplanned analyses (47%) and that most of these reports had no labeling to identify these outcomes as unplanned. Studies preferentially cited the statistically significant unplanned outcomes in the abstracts. Contrary to prior studies, our analysis does not support a greater bias within industry-sponsored research with regard to selective outcome reporting.11,12

Prior studies that compared protocols with published reports to show evidence of selective reporting have suffered from selection biases, because the protocols used were either part of a selected registry or were solicited or provided for the purpose of the study.2,9,13–15 This is the first study, to our knowledge, to use the unrestricted public access to protocols to conduct a transparent and reproducible analysis of the incidence of selective reporting in a cohort of randomized published trials.10 For this reason, we believe that, compared with available research, this study may better represent the incidence of selective reporting of outcomes in the current literature. Compared with a meta-analysis of studies that investigated selective reporting of outcomes before the era of public access to protocols, our study shows a decline in selective reporting of primary end points (12% v 47%; P < .001) but a similar incidence of selective reporting of nonprimary end points (unreported planned nonprimary end points, 74% v 86%; P = .22; Data Supplement).2

The primary limitation of our study is that it includes a cohort of trials restricted to a single disease area and published in three high-impact journals. These journals may be more likely to publish higher-profile and, possibly, more positive trials; therefore, these trials may not reflect the larger body of published literature. Also, although we did not find any difference in selective reporting between positive and negative trials within this cohort of studies (Data Supplement), it is possible that assessment of reports published in other fields and journals may lead to different results. We believe that our observations about selective reporting are not unique to oncology trials but instead reflect medical reporting in general. Another potential drawback of the study is that it is restricted to the protocol documents provided to the journals by the investigators; only 55% of trials had complete protocols, whereas others provided redacted protocols. However, there was no difference in reporting of unplanned end points (P = .34) or unplanned analyses (P = .81) between these groups of reports. This suggests that selective reporting was not attributable to a lack of information in the version of the protocol provided. Because the purpose of appending these protocols to reports is to provide adequate information for the appraisal of results, we believe that such documents should be comprehensive and up to date. Our analysis was also limited to the primary report of the randomized trial. Investigators may publish other outcomes in future reports, and, therefore, we may be overestimating selective reporting problems. However, we have taken a conservative estimate and given credit to investigators for any mention of a planned outcome in the report. Conversely, some investigators of these same trials may report unplanned outcomes in subsequent reports.

Because randomized trials are level-I evidence, they should be held to the highest standards of conduct and reporting. Despite the CONSORT guidelines, mandatory registration of trials, and the practice of protocol disclosure, selective reporting of outcomes remains an ongoing concern. The increased likelihood of publication of statistically significant results influences authors to spin outcomes (eg, suppress nonsignificant outcomes and highlight or misrepresent significant outcomes) to render the data more attractive.16,17 The reasons for the persistence of selective reporting, despite the availability of the protocol documents, are likely multifactorial and, in our opinion, reflect the challenges of reviewing lengthy protocols, the lack of protocol clarity with regard to the analysis of each end point, the internal inconsistencies within protocols caused by the replication of data across multiple protocol sections, and the impracticality of reporting a large number of secondary objectives.

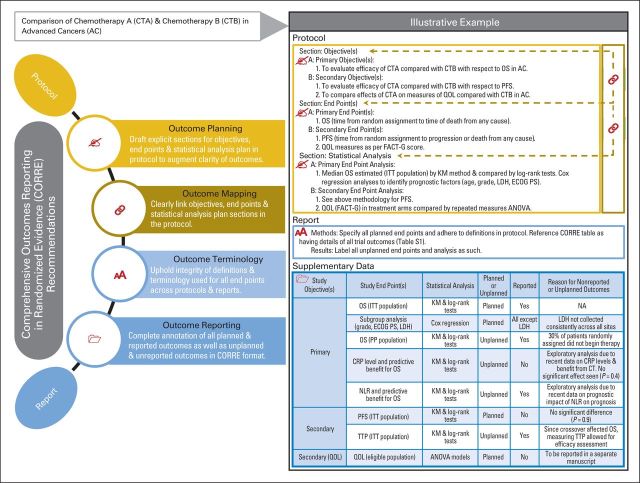

To overcome these challenges, we propose a CORRE (Comprehensive Outcomes Reporting in Randomized Evidence) initiative that involves systematic recommendations (Fig 3, with illustrative example) aimed toward ensuring consistent and comprehensive reporting of outcomes in randomized trials. Although resources such as public registries and results databases exist and are vital to the process, they can sometimes be cumbersome to navigate and are not readily accessible to readers (ie, end users).18–21 Furthermore, the compliance with reporting of results to these databases has been suboptimal.22 The CORRE initiative allows for a readily available supplementary section that provides a quick review of outcome measures and their statuses. These recommendations would add to the existing standardized guidelines for drafting protocols (eg, the Standard Protocol Items: Recommendations for Interventional Trials [SPIRIT] statement) and defining end points (eg, the Standardized Definitions for Efficacy End Points [STEEP] proposal).23–26

Fig 3.

Recommendations for Comprehensive Outcomes Reporting in Randomized Evidence (CORRE). CORRE table (as a supplementary table) should include a list of all outcomes analyzed along with their reporting statuses, reasons for not reporting outcomes, and exploration of unplanned outcomes. AE, adverse events; ANOVA, analysis of variance; CMH, Cochran-Mantel-Haenszel test; CR, complete response; CRP, C-reactive protein; CT, chemotherapy; CTCAE, Common Terminology Criteria for Adverse Events; ECOG PS, Eastern Cooperative Oncology Group performance status; FACT-G, Functional Assessment of Cancer Therapy–General Scale; ITT, intention-to-treat; KM, Kaplan-Meier; LDH, lactate dehydrogenase; NA, not applicable; NLR, neutrophil-lymphocyte ratio; ORR, objective response rate; OS, overall survival; PD, progressive disease; PFS, progression-free survival; PP, per-protocol; PR, partial response; QOL, quality of life; SD, stable disease; TTP, time to progression.

During the review, considerable ambiguity of definitions and terminology for end points was noted. In addition, the objectives section did not mirror the end point section in 31% of protocols. Such practices can cause confusion for readers.27 We therefore recommend that investigators include distinct objectives, end points (or a merged objective/end point section), and statistical analysis sections in protocols; ensure a clear link between these sections; and safeguard the integrity of definitions and terminology used for outcome reporting (Fig 3).

Finally, we detected a high incidence of nonreported outcomes and unplanned outcomes, the majority of which were without specific labeling that identified them as unplanned. For a precise interpretation of results, end users need to know what outcomes were planned, unplanned, or unreported.28 The occurrence of multiple end points, subgroup analyses, significance tests, and under-reported comparisons is fairly prevalent in randomized trials.29–35 End users need to be aware of these, because they can result in a high probability of spurious positive findings.31,32,34 Also, although the absence of evidence is not evidence of absence, the failure to report end points obscures potentially important true-negative findings.36,37 We recommend the use of a mandated supplementary table (Fig 3) for all randomized clinical trials that would list all planned end points and unplanned end points and analyses, including the status of each outcome at the time of report publication. The CORRE table is designed to streamline outcomes reporting and to facilitate full disclosure between authors and end users with regard to all analyses performed. Furthermore, this is an efficient platform for sharing the maximum amount of information with end users to aid accurate translation of results and ancillary research.20 The CORRE table will also act as a reference for the reviewers of these articles to match reported data to planned data. Although the potential scientific knowledge gained from exploratory unplanned analyses should not be undervalued and is critical to discovery, these are almost always hypothesis generating and, therefore, should be appropriately labeled in reports to allow distinction from confirmatory analyses.
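As a rough illustration of how such a table could be represented and checked, the sketch below models one row per outcome with its planned status, reporting status, and reason for nonreporting. The class names, field names, status wording, and example rows are assumptions made for illustration; they are not the column layout of Fig 3.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    REPORTED = "reported"
    NOT_REPORTED = "not reported"


@dataclass(frozen=True)
class OutcomeRow:
    """One row of a CORRE-style outcomes table (hypothetical fields)."""
    name: str
    planned: bool
    status: Status
    reason_not_reported: Optional[str] = None


def check_rows(rows: list[OutcomeRow]) -> list[str]:
    """Flag nonreported outcomes that lack a stated reason."""
    problems = []
    for row in rows:
        if row.status is Status.NOT_REPORTED and not row.reason_not_reported:
            problems.append(f"{row.name}: no reason given for not reporting")
    return problems


# Hypothetical rows, for illustration only.
rows = [
    OutcomeRow("overall survival", planned=True, status=Status.REPORTED),
    OutcomeRow("quality of life", planned=True, status=Status.NOT_REPORTED,
               reason_not_reported="data immature"),
    OutcomeRow("biomarker analysis", planned=True, status=Status.NOT_REPORTED),
    OutcomeRow("response rate", planned=False, status=Status.REPORTED),
]
problems = check_rows(rows)
unplanned = [row.name for row in rows if not row.planned]
```

A reviewer or journal system could run a check of this kind to confirm that every planned outcome is accounted for and that every unplanned outcome is identifiable as such.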

The implications of selective reporting of outcomes are noteworthy. Lack of information or misinterpretation of outcomes can result in wasteful duplication, misdirected research, and suboptimal or even potentially harmful patient care. The CORRE directives are aimed toward improving the quality of outcomes reporting by enhancing clarity, completeness, and transparency of reporting outcomes to discourage investigators from under-reporting, over-reporting, or misreporting outcomes. We propose the CORRE table as a simple and feasible tool for systematic dissemination of results, in keeping with recent strategies proposed by the National Institutes of Health and the Institute of Medicine.26,38 The CORRE table will enhance the current system of outcomes reporting in clinical trials, including clinical trials registration and results submission to publicly available registries.26 The CORRE table is a preliminary step that we propose toward improving outcomes reporting in randomized trials. However, additional effort toward refining this tool, including a review from a committee of experts regarding a uniform framework, is needed for successful execution.

In conclusion, despite the unrestricted public access to protocols of randomized trials, selective reporting continues to be a major concern in the reporting of clinical trials. Therefore, added initiatives, such as the CORRE recommendations discussed here, are necessary to further minimize the occurrence of selective reporting in randomized trials. In the face of challenges associated with publishing every finding, the CORRE format can ensure dissemination of unabridged information, thereby making sure that costly research endeavors facilitate true evidence-based medicine.

Footnotes

Authors' disclosures of potential conflicts of interest are found in the article online at www.jco.org. Author contributions are found at the end of this article.

AUTHOR CONTRIBUTIONS

Conception and design: Kanwal Pratap Singh Raghav, Lee M. Ellis, James Abbruzzese, Michael J. Overman

Financial support: Michael J. Overman

Administrative support: Michael J. Overman

Collection and assembly of data: Kanwal Pratap Singh Raghav, Sminil Mahajan, Michael J. Overman

Data analysis and interpretation: All authors

Manuscript writing: All authors

Final approval of manuscript: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

From Protocols to Publications: A Study in Selective Reporting of Outcomes in Randomized Trials in Oncology

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or jco.ascopubs.org/site/ifc.

Kanwal Pratap Singh Raghav

No relationship to disclose

Sminil Mahajan

No relationship to disclose

James C. Yao

Consulting or Advisory Role: Novartis, Ipsen, AAA, Eisai

Research Funding: Novartis

Brian P. Hobbs

No relationship to disclose

Donald A. Berry

Employment: Berry Consultants

Leadership: Berry Consultants

Stock or Other Ownership: Berry Consultants

Consulting or Advisory Role: Berry Consultants

Travel, Accommodations, Expenses: Berry Consultants

Rebecca D. Pentz

No relationship to disclose

Alda Tam

Stock or Other Ownership: Novartis

Research Funding: AngioDynamics

Waun K. Hong

No relationship to disclose

Lee M. Ellis

Consulting or Advisory Role: Genentech, Eli Lilly, Celgene, OncoMed Pharmaceuticals

James Abbruzzese

Honoraria: Daiichi Sankyo, Halozyme Therapeutics, Aduro Biotech, Clarion Healthcare Consulting, Celgene

Consulting or Advisory Role: Acerta Pharma, Celgene, Halozyme Therapeutics, GeneKey, Progen, Aduro Biotech, Clarion Healthcare Consulting, Merck Sharp & Dohme

Michael J. Overman

Stock or Other Ownership: Novartis (I)

Consulting or Advisory Role: Sirtex Medical, Roche

Research Funding: Celgene, AstraZeneca, MedImmune, Bristol-Myers Squibb, Amgen, Roche
