Abstract

Previous studies have revealed the impact of emotion on conflict processing. The present study was conducted to investigate whether cognitive control in the affective domain is also affected by emotion. Emotional face-word and body-word Stroop tasks were explored and contrasted, and both behavioural and electrophysiological measures were recorded. Behavioural results showed that both tasks replicated previous robust interference effects. At the physiological level, the two tasks showed dissociable neural activity in the early attention and perception stages. It was also found that the face-word task evoked more pronounced N1 and P2 amplitudes than the body-word task. However, the two tasks evoked comparable N450 amplitudes. At later processing stages, positive slow potentials were modulated by target emotion and congruency. In addition, time-frequency analyses also revealed that the face-word task induced enhanced theta activity compared to the body-word task at both early and later stages of processing. The present findings provide support for the dual competition framework and suggest the dynamic modulation of emotion on cognitive control in the affective domain.

Cognitive control is regarded as a key function of humans, serving to maintain normal life and allow adaptation to a changing environment. Cognitive control specifically refers to the ability to switch flexibly between appropriate and prepotent actions when faced with conflicting action tendency1,2. Thus, conflict detection and resolution is the core of cognitive control, and has already been explored for decades. Based on the influential conflict monitoring theory2,3,4, once a conflict is detected, the cognitive system will boost control efficiency, aiming to resolve subsequent trials. Indeed, several imaging studies have found that activity in the dorsal anterior cingulate cortex is correlated with conflict detection, whereas activity in the dorsolateral prefrontal cortex is associated with pronounced cognitive control2,3,5,6.

Under various circumstances, salient emotional stimuli may gain priority access to the cognitive processing system, and consequently interfere with an individual’s engagement in goal-directed behaviour. The affective modulation of conflict processing has been investigated extensively7,8,9,10,11,12,13,14,15. One approach to investigating the affective modulation of conflict processing is to explore how emotion exerts influence over performance on a cognitive control task. For example, on the Stroop color-word trial, the interference effect was larger when preceded by a negative emotional picture compared to a positive emotional picture16. In another recent study, participants were presented with positive, negative, and neutral emotional sounds prior to a Stroop colour-word trial in separate blocks15. The results showed that response latency on the Stroop colour-word trial was delayed when preceded by negative sound compared to positive and neutral sounds. In contrast to these studies, which have explored the direct and explicit influence of emotion on conflict processing, other studies have attempted to probe the implicit effect of emotion on conflict processing7,8,9,10,11. For example, in an adapted Flanker task, participants were instructed to identify the colour of the ink of the centre word while ignoring two flanker words above or below, for words with negative, positive or neutral valence. Results from studies using this task have shown that both negative and positive words speed up conflict processing.

Although existing evidence reveals that emotions affect conflict processing, an important yet unresolved issue is whether emotion also influences cognitive control in the affective domain. For example, in the emotional Stroop test, which is adapted from the classic colour-word Stroop paradigm, participants are required to judge emotion as expressed by a facial or body expression in the presence of inhibitory influences from a simultaneously presented emotion-laden word17,18,19,20,21,22,23,24,25. Imaging evidence shows that resolution of emotional Stroop conflict is associated with activity in the rostral anterior cingulate cortex, and that resolution of emotional conflict occurs via top-down inhibition of the amygdala by the rostral cingulate cortex17,18. Similar behavioural and electrophysiological data have been obtained using an emotional face-word and body-word task. However, these results do not necessarily imply that emotion exerts an influence on conflict processing, due to the fact that the goals of such studies were to explore the behavioural and neural dynamics of emotional conflict processing rather than the emotional modulation of conflict processing. Moreover, emotional conflict processing and emotional modulation of conflict processing have never been studied simultaneously. A study that employs the same paradigm in one experiment and manipulates the target is needed to clarify the emotional modulation of conflict processing within the affective domain. To this end, in the present study, both the emotional face-word and body-word Stroop tasks were explored.

Imaging approaches provide the exact brain structure that is involved in conflict detection and resolution, whereas behavioural measures capture the results of multiple sequential sub-stage processing. Furthermore, the high time-resolution of event-related potentials (ERPs) would provide the fine detail of each individual sub-stage processing. To date, two main ERP components have been reported for emotional Stroop tasks: the N450, which is related to conflict resolution, and a later stage slow potential14,19,20,21,22,25,26. The conflict N450 is a negative deflection in the time-locked ERP with frontal-central scalp distribution peaking around 400–500 ms after stimulus onset and index conflict detection, whereas the slow potential has a central-parietal scalp distribution at a later processing stage, which might be associated with conflict resolution27. It is plausible that cognitive control processing involves multiple successive stages, from early emotional target perception to later stage conflict detection and resolution. In the early stages, N1 and P2 are reliable neural markers of emotion and attention-related and perceptual processing28,29,30,31. Several studies have provided evidence that N1 and P2 can be modulated by the threat level of emotional stimuli. For example, high-threat stimuli evoked higher N1 and P2 amplitudes than low-threat stimuli32,33,34,35. With respect to the threat level of facial and body expressions, there is evidence that facial expressions are more threatening than body expressions, because facial expressions lead to more pronounced activity in the amygdala than threatening body expressions36,37,38,39. Based on these findings, it is possible that threatening facial expressions would evoke larger N1 and P2 amplitudes than body expressions in the early emotion perception stage. However, in the early stage where high cognitive control processing is not accessible, these two components may not be modulated by emotional congruency.

The onset of a particular type of stimulus can also induce event-related spectral perturbations (ERSPs) and oscillatory activity. Numerous prior cognitive control studies40,41,42,43,44,45,46,47 have found that specific bands of ERSP activity, such as increased theta activity, may be associated with a greater need for executive control under conflictive conditions. Our previous investigation19 also found augmented theta oscillations for the incongruent but not congruent condition in an emotional body-word task. We speculate that resolution of emotional conflict under the face-word task may also entail enhanced theta activity.

In summary, the purpose of the present study was to test whether the affective nature of the target influences emotional conflict processing. Based on the findings of our previous study, our hypothesis is as follows: (i) Longer response latencies and more errors would be found under the incongruent condition in both tasks, (ii) N1 and P2 would be modulated by stimuli type instead of emotional congruency, (iii) N450 and slow potentials (SP) would be modulated by emotional congruency rather than stimuli type, and (iv) enhanced theta oscillation activity underlying conflictive conditions for both tasks would be observed.

Methods

Participants

Twenty-seven undergraduate students took part in the formal ERP study. All participants were right-handed, had normal or corrected-to-normal vision, and had no history of psychiatric illness or neurological problems. Handedness was assessed through the Edinburgh Handedness Inventory. Furthermore, none of these subjects had participated previously in a similar ERP study. In this study, five participants were eliminated due to chance level of behavioural performance. The remaining participants were aged 18 to 26 years with a mean age of 23.6 years. The study was approved by the local Southwest University ethics committee. In accordance with the approved guidelines, written informed consent was obtained from the participants prior to conducting pilot or formal experiments. All methods were carried out in accordance with the approved guidelines.

Materials and Procedure

In order to equate the body-word and face-word Stroop tasks, both tasks included negative angry and sad stimuli only. The body-word stimuli used in this study were identical to those used in our previous study. All body stimuli from the Bodily Expression Action Stimulus Test were subjected to a 4000-s forced-choice judgment. The resulting data showed that angry and sad bodies are better recognized than happy and fearful bodies. To minimize the influence of accuracy on behavioural data, 20 angry and 20 sad body-expression images of statistically equivalent accuracy were selected. These body images were compounded with Chinese words using Photoshop. The two Chinese characters, “ ” (“angry”) and “

” (“angry”) and “ ” (“sad”), were superimposed across the body in red colour in 28-point Times New Roman font. The position of the word was fixed on the chest of the body in all the body-word compound stimuli. A total of 80 compound images, in which 20 images for each of the four possible combinations of body-emotion and word-emotion, were generated. The four stimulus types (angry body-angry word, angry body-sad word, sad body-angry word, and sad body-sad word) then were divided into congruent (body expression matched emotional word) or incongruent (body expression did not match emotional word) categories. The incongruent category was expected to induce conflict in emotional conflict processing. For the face-word Stroop task, the stimuli consisted of 10 pictures of angry faces (5 male, 5 female) and 10 pictures of sad faces (5 male, 5 female) selected from the Chinese affective picture system. The two Chinese characters, “

” (“sad”), were superimposed across the body in red colour in 28-point Times New Roman font. The position of the word was fixed on the chest of the body in all the body-word compound stimuli. A total of 80 compound images, in which 20 images for each of the four possible combinations of body-emotion and word-emotion, were generated. The four stimulus types (angry body-angry word, angry body-sad word, sad body-angry word, and sad body-sad word) then were divided into congruent (body expression matched emotional word) or incongruent (body expression did not match emotional word) categories. The incongruent category was expected to induce conflict in emotional conflict processing. For the face-word Stroop task, the stimuli consisted of 10 pictures of angry faces (5 male, 5 female) and 10 pictures of sad faces (5 male, 5 female) selected from the Chinese affective picture system. The two Chinese characters, “ ” (“angry”) and “

” (“angry”) and “ ” (happy), were superimposed on the faces in red colour in 28-point Times New Roman font. The words and facial expressions were either congruent (e.g.,“

” (happy), were superimposed on the faces in red colour in 28-point Times New Roman font. The words and facial expressions were either congruent (e.g.,“ ” superimposed on an angry face picture) or incongruent (e.g.,“

” superimposed on an angry face picture) or incongruent (e.g.,“ ” superimposed on a happy face picture). Following ERP measurements, participants were required to rate the angry and sad expressions in terms of arousal and pleasantness using a 9-point scale: 1 (most pleasant) to 9 (most unpleasant); arousal: 1 (least arousing) to 9 (most arousing). Results showed that angry expressions (5.15 ± 0.21) were more arousing than sad expressions (4.68 ± 0.23), F (1, 21) = 8.346, p < 0.01,

” superimposed on a happy face picture). Following ERP measurements, participants were required to rate the angry and sad expressions in terms of arousal and pleasantness using a 9-point scale: 1 (most pleasant) to 9 (most unpleasant); arousal: 1 (least arousing) to 9 (most arousing). Results showed that angry expressions (5.15 ± 0.21) were more arousing than sad expressions (4.68 ± 0.23), F (1, 21) = 8.346, p < 0.01,  = 0.284; and body expressions (5.39 ± 0.24) were more arousing than facial expressions (4.44 ± 0.30), F (1, 21) = 6.660, p < 0.05,

= 0.284; and body expressions (5.39 ± 0.24) were more arousing than facial expressions (4.44 ± 0.30), F (1, 21) = 6.660, p < 0.05,  = 0.241. Angry expressions (3.13 ± 0.17) were not significantly more or less pleasant than sad expressions (3.09 ± 0.17), F (1, 21) = 0.073, p = 0.789; body expressions (3.4 ± 0.27) also were not significantly more or less pleasant than facial expressions (2.8 ± 0.27), F (1, 21) = 2.141, p = 0.158.

= 0.241. Angry expressions (3.13 ± 0.17) were not significantly more or less pleasant than sad expressions (3.09 ± 0.17), F (1, 21) = 0.073, p = 0.789; body expressions (3.4 ± 0.27) also were not significantly more or less pleasant than facial expressions (2.8 ± 0.27), F (1, 21) = 2.141, p = 0.158.

All compound stimuli were programmed and presented using E-Prime tools on a Dell 19-inch monitor. Participants were seated in a quiet room with dim light at a distance of 80 cm from the screen. The viewing angle of the framed body-word compound stimuli on the screen extended 9.87° vertically and 6.58° horizontally. Participants completed the body-word and face-word emotional Stroop tasks in two separate sessions. Participants were instructed to identify the emotion of the body or facial expression and ignore the meaning of the superimposed word. Session order was counterbalanced across participants. Subjects were instructed to respond as quickly and accurately as possible by pressing either “f” or “j” on the keyboard to indicate the expression of the image. Key allocations were counterbalanced across participants. All 80 compound body-word and 80 face-word stimuli were presented thrice and equally separated into 3 blocks, generating 240 trials in total for each task. Each block consisted of an equal number of congruent and incongruent trials. In each block, a trial began with a 500-ms fixation display, which was followed by a blank screen with a blank display that ranged from 300 to 600 ms. Then, the target body-word (or face-word) compound stimulus appeared at the centre of the screen for 1000 ms; participants were required to respond within this time window. The inter-trial interval display following each stimulus presentation was set randomly between 1200 and 1800 ms. Participants completed 20 practice trials before the start of each session. Participants were instructed to avoid blinking and other eye movements.

EEG recording

Electroencephalography (EEG) was recorded from each participant at 64 sites on the scalp using Ag/AgCl electrodes mounted on an elastic cap (Brain Products GmbH, Gilching, Germany) with on-line references to the FCz electrode site. Vertical and horizontal electro-oculograms were recorded using electrodes placed below the right eye and from the right orbital rim, respectively. All inter-electrode impedances were maintained below 5 kΩ. Signals were amplified using a 0.01–100 Hz band-pass filter and continuously sampled at 500 Hz/channel.

EEG analysis

Time-domain analysis included pre-processing using EEGLAB (Version 12.0.2.6b), an open toolbox running under the MATLAB environment. For time-domain analysis, all EEG signals were re-referenced off-line to TP9 and TP10 (average mastoid reference). An artifact subspace reconstruction algorithm was performed on continuous data to remove flat-line channels, eye-movement-related activity, low-frequency drifts, noisy channels, short-time bursts, and incompletely repaired segments from the data. Then, pre-processed clean EEG data were band-pass filtered to a range of 0.05 to 40 Hz, and epoched into a period of 1200 ms (200 ms baseline and 1000 ms post-stimulus onset). Signals were averaged across trials and time-locked to the onset of the compound stimuli separately for the congruent and incongruent categories for each task. Trials associated with erroneous responses and trials with a peak-to-peak deflection exceeding ±80 μV were excluded from averaging in both tasks. More than 80 and 100 trials were available for each subject for the face-word and body-word task analyses, respectively.

To assess dynamic changes in spectral power over time, raw EEG data were analysed in a similar manner to the ERP data as described above, except that data were band-pass filtered to a range of 1 to 40 Hz and epoched into a period of 1800 ms (600 ms pre-stimulus onset baseline and 1200 ms post-stimulus onset). To assess dynamic changes in spectral power over time, ERSP data were estimated and calculated using the newtime() function embedded in the EEGLAB toolbox. A Hanning tapered zero-padded fast Fourier transform (FFT) was applied to 1800-ms epoch data (900 frames per epoch). The window size of the moving FFT was set to 256 ms. For each condition (face-word congruent, face-word incongruent, body-word congruent, and body-word incongruent), the resulting estimated ERSP values were averaged across all trials and then converted to log power in decibels (dB). The baseline-normalized ERSP was obtained by subtracting the mean baseline (−400 ms to −100 ms pre-stimulus onset) power spectrum from each estimated spectrum, resulting in a 20 × 200 two-dimensional matrix representing time frequency for each electrode site and condition (20 frequencies from 0.97 to 31 Hz at each of 200 time points from −471 ms pre-stimulus onset to 1069 ms post-stimulus onset).

ERSP modulation was estimated in the following four steps:

(1) Based on our previous research, theta oscillation was more pronounced over the frontal midline area. We then plotted theta activity as a function of the post-stimulus onset time at Fz, F1, F2, F3, F4, FCz, FC1, FC2, FC3, and FC4.

(2) Judging from the time courses of theta power in the present study, the theta oscillation appeared to be more pronounced over two time windows: the early time window (0 to 300 ms) and the late (400 to 800 ms) time window. Based on these observations, we calculated the topographical distribution averaged across participants for theta activity in the intervals 0 to 300 ms and 400 to 800 ms, and confirmed that the enhancement of theta activity indeed originated in the frontal and frontal-central areas.

(3) The mass-univariate approach implemented in the statcond function of the EEGLAB toolbox was used to identify the time window in the ERSP values in which theta power was significantly different between the face-word and body-word tasks or between the incongruent and congruent conditions. A paired t-test was conducted on averaged time-frequency points including Fz, F1, F2, F3, F4, FCz, FC1, FC2, FC3, FC4, Cz, C1, C2, C3, and C4. For multiple comparisons, P < 0.05 was used to establish preliminary results of interest to prevent a too-conservative result from our false discovery rate (FDR) correction procedure. For theta band activity (3.9 to 7.8 Hz), the early time window (0–300 ms) and time points from 400 to 800 ms post-stimulus-onset were analysed.

(4) The resulting data showed that mean theta oscillations between 0 and 300 ms were significantly different between the face-word and body-word tasks. The mean theta oscillation between 450 and 750 ms was significantly different between congruent and incongruent conditions as well as between the face-word and body-word tasks at Fz, F1, F2, F3, FCz, FC1, FC2, and FC3. Because theta activity was more pronounced over the midline, only the traces from Fz, F1, F2, FCz, FC1, and FC2 were selected for further analysis.

Behavioural data analysis

Reaction time and accuracy of the behavioural response were analysed with a 2 (task type: body-word vs. face-word) ×2 (congruency: congruent vs. incongruent) repeated-measures analysis of variance (ANOVA).

ERP and ERSP data statistics

For ERP data analysis, the peak (baseline to peak) and latency of N1 (80–130 ms) and P2 (140–230 ms) were analysed at the following electrodes: F1, Fz, F2, FC1, FCz, and FC2. The peak (baseline to peak) and latency of N1 and P2 were subjected to a 2 (task type: body-word vs. face-word) ×2 (congruency: congruent vs. incongruent) ×6 (electrode site: F1, F2, Fz, FC1, FC2, and FCz) repeated-measures ANOVA. The mean amplitude within 400–550 ms of the N450 component was analysed at nine sites: F1, Fz, F2, C1, Cz, C2, CP1, CPz, and CP2. These nine electrodes were divided into two factors: area (frontal, central, and central-parietal) and hemisphere (left, middle, right). In the case of N450 data, a 2 (task type: body-word vs. face-word) ×2 (congruency: congruent vs. incongruent) ×3 (area: frontal, central, and central-parietal) ×3 (hemisphere: left, middle, and right) repeated-measures ANOVA was conducted. The mean amplitude within 580–700 ms of slow potentials was analysed at nine sites: FC1, FCz, FC2, C1, CZ, C2, CP1, CPz, and CP2. These nine electrodes were divided into two factors: area (frontal-central, central, and central-parietal) and hemisphere (left, middle, right). For slow potentials data, a 2 (task type: body-word vs. face-word) ×2 (congruency: congruent vs. incongruent) ×3 (area: frontal-central, central, and central-parietal) ×3 (hemisphere: left, middle, and right) repeated-measures ANOVA was conducted.

Theta-range ERSP values were averaged over the frequency from 3.9 to 7.8 Hz for the two time windows (early time window: 0 to 300 ms and the late time window: 450 to 750 ms). The resulting ERSP values were then entered into a three-way repeated-factor ANOVA: 2 (task type: body-word task vs. face-word task) × 2 (congruency: congruent vs. incongruent) ×electrode site (six levels: Fz, F1, F2, FCz, FC1, FC2) for the early (0–300 ms) and late (450–750 ms) time windows. All statistical analyses were performed using SPSS. Statistics were adjusted using the Greenhouse-Geisser epsilon correction for non-sphericity if the number of factor levels exceeded two. Uncorrected degrees of freedom and corrected p-values were reported. If applicable, main effects were followed by post hoc pairwise comparison with Bonferroni correction.

Results

Behavioural results

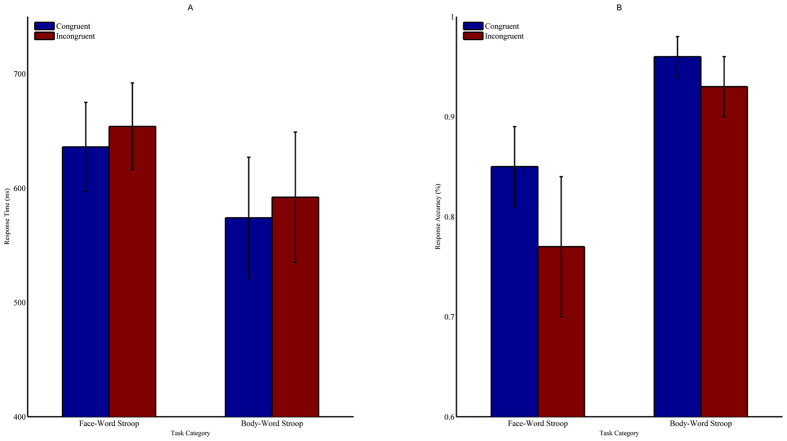

In the accuracy analysis, a significant congruency effect [F (1, 21) = 38.93, p < 0.001,  = 0.65], indicated by higher accuracy for congruent trials (90.4 ± 0.5%) compared to incongruent trials (84.8 ± 0.9%), was observed. The main effect of task type was significant [F (1, 21) = 128, p < 0.001,

= 0.65], indicated by higher accuracy for congruent trials (90.4 ± 0.5%) compared to incongruent trials (84.8 ± 0.9%), was observed. The main effect of task type was significant [F (1, 21) = 128, p < 0.001,  = 0.86], demonstrating that the body-word Stroop task (94.4 ± 0.5%) had a higher accuracy than the face-word Stroop task (80.8 ± 1.1%). The interaction between congruency and task was significant [F (1, 21) = 11.992, p < 0.01,

= 0.86], demonstrating that the body-word Stroop task (94.4 ± 0.5%) had a higher accuracy than the face-word Stroop task (80.8 ± 1.1%). The interaction between congruency and task was significant [F (1, 21) = 11.992, p < 0.01,  = 0.363]. The follow-up simple-effects test showed performance was better on the body-word task than the face-word task under both congruent and incongruent conditions (congruent condition: 95.7 ± 0.5% vs. 85 ± 1%, F (1, 21) = 82.51, p < 0.001; incongruent condition: 93 ± 0.7% vs. 76.5 ± 1.6%, F (1, 21) = 94.75, p < 0.001). Moreover, response time was faster on congruent trials (605 ± 9 ms) compared to incongruent trials (622 ± 8 ms, F (1, 21) = 16.63, p < 0.01,

= 0.363]. The follow-up simple-effects test showed performance was better on the body-word task than the face-word task under both congruent and incongruent conditions (congruent condition: 95.7 ± 0.5% vs. 85 ± 1%, F (1, 21) = 82.51, p < 0.001; incongruent condition: 93 ± 0.7% vs. 76.5 ± 1.6%, F (1, 21) = 94.75, p < 0.001). Moreover, response time was faster on congruent trials (605 ± 9 ms) compared to incongruent trials (622 ± 8 ms, F (1, 21) = 16.63, p < 0.01,  = 0.442) for trials with accurate responses. In addition, participants responded more quickly to trials on the body-word task (582 ± 11 ms) than to trials on the face-word task (645 ± 7 ms) (F (1, 21) = 42.35, p < 0.001,

= 0.442) for trials with accurate responses. In addition, participants responded more quickly to trials on the body-word task (582 ± 11 ms) than to trials on the face-word task (645 ± 7 ms) (F (1, 21) = 42.35, p < 0.001,  = 0.668) (Fig. 1).

= 0.668) (Fig. 1).

Figure 1. Behavioural responses on the emotional Stroop tasks.

Response time (A) and response accuracy (B) results for the face-word and body-word Stroop tasks. Data are represented as the mean and standard deviation.

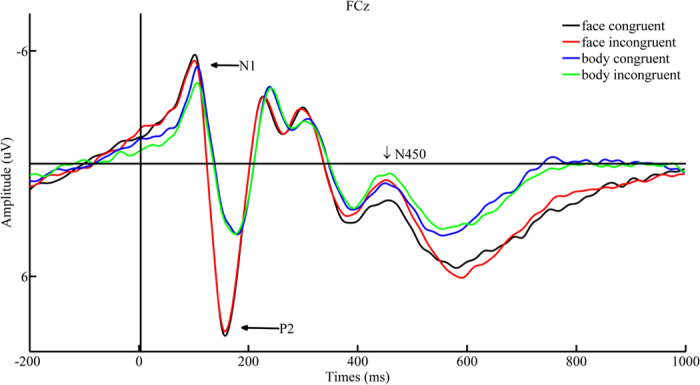

ERP results N1 effect

Analysis of the N1 amplitude revealed that the main effect of task type and congruency was significant [F (1, 21) = 58.46, p < 0.001,  = 0.736; F (1, 21) = 30.535, p < 0.001,

= 0.736; F (1, 21) = 30.535, p < 0.001,  = 0.593]. The face-word task (−6.109 ± 0.44 μV) elicited a larger N1 amplitude than the body-word task (−3.508 ± 0.288 μV), and the incongruent condition (−5.172 ± 0.348 μV) elicited a more pronounced N1 amplitude than the congruent condition (−4.445 ± 0.331 μV). No other significant main effect or interaction was found. Analysis of the N1 latency revealed that the main effect of task type was significant [F (1, 21) = 28.899, p < 0.001,

= 0.593]. The face-word task (−6.109 ± 0.44 μV) elicited a larger N1 amplitude than the body-word task (−3.508 ± 0.288 μV), and the incongruent condition (−5.172 ± 0.348 μV) elicited a more pronounced N1 amplitude than the congruent condition (−4.445 ± 0.331 μV). No other significant main effect or interaction was found. Analysis of the N1 latency revealed that the main effect of task type was significant [F (1, 21) = 28.899, p < 0.001,  = 0.579]. The face-word task (102 ± 1.995 ms) elicited a shorter N1 latency than the body-word task (107 ± 1.901 ms). No other significant main effect or interaction was observed.

= 0.579]. The face-word task (102 ± 1.995 ms) elicited a shorter N1 latency than the body-word task (107 ± 1.901 ms). No other significant main effect or interaction was observed.

P2 effect

Analysis of the P2 amplitude revealed that the main effect of task type was significant [F (1, 21) = 51.62, p < 0.001,  = 0.711]. The face-word task (10.39 ± 0.96 μV) elicited a larger P2 amplitude than the body-word task (5.32 ± 0.85 μV). No other significant main effect or interaction was observed. Analysis of the P2 latency revealed that the main effect of task type was significant [F (1, 21) = 19.559, p < 0.001,

= 0.711]. The face-word task (10.39 ± 0.96 μV) elicited a larger P2 amplitude than the body-word task (5.32 ± 0.85 μV). No other significant main effect or interaction was observed. Analysis of the P2 latency revealed that the main effect of task type was significant [F (1, 21) = 19.559, p < 0.001,  = 0.482]. The face-word task (159 ± 2.26 ms) elicited a faster P2 latency than the body-word task (172 ± 3.99 ms). No other main effect or interaction was found (Fig. 2).

= 0.482]. The face-word task (159 ± 2.26 ms) elicited a faster P2 latency than the body-word task (172 ± 3.99 ms). No other main effect or interaction was found (Fig. 2).

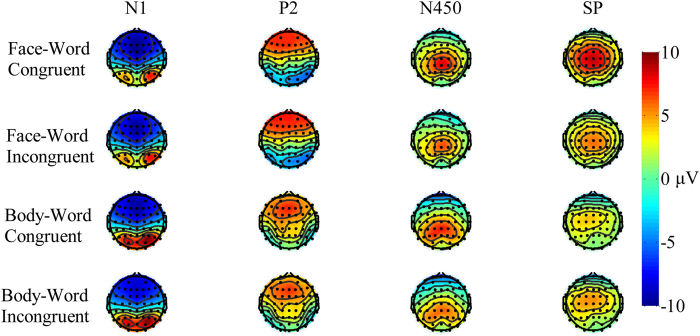

Figure 2. Grand-averaged waveforms in the frontal-central region.

Grand-averaged waveforms at the “FCz” electrode site for face-congruent (black solid line), face-incongruent (red solid line), body-congruent (blue solid line), and body-incongruent (green solid line) trials as a function of time. The X-axis represents time within a trial, where 0 indicates the onset of the target stimulus. The Y-axis represents the wave amplitude range.

N450 effect

Analysis of the mean N450 amplitude revealed that the main effect of task type was not significant [F (1, 21) = 4.112, p = 0.055], but the main effect of congruency was significant [F (1, 21) = 12.535, p < 0.01,  = 0.374]. The N450 amplitude corresponding to incongruent pairs (3.221 ± 0.538 μV) was more negative than that associated with congruent pairs (2.57 ± 0.553 μV). There was also a main effect of area [F (2, 42) = 42.936, p < 0.001,

= 0.374]. The N450 amplitude corresponding to incongruent pairs (3.221 ± 0.538 μV) was more negative than that associated with congruent pairs (2.57 ± 0.553 μV). There was also a main effect of area [F (2, 42) = 42.936, p < 0.001,  = 0.672], and post hoc Bonferroni comparison revealed that the N450 amplitude was more pronounced in the central-parietal area (3.97 ± 0.475 μV) than in the central (3.39 ± 0.58 μV) and frontal (1.328 ± 0.63 μV) areas. The main effect of hemisphere also was significant [F (2, 42) = 4.73, p < 0.05,

= 0.672], and post hoc Bonferroni comparison revealed that the N450 amplitude was more pronounced in the central-parietal area (3.97 ± 0.475 μV) than in the central (3.39 ± 0.58 μV) and frontal (1.328 ± 0.63 μV) areas. The main effect of hemisphere also was significant [F (2, 42) = 4.73, p < 0.05,  = 0.184]. Post hoc Bonferroni comparisons also revealed that the N450 amplitude was higher in the middle (3.078 ± 0.568 μV) than in the right (2.934 ± 0.544 μV) or left (2.678 ± 0.517 μV) hemispheres. No other significant effects were observed.

= 0.184]. Post hoc Bonferroni comparisons also revealed that the N450 amplitude was higher in the middle (3.078 ± 0.568 μV) than in the right (2.934 ± 0.544 μV) or left (2.678 ± 0.517 μV) hemispheres. No other significant effects were observed.

Slow Potential effect

Analysis of mean slow potential amplitude revealed a significant main effect of task type [F (1, 21) = 111.281, p < 0.01,  = 0.84]. The mean SP amplitude was more positive on the face-word task (4.73 ± 0.4 μV) than on the body-word task (2.01 ± 0.3 μV). The main effect of congruency was significant [F (1, 21) = 17.768, p < 0.05,

= 0.84]. The mean SP amplitude was more positive on the face-word task (4.73 ± 0.4 μV) than on the body-word task (2.01 ± 0.3 μV). The main effect of congruency was significant [F (1, 21) = 17.768, p < 0.05,  = 0.206]. The mean slow potential amplitude corresponding to incongruent pairs (3.2 ± 0.35 μV) was more positive than that associated with congruent pairs (3.5 ± 0.36 μV). No other significant effects were observed (Fig. 3).

= 0.206]. The mean slow potential amplitude corresponding to incongruent pairs (3.2 ± 0.35 μV) was more positive than that associated with congruent pairs (3.5 ± 0.36 μV). No other significant effects were observed (Fig. 3).

Figure 3. N1, P2, N450, and SP responses across tasks.

Topographical distribution of N1 (80–130 ms), P2 (140–230 ms), N450 (400–550), and slow potentials (SP; 580–700 ms) averaged across participants for the face-congruent, face-incongruent, body-congruent, and body-incongruent conditions. Black dots represent scalp electrode positions. Contours connect points of equal amplitude on the waves.

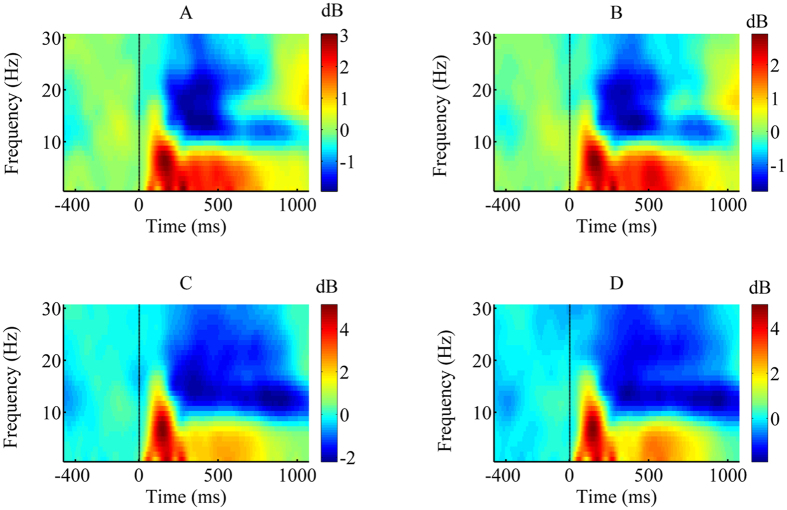

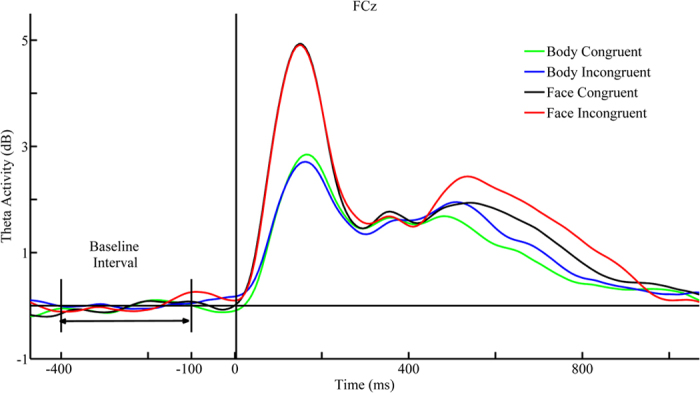

ERSP results

Analysis of the early time window revealed that the main effect of task was statistically significant [F (1, 21) = 32.934, p < 0.001,  = 0.611]. The face-word task (2.34 ± 0.22 dB) induced a more boosted theta ERSP than the body-word task (1.55 ± 0.16 dB). No other significant effects were observed for the early time window. Analysis of the later time window revealed that the main effect of task type was significant [F (1, 21) = 10.148, p < 0.01,

= 0.611]. The face-word task (2.34 ± 0.22 dB) induced a more boosted theta ERSP than the body-word task (1.55 ± 0.16 dB). No other significant effects were observed for the early time window. Analysis of the later time window revealed that the main effect of task type was significant [F (1, 21) = 10.148, p < 0.01,  = 0.326]. The ERSP of the face-word task (1.483 ± 0.17 dB) was more enhanced than that associated with the body-word task (1.07 ± 0.08 dB). The main effect of congruency was significant [F (1, 21) = 8.35, p < 0.01,

= 0.326]. The ERSP of the face-word task (1.483 ± 0.17 dB) was more enhanced than that associated with the body-word task (1.07 ± 0.08 dB). The main effect of congruency was significant [F (1, 21) = 8.35, p < 0.01,  = 0.293]. The ERSP corresponding to incongruent pairs (1.403 ± 0.128 dB) was larger than that associated with congruent pairs (1.152 ± 0.12 dB). No other significant main effects or interactions were observed for the late time window (Figs 4 and 5).

= 0.293]. The ERSP corresponding to incongruent pairs (1.403 ± 0.128 dB) was larger than that associated with congruent pairs (1.152 ± 0.12 dB). No other significant main effects or interactions were observed for the late time window (Figs 4 and 5).

Figure 4. Grand-averaged time-frequency representation in the frontal-central region.

Grand-averaged time-frequency representation (TF-R) plots at the “FCz” electrode site for face-congruent (A), face-incongruent (B), body-congruent (C), and body-incongruent (D) trials as a function of time. The X-axis represents time within a trial, where the dashed line indicates the onset of the target stimulus. The Y-axis represents the frequency range covered by our fast Fourier transform method.

Figure 5. Theta activity time courses across tasks.

Theta activity time courses extracted from the time-frequency representation (TF-R) at the FCz site for face-congruent (black solid line), face-incongruent (red solid line), body-congruent (green solid line), and body-incongruent (blue solid line) conditions. The X-axis represents time range transformed from our Fourier transform method within a trial, where the dashed line indicates the onset of the target stimulus. The Y-axis represents the theta power value covered by our fast Fourier transform method. The baseline-normalized theta event-related spectral perturbations (ERSP) was obtained by subtracting the mean baseline (−400-ms to −100-ms pre-stimulus onset) power.

Discussion

The present study aimed to examine emotional modulation of cognitive control in the affective domain by exploring emotional face-word and body-word conflict tasks. Behaviourally, both tasks replicated previous interference effects, revealing both a slower response time and higher error rate for incongruent conditions than for congruent conditions and a stronger interference effect for the face-word task than for the body-word task. ERP results revealed both common and dissociable neural activities across the two tasks: the face-word task evoked more enhanced early and later stage neural activity than the body-word task as indexed by more pronounced N1, P2, and Slow potential amplitudes for the face-word task relative to the body-word task, whereas the two tasks evoked comparable N450 amplitudes. Moreover, time-frequency representation analysis revealed that the face-word task induced more boosted theta oscillation than the body-word task at early and late stages. Based on these findings, we concluded that cognitive control in the affective domain, at least as manifested by the emotional Stroop task, can be modulated by the target emotion.

Our behavioural data are consistent with previous studies reporting robust behavioural interference effects14,17,18,19,20,22,25,48. Interestingly, the present study revealed higher accuracy and faster response time on the body-word task than the face-word task. Such behavioural performance differences are more obvious at the neural level. We found that the two tasks evoked dissociable neural activity as measured by EEG, including larger N1 and P2 amplitudes and boosted theta oscillation during the face-word task compared to the body-word task. N1 and P2 are suggested as reliable neural markers of early emotional attention and perceptual processing, and are always generally coupled with selective augmented theta-band activities40,44,45,49,50. Our results are consistent with these previous studies. Moreover, such dissociable neural activities indicate that facial and body expressions evoked distinct responses despite both representing negative stimuli, which is consistent with prior evidence36,37,38,39, demonstrating that threatening facial and body expressions may evoke different brain activity.

Both tasks evoked a pronounced N450 amplitude under incongruent conditions, which is consistent with previous tasks performed in both the emotional and cognitive domains26,50,51,52,53,54. Although the underlying meaning of this component remains controversial, it is commonly believed that N450 reflects conflict detection processing18,25,27,52,55. Thus, it is possible that N450 might be correlated to the detection of emotional conflict between emotional target expression and the task irrelevant distractor in the present study. In addition, our finding that N450 amplitudes were not significantly different between the face-word task and body-word task suggests that may reflect conflict detection but not intentional control processing.

We found a significant slow potential congruency effect and theta effect. Slow potential may be associated with conflict resolution or post-response monitoring, and is believed to reflect voluntary control processing53,56,57,58. More importantly, Slow potential amplitudes were larger and theta activity was stronger during the face-word task than the body-word task. Furthermore, both tasks induced enhanced theta oscillation under the incongruent condition but not the congruent condition. If a larger amplitude reflects a more elaborate control process59,60 and theta oscillation reflects the need to control the distractor41,46,47,61, then together these two neural indexes may indicate that the face-word task was more difficult to execute, which is consistent with the inferior behavioural performance observed on the face-word task.

Based on the behavioural and neural observations, the results of the present study bolster the ‘dual-competition’ framework, which aims to explain how emotion interacts with executive control16,62,63. As proposed by this model, emotion (affective significance) directs cognitive control mainly through perceptual and executive competition. The threat level of the stimulus is the determinant of perceptual competition. Low-threat stimuli exert a modest effect whereas high-threat stimuli exert a deleterious effect on the perceptual stage. The results of the present study extend these findings by demonstrating a differential effect of the threat level on conceptual processing such that stimuli with higher levels of threat elicit stronger effects.Threatening stimuli draw more attention to common resources than non-threatening stimuli, thus leaving fewer resources dedicated to executive control processes, and consequently reducing the ability to control conflict56. Based on the behavioural findings in this study, negative facial expressions could be regarded as high-threat stimuli, whereas negative body expressions could be regarded as relatively low-threat stimuli. In a similar manner, fewer resources may be recruited during the presentation of threatening facial stimuli compared to threatening body stimuli. Consequently, more control effort, as evidenced by more pronounced later-stage SP amplitudes and theta oscillation in the face-word task, is needed to resolve the emotional conflict.

It should be noted that the proposed dual competition model was initially applicable to cognitive control context. Despite the fact that the context varies considerably, the shared feature is that the cognitive control task instead of the emotional task is the primary task, and the primary aim is to investigate how affective information exerts control on a pure cognitive control process64. However, our study and a recent study54 fall into another category in which participants are required to resolve the emotional conflict rather than the cognitive conflict. It is clear that the dual competition model could also be extended to the emotional conflict domain. Furthermore, the nature of the target emotion per se could have an effect on emotional conflict processing.

There remain unresolved issues in the current study. Although early and later stage brain activity was modulated by the target threat level, whether such modulation is of a quantitative or qualitative nature remains unknown. Are identical brain structures responsible for the resolution of both face-word and body-word conflict with variation only in the magnitude of activity, or are both common and dissociable brain areas involved in conflict resolution related to the face-word and body-word tasks? Another unresolved issue is whether this modulation effect would emerge in other tasks, such as the Flanker or Simon task. Further studies are needed to solve these issues. In summary, the present study provides evidence that emotional conflict processing is modulated by the target stimuli. Thus, our results address the importance of considering the nature of the target when exploring emotional conflict processing.

Additional Information

How to cite this article: Ma, J. et al. Emotional Modulation of Conflict Processing in the Affective Domain: Evidence from Event-related Potentials and Event-related Spectral Perturbation Analysis. Sci. Rep. 6, 31278; doi: 10.1038/srep31278 (2016).

Acknowledgments

This project was supported by the National Natural Science Foundation of China (No. 31571146).

Footnotes

Author Contributions J.M., C.L. and X.C. performed the data analysis and wrote, reviewed, and approved the manuscript. J.M. and C.L. designed the research.

References

- Botvinick M. & Braver T. Motivation and Cognitive Control: From Behavior to Neural Mechanism. Annu. Rev. Psych. 66, 83–113 (2014). [DOI] [PubMed] [Google Scholar]

- Botvinick M. M., Carter C. S., Braver T. S., Barch D. M. & Cohen J. D. Conflict Monitoring and Cognitive Control. Psychol. Rev. 108, 624–652 (2001). [DOI] [PubMed] [Google Scholar]

- Botvinick M., Nystrom L. E., Fissell K., Carter C. S. & Cohen J. D. Conflict monitoring versus selection-for-action in anterior cingulate cortex. Nature 402, 179–181 (1999). [DOI] [PubMed] [Google Scholar]

- Botvinick M. M., Cohen J. D. & Carter C. S. Conflict monitoring and anterior cingulate cortex: an update. Trends Cogn. Sci. 8, 539–546 (2004). [DOI] [PubMed] [Google Scholar]

- Egner T. & Hirsch J. Cognitive control mechanisms resolve conflict through cortical amplification of task-relevant information. Nat. Neurosci. 8, 1784–1790 (2005). [DOI] [PubMed] [Google Scholar]

- Cohen J. D., Botvinick M. & Carter C. S. Anterior cingulate and prefrontal cortex: who’s in control? Nat. Neurosci. 12, 421–423 (2000). [DOI] [PubMed] [Google Scholar]

- Kanske P. & Kotz S. A. Emotion speeds up conflict resolution: a new role for the ventral anterior cingulate cortex? Cereb. Cortex, 21, 911–919 (2010). [DOI] [PubMed] [Google Scholar]

- Kanske P. & Kotz S. A. Positive Emotion speeds up conflict processing: ERP response in an auditory Simon task? Biol. Psychol. 87, 122–127 (2011). [DOI] [PubMed] [Google Scholar]

- Kanske P. & Kotz S. A. Modulation of early conflict processing: N200 responses to emotional words in a flanker task. Neuropsychologia 48, 3661–3664 (2010). [DOI] [PubMed] [Google Scholar]

- Kanske P. & Kotz S. A. Emotion triggers executive attention: anterior cingulate cortex and amygdala responses to emotional words in a conflict task. Hum. Brain Mapp. 32, 198–208 (2011). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kanske P. & Kotz S. A. Conflict processing is modulated by positive emotion: ERP data from a flanker task. Behav. Brain Res. 219, 382–386 (2011). [DOI] [PubMed] [Google Scholar]

- Zinchenko A., Kanske P., Obermeier C., Schröger E. & Kotz S. A. Emotion and goal-directed behavior: ERP evidence on cognitive and emotional conflict. Soc. Cog. Affect. Neurosci. 10, 1577–1587 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chiew K. S. & Braver T. S. Dissociable influences of reward motivation and positive emotion on cognitive control. Cogn. Affect. Behav. Neurosci. 14, 509–529 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Xue S. et al. Positive emotion modulates cognitive control: An event‐related potentials study. Scand. J. Psychol. 54, 82–88 (2013). [DOI] [PubMed] [Google Scholar]

- Yuan J. et al. Pleasant mood intensifies brain processing of cognitive control: ERP correlates. Biol. Psychol. 87, 17–24 (2011). [DOI] [PubMed] [Google Scholar]

- Padmala S., Bauer A. & Pessoa L. Negative emotion impairs conflict-driven executive control. Front. Psychol. 2, 192 (2011). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Egner T., Etkin A., Gale S. & Hirsch J. Dissociable neural systems resolve conflict from emotional versus nonemotional distracters. Cereb. Cortex 18, 1475–1484 (2008). [DOI] [PubMed] [Google Scholar]

- Etkin A., Egner T., Peraza D. M., Kandel E. R. & Hirsch J. Resolving emotional conflict: a role for the rostral anterior cingulate cortex in modulating activity in the amygdala. Neuron 51, 871–882 (2006). [DOI] [PubMed] [Google Scholar]

- Ma J., Liu C. & Chen X. Emotional conflict processing induce boosted theta oscillation. Neurosci. Lett. 595, 69–73 (2015). [DOI] [PubMed] [Google Scholar]

- Ma J., Liu C., Zhong X., Wang L. & Chen X. Emotional Body-Word Conflict Evokes Enhanced N450 and Slow Potential. PloS ONE 9, e95198 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Xue S., Ren G., Kong X., Liu J. & Qiu J. Electrophysiological correlates related to the conflict adaptation effect in an emotional conflict task. Neurosci. Lett. 584, 219–223 (2014). [DOI] [PubMed] [Google Scholar]

- Zhu X. R., Zhang H. J., Wu T. T., Luo W. B. & Luo Y. J. Emotional conflict occurs at an early stage: Evidence from the emotional face–word Stroop task. Neurosci. Lett. 478, 1–4 (2010). [DOI] [PubMed] [Google Scholar]

- Marusak H. A., Martin K. R., Etkin A. & Thomason M. E. Childhood trauma exposure disrupts the automatic regulation of emotional processing. Neuropsychopharmacology 40, 1250–1258 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Deng Z. et al. Regional gray matter density associated with emotional conflict resolution: Evidence from voxel-based morphometry. Neuroscience 275, 500–507 (2014). [DOI] [PubMed] [Google Scholar]

- Shen Y., Xue S., Wang K. & Qiu J. Neural time course of emotional conflict control: An ERP study. Neurosci. Lett. 541, 34–38 (2013). [DOI] [PubMed] [Google Scholar]

- Xue S. et al. The dissociable neural dynamics of cognitive conflict and emotional conflict control: An ERP study. Neurosci. Lett. 619, 149–154 (2016). [DOI] [PubMed] [Google Scholar]

- Clawson A., Clayson P. E. & Larson M. J. Cognitive control adjustments and conflict adaptation in major depressive disorder. Psychophysiology 50, 711–721 (2013). [DOI] [PubMed] [Google Scholar]

- Delplanque S., Lavoie M. E., Hot P., Silvert L. & Sequeira H. Modulation of cognitive processing by emotional valence studied through event-related potentials in humans. Neurosci. Lett. 356, 1–4 (2004). [DOI] [PubMed] [Google Scholar]

- Cuthbert B. N., Schupp H. T., Bradley M. M., Birbaumer N. & Lang P. J. Brain potentials in affective picture processing: covariation with autonomic arousal and affective report. Biol. Psychol. 52, 95–111 (2000). [DOI] [PubMed] [Google Scholar]

- Schupp H. T., Junghöfer M., Weike A. I. & Hamm A. O. Attention and emotion: an ERP analysis of facilitated emotional stimulus processing. Neuroreport 14, 1107–1110 (2003). [DOI] [PubMed] [Google Scholar]

- Olofsson J. K., Nordin S., Sequeira H. & Polich J. Affective picture processing: an integrative review of ERP findings. Biol. Psychol. 77, 247–265 (2008). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Keil A. et al. Large‐scale neural correlates of affective picture processing. Psychophysiology 39, 641–649 (2002). [DOI] [PubMed] [Google Scholar]

- Scott G. G., O’Donnell P. J., Leuthold H. & Sereno S. C. Early emotion word processing: Evidence from event-related potentials. Biol. Psychol. 80, 95–104 (2009). [DOI] [PubMed] [Google Scholar]

- Herbert C., Kissler J., Junghöfer M., Peyk P. & Rockstroh B. Processing of emotional adjectives: Evidence from startle EMG and ERPs. Psychophysiology 43, 197–206 (2006). [DOI] [PubMed] [Google Scholar]

- Bar-Haim Y., Lamy D. & Glickman S. Attentional bias in anxiety: A behavioral and ERP study. Brain Cogn. 59, 11–22 (2005). [DOI] [PubMed] [Google Scholar]

- Kret M., Pichon S., Grèzes J. & de Gelder B. Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. Neuroimage 54, 1755–1762 (2011). [DOI] [PubMed] [Google Scholar]

- Kret M. E., Pichon S., Grèzes J. & De Gelder B. Men fear other men most: gender specific brain activations in perceiving threat from dynamic faces and bodies–an fMRI study. Front. Psychol. 2, 3 (2011). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kret M. E., Stekelenburg J. J., Roelofs K. & De Gelder B. Perception of face and body expressions using electromyography, pupillometry and gaze measures. Front. Psychol 4, 28 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pichon S., de Gelder B. & Grèzes J. Two different faces of threat. Comparing the neural systems for recognizing fear and anger in dynamic body expressions. Neuroimage 47, 1873–1883 (2009). [DOI] [PubMed] [Google Scholar]

- Bekkedal M. Y., Rossi J. & Panksepp J. Human brain EEG indices of emotions: delineating responses to affective vocalizations by measuring frontal theta event-related synchronization. Neurosci. Biobehav. Rev. 35, 1959–1970 (2011). [DOI] [PubMed] [Google Scholar]

- Cavanagh J. F. & Frank M. J. Frontal theta as a mechanism for cognitive control. Trends Cogn. Sci. 18, 414–421 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen M. X. & Cavanagh J. F. Single-trial regression elucidates the role of prefrontal theta oscillations in response conflict. Front. Psychol. 2, 30 (2011). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen M. X. & Donner T. H. Midfrontal conflict-related theta-band power reflects neural oscillations that predict behavior. J. Neurophysiol. 110, 2752–2763 (2013). [DOI] [PubMed] [Google Scholar]

- Knyazev G. G., Slobodskoj-Plusnin J. Y. & Bocharov A. V. Gender differences in implicit and explicit processing of emotional facial expressions as revealed by event-related theta synchronization. Emotion 10, 678 (2010). [DOI] [PubMed] [Google Scholar]

- Maratos F. A., Mogg K., Bradley B. P., Rippon G. & Senior C. Coarse threat images reveal theta oscillations in the amygdala: A magnetoencephalography study. Cogn. Affect. Behav. Neurosci. 9, 133–143 (2009). [DOI] [PubMed] [Google Scholar]

- Nigbur R., Cohen M., Ridderinkhof K. & Stürmer B. Theta Dynamics Reveal Domain-specific Control over Stimulus and Response Conflict. J. Cogn. Neurosci. 24, 1264–1274 (2012). [DOI] [PubMed] [Google Scholar]

- Nigbur R., Ivanova G. & Stürmer B. Theta power as a marker for cognitive interference. Clin. Neurophysiol. 122, 2185–2194 (2011). [DOI] [PubMed] [Google Scholar]

- Egner T., Delano M. & Hirsch J. Separate conflict-specific cognitive control mechanisms in the human brain. Neuroimage 35, 940–948 (2007). [DOI] [PubMed] [Google Scholar]

- Sun J., Sun B., Wang B. & Gong H. The processing bias for threatening cues revealed by event-related potential and event-related oscillation analyses. Neuroscience 203, 91–98 (2012). [DOI] [PubMed] [Google Scholar]

- DeLaRosa B. L. et al. Electrophysiological spatiotemporal dynamics during implicit visual threat processing. Brain Cogn. 91, 54–61 (2014). [DOI] [PubMed] [Google Scholar]

- Szűcs D. & Soltész F. Functional definition of the N450 event-related brain potential marker of conflict processing: a numerical Stroop study. BMC Neurosci. 13, 35 (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Clayson P. E., Larson M. J. & Astikainen P. S. Adaptation to emotional conflict: evidence from a novel face emotion paradigm. PloS ONE 8, e75776 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- West R. Neural correlates of cognitive control and conflict detection in the Stroop and digit-location tasks. Neuropsychologia 41, 1122–1135 (2003). [DOI] [PubMed] [Google Scholar]

- West R., Jakubek K., Wymbs N., Perry M. & Moore K. Neural correlates of conflict processing. Exp. Brain Res. 167, 38–48 (2005). [DOI] [PubMed] [Google Scholar]

- Larson M. J., Kaufman D. A. & Perlstein W. M. Neural time course of conflict adaptation effects on the Stroop task. Neuropsychologia 47, 663–670 (2009). [DOI] [PubMed] [Google Scholar]

- Inzlicht M., Bartholow B. D. & Hirsh J. B. Emotional foundations of cognitive control. Trends Cogn. Sci. 19, 126–132 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu Y., Wang Z., Quan S. & Li M. The effect of positive affect on conflict resolution: Modulated by approach-motivational intensity. Cogn. Emot. 11, 1–14 (2015). [DOI] [PubMed] [Google Scholar]

- Soutschek A., Müller H. J. & Schubert T. Conflict-specific effects of accessory stimuli on cognitive control in the Stroop task and the Simon task. Exp. Psychol. 60, 140–147 (2013). [DOI] [PubMed] [Google Scholar]

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends Cogn. Sci. 9, 585–594 (2005). [DOI] [PubMed] [Google Scholar]

- Wendt M., Kluwe R. H. & Peters A. Sequential modulations of interference evoked by processing task-irrelevant stimulus features. J. Exp. Psychol. Hum. Percept. Perform. 32, 644–667 (2006). [DOI] [PubMed] [Google Scholar]

- Cavanagh J. F., Cohen M. X. & Allen J. J. Prelude to and resolution of an error: EEG phase synchrony reveals cognitive control dynamics during action monitoring. J. Neurosci. 29, 98–105 (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pessoa L. How do emotion and motivation direct executive control? Trends Cogn. Sci. 13, 160–166 (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lindström B. R. & Bohlin G. Threat-relevance impairs executive functions: negative impact on working memory and response inhibition. Emotion 12, 384–393 (2012). [DOI] [PubMed] [Google Scholar]

- Hart S. J., Green S. R., Casp M. & Belger A. Emotional priming effects during Stroop task performance. Neuroimage 49, 2662–2670 (2010). [DOI] [PMC free article] [PubMed] [Google Scholar]