Abstract

The explosion of atom bombs over the cities of Hiroshima and Nagasaki in August 1945 resulted in very high casualties, both immediate and delayed, but also left a large number of survivors who had been exposed to radiation, at levels that could be fairly precisely ascertained. Extensive follow-up of a large cohort of survivors (120,000) and of their offspring (77,000) was initiated in 1947 and continues to this day. In essence, survivors having received 1 Gy irradiation (∼1000 mSv) have a significantly elevated rate of cancer (42% increase) but a limited decrease of longevity (∼1 year), while their offspring show no increased frequency of abnormalities and, so far, no detectable elevation of the mutation rate. Current acceptable exposure levels for the general population and for workers in the nuclear industry have largely been derived from these studies, which have been reported in more than 100 publications. Yet the general public, and indeed most scientists, are unaware of these data: it is widely believed that irradiated survivors suffered a very high cancer burden and dramatically shortened life span, and that their progeny were affected by elevated mutation rates and frequent abnormalities. In this article, I summarize the results and discuss possible reasons for this very striking discrepancy between the facts and general beliefs about this situation.

The first (and only) two A-bombs used in war were detonated over Hiroshima and Nagasaki on August 6 and 9, 1945. Casualties were horrendous, approximately 100,000 in each city including deaths in the following days from severe burns and radiation. Although massive bombing of cities had already taken place with similar death tolls (e.g., Dresden, Hamburg, and Tokyo, the latter with 100,000 casualties on March 9, 1945), the devastation caused by a single bomb was unheard of and remains one of the most horrifying events in the past century. The people who had survived the explosions were soon designated as Hibakusha and were severely discriminated against in Japanese society, as (supposedly) carriers of (contagious?) radiation diseases and potential begetters of malformed offspring. While not reaching such extremes, the dominant present-day image of the aftermath of the Hiroshima/Nagasaki bombings, in line with the general perception of radiation risk (Ropeik 2013; Perko 2014), is that it left the sites heavily contaminated, that the survivors suffered very serious health consequences, notably a very high rate of cancer and other debilitating diseases, and that offspring from these survivors had a highly increased rate of genetic defects. In fact, the survivors have been the object of massive and careful long-term studies whose results to date do not support these conceptions and indicate, instead, measurable but limited detrimental health effects in survivors, and no detectable genetic effects in their offspring. This Perspectives article does not provide any new data; rather, its aim is to summarize the results of the studies undertaken to date, which have been published in more than 100 papers (most of them in international journals), and to discuss why they seem to have had so little impact beyond specialized circles.

Bombings and Implementation of Cohort Studies

Characteristics of the bombs and the explosions

The device used at Hiroshima was based on enriched uranium and exploded at an altitude of 600 m with an estimated yield equivalent to 16 kilotons of high explosive. The bomb at Nagasaki was based on plutonium and exploded at 500 m with a yield of 21 kilotons. The major effect of both bombs was an extreme heat and pressure blast accompanied by a strong burst of gamma radiation and a more limited burst of neutrons. The heat blast set the (mostly wooden) buildings on fire in a radius of several kilometers and resulted in an extensive firestorm centered on the explosion site (also called the hypocenter). People were exposed to the combined heat and radiation blasts, with little shielding from the buildings; most of those located within 1.5 km of the hypocenter were killed. The contribution of fallout from these explosions, which occurred mostly as “black rain” in the following days, is not precisely known: few measurements were taken due to scarcity of equipment, and investigations in the first months were performed by the US Army and subsequently classified. It was probably limited: the bombs exploded at a significant altitude, the resulting firestorm carried the fission products into the high atmosphere, and the eventual fallout was spread over a large area. In addition, a strong typhoon occurred 2 weeks after the bombings and may have washed out much of the material. The major health effects (other than the heat blast and accompanying destruction) were almost certainly due to the gamma and neutron radiation from the blasts themselves, and these doses can be quite reliably estimated from the distance to the hypocenter. Thus studies on the survivors can ascertain the health effects of a single, fairly well-defined dose of gamma radiation with a small component from neutrons.

The Atomic Bomb Casualty Commission and the Radiation Effects Research Foundation

Initial studies (1945–1946) on survivors from the bombings were performed under the authority of the occupying US Army and their results remained classified; the number of delayed deaths from radiation or, possibly, contamination is therefore not known precisely, although it is probably of the order of 10,000 for each site. Open studies were started in 1947, with the establishment of the Atomic Bomb Casualty Commission (ABCC) by the US National Academy of Sciences, joined a year later by the Japanese National Institutes of Health, and including well-known geneticists such as James Neel and William Schull. It initiated extensive health studies on the survivors and was reorganized in 1975 to form the Radiation Effects Research Foundation (RERF), a Japanese foundation funded by both Japan (Ministry of Health) and the United States (Department of Energy). Both institutions have been criticized by the Japanese public for observing the victims but not providing medical assistance to them. They have, however, fulfilled an extremely useful role in establishing reliable data on radiation effects. A general description of the RERF and its activities (including references to published studies) is accessible through the RERF Web site (RERF 2014). The RERF currently employs ∼170 persons at its main location in Hiroshima, as well as 50 in Nagasaki, with staff from both Japan and the United States. The ABCC and, later, the RERF, assembled a “Life Span Study” (LSS) cohort of 120,000 individuals [∼100,000 exposed at various (known) levels and ∼20,000 controls, “not in city” at the time of the bombings], and a cohort of 77,000 children born between 1946 and 1984 for whom at least one parent had been exposed. These cohorts have now been followed for over 60 years in most cases, and their general health, life expectancy, cancer incidence, and mortality ascertained. In addition, cytogenetic, biochemical, and molecular genetic studies have been performed on significant subsets.
The population followed represents approximately half of the people who were exposed in the bombings, and the fact that they received a single dose of radiation that can be consistently estimated makes the conclusions much more reliable than in more complex situations such as the Chernobyl disaster (see later). A detailed general overview of the results as of 2011 has been published (Douple et al. 2011). Current results from these studies (that are still ongoing) are summarized below, first for survivors and then for their offspring.

Studies on Survivors

In both Hiroshima and Nagasaki, there was extensive mortality in the days and weeks following the bombings, representing perhaps 10% of the casualties. It is difficult to separate the effect of radiation (acute radiation syndrome, ARS) and, possibly, of contamination from the consequences of burns since most victims suffered both. Early studies, however, indicated that the median lethal dose (LD50) from whole-body gamma radiation is ∼2.5 Gy (footnote 1) when little or no medical assistance is available (5 Gy with extensive medical care). This estimate is based on early studies at the bomb sites, but with dose estimates refined according to later studies.

Cancer

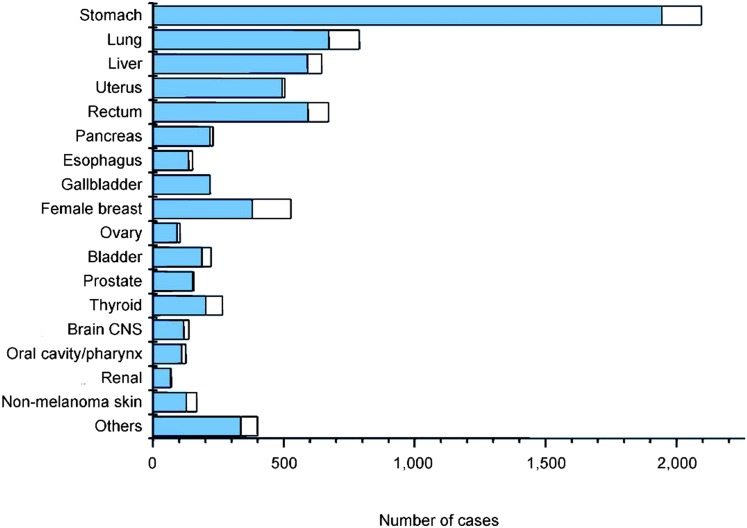

In 1950, a survivor cohort was defined and detailed medical follow-up established. From then on, the causes of death and the excess due to radiation exposure could be ascertained. This excludes mortality caused by ARS and other bomb-related trauma, but not most delayed effects, except for a small number of leukemia deaths since this is the earliest neoplasm to appear. The results of these studies have been published in a large number of papers, mostly in specialized journals (such as Radiation Research or the Journal of Radiation Research) but occasionally in more widely read journals (The Lancet, the American Journal of Human Genetics, Nature, etc.). Figure 1 shows the solid cancer cases in the whole exposed group from the LSS survivor cohort, with the excess cases (in white) attributable to radiation (by comparison with the control group “not in town” from the same cohort). It is quite obvious from Figure 1 that there is a measurable excess of cancer cases in the exposed group, but also that this excess is relatively limited, amounting at most to an increase of ∼30%, often much less.

Figure 1.

Number of solid cancers observed up to 1998 in the exposed group; the white portion indicates the excess cases associated with radiation (comparison with the unexposed group). Data are from Preston et al. (2007).

Figure 1, however, tabulates results for the whole exposed group, most of whose members have experienced a relatively low dose of radiation: half of them received less than 0.1 Gy. It is therefore more meaningful to look at the percentage of excess cancers according to dose received, as shown in Table 1 (Preston et al. 2007). This time, all solid cancers are lumped together, but the cases are broken down according to radiation exposure. As expected, the fraction of excess cancers increases with radiation dose, from a nearly negligible 1.8% below 0.1 Gy to 61% at 2 Gy or above. For a quite sizeable exposure of 0.5–1 Gy (footnote 2), the figure is 29.5%, corresponding to 206 excess solid cancer cases (all types) in a group of 3173 persons. In other words, there is a clear excess of cancer cases in strongly irradiated survivors, but this involves less than 10% of the total. It is also important to note that the excess risk is higher for people exposed at a young age, that this risk persists through the subject’s lifetime, and that it is ∼50% higher in women than in men (Douple et al. 2011).

Table 1. Observed and excess solid cancers observed up to 1998 in the exposed group, according to radiation dose.

| Weighted colon dose (Gy) | LSS subjects | Cancers observed | Estimated excess cancers | Attributable risk (%) |
|---|---|---|---|---|
| 0.005–0.1 | 27,789 | 4406 | 81 | 1.8 |
| 0.1–0.2 | 5,527 | 968 | 75 | 7.6 |
| 0.2–0.5 | 5,935 | 1144 | 179 | 15.7 |
| 0.5–1.0 | 3,173 | 688 | 206 | 29.5 |
| 1.0–2.0 | 1,647 | 460 | 196 | 44.2 |
| >2.0 | 564 | 185 | 111 | 61.0 |
| Total | 44,635 | 7851 | 848 | 10.7 |

This is a simplified version of Table 9 in Preston et al. (2007), which tabulates all cancers observed from 1958 through 1998 among 105,427 LSS cohort members. LSS, Life Span Study.
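To a first approximation, the attributable-risk column of Table 1 is the estimated excess divided by the observed cases in each dose band. The Python sketch below recomputes that ratio from the rounded counts printed in the table. The names `TABLE_1` and `attributable_risk_percent` are illustrative and do not come from Preston et al. (2007). The recomputed values differ from the published column by up to about 1.6 percentage points, presumably because the published figures were derived from unrounded model estimates.

```python
# Rows of Table 1: (dose band in Gy, observed solid cancers,
# estimated excess cases, published attributable risk in %).
TABLE_1 = [
    ("0.005-0.1", 4406, 81, 1.8),
    ("0.1-0.2", 968, 75, 7.6),
    ("0.2-0.5", 1144, 179, 15.7),
    ("0.5-1.0", 688, 206, 29.5),
    ("1.0-2.0", 460, 196, 44.2),
    (">2.0", 185, 111, 61.0),
]


def attributable_risk_percent(observed, excess):
    """Share of the observed cases attributed to radiation, in percent."""
    return 100.0 * excess / observed


# Recompute the last column of the table from the two count columns.
recomputed = {
    band: attributable_risk_percent(observed, excess)
    for band, observed, excess, _published in TABLE_1
}

# Column totals, to compare with the "Total" row of the table.
total_observed = sum(row[1] for row in TABLE_1)
total_excess = sum(row[2] for row in TABLE_1)

for band, _observed, _excess, published in TABLE_1:
    print(f"{band:>10} Gy: recomputed {recomputed[band]:5.1f}%, published {published:5.1f}%")
print(f"     Total: {attributable_risk_percent(total_observed, total_excess):5.1f}% "
      f"({total_excess} excess among {total_observed} observed)")
```

The totals reproduce the last row of the table (848 excess cases among 7851 observed), which is the basis for the statement that roughly one solid cancer in ten in the exposed group is attributed to radiation.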

For leukemias (Table 2), the outlook is both worse and better (Preston et al. 2004): worse, as the fraction of excess cases is larger (63% in the 0.5–1 Gy group), and better since, given the rarity of the disease, this translates into a much smaller number of excess cases, 19 for 3963 individuals. Leukemias also appear earlier than solid cancers, as early as 4 or 5 years after exposure; thus, some of them may have been missed in this accounting that started 5 years after the bombing.

Table 2. Observed and excess leukemia deaths observed up to 2000 in the exposed group according to radiation dose.

| Weighted marrow dose (Gy) | Subjects | Deaths observed | Estimated excess deaths | Attributable risk (%) |
|---|---|---|---|---|
| 0.005–0.1 | 30,387 | 69 | 4 | 6 |
| 0.1–0.2 | 5,841 | 14 | 5 | 36 |
| 0.2–0.5 | 6,304 | 27 | 10 | 37 |
| 0.5–1.0 | 3,963 | 30 | 19 | 63 |
| 1.0–2.0 | 1,972 | 39 | 28 | 72 |
| >2.0 | 737 | 25 | 28 | 100 |
| Total | 49,204 | 204 | 94 | 46 |

Data are from Preston et al. (2004), as reported in RERF (2014).

Altogether, the picture that emerges is that, for quite heavily irradiated survivors (e.g., the 0.5–1 Gy group), there is a sizeable increase of neoplasms, especially leukemia but also most solid cancers. It would be wrong, however, to assume that all survivors are hit by this disease, since even in this group the fraction affected is slightly above 20%, less than one-third of this being attributable to radiation exposure. The most recent report on the LSS cohort of survivors (Ozasa et al. 2012), covering the period up to 2003 (by which time 58% of the survivors had died) confirms these results while increasing somewhat the excess relative risk (ERR) associated with radiation; these results also indicate a dependence on age at irradiation, with elevated risk for those irradiated when young. Overall, the ERR for all solid cancers corresponding to a (very sizeable) irradiation of 1 Gy works out as 0.42, and the ERR/radiation dose relationship appears to be linear, with no indication of a threshold.
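The linear, no-threshold relationship quoted above can be written as RR = 1 + ERR per Gy × dose. The following Python sketch applies it with the value of 0.42 per Gy given in the text. It is a simplified illustration only: the function names are hypothetical, and the sketch ignores the dependence on sex and on age at exposure that the published models include.

```python
# Excess relative risk (ERR) for all solid cancers at 1 Gy, as quoted in the
# text from Ozasa et al. (2012). Treated here as a single average slope.
ERR_PER_GY = 0.42


def relative_risk(dose_gy, err_per_gy=ERR_PER_GY):
    """Relative risk under a linear no-threshold model: 1 + slope * dose."""
    if dose_gy < 0:
        raise ValueError("dose must be non-negative")
    return 1.0 + err_per_gy * dose_gy


def attributable_fraction(dose_gy, err_per_gy=ERR_PER_GY):
    """Fraction of cases in an exposed group attributable to radiation.

    Uses the standard relation (RR - 1) / RR, i.e. ERR / (1 + ERR).
    """
    err = err_per_gy * dose_gy
    return err / (1.0 + err)


for dose in (0.0, 0.1, 0.5, 1.0, 2.0):
    print(f"{dose:4.1f} Gy: relative risk {relative_risk(dose):.3f}, "
          f"attributable fraction {100 * attributable_fraction(dose):.1f}%")
```

Under these assumptions a dose of 1 Gy corresponds to a relative risk of 1.42, i.e., the 42% increase cited in the abstract, while the same slope gives an increase of only about 4% at 0.1 Gy.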

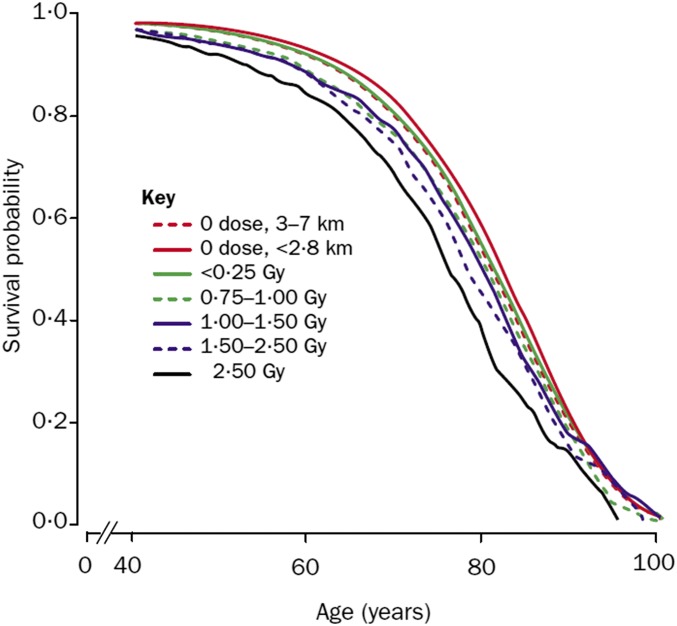

Other diseases and life span

Of course, cancer is not the only possible detrimental effect of radiation exposure, which could have an influence on cardiovascular diseases, autoimmune syndromes, and other ailments. Because of the size of the LSS cohort and the exceptional quality (footnote 3) and duration of its follow-up, it is possible to look at the end result, i.e., the longevity of individuals according to the radiation dose received. The result is shown in Figure 2, reproduced from the 2000 Lancet paper by Cologne and Preston (2000) and showing data from the LSS cohort up to 1995. At that time, approximately half of the original cohort had died, allowing a reliable evaluation of life span. From Figure 2, it is clear that the separation between the curves is limited, even for the one that corresponds to a dose of >2 Gy. The median loss of life span at 1 Gy irradiation is 1.3 years, and decreases to 0.12 years at 0.1 Gy. Again, the effect is measurable, and follows the expected dose/effect relationship, but its magnitude is quite limited. As a comparison, note that in Russia, life expectancy decreased by 5 years between 1990 and 1994, essentially because of social disruption impacting on living conditions and healthcare (Notzon et al. 1998). As noted above, the survivors may have been exposed to an additional (but unknown) irradiation due to fallout from the bombs; this would lead to an overestimate of the gamma and neutron radiation effects. In other words, it would not affect the major conclusion from this section, that these effects are detectable but relatively limited even for radiation doses of the order of 1 Gy.

Figure 2.

Survival curve (up to 1995) according to level of exposure to radiation (Cologne and Preston 2000). Note the limited separation between the curves for zero (red) and 2.5 Gy (black) exposure.

Studies on the Offspring of Survivors

The large cohort of children of survivors (77,000 individuals) is of particular interest: it should allow reliable estimation of detectable genetic effects resulting from parental irradiation thanks to its large size and to detailed follow-up over several decades. It must be emphasized, however, that some members of this group are still quite young: the cohort includes children born from 1946 to 1984, and the latest published results (Grant et al. 2015) are based on data as of December 31, 2009. Thus late events (e.g., excess cancer cases) are likely to be underrepresented. In addition, the assessment of mutation rate has not yet been performed directly by whole-genome or whole-exome DNA sequencing. The indirect evaluation through examination of the phenotype (incidence of malformations, age-specific mortality) and the limited molecular data (gross chromosome aberrations, mutations at microsatellite loci) lack sensitivity to detect small increases in mutation rate or large increases of point mutations of subtle phenotypic effect.

Malformations and mutations

Within the limitations indicated above, and setting apart the case of children exposed in utero, who display growth deficiencies, intellectual impairment and neurological effects (Douple et al., 2011), the children of survivors show no detectable radiation-related pathology. The incidence of malformations at birth does not increase if both parents have been exposed (Neel and Schull 1991; Table 3). Of course, such studies may not reveal recessive mutations that would only become apparent in subsequent generations.

Table 3. Malformation frequency at birth (including stillbirths and perinatal deaths, but not early miscarriages) in relation to parental exposure.

| Mother’s exposure condition | Father not in cities (%) | Father low-to-moderate doses (%) | Father high doses (%) |
|---|---|---|---|
| Not in cities | 294/31,904 (0.92) | 40/4,509 (0.89) | 6/534 (1.1) |
| Low-to-moderate doses | 144/17,616 (0.82) | 79/7,970 (0.99) | 5/614 (0.81) |
| High doses | 19/1,676 (1.1) | 6/463 (1.3) | 1/145 (0.7) |

Data are from Neel and Schull (1991). The early miscarriages have apparently not been recorded.

In addition, all attempts to detect increases in mutation rate (looking at chromosome aberrations, blood protein changes, and minisatellite mutations at various loci) have so far given negative results (Table 4).

Table 4. Mutations at minisatellite loci in relation to parental exposure.

| | Controls (<0.01 Gy) | Exposed (≥0.01 Gy)ᵃ |
|---|---|---|
| Number of children examined | 58 | 61 |
| Minisatellite loci tested | 1403 | 496 |
| Mutations detected | 39 | 13 |
| Mutation rate per locus per generation | 2.8% | 2.6% |

Data are from Kodaira et al. (2010). Note that the irradiation level for the exposed parents is quite high.

ᵃ Mean parental gonadal dose = 1.47 Gy.
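The mutation rates in Table 4 are simply the number of mutations divided by the number of loci tested. The Python sketch below recomputes them and, as an added illustration that is not part of the cited study, attaches a rough 95% interval to each rate using the normal approximation to the binomial. The function name `rate_with_ci` is hypothetical, and this simple interval is only a crude indication of the sampling uncertainty at these counts.

```python
import math


def rate_with_ci(mutations, loci_tested, z=1.96):
    """Return (rate, lower, upper) for a proportion.

    The interval uses the normal (Wald) approximation to the binomial,
    which is an assumption of this sketch, not the method of the source.
    """
    rate = mutations / loci_tested
    half_width = z * math.sqrt(rate * (1.0 - rate) / loci_tested)
    return rate, max(0.0, rate - half_width), rate + half_width


# Counts taken from Table 4.
control = rate_with_ci(39, 1403)
exposed = rate_with_ci(13, 496)

# Two intervals overlap when each one starts before the other one ends.
intervals_overlap = control[1] <= exposed[2] and exposed[1] <= control[2]

for label, (rate, low, high) in (("controls", control), ("exposed", exposed)):
    print(f"{label:>8}: {100 * rate:.1f}% (approx. 95% interval {100 * low:.1f}-{100 * high:.1f}%)")
print("intervals overlap:", intervals_overlap)
```

The two rates round to the 2.8% and 2.6% shown in the table, and the approximate intervals overlap widely, which is consistent with the statement that no increase was detected with this assay.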

Naturally, this does not mean that the radiation received by parents has no genetic effect, only that this is not detectable with the techniques used: in particular, microsatellite variation may not be a reliable indicator of mutation rates. Current technology should allow much more extensive investigations using DNA sequencing, which might allow the detection of a small increase in mutation rate. The fact that samples are available from individuals whose parents have received quite diverse (but fairly well-known) doses would be a great asset to make sure that whatever is observed is actually radiation dependent. It is somewhat surprising that detailed sequencing studies have not yet been performed or, at least, reported—this may reflect both funding issues at RERF and possibly reluctance to provide samples to US collaborators. These investigations could take the form of full genome sequencing on parent/offspring trios, which would enable a more direct estimate of the mutation rates. It is true that such studies are technically demanding, as they require extremely high accuracy to eliminate false positives and to provide a true evaluation of mutation rates, but they are doable (see e.g., Roach et al. 2010). In any case, it is clear that—contrary to popular belief—the genetic effects in humans of quite significant radiation doses (of the order of 1 Gy) seem to be small, indeed so far undetectable. This is in contrast with some animal studies: for example, gamma irradiation of BALB/c and CBA/Ca mice at, respectively, 1 and 2 Gy has been found to double the mutation frequency in their progeny (Barber et al. 2006), at least within expanded simple tandem repeat sequences. It thus appears that humans are less radiosensitive than mice, which makes evolutionary sense in view of their much longer reproductive life span. The mechanisms responsible for this difference, however, are not clear. 
It is interesting to note that a recent study (Abegglen et al., 2015) found multiple copies of the TP53 gene in the elephant genome and interpreted this as a potential mechanism for cancer resistance in this large-bodied and long-lived species.

Risk of death due to cancer or noncancer diseases in offspring of irradiated survivors

A recent assessment of the risk of death among these offspring (after 62 years of follow-up for the oldest members of this cohort) (Grant et al. 2015) confirms these results and shows no discernible effect of the radiation dose received by parents on the risk of death either by cancer or other causes, i.e., no indication of strongly deleterious health effects. More precisely, the risk of either cancer or noncancer mortality is not correlated with maternal or paternal exposures, and all hazard ratios are in the 0.9 to 1.1 range, even when the mother and/or father have received an exposure of 1 Gy. In other words, as the paper states, there is “no indication of deleterious health effects after 62 years” (footnote 4) of follow-up. It is too early to have lifespan results similar to those reported above for survivors, since >90% of the offspring were still alive at the cut-off date for the study (end of 2009), and, as already mentioned, an excess of late-appearing pathologies such as cancer may be still undetected. Nevertheless, as of today, there is no discernible effect of the parental irradiation on the health of their offspring, even for quite significant exposures of 1 Gy (∼1000 mSv), to be compared with current safety standards of 1 mSv per year for the general population.

To conclude this section, the studies on the offspring of irradiated survivors have so far not demonstrated excess mutations or decrease of fitness in this group. These studies are ongoing and may eventually reveal effects that have been missed because of the relatively young age of most members of the cohort as well as the limitations of the assay methods used. In light of the data already obtained, however, these effects are likely to be very limited.

Coming back to the issue of the possible contribution of fallout to health effects in both exposed individuals and their offspring, this would—if found to be more significant than previously indicated—worsen the outcome in all cases and thus lead to an overestimate of radiation effects. This would not, however, affect the major conclusion of these studies, i.e., the limited impact of significant irradiation on the longevity of survivors and the absence of detectable genetic effects in their offspring (apart from children irradiated in utero).

What These Results Tell Us

A very clear-cut set of studies

Compared to subsequent nuclear disasters involving nuclear power stations (Chernobyl and Fukushima), the Hiroshima/Nagasaki bombings provide data that are much more clear cut and reliable. The Chernobyl disaster involved quite differentiated populations: the “liquidators” who attempted to quench the fires and dump shielding material onto the reactor, the local inhabitants, and the much larger population potentially affected by the plume of radioactive fallout. There were contributions from direct irradiation and from contamination. In addition, extensive but disordered redistribution of people took place, all in the framework of a largely dysfunctional administrative and political system. As a result there has been no exhaustive and systematic follow-up, the exact radiation exposure of most people is unknown, and the estimates of the associated health effects vary wildly (Williams 2008). The Fukushima accident also resulted in the release of large amounts of radioactivity, and in exposure of the surrounding population to a combination of irradiation and contamination (Hasegawa et al. 2015). Thorough follow-up studies have been initiated but uncertainties in the estimation of radiation exposure and the fact that this has been quite low (<10 mSv) for most of the exposed persons (excluding the personnel working in the nuclear facility) (Tsubokura et al., 2012) will limit the possible conclusions. In contrast, the RERF studies include a large and representative population sample, rely on a fairly accurate estimation of a single irradiation dose, with a wide range of exposure within the cohort, and have been able to follow in detail this population (as well as its offspring) for more than half a century. They have, in fact, been essential to defining the legal limits for radiation exposure from nuclear activities, which are currently 1 mSv/year for the general public and 20 mSv/year for workers in the nuclear industry (footnote 5).
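To put the doses discussed in this article on the same scale as the legal limits just mentioned, the Python sketch below converts grays to millisieverts and expresses a dose as a number of years at a given annual limit. It assumes the rough equivalence used throughout this article for whole-body, mostly gamma exposure (1 Gy ∼ 1 Sv = 1000 mSv). The function names are illustrative, and the comparison is purely nominal: a single acute dose and the same total spread over many years are not biologically equivalent.

```python
# Rough equivalence for whole-body, mostly gamma exposure (see the article's
# footnote on units): 1 Gy ~ 1 Sv = 1000 mSv. This is an approximation.
MSV_PER_GY = 1000.0

# Legal limits quoted in the text.
PUBLIC_LIMIT_MSV_PER_YEAR = 1.0
WORKER_LIMIT_MSV_PER_YEAR = 20.0


def gray_to_millisievert(dose_gy):
    """Convert an absorbed dose in Gy to an approximate dose in mSv."""
    return dose_gy * MSV_PER_GY


def years_at_limit(dose_gy, limit_msv_per_year):
    """Years of exposure at an annual limit that sum to the same nominal dose."""
    return gray_to_millisievert(dose_gy) / limit_msv_per_year


for dose in (0.1, 1.0, 2.5):
    print(f"{dose} Gy ~ {gray_to_millisievert(dose):.0f} mSv: "
          f"{years_at_limit(dose, PUBLIC_LIMIT_MSV_PER_YEAR):.0f} years at the public limit, "
          f"{years_at_limit(dose, WORKER_LIMIT_MSV_PER_YEAR):.0f} years at the worker limit")
```

On this nominal scale, the 1 Gy exposure used as a reference throughout the article equals 1000 years at the public limit or 50 years at the limit for nuclear workers.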

The picture obtained from these extensive and careful studies is very different from the impressions that prevail in the general public and even among many scientists (Perko 2014). The general perception is that survivors from these cities were heavily affected by various types of cancer, and suffered much shorter lives as a result. While it is true that the rate of cancer was increased by almost 50% for those who had received 1 Gy of radiation, most of the survivors did not develop cancer and their average life span was reduced by months, at most 1 year. Likewise, it is generally thought that abnormal births, malformations, and extensive mutations are common among the children of irradiated survivors, when in fact the follow-up of 77,000 such children (excluding children irradiated in utero) fails so far to show evidence of deleterious effects (Douple et al. 2011; Grant et al. 2015). These studies should, of course, not lead to complacency about the effects of accidents at nuclear power plants, and even less with respect to the (still possible) prospect of a nuclear war, that would involve huge amounts of fallout and very large exposed populations. Nevertheless, concerning the Hiroshima/Nagasaki bombings, there is indeed a large gap between the results of careful studies backed by more than 100 scholarly publications, and the perception of the situation as seen by the general (and even scientific) public (Ropeik 2013).

Why this disparity?

This contradiction between the perceived (imagined) long-term health effects of the Hiroshima/Nagasaki bombs and the actual data is extremely striking. Part of this distortion must stem from the fact that radiation is a new and unfamiliar danger in the history of mankind, an agent that is unseen and unfelt, whose nature and mode of action are mysterious. Familiar dangers are more easily tolerated, as shown by the absence of concern about deaths due to the use of coal, whether they are direct, due to extraction activities (dozens of casualties every year in the United States, thousands in China) or indirect, through atmospheric pollution (several hundred thousand premature deaths per year according to the World Health Organization). In addition, radiation is associated with the instant obliteration of two cities and 200,000 people, and with several decades during which the risk of an all-out nuclear war, either by design or by accident, was quite high and present in all minds.

On a more scientific level, the extreme sensitivity of radioactivity detection systems also plays a role. Depending on the type of radiation, a simple Geiger counter can detect radioactivity levels as low as a few becquerels (1 Bq = 1 disintegration per second) that would correspond in most circumstances to very low irradiation levels, orders of magnitude below 1 µSv/day (footnote 6). In other words, even simple handheld counters can detect minuscule levels of radioactivity and cause alarm, even though they pose no actual danger. If detection systems for pollutants and poisons were similarly sensitive, we would realize that these molecules are ubiquitous, albeit at very low concentrations. Another contribution to anxiety has been the uncertainty about extrapolation of radiation effects toward very low doses: since these effects are only measurable for fairly high irradiation levels, they have to be estimated by extrapolation for low doses. There have been debates on this point, some arguing that there is a threshold below which no biological effect occurs (assuming that DNA repair mechanisms kick in and have ample time to repair any damage), and others asserting that very low levels over long periods are somehow more damaging than expected from linear extrapolation. Both the latest RERF studies (Ozasa et al. 2012) and recent very large-scale cohort studies covering 300,000 individuals working in the nuclear industry (Leuraud et al. 2015; Richardson et al. 2015) indicate that the relationship between irradiation levels and biological effects is probably linear down to zero exposure, so there is an effect from very low doses, even though it is very small: 10 mSv of accumulated exposure are estimated to raise the risk of leukemia by 0.002% (Leuraud et al. 2015). Thus even low doses of radiation entail some health risks, but the magnitude of these risks is extremely small.

Finally, the handling of recent nuclear incidents by the authorities has been particularly inept and has provided strong grounds for public distrust. The Chernobyl disaster was denied for several days by Soviet authorities while a strongly radioactive plume was being swept by winds over Eastern and then Western Europe; the French government repeatedly asserted that this plume did not spread over France, while it actually was depositing significant (but relatively harmless) amounts of radioactivity on the vegetation. More recently, the seriousness of problems at the Fukushima power station was repeatedly denied by Tepco, the company in charge of this plant, until the scope of the disaster became evident to all. The credibility of authorities over nuclear matters has become very low, and sensational news stories abound, in which irradiation levels are often expressed in microsieverts, which makes for impressive figures. Conspiracy theories argue for a massive cover-up of catastrophic health information and sometimes make their way into allegedly scientific papers (Sawada 2007; Yablokov 2009). Furthermore, there is indeed a gray area in the history of the Hiroshima/Nagasaki bombings: during the first 2 years (1945–1947), before the establishment of the ABCC (later RERF), medical studies were performed by the US army and their results were not disclosed. There may have been significant casualties in this period from the fallout and radioactive contamination that occurred in these two cities. At that time, at the beginning of the Cold War, the US military-industrial complex advocated the potential use of A-bombs as tactical weapons, and would definitely have wanted to suppress evidence of risks from fallout, in order to present them as “clean” weapons differing from conventional explosives only in their potency. Thus it is indeed possible that our knowledge on the aftermath of Hiroshima and Nagasaki is incomplete. 
This does not, however, affect the conclusions discussed in this Perspective article, which cover the more than 60 years following the explosions, rely on comparison of well-defined exposure groups, and show effects that are clearly related to radiation dose.

A duty to correct distortions

The tremendous gap between public perception and actual data is unfortunately not unique to radiation studies. It is easy to list a number of cases where dangers are grossly exaggerated (e.g., foods from genetically modified organisms being supposedly detrimental to health, on the basis of essentially zero scientific evidence), or, on the opposite side, not recognized in spite of strong and convergent scientific evidence (anthropogenic climate change, until recently at least). Sometimes, as in the topic of this article, these misrepresentations are also present within the scientific community. These distortions can be very damaging as they skew important public debates, such as the choice of the best mix of energy generating options for the future (footnote 7); I believe it is important to try to clear up these questions, and to disseminate widely the scientific data when they exist, in order to allow for a balanced debate and more rational decisions.

Acknowledgments

The author thanks Professor Nori Nakamura for welcoming him at RERF and for many helpful discussions.

Footnotes

Communicating editor: A. S. Wilkins

Throughout this paper, radiation exposure is expressed using the gray unit (irradiation resulting in the absorption of 1 joule per kilogram), which is the unit appropriate for whole-body irradiation; for low levels of radiation and taking into account the nature of radiation and the exposed tissue, the unit generally used is the sievert (mSv, millisievert). For whole-body, mostly gamma-ray exposure, the two units are roughly equivalent, i.e., 1 Gy ∼1 Sv = 1000 mSv.

Recall that 2.5 Gy is the LD50 (the dose lethal to 50% of those exposed), and that the limit for annual exposure for the general public is 1 mSv, i.e., ∼1 mGy.
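The unit relations in the two notes above can be made concrete with a short sketch. It is illustrative only: it assumes the approximate equivalence 1 Gy ∼ 1 Sv that the note gives for whole-body, mostly gamma-ray exposure, and the function names are hypothetical, chosen for this example.

```python
# Illustrative unit arithmetic for the dose figures quoted in the footnotes.
# Assumption (from the footnote): for whole-body, mostly gamma-ray exposure,
# 1 Gy is roughly equivalent to 1 Sv. This does not hold for other radiation
# types or for partial-body exposure.

MSV_PER_SV = 1_000        # 1 Sv = 1000 mSv
USV_PER_SV = 1_000_000    # 1 Sv = 1,000,000 microsieverts

PUBLIC_ANNUAL_LIMIT_MSV = 1.0  # annual limit for the general public (footnote)


def gray_to_millisievert(dose_gy: float) -> float:
    """Approximate dose in mSv for a whole-body gamma dose given in Gy."""
    return dose_gy * MSV_PER_SV


def millisievert_to_microsievert(dose_msv: float) -> float:
    """Exact conversion between two multiples of the sievert."""
    return dose_msv * (USV_PER_SV / MSV_PER_SV)


def fraction_of_public_limit(dose_usv: float) -> float:
    """Express a dose quoted in microsieverts as a fraction of the 1 mSv limit."""
    return dose_usv / millisievert_to_microsievert(PUBLIC_ANNUAL_LIMIT_MSV)


if __name__ == "__main__":
    print(gray_to_millisievert(1.0))        # the 1 Gy reference dose, in mSv
    print(fraction_of_public_limit(500.0))  # a "500 microsievert" headline figure
```

Under these assumptions, a dose reported as 500 microsieverts is half of the annual limit for the general public, which shows how the choice of unit can make a modest dose look large.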

Thanks to the Japanese koseki family registration system, only 121 individuals were lost to follow-up among the 120,321 cohort members.

For the youngest of these offspring (born 1984) the follow-up is only 25 years.

Note that annual exposure from medical devices is currently estimated at 3 mSv in the United States (Leuraud et al. 2015).

The correspondence is not trivial; it depends on the type of radioactivity considered and the geometry of the layout; the figure quoted is just an order of magnitude. A calculation tool can be found at http://www.radprocalculator.com/.

There are real issues with nuclear energy—the unknown danger and cost of decommissioning power stations, and the problems with safe storage of nuclear waste, which are more serious than radiation risks in normal operation.

Literature Cited

- Abegglen L. M., Caulin A. F., Chan A., Lee K., Robinson R., et al., 2015. Potential mechanisms for cancer resistance in elephants and comparative cellular response to DNA damage in humans. JAMA 314: 1850–1860.

- Barber R. C., Hickenbotham P., Hatch T., Kelly D., Topchiy N., et al., 2006. Radiation-induced transgenerational alterations in genome stability and DNA damage. Oncogene 25: 7336–7342.

- Cologne J. B., Preston D. L., 2000. Longevity of atomic-bomb survivors. Lancet 356: 303–307.

- Douple E. B., Mabuchi K., Cullings H. M., Preston D. L., Kodama K., et al., 2011. Long-term radiation-related health effects in a unique human population: lessons learned from the atomic bomb survivors of Hiroshima and Nagasaki. Disaster Med. Public Health Prep. 5(Suppl 1): S122–S133.

- Grant E. J., Furukawa K., Sakata R., Sugiyama H., Sadakane A., et al., 2015. Risk of death among children of atomic bomb survivors after 62 years of follow-up: a cohort study. Lancet Oncol. 16: 1316–1323.

- Hasegawa A., Tanigawa K., Ohtsuru A., Yabe H., Maeda M., et al., 2015. Health effects of radiation and other health problems in the aftermath of nuclear accidents, with an emphasis on Fukushima. Lancet 386: 479–488.

- Kodaira M., Ryo H., Kamada N., Furukawa K., Takahashi N., et al., 2010. No evidence of increased mutation rates at microsatellite loci in offspring of A-bomb survivors. Radiat. Res. 173: 205–213.

- Leuraud K., Richardson D. B., Cardis E., Daniels R. D., Gillies M., et al., 2015. Ionising radiation and risk of death from leukaemia and lymphoma in radiation-monitored workers (INWORKS): an international cohort study. Lancet Haematol. 2: e276–e281.

- Neel J. V., Schull W. J., 1991. The Children of Atomic Bomb Survivors: A Genetic Study. National Academy Press, Washington, DC.

- Notzon F. C., Komarov Y. M., Ermakov S. P., Sempos C. T., Marks J. S., et al., 1998. Causes of declining life expectancy in Russia. JAMA 279: 793–800.

- Ozasa K., Shimizu Y., Suyama A., Kasagi F., Soda M., et al., 2012. Studies of the mortality of atomic bomb survivors, Report 14, 1950–2003: an overview of cancer and noncancer diseases. Radiat. Res. 177: 229–243.

- Perko T., 2014. Radiation risk perception: a discrepancy between the experts and the general population. J. Environ. Radioact. 133: 86–91.

- Preston D. L., Pierce D. A., Shimizu Y., Cullings H. M., Fujita S., et al., 2004. Effect of recent changes in atomic bomb survivor dosimetry on cancer mortality risk estimates. Radiat. Res. 162: 377–389.

- Preston D. L., Ron E., Tokuoka S., Funamoto S., Nishi N., et al., 2007. Solid cancer incidence in atomic bomb survivors: 1958–1998. Radiat. Res. 168: 1–64.

- Roach J. C., Glusman G., Smit A. F., Huff C. D., Hubley R., et al., 2010. Analysis of genetic inheritance in a family quartet by whole-genome sequencing. Science 328: 636–639.

- RERF, Radiation Effects Research Foundation, 2014. A brief description. Available at: http://www.rerf.jp/shared/briefdescript/briefdescript_e.pdf. Accessed: June 14, 2016.

- Richardson D. B., Cardis E., Daniels R. D., Gillies M., O’Hagan J. A., et al., 2015. Risk of cancer from occupational exposure to ionising radiation: retrospective cohort study of workers in France, the United Kingdom, and the United States (INWORKS). BMJ 351: h5359.

- Ropeik D., 2013. Fear vs. Radiation: The Mismatch. The New York Times, Oct. 21, 2013. Available at: http://www.nytimes.com/2013/10/22/opinion/fear-vs-radiation-the-mismatch.html. Accessed: May 17, 2016.

- Sawada S., 2007. Cover-up of the effects of internal exposure by residual radiation from the atomic bombing of Hiroshima and Nagasaki. Med. Confl. Surviv. 23: 58–74.

- Tsubokura M., Gilmour S., Takahashi K., Oikawa T., Kanazawa Y., 2012. Internal radiation exposure after the Fukushima nuclear power plant disaster. JAMA 308: 669–670.

- Williams D., 2008. Radiation carcinogenesis: lessons from Chernobyl. Oncogene 27(Suppl 2): S9–S18.

- Yablokov A. V., 2009. Mortality after the Chernobyl catastrophe. Ann. N. Y. Acad. Sci. 1181: 192–216.