Abstract

Background

Video-based noninvasive eye trackers are an extremely useful tool for many areas of research. Many open-source eye trackers are available, but current open-source systems are not designed to track eye movements with the temporal resolution required to investigate the mechanisms of oculomotor behavior. Commercial systems are available, but they rely on closed-source hardware and software and are relatively expensive, which limits their widespread use.

New Method

Here we present Oculomatic, an open-source software and modular hardware solution to eye tracking for use in humans and non-human primates.

Results

Oculomatic features high temporal resolution (up to 600 Hz), real-time eye tracking with high spatial accuracy (< 0.5°), and low system latency (~1.8 ms, SD 0.32 ms) at relatively low cost.

Comparison with Existing Method(s)

Oculomatic compares favorably to our existing scleral search-coil system while being fully noninvasive.

Conclusions

We propose that Oculomatic can support a wide range of research into the properties and neural mechanisms of oculomotor behavior.

Keywords: Eye Tracking, Search Coil, Open-Source, Non-human primate, Monkey, Rhesus

Introduction

Studies ranging from basic visual psychophysics to complex social interactions require low-latency (less than 10 ms) measurements of eye position. Precise measurement of eye position was pioneered with the scleral search coil technique, in which a small coil of wire is embedded within a contact lens or implanted directly into the sclera (Robinson 1963; Judge et al. 1980). When the coil is placed within two magnetic fields in quadrature phase, the fields induce a current in it from which the current eye position can be reconstructed. Because this system remains entirely analog until the signal is digitized, the sampling frequency (> 500 Hz) is limited only by the analog-to-digital conversion and by the encoding frequency of the magnetic fields (30 kHz). Similarly, the accuracy (≪ 1°) of scleral search coil systems is limited only by their internal signal-to-noise ratio. Circuit diagrams of scleral search coil systems are easy to obtain; as a result, most systems are nearly equivalent and the data they produce are highly replicable. While scleral search coils provide good temporal resolution, spatial accuracy, and replicability, their primary drawback is the invasiveness of the procedure. In human studies, a potentially uncomfortable contact lens is used and discarded between subjects. In non-human primates (NHPs), chronic implantation of a search coil within the sclera requires surgical placement under anesthesia (Judge et al. 1980). Because the subcutaneous wires meet the connector at the bone cement that holds it, wire breakage at this interface is a common issue that often requires additional surgery. Additionally, the strong magnetic fields required for search coils can introduce noise into modern electrophysiological data acquisition systems, which complicates subsequent data analysis.
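
The quadrature principle can be illustrated with an idealized numerical sketch. It assumes, as a simplification, that the horizontal field contributes a component proportional to sin(ωt) and the vertical field a component proportional to cos(ωt), each with an amplitude proportional to the sine of the eye's rotation about the corresponding axis. Only the 30 kHz carrier comes from the text; the unit gain and the 1.2 MHz simulation rate are hypothetical. Phase-sensitive (lock-in) demodulation then separates both components from a single coil signal.

```python
import math


def coil_signal(h_deg, v_deg, gain=1.0, freq_hz=30_000.0, fs_hz=1_200_000.0, n=4000):
    """Idealized coil voltage: horizontal field on sin(wt), vertical field on cos(wt).

    Assumes each induced amplitude is gain * sin(rotation angle about that axis).
    """
    a = gain * math.sin(math.radians(h_deg))
    b = gain * math.sin(math.radians(v_deg))
    w = 2 * math.pi * freq_hz
    return [a * math.sin(w * k / fs_hz) + b * math.cos(w * k / fs_hz) for k in range(n)]


def demodulate(samples, gain=1.0, freq_hz=30_000.0, fs_hz=1_200_000.0):
    """Lock-in detection; assumes the samples span an integer number of carrier periods."""
    w = 2 * math.pi * freq_hz
    n = len(samples)
    a = 2 * sum(s * math.sin(w * k / fs_hz) for k, s in enumerate(samples)) / n
    b = 2 * sum(s * math.cos(w * k / fs_hz) for k, s in enumerate(samples)) / n
    return math.degrees(math.asin(a / gain)), math.degrees(math.asin(b / gain))
```

For example, `demodulate(coil_signal(10.0, -5.0))` returns approximately `(10.0, -5.0)`: multiplying by each reference and averaging over whole periods cancels the other, orthogonal component.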

As a non-invasive alternative to scleral search coils, a growing number of studies over the last 20 years have turned to video-based eye tracking. Historically, this method focused on remote (not head-mounted) eye tracking and was limited to expensive commercial products because of its demanding hardware requirements. Nevertheless, because it is fully non-invasive and easily deployed, video-based eye tracking has increasingly become the primary tool for studying human and NHP eye movements. Video-based trackers can also measure other ocular parameters such as pupil size and torsion (although the gold standard for torsion is still a three-dimensional eye coil), making them even more versatile. A general requirement for optical methods is fast imaging sensors and efficient algorithms that permit sampling above 200 Hz. Over the past 10 years, commercial products have become faster (up to 2000 Hz) and more accurate (< 0.5°), but unfortunately not more accessible in terms of price or transparency. The open-source and computer science communities have invested a great deal in developing inexpensive, webcam-based, head-mounted eye trackers for accessibility and marketing research, but such approaches have not been applied to precise, psychophysics-grade eye trackers. One likely reason is that there is little need for them: in most applications, sampling rates of 120 Hz are sufficient, since the vast majority of saccades last 20–200 ms depending on their amplitude (Bahill et al. 1975; Baloh et al. 1975). Recent studies comparing scleral eye coil systems with modern commercial optical systems demonstrate the benefits of optical methods (Kimmel et al. 2012).
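
The sampling-rate argument reduces to simple arithmetic: the number of samples that fall within a saccade is its duration multiplied by the sampling rate. The sketch below (the function name is illustrative) shows that a 20 ms saccade spans about 2.4 samples at 120 Hz but 12 samples at 600 Hz, which suggests why 120 Hz suffices to detect most saccades while resolving the time course of short saccades benefits from higher rates.

```python
def samples_per_saccade(duration_ms: float, rate_hz: float) -> float:
    """Expected number of samples within a saccade of the given duration."""
    return duration_ms / 1000.0 * rate_hz


if __name__ == "__main__":
    for rate in (120, 240, 600):
        counts = [round(samples_per_saccade(d, rate), 1) for d in (20, 50, 200)]
        print(f"{rate} Hz: {counts} samples for 20, 50 and 200 ms saccades")
```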

Given the advantages of optical eye trackers, we believe that there is an immediate need for open-source eye tracking techniques that can be used to study human and NHP eye movements in a psychophysical research environment. Here, we introduce Oculomatic, open-source eye tracking software that can be used with affordable, off-the-shelf machine vision imaging sensors. In developing Oculomatic, we used commercial off-the-shelf components to reduce the overall cost of the final system and allow wide distribution. Because both the software and the hardware recommendations are transparent, users can easily adapt the setup to their particular needs and applications; we focused on our primary need, namely tracking the eye movements of head-fixed NHPs (Macaca mulatta). In designing the system, we evaluated multiple existing eye tracking algorithms (Swirski et al. 2012; Kassner et al. 2014; Javadi et al. 2015) for their ability to accurately track pupil location in NHPs and for their computational burden. Our final solution uses binary image moments, an approach that is robust, easily modified to specific needs, and can run on affordable off-the-shelf hardware without relying on specialized implementations on embedded systems. The final system and software can be quickly acquired and easily set up using a simple executable, and cost less than $1000 at the time of publication.

Methods and Materials

Hardware

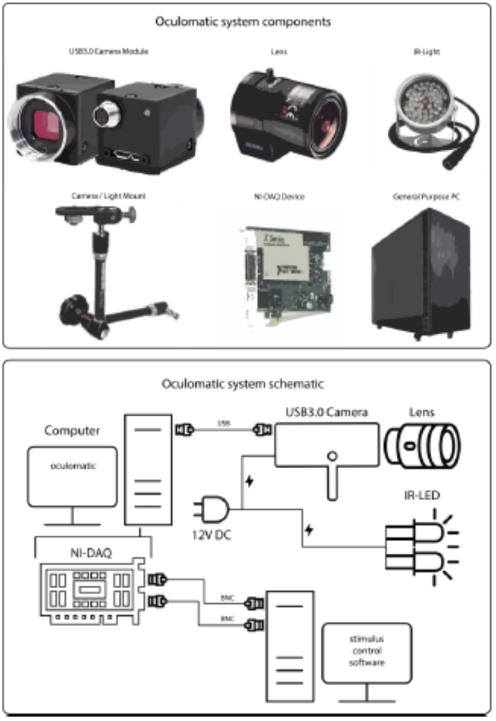

Oculomatic is a complete eye tracking system comprising real-time tracking software and recommendations for off-the-shelf hardware. The hardware can be adapted to the user's needs. The required hardware consists of the following elements: camera module, lens, IR light source, mounting solution, data acquisition device, and a general-purpose PC (Figure 1). Additional detailed resources can be found in the supplementary materials.

Figure 1.

Oculomatic main system components (top) excluding cables and PC monitor. The bottom describes the connectivity of all system components.

Most eye tracking solutions for NHPs require the eye signals to be integrated within the electrophysiological signal chain. This integration is fairly simple for scleral eye coil systems, since all computations and filtering are performed on analog signals and the resulting horizontal and vertical eye positions are represented as voltages; the experimenter can adjust these voltages by setting the DC gains and offsets independently. In Oculomatic we have kept this traditional approach. The digital x and y pupil positions are normalized to the image sensor size, and the experimenter adjusts the gain. The voltages are centered by pressing a button while the subject fixates the x, y = 0 position on the screen. Voltages are output through a National Instruments DAQ card using the proprietary NI-DAQmx library. Any National Instruments card that offers two or more analog outputs with a resolution of 10 bits or higher should be sufficient for use with Oculomatic. We recommend the low-cost NI USB-6001 DAQ, since it requires no additional breakout board. A low-cost breakout circuit board is available for PCI and PCIe NI-DAQ boards; its schematics (EAGLE files) can be downloaded from our Github repository (the link to the repository can be found in the additional information). We currently recommend a Windows operating system, since it is the only OS that fully supports NI-DAQ adapters. We have a prototype version of Oculomatic working under Linux using the comedi library (comedi.org); however, the DAQ adapters supported by comedi are mostly older legacy devices.
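
The gain-and-offset mapping described above can be sketched as follows. This is not Oculomatic's source: the function names, the use of the normalized position at the moment the centering button is pressed as the offset, and the ±10 V output range are assumptions for illustration. The helper `dac_step` shows the voltage resolution of an ideal 10-bit output over that assumed range (about 19.5 mV per step).

```python
def capture_offset(norm_pos: float) -> float:
    """Offset stored when the centering button is pressed while the subject fixates (0, 0)."""
    return norm_pos


def to_voltage(norm_pos: float, gain: float, offset: float,
               v_min: float = -10.0, v_max: float = 10.0) -> float:
    """Map a normalized pupil coordinate (0..1 across the sensor) to an output voltage.

    gain is in volts per unit of normalized position; the result is clipped to the
    assumed analog output range of the DAQ.
    """
    v = gain * (norm_pos - offset)
    return max(v_min, min(v_max, v))


def dac_step(v_min: float = -10.0, v_max: float = 10.0, bits: int = 10) -> float:
    """Smallest voltage increment of an ideal DAC with the given resolution."""
    return (v_max - v_min) / 2 ** bits
```

For example, with a gain of 20 V per unit of normalized position, a pupil displaced by 0.1 of the sensor width from the centered position produces 2 V; the same calculation is applied independently to the x and y channels.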

Computers running Oculomatic should meet the following minimum specifications: a 4th-generation (or newer) Intel Core i5/i7 processor or equivalent, 8 GB of RAM, and a native USB 3.0 controller to connect the camera module. Older computers may be equipped with third-party USB controllers that can cause compatibility issues (for reference, consult the Point Grey website: https://www.ptgrey.com/). If the required sampling rate is below 300 Hz, most modern laptops will suffice. On a modern Core i7, we were able to record neural data while running Oculomatic, without any interference or decrease in temporal resolution, using the PCI interface. We caution against using the same USB host controller for both electrophysiology and Oculomatic. If the PC has only one controller and high-channel-count electrophysiological signals are acquired through it, we urge the user to purchase an additional USB 3.0 PCIe card.

Supported image sensors can be obtained from Point Grey Research (www.ptgrey.com). We have tested three different monochrome modules without infrared (IR) cut-off filters (see Table 1).

Table 1. Recommended Point Grey sensor modules (pricing as of March 2016).

| Part number | FPS | Resolution (pixels) | Price |
|---|---|---|---|
| CM3-U3-13Y3M-CS | 590 | 320×240 | $350 |
| FL3-U3-13Y3M-C | 600 | 320×240 | $590 |
| FL3-U3-13SM2-CS | 240 | 640×480 | $500 |
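
To put the sensor modes in Table 1 in perspective, the uncompressed data rate is width × height × bytes per pixel × frame rate. The sketch assumes 8-bit monochrome pixels (1 byte each) and ignores protocol overhead; under that assumption each mode needs roughly 45 to 74 MB/s, so a USB 3.0 link that negotiates reduced bandwidth, or that is shared with other high-throughput devices, can plausibly become a bottleneck.

```python
def raw_bandwidth_mb_s(width: int, height: int, fps: float, bytes_per_pixel: int = 1) -> float:
    """Uncompressed image data rate in megabytes per second (1 MB = 10**6 bytes)."""
    return width * height * bytes_per_pixel * fps / 1e6


# Sensor modes from Table 1, assuming 8-bit monochrome output.
MODES = [
    ("CM3-U3-13Y3M-CS", 320, 240, 590),
    ("FL3-U3-13Y3M-C", 320, 240, 600),
    ("FL3-U3-13SM2-CS", 640, 480, 240),
]

if __name__ == "__main__":
    for name, w, h, fps in MODES:
        print(f"{name}: {raw_bandwidth_mb_s(w, h, fps):.1f} MB/s")
```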

The lens should be chosen according to the requirements of each setup. C-mount lenses are more versatile, since they can be mounted on both C- and CS-mount camera modules (on CS-mount cameras with a 5 mm adapter ring), whereas CS-mount lenses cannot be mounted on C-mount cameras. We recommend a fast (light-sensitive) lens with an f-number of 0.8 to 1.4, such as the Fujinon YV10X5HR4A-2 (CS-mount), or a fixed-focal-length lens such as the Tamron 23FM25SP (C-mount).

IR illumination systems operating at either 850 nm (faintly visible) or 920 nm (invisible) can be purchased from many online vendors. We recommend an inexpensive (< $20) light source driven by a 12 V DC power supply, such as those available on amazon.com (e.g., Crazy Cart 48-LED CCTV).

As a mounting solution, we recommend using the Manfrotto magic arm (SKU244) with mounting brackets in both unobstructed and hot mirror setups. Manfrotto mounting arms allow flexible placement with many degrees of freedom and are widely available.

Software and Algorithm

The Oculomatic software tracks eye position within each acquired image frame at the frame rate dictated by the image sensor. Eye position image coordinates are then transformed into voltages according to user-defined parameters and sent to the behavioral control software via a National Instruments DAQ card or board.

To facilitate fast cross-platform development and deployment, we use the openFrameworks (http://openframeworks.cc/) open-source C++ toolkit together with the OpenCV (http://opencv.org/) and Boost (http://www.boost.org/) libraries. All of these libraries and toolkits are cross-platform, freely available, and supported by their communities.

To quantify gaze position, we employ a standard contour-finding algorithm (Suzuki and Abe 1985) that extracts the contours of a thresholded input image, and we then compute the image moments of the selected contour to find its centroid. In our experience, ellipse-fitting algorithms (Swirski et al. 2012; Javadi et al. 2015) as well as Hough circle transforms tend to perform poorly on NHP eyes, especially when the pupil is dilated and partially occluded by the eyelid. Contour finders and their associated image moments remain functional despite partial occlusion.

The procedure starts with the raw image from the image sensor, which is thresholded at a user-defined value (the pupil threshold) to yield a binary image in which the pupil is a white disk within a black surround. This binary image is smoothed with a 2×2-pixel 2D Gaussian filter. From the smoothed binary image, we extract the largest boundary (selected according to the user-defined criteria below) using a previously described border-following algorithm (Suzuki and Abe 1985). The boundary is represented as a connected sequence of 2D points separating 1-pixels (pupil) from 0-pixels (background). Importantly, the implementation keeps only outermost borders and ignores borders nested inside another border. In practice, this means that borders inside the pupil border (for example, from the IR reflection) are ignored.
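
A simplified, dependency-free sketch of this stage follows. It is not the Oculomatic implementation: it omits the Gaussian smoothing and, instead of Suzuki and Abe border following, it fills enclosed holes (such as the IR reflection inside the pupil) and keeps the largest 8-connected blob, which approximates the effect of retaining only outermost borders. The image is a plain list of rows of intensities, and all function names are illustrative.

```python
from collections import deque


def threshold(img, t):
    """Binary image: 1 where the (dark) pupil is below threshold t, 0 elsewhere."""
    return [[1 if p < t else 0 for p in row] for row in img]


def fill_holes(b):
    """Set 0-regions not connected to the image border (holes) to 1."""
    h, w = len(b), len(b[0])
    outside = [[False] * w for _ in range(h)]
    q = deque()
    for r in range(h):
        for c in range(w):
            if (r in (0, h - 1) or c in (0, w - 1)) and b[r][c] == 0:
                outside[r][c] = True
                q.append((r, c))
    while q:  # 4-connected flood fill of the background from the border
        r, c = q.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not outside[nr][nc] and b[nr][nc] == 0:
                outside[nr][nc] = True
                q.append((nr, nc))
    return [[0 if outside[r][c] else 1 for c in range(w)] for r in range(h)]


def largest_component(b):
    """Pixels (row, col) of the largest 8-connected region of 1-pixels."""
    h, w = len(b), len(b[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for r0 in range(h):
        for c0 in range(w):
            if not b[r0][c0] or seen[r0][c0]:
                continue
            comp, q = [], deque([(r0, c0)])
            seen[r0][c0] = True
            while q:
                r, c = q.popleft()
                comp.append((r, c))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = r + dr, c + dc
                        if 0 <= nr < h and 0 <= nc < w and b[nr][nc] and not seen[nr][nc]:
                            seen[nr][nc] = True
                            q.append((nr, nc))
            if len(comp) > len(best):
                best = comp
    return best


def centroid(pixels):
    """Centroid (x, y) from the 0th- and 1st-order moments of a pixel set."""
    m00 = len(pixels)
    return sum(c for _, c in pixels) / m00, sum(r for r, _ in pixels) / m00
```

Filling the hole before computing moments matters when the reflection sits off-center: without it, the missing pixels would pull the centroid away from the true pupil center.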

To reduce errors in centroid estimation, Oculomatic provides the following user-defined parameters to filter candidate contours: area, circularity, convexity, and inertia. Contour area must lie between a minimum (inclusive) and a maximum (exclusive) value. Circularity, defined as 4π·area/perimeter², must lie between 0 and a user-defined maximum. Inertia describes how elongated (elliptical) the shape may be, between 0 and a user-defined maximum (1 being a perfect circle). Convexity is the ratio of the contour area to the area of its convex hull, the tightest convex shape that completely encloses the contour. The user-defined convexity parameter allows a tracking solution to be obtained even when the IR reflection borders (overlaps) the pupil edge. If a matching contour is found, its 0th- and 1st-order image moments, which give the area and the centroid, are computed using Green's theorem (Riemann 1876) and scaled to the analog outputs. If no matching contour is found in the current frame, the last matching contour is used. The same procedure can simultaneously track the IR reflection. If both the pupil centroid and the IR centroid are used, the vector between the two points is output. This is useful in human subject applications where a small but noticeable degree of head motion is expected (e.g., when a chin rest is used).
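
The contour filters and moments described above can be sketched for a contour given as a closed polygon of (x, y) tuples. This is an illustrative sketch rather than Oculomatic's code: it computes area, centroid, and second-order central moments with Green's theorem (shoelace-type sums), then derives circularity, an inertia ratio (smaller over larger eigenvalue of the second-moment matrix, 1 for a circle), and convexity against a monotone-chain convex hull. The `accept` ranges follow the text, except that treating convexity as a lower bound is an assumption.

```python
import math


def polygon_moments(pts):
    """Area, centroid and central second moments of a closed polygon (Green's theorem)."""
    a = sx = sy = sxx = syy = sxy = 0.0
    n = len(pts)
    for i in range(n):
        x0, y0 = pts[i]
        x1, y1 = pts[(i + 1) % n]
        c = x0 * y1 - x1 * y0  # cross product of consecutive vertices
        a += c
        sx += (x0 + x1) * c
        sy += (y0 + y1) * c
        sxx += (x0 * x0 + x0 * x1 + x1 * x1) * c
        syy += (y0 * y0 + y0 * y1 + y1 * y1) * c
        sxy += (x0 * y1 + 2 * x0 * y0 + 2 * x1 * y1 + x1 * y0) * c
    a /= 2.0  # signed area: positive for counter-clockwise vertex order
    cx, cy = sx / (6.0 * a), sy / (6.0 * a)
    mu20 = sxx / (12.0 * a) - cx * cx
    mu02 = syy / (12.0 * a) - cy * cy
    mu11 = sxy / (24.0 * a) - cx * cy
    return abs(a), (cx, cy), (mu20, mu02, mu11)


def perimeter(pts):
    return sum(math.dist(pts[i], pts[(i + 1) % len(pts)]) for i in range(len(pts)))


def convex_hull(pts):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(pts))
    if len(pts) < 3:
        return pts

    def cross(o, p, q):
        return (p[0] - o[0]) * (q[1] - o[1]) - (p[1] - o[1]) * (q[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def shape_metrics(pts):
    area, centroid, (mu20, mu02, mu11) = polygon_moments(pts)
    half_sum = (mu20 + mu02) / 2.0
    half_diff = math.hypot((mu20 - mu02) / 2.0, mu11)
    return {
        "area": area,
        "centroid": centroid,
        "circularity": 4.0 * math.pi * area / perimeter(pts) ** 2,
        "inertia": (half_sum - half_diff) / (half_sum + half_diff),
        "convexity": area / polygon_moments(convex_hull(pts))[0],
    }


def accept(m, min_area, max_area, max_circularity, max_inertia, min_convexity):
    """Hypothetical filter mirroring the user-defined ranges described in the text."""
    return (min_area <= m["area"] < max_area
            and 0.0 <= m["circularity"] <= max_circularity
            and 0.0 <= m["inertia"] <= max_inertia
            and m["convexity"] >= min_convexity)
```

In use, the centroid of the accepted pupil contour (and, optionally, the vector between it and the IR-reflection centroid) would be scaled to the analog outputs, with the previous centroid reused whenever no contour passes `accept`.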

Output signals are either left unfiltered or optionally filtered using a heuristic algorithm (Stampe 1993). Commercial eye trackers often include some form of signal smoothing to obtain more reliable tracking in situations where the detection algorithm fails. We opted for an algorithm that does not alter the signal significantly (no direct smoothing) yet eliminates sudden erroneous signal changes using simple heuristics. In head-restrained NHPs, we found no significant difference in accuracy with or without the filter. Under suboptimal illumination, however, the filter limits output spikes generated by false centroid estimates.
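
As an illustration of this kind of heuristic, the sketch below implements a single-sample spike remover in the spirit of Stampe (1993): a sample that lies above both of its neighbours, or below both, is replaced by the value of the closer neighbour. It is a simplified sketch, not Oculomatic's filter. Because it needs the next sample before correcting the current one, it would add a one-sample delay in real time, and it does not remove artifacts wider than one sample.

```python
def remove_single_sample_spikes(x):
    """Replace isolated local extrema with the value of the closer neighbour.

    Works left to right, so each comparison uses the already-corrected previous sample.
    """
    y = list(x)
    for i in range(1, len(y) - 1):
        prev, cur, nxt = y[i - 1], y[i], y[i + 1]
        if (cur > prev and cur > nxt) or (cur < prev and cur < nxt):
            y[i] = prev if abs(cur - prev) <= abs(cur - nxt) else nxt
    return y
```

Steps such as saccades pass through unchanged, because a sample on a monotonic ramp or a plateau is never strictly above or below both neighbours.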

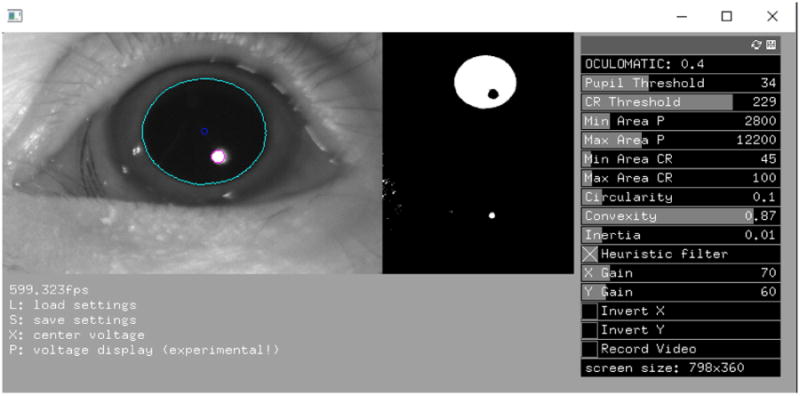

Oculomatic is deployed as a Windows executable (source code available on Github) with a straightforward user interface (Figure 2). To ensure speed and consistency, Oculomatic is a single-threaded procedural program, which also allows us to easily assess round-trip latency and tracking speed.

Figure 2.

Oculomatic graphical user interface, running at 600 Hz. Left image: the original camera image overlaid with the estimated pupil location of an NHP. Right image: the thresholded pupil and IR reflection binary images, which help the user determine the correct thresholds. The right side of the window shows Oculomatic's user control panel. Settings can be saved to human-readable XML files and loaded using keyboard commands.

Validation and accuracy measurements

To validate the accuracy of Oculomatic, we integrated the software and hardware into ongoing NHP research and compared the obtained eye signals with those from scleral search coils. A qualitative comparison with a commercial tracker can be found in Supplementary Figure 1. Software latencies were measured using the Boost high-resolution timer. Full-system round-trip latencies were measured by driving two bright white light-emitting diodes (LEDs) with National Instruments DAQ cards and measuring the delay between each switch from one LED to the other and the corresponding change in Oculomatic's output. The LEDs were placed within the field of view (FOV) of the image sensor approximately 150 pixels apart and switched at 30 Hz. Latency was defined as the time between an LED switch and the corresponding switch in Oculomatic's analog output signal, as detected by the same National Instruments DAQ board running on the same system clock. This measure reflects the fixed system latency (i.e., when data become available) and is distinct from the temporal resolution set by the frame rate.
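
The latency analysis can be sketched as pairing each commanded LED switch with the next switch in Oculomatic's analog output, both sampled on the same DAQ clock. The threshold levels, the integer-microsecond time base, and the function names are assumptions for illustration; a real analysis would also need to guard against missed or spurious edges, which this simple pairing does not handle.

```python
import statistics


def crossings(trace, times, level):
    """Times at which the sampled trace crosses `level` in either direction."""
    return [times[i] for i in range(1, len(trace))
            if (trace[i - 1] < level) != (trace[i] < level)]


def latency_stats(cmd, resp, times, cmd_level, resp_level):
    """Mean and SD of the delay from each command edge to the next response edge."""
    cmd_t = crossings(cmd, times, cmd_level)
    resp_t = crossings(resp, times, resp_level)
    delays, j = [], 0
    for tc in cmd_t:
        while j < len(resp_t) and resp_t[j] < tc:
            j += 1
        if j == len(resp_t):
            break
        delays.append(resp_t[j] - tc)
        j += 1
    return statistics.mean(delays), statistics.stdev(delays)
```

For example, with a 10 kHz time base in microseconds, command edges at 50.0, 100.0 and 150.0 ms followed by output edges 1.8, 2.0 and 1.9 ms later yield a mean of 1900 µs and an SD of 100 µs.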

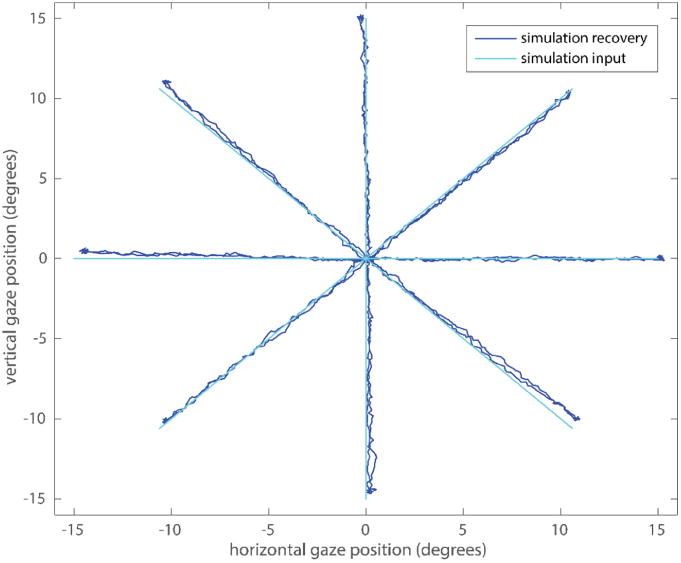

In an additional validation step, we simulated saccadic eye movement trajectories using a synthetic model of the human eye in Blender, based on previous work (Swirski and Dodgson 2014; Holmberg 2012). This model simulates the rotation of the eye as well as the displacement of surrounding features such as the eyelids, eyelashes, and surrounding skin. Reflections, refraction, shadows, and depth-of-field blur were rendered in a physically correct manner. An example image of the synthetic eye can be found in Supplementary Figure 2. We then simulated 9600 frames in which the eye shifted gaze to virtual targets evenly spaced every 25° at 15° eccentricity. The frames were compressed into a playable movie using the ffmpeg library and played on a computer screen at 144 fps. Oculomatic was set up to track the virtual eye in a fully dark room.

Subjects and tasks

Three male rhesus monkeys (Macaca mulatta) were used as subjects (Monkey H, 11.3 kg; Monkey R, 7.5 kg; Monkey M, 10.3 kg). All experimental procedures were performed in accordance with the United States Public Health Service's Guide for the Care and Use of Laboratory Animals and were approved by the New York University Institutional Use and Care Committee. Experiments were conducted in a dimly lit, sound-attenuated room using standard techniques. Briefly, the monkeys were head-restrained and seated in a Plexiglas primate chair that permitted arm and leg movements. Visual stimuli were presented on an LCD display placed 67 cm (Monkey H) or 34 cm (Monkeys R and M) in front of the animal. Monkey H's eye movements were recorded with an unobstructed frontal camera at 600 Hz (320 × 240 pixels), whereas the eye movements of Monkeys R and M were sampled at 240 Hz using a 45° hot mirror setup (480 × 320 pixels). Monkey H's eye movements were also recorded using the scleral search coil technique, with horizontal and vertical eye position sampled at 600 Hz using a quadrature phase detector (Riverbend Electronics). Visual stimulus presentation and water/juice reward delivery were controlled by integrated software and hardware systems running either MonkeyLogic (Asaad and Eskandar 2008a, 2008b; Asaad et al. 2013) (Monkey H) or custom LabVIEW software (Dean et al. 2012; Hagan et al. 2012) (Monkeys R and M).

For all analyses of behavioral eye tracking in our NHPs, all correctly performed trials were included. No online drift correction was performed and no trials were explicitly excluded. Correct trials required the monkey to acquire fixation within a defined window (a 4° radius around the fixation point and the target for Monkey H; a 5° radius for Monkeys R and M) and then make a saccade to a peripherally placed target within the same window.

Monkey H performed a simple two-target delayed saccade task in which two juice options of varying reward size were presented. After an initial fixation interval of 1300 ms, the monkey could initiate a saccade to either of the two 15° eccentric targets within 300 ms to receive the reward. The choice targets were presented 500 ms after fixation onset. We used this task, with its long fixation interval, to assess the fixation stability of the Oculomatic system. Gaze position was calibrated using the simple 5-point calibration routine implemented in MonkeyLogic. Transformations from pixel space into gaze space were performed online, and no recalibration was performed during acquisition. For this animal, we focused on the stability of Oculomatic during the fixation interval and report the following metrics. The first metric pools all trials and computes the variability of the eye signal, as its standard deviation, over the fixation interval. An ideal system used on an ideal participant would return a mean of 0 for both horizontal and vertical eye positions with a low standard deviation, and the variability should not change over time. As a second measure, we quantified the variability of the eye signal within single fixation trials. For an ideal observer, this measure should have a small spread and no bias toward any cardinal direction.
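
The two fixation-stability metrics can be sketched as follows, assuming each trial is an equal-length list of gaze samples (in degrees) for one axis. Reading the first metric as the across-trial mean and standard deviation in each time bin, as plotted in Figure 5, is our interpretation; `skip` is a hypothetical parameter for cropping the initial settling period (for example, 120 samples for 200 ms at 600 Hz).

```python
import statistics


def per_bin_stats(trials, skip=0):
    """Across-trial mean and SD of gaze position in each time bin (first metric)."""
    bins = list(zip(*(trial[skip:] for trial in trials)))
    return ([statistics.mean(b) for b in bins],
            [statistics.stdev(b) for b in bins])


def per_trial_sd(trials, skip=0):
    """Within-trial SD of each fixation epoch; its distribution is the second metric."""
    return [statistics.stdev(trial[skip:]) for trial in trials]
```

Running both functions separately on the horizontal and vertical traces allows the two axes to be compared for the directional bias discussed in the results.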

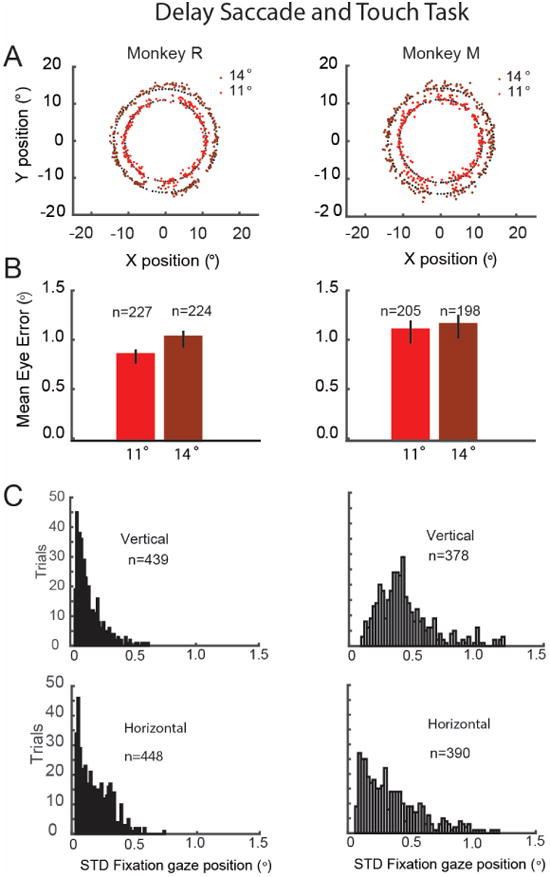

Monkeys R and M performed a delayed saccade and touch task (Dean et al. 2012), in which they made saccades to a red peripheral target while maintaining central touch. Reaches were made with either the left (Monkey R) or the right (Monkey M) arm to a touch-sensitive screen (ELO Touch Systems, CA). Visual stimuli were presented on an LCD display (Dell Computers, TX) placed behind the touchscreen. Eye movements were tracked with Oculomatic; the camera and light source were positioned above, and perpendicular to, a hot mirror angled at 45° (Edmund Optics). At the beginning of each trial, a yellow square was presented at the screen center, instructing the monkey to maintain fixation and touch for 500–800 ms. Subsequently, a peripheral target appeared at a random location on a circle at either 11° or 14° eccentricity. A 700–1000 ms delay period followed, during which the animal was required to withhold its response. After the delay period, the yellow fixation square turned gray, instructing the monkey to make a saccade alone to the peripheral target while maintaining central touch. The central fixation square measured 2°, with a 2° × 2° tolerance window for both the eye and the hand. The same window size was used for peripheral targets.

All task variables were controlled using LabVIEW. Behavioral data were digitized (12 bits at 10 kHz; National Instruments, TX) and continuously recorded to disk for further analysis (custom C and Matlab code). Gaze was calibrated with an initial offset and gain calibration for online task control, and later offline using an affine transformation.
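
The offline affine calibration can be sketched as an ordinary least-squares fit of gx = a0 + a1·x + a2·y (and likewise for gy) to calibration fixations with known target positions. This dependency-free version solves the 3×3 normal equations by Cramer's rule; it assumes at least three non-collinear calibration points, and the function names are illustrative. A library least-squares routine would be the more robust choice in practice.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve a 3x3 linear system a @ x = b by Cramer's rule."""
    d = det3(a)
    sol = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        sol.append(det3(m) / d)
    return sol


def fit_affine(raw, gaze):
    """Least-squares affine map from raw (x, y) to gaze (gx, gy) via normal equations."""
    rows = [(1.0, x, y) for x, y in raw]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for k in range(2):  # k = 0: horizontal gaze, k = 1: vertical gaze
        atb = [sum(r[i] * g[k] for r, g in zip(rows, gaze)) for i in range(3)]
        coeffs.append(solve3(ata, atb))
    return coeffs


def apply_affine(coeffs, x, y):
    return tuple(c[0] + c[1] * x + c[2] * y for c in coeffs)
```

Unlike independent horizontal and vertical gains and offsets, the cross terms (a2 for gx, and the x coefficient for gy) can absorb rotation and shear between the camera image and the screen.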

Results

We developed an open-source NHP eye tracking system that is reliable, accurate, and fast. Below, we present the relevant validation performance metrics of Oculomatic.

First, we report latency measurements for the software alone and for the full round trip through hardware and software. Software latency is the time Oculomatic takes to fetch an image from the host controller, process it, and send the resulting gaze position to the DAQ card for analog output. Round-trip latency is the delay between an event occurring in the real world and the registration of that event, after processing by Oculomatic, by an independent computer. These measurements are particularly important because the reliability of subsequent measurements depends on accurate, repeatable frame analysis, that is, on processing every incoming image frame in exactly the same amount of time. The FlyCapture SDK (software development kit) provided by Point Grey includes diagnostic routines that run directly on the camera module's FPGA (its internal processing hardware). Using these routines, we confirmed consistent frame delivery (no dropped frames) across our testing range of 240 Hz to 600 Hz. Higher frame rates with different sensors require further testing. Oculomatic also provides an FPS (frames per second) counter for quick assessment. We have identified two problems that can reduce frame rates. The first is a faulty negotiation between the USB 3.0 host controller and the camera module that results in lower bandwidth; it can be solved by connecting the camera directly to the motherboard USB controller rather than to external USB hubs (including the front USB 3.0 headers of computer cases). The second is a faulty camera register configuration, which can be solved by restarting the module. Tested camera configurations are provided on Github. In validating the system, we tried to adhere to the recommendations of the COGAIN association (Mulvey 2010), adapting the metrics to better account for NHP eye tracking in a head-fixed setup.

We evaluated the round-trip latency using two switching LEDs. We observed an average total system latency of ~1.8 ms (SD 0.32 ms), excluding frame time. Higher frame rate sensors could further reduce the total system latency in terms of data availability, but we have not been able to test our software at frame rates higher than those reported here.
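
Independently of the vendor diagnostics, frame delivery can also be checked from per-frame timestamps. The sketch below is a hypothetical helper, not part of Oculomatic or the FlyCapture SDK: it counts an inter-frame interval of roughly k nominal periods (k > 1) as k - 1 missing frames and reports the effective frame rate. The timestamps are assumed to be in seconds.

```python
def frame_report(timestamps_s, fps):
    """Return (dropped_frames, effective_fps) for a sequence of frame timestamps."""
    period = 1.0 / fps
    dropped = 0
    for t0, t1 in zip(timestamps_s, timestamps_s[1:]):
        k = round((t1 - t0) / period)  # interval length in nominal frame periods
        if k > 1:
            dropped += k - 1
    span = timestamps_s[-1] - timestamps_s[0]
    effective = (len(timestamps_s) - 1) / span if span > 0 else 0.0
    return dropped, effective
```

At 600 Hz, for example, a single missing frame shows up as one interval of about 3.33 ms instead of 1.67 ms.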

The spatial resolution of tracking depends on multiple factors, such as the size of the pupil on the sensor, the resolution of the sensor, and noise within the analog output stream. Because of these factors and the modular nature of our system, an objective, general measure of spatial accuracy is difficult, if not impossible, to provide. The measures of spatial resolution reported here serve as a reference for the accuracies to be expected when well-trained NHPs perform tasks such as ours.

The recovery of the simulated synthetic eye data is shown in Figure 3. The input and recovered eye positions match closely. The mean error is 0.06° (SD = 0.0181°) during fixation and 0.12° (SD = 0.091°) during saccades. One relatively large systematic offset of 0.4° can be observed at the endpoints of saccades in the 135° direction; it could potentially be reduced with a higher-order calibration routine (polynomials of order 3 or more).

Figure 3.

Synthetic eye simulation gaze position and recovery using Oculomatic.

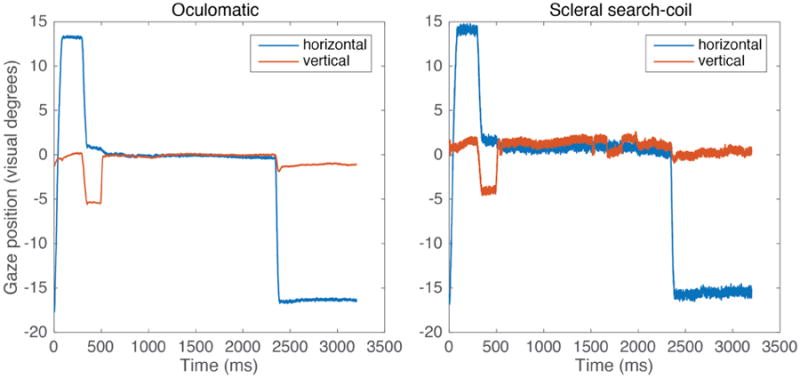

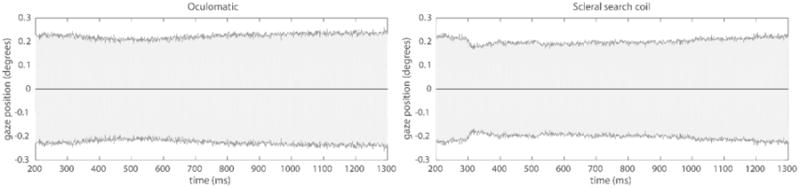

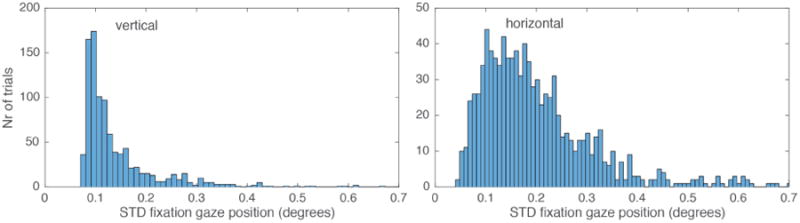

In Monkey H, we measured fixation stability during a 1300 ms fixation interval while the monkey performed a standard two-alternative choice task. These sessions used a front-facing camera operating at a sampling frequency of 600 Hz. An example single-trial trace for both video-based and scleral search coil eye tracking is shown in Figure 4. In a single session (1000 trials), we observed a gaze position accuracy (standard deviation of the eye signal across trials) of 0.2° (Figure 5). In comparison, gaze positions quantified with a scleral search coil exhibited an accuracy of 0.16°. Note that the results in Figures 4 and 5 come from independent measurements. The higher variability of the single-trial search coil trace in Figure 4 results from our experimental setup being unable to precisely calibrate two independent streams of analog data simultaneously. For Figure 5, data were first recorded using Oculomatic and subsequently using the eye coil system, so the calibration was more precise, leading to lower variability in the eye coil data. Horizontal gaze positions generally exhibited more variability than vertical ones (Figure 6). This higher horizontal variability is not a result of higher gains due to non-square image matrices (gains were kept constant); it is potentially an effect of the monkey anticipating targets at horizontal eccentricities in this task. Our synthetic eye simulations did not exhibit a bias toward any cardinal direction.

Figure 4.

Single-trial Oculomatic and scleral search coil gaze positions at 600 Hz. Monkey H performs a simple two-alternative forced choice task with eccentric 15° targets.

Figure 5.

Time-locked Oculomatic and scleral search coil fixation stability. The shaded error plot depicts the mean (black) and standard deviation (grey shading) across 1000 trials of the horizontal and vertical gaze position signals in each time bin during a 1300 ms fixation period at x = 0° and y = 0° (the first 200 ms are cropped to allow the animal to settle on the fixation point), for Oculomatic (left) and the scleral search coil (right).

Figure 6.

Oculomatic standard deviation of horizontal and vertical gaze position during fixation intervals averaged across single trial epochs.

For the tasks involving arm and eye control (Monkeys R and M), each monkey sat in an open chair, and eye movements were tracked with a hot mirror setup; together, these features allowed the monkeys to reach toward a touch screen while their eye movements were tracked. Figure 7A shows saccade endpoints relative to the targets. Mean saccade endpoint errors for both eccentricities were approximately 0.8°–1.2°, with a slight bias toward larger errors in vertical positions (Figure 7B). Mean fixation stability (Figure 7C) was comparable to that observed in Monkey H with the front-facing camera setup, although Monkey M exhibited larger errors. Overall, errors in the hot mirror setup were slightly higher than in the front-facing setup. Using a hot mirror in confined spaces limits illumination and can introduce additional IR reflections that act as a source of noise in the eye images. Additionally, because the image plane is angled (45°) with respect to the camera module, focus across the full field of view cannot be obtained without an additional prism or without angling the camera with respect to the hot mirror.
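
The endpoint error statistics summarized in Figure 7B (mean ± SEM of the error magnitude) can be computed as sketched below, assuming matched lists of saccade endpoints and target positions in degrees of visual angle. Splitting out the absolute horizontal and vertical components is one way to look for the vertical bias described above; the function names are illustrative.

```python
import math
import statistics


def mean_sem(values):
    """Mean and standard error of the mean."""
    return statistics.mean(values), statistics.stdev(values) / math.sqrt(len(values))


def endpoint_error_summary(endpoints, targets):
    """Mean and SEM of the Euclidean, horizontal and vertical endpoint errors (deg)."""
    magnitude = [math.dist(e, t) for e, t in zip(endpoints, targets)]
    horizontal = [abs(e[0] - t[0]) for e, t in zip(endpoints, targets)]
    vertical = [abs(e[1] - t[1]) for e, t in zip(endpoints, targets)]
    return {"magnitude": mean_sem(magnitude),
            "horizontal": mean_sem(horizontal),
            "vertical": mean_sem(vertical)}
```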

Figure 7.

Oculomatic performance during a delayed saccade and touch task. A. Targets (black dots) and saccade endpoints at 11° and 14° (red dots). B. Mean eye error magnitude for the endpoints depicted in A: Monkey R (11°: 0.82 ± 0.03° SEM; 14°: 1.00 ± 0.03°); Monkey M (11°: 1.07 ± 0.05°; 14°: 1.13 ± 0.05°). C. Histogram of gaze position variability during fixation along the horizontal and vertical axes (mean ± SEM): Monkey R (horizontal 0.20 ± 0.005; vertical 0.11 ± 0.004), Monkey M (horizontal 0.41 ± 0.01; vertical 0.49 ± 0.01).

In summary, our results demonstrate the feasibility of using an open-source, affordable, high-speed eye tracking system to measure non-human primate eye movements.

Discussion

We present an alternative for human and NHP eye tracking in frontal and hot-mirror camera setups based on open-source software combined with customizable hardware. To our knowledge, this is the first such project that allows researchers to acquire an eye tracking system for NHP performing at high-speed, high-accuracy and at a relatively low-cost. Oculomatic can be adjusted to fit many laboratory use cases and can be extended to accompany new needs and use cases. Our primary goal was to develop a cost-effective solution that rivals commercial systems and is open, transparent and modifiable. This enables the researcher to rapidly develop and modify hardware and software based on experimental findings. Many researchers have previously compared scleral search coil and video-based eye tracking favorably (Frens and van der Geest 2002; Smeets and Hooge 2003; Kimmel et al. 2012) and Oculomatic allowed us to easily extend our multiple search coil setups with video-based tracking. Other open-source eye tracking software and hardware solutions exist (Swirski et al. 2012; Kassner et al. 2014) and have features that go beyond what Oculomatic offers, such as scene cameras as well as free head motion. They are however either not fast enough (< 200Hz) to be applied to NHP work or are primarily aimed at fusing gaze and point of view information concurrently (a modality not required in head stabilized setups). In principle, many of these existing solutions should also work for NHP research using faster machine vision sensors. We have noticed however that more complex algorithms, especially ones with iterative optimization, can become computationally expensive at higher frame rates. Another drawback of these algorithms is that they require an entirely unobstructed view to track the pupil position. An unobstructed view often hard to achieve when studying NHP due to the prominent eyebrow ridge. 
Slightly retracting the eyebrows or increasing the luminance of the stimulus display to decrease pupil size can help alleviate pupil occlusion, but occlusion remains a general problem for video-based NHP eye tracking.
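To make the frame-rate argument concrete: when frames are processed one after another as they arrive, pupil detection for each frame has to finish before the next frame does, so the available time shrinks as 1/f. The short Python sketch below computes that budget. The 3 ms per-frame cost is a hypothetical figure chosen for illustration, not a measurement of any of the cited systems, and the sketch ignores pipelining across threads, which can raise throughput at the cost of added latency.

```python
def frame_budget_ms(frame_rate_hz: float) -> float:
    """Time available to process one frame, in milliseconds."""
    if frame_rate_hz <= 0:
        raise ValueError("frame rate must be positive")
    return 1000.0 / frame_rate_hz


def keeps_up(per_frame_cost_ms: float, frame_rate_hz: float) -> bool:
    """True if sequential, single-threaded processing at this cost keeps pace."""
    return per_frame_cost_ms <= frame_budget_ms(frame_rate_hz)


if __name__ == "__main__":
    for hz in (60, 200, 600):
        print(f"{hz:>4} Hz: {frame_budget_ms(hz):.2f} ms per frame")
    # Hypothetical detector needing 3 ms per frame (illustrative value only).
    print("3 ms detector at 200 Hz:", keeps_up(3.0, 200))
    print("3 ms detector at 600 Hz:", keeps_up(3.0, 600))
```

At 600 Hz the budget is roughly 1.67 ms per frame, compared with 5 ms at 200 Hz, so an algorithm that comfortably keeps up at 200 Hz can fall behind at 600 Hz.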

One current limitation of the Oculomatic system is its integration with the many available behavioral control software environments. Behavioral control systems that accept analog inputs (most common in electrophysiology) should work out of the box. At this time, Oculomatic does not include a full affine gaze position transformation; instead, it relies either on setting horizontal and vertical gains and offsets, as in scleral search coil setups, or on a transformation routine in the behavioral control software. Another limitation is light interference: Oculomatic works best in environments where infrared (IR) reflections are minimal.
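The difference between per-axis gains and offsets and a full affine transformation can be sketched as follows. This is an illustrative Python example, not Oculomatic code: the function names (`gain_offset`, `fit_affine`, `apply_affine`) and the calibration data are hypothetical. A gain/offset mapping scales and shifts each axis independently, whereas an affine map, fitted here by ordinary least squares to at least three non-collinear calibration targets, can also absorb rotation, shear and cross-talk between the horizontal and vertical axes of the camera image.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
AffineRows = Tuple[List[float], List[float]]


def gain_offset(p: Point, gx: float, gy: float, ox: float, oy: float) -> Point:
    """Per-axis mapping, analogous to search-coil gain and offset controls."""
    return (gx * p[0] + ox, gy * p[1] + oy)


def _solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy; inputs untouched
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("calibration points are collinear or degenerate")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def fit_affine(src: Sequence[Point], dst: Sequence[Point]) -> AffineRows:
    """Least-squares fit of dst ~ A [x, y, 1]^T; returns the two rows of the 2x3 A."""
    if len(src) != len(dst) or len(src) < 3:
        raise ValueError("need at least 3 matched point pairs")
    # Accumulate the normal equations (M^T M) w = M^T t, with rows of M = [x, y, 1].
    ata = [[0.0] * 3 for _ in range(3)]
    atu = [0.0] * 3
    atv = [0.0] * 3
    for (x, y), (u, v) in zip(src, dst):
        row = (x, y, 1.0)
        for i in range(3):
            atu[i] += row[i] * u
            atv[i] += row[i] * v
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    return _solve3(ata, atu), _solve3(ata, atv)


def apply_affine(rows: AffineRows, p: Point) -> Point:
    """Map a pupil position through the fitted affine transform."""
    (a, b, c), (d, e, f) = rows
    return (a * p[0] + b * p[1] + c, d * p[0] + e * p[1] + f)


if __name__ == "__main__":
    # Hypothetical calibration: pupil centres (camera pixels) at five targets,
    # with target positions (degrees) generated from a known affine map.
    pupil = [(320, 240), (420, 240), (220, 240), (320, 160), (320, 320)]
    targets = [(0.1 * x - 0.02 * y - 27.2, 0.01 * x + 0.12 * y - 32.0) for x, y in pupil]
    rows = fit_affine(pupil, targets)
    print(apply_affine(rows, (370, 200)))  # close to (5.8, -4.3)
```

With more than three targets, the least-squares fit averages fixation noise across calibration points; with exactly three non-collinear targets it passes through them exactly.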

Oculomatic hardware and software can be extended in a variety of ways. In the short term, we are working on integrating head tracking for use with animals that are not head-restrained, as well as dual eye tracking within the same camera field of view. Future work could add ZeroMQ (or plain TCP/IP) networking for communication with behavioral control software (Matlab, Python, C) as well as a built-in gaze position calibration routine. Additionally, all camera controls (currently accessed through the FlyCapture GUI) could be made directly accessible from within Oculomatic. Using affordable FPGAs to build a computer-independent, embedded eye tracking system for non-human primates could also yield substantial performance improvements and cost savings.
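As one illustration of the networking extension, gaze samples could be streamed as fixed-size binary records. The record layout below (`SAMPLE_FMT`, `GazeSample`, `pack_sample`, `unpack_samples`) is a hypothetical format assumed for this sketch, not an existing Oculomatic protocol. Because TCP delivers a byte stream without message boundaries, the receiver keeps any incomplete trailing bytes until the rest of the record arrives; ZeroMQ, by contrast, is message-oriented, so sending one record per message would make this bookkeeping unnecessary.

```python
import struct
from typing import List, NamedTuple, Tuple

# Hypothetical record layout (little-endian, no padding):
# uint64 sample index, float64 timestamp in seconds, float32 x, float32 y,
# uint8 validity flag (0 could mark, e.g., a lost pupil).
# This is an assumed format for illustration, not an Oculomatic protocol.
SAMPLE_FMT = "<QdffB"
SAMPLE_SIZE = struct.calcsize(SAMPLE_FMT)  # 8 + 8 + 4 + 4 + 1 = 25 bytes


class GazeSample(NamedTuple):
    index: int
    t: float
    x: float
    y: float
    valid: bool


def pack_sample(s: GazeSample) -> bytes:
    """Serialize one sample into a fixed-size record."""
    return struct.pack(SAMPLE_FMT, s.index, s.t, s.x, s.y, int(s.valid))


def unpack_samples(buf: bytes) -> Tuple[List[GazeSample], bytes]:
    """Decode all complete records in buf; return them plus any leftover bytes."""
    n = len(buf) // SAMPLE_SIZE
    samples = []
    for i in range(n):
        idx, t, x, y, v = struct.unpack_from(SAMPLE_FMT, buf, i * SAMPLE_SIZE)
        samples.append(GazeSample(idx, t, x, y, bool(v)))
    return samples, buf[n * SAMPLE_SIZE:]


if __name__ == "__main__":
    stream = pack_sample(GazeSample(1, 0.5, 1.25, -2.5, True)) + pack_sample(
        GazeSample(2, 0.501, 0.0, 3.0, False)
    )
    # Simulate TCP delivering the bytes in two arbitrary chunks.
    first, pending = unpack_samples(stream[:30])
    second, pending = unpack_samples(pending + stream[30:])
    print(first, second, len(pending))
```

Storing coordinates as float32 keeps each record small at the cost of precision beyond about seven significant digits, which is ample for gaze positions in degrees; the float64 timestamp avoids losing sub-millisecond resolution over long sessions.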

Supplementary Material

Highlights

Oculomatic is an easy-to-use, open-source eye tracking system with modular hardware for use in humans or non-human primates.

Oculomatic provides fast (up to 600 Hz), precise (< 0.5°) eye tracking at low system latency (∼1.8 ms).

Oculomatic integrates directly into the electrophysiological signal stream via analog outputs and can be used for humans as well as non-human primates.

Acknowledgments

The authors would like to thank an anonymous reviewer whose feedback substantially improved our manuscript and helped us strengthen many aspects of our findings.

Footnotes

Additional Information: The software and user manual are available from https://github.com/oculomatic

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Asaad WF, Eskandar EN. A flexible software tool for temporally-precise behavioral control in Matlab. J Neurosci Methods. 2008a;174(2):245–58. doi: 10.1016/j.jneumeth.2008.07.014.

- Asaad WF, Eskandar EN. Achieving behavioral control with millisecond resolution in a high-level programming environment. J Neurosci Methods. 2008b;173(2):235–40. doi: 10.1016/j.jneumeth.2008.06.003.

- Asaad WF, Santhanam N, McClellan S, Freedman DJ. High-performance execution of psychophysical tasks with complex visual stimuli in MATLAB. J Neurophysiol. 2013;109(1):249–60. doi: 10.1152/jn.00527.2012.

- Bahill AT, Clark MR, Stark L. The main sequence, a tool for studying human eye movements. Math Biosci. 1975;24:191–204.

- Baloh RW, Sills AW, Kumley WE, Honrubia V. Quantitative measurement of saccade amplitude, duration, and velocity. Neurology. 1975;25:1065–70. doi: 10.1212/wnl.25.11.1065.

- Dean HL, Hagan MA, Pesaran B. Only coherent spiking in posterior parietal cortex coordinates looking and reaching. Neuron. 2012;73(4):829–41. doi: 10.1016/j.neuron.2011.12.035.

- Frens MA, van der Geest JN. Scleral search coils influence saccade dynamics. J Neurophysiol. 2002;88:692–8. doi: 10.1152/jn.00457.2001.

- Hagan MA, Dean HL, Pesaran B. Spike-field activity in parietal area LIP during coordinated reach and saccade movements. J Neurophysiol. 2012;107(5):1275–90. doi: 10.1152/jn.00867.2011.

- Holmberg N. Advance head rig. 2012. Available from: http://www.blendswap.com/blends/view/48717.

- Javadi AH, Hakimi Z, Barati M, Walsh V, Tcheang L. SET: a pupil detection method using sinusoidal approximation. Front Neuroeng. 2015;8:1–10. doi: 10.3389/fneng.2015.00004.

- Judge S, Richmond B, Chu F. Implantation of magnetic search coils for measurement of eye position: an improved method. Vision Res. 1980;20:535–8. doi: 10.1016/0042-6989(80)90128-5.

- Kassner M, Patera W, Bulling A. Pupil: an open source platform for pervasive eye tracking and mobile gaze-based interaction. Proc 2014 ACM Int Jt Conf Pervasive Ubiquitous Comput Adjun Publ. 2014:1151–60. Available from: http://arxiv.org/abs/1405.0006.

- Kimmel DL, Mammo D, Newsome WT. Tracking the eye non-invasively: simultaneous comparison of the scleral search coil and optical tracking techniques in the macaque monkey. Front Behav Neurosci. 2012;6(49):1–17. doi: 10.3389/fnbeh.2012.00049.

- Mulvey F. Working copy of definitions and terminology for eye tracker accuracy and precision. 2010.

- Riemann B. Grundlagen für eine allgemeine Theorie der Funktionen einer veränderlichen komplexen Grösse. 1876.

- Robinson DA. A method of measuring eye movement using a scleral search coil in a magnetic field. IEEE Trans Biomed Eng. 1963:137–45. doi: 10.1109/tbmel.1963.4322822.

- Smeets JBJ, Hooge ITC. Nature of variability in saccades. J Neurophysiol. 2003;90(1):12–20. doi: 10.1152/jn.01075.2002.

- Stampe DM. Heuristic filtering and reliable calibration methods for video-based pupil-tracking systems. Behav Res Methods Instrum Comput. 1993;25(2):137–42.

- Suzuki S, Abe K. Topological structural analysis of digital binary image by border following. Comput Vision Graph Image Process. 1985;30:32–46.

- Swirski L, Bulling A, Dodgson N. Robust real-time pupil tracking in highly off-axis images. Proceedings of the Symposium on Eye Tracking Research and Applications (ETRA). 2012:1–4.

- Świrski L, Dodgson N. Rendering synthetic ground truth images for eye tracker evaluation. Proceedings of the Symposium on Eye Tracking Research and Applications (ETRA). ACM; 2014:219–22.
