Abstract

Spike timing dependent plasticity (STDP) is believed to play an important role in shaping the structure of neural circuits. Here we show that STDP generates effective interactions between synapses of different neurons, which were neglected in previous theoretical treatments, and can be described as a sum over contributions from structural motifs. These interactions can have a pivotal influence on the connectivity patterns that emerge under the influence of STDP. In particular, we consider two highly ordered forms of structure: wide synfire chains, in which groups of neurons project to each other sequentially, and self connected assemblies. We show that high order synaptic interactions can enable the formation of both structures, depending on the form of the STDP function and the time course of synaptic currents. Furthermore, within a certain regime of biophysical parameters, emergence of the ordered connectivity occurs robustly and autonomously in a stochastic network of spiking neurons, without a need to expose the neural network to structured inputs during learning.

Author Summary

Plasticity between neural connections plays a key role in our ability to process and store information. One of the fundamental questions on plasticity, is the extent to which local processes, affecting individual synapses, are responsible for large scale structures of neural connectivity. Here we focus on two types of structures: synfire chains and self connected assemblies. These structures are often proposed as forms of neural connectivity that can support brain functions such as memory and generation of motor activity. We show that an important plasticity mechanism, spike timing dependent plasticity, can lead to autonomous emergence of these large scale structures in the brain: in contrast to previous theoretical proposals, we show that the emergence can occur autonomously even if instructive signals are not fed into the neural network while its form is shaped by synaptic plasticity.

Introduction

One of the striking features of local organisation in the brain is its diversity across brain regions and cell populations [1–3]. Therefore, it is of significant importance to understand the factors that contribute to the organization of local neural circuitry. Among such factors, synaptic plasticity involves mechanisms that rely on neural activity [4, 5], as first proposed by Hebb [6].

Spike timing dependent plasticity (STDP), observed in many brain areas, depends on the relative timing of pre and post synaptic spikes [7–10]. This form of plasticity introduces a coupling between the ongoing activity in a neural network and its architecture, because the change in synaptic efficacy is driven by the timing of neural spikes, while the statistics characterizing neural activity are strongly dependent on the connectivity. This link raises interesting questions from a functional and computational perspective.

Considerable theoretical effort was devoted to elucidate the coupling between neural activity and plasticity, when considering modifiable synapses that converge into one post synaptic neuron [11–15]. When considering STDP in a network of recurrently connected neurons, the coupling between activity and plasticity becomes even more elaborate, since the timing of spikes in each neuron is potentially influenced by the activity of all other neurons in the network. Consequently, the change in one synapse can depend on the full connectivity structure in a complicated manner.

Most theoretical works on STDP in recurrent neural networks approximated the dynamics of each synapse as depending on local quantities: the firing rates of the pre and post synaptic neurons [16], and the strengths of the synapses between them [17]. These local approximations provide important insights on synaptic dynamics driven by STDP, such as the competition that arises between reciprocal synapses under an asymmetric STDP function [17, 18]. However, local approximations do not fully address how plasticity in a given synapse is influenced by other synapses in the network.

Only very recently consequences of STDP in recurrent neural networks were studied analytically, without resorting to the local approximations discussed above. In Ref. [19] an expression was derived for the dynamics of synaptic efficacies in recurrent neural networks, based on an approximation for spike correlations in networks of leaky integrate and fire neurons. This approach has led to the understanding that STDP induces effective interactions between pairs of adjacent synapses. These interactions, in turn, influence the connectivity, and specifically the distribution of local motifs which include pairs of synapses. These results highlight the elaborate role played by STDP in shaping the structure of recurrent neural circuits.

Many questions remain open, or have received so far only partial answers: Is it possible to describe in a systematic manner interactions between synapses of all orders, beyond the pairwise interactions analyzed in previous works? Can STDP induce global ordered structures in the connectivity, beyond its influence on the statistical distribution of local motifs within the network? How do biophysical properties, such as the structure of the STDP function and the time course of synaptic current, influence the emergent structures?

The first goal of this work is to provide a full description of non-local interactions between different synapses in recurrent neural networks. We develop an expression for the synaptic dynamics in recurrent networks of Poisson spiking neurons, which precisely describes how STDP in each synapse depends on the full network connectivity. The main advantage of using this neural model is that all the expressions we obtain are precise and fully self-consistent, without resorting to any approximations other than those embodied in our model for the intrinsic dynamics of individual neurons. Furthermore, this approach leads to a systematic description of non-local synaptic interactions, expressed as a sum over contributions from structural motifs of varying orders. Using this formalism, we demonstrate that non-local interactions between different synapses can profoundly influence the synaptic connectivity that emerges under STDP.

A second goal of this work is to show that high order, non local interactions between different synapses can promote the spontaneous formation of global organization in the connectivity. This result is significant on its own merit (see below), but it also serves as a concrete example for the possible role of high-order synaptic interactions in shaping the structure of neural circuits. We focus on two specific types of structures: wide synfire chains, and assemblies of self connected neurons (Fig 1). The formalism developed here allows us also to predict how biophysical parameters, such as the specific STDP function and the temporal trace of post synaptic currents, impact on the structures that emerge.

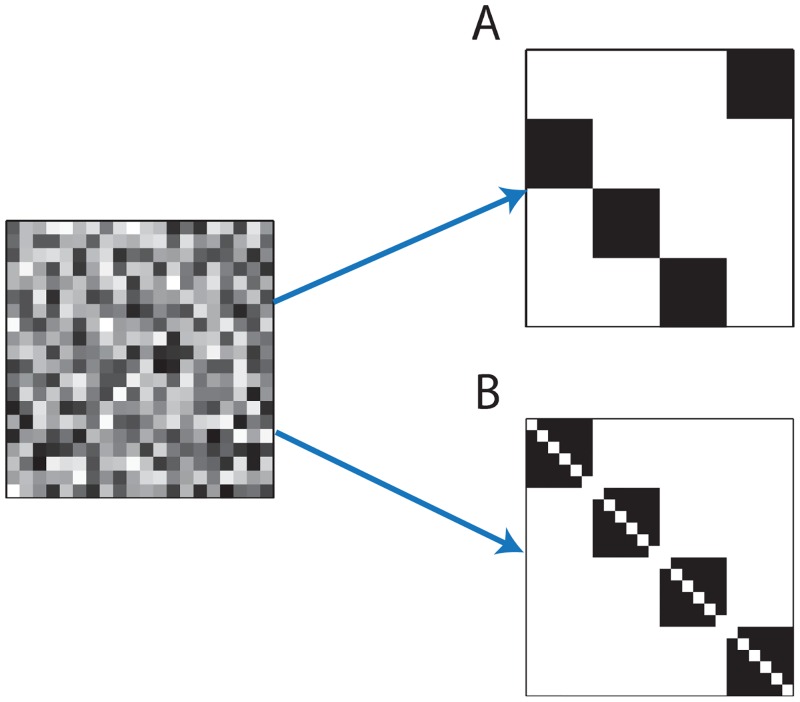

Fig 1. Spontaneous emergence of global structures from initially random network connectivity.

Illustration of connectivity matrices: Left—random connectivity, A. Synfire chain connectivity, B. Self connected assemblies. In all panels horizontal (vertical) axes represent the pre (post) synaptic neuron.

Autonomous emergence of global structure

Synfire chains, consisting of distinct groups of neurons that project to each other in a sequential order (Fig 1A), were originally proposed as a model for sequence generation in the mammalian cortex [20]. Subsequently, compelling evidence has pointed to the possibility that this architecture underlies the synchronous neural activity observed in the songbird premotor nucleus HVC [21, 22], with ∼100 excitatory neurons in each layer. Theoretical reasoning indicates that this architecture can indeed produce stable propagation of synchronous spiking activity if the groups are sufficiently large [23, 24].

It was shown theoretically that STDP, combined with heterosynaptic competition, can lead spontaneously to the formation of thin chains [25], in which single neurons project to each other sequentially (see also [26]). Formation of wide synfire chains, in which many neurons participate in each group along the sequence, proved to be more difficult, and was successfully demonstrated when structured inputs were fed into the network [25]. Thus it remains unclear whether structured inputs are required for the formation of wide chains in networks of spiking neurons. Here we demonstrate that with appropriate choice of the biophysical parameters, STDP dynamics can lead to robust formation of wide synfire chains, without the need to provide any structured inputs to the network. The grouping of neurons occurs spontaneously, assisted by high order synaptic interactions that arise from the STDP dynamics.

As a second example for the role of high order synaptic interactions in plasticity, we consider whether STDP can promote the formation of distinct, self connected groups of cells (Fig 1B). Theoretical works demonstrated that such structures can lead to multiple persistent states in the neural dynamics [27–31]. Another motivation for consideration of these structures arises from anatomical studies of local connectivity: for example, connections among excitatory neurons in the rat visual and somatosensory cortices tend to be clustered [1, 32]. In the context of learning, it has been demonstrated theoretically that STDP, combined with additional plasticity mechanisms and structured inputs, can lead to formation of self connected assemblies [27, 33, 34]. However, in similarity to the formation of synfire chains by STDP, it remains unclear whether self connected assemblies can emerge in an initially unstructured network without the inclusion of correlated inputs that are fed into the network during learning. Here we show that high order synaptic interactions enable the spontaneous formation of self connected assemblies, without the inclusion of such inputs.

Results

Synaptic drift in recurrent networks

In studying the effects of STDP, it is necessary to consider models of neural activity that explicitly involve the timing of action potentials (as opposed to simpler rate models). Due to the difficulty in evaluating spike correlation functions in most models of spiking neurons, analytical treatments of STDP have made certain approximations for the spiking statistics: typically, pre and post synaptic spike trains were treated as if they follow inhomogeneous Poisson statistics [12–14, 16]. Therefore, we explicitly consider a recurrent network of neurons which follow linear Poisson (LP) dynamics (Methods). The activity in the network is stochastic, and the probability of each neuron to emit an action potential is proportional to a weighted sum of the previous activity in the network and a constant external input (see Fig 2A and Methods).

Fig 2.

A. Illustration of the network architecture. The network contains recurrently connected neurons that receive a constant external input. B. Example of an STDP function. Horizontal axis: time interval between action potentials in the pre and post synaptic neurons. Vertical axis: change in synaptic efficacy. C. The drift of the STDP dynamics is an integral over the product of the correlation and STDP functions. Black line: time dependent correlation. Dashed line: STDP function. The area under the product of these curves (yellow) determines the synaptic drift.

Networks of LP neurons have been shown to approximate well the correlation in spike timing of neurons with more elaborate leaky integrate-and-fire dynamics, operating in an asynchronous regime [35]. The availability of an exact expression for spike correlations in such networks (see below) allows us to develop a precise theory for the weight dynamics driven by STDP.

We consider synaptic efficacies in a recurrent neural network with an arbitrary structure (Fig 2A). The efficacies undergo long term potentiation or depression in response to each pair of spikes, depending on the time interval between the firing of the pre and post synaptic neurons (Fig 2B). In the case of slow learning rate, the rate of change in the synaptic efficacies can be expressed in terms of the product between the time dependent pair correlation and the STDP function (see Methods):

| (1) |

Here, is the drift in the synaptic efficacy from neuron j to neuron i, defined as the average change in the synaptic efficacy per unit time. The correlation function Cij(τ) represents the probability density that neurons j and i emit a pair of spikes temporally separated by a duration τ (Eq 9), and F(τ) is the STDP function that describes how potentiation (or depression) depends on the time interval τ (Fig 2C).

Using an analytic expression for the spike correlation function [36], we obtain an exact expression for STDP in recurrent networks with arbitrary connectivity (Methods):

| (2) |

Here, W is the connectivity matrix, a(t) is the time course of synaptic currents, is its Fourier transform, ri is the average firing rate of neuron i (Eq 13), Dij = δij rj, and f0 is the area under the STDP function (Eq 16). The derivation of Eq 2 does not involve any assumptions on the specific form of the synaptic currents, STDP function, or the network architecture. S1 Fig demonstrates that this expression provides an accurate description of the average learning dynamics in networks of LP neurons, over time scales which are relevant to plasticity in the brain.

The synaptic drift can be interpreted as a sum over contributions from structural motifs

Eq 2 expresses how plasticity in one synapse depends on the connectivity of the full network. Additional insight on this expression, which may seem elaborate, is obtained by noticing that the spike correlation functions in the network can be written as a power series, obtained as an expansion in the strength of the synaptic efficacies [37]. This allows us to reformulate Eq 2 as follows (Methods):

| (3) |

where the coefficients fα, β are defined below. Each one of the terms in Eq 3 has a relatively simple, intuitive interpretation that we discuss next.

The first term in Eq 3, f0 ri rj, represents the contribution to STDP arising from the mean firing rates of the pre and post synaptic neurons, while ignoring any correlations in the timing of their spikes. Accordingly, this term is simply proportional to the firing rates ri and rj (Fig 3A). Such a term is often postulated in phenomenological models of synaptic plasticity [38], and its emergence from STDP dynamics has been described, e.g., in [11, 12, 16, 17].

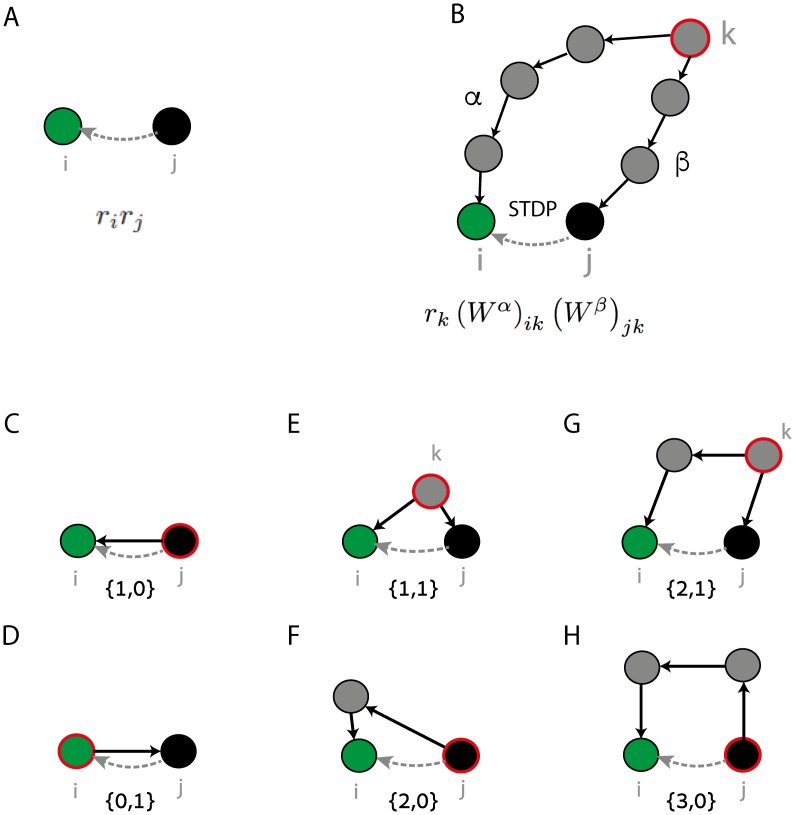

Fig 3. Network motifs that affect the STDP dynamics.

A. The first term in Eq 3 is proportional to the average firing rates of the pre and post synaptic neurons. B. General form of a structural motif, contributing to the STDP dynamics in the synapse Wij (dashed line). A source neuron k (red line) projects to the post synaptic neuron i (green) via α synapses, and to the pre synaptic neuron j (black) via β synapses. The contribution is proportional to the firing rate of the source neuron rk and to the product of all synaptic efficacies along the two paths. The expression (Wα)ik rk(Wβ)jk is a sum over all paths that start from neuron k and reach the post synaptic neuron i via α synapses and the pre synaptic neuron j via β synapses. C-H: Examples of six motifs that affect STDP in the synapse Wij (dashed line), by inducing time dependent coupling between neurons j and i. Black arrows indicate synapses that participate in the motifs. C. One of the first order motifs contains the direct synapse from j to i (α = 1, β = 0). The source neuron is the pre synaptic neuron j. D. The other first order motif contains the opposite synapse from i to j (α = 0, β = 1). Here the source neuron is the post synaptic neuron i. E. One of the second order motifs (α = 1, β = 1). Here the source neuron is some other neuron in the network that projects directly both to i and j. F. Additional example for a second order motif (α = 2, β = 0). The source neuron is the pre synaptic neuron j, that projects to the post synaptic neuron i through one intermediate neuron. G. Example of a third order motif (α = 2, β = 1). The source neuron projects directly to the pre synaptic neuron j and via one intermediate neuron to the post synaptic neuron i. H. Additional example of a third order motif (α = 3, β = 0). The pre synaptic neuron j is the source neuron, that projects to the post synaptic neuron i via two intermediate neurons.

The probability of neurons i and j to emit a spike is transiently modulated whenever a spike is emitted anywhere within the network. In the sum on the right hand side of Eq 3, each term quantifies how a spike in a neuron k modulates the probability of neurons i and j to emit pairs of spikes at various latencies—and through this modulation, how the spikes of neuron k influence the drift in the synaptic efficacy Wij. This contribution to the drift is written as a sum over structural motifs, which share a common organization shown schematically in Fig 3B.

In each structural motif, the source neuron k projects to the post synaptic neuron i via a path of α synapses, and to the pre synaptic neuron j via a path of β synapses. The synaptic drift driven by the motif is proportional to all the synaptic weights along the two paths, and to the firing rate of the source neuron. In addition, the drift is proportional to a motif coefficient fα, β. This coefficient depends on the number of synapses in the two paths, the time course of the synaptic currents, and the detailed form of the STDP learning function. We discuss this dependence in detail later (see also Methods).

The first order contributions in the above sum are those in which {α, β} = {1, 0} (Fig 3C), or {α, β} = {0, 1} (Fig 3D):

| (4) |

These terms are local: they depend only on the direct synapses that link neurons i and j, and on the firing rate of these two neurons (Fig 3C and 3D). Previous works [17, 18] derived these contributions to STDP using heuristic arguments that focused on the pre and post synaptic neurons, and studied their consequences when embedded in a recurrent network. Under an asymmetric STDP function, the first order terms induce a competition between a synapse Wij and the opposite synapse Wji [17, 18], whereas a symmetric STDP function tends to promote the development of a symmetric weight matrix. Here, these local plasticity rules are obtained as the first order terms in a systematic expansion, which includes also higher order terms.

Synapses of different neurons affect each other through higher order terms

A central consequence of Eq 3 is that the drift in one synapse can be affected by other synapses in the network through the contribution of high order motifs. An illustration of how high order motifs induce interactions between different synapses is seen in two examples of second order motifs, shown in Fig 3E and 3F. In Fig 3E, the source neuron k is an arbitrary neuron in the network, that projects directly to neurons j and i. STDP in Wij is influenced, through this motif, by the synaptic efficacies Wjk and Wik.

In the motif shown in Fig 3F, the source neuron is the neuron j itself, and the motif includes a path leading from neuron j to neuron i. Here the effect of a spike in neuron j on neuron i is mediated through an intermediate neuron. Thus, the synapse Wij is influenced, via this motif, by synapses along indirect paths connecting neuron j and i through a single intermediate neuron. Additional examples, of third order motifs, are shown in Fig 3G and 3H.

High order motifs can promote self organization into global structures

Next, we demonstrate that high order motifs can promote the formation of large-scale structures in the synaptic connectivity. We focus on two types of structures: synfire chains (Fig 4A), and clusters of self connected assemblies (Fig 4B). In both structures, synapses of different neurons are highly correlated. The purpose of this section is to illustrate by specific examples that high order motifs, beyond the first order, can lead to emergence of these structural correlations. A more systematic study is presented in later sections.

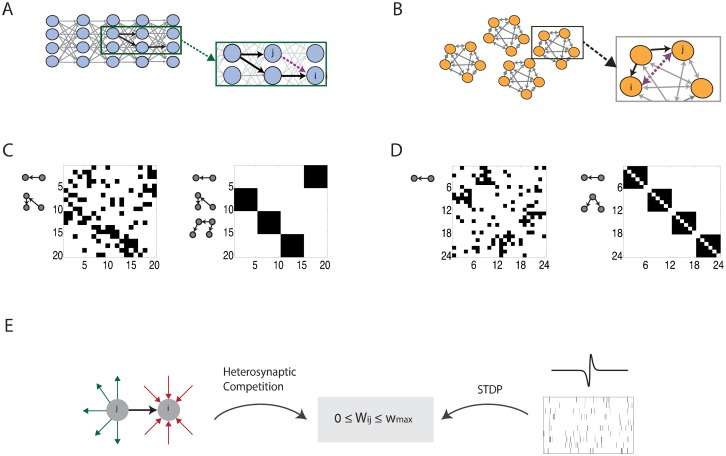

Fig 4. Self organization into global structures.

A. The third order motif {2, 1} promotes formation of wide synfire chain connectivity, by encouraging neurons that receive input from the same pre synaptic neuron to project to the same post synaptic neuron. B. The second order motif {1, 1}, combined with a symmetric STDP function, promotes formation of self connected assemblies by encouraging two neurons that receive inputs from a common source to increase their synaptic coupling reciprocally. C-D. Steady state connectivity, obtained from simulations in which the motif coefficients fα, β are tuned artificially. STDP is combined with heterosynaptic competition. Neurons are ordered such that the matrix represents the optimal feed forward connectivity (C) or self connected assemblies connectivity (D). C. Left—results from dynamics that contain only the motifs {1, 0}, {0, 1}, {2, 0}, {0, 2}. The simulation does not converge into synfire chains connectivity. Right—the simulation contains also the motifs {2, 1}, {1, 2}. The simulation converges into synfire chain connectivity. D. Left—the dynamics contain only the motifs {1, 0}, {0, 1}. The simulation does not converge to assemblies. Right—the dynamics include also the motif {1, 1}. The connectivity converges into self connected assemblies. E. The drift in the excitatory connections is driven by three plasticity mechanisms: (i) Spike timing dependent plasticity. (ii) Heterosynaptic competition between all synapses that terminate in the same post synaptic neuron (red arrows), and all synapses that originate from the same pre synaptic neuron (green arrows). (iii) A hard bound on the synaptic efficacy.

We consider networks that consist of recurrently connected excitatory neurons, and inhibitory interneurons with fast synapses (see Methods). The inhibitory input to each neuron depends on the total activity of the excitatory network, and is adjusted such that the inhibitory weights balance the excitatory ones [14, 15]. The main role of inhibition in the network is to suppress runaway excitation in the neural dynamics. In addition, all neurons receive constant, identical inputs (Methods).

The plasticity mechanisms acting on the excitatory neurons are summarized in Fig 4E. The excitatory connections are modifiable through STDP and are bounded between zero and a positive bound, denoted by wmax. In addition to STDP, the excitatory synapses undergo heterosynaptic competition that limits the total synaptic input and output of each neuron: the sum of the incoming excitatory weights to each neuron, and the sum of outgoing excitatory weights from each neuron are bounded to lie below a positive hard bound, denoted by Wmax. The competition, combined with STDP and with the hard bound on each synaptic weight, can lead to a steady state connectivity pattern in which each neuron receives input from a certain number of pre-synaptic partners, and projects to a certain number of post-synaptic partners [25]. These numbers are tuned by the ratio between Wmax and wmax [25].

To test the influence of individual motifs on the STDP dynamics, we perform simulations in which we include only a few of the terms in Eq 3, starting from initial weights that were drawn independently from a uniform random distribution (Methods). Instead of obtaining the exact expressions for the coefficients fα, β (Eq 15), we artificially tune their values and observe the consequences on the structures that emerge.

The motif {2, 1} (Fig 3G), when acting between excitatory neurons, encourages neurons that receive input from the same pre synaptic neuron to project into the same post synaptic neuron (Fig 4A). Consequently, this motif can induce correlations between the synaptic connections formed by neurons that belong to the same layer of a synfire chain. This is a key feature which differentiates wide synfire chain structures from other connectivity patterns in which each neuron has a prescribed number of presynaptic and postsynaptic partners. This observation raises a hypothesis, that the motif {2, 1} can promote formation of wide synfire chains, by favoring these structures over other connectivity patterns which are compatible with the constraints set by the heterosynaptic competition.

To test this hypothesis, we perform simulations that include only contributions from the motifs {α, β} = {1, 0}, {2, 0}, {2, 1}, and the opposing terms in which α and β are exchanged. Additionally, we set fα, β = −fβ, α, as expected if the STDP function is antisymmetric.

An example is shown in Fig 4C. When including only the first and second order motifs, a simulation of the plasticity dynamics leads to a structure in which each row and column contains a small number of active synapses, without any reciprocal connections (left). However, the synaptic weights are not organized in a synfire chain structure. When including the third order motifs {2, 1} and {1, 2}, the synaptic efficacies self organize into a perfect synfire chain (right), despite the absence of any correlations in the external inputs to the network. Results from a wider set of simulations, in which we systematically vary the strength of motifs, are presented in the section Self organization into synfire chains.

As a second example, we examine the influence of the motif {1, 1} on the formation of self connected cell assemblies. The motif {1, 1} (Fig 3E), when acting between excitatory neurons, enhances reciprocal connections between neurons that receive common input (note that the contribution from this motif vanishes if the STDP function is antisymmetric, but for a symmetric STDP function this motif can significantly contribute to the plasticity dynamics). This raises the hypothesis that formation of self connected assemblies can be promoted by the contribution of the motif {1, 1}.

To check this hypothesis, we conduct plasticity simulations that contain only contributions from first and second order motifs: {0, 1}, {1, 0}, and {1, 1} in Eq 3. In addition, we impose the relation f1,0 = f0,1, as expected if the STDP function is symmetric (see Eq 15 in Methods). In the example shown in Fig 4D, the first order motifs, together with the synaptic competition, lead to a symmetric connectivity matrix in which the number of active synapses is limited in each row and in each column (left). With the inclusion of the {1,1} motif (right), strong correlations emerge between synapses of different neurons, and fully connected cell assemblies emerge.

Biophysical properties affect the relative contribution of different motifs to STDP

So far, we illustrated how high order motifs can promote the formation of global structures by artificially tuning the contribution of specific motifs to plasticity (through the coefficients fα, β). In the actual STDP dynamics, the coefficients fα, β cannot be controlled independently. Instead, these coefficients are determined by the temporal structure of the STDP function and the synaptic currents.

Each motif induces temporal correlations of spikes between the pre and post synaptic neurons with a characteristic time course. The time course of correlations depends on the structure of the motif (characterized by α and β), and on the time course of the synaptic currents. Therefore, the synaptic current, together with the STDP function, affects how strongly each motif influences the synaptic drift, as quantified by the magnitude of the corresponding motif coefficient fα, β (Eq 15). The influence of the synaptic currents on the motif coefficients is illustrated in detail in Methods.

As a specific example for how the time course of synaptic currents can affect the motif coefficients, we consider in Fig 5 the influence of a delay in the onset of the post synaptic current (abbreviated below as the synaptic latency). Synaptic latencies depend on diverse physiological properties, such as the length and conductance velocity in the axon [39] and location on the dendrite [40]. Here we model the synaptic latency as a temporal shift in the synaptic current (Fig 5).

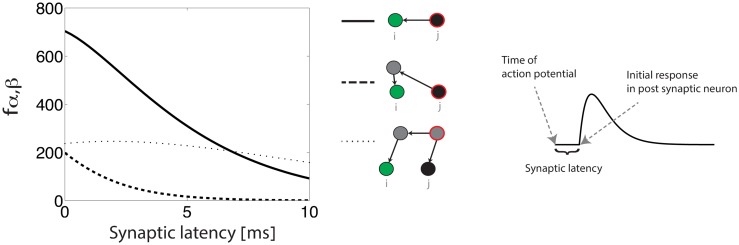

Fig 5.

Left: Motif coefficients as a function of the synaptic latency. Solid line—f1,0, dashed line—f2,0, dotted line—f2,1. An antisymmetric STDP function is assumed. Right: the time course of the synaptic current. The synaptic latency is modeled as a delay in the onset of the postsynaptic current, relative to the presynaptic spike time. The precise form of the synaptic current and STDP function are specified in Methods.

In the example shown in Fig 5 the motif coefficients f1,0 and f2,0 decrease with an increase of the synaptic latency. The coefficient f2,1 is influenced by the synaptic latency as well, but for synaptic latencies ranging from 0 to 10 ms this dependence is extremely weak. Therefore, an increase in the synaptic latency reduces the contribution of the motifs {1, 0} and {2, 0} to STDP relative to the motif {2, 1}. The reason for these trends is explained qualitatively in Methods. Higher order motifs exhibit a similar behavior, depending on the difference between α and β (S5 Fig).

The possibility to tune the relative contribution of motifs through the interplay of post synaptic currents and the STDP function, suggests that global structures could be spontaneously generated via STDP with appropriate choice of these biophysical parameters. Furthermore, this observation provides a principled way to search for parameters that enable emergence of specific structures.

Self organization into synfire chains

We next focus on the emergence of synfire chains under the influence of STDP and heterosynaptic competition. For simplicity, we consider mainly the case where the STDP function is antisymmetric.

First, we consider in detail the interplay between the third order motif {2, 1}, which facilitates the formation of synfire chains, and the first order motifs {1, 0} and {0, 1}, whose contribution to STDP dynamics was the focus of previous theoretical works [17, 18]. Intrinsic plasticity mechanisms other than STDP can act to self-regulate the efficacy of each synapse, in similarity to the effect of the first order motif {1, 0} (see below). Therefore, it is interesting to consider f1,0 and f0,1 as separate parameters even if the STDP function is antisymmetric. In Fig 6 we examine the phase space spanned by f1,0, f0,1, and f2,1, while assuming that f1,2 = −f2,1 due to the antisymmetric form of the STDP function. To avoid decay of all weights to zero when f1,0 is strongly negative, we include in the dynamics also a term that drives growth of each weight at a fixed rate (see Methods, Eq 19). For simplicity, we first consider a situation in which other motifs do not contribute to the dynamics.

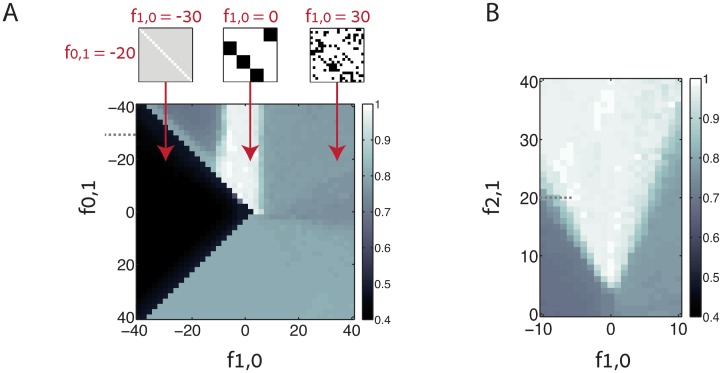

Fig 6. Chain score of the steady state connectivity, obtained from simulations that include selected motifs.

A. Density plot of the chain score, displayed as a function of the motif coefficients f1,0 (horizontal axis) and f0,1 (vertical axis). All other motif coefficients are kept fixed. Note that here f2,1 = 20 (designated by a dotted line in panel B). Above: examples of steady state connectivity matrices for f0,1 = −20, and (from left to right) f1,0 = −30, 0, 30. B. Chain score as a function of the motif coefficients f1,0 (horizontal axis) and f2,1 (vertical axis) with all other motif coefficients kept fixed. Here f0,1 = −30 (designated by a dotted line in panel A). Each data point represents an average over ten simulations, each with a different realization of the initial random connectivity. Additional details of the plasticity dynamics are specified in Methods.

Fig 6A shows results from simulations in which we varied f0,1 and f1,0 while fixing f2,1. To quantify whether synfire chains emerge robustly we constructed a score that quantifies similarity between the steady state excitatory connectivity and a perfect synfire chain structure (abbreviated below as the chain score). This chain score ranges from 0 to 1, where 1 corresponds to a perfect match (Methods). The figure shows the chain score, averaged over multiple random choices of the initial weights. Over a wide range of values of f1,0 and f0,1 precise synfire chain structures are obtained robustly. We note two characteristics of this parameter regime: first, the coefficient f0,1 is negative. Thus, each synapse inhibits its reciprocal synapse. Second, f1,0 lies within a range of values which is fairly insensitive to f0,1 when |f0,1| is sufficiently large.

Similarly, in Fig 6B we fix f0,1, while varying f1,0 and f2,1. As expected, synfire chains emerge only when f2,1 is sufficiently large. Furthermore, with increase of f2,1 the range of values of f1,0 that permits formation of synfire chains becomes wider. Thus, the high order motif {2, 1} plays a pivotal role in the spontaneous emergence of wide synfire chains. Results from additional simulations, in which we include additional low order motifs are shown in S2 Fig. Under typical conditions relevant to the full plasticity dynamics (discussed below), the second order motifs {2, 0} and {1, 1} have a detrimental effect on synfire chain formation (a contribution from the motif {1, 1} may be present if the STDP function is not antisymmetric). Next, we address the emergence of synfire chains under the full STDP dynamics.

Synaptic self-depression enables self-organization into synfire chains in the full STDP dynamics

With typical choices of the STDP function and the synaptic currents, the contribution of the first order motif {1, 0} to the dynamics is large relative to higher order motifs (Fig 5). Consequently, based on the results shown in Fig 6 we do not expect emergence of synfire chain structures under STDP dynamics alone. To enable formation of synfire chains, we include in the dynamics a term that describes constant self depression of each excitatory synapse, which acts alongside STDP (see Methods). Such a term can arise, for example, from a self-regulating process which decreases the size of each dendritic spine in proportion to its volume [41]. Hence, we assume that the self depression term, acting on each synapse is proportional to the synaptic efficacy (− μWij in Eq 19). This form of synaptic self-depression selectively suppresses the contribution of the motif {1, 0}, since its contribution is similar to that of the motif {1, 0}. An increase in the rate of self-depression corresponds in Fig 6A to motion from right to left, in parallel to the horizontal axis. In light of Fig 6 we expect synfire chains to emerge for appropriate rates of the synaptic self-depression.

The black trace in Fig 7 shows results from simulations of the full STDP dynamics (Eq 2) combined with synaptic self-depression and heterosynaptic competition (Eq 19). All the processes contributing to the synaptic dynamics are summarized schematically in Fig 7A. In Fig 7B chain scores of the steady state connectivity, averaged over multiple simulations with random initial synaptic weights, are shown as a function of μ, the rate of self depression. All other parameters in the simulation are kept fixed. As expected, synfire chains emerge robustly when μ lies within an appropriate range.

Fig 7. Emergence of synfire chains under the full STDP dynamics.

A. Schematic representation of the plasticity rules applied to excitatory synapses. In addition to the mechanisms mentioned in Fig 4, the synapses undergo self depression that weakens their efficacy in proportion to its magnitude. B-C. Chain score of the steady state connectivity, averaged over ten random choices of the initial connectivity. Black traces: complete dynamics (Eq 2); red (gray) traces—power series expansion (Eq 3), truncated to include motifs up to third (second) order. B. Chain score plotted as a function of μ, the relative rate of self-depression (the rate of self depression is given by ημ, where η is the learning rate, see Methods). All other parameters are kept fixed. C. Chain score plotted as a function of the synaptic latency, while keeping all other parameters fixed. Above: three examples of the steady state connectivity obtained in specific simulations, with different values of synaptic latency. Error bars in panels B-C represent the standard deviation of the chain score over the ten initial conditions.

The red trace in Fig 7B shows similar results from simulations in which the expansion of the STDP dynamics (Eq 3) was truncated to include only contributions of motifs up to third order. Comparison with results of the full STDP dynamics (black line) indicates that the contributions of motifs up to third order are sufficient to predict quite well the conditions in which synfire chains emerge. In contrast, synfire chain structures do not emerge when the expansion in Eq 3 is truncated to include only motifs up to second order (Fig 7B, gray trace).

Synaptic latency can facilitate self organization into synfire chains

Fig 5 demonstrates that a synaptic latency of several ms reduces the motif coefficients f1,0 and f2,0, while having only little influence on the third order motif coefficient f2,1. Therefore, we consider how a synaptic latency influences the emergence of synfire chain structures.

Fig 7C shows that in the full STDP dynamics, perfect synfire chain structures emerge robustly within a certain range of synaptic latencies (black trace). Several examples of steady state structures, obtained with different synaptic latencies are shown at the top of panel C. Qualitatively, varying the synaptic latency has a similar effect as that of self-depression, since both mechanisms decrease the contribution of the motif {1, 0}, with little or no effect on the contribution of the third order motif {2, 1}. However, the influence of the synaptic latency is more elaborate than that of self-depression, since the latter mechanism tunes only the contribution of the motif {1, 0}, whereas the synaptic latency influences (in general) all the motif coefficients. Thus, varying the synaptic latency traces a curve within the phase space of motif coefficients in which most motif coefficients vary. The red trace in Fig 7C demonstrates that even in this more elaborate situation, it is sufficient to include contributions of motifs up to third order in order to qualitatively predict the influence of the synaptic latency in the full STDP dynamics.

In S3 Fig we consider the influence of the strength of synaptic weights. An increase in the synaptic weights amplifies the relative contribution of high-order motifs (Eq 3). As expected, the outcome is a widening of the permissive range for synfire formation. Finally, in S4 Fig we demonstrate that synfire chain structures can emerge robustly also for an STDP function which is not precisely antisymmetric. Thus, the {1, 1} motif contributes to the dynamics, and so do other high order motifs with α = β. We assumed that the area under the STDP function is positive: in this case it is possible to set γ = 0 (Eq 19), because the zeroth order term of the dynamics (Eq 3) is sufficient to drive growth of all the synapses.

Robustness to noise

The average synaptic drift as expressed by Eq 2 is sufficient to describe the plasticity dynamics when the learning rate is small and noise in the STDP dynamics, arising from random fluctuations in the number of pre and post synaptic spike pairs is averaged out. Thus, the analysis in the limit of slow learning does not guarantee that the network will exhibit similar plasticity dynamics in a more realistic scenario, where learning occurs over a biologically relevant time scale.

A rough estimate for the dependence of noise on the time scale of averaging can be obtained by comparing the prediction of the deterministic theory (Eq 2) with the synaptic efficacy generated in a stochastic spiking simulation. Such results are shown in S1 Fig, where the network parameters were chosen to roughly match those used in Fig 7. The synaptic drift is correctly predicted by Eq 2 and, as expected, the scatter relative to the predicted drift decreases with increase of the duration of averaging T. When averaged over a fairly short time scale of T = 6 min, the stochastic drift is strongly correlated with the prediction of the deterministic theory, but the scatter relative to the mean is fairly large (S1A Fig). When the duration of averaging is 200 times longer (T = 20 hours) the scatter is much smaller, and the agreement with Eq 2 is excellent (S1C Fig).

These results demonstrate that the deterministic theory provides a relevant prediction for the stochastic synaptic drift when the averaging is performed over a time scale of several minutes or more. However, during synfire chain formation the synaptic weights are constantly changing. Therefore, the whole process should occur over a significantly longer duration in order for the deterministic theory to be adequate. In Fig 8A–8C we examine the plasticity dynamics in neural networks with initial random synaptic weights, under parameters that enable robust emergence of synfire chain structures. The chain score is measured in stochastic simulations with varying values of the learning rate η, and compared with the prediction of the deterministic theory. Each data point in the figure represents an average of the chain score over ten randomly chosen initial conditions.

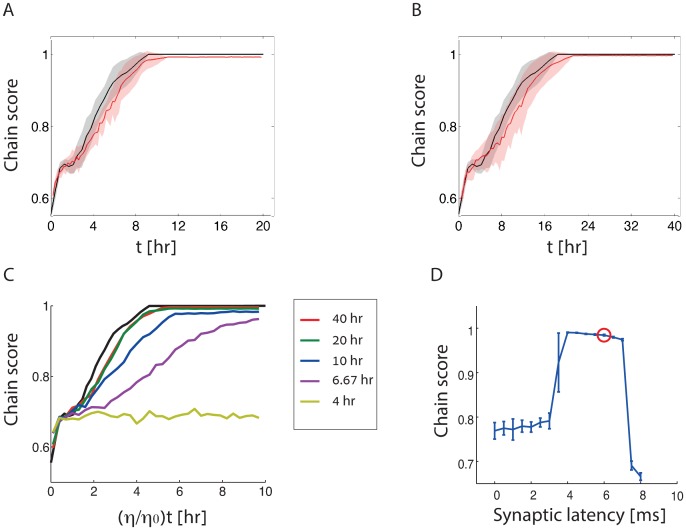

Fig 8. Formation of synfire chains under stochastic dynamics.

A-B. Evolution in time of the chain score in the stochastic dynamics (red), compared with the prediction of the deterministic theory (black), shown for two different learning rates: η = 2 × 10−7 (A) and η = 1 × 10−7 (B) (see Methods for all other parameters). Solid traces: average over ten initial conditions. Limits of the shaded areas represent the standard deviation. Note the different time scales in A and B. C. Evolution in time of the chain score, shown for different learning rates (η) as a function of the time multiplied by η/η0 where η0 = 4 × 10−7. Each trace represents an average over ten random intial conditions, with a specific learning rate: η = 10−7 (red), 2 × 10−7 (green), 4 × 10−7 (blue), 6 × 10−7 (purple), and 10 × 10−7 (yellow). The black trace represents the prediction of the deterministic theory. Note that the traces for the smallest learning rates (green and red traces, corresponding to panels A and B, respectively) nearly collapse on a single line, in close agreement with the prediction of the deterministic theory. The legend shows the actual time corresponding to the right end of the horizontal axis for each one of the plots. The synaptic latency d = 6 ms. D. Chain score evaluated in simulations of the stochastic dynamics, shown as a function of the synaptic latency (the red circle corresponds to the synaptic latency in panel C). The learning rate η = η0 = 4 × 10−7, matching the blue trace in panel C. Each data point represents an average over ten simulations with random initial conditions, and the error bars represent the standard deviation. The chain score is evaluated after 60 hours of simulated time.

Within the deterministic theory the convergence time simply scales in proportion to η−1. In contrast, in the stochastic simulations an increase in η entails an increase in the amplitude of noise (relative to the mean synaptic drift) and thus we can expect the agreement with the deterministic theory to break down if η is too large. Fig 8A and 8B demonstrate that the time course of the chain score in the stochastic simulations matches the deterministic theory very well when η is set such that convergence to synfire chains occurs over a time scale of ∼10 or ∼20 hours, respectively. Correspondingly, the time course of the chain score, when plotted as a function of ηt is nearly identical in these two conditions (red and green traces in Fig 8C), in very good agreement with the prediction of the deterministic theory (black trace). When the learning rate is faster, such that convergence to a synfire chain occurs over a time scale of ∼5 hours, the stochastic simulations are somewhat slower to converge than the deterministic simulation (blue trace in Fig 8C). At an even higher learning rate, in which perfect synfire chains emerge under the deterministic dynamics within ∼2 hours, the stochastic simulations do not converge at all to synfire chain structures within a comparable time frame (yellow trace).

In summary, Fig 8 demonstrates that STDP in a stochastic spiking network can lead robustly to synfire chain structures over a time scale of several hours. Moreover, if the learning rate is sufficiently small, such that the time scale of convergence is of order ∼10 hours or more, the time course of convergence is predicted very well by the deterministic theory of Eq 2. As another demonstration for the relevance of the deterministic theory, we measure in Fig 8D the dependence of the chain score on the synaptic latency in stochastic spiking simulations lasting 60 hours of biological time, using the same parameters as in Fig 7C and a learning rate that matches the blue trace in Fig 8C. The results are very similar to those obtained in the deterministic dynamics (Fig 7C).

Self organization into self connected assemblies

Finally, we check whether self connected assemblies can spontaneously emerge under the full STDP dynamics. The motif {1, 1} promotes formation of such structures (Fig 4B and 4D). Therefore, we choose biophysical parameters that increase the relative contribution of this motif. First, we choose an STDP function with a Mexican hat structure (Fig 9A), which increases synaptic efficacies between neurons that spike at similar times, regardless of the temporal order of the spikes. Second, we note that the contribution of the {1, 1} motif is independent of synaptic latency, because both the pre and post synaptic neurons i, j accrue the same latency relative to the source neuron k. On the other hand, coefficients of other low order motifs do depend on the synaptic latency (Fig 9B).

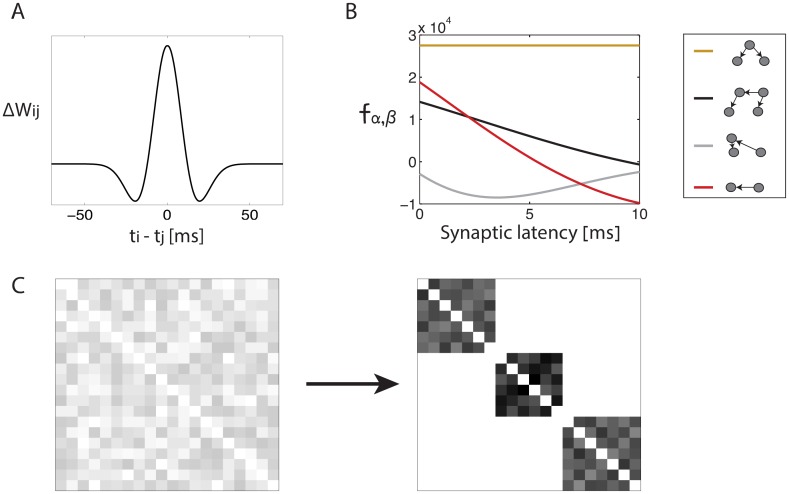

Fig 9. Self organization into self connected assemblies.

A. STDP function with Mexican hat structure. B. The coefficients fα, β of four different motifs as a function of the synaptic latency. Orange line—{1, 1}, black line—{2, 1}, gray line—{2, 0}, red line—{1, 0}. C. Emergence of self connected assemblies under stochastic dynamics. Example of connectivity obtained in a parameter regime in which self connected assemblies emerge robustly. Left—initial connectivity, right—steady state. Here the synaptic latency is 5.25 ms (for other parameters, see Methods).

Based on the above reasoning, we expect that self connected assemblies will emerge, under the influence of STDP and synaptic competition, for finite synaptic latencies, in which contributions from motifs other than {1, 1} are suppressed. Fig 9C shows results from a stochastic simulation of a Poisson network, starting from initial random connectivity, with a synaptic delay of ∼5 ms. Here, a precise structure of self connected assemblies emerges robustly. Other structures were observed for alternative choices of the synaptic latency.

Discussion

In summary, we developed a precise theoretical description of STDP in recurrent networks, which allows us to examine how the drift in the efficacy of each synapse depends on the full network structure. This dependence, although complicated, can be expressed as a sum of contributions from structural motifs, each with a fairly simple interpretation.

High order motifs couple the plasticity dynamics of multiple synapses. Therefore, they can produce correlations between the synapses of different neurons, and promote the emergence of large-scale structures. Furthermore, under certain conditions, the high order motifs have a pivotal influence on the emerging connectivity. We demonstrated these central results of the work within a scenario, in which STDP dynamics are combined with heterosynaptic competition. In this case, high order motifs can drive the spontaneous formation of very ordered global structures, such as wide synfire chains and self connected assemblies. The same theoretical framework can be applied also to other scenarios, in which STDP acts alone, or together with other plasticity mechanisms.

The analytical framework allows us to predict how the biophysical parameters, which characterize the dynamics of single synapses, shape the emerging structures at the level of the whole network. This relationship arises from the influence of the parameters on the relative contributions from different motifs. We varied the synaptic latency or the shape of the STDP function as an illustration of these dependencies, but other parameters such as the rise time of the synaptic current can have a similar influence on network plasticity. It will also be interesting to consider in future work how the size of the network, and the desired size of clusters, influence the regime of parameters in which ordered structures are formed.

The influence of different structural motifs on spike correlation functions has been studied in several previous works [37, 42, 43]. Here, our interest lies in the influence of these correlation functions on STDP acting on specific synapses within the network. Thus, we view the spike correlations induced by each structural motif as the source for an effective interaction between the synapses that participate in the motif. For example, the motif of the form {1, 1}, combined with a symmetric STDP function, encourages any two excitatory neurons that receive presynaptic inputs from a common excitatory source to enhance the synaptic efficacies between them. The motif of the form {2, 1} encourages neurons that receive common input to project to common output units.

We worked with linear Poisson spiking neurons, since this approach allows us to analyze the consequences of STDP in a fully self-consistent manner, which does not involve any approximations beyond the initial choice of the underlying neural model (for another justification, see [35]). However, our analysis of STDP in terms of high order contributions from structural motifs is much more general. For example, Ocker et al. showed, very recently [19], how STDP in recurrent neural networks of integrate and fire neurons can be analyzed based on an expression for spike correlation functions, obtained under a linear response approximation. This approach leads to an expression for the synaptic drift which is very similar to the starting point of our analysis (Eq 2). Therefore, we expect that the qualitative consequences arising from high order motifs will be very similar under the two approaches.

High order motifs beyond the third order

We focused on the influence of biophysical parameters on the contribution of motifs up to third order. However, Eqs 2 and 3 include also contributions from higher order motifs. This raises a question, why an analysis up to third order allowed us to predict the emergence of global structures. A partial answer to this question is that for small synaptic weights, the contribution of high order motifs decays with the number of participating synapses. One important factor contributing to the decay of motif coefficients is that when |α − β| is large the spike correlation function is shifted outside the range of the STDP window. In addition, spike correlation functions widen with increase of the number of participating synapses, thus decreasing their overlap with the STDP function.

Another, more formal argument is based on the derivation of Eq 3: as long as the synaptic weights are sufficiently weak, such that all eigenvalues of the connectivity matrix are smaller than unity, the expansion in Eq 14 converges for all ω, and therefore the sum in Eq 3 must converge as well (this is also the condition for stability of the linear neural dynamics). This implies that the combined contribution from all motifs of order n must decay as a function of n.

Even though contributions of high order motifs must eventually decay, our simulations of the full STDP dynamics were performed in a regime where motifs beyond the third order do influence the plasticity. To a large extent, the effect of higher order motifs can be predicted by the contribution of second and third order motifs (S5 Fig). For example, all high order motifs that satisfy α − β = 1 are expected to assist the formation of synfire chains structures, based on the same intuition that was demonstrated in Fig 4A. All these motifs also share a similar time course since they involve a delay of one synapse between the activity of the pre and post synaptic neurons. Similarly, all the motifs with α = β are expected to contribute to formation of self connected assemblies. In similarity to the motif {1, 1}, and in contrast to the other motifs, their contribution is not influenced by the synaptic latency. The similar dependence on the synaptic latency in motifs with the same value of α − β is illustrated in S5 Fig.

Formation of synfire chains in previous works

Fiete et al. [25] demonstrated that narrow synfire chains, in which single units project sequentially to each other, can emerge spontaneously under the combined influence of STDP and heterosynaptic competition. However, wide synfire chains did not emerge unless correlated inputs were fed into the network, even though the STDP simulations implicitly included motifs of all orders. Our results suggest why it was difficult to obtain wide synfire chains robustly in this work: first, Fiete et al. did not include in their model self depression, which can suppress the contribution of the first order motif, bringing the plasticity dynamics to an appropriate regime (see Fig 6A). In particular, it is likely that the choice of parameters was such, that the relative contribution of the third order motif was not sufficiently large.

Interestingly, wide (but sparsely connected) synfire chains were spontaneously produced in another recent work [44], which considered a simplified model of neural and synaptic dynamics, operating in discrete time bins. By applying our framework to this model, it is straightforward to see that only a small subset of the possible structural motifs contributed to plasticity, due to the simplified and discrete dynamics. In addition, the synaptic plasticity rules included an effective form of self-depression. Thus, the spontaneous formation of synfire chains in [44] is consistent with the predictions of our work.

Distribution of synaptic weights

Considerable theoretical attention has been devoted to the influence of STDP on the steady state distribution of synaptic weights [12, 13, 45–49]. This interest is partially motivated by the observation in specific brain areas of unimodal distributions of synaptic efficacies, often following approximately a log-normal distribution [1, 50]. Due to our interest in formation of synfire chains and self-connected assemblies, we focused on situations in which the steady-state weight distribution is bimodal. However, under certain choices of parameters which do not lead to the formation of ordered structures, we observe unimodal weight distributions (see, for example, the black area in Fig 6A which is characterized by strong synaptic self-inhibition [48]). It will be of interest in future studies, to ask whether it is possible to obtain highly ordered structures, in which the non-vanishing weights follow broad distributions, perhaps under a softer implementation of the synaptic competition.

It will also be interesting in future studies to consider situations in which connections exist between a subset of neuron pairs: for example, the structural connectivity may be sparse. The analytical framework that we developed can be directly applied to networks with an arbitrary adjacency matrix. In this case, only efficacies of structurally existing synapses should be updated based on Eqs 2 and 3 (note that in Eq 3, only those motifs that are realized in the structural connectivity graph can contribute to the sum, since the synaptic efficacies associated with non-existing connections vanish). Moreover, the formalism can be easily generalized to consider synapses with heterogeneous biophysical properties.

Significance for neural dynamics and structure

Nucleus HVC plays a key role in timing the vocal output of songbirds [21, 22]. This nucleus is a compelling candidate for a brain area that can organize autonomously to produce structured dynamics, since auditory deprived songbirds generate a song with a stereotypical temporal course [51, 52]. However, in almost all theoretical works that addressed how local plasticity rules give rise to temporal sequences of neural activity, it was necessary to provide some form of structured input into the network in order to robustly produce the sequential neural activity [25, 53–55]. Similarly, structured inputs were required in order to robustly produce self connected assemblies, which give rise to another useful form of neural dynamics, characterized by multiple stable states [27–31]. It is therefore significant that synaptic structures which support structured neural dynamics can emerge in a neural circuit without any preexisting order in the synaptic organization, and without any exposure to external stimuli. Appropriate choices of the biophysical parameters, which enable this type of autonomous organization, became apparent by applying the theoretical formalism and reasoning developed in this work.

Finally, we briefly mention another area of future work, in which the formalism developed here may find a useful application: we expect that the analysis of synaptic dynamics in terms of contributions from structural motifs, will be valuable for assessing the role of STDP in shaping the high order statistics of cortical connectivity, as experimental data on these statistics become increasingly available [1, 2, 32].

Methods

Network dynamics

The time dependent activity of neuron i is a stochastic realization of an inhomogeneous Poisson process, with expectation value

| (5) |

where N is the number of neurons, W is the connectivity matrix, a(t) is the synaptic current, bi is a constant external input, and is the spike train of the neuron k (where are spike times of the neuron). We assume that the neurons do not excite themselves, meaning that ∀i Wii = 0.

An analytical expression for the synaptic drift

Assuming that all spike pairs contribute to STDP, the change in the synaptic efficacies due to STDP can be expressed as follows:

| (6) |

where

| (7) |

is the change in synaptic efficacy arising from spikes in the post synaptic neuron i at time t, and

| (8) |

is the change following a spike in the pre synaptic neuron j at time t. In both terms, the integration is over all previous spikes of the presynaptic neuron (Eq 7) or the postsynaptic neuron (Eq 8).

We define the correlation function of spikes in each pair of cells as follows,

| (9) |

where 〈 ⋅ 〉 denotes averaging over different realizations of the Poisson dynamics for a given connectivity. For constant external input, and under the assumption of slow learning, the correlation function is stationary, and does not depend on t. We denote the rate of change in the synaptic efficacy, averaged over realizations of the Poisson dynamics as

| (10) |

and refer to it in short as the synaptic drift. Using the correlation function we can express the synaptic drift as follows,

| (11) |

For linear dynamics the correlation function can be written exactly. In the frequency domain [36],

| (12) |

where ri = 〈λi〉, the diagonal matrix Dij = δij rj, I denotes the unit matrix, and we use the convention in which the Fourier transform of a function g(t) is defined as .

The average firing rate can be easily obtained from Eq 5:

| (13) |

By substituting Eq 12 in Eq 11, we obtain Eq 2. Note that the diagonal terms should be ignored, since we assume that there is no synapse from a neuron to itself.

Power series expansion of the average learning dynamics

The matrix inverses appearing in Eq 12 can be expanded using the matrix identity [37]

| (14) |

In our context, this substitution can be seen as an expansion in powers of the synaptic efficacies. We assume that the synaptic efficacies are sufficiently weak, such that for all values of ω, all eigenvalues of are smaller (in absolute magnitude) than unity. Note that below we normalize the synaptic current such that , and for all ω. In this case the requirement is that all eigenvalues of W are smaller (in absolute magnitude) than unity.

Using Eq 14 we rewrite Eq 2 as Eq 3, where we define:

| (15) |

and

| (16) |

Note that fα, β are dimensionless, and f0 has dimensions of time. In the time domain,

| (17) |

where cα, β can be written as a series convolutions of the synaptic current function:

| (18) |

The way fα, β is determined from the synaptic current and the STDP function is illustrated in Fig 10 for a few examples.

Fig 10. Dependence of motif coefficients on the time course of STDP and time course of post synaptic currents.

A-F. Examples illustrating how the coefficients fα, β are determined for three motifs. Solid lines: motif driven spike correlations (Eq 18). Dashed lines: the STDP function (chosen to be antisymmetric). Yellow area: the integral of the product of these two functions, which determines the motif coefficient. For simplicity, we consider only the correlation structure induced by the excitatory synaptic connectivity. A. The correlation time course (solid line) induced by the motif {1, 0} is simply the synaptic current function. B. In comparison with A, the correlation time course induced by the motif {2, 0} peaks later and is more widespread, because the coupling between the pre and the post synaptic neurons is mediated through an intermediate neuron. C. The correlation function induced by the third order motif {2, 1} peaks at a similar time as in A, because in both motifs α − β = 1, meaning that the paths leading to neurons i and j differ in length by one synapse. D-F. A synaptic latency of 3 ms affects the overlap between the correlation time course and the STDP function, and thus the motif coefficients (see also Fig 5). D. In the motif {1, 0} the correlation time course is shifted by 3 ms relative to panel A, leading to a decrease in the overlap with the STDP function and a decrease in f1,0. E. In the motif {2, 0} the spike correlation function (solid line) is shifted by 6 ms relative to panel B because two synaptic latencies accumulate along the path from the pre synaptic neuron j to the post synaptic neuron i. The overlap with the STDP function and the coefficient f2,0 are sharply reduced. F. In the motif {2, 1} the spike correlation function is shifted by 3 ms relative to panel because the relative latency, incurred along the paths from the source neuron to neurons i and j amounts to a single synaptic latency. The time course of correlations arising from this motif (solid line) is very broad, and therefore shifting it relative to the STDP trace has relatively little influence on the overlap with the STDP function and on f2,1.

Full plasticity dynamics

We consider N excitatory neurons, with modifiable recurrent connections. In addition to the STDP, the excitatory synapses undergo heterosynaptic competition, and possibly constant self depression and growth at a constant rate. The full plasticity dynamics of these synapses can be summarized by the following expression:

| (19) |

The terms represent the heterosynaptic competition [25]: competition over the input to neuron i,

| (20) |

and competition over the outputs from neuron j:

| (21) |

Here, Θ(x) is the Heaviside step function:

When ψ is sufficiently large, the competition guarantees that the sum over each row and column of Wex does not exceed Wmax. Finally, the term represents self depression (constant weakening of the synapse in proportion to the synaptic efficacy), and the term γ represents a constant growth of each weight at a fixed rate.

In addition to these rules, the excitatory synapses are restricted to the range [0, wmax].

Inhibitory synapses

In all plasticity simulations, the connectivity between each pair of neurons was divided into excitatory and inhibitory components,

| (22) |

where Wex are the excitatory synaptic efficacies, which satisfy the dynamics described above, and Win are effective inhibitory synaptic efficacies. These have the following structure:

| (23) |

Because Wik does not depend on k, this form of inhibition can be interpreted as arising from an inhibitory drive which is proportional to the total activity within the network, and is mediated by inhibitory interneurons with fast synapses. The sum of inhibitory synapses into each neuron is dynamically adjusted to balance the sum of the excitatory synapses.

Simulations

Power series expansion simulations

In Figs 4, 6, 7B and 7C (Gray and red lines), and in S2 Fig, STDP is modeled as in Eq 3, but with a small number of non-vanishing coefficients fα, β which are set as in Table 1. In Fig 7B and 7C the coefficients fα, β which are not set to zero are directly evaluated from the STDP function and the synaptic current function.

Table 1. The coefficients fα, β in Figs 4, 6 and S2.

| f1,0 | f0,1 | f2,0 | f0,2 | f1,1 | f2,1 | f1,2 | |

|---|---|---|---|---|---|---|---|

| Fig 4C | 1 | −1 | 1 | −1 | 0 | left panel: 0 right panel: 16 |

−f2,1 |

| Fig 4D | 0.25 | 0.25 | 0 | 0 | left panel: 0 right panel: 1.8 |

0 | 0 |

| Fig 6A | * | * | 0 | 0 | 0 | 20 | −20 |

| Fig 6B | * | −30 | 0 | 0 | 0 | * | −f2,1 |

| S2A Fig | −2 | −30 | * | −f2,0 | 0 | * | −f2,1 |

| S2B Fig | −2 | −30 | 0 | 0 | * | * | −f2,1 |

| S2C Fig | * | * | 0 | 0 | 0 | 20 | −20 |

* Values are specified in the Figure.

Average learning simulations

In the average learning simulations (Figs 7, 8, S1, S3 and S4), the contribution of STDP to the synaptic drift was evaluated numerically in the Fourier domain, using Eq 2.

Stochastic Poisson learning simulations

In the stochastic simulations (Figs 8, 9 and S1) the STDP term was explicitly determined by the spiking activity in the network (Eqs 6–8).

Simulations parameters

Synaptic current function: In all simulations,

| (24) |

where τ1 = 5ms, τ2 = 1s, and d is the synaptic latency. The coefficient a0 normalizes the synaptic current such that . This choice sets a meaningful scale for W: if Wij = 1, a single spike in a pre synaptic neuron j increases (or decreases), on average, the number of spikes emitted by the post synaptic neuron i by one.

STDP function: In Figs 5, 7, 8, 10, S1 and S3 we used the following antisymmetric STDP function:

| (25) |

where:

| (26) |

h0 = 104, τ1 = 3ms, τ1 = 2sec, and , A− = −A+.

In Fig 9 we used a symmetric STDP function:

| (27) |

where σ = 12ms, A = 5.2 ⋅ 104.

In S4 and S5 Figs we used the following asymmetric (but not antisymmetric) STDP function:

| (28) |

where:

| (29) |

, , , τ2 = 2sec, , and .

Limitation on the total synaptic input and output: In all simulations,

| (30) |

The parameter M affects the number of neurons in each group when the ordered structures emerge [25].

Learning rate: In all simulations except the stochastic simulations, the learning dynamics were implemented using the Euler method with an adaptive time step, chosen such that the maximal change in the weights in each step was 0.0005 (Fig 4), 0.0001 (Figs 6 and S2) and 0.02 (Figs 7, S3 and S4), and 0.002 (Fig 8).

Initial connectivity: The initial weights were chosen independently from a uniform distribution between 0 and wmax M/N (Figs 4, 6, 9 and S2), and between 0 and 1.5wmax M/N (Figs 7, 8, S3 and S4).

Simulations duration and convergence criterion: The convergence criterion was that in the last 10 iterations the absolute change in each element of the matrix did not exceed e−15. The stochastic simulations were conducted using stochastic Euler dynamics with a time step of 0.25 ms.

Other parameters are summarized in Tables 2 and 3.

Table 2. Simulation parameters.

| Fig 4 | Fig 6 | Fig 7 | Fig 8 | Fig 9 | |

|---|---|---|---|---|---|

| N |

Fig 4C: 20 Fig 4D: 24 |

20 | 20 | 20 | 20 |

| ψ | 5 ⋅ 104 s−1 | 2 ⋅ 103 s−1 | 5 ⋅ 104 s−1 | 5 ⋅ 104 s−1 | 105 s−1 |

| η | 10−8 | 10−8 | 10−8 |

Fig 8A: 2 ⋅ 10−7

Fig 8B: 1 ⋅ 10−7 Fig 8C: * Fig 8D: 4 ⋅ 10−7 |

2.5 ⋅ 10−9 |

| b | 0 | 15Hz | 15Hz | 15Hz | 5Hz |

| μ | – | – |

Fig 7B: *

Fig 7C: 300b |

300b | 0 |

| M | 5 | 5 | 5 | 5 | 4 |

| wmax | |||||

| γ | 0 | 225s−1 | 225s−1 | 225s−1 | 0 |

| d | - | - |

Fig 7B: 0 Fig 7C: * |

Fig 8A: 6 ms Fig 8B: 6 ms Fig 8C: 6 ms Fig 8D: * |

5.25 ms |

* Values are specified in the Figure.

Table 3. Supplementary parameters.

| S1 Fig | S2 Fig | S3 Fig | S4 Fig | |

|---|---|---|---|---|

| N | 20 | 20 | 20 | 20 |

| ψ | – | 2 ⋅ 103 s−1 | 5 ⋅ 104 s−1 | 5 ⋅ 104 s−1 |

| η | 4 ⋅ 10−7 | 10−8 | 10−8 | 10−8 |

| b | 15Hz | 15Hz | 15Hz | 15Hz |

| μ | – | – | 300b | 600b |

| M | 5 | 5 | 4 | 4 |

| wmax | * | |||

| γ | 225s−1 | 225s−1 | 225s−1 | 0 |

| d | 0 | - | * | * |

* Values are specified in the Figure.

Chain score and ordering of connectivity matrices

To classify groups of neurons that share similar connectivity, we performed k-means classification on a set of N vectors, where the i-th vector includes all the excitatory input and output synaptic efficacies of neuron i: , and using a squared Euclidean distance. We then reordered the neurons (and the connectivity matrix) based on the groups identified by the k-means clustering. When searching for synfire chain structure, we chose the order of groups as follows: We randomly chose one group and set it as the first group. We then looked for a remaining group that receives the largest total synaptic input from the first group, and set it as the next group. This process was repeated to include all groups. Next, we compared the ordered connectivity matrix to an “ideal” binary connectivity matrix that represents complete feed forward connectivity between the groups, or complete clustering into self connected groups. The chain score is defined as: 1 − x, where x is the normalized square distance between the ordered matrix, scaled by the largest element, and a matrix representing ideal feed forward connectivity (or a perfect arrangement of self connected clusters). Finally we maximized the similarity score over a range of values of k.

Supporting Information

Scatter plot of the analytical expression for the average change in the synaptic efficacy (vertical axis), against the change in the synaptic efficacy generated by STDP (Eqs 6–8) in a stochastic simulation of stochastic spiking neurons, averaged over 6 minutes (A), 1 hour (B), and 20 hours (C). Each point represents the change in one synapse, where all synapses belong to the same connectivity matrix. The standard deviation (STD) listed under each panel is the mean square distance, across all synaptic pairs, between the change of the synaptic efficacy in the stochastic simulation and the prediction of the deterministic theory. The synaptic efficacies are drawn independently from a uniform distribution between , where Wmax = 0.9, N = 20, and are kept fixed during the stochastic simulations.

(TIF)

A. Chain score as a function of the motif coefficients f2,0 (horizontal axis) and f2,1 (vertical axis). B. Chain score as a function of the motif coefficients f1,1 (horizontal axis) and f2,1 (vertical axis). The simulations in A and B include also a contribution from the motif {0, 1} with fixed f0,1, and f1,2 = −f2,1. C. Reproduction of Fig 6A. The red cross designates a set of motif coefficients identical to those marked by red crosses in panels A-B. In all panels, each data point represents an average over ten simulations, each with a different realization of the initial random connectivity.

(TIF)

Chain score of the steady state connectivity, obtained from simulations of the complete dynamics (Eq 2). Horizontal axis: synaptic latency. Each line corresponds to simulations with a different choice of Wmax: 0.9 (black), 0.7 (red), 0.5 (gray). Each data point represents an average over ten simulations, each with a different realization of the initial random connectivity, and the error bars represent the standard deviation of the chain score. All other parameters are specified in Methods.

(TIF)

A. Chain score of the steady state connectivity, obtained from simulations of the complete dynamics (Eq 2) with a non antisymmetric STDP function (see Methods). Horizontal axis: synaptic latency. Each data point represents an average over ten simulations, each with a different realization of the initial random connectivity. B. An illustration of the non antisymmetric STDP function.

(TIF)

A. Contribution of motifs with α − β = 1 weakly depends on the synaptic latency. B. Contribution of motifs with α = β does not depend on the synaptic latency. C. Contribution of motifs with α − β = 2 decays rapidly as a function of the synaptic latency. All the parameters are specified in Methods.

(TIF)

Acknowledgments

We thank Mor Nitzan and Idan Segev for useful discussions, and Nimrod Shaham for comments on the manuscript.

Data Availability

The paper is a theoretical work and does not contain experimental data. All the parameters required to reproduce our results are specified in the Methods section.

Funding Statement

This research was supported by the Israel Science Foundation grant No. 1733/13 and (in part) by grant No. 1978/13, and by the Gatsby Charitable Foundation. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Song S, Sjo PJ, Reigl M, Nelson S, Chklovskii DB. Highly nonrandom features of synaptic connectivity in local cortical circuits. Plos Biology. 2005;3(3):507–519. 10.1371/journal.pbio.0030068 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Yoshimura Y, Dantzker J, Callaway E. Excitatory cortical neurons from fine-scale functional networks. Nature. 2005;433:868–873. 10.1038/nature03252 [DOI] [PubMed] [Google Scholar]

- 3. Kampa BM, Letzkus JJ, Stuart GJ. Cortical feed-forward networks for binding different streams of sensory information. Nature Neuroscience. 2006;9(12):1472–1473. 10.1038/nn1798 [DOI] [PubMed] [Google Scholar]

- 4. Morrison A, Diesmann M, Gerstner W. Phenomenological models of synaptic plasticity based on spike timing. Biological Cybernetics. 2008;98(6):459–478. 10.1007/s00422-008-0233-1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Holtmaat A, Svoboda K. Experience-dependent structural synaptic plasticity in the mammalian brain. Nature Reviews Neuroscience. 2009;10(9):647–658. 10.1038/nrn2699 [DOI] [PubMed] [Google Scholar]

- 6. Hebb DO. The organization of behavior; a neuropsychological theory. New York: Wiley; 1949. [Google Scholar]

- 7. Markram H, Lübke J, Frotscher M, Sakmann B. Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science. 1997;275(5297):213–215. 10.1126/science.275.5297.213 [DOI] [PubMed] [Google Scholar]

- 8. Bi GQ, Poo MM. Synaptic modifications in cultured hippocampal neurons: dependence on spike timing, synaptic strength, and postsynaptic cell type. The Journal of Neuroscience. 1998;18(24):10464–10472. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Froemke RC, Dan Y. Spike-timing-dependent synaptic modification induced by natural spike trains. Nature. 2002;416(6879):433–438. 10.1038/416433a [DOI] [PubMed] [Google Scholar]

- 10. Woodin MA, Ganguly K, Poo MM. Coincident pre- and postsynaptic activity modifies GABAergic synapses by postsynaptic changes in Cl- transporter activity. Neuron. 2003;39(5):807–820. 10.1016/S0896-6273(03)00507-5 [DOI] [PubMed] [Google Scholar]

- 11. Kempter R, Gerstner W, van Hemmen LJ. Hebbian learning and spiking neurons. Physical Review E. 1999;59(4):4498–4514. 10.1103/PhysRevE.59.4498 [DOI] [Google Scholar]

- 12. Gütig R, Aharonov R, Rotter S, Sompolinsky H. Learning input correlations through nonlinear temporally asymmetric Hebbian plasticity. The Journal of Neuroscience. 2003;23(9):3697–3714. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Babadi B, Abbott LF. Intrinsic stability of temporally shifted spike-timing dependent plasticity. PLoS Computational Biology. 2010;6(11):e1000961 10.1371/journal.pcbi.1000961 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Vogels TP, Sprekeler H, Zenke F, Clopath C, Gerstner W. Inhibitory Plasticity Balances Excitation and Inhibition in Sensory Pathways and Memory Networks. Science. 2011;334(6062):1569–1573. 10.1126/science.1211095 [DOI] [PubMed] [Google Scholar]

- 15. Luz Y, Shamir M. Balancing feed-forward excitation and inhibition via Hebbian inhibitory synaptic plasticity. PLoS Computational Biology. 2012. January;8(1):e1002334 10.1371/journal.pcbi.1002334 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16. Burkitt aN, Gilson M, Van Hemmen JL. Spike-timing-dependent plasticity for neurons with recurrent connections. Biological Cybernetics. 2007;96(5):533–546. 10.1007/s00422-007-0148-2 [DOI] [PubMed] [Google Scholar]

- 17. Babadi B, Abbott LF. Pairwise Analysis Can Account for Network Structures Arising from Spike-Timing Dependent Plasticity. PLoS Computational Biology. 2013;9(2). 10.1371/journal.pcbi.1002906 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Song S, Abbott LF. Cortical Development and Remapping through Spike Timing-Dependent Plasticity. Neuron. 2001. October;32(2):339–350. 10.1016/S0896-6273(01)00451-2 [DOI] [PubMed] [Google Scholar]

- 19. Ocker GK, Litwin-Kumar A, Doiron B. Self-Organization of Microcircuits in Networks of Spiking Neurons with Plastic Synapses. PLoS Computational Biology. 2015;11(8):e1004458 10.1371/journal.pcbi.1004458 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Abeles M. Corticonics: Neural circuits of the cerebral cortex. Cambridge University Press; 1991. [Google Scholar]

- 21. Hahnloser RHR, Kozhevnikov Aa, Fee MS. An ultra-sparse code underlies the generation of neural sequences in a songbird. Nature. 2002;419(6902):65–70. 10.1038/nature00974 [DOI] [PubMed] [Google Scholar]

- 22. Long Ma, Jin DZ, Fee MS. Support for a synaptic chain model of neuronal sequence generation. Nature. 2010;468(7322):394–399. 10.1038/nature09514 [DOI] [PMC free article] [PubMed] [Google Scholar]