Abstract

Nonrandomized studies are essential in the postmarket activities of the US Food and Drug Administration, which, however, must often act on the basis of imperfect data.

Systematic errors can lead to inaccurate inferences, so it is critical to develop analytic methods that quantify uncertainty and bias and ensure that these methods are implemented when needed. “Quantitative bias analysis” is an overarching term for methods that estimate quantitatively the direction, magnitude, and uncertainty associated with systematic errors influencing measures of association.

The Food and Drug Administration sponsored a collaborative project to develop tools to better quantify the uncertainties associated with postmarket surveillance studies used in regulatory decision making. We have described the rationale, progress, and future directions of this project.

Nonrandomized study designs play an essential role in the activities of the US Food and Drug Administration (FDA). Beginning with the FDA Amendments Act of 2007, Congress mandated that the FDA use observational health care data to conduct active surveillance of risks associated with medical products. In response, the FDA launched the Sentinel Initiative1 in May 2008. Mini-Sentinel is a pilot program for the Sentinel Initiative and includes about 178 million individuals as of July 2014.2,3 Sentinel data partners maintain patient data, and requests to analyze the data are processed through a clearinghouse to protect patient privacy. Regulatory agencies other than the FDA also use nonrandomized data. For example, in response to the Patient Protection and Affordable Care Act of 2010, Congress authorized the establishment of the Patient-Centered Outcomes Research Institute4 to fund comparative clinical effectiveness research. Some of this research uses large-scale observational studies that include data from the Centers for Medicare and Medicaid Services.

Nonrandomized designs are used in three stages of medical product postmarket surveillance: in signal generation, such as data mining in the FDA Adverse Event Reporting System and Vaccine Adverse Event Reporting System; in signal refinement, such as active surveillance in FDA Mini-Sentinel and Centers for Disease Control and Prevention Vaccine Safety Datalink; and in signal evaluation, such as hypothesis-driven epidemiological studies involving individual-level data.5 Nonrandomized studies are also used in benefit–risk assessment of medical products (less so with regard to benefits), which is “the basis of FDA’s regulatory decisions in the premarket and postmarket review process.”6(p1) Large-scale observational data are especially useful for studies of low-probability adverse events that cannot be detected with sufficient precision during premarket randomized clinical trials. One can reasonably expect that these activities will substantially increase the use of nonrandomized epidemiological study designs in regulatory activities.

Although nonrandomized studies can provide critical insights into causal relations and inform regulatory decisions, “in many cases, FDA must draw conclusions from imperfect data.”6(p9),7 It is important, therefore, to identify and evaluate the sources of uncertainty, such as the “absence of information, conflicting findings, [and] marginal results.”6(p9),7 Both random error and systematic error contribute to the uncertainty of a study’s results, but systematic error is often the main concern in nonrandomized studies. Bias arising from systematic errors in epidemiological research can lead to inaccurate inferences, so it is critical to develop or adapt analytic methods that accurately and transparently quantify uncertainty and bias in these findings and to ensure that these methods are implemented routinely. Efforts to do so are already under way. For example, the Prescription Drug User Fee Act V implementation plan for benefit–risk analysis includes plans to develop methods that characterize uncertainty,6 and in 2014 the Institute of Medicine held a two-part workshop specifically focused on this issue.7

Quantitative bias analysis is an overarching term applied to methods that estimate quantitatively the direction, magnitude, and uncertainty associated with systematic errors that influence measures of association. Methods for implementing quantitative bias analysis have been well described,8,9 and good practices for quantitative bias analysis, including the acknowledgment of its underlying assumptions and limitations, have been outlined.10 Others have noted that quantitative bias analysis would be useful in the regulatory arena.10,11 To our knowledge, however, no regulatory authority has systematically incorporated quantitative bias analysis into its consideration of epidemiological research as part of the evidence base supporting health policymaking.

We describe an FDA-sponsored initiative to customize quantitative bias analysis tools for use with epidemiological studies of vaccine safety and to incorporate the results of these bias analyses into the FDA’s efforts to achieve the regulatory mission of protecting and advancing public health. Although our initial focus is on vaccine safety, the methods and tools are flexible and can be adapted to other safety and effectiveness studies and to benefit–risk studies.

INTRODUCTION TO BIAS ANALYSIS AND ITS UTILITIES

Random error is the error in a study’s findings that results from sampling variability. Assessments of random error are ubiquitous in epidemiological research and are typically quantified using frequentist P values or 95% confidence intervals (CIs). An important hallmark of random error is that it decreases as study size increases, approaching zero as the study becomes very large. “Systematic error,” also referred to as “bias,” is error that results from flaws in a study’s design, conduct, or data analysis. Unlike random error, systematic error does not decrease as study size increases. Although random error is nearly always quantified in presentations of epidemiological research, the impact of systematic error on study findings is rarely quantified,10 even though quantitative methods have long been available.
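The contrast between random and systematic error can be made concrete with a minimal Python sketch. Everything in it is a hypothetical assumption, not taken from the source: a true rate ratio of 10, expected (not sampled) counts, and nondifferential exposure misclassification with sensitivity 0.60 and specificity 0.995. Scaling the study up narrows the log-scale Wald CI, but the point estimate stays at the same biased value.

```python
import math

# Hypothetical truth, not taken from the source: true rate ratio = 10.
RATE_EXPOSED = 80e-6      # cases per person-year among the truly exposed
RATE_UNEXPOSED = 8e-6     # cases per person-year among the truly unexposed
SENS, SPEC = 0.60, 0.995  # nondifferential exposure classification (hypothetical)


def expected_observed_table(k):
    """Expected observed cases and person-time for a study scaled k-fold."""
    pt1, pt0 = 60_000 * k, 7_000_000 * k
    a, b = RATE_EXPOSED * pt1, RATE_UNEXPOSED * pt0
    # Misclassification moves cases and person-time between exposure rows.
    a_obs = SENS * a + (1 - SPEC) * b
    b_obs = (1 - SENS) * a + SPEC * b
    pt1_obs = SENS * pt1 + (1 - SPEC) * pt0
    pt0_obs = (1 - SENS) * pt1 + SPEC * pt0
    return a_obs, pt1_obs, b_obs, pt0_obs


def rate_ratio_wald(a, pt1, b, pt0, z=1.96):
    """Rate ratio with a Wald CI computed on the log scale."""
    rr = (a / pt1) / (b / pt0)
    se_log = math.sqrt(1 / a + 1 / b)
    return rr, rr * math.exp(-z * se_log), rr * math.exp(z * se_log)


for k in (1, 10, 100):
    rr, lo, hi = rate_ratio_wald(*expected_observed_table(k))
    print(f"size x{k:<3}  RR = {rr:.2f}  95% CI {lo:.2f} to {hi:.2f}")
```

Under these assumptions the observed RR is about 5.4 at every study size, while the true value is 10; only the interval shrinks.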

Quantitative bias analysis methods date to the 1950s, when Bross described methods to assess the impact of misclassification12 and Cornfield described methods to assess the impact of uncontrolled confounding.13 These simple approaches used only the two-by-two contingency tables summarizing the study data and plausible assumptions about sources of bias, yet they provided powerful insight into the impact that systematic error could have had on study findings. More recently, methods have been developed to account for the uncertainty in the evaluation of bias using Monte Carlo simulation methods,8,11 Bayesian methods,9,14 empirical methods,15 and missing data methods.14 The basic approach for all methods of quantitative bias analysis is to assess the likely sources of systematic error in a study (typically uncontrolled confounding, selection bias, and information bias); relate the biases to the observed data through bias models; quantify the direction, magnitude, and uncertainty associated with the biases by assigning plausible values to the parameters of the bias models; and interpret the results of the study in light of this assessment of bias.10

Quantitative bias analysis can have different objectives, and the goal of the analysis guides the details of the methodology. One of the most common uses is to estimate the direction, magnitude, and uncertainty resulting from one or more sources of systematic error in a study.8,10 A second common rationale for quantitative bias analysis arises when the analyst has little information to guide the choice of values for a bias analysis but believes sources of systematic error likely affected the study results.8,10 In this case, the analyst may feel uncomfortable making strong assumptions about the values to assign to bias parameters but may still want to explore how sensitive the study findings are to plausible ranges for the values assigned to the bias parameters.

In such cases, rather than specifying the bias parameters a priori, one instead estimates the amount of bias that would be required to change an estimate of association to a target value. This target value could be the null value, but any value that would lead to a substantively different inference is possible for the target. Judgments can then be made as to whether the values that must be assigned to the bias parameters to reach the target are plausible. A third, less well-recognized motivation for quantitative bias analysis is the desire to counter the human tendency to reason poorly in the face of uncertainty.8,10 A large body of research has documented how humans are prone to error when making judgments when given imperfect information,16,17 and judgments about biases affecting epidemiological research are likely no exception.8
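A target-value analysis of this kind can be sketched for a single unmeasured binary confounder. The sketch assumes the simple external-adjustment bias model (no effect modification, the same confounder–outcome rate ratio in both exposure groups). That model is our choice for illustration and is not necessarily the one used by the FDA tool. Given assumed confounder prevalences among the exposed (`p1`) and unexposed (`p0`), the sketch solves for the confounder–outcome rate ratio that would move an observed RR to a chosen target. The function names and prevalence values are hypothetical.

```python
def confounding_bias_factor(rr_cd, p1, p0):
    """Bias factor for a binary unmeasured confounder C.

    rr_cd: assumed rate ratio relating C to the outcome
    p1, p0: assumed prevalence of C among the exposed and the unexposed
    """
    return (p1 * (rr_cd - 1) + 1) / (p0 * (rr_cd - 1) + 1)


def rr_cd_to_reach_target(rr_obs, target, p1, p0):
    """Confounder-outcome RR that would shift rr_obs down to target.

    Returns None when no confounder with these prevalences could produce
    that much bias (a solution requires p1 > (rr_obs / target) * p0).
    """
    if not 0 < target < rr_obs:
        raise ValueError("sketch handles only targets below the observed RR")
    b = rr_obs / target            # bias factor that must be explained away
    denom = p1 - b * p0
    if denom <= 0:
        return None
    return 1 + (b - 1) / denom


# Hypothetical prevalences, chosen only for illustration.
for p1, p0 in [(0.6, 0.1), (0.5, 0.1)]:
    needed = rr_cd_to_reach_target(5.0, 1.0, p1, p0)
    msg = "not reachable" if needed is None else f"{needed:.1f}"
    print(f"p1 = {p1}, p0 = {p0}: confounder-outcome RR needed = {msg}")
```

With prevalences of 0.6 and 0.1, an observed RR of 5.0 reaches the null only if the confounder raises the outcome rate about 41-fold. With 0.5 and 0.1, no confounder strength suffices. The analyst then judges whether such values are plausible.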

It may seem adequate to discuss sources of systematic error qualitatively in the limitations section of an article and to allow the reader to estimate how much to shift the point estimate to account for the bias and how much to widen the CI. Numerous examples demonstrate, however, that such attempts are prone to predictable errors and are typically no better among experts than among those without specialized training.17 A final motivation of quantitative bias analysis is to identify important, and often critical, differences between stakeholder assumptions about sources of bias in the literature on a topic. Groups of stakeholders may have different assumptions about the proper bias model or the values to assign to the bias model’s parameters. If the results of their bias analyses differ sufficiently to yield different inferences, results of the bias analysis provide a guide for efficient allocation of resources for further research.18,19

THE FDA BIAS ANALYSIS PROJECT

Against this background, the FDA sponsored a collaborative project to enhance the validity of postmarket surveillance studies for safety signals. Postlicensure safety surveillance of biologic products relies mostly on observational studies, in which bias can make appropriate inference difficult. Because adverse events of interest are often rare, postlicensure safety studies tend to involve large study populations. In some cases, events are so rare that cases are sparse and estimates of association are imprecise.20,21 When outcomes are less rare, the large sample size may yield relatively precise estimates of risk if one considers only random error. However, these large observational studies are often susceptible to systematic errors in data capture. Our specific aim, therefore, was to create a user-friendly computing tool with broad utility that uses quantitative bias analysis to adjust for different types of bias in different study designs.

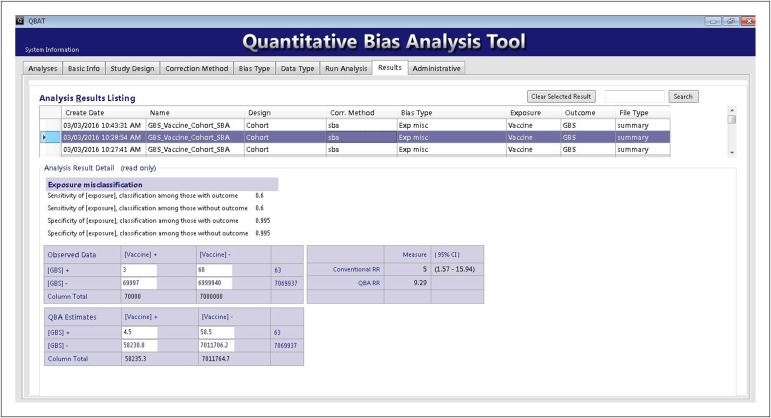

The tool’s user interface first gathers critical information, such as study design type, bias types and parameters, data type (e.g., record or summary level), and analysis method. It then provides users with a screen that prompts them to input the appropriate data and bias parameters for their analysis. Finally, the tool estimates the bias in the data considering the parameters, provides adjusted point estimates and simulation intervals that account for both random and systematic error, and reports the output back to the analyst.
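A simulation interval of the kind the tool reports can be sketched as a probabilistic bias analysis for exposure misclassification. This minimal Python sketch is not the FDA tool’s implementation. Its uniform distributions for sensitivity and specificity are hypothetical; the source reports only the point values 60.0% and 99.5%, which are used in the GBS example later in this article, and the sketch applies that example’s counts. Draws that imply nonpositive corrected counts are discarded. Random error is added using the conventional standard error of the log RR, which is one common simplification.

```python
import math
import random
import statistics


def correct_misclassified_exposure(a, pt1, b, pt0, sens, spec):
    """Back-calculate cases and person-time by true exposure status, assuming
    nondifferential exposure misclassification. Returns None if the bias
    parameters are incompatible with the observed data."""
    m, t = a + b, pt1 + pt0            # totals do not depend on exposure status
    denom = sens + spec - 1
    a_c = (a - (1 - spec) * m) / denom
    pt1_c = (pt1 - (1 - spec) * t) / denom
    b_c, pt0_c = m - a_c, t - pt1_c
    if min(a_c, pt1_c, b_c, pt0_c) <= 0:
        return None
    return a_c, pt1_c, b_c, pt0_c


def simulation_interval(a, pt1, b, pt0, sens_range, spec_range,
                        n_iter=20_000, random_error=True, seed=1):
    """Median and 2.5th/97.5th percentiles of bias-adjusted rate ratios."""
    rng = random.Random(seed)
    se_log = math.sqrt(1 / a + 1 / b)  # conventional SE of log(RR)
    draws = []
    for _ in range(n_iter):
        corrected = correct_misclassified_exposure(
            a, pt1, b, pt0, rng.uniform(*sens_range), rng.uniform(*spec_range))
        if corrected is None:
            continue                   # discard impossible parameter draws
        a_c, pt1_c, b_c, pt0_c = corrected
        log_rr = math.log((a_c / pt1_c) / (b_c / pt0_c))
        if random_error:
            log_rr -= rng.gauss(0.0, 1.0) * se_log
        draws.append(math.exp(log_rr))
    cuts = statistics.quantiles(draws, n=40)   # cut points at 2.5% steps
    return statistics.median(draws), cuts[0], cuts[-1]


# Hypothetical uniform ranges around Se = 0.60 and Sp = 0.995.
med, lo, hi = simulation_interval(3, 70_000, 60, 7_000_000,
                                  sens_range=(0.50, 0.70),
                                  spec_range=(0.993, 0.997))
print(f"median RR = {med:.1f}, 95% simulation interval {lo:.1f} to {hi:.1f}")
```

Setting both ranges to single points and `random_error=False` collapses the interval to the simple corrected estimate. This is a quick check that the probabilistic version agrees with the deterministic one.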

To allow testing of the quantitative bias analysis tool and new adjustment methods, we created an additional program that simulates realistic claims data for a source population exposed to different vaccines and monitored for adverse effects related to those exposures. Individual analytic data sets can then be extracted from the source population according to a desired study design. Users can input various parameter values to create the source population, including confounders, true measures of association, and types of bias to inject into the data. Because the true measure of association is known, these simulated data sets allow users to examine the impact of bias on conventional estimates. A simulated source population cannot completely mimic real data, but it has the advantage that the true underlying effects are known, so our methods can be tested accurately.
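A toy version of such a simulation is sketched below in Python. It is not the project’s simulation program, and every parameter is hypothetical: a closed cohort followed for one year, no confounders, a true risk ratio of 3.0, and nondifferential exposure misclassification with sensitivity 0.60 and specificity 0.95 injected into the recorded exposure. Because the truth is known, the attenuation caused by the injected bias can be read off directly.

```python
import random


def simulate_cohort(n=200_000, p_exposed=0.10, risk_unexposed=0.01,
                    true_rr=3.0, sens=0.60, spec=0.95, seed=7):
    """Hypothetical source population with a known true risk ratio.
    Returns records of (true exposure, recorded exposure, outcome)."""
    rng = random.Random(seed)
    records = []
    for _ in range(n):
        exposed = rng.random() < p_exposed
        risk = risk_unexposed * (true_rr if exposed else 1.0)
        outcome = rng.random() < risk
        # Inject nondifferential exposure misclassification into the record.
        if exposed:
            recorded = rng.random() < sens
        else:
            recorded = rng.random() >= spec   # false positive with prob 1 - spec
        records.append((exposed, recorded, outcome))
    return records


def risk_ratio(records, use_recorded):
    """Risk ratio by true (index 0) or recorded (index 1) exposure."""
    idx = 1 if use_recorded else 0
    n1 = sum(1 for r in records if r[idx])
    n0 = len(records) - n1
    c1 = sum(1 for r in records if r[idx] and r[2])
    c0 = sum(1 for r in records if not r[idx] and r[2])
    return (c1 / n1) / (c0 / n0)


cohort = simulate_cohort()
print("RR by true exposure:    ", round(risk_ratio(cohort, use_recorded=False), 2))
print("RR by recorded exposure:", round(risk_ratio(cohort, use_recorded=True), 2))
```

With these settings the expected RR by recorded exposure is roughly 2.0 rather than 3.0. The simulated estimates vary around those values with the seed.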

To illustrate the utility of the quantitative bias analysis tool and the underlying methods, we developed a hypothetical example based on a study of the association between vaccination and the incidence of Guillain–Barré syndrome (GBS).20 Imagine a group of persons exposed to a hypothetical vaccine among whom 3 cases of GBS appear in 70 000 person-years following vaccination (incidence rate = 43 per million person-years). For comparison, imagine a second group of unvaccinated persons among whom 60 cases of GBS appear in 7 000 000 person-years (incidence rate = 8.6 per million person-years). The rate ratio (RR) associating receipt of the hypothetical vaccine with GBS equals 5.0, with a 95% CI of 1.6 to 16.
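As a check on the arithmetic, the following Python sketch computes both rates and the RR. The source does not state how its CI was obtained. A Wald interval on the log scale, with standard error √(1/3 + 1/60), reproduces the reported bounds, so we assume that method here.

```python
import math


def rate_ratio_wald(a, pt1, b, pt0, z=1.96):
    """Rates per million person-years, rate ratio, and log-scale Wald CI."""
    rate1, rate0 = a / pt1 * 1e6, b / pt0 * 1e6
    rr = rate1 / rate0
    se_log = math.sqrt(1 / a + 1 / b)   # SE of log(RR) for Poisson counts
    return rate1, rate0, rr, rr * math.exp(-z * se_log), rr * math.exp(z * se_log)


rate1, rate0, rr, lo, hi = rate_ratio_wald(3, 70_000, 60, 7_000_000)
print(f"{rate1:.0f} vs {rate0:.1f} per million person-years; "
      f"RR = {rr:.1f} (95% CI {lo:.1f} to {hi:.0f})")
# → 43 vs 8.6 per million person-years; RR = 5.0 (95% CI 1.6 to 16)
```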

We used bias analysis methods to investigate the potential influences of misclassification of vaccination status and of uncontrolled confounding by influenza vaccination. Estimates of the sensitivity and specificity of vaccination classification in administrative records equal 60.0% and 99.5%, respectively.21 Using these point estimates and assuming nondifferential misclassification, we obtained a revised point estimate of the RR associating the hypothetical vaccine and GBS occurrence of 9.3 (Figure 1). This suggests that the original estimate of 5.0 may have been biased toward the null by nondifferential misclassification.
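The source does not spell out the correction formula. One standard approach, which we assume here, back-calculates the expected exposed cases and person-time from the observed values. Under nondifferential misclassification, the observed exposed count equals Se × (true exposed) + (1 − Sp) × (true unexposed). Solving that relation for the true counts, applying it to both cases and person-time, and recomputing the RR gives a value consistent with the reported 9.3.

```python
def correct_exposure_misclassification(a, pt1, b, pt0, sens, spec):
    """Expected true-exposure cases and person-time, assuming nondifferential
    exposure misclassification with the given sensitivity and specificity."""
    m, t = a + b, pt1 + pt0          # totals are unaffected by misclassification
    denom = sens + spec - 1          # must be positive for the correction to work
    a_c = (a - (1 - spec) * m) / denom
    pt1_c = (pt1 - (1 - spec) * t) / denom
    return a_c, pt1_c, m - a_c, t - pt1_c


a_c, pt1_c, b_c, pt0_c = correct_exposure_misclassification(
    3, 70_000, 60, 7_000_000, sens=0.60, spec=0.995)
rr_corrected = (a_c / pt1_c) / (b_c / pt0_c)
print(f"corrected exposed cases = {a_c:.2f}, corrected RR = {rr_corrected:.1f}")
# → corrected exposed cases = 4.51, corrected RR = 9.3
```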

FIGURE 1—Example Application of the Quantitative Bias Analysis Tool

A second potential source of bias is confounding by influenza vaccination. Although the topic remains controversial, there is some evidence that receipt of at least one dose of H1N1 pandemic influenza vaccine approximately doubles the risk of GBS.22 It is also plausible that persons who receive the influenza vaccine would be more likely to receive our hypothetical vaccine than would those who do not; we assumed they were four times more likely. Using these values to inform the bias model, we obtained a revised point estimate of the RR associating the hypothetical vaccine and GBS occurrence of 4.4. This indicates that the original estimate of 5.0 may have been biased away from the null by uncontrolled confounding by influenza vaccination.
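A minimal Python sketch of this kind of external adjustment assumes the simple bias model for a binary unmeasured confounder, with no effect modification. The source does not report the prevalence of influenza vaccination it assumed. The value `P_FLU` below is therefore hypothetical, and the adjusted RR depends on it and will not in general match the reported 4.4. The “four times more likely” assumption is converted into a prevalence among the vaccinated with Bayes’ rule. The prevalence among the unvaccinated is approximated by `P_FLU`, which treats the hypothetical vaccine as rare.

```python
def prevalence_among_exposed(p_c, exposure_ratio):
    """P(C | E) by Bayes' rule when P(E | C) = exposure_ratio * P(E | not C)."""
    return exposure_ratio * p_c / (exposure_ratio * p_c + (1 - p_c))


def adjust_for_unmeasured_confounder(rr_obs, rr_cd, p1, p0):
    """Divide the observed RR by the bias factor of a binary confounder."""
    bias = (p1 * (rr_cd - 1) + 1) / (p0 * (rr_cd - 1) + 1)
    return rr_obs / bias


P_FLU = 0.10   # hypothetical prevalence of influenza vaccination, not from the source
p1 = prevalence_among_exposed(P_FLU, exposure_ratio=4.0)
p0 = P_FLU     # approximation: exposure is rare, so P(C | not E) ~= P(C)
rr_adj = adjust_for_unmeasured_confounder(5.0, rr_cd=2.0, p1=p1, p0=p0)
print(f"p1 = {p1:.3f}, p0 = {p0:.3f}, adjusted RR = {rr_adj:.2f}")
```

Rerunning with other values of `P_FLU` shows how sensitive the confounding-adjusted estimate is to that unreported assumption.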

Intuition about how misclassification and uncontrolled confounding act together is ordinarily poor. Fortunately, quantitative bias analysis methods can be applied in sequence. Applying this sequential strategy, we obtained a revised point estimate of the RR associating the hypothetical vaccine and GBS occurrence of 8.1. This suggests that the bias toward the null owing to misclassification may be more important than the bias away from the null owing to the unmeasured confounder. Under this hypothetical scenario, a reasonable next step would be to collect data validating receipt versus nonreceipt of the hypothetical vaccine in a subset of the original study population. This validation substudy would improve the accuracy of the values assigned to the misclassification bias parameters, thereby increasing the confidence one might have in judging the accuracy of the original RR point estimate.

To date, the project has adapted existing methods of quantitative bias analysis into a user-friendly tool that addresses unmeasured confounders, information bias (misclassification), and selection bias. The tool accepts summary-level or record-level data and supports cohort and case–control designs. Existing methods have also been extended to the self-controlled case series23,24 and vaccinee-only risk interval25 designs, which are frequently used to evaluate vaccine safety. Future work includes further training, further implementation work to resolve current problems, and extensions to additional specialized designs. The first application to a current postmarket safety topic is under way.

CONCLUSIONS

Regulatory agencies must weigh the risks and benefits of an authorized product and strive to communicate clearly and transparently the scientific basis of their decisions. Those decisions must sometimes be made even when the available data are imperfect. Methods and tools to rigorously and transparently explore the potential impact of known or suspected sources of systematic bias in observational data will support decision making and improve communication with all stakeholders. The methods and tools we have proposed have been shown to be simple to use and of benefit for quantifying the impact of potential biases. Nonetheless, they must be rigorously evaluated under real-life conditions before their use in the regulatory setting is generalized.

REFERENCES

- 1. US Food and Drug Administration. FDA’s sentinel initiative. Available at: http://www.fda.gov/Safety/FDAsSentinelInitiative/ucm2007250.htm. Accessed April 6, 2016.

- 2. Mini-Sentinel. Welcome to Mini-Sentinel. Available at: http://www.mini-sentinel.org. Accessed April 6, 2016.

- 3. Platt R, Carnahan RM, Brown JS, et al. The U.S. Food and Drug Administration’s Mini-Sentinel program: status and direction. Pharmacoepidemiol Drug Saf. 2012;21(suppl 1):1–8. doi: 10.1002/pds.2343.

- 4. Patient-Centered Outcomes Research Institute. Important questions, meaningful answers. Available at: http://www.pcori.org. Accessed April 6, 2016.

- 5. McClure DL, Raebel MA, Yih K. Mini-Sentinel methods: framework for assessment of signal refinement positive results. 2012. Available at: http://www.mini-sentinel.org/work_products/Statistical_Methods/Mini-Sentinel_Methods_Framework-for-Assessment-of-Signal-Refinement-Positive-Results.pdf. Accessed April 6, 2016.

- 6. US Food and Drug Administration. Structured Approach to Benefit-Risk Assessment in Drug Regulatory Decision-Making. Rockville, MD: Food and Drug Administration; 2013.

- 7. Institute of Medicine. Characterizing and communicating uncertainty in the assessment of benefits and risks of pharmaceutical products. Available at: http://www.iom.edu/Activities/Research/DrugForum/2014-FEB-13.aspx. Accessed April 6, 2016.

- 8. Lash TL, Fox MP, Fink AK. Applying Quantitative Bias Analysis to Epidemiologic Data. New York, NY: Springer; 2009.

- 9. Gustafson P. Measurement Error and Misclassification in Statistics and Epidemiology: Impacts and Bayesian Adjustments. Boca Raton, FL: Chapman & Hall/CRC; 2004.

- 10. Lash TL, Fox MP, MacLehose RF, Maldonado G, McCandless LC, Greenland S. Good practices for quantitative bias analysis. Int J Epidemiol. 2014;43(6):1969–1985. doi: 10.1093/ije/dyu149.

- 11. Phillips CV, LaPole LM. Quantifying errors without random sampling. BMC Med Res Methodol. 2003;3:9. doi: 10.1186/1471-2288-3-9.

- 12. Bross I. Misclassification in 2x2 tables. Biometrics. 1954;10:478–489.

- 13. Cornfield J, Haenszel W, Hammond EC, Lilienfeld AM, Shimkin MB, Wynder EL. Smoking and lung cancer: recent evidence and a discussion of some questions. J Natl Cancer Inst. 1959;22(1):173–203.

- 14. Greenland S. Bayesian perspectives for epidemiologic research: III. Bias analysis via missing-data methods. Int J Epidemiol. 2009;38(6):1662–1673. doi: 10.1093/ije/dyp278. Erratum in: Int J Epidemiol. 2010;39(4):1116.

- 15. Spiegelman D, Rosner B, Logan R. Estimation and inference for logistic regression with covariate misclassification and measurement error, in main study/validation study designs. J Am Stat Assoc. 2000;95(449):51–61.

- 16. Kahneman D, Slovic P, Tversky A. Judgment Under Uncertainty: Heuristics and Biases. New York, NY: Cambridge University Press; 1982.

- 17. Gilovich T, Griffin D, Kahneman D. Heuristics and Biases: The Psychology of Intuitive Judgment. New York, NY: Cambridge University Press; 2002.

- 18. Lash TL, Ahern TP. Bias analysis to guide new data collection. Int J Biostat. 2012;8(2). doi: 10.2202/1557-4679.1345.

- 19. US National Academies of Science. A Risk-Characterization Framework for Decision-Making at the Food and Drug Administration. Washington, DC: National Academies Press; 2011.

- 20. Velentgas P, Amato AA, Bohn RL, et al. Risk of Guillain-Barré syndrome after meningococcal conjugate vaccination. Pharmacoepidemiol Drug Saf. 2012;21(12):1350–1358. doi: 10.1002/pds.3321.

- 21. Funch D, Holick C, Velentgas P, et al. Algorithms for identification of Guillain-Barré syndrome among adolescents in claims databases. Vaccine. 2013;31(16):2075–2079. doi: 10.1016/j.vaccine.2013.02.009.

- 22. Salmon DA, Proschan M, Forshee R, et al. Association between Guillain-Barré syndrome and influenza A (H1N1) 2009 monovalent inactivated vaccines in the USA: a meta-analysis. Lancet. 2013;381(9876):1461–1468. doi: 10.1016/S0140-6736(12)62189-8.

- 23. Hallas J, Pottegård A. Use of self-controlled designs in pharmacoepidemiology. J Intern Med. 2014;275(6):581–589. doi: 10.1111/joim.12186.

- 24. Whitaker HJ, Farrington CP, Spiessens B, Musonda P. Tutorial in biostatistics: the self-controlled case series method. Stat Med. 2006;25(10):1768–1797. doi: 10.1002/sim.2302.

- 25. Farrington CP. Control without separate controls: evaluation of vaccine safety using case-only methods. Vaccine. 2004;22(15–16):2064–2070. doi: 10.1016/j.vaccine.2004.01.017.