Abstract

Purpose

We set out to examine the impact of perceptual, linguistic, and capacity demands on performance of verbal working-memory tasks. The Ease of Language Understanding model (Rönnberg et al., 2013) provides a framework for testing the dynamics of these interactions within the auditory-cognitive system.

Methods

Adult native speakers of English (n = 45) participated in verbal working-memory tasks requiring processing and storage of words involving different linguistic demands (closed/open set). Capacity demand ranged from 2 to 7 words per trial. Participants performed the tasks in quiet and in speech-spectrum-shaped noise. Separate groups of participants were tested at different signal-to-noise ratios. Word-recognition measures were obtained to determine effects of noise on intelligibility.

Results

Contrary to predictions, steady-state noise did not have an adverse effect on working-memory performance in every situation. Noise negatively influenced performance for the task with high linguistic demand. Of particular importance is the finding that the adverse effects of background noise were not confined to conditions involving declines in recognition.

Conclusions

Perceptual, linguistic, and cognitive demands can dynamically affect verbal working-memory performance even in a population of healthy young adults. Results suggest that researchers and clinicians need to carefully analyze task demands to understand the independent and combined auditory-cognitive factors governing performance in everyday listening situations.

Speech communication involves multiple auditory and cognitive mechanisms to hear, understand, and make use of information in spoken language. In daily life, performance on verbal tasks involves more than recognition and is influenced by the relative demands placed on the auditory-cognitive system. For example, individuals may perform well on a range of simple tasks in quiet acoustic environments under focused attention (e.g., writing items on a grocery list when the person requesting the items is communicating face-to-face with the writer). However, the same individuals may demonstrate worse performance with increases in the perceptual or cognitive demands of the behavioral task (e.g., if the room has other people having competing conversations—greater perceptual demand—or if the person dictating the list suddenly lists multiple items without pausing—greater cognitive demand). Performance may decline due to changes in the auditory environment, such as degradation of the acoustic signal with background noise, reverberation, distortion, or filtering of speech. Performance may also decline due to changes in the cognitive processing demands, such as increasing the number of words to be remembered, reducing linguistic context, or dividing attention across multiple tasks. The purpose of the current study is to examine the interplay between perceptual, linguistic, and capacity demands on verbal working-memory performance.

One potential nexus for auditory-cognitive interactions is working memory. Information in working memory supports our capacity for thinking and language processing. Working memory represents the cognitive processes associated with the encoding, temporary storage, and manipulation of perceptual information (Baddeley, 2003; Daneman & Merikle, 1996; Pichora-Fuller, 2006; Rönnberg, Rudner, Lunner, & Zekveld, 2010; Waters & Caplan, 2003; Wingfield & Tun, 2007). Working-memory span represents the amount of information one can hold in mind, attend to, and maintain in a rapidly accessible state at one time (Baddeley, 2003). The use of the term “working” in this construct refers to the mental effort expended in storing and manipulating information held in mind (Cowan, 2008). The capacity of working memory is limited, and individuals differ in measures of working-memory capacity across the life span (e.g., Carpenter, Miyake, & Just, 1994; Case, Kurland, & Goldberg, 1982; Cowan, 1997; Waters & Caplan, 2003).

Evidence supporting the idea that working memory contributes to both auditory-perceptual and cognitive processing comes from a number of studies. With respect to perceptual processing, measures of working-memory capacity, such as the reading span task (Daneman & Carpenter, 1980), have shown moderate to strong positive correlations with some measures of speech perception in various types of background noise, such as the Hearing in Noise Test and the QuickSIN Speech-in-Noise Test (Parbery-Clark, Skoe, Lam, & Kraus, 2009; Parbery-Clark, Strait, Anderson, Hittner, & Kraus, 2011). A growing number of studies have explored the relation between working memory and speech perception in adults with hearing loss using hearing-aid amplification. Results of these studies suggest that individuals with poor working-memory capacity are more susceptible to signal distortions (Arehart, Souza, Baca, & Kates, 2013), and individuals with high working-memory capacity are able to benefit more from certain signal-processing algorithms than are individuals with low working-memory capacity (Foo, Rudner, Rönnberg, & Lunner, 2007; Lunner, 2003; Ng, Rudner, Lunner, Pedersen, & Rönnberg, 2013; Rudner, Rönnberg, & Lunner, 2011).

With respect to cognitive processing, there is considerable evidence that people with higher working-memory capacity perform better on measures of spoken-language comprehension than people with lower working-memory capacity (e.g., DeDe, Caplan, Kemtes, & Waters, 2004; Just & Carpenter, 1992). In addition, working-memory capacity is positively correlated with performance on measures of general intellectual ability and executive-function tasks such as reasoning and problem solving (e.g., Conway, Kane, & Engle, 2003; Engle, Tuholski, Laughlin, & Conway, 1999). Thus, working-memory capacity seems to be associated with performance on both cognitive and perceptual tasks. Although there is evidence describing the nature of this relation for speech-recognition tasks (e.g., Mattys, Davis, Bradlow, & Scott, 2012), the interaction between noise and performance on cognitive/linguistic tasks is not yet fully understood.

Effects of Noise on Memory

Rabbitt (1966, 1968) examined the effects of modulated noise on young adults' ability to recall lists or details of discourse passages presented auditorily. Even after controlling for the intelligibility of the speech presented in noise, Rabbitt found that young adults were less likely to correctly recall lists of eight digits and remembered fewer passage details in noise compared with when stimuli were presented in quiet. These findings were interpreted as evidence that even when noise is presented at levels that do not cause errors in recognition, it may still affect memory storage and retrieval (see also Murphy, Craik, Li, & Schneider, 2000). In related research, there has been extensive study of the effects of background noise or speech on serial recall for visually presented stimuli. Salamé and Baddeley (1982) directly compared the effects of noise type on serial recall and found a higher number of errors when a list of nine digits was encoded visually in the presence of continuous speech (in a nonnative language) as compared with background noise (pink noise). This finding has become known in the literature as the irrelevant speech effect (cf. Ellermeier & Zimmer, 2014).

Researchers have also studied the effects of background noise on memory tasks that require not only information storage and rehearsal but also simultaneous processing of information. Pichora-Fuller, Schneider, and Daneman (1995) conducted a seminal study in this area. They used a listening-span task to investigate the effects of background noise on working memory for auditory information in younger adults and older adults without presbycusis. In this experiment, participants were presented with sentences in quiet or at different signal-to-noise ratios (SNRs), and the memory task involved both storage and processing. Participants were asked to simultaneously comprehend sentences and provide a judgment about whether the sentence was predictable or unpredictable on the basis of context, identify the last word in the sentence, and store the sentence-final words for later recall in sets of varying length (e.g., lists of two, four, six, and eight sentences). Perceptual demand was manipulated by presenting the stimuli either in quiet or in the presence of multitalker babble at a +8, +5, or 0 dB SNR. Both younger and older adults were less able to recall words they had identified correctly as the SNR declined (with increases in background noise). There was no effect of background noise in more favorable conditions (+8 dB SNR), whereas it had a negative effect on recall and a significant interaction with set size when the background noise was less favorable (+5 and 0 dB SNR). Taken together, these types of data demonstrate that performance on cognitive tasks, including working-memory measures, declines when tasks are performed in the presence of background noise. However, the focus of this work has been on perceptual manipulations. It is not clear whether the effects of noise differ in relatively easy and relatively complex cognitive tasks.

Ease of Language Understanding Model

The ease of language understanding (ELU) model (Rönnberg et al., 2013) provides a framework with which to test the impact of perceptual, linguistic, and capacity demands on the auditory-cognitive system. Within the ELU model, successful listening involves implicit and explicit processing of phonological and semantic input within working memory. When there is a linguistic mismatch due to adverse listening conditions, demands on working-memory capacity increase, triggering explicit processing to map sound to meaning from the acoustic signal. The model can also be applied to better understand how perceptual, linguistic, and capacity demands will directly affect performance on verbal working-memory tasks.

In our use of this model, we characterized what Rönnberg et al. (2013) described as phonological and semantic input as linguistic demand. Explicit processing, in addition to being triggered by adverse listening conditions, may be induced by linguistic demands. As postulated by the ELU model, these demands may be phonological or semantic. Either form of linguistic demand could induce explicit processing. For example, a highly overlearned closed set of words may be less likely to induce explicit processing than an open set of unrelated words (without context).

An example of linguistic demand in terms of phonology would be a task that uses digits compared with open-set words. The use of digits limits the probability of phonological confusion. Digits are a small, closed, overlearned set. Thus, when a listener is presented with a digit, the probability of a lexical match is high; there are few competing possibilities. This leads to a quick, easy (implicit) link to long-term memory (Rönnberg et al., 2013) and relatively lower linguistic demand. In contrast, a task that uses open-set words, especially single-syllable familiar words, has thousands of potential members of a set. Thus the probability of a lexical mismatch is high (e.g., Did I hear cat, hat, bat, sat?), which may induce explicit processing.

In terms of semantics, digits involve little chance of semantic interference. Numbers all have a similar straightforward semantic meaning. In contrast, open-set words have diverse meanings and are part of different hierarchical categorization patterns. Thus, in addition to performing the nominal task, listeners may need to inhibit the urge to organize information semantically or make semantic links between words. The need to inhibit the task of creating meaning to focus on the task at hand could potentially induce explicit processing.

Another important consideration is working-memory capacity demand. The ELU model addresses working-memory capacity such that individuals with higher working-memory capacity are predicted to be more able to successfully cope with adverse listening conditions. However, it is also important to consider the effects of increased set size on working-memory performance beyond individual differences in capacity. This type of cognitive load also has the potential to affect performance on a task and possibly interact with noise and linguistic demands (Mishra, Stenfelt, Lunner, Rönnberg, & Rudner, 2014).

Following the ELU model, the need for explicit processing is likely to result in a decrease in working-memory performance compared with conditions that do not require explicit processing. Adverse listening conditions such as noise should generally result in the need for explicit processing. In the absence of adverse listening conditions, we predicted that explicit processing may also be triggered by linguistic demands, capacity demands, or a combination of both. It is not clear which demand would have the largest effect or if multiple demands would have an additive effect. We are interested in better understanding these three distinct demands on verbal working-memory performance: linguistic, capacity, and perceptual (noise).

Current Study

We manipulated these parameters by having participants complete two working-memory tasks. We varied linguistic demand by manipulating features of the words across the working-memory tasks (closed/open set). We varied capacity demand within both tasks by increasing the number of words to be manipulated and recalled. We varied perceptual demand through use of varied listening conditions (quiet vs. noise with different SNRs).

On the basis of prior research, we expected each type of demand to have an influence on performance. We predicted that the worst performance should occur on the task with the highest capacity and linguistic demands in the most adverse listening conditions. Within the classification of adverse conditions by Mattys et al. (2012), source degradations due to environment or transmission degradation by energetic masking (e.g., steady-state speech-spectrum noise) were predicted to lead to frequent or severe failures of recognition as well as common or moderately reduced memory capacity. Difficulty listening, as operationalized by a less-favorable SNR, should disproportionately affect performance on the task with the higher linguistic demand.

Method

Participants

Forty-five healthy adults between the ages of 18 and 30 years participated in the experiment (35 women and 10 men; average age = 21.5 years, SD = 3). In addition to age, eligibility criteria included speaking English as a native language; normal otoscopy; pure-tone air-conduction thresholds of 20 dB HL or better in both ears (250–8000 Hz); and no self-reported history of a language, learning, or neurological disorder. Participants were recruited from the University of Arizona campus. They received either $10 per hour or research course credit for their participation. The Institutional Review Board at the University of Arizona approved the research, and all participants provided informed consent. Participants were assigned at random to one of three participant groups, each tested at a different SNR for the experiment (n = 15 per group).

Stimuli

The stimuli for the working-memory span tasks were recorded in a sound booth by a male native English speaker. After recording, each word was separated into individual sound clips. The trials in each task consisted of sets of words ranging from two to seven items. The trials were created by splicing words into a single .wav file. One second of silence was inserted between each pair of words within a trial. All speech stimuli were root-mean-square normalized and presented at 65 dB HL.
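The splicing step can be sketched as follows. This is an illustration, not the software used in the study: it assumes each word clip is a list of floating-point samples, and the digital `target_rms` value is hypothetical, because the actual 65 dB HL presentation level was set at the audiometer. Each clip is RMS-normalized and 1 s of silence is placed between each pair of words (none before the first or after the last word).

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def rms_normalize(samples, target_rms):
    """Scale a clip so its RMS equals target_rms (clip must not be all zeros)."""
    gain = target_rms / rms(samples)
    return [s * gain for s in samples]

def splice_trial(word_clips, sample_rate, gap_s=1.0, target_rms=0.05):
    """Concatenate RMS-normalized word clips into one trial, inserting gap_s
    seconds of silence between each pair of words.
    target_rms is a hypothetical digital level, not a value from the study."""
    gap = [0.0] * int(round(gap_s * sample_rate))
    trial = []
    for i, clip in enumerate(word_clips):
        if i > 0:
            trial.extend(gap)  # silence only *between* words
        trial.extend(rms_normalize(clip, target_rms))
    return trial
```

With three 50-sample clips at a toy sample rate of 100 Hz, the trial is 3 × 50 + 2 × 100 = 350 samples long.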

Subtract-2 Span Task (Lower Linguistic Demand)

The subtract-2 span task was modeled after the Salthouse (1994) subtract-2 span, requiring participants to repeat back a series of numbers after subtracting 2 from each (cf. also Waters & Caplan, 2003). For this task, the recorded words were one-syllable numbers between 2 and 10 (excluding two-syllable seven). The numbers were randomized and listed in increasing set size of two to seven numbers. To avoid negative-number responses, the smallest number included in the task was 2. Stimuli were controlled so that no number was repeated within a trial. Each set size included three trials (e.g., for span 2: Trial 1: 4, 2; Trial 2: 8, 10; Trial 3: 9, 5). The task began with four practice trials. All participants were tested at spans 2 through 7 with three trials per span, for a total of 81 items across the entire task. Increasing the number of digits to be remembered and manipulated on each trial served as the manipulation of working-memory capacity demand. Two versions of the subtract-2 task (including practice, then span 2 through span 7) were created, and participants were randomly assigned to either Version 1 or 2 for the quiet and noise conditions. For example, Participant 1 might hear Version 1 in quiet and Version 2 in noise, whereas Participant 2 might hear Version 2 in quiet and Version 1 in noise.
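The list constraints described above (one-syllable numbers 2 through 10 without "seven," no repeats within a trial, three trials at each span from 2 to 7) can be sketched as a small generator. The random seeds and the function names are hypothetical, practice trials are omitted, and the original lists may have been built with additional constraints not stated here. The item count confirms the total of 81 scorable items per version.

```python
import random

# One-syllable numbers from 2 to 10, omitting the two-syllable "seven".
# The smallest value is 2, so subtracting 2 never yields a negative response.
DIGITS = [2, 3, 4, 5, 6, 8, 9, 10]

def make_subtract2_version(seed, spans=range(2, 8), trials_per_span=3):
    """Build one hypothetical task version: for each span length, three trials
    of distinct numbers (random.sample guarantees no repeats within a trial)."""
    rng = random.Random(seed)
    return {span: [rng.sample(DIGITS, span) for _ in range(trials_per_span)]
            for span in spans}

def total_items(version):
    """Number of scorable items: one per number presented."""
    return sum(len(trial) for trials in version.values() for trial in trials)

version_1 = make_subtract2_version(seed=1)
version_2 = make_subtract2_version(seed=2)
```

Because 3 × (2 + 3 + 4 + 5 + 6 + 7) = 81, `total_items` returns 81 for any version built this way.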

Subtract-2 span served as the lower linguistic-demand task due to its closed set size. In subtract-2 span, participants were instructed to repeat the series of numbers in the same order that they heard them, after subtracting 2 from each number. For example, if the participant heard “6, 2, 9,” he or she would be expected to respond with “4, 0, 7.” To be scored as correct, participants needed to repeat the correct number in the correct order. A participant who responded “4, 0, blank” for this example would earn 2 out of 3 possible points for that trial. The total possible score for all items was 81.
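The positional scoring rule can be expressed as a short function, shown here as a sketch. It assumes that a "blank" response is recorded as `None` and that credit requires the correct value in the correct serial position, as the examples above describe.

```python
def score_subtract2(heard, response):
    """Points for one subtract-2 trial: one point per serial position whose
    response equals the heard number minus 2. Missing positions or blanks
    (None) earn no credit."""
    expected = [n - 2 for n in heard]
    # Pad short responses so every expected position is compared.
    padded = list(response) + [None] * (len(expected) - len(response))
    return sum(1 for e, r in zip(expected, padded) if r == e)
```

For the trial "6, 2, 9," the response "4, 0, 7" earns 3 points and "4, 0, blank" earns 2, matching the examples in the text.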

Alphabet Span Task (Higher Linguistic Demand)

The alphabet span task was modeled after tasks developed by Craik (1986) and Waters and Caplan (2003). Participants listened to a string of words and repeated them back after rearranging them in alphabetical order. All stimuli for the alphabet span were English monosyllabic, consonant–vowel–consonant nouns. Word recall and processing are affected by variables such as frequency of occurrence in the lexicon, number of phonological neighbors (i.e., words that differ by one phoneme), and phonotactic probability (i.e., the frequency with which sounds co-occur in words of a particular language; e.g., Alt, 2011). On the basis of such research, stimuli were carefully chosen to control for these variables. Compared with the database norms of the English Lexicon Project (Balota et al., 2007), all words had high frequency in the lexicon, high neighborhood density, and high phonotactic probability. Homophones that could interfere with alphabetization (e.g., knot, not) were excluded.

Two versions of alphabet span were created so that participants heard different versions in quiet and in noise. Using t tests, we verified that the versions were not different from one another in terms of log frequency, t(160) = −0.68, p = .49, number of phonological neighbors, t(160) = −0.63, p = .52, and sum of biphone probabilities, t(160) = −0.31, p = .75. No words were repeated within either version of the task, and different words were included in each version. As in subtract-2 span, all participants were tested from span 2 to span 7, with three trials at each set size. Thus, there were 81 unique items for scoring for each version of the task.
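The reported degrees of freedom, t(160), match an independent-samples comparison of 81 words per version: 81 + 81 − 2 = 160. The sketch below assumes a Student's t test with pooled variance, which is consistent with the integer df. The source says only "t tests," and a Welch test would generally give non-integer df. The toy data are illustrative and are not the lexical values from the study.

```python
import math
from statistics import mean, variance

def pooled_t(a, b):
    """Student's independent-samples t with pooled variance.
    Returns (t, df), where df = n1 + n2 - 2."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df  # pooled variance
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / se, df

t_toy, df_toy = pooled_t([1, 2, 3], [2, 3, 4])
```

For the toy samples, both variances equal 1, so t = −1 / √(2/3) ≈ −1.22 with df = 4.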

Alphabet span served as the task with higher linguistic demand due to its open set size and possibilities for semantic interference. Participants were instructed to repeat back a series of words in the same order that they heard them after rearranging them in alphabetical order. For example, if the participant heard “lake, pad, bat,” he or she would be expected to respond with “bat, lake, pad.” Responses were scored as correct only if the response included the correct words in the correct order. For example, “bat, pad, lake” would receive a score of only 1 out of 3 possible, and “fat, lake, pad” would receive a score of 2 out of 3 possible. Participants were instructed that order was important. For example, if the participant heard “rib, deed, nun, gill” (alphabetical order: deed, gill, nun, rib) and did not remember the words belonging in the first two alphabetical positions, he or she was to say, “blank, blank, nun, rib.” Within alphabet span, capacity demand increased from span 2 to span 7 by increasing the number of words to be remembered and alphabetized.
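The alphabet span scoring rule can be sketched in the same way as subtract-2 scoring. The expected response is the heard list sorted alphabetically, and credit is given per serial position. The sketch assumes that "blank" is recorded as `None` so that it holds a position without earning credit, and that all words are lowercase single tokens.

```python
def expected_alphabet_order(heard):
    """Target response: the heard words rearranged alphabetically."""
    return sorted(heard, key=str.lower)

def score_alphabet(heard, response):
    """One point per serial position holding the correct word.
    A blank (None) keeps its position but earns no credit."""
    expected = expected_alphabet_order(heard)
    padded = list(response) + [None] * (len(expected) - len(response))
    return sum(1 for e, r in zip(expected, padded) if r == e)
```

The examples in the text are reproduced: "bat, pad, lake" scores 1, "fat, lake, pad" scores 2, and "blank, blank, nun, rib" for the four-word trial scores 2.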

Noise

The steady-state speech-spectrum-shaped noise was generated using Praat software (Boersma & Weenink, 2014) with the Akustyk add-on (Plichta, 2012). The long-term average spectrum of the subtract-2 and alphabet span stimuli was calculated and then used as a filter to shape Gaussian noise. The level of the noise was set to equal the root-mean-square amplitude of the speech stimuli. A random segment of the resulting steady-state, speech-spectrum-shaped noise was then digitally combined with each subtract-2 or alphabet span trial. The presentation level of the noise during testing was then adjusted to the selected SNRs by changing the level of the second channel of the stereo track on the audiometer. The speech-spectrum-shaped noise was presented for the entire duration of each trial during the noise conditions, but no noise was presented during the response window.
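The level relations can be sketched numerically. This is a simplification: the spectral shaping by the speech long-term average spectrum, which was done in Praat/Akustyk in the study, is omitted, and the noise is plain Gaussian. The SNR is also applied digitally here, whereas the study adjusted the noise channel on the audiometer. All function names are hypothetical. Because speech was held at 65 dB HL, the noise level equals 65 minus the SNR: 65, 70, and 75 dB HL for the 0, −5, and −10 dB SNR groups.

```python
import math
import random

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

def matched_noise(speech, seed=0):
    """Gaussian noise with the same RMS as `speech` (0 dB SNR).
    NOTE: unshaped (white); the study shaped it with the speech LTAS."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in speech]
    g = rms(speech) / rms(noise)
    return [v * g for v in noise]

def noise_at_snr(noise_0db, snr_db):
    """Rescale RMS-matched noise so that speech/noise RMS equals snr_db.
    A negative SNR makes the noise more intense than the speech."""
    g = 10 ** (-snr_db / 20)
    return [v * g for v in noise_0db]

def snr_db(speech, noise):
    return 20 * math.log10(rms(speech) / rms(noise))

def noise_level_db_hl(speech_db_hl, snr):
    """With speech fixed, noise level = speech level - SNR."""
    return speech_db_hl - snr

speech = [math.sin(2 * math.pi * i / 50) for i in range(1000)]  # toy "speech"
noise = matched_noise(speech)
mixed_minus5 = [s + n for s, n in zip(speech, noise_at_snr(noise, -5))]
```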

Procedure

Participants were seated in a single-walled, sound-isolating booth for the experiment. Following otoscopy, air-conduction thresholds (250–8000 Hz) and speech-reception thresholds for spondee words (VA-CD) were measured for each ear via insert earphones (ER-3A) using standard clinical procedures (American Speech-Language-Hearing Association, 2005) with an audiometer (Interacoustics AC-40). Each participant completed the combination of experimental tasks and conditions in a randomized order to reduce the potential risk of list and order effects (e.g., presentation order for subtract-2 in quiet, subtract-2 in noise, alphabet span in quiet, and alphabet span in noise was randomized across participants). All auditory stimuli were administered via binaural insert earphones, with the speech signal presented at an overall root-mean-square level of 65 dB HL. The digital stimuli were presented on a PC using E-Prime 3.0 software (Psychology Software Tools, Inc., Sharpsburg, PA). The stimuli were routed from the computer through an audio interface (Edirol UA-25EX) to an amplifier (Crown, Model D-75A) located outside the sound booth. They were then presented through an audiometer (Interacoustics AC-40) via insert earphones (ER-3A).

We varied the amount of steady-state speech-spectrum noise, including a condition without noise. The presentation level for the speech stimuli was held constant across experimental conditions at 65 dB HL. One group of participants (n = 15) was tested in quiet and in noise with the presentation levels for the speech and noise set equally (0 dB SNR). A second group of participants (n = 15) was tested in quiet and at −5 dB SNR, and a third group of participants (n = 15) was tested in quiet and at −10 dB SNR. Two research assistants were present during testing to administer the tests and score responses. Their scoring was reviewed for agreement, and any conflicts were resolved on the basis of comparison to a digital audio recording of the participant's verbal responses.

Following the experimental conditions, participants were tested on word recognition in quiet and in noise using the words from the alphabet span task. We included the measure of word recognition in noise to ensure that participants were able to recognize words in the absence of the cognitive load associated with a working-memory manipulation. Each word was presented at 65 dB HL in conditions equivalent to those in which the participant was tested (e.g., if alphabet span List 1 was presented with noise at 0 dB SNR, then word recognition for List 1 was also presented with noise at 0 dB SNR).

Results

Overview of Tasks and Scoring

Descriptive statistics for word-recognition scores in quiet and noise are shown in Table 1. For each of the three participant groups in quiet, word recognition was at ceiling (>99% correct). Note that speech recognition in noise for the stimuli used in the alphabet span task was above 90% correct for the 0 and −5 dB SNR conditions. For each participant, any words that were incorrectly recognized were removed from consideration in scoring, to control for the effects of reduced intelligibility in noise for any particular word. To create this adjusted score, the number correct in each condition was converted to a percent correct score on the basis of the number of items whose words were correctly recognized in isolation.¹
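The adjusted score can be sketched as follows, assuming that each item carries two flags: whether it was recalled correctly in the span task and whether its word was recognized in the isolated word-recognition test. Items that were not recognized in isolation are dropped from both the numerator and the denominator. The function name and data layout are hypothetical.

```python
def adjusted_percent_correct(recalled_correct, recognized):
    """Percent correct over only those items whose word was recognized
    in isolation. Both arguments are per-item booleans of equal length."""
    kept = [r for r, ok in zip(recalled_correct, recognized) if ok]
    if not kept:
        return float("nan")  # no scorable items
    return 100.0 * sum(kept) / len(kept)

# Toy example: the second item's word was misrecognized, so it is excluded.
score = adjusted_percent_correct([True, True, False, True],
                                 [True, False, True, True])
```

In the toy example, 2 of the 3 remaining items were recalled, giving about 66.7%.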

Table 1.

Average percent correct score and standard deviation for word recognition of stimuli in quiet and in noise, by participant group (maximum = 81 words).

| Participant group | Quiet | Noise |
|---|---|---|
| 0 dB SNR | 99.9% (0.03) | 96.4% (3.6) |
| −5 dB SNR | 99.7% (0.6) | 91.3% (5.4) |
| −10 dB SNR | 99.6% (1.0) | 54.8% (7.9) |

Note. SNR = signal-to-noise ratio.

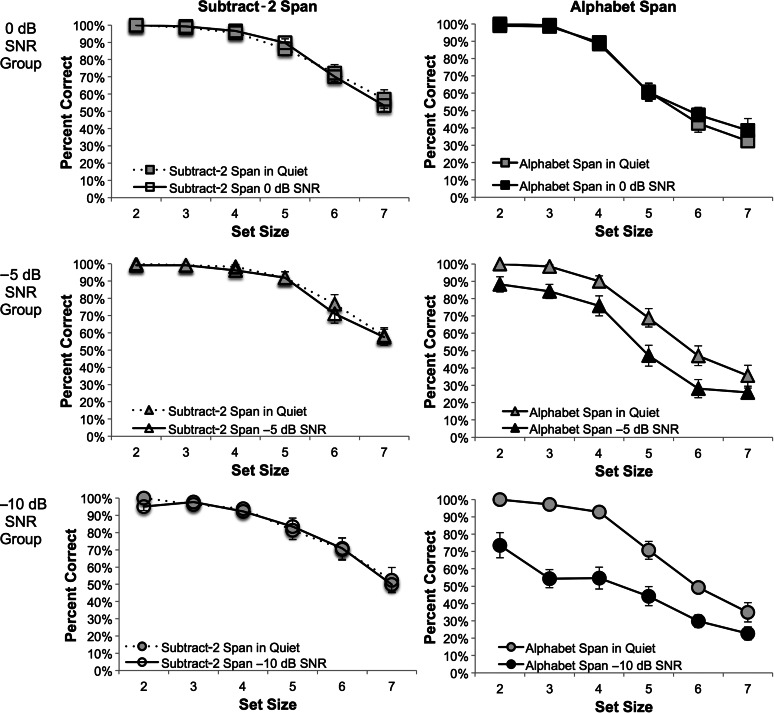

Item scores on the working-memory measures were calculated in percent correct on the basis of the number of words correctly recalled divided by the total number of items possible and correctly recognized. Figure 1 presents item scores at each set size for the working-memory tasks performed in quiet and noise. Results for the subtract-2 span task are in the left column and alphabet span results are in the right. Each panel displays results in quiet and in noise, and the error bars indicate ±1 standard error of the intersubject means. Each row in Figure 1 shows the mean performance by set size on the working-memory measures by separate participant groups (n = 15 per group) tested in the presence of background noise, at 0 (most favorable), −5, and −10 dB SNR (most adverse). The rationale for examining SNR using a between-subjects rather than a within-subject comparison was to avoid possible influences of fatigue and practice effects on task performance.

Figure 1.

Group mean score at each set size, ranging from two to seven items. Subtract-2 span is in the left column and alphabet span in the right. Data are presented by signal-to-noise-ratio (SNR) group in rows (n = 15 per group). Error bars represent ±1 standard error of the mean.

For both tasks in quiet and noise, scores declined with increasing set size (see Figure 1). Note that for most participants, only a limited number of items could be remembered and manipulated at the largest set size of seven items. Span scores were also calculated for each participant and defined as the longest set length at which the participant could recall all of the items in the correct order on two out of three trials. Of the 45 participants, only two achieved a span score of 7 on the subtract-2 task without background noise, and only one achieved a span score of 7 on the alphabet span task without background noise. Therefore, in analyses comparing results by set size (lower or higher capacity demand), we used item scores at spans 5 and 6 rather than 7 to avoid floor effects.
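The span-score definition can be sketched directly. The sketch assumes that each trial is recorded as a boolean (True if every item was recalled in the correct order) and reads "two out of three trials" as "at least two." It also takes the definition literally, as the longest passing set size, without a discontinue rule, since none is stated.

```python
def span_score(trial_results, criterion=2):
    """trial_results: {set_size: [bool, bool, bool]}, True when every item in
    that trial was recalled in the correct order. Returns the longest set size
    with at least `criterion` perfect trials, or 0 if there is none."""
    passed = [size for size, trials in trial_results.items()
              if sum(trials) >= criterion]
    return max(passed, default=0)

T, F = True, False
example = {2: [T, T, T], 3: [T, T, F], 4: [T, F, F],
           5: [F, F, F], 6: [F, F, F], 7: [F, F, F]}
```

For the hypothetical participant above, the span score is 3, because set size 4 has only one perfect trial.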

Working-Memory Performance in Quiet

Total item scores (combined across all set sizes 2–7) were also calculated for the purpose of comparison to other studies in the literature. Group means and standard deviations for total item scores for the three participant groups (n = 15 per group) on the subtract-2 span task were 79.7% (10.4), 82.1% (10.5), and 77.3% (15.2). For the alphabet span task, the group means and standard deviations for total item scores by participant group were 60.3% (10.3), 64.8% (12.9), and 65.2% (10.5). These scores are consistent with others reported in the literature for the subtract-2 and alphabet span tasks for adults ages 18–30 years (e.g., Waters & Caplan, 2003). To confirm that there were not group-level differences for working-memory performance in quiet, separate one-way analyses of variance (ANOVAs) were carried out for each of the tasks, with total item score as the dependent variable. For performance in quiet, the groups did not differ statistically in terms of total item score on the subtract-2 span task, F(2, 42) = 0.59, p = .56, or on the alphabet span task, F(2, 42) = 0.88, p = .42.
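As a rough consistency check, a one-way ANOVA can be recomputed from the published group means and standard deviations (equal n = 15). For df1 = 2, the upper-tail probability of the F distribution has the closed form P(F > f) = (1 + 2f/df2)^(−df2/2), so no statistics library is needed. Because the published summaries are rounded, the recomputed values (subtract-2: F ≈ 0.58, p ≈ .57; alphabet span: F ≈ 0.87, p ≈ .43) agree with the reported F(2, 42) = 0.59 and 0.88 only to within rounding. This is an illustrative check, not the authors' analysis.

```python
def one_way_anova_from_summary(means, sds, n):
    """Between-groups one-way ANOVA from group means/SDs with equal n.
    Returns (F, df_between, df_within)."""
    k = len(means)
    grand = sum(means) / k
    ss_between = n * sum((m - grand) ** 2 for m in means)
    ss_within = (n - 1) * sum(s ** 2 for s in sds)
    df_b, df_w = k - 1, k * (n - 1)
    return (ss_between / df_b) / (ss_within / df_w), df_b, df_w

def p_value_df1_is_2(f, df_w):
    """Exact upper-tail p for F(2, df_w)."""
    return (1 + 2 * f / df_w) ** (-df_w / 2)

F_sub, dfb, dfw = one_way_anova_from_summary([79.7, 82.1, 77.3], [10.4, 10.5, 15.2], 15)
p_sub = p_value_df1_is_2(F_sub, dfw)
F_alpha, _, _ = one_way_anova_from_summary([60.3, 64.8, 65.2], [10.3, 12.9, 10.5], 15)
p_alpha = p_value_df1_is_2(F_alpha, dfw)
```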

Effects of Capacity and Linguistic Demands in Quiet

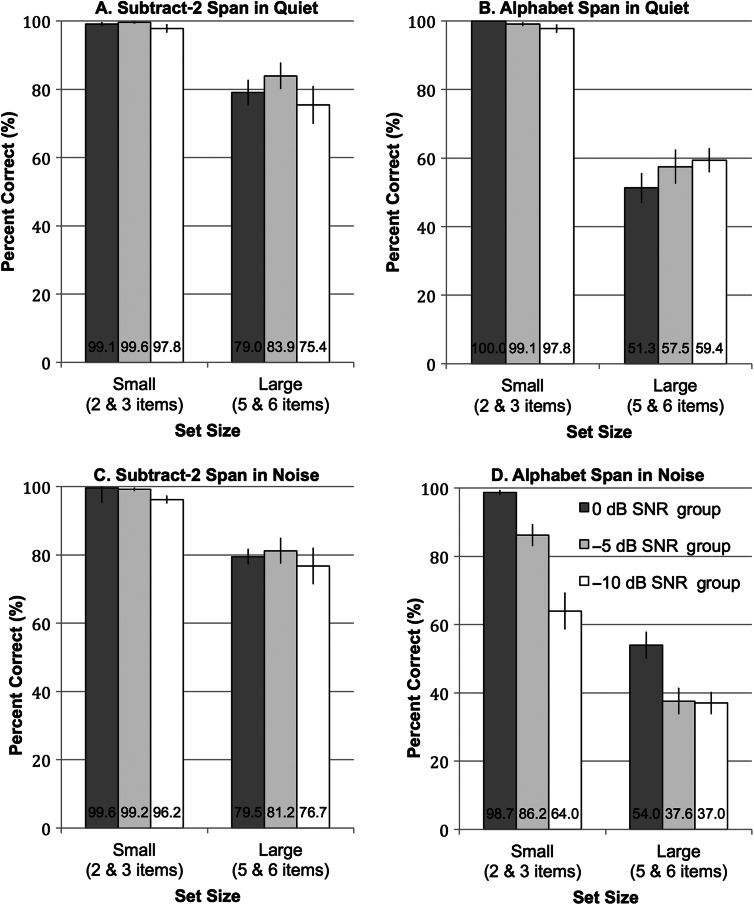

For the purpose of comparing capacity demands within each task, item scores were calculated for two nonoverlapping ranges of set size: small span set sizes (2 and 3) and large span set sizes (5 and 6). This choice for grouping of set size was made to reduce the influence of potential floor effects at span 7 and to accommodate the drop in performance between set sizes 4 and 5 on the alphabet span task (see Figure 1, right column). Figure 2 presents group mean item scores at small and large capacity demands for the quiet conditions on both working-memory tasks (panel A: subtract-2 span, panel B: alphabet span).
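The small/large grouping can be sketched as a pooled percent correct over the selected set sizes. The source does not state whether scores were pooled (total correct over total possible) or averaged across per-size percentages. Pooling is assumed here, and it weights set size 3 (9 items) more heavily than set size 2 (6 items). The per-size counts below are hypothetical.

```python
SMALL, LARGE = (2, 3), (5, 6)

def binned_item_score(correct_by_size, possible_by_size, sizes):
    """Percent correct pooled over the given set sizes:
    sum(items correct) / sum(items possible) * 100."""
    c = sum(correct_by_size[s] for s in sizes)
    p = sum(possible_by_size[s] for s in sizes)
    return 100.0 * c / p

possible = {s: 3 * s for s in range(2, 8)}          # three trials per span
correct = {2: 6, 3: 9, 4: 10, 5: 9, 6: 9, 7: 5}     # hypothetical participant
small = binned_item_score(correct, possible, SMALL)  # 15/15 items
large = binned_item_score(correct, possible, LARGE)  # 18/33 items
```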

Figure 2.

The upper panels display group mean scores in quiet at small (two and three items, lower capacity demand) and large (five and six items, higher capacity demand) set sizes for subtract-2 span (A) and alphabet span (B) tasks. The lower panels illustrate performance in the presence of speech-spectrum-shaped noise on subtract-2 span (C) and alphabet span (D). Error bars represent ±1 standard error of the mean (n = 45; 15 per signal-to-noise-ratio [SNR] group).

Individuals in the three participant groups performed comparably on the working-memory tasks in quiet conditions without background noise for small and large sets (see Figures 2A and 2B). When the tasks were performed without background noise, participants were more accurate when there were fewer numbers or words to remember than when there were more. We analyzed the data to confirm a main effect of working-memory capacity demand within each task and a main effect of linguistic demand across tasks. In addition, this analysis allowed us to confirm that the participant groups did not differ from one another in terms of performance in quiet.

Data from the quiet conditions were analyzed in a three-way mixed repeated measures ANOVA on performance with the factors of Linguistic Demand (subtract-2 vs. alphabet span), Capacity Demand (small set size vs. large set size), and Participant Group. There was a significant main effect of linguistic demand, F(1, 42) = 65.97, p < .001, and of capacity demand, F(1, 42) = 239.74, p < .001, and a significant Linguistic Demand × Capacity Demand interaction, F(1, 42) = 61.13, p < .001. There was not a significant main effect of participant group, and none of the between-groups interactions with linguistic demand or capacity demand were statistically significant (all ps > .2). Post hoc analyses demonstrated that the significant Linguistic Demand × Capacity Demand interaction was driven by significantly lower performance on the large set size for alphabet span compared with subtract-2 span, t(44) = 8.1, p < .001. Performance on alphabet span and subtract-2 span did not differ at the smaller set size in quiet, t(44) = −0.2, p = .84.

To explore these results further, the data for each task were analyzed in separate repeated measures ANOVAs with Set Size as the within-subject factor and Participant Group as the between-subjects factor. As expected, there were statistically significant effects of increasing capacity demand in both working-memory measures. For subtract-2 span, performance was significantly worse at the large capacity demand (set sizes 5 and 6) as compared with the small capacity demand (set sizes 2 and 3), F(1, 42) = 58.0, p < .001. There were not statistically significant differences between the participant groups, F(2, 42) = 1.22, p = .31, and there was no Capacity Demand × Participant Group interaction, F(2, 42) = 0.61, p = .55. For alphabet span, performance was also significantly worse at the large capacity demand (set sizes 5 and 6) as compared to the small capacity demand (set sizes 2 and 3), F(1, 42) = 298.7, p < .001; there were no differences between participant groups in quiet, F(2, 42) = 0.53, p = .60; and there was not a significant Capacity Demand × Participant Group interaction, F(2, 42) = 1.5, p = .24.

Working-Memory Performance in the Presence of Background Noise

Figure 2 also presents group mean item scores at small and large capacity demands for the conditions in steady-state noise on both working-memory tasks (panel C: subtract-2 span, panel D: alphabet span). Data from the noise conditions were analyzed in a three-way mixed repeated measures ANOVA on performance with the factors of Linguistic Demand (subtract-2 vs. alphabet span tasks), Capacity Demand (set size small vs. large), and Perceptual Demand (SNR; between-groups). There were significant main effects of linguistic demand, F(1, 42) = 192.35, p < .001, capacity demand, F(1, 42) = 213.3, p < .001, and perceptual demand, F(2, 42) = 13.53, p < .001. There was also a significant Linguistic Demand × Capacity Demand interaction, F(1, 42) = 57.7, p < .001, and a Linguistic Demand × Perceptual Demand interaction, F(2, 42) = 12.91, p < .001, as well as a significant three-way Linguistic Demand × Capacity Demand × Perceptual Demand interaction, F(2, 42) = 6.37, p = .004. There was not a statistically significant Capacity Demand × Perceptual Demand interaction, F(2, 42) = 2.51, p = .094.

To explore the nature of the two- and three-way interactions, the data for each working-memory task were further analyzed in separate repeated measures ANOVAs with Perceptual Demand (SNR) as the between-subjects factor and Capacity Demand (small set size vs. large set size) as the within-subject factor. For subtract-2 in noise (see Figure 2C), there was a significant main effect of capacity demand, F(1, 42) = 76.52, p < .001, with performance significantly worse when capacity demand was increased from smaller to larger set sizes. There were no significant differences across perceptual demand (SNRs), F(2, 42) = 0.85, p = .44. Notably, there was also no significant Capacity Demand × Perceptual Demand interaction, F(2, 42) = 0.077, p = .93.

For alphabet span in noise (see Figure 2D), there was again a significant main effect of capacity demand, F(1, 42) = 223.13, p < .001, with performance decreasing as capacity demand increased from small to large set sizes. However, the pattern of results by SNR for the alphabet span task differed from that for the subtract-2 task (panel C vs. panel D). There was a significant main effect of perceptual demand, F(2, 42) = 20.43, p < .001, and a significant Capacity Demand × Perceptual Demand interaction, F(2, 42) = 6.16, p = .005. Post hoc t tests were conducted using a Bonferroni-adjusted α level of .008 per test (.05/6). For the small set sizes, alphabet span in noise showed significant differences between the groups tested at 0 and −5 dB SNR (M = 98.7% vs. 86.2%), t(28) = 3.75, p = .001; between −5 and −10 dB SNR (86.2% vs. 64%), t(28) = 3.51, p = .002; and between 0 and −10 dB SNR (98.7% vs. 64%), t(28) = 6.34, p < .001. For the large set sizes, there were significant differences between groups tested in noise at 0 and −5 dB SNR (54% vs. 37.6%), t(28) = 2.97, p = .006, and between 0 and −10 dB SNR (54% vs. 37%), t(28) = 3.31, p = .003. There was no significant difference between the −5 and −10 dB SNR groups (37.6% vs. 37%), t(28) = 0.11, p = .91.
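The Bonferroni adjustment divides the family-wise α of .05 across the six pairwise SNR comparisons (three pairs at each of two set sizes). The sketch below applies that per-test threshold to the p values reported above; entries reported as "p < .001" are entered at their upper bound of .001, which is an assumption for illustration only.

```python
# Family-wise alpha split across the six pairwise SNR-group comparisons.
FAMILY_ALPHA = 0.05
N_COMPARISONS = 6
per_test_alpha = FAMILY_ALPHA / N_COMPARISONS  # about .0083, reported as .008

# Reported p values for the alphabet-span post hoc t tests.
# "p < .001" entries are entered at their upper bound of .001.
reported_p = {
    ("small", "0 vs -5 dB"): 0.001,
    ("small", "-5 vs -10 dB"): 0.002,
    ("small", "0 vs -10 dB"): 0.001,
    ("large", "0 vs -5 dB"): 0.006,
    ("large", "0 vs -10 dB"): 0.003,
    ("large", "-5 vs -10 dB"): 0.91,
}

# A comparison is significant if its p value falls below the adjusted alpha.
significant = {key: p < per_test_alpha for key, p in reported_p.items()}

for (set_size, pair), is_sig in significant.items():
    print(f"{set_size:5} {pair:13} significant={is_sig}")
```

Five of the six comparisons fall below the adjusted threshold; only the −5 vs. −10 dB SNR comparison at the large set size does not.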

Discussion

Our goal was to determine the effects of linguistic, capacity, and perceptual demands on working-memory tasks. The data support the notion that each type of demand does influence performance. However, we were surprised by the nature of the interactions, which did not fully align with predictions on the basis of the ELU model. We will discuss each of the findings within the framework of our hypotheses.

As expected, participants were significantly less accurate when manipulating larger versus smaller set sizes. The effects of capacity demand were observed across linguistic demand (i.e., in alphabet span and subtract-2 span) and perceptual demand (i.e., in all SNR conditions). These results show that the manipulations of capacity demand have persistent effects on task performance.

Before considering the effects of background noise, it is important to acknowledge that word recognition was poor in the −10 dB SNR condition compared with the other SNR conditions (see Table 1). This decline in recognition is consistent with previous results showing a steep psychometric function for monosyllabic words in speech-shaped noise, where even small changes in the level of steady-state background noise can lead to large changes in performance (Dubno, Horwitz, & Ahlstrom, 2005; Studebaker, Taylor, & Sherbecoe, 1994). This condition was included to maximize the likelihood of observing effects of noise in both linguistic tasks. However, it is possible that performance on alphabet span was dominated by the poor intelligibility of the stimuli at −10 dB SNR. Inadequate perception of a speech stimulus can interfere with holding it in memory. For this reason, we focused on results of the 0 and −5 dB SNR groups when considering the interactions between perceptual, linguistic, and cognitive demands in this study.
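To illustrate why a steep psychometric function makes recognition sensitive to small SNR changes, the sketch below uses a generic two-parameter logistic function. The midpoint and slope are hypothetical values chosen only for illustration; they are not fitted to the word-recognition data in Table 1 or to the cited studies.

```python
import math


def logistic_psychometric(snr_db: float, midpoint_db: float, slope_per_db: float) -> float:
    """Proportion correct predicted by a two-parameter logistic function.

    midpoint_db: SNR at which predicted proportion correct is 0.5.
    slope_per_db: steepness; larger values give a steeper function.
    """
    return 1.0 / (1.0 + math.exp(-slope_per_db * (snr_db - midpoint_db)))


# Hypothetical parameters (NOT fitted to the study data).
MIDPOINT_DB = -8.0
SLOPE_PER_DB = 0.8

# Evaluate the function 2.5 dB either side of the midpoint.
for snr in (MIDPOINT_DB - 2.5, MIDPOINT_DB, MIDPOINT_DB + 2.5):
    p = logistic_psychometric(snr, MIDPOINT_DB, SLOPE_PER_DB)
    print(f"{snr:6.1f} dB SNR -> {p:.2f} proportion correct")
```

With a slope of this size, a 5 dB change around the midpoint moves predicted recognition from roughly 12% to roughly 88% correct, which is the kind of steepness the cited studies describe.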

The effects of perceptual demands (steady-state noise) differed for verbal working-memory tasks with different linguistic demands. Increasing levels of steady-state noise adversely affected performance on a working-memory task involving open-set speech recognition (alphabet span), but performance did not change with the same levels of increasing noise on a working-memory task involving closed-set speech recognition (subtract-2 span). Single-digit numbers constitute a closed set of highly overlearned forms, whereas there is a much larger open set of words, resulting in a need for more detailed perceptual discrimination to distinguish a word from its possible lexical neighbors (e.g., bat, hat, bag, bet). It is important to note that the present data demonstrate that background noise significantly affects the perceptual and processing demands associated with alphabet span but not subtract-2 span, at least for the type and levels of noise in the present study. As shown in Figure 1, the effects of background noise at even the highest perceptual demand tested here (−10 dB SNR) did not affect performance accuracy on subtract-2 span at any set size. This was in contrast to the effects of background noise on the alphabet span task, where performance was affected by noise as early as a set size of two words to be alphabetized. The decline cannot be attributed to poor intelligibility, as the average word-recognition scores were above 90% correct for the 0 and −5 dB SNR conditions.

It is striking that the effects of background noise on working-memory performance can be so pronounced on a linguistically demanding task such as alphabet span yet absent for the same levels of noise on a task with comparatively simple linguistic stimuli such as subtract-2 span. It is possible that another measure of processing demands (e.g., reaction time) would be more sensitive to subtle effects of background noise on performance in the subtract-2 span task. For example, in an early study by Broadbent (1958), participants were slower to calculate subtractions of four-digit numbers from six-digit strings in the presence of background noise. Nonetheless, background noise had a significant effect on performance accuracy in alphabet span but not subtract-2 span. This pattern of findings demonstrates that the perceptual demands of steady-state noise, an energetic masker, interact with linguistic demands on some working-memory tasks. Other types of background noise, such as background babble or competing talkers with greater informational masking, may produce a different pattern of results for these working-memory tasks. In related work, Neidleman, Wambacq, Besing, Spitzer, and Koehnke (2015) recently reported on the effect of background babble on working memory for a combined word-recognition and recall task. Interestingly, by examining serial position curves, they found that young adults unexpectedly performed significantly better in babble than in quiet for recall of words in the first position. Percent correct for the first three serial positions of a word string was also significantly lower for middle-aged than for younger adults. These findings suggest that future studies in this line should consider the effects of task demands and their potential interactions with type of noise.

The interaction between perceptual and linguistic demands appears to be consistent with the ELU model. The basic premise is that processing capacity for speech perception and working memory is limited. Reduced or distorted acoustic cues for speech may increase the probability of lexical mismatch and induce explicit processing at the point of comparing a phonological representation to semantic long-term memory. A phonological mismatch may increase the number of possible phonological representations that may be activated. In the current study, when words belonged to a small closed set of phonologically dissimilar items (digits), they could be rapidly and effortlessly matched to existing representations in long-term memory even when they were relatively degraded by noise (subtract-2 span). However, when the set was less constrained (alphabet span), noise induced mismatch, leading to explicit processing of items. The present results suggest that increasing the linguistic demand of a verbal memory task in an adverse listening condition may limit the cognitive reserve available for other cognitive processing (cf. the “cognitive spare capacity” of Mishra, Lunner, Stenfelt, Rönnberg, & Rudner, 2013; Rudner & Lunner, 2013). Other potential explanations of the effects of background noise on working memory include interference at encoding or rehearsal, even when perception (recognition) is intact (McCoy et al., 2005; Rabbitt, 1991; Wingfield & Tun, 2007). The nature of the linguistic demand within the verbal working-memory task also matters, as demonstrated by the differential effects of noise on subtract-2 span and alphabet span. Noise alone, depending on its level, is not necessarily enough to induce explicit processing as measured by performance. However, there may be differences in reaction time or other metrics that our experiment did not capture.

The picture becomes more complex when the additional effects of capacity demand are considered. Recall that we predicted that the most dramatic effects on performance would occur at the largest set size, for the task with the highest linguistic demand, in the most adverse listening condition. This is not what we found. In the task with higher linguistic demand (alphabet span), changes across SNR were greater for the smaller set size than for the larger set size. In addition to the decline between 0 and −5 dB SNR, we expected a further decrease in performance between −5 and −10 dB SNR for the larger set size in alphabet span, but performance did not differ between these two groups. Likewise, increasing perceptual demand had no significant effect on the subtract-2 span task. These findings run counter to what would be predicted by capacity-limited models such as the ELU model.
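The contrast between set sizes can be made concrete by differencing the alphabet-span group means reported in the Results. The sketch below tabulates those descriptive differences in percentage points; it is not a test of the Capacity Demand × Perceptual Demand interaction, and the function name is hypothetical.

```python
# Group mean alphabet-span item scores in noise (percent correct), copied
# from the post hoc results: small = set sizes 2-3, large = set sizes 5-6.
ALPHABET_SPAN_MEANS = {
    "small": {0: 98.7, -5: 86.2, -10: 64.0},
    "large": {0: 54.0, -5: 37.6, -10: 37.0},
}


def snr_drops(by_snr: dict[int, float]) -> dict[str, float]:
    """Differences between group means (percentage points) across SNR steps.

    Descriptive differences only; no inferential test is performed.
    """
    return {
        "0 to -5 dB": round(by_snr[0] - by_snr[-5], 1),
        "-5 to -10 dB": round(by_snr[-5] - by_snr[-10], 1),
        "0 to -10 dB": round(by_snr[0] - by_snr[-10], 1),
    }


for set_size, by_snr in ALPHABET_SPAN_MEANS.items():
    print(set_size, snr_drops(by_snr))
```

The total drop from 0 to −10 dB SNR is about twice as large for the small set size (34.7 points) as for the large set size (17.0 points). The 0 to −5 dB step alone is slightly larger for the large set size (16.4 vs. 12.5 points), so the difference is carried by the −5 to −10 dB step, where the large set size changes by only 0.6 points.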

One possibility is that these unexpected findings reflect floor effects. On this account, we did not observe significantly greater performance drops in the most adverse conditions because participants could not perform any worse than they were already performing. As Figure 1 shows, accuracy on alphabet span never fell below about 20%, even for set sizes of 7 in the −10 dB SNR condition. This accuracy level corresponds to correctly identifying approximately one word in each trial. The fact that participants did not fall to 0% accuracy, even in the most challenging condition, suggests that 20% accuracy might function as the floor for this task. However, the alphabet span task has an open set of potential targets, so in principle participants could fail to recognize every word in a trial, resulting in 0% accuracy. Thus, even if floor effects contribute to the results, it is unlikely that the findings are reducible to them.
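The link between 20% accuracy and "approximately one word in each trial" can be checked with simple arithmetic. The sketch below assumes that the item score is the proportion of words in a trial that were reported correctly; the helper name is hypothetical.

```python
def words_correct_per_trial(accuracy: float, set_size: int) -> float:
    """Expected words reported correctly per trial, assuming the item score
    is the proportion of words in a trial that were reported correctly."""
    return accuracy * set_size


FLOOR_ACCURACY = 0.20  # approximate lowest alphabet-span accuracy in the text

for set_size in (5, 6, 7):
    words = words_correct_per_trial(FLOOR_ACCURACY, set_size)
    print(f"set size {set_size}: {words:.1f} words per trial")
```

Under this assumption, 20% accuracy corresponds to 1.0 word per trial at set size 5 and 1.4 words at set size 7, whereas 0% accuracy would mean no words reported correctly.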

Another possibility is that this pattern of findings can be attributed to engagement (i.e., depth of processing). On this account, background noise might have less impact on more difficult tasks because of an increase in task engagement. In this context, engagement is not equivalent to interest in the task. Rather, the increased difficulty of the task, due to capacity, linguistic, or perceptual demands, would induce increased cognitive processing (e.g., Neidleman et al., 2015). Halin, Marsh, Haga, Holmgren, and Sörqvist (2014) offered such a task-engagement explanation to account for their finding that although background speech disrupted performance on a visual proofreading task, the effect was reduced when proofreading was performed with a visual masker or an altered font. The reduced effect of background speech with higher visual task demands could be due to reduced attentional capture by the auditory signal under heightened focal task attention, or to task engagement constraining auditory-sensory gating so that the auditory stimulus is not processed as deeply. Sörqvist, Stenfelt, and Rönnberg (2012) provided electrophysiological evidence supporting the depth-of-processing explanation. In a study of visual-verbal working memory, they found that as cognitive load increased, processing of irrelevant sounds (e.g., tone bursts) decreased, with wave-V amplitudes of the auditory brainstem response decreasing with increasing task load. They interpreted these results as higher task engagement affecting auditory-sensory gating, meaning that for difficult tasks, background speech would not be processed as deeply and would therefore be less distracting than with simpler tasks. Such findings suggest that more difficult tasks may increase depth of processing (i.e., engagement) and yield better-than-expected performance in challenging conditions. In the present study, the implication is that participants did not fall to 0% accuracy in the most challenging conditions because of a higher level of task engagement, similar to the findings of Neidleman et al. (2015).

The extent to which such enhanced processing in difficult listening conditions is under conscious control is unclear. For example, task engagement might be mediated by the subjective experience of task difficulty. Recall that word-recognition scores at −5 dB SNR were quite high, above 90% (see Table 1). In contrast, accuracy on the alphabet span task declined notably at this same level of perceptual demand. Thus, a person focusing solely on perceptual ability (e.g., recognition) would not detect a need for increased attention to the task and might have an illusion of competence in noise despite declines in working-memory performance. This might be one reason that we observed a decrease in performance on the alphabet span task even in the conditions demanding the least working-memory capacity (set sizes 2 and 3). Conversely, participants in the −10 dB SNR condition would be very likely to detect a need for increased attention to the task, resulting in better-than-expected performance at larger set sizes.

The present study has demonstrated differential effects of steady-state noise on verbal working-memory tasks. The findings point to a more nuanced view of interactions in auditory-cognitive processing than the ELU model currently offers. The central idea of the ELU model is that working-memory processes are engaged under adverse listening conditions. This idea is consistent with the claim that linguistic and capacity demands would also engage working memory, but the ELU model to date has focused on adverse perceptual conditions. The ELU model is currently underspecified with respect to how other variables might trigger a listener to engage the explicit processes that involve working memory. The present results suggest that higher levels of capacity and linguistic demand will trigger explicit processing. However, different sources of difficulty may interact in somewhat unexpected ways. For example, we observed no effect of noise in the subtract-2 span task, which has relatively low linguistic demands. In contrast, noise had a detrimental effect when combined with the high linguistic demands of the alphabet span task, even for small set sizes. Further, the high levels of performance on alphabet span in quiet at small set sizes demonstrated that this task is well within the working-memory capacity of the participants. Thus, effects of perceptual demand were observed only under high levels of linguistic demand.

These findings have potential implications for researchers and clinicians. In terms of research, the results highlight the importance of considering specific task demands when measuring and interpreting performance on cognitive or listening tasks. That is, one would not want to conflate the effects of noise with effects of linguistic demand or working-memory capacity demand. Further research is needed to more fully explain the critical task parameters. The present results suggest that an important variable to consider is the nature of the stimuli (closed vs. open set of lexical items). In an open set with a greater number of alternatives, there may be a greater linguistic demand on working memory to determine the correct phonologic and semantic interpretation (Cowan, 1988, 1997; Francis & Nusbaum, 2009). Another consideration for future research would be the type of operation (e.g., simple math vs. alphabetizing). When predicting performance in listening situations, we conclude that it is important to consider noise, linguistic demand, and working-memory capacity demand, because they each play an independent role and have the potential to interact with one another.

The finding that performance was affected at a low set size for linguistically demanding tasks in an even mildly adverse listening condition is also clinically important. It suggests that many people might have difficulty with memory performance in conditions that might not, at first glance, seem very challenging on the basis of recognition. Also, people with deficits in language skills, working memory, or perceptual abilities might be at higher risk for declines in memory performance in noise. Extrapolating from the present results, the interaction between linguistic and perceptual demand on working memory may differ across populations. For example, populations with known perceptual challenges, such as individuals with hearing loss, may show effects of noise level even for the less linguistically demanding subtract-2 span task. Alternatively, the relative ease of a subtract-2 task might change for young adults who have hearing loss or who face the additional cognitive challenge of learning English and are thus not as familiar with the closed number set. When considering performance in a particular listening situation, it is important to examine the perceptual, linguistic, and capacity demands, and the way those demands might be influenced by factors intrinsic to the individual, such as hearing loss, nonnative language processing, age-related declines in working-memory capacity, and language or neurocognitive disorders such as developmental language delay or aphasia. Future research should examine in detail how different types of listening conditions and cognitive tasks interact to affect people with differing perceptual and cognitive skills.

Acknowledgments

This study was supported by research funding associated with the James S. and Dyan Pignatelli/Unisource Clinical Chair in Audiologic Rehabilitation for Adults, the Department of Speech, Language, and Hearing Sciences at the University of Arizona, and the National Institutes of Health/National Institute on Deafness and Other Communication Disorders (K23DC010808 to Gayle DeDe). Preliminary data were presented at the American Auditory Society meeting in Scottsdale, AZ, March 2014. We thank research assistants Naomi Rhodes, Jaclyn Hellmann, Daniel Bos, Sarah Whitehurst, and Jen DiLallo for their assistance with data collection. We also thank Mitch Sommers for his helpful comments on an earlier version of this research article.

Footnote

The data were also analyzed using raw item scores without adjustment for intelligibility of items. The overall results would be interpreted in the same way.

References

- Alt M. (2011). Phonological working memory impairments in children with specific language impairment: Where does the problem lie? Journal of Communication Disorders, 44, 173–185.

- American Speech-Language-Hearing Association. (2005). Guidelines for manual pure-tone threshold audiometry [Guidelines]. Retrieved from http://www.asha.org/policy

- Arehart K. H., Souza P., Baca R., & Kates J. M. (2013). Working memory, age, and hearing loss: Susceptibility to hearing aid distortion. Ear and Hearing, 34, 251–260.

- Baddeley A. (2003). Working memory: Looking back and looking forward. Nature Reviews Neuroscience, 4, 829–839.

- Balota D. A., Yap M. J., Cortese M. J., Hutchison K. A., Kessler B., Loftis B., … Treiman R. (2007). The English Lexicon Project. Behavior Research Methods, 39, 445–459.

- Boersma P., & Weenink D. (2014). Praat: Doing phonetics by computer (Version 5.3.82) [Computer software]. Retrieved from http://www.praat.org

- Broadbent D. E. (1958). Effect of noise on an “intellectual” task. The Journal of the Acoustical Society of America, 30, 824–826.

- Carpenter P. A., Miyake A., & Just M. A. (1994). Working memory constraints in comprehension: Evidence from individual differences, aphasia, and aging. In Gernsbacher M. A. (Ed.), Handbook of psycholinguistics (pp. 1075–1122). New York, NY: Academic Press.

- Case R., Kurland D. M., & Goldberg J. (1982). Operational efficiency and the growth of short-term memory span. Journal of Experimental Child Psychology, 33, 386–404.

- Conway A. R. A., Kane M. J., & Engle R. W. (2003). Working memory capacity and its relation to general intelligence. Trends in Cognitive Sciences, 7, 547–552.

- Cowan N. (1988). Evolving conceptions of memory storage, selective attention, and their mutual constraints within the human information-processing system. Psychological Bulletin, 104, 163–191.

- Cowan N. (1997). Attention and memory. New York, NY: Oxford University Press.

- Cowan N. (2008). What are the differences between long-term, short-term, and working memory? Progress in Brain Research, 169, 323–338.

- Craik F. I. M. (1986). A functional account of age differences in memory. In Klix F. & Hagendorf H. (Eds.), Human memory and cognitive capabilities (pp. 409–422). New York, NY: Elsevier Science.

- Daneman M., & Carpenter P. A. (1980). Individual differences in working memory and reading. Journal of Verbal Learning and Verbal Behavior, 19, 450–466.

- Daneman M., & Merikle P. M. (1996). Working memory and language comprehension: A meta-analysis. Psychonomic Bulletin & Review, 3, 422–433.

- DeDe G., Caplan D., Kemtes K., & Waters G. (2004). The relationship between age, verbal working memory, and language comprehension. Psychology and Aging, 19, 601–616.

- Dubno J. R., Horwitz A. R., & Ahlstrom J. B. (2005). Word recognition in noise at higher-than-normal levels: Decreases in scores and increases in masking. The Journal of the Acoustical Society of America, 118, 914–922.

- Ellermeier W., & Zimmer K. (2014). The psychoacoustics of the irrelevant sound effect. Acoustical Science and Technology, 35, 10–16.

- Engle R. W., Tuholski S. W., Laughlin J. E., & Conway A. R. (1999). Working memory, short-term memory, and general fluid intelligence: A latent-variable approach. Journal of Experimental Psychology: General, 128, 309–331.

- Foo C., Rudner M., Rönnberg J., & Lunner T. (2007). Recognition of speech in noise with new hearing instrument compression release settings requires explicit cognitive storage and processing capacity. Journal of the American Academy of Audiology, 18, 618–631.

- Francis A. L., & Nusbaum H. C. (2009). Effects of intelligibility on working memory demand for speech perception. Attention, Perception, & Psychophysics, 71, 1360–1374.

- Halin N., Marsh J. E., Haga A., Holmgren M., & Sörqvist P. (2014). Effects of speech on proofreading: Can task-engagement manipulations shield against distraction? Journal of Experimental Psychology: Applied, 20, 69–80.

- Just M. A., & Carpenter P. A. (1992). A capacity theory of comprehension: Individual differences in working memory. Psychological Review, 99, 122–149.

- Lunner T. (2003). Cognitive function in relation to hearing aid use. International Journal of Audiology, 42, S49–S58.

- Mattys S. L., Davis M. H., Bradlow A. R., & Scott S. K. (2012). Speech recognition in adverse conditions: A review. Language and Cognitive Processes, 27, 953–978.

- McCoy S. L., Tun P. A., Cox L. C., Colangelo M., Stewart R. A., & Wingfield A. (2005). Hearing loss and perceptual effort: Downstream effects on older adults' memory for speech. The Quarterly Journal of Experimental Psychology: Human Experimental Psychology, 58(A), 22–33.

- Mishra S., Lunner T., Stenfelt S., Rönnberg J., & Rudner M. (2013). Seeing the talker's face supports executive processing of speech in steady state noise. Frontiers in Systems Neuroscience, 7, 96.

- Mishra S., Stenfelt S., Lunner T., Rönnberg J., & Rudner M. (2014). Cognitive spare capacity in older adults with hearing loss. Frontiers in Aging Neuroscience, 6, 96.

- Murphy D. R., Craik F. I. M., Li K. Z. H., & Schneider B. A. (2000). Comparing the effects of aging and background noise on short-term memory performance. Psychology and Aging, 15, 323–334.

- Neidleman M. T., Wambacq I., Besing J., Spitzer J. B., & Koehnke J. (2015). The effect of background babble on working memory in young and middle-aged adults. Journal of the American Academy of Audiology, 26, 220–228.

- Ng E. H. N., Rudner M., Lunner T., Pedersen M. S., & Rönnberg J. (2013). Effects of noise and working memory capacity on memory processing of speech for hearing-aid users. International Journal of Audiology, 52, 433–441.

- Parbery-Clark A., Skoe E., Lam C., & Kraus N. (2009). Musician enhancement for speech-in-noise. Ear and Hearing, 30, 653–661.

- Parbery-Clark A., Strait D. L., Anderson S., Hittner E., & Kraus N. (2011). Musical experience and the aging auditory system: Implications for cognitive abilities and hearing speech in noise. PLoS ONE, 6, e18082.

- Pichora-Fuller M. K. (2006). Perceptual effort and apparent cognitive decline: Implications for audiologic rehabilitation. Seminars in Hearing, 27, 284–293.

- Pichora-Fuller M. K., Schneider B. A., & Daneman M. (1995). How young and old adults listen to and remember speech in noise. The Journal of the Acoustical Society of America, 97, 593–608.

- Plichta B. (2012). Akustyk (Version 1.9.3) [Computer software]. Retrieved from http://archive.is/Ma5SA

- Rabbitt P. (1966). Recognition: Memory for words correctly heard in noise. Psychonomic Science, 6, 383–384.

- Rabbitt P. M. A. (1968). Channel-capacity, intelligibility and immediate memory. The Quarterly Journal of Experimental Psychology, 20, 241–248.

- Rabbitt P. (1991). Mild hearing loss can cause apparent memory failures which increase with age and reduce with IQ. Acta Oto-Laryngologica, 111(Suppl. 476), 167–176.

- Rönnberg J., Lunner T., Zekveld A., Sörqvist P., Danielsson H., Lyxell B., … Rudner M. (2013). The Ease of Language Understanding (ELU) model: Theoretical, empirical, and clinical advances. Frontiers in Systems Neuroscience, 7, 31.

- Rönnberg J., Rudner M., Lunner T., & Zekveld A. A. (2010). When cognition kicks in: Working memory and speech understanding in noise. Noise & Health, 12, 263–269.

- Rudner M., & Lunner T. (2013). Cognitive spare capacity as a window on hearing aid benefit. Seminars in Hearing, 34, 298–307.

- Rudner M., Rönnberg J., & Lunner T. (2011). Working memory supports listening in noise for persons with hearing impairment. Journal of the American Academy of Audiology, 22, 156–167.

- Salamé P., & Baddeley A. (1982). Disruption of short-term memory by unattended speech: Implications for the structure of working memory. Journal of Verbal Learning and Verbal Behavior, 21, 150–164.

- Salthouse T. A. (1994). The aging of working memory. Neuropsychology, 8, 535–543.

- Sörqvist P., Stenfelt S., & Rönnberg J. (2012). Working memory capacity and visual–verbal cognitive load modulate auditory–sensory gating in the brainstem: Toward a unified view of attention. Journal of Cognitive Neuroscience, 24, 2147–2154.

- Studebaker G. A., Taylor R., & Sherbecoe R. L. (1994). The effect of noise spectrum on speech recognition performance-intensity functions. Journal of Speech and Hearing Research, 37, 439–448.

- Waters G. S., & Caplan D. (2003). The reliability and stability of verbal working memory measures. Behavior Research Methods, Instruments, & Computers, 35, 550–564.

- Wingfield A., & Tun P. A. (2007). Cognitive supports and cognitive constraints on comprehension of spoken language. Journal of the American Academy of Audiology, 18, 548–558.