Abstract

Signals with discontinuities appear in many problems in the applied sciences, ranging from mechanics and electrical engineering to biology and medicine. The concrete data acquired are typically discrete, indirect and noisy measurements of some quantities describing the signal under consideration. The task is to restore the signal and, in particular, the discontinuities. In this respect, classical methods perform rather poorly, whereas non-convex non-smooth variational methods seem to be the correct choice. Examples are methods based on Mumford–Shah and piecewise constant Mumford–Shah functionals and their discretized versions, which are known as Blake–Zisserman and Potts functionals. Owing to their non-convexity, minimization of such functionals is challenging. In this paper, we propose a new iterative minimization strategy for Blake–Zisserman as well as Potts functionals and a related jump-sparsity problem dealing with indirect, noisy measurements. We provide a convergence analysis and underpin our findings with numerical experiments.

Keywords: signals with discontinuities, piecewise constant signals, Potts functionals, Blake–Zisserman functionals, inverse problems, convergence analysis

1. Introduction

Problems involving reconstruction tasks for functions with discontinuities appear in numerous biological and medical applications. Examples are the steps in the rotation of the bacterial flagella [1,2], the cross-hybridization of DNA [3–5] and single-molecule fluorescence resonance energy transfer [6]. A more classical engineering example is crack detection in fracture mechanics. In general, signals with discontinuities appear in many applied problems, ranging from mechanics and electrical engineering to biology and medicine. For further examples, we refer to the papers [7–9] and the references therein. The latter references in particular deal with various aspects of the reconstruction of piecewise constant signals.

For reconstruction tasks, it is quite usual to use a variational approach: an energy functional consisting of a data fidelity term and a regularizing term is minimized. It is well known that classical regularizing terms based on Hilbert–Sobolev semi-norms perform poorly for the recovery of functions with discontinuities. Much better results are obtained with non-smooth non-convex functionals such as the Mumford–Shah functional and the piecewise-constant Mumford–Shah functional [10,11]. These functionals penalize the ‘size’ (i.e. the outer Hausdorff measure) of the discontinuity set and, only on its complement, measure some qth variation. However, their analysis is more demanding because they are non-smooth and non-convex. Some references concerning Mumford–Shah functionals are [12–15] and the references therein; see also the book [16]. Mumford–Shah functionals are perhaps the best-known representatives of the class of free-discontinuity problems introduced by De Giorgi [17].

The concrete data acquired in applications are usually indirect measurements. Furthermore, they consist of measurements on a discretized grid and are typically corrupted by noise. In practical applications, the task is to restore the signal and, in particular, to recover the discontinuities from these measurements. In order to deal with indirect linear measurements, data terms of the form $\|Au-f\|_2^2$, where A represents the measurement device and f is the given data, are considered. To deal with the discrete data, the energy functional is typically discretized.

Discretizations of the Mumford–Shah functional and the piecewise-constant Mumford–Shah functional are known under the names Blake–Zisserman functionals and Potts functionals, respectively. The weak string model, as Blake & Zisserman call it in [18], is given in its univariate version by

$$\min_{u\in\mathbb{R}^L}\; \gamma\sum_{i=1}^{L-1}\min\big(s^q,\,|u_{i+1}-u_i|^q\big) + \|Au-f\|_2^2.$$

Here, the data f live in a real-valued finite dimensional linear space. The parameter γ>0 controls the balance between the data fidelity and the regularizing term. The parameter s>0 determines which differences are treated as discontinuities. More precisely, the underlying signal models are piecewise smooth functions with discontinuities. In a discrete set-up, we say there is a jump (a discontinuity) between i and i+1 if the distance between $u_{i+1}$ and $u_i$ is at least s. The penalty for a jump is then $s^q$, independent of its magnitude. If the distance between consecutive members of u is smaller than s, the penalty is $|u_{i+1}-u_i|^q$, i.e. the corresponding contribution to the (discrete) qth variation of u. We allow for q ≥ 1. References related to Blake–Zisserman functionals are [13,18–20].
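The regularizer above can be evaluated directly. The following minimal Python/NumPy sketch (the function names `bz_regularizer` and `bz_energy` are our own, chosen for illustration) shows that, for s = 1, a jump of height 5 and a jump of height 50 receive the same penalty, whereas a small difference contributes its qth power.

```python
import numpy as np


def bz_regularizer(u, s, q=2.0):
    """Sum of min(s**q, |u[i+1] - u[i]|**q) over neighbouring samples."""
    diffs = np.abs(np.diff(np.asarray(u, dtype=float)))
    # Differences >= s count as jumps with the constant penalty s**q;
    # smaller differences contribute their q-th power (discrete q-variation).
    return float(np.sum(np.minimum(s ** q, diffs ** q)))


def bz_energy(u, f, A, gamma, s, q=2.0):
    """Blake-Zisserman objective gamma * regularizer + ||A u - f||_2^2."""
    residual = A @ np.asarray(u, dtype=float) - np.asarray(f, dtype=float)
    return gamma * bz_regularizer(u, s, q) + float(residual @ residual)


print(bz_regularizer([0, 0, 5, 5], s=1.0))        # 1.0  (one jump)
print(bz_regularizer([0, 0, 50, 50], s=1.0))      # 1.0  (same penalty)
print(bz_regularizer([0, 0.5, 0.5, 0.5], s=1.0))  # 0.25 (small variation)
```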

The Potts model [21–23] is a discrete variant of the piecewise-constant Mumford–Shah model. It assumes that the underlying signal is constant between its discontinuities. The corresponding minimization problem is given by

$$\min_{u\in\mathbb{R}^L}\; \gamma\|\nabla u\|_0 + \|Au-f\|_2^2,$$

where $\|\nabla u\|_0 = |\{i : u_i \neq u_{i+1}\}|$ denotes the number of jumps of the target variable u. The name Potts model is frequently used in statistics as well as signal and image processing [24–28]; it is a tribute to Renfrey B. Potts and his work in statistical mechanics [21], where the above priors were used for the first time. For recent work concerning the reconstruction of piecewise constant signals, we refer to the papers [7,8] of Little & Jones; the first paper includes an overview, and the second paper in particular deals with methods.

Formally, the Potts problem might be seen as the ‘Lagrange formulation’ of the following constrained optimization problem, which we call the J-jump sparsity problem. It is given by

$$\min_{u\in\mathbb{R}^L}\; \|Au-f\|_2^2 \quad\text{subject to}\quad \|\nabla u\|_0 \le J,$$

where the integer J ≥ 0 bounds the number of jumps. We later see that the relation between the Potts problem and the J-jump sparsity problem is only of a formal nature; they are not equivalent. To the best of our knowledge, the J-jump sparsity problem for general A has only recently appeared in the literature [29]; the authors obtain asymptotic statements in the context of inverse regression. The situation A=id is well studied; references on the J-jump sparsity problem for A=id are [30,31]. Related sparsity problems were considered in [32].

Finding solutions of all the above problems is challenging because they are NP-hard; for the Blake–Zisserman problem, this has been shown in [33], and for the Potts problem, in [34]. Thus, for real applications, there is no hope of finding a global minimizer in reasonable time. However, owing to their practical importance, several approximate strategies have been proposed. Fornasier & Ward [19] (see also Artina et al. [35]) rewrite the Blake–Zisserman problem as a pointwise-penalized problem and derive generalized iterative thresholding algorithms for the rewritten problem. They show that their method converges to a local minimizer. Further theoretical results concerning the pointwise penalized problem were derived by Nikolova [20]. Related algorithms are iterative soft thresholding for ℓ1 penalized problems, analysed by Daubechies et al. [36], and the iterative hard thresholding algorithms for ℓ0 penalizations, analysed by Blumensath & Davies in [32,37]. For the Potts problem, the authors of this paper have proposed a strategy based on the alternating direction method of multipliers in [34]. Furthermore, Candès et al. [38] use iteratively re-weighted total variation minimization for piecewise constant recovery problems. Results of compressed sensing type related to the Potts problem have been derived by Needell & Ward [39,40]: under certain conditions, minimizers of the Potts functional agree with total variation minimizers. For the case of Blake–Zisserman functionals, we are not aware of similar results. However, in the presence of noise, total variation minimizers might differ significantly from minimizers of the Potts problem, and it is the latter that are frequently desired in practice.

In this paper, we propose a new iterative minimization strategy for Blake–Zisserman as well as Potts functionals and the J-jump sparsity problem dealing with indirect, noisy measurements. Our methods belong to the class of majorization–minimization or forward–backward splitting methods of Douglas–Rachford type [41]. In contrast to the approaches in [19] and [32,37], which lead to thresholding algorithms, our approach leads to non-separable yet computationally tractable problems in the backward step. Facing the additional challenge posed by the non-separability of the backward step, we provide a convergence analysis. Most notably, we obtain convergence statements towards local minimizers. We further establish a relation between stationary points of the iterations and local and global minimizers. We also show that the Potts problem and the J-jump sparsity problem are not equivalent. On the experimental side, we show the applicability of each of our algorithms in several signal recovery experiments. In particular, we consider deconvolution problems with full and partial data. We also apply our methods to real data: we estimate the steps in the rotation of the bacterial flagellar motor [1].

The paper is organized as follows. In §2, we consider the variational recovery of signals with jumps. We properly introduce the considered problems and derive iterative algorithms for their solution. This is done for the Potts problem in §2a, for the J-jump sparsity problem in §2b, and for the Blake–Zisserman problem in §2c. In §3, we gather our analytical results. They are formulated in §3a, and the proofs are supplied in the following sections. In §4, we apply the algorithms derived in this paper to concrete reconstruction problems.

2. Variational recovery of signals with jumps and related algorithms

We consider the Potts problem and the J-jump sparsity problem dealing with piecewise constant signals in §2a and b, respectively. The Blake–Zisserman problem dealing with piecewise smooth signals is treated in §2c.

(a). The Potts problem

We start with the Potts problem. Here, the underlying signal models are the piecewise constant functions. The idea is to minimize the Potts functional for corrupted, indirectly measured noisy data to obtain a parsimonious piecewise constant estimate for the underlying signal.

We first of all record the existence of minimizers for this non-continuous problem.

Theorem 2.1 —

The Potts problem has a minimizer.

Proofs are given, e.g., in [19] or in [34], where more general ℓp data terms (which lead to a non-Hilbert space set-up) are also considered. The minimizers of the Potts problem are not unique in general, even if A is injective; but at least for A=id, the set of data and parameters γ for which minimizers are not unique is known to be negligible [42]. It is worth mentioning that the time-continuous formulation of the Potts problem need not have solutions in general; here, additional regularization, e.g. by constraints, is needed. Then, under further suitable assumptions, the minimizers of the Potts problem converge to a piecewise constant minimizer of a continuous Potts functional (or, synonymously, piecewise constant Mumford–Shah functional) as sampling gets finer [25]. This also holds in the presence of noise [25,26,43]. For related results on Potts functionals with L1 data terms, we also refer to Weinmann et al. [44].

Iterative Potts minimization algorithms. Because the Potts problem is NP-hard (cf. [34]), there is no hope of finding a practically applicable algorithm that computes a global minimizer within reasonable time. The challenge is to derive practically applicable algorithms that produce ‘good solutions’ (‘good’ from the practical point of view) and to obtain analytic guarantees, at least locally. We here derive an algorithm that does so. Later on, we show convergence towards a local minimizer. To this end, we consider the Potts functional

$$P_\gamma(u) = \gamma\|\nabla u\|_0 + \|Au-f\|_2^2 \to \min. \tag{2.1}$$

Here, the target variable u lives in $\mathbb{R}^L$, the data f, consisting of s measurements, live in $\mathbb{R}^s$, and A is a real-valued s×L matrix. The corresponding surrogate functional $P^{\mathrm{surr}}_\gamma$ is given by

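$$P^{\mathrm{surr}}_\gamma(u,v) = \gamma\|\nabla u\|_0 + \|Au-f\|_2^2 - \|Au-Av\|_2^2 + \|u-v\|_2^2. \tag{2.2}$$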

Expanding and rearranging the terms on the right-hand side, we see that

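$$P^{\mathrm{surr}}_\gamma(u,v) = \gamma\|\nabla u\|_0 + \big\|u - \big(v - A^*Av + A^*f\big)\big\|_2^2 + C \tag{2.3}$$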

with some constant C which is independent of u and thus negligible. It follows that

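$$\operatorname{argmin}_u P^{\mathrm{surr}}_\gamma(u,v) = \operatorname{argmin}_u\; \gamma\|\nabla u\|_0 + \big\|u - \big((I-A^*A)v + A^*f\big)\big\|_2^2. \tag{2.4}$$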
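The expansion leading to (2.3) can be checked numerically. The Python/NumPy sketch below is only an illustration: the random test problem, the variable `n_meas` (playing the role of the number s of measurements) and the helper names are our own assumptions. It evaluates the surrogate (2.2) and the denoising form γ∥∇u∥0 + ∥u − d∥² with d = v − A*Av + A*f for several u (A is real, so A* is the transpose) and confirms that the two differ only by a u-independent constant.

```python
import numpy as np

rng = np.random.default_rng(0)
n_meas, L, gamma = 5, 8, 0.3
A = rng.standard_normal((n_meas, L))
A /= 1.1 * np.linalg.norm(A, 2)             # ensures ||A||_2 < 1
f = rng.standard_normal(n_meas)
v = rng.standard_normal(L)


def n_jumps(u):
    return np.count_nonzero(np.diff(u))


def surrogate(u, v):
    """P^surr as in (2.2): gamma*||grad u||_0 + ||Au-f||^2 - ||Au-Av||^2 + ||u-v||^2."""
    r1, r2, r3 = A @ u - f, A @ (u - v), u - v
    return gamma * n_jumps(u) + r1 @ r1 - r2 @ r2 + r3 @ r3


def denoising_form(u, v):
    """gamma*||grad u||_0 + ||u - d||^2 with d = v - A^T A v + A^T f."""
    d = v - A.T @ (A @ v) + A.T @ f
    return gamma * n_jumps(u) + (u - d) @ (u - d)


# The difference is the constant C of (2.3): it must not depend on u.
gaps = [surrogate(u, v) - denoising_form(u, v) for u in rng.standard_normal((5, L))]
print(np.ptp(gaps))  # rounding-level, i.e. close to 0
```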

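We now successively compute $u^{k+1} \in \operatorname{argmin}_u P^{\mathrm{surr}}_\gamma(u,u^k)$, starting from an initial guess $u^0$. We obtain the following iterative Potts minimization algorithm given by the iteration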

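$$\begin{aligned} d^{k+1} &= (I-A^*A)\,u^k + A^*f,\\ u^{k+1} &\in \operatorname{argmin}_{u\in\mathbb{R}^L}\; \gamma\|\nabla u\|_0 + \|u-d^{k+1}\|_2^2, \end{aligned} \tag{2.5}$$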

where I denotes the identity matrix. The first step consists of simple matrix–vector multiplications which can be done in quadratic time. The crucial observation is that the second step consists of minimizing a Potts functional with A=id, given by

$$P^{\mathrm{id}}_\gamma(u) = \gamma\|\nabla u\|_0 + \|u-d\|_2^2 \tag{2.6}$$

for data d=dk+1. For the special case A=id, a global minimizer can be computed in quadratic complexity as explained in the next paragraph. Hence, both steps of the iteration can be computed efficiently.
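A minimal Python/NumPy sketch of iteration (2.5), assuming ∥A∥<1. The inner Potts problem with A=id is solved exactly by the dynamic program described in the next paragraph (a compact prefix-sum version). The function names, the 3-tap blur matrix, the noise level and γ are illustrative choices, not taken from the experiments of this paper. Recording the energies makes the monotone decrease asserted in lemma 3.5 observable.

```python
import numpy as np


def potts_denoise(d, gamma):
    """Exact minimizer of gamma*||grad u||_0 + ||u - d||^2 (dynamic programming)."""
    d = np.asarray(d, dtype=float)
    L = d.size
    s1 = np.concatenate(([0.0], np.cumsum(d)))      # prefix sums of d
    s2 = np.concatenate(([0.0], np.cumsum(d * d)))  # prefix sums of d^2
    best = np.full(L + 1, np.inf)                   # best[r]: optimal value on d[:r]
    best[0] = -gamma                                # convention for the empty prefix
    start = np.zeros(L + 1, dtype=int)
    for r in range(1, L + 1):
        for l in range(r):                          # last plateau is d[l:r]
            seg = s1[r] - s1[l]
            eps = s2[r] - s2[l] - seg * seg / (r - l)   # squared deviation from mean
            val = best[l] + gamma + eps
            if val < best[r]:
                best[r], start[r] = val, l
    u, r = np.empty(L), L
    while r > 0:                                    # backtrack the plateaus
        l = start[r]
        u[l:r] = (s1[r] - s1[l]) / (r - l)
        r = l
    return u, best[L]


def potts_energy(u, A, f, gamma):
    res = A @ u - f
    return gamma * np.count_nonzero(np.diff(u)) + float(res @ res)


def iterative_potts(A, f, gamma, iters=100):
    """Iteration (2.5): Landweber-type step followed by exact Potts denoising."""
    u = np.zeros(A.shape[1])
    energies = [potts_energy(u, A, f, gamma)]
    for _ in range(iters):
        d = u + A.T @ (f - A @ u)                   # (I - A^T A) u^k + A^T f
        u, _ = potts_denoise(d, gamma)
        energies.append(potts_energy(u, A, f, gamma))
    return u, energies


# Illustrative blur: 3-tap moving average scaled so that ||A||_2 < 1.
L = 40
A = 0.95 * (0.5 * np.eye(L) + 0.25 * np.eye(L, k=1) + 0.25 * np.eye(L, k=-1))
truth = np.repeat([0.0, 1.0, -0.5, 0.8], 10)
rng = np.random.default_rng(0)
f = A @ truth + 0.02 * rng.standard_normal(L)
u, energies = iterative_potts(A, f, gamma=0.05)
```

Each outer step costs one multiplication with A and one with its transpose plus an O(L²) dynamic program, which matches the complexity discussion above.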

The Potts problem for A=id. The Potts problem (2.6) (with A=id) can be solved exactly by dynamic programming [10,11,13,23,31,45–47]. Because it is a basic building block of our method, we briefly describe the basic idea of the dynamic programming algorithm for its solution. For a more detailed description, we refer, e.g. to [45]. We assume that we have already computed minimizers ul of the Potts functionals Pidγ associated with the partial data (d1,…,dl) for each l=1,…,r and some r smaller than the number L of full data items. Using this information, the Potts functional associated with data (d1,…,dr+1) can be efficiently minimized via

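$$P^{\mathrm{id}}_\gamma(u^{r+1}) = \min_{l=1,\dots,r+1}\Big\{P^{\mathrm{id}}_\gamma(u^{l-1}) + \gamma + \epsilon_{[l,r+1]}\Big\}, \tag{2.7}$$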

where $\epsilon_{[l,r+1]}$ is the quadratic deviation of the data $(d_l,\dots,d_{r+1})$ from its mean. Here, we use the convention that $u^0$ is an empty vector and $P^{\mathrm{id}}_\gamma(u^0)=-\gamma$. A corresponding minimizer reads $u^{r+1}=(u^{l^*-1},w_{[l^*,r+1]})$, where $l^*$ is a minimizing argument of the right-hand side of (2.7) and $w_{[l^*,r+1]}=(\mu,\dots,\mu)$ with μ being the mean value of $(d_{l^*},\dots,d_{r+1})$. We obtain a minimizer for full data d by successively computing $u^l$ for each l=1,…,L. The described method can be implemented in $O(L^2)$ using pre-computation of the moments and storing only jump locations [45]. Furthermore, there are strategies to prune the search space which speed up the computations in practice [48,49]. Besides the dynamic programming approach, there are greedy algorithms which perform well in practice but which come without theoretical guarantees; for a discussion, see [7,8].
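The recursion (2.7) translates directly into code. The Python/NumPy sketch below (names are ours; indices are 0-based and plateaus half-open, unlike the 1-based notation of the text) keeps, for each prefix length r, the optimal value and the start of the last plateau, evaluates ϵ in constant time from prefix sums of d and d², and recovers the minimizer by backtracking. It therefore runs in O(L²) time and O(L) memory; it is an illustration, not the optimized implementation of [45].

```python
import numpy as np


def potts_denoise(d, gamma):
    """Exact minimizer of gamma*||grad u||_0 + ||u - d||_2^2 via recursion (2.7)."""
    d = np.asarray(d, dtype=float)
    L = d.size
    s1 = np.concatenate(([0.0], np.cumsum(d)))      # first moments (prefix sums)
    s2 = np.concatenate(([0.0], np.cumsum(d * d)))  # second moments
    best = np.full(L + 1, np.inf)                   # best[r]: optimal value on d[:r]
    best[0] = -gamma                                # convention for the empty vector u^0
    start = np.zeros(L + 1, dtype=int)              # start of the last plateau of d[:r]
    for r in range(1, L + 1):
        for l in range(r):                          # candidate last plateau d[l:r]
            seg = s1[r] - s1[l]
            eps = s2[r] - s2[l] - seg * seg / (r - l)   # squared deviation from mean
            val = best[l] + gamma + eps
            if val < best[r]:
                best[r], start[r] = val, l
    u, r = np.empty(L), L
    while r > 0:                                    # backtrack: only jump locations stored
        l = start[r]
        u[l:r] = (s1[r] - s1[l]) / (r - l)
        r = l
    return u, best[L]


u, value = potts_denoise([0, 0, 0, 3, 3, 3], gamma=1.0)
# u = [0, 0, 0, 3, 3, 3] and value = 1.0: one jump, exact fit
```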

Relations to iterative hard thresholding. In [19], Fornasier and Ward transform Blake–Zisserman problems with ℓ2 data terms to sparsity-related problems and solve the resulting problem by iterative thresholding algorithms. The same technique can also be applied to the Potts problem (cf. [34]). We obtain the sparsity problem

$$\gamma\|v\|_0 + \|A'\nabla^+ v - f'\|_2^2 \to \min, \qquad v\in\mathbb{R}^{L-1}. \tag{2.8}$$

Here, ∇+ is the pseudo-inverse of the discrete difference operator ∇. The transformed matrix A′ and the transformed data f′ are obtained from A,f by

| 2.9 |

with given by ,

| 2.10 |

Then, the iterative hard thresholding algorithm proposed by Blumensath & Davies [32,37] can be applied to (2.8). This algorithm consists of a gradient (Landweber) step for the data f′ and the matrix A′∇+, followed by a hard thresholding step. In contrast, the method proposed in this paper uses Potts minimization for A=id, working on the direct problem instead of thresholding on a transformed problem. Solving the Potts problem for A=id is of quadratic complexity, as opposed to the linear complexity of thresholding. Thus, the proposed algorithm might seem computationally more expensive than iterative hard thresholding. However, the involved matrix–vector multiplications already have quadratic complexity in general. Even if A is a band matrix or of convolution type (and thus diagonalizable by Fourier methods), the matrix A′∇+ is typically full or no longer of convolution type. This is because ∇+ is a full lower triangular matrix. So either way, the complexity is quadratic.

(b). The J-jump sparsity problem

We here consider the J-jump sparsity problem. As for the Potts problem, the underlying signal model consists of the piecewise constant functions. We minimize the constrained functional for corrupted, indirectly measured noisy data to obtain a parsimonious J-jump sparse estimate for the underlying signal. To the best of our knowledge, the J-jump sparsity problem for general A has only recently appeared in the literature [29]. However, the situation A=id has been considered, for example, in optimum quantization [30] and computational biology [31]. Related sparsity problems were considered in [32].

We first state the existence of minimizers.

Theorem 2.2 —

The J-jump sparsity problem has a solution.

Although the functional is not continuous, the proof is elementary: fix an arbitrary set M of J indices and consider the vectors u whose (left) jump set is contained in M. These u form a vector space of dimension J+1 on which the quadratic functional u↦Q(u)=∥Au−f∥² has a minimizer, say $u_M$. Varying over all jump sets M of size J (of which there are finitely many) yields a candidate $u_M$ for each jump set M. Since the jump set of every u with at most J jumps is contained in some such M, one can just take a candidate u* with the smallest value $Q(u_M)$ among all M.
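The existence argument is constructive, if exponentially expensive. The Python/NumPy sketch below (the name `jump_sparse_bruteforce` is ours; usable only for very small L) enumerates the jump sets M of size min(J, L−1), solves the least-squares problem on the corresponding (J+1)-dimensional space of piecewise constant vectors with `np.linalg.lstsq`, and keeps the best candidate, exactly as in the proof.

```python
import itertools

import numpy as np


def jump_sparse_bruteforce(A, f, J):
    """Minimize ||A u - f||^2 over u with at most J jumps by enumerating jump sets."""
    L = A.shape[1]
    best_u, best_q = None, np.inf
    # Jump sets of size min(J, L-1) suffice: smaller jump sets are contained in them.
    for M in itertools.combinations(range(1, L), min(J, L - 1)):
        edges = [0, *M, L]                    # u may jump between positions p-1 and p, p in M
        B = np.zeros((L, len(edges) - 1))     # basis of piecewise constant vectors
        for k, (a, b) in enumerate(zip(edges[:-1], edges[1:])):
            B[a:b, k] = 1.0
        c, *_ = np.linalg.lstsq(A @ B, f, rcond=None)
        u = B @ c                             # u_M, minimizer of Q on the subspace
        q = float(np.sum((A @ u - f) ** 2))
        if q < best_q:
            best_u, best_q = u, q
    return best_u, best_q


u, q = jump_sparse_bruteforce(np.eye(3), np.array([0.0, 2.0, 0.0]), J=1)
# q is 2.0 up to rounding; u is one of the optimal fits (0, 1, 1) and (1, 1, 0)
```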

The minimizers of the J-jump sparsity problem are not unique in general. This is a consequence of theorem 3.1, because Potts solutions are solutions of the J-jump sparsity problems, and Potts solutions are not unique.

We record that the problem of finding one or all minimizers of the J-jump sparsity problem is NP-hard.

Theorem 2.3 —

The J-jump sparsity problem is NP-hard.

This is easily seen by the following argument. One (theoretical) way to obtain a solution of the Potts problem for a specific parameter γ is to compute a J-jump sparse solution $u_J$ for every J=0,…,L−1, where L is the signal length. Then, we compare the values $P_\gamma(u_J)$ and choose a minimal one; this is a minimizer of the Potts problem for γ. Indeed, if u* is a Potts minimizer with J* jumps, then $u_{J^*}$ has at most J* jumps and $\|Au_{J^*}-f\|^2 \le \|Au^*-f\|^2$, so $P_\gamma(u_{J^*}) \le P_\gamma(u^*)$. Hence, a solution of the NP-hard Potts problem can be found by solving at most L J-jump sparsity problems. Therefore, the NP-hardness of the Potts problem implies the NP-hardness of the J-jump sparsity problem.
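The reduction can be mirrored in a few lines. In the Python/NumPy sketch below (hypothetical helper names; exponential brute force, so tiny L only), the J-jump sparse fits $u_J$ are computed by enumerating jump sets, and the Potts value is then minimized over J = 0,…,L−1. Jumps are counted with a small tolerance, since least-squares plateaus are only exact up to rounding.

```python
import itertools

import numpy as np


def best_fit_with_jumps(A, f, J):
    """Brute-force minimizer of ||A u - f||^2 over u with at most J jumps."""
    L = A.shape[1]
    best = (np.inf, None)
    for M in itertools.combinations(range(1, L), min(J, L - 1)):
        edges = [0, *M, L]
        B = np.zeros((L, len(edges) - 1))          # plateau indicator basis
        for k, (a, b) in enumerate(zip(edges[:-1], edges[1:])):
            B[a:b, k] = 1.0
        c, *_ = np.linalg.lstsq(A @ B, f, rcond=None)
        res = A @ (B @ c) - f
        best = min(best, (float(res @ res), B @ c), key=lambda t: t[0])
    return best[1]


def potts_via_jump_sparsity(A, f, gamma, tol=1e-10):
    """Potts minimizer obtained by comparing P_gamma(u_J) over J = 0, ..., L-1."""
    def potts(u):
        res = A @ u - f
        jumps = np.count_nonzero(np.abs(np.diff(u)) > tol)  # ignore rounding-level steps
        return gamma * jumps + float(res @ res)
    candidates = [best_fit_with_jumps(A, f, J) for J in range(A.shape[1])]
    u = min(candidates, key=potts)
    return u, potts(u)


u, val = potts_via_jump_sparsity(np.eye(3), np.array([0.0, 2.0, 0.0]), gamma=1.0)
# for gamma = 1 the exact fit u = f wins, with value approximately 2.0
```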

By these considerations, finding a global minimizer within reasonable time is infeasible. Instead, we derive an algorithm that performs well in practice and establish at least statements on local optimality.

Iterative J-jump sparsity algorithms. We consider the J-jump sparsity problem

$$Q(u) = \|Au-f\|_2^2 \to \min \quad\text{subject to}\quad \|\nabla u\|_0 \le J \tag{2.11}$$

and the corresponding constrained surrogate problem

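$$Q^{\mathrm{surr}}(u,v) = \|Au-f\|_2^2 - \|Au-Av\|_2^2 + \|u-v\|_2^2 \to \min \quad\text{subject to}\quad \|\nabla u\|_0 \le J. \tag{2.12}$$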

We may rewrite Qsurr(u,v) to obtain the problem

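$$\big\|u - \big((I-A^*A)v + A^*f\big)\big\|_2^2 \to \min \quad\text{subject to}\quad \|\nabla u\|_0 \le J. \tag{2.13}$$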

We see that this problem is a J-jump sparsity problem with A=id which is computationally feasible as explained below; the complexity is quadratic. Then, minimizing Qsurr(u,v) (or, more precisely, the constrained surrogate problem) with respect to u and taking the result as new input v yields the iteration

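$$\begin{aligned} d^{k+1} &= (I-A^*A)\,u^k + A^*f,\\ u^{k+1} &\in \operatorname{argmin}_{\|\nabla u\|_0\le J}\; \|u-d^{k+1}\|_2^2. \end{aligned} \tag{2.14}$$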

We call this iteration the iterative J-jump sparsity algorithm.
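A minimal Python/NumPy sketch of iteration (2.14), assuming ∥A∥<1. For brevity, the inner problem with A=id is solved here by enumerating all jump sets of size min(J, L−1), which is exact but only feasible for very small L; the dynamic program described in the following paragraph would replace it in practice. The function names and the random test problem are illustrative assumptions. Because each iterate has at most J jumps, Q(u^k) = ∥Au^k − f∥² cannot increase along the iteration.

```python
import itertools

import numpy as np


def project_jumps_bruteforce(d, J):
    """Exact minimizer of ||u - d||^2 subject to at most J jumps (tiny L only)."""
    L = d.size
    best_u, best_q = None, np.inf
    for M in itertools.combinations(range(1, L), min(J, L - 1)):
        edges = [0, *M, L]
        # on each plateau the optimal value is the mean of d there
        u = np.concatenate([np.full(b - a, d[a:b].mean())
                            for a, b in zip(edges[:-1], edges[1:])])
        q = float(np.sum((u - d) ** 2))
        if q < best_q:
            best_u, best_q = u, q
    return best_u


def iterative_jump_sparsity(A, f, J, iters=100):
    """Iteration (2.14): Landweber step, then exact J-jump projection."""
    u = np.zeros(A.shape[1])
    values = [float(np.sum((A @ u - f) ** 2))]
    for _ in range(iters):
        d = u + A.T @ (f - A @ u)          # (I - A^T A) u^k + A^T f
        u = project_jumps_bruteforce(d, J)
        values.append(float(np.sum((A @ u - f) ** 2)))
    return u, values


rng = np.random.default_rng(2)
L, J = 10, 2
A = rng.standard_normal((L, L))
A *= 0.9 / np.linalg.norm(A, 2)            # enforce ||A||_2 < 1
truth = np.repeat([0.0, 1.0, 0.3], [3, 4, 3])
f = A @ truth + 0.01 * rng.standard_normal(L)
u, values = iterative_jump_sparsity(A, f, J)
```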

The J-jump sparsity problem for A=id. In (2.13), we have to solve a J-jump sparsity problem for A=id of the form

$$\|u-d\|_2^2 \to \min \quad\text{subject to}\quad \|\nabla u\|_0 \le J \tag{2.15}$$

for data $d=d^{k+1}=(I-A^*A)u^k+A^*f$. Like the Potts problem, it can be solved exactly using dynamic programming. The algorithm has been reinvented several times in different contexts; we refer to [30,31]. Let us assume that we have solutions of (2.15) for all numbers of jumps j=0,…,J and for all partial data $(d_1,\dots,d_l)$, l=1,…,r, with r smaller than the number L of data items. For j jumps and length l, we denote a corresponding solution by $u^{l,j}$. We want to compute a minimizer for data $(d_1,\dots,d_{r+1})$. We first note that all components of the zero-jump solution $u^{r+1,0}$ equal the mean of $d_{[1,r+1]}:=(d_1,\dots,d_{r+1})$. For j jumps, j=1,…,J, we use that

$$\|u^{r+1,j}-d_{[1,r+1]}\|_2^2 = \min_{l=2,\dots,r+1}\Big\{\|u^{l-1,j-1}-d_{[1,l-1]}\|_2^2 + \epsilon_{[l,r+1]}\Big\},$$

where $\epsilon_{[l,r+1]}$ is again the quadratic deviation of the data $(d_l,\dots,d_{r+1})$ from its mean value. Corresponding minimizers are given by $u^{r+1,j}=(u^{l^*-1,j-1},w_{[l^*,r+1]})$, where $l^*$ is a minimizing index of the right-hand side of the above equation and the components of $w_{[l^*,r+1]}$ are given by the mean value of $d_{[l^*,r+1]}$. A solution for the full data is obtained by successively computing $u^{l,j}$ for all j=0,…,J and l=1,…,L. Compared with the minimization algorithm for the Potts problem, this is more expensive because minimizers for all j=0,…,J have to be computed. Nonetheless, for fixed J, the method has quadratic complexity with respect to the data size L ($O(JL^2)$ operations in total). As in the case of the Potts problem with A=id, there are greedy algorithms which may perform well in practice but which do not guarantee a global optimum; see [7,8] for a discussion.
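The recursion above can be sketched in Python/NumPy as follows (function and variable names are ours; indices are 0-based and plateaus half-open). `cost[j, r]` stores the optimal error on the prefix of length r with at most j jumps, `arg[j, r]` the start of the last plateau (or −1 if the solution with j−1 jumps is kept), and ϵ is evaluated in constant time from prefix sums, which gives O(JL²) operations.

```python
import numpy as np


def jump_sparse_denoise(d, J):
    """Exact minimizer of ||u - d||^2 subject to at most J jumps (dynamic programming)."""
    d = np.asarray(d, dtype=float)
    L = d.size
    J = min(J, L - 1)
    s1 = np.concatenate(([0.0], np.cumsum(d)))
    s2 = np.concatenate(([0.0], np.cumsum(d * d)))

    def eps(a, b):
        """Squared deviation of the plateau d[a:b] from its mean."""
        seg = s1[b] - s1[a]
        return s2[b] - s2[a] - seg * seg / (b - a)

    cost = np.full((J + 1, L + 1), np.inf)
    arg = np.full((J + 1, L + 1), -1, dtype=int)
    for r in range(1, L + 1):
        cost[0, r] = eps(0, r)                    # zero jumps: mean of d[:r]
    for j in range(1, J + 1):
        for r in range(1, L + 1):
            cost[j, r] = cost[j - 1, r]           # fewer jumps are always allowed
            for l in range(1, r):                 # last plateau d[l:r]
                val = cost[j - 1, l] + eps(l, r)
                if val < cost[j, r]:
                    cost[j, r], arg[j, r] = val, l
    u, j, r = np.empty(L), J, L
    while r > 0:                                  # backtracking
        l = arg[j, r] if j > 0 else 0
        if l < 0:                                 # the (j-1)-jump solution was kept
            j -= 1
            continue
        u[l:r] = (s1[r] - s1[l]) / (r - l)
        r, j = l, j - 1
    return u, cost[J, L]


u, err = jump_sparse_denoise([0.0, 2.0, 0.0], 1)
# u = [0, 1, 1] with error 2.0
```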

Relation to iterative hard thresholding. Similar to (2.8), the J-jump sparsity problem can be transformed to the constrained sparsity problem

$$\|A'\nabla^+ v - f'\|_2^2 \to \min \quad\text{subject to}\quad \|v\|_0 \le J, \tag{2.16}$$

with A′ and f′ given by (2.9) and (2.10), respectively. Then, the iterative constrained hard thresholding algorithms of Blumensath & Davies [32,37] can be applied to (2.16). This algorithm involves a hard thresholding variant that keeps the J entries with the largest absolute values. When applying this approach to the J-jump sparsity problem, the resulting complexity of one iteration step is again quadratic, because the arguments given for hard thresholding in the section on Potts problems also apply to iterative constrained hard thresholding. Hence, the approach via iterative constrained hard thresholding has the same complexity as the iterative J-jump sparsity algorithm proposed in this paper.

(c). The Blake–Zisserman problem

Until now, we have only considered piecewise constant signals. We now also allow the signal to vary smoothly in-between the jumps; this corresponds to piecewise smooth signals as the underlying signal model. In a discrete set-up, we allow for small qth variations in-between the jumps. These small variations are reflected by the Blake–Zisserman regularizer $\sum_i \min(s^q,|u_{i+1}-u_i|^q)$ introduced in §1.

We first of all note that the Blake–Zisserman problem has a minimizer.

Theorem 2.4 —

The Blake–Zisserman weak string problem has a solution.

Theorem 2.4 has been shown in [19] for general A. Convergence results of Blake–Zisserman functionals towards Mumford–Shah functionals are given in [13].

Iterative Blake–Zisserman algorithm. We derive an iterative Blake–Zisserman minimization algorithm in analogy to the iterative algorithm derived for the Potts problem. The Blake–Zisserman functional $B_{\gamma,s}$ is given by

$$B_{\gamma,s}(u) = \gamma\sum_{i=1}^{L-1}\min\big(s^q,\,|u_{i+1}-u_i|^q\big) + \|Au-f\|_2^2. \tag{2.17}$$

In analogy to the Potts problem, we consider its surrogate functional $B^{\mathrm{surr}}_{\gamma,s}(u,v) = B_{\gamma,s}(u) - \|Au-Av\|_2^2 + \|u-v\|_2^2$. After some calculation, we see that

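$$B^{\mathrm{surr}}_{\gamma,s}(u,v) = \gamma\sum_{i=1}^{L-1}\min\big(s^q,\,|u_{i+1}-u_i|^q\big) + \big\|u - \big((I-A^*A)v + A^*f\big)\big\|_2^2 + C, \tag{2.18}$$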

where C is independent of u and can therefore be omitted. Iteratively minimizing this functional, using the previous minimizer as new input v, leads to the iterative Blake–Zisserman minimization algorithm

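$$\begin{aligned} d^{k+1} &= (I-A^*A)\,u^k + A^*f,\\ u^{k+1} &\in \operatorname{argmin}_{u\in\mathbb{R}^L}\; \gamma\sum_{i=1}^{L-1}\min\big(s^q,\,|u_{i+1}-u_i|^q\big) + \|u-d^{k+1}\|_2^2. \end{aligned} \tag{2.19}$$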

We note that the second line constitutes a Blake–Zisserman problem for A=id for which there is again a computationally feasible algorithm as explained next.

The Blake–Zisserman problem for A=id. The Blake–Zisserman functional Bidγ,s(u) for A=id is given by

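$$B^{\mathrm{id}}_{\gamma,s}(u) = \gamma\sum_{i=1}^{L-1}\min\big(s^q,\,|u_{i+1}-u_i|^q\big) + \|u-d\|_2^2. \tag{2.20}$$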

It is a basic building block of our iterative Blake–Zisserman minimization algorithm (2.19). For the particular case A=id, the dynamic programming principle explained before for the Potts problem can be applied to the Blake–Zisserman problem to obtain an algorithm computing an exact solution [10,11,13,23,45]. We use the basic dynamic program explained in the corresponding paragraph on the Potts problem, where we reinterpret (2.7): the jump penalty γ is replaced by $\gamma s^q$, the penalty that the Blake–Zisserman regularizer assigns to a difference of at least s, and the deviation $\epsilon_{[l,r]}$ of the data $(d_l,\dots,d_r)$ is now given by

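$$\epsilon_{[l,r]} = \min_{w=(w_l,\dots,w_r)}\; \gamma\sum_{i=l}^{r-1}|w_{i+1}-w_i|^q + \sum_{i=l}^{r}(w_i-d_i)^2. \tag{2.21}$$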

Then, minimizers of $B^{\mathrm{id}}_{\gamma,s}$ have the form $(u^{l^*-1},w_{[l^*,r]})$, where $w_{[l^*,r]}$ is a minimizer of (2.21). We note that, in contrast to the Potts problem, $w_{[l^*,r]}$ need not be constant. The functional in (2.21) is convex for all q≥1, which makes it accessible to standard convex optimization techniques such as the primal–dual strategy of Chambolle & Pock [50]. The special case q=2 reduces to solving a linear system of equations.
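A minimal Python/NumPy sketch for q = 2, under these assumptions: each ϵ[l,r] is obtained from the normal equations (I + γDᵀD)w = d[l,r] with D the forward-difference matrix; the dynamic program charges γs² per jump, which is exact because every difference is then counted either as a jump (cost γs²) or within the qth variation of a segment, and the cheaper option is chosen; and the outer loop implements iteration (2.19) under ∥A∥<1. Dense solves make this far slower than necessary (a tridiagonal solver would be the natural choice), and all names and the test problem are ours.

```python
import numpy as np


def segment_fit(seg, gamma):
    """eps for q = 2: min_w gamma*||D w||^2 + ||w - seg||^2, and its minimizer w."""
    n = seg.size
    D = np.diff(np.eye(n), axis=0)                    # (n-1) x n forward differences
    w = np.linalg.solve(np.eye(n) + gamma * D.T @ D, seg)
    return gamma * float(np.sum(np.diff(w) ** 2)) + float(np.sum((w - seg) ** 2)), w


def bz_denoise(d, gamma, s):
    """Exact minimizer of gamma*sum min(s^2, (u[i+1]-u[i])^2) + ||u - d||^2."""
    d = np.asarray(d, dtype=float)
    L = d.size
    jump = gamma * s ** 2                             # penalty per jump
    best = np.full(L + 1, np.inf)
    best[0] = -jump                                   # convention for the empty prefix
    start, piece = np.zeros(L + 1, dtype=int), [None] * (L + 1)
    for r in range(1, L + 1):
        for l in range(r):                            # last segment d[l:r]
            c, w = segment_fit(d[l:r], gamma)
            if best[l] + jump + c < best[r]:
                best[r], start[r], piece[r] = best[l] + jump + c, l, w
    u, r = np.empty(L), L
    while r > 0:                                      # backtrack the segments
        u[start[r]:r] = piece[r]
        r = start[r]
    return u, best[L]


def bz_energy(u, A, f, gamma, s):
    res = A @ u - f
    return gamma * float(np.sum(np.minimum(s ** 2, np.diff(u) ** 2))) + float(res @ res)


def iterative_bz(A, f, gamma, s, iters=30):
    """Iteration (2.19) with q = 2."""
    u = np.zeros(A.shape[1])
    energies = [bz_energy(u, A, f, gamma, s)]
    for _ in range(iters):
        u, _ = bz_denoise(u + A.T @ (f - A @ u), gamma, s)
        energies.append(bz_energy(u, A, f, gamma, s))
    return u, energies


L = 12
A = 0.9 * (0.5 * np.eye(L) + 0.25 * np.eye(L, k=1) + 0.25 * np.eye(L, k=-1))
truth = np.concatenate([np.linspace(0.0, 0.3, 6), np.linspace(1.5, 1.2, 6)])
f = A @ truth + 0.01 * np.random.default_rng(0).standard_normal(L)
u, energies = iterative_bz(A, f, gamma=0.5, s=0.5)
```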

Relation to thresholding algorithms. In [19], Fornasier & Ward transform Blake–Zisserman problems to sparsity type problems of the form (2.8), with the sparsity term in (2.8) replaced by $\gamma\sum_i \min(s^q,|v_i|^q)$. Then, they solve the resulting transformed problem by iterative thresholding algorithms with a suitable thresholding function. In contrast, we work directly on the non-transformed problem here.

3. Analysis

(a). Main analytic results

The Potts problem and the J-jump sparsity problem both deal with an underlying piecewise constant signal model. They are intimately connected; in particular, one can formally interpret the Potts problem as the Lagrange formulation of the J-jump sparsity problem. However, as we show next, this formal connection does not amount to an equivalence.

Theorem 3.1 —

The Potts problem (2.1) and the J-jump sparsity problem (2.11) are not equivalent. More precisely, if u* is a solution of the Potts problem (2.1) with γ>0, then it is also a solution of the J-jump sparsity problem (2.11) with parameter J=∥∇u*∥0. On the other hand, a minimizer of (2.11) need not be a minimizer of (2.1), not even for some parameter γ.

Proof. —

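Because u* is a minimizer of the Potts functional and the term γ∥∇u∥0 is constant on the set of all u with ∥∇u∥0=J, it minimizes the problem

$$\|Au-f\|_2^2 \to \min \quad\text{subject to}\quad \|\nabla u\|_0 = J.$$

We further have that $\|Au^*-f\|_2^2 \le \|Av-f\|_2^2$ for all v with ∥∇v∥0<J. This is because ∥∇v∥0<∥∇u*∥0 and u* is a minimizer of the Potts functional (2.1), so that $\gamma\|\nabla u^*\|_0 + \|Au^*-f\|_2^2 \le \gamma\|\nabla v\|_0 + \|Av-f\|_2^2$ with $\gamma\|\nabla v\|_0 < \gamma\|\nabla u^*\|_0$. Thus, u* is a solution of (2.11), which shows the first assertion.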

To show the second assertion, we give an example of a solution of (2.11) which is not a solution of (2.1). To this end, we consider f=(0,2,0) and A=id. For J=0, we get the solution $u_0=(2/3,2/3,2/3)$. It has functional value $P_\gamma(u_0)=8/3$. The possible solutions for J=1, $u_1=(0,1,1)$ and $u_1=(1,1,0)$, attain the functional value $P_\gamma(u_1)=\gamma+2$. The 2-jump solution $u_2=f$ has the functional value $P_\gamma(u_2)=2\gamma$. It follows that $P_\gamma(u_1)>P_\gamma(u_0)$ for all γ>1 and that $P_\gamma(u_1)>P_\gamma(u_2)$ for all γ<2. Hence, there is no γ>0 such that the solutions of (2.11) for J=1 are solutions of (2.1). This shows the second assertion. ▪
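The case distinction can also be checked numerically on a grid of γ values (a sanity check, not a proof). The Python/NumPy snippet below uses the three candidate fits from the proof; the grid range and resolution are arbitrary choices.

```python
import numpy as np

f = np.array([0.0, 2.0, 0.0])
best_fits = {                       # best fits with J = 0, 1, 2 jumps (A = id)
    0: np.full(3, f.mean()),        # u_0 = (2/3, 2/3, 2/3)
    1: np.array([0.0, 1.0, 1.0]),   # u_1; (1, 1, 0) is equally good
    2: f.copy(),                    # u_2 = f
}


def potts_value(u, gamma):
    return gamma * np.count_nonzero(np.diff(u)) + float(np.sum((u - f) ** 2))


# Values of gamma for which the 1-jump fit is at least as good as both alternatives.
gammas = np.linspace(0.01, 10.0, 2000)
one_jump_optimal = [g for g in gammas
                    if potts_value(best_fits[1], g) <= min(potts_value(best_fits[0], g),
                                                           potts_value(best_fits[2], g))]
print(one_jump_optimal)  # []
```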

Theorem 3.1 shows that even (theoretically) scanning the whole parameter range of γ in the Potts problem may fail to yield a single J-jump sparse solution for some J, let alone all of them.

We learned that theorem 3.1 can also be proved as follows: one rewrites (2.1) and (2.11) as the equivalent sparsity problems (2.8) and (2.16) and uses the recent results by Nikolova [51] on the relation between these sparsity problems. There, under additional assumptions, quasi-equivalence has also been shown. For A=id, this has already been addressed in, e.g. [26]. However, the proof of theorem 3.1 given above is short and instructive; moreover, it generalizes to arbitrary data terms of the form $\|Au-f\|_p^p$ with p≥1, whereas the equivalence to the sparsity problems only holds for p=2.

In the following theorems, we always assume that the measurement matrix A fulfills ∥A∥<1 with respect to the operator norm on ℓ2. This set-up can always be achieved by rescaling. More precisely, multiply the functional under consideration by λ², where λ is chosen such that ∥λA∥<1. This results in a corresponding problem with rescaled data λf and rescaled parameter λ²γ, for which our results apply (because ∥λA∥<1). We note that rescaling with λ<1 results in a smaller step size in the respective algorithm.
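A minimal sketch of this rescaling in Python/NumPy; the helper names and the margin 0.99 are illustrative choices. The operator norm on ℓ2 is the largest singular value, returned by `np.linalg.norm(A, 2)`. The check confirms that the rescaled Potts functional equals λ² times the original one, so both have the same minimizers.

```python
import numpy as np


def rescale_problem(A, f, gamma, margin=0.99):
    """Return (lam*A, lam*f, lam**2*gamma, lam) with ||lam*A||_2 = margin < 1."""
    lam = margin / np.linalg.norm(A, 2)      # spectral norm = largest singular value
    return lam * A, lam * f, lam ** 2 * gamma, lam


def potts_value(u, A, f, gamma):
    res = A @ u - f
    return gamma * np.count_nonzero(np.diff(u)) + float(res @ res)


rng = np.random.default_rng(0)
A, f = 3.0 * rng.standard_normal((6, 5)), rng.standard_normal(6)
A2, f2, gamma2, lam = rescale_problem(A, f, gamma=0.5)
u = np.repeat([1.0, -2.0], [2, 3])
# The rescaled functional is lam**2 times the original one.
print(np.isclose(potts_value(u, A2, f2, gamma2), lam ** 2 * potts_value(u, A, f, 0.5)))  # True
```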

We first analyse iterative Potts minimization for which we obtain the following convergence results.

Theorem 3.2 —

We let ∥A∥<1. Then, the iterative Potts minimization algorithm (2.5) converges to a local minimizer of the inverse Potts functional (2.1) for any starting point. The convergence rate is linear. Furthermore, we have the following relation between local minimizers, global minimizers and the fixed points of the iteration (2.5):

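$$\{\text{global minimizers of } P_\gamma\} \subset \{\text{fixed points of } (2.5)\} \subset \{\text{local minimizers of } P_\gamma\}. \tag{3.1}$$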

This result is shown in §3b.

We next consider the constrained J-jump sparsity problem (2.11). We derive a convergence result for the J-jump sparsity algorithm (2.14). Its formulation is somewhat more involved than theorem 3.2 for the Potts problem (2.1), owing to additional assumptions. These additional assumptions are usually met in noisy data situations (which are typical in practice), as discussed below.

We recall that u is J-jump sparse if it has at most J jumps, i.e. at most J indices with ui≠ui+1.

Theorem 3.3 —

We let ∥A∥<1 and assume that the unrestricted problem of minimizing ∥Au−f∥² with respect to $u\in\mathbb{R}^L$ has at most one J-jump sparse solution. (This is, for example, the case if A is injective.)

If the unrestricted problem has no J-jump sparse solution, then the iterative J-jump sparsity algorithm (2.14) converges towards a local minimizer of (2.11).

Otherwise, the iterative J-jump sparsity algorithm (2.14) produces iterates $u^k$ that either converge to a local minimizer of (2.11) with (exactly) J jumps or have a cluster point which is a global minimizer of (2.11) with (strictly) fewer than J jumps. If, in this situation, f is in the range of A and A is injective, then the iterates converge to a local minimizer (which is a global minimizer when it has (strictly) fewer than J jumps).

This result is shown in §3c. We briefly discuss the assumptions of the theorem and give some applications.

We first consider measurements from time/translation-invariant systems with full measurement data. These systems are described by a convolution operator represented by a corresponding Töplitz matrix A. If the Fourier transform of the underlying convolution kernel is non-zero, and we are given full measurement data, A is invertible and theorem 3.3 yields convergence to a local minimizer for any starting point.

Theorem 3.3 also applies to inpainting or missing data situations with noisy data. The simplest cases are missing data problems for direct measurements. Here, A equals the identity matrix with some rows missing, and the data f are a noisy version of the underlying signal with some items removed. Because f is noisy, it is typically not J-jump sparse for a smaller number of jumps J. Therefore, there is typically no J-jump sparse solution of the unrestricted problem, because all such solutions equal f on the non-missing part. Hence, theorem 3.3 may be applied to obtain convergence. Obviously, this argumentation generalizes to missing and noisy data situations for more general problems, in particular to deconvolution problems.

Theorem 3.3 can also be applied to certain missing data situations with noise-free data. Because noise-free data appear rather infrequently in practice, they are not our primary interest. However, for completeness, we give a simple example as example 3.11 in §3c. We note that, for noise-free data with few measurements, theorem 3.3 is rather restrictive. In this situation, the corresponding statement for the Potts problem, theorem 3.2, is stronger because it covers this situation without additional requirements.

Besides considering piecewise constant signal models, we also consider piecewise smooth signals. In a discrete set-up, this corresponds to small variations in-between the jumps. In this context, we introduced the iterative Blake–Zisserman minimization algorithm in §2c. Regarding its analysis, we obtain the following convergence results.

Theorem 3.4 —

For ∥A∥<1, the iterative Blake–Zisserman minimization algorithm (2.19) converges to a local minimizer of the Blake–Zisserman functional (2.17) for any starting point. Furthermore, the relation (3.1) holds true in the context of the Blake–Zisserman functionals Bγ,s of (2.17) as well.

This result is shown in §3d.

(b). Analysis of the iterative Potts minimization algorithm

We first need some well-known results for surrogate functionals of the form F(u)=γJ(u)+∥Au−f∥² [19,32,36]. Here, we first consider the instance J(u)=∥∇u∥0, counting the number of jumps of u. In §3d, we also consider the Blake–Zisserman regularizer $J(u)=\sum_i\min(s^q,|u_{i+1}-u_i|^q)$.

Lemma 3.5 —

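Consider the functionals F(u)=γJ(u)+∥Au−f∥² (where, for our purposes, J is either the Potts regularizer J(u)=∥∇u∥0 or the Blake–Zisserman regularizer $J(u)=\sum_i\min(s^q,|u_{i+1}-u_i|^q)$). We assume that the operator norm of A on ℓ2 fulfills ∥A∥<1. Then, we obtain for the associated surrogate functional $F^{\mathrm{surr}}$, given by (2.2) with J as regularizer, that

$$F(u) \le F^{\mathrm{surr}}(u,v)$$

holds for all v, and equality holds if and only if u=v. Furthermore, the iterates $u^{k+1}\in\operatorname{argmin}_u F^{\mathrm{surr}}(u,u^k)$ satisfy

$$F(u^{k+1}) \le F^{\mathrm{surr}}(u^{k+1},u^k) \le F(u^k), \tag{3.2}$$

$$\sum_{k=0}^{\infty}\|u^{k+1}-u^k\|_2^2 < \infty, \quad\text{in particular}\quad \|u^{k+1}-u^k\|_2 \to 0. \tag{3.3}$$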

For proofs of this general statement on surrogate functionals, we exemplarily refer to the above-mentioned papers [19,32,36]. The statements actually hold for general real-valued surrogate functionals and do not rely on the specific structure of the problems considered here.

We now invoke specific properties of Potts functionals. We first show that minimizers of the Potts functional Pidγ (with A=id) have a minimal jump height that depends only on the scale parameter γ and the signal length L, but not on the particular input data.

Lemma 3.6 —

Consider the Potts functional Pidγ given by (2.6) for data f, and let u* be a minimizer. Then, there is a constant c>0, independent of the minimizer u* and of the data f, such that the minimal jump height of u* fulfills

$$\min_{l:\,\nabla u^*(l)\neq 0}|\nabla u^*(l)|\ge c.\tag{3.4}$$

Proof. —

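We let

$$c=\sqrt{\gamma/L},\tag{3.5}$$

where L is the length of the underlying signal u. We now assume that $\min_{l:\,\nabla u^*(l)\neq 0}|\nabla u^*(l)|<c$, which means that the minimizer u* has a jump of height smaller than c. We construct an element u′ with a smaller Pidγ value, which contradicts u* being a minimizer. To this end, denote the (left) jump points of u* by $j_i$. By assumption, there is some jump point $j_{i_0}$ whose jump height $h_{i_0}$ is smaller than c. We let $I_1=\{j_{i_0-1}+1,\dots,j_{i_0}\}$ be the (discrete) interval corresponding to the plateau of u* to the left of $j_{i_0}$, and $I_2=\{j_{i_0}+1,\dots,j_{i_0+1}\}$ the (discrete) interval corresponding to the plateau of u* to the right of $j_{i_0}$. Here, we use the convention that $j_{i_0-1}=0$ if $j_{i_0}$ is the first jump of u*, and $j_{i_0+1}=L$ if $j_{i_0}$ is the last jump of u*. We let $m_1$, $m_2$ and m be the means of f on $I_1$, $I_2$ and $I_1\cup I_2$, respectively. We define

$$u'(l)=\begin{cases} m, & l\in I_1\cup I_2,\\ u^*(l), & \text{otherwise}.\end{cases}\tag{3.6}$$

We have by construction that u′ has at least one jump less than u*, that is,

$$\gamma\|\nabla u'\|_0\le\gamma\|\nabla u^*\|_0-\gamma.\tag{3.7}$$

Furthermore, because u* is a minimizer of Pidγ, it equals $m_1$ on $I_1$ and $m_2$ on $I_2$; in particular, $h_{i_0}=|m_1-m_2|$. Because u* and u′ differ only on $I_1\cup I_2$, we get

$$\|u'-f\|^2-\|u^*-f\|^2=l_1(m_1-m)^2+l_2(m_2-m)^2=\frac{l_1l_2}{l_1+l_2}\,h_{i_0}^2,\tag{3.8}$$

where $l_1$ is the length of $I_1$ and $l_2$ is the length of $I_2$. Combining (3.7) and (3.8), we obtain

$$P^{\mathrm{id}}_\gamma(u')\le P^{\mathrm{id}}_\gamma(u^*)-\gamma+\frac{l_1l_2}{l_1+l_2}\,h_{i_0}^2\le P^{\mathrm{id}}_\gamma(u^*)-\gamma+L\,h_{i_0}^2<P^{\mathrm{id}}_\gamma(u^*).$$

The last inequality is a consequence of (3.5) and $h_{i_0}<c$. Summing up, u′ has a smaller Pidγ value than u*, which contradicts u* being a minimizer. This shows the assertion of the lemma. ▪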
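Lemma 3.6 can be observed numerically, because the Potts problem with A=id can be solved exactly. The Python sketch below uses the classical O(L²) dynamic programme over the start of the last segment (a standard technique; we do not claim that it coincides with the solver used in this paper) and reports the smallest jump height of the computed minimizer. Merging two neighbouring segments of lengths l1, l2 with height difference h changes the energy by l1l2h²/(l1+l2)−γ, so every jump of a minimizer has height at least √(γ/L). The function names are hypothetical.

```python
import math

def potts_denoise(f, gamma):
    """Exact minimizer of gamma * ||grad u||_0 + ||u - f||^2 (the case A = id).

    Classical O(n^2) dynamic programme over the start of the last segment.
    """
    n = len(f)
    s1 = [0.0] * (n + 1)  # prefix sums of f
    s2 = [0.0] * (n + 1)  # prefix sums of f**2
    for i, x in enumerate(f):
        s1[i + 1] = s1[i] + x
        s2[i + 1] = s2[i] + x * x

    def deviation(l, r):
        # squared distance of f[l:r] to its mean
        return s2[r] - s2[l] - (s1[r] - s1[l]) ** 2 / (r - l)

    best = [-gamma] + [0.0] * n  # the first segment is not preceded by a jump
    start = [0] * (n + 1)
    for r in range(1, n + 1):
        best[r], start[r] = min(
            (best[l] + gamma + deviation(l, r), l) for l in range(r)
        )
    u = [0.0] * n
    r = n
    while r > 0:  # backtrack; each segment takes the mean of f
        l = start[r]
        u[l:r] = [(s1[r] - s1[l]) / (r - l)] * (r - l)
        r = l
    return u

def min_jump_height(u):
    # smallest non-zero |u(l+1) - u(l)|; infinity if u is constant
    heights = [abs(b - a) for a, b in zip(u, u[1:]) if a != b]
    return min(heights) if heights else math.inf

if __name__ == "__main__":
    f = [0.1, -0.2, 0.0, 1.1, 0.9, 1.0, 0.2, 0.1]
    gamma = 0.5
    u = potts_denoise(f, gamma)
    print(u, min_jump_height(u) >= math.sqrt(gamma / len(f)))
```

For fixed γ and L, the reported minimal jump height stays above √(γ/L) whatever data are supplied; this data independence is what the proof of proposition 3.7 exploits.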

Proposition 3.7 —

The iteration (2.5) converges to a local minimizer of (2.1). The convergence rate is linear.

Proof. —

The proof is subdivided into three steps. We first show that the jump sets of the iterates uk get fixed after sufficiently many iterations. Using this, we proceed by showing that the algorithm (2.5) converges and conclude by showing that the limit point is a local minimizer.

(1) We start by showing that the jump sets of the $u^k$ get fixed. The iterate $u^k$ of the algorithm (2.5) is a minimizer of a Potts functional Pidγ (with A=id) given by (2.6) for data $d^k=(I-A^*A)u^{k-1}+A^*f$. The parameter γ is the same for all k. By lemma 3.6, there is a constant c>0, independent of $u^k$ and the data $d^k$, such that the minimal jump height of $u^k$ fulfills

$$\min_{l:\,\nabla u^k(l)\neq 0}|\nabla u^k(l)|\ge c\quad\text{for all } k.\tag{3.9}$$

If two consecutive members $u^k$, $u^{k-1}$ have different jump locations, then there is an index l at which one of them jumps by at least c while the other does not jump; hence, their distance in the ℓ2 sense fulfills $\|u^k-u^{k-1}\|\ge c/2$. By (3.3), we have $\|u^k-u^{k-1}\|\to 0$ as k increases, so this can happen only in the initial steps. In consequence, there is an index K such that all following members $u^k$ have the same jump set.

(2) We use this observation to show the convergence of (2.5). We consider the iterates $u^k$ with k≥K (all having the same jump set, and all jumps having height at least c) and denote the corresponding (left) jump locations by $j_i$ and the corresponding (discrete) intervals on which the iterates are constant by $I_i$. Using the notation $\mathrm{mean}_I(g)$ for the mean value of g on the interval I, the corresponding orthogonal projection reads

$$(Pg)(l)=\mathrm{mean}_{I_i}(g)\quad\text{for } l\in I_i.$$

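For all k>K, each iterate $u^k$ is a Potts minimizer with the fixed jump set and hence takes the mean of its data $d^k$ on each interval $I_i$. We can therefore write the iteration (2.5) as

$$u^k=P\big((I-A^*A)u^{k-1}+A^*f\big).\tag{3.10}$$

Because $u^{k-1}=Pu^{k-1}$ and P is an orthogonal projection, we further obtain

$$u^k=u^{k-1}+PA^*(f-Au^{k-1})=u^{k-1}+(AP)^*\big(f-APu^{k-1}\big).$$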

Hence, the iteration (3.10) may be interpreted as a Landweber iteration on the image space P(ℓ2) for the matrix AP and data f. The Landweber iteration converges at a linear rate; cf. e.g. [52]. Thus, the iteration (3.10) converges and, in turn, we obtain the convergence of (2.5) to some u*.

(3) It remains to show that u* is a local minimizer. Because u* is the limit of the iterates $u^k$, the jumps of u* also have height at least c, and the number and locations of the jumps of u* are the same as those of the $u^k$ for k≥K. We choose a vector h≠0 and consider u*+th for small |t|. Note that $|\nabla(th)(l)|\le 2|t|\,\|h\|<c$ for $|t|<c/(2\|h\|)$, so that, for such t, all jumps of u* persist in u*+th. If the jump set of h is contained in that of u* (and, in turn, in that of the $u^k$ for k≥K), then u*+th has the same jump set as u*, and it follows that Pγ(u*+th)≥Pγ(u*) for all such t. This is because u* equals the limit of the above Landweber iteration on P(ℓ2) and is thus a minimizer of N(u)=∥Au−f∥² on P(ℓ2). It remains to consider the case where the jump set of h is not contained in that of u*. Then, for $0<|t|<c/(2\|h\|)$, the jump set of the vector u*+th is a proper superset of the jump set of u*. Hence, ∥∇(u*+th)∥0≥∥∇u*∥0+1. Because N is a continuous function of u, there is a neighbourhood of u* with |N(u*)−N(u)|<γ for all u in this neighbourhood. Hence, for small enough |t|, u*+th is contained in this neighbourhood and therefore,

$$P_\gamma(u^*+th)=\gamma\|\nabla(u^*+th)\|_0+N(u^*+th)>\gamma\|\nabla u^*\|_0+\gamma+N(u^*)-\gamma=P_\gamma(u^*).$$

Together, this shows that u* is a local minimizer of the Potts functional Pγ. ▪
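To illustrate proposition 3.7, the following Python sketch runs the iteration (2.5) with an exact A=id Potts solver in the inner step, starting from A*f as in §4a. It is a sketch under assumptions, not the authors' Matlab code: data are real, so A* is the transpose; matrices are dense lists of rows; the stopping rule on the ℓ2 change and all function names are hypothetical. By (3.2), the recorded energies should be non-increasing, and `project` shows that each inner step is the orthogonal projection of its data onto its own partition, which is the form (3.10) takes once the jump set is fixed.

```python
def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def jump_points(u):
    # 0-based left jump points: indices l with u[l + 1] != u[l]
    return [l for l in range(len(u) - 1) if u[l + 1] != u[l]]

def project(g, jumps):
    # orthogonal projection P onto signals constant between the given jumps
    bounds = [0] + [j + 1 for j in jumps] + [len(g)]
    out = []
    for l, r in zip(bounds, bounds[1:]):
        out.extend([sum(g[l:r]) / (r - l)] * (r - l))
    return out

def potts_denoise(f, gamma):
    # exact minimizer of gamma * ||grad u||_0 + ||u - f||^2 (dynamic programming)
    n = len(f)
    s1, s2 = [0.0] * (n + 1), [0.0] * (n + 1)
    for i, x in enumerate(f):
        s1[i + 1], s2[i + 1] = s1[i] + x, s2[i] + x * x

    def dev(l, r):
        return s2[r] - s2[l] - (s1[r] - s1[l]) ** 2 / (r - l)

    best, start = [-gamma] + [0.0] * n, [0] * (n + 1)
    for r in range(1, n + 1):
        best[r], start[r] = min((best[l] + gamma + dev(l, r), l) for l in range(r))
    u, r = [0.0] * n, n
    while r > 0:
        l = start[r]
        u[l:r] = [(s1[r] - s1[l]) / (r - l)] * (r - l)
        r = l
    return u

def potts_energy(u, A, f, gamma):
    fit = sum((a - b) ** 2 for a, b in zip(matvec(A, u), f))
    return gamma * len(jump_points(u)) + fit

def surrogate_data(u, A, f):
    # d = (I - A^T A) u + A^T f = u + A^T (f - A u)
    residual = [fi - ai for fi, ai in zip(f, matvec(A, u))]
    return [ui + gi for ui, gi in zip(u, matvec(transpose(A), residual))]

def iterative_potts(A, f, gamma, max_iter=500, tol=1e-10):
    u = matvec(transpose(A), f)  # starting point A^* f
    energies = []
    for _ in range(max_iter):
        u_new = potts_denoise(surrogate_data(u, A, f), gamma)
        energies.append(potts_energy(u_new, A, f, gamma))
        change = sum((a - b) ** 2 for a, b in zip(u, u_new)) ** 0.5
        u = u_new
        if change < tol:
            break
    return u, energies
```

A usage sketch: with a blur matrix whose operator norm is below 1, `u, energies = iterative_potts(A, f, 0.5)` returns the final iterate together with the energy history, which can be inspected for monotonicity.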

Proposition 3.8 —

The global minimizers 𝒢 and the local minimizers ℒ of the Potts functional (2.1) and the fixed points of the Potts iteration given by (2.5) (which we here denote by ℱ) fulfill the inclusions

$$\mathcal G\subset\mathcal F\subset\mathcal L.$$

Proof. —

We first show that any global minimizer of the Potts functional (2.1) is a stationary point of the algorithm (2.5). We start the algorithm with a global minimizer u* as initialization. Then, by lemma 3.5, we have for all v with v≠u*,

$$P^{\mathrm{surr}}_\gamma(v,u^*)>P_\gamma(v)\ge P_\gamma(u^*)=P^{\mathrm{surr}}_\gamma(u^*,u^*).\tag{3.11}$$

This means that u* is the unique minimizer of v↦Psurrγ(v,u*), and hence the iterate u1 of the algorithm (2.5) equals u* when the iteration is started with u*. Thus, the global minimizer u* is a stationary point of (2.5).

It remains to show that each stationary point of (2.5) is a local minimizer of the Potts functional (2.1). This has essentially already been done in the proof of proposition 3.7: start the iteration (2.5) with a stationary point u′. Its limit equals u′ which is a local minimizer by proposition 3.7. ▪

Proof of theorem 3.2 —

The statement follows by propositions 3.7 and 3.8. ▪

(c). Analysis of the iterative constrained jump-sparsity algorithm

Here, we analyse the iterative constrained jump-sparsity algorithm (2.14). In analogy to lemma 3.5, lemma 3.9 gathers the relevant facts on constrained surrogate functionals of the form (2.12). Related assertions may be found in [32], where proofs or proof sketches are also given. For the sake of completeness, and because this case might be less well known, we give short proofs after stating the assertions.

Lemma 3.9 —

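For the surrogate functional $Q^{\mathrm{surr}}$ given by (2.12) of the constrained jump-sparsity problem (2.11), the following statements hold:

(i) the inequality $Q(u)\le Q^{\mathrm{surr}}(u,v)$ holds for all v, and equality holds if and only if u=v;

(ii) the (J-jump sparse) iterates $u^k$ of (2.14) fulfill
$$Q(u^{k+1})\le Q^{\mathrm{surr}}(u^{k+1},u^k)\le Q(u^k);\tag{3.12}$$

(iii) consecutive iterates approach each other,
$$\|u^{k+1}-u^k\|\to 0\quad\text{as } k\to\infty.\tag{3.13}$$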

Proof. —

For the first statement, note that we can rewrite the term ∥u−v∥²−∥Au−Av∥²=〈(I−A*A)(u−v),u−v〉 appearing in the definition (2.12). Because ∥A∥<1, the positive definite matrix I−A*A has its spectrum in [1−∥A∥²,1]⊂(0,1] and is in particular invertible. Hence, 〈(I−A*A)(u−v),u−v〉≥0, with equality if and only if u=v.

For the second statement, we consider the kth iterate $u^k$, which is J-jump sparse. We have $Q(u^k)=Q^{\mathrm{surr}}(u^k,u^k)$, and so a J-jump sparse minimizer $u^{k+1}$ of $Q^{\mathrm{surr}}(\cdot,u^k)$ fulfills $Q^{\mathrm{surr}}(u^{k+1},u^k)\le Q(u^k)$ (because the candidate $u^k$ is admissible). Because $Q(u^{k+1})\le Q^{\mathrm{surr}}(u^{k+1},u^k)$ by the first statement, the second statement holds true.

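For the last assertion, note that, with $C=1/(1-\|A\|^2)>0$,

$$\|u^{k+1}-u^k\|^2\le C\,\big\langle(I-A^*A)(u^{k+1}-u^k),u^{k+1}-u^k\big\rangle=C\big(Q^{\mathrm{surr}}(u^{k+1},u^k)-Q(u^{k+1})\big)\le C\big(Q(u^k)-Q(u^{k+1})\big).$$

Here, the last inequality follows from the minimizing property of $u^{k+1}$. Now, $Q(u^k)-Q(u^{k+1})\to 0$ as $k\to\infty$, because the sequence $Q(u^k)$ is monotone by (3.12) and bounded below, and thus convergent. This completes the proof. ▪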
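For the constrained problem, the inner step of (2.14) is a least-squares approximation by a signal with at most J jumps, which can be computed exactly by a dynamic programme over the number of segments (a standard technique, not necessarily the solver used for (2.15) in this paper). The Python sketch below implements this inner solver and the outer iteration with data $d^k=u^{k-1}+A^*(f-Au^{k-1})$, starting from A*f; real-valued data and hypothetical function names are assumed. By (3.12), the recorded values Q(u^k)=∥Au^k−f∥² should be non-increasing from the first iterate on, since all iterates after the starting point are J-jump sparse.

```python
import math

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def jump_sparse_denoise(f, J):
    """Least-squares approximation of f by a signal with at most J jumps (A = id)."""
    n = len(f)
    s1, s2 = [0.0] * (n + 1), [0.0] * (n + 1)
    for i, x in enumerate(f):
        s1[i + 1], s2[i + 1] = s1[i] + x, s2[i] + x * x

    def dev(l, r):
        # squared distance of f[l:r] to its mean
        return s2[r] - s2[l] - (s1[r] - s1[l]) ** 2 / (r - l)

    K = min(J + 1, n)  # maximal number of segments
    # cost[k][r]: best fit of f[:r] with exactly k segments
    cost = [[math.inf] * (n + 1) for _ in range(K + 1)]
    start = [[0] * (n + 1) for _ in range(K + 1)]
    cost[0][0] = 0.0
    for k in range(1, K + 1):
        for r in range(k, n + 1):
            cost[k][r], start[k][r] = min(
                (cost[k - 1][l] + dev(l, r), l) for l in range(k - 1, r)
            )
    k = min(range(1, K + 1), key=lambda kk: cost[kk][n])
    u, r = [0.0] * n, n
    while k > 0:  # backtrack; each segment takes the mean of f
        l = start[k][r]
        u[l:r] = [(s1[r] - s1[l]) / (r - l)] * (r - l)
        r, k = l, k - 1
    return u

def iterative_jump_sparsity(A, f, J, max_iter=500, tol=1e-10):
    """Surrogate iteration: u^k = best J-jump approximation of d^k."""
    At = transpose(A)
    u = matvec(At, f)  # starting point A^* f
    values = []  # Q(u^k) = ||A u^k - f||^2 for k >= 1
    for _ in range(max_iter):
        residual = [fi - ai for fi, ai in zip(f, matvec(A, u))]
        d = [ui + gi for ui, gi in zip(u, matvec(At, residual))]  # d^k
        u_new = jump_sparse_denoise(d, J)
        values.append(sum((a - b) ** 2 for a, b in zip(matvec(A, u_new), f)))
        change = math.sqrt(sum((a - b) ** 2 for a, b in zip(u, u_new)))
        u = u_new
        if change < tol:
            break
    return u, values
```

The inner dynamic programme costs O(JL²) operations per iteration; keeping the number of segments as an explicit index is what enforces the constraint ∥∇u∥0≤J exactly.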

We need specific properties of a minimizer of the J-jump sparsity problem (2.15) (with A=id).

Lemma 3.10 —

Consider a minimizer u* of the J-jump sparsity problem (2.15) (with A=id) for data f and assume that the Jth jump of u* has height at most ε. Then, there is a constant C independent of f such that ∥u*−f∥<Cε.

Proof. —

Enumerate the (left) jump points of u* by $j_i$ with $i=1,\dots,J$. Let $j_{i_0}$ be the jump point of u* with the smallest jump height, which by assumption is at most ε, and let $I_1$ and $I_2$ be the intervals of constancy of u* neighbouring $j_{i_0}$ to the left and right, respectively. Let furthermore $m_1$, $m_2$ and m be the means of f on $I_1$, $I_2$ and $I_1\cup I_2$. We define u′ by (3.6) and observe, as in the proof of lemma 3.6, that

$$\|u'-f\|^2\le\|u^*-f\|^2+L\varepsilon^2,\tag{3.14}$$

where L is the length of u*. By construction, u′ has at least one jump less than u*.

If ∥u′−f∥<C′ε for some constant C′>0 independent of f, then the assertion of the lemma is shown, because u′ is admissible and hence ∥u*−f∥≤∥u′−f∥<C′ε. We enumerate the jump points of u′ by $j'_i$ and let, for a given index l, the integer $d_l$ be the distance of the index l to the nearest jump location of u′ on its left. We scan u′ starting from the leftmost index l=1 and increase l until $|u'(l')-f(l')|>2^{d_{l'}}L\varepsilon$ for some l′. If there is no index l′ fulfilling this inequality, then $|u'(l)-f(l)|\le 2^{L}L\varepsilon$ for all l, so we have found a corresponding C′ and the proof is complete. Otherwise, we construct an admissible u″ with

$$\|u''-f\|^2<\|u'-f\|^2-L\varepsilon^2.\tag{3.15}$$

We attach the index l′ to the jump set of u′ and denote the resulting left and right intervals by $I_3$ (from the nearest jump point of u′ to the left of l′ up to l′) and $I_4$, respectively. We denote the means of f on $I_3$, $I_4$ and $I_3\cup I_4$ by $m_3$, $m_4$ and m′. We define u″ by

$$u''(l)=\begin{cases} m_3, & l\in I_3,\\ m_4, & l\in I_4,\\ u'(l), & \text{otherwise}.\end{cases}$$

The jump set of u″ consists of the index l′ together with the jump set of u′. So, $\|\nabla u''\|_0\le J$, and u″ is admissible. We show that u″ fulfills (3.15). By the definition of u″, we obtain

$$\|u''-f\|^2=\|u'-f\|^2-|I_3|(m_3-m')^2-|I_4|(m_4-m')^2.\tag{3.16}$$

Here, $|I_3|$ and $|I_4|$ denote the lengths of the corresponding intervals. We consider the interval $I_3$. For all indices l in $I_3$ smaller than l′, we have $|u'(l)-f(l)|\le 2^{d_l}L\varepsilon$ and, for the index l′ (which is the last in $I_3$ by construction), $|u'(l')-f(l')|>2^{d_{l'}}L\varepsilon$. Hence, $|m_3-m'|>L\varepsilon/|I_3|$, and therefore,

$$|I_3|(m_3-m')^2>\frac{L^2\varepsilon^2}{|I_3|}\ge L\varepsilon^2.$$

Plugging this into (3.16) shows (3.15). Now, we combine (3.15) and (3.14) to obtain

$$\|u''-f\|^2<\|u'-f\|^2-L\varepsilon^2\le\|u^*-f\|^2,$$

with u″ having at most J jumps. This contradicts u* being a minimizer and thus shows the assertion. ▪

We next give the proof of theorem 3.3.

Proof of theorem 3.3 —

We arrange the jump heights of each iterate $u^k$ in decreasing order and denote the Jth highest jump height by $h^k$. If $u^k$ has fewer than J jumps, we let $h^k=0$. We distinguish two cases according to whether or not $\liminf_{k\to\infty}h^k>0$.

(1) If $\liminf_{k\to\infty}h^k>0$, then there is an index K′ such that, for all k≥K′, each member $u^k$ has J jumps, each of height at least c>0, with c independent of k. By (3.13), we have $\|u^k-u^{k-1}\|\to 0$ as k increases. Hence, there is an index K such that all following members $u^k$ have the same jump set; otherwise, we would have $\|u^k-u^{k-1}\|\ge c/2$ whenever $u^k$ and $u^{k-1}$ have different jump points. Then, we may argue as in the proof of proposition 3.7 to conclude that, in this case, $u^k$ converges to a local minimizer of (2.11).

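(2) If $\liminf_{k\to\infty}h^k=0$, then there is a subsequence $k_i$ such that $h^{k_i}$ converges to 0 as i increases. This means that, given ε>0, there is an index I such that, for all i≥I, the Jth jump of $u^{k_i}$ is smaller than ε. Because $u^{k_i}$ is a minimizer of the J-jump sparsity problem (2.15) for the data $d^{k_i}$ defined by (2.14), lemma 3.10 implies that

$$\|u^{k_i}-d^{k_i}\|<C\varepsilon\quad\text{for all } i\ge I,\tag{3.17}$$

with C independent of i.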

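Rewriting the definition of $d^{k_i}$ in (2.14), we have $d^{k_i}=u^{k_i-1}+A^*(f-Au^{k_i-1})$. Hence, we may estimate

$$\|A^*(Au^{k_i}-f)\|=\big\|(u^{k_i}-d^{k_i})-(I-A^*A)(u^{k_i}-u^{k_i-1})\big\|\le\|u^{k_i}-d^{k_i}\|+\|u^{k_i}-u^{k_i-1}\|\to 0\tag{3.18}$$

as i increases. Here, the first summand converges to 0 by (3.17), and the second summand converges to 0 by (3.13).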

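Now, we decompose each $u^{k_i}$ as

$$u^{k_i}=w^{k_i}+v^{k_i}$$

with $w^{k_i}\in\ker A$ and $v^{k_i}$ in its orthogonal complement $(\ker A)^\perp$. The sequence $v^{k_i}$ is bounded because otherwise, with λ>0 denoting the smallest non-zero eigenvalue of A*A, we would have, along a subsequence,

$$\|A^*(Au^{k_i}-f)\|=\|A^*Av^{k_i}-A^*f\|\ge\lambda\|v^{k_i}\|-\|A^*f\|\to\infty.$$

This would contradict (3.18). Therefore, $v^{k_i}$ is bounded and thus contains a convergent subsequence, denoted by $v^{k_j}$. We denote the corresponding limit by v*. Using (3.18), we obtain

$$A^*(Av^*-f)=0.\tag{3.19}$$

Hence, v* is a minimizer of the unrestricted functional u↦∥Au−f∥² on $\mathbb R^L$. Then, the solution space for the unrestricted problem is $v^*+\ker A$. By (3.19) and the convergence of $v^{k_j}$,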

$$\operatorname{dist}\big(u^{k_j},\,v^*+\ker A\big)\le\|v^{k_j}-v^*\|\to 0.\tag{3.20}$$

Passing to a further subsequence $u^{k_r}$ of $u^{k_j}$, we may assume that the $u^{k_r}$ have the same jump sets. Hence, they all belong to the same subspace U of signals with this jump set, whose dimension is at most J+1. By our assumption, the unrestricted problem has at most one J-jump sparse solution, now denoted u*. This means that either $U\cap(v^*+\ker A)=\emptyset$ or $U\cap(v^*+\ker A)=\{u^*\}$. (Note that we have chosen a particular subspace U, which a priori need not contain u*.)

(2a) In the first case, $U\cap(v^*+\ker A)=\emptyset$, the two affine subspaces U and $v^*+\ker A$ of a finite-dimensional space do not intersect and thus have positive distance, which contradicts (3.20). Hence, this case cannot occur: the subsequence chosen above necessarily lies in a subspace that also contains u*, if u* exists. In particular, if there is no J-jump sparse minimizer of the unrestricted problem, situation (2) of this proof does not apply; then (1) applies, and we obtain convergence to a local minimizer.

(2b) So, it remains to consider the case $U\cap(v^*+\ker A)=\{u^*\}$. Then, by (3.20), $u^{k_r}$ converges to u* (in finite dimensions, a sequence in U whose distance to $v^*+\ker A$ tends to 0 converges to the unique intersection point), and u* is a J-jump sparse minimizer of the unrestricted problem; actually, u* has strictly fewer than J jumps. This is because the convergence of $u^{k_r}$ to u* implies that the jump set of u* is contained in the jump sets of all $u^{k_r}$ with sufficiently large r. The subsequence $u^{k_r}$ is chosen such that its jump sets are fixed, and the height $h^{k_r}$ of its smallest jump approaches 0, which implies that the limit does not have this jump.

(3) Finally, we show convergence in case (2b) when the data f lie in the image space of A and A is injective. By (2b), the subsequence $u^{k_r}$ converges to u*, which is a minimizer of the unrestricted problem with fewer than J jumps. Then, by assumption, ∥Au*−f∥=0. For a given index k, we choose the largest index $k_r\le k$ of the convergent subsequence. Then,

$$\|Au^k-f\|^2=Q(u^k)\le Q(u^{k_r})=\|Au^{k_r}-f\|^2\to\|Au^*-f\|^2=0$$

as $k\to\infty$. The first inequality is a consequence of (3.12). Hence, $u^k$ converges, because A, as an injective operator on a finite-dimensional space, is bounded below, i.e. ∥Ax∥≥m∥x∥ for some m>0, so that $\|u^k-u^*\|\le\|Au^k-f\|/m\to 0$. In particular, the limit equals that of the subsequence, which is u*. ▪
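The algebraic identity behind the estimate (3.18) is easy to get wrong by a sign. The Python sketch below evaluates both sides of $A^*(f-Au^k)=(d^k-u^k)+(I-A^*A)(u^k-u^{k-1})$, with $d^k=u^{k-1}+A^*(f-Au^{k-1})$, for arbitrary vectors. It assumes real-valued data, so that A* is the transpose; the function names are hypothetical.

```python
def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def identity_sides(A, f, u_prev, u_cur):
    """Both sides of A^T(f - A u) = (d - u) + (I - A^T A)(u - u_prev),
    where d = u_prev + A^T(f - A u_prev) is the data of the inner problem."""
    At = transpose(A)

    def gradient_step(v):
        # A^T (f - A v)
        return matvec(At, [fi - ai for fi, ai in zip(f, matvec(A, v))])

    lhs = gradient_step(u_cur)
    d = [p + g for p, g in zip(u_prev, gradient_step(u_prev))]
    step = [c - p for c, p in zip(u_cur, u_prev)]
    AtA_step = matvec(At, matvec(A, step))
    rhs = [(di - ci) + (si - ti) for di, ci, si, ti in zip(d, u_cur, step, AtA_step)]
    return lhs, rhs
```

Taking norms on the right-hand side and using ∥I−A*A∥≤1 yields the bound in (3.18).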

As announced, we conclude this part with a simple example of a particular situation in which theorem 3.3 applies to a deconvolution problem with missing data in a noise-free set-up. Although the noise-free set-up is not our primary interest (because it appears rather infrequently in practice), we include this example for completeness.

Example 3.11 —

We consider cyclic convolution on (the cyclic group consisting of) L elements, which may be described by a cyclic L×L matrix. Let b be the moving-average kernel of size 2 and B be the corresponding cyclic L×L matrix. We let m be a positive integer which divides L, and we remove every mth row of B to obtain A. We consider data f=Ag with g having n<m jumps. Let s be the number of (left) jump points of g in the set {m,2m,…}. We are interested in the corresponding J-jump sparsity problem for J≤m−1−n+s. In this case, we may apply theorem 3.3, because each element of the solution set $g+\ker A$ of the unrestricted problem has at least m−1−n+s jumps, which means that the unrestricted problem has at most one J-jump sparse solution. If J<m−1−n+s, theorem 3.3 yields convergence to a local minimizer because $g+\ker A$ has no J-jump sparse member. If J=m−1−n+s, then $g+\ker A$ has either no or precisely one J-jump sparse member. In the first case, the previous argument applies. In the second case, theorem 3.3 yields either convergence to a local minimizer or a cluster point which is a global minimizer and which has fewer than J jumps. Because the latter situation cannot occur (as discussed above), theorem 3.3 also yields convergence in this situation.
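The construction in example 3.11 is easy to reproduce. The Python sketch below assumes the convention (Bu)(l)=(u(l)+u(l−1 mod L))/2 for the cyclic moving average (0-based indices), which is our choice, and uses hypothetical helper names. It builds A by deleting every mth row and returns L/m vectors with disjoint supports that alternate in sign on blocks of m cyclically consecutive indices; under this convention, they lie in ker A. This makes plausible why elements of the solution set of the unrestricted problem carry many jumps.

```python
def moving_average_rows(L):
    # cyclic moving average of size 2 (assumed convention):
    # (B u)(l) = (u(l) + u(l - 1 mod L)) / 2, 0-based indices
    B = []
    for l in range(L):
        row = [0.0] * L
        row[l] += 0.5
        row[(l - 1) % L] += 0.5
        B.append(row)
    return B

def remove_every_mth_row(B, m):
    # drop the 1-based rows m, 2m, ..., i.e. the 0-based rows m-1, 2m-1, ...
    return [row for l, row in enumerate(B) if (l + 1) % m != 0]

def kernel_basis(L, m):
    """Vectors alternating in sign on the cyclic blocks {m*t - 1, ..., m*t + m - 2}."""
    basis = []
    for t in range(L // m):
        h = [0.0] * L
        for i in range(m):
            h[(m * t - 1 + i) % L] = (-1.0) ** i
        basis.append(h)
    return basis

if __name__ == "__main__":
    L, m = 12, 4
    A = remove_every_mth_row(moving_average_rows(L), m)
    for h in kernel_basis(L, m):
        residual = [sum(a * x for a, x in zip(row, h)) for row in A]
        print(max(abs(r) for r in residual))
```

Each kept row couples two neighbours inside one block, where the alternating signs cancel; the deleted rows are exactly those that would couple neighbouring blocks.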

(d). Analysis of the iterative Blake–Zisserman minimization algorithm

Here, we analyse the iterative Blake–Zisserman algorithm (2.19). Besides the facts on surrogate functionals gathered in lemma 3.5, we need lemma 3.12 on the minimizers of Blake–Zisserman functionals Bidγ,s (with A=id). In the context of Blake–Zisserman functionals, an index l with difference |∇u(l)|≥s may be interpreted as a jump. In this interpretation, lemma 3.12 states that minimizers either have a jump of height at least s+c at l or fulfill |∇u(l)|≤s−c; i.e. there is a gap of width 2c, with c depending only on the parameters γ, s and q (the exponent of the underlying qth variation) but not on the input data.

Lemma 3.12 —

Consider the Blake–Zisserman functional Bidγ,s given by (2.20) for data f and a corresponding (global) minimizer u*. Then, there is a constant c>0, independent of u* and the data f, such that

$$|\nabla u^*(l)|\notin(s-c,\,s+c)\quad\text{for all } l.\tag{3.21}$$

Proof. —

Lemma 3.12 can be proven directly by a rather tedious argument. However, it is also possible to base the argument on results derived in [19]. We take the latter route.

We consider a global minimizer u* of Bidγ,s. Then, v*=∇u* is a global minimizer of the functional 𝒥p defined by (22) in [19]. By [19], Theorem 5.1, the global minimizer v* is a fixed point of the iteration (36) in [19]. For any output vector v of this iteration, there is a constant c>0 which is independent of the iterate v and the data f, but dependent on the parameters γ,s, such that

$$|v(l)|\notin(s-c,\,s+c)\quad\text{for all } l.\tag{3.22}$$

This is a direct consequence of [19], Proposition 4.3. Then, (3.22) also holds for v* because it is a fixed point. In consequence, u*, which is related to v* via v*=∇u*, fulfills (3.21), which shows the assertion of the lemma. ▪

Proposition 3.13 —

The iteration (2.19) converges to a local minimizer of (2.17).

Proof. —

(1) We first show that the jump sets of the iterates $u^k$ are identical for sufficiently large k. We recall that, in the context of Blake–Zisserman functionals, an index l is a (left) jump point of u if |u(l+1)−u(l)|≥s. Each iterate $u^k$ of the iterative Blake–Zisserman minimization algorithm (2.19) is a global minimizer of a Blake–Zisserman functional Bidγ,s of the form (2.20) for certain data $d^k$. The parameters γ and s are the same for all k. By lemma 3.12, there is a constant c>0, independent of $u^k$ and the data $d^k$, such that, for each $u^k$,

$$|\nabla u^k(l)|\notin(s-c,\,s+c)\quad\text{for all } l.\tag{3.23}$$

Now, (3.3) states that $\|u^k-u^{k-1}\|\to 0$ as $k\to\infty$. Hence, for sufficiently large k, $|u^k(l)-u^{k-1}(l)|<c/2$ for all l. Therefore, $|\nabla u^k(l)-\nabla u^{k-1}(l)|<c$. Hence, if $u^{k-1}$ has a jump at l, $u^k$ does so, too. This is because

$$|\nabla u^k(l)|>|\nabla u^{k-1}(l)|-c\ge s+c-c=s,$$

which implies that $|\nabla u^k(l)|\ge s+c$ by (3.23). If $u^{k-1}$ has no jump at l, then

$$|\nabla u^k(l)|<|\nabla u^{k-1}(l)|+c\le s-c+c=s,$$

which implies that $|\nabla u^k(l)|\le s-c$ by (3.23). This shows that the jump sets of the $u^k$ get fixed for sufficiently large k.

(2) We now show the convergence of (2.19). Let K be sufficiently large such that, for all indices k≥K, the iterates $u^k$ have the same jump set. We denote the jump set by Z. When the jump set is fixed, minimizing the Blake–Zisserman functional (2.20), given by

$$B^{\mathrm{id}}_{\gamma,s}(u)=\gamma\sum_l\min\big(|\nabla u(l)|^q,s^q\big)+\|u-d\|^2,$$

is equivalent to minimizing the functional

$$u\mapsto\gamma\sum_{l\notin Z}|\nabla u(l)|^q+\|u-d\|^2.\tag{3.24}$$

This is because, with the jump set fixed, the corresponding jump costs equal the fixed number of jumps times $\gamma s^q$, regardless of what an admissible candidate u looks like. The functional in (3.24) is a convex functional of qth-variation type, or of TV type for q=1 (it appears in similar form in inpainting problems based on TV-type functionals). Replacing the Blake–Zisserman functional Bidγ,s by the convex functional (3.24) in the algorithm (2.19), we obtain a classical forward–backward splitting algorithm (cf. [41]) for the problem

$$\min_u\ F_1(u)+F_2(u),\qquad F_1(u)=\gamma\sum_{l\notin Z}|\nabla u(l)|^q,\quad F_2(u)=\|Au-f\|^2.\tag{3.25}$$

Obviously, F1+F2 has a minimizer. Furthermore, the gradient ∇F2(u)=2A*(Au−f) is Lipschitz continuous with constant 2∥A∥², and the step size 1/2 implicit in (2.19) is smaller than the admissible bound 1/∥A∥² because ∥A∥<1. Thus, we may apply, e.g. [53], Theorem 3.4 to obtain that the corresponding forward–backward splitting algorithm for F1+F2 converges to a minimizer of F1+F2. Hence, the algorithm (2.19) converges to some limit u*.

(3) We finally show that u* is a local minimizer of the Blake–Zisserman functional Bγ,s. By (1) of this proof, the iterates fulfill (3.23), which implies that the same is true for their limit u*, i.e. |∇u*(l)|∉(s−c,s+c) for all l.

We consider an arbitrary vector h with ∥h∥≤1 and the corresponding perturbation u*+th for small |t|, more precisely, for |t|<c/2. Because $|\nabla(th)(l)|\le 2|t|\,\|h\|<c$, all these candidates u*+th fulfill

$$|\nabla(u^*+th)(l)|>s\ \text{ for } l\in Z,\qquad|\nabla(u^*+th)(l)|<s\ \text{ for } l\notin Z,$$

where Z is the jump set of u*. Hence, $B_{\gamma,s}(u^*+th)=(F_1+F_2)(u^*+th)+\gamma s^q|Z|$ (with F1+F2 from (3.25)). Because u* is a minimizer of F1+F2 by part (2), we have

$$B_{\gamma,s}(u^*+th)=(F_1+F_2)(u^*+th)+\gamma s^q|Z|\ge(F_1+F_2)(u^*)+\gamma s^q|Z|=B_{\gamma,s}(u^*)$$

for all h,t with ∥h∥≤1 and |t|<c/2. This shows that u* is a local minimizer of the Blake–Zisserman functional Bγ,s. ▪
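The following Python sketch illustrates proposition 3.13 for q=2. The assumptions are ours: the Blake–Zisserman regularizer is taken as γΣ_l min(|∇u(l)|², s²), consistent with the cost γs^q per jump used in the proof above; the inner problem with A=id is solved exactly by a dynamic programme over partitions, in which each break costs γs² and each segment is smoothed by a tridiagonal solve (a standard construction for q=2, not claimed to be the solver used in this paper); data are real, so A* is the transpose; and all function names are hypothetical. By the surrogate argument of lemma 3.5, the recorded energies should not increase.

```python
import math

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def smooth_segment(d, gamma):
    """Minimize ||u - d||^2 + gamma * sum (u[i+1] - u[i])^2 on one segment.

    Solves (I + gamma * D^T D) u = d with the Thomas algorithm.
    """
    n = len(d)
    if n == 1:
        return [d[0]]
    diag = [1.0 + gamma * (1 if i in (0, n - 1) else 2) for i in range(n)]
    off = -gamma
    c, y = [0.0] * n, [0.0] * n
    c[0], y[0] = off / diag[0], d[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - off * c[i - 1]
        c[i] = off / denom
        y[i] = (d[i] - off * y[i - 1]) / denom
    u = [0.0] * n
    u[-1] = y[-1]
    for i in range(n - 2, -1, -1):
        u[i] = y[i] - c[i] * u[i + 1]
    return u

def segment_cost(d, gamma):
    # optimal value of the smoothing problem on one segment
    u = smooth_segment(d, gamma)
    fit = sum((a - b) ** 2 for a, b in zip(u, d))
    return fit + gamma * sum((b - a) ** 2 for a, b in zip(u, u[1:]))

def bz_denoise(d, gamma, s):
    """Global minimizer of gamma * sum min(|grad u|^2, s^2) + ||u - d||^2 (q = 2, A = id)."""
    n = len(d)
    best = [-gamma * s * s] + [math.inf] * n  # every break costs gamma * s^2
    start = [0] * (n + 1)
    for r in range(1, n + 1):
        for l in range(r):
            val = best[l] + gamma * s * s + segment_cost(d[l:r], gamma)
            if val < best[r]:
                best[r], start[r] = val, l
    u, r = [0.0] * n, n
    while r > 0:  # backtrack; smooth each segment separately
        l = start[r]
        u[l:r] = smooth_segment(d[l:r], gamma)
        r = l
    return u

def bz_energy(u, A, f, gamma, s):
    # B_{gamma,s}(u) with q = 2; A = None stands for the identity
    Au = u if A is None else matvec(A, u)
    penalty = sum(min((b - a) ** 2, s * s) for a, b in zip(u, u[1:]))
    return gamma * penalty + sum((a - b) ** 2 for a, b in zip(Au, f))

def iterative_bz(A, f, gamma, s, iters=100):
    At = [list(col) for col in zip(*A)]
    u = matvec(At, f)  # starting point A^* f
    energies = []
    for _ in range(iters):
        residual = [fi - ai for fi, ai in zip(f, matvec(A, u))]
        d = [ui + gi for ui, gi in zip(u, matvec(At, residual))]
        u = bz_denoise(d, gamma, s)
        energies.append(bz_energy(u, A, f, gamma, s))
    return u, energies
```

The inner solver is exact because, for a fixed set of breaks, the truncated quadratic penalty decouples into independent quadratic problems on the segments; its O(L³) cost makes it suitable for illustration only.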

Proposition 3.14 —

The global minimizers 𝒢 and the local minimizers ℒ of the Blake–Zisserman functional (2.17) are related to the fixed points of the iterative Blake–Zisserman algorithm given by (2.19) (which we here denote by ℱ) by

$$\mathcal G\subset\mathcal F\subset\mathcal L.\tag{3.26}$$

Proof. —

We start with the second inclusion and show that each stationary point u′ of (2.19) is a local minimizer of Bγ,s. To this end, we start the iteration (2.19) with the fixed point u′. Its limit equals u′, which is a local minimizer by proposition 3.13.

It remains to show the first inclusion, which means that any global minimizer of a Blake–Zisserman functional of the form (2.17) is a fixed point of the iteration (2.19). Starting the algorithm (2.19) with a global minimizer u*, the estimate (3.11), with the Potts functional Pγ replaced by the Blake–Zisserman functional Bγ,s, remains valid, and we obtain

$$B^{\mathrm{surr}}_{\gamma,s}(v,u^*)>B_{\gamma,s}(v)\ge B_{\gamma,s}(u^*)=B^{\mathrm{surr}}_{\gamma,s}(u^*,u^*)$$

for all v with v≠u*. Hence, the first iterate produced by the autonomous system (2.19) for input u* equals u*, which means that u* is a fixed point. This shows the statement. ▪

Proof of theorem 3.4 —

The assertion follows by propositions 3.13 and 3.14. ▪

4. Numerical results

We first present a relaxation strategy for the initial steps of the algorithms. This strategy improves the performance of the proposed methods in practice. Then, we employ the derived algorithms for the deconvolution of noisy signals with discontinuities. We also deal with the situation where data is missing. Finally, we consider real data: we estimate steps in the rotation of the bacterial flagellar motor.

(a). A relaxation strategy for the initial steps of the algorithms

Because we deal with non-smooth non-convex problems, the set of (local) minimizers is usually neither a singleton nor convex. Thus, the solutions produced by our iterative methods typically depend on the starting point. Without additional a priori information, we use the initial guess A*f in the experiments. However, in practice, this guess often turns out to be close to an undesired local minimum. Instead of trying to choose another starting point (without additional a priori information), we propose a relaxation strategy for the initial steps of the algorithm. This strategy addresses the empirical observation that the unrelaxed iteration tends to fix its partition too early. We note that the corresponding convergence statements remain valid, because only the initial steps of the iterations of the autonomous system are relaxed.

Let us first consider the iterative Potts minimization algorithm (2.5). We start with a small regularization parameter γk which we successively increase during the first k0 iterations until we reach the desired parameter γ. In our examples, we choose the sequence γk given by

| 4.1 |

In our experiments, the value k0=1000 turned out to be a reasonable choice. For the J-jump sparsity problem, we use the sequence

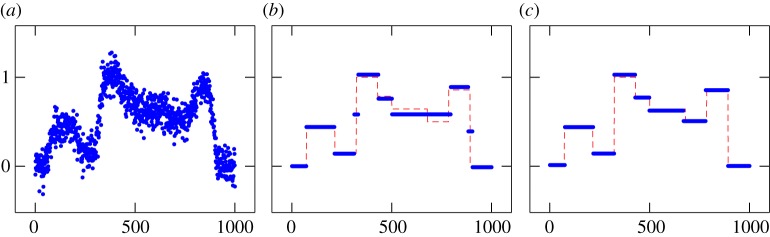

with t=20. For the Blake–Zisserman problem, we keep the s-parameter fixed and use the relaxation (4.1) for the γ-parameter. Figure 1 illustrates the practical gain of the relaxation. There, A represents the convolution with a Gaussian kernel of standard deviation 16, and the noise is zero-mean Gaussian with standard deviation σ=0.1.

Figure 1.

Iterative Potts minimization (γ=0.7) for the deconvolution of a noisy signal: effect of the relaxation strategy proposed in §4a. The runtime is 1.1 s without relaxation and 43.8 s with relaxation. (a) Blurred and noisy data. (b) Without relaxation. (c) With relaxation. (Online version in colour.)

(b). Deconvolution of signals with discontinuities

As an application, we consider deconvolution problems, which cover all linear measurement systems A that are translation- or time-invariant.

In our test cases, A is an L×L Toeplitz matrix describing a discrete convolution with a Gaussian kernel of standard deviation 16. As a common set-up, we deal with signals of length L=1000. We simulate data f by f=Ag+η, where g is the underlying true signal and η is a Gaussian random vector consisting of independent zero-mean random variables with standard deviation σ=0.1. To simulate incomplete data, we randomly select a set of 500 missing data points, which we remove from the data vector f. We adapt the matrix A accordingly by removing the corresponding rows. For all our algorithms, we employ the following stopping criterion: we stop the iterations when the term ∥u^k−u^{k+1}∥/(∥u^k∥+∥u^{k+1}∥), which measures a relative ℓ2 ‘distance’ between two consecutive iterates u^k and u^{k+1}, falls below a threshold, which we here set to 10^−6. A Matlab implementation of the algorithms developed in this work is available online.1 All experiments were conducted on a desktop computer with an Intel Xeon E5 (3.5 GHz).
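The simulation set-up and the stopping rule above can be sketched as follows. The kernel truncation at 4σ, the normalization to unit sum, the factor 0.99 that enforces ∥A∥<1, the ground truth in the demo, the seeds and all function names are assumptions for illustration, not details taken from the paper's Matlab code.

```python
import math
import random

def gaussian_toeplitz(L, sigma, scale=0.99):
    """L x L Toeplitz matrix of a discrete Gaussian convolution.

    Assumptions: kernel truncated at 4 sigma, normalized to unit sum and
    multiplied by `scale` < 1 so that ||A|| < 1.
    """
    radius = int(math.ceil(4 * sigma))
    offsets = range(-radius, radius + 1)
    weights = [math.exp(-(k * k) / (2 * sigma * sigma)) for k in offsets]
    total = sum(weights)
    kernel = {k: scale * w / total for k, w in zip(offsets, weights)}
    return [[kernel.get(i - j, 0.0) for j in range(L)] for i in range(L)]

def remove_rows(A, f, n_missing, seed=0):
    # randomly drop n_missing measurements and the corresponding rows of A
    rng = random.Random(seed)
    missing = set(rng.sample(range(len(f)), n_missing))
    keep = [i for i in range(len(f)) if i not in missing]
    return [A[i] for i in keep], [f[i] for i in keep]

def relative_change(u_old, u_new):
    # ||u^k - u^{k+1}|| / (||u^k|| + ||u^{k+1}||)
    num = math.sqrt(sum((a - b) ** 2 for a, b in zip(u_old, u_new)))
    den = math.sqrt(sum(a * a for a in u_old)) + math.sqrt(sum(b * b for b in u_new))
    return 0.0 if den == 0.0 else num / den

def should_stop(u_old, u_new, threshold=1e-6):
    return relative_change(u_old, u_new) < threshold

if __name__ == "__main__":
    L = 1000
    g = [0.0 if l < 400 else 1.0 for l in range(L)]  # hypothetical ground truth
    A = gaussian_toeplitz(L, 16.0)
    rng = random.Random(0)
    f = [sum(a * x for a, x in zip(row, g)) + rng.gauss(0.0, 0.1) for row in A]
    A_obs, f_obs = remove_rows(A, f, 500)
    print(len(A_obs), len(f_obs))  # prints: 500 500
```

With nonnegative entries and all row and column sums at most 0.99, the Schur test gives ∥A∥≤0.99, which is the kind of scaling the convergence results require.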

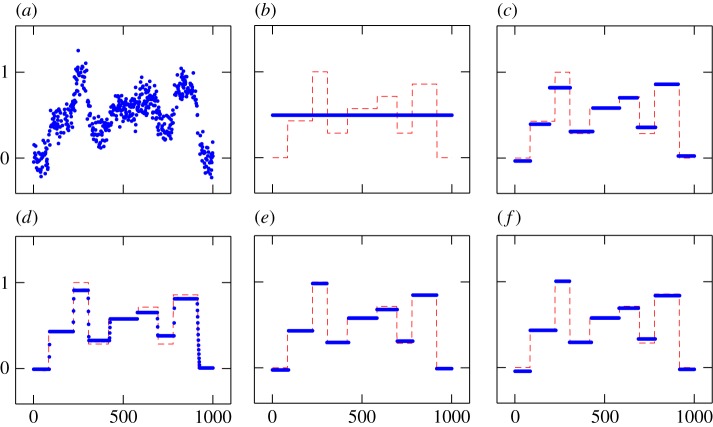

In our first experiment (figure 2), we reconstruct a piecewise constant signal from blurry and noisy data. (In the figures, we display the data points and the computed results as dots and the ground truth as a dashed line.) We compare our results to those of iterative thresholding. To this end, we use (2.8) and (2.16) to obtain corresponding separable problems. Then, we apply the iterative thresholding algorithms proposed by Blumensath & Davies [32,37] using the toolbox ‘sparsify 0.5’.2 We also compare with total variation minimization, i.e.

$$\min_u\ \lambda\|\nabla u\|_1+\|Au-f\|^2,$$

which may be seen as the state-of-the-art convex method for this task. In the experiment (figure 2), we see that total variation minimization tends to produce transitional points at the jumps and to reduce the contrast. The iterative thresholding approach to the Potts problem gets stuck at a constant result. The result of the thresholding approach to the J-jump sparsity problem is satisfactory. We observe an almost perfect reconstruction using the proposed iterative Potts and J-jump sparsity algorithms.

Figure 2.

Reconstruction of a piecewise constant signal. Total variation minimization tends to produce transitional points at the jumps and to reduce the contrast. The iterative thresholding approach to the Potts problem gets stuck at a constant result. The result of the thresholding approach to the J-jump sparsity problem is satisfactory. Iterative Potts minimization and the iterative J-jump sparsity algorithm recover the piecewise constant signal almost perfectly. (a) Blurred and noisy data. (b) Iterative thresholding algorithm for the Potts problem, γ=0.7. (c) Iterative thresholding algorithm for the J-jump sparsity problem, J=8. (d) Total variation solution, λ=1.5. (e) Iterative Potts algorithm (proposed method), γ=0.7. (f) Iterative J-jump sparsity algorithm (proposed method), J=8. (Online version in colour.)

Next, we study the influence of the model parameter γ. In figure 3, we show the results of the iterative Potts algorithm for various parameters γ. We observe that most of the segments are recovered robustly over a large range of parameters.

Figure 3.

Influence of the parameter γ on the result of the iterative Potts algorithm: for smaller values of γ, some additional jumps appear, and for larger values of γ, some jumps vanish. Despite this variation, the result is stable over a large parameter range on most segments. (a) Blurred and noisy data. (b) γ=0.25. (c) γ=0.5. (d) γ=1. (e) γ=2. (f) γ=4. (Online version in colour.)

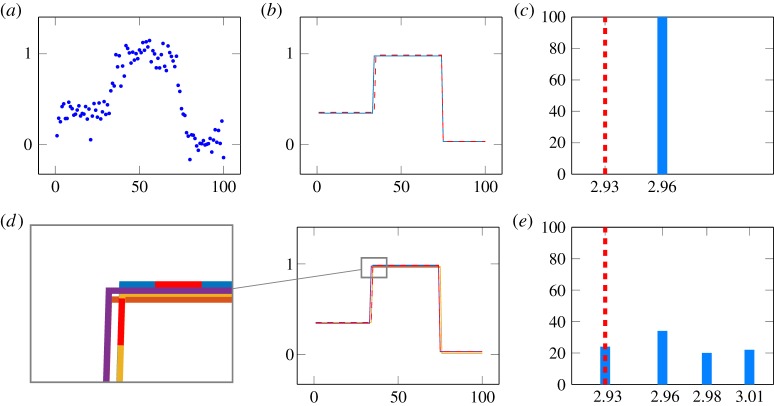

In the next experiment, we look at the influence of the starting value on the result. To this end, we start the iteration with perturbed starting values u0=A*(f+η), where η is a zero-mean Gaussian random vector. We consider a particularly simple experiment in which we can compute the global minimizer explicitly by brute force. (We used 100 data points, two jumps and a Gaussian convolution kernel of standard deviation 3.) In figure 4, we see the results of two series of the iterative Potts algorithm for 100 realizations of η, one with smaller perturbations and one with larger perturbations. For the small standard deviation τ=0.01 of η (nearby starting points), we end up in the same local minimizer, which is close to the global minimizer. The case of the large standard deviation τ=0.5 of η illustrates the dependence of the solution on the starting point; we obtain different nearby solutions, which also include the global minimizer.

Figure 4.

Influence of the starting point on the result of the iterative Potts algorithm (γ=1): for small random perturbations of the starting point, we end up in the same local minimizer for all 100 realizations. The result is close to the global minimizer, which is displayed as a dashed line (b). For large random perturbations, we obtain four different nearby results (d). The histogram shows their frequency, where the abscissa displays their functional values (e). The dashed line indicates the global optimum, which could be computed by brute force for this particularly simple example. (a) Blurred and noisy signal. (b) Result with perturbed starting point (τ=0.01). (c) Histogram of the local minimizers (τ=0.01). (d) Results with perturbed starting point (τ=0.5). (e) Histogram of the local minimizers (τ=0.5). (Online version in colour.)

Figure 5 shows the reconstruction of a piecewise smooth function. Total variation minimization produces the typical ‘staircasing’ effects. The iterative Blake–Zisserman algorithm yields a very satisfactory reconstruction.

Figure 5.

Reconstruction of a piecewise smooth signal. Total variation minimization produces so-called staircasing. The iterative Blake–Zisserman minimization reconstructs the underlying signal almost perfectly. (a) Blurred and noisy signal. (b) Total variation solution, λ=1. (c) Iterative Blake–Zisserman solution, γ=1000, s=0.04. (Online version in colour.)

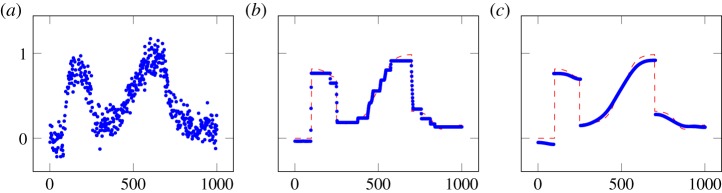

(c). Estimating steps in the rotation of the bacterial flagellar motor

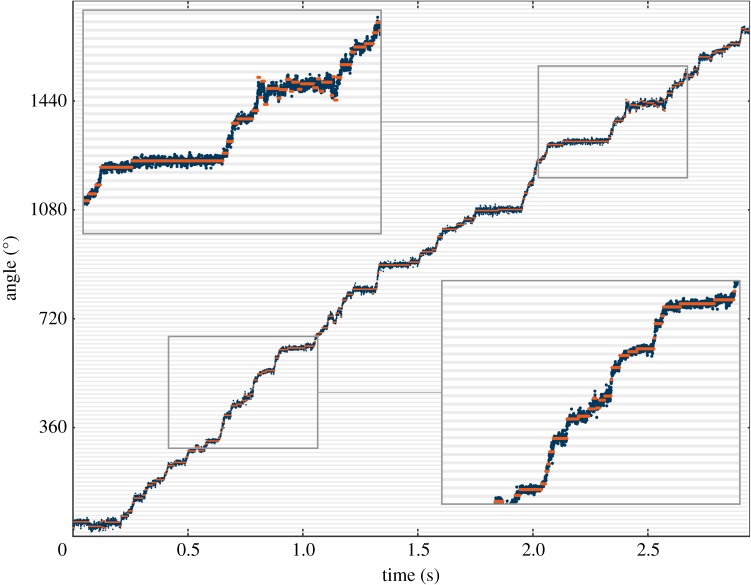

The bacterial flagellar motor is a rotary molecular machine that is embedded in the bacterial cell envelope. It propels many species of swimming bacteria [1,2]. Sowa et al. [1] observed steps in the rotation of the flagellar motor over time; on average, they found approximately 26 steps per revolution. The present dataset is a time series of the angular position of the flagellar motor. The data were acquired by back-focal-plane (BFP) interferometry at around 4000 recordings per second. The maximum number of steps that can be detected is around 200 per second [1]. This measurement set-up motivates modelling the measurements as a convolution with a zero-mean Gaussian kernel whose standard deviation σ is chosen such that the interval [−σ,σ] spans 20 data points. This leads to σ=2.5⋅10^−3 s. For this rather large dataset of 11 912 elements, we used k0=10 in the relaxation strategy (4.1). The result of the iterative Potts algorithm is shown in figure 6. The runtime for this experiment was 106.6 s. We obtain a reasonable estimate of the steps.
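The value of σ follows from the sampling rate by simple arithmetic. A worked version, using only the numbers stated above (note that 20 samples is also the ratio 4000/200 of the sampling rate to the maximal detectable step rate):

$$2\sigma=\frac{20\ \text{samples}}{4000\ \text{samples/s}}=5\cdot10^{-3}\,\text{s}\qquad\Longrightarrow\qquad\sigma=2.5\cdot10^{-3}\,\text{s}.$$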

Figure 6.

The points represent the angle of the bacterial flagellar motor over time. The grid lines are spaced (360/26)°≈13.8° apart. We obtain a reasonable estimate of the steps using the iterative Potts algorithm (solid line, γ=900). (Original data by courtesy of Y. Sowa et al. [1].) (Online version in colour.)

5. Conclusion and future research

We have considered the problem of restoring signals with discontinuities from indirect noisy measurements. For piecewise smooth signals, we have employed Blake–Zisserman functionals. We have derived an iterative Blake–Zisserman minimization algorithm. We have shown its convergence to a local minimizer. For piecewise constant signals, we have considered the Potts and the J-jump sparsity problem. We have seen that these two problems are related but not equivalent. For both problems, we have derived iterative algorithms and obtained convergence results. We have shown the practical potential of all proposed methods in numerical experiments on signal deconvolution. In particular, the proposed methods are preferable to total variation-based relaxation for jump/discontinuity/step detection.

Future research includes the extension of the proposed approaches to the multivariate set-up.

Acknowledgements

We thank Yoshiyuki Sowa et al. for providing the data set of the bacterial flagellar motor.

Footnotes

Available at http://pottslab.de.

Available at http://users.fmrib.ox.ac.uk/~tblumens/sparsify/sparsify.html.

Data accessibility

Data and code are available at http://pottslab.de/.

Author contributions

The paper was developed and written by both authors.

Funding statement

This work was supported by the German Federal Ministry for Education and Research under SysTec grant no. 0315508. A.W. acknowledges support by the Helmholtz Association within the young investigator group VH-NG-526. M.S. acknowledges support from the European Research Council under the European Union's Seventh Framework Programme (FP7/2007-2013) / ERC grant agreement no. 267439.

Conflict of interests

We declare we have no competing interests.

References

1. Sowa Y, Rowe A, Leake M, Yakushi T, Homma M, Ishijima A, Berry R. 2005. Direct observation of steps in rotation of the bacterial flagellar motor. Nature 437, 916–919. (doi:10.1038/nature04003)

2. Sowa Y, Berry R. 2008. Bacterial flagellar motor. Q. Rev. Biophys. 41, 103–132. (doi:10.1017/S0033583508004691)

3. Snijders A. et al. 2001. Assembly of microarrays for genome-wide measurement of DNA copy number by CGH. Nat. Genet. 29, 263–264. (doi:10.1038/ng754)

4. Drobyshev A, Machka C, Horsch M, Seltmann M, Liebscher V, Hrabé de Angelis M, Beckers J. 2003. Specificity assessment from fractionation experiments (SAFE): a novel method to evaluate microarray probe specificity based on hybridisation stringencies. Nucleic Acids Res. 31, e1. (doi:10.1093/nar/gng001)

5. Hupé P, Stransky N, Thiery JP, Radvanyi F, Barillot E. 2004. Analysis of array CGH data: from signal ratio to gain and loss of DNA regions. Bioinformatics 20, 3413–3422. (doi:10.1093/bioinformatics/bth418)

6. Joo C, Balci H, Ishitsuka Y, Buranachai C, Ha T. 2008. Advances in single-molecule fluorescence methods for molecular biology. Annu. Rev. Biochem. 77, 51–76. (doi:10.1146/annurev.biochem.77.070606.101543)

7. Little M, Jones N. 2011. Generalized methods and solvers for noise removal from piecewise constant signals. I. Background theory. Proc. R. Soc. A 467, 3088–3114. (doi:10.1098/rspa.2010.0671)

8. Little M, Jones N. 2011. Generalized methods and solvers for noise removal from piecewise constant signals. II. New methods. Proc. R. Soc. A 467, 3115–3140. (doi:10.1098/rspa.2010.0674)

9. Frick K, Munk A, Sieling H. 2014. Multiscale change point inference. J. R. Stat. Soc. B (Stat. Methodol.) 76, 495–580. (doi:10.1111/rssb.12047)

10. Mumford D, Shah J. 1985. Boundary detection by minimizing functionals. In IEEE Conf. on Computer Vision and Pattern Recognition, San Francisco, CA, 19–23 June, vol. 17, pp. 137–154.

11. Mumford D, Shah J. 1989. Optimal approximations by piecewise smooth functions and associated variational problems. Commun. Pure Appl. Math. 42, 577–685. (doi:10.1002/cpa.3160420503)

12. Ambrosio L, Tortorelli V. 1990. Approximation of functional depending on jumps by elliptic functional via Γ-convergence. Commun. Pure Appl. Math. 43, 999–1036. (doi:10.1002/cpa.3160430805)

13. Chambolle A. 1995. Image segmentation by variational methods: Mumford and Shah functional and the discrete approximations. SIAM J. Appl. Math. 55, 827–863. (doi:10.1137/S0036139993257132)

14. Ramlau R, Ring W. 2010. Regularization of ill-posed Mumford–Shah models with perimeter penalization. Inverse Probl. 26, 115001. (doi:10.1088/0266-5611/26/11/115001)

15. Jiang M, Maass P, Page T. 2014. Regularizing properties of the Mumford–Shah functional for imaging applications. Inverse Probl. 30, 035007. (doi:10.1088/0266-5611/30/3/035007)

16. Ambrosio L, Fusco N, Pallara D. 2000. Functions of bounded variation and free discontinuity problems. Oxford, UK: Clarendon Press.

17. De Giorgi E. 1991. Free discontinuity problems in calculus of variations. In Frontiers in pure and applied mathematics, a collection of papers dedicated to J.L. Lions on the occasion of his 60th birthday (ed. R Dautray), pp. 55–61. Amsterdam, The Netherlands: North Holland.

18. Blake A, Zisserman A. 1987. Visual reconstruction. Cambridge, MA: MIT Press.

19. Fornasier M, Ward R. 2010. Iterative thresholding meets free-discontinuity problems. Found. Comput. Math. 10, 527–567. (doi:10.1007/s10208-010-9071-3)

20. Nikolova M. 2000. Thresholding implied by truncated quadratic regularization. IEEE Trans. Signal Process. 48, 3437–3450. (doi:10.1109/78.887035)

21. Potts R. 1952. Some generalized order-disorder transformations. Math. Proc. Camb. Philos. Soc. 48, 106–109. (doi:10.1017/S0305004100027419)

22. Geman S, Geman D. 1984. Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. 6, 721–741. (doi:10.1109/TPAMI.1984.4767596)

23. Winkler G, Liebscher V. 2002. Smoothers for discontinuous signals. J. Nonparametric Stat. 14, 203–222. (doi:10.1080/10485250211388)

24. Boykov Y, Veksler O, Zabih R. 2001. Fast approximate energy minimization via graph cuts. IEEE Trans. Pattern Anal. Mach. Intell. 23, 1222–1239. (doi:10.1109/34.969114)

25. Boysen L, Bruns S, Munk A. 2009. Jump estimation in inverse regression. Electron. J. Stat. 3, 1322–1359. (doi:10.1214/08-EJS204)

26. Boysen L, Kempe A, Liebscher V, Munk A, Wittich O. 2009. Consistencies and rates of convergence of jump-penalized least squares estimators. Ann. Stat. 37, 157–183. (doi:10.1214/07-AOS558)

27. Pock T, Chambolle A, Cremers D, Bischof H. 2009. A convex relaxation approach for computing minimal partitions. In IEEE Conf. Computer Vision and Pattern Recognition, Miami, FL, 20–25 June, pp. 810–817. New York, NY: IEEE.

28. Winkler G. 2003. Image analysis, random fields and Markov chain Monte Carlo methods: a mathematical introduction. Berlin, Germany: Springer.

29. Frick S, Hohage T, Munk A. 2014. Asymptotic laws for change point estimation in inverse regression. Stat. Sin. 24, 555–575.

30. Bruce J. 1965. Optimum quantization. Technical report 429. MIT Research Laboratory of Electronics.

31. Auger I, Lawrence C. 1989. Algorithms for the optimal identification of segment neighbourhoods. Bull. Math. Biol. 51, 39–54. (doi:10.1007/BF02458835)

32. Blumensath T, Davies M. 2008. Iterative thresholding for sparse approximations. J. Fourier Anal. Appl. 14, 629–654. (doi:10.1007/s00041-008-9035-z)

33. Alexeev B, Ward R. 2010. On the complexity of Mumford–Shah-type regularization, viewed as a relaxed sparsity constraint. IEEE Trans. Image Process. 19, 2787–2789. (doi:10.1109/TIP.2010.2048969)

34. Storath M, Weinmann A, Demaret L. 2014. Jump-sparse and sparse recovery using Potts functionals. IEEE Trans. Signal Process. 62, 3654–3666. (doi:10.1109/TSP.2014.2329263)

35. Artina M, Fornasier M, Solombrino F. 2013. Linearly constrained nonsmooth and nonconvex minimization. SIAM J. Optim. 23, 1904–1937. (doi:10.1137/120869079)

36. Daubechies I, Defrise M, De Mol C. 2004. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 57, 1413–1457. (doi:10.1002/cpa.20042)

37. Blumensath T, Davies ME. 2009. Iterative hard thresholding for compressed sensing. Appl. Comput. Harmonic Anal. 27, 265–274. (doi:10.1016/j.acha.2009.04.002)

38. Candès E, Wakin M, Boyd S. 2008. Enhancing sparsity by reweighted ℓ1 minimization. J. Fourier Anal. Appl. 14, 877–905. (doi:10.1007/s00041-008-9045-x)

39. Needell D, Ward R. 2013. Near-optimal compressed sensing guarantees for total variation minimization. IEEE Trans. Image Process. 22, 3941–3949. (doi:10.1109/TIP.2013.2264681)

40. Needell D, Ward R. 2013. Stable image reconstruction using total variation minimization. SIAM J. Imaging Sci. 6, 1035–1058. (doi:10.1137/120868281)

41. Lions P-L, Mercier B. 1979. Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 16, 964–979. (doi:10.1137/0716071)

42. Wittich O, Kempe A, Winkler G, Liebscher V. 2008. Complexity penalized least squares estimators: analytical results. Math. Nachr. 281, 582–595. (doi:10.1002/mana.200510627)

43. Boysen L, Liebscher V, Munk A, Wittich O. 2007. Scale space consistency of piecewise constant least squares estimators: another look at the regressogram. Inst. Math. Stat. Lect. Notes Monogr. Ser. 55, 65–84.

44. Weinmann A, Storath M, Demaret L. 2014. The L1-Potts functional for robust jump-sparse reconstruction. SIAM J. Numer. Anal. (http://arxiv.org/abs/1207.4642)

45. Friedrich F, Kempe A, Liebscher V, Winkler G. 2008. Complexity penalized M-estimation. J. Comput. Graph. Stat. 17, 201–224. (doi:10.1198/106186008X285591)

46. Yao Y-C. 1984. Estimation of a noisy discrete-time step function: Bayes and empirical Bayes approaches. Ann. Stat. 12, 1434–1447. (doi:10.1214/aos/1176346802)

47. Jackson B. et al. 2005. An algorithm for optimal partitioning of data on an interval. IEEE Signal Process. Lett. 12, 105–108. (doi:10.1109/LSP.2001.838216)

48. Killick R, Fearnhead P, Eckley I. 2012. Optimal detection of changepoints with a linear computational cost. J. Am. Stat. Assoc. 107, 1590–1598. (doi:10.1080/01621459.2012.737745)

49. Storath M, Weinmann A. 2014. Fast partitioning of vector-valued images. SIAM J. Imaging Sci. 7, 1826–1852. (doi:10.1137/130950367)

50. Chambolle A, Pock T. 2011. A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vision 40, 120–145. (doi:10.1007/s10851-010-0251-1)

51. Nikolova M. 2014. Relationship between the optimal solutions of least squares regularized with L0-norm and constrained by k-sparsity.

52. Engl H, Hanke M, Neubauer A. 1996. Regularization of inverse problems. Berlin, Germany: Springer.

53. Combettes P, Wajs V. 2005. Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 4, 1168–1200. (doi:10.1137/050626090)
