Abstract

Contextual influences on choice are ubiquitous in ecological settings. Current evidence suggests that subjective values are normalized with respect to the distribution of potentially available rewards. However, how this context-sensitivity is realised in the brain remains unknown. To address this, here we examine functional magnetic resonance imaging (fMRI) data during performance of a gambling task where blocks comprise values drawn from one of two different, but partially overlapping, reward distributions or contexts. At the beginning of each block (when information about context is provided), hippocampus is activated and this response is enhanced when contextual influence on choice increases. In addition, response to value in ventral tegmental area/substantia nigra (VTA/SN) shows context-sensitivity, an effect enhanced with an increased contextual influence on choice. Finally, greater response in hippocampus at block start is associated with enhanced context sensitivity in VTA/SN. These findings suggest that context-sensitive choice is driven by a brain circuit involving hippocampus and dopaminergic midbrain.

The influence of context on value-based choice is well established but the neural correlates associated with this remain poorly understood. Here the authors perform fMRI in human subjects and find that the hippocampus and ventral tegmental area/substantia nigra are associated with the degree of influence of context on choice behaviour.

The influence of context on value-based choice is substantial and ubiquitous. A classic example is the framing effect, in which risky options are preferred when choices are framed in terms of losses rather than gains1. Recent evidence suggests that an influence of context on choice behaviour arises because subjective values are normalized with respect to the distribution of potentially available rewards2,3,4,5,6,7. As an everyday example, this entails that the very same dish will be evaluated as better in a bad restaurant than would be the case if evaluated in a good restaurant.

Recently, there have been attempts to identify neural mechanisms underlying choice adaptation to context-sensitive reward distributions. One candidate mechanism is suggested by the observation that, in several brain structures, activity elicited by reward adapts to context such that an outcome produces a larger response when the associated reward distribution has lower values2,6,8,9,10,11. This effect is seen in brain regions involved in processing expected value (EV) and reward prediction error (RPE), including ventral striatum12,13, ventral tegmental area/substantia nigra (VTA/SN)6,14, orbitofrontal cortex12,15,16,17,18, amygdala19 and parietal cortex20. We recently reported a direct association between context-sensitive reward adaptation in the brain, specifically in VTA/SN, and choice adaptation6. However, fundamental questions about the neural substrates of behavioural adaptation to context remain unanswered.

One unanswered question relates to which aspects of neural adaptation mediate choice adaptation. Several models have been proposed2,6,8,9,10,11,16,21,22, and two key predictions arise from them. First, neuronal representations of a reference point (for example, reflected in basal neural firing rates) might change so that a context characterized by small rewards would be linked to a lower reference point, leading to enhanced responses to reward with an associated impact on choice behaviour11,23,24. Second, choice adaptation might be mediated by a gain modulation, leading to an enhanced signal-to-noise ratio in response to reward and thereby eliciting a context effect on choice8,10,21,22,25,26. Both these (additive and multiplicative) proposals entail a normalization that renders subjective value a function of reward that is scaled relative to alternative outcomes.

Another important question regards the precise brain circuits that represent context for reward information. A candidate region is the hippocampus as there is substantial evidence that this region processes contextual information in several cognitive domains27,28,29,30. For instance, the hippocampus is implicated in contextual fear processing31,32,33,34, in remembering the spatial context in which an object has been encountered35,36 and in conditional discrimination tasks where contextual information is critical37. In addition, recent studies show the hippocampus is involved in complex aspects of reward processing38,39,40,41,42,43,44,45,46,47.

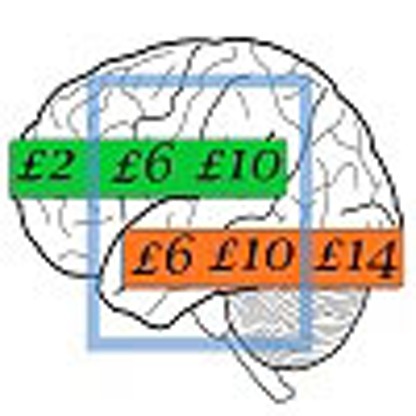

Here, using functional magnetic resonance imaging (fMRI), we investigated the neural underpinnings of choice adaptation to context-specific reward distributions. Participants were presented with a monetary reward, varying trial-by-trial, and were asked to choose between half the amount for sure and a gamble associated with an equal probability of obtaining either the full amount or zero (Fig. 1a). In this way, the two options had equivalent EV. Trials were arranged in short blocks (five trials each), each associated with one of two subtly different gambling contexts involving specific, but partially overlapping, distributions of EV. In a high-value context, possible EVs were £3, £5 and £7, and in a low-value context they were £1, £3 and £5. At the beginning of each block, a panel delivered information about the context, by showing the average trial amount; that is, £6 corresponding to £3 EV, and £10 corresponding to £5 EV for the low- and high-value context, respectively. We predicted this information would elicit activity in regions representing context-sensitive reward distributions, in particular the hippocampus, and that the magnitude of responses would correlate with the degree of contextual adaptation inferred from choice behaviour.

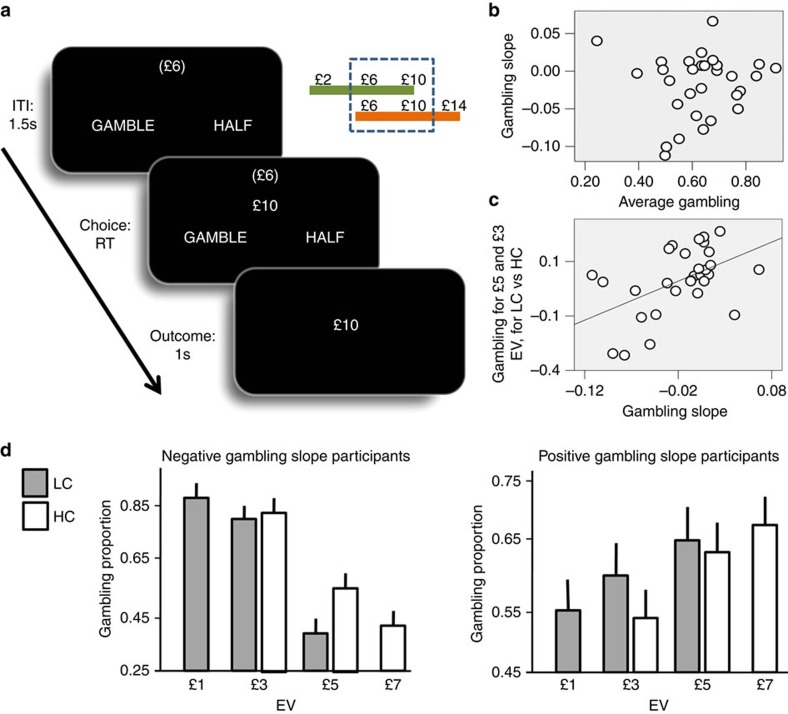

Figure 1. Behavioural results.

(a) Experimental paradigm: on every trial, participants were presented with a monetary gain amount (£10 in the example) in the centre of the screen. They had to choose between receiving half of it (£5 in the example) for sure or selecting a 50:50 gamble associated with either the full amount or a zero outcome (hence the options always had equivalent EV). After an option was selected (by pressing one of two buttons on a keyboard: left for the gamble, right for the safe option), the outcome appeared for 1 s. During a 1.5 s inter-trial interval, a monetary amount was visible at the top of the screen (in brackets) that indicated the average monetary amount associated with the current block. A low-value context was associated with £2, £6 and £10 amounts (corresponding to £1, £3 and £5 EV, respectively), and a high-value context with £6, £10 and £14 amounts (corresponding to £3, £5 and £7 EV, respectively). Contexts alternated pseudo-randomly every 5 trials. (b) Relationship between individual average gambling proportion (x-axis) and the beta weight (labelled as gambling slope; y-axis) of the logistic regression of choice behaviour with EV as predictor (r(30)=0.06, P=0.74, non-significant). (c) Relationship between the gambling slope (x-axis) and the difference in gambling proportion for £3 and £5 choices (common to both contexts) comparing the low-value context (LC) and the high-value context (HC) (y-axis; r(30)=0.5, P=0.005). (d) Gambling proportion plotted separately for participants with negative (n=16; on the left) and positive (n=14; on the right) gambling slope parameter, for different EVs and contexts. Error bars represent standard errors.
Considering choices common to both contexts (that is, £3 and £5), it is evident that participants who risked more with decreasing EVs (that is, with a negative gambling slope) gambled more when equivalent choices were smaller compared with the context; whereas participants who risked more with increasing EVs (that is, with a positive gambling slope) gambled more when equivalent choices were larger compared with the context.

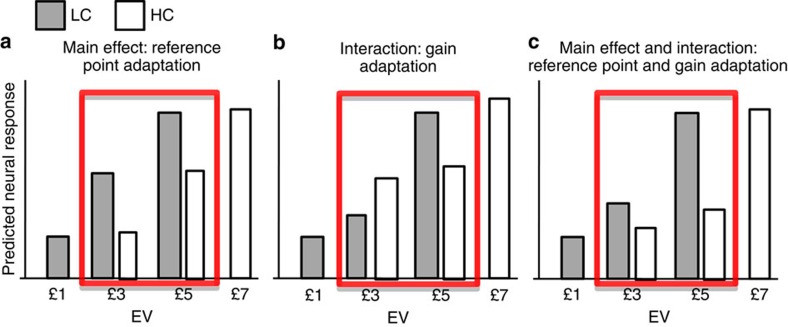

We also exploited the presence of choices common to both contexts (that is, associated with £3 and £5 choices in both contexts) to probe the link between VTA/SN and choice adaptation, by comparing neural responses to identical choices in a low- and high-value context. An increased activation to these choices in a low-value context (and a correlation of this increase with the degree of choice adaptation) would suggest that a modulation in reference point underlies choice adaptation (that is, an additive normalization). Conversely, an increased ‘difference’ in VTA/SN activation between £5 and £3 choices in the low- compared with high-value context (and a correlation of this effect with the degree of choice adaptation) would suggest that an enhanced signal-to-noise ratio in value signalling (as implied by adaptation of neural gain) underlies choice adaptation (that is, a multiplicative normalization). Formally speaking, in terms of experimental design, the reference point (subtractive normalization) hypothesis predicts a main effect of context, while the gain modulation (divisive normalization) hypothesis predicts an interaction between choice (£5 versus £3) and context (low versus high). Importantly, both of these (orthogonal) effects would constitute evidence for contextual normalization of subjective value above and beyond evidence for standard EV theory, implicit in the main effect of choice (£5 versus £3).

Consistent with our predictions, we observed that, at the beginning of each block (when information about context is provided), hippocampus is activated and this response is enhanced when contextual influence on choice increases. When examining choices common to both contexts, we found that response to value in VTA/SN shows context-sensitivity consistent with adaptive gain control, an effect enhanced with an increased contextual influence on choice. Finally, we show that greater response in hippocampus at block start is associated with enhanced context sensitivity in VTA/SN. These findings suggest that context-sensitive choice is driven by a brain circuit involving hippocampus and VTA/SN.

Results

Behaviour

Across participants (n=30), average gambling exceeded 50% (mean=63%; s.d.=14%; t(29)=24.62, P<0.001; two-tailed P<0.05 was used as the significance criterion for behavioural tests). Such overall risk-seeking behaviour is consistent with evidence from studies where, similar to our task, small monetary payoffs were used48. Given the fixed relationship between the gamble and the certain gain, the only independent measure varying trial-by-trial was the EV, which was equal for both options (sure and gamble options) on each trial. We assessed the impact of this variable in a logistic regression model of gambling probability, finding that participants gambled more with lower EVs (t-test on the slope parameter of the logistic regression: t(29)=−2.30, P=0.03). There was no correlation between the individual effect of EV (that is, the slope parameter of the logistic regression model) and the average gambling percentage (Fig. 1b; r(30)=0.06, P=0.74). The latter result replicates previous findings6,7 and supports the idea of a differentiation between an average gambling propensity and a preference to gamble with large or small EV as determinants of risky choice.
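The slope analysis described above can be illustrated with a minimal sketch. The fitting software actually used is not stated in this section, so the Newton-Raphson routine, the function name `fit_logistic` and the toy choice data below are assumptions made purely for illustration.

```python
import math

def fit_logistic(x, y, n_iter=25):
    """Fit P(y=1) = sigmoid(b0 + b1 * x) by Newton-Raphson; returns (b0, b1)."""
    b0, b1 = 0.0, 0.0
    for _ in range(n_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p             # gradient w.r.t. intercept
            g1 += (yi - p) * xi      # gradient w.r.t. slope
            h00 += w                 # entries of X'WX
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        if det == 0.0:
            break
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1

# Hypothetical participant: 10 trials at each EV, gambling 8, 6 and 4 times.
ev = [1] * 10 + [3] * 10 + [5] * 10
gambled = [1] * 8 + [0] * 2 + [1] * 6 + [0] * 4 + [1] * 4 + [0] * 6

intercept, slope = fit_logistic(ev, gambled)
print(f"gambling slope = {slope:.3f}")  # negative: more gambling at lower EV
```

A negative slope corresponds to a participant who gambles more with lower EVs; the group-level test in the text is a t-test on these per-participant slopes.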

Using a similar paradigm6,7, we showed a context effect consistent with the idea that the subjective value of a reward is smaller in the high- compared with low-value context. However, in previous studies, the context changed rarely (about every 10 min) rendering it unclear whether an effect of context emerges only after extensive learning. In the present experiment, we were able to resolve this ambiguity by exploiting a task design where we used short blocks that allowed contexts to alternate quickly (every 30 s).

Consistent with value normalization to context, across participants, we observed a positive correlation between the differential gambling percentage for EVs common to both contexts (that is, the gambling percentage in low-value minus high-value context for £3 and £5), and the effect of EV on gambling percentage (that is, the slope parameter estimated in a logistic regression; Fig. 1c; r(30)=0.50, P=0.005). Similar to our previous studies6,7, this finding shows the direction of a contextual influence depends on a subject-specific propensity to gamble more with large or small rewards (Fig. 1d). In other words, participants who risked more with increasing EVs gambled more when equivalent choices were larger compared with the context, whereas participants who risked more with decreasing EVs gambled more when equivalent choices were smaller compared with the context. This is consistent with the notion that the subjective value of a reward is smaller in the high- compared with low-value context, and indicates that such contextual effects emerge even without extensive training.

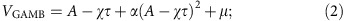

To better characterize the mechanisms underlying choice behaviour and to quantify the level of influence exerted by context on value normalization, we fit a mean-variance return model that computed subjective values consistent with individual choices. If the reward associated with the safe option was A, then the value of the safe option was:

$$V_{\mathrm{CERT}} = A - \chi\tau$$

where χ encodes the low- (χ=0) or high-value context (χ=1), and the context parameter τ implements (subtractive) normalization of the reward amount associated with the high-value context. This formulation implies that the mean and variance of the gamble are A−χτ and (A−χτ)², respectively, so that the value of the gamble is:

$$V_{\mathrm{GAMB}} = A - \chi\tau + \alpha\,(A - \chi\tau)^{2} + \mu$$

where α is a value-function parameter which determines whether (α>0) or not (α<0) reward variance is attractive, and μ represents a gambling bias parameter. According to this model, the probability of choosing the gamble is given by a softmax choice rule:

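$$P(\mathrm{gamble}) = \sigma\left(V_{\mathrm{GAMB}} - V_{\mathrm{CERT}}\right) = \frac{1}{1 + \exp\left(-\alpha\,(A - \chi\tau)^{2} - \mu\right)}$$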
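As a minimal sketch of the choice rule just described, the function below computes the gambling probability from the two option values. It assumes a two-option softmax with no separate temperature parameter (the text names only α, μ and τ); the function name and the example parameter values are hypothetical.

```python
import math

def gamble_probability(amount, context, alpha, mu, tau):
    """P(gamble) when the sure option pays `amount` (the gamble pays 2*amount or 0).

    context: 0 for the low-value context, 1 for the high-value context.
    """
    a = amount - context * tau         # subtractively normalized reward
    v_cert = a                         # value of the sure option
    v_gamb = a + alpha * a ** 2 + mu   # mean + alpha * variance + gambling bias
    return 1.0 / (1.0 + math.exp(-(v_gamb - v_cert)))

# Hypothetical participant who dislikes variance (alpha < 0) and adapts to context (tau > 0).
p_low = gamble_probability(3, 0, alpha=-0.05, mu=1.0, tau=1.0)
p_high = gamble_probability(3, 1, alpha=-0.05, mu=1.0, tau=1.0)
print(p_low, p_high)
```

With α<0 and τ>0, the same £3 choice is gambled on more often in the high-value context, which matches the behavioural pattern reported for participants with a negative gambling slope.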

We used the Bayesian Information Criterion (BIC; summed across participants) to compare this model with simpler models, where one or two parameters were set to zero: model comparison favoured the full model (model with α, μ and τ, BIC=19,070; model with α and μ, BIC=19,427; model with α, BIC=22,866; model with μ, BIC=22,237).
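To make the comparison concrete, the sketch below states the standard BIC formula and ranks the summed BIC values reported above. The helper name `bic` is an assumption, as is the convention that `n_obs` is the number of trials entering each participant's likelihood; only the four BIC values are taken from the text.

```python
import math

def bic(log_likelihood, n_params, n_obs):
    """Standard BIC: k * ln(n) - 2 * ln(L). Lower values indicate a better model."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood

# Summed BIC values as reported in the text.
reported_bic = {
    "alpha, mu, tau": 19070,
    "alpha, mu": 19427,
    "alpha": 22866,
    "mu": 22237,
}

best = min(reported_bic, key=reported_bic.get)
delta = {name: value - reported_bic[best] for name, value in reported_bic.items()}
print(best, delta)
```

The full model has the lowest summed BIC; dropping the context parameter τ raises it by 357.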

The value-function parameter α captures a propensity to gamble as a function of reward variance, which in our design corresponds to choice EV. Therefore, we expect this parameter to be correlated with (although not equivalent to) the effect of choice EV on gambling percentage (that is, the effect of EV on gambling percentage as indexed by the slope of a logistic regression), a prediction confirmed by data (r(30)=0.91, P<0.001). This ensures that the value-function parameter α has construct validity in relation to (logistic regression) indices of risk preference. An explicit generative model (instead of a logistic regression model) elucidates the computations underlying choice, can be applied to all choices under risk (and not, like the logistic regression model, only when EV is equivalent across options as in our task), and allows estimating the context parameter τ, which is the key variable in our formulation.

To assess whether our model can explain the main behavioural findings, we used the model and subject-specific parameter estimates to generate simulated data and performed the behavioural analyses on the simulated data. Consistent with real data, the full model replicated the lack of correlation between average gambling and the effect of EV on gambling (that is, the slope of the logistic regression) (r(30)=0.083, P=0.66), while a correlation emerged when data were simulated using a model without the gambling bias parameter μ (r(30)=0.95, P<0.001). Again consistent with empirical data, the full model replicated the correlation between the effect of EV on gambling and the difference across contexts in gambling for choices common to both contexts (r(30)=0.54, P=0.002); a result not obtained using a model without the value-function parameter α (r(30)=0.14, P=0.45) or without the context parameter τ (r(30)=−0.02, P=0.90).

To examine robustness of model parameters, we estimated new parameters from data simulated with the parameters inferred from real data. Parameters estimated from real data were highly correlated with parameters estimated from simulated data (α, r(30)=0.95, P<0.001; μ, r(30)=0.92, P<0.001; τ, r(30)=0.89, P<0.001). Moreover, the average gambling proportion in simulated data was highly correlated with the average gambling proportion in real data (r(30)=0.92, P<0.001), and the effect of EV on gambling percentage (that is, the slope parameter of the logistic regression model) in real data was highly correlated with the same effect in simulated data (r(30)=0.88, P<0.001). Collectively, these analyses validate the generative model and show that it can account for the main empirical results.
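The simulate-then-refit logic of this robustness check can be sketched as follows. This is an illustration under stated assumptions, not the authors' procedure: the optimiser is not described in this section, so a coarse grid search stands in for it, and the trial counts, grids and generating parameters are all hypothetical.

```python
import itertools
import math
import random

def p_gamble(amount, context, alpha, mu, tau):
    """Gambling probability under the subtractive mean-variance model."""
    a = amount - context * tau
    return 1.0 / (1.0 + math.exp(-(alpha * a * a + mu)))

def simulate(trials, params, rng):
    """Draw one binary choice (1 = gamble) per trial."""
    return [int(rng.random() < p_gamble(a, c, *params)) for a, c in trials]

def log_likelihood(trials, choices, params):
    total = 0.0
    for (a, c), y in zip(trials, choices):
        p = p_gamble(a, c, *params)
        total += math.log(p if y else 1.0 - p)
    return total

# Sure amounts (EVs) per context: low = 1, 3, 5; high = 3, 5, 7; 40 repeats each.
trials = ([(ev, 0) for ev in (1, 3, 5)] + [(ev, 1) for ev in (3, 5, 7)]) * 40

# Hypothetical parameter grids (alpha, mu, tau).
alphas = [i / 20 for i in range(-4, 5)]
mus = [i / 2 for i in range(-4, 5)]
taus = [i / 2 for i in range(0, 7)]

true_params = (alphas[2], mus[6], taus[2])  # (-0.1, 1.0, 1.0), chosen from the grid
choices = simulate(trials, true_params, random.Random(0))

# Maximum-likelihood estimate over the grid.
recovered = max(
    itertools.product(alphas, mus, taus),
    key=lambda params: log_likelihood(trials, choices, params),
)
print("generating:", true_params, "recovered:", recovered)
```

Repeating this for each participant's fitted parameters and correlating generating with recovered values gives the kind of recovery check reported in the text.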

The generative model allowed us to estimate the degree of context sensitivity as captured by the context parameter τ, so that the relationship between this parameter and neural responses could be investigated. The effect on choice of varying the context parameter is illustrated in Supplementary Fig. 1. As expected, we found that the context parameter τ was positive across participants (t(29)=2.7, P=0.01), indicating that subjective values were normalized so that rewards were afforded less subjective value in the high-value context and more in the low-value context. The context parameter τ was uncorrelated with other measures (average gambling: r(30)=−0.060, P=0.750; value-function parameter α, r(30)=0.150, P=0.430; gambling bias parameter μ, r(30)=0.120, P=0.528), ensuring that its relationship with neural responses (reported below) is not confounded by other behavioural factors.

Normalization in the model is subtractive. We compared this model with one in which normalization was divisive, where the value of the sure option is V_CERT=A/(1+χτ) and the value of the gamble is V_GAMB=A/(1+χτ)+α(A/(1+χτ))²+μ. The divisive normalization version of the model fits less well than the subtractive normalization version (BIC=19,079 and BIC=19,070, respectively). The context parameters in the two models were highly correlated (r(30)=0.87, P<0.001). To ascertain that the neural results presented below in relation to the parameter τ were not due to the particular normalization used in the behavioural analysis, we re-ran all the neural analyses using the context parameter extracted from the divisive normalization model, and obtained similar results.
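The two normalization schemes differ only in how the reward amount is rescaled before it enters the value functions. The sketch below makes that explicit; the function names and parameter values are hypothetical, and the two-option softmax without a temperature parameter is an assumption.

```python
import math

def subtractive(amount, context, tau):
    """Normalized reward A - chi * tau."""
    return amount - context * tau

def divisive(amount, context, tau):
    """Normalized reward A / (1 + chi * tau)."""
    return amount / (1.0 + context * tau)

def gamble_probability(amount, context, alpha, mu, tau, normalize):
    a = normalize(amount, context, tau)
    # V_GAMB - V_CERT = alpha * a^2 + mu under both schemes
    return 1.0 / (1.0 + math.exp(-(alpha * a ** 2 + mu)))

# Hypothetical parameters; in the low-value context (chi = 0) the schemes coincide.
for scheme in (subtractive, divisive):
    low = gamble_probability(3, 0, -0.05, 1.0, 1.0, scheme)
    high = gamble_probability(3, 1, -0.05, 1.0, 1.0, scheme)
    print(scheme.__name__, round(low, 3), round(high, 3))
```

Both schemes shrink the effective reward in the high-value context when τ>0, which may help explain why the fitted context parameters of the two models are highly correlated.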

Imaging

Our principal goal was to identify the neural correlates of contextual choice adaptation. To do that, we estimated a general linear model (GLM) including a stick function regressor at option presentation separately for each specific EV (£1, £3 and £5 for the low-value context and £3, £5 and £7 for the high-value context) in addition to a stick function regressor at the first trial of blocks. Our ensuing statistical parametric mapping (SPM) analyses focused on regions of interest (ROIs), namely VTA/SN, ventral striatum and hippocampus. For the latter structure, our focus was on the posterior portion, which has been shown to be particularly linked with context processing27,28,31,32. Significance statistics for the ROIs were small-volume corrected (SVC) with P<0.05 family-wise error (see the ‘Methods’ section for details).

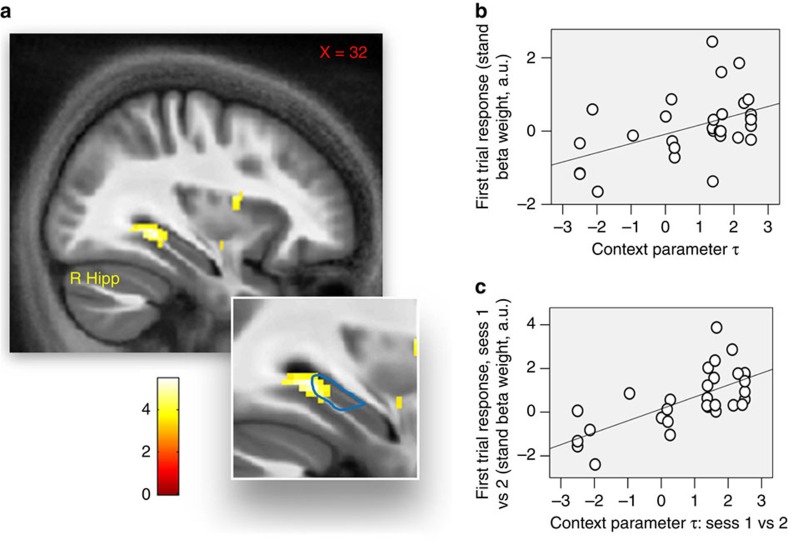

We first tested for brain regions responding to contextual reward information. We reasoned that these regions should be activated following the first trial of each block, when information about context was provided. Hence, we contrasted the regressor associated with the first trial against baseline and found a significant response in right posterior hippocampus (Fig. 2a; 32, −37, −12; Z=4.02, P=0.003 SVC; Montreal Neurological Institute coordinates were used) but not VTA/SN or ventral striatum (P>0.05 SVC). If this signal was linked with processing contextual reward information, one would predict an association between this signal and the impact of context on choice. We tested this both across and within participants. Consistent with our prediction, across individuals we found a positive correlation between the context parameter τ (reporting the influence exerted by context on choice behaviour) and the response induced by contextual cues (at the start of each block) in the right posterior hippocampus (Fig. 2b; 32, −34, −8; Z=3.19, P=0.038 SVC).

Figure 2. Brain response at the first trial of a block.

(a) Brain activation in the right hippocampus (Hipp) at first trials of blocks (32, −37, −12; Z=4.02, P=0.003 SVC). Significance threshold of P<0.005 is used in the figure for display purposes. The faint blue line represents our ROI relative to posterior hippocampus. (b) Relationship between the individual context parameter τ (reporting, for each participant, the degree of contextual adaptation during the task) and the beta weight relative to first trials of blocks in right hippocampus (32, −34, −8; Z=3.19, P=0.038 SVC). Data are plotted for the peak-activation voxel (plot is for display purposes only and no further analyses were performed on these data). (c) Relationship between (i) the difference in the individual context parameter τ, when comparing the first and second session of the task and (ii) the beta weight relative to first trials of blocks in right hippocampus, when comparing the first and second session of the task (32, −29, −7; Z=3.28, P=0.030 SVC). Data are plotted for the peak-activation voxel (plot is for display purposes only and no further analyses were performed on these data).

When examining variability within single participants, we predicted an increased effect during task sessions characterized by enhanced contextual influence on choice. We tested this by fitting, for each participant, the computational model of behaviour separately for the first and second task session. The two estimates of the context parameter τ were correlated across subjects (r(30)=0.38, P=0.01) and there was no systematic difference between the first and second session (t(29)=1.28, P=0.21). We considered the difference between the context parameter in the first and second session and investigated the relationship between this difference and neural response to contextual cues. A significant positive correlation was evident in right posterior hippocampus (Fig. 2c; 32, −29, −7; Z=3.28, P=0.030 SVC). This analysis shows a within-subjects relationship between responses in hippocampus and the context effect on choice behaviour. Specifically, task sessions associated with increased hippocampal activity for contextual cues were also associated with enhanced contextual adaptation in choice behaviour.

We next investigated context-sensitive (value-related) neural responses and their link with choice adaptation by focusing on the time of option presentation. At this time, activity in VTA/SN and ventral striatum has been shown to correlate with the average EV of options (or with the value of the chosen option)49. However, important questions on the role of context remain unanswered. First, it is unclear whether the signal at option presentation adapts to reward distribution expected in a given context. One hypothesis is that adaptation is slow because it depends on an average reward representation, which only changes with extensive experience23. Alternatively, the response might adapt immediately to the context, as observed with the presentation of single cues and outcomes13,14. Experiments that have manipulated reward context have generally used long blocks (that is, in the order of several minutes)6, leaving this issue open. Second, it is unclear whether adaptation can be explained by a change in neural reference point (subtractive normalization) or gain (divisive normalization)8,10,21,22,25,26. Third, it is yet to be established whether these putative forms of context-sensitive normalization are related to choice adaptation to context.

To address these questions, we tested two key hypotheses by analysing the response to £3 and £5, choices that were common to both contexts (Fig. 3). A reference point shift predicts increased activation for (common) choices in the low- minus high-value context (a main effect of context). Context-dependent divisive normalization (gain modulation) predicts a larger difference in responses between £5 and £3 choices in the low- than in the high-value context (an interaction effect; note that a main effect of high- versus low-value choice would identify regions encoding value per se).
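The two predictions map onto two orthogonal contrasts over the four common-choice responses. The sketch below computes both from a set of beta weights; the function name, the averaging convention for the main effect and the toy beta values are assumptions for illustration and are not taken from the data.

```python
def context_contrasts(betas):
    """betas maps (context, ev) to a response, context in {'low', 'high'}, ev in {3, 5}.

    Returns (main effect of context, choice-by-context interaction).
    """
    main_effect = (
        (betas[("low", 3)] + betas[("low", 5)]) / 2
        - (betas[("high", 3)] + betas[("high", 5)]) / 2
    )
    interaction = (
        (betas[("low", 5)] - betas[("low", 3)])
        - (betas[("high", 5)] - betas[("high", 3)])
    )
    return main_effect, interaction

# Toy pattern 1: reference point shift (response = EV minus the context's mean EV).
reference_shift = {("low", 3): 0, ("low", 5): 2, ("high", 3): -2, ("high", 5): 0}

# Toy pattern 2: gain change (steeper value coding in the low-value context).
gain_change = {("low", 3): -2, ("low", 5): 2, ("high", 3): -1, ("high", 5): 1}

print(context_contrasts(reference_shift))  # main effect only
print(context_contrasts(gain_change))      # interaction only
```

In the first pattern only the main effect is non-zero, and in the second only the interaction is, which is why the two hypotheses can be tested separately.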

Figure 3. Predicted neural response at option presentation in the two contexts for different EVs, as postulated by different hypotheses.

(a) According to the hypothesis of a neural reference point that reflects the average contextual EV, a lower reference point is predicted in the low-value context (LC; in grey) compared with the high-value context (HC; in white), leading to larger responses for £3 and £5 EV (highlighted in red) in the former context. Note that the difference in brain activity between £5 and £3 choices is equivalent in the low- and high-value context. (b) According to the hypothesis that the neural gain increases in low-value contexts, the difference in activation between £5 and £3 choices is larger in the low- compared with the high-value context. Note that the overall activity when comparing EVs common to both contexts is not necessarily different, as exemplified here. (c) Several models of non-linear normalization predict, in the low-value context, both a larger response for EVs common to both contexts and an increased difference in activity between £5 and £3 when comparing the two contexts. These predictions are shown here.

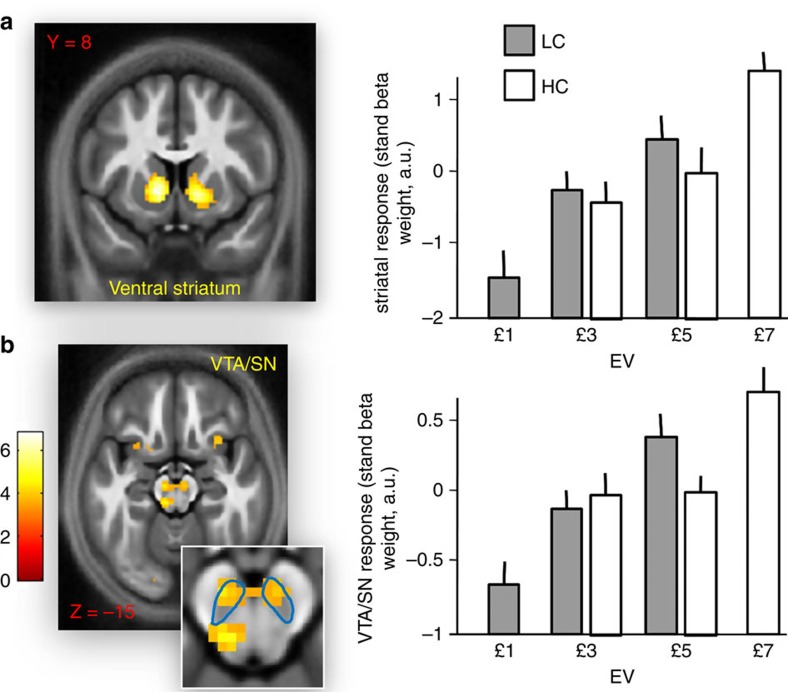

We first identified areas responding to increasing EV levels, comparing responses at option presentation to the largest EV choice (that is, £7 in the high-value context) with the lowest EV choice (that is, £1 in the low-value context). Increased activity was observed in bilateral ventral striatum (Fig. 4a; left: −10, 8, −2; Z=5.11, P<0.001 SVC; right: 12, 13, 0; Z=5.21, P<0.001 SVC) and VTA/SN (Fig. 4b; −8, −17, −15; Z=3.80, P=0.005 SVC). To ensure that the further analyses (reported below) focused on voxels sensitive to EV, activations were masked by a contrast comparing £7 and £1 EV choices, using a P<0.005 uncorrected threshold. For completeness, we also analysed the ventromedial prefrontal cortex, another region involved in processing reward information50. Several reports (including our previous study6) indicate that, at option presentation, activity in this region reflects the subjective value of the chosen minus the unchosen option and not the average EV of the options50. As predicted, no voxel in ventromedial prefrontal cortex (defined as a 10 mm sphere ROI centred on prior coordinates49: 2, 46, −8) showed an effect for this contrast (even using P<0.05 uncorrected) and consequently this region was not considered further.

Figure 4. Brain response to EV at option presentation.

(a) On the left, response in ventral striatum at option presentation for choices associated with £7 EV compared with choices associated with £1 EV (left: −10, 8, −2; Z=5.11, P<0.001 SVC; right: 12, 13, 0; Z=5.21, P<0.001 SVC). Significance threshold of P<0.005 is used in the figure for display purposes. On the right, beta weights (adjusted to each participant's mean) for the choices associated with the different EVs in the low-value context (LC; in grey) and high-value context (HC; in white). Data are shown for the peak-activation voxel from the £7 minus £1 EV contrast. Error lines represent s.e.m. (b) On the left, response in VTA/SN at option presentation for choices associated with £7 EV compared with choices associated with £1 EV (−8, −17, −15; Z=3.80, P=0.005 SVC). The faint blue line represents our ROI relative to VTA/SN. On the right, beta weights (adjusted to each participant's mean) for the choices associated with the different EVs and contexts. Data are shown for the peak-activation voxel from the £7 minus £1 EV contrast.

When testing for a main effect of context (subtractive normalization), an increase in the low-value context was seen in bilateral ventral striatum, although only as a trend on the right side (left: −3, 10, −10; Z=3.07, P=0.046 SVC; right: 4, 10, −7; Z=2.87, P=0.073 SVC). Data from VTA/SN were less clear, with a main effect of context in VTA/SN showing only as a weak trend (−5, −19, −12; Z=2.49, P=0.095 SVC; P=0.006 uncorrected). However, an interaction was evident in VTA/SN, indicating that the difference in activity between choices associated with £5 minus choices associated with £3 was larger in the low- compared with the high-value context (Fig. 5a; −3, −24, −22; Z=3.23, P=0.036 SVC). No interaction was detected in ventral striatum (P>0.05 SVC).

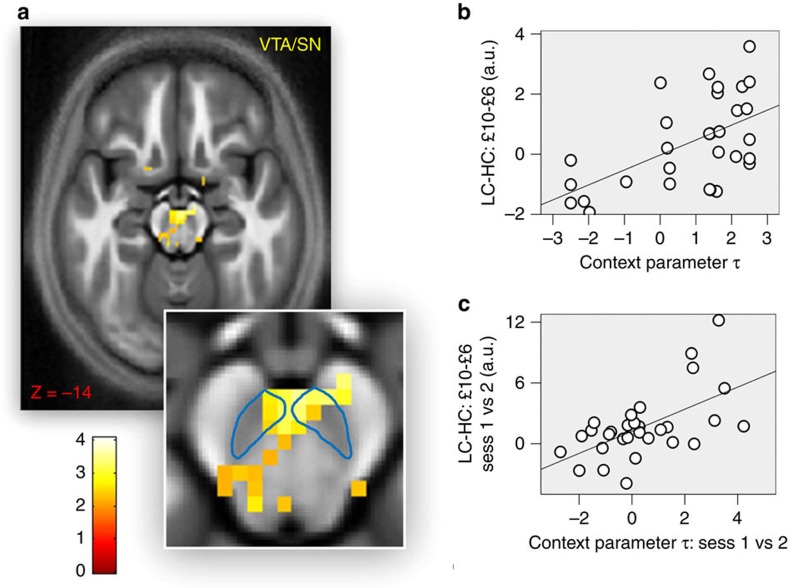

Figure 5. The impact of context on activity in VTA/SN.

(a) Brain activation at option presentation in VTA/SN for choices associated with £5 EV minus choices associated with £3 EV when comparing low- and high-value context (−3, −24, −22; Z=3.23, P=0.036 SVC). Significance threshold of P<0.005 is used in the figure for display purposes. The faint blue line represents our ROI relative to VTA/SN. (b) Relationship between the individual context parameter τ (reporting, for each participant, the degree of contextual adaptation during the task) and the neural contrast related to choices associated with £5 minus choices associated with £3 when comparing low- and high-value context in VTA/SN (−1, −22, −20; Z=3.22, P=0.037 SVC). Data are plotted for the peak-activation voxel (plot is for display purposes only and no further analyses were performed on these data). (c) Relationship between (i) the difference in the individual context parameter τ when comparing the first and second session of the task and (ii) the contrast related to choices associated with £5 minus choices associated with £3 when comparing low- and high-value context in VTA/SN (2, −22, −15; Z=3.39, P=0.018 SVC). Data are plotted for the peak-activation voxel (plot is for display purposes only and no further analyses were performed on these data).

We also investigated, at both the between- and within-participants level, whether the context-sensitive effects were related to choice adaptation. We found no evidence in any ROI for a relationship between the context parameter τ (reporting the influence exerted by context on choice behaviour) and a contrast comparing £5 and £3 choices in the low- minus high-value context. Conversely, across individuals we found a positive correlation between the context parameter τ and the interaction effect in VTA/SN (Fig. 5b; −1, −22, −20; Z=3.22, P=0.037 SVC). In other words, the change in the differential activation for £5 minus £3 choices across contexts was more pronounced in participants exhibiting a greater contextual influence on choice. As above, we also considered the difference between the context parameter τ in the first and second session and investigated the relationship between this difference and the neural interaction effect for the first minus second session. A significant correlation was seen in VTA/SN (Fig. 5c; 2, −22, −15; Z=3.39, P=0.018 SVC).

Overall, these data establish a rapid context-sensitive normalization of subjective value in ventral striatum, consistent with a reference point shift (but not with adaptive gain control), independent of choice adaptation. The data also show a rapid contextual adaptation in VTA/SN consistent with adaptive gain control, with weaker evidence for a reference point shift in this region. Moreover, the adaptive gain control effect in VTA/SN was correlated with choice adaptation.

An intriguing possibility is that the hippocampal encoding of contextual cues mediates a context-sensitive adaptation in VTA/SN, and subsequent choice adaptation. This implies a modulatory effect, such that adaptation in VTA/SN is enhanced when the hippocampal response to initial trials of blocks (associated with contextual cues) is greater. To test this we performed a psychophysiological interaction (PPI) analysis51 at the subject level. In PPI analyses, the interaction between a psychological factor and a physiological response is used to predict observed activity elsewhere in the brain. Here, we extracted the individual contrast coefficients reflecting the hippocampal response during initial trials (at the peak-activation voxel). This physiological response was then used to predict the adaptation (that is, interaction comparing low- versus high-value context for £5 minus £3 choices) in VTA/SN. We found a significant PPI in the VTA/SN (7, −14, −12; Z=3.68, P=0.005 SVC). This result is consistent with an hypothesis that (initial responses in) the hippocampus mediates context-sensitive adaptation in the VTA/SN. In other words, participants with increased hippocampal response to contextual cues also exhibited enhanced VTA/SN adaptation. In a subsidiary (within-subjects) analysis, we extracted the individual contrast coefficients reflecting the hippocampal response during initial trials for first versus second task session. This physiological response was then used to predict the difference in adaptation across sessions (that is, the difference across sessions for the interaction comparing low- versus high-value context for £5 minus £3 choices) in VTA/SN. The interaction effect in VTA/SN was again significant (−4, −21, −15; Z=3.41, P=0.008 SVC). This result suggests that sessions with increased hippocampal response to contextual cues were also characterized by enhanced VTA/SN adaptation.
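The between-participants logic of this analysis can be sketched as an ordinary least-squares regression in which each participant's hippocampal contrast coefficient predicts the same participant's VTA/SN adaptation contrast. This is a simplified single-voxel stand-in, not the voxel-wise, small-volume-corrected analysis reported above; the function name and its inputs are hypothetical.

```python
import numpy as np


def between_subject_regression(predictor, outcome):
    """OLS of outcome on a mean-centred predictor plus an intercept.

    predictor: one value per participant (e.g. hippocampal contrast coefficient).
    outcome:   one value per participant (e.g. VTA/SN adaptation contrast).
    Returns (slope, t_statistic) for the predictor.
    """
    x = np.asarray(predictor, dtype=float)
    y = np.asarray(outcome, dtype=float)
    design = np.column_stack([np.ones_like(x), x - x.mean()])
    beta, _, _, _ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ beta
    dof = len(y) - design.shape[1]          # residual degrees of freedom
    sigma2 = residuals @ residuals / dof    # residual variance estimate
    cov = sigma2 * np.linalg.inv(design.T @ design)
    t_stat = beta[1] / np.sqrt(cov[1, 1])
    return float(beta[1]), float(t_stat)
```

A positive slope with a large t-statistic would correspond to the pattern described in the text, namely greater VTA/SN adaptation in participants with a greater hippocampal response to contextual cues.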

Overall these findings suggest an enhanced neural adaptation in VTA/SN when the hippocampus responds more to contextual cues. This supports an hypothesis that hippocampus is involved in mediating context-sensitive evaluation by controlling response adaptation in VTA/SN, with a subsequent effect on choice adaptation.

Discussion

Recent evidence suggests contextual effects on choice behaviour are explained by subjective values adapting to the context in which rewards are evaluated, whereby they are increased with a reduction in potentially available rewards2,3,4,5,6,7. Although this effect is relevant in many real-life conditions, its neural underpinnings are largely unknown. Our findings illuminate this context-sensitive evaluation by showing that the hippocampus plays a key role in representing information about reward context, and that its response to contextual information is tightly coupled with the degree of adaptation in choice behaviour.

Across many domains, it is well-established that posterior hippocampus is a key structure in processing contextual cues. For instance, this region is strongly implicated in processing contextual fear31,32,33,34, in remembering the spatial context in which an object has been encountered35,36, and in conditional discrimination tasks where contextual information is crucial37. We build on this evidence, extending the scope of hippocampus to include processing information about reward context as well as highlighting a tight coupling between these contextual influences and choice behaviour. Although fMRI data do not allow causal interpretations, the correlation between neural and behavioural effects (both within and between participants) suggests an hypothesis that responses in this region might endow choice behaviour with context sensitivity.

Recent models of choice behaviour view subjective values as inherently context-dependent, and this is supported by numerous empirical findings2,3,4,5,6,7,11,21,22. This notion is also implicit in models where planning is conceived as (active) inference52,53,54, where subjective values become preferences or ‘prior beliefs' (about outcomes) that are necessarily normalized, so that they sum to one in any particular context. These models propose that the subjective value of an outcome depends on its relative preference compared with other potential outcomes. In turn, the distribution of potential outcomes is determined by the statistics of the environment, which depend strongly on context. This idea can explain several empirical phenomena including contextual adaptation, the concave shape of the utility function and inter-temporal choice preferences5.

Our findings are consistent with the idea that hippocampus is crucial for representing a reward context, which in turn influences a computation of subjective values. It has been proposed that subjective values depend on comparing the value of a target outcome against the value of potential outcomes sampled from memory5. Within this framework, hippocampal recruitment might facilitate a sampling of potential outcomes associated with a given context43. An alternative possibility is that neural activity in this region represents the sufficient statistics of the context per se. These statistics might be used to estimate subjective values, a process which might engage Bayesian approximate inference in the form of free-energy minimization53,54,55.

One of the most important findings from rat studies is that hippocampal neurons are activated when animals occupy specific spatial positions56,57. The same neuron can be activated for a location in one spatial context and for another location in another context. However, these neurons tend to respond in corresponding locations in different contexts58. For instance, a neuron associated with the centre of an experimental chamber is likely to respond to the centre of a second experimental chamber. It has been hypothesized that this form of neuronal coding might also be deployed in non-spatial tasks59. We can speculate that this may also extend to contextual reward processing. One way this could be realised is by different hippocampal cells being preferentially activated by specific instances within a reward context. In addition, similar to reports in the spatial domain58, this representation might be normalized so that neurons respond to relative values of rewards within the distribution, and hence to different EVs in different contexts, provided their relative subjective value is equivalent. Note, this form of value coding is different from that observed in regions classically linked to value representation, such as ventral striatum and VTA/SN6,13,14,60,61,62,63. In these regions, neuronal activity correlates with EV (and RPE). Our findings raise a possibility that hippocampal neurons might encode contextual information that is necessary for, or dependent on, a context-sensitive encoding of subjective value.

Recent studies have highlighted a role for hippocampus in (spatial and non-spatial) planning tasks, where value computation is involved, suggesting this structure might be fundamental in representing state-outcome contingencies that are the building blocks of goal-directed or prospective (as opposed to habitual and retrospective) choice38,39,40,41,42,43,44,45,46,47. This implies that computations more directly related to EV are performed somewhere else in the brain, in structures like basal ganglia, VTA/SN and prefrontal cortex. Our findings raise the possibility that, at least in some cases—for instance, when the context changes rapidly—the hippocampus might play a more direct role in reward processing, and specifically in the contextualization of value.

We note that several studies have shown responses consistent with adaptive coding in ventral striatum12,13, VTA/SN6,7,8,9,10,11,12,13,14, orbitofrontal cortex12,15,16,17,18, amygdala19 and parietal cortex20. For example, in a recent experiment12, participants chose between variable delayed payment options across two conditions, where the delay spanned either a narrow or wide range. Activation in ventral striatum was consistent with predictions of range adaptation12. Here, we extend these findings by showing a link between neural adaptation and choice adaptation, suggesting that the former might mediate the latter6.

Consistent with previous reports6, our data indicate that an influence of context on choice behaviour is mediated via VTA/SN neural adaptation at choice presentation. Moreover, in line with previous findings6, we saw no link between choice and neural adaptation in ventral striatum, further supporting an hypothesis of distinct roles for this structure and VTA/SN in contextual adaptation. Note that the link between VTA/SN and choice adaptation is particularly strong, given both that participants with enhanced choice adaptation exhibited greater interactions between choice and context (that is, neural adaptation) and that task sessions with enhanced choice adaptation were characterized by increased neural adaptation. Our data demonstrate that neural adaptation at option presentation can also emerge when a context changes quickly, rendering it unlikely that this process is driven by a slow accumulation of experience with reward over time23. One possibility is that a reward distribution is learnt in association with a context and that this representation is activated when a particular context is presented, and is reflected in activation in VTA/SN and in choice behaviour. More generally, our data support a proposal that normalization processes in the brain might represent a canonical form of neural computation encompassing different cognitive functions, from vision to value-guided choice25,26.

In our previous study6, the use of long blocks did not allow us to assess whether VTA/SN adaptation can be explained by a shift in reference point and/or by adaptive gain control. The former hypothesis suggests that, for choices associated with the same EV, responses would increase in a low-value context compared with a high-value context, since the low-value context would be characterized by a smaller reference point. However, our data only marginally support a reference point normalization in VTA/SN (as the corresponding effect emerged only as a weak trend), and they show no relationship between a reference point adaptation and choice adaptation. Conversely, VTA/SN adaptation demonstrated an increased difference between choices associated with £5 and £3 when comparing neural responses in the low- and high-value context, an effect related to choice adaptation.

Several theoretical perspectives suggest that context should induce divisive normalization in both value-related brain regions and choice behaviour2,8,10,21,22,25,26. These models are only partially supported by our data, which show that divisive normalization in VTA/SN is linked with an adaptation in choice, but highlight a subtractive—and not divisive—normalization in choice behaviour. This suggests that divisive normalization in VTA/SN may mediate subtractive normalization in choice. However, further theoretical and empirical research is needed to fully understand the link between divisive normalization in VTA/SN and subtractive normalization in choice, and to clarify whether, and how, other aspects of VTA/SN adaptation are involved.
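To make the distinction concrete, one simple (assumed) way to write the two forms of normalization uses the mean EV of the context, μ_c, as the normalizing quantity. The functional forms below are illustrative choices, not the model fitted in this study; only the shared EVs (£3 and £5) and the context means (£3 for the low-value and £5 for the high-value context) come from the task.

```latex
\begin{align*}
\text{Subtractive:}\quad
  & V_{\mathrm{sub}}(x \mid c) = x - \mu_c,
  & V_{\mathrm{sub}}(5 \mid c) - V_{\mathrm{sub}}(3 \mid c) &= 2
    \quad \text{in both contexts},\\[4pt]
\text{Divisive:}\quad
  & V_{\mathrm{div}}(x \mid c) = \frac{x}{\mu_c},
  & V_{\mathrm{div}}(5 \mid c) - V_{\mathrm{div}}(3 \mid c) &= \frac{2}{\mu_c}
    = \begin{cases}
        2/3 & \text{low-value context } (\mu_c = 3),\\
        2/5 & \text{high-value context } (\mu_c = 5).
      \end{cases}
\end{align*}
```

Under these assumed forms, a subtractive scheme shifts all values by the same amount and leaves the £5 minus £3 difference unchanged across contexts, whereas a divisive scheme makes that difference larger in the low-value context.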

VTA/SN is the main dopaminergic hub in the brain, and substantial evidence supports a central role of dopamine in motivation and adaptive behaviour23,64,65. The functions of dopamine are the subject of ongoing debates and one recent proposal has suggested it might be crucial in representing the precision of policies, a concept closely related to neural gain, in the context of incentive value53. The observed gain adaptation in VTA/SN linked to choice behaviour seen in our data supports this view, consistent with the idea that this region regulates the incentive value of rewards based on the contextual information, via neural gain control.

An intriguing hypothesis is that the hippocampal response to contextual cues is involved in setting a context by influencing a response adaptation in VTA/SN, and in turn mediating an impact on subsequent choice behaviour. At least three questions arise from this hypothesis. Is there a relationship among contextual effects in hippocampus, VTA/SN and choice behaviour? Do contextual effects in hippocampus precede effects in VTA/SN? Do experimental manipulations of hippocampal response have an impact on contextual effects in VTA/SN response and choice? Our design allowed us to investigate the first two questions. In relation to the first question, we provide evidence of a relationship between contextual effects in hippocampus and choice, between contextual effects in VTA/SN and choice, and between contextual effects in hippocampus and VTA/SN. In relation to the second question, we found that the context effect in the hippocampus precedes adaptation in VTA/SN, since the former occurs when contextual cues are presented and the latter manifests at option presentation. However, the third question remains open and is likely to require the use of techniques where hippocampal activation can be manipulated directly (for example, through optogenetic interventions).

In conclusion, we provide evidence suggesting that the hippocampus and VTA/SN represent information about the prevailing reward context, and that their responses are associated with the degree of influence of context on choice behaviour. Our results highlight the importance of context in choice and propose a link with its neural substrate. Understanding the cognitive and neural mechanisms of contextual influences is crucial for clarifying the deep nature of choice and for explaining important ecological phenomena (for example, in economics) and psychopathologies (for example, pathological gambling and drug abuse).

Methods

Participants

Thirty healthy right-handed adults (17 females and 13 males, aged 20–40 years, mean age 27 years) participated in the experiment. All participants had normal or corrected-to-normal vision. None had a history of head injury or a diagnosis of any neurological or psychiatric condition, and none was currently on medication affecting the central nervous system. The study was approved by the University College London Research Ethics Committee. All participants provided written informed consent and were paid for participating.

Experimental paradigm and procedure

During the MRI scan, participants performed a computer-based decision-making task lasting ∼40 min (Fig. 1a). On each trial, a monetary amount (referred to as the trial amount), which changed from trial to trial, was presented in the centre of the screen and participants had to choose whether to accept half of it for sure (pressing a right button) or select a gamble (pressing a left button). The possible outcomes of the gamble were always zero and the full monetary amount, each with equal probability. Therefore, on every trial the certain option and the gamble had the same EV.

The task was organized in short blocks, each comprising five trials. Each block was associated with one of two contexts that determined the possible EVs associated with the block. These EVs were £1, £3 and £5 for the low-value context, and £3, £5 and £7 for the high-value context. Contexts were indicated by the corresponding average trial amount, displayed in brackets on the top of the screen throughout the block, namely £6 and £10 (corresponding to £3 and £5 EV) for the low- and high-value context, respectively. To maximize attention to this contextual cue, the task was made as simple as possible by fixing the buttons used for making choices (that is, the right and left buttons were always used to select the safe option and the gamble, respectively).
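The block structure and the equality of EVs across the two options can be sketched as follows. The EVs per context, the five trials per block and the cue values come from the description above; the function names, the dictionary layout and the uniform sampling of EVs within a block are assumptions for illustration, since the text states only that trial amounts were pseudo-randomized.

```python
import random

# EVs (in GBP) available in each context, as described in the text.
CONTEXT_EVS = {"low": [1, 3, 5], "high": [3, 5, 7]}
TRIALS_PER_BLOCK = 5


def make_block(context, rng):
    """Build one block of trials; uniform sampling of EVs is an assumption."""
    trials = []
    for _ in range(TRIALS_PER_BLOCK):
        ev = rng.choice(CONTEXT_EVS[context])
        amount = 2 * ev  # trial amount shown in the centre of the screen
        trials.append({
            "context": context,
            "amount": amount,
            "sure_option": amount / 2,       # accept half of the amount for sure
            "gamble_outcomes": (0, amount),  # each outcome with probability 0.5
        })
    return trials


def expected_values(trial):
    """Return (EV of the sure option, EV of the 50/50 gamble)."""
    low, high = trial["gamble_outcomes"]
    return trial["sure_option"], 0.5 * low + 0.5 * high


def context_cue(context):
    """Average trial amount displayed as the contextual cue."""
    evs = CONTEXT_EVS[context]
    return 2 * sum(evs) / len(evs)
```

For example, `context_cue("low")` and `context_cue("high")` give the £6 and £10 cues, and `expected_values` returns two equal numbers for every trial.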

Before a new block started, the message ‘New set' appeared for 2 s during the inter-block interval, followed by the context (average trial amount) shown for 2 s. Next, the trial amount of the first trial was displayed, followed immediately after a response by the outcome of the choice, shown for 1 s. The block average amount remained on the screen during an inter-trial interval lasting 1.5 s. The order of blocks, trial amounts and outcomes was pseudo-randomized. Participants had 3 s to make their choices; otherwise the statement ‘too late' appeared and they received an outcome of zero. At the end of the experiment, one outcome was randomly selected among those received and added to an initial participation payment of £17.

Participants were tested at the Wellcome Trust Centre for Neuroimaging at University College London. Before scanning, they were fully instructed about the task rules and payment method (that is, they were told that only one outcome would be selected for payment), and practiced for up to 20 unpaid trials. Inside the scanner, participants performed the task in two separate sessions, followed by a 12 min structural scan. After scanning, participants were debriefed and informed about their total remuneration.

fMRI scanning and analysis

The task was programmed using the Cogent toolbox (Wellcome Trust Centre for Neuroimaging) in Matlab. Visual stimuli were back projected onto a translucent screen positioned behind the bore of the magnet and viewed via an angled mirror. Blood oxygenation level dependent contrast functional images were acquired with echo-planar T2*-weighted (EPI) imaging using a Siemens Trio 3-Tesla MR system with a 32-channel head coil. To optimize the coverage of our ROIs, a partial volume of the ventral part of the brain was imaged. Each image volume comprised 25 interleaved 3-mm-thick sagittal slices (in-plane resolution=3 × 3 mm; time to echo=30 ms; repetition time=1.75 s). The first six volumes were discarded to allow for T1 equilibration effects. T1-weighted structural images were acquired at a 1 × 1 × 1 mm resolution. Functional MRI data were analysed using Statistical Parametric Mapping (SPM) version 8. Data preprocessing included spatial realignment, unwarping using individual field maps, slice timing correction, normalization and smoothing. Specifically, functional volumes were realigned to the mean volume, spatially normalized to the standard Montreal Neurological Institute template with a 3 × 3 × 3 mm voxel size, and smoothed with an 8 mm Gaussian kernel. High-pass filtering with a cut-off of 128 s and an AR(1) model were applied.

Neural responses were modelled with a canonical hemodynamic response function and a GLM including six stimulus functions encoding option presentation separately for each choice EV (£1, £3 and £5 for the low-value context and £3, £5 and £7 for the high-value context). Each of these stick functions was modulated by the corresponding RPE, computed as the outcome minus the EV. Thus, RPEs were zero for certain option choices and had positive or negative values when gambles were chosen. The GLM also included a stick function regressor at option presentation for the first trials of each block. This was modulated by a binary variable, indicating whether the block was a high- or low-value context. The GLM was estimated separately for each half of each of the two sessions of the task. The GLM also included 6 movement and 17 physiological (derived from breathing and heart rate signals) nuisance regressors.
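The RPE definition and the construction of a stick-function regressor with a parametric modulator can be sketched as below. Only the RPE formula (outcome minus EV) and the repetition time of 1.75 s come from the text. The double-gamma shape parameters, the mean-centring of the modulator, the rounding of onsets to the nearest scan and all function names are assumptions, and the sketch is not a reproduction of the SPM pipeline used in the study.

```python
import math
import numpy as np

TR = 1.75  # repetition time in seconds, as reported in the text


def rpe(outcome, ev):
    """Reward prediction error: outcome minus expected value."""
    return outcome - ev


def double_gamma_hrf(tr, duration=32.0):
    """Double-gamma response sampled every `tr` seconds (assumed parameters)."""
    t = np.arange(0.0, duration, tr)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()  # normalize to unit sum


def build_regressors(onsets, modulator, n_scans, tr=TR):
    """Return (main, parametric) regressors for one condition.

    onsets:    event times in seconds (option presentation).
    modulator: one value per event (for example, the trial's RPE).
    """
    sticks = np.zeros(n_scans)
    pmod = np.zeros(n_scans)
    centred = np.asarray(modulator, dtype=float)
    centred = centred - centred.mean()  # mean-centre the modulator (assumption)
    for onset, value in zip(onsets, centred):
        idx = int(round(onset / tr))    # nearest scan; a simplification
        if idx < n_scans:
            sticks[idx] += 1.0
            pmod[idx] += value
    hrf = double_gamma_hrf(tr)
    main = np.convolve(sticks, hrf)[:n_scans]
    parametric = np.convolve(pmod, hrf)[:n_scans]
    return main, parametric
```

With this definition, choosing the certain option gives `rpe(ev, ev) == 0`, while a gamble on a trial amount of £10 gives `rpe(10, 5) == 5` after a win and `rpe(0, 5) == -5` after a loss.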

Contrasts of interest were computed subject by subject, and used for second-level (between subjects) one-sample t-tests and regressions across subjects using the standard summary statistic approach66. Statistical (SVC) tests focused on the following ROIs: bilateral ventral striatum, VTA/SN and bilateral posterior hippocampus. For VTA/SN we used bilateral anatomical masks manually defined using the software MRIcro and the mean structural image for the group, similar to the approach used in previous studies67. For ventral striatum we used an 8 mm sphere centred on a priori coordinates extracted from a recent meta-analysis49 (left: −12, 12, −6; right: 12, 10, −6). For posterior hippocampus, we used the template available in the MarsBar Toolbox atlas, and, given our specific interest in the posterior portion, we split the template along the vertical axis, retaining voxels with z>−12. Statistics for ROIs were small-volume corrected (SVC) using a family-wise error (FWE) rate of P<0.05 as the significance threshold. For exploratory purposes, we also analysed other brain regions where statistics were corrected with respect to the recorded partial volume of the brain, using P<0.05 FWE as the significance threshold. These results are reported in Supplementary Table 1.
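The spherical ventral striatum ROIs and the posterior split of the hippocampal template can be sketched as simple geometric masks over voxel coordinates in MNI space. The centre coordinates and the z>−12 criterion come from the text; treating 8 mm as the sphere radius (rather than the diameter), the 3 mm example grid and the function names are assumptions for illustration.

```python
import numpy as np


def sphere_mask(coords_mm, centre_mm, radius_mm=8.0):
    """Boolean mask of voxel centres (N x 3, in mm) lying within a sphere.

    Interpreting '8 mm sphere' as an 8 mm radius is an assumption.
    """
    coords = np.asarray(coords_mm, dtype=float)
    centre = np.asarray(centre_mm, dtype=float)
    distance = np.linalg.norm(coords - centre, axis=1)
    return distance <= radius_mm


def posterior_mask(coords_mm, z_cut=-12.0):
    """Keep voxels with z > z_cut, mirroring the split described in the text."""
    return np.asarray(coords_mm, dtype=float)[:, 2] > z_cut


# Hypothetical 3 mm grid of voxel centres, used only to exercise the masks.
axis = np.arange(-30, 31, 3)
grid = np.array([(x, y, z) for x in axis for y in axis for z in axis])

left_vs = sphere_mask(grid, (-12, 12, -6))   # left ventral striatum centre
right_vs = sphere_mask(grid, (12, 10, -6))   # right ventral striatum centre
```

In practice such masks would be intersected with a brain or template mask before small-volume correction; that step is omitted here.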

Data availability

All data necessary to reproduce the results reported are available on request to the corresponding author.

Additional information

How to cite this article: Rigoli, F. et al. Neural processes mediating contextual influences on human choice behaviour. Nat. Commun. 7:12416 doi: 10.1038/ncomms12416 (2016).

Supplementary Material

Supplementary Figure 1 and Supplementary Table 1

Acknowledgments

This work was supported by the Wellcome Trust (Ray Dolan Senior Investigator Award 098362/Z/12/Z) and the Max Planck Society. The Wellcome Trust Centre for Neuroimaging is supported by core funding from the Wellcome Trust 091593/Z/10/Z. We thank Peter Dayan, Robb Rutledge and Cristina Martinelli for helpful discussions on the topic of the study.

Footnotes

Author contributions: All authors contributed to designing the study. F.R. performed the study and analysed the data. All authors contributed to discussion and interpretation of the findings and writing the manuscript.

References

- Kahneman D. & Tversky A. Prospect theory: an analysis of decision under risk. Econometrica 47, 263–291 (1979).

- Louie K., Khaw M. W. & Glimcher P. W. Normalization is a general neural mechanism for context-dependent decision making. Proc. Natl Acad. Sci. 110, 6139–6144 (2013).

- Ludvig E. A., Madan C. R. & Spetch M. L. Extreme outcomes sway risky decisions from experience. J. Behav. Decis. Making 27, 146–156 (2013).

- Stewart N., Chater N., Stott H. P. & Reimers S. Prospect relativity: how choice options influence decision under risk. J. Exp. Psychol. Gen. 132, 23–46 (2003).

- Stewart N., Chater N. & Brown G. D. Decision by sampling. Cognit. Psychol. 53, 1–26 (2006).

- Rigoli F., Rutledge R. B., Dayan P. & Dolan R. J. The influence of contextual reward statistics on risk preference. NeuroImage 128, 74–84 (2016).

- Rigoli F. et al. Dopamine increases a value-independent gambling propensity. Neuropsychopharmacology http://dx.doi.org/10.1038/npp.2016.68 (2016).

- Louie K. & Glimcher P. W. Efficient coding and the neural representation of value. Ann. N. Y. Acad. Sci. 1251, 13–32 (2012).

- Padoa-Schioppa C. & Rustichini A. Rational attention and adaptive coding: a puzzle and a solution. Am. Econ. Rev. 104, 507–513 (2014).

- Rangel A. & Clithero J. A. Value normalization in decision making: theory and evidence. Curr. Opin. Neurobiol. 22, 970–981 (2012).

- Seymour B. & McClure S. M. Anchors, scales and the relative coding of value in the brain. Curr. Opin. Neurobiol. 18, 173–178 (2008).

- Cox K. M. & Kable J. W. BOLD subjective value signals exhibit robust range adaptation. J. Neurosci. 34, 16533–16543 (2014).

- Park S. Q. et al. Adaptive coding of reward prediction errors is gated by striatal coupling. Proc. Natl Acad. Sci. 109, 4285–4289 (2012).

- Tobler P. N., Fiorillo C. D. & Schultz W. Adaptive coding of reward value by dopamine neurons. Science 307, 1642–1645 (2005).

- Kobayashi S., de Carvalho O. P. & Schultz W. Adaptation of reward sensitivity in orbitofrontal neurons. J. Neurosci. 30, 534–544 (2010).

- Padoa-Schioppa C. Range-adapting representation of economic value in the orbitofrontal cortex. J. Neurosci. 29, 1404–1414 (2009).

- Padoa-Schioppa C. & Assad J. A. The representation of economic value in the orbitofrontal cortex is invariant for changes of menu. Nat. Neurosci. 11, 95–102 (2008).

- Tremblay L. & Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature 398, 704–708 (1999).

- Bermudez M. A. & Schultz W. Reward magnitude coding in primate amygdala neurons. J. Neurophysiol. 104, 3424–3432 (2010).

- Louie K., Grattan L. E. & Glimcher P. W. Reward value-based gain control: divisive normalization in parietal cortex. J. Neurosci. 31, 10627–10639 (2011).

- Soltani A., De Martino B. & Camerer C. A range-normalization model of context-dependent choice: a new model and evidence. PLoS Comput. Biol. 8, e1002607 (2012).

- Summerfield C. & Tsetsos K. Do humans make good decisions? Trends Cogn. Sci. 19, 27–34 (2015).

- Niv Y., Daw N. D., Joel D. & Dayan P. Tonic dopamine: opportunity costs and the control of response vigor. Psychopharmacology 191, 507–520 (2007).

- Rigoli F., Chew B., Dayan P. & Dolan R. J. The dopaminergic midbrain mediates an effect of average reward on Pavlovian vigor. J. Cogn. Neurosci. http://dx.doi.org/10.1162/jocn_a_00972 (2016).

- Carandini M. & Heeger D. Normalization as a canonical neural computation. Nat. Rev. Neurosci. 13, 51–62 (2012).

- Cheadle S. et al. Adaptive gain control during human perceptual choice. Neuron 81, 1429–1441 (2014).

- Burgess N., Maguire E. A. & O'Keefe J. The human hippocampus and spatial and episodic memory. Neuron 35, 625–641 (2002).

- Moser M. B. & Moser E. I. Functional differentiation in the hippocampus. Hippocampus 8, 608–619 (1998).

- Matus-Amat P., Higgins E. A., Barrientos R. M. & Rudy J. W. The role of the dorsal hippocampus in the acquisition and retrieval of context memory representations. J. Neurosci. 24, 2431–2439 (2004).

- Rudy J. W. Context representations, context functions, and the parahippocampal–hippocampal system. Learn. Memory 16, 573–585 (2009).

- Anagnostaras S. G., Gale G. D. & Fanselow M. S. Hippocampus and contextual fear conditioning: recent controversies and advances. Hippocampus 11, 8–17 (2001).

- Fanselow M. S. Contextual fear, gestalt memories, and the hippocampus. Behav. Brain Res. 110, 73–81 (2000).

- Holland P. C. & Bouton M. E. Hippocampus and context in classical conditioning. Curr. Opin. Neurobiol. 9, 195–202 (1999).

- Marschner A., Kalisch R., Vervliet B., Vansteenwegen D. & Büchel C. Dissociable roles for the hippocampus and the amygdala in human cued versus context fear conditioning. J. Neurosci. 28, 9030–9036 (2008).

- Mumby D. G., Gaskin S., Glenn M. J., Schramek T. E. & Lehmann H. Hippocampal damage and exploratory preferences in rats: memory for objects, places, and contexts. Learn. Memory 9, 49–57 (2002).

- Norman G. & Eacott M. J. Dissociable effects of lesions to the perirhinal cortex and the postrhinal cortex on memory for context and objects in rats. Behav. Neurosci. 119, 557–566 (2005).

- Sziklas V. & Petrides M. Effects of lesions to the hippocampus or the fornix on allocentric conditional associative learning in rats. Hippocampus 12, 543–550 (2002).

- Van Der Meer M. A. & Redish A. D. Covert expectation of reward in rat ventral striatum at decision points. Front. Integr. Neurosci. 3, 1 (2009).

- Van Der Meer M. A. & Redish A. D. Theta phase precession in rat ventral striatum links place and reward information. J. Neurosci. 31, 2843–2854 (2011).

- Van Der Meer M. A., Johnson A., Schmitzer-Torbert N. C. & Redish A. D. Triple dissociation of information processing in dorsal striatum, ventral striatum, and hippocampus on a learned spatial decision task. Neuron 67, 25–32 (2010).

- Shohamy D., Myers C. E., Hopkins R. O., Sage J. & Gluck M. A. Distinct hippocampal and basal ganglia contributions to probabilistic learning and reversal. J. Cogn. Neurosci. 21, 1820–1832 (2009).

- Pezzulo G., Van Der Meer M. A., Lansink C. S. & Pennartz C. M. Internally generated sequences in learning and executing goal-directed behavior. Trends Cogn. Sci. 18, 647–657 (2014).

- Pezzulo G., Rigoli F. & Chersi F. The mixed instrumental controller: using value of information to combine habitual choice and mental simulation. Front. Psychol. 4, 92 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pennartz C. M. A., Ito R., Verschure P. F. M. J., Battaglia F. P. & Robbins T. W. The hippocampal–striatal axis in learning, prediction and goal-directed behavior. Trends Neurosci. 34, 548–559 (2011). [DOI] [PubMed] [Google Scholar]

- Lansink C. S., Goltstein P. M., Lankelma J. V., McNaughton B. L. & Pennartz C. M. A. Hippocampus leads ventral striatum in replay of place-reward information. PLoS Biol. 7, e1000173 (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wimmer G. E. & Shohamy D. Preference by association: how memory mechanisms in the hippocampus bias decisions. Science 338, 270–273 (2012). [DOI] [PubMed] [Google Scholar]

- Johnson A., van der Meer M. A. & Redish A. D. Integrating hippocampus and striatum in decision-making. Curr. Opin. Neurobiol. 17, 692–697 (2007). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Prelec D. & Loewenstein G. Decision making over time and under uncertainty: a common approach. Manage. Sci. 37, 770–786 (1991). [Google Scholar]

- Bartra O., McGuire J. T. & Kable J. W. The valuation system: a coordinate-based meta-analysis of BOLD fMRI experiments examining neural correlates of subjective value. Neuroimage 76, 412–427 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rushworth M. F., Noonan M. P., Boorman E. D., Walton M. E. & Behrens T. E. Frontal cortex and reward-guided learning and decision-making. Neuron 70, 1054–1069 (2011). [DOI] [PubMed] [Google Scholar]

- Friston K. J. et al. Psychophysiological and modulatory interactions in neuroimaging. Neuroimage 6, 218–229 (1997). [DOI] [PubMed] [Google Scholar]

- Botvinick M. & Toussaint M. Planning as inference. Trends Cogn. Sci. 16, 485–488 (2012). [DOI] [PubMed] [Google Scholar]

- Friston K. et al. The anatomy of choice: active inference and agency. Front. Hum. Neurosci. 7, 598 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Friston K. et al. Active inference and epistemic value. Cogn. Neurosci. 6, 187–214 (2015). [DOI] [PubMed] [Google Scholar]

- Pezzulo G., Rigoli F. & Friston K. Active Inference, homeostatic regulation and adaptive behavioural control. Prog. Neurobiol. 134, 17–35 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- O'Keefe J. A review of the hippocampal place cells. Prog. Neurobiol. 13, 419–439 (1979).

- Moser E. I., Kropff E. & Moser M. B. Place cells, grid cells, and the brain's spatial representation system. Annu. Rev. Neurosci. 31, 69–89 (2008).

- O'Keefe J. & Burgess N. Geometric determinants of the place fields of hippocampal neurons. Nature 381, 425–428 (1996).

- Cohen N. J. & Eichenbaum H. Memory, Amnesia, and the Hippocampal System (MIT Press, 1993).

- D'Ardenne K., McClure S. M., Nystrom L. E. & Cohen J. D. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science 319, 1264–1267 (2008).

- O'Doherty J. P., Dayan P., Friston K., Critchley H. & Dolan R. J. Temporal difference models and reward-related learning in the human brain. Neuron 38, 329–337 (2003).

- O'Doherty J. et al. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science 304, 452–454 (2004).

- Tom S. M., Fox C. R., Trepel C. & Poldrack R. A. The neural basis of loss aversion in decision-making under risk. Science 315, 515–518 (2007).

- Boureau Y. L. & Dayan P. Opponency revisited: competition and cooperation between dopamine and serotonin. Neuropsychopharmacology 36, 74–97 (2011).

- Berridge K. C. The debate over dopamine's role in reward: the case for incentive salience. Psychopharmacology 191, 391–431 (2007).

- Holmes A. P. & Friston K. J. Generalisability, random effects and population inference. Neuroimage 7, S754 (1998).

- Guitart-Masip M. et al. Action dominates valence in anticipatory representations in the human striatum and dopaminergic midbrain. J. Neurosci. 31, 7867–7875 (2011).

Associated Data

Supplementary Materials

Supplementary Figure 1 and Supplementary Table 1

Data Availability Statement

All data necessary to reproduce the reported results are available on request from the corresponding author.