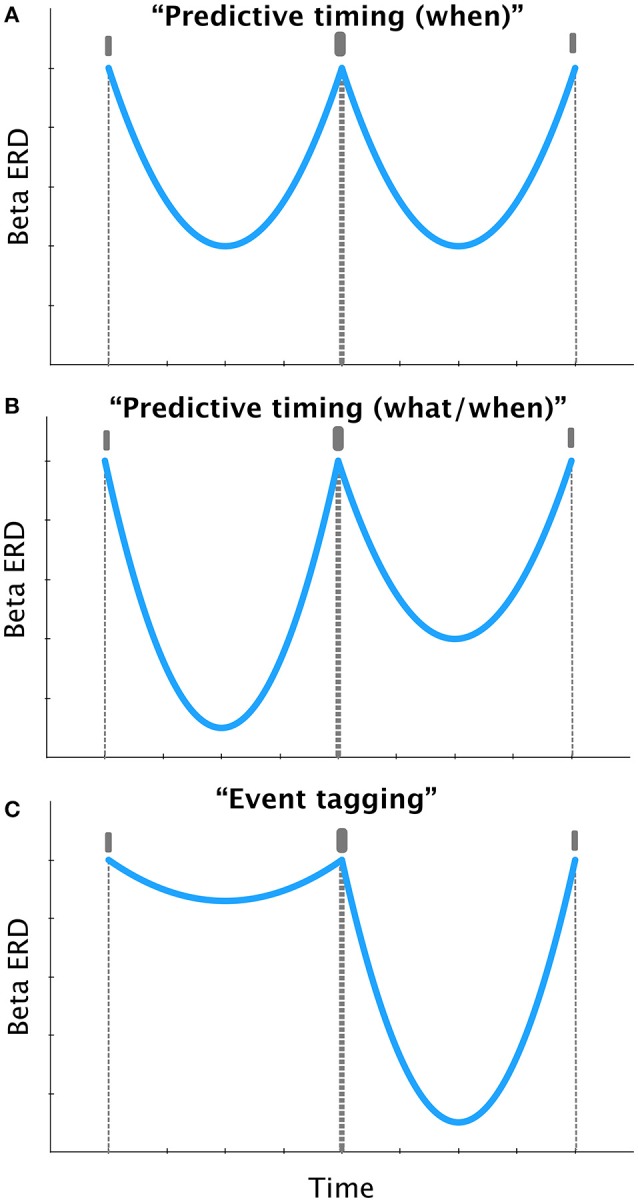

The ability to predict the timing of natural sounds is essential for accurate comprehension of speech and music (Allman et al., 2014). Rhythmic activity in the beta range (12–30 Hz) is crucial for encoding the temporal structure of regular sound sequences (Fujioka et al., 2009, 2012; Bartolo et al., 2014; Teki, 2014; Bartolo and Merchant, 2015). Specifically, the power of induced beta oscillations in the auditory cortex is dynamically modulated by the temporal pattern of beats (Fujioka et al., 2012): beat-related induced beta power decreases after each beat and then increases before the next one, as depicted in Figure 1A. However, it is not known whether beta oscillations also encode beat positions in metrical sequences with physically or subjectively accented beats (i.e., distinguish the accented "downbeat" from the unaccented "upbeat") and, if so, whether they do so predictively.

Figure 1.

Schematic depiction of the time course of induced beta oscillatory activity for a hypothetical sound sequence (vertical gray bars, in the order upbeat, downbeat, upbeat), according to the "predictive timing" and "event tagging" mechanisms. The depicted pattern is based on previous studies such as Fujioka et al. (2012). (A) Predictive timing theory (e.g., Arnal and Giraud, 2012) suggests that beta power should peak before each sound, such that the rebound of beta power could predict the timing of the upcoming sound, regardless of the sound's salience. (B) A hypothesized predictive code that also encodes the identity of the salient events in a sequence may modulate the stereotypical beta event-related desynchronization (ERD) response shown in panel (A), expressed as a difference in magnitude (here, greater beta suppression) before the salient event. In contrast to panel (A), beta power is not modulated in the same manner before upbeats and downbeats, allowing "what" and "when" information to be encoded in a manner consistent with the predictive timing framework (e.g., Arnal and Giraud, 2012). (C) The event tagging proposal (Iversen et al., 2009; Hanslmayr and Staudigl, 2014) suggests that beta power encodes accented events and should peak after the accented sounds, which contradicts the predictive coding of "what" information depicted in panel (B).

In a recent study, Fujioka et al. (2015) used magnetoencephalography to examine the role of induced beta oscillations in representing "what" and "when" information in musical sequences with different metrical contexts, i.e., a march and a waltz. Musically trained participants listened to 12-beat sequences in which every second (march) or third (waltz) beat was louder, with the remaining, unaccented beats presented at a constant intensity. The paradigm consisted of two phases: a perception phase, in which accented beats were presented in march or waltz contexts and participants were required to actively perceive the meter, followed by an imagery phase, in which only unaccented (softer) beats were presented and participants had to subjectively imagine the meter.

Similar to their previous study (Fujioka et al., 2012), the authors found that event-related beta desynchronization (ERD) follows the beat: beta power decreased sharply after each stimulus, reached a minimum at a latency of ~200 ms, and subsequently recovered with a shallow slope (see Figure 2 in Fujioka et al., 2015). This beat-locked ERD pattern has been demonstrated previously (Fujioka et al., 2009, 2012) and extended by other groups using electrophysiological recordings in humans (e.g., Iversen et al., 2009) and macaques (Bartolo et al., 2014; Bartolo and Merchant, 2015); related work has also demonstrated a role for induced beta oscillations in time production (Arnal et al., 2015; Kononowicz and van Rijn, 2015). In the new study, the pattern held for both the march and waltz conditions in the perception phase and, more importantly, in the imagery phase, implying a top-down mechanism. Notably, the authors reported that the beta ERD response in the auditory cortex differentiates between the positions of the downbeat and the following beat (see Figures 3, 4 in Fujioka et al., 2015).
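Beta ERD is commonly quantified as the percentage change in band power relative to a reference period, ERD% = (A − R)/R × 100, where A is the power at a given latency and R is the reference power (Pfurtscheller and Lopes da Silva, 1999). The Python sketch below is a toy illustration, not the analysis pipeline of Fujioka et al. (2015): it applies this definition to a synthetic beat-locked power trace. The trace shape (linear fall to a trough at 200 ms followed by a slower exponential recovery), the sampling rate, the 100 ms pre-beat reference window, and the helper names `erd_percent` and `toy_beta_power` are all assumptions chosen only to mimic the qualitative description above.

```python
import numpy as np


def erd_percent(power, reference):
    """Percent change of band power relative to a reference level.

    Negative values indicate desynchronization (ERD); positive values
    indicate synchronization (ERS).
    """
    return (power - reference) / reference * 100.0


def toy_beta_power(t, trough_latency=0.2, depth=0.3, recovery_tau=0.3, baseline=1.0):
    """Hypothetical beat-locked beta power (arbitrary units); t in s from beat onset.

    Power is flat before the beat, falls linearly to a trough at
    `trough_latency`, then recovers exponentially with time constant
    `recovery_tau` (initial slope depth/tau, shallower than the fall).
    This shape is an assumption and is not fitted to any data.
    """
    t = np.asarray(t, dtype=float)
    falling = baseline - depth * np.clip(t, 0.0, None) / trough_latency
    recovering = baseline - depth * np.exp(-(t - trough_latency) / recovery_tau)
    return np.where(t < trough_latency, falling, recovering)


fs = 1000  # hypothetical sampling rate (Hz)
t = np.arange(-0.1, 0.4, 1.0 / fs)  # 100 ms before to 400 ms after a beat
power = toy_beta_power(t)
reference = power[t < 0].mean()  # assumed reference period: the pre-beat interval
erd = erd_percent(power, reference)
print(f"trough latency: {t[np.argmin(erd)] * 1000:.0f} ms, ERD depth: {erd.min():.1f}%")
# prints: trough latency: 200 ms, ERD depth: -30.0%
```

Here the trough latency and depth are fixed by construction; with real recordings they would be estimated from trial-averaged induced power, and the choice of reference period can shift the absolute ERD values.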

The novel result reported by Fujioka et al. (2015) is that the beta ERD response in the auditory cortex can distinguish between accented beat positions in metrical sequences. However, the underlying mechanisms are far from clear. Fujioka et al. (2015) explain their results using predictive coding theory (Bastos et al., 2012), but an alternative "event tagging" mechanism (Iversen et al., 2009; see Repp, 2005 and Repp and Su, 2013 for reviews of mechanisms for metrical processing) may also account for the metrical interpretation of beat-based sequences.

We consider the results of Fujioka et al. (2015) in light of these two mechanisms, i.e., predictive timing and event tagging (Figure 1). The predictive coding framework posits that beta oscillations are associated with anticipatory behavior and predictive coding (Arnal and Giraud, 2012): an internal model conveys top-down predictions, implemented as modulations of beta power, with the goal of predicting the next event, as shown in Figure 1A. However, if the predictive code also represented the identity of the salient event (i.e., what) in addition to its timing (i.e., when), one might expect a modulation of the beta ERD response before the downbeat, for example a difference in magnitude, as shown in Figure 1B. Such a downbeat-specific response would predict both the identity and the timing of accented beats.

According to the event tagging framework (Hanslmayr and Staudigl, 2014), beta oscillations encode salient events (e.g., the downbeat), as depicted in Figure 1C. Specifically, desynchronization of induced beta power may reflect memory formation (Hanslmayr and Staudigl, 2014) or an active change in sensorimotor processing (Pfurtscheller and Lopes da Silva, 1999). During rhythm perception, where encoding the beat in memory is critical (Teki and Griffiths, 2014, 2016), the structure of metrical accents and salient events may be represented by the depth of beta desynchronization. The largest beta desynchronization would therefore be expected after the downbeat (Figure 1C). In the study by Fujioka et al. (2015), beta desynchronization was indeed largest after the accented tones. The reported results are thus consistent with the event tagging framework and suggest that subjectively and physically accented events change how these events are encoded (Pfurtscheller and Lopes da Silva, 1999; Repp, 2005).

It is also plausible, however, that the predictive timing and event tagging mechanisms operate in concert. To test this hypothesis, one needs to assess whether any prediction, implemented as beta rebound, occurs before the accented tones (Figure 1B). In Fujioka et al. (2015), it is difficult to determine whether there is robust beta synchronization before the accented tones. Careful inspection of their results (Figures 3, 4 in Fujioka et al., 2015) suggests that beta power is not modulated before the downbeat in either metrical condition, in either the perception or the imagery phase, except for a weak effect in the waltz-perception condition in the right auditory cortex.
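The test proposed above can be made concrete by contrasting two summary measures of beat-locked beta power: the mean power in a window just before each beat, where a predictive code for "what" (Figure 1B) should differ between downbeats and upbeats, and the depth of the post-beat trough, where event tagging (Figure 1C) predicts a deeper ERD after downbeats. The Python sketch below is a toy illustration under explicit assumptions: the epochs are synthetic, the Gaussian-shaped dip, noise level, number of beats, and window limits are arbitrary, and `toy_epochs` and `beat_metrics` are hypothetical helpers, not functions from any published analysis.

```python
import numpy as np


def toy_epochs(t, depth, n_beats, rng, noise_sd=0.02):
    """Hypothetical beat-locked beta power epochs (n_beats x n_times).

    Each epoch is ~1.0 before the beat, with a Gaussian-shaped dip of the
    given `depth` centred 200 ms after onset, plus white noise. Schematic only.
    """
    template = 1.0 - depth * np.exp(-((t - 0.2) ** 2) / (2 * 0.05 ** 2))
    return template + rng.normal(0.0, noise_sd, size=(n_beats, t.size))


def beat_metrics(t, epochs, pre_window=(-0.1, 0.0), post_window=(0.1, 0.3)):
    """Return (mean pre-beat power, post-beat trough) of the beat-averaged power.

    The pre-beat mean probes a predictive rebound (cf. Figure 1B); the
    post-beat trough probes the depth of desynchronization (cf. Figure 1C).
    Window limits are illustrative assumptions.
    """
    avg = epochs.mean(axis=0)  # average across beats of the same type
    pre = (t >= pre_window[0]) & (t < pre_window[1])
    post = (t >= post_window[0]) & (t < post_window[1])
    return avg[pre].mean(), avg[post].min()


rng = np.random.default_rng(0)
t = np.arange(-0.1, 0.4, 0.001)  # seconds relative to beat onset
# Toy "event tagging" scenario: deeper ERD after downbeats, essentially
# identical pre-beat power for both beat types.
down = toy_epochs(t, depth=0.4, n_beats=40, rng=rng)
up = toy_epochs(t, depth=0.2, n_beats=40, rng=rng)

pre_down, trough_down = beat_metrics(t, down)
pre_up, trough_up = beat_metrics(t, up)
print(f"pre-beat power:   downbeat {pre_down:.3f}, upbeat {pre_up:.3f}")
print(f"post-beat trough: downbeat {trough_down:.3f}, upbeat {trough_up:.3f}")
```

In this simulated event tagging scenario, the post-beat trough separates downbeats from upbeats (roughly 0.6 versus 0.8 by construction) while pre-beat power does not; evidence for a predictive "what" code would instead require a reliable pre-beat difference. In real sequences the pre-beat window of one beat overlaps the recovery from the preceding beat, so the two measures would be less cleanly separable than in this sketch.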

It therefore remains unclear whether beta ERD carries predictive information about salient events in addition to their timing. Future studies should also examine other (non-sensory) brain regions implicated in encoding rhythmic patterns, such as the supplementary motor area and the basal ganglia (Grahn and Brett, 2007; Teki et al., 2011; Crowe et al., 2014; Merchant et al., 2015), as different regions may recruit distinct mechanisms.

Overall, the study by Fujioka et al. (2015) provides significant insights into the neural representation of musical sequences. Beta oscillations have repeatedly been shown to track the timing of events in sound sequences, and the present study highlights that they may also differentiate between beat positions, i.e., encode categorical information about the events. It is important to build on these results to identify the precise role of beta oscillations in encoding "when" and "what" information in natural sound sequences, and future research can benefit considerably from the current study.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work, and approved it for publication.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

ST is supported by the Wellcome Trust (WT106084/Z/14/Z; Sir Henry Wellcome Postdoctoral Fellowship). TK is supported by ERC-YSt-263584 awarded to Virginie van Wassenhove.

References

- Allman M. J., Teki S., Griffiths T. D., Meck W. H. (2014). Properties of the internal clock: first- and second-order principles of subjective time. Annu. Rev. Psychol. 65, 743–771. 10.1146/annurev-psych-010213-115117

- Arnal L. H., Doelling K. B., Poeppel D. (2015). Delta-beta coupled oscillations underlie temporal prediction accuracy. Cereb. Cortex 25, 3077–3085. 10.1093/cercor/bhu103

- Arnal L. H., Giraud A.-L. (2012). Cortical oscillations and sensory predictions. Trends Cogn. Sci. 16, 390–398. 10.1016/j.tics.2012.05.003

- Bartolo R., Merchant H. (2015). β oscillations are linked to the initiation of sensory-cued movement sequences and the internal guidance of regular tapping in the monkey. J. Neurosci. 35, 4635–4640. 10.1523/JNEUROSCI.4570-14.2015

- Bartolo R., Prado L., Merchant H. (2014). Information processing in the primate basal ganglia during sensory-guided and internally driven rhythmic tapping. J. Neurosci. 34, 3910–3923. 10.1523/JNEUROSCI.2679-13.2014

- Bastos A. M., Usrey W. M., Adams R. A., Mangun G. R., Fries P., Friston K. J. (2012). Canonical microcircuits for predictive coding. Neuron 76, 695–711. 10.1016/j.neuron.2012.10.038

- Crowe D. A., Zarco W., Bartolo R., Merchant H. (2014). Dynamic representation of the temporal and sequential structure of rhythmic movements in the primate medial premotor cortex. J. Neurosci. 34, 11972–11983. 10.1523/JNEUROSCI.2177-14.2014

- Fujioka T., Ross B., Trainor L. J. (2015). Beta-band oscillations represent auditory beat and its metrical hierarchy in perception and imagery. J. Neurosci. 35, 15187–15198. 10.1523/JNEUROSCI.2397-15.2015

- Fujioka T., Trainor L. J., Large E. W., Ross B. (2009). Beta and gamma rhythms in human auditory cortex during musical beat processing. Ann. N.Y. Acad. Sci. 1169, 89–92. 10.1111/j.1749-6632.2009.04779.x

- Fujioka T., Trainor L. J., Large E. W., Ross B. (2012). Internalized timing of isochronous sounds is represented in neuromagnetic β oscillations. J. Neurosci. 32, 1791–1802. 10.1523/JNEUROSCI.4107-11.2012

- Grahn J. A., Brett M. (2007). Rhythm and beat perception in motor areas of the brain. J. Cogn. Neurosci. 19, 893–906. 10.1162/jocn.2007.19.5.893

- Hanslmayr S., Staudigl T. (2014). How brain oscillations form memories–a processing based perspective on oscillatory subsequent memory effects. Neuroimage 85(Pt 2), 648–655. 10.1016/j.neuroimage.2013.05.121

- Iversen J. R., Repp B. H., Patel A. D. (2009). Top-down control of rhythm perception modulates early auditory responses. Ann. N.Y. Acad. Sci. 1169, 58–73. 10.1111/j.1749-6632.2009.04579.x

- Kononowicz T. W., van Rijn H. (2015). Single trial beta oscillations index time estimation. Neuropsychologia 75, 381–389. 10.1016/j.neuropsychologia.2015.06.014

- Merchant H., Pérez O., Bartolo R., Méndez J. C., Mendoza G., Gámez J., et al. (2015). Sensorimotor neural dynamics during isochronous tapping in the medial premotor cortex of the macaque. Eur. J. Neurosci. 41, 586–602. 10.1111/ejn.12811

- Pfurtscheller G., Lopes da Silva F. H. (1999). Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin. Neurophysiol. 110, 1842–1857. 10.1016/S1388-2457(99)00141-8

- Repp B. H. (2005). Sensorimotor synchronization: a review of the tapping literature. Psychon. Bull. Rev. 12, 969–992. 10.3758/BF03206433

- Repp B. H., Su Y.-H. (2013). Sensorimotor synchronization: a review of recent research (2006–2012). Psychon. Bull. Rev. 20, 403–452. 10.3758/s13423-012-0371-2

- Teki S. (2014). Beta drives brain beats. Front. Syst. Neurosci. 8:155. 10.3389/fnsys.2014.00155

- Teki S., Griffiths T. D. (2014). Working memory for time intervals in auditory rhythmic sequences. Front. Psychol. 5:1329. 10.3389/fpsyg.2014.01329

- Teki S., Griffiths T. D. (2016). Brain bases of working memory for time intervals in rhythmic sequences. Front. Neurosci. 10:239. 10.3389/fnins.2016.00239

- Teki S., Grube M., Kumar S., Griffiths T. D. (2011). Distinct neural substrates of duration-based and beat-based auditory timing. J. Neurosci. 31, 3805–3812. 10.1523/JNEUROSCI.5561-10.2011