Abstract

We describe a new method to compute general cubature formulae. The problem is initially transformed into the computation of truncated Hankel operators with flat extensions. We then analyze the algebraic properties associated to flat extensions and show how to recover the cubature points and weights from the truncated Hankel operator. We next present an algorithm to test the flat extension property and to additionally compute the decomposition. To generate cubature formulae with a minimal number of points, we propose a new relaxation hierarchy of convex optimization problems minimizing the nuclear norm of the Hankel operators. For a suitably high order of convex relaxation, the minimizer of the optimization problem corresponds to a cubature formula. Furthermore cubature formulae with a minimal number of points are associated to faces of the convex sets. We illustrate our method on some examples, and for each we obtain a new minimal cubature formula.

Keywords: Cubature formula, Hankel matrix, Flat extension, Orthogonal polynomials, Border basis, Semidefinite programming

1. Cubature formula

1.1. Statement of the problem

Consider the integral for a continuous function f,

$$I[f] = \int_{\Omega} w(x)\, f(x)\, dx,$$

where Ω ⊂ ℝn and w is a positive function on Ω.

We are looking for a cubature formula which has the form

$$\langle\sigma|f\rangle = \sum_{j=1}^{r} w_j\, f(\zeta_j) \tag{1}$$

where the points ζj ∈ ℂn and the weights wj ∈ ℝ are independent of the function f. They are chosen so that

$$\langle\sigma|f\rangle = I[f], \quad \forall f \in V,$$

where V is a finite dimensional vector space of functions. Usually, the vector space V is the vector space of polynomials of degree ≤ d, because a well-behaved function f can be approximated by a polynomial, so that the cubature sum 〈σ|f〉 approximates the integral I[f].

Given a cubature formula (1) for I, its algebraic degree is the largest degree d for which I[f] = 〈σ|f〉 for all f of degree ≤ d.
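As an illustration of this definition, the following minimal sketch computes the algebraic degree of a given formula numerically. It assumes the domain Ω = [−1, 1]² with weight w = 1, and uses the classical 4-point tensor Gauss rule with nodes (±1/√3, ±1/√3) and unit weights as test data; the function names are hypothetical and not part of the method described in this paper.

```python
from itertools import product
from math import sqrt

def exact_moment(a, b):
    """Integral of x^a * y^b over [-1, 1]^2 with weight w = 1."""
    def one_dim(k):
        return 0.0 if k % 2 else 2.0 / (k + 1)
    return one_dim(a) * one_dim(b)

def cubature(points, weights, a, b):
    """Value of the cubature sum <sigma | x^a y^b>."""
    return sum(w * x**a * y**b for (x, y), w in zip(points, weights))

def algebraic_degree(points, weights, max_degree=10, tol=1e-12):
    """Largest d <= max_degree such that every monomial of degree <= d is integrated exactly."""
    degree = -1
    for d in range(max_degree + 1):
        for a in range(d + 1):
            err = abs(cubature(points, weights, a, d - a) - exact_moment(a, d - a))
            if err > tol:
                return degree
        degree = d
    return degree

# Assumed test data: 2 x 2 tensor Gauss-Legendre rule on the square.
g = 1.0 / sqrt(3.0)
points = [(sx * g, sy * g) for sx, sy in product((1, -1), repeat=2)]
weights = [1.0] * 4
print(algebraic_degree(points, weights))
```

Checking monomials is enough because both I and σ are linear in f.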

1.2. Related works

Prior approaches to the cubature problem fall roughly into two classes. The first aims to estimate the fewest weighted points (cubature points) needed to satisfy a prescribed cubature rule of fixed degree [9,24,26,29,30,33]. The second focuses on determining and constructing cubature rules that yield the fewest possible cubature points [7,34,38–41,44,45]. In [34], for example, Radon introduced a fundamental technique for constructing minimal cubature rules in which the cubature points are common zeros of multivariate orthogonal polynomials. This technique has since been extended by many authors, including [33,41,45]; notably, [45] uses multivariate ideal theory, while [33] uses operator dilation theory. In this paper, we propose another approach within the second class, namely, constructing a suitable finite dimensional Hankel matrix and extracting the cubature points using sub-operators of the Hankel matrix [18]. This approach is related to [21–23], which in turn are based on the methods of multivariate truncated moment matrices, their positivity and extension properties [11–13].

Applications of such algorithms for determining cubature rules and cubature points over general domains occur in isogeometric modeling and finite element analysis using generalized barycentric finite elements [17,1,35,36]. Additional applications abound in numerical integration for low dimensional (6–100 dimensions) convolution integrals that appear naturally in computational molecular biology [3,2], as well as in truly high dimensional (tens of thousands of dimensions) integrals that occur in finance [32,8].

1.3. Reformulation

Let R = ℝ[x] be the ring of polynomials in the variables x = (x1,…, xn) with coefficients in ℝ. Let Rd be the set of polynomials of degree ≤ d. The set of linear forms on R, that is, the set of linear maps from R to ℝ, is denoted by R*. The value of a linear form Λ ∈ R* on a polynomial p ∈ R is denoted by 〈Λ|p〉. The set R* can be identified with the ring of formal power series in new variables y = (y1,…, yn):

$$\Lambda \in R^* \mapsto \Lambda(y) = \sum_{\alpha \in \mathbb{N}^n} \langle\Lambda|x^{\alpha}\rangle\, y^{\alpha} \in \mathbb{R}[[y]].$$

The coefficients 〈Λ|xα〉 of these series are called the moments of Λ. The evaluation at a point ζ ∈ ℝn is an element of R*, denoted by eζ, and defined by eζ : f ∈ R ↦ f(ζ) ∈ ℝ. For any p ∈ R and any Λ ∈ R*, let p ★ Λ : q ∈ R ↦ Λ(pq).

Cubature problem

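Let V ⊂ R be a vector space of polynomials and consider the linear form Ī ∈ V* defined by

$$\bar{I} : v \in V \mapsto I[v] \in \mathbb{R}.$$

Computing a cubature formula for I on V then consists in finding a linear form

$$\sigma = \sum_{i=1}^{r} w_i\, e_{\zeta_i} : f \mapsto \sum_{i=1}^{r} w_i\, f(\zeta_i)$$

which coincides on V with Ī. In other words, given the linear form Ī on Rd, we wish to find a linear form of this type which extends Ī.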

2. Cubature formulae and Hankel operators

To find such a linear form σ ∈ R*, we exploit the properties of its associated bilinear form Hσ : (p, q) ∈ R × R → 〈σ|pq〉, or equivalently, the associated Hankel operator:

$$H_\sigma : p \in R \mapsto p \star \sigma \in R^*.$$

The kernel of Hσ is kerHσ = {p ∈ R | ∀q ∈ R, 〈σ|pq〉 = 0}. It is an ideal of R. Let 𝒜σ = R/ker Hσ be the associated quotient ring.

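The matrix of the bilinear form or the Hankel operator Hσ associated to σ in the monomial basis and its dual is $(\langle\sigma|x^{\alpha+\beta}\rangle)_{\alpha,\beta\in\mathbb{N}^n}$. If we restrict them to a space V spanned by the monomial basis (xα)α∈A for some finite set A ⊂ ℕn, we obtain a finite dimensional matrix $[H_\sigma^{A}] = (\langle\sigma|x^{\alpha+\beta}\rangle)_{\alpha,\beta\in A}$, which is a Hankel matrix. More generally, for any vector spaces V, V′ ⊂ R, we define the truncated bilinear form and Hankel operator: $H_\sigma^{V,V'} : (v, v') \in V \times V' \mapsto \langle\sigma|vv'\rangle$ and $H_\sigma^{V,V'} : v \in V \mapsto v \star \sigma \in V'^{*}$. If V (resp. V′) is spanned by a monomial set xA for A ⊂ ℕn (resp. xB for B ⊂ ℕn), the truncated bilinear form and truncated Hankel operator are also denoted by $H_\sigma^{A,B}$. The associated Hankel matrix in the monomial basis is then $[H_\sigma^{A,B}] = (\langle\sigma|x^{\alpha+\beta}\rangle)_{\alpha\in A,\beta\in B}$.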

The main property that we will use to characterize a cubature formula is the following (see [22,20]):

Proposition 2.1

A linear form σ ∈ R* can be decomposed as $\sigma = \sum_{i=1}^{r} w_i\, e_{\zeta_i}$ with wi ∈ ℂ \ {0} and distinct points ζi ∈ ℂn iff

Hσ : p ↦ p ★ σ is of rank r,

ker Hσ is the ideal of polynomials vanishing at the points {ζ1,…, ζr}.

This shows that in order to find the points ζi of a cubature formula, it is sufficient to compute the polynomials p ∈ R such that ∀q ∈ R, 〈σ|pq〉 = 0, and to determine their common zeros. In Section 4 we describe a direct way to recover the points ζi and the weights wi from suboperators of Hσ.

In the case of cubature formulae with real points and positive weights, we already have the following stronger result (see [22,20]):

Proposition 2.2

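Let σ ∈ R*. Then $\sigma = \sum_{i=1}^{r} w_i\, e_{\zeta_i}$ with wi > 0 and distinct points ζi ∈ ℝn iff rank Hσ = r and Hσ ≽ 0.

A linear form $\sigma = \sum_{i=1}^{r} w_i\, e_{\zeta_i}$ with wi > 0, ζi ∈ ℝn is called an r-atomic measure since it coincides with the weighted sum of the r Dirac measures at the points ζi.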
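The rank statement can be checked on a small example. The sketch below builds the truncated Hankel matrix of an assumed 3-atomic measure in two variables on the monomials of degree ≤ 2 and computes its rank exactly over the rationals; the atoms, weights and helper names are illustrative choices, not data from this paper.

```python
from fractions import Fraction

def moment(points, weights, alpha):
    """Moment <sigma | x^alpha> of sigma = sum_j w_j e_{zeta_j}."""
    total = Fraction(0)
    for zeta, w in zip(points, weights):
        term = Fraction(w)
        for z, a in zip(zeta, alpha):
            term *= Fraction(z) ** a
        total += term
    return total

def hankel_matrix(points, weights, exponents):
    """Truncated Hankel matrix (<sigma | x^(alpha+beta)>) for alpha, beta in exponents."""
    return [[moment(points, weights, tuple(a + b for a, b in zip(al, be)))
             for be in exponents] for al in exponents]

def rank(matrix):
    """Rank by exact Gaussian elimination over the rationals."""
    m = [row[:] for row in matrix]
    r = 0
    for col in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
    return r

# Assumed example: sigma = 2 e_(1,0) + 3 e_(0,1) + 1 e_(1,1).
points = [(1, 0), (0, 1), (1, 1)]
weights = [2, 3, 1]
# Exponents of the monomials 1, x1, x2, x1^2, x1*x2, x2^2.
exponents = [(0, 0), (1, 0), (0, 1), (2, 0), (1, 1), (0, 2)]
H = hankel_matrix(points, weights, exponents)
print(rank(H))
```

The 6 × 6 matrix has rank 3, the number of atoms, as expected for a truncation large enough to separate the points.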

Therefore, the problem of constructing a cubature formula σ for I exact on V ⊂ R can be reformulated as follows: Construct a linear form σ ∈ R* such that

rank Hσ = r < ∞ and Hσ ≽ 0.

∀v ∈ V, I[v] = 〈σ|v〉.

The rank r of Hσ is given by the number of points of the cubature formula, which is expected to be small or even minimal.

The following result states that a cubature formula with at most dim(V) points always exists.

Theorem 2.3. (See [42,4].)

If a sequence (σα)α∈ℕn,|α|≤t is the truncated moment sequence of a measure μ (i.e. σα = ∫ xαdμ for |α| ≤ t), then it can also be represented by an r-atomic measure: $\sigma_\alpha = \sum_{i=1}^{r} w_i\, \zeta_i^{\alpha}$ for |α| ≤ t, where r ≤ st = dim Rt, wi > 0, ζi ∈ supp(μ).

This result can be generalized to any set of linearly independent polynomials v1,…, vr ∈ R (see the proof in [4] or Theorem 5.9 in [22]). We deduce that the cubature problem always has a solution with dim(V) or fewer points.

Definition 2.4

Let rc(I) be the maximum rank of the bilinear form $H_I^{W,W'} : (w, w') \in W \times W' \mapsto I[ww']$ where W, W′ ⊂ V are such that ∀w ∈ W, ∀w′ ∈ W′, ww′ ∈ V. It is called the Catalecticant rank of I.

Proposition 2.5

Any cubature formula for I exact on V involves at least rc(I) points.

Proof

Suppose that σ is a cubature formula for I exact on V with r points. Let W, W′ ⊂ V be vector spaces such that ∀w ∈ W, ∀w′ ∈ W′, ww′ ∈ V. Since $H_I^{W,W'}$ coincides with $H_\sigma^{W,W'}$, which is the restriction of the bilinear form Hσ to W × W′, we deduce that $r = \operatorname{rank} H_\sigma \ge \operatorname{rank} H_I^{W,W'}$. Thus r ≥ rc(I).

Corollary 2.6

Let W ⊂ V such that ∀w, w′ ∈ W, ww′ ∈ V. Then any cubature formula of I exact on V involves at least dim(W) points.

Proof

For all p ∈ W we have p2 ∈ V, and I(p2) = 0 implies p = 0. Therefore the quadratic form $H_I^{W,W} : p \in W \mapsto I[p^2]$ is positive definite of rank dim(W). By Proposition 2.5, a cubature formula of I exact on V involves at least rc(I) ≥ dim(W) points.

In particular, if V = Rd, any cubature formula of I exact on V involves at least $\dim R_{\lfloor d/2 \rfloor} = \binom{n + \lfloor d/2 \rfloor}{n}$ points.

In [25], this lower bound is improved for cubature problems in two variables.
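For V = Rd, taking W = R⌊d/2⌋ in Corollary 2.6 gives the count of monomials of degree ≤ ⌊d/2⌋ in n variables as a lower bound. A minimal sketch of this count (the function name is hypothetical):

```python
from math import comb

def lower_bound(n, d):
    """dim R_k with k = floor(d / 2): number of monomials of degree <= k in n variables."""
    k = d // 2
    return comb(n + k, n)

for d in (3, 5, 7):
    print(d, lower_bound(2, d))
```

In two variables this gives 3, 6 and 10 for degrees 3, 5 and 7, which is consistent with (and smaller than) the numbers of points 4, 7 and 12 reported in Example 6.1.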

3. Flat extensions

In order to reduce the extension problem to a finite-dimensional problem, we consider hereafter only truncated Hankel operators. Given two subspaces W, W′ of R and a linear form σ defined on W ·W′ (i.e. σ ∈ 〈W ·W′〉*), we define

$$H_\sigma^{W,W'} : w \in W \mapsto w \star \sigma \in W'^{*}.$$

If w (resp. w′) is a basis of W (resp. W′), then we will also denote $H_\sigma^{w,w'} := H_\sigma^{W,W'}$. The matrix of $H_\sigma^{w,w'}$ in the bases w = {w1,…, ws} and w′ = {w′1,…, w′s′} is [〈σ|wiw′j〉]1≤i≤s,1≤j≤s′.

Definition 3.1

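Let W ⊂ V, W′ ⊂ V′ be vector subspaces of R and σ ∈ 〈V · V′〉*. We say that $H_\sigma^{V,V'}$ is a flat extension of $H_\sigma^{W,W'}$ if $\operatorname{rank} H_\sigma^{V,V'} = \operatorname{rank} H_\sigma^{W,W'}$.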

A set B of monomials of R is connected to 1 if it contains 1 and if for any m ≠ 1 ∈ B, there exist 1 ≤ i ≤ n and m′ ∈ B such that m = xim′.

Since a quotient R/ker Hσ always has a monomial basis connected to 1, in the first step we take for w, w′ monomial sets that are connected to 1.

For a set B of monomials in R, let us define B+ = B ∪ x1B ∪ ··· ∪ xnB and ∂B = B+ \ B.
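These combinatorial definitions are easy to make concrete. In the sketch below, a monomial is represented by its exponent tuple (an assumed encoding), and the helper names are hypothetical.

```python
def _step(m, i, delta):
    """Exponent tuple of m multiplied (delta = 1) or divided (delta = -1) by x_i."""
    return m[:i] + (m[i] + delta,) + m[i + 1:]

def is_connected_to_1(B):
    """True if B contains 1 and every other monomial m is x_i * m' with m' in B."""
    B = set(B)
    if not B:
        return False
    n = len(next(iter(B)))
    one = (0,) * n
    if one not in B:
        return False
    return all(m == one or any(m[i] > 0 and _step(m, i, -1) in B for i in range(n))
               for m in B)

def plus(B):
    """B+ = B union x_1 B union ... union x_n B."""
    B = set(B)
    n = len(next(iter(B)))
    return B | {_step(m, i, 1) for m in B for i in range(n)}

def border(B):
    """The border of B, i.e. B+ minus B."""
    return plus(B) - set(B)

B = {(0, 0), (1, 0), (0, 1)}          # the monomials 1, x1, x2
print(is_connected_to_1(B))
print(sorted(border(B)))              # exponents of x2^2, x1*x2, x1^2
```

For B = {1, x1, x2}, the set B+ consists of the six monomials of degree ≤ 2 and ∂B of the three monomials of degree 2.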

The next theorem gives a characterization of flat extensions for Hankel operators defined on monomial sets connected to 1. It is a generalized form of the Curto–Fialkow theorem [13].

Theorem 3.2. (See [23,6,5].)

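Let B ⊂ C, B′ ⊂ C′ be sets of monomials connected to 1 such that |B| = |B′| = r and C · C′ contains B+ · B′+. If σ ∈ 〈C · C′〉* is such that $\operatorname{rank} H_\sigma^{B,B'} = \operatorname{rank} H_\sigma^{C,C'} = r$, then $H_\sigma^{C,C'}$ has a unique flat extension Hσ̃ for some σ̃ ∈ R*. Moreover, we have $\ker H_{\tilde\sigma} = (\ker H_\sigma^{C,C'})$ and R = 〈B〉 ⊕ker Hσ̃ = 〈B′〉 ⊕ker Hσ̃. In the case where B′ = B, if $H_\sigma^{B,B} \succeq 0$, then Hσ̃ ≽ 0.

Based on this theorem, in order to find a flat extension of $H_\sigma^{B,B'}$, it suffices to construct an extension $H_\sigma^{B^+,B'^+}$ of the same rank r.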

Corollary 3.3

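Let V ⊂ R be a finite dimensional vector space. If there exists a set B of monomials connected to 1 such that V ⊂ 〈B+ · B+〉 and σ ∈ 〈B+ · B+〉* such that ∀v ∈ V, 〈σ|v〉 = I[v] and $\operatorname{rank} H_\sigma^{B^+,B^+} = \operatorname{rank} H_\sigma^{B,B} = |B| = r$ with $H_\sigma^{B,B} \succeq 0$, then there exist wi > 0, ζi ∈ ℝn, i = 1,…, r such that ∀v ∈ V, $I[v] = \sum_{i=1}^{r} w_i\, v(\zeta_i)$.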

This characterization leads to equations which are at most of degree 2 in a set of variables related to unknown moments and relation coefficients as described by the following proposition:

Proposition 3.4

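Let B and B′ be two sets of monomials of R of size r, connected to 1, and σ be a linear form on 〈B+ · B′+〉. Then $H_\sigma^{B,B'}$ admits a flat extension Hσ̃ such that Hσ̃ is of rank r and B (resp. B′) is a basis of R/ker Hσ̃ iff

$$[H_\sigma^{B^+,B'^+}] = \begin{pmatrix} \mathbb{Q} & \mathbb{M}' \\ \mathbb{M}^t & \mathbb{N} \end{pmatrix} \tag{2}$$

with $\mathbb{Q} = [H_\sigma^{B,B'}]$ such that ℚ is invertible and

$$\mathbb{M} = \mathbb{Q}^t\, \mathbb{P}, \qquad \mathbb{M}' = \mathbb{Q}\, \mathbb{P}', \qquad \mathbb{N} = \mathbb{P}^t\, \mathbb{Q}\, \mathbb{P}' \tag{3}$$

for some matrices ℙ ∈ ℂB×∂B′, ℙ′ ∈ ℂB′×∂B.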

Proof

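If we have 𝕄 = ℚtℙ, 𝕄′ = ℚℙ′, ℕ = ℙtℚℙ′, then

$$[H_\sigma^{B^+,B'^+}] = \begin{pmatrix} \mathbb{Q} & \mathbb{Q}\,\mathbb{P}' \\ \mathbb{P}^t\,\mathbb{Q} & \mathbb{P}^t\,\mathbb{Q}\,\mathbb{P}' \end{pmatrix}$$

has clearly the same rank as $\mathbb{Q} = [H_\sigma^{B,B'}]$. According to Theorem 3.2, $H_\sigma^{B^+,B'^+}$ admits a flat extension Hσ̃ with σ̃ ∈ R* such that B and B′ are bases of 𝒜σ̃ = R/ker Hσ̃.

Conversely, if Hσ̃ is a flat extension of $H_\sigma^{B,B'}$ with B and B′ bases of 𝒜σ̃ = R/ker Hσ̃, then $\mathbb{Q} = [H_\sigma^{B,B'}]$ is invertible and of size r = |B| = |B′|. As Hσ̃ is of rank r, $H_\sigma^{B^+,B'^+}$ is also of rank r. Thus, there exists ℙ′ ∈ ℂB′×∂B (ℙ′ = ℚ−1𝕄′) such that 𝕄′ = ℚℙ′. Similarly, there exists ℙ ∈ ℂB×∂B′ such that 𝕄 = ℚtℙ. Thus, the kernel of $[H_\sigma^{B^+,B'^+}]$ is the image of $\begin{pmatrix} -\mathbb{P}' \\ \mathbb{I} \end{pmatrix}$. We deduce that ℕ = 𝕄tℙ′ = ℙtℚℙ′.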
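The relations 𝕄 = ℚtℙ, 𝕄′ = ℚℙ′, ℕ = ℙtℚℙ′ can be checked by hand on a tiny case. The sketch below assumes the univariate 2-atomic linear form σ = e1 + e2 (moments σk = 1 + 2^k), with B = B′ = {1, x} and ∂B = {x²}, so that ℚ is symmetric and 𝕄 = 𝕄′, ℙ = ℙ′. The example and helper names are illustrative assumptions.

```python
from fractions import Fraction

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(row) for row in zip(*A)]

def inverse_2x2(Q):
    det = Q[0][0] * Q[1][1] - Q[0][1] * Q[1][0]
    return [[Q[1][1] / det, -Q[0][1] / det], [-Q[1][0] / det, Q[0][0] / det]]

# Moments sigma_0..sigma_4 of sigma = e_1 + e_2 (assumed example).
sigma = [Fraction(1 + 2**k) for k in range(5)]

Q = [[sigma[0], sigma[1]], [sigma[1], sigma[2]]]   # block indexed by B x B
M = [[sigma[2]], [sigma[3]]]                       # block indexed by B x border(B)
N = [[sigma[4]]]                                   # block indexed by border(B) x border(B)

P = matmul(inverse_2x2(Q), M)                      # solves M = Q P
is_flat = matmul(transpose(P), matmul(Q, P)) == N  # checks N = P^t Q P
print(P, is_flat)
```

Here ℙ = (−2, 3)t, which encodes the border relation x² ≡ −2 + 3x, i.e. the polynomial x² − 3x + 2 = (x − 1)(x − 2) vanishing at the two points.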

Remark 3.5

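A basis of the kernel of $[H_\sigma^{B^+,B'^+}]$ is given by the columns of $\begin{pmatrix} -\mathbb{P}' \\ \mathbb{I} \end{pmatrix}$, which represent polynomials of the form

$$p_\alpha = x^{\alpha} - \sum_{\beta \in B} p_{\beta,\alpha}\, x^{\beta}$$

for α ∈ ∂B. These polynomials are border relations which project the monomials xα of ∂B on the vector space spanned by the monomials B, modulo $\ker H_\sigma^{B^+,B'^+}$. It is proved in [6] that they form a border basis of the ideal ker Hσ̃ when $H_\sigma^{B^+,B'^+}$ is a flat extension and $H_\sigma^{B,B'}$ is invertible.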

Remark 3.6

Let A ⊂ ℕn be a set of monomials such that 〈σ|xα〉 = I[xα]. Considering the entries of ℙ, ℙ′ and the entries σα of ℚ with α ∉ A as variables, the constraints (3) are multilinear equations in these variables of total degree at most 3 if ℚ contains unknown entries and 2 otherwise.

Example 3.7

We consider here V = R2k for k > 0. By Corollary 2.6, any cubature formula for I exact on V has at least rk := dim Rk points. Let us take B to be all the monomials of degree ≤ k so that B+ is the set of monomials of degree ≤ k + 1. If a cubature formula for I is exact on R2k and has rk points, then $H_\sigma^{B^+,B^+}$ is a flat extension of $H_\sigma^{B,B}$ of rank rk. Consider a decomposition of $[H_\sigma^{B^+,B^+}]$ as in (2). By Proposition 3.4, we have the relations

$$\mathbb{M} = \mathbb{Q}\, \mathbb{P}, \qquad \mathbb{N} = \mathbb{P}^t\, \mathbb{Q}\, \mathbb{P} \tag{4}$$

where

ℚ = (I[xβ+β′])β,β′∈B,

𝕄 = (〈σ|xβ+β′〉)β∈B,β′∈∂B with 〈σ|xβ+β′〉 = I[xβ+β′] when |β + β′| ≤ 2k,

ℕ = (〈σ|xβ+β′〉)β,β′∈∂B,

ℙ = (pβ,α)β∈B,α∈∂B.

The equations (4) are quadratic in the variables ℙ and linear in the variables in 𝕄. Solving these equations yields a flat extension $H_\sigma^{B^+,B^+}$ of $H_\sigma^{B,B}$. As $H_\sigma^{B,B} \succeq 0$, any real solution of this system of equations corresponds to a cubature formula for I exact on R2k of the form $\sigma = \sum_{i=1}^{r_k} w_i\, e_{\zeta_i}$ with wi > 0, ζi ∈ ℝn.

We illustrate the approach with R = ℝ[x1, x2], V = R4, $B = \{1, x_1, x_2, x_1^2, x_1 x_2, x_2^2\}$. Let

be the series truncated in degree 4, corresponding to the first moments (not necessarily given by an integral).

where σ1 = σ5,0, σ2 = σ4,1, σ3 = σ3,2, σ4 = σ2,3, σ5 = σ1,4, σ6 = σ0,5, σ7 = σ6,0, σ8 = σ5,1, σ9 = σ4,2, σ10 = σ3,3, σ11 = σ2,4, σ12 = σ1,5, σ13 = σ0,6.

The first 6 × 6 diagonal block is invertible. To have a flat extension $H_\sigma^{B^+,B^+}$, we impose the condition that the sixteen 7 × 7 minors of $[H_\sigma^{B^+,B^+}]$ which contain the first 6 rows and columns must vanish. This yields the following system of quadratic equations:

The set of solutions of this system is an algebraic variety of dimension 3 and degree 52. A solution is σ1 = −484, σ2 = 226, σ3 = −54, σ4 = 82, σ5 = −6, σ6 = 167, σ7 = −1456, σ8 = 614, σ9 = −162, σ10 = 182, σ11 = −18, σ12 = 134, σ13 = 195.

3.1. Computing an orthogonal basis of 𝒜σ

In this section, we describe a new method to construct a basis B of 𝒜σ and to detect flat extensions, from the knowledge of the moments σα of σ(y). We are going to inductively construct a family P of polynomials, orthogonal for the inner product

$$\langle p, q\rangle_\sigma := \langle\sigma|pq\rangle,$$

and a monomial set B connected to 1 such that 〈B〉 = 〈P〉.

We start with B = {1}, P = {1} ⊂ R. As 〈1, 1〉σ = 〈σ | 1〉 ≠ 0, the family P is orthogonal for σ and 〈B〉 = 〈P〉.

We now describe the induction step. Assume that we have a set B = {m1,…, ms} and P = {p1,…, ps} such that

〈B〉 = 〈P〉;

〈pi, pj〉σ ≠ 0 if i = j and 0 otherwise.

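To construct the next orthogonal polynomials, we consider the monomials in ∂B = {m′1,…, m′l} and project them on 〈P〉:

$$\rho_i = m'_i - \sum_{j=1}^{s} \frac{\langle m'_i, p_j\rangle_\sigma}{\langle p_j, p_j\rangle_\sigma}\, p_j, \quad i = 1,\dots,l.$$

By construction, 〈ρi, pj〉σ = 0 and 〈ρ1,…, ρl〉 ⊕ 〈P〉 = 〈B+〉. We extend B by choosing a subset of monomials B′ = {m′i1,…, m′ik} such that the matrix

$$[\langle \rho_{i_j}, \rho_{i_{j'}}\rangle_\sigma]_{1\le j,j'\le k}$$

is invertible. The family P is then extended by adding an orthogonal family of polynomials {ps+1,…, ps+k} constructed from {ρi1,…, ρik}. If all the polynomials ρi are such that 〈ρi, ρj〉σ = 0 for all j, the process stops.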

This leads to the following algorithm:

Algorithm 1

Input: the coefficients σα of a series σ ∈ ℂ[[y]] for α ∈ A ⊂ ℕn connected to 1 with σ0 ≠ 0.

- Let B := {1}; P := {1}; r := 1; E = 〈yα〉α∈A;

- While s > 0 and B+ · B+ ⊂ E do

  - Compute ∂B = {m′1,…, m′l} and the projections ρi of the monomials m′i on 〈P〉;

  - Compute a (maximal) subset B′ = {m′i1,…, m′ik} of ∂B such that [〈ρij, ρij′〉σ]1≤j,j′≤k is invertible;

  - Compute an orthogonal family of polynomials {ps+1,…, ps+k} from {ρi1,…, ρik};

  - B := B ∪ B′, P := P ∪ {ps+1,…, ps+k}; r := r + k;

- If B+ · B+ ⊄ E then return failed.

Output: failed or success with

a set of monomials B = {m1,…, mr} connected to 1, and non-degenerate for 〈·, ·〉σ;

a set of polynomials P = {p1,…, pr} orthogonal for σ and such that 〈B〉 = 〈P〉;

the relations for the monomials in .

The above algorithm is a Gram–Schmidt-type orthogonalization method, where, at each step, new monomials are taken in ∂B and projected onto the space spanned by the previous monomial set B. Notice that if the polynomials pi are of degree at most d′ < d, then only the moments of σ of degree ≤ 2d′ + 1 are involved in this computation.
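To make the orthogonalization concrete, here is a minimal sketch restricted to one variable and to a positive semidefinite moment sequence, so that B = {1, x, x², …} and the process stops as soon as the projected monomial has zero norm. It is a simplification of Algorithm 1, not the multivariate procedure itself; polynomials are stored as coefficient lists, and the moments of σ = e1 + e2 are assumed test data.

```python
from fractions import Fraction

def inner(p, q, sigma):
    """<p, q>_sigma = <sigma | p q> for coefficient lists p, q and moments sigma."""
    return sum(a * b * sigma[i + j] for i, a in enumerate(p) for j, b in enumerate(q))

def orthogonal_basis(sigma):
    """Return (P, rho): an orthogonal family P and the first projected monomial rho
    with <rho, rho>_sigma = 0, or (P, None) if the given moments run out first."""
    P = []
    degree = 0
    while 2 * degree < len(sigma):
        mono = [Fraction(0)] * degree + [Fraction(1)]      # the monomial x^degree
        rho = mono[:]
        for p in P:                                        # Gram-Schmidt projection
            c = inner(mono, p, sigma) / inner(p, p, sigma)
            rho = [r - c * (p[i] if i < len(p) else 0) for i, r in enumerate(rho)]
        if inner(rho, rho, sigma) == 0:
            return P, rho
        P.append(rho)
        degree += 1
    return P, None

# Moments sigma_k = 1 + 2^k of sigma = e_1 + e_2, for k = 0..4 (assumed example).
sigma = [Fraction(1 + 2**k) for k in range(5)]
P, rho = orthogonal_basis(sigma)
print(len(P), rho)
```

The sketch finds two orthogonal polynomials, 1 and x − 3/2, and the relation ρ = x² − 3x + 2, whose roots 1 and 2 are the two atoms.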

Proposition 3.8

If Algorithm 1 outputs with success a set B = {m1,…, mr} and the relations ρi, i = 1,…, l, for the monomials m′i in ∂B, then σ coincides on 〈B+ · B+〉 with the series σ̃ such that

- rank Hσ̃ = r;

- B and P are bases of 𝒜σ̃ for the inner product 〈·, ·〉σ̃;

- The ideal Iσ̃ = ker Hσ̃ is generated by (ρi)i=1,…,l;

- The matrix of multiplication by xk in the basis P of 𝒜σ̃ is $\left[\frac{\langle\sigma|x_k p_i p_j\rangle}{\langle\sigma|p_j^2\rangle}\right]_{1\le j,i\le r}$ (row j, column i).

Proof

By construction, B is connected to 1. A basis B′ of 〈B+〉 is formed by the elements of B and the polynomials ρi, i = 1,…, l. Since Algorithm 1 stops with success, we have ∀i, j ∈ [1, l], ∀b ∈ 〈B〉, 〈ρi, b〉σ = 〈ρi, ρj〉σ = 0 and . As 〈B+〉 = 〈B〉 ⊕ 〈ρ1,…, ρl〉, and is a flat extension of . By construction, P is an orthogonal basis of 〈B〉 and the matrix of in this basis is diagonal with non-zero entries on the diagonal. Thus is of rank r.

By Theorem 3.2, σ coincides on 〈B+ ·B+〉 with a series σ̃ ∈ R* such that B is a basis of 𝒜σ̄ = R/Iσ̃ and .

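As 〈B+〉 = 〈B〉 ⊕ 〈ρ1,…, ρl〉 = 〈P 〉 ⊕〈ρ1,…, ρl〉 and P is an orthogonal basis of 𝒜σ̃, which is orthogonal to 〈ρ1,…, ρl〉, we have

$$x_k\, p_i = \sum_{j=1}^{r} \frac{\langle\sigma|x_k p_i p_j\rangle}{\langle\sigma|p_j^2\rangle}\, p_j + \rho$$

with ρ ∈ 〈ρ1,…, ρl〉. This shows that the matrix of the multiplication by xk modulo Iσ̃ = (ρ1,…, ρl), in the basis P = {p1,…, pr}, is $\left[\frac{\langle\sigma|x_k p_i p_j\rangle}{\langle\sigma|p_j^2\rangle}\right]_{1\le j,i\le r}$.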

Remark 3.9

It can be shown that the polynomials (ρi)i=1,…,l are a border basis of Iσ̃ for the basis B [23,6,27,28].

Remark 3.10

If $H_\sigma^{B,B} \succeq 0$, then by Proposition 2.2, the common roots ζ1,…, ζr of the polynomials ρ1,…, ρl are simple and real (ζj ∈ ℝn). They are the cubature points:

$$\tilde\sigma = \sum_{j=1}^{r} w_j\, e_{\zeta_j}$$

with wj > 0.

4. The cubature formula from the moment matrix

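We now describe how to recover the cubature formula from the moment matrix $[H_\sigma^{B^+,B'^+}]$. We assume that the flat extension condition is satisfied:

$$\operatorname{rank} H_\sigma^{B^+,B'^+} = \operatorname{rank} H_\sigma^{B,B'} = |B| = |B'| \tag{5}$$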

Theorem 4.1

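Let B and B′ be monomial subsets of R of size r connected to 1 and σ ∈ 〈B+ · B′+〉*. Suppose that $\operatorname{rank} H_\sigma^{B^+,B'^+} = \operatorname{rank} H_\sigma^{B,B'} = r$. Let $M_i = [H_\sigma^{B,B'}]^{-1}\, [H_{x_i\star\sigma}^{B,B'}]$ and $M'_i = \left([H_{x_i\star\sigma}^{B,B'}]\, [H_\sigma^{B,B'}]^{-1}\right)^t$. Then,

- B and B′ are bases of 𝒜σ̃ = R/ker Hσ̃,

- Mi (resp. M′i) is the matrix of multiplication by xi in the basis B (resp. B′) of 𝒜σ̃.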

Proof

By the flat extension Theorem 3.2, there exists σ̄ ∈ R* such that Hσ̃ is a flat extension of of rank r = |B| = |B′| and . As R = 〈B〉 ⊕ ker Hσ̄ and rank Hσ̃ = r, 𝒜σ̃ = R/ker Hσ̄ is of dimension r and generated by B. Thus B is a basis of 𝒜σ̃. A similar argument shows that B′ is also a basis of 𝒜σ̃. We denote by π : 𝒜σ̃ → 〈B〉 and π′ : 𝒜σ̃ → 〈B′〉 the isomorphisms associated to these bases representations.

The matrix [ ] is the matrix of the Hankel operator

in the basis B and the dual basis of B′. Similarly, [ ] is the matrix of

in the same bases. As xi ★ σ̄ = σ̄ ○ Mi where Mi : 𝒜σ̃ → 𝒜σ̃ is the multiplication by xi in 𝒜σ̃, we deduce that H̄xi★σ̄ = H̄σ̄ ○ Mi and is the matrix of multiplication by xi in the basis B of 𝒜σ̃. By exchanging the role of B and B′ and by transposition ( ), we obtain that is the transpose of the matrix of multiplication by xi in the basis B′ of 𝒜σ̃.

Theorem 4.2

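Let B be a monomial subset of R of size r connected to 1 and σ ∈ 〈B+ · B+〉*. Suppose that $\operatorname{rank} H_\sigma^{B^+,B^+} = \operatorname{rank} H_\sigma^{B,B} = r$ and that $H_\sigma^{B,B} \succeq 0$. Let $M_i = [H_\sigma^{B,B}]^{-1}\, [H_{x_i\star\sigma}^{B,B}]$. Then σ can be decomposed as

$$\sigma = \sum_{j=1}^{r} w_j\, e_{\zeta_j}$$

with wj > 0 and ζj ∈ ℝn such that Mi have r common linearly independent eigenvectors uj, j = 1, …, r and

- $\zeta_{j,i} = \dfrac{\langle\sigma|x_i u_j\rangle}{\langle\sigma|u_j\rangle}$ for 1 ≤ i ≤ n, 1 ≤ j ≤ r;

- $w_j = \dfrac{\langle\sigma|u_j\rangle}{u_j(\zeta_j)}$.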

Proof

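By Theorem 4.1, the matrix Mi is the matrix of multiplication by xi in the basis B of 𝒜σ̃. As $H_\sigma^{B,B} \succeq 0$, the flat extension Theorem 3.2 implies that Hσ̃ ≽ 0 and that

$$\tilde\sigma = \sum_{j=1}^{r} w_j\, e_{\zeta_j}$$

where wj > 0 and ζj ∈ ℝn are the simple roots of the ideal ker Hσ̃. Thus the commuting operators Mi are diagonalizable in a common basis of eigenvectors ui, i = 1, …, r, which are scalar multiples of the interpolation polynomials at the roots ζ1, …, ζr: ui(ζi) = λi ≠ 0 and ui(ζj) = 0 if j ≠ i (see [15, Chap. 4] or [10]). We deduce that

$$\langle\sigma|u_i\rangle = w_i\, \lambda_i, \qquad \langle\sigma|x_k u_i\rangle = w_i\, \zeta_{i,k}\, \lambda_i,$$

so that $\zeta_{i,k} = \langle\sigma|x_k u_i\rangle / \langle\sigma|u_i\rangle$. As ui(ζi) = λi, we have $w_i = \langle\sigma|u_i\rangle / u_i(\zeta_i)$.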

Algorithm 2

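Input: B is a set of monomials connected to 1, σ ∈ 〈 B+ · B+〉* such that $H_\sigma^{B^+,B^+}$ is a flat extension of $H_\sigma^{B,B}$ of rank |B|.

- Compute an orthogonal basis {p1, …, pr} of 〈B〉 for σ;

- Compute the matrices $M_k = \left[\frac{\langle\sigma|x_k p_i p_j\rangle}{\langle\sigma|p_j^2\rangle}\right]_{1\le j,i\le r}$ for k = 1, …, n;

- Compute their common eigenvectors u1, …, ur.

Output: For j = 1, …, r,

- $\zeta_j = \left(\dfrac{\langle\sigma|x_1 u_j\rangle}{\langle\sigma|u_j\rangle}, \dots, \dfrac{\langle\sigma|x_n u_j\rangle}{\langle\sigma|u_j\rangle}\right)$;

- $w_j = \dfrac{\langle\sigma|u_j\rangle}{u_j(\zeta_j)}$.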

Remark 4.3

Since the matrices Mk commute and are diagonalizable with the same basis, their common eigenvectors can be obtained by computing the eigenvectors of a generic linear combination l1M1 + ··· + lnMn, li ∈ ℝ.
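The recovery of points and weights can be traced on a univariate example, where there is a single multiplication matrix and no generic combination is needed. The sketch below assumes B = {1, x} and the moments of σ = e1 + e2. It computes M = [H^{B,B}]^{-1}[H^{B,B} of x★σ], reads the points as its eigenvalues, and then, as a swapped-in shortcut rather than the eigenvector formula of Theorem 4.2, obtains the weights by solving the first two moment equations.

```python
from fractions import Fraction
from math import sqrt

def decompose(sigma):
    """sigma: moments sigma_0..sigma_3 of a 2-atomic univariate form, with B = {1, x}."""
    s0, s1, s2, s3 = (Fraction(s) for s in sigma[:4])
    det = s0 * s2 - s1 * s1                      # determinant of H^{B,B}, assumed nonzero
    inv = [[s2 / det, -s1 / det], [-s1 / det, s0 / det]]
    Hx = [[s1, s2], [s2, s3]]                    # Hankel matrix of x * sigma on B
    M = [[sum(inv[i][k] * Hx[k][j] for k in range(2)) for j in range(2)]
         for i in range(2)]
    # Eigenvalues of the 2 x 2 matrix M from its trace and determinant.
    tr = M[0][0] + M[1][1]
    dt = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    disc = sqrt(float(tr * tr - 4 * dt))
    z1, z2 = (float(tr) - disc) / 2, (float(tr) + disc) / 2
    # Weights from w1 + w2 = sigma_0 and w1 z1 + w2 z2 = sigma_1.
    w2 = (float(s1) - float(s0) * z1) / (z2 - z1)
    w1 = float(s0) - w2
    return M, [z1, z2], [w1, w2]

M, points, weights = decompose([2, 3, 5, 9])     # moments of e_1 + e_2 (assumed example)
print(M, points, weights)
```

The matrix M is the companion matrix of x² − 3x + 2, so its eigenvalues are the points 1 and 2, and both weights equal 1.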

5. Cubature formula by convex optimization

As described in the previous section, the computation of cubature formulae reduces to a low rank Hankel matrix completion problem, using the flat extension property. In this section, we describe a new approach which relaxes this problem into a convex optimization problem.

Let V ⊂ R be a vector space spanned by monomials xα for α ∈ A ⊂ ℕn. Our aim is to construct a cubature formula for an integral function I exact on V. Let i = (I[xα])α∈A be the sequence of moments given by the integral I. We also denote i ∈ V * the associated linear form such that ∀v ∈ V, 〈i | v〉 = I[v].

For k ∈ ℕ, we denote by

the set of positive semi-definite Hankel operators on Rk associated to moment sequences which extend i. We can easily check that ℋk(i) is a convex set. We also consider the subset of elements of ℋk(i) of rank ≤ r.

A subset of is the set of Hankel operators associated to cubature formulae of r points:

We can check that is also a convex set.

To impose the cubature points to be in a semialgebraic set 𝒮 defined by equality and inequalities , one can refine the space of ℋk(i) by imposing that σ is positive on the quadratic module (resp. preordering) associated to the constraints [19]. For the sake of simplicity, we don’t analyze this case here, which can be done in a similar way.

The Hankel operator associated to a cubature formula of r points is an element of ℋk(i) of rank r. In order to find a cubature formula of minimal rank, we would like to compute a minimizer solution of the following optimization problem:

$$\min_{H \in \mathcal{H}_k(i)} \operatorname{rank}(H)$$

However this problem is NP-hard [16]. We therefore relax it into the minimization of the nuclear norm of the Hankel operators, i.e. the minimization of the sum of the singular values of the Hankel matrix [37]. More precisely, for a generic matrix P ∈ ℝsk × sk, we consider the following minimization problem:

$$\min_{H \in \mathcal{H}_k(i)} \operatorname{trace}(P^t H P) \tag{6}$$

Let (A, B) ∈ ℝsk × sk × ℝsk × sk ↦ 〈A, B〉 = trace(AB) denote the inner product induced by the trace on the space of sk × sk symmetric matrices. The optimization problem (6) requires minimizing the linear form H ↦ trace(PtHP) = trace(HPPt) = 〈H, PPt〉 on the convex set ℋk(i). As the trace of PtHP is bounded below by 0 when H ≽ 0, our optimization problem (6) has a non-negative minimum.
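The identities behind this objective can be verified exactly on a small instance. The sketch below assumes a univariate 3-atomic measure truncated on R2 and an arbitrary integer matrix P. It checks that trace(PtHP) = trace(HPPt) and that both equal 〈σ|q〉, where q is the sum of the squares of the polynomials pi read from the columns of P, which is why the objective is non-negative. All data and helper names are illustrative.

```python
from fractions import Fraction

def evaluate(coeffs, x):
    """Value at x of the polynomial with coefficient list coeffs (low degree first)."""
    return sum(c * x**i for i, c in enumerate(coeffs))

# Assumed example: sigma = 2 e_{-1} + 1 e_{1/2} + (1/3) e_{3}, truncated on R_k with k = 2.
points = [Fraction(-1), Fraction(1, 2), Fraction(3)]
weights = [Fraction(2), Fraction(1), Fraction(1, 3)]
size = 3                                             # s_k = dim R_2 in one variable
H = [[sum(w * z**(i + j) for z, w in zip(points, weights)) for j in range(size)]
     for i in range(size)]
P = [[1, 2, 0], [0, 1, -1], [3, 0, 1]]               # arbitrary matrix; column i gives p_i

idx = range(size)
trace_PtHP = sum(P[a][i] * H[a][b] * P[b][i] for i in idx for a in idx for b in idx)
PPt = [[sum(P[a][i] * P[b][i] for i in idx) for b in idx] for a in idx]
trace_HPPt = sum(H[a][b] * PPt[b][a] for a in idx for b in idx)
# <sigma | q> with q = sum_i p_i^2 and p_i(x) = sum_a P[a][i] x^a.
sigma_q = sum(w * sum(evaluate([P[a][i] for a in idx], z) ** 2 for i in idx)
              for z, w in zip(points, weights))
print(trace_PtHP, trace_HPPt, sigma_q)
```

Since σ has positive weights, 〈σ|q〉 is a positive combination of squares, which gives the lower bound 0 on the objective.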

Problem (6) is a Semi-Definite Program (SDP), which can be solved efficiently by interior point methods. See [31]. SDP is an important ingredient of relaxation techniques in polynomial optimization. See [19,22].

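Let Σk be the set of polynomials of degree ≤ 2k which are sums of squares, let x(k) be the vector of all monomials in x of degree ≤ k and let q(x) = (x(k))tPPt x(k) ∈ Σk. Let pi(x) (1 ≤ i ≤ sk) denote the polynomial 〈Pi, x(k)〉 associated to the column Pi of P. We have $q(x) = \sum_{i=1}^{s_k} p_i(x)^2$ and for any σ ∈ (R2k)*, $\operatorname{trace}(P^t H_\sigma P) = \sum_{i=1}^{s_k} \langle\sigma|p_i^2\rangle = \langle\sigma|q\rangle$.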

For any l ∈ ℕ, we denote by πl: Rl → Rl the linear map which associates to a polynomial p ∈ Rl its homogeneous component of degree l. We say that P is a proper matrix if π2k(q)(x) ≠ 0 for all x ∈ ℝn \ {0}.

We are thus looking for cubature formulae with a small number of points, which correspond to Hankel operators with small rank. The next result describes the structure of truncated Hankel operators, when the degree of truncation is high enough, compared to the rank.

Theorem 5.1

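Let σ ∈ (R2k)* and let Hσ be its truncated Hankel operator on Rk. If Hσ ≽ 0 and Hσ is of rank r ≤ k, then

$$\sigma \equiv \sum_{i=1}^{r'} \omega_i\, e_{\zeta_i} + \sum_{i=r'+1}^{r} \omega_i\, e_{\zeta_i} \circ \pi_{2k}$$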

with ωi > 0 and ζi ∈ ℝn distinct for i = 1, …, r.

Proof

The substitution τ0: S[2k] → R2k which replaces x0 by 1 is an isomorphism of 𝕂-vector spaces. Let be the pull-back map on the . Let be the linear form induced by σ on S[2k] and let be the corresponding truncated operator on S[k]. The kernel K̄ of Hσ̄ is the vector space spanned by the homogenization in x0 of the elements of the kernel K of Hσ.

Let ≽ be the lexicographic ordering such that x0 ≽ ··· ≽ xn. By [14, Theorem 15.20, p. 351], after a generic change of coordinates, the initial J of the homogeneous ideal (K̄) ⊂ S is Borel fixed. That is, if xixα ∈ J, then xjxα ∈ J for j > i. Let B̄ be the set of monomials of degree k, which are not in J. As J is Borel fixed and different from S[2k], . Similarly we check that if with α1 = ··· = αl−1 = 0, then . This shows that B = τ0(B̄) is connected to 1.

As 〈 B̄ 〉 ⊕〈J〉 = 〈 B̄ 〉 ⊕ K̄ = S[k] where K̄ = ker Hσ̄, we have |B| = r. As B is connected to 1, deg(B) < r ≤ k and B+ ⊂ Rk.

By the substitution x0 = 1, we have Rk = 〈B〉 ⊕ K with K = ker Hσ. Therefore, Hσ is a flat extension of . By the flat extension Theorem 3.2, there exist λi > 0, ζ̄i = (ζi,0, ζi,1, …, ζi,n) ∈ ℝn+1 distinct for i = 1, …, r such that

| (7) |

Notice that for any λ ≠ 0, $e_{\bar\zeta_i} = \lambda^{-2k}\, e_{\lambda\bar\zeta_i}$ on S[2k].

By an inverse change of coordinates, the points ζ̄i of (7) are transformed into some points ζ̄i = (ζi,0, ζi,1, …, ζi,n) ∈ 𝕂n+1 such that ζi,0 ≠ 0 (say for i = 1, …, r′) and the remaining r − r′ points with ζi,0 = 0. The image by of with ζi,0 ≠ 0 is

where . The image by of with , which vanishes on all the monomials xα with |α| < 2k, since their homogenization in degree 2k is and their evaluation at ζ̄i = (0, ζi,1, …, ζi,n) gives 0. The value of at xα with |α| = 2k, where ζi = (ζi,1,…, ζi,n). We deduce that

By dehomogenization, we have $\zeta_i = \frac{1}{\zeta_{i,0}}(\zeta_{i,1}, \dots, \zeta_{i,n}) \in \mathbb{R}^n$ for i = 1, …, r′ and ζi = (ζi,1, …, ζi,n) ∈ ℝn for i = r′ + 1, …, r.

We exploit this structure theorem to show that if the truncation order is sufficiently high, a minimizer of (6) corresponds to a cubature formula.

Theorem 5.2

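Let P be a proper operator and k > deg(V)/2. Assume that there exists σ* ∈ (R2k)* such that Hσ* is a minimizer of (6) of rank r with r ≤ k. Then Hσ* is the Hankel operator of a cubature formula with r points, i.e. there exist ωi > 0 and ζi ∈ ℝn such that

$$\sigma^* \equiv \sum_{i=1}^{r} \omega_i\, e_{\zeta_i}.$$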

Proof

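By Theorem 5.1,

$$\sigma^* \equiv \sum_{i=1}^{r'} \omega_i\, e_{\zeta_i} + \sum_{i=r'+1}^{r} \omega_i\, e_{\zeta_i} \circ \pi_{2k}$$

with ωi > 0 and ζi ∈ ℝn for i = 1, …, r.

Let us suppose that r ≠ r′. As k > deg(V)/2, the elements of V are of degree < 2k, therefore σ* and $\sigma' = \sum_{i=1}^{r'} \omega_i\, e_{\zeta_i}$ coincide on V and Hσ′ ∈ ℋk(i). We have the decomposition

$$\operatorname{trace}(P^t H_{\sigma^*} P) = \langle\sigma^*|q\rangle = \operatorname{trace}(P^t H_{\sigma'} P) + \sum_{i=r'+1}^{r} \omega_i\, \pi_{2k}(q)(\zeta_i).$$

The homogeneous component of highest degree π2k(q) of $q = \sum_{i=1}^{s_k} p_i^2$ is the sum of the squares of the degree-k components of the pi:

$$\pi_{2k}(q) = \sum_{i=1}^{s_k} \pi_k(p_i)^2,$$

so that π2k(q)(ζi) ≥ 0. As trace(PtHσ*P) is minimal, we must have $\sum_{i=r'+1}^{r} \omega_i\, \pi_{2k}(q)(\zeta_i) = 0$, which implies that π2k(q)(ζi) = 0 for i = r′ + 1, …, r. However, this is impossible, since P is proper. We thus deduce that r′ = r, which concludes the proof of the theorem.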

This theorem shows that an optimal solution of the minimization problem (6) of small rank (r ≤ k) yields a cubature formula, which is exact on V. Among such minimizers, we have those of minimal rank as shown in the next proposition.

Proposition 5.3

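Let k > deg(V)/2 and H be an element of ℋk(i) with minimal rank r. If k ≥ r, then H is the Hankel operator of a cubature formula with r points, and it is either an extremal point of ℋk(i) or on a face of ℋk(i) which is included in the set of Hankel operators associated to cubature formulae of r points.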

Proof

Let be of minimal rank r.

By Theorem 5.1, with ωi > 0 and ζi ∈ ℝn for i = 1, …, r. The elements of V are of degree < 2k, therefore σ and coincide on V. We deduce that Hσ′ ∈ ℋk(i).

As rank Hσ′ = r′ ≤ r and Hσ ∈ ℋk(i) is of minimal rank r, r = r′ and .

Let us assume that Hσ is not an extremal point of ℋk(i). Then it is in the relative interior of a face F of ℋk(i). For any Hσ1 in a sufficiently small ball of F around Hσ, there exist t ∈ ]0, 1[ and Hσ2 ∈ F such that

$$H_\sigma = t\, H_{\sigma_1} + (1 - t)\, H_{\sigma_2}.$$

The kernel of Hσ is the set of polynomials p ∈ Rk such that

$$H_\sigma(p, p) = t\, H_{\sigma_1}(p, p) + (1 - t)\, H_{\sigma_2}(p, p) = 0.$$

As Hσi ≽ 0, we have Hσi (p, p) = 0 for i = 1, 2. This implies that ker Hσ ⊂ ker Hσi, for i = 1, 2. From the inclusion ker Hσ1 ∩ ker Hσ2 ⊂ ker Hσ, we deduce that

$$\ker H_\sigma = \ker H_{\sigma_1} \cap \ker H_{\sigma_2}.$$

As Hσ is of minimal rank r, we have dim ker Hσ ≥ dim ker Hσi. This implies that ker Hσ = ker Hσ1 = ker Hσ2.

As r ≤ k, 𝒜σ has a monomial basis B (connected to 1) in degree < k and Rk = 〈B〉 ⊕ ker Hσ. Consequently, Hσ (resp. Hσi) is a flat extension of and we have the decomposition

with ωi,j > 0, i = 1,…, r, j = 1, 2. We deduce that and all the elements of the line (Hσ, Hσ1) which are in F are also in . Since F is convex, we deduce that .

Remark 5.4

A cubature formula is interpolatory when the weights are uniquely determined from the points. From the previous theorem and proposition, we see that if a cubature formula is of minimal rank and interpolatory, then it is an extremal point of ℋk(i).

According to the previous proposition, by minimizing the nuclear norm of a random matrix, we expect to find an element of minimal rank in one of the faces of ℋk(i), provided k is big enough. This yields the following simple algorithm, which solves the SDP problem, and checks the flat extension property using Algorithm 1. Furthermore it computes the decomposition using Algorithm 2 or increases the degree if there is no flat extension:

Algorithm 3

- k := ⌈deg(V)/2⌉; notflat := true; P := random sk × sk matrix;

- While (notflat) do

  - Let σ be a solution of the SDP problem: minH∈ℋk(i) trace(PtHP);

  - If Hσ is not a flat extension, then k := k + 1; else notflat := false;

- Compute the decomposition $\sigma = \sum_{i=1}^{r} \omega_i\, e_{\zeta_i}$, ωi > 0, ζi ∈ ℝn.

6. Examples

We now illustrate our cubature method on a few explicit examples.

Example 6.1 (Cubature on a square)

Our first application is a well known case, namely, the square domain Ω = [−1, 1] × [−1, 1]. We solve the SDP problem (6), with a random matrix P and with no constraint on the support of the points. In the following table, we give the degree of the cubature formula (i.e. the degree of the polynomials for which the cubature formula is exact), the number N of cubature points, the coordinates of the cubature points and the associated weights.

| Degree | N | Points | Weights |
|---|---|---|---|
| 3 | 4 | ±(0.46503, 0.464462) | 1.545 |
| | | ±(0.855875, −0.855943) | 0.454996 |
| 5 | 7 | ±(0.673625, 0.692362) | 0.595115 |
| | | ±(0.40546, −0.878538) | 0.43343 |
| | | ±(−0.901706, 0.340618) | 0.3993 |
| | | (0, 0) | 1.14305 |
| 7 | 12 | ±(0.757951, 0.778815) | 0.304141 |
| | | ±(0.902107, 0.0795967) | 0.203806 |
| | | ±(0.04182, 0.9432) | 0.194607 |
| | | ±(0.36885, 0.19394) | 0.756312 |
| | | ±(0.875533, −0.873448) | 0.0363 |
| | | ±(0.589325, −0.54688) | 0.50478 |

The cubature points are symmetric with respect to the origin (0, 0). The computed cubature formula involves the minimal number of points, which all lie in the domain Ω.
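The degree-3 row of the table can be checked directly against the exact moments of the square. The sketch below assumes weight w = 1 on Ω = [−1, 1]² and uses the rounded values printed in the table, so exactness can only be expected up to the rounding of those digits; the tolerance and the helper names are assumptions of this sketch.

```python
def exact_moment(a, b):
    """Integral of x^a * y^b over [-1, 1]^2 with weight w = 1."""
    def one_dim(k):
        return 0.0 if k % 2 else 2.0 / (k + 1)
    return one_dim(a) * one_dim(b)

# Degree-3 formula from the table: each listed point stands for the pair +/- point.
half_nodes = [((0.46503, 0.464462), 1.545), ((0.855875, -0.855943), 0.454996)]
nodes = [((s * x, s * y), w) for (x, y), w in half_nodes for s in (1, -1)]

def max_error(nodes, degree):
    """Largest moment error over all monomials x^a y^b with a + b <= degree."""
    return max(abs(sum(w * x**a * y**(d - a) for (x, y), w in nodes)
                   - exact_moment(a, d - a))
               for d in range(degree + 1) for a in range(d + 1))

print(max_error(nodes, 3))   # small: limited by the rounding of the tabulated values
print(max_error(nodes, 4))   # not small: the formula is not exact in degree 4
```

With the tabulated digits, the moments up to degree 3 are matched to within about 5·10⁻³, while the degree-4 moments are clearly not reproduced, in agreement with the stated degree.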

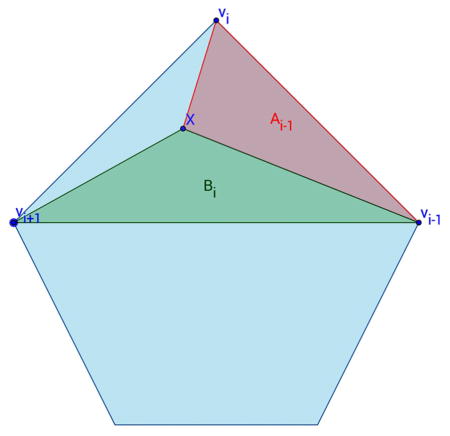

Example 6.2 (Barycentric Wachspress coordinates on a pentagon)

Here we consider the pentagon C of vertices v1 = (0, 1), v2 = (1, 0), v3 = (−1, 0), v4 = (−0.5, −1), v5 = (0.5, −1).

To this pentagon, we associate (Wachspress) barycentric coordinates [43], which are defined as follows. The weight function associated to the vertex vi is defined as:

where Ai is the signed area of the triangle (x, vi−1, vi) and Bi is the area of (x, vi+1, vi−1). The coordinate function associated to vi is:

$$\lambda_i(x) = \frac{w_i(x)}{\sum_{j=1}^{5} w_j(x)}.$$

These coordinate functions satisfy:

λi(x) ≥ 0 for x ∈ C

$\sum_{i=1}^{5} \lambda_i(x) = 1$, $\sum_{i=1}^{5} \lambda_i(x)\, v_i = x$.

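For all polynomials p ∈ R = ℝ[u0, u1, u2, u3, u4], we consider

$$I[p] = \int_{C} p(\lambda_1(x), \dots, \lambda_5(x))\, dx.$$

We look for a cubature formula σ ∈ R* of the form:

$$\sigma = \sum_{i=1}^{r} w_i\, e_{\zeta_i} \tag{8}$$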

with wi > 0, ζi ∈ ℝ5, such that I[p] = 〈σ | p〉 for all polynomials p of degree ≤ 2.

The moment matrix associated to B = {1, u0, u1, u2, u3, u4} involves moments of degree ≤ 2:

Its rank is 5.

We compute $[H_\sigma^{B^+,B^+}]$. In this matrix, there are 105 unknown parameters. We solve the following SDP problem

| (9) |

which yields a solution with minimal rank 5. Since the rank of the solution matrix is the rank of $[H_\sigma^{B,B}]$, we do have a flat extension. Applying Algorithm 1, we find the orthogonal polynomials ρi, the matrices of the operators of multiplication by a variable, and their common eigenvectors, which give the following cubature points and weights:

| Points | Weights |
|---|---|
| (0.249888, −0.20028, 0.249993, 0.350146, 0.350193) | 0.485759 |
| (0.376647, 0.277438, −0.186609, 0.20327, 0.329016) | 0.498813 |
| (0.348358, 0.379898, 0.244967, −0.174627, 0.201363) | 0.509684 |
| (−0.18472, 0.277593, 0.376188, 0.329316, 0.201622) | 0.490663 |
| (0.242468, 0.379314, 0.348244, 0.200593, −0.170579) | 0.51508 |

Acknowledgments

The research of C. Bajaj was supported in part by grants from NSF (OCI-1216701737), NIH (R01-GM117594) and a contract (BD-4485) from Sandia National Labs.

Contributor Information

Marta Abril Bucero, Email: marta.abril_bucero@inria.fr.

Chandrajit Bajaj, Email: bajaj@cs.utexas.edu.

Bernard Mourrain, Email: bernard.mourrain@inria.fr.

References

- 1. Gillette AA, Rand A, Bajaj C. Error estimates for generalized barycentric coordinates. Adv Comput Math. 2012;37(3):417–439. doi: 10.1007/s10444-011-9218-z.

- 2. Abrams C, Bussi G. Enhanced sampling in molecular dynamics using metadynamics, replica-exchange and temperature acceleration. Entropy. 2014;16:163–199.

- 3. Bajaj C, Bauer B, Bettadapura R, Vollrath A. Non-uniform Fourier transforms for multi-dimensional rotational correlations. SIAM J Sci Comput. 2013;35(4):821–845. doi: 10.1137/120892386.

- 4. Bayer C, Teichmann J. The proof of Tchakaloff’s Theorem. Proc Amer Math Soc. 2006;134:3035–3040.

- 5. Bernardi A, Brachat J, Comon P, Mourrain B. General tensor decomposition, moment matrices and applications. J Symbolic Comput. 2013;52:51–71.

- 6. Brachat J, Comon P, Mourrain B, Tsigaridas E. Symmetric tensor decomposition. Linear Algebra Appl. 2010;433:1851–1872.

- 7. Patterson TNL, Morrow CR. Construction of algebraic cubature rules using polynomial ideal theory. SIAM J Numer Anal. 1978;15:953–976.

- 8. Caflisch R, Morokoff W, Owen A. Valuation of mortgage backed securities using Brownian bridges to reduce effective dimension. J Comput Finance. 1997;1:27–46.

- 9. Cools R. Constructing cubature formulae: the science behind the art. Acta Numer. 1997;6:1–54.

- 10. Cox D. Solving equations via algebra. In: Dickenstein A, Emiris IZ, editors. Solving Polynomial Equations: Foundations, Algorithms, and Applications. Algorithms Comput Math. Vol. 14. Springer; 2005. pp. 63–123.

- 11. Curto RE, Fialkow LA. Recursiveness, positivity, and truncated moment problems. Houston J Math. 1991;17(4):603–635.

- 12. Curto RE, Fialkow L. Flat extensions of positive moment matrices: recursively generated relations. Mem Amer Math Soc. 1998;136(648):1–64.

- 13. Curto RE, Fialkow L. The truncated complex k-moment problem. Trans Amer Math Soc. 2000;352:2825–2855.

- 14. Eisenbud D. Commutative Algebra with a View Toward Algebraic Geometry. Grad Texts in Math. Vol. 150. Springer-Verlag; Berlin: 1994.

- 15. Elkadi M, Mourrain B. Introduction à la résolution des systèmes d’équations algébriques. Math Appl. Vol. 59. Springer-Verlag; 2007.

- 16.Fazel M. PhD thesis. Stanford University; 2002. Matrix rank minimization with applications. [Google Scholar]

- 17.Gillette A, Bajaj C. Dual formulations of mixed finite element methods with applications. Comput Aided Design. 2011;43(10):1213–1221. doi: 10.1016/j.cad.2011.06.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Iohvidov IS. Hankel and Toeplitz Matrices and Forms: Algebraic Theory. Birkhäuser; Boston, MA: 1982. Translated from the Russian by G. Philip A. Thijsse. With an introduction by I. Gohberg. [Google Scholar]

- 19.Lasserre JB. Moments, Positive Polynomials and Their Applications. Imperial College Press; 2009. [Google Scholar]

- 20.Lasserre JB, Laurent M, Mourrain B, Rostalski P, Trébuchet P. Moment matrices, border bases and real radical computation. J Symbolic Comput. 2012:63–85. [Google Scholar]

- 21.Laurent M. Revisiting two theorems of Curto and Fialkow on moment matrices. Proc Amer Math Soc. 2005;133:2965–2976. [Google Scholar]

- 22.Laurent M. IMA Vol Math Appl. Vol. 149. Springer; 2009. Sums of Squares, Moment Matrices and Optimization over Polynomials; pp. 157–270. [Google Scholar]

- 23.Laurent M, Mourrain B. A sparse flat extension theorem for moment matrices. Arch Math. 2009;93:87–98. [Google Scholar]

- 24.Möller HM. Kubaturformeln mit minimaler knotenzahl. Numer Math. 1975/1976;25(2):185–200. [Google Scholar]

- 25.Möller HM. Lower bounds for the number of nodes in cubature formulae. In: Hämmerlin G, editor. Numerische Integration. Vol. 45. Birkhäuser; Basel: 1979. pp. 221–230. (International Series of Numerical Mathematics/Internationale Schriftenreihe zur Numerischen Mathematik/Série Internationale D’Analyse Numérique). [Google Scholar]

- 26.Möller HM. Lower bounds for the number of nodes in cubature formulae. Numerische Integration. 1979;45:221–230. Internat. Ser. Numer. Math. [Google Scholar]

- 27.Mourrain B. A new criterion for normal form algorithms. AAECC. 1999:430–443. [Google Scholar]

- 28.Mourrain B, Trébuchet P. Generalized normal forms and polynomials system solving. In: Kauers M, editor. ISSAC: Proceedings of the ACM SIGSAM International Symposium on Symbolic and Algebraic Computation; 2005; pp. 253–260. [Google Scholar]

- 29.Mysovskikh IP. A proof of minimality of the number of nodes of a cubature formula for a hypersphere. Z Vycisl Mat i Mat Fiz. 1966;6:621–630. (in Russian) [Google Scholar]

- 30.Mysovskikh IP. Interpolational Cubature Formulas. Nauka, Moscow: 1981. pp. 1–336. (in Russian) [Google Scholar]

- 31.Nesterov Y, Nemirovski A. Interior-Point Polynomial Algorithms in Convex Programming. SIAM; Philadelphia: 1994. [Google Scholar]

- 32.Ninomiya S, Tezuka S. Toward real time pricing of complex financial derivatives. Appl Math Finance. 1996;3:1–20. [Google Scholar]

- 33.Putinar M. A dilation theory approach to cubature formulas. Expo Math. 1997;15:183–192. [Google Scholar]

- 34.Radon J. Zur mechanischen kubatur. Monatsh Math. 1948;52:286–300. (in German) [Google Scholar]

- 35.Rand A, Gillette A, Bajaj C. Interpolation error estimates for mean value coordinates. Adv Comput Math. 2013;39:327–347. doi: 10.1007/s10444-012-9282-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Rand A, Gillette A, Bajaj C. Quadratic serendipity finite elements on polygons using generalized barycentric coordinates. Math Comp. 2014;83:2691–2716. doi: 10.1090/s0025-5718-2014-02807-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Recht B, Fazel M, Parrilo P. Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Rev. 2010;52(3):471–501. [Google Scholar]

- 38.Schmid HJ. Two-dimensional minimal cubature formulas and matrix equations. SIAM J Matrix Anal Appl. 1995;16:898–921. [Google Scholar]

- 39.Schmid HJ, Xu Y. On bivariate Gaussian cubature formulae. Proc Amer Math Soc. 1994;122:833–841. [Google Scholar]

- 40.Stroud AH. Quadrature methods for functions of more than one variable. Ann NY Acad Sci. 1960;86:776–791. [Google Scholar]

- 41.Stroud AH. Integration formulas and orthogonal polynomials. SIAM J Numer Anal. 1970;7:271–276. [Google Scholar]

- 42.Tchakaloff V. Formules de cubatures mécaniques à coefficients non négatifs. Bull Sci Math. 1957;81(2):123–134. [Google Scholar]

- 43.Wachpress E. A Rational Finite Element Basis. Academic Press; 1975. [Google Scholar]

- 44.Xu Y. Common zeros of polynomials in several variables and higher-dimensional quadrature. Pitman Res Notes Math Ser. 1994;312(312):119. [Google Scholar]

- 45.Xu Y. Cubature formulae and polynomial ideals. Adv in Appl Math. 1999;23:211–233. [Google Scholar]