Abstract

Cryo-electron microscopy nowadays often requires the analysis of hundreds of thousands of 2-D images as large as a few hundred pixels in each direction. Here, we introduce an algorithm that efficiently and accurately performs principal component analysis (PCA) for a large set of 2-D images, and, for each image, the set of its uniform rotations in the plane and their reflections. For a dataset consisting of n images of size L × L pixels, the computational complexity of our algorithm is O(nL³ + L⁴), while existing algorithms take O(nL⁴). The new algorithm computes the expansion coefficients of the images in a Fourier–Bessel basis efficiently using the nonuniform fast Fourier transform. We compare the accuracy and efficiency of the new algorithm with traditional PCA and existing algorithms for steerable PCA.

Index Terms: Steerable PCA, group invariance, non-uniform FFT, denoising

I. Introduction

Principal component analysis (PCA) is widely used in image analysis and pattern recognition for dimensionality reduction and denoising. In particular, PCA is often one of the first steps [1] in the algorithmic pipeline of cryo-electron microscopy (cryo-EM) single particle reconstruction (SPR) [2] to compress and denoise the acquired 2D projection images in order to eventually determine the 3D structure of a macromolecule. The high level of noise in those images drastically deteriorates the performance of single-image based denoising methods, such as non-local means [3] and wavelet thresholding [4], and so the latter are outperformed by PCA. As any planar rotation of any given projection image is equally likely to appear in the experiment, by either in-plane rotating the detector or the specimen, it makes sense to include all possible rotations of the projection images when performing PCA. The resulting decomposition, termed steerable PCA, consists of principal components which are tensor products of radial functions and angular Fourier modes [5], [6], [7], [8], [9]. Beyond cryo-EM, steerable PCA has many other applications in image analysis and computer vision [10].

The term “steerable PCA” comes from the fact that rotating the principal components is achieved by a simple phase shift of their angular part. The principal components are invariant to any in-plane rotation of the images; therefore, finding steerable principal components is equivalent to finding in-plane rotationally invariant principal components.

In cryo-EM data processing, in addition to compression and denoising, steerable PCA is also useful in generating rotationally invariant image features (i.e. bispectrum-like features [11]). These are crucial for fast rotationally invariant nearest neighbors search used in efficient computation of class averages [11]. Rotational alignment between image pairs can also be computed more efficiently using the expansion coefficients in a steerable basis.

In this letter, we focus on the action of the group O(2) on digital images by in-plane rotating and possibly reflecting them. The idea of using group actions for constructing group invariant features and filters has been previously proposed in [12], [13]. This group theoretical framework has been applied to SO(3) and SU(1, 1) in [14], [15]. The representation of finite groups, such as the dihedral groups, has been used for computing the Karhunen-Loève expansion of digital images in [16].

Various efficient algorithms for steerable PCA have been introduced [17], [8]. However, steerable PCA of modern cryo-EM datasets that contain hundreds of thousands of large images poses a computational challenge. Also, it is important to ensure that the steerable PCA algorithm is numerically accurate when the input images are noisy. In order to exploit the special separation of variables structure of the principal components in polar coordinates, most algorithms rely on resampling the images on a polar grid. However, the transformation from Cartesian to polar is non-unitary, and thus changes the statistics of the noise. In particular, resampling transforms uncorrelated white noise to colored noise that may lead to spurious principal components.

Recently, [9] addressed this issue by incorporating a sampling criterion into the steerable PCA framework and introduced an algorithm called Fourier-Bessel steerable PCA (FBsPCA). FBsPCA assumes that the underlying clean images (before being possibly contaminated with noise) are bandlimited and essentially compactly supported in a disk. This assumption holds, for example, for 2D projection images of a 3D molecule compactly supported in a ball. It also implies that the images can be expanded in an orthogonal basis for bandlimited functions, such as the Fourier-Bessel basis. In FBsPCA, the Fourier-Bessel expansion of each image is truncated into a finite series using a sampling criterion that was introduced by Klug and Crowther [18]. The sampling criterion ensures that the transformation from the Cartesian grid to the truncated Fourier-Bessel expansion is nearly unitary. Moreover, the covariance matrix built from the expansion coefficients of the images and all their possible rotations has a block diagonal structure where the block size decreases as a function of the angular frequency. The computational complexity of FBsPCA is O(nL⁴) operations for n images of size L × L. Notice that, when n > L², the computational complexity of traditional PCA is O(nL⁴ + L⁶), where the first term corresponds to forming the L² × L² covariance matrix and the second term corresponds to its eigen-decomposition. Although FBsPCA and PCA have a similar computational complexity, FBsPCA leads to better denoising as it takes into account all possible rotations and reflections. This makes FBsPCA more suitable than traditional PCA as a tool for 2D analysis of cryo-EM images [9]. With the enhancement of electron microscope detectors’ resolution, a typical image size of a single particle can easily be over 300 × 300 pixels. Thus, FBsPCA is still not efficient enough to analyze a large number of images of large size (i.e. large n and large L). The bottleneck for this algorithm is the first step that computes the Fourier-Bessel expansion coefficients, whose computational complexity is O(nL⁴).

In this letter we introduce a fast Fourier-Bessel steerable PCA (FFBsPCA) that reduces the computational complexity for FBsPCA from O(nL⁴) to O(nL³) by computing the Fourier-Bessel expansion coefficients more efficiently and accurately. This is achieved by first mapping the images from their Cartesian grid representation to a polar grid representation in the reciprocal (Fourier) domain using the non-uniform fast Fourier transform (NUFFT) [19], [20], [21], [22]. The polar grid representation enables efficient evaluation of the Fourier-Bessel expansion coefficients of the images by 1D FFT on concentric circles, followed by accurate evaluation of a radial integral with a Gaussian quadrature rule. The overall complexity of computing the Fourier-Bessel coefficients is reduced to O(nL³) operations. The increased accuracy and efficiency in evaluating the Fourier-Bessel expansion coefficients are the main contributions of this letter.

We note that the Fourier-Bessel expansion coefficients can be computed in O(nL² log L) operations using algorithms for rapid evaluation of special functions [23] or a fast analysis-based Fourier-Bessel expansion [24]. However, such “fast” algorithms may only lead to a marginal improvement for two reasons. First, the break-even point for them compared to the direct approach is for relatively large L such as L = 256 or larger. Second, forming the covariance matrix from the expansion coefficients still requires O(nL³) operations.

The letter is organized as follows: Section II contains the mathematical preliminaries of the Fourier-Bessel expansion, the sampling criterion, and the numerical evaluation of the expansion coefficients. The computation of the steerable principal components is described in Section III. We present the algorithm and give a detailed computational complexity analysis in Section IV. Various numerical examples concerning the computation time of FFBsPCA compared with FBsPCA and traditional PCA are presented in Section V. In the same section, we demonstrate the performance of FFBsPCA-based denoising using simulated cryo-EM projection images.

Reproducible research: The FFBsPCA is available in the SPR toolbox ASPIRE (http://spr.math.princeton.edu/). There are two main functions: FBCoeff computes the Fourier-Bessel expansion coefficients and sPCA computes the steerable PCA basis and the associated expansion coefficients.

II. Fourier-Bessel Expansion of Bandlimited Images

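We say that f has a band limit radius c if its Fourier transform

$$\mathcal{F}(f)(\xi_1, \xi_2) = \int_{\mathbb{R}^2} f(x_1, x_2)\, e^{-2\pi \imath (x_1 \xi_1 + x_2 \xi_2)}\, dx_1\, dx_2 \tag{1}$$

satisfies ℱ(f)(ξ1, ξ2) = 0 for ξ1² + ξ2² > c². In our setup, a digital image I is obtained by sampling a square-integrable bandlimited function f on a Cartesian grid of size L × L, that is, I(i1, i2) = f(i1Δ, i2Δ), where i1 and i2 index the grid points and Δ is the pixel size.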

For pixel size Δ = 1, the Nyquist-Shannon sampling theorem implies that the Fourier transform of I is supported on the square [−1/2, 1/2) × [−1/2, 1/2). In many applications, the support size is effectively smaller due to other experimental considerations, for example, the exponentially decaying envelope of the contrast transfer function in electron microscopy. Thus, we assume that the band limit radius of all images is 0 < c ≤ 1/2. The scaled Fourier-Bessel functions are the eigenfunctions of the Laplacian in a disk of radius c with Dirichlet boundary condition and they are given by

$$\psi_c^{k,q}(\xi, \theta) = \begin{cases} N_{k,q}\, J_k\!\left(R_{k,q}\,\dfrac{\xi}{c}\right) e^{\imath k \theta}, & \xi \le c, \\ 0, & \xi > c, \end{cases} \tag{2}$$

where (ξ, θ) are polar coordinates in the Fourier domain (i.e., ξ1 = ξ cos θ, ξ2 = ξ sin θ, ξ ≥ 0, and θ ∈ [0, 2π)); $N_{k,q} = \left(c\sqrt{\pi}\,|J_{k+1}(R_{k,q})|\right)^{-1}$ is the normalization factor; Jk is the Bessel function of the first kind of integer order k; and Rk,q is the qth root of the Bessel function Jk. For a function f with band limit c that is also in L²(ℝ²) ∩ L¹(ℝ²),

$$\mathcal{F}(f)(\xi, \theta) = \sum_{k=-\infty}^{\infty} \sum_{q=1}^{\infty} a_{k,q}\, \psi_c^{k,q}(\xi, \theta), \tag{3}$$

which converges pointwise. In Section II-A, we derive a finite truncation rule for the Fourier-Bessel expansion in Eq. (3).

A. Sampling Criterion

For digital implementations of Eq. (3), we must truncate it to a finite sum; that is, we need a sampling criterion for selecting k and q.

With the following convention for the 2D inverse polar Fourier transform of a function g(ξ, θ),

| (4) |

the 2D inverse Fourier transform of the Fourier-Bessel functions, denoted , is given in polar coordinates as

| (5) |

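The maximum of the magnitude of this inverse Fourier transform in (5) is obtained near the circle of radius $R_{k,q}/(2\pi c)$, and the function vanishes on concentric circles of radii $R_{k,q'}/(2\pi c)$ with q′ ≠ q. The smallest vanishing circle that encircles the maximum is therefore of radius $R_{k,(q+1)}/(2\pi c)$.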

We assume that the underlying clean images (before being possibly contaminated with noise) are essentially compactly supported in a disk of radius R. Therefore, we should rule out Fourier-Bessel functions for which the maximum of their inverse Fourier transform resides outside a disk of radius R. Otherwise, those functions introduce spurious information from noise. Notice that if the maximum is inside the disk, yet the zero after the maximum is outside the disk, then there is a significant spillover of energy outside the disk. We therefore require the more stringent criterion that the zero after the maximum is inside the disk, namely

$$\frac{R_{k,(q+1)}}{2\pi c} \le R. \tag{6}$$

This sampling argument gives a finite truncation rule for the Fourier-Bessel expansion in Eq. (3), that is

$$R_{k,(q+1)} \le 2\pi c R. \tag{7}$$

For each k, we denote by pk the number of components satisfying Eq. (7). We also denote by $p = \sum_{k=-k_{\max}}^{k_{\max}} p_k$ the total number of components, where kmax is the maximal possible value of k satisfying Eq. (7). The locations of Bessel zeros have been extensively studied, for example, in [25, p.517–521], [26, p.370], [27], [28], [29]. Several lower and upper bounds for Bessel zeros Rk,q were proven by Breen in [29], such as

| (8) |

where aq is the qth zero of the Airy function, shown to satisfy

| (9) |

Using the lower bound for |aq| and the sampling criterion in Eq. (7), we have the following inequality for k and pk,

| (10) |

Breen also obtained

| (11) |

so we get another inequality for k and pk,

| (12) |

Combining Eqs. (10) and (12), we have the following lower and upper bounds for pk,

| (13) |

The bound for the highest angular frequency kmax is determined by setting pk = 1 in Eq. (13), resulting in

| (14) |

Equation (13) implies that as the angular frequency k increases, the number of components pk decreases. Moreover, using the lower and upper bounds for pk and kmax in Eqs. (13) and (14), we derive that the total number of selected Fourier-Bessel basis functions is between 8(cR)² and 4π(cR)². When c is the largest possible band limit, i.e. c = 1/2, the number of basis functions is between 2R² and πR², where the latter is approximately the number of pixels inside a disk of radius R. Also, whenever c = O(1) and R = O(L), we get that p = O(L²) and kmax = O(L).
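As a rough illustration of the truncation rule, the sketch below counts the selected components for a small disk. It assumes that the criterion of Eq. (7) reads R_{k,q+1} ≤ 2πcR, and it relies on two hypothetical helpers, `bessel_j` and `bessel_zeros_up_to`, which evaluate J_k through its integral representation and locate its zeros on a fine grid. A library routine such as SciPy's `scipy.special.jn_zeros` would be the more natural choice in practice.

```python
import numpy as np

def bessel_j(k, x, m=256):
    """J_k(x) for integer k >= 0, from J_k(x) = (1/pi) * int_0^pi cos(k*t - x*sin(t)) dt.
    The midpoint rule is accurate here as long as 2*m exceeds x + k by a margin."""
    t = (np.arange(m) + 0.5) * np.pi / m
    x = np.atleast_1d(np.asarray(x, dtype=float))
    return np.cos(k * t - np.outer(x, np.sin(t))).mean(axis=1)

def bessel_zeros_up_to(k, bound, step=0.01):
    """Positive zeros of J_k not exceeding `bound` (sign changes + linear interpolation).
    The scan starts at x = k because the first positive zero of J_k is larger than k."""
    x = np.arange(max(k, step), bound + step, step)
    v = bessel_j(k, x)
    i = np.nonzero(v[:-1] * v[1:] < 0)[0]
    zeros = x[i] - v[i] * (x[i + 1] - x[i]) / (v[i + 1] - v[i])
    return zeros[zeros <= bound]

def count_components(c, R):
    """Return {k: p_k} for k >= 0, with p_k = #{q >= 1 : R_{k,q+1} <= 2*pi*c*R}."""
    bound = 2 * np.pi * c * R
    p, k = {}, 0
    while True:
        n_zeros = len(bessel_zeros_up_to(k, bound))
        if n_zeros < 2:          # even q = 1 needs the second zero inside the bound
            return p
        p[k] = n_zeros - 1       # q = 1, ..., n_zeros - 1 pass the criterion
        k += 1

c, R = 0.5, 10                   # small example values, chosen for speed
p = count_components(c, R)
k_max = max(p)
total = p[0] + 2 * sum(pk for k, pk in p.items() if k > 0)   # count both +k and -k
print(f"k_max = {k_max}, p_0 = {p[0]}, total components = {total}")
```

The loop stops at the first k with fewer than two zeros below the bound, which is safe because the zeros of J_k move to the right as k grows, so p_k cannot increase again.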

Because the bandlimited function f is assumed to be essentially compactly supported, the infinite expansion in Eq. (3) is approximated by the finite expansion

| (15) |

where Pc,R is the orthogonal projection from L²(Dc) (the space of L² functions supported on a disk of radius c), to the space of functions spanned by a finite number of Fourier-Bessel functions that satisfy (7).

B. Numerical Evaluation of Fourier–Bessel Expansion Coefficients

Previously in [9], the evaluation of the expansion coefficients ak,q of Eq. (15) was done by least squares. Let Ψ be the matrix whose entries are evaluations of the Fourier-Bessel functions at the Cartesian grid points, with rows indexed by the grid points and columns indexed by angular and radial frequencies. Finding the coefficient vector a as the solution to the least squares problem $\min_a \|\Psi a - I\|_2$ requires the computation of Ψ*I, which takes O(pL²) = O(L⁴) operations, because p = O(L²). In general a = (Ψ*Ψ)⁻¹Ψ*I, but here Ψ*Ψ is approximately the identity matrix, due to the orthogonality of the Fourier-Bessel functions.

We introduce here a method that computes the expansion coefficients ak,q in O(L³) operations instead of O(L⁴). The expansion coefficients in Eq. (15) are given analytically by

| (16) |

We evaluate the last integral numerically using a quadrature rule that consists of equally spaced points in the angular direction and a Gaussian quadrature rule in the radial direction, that is, using the nodes, ξ1(j, l) = ξj cos(2πl/nθ), ξ2(j, l) = ξj sin(2πl/nθ), j = 1, …, nξ, l = 0, …, nθ − 1 (see Fig. 1). The values of nξ and nθ depend on the compact support radius R and the band limit c and are derived later in the letter. To use this quadrature rule, we need to sample the Fourier transform of f at the quadrature nodes. This is approximated by the Fourier coefficients of the image I (consisting of samples of f on a Cartesian grid) at the given quadrature nodes, namely by the Fourier coefficients

| (17) |

which can be evaluated efficiently using the nonuniform discrete Fourier transform. The angular integration in Eq. (16) is then sped up by 1D FFT on the concentric circles, followed by a numerical evaluation of the radial integral with a Gaussian quadrature rule.
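For reference, the Fourier coefficients at the polar nodes can also be evaluated directly, without any fast transform. The sketch below is a slow O(L² nξ nθ) reference computation rather than a NUFFT. It assumes pixel size Δ = 1, a grid centered at the origin, and no normalization constant in front of the sum (the exact constant of Eq. (17) is not reproduced here). The function name `polar_dft_direct` is illustrative.

```python
import numpy as np

def polar_dft_direct(image, xi, theta):
    """Evaluate sum_{i1,i2} I(i1, i2) * exp(-2*pi*i*(xi1*i1 + xi2*i2)) at the polar
    nodes (xi1, xi2) = (xi_j*cos(theta_l), xi_j*sin(theta_l)).
    Returns an array of shape (len(xi), len(theta))."""
    L = image.shape[0]
    idx = np.arange(L) - (L - 1) / 2.0            # centered pixel coordinates
    xi1 = np.outer(xi, np.cos(theta)).ravel()     # node coordinates, flattened
    xi2 = np.outer(xi, np.sin(theta)).ravel()
    E1 = np.exp(-2j * np.pi * np.outer(xi1, idx)) # phases along the first image axis
    E2 = np.exp(-2j * np.pi * np.outer(xi2, idx)) # phases along the second image axis
    F = np.einsum("na,ab,nb->n", E1, image, E2)   # separable double sum per node
    return F.reshape(len(xi), len(theta))

# Example: a unit impulse at the origin has a constant Fourier transform.
L = 9
xi = np.array([0.1, 0.25, 0.4])
theta = 2 * np.pi * np.arange(8) / 8
image = np.zeros((L, L))
image[L // 2, L // 2] = 1.0
F = polar_dft_direct(image, xi, theta)
```

A direct evaluation like this is mainly useful for checking a NUFFT-based implementation on small images.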

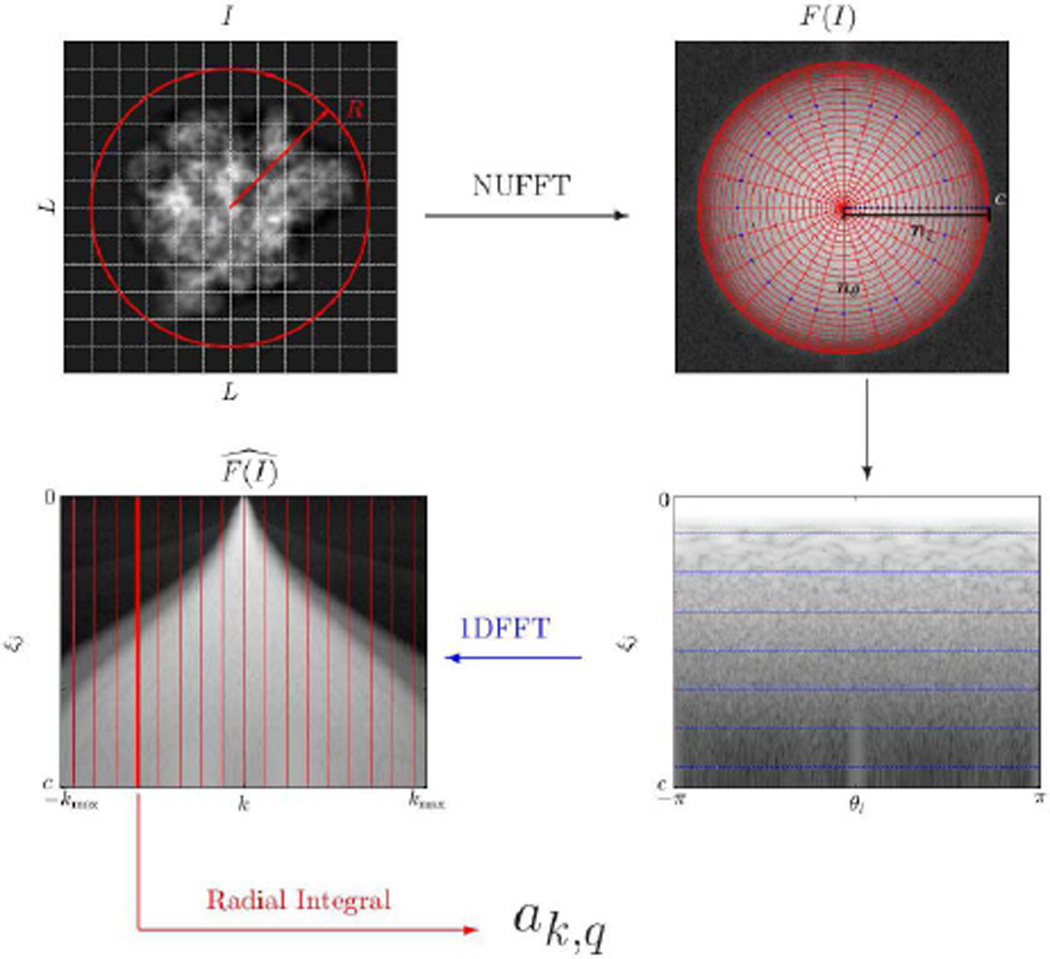

Fig. 1.

Pictorial summary of the procedure for computing the Fourier-Bessel expansion coefficients. The original image (top left) is resampled on a polar Fourier grid (Eq. (17)) using NUFFT (top right and bottom right) followed by 1D FFT (Eq. (18)) on each concentric circle. The evaluation of the radial integral (Eq. (19)) gives the expansion coefficients ak,q. The bow-tie phenomenon illustrated in bottom-left was discussed in [31].

As the samples on each concentric circle are equally spaced, the natural quadrature weights for the angular integral are 2π/nθ, with the nodes taken at θl = 2πl/nθ for l = 0, …, nθ − 1. The angular integration using one-dimensional FFT on each concentric circle thus yields

| (18) |
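The angular step can be sketched with a standard FFT. The snippet assumes equispaced nodes θ_l = 2πl/nθ with uniform weights 2π/nθ, so that the integral of g(θ)e^{−ıkθ} over [0, 2π) becomes a scaled discrete Fourier transform. The function name `angular_integrals` is illustrative.

```python
import numpy as np

def angular_integrals(samples):
    """Approximate int_0^{2pi} g(theta) * exp(-i*k*theta) dtheta for all k at once.
    `samples` has shape (n_xi, n_theta): one row of equispaced samples per circle.
    Output column k holds angular frequency k; negative k sit at index n_theta + k."""
    n_theta = samples.shape[-1]
    return (2 * np.pi / n_theta) * np.fft.fft(samples, axis=-1)

# Example on one circle: g(theta) = 3*exp(2i*theta) + exp(-i*theta).
n_theta = 16
theta = 2 * np.pi * np.arange(n_theta) / n_theta
g = 3 * np.exp(2j * theta) + np.exp(-1j * theta)
G = angular_integrals(g[None, :])[0]
```

For a trigonometric polynomial of degree below nθ/2, as in this example, the equispaced rule is exact up to rounding.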

The radial integral is evaluated using the Gauss-Legendre quadrature rule [30, Chap. 4], which determines the locations of nξ points on the interval [0, c] and the associated weights w(ξj). The integral in Eq. (16) is thus approximated by

| (19) |

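Since I is real valued and $J_{-k}(x) = (-1)^k J_k(x)$, we get that the coefficient with index (−k, q) is the complex conjugate of the coefficient with index (k, q), and thus we only need to evaluate coefficients with k ≥ 0.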

The procedure for numerical evaluation of the Fourier-Bessel expansion coefficients is illustrated in Fig. 1. In practice, we have observed that using nξ = 4cR and nθ = 16cR results in highly-accurate numerical evaluation of the integral in Eq. (16).
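The quadrature grid described above can be assembled in a few lines. The sketch below is one possible construction: it takes the Gauss-Legendre rule on [−1, 1] from NumPy's `leggauss` and maps it affinely to [0, c]. The function name `polar_quadrature_grid` and the rounding with `ceil` are illustrative choices.

```python
import math
import numpy as np
from numpy.polynomial.legendre import leggauss

def polar_quadrature_grid(c, R):
    """Radial Gauss-Legendre nodes/weights on [0, c] and equispaced angles on [0, 2*pi)."""
    n_xi = math.ceil(4 * c * R)
    n_theta = math.ceil(16 * c * R)
    x, w = leggauss(n_xi)                # nodes and weights on [-1, 1]
    xi = 0.5 * c * (x + 1.0)             # affine map to [0, c]
    w_xi = 0.5 * c * w                   # weights scale with the interval length
    theta = 2 * np.pi * np.arange(n_theta) / n_theta
    return xi, w_xi, theta

c, R = 0.5, 30
xi, w_xi, theta = polar_quadrature_grid(c, R)

# Cartesian coordinates of the polar nodes, shape (n_xi, n_theta).
xi1 = np.outer(xi, np.cos(theta))
xi2 = np.outer(xi, np.sin(theta))

# Sanity check of the radial rule: int_0^c xi^2 * xi d(xi) = c^4 / 4.
radial_check = np.sum(w_xi * xi**3)
```

A radial integral of the form ∫ g(ξ) ξ dξ over [0, c] is then approximated by `np.sum(w_xi * xi * g(xi))`.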

If our image can be expressed in terms of the truncated Fourier-Bessel expansion in Eq. (15), the approximation error in the radial integral comes from the numerical evaluation of the integrals

| (20) |

where the approximation error using nξ points is

| (21) |

Asymptotically, a Bessel function behaves like a decaying cosine function with frequency for [26],

| (22) |

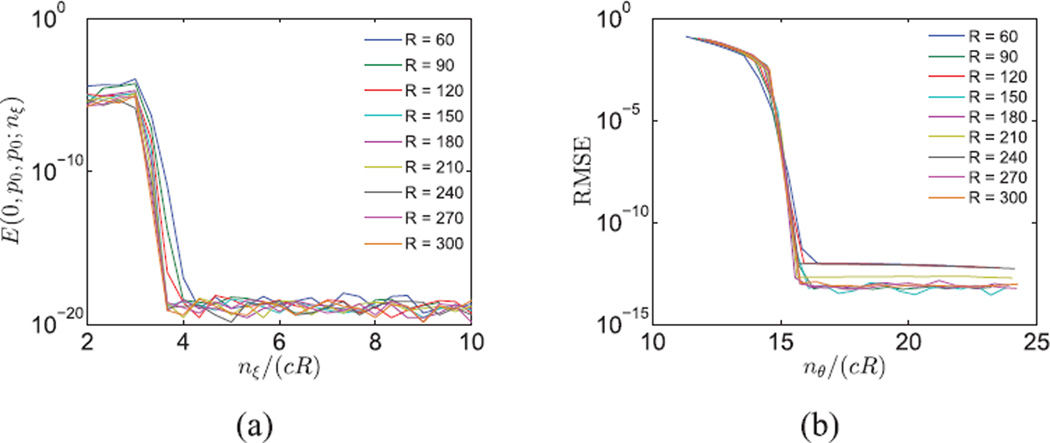

For a fixed nξ, the largest approximation error occurs when k = 0 and q1 = q2 = p0, since the corresponding Bessel function is the most oscillatory function within the band limit, and we need to sample it at its Nyquist rate or higher. Therefore, we choose nξ = ⌈4cR⌉. Fig. 2a justifies this choice, as the error drops to about 10⁻¹⁷ before nξ = ⌈4cR⌉.

Fig. 2.

(a) Error, as a function of nξ, in the numerical evaluation of the integral G(0, p0, p0) in Eq. (20). (b) Error, as a function of nθ, in the evaluation of the integral in Eq. (16).

To choose nθ, we computed the root mean squared error (RMSE) in evaluating the expansion coefficients for simulated images composed of white Gaussian noise with various R and nθ, while c = 1/2. We oversampled on the radial lines by nξ = ⌈10cR⌉ and the ground truth for the angular integral in Eq. (16) was computed by Eq. (18) via oversampling in the angular direction by nθ = 60cR. We observe that when nθ ≥ 16cR, the estimation error for the Fourier-Bessel expansion coefficients becomes negligible (see Fig. 2b). Notice that Eq. (14) implies that kmax < 2πcR. The corresponding Nyquist rate is bounded by 4πcR. We therefore sample at a slightly higher rate of nθ = 16cR to ensure numerical accuracy.

Now that we are able to numerically evaluate ak,q with high accuracy, we can study the spectral behavior of the finite Fourier-Bessel expansion of the images. We define a as the vector that contains the expansion coefficients ak,q computed in Eq. (19) and denote by T* the transformation that maps an image I to its finite Fourier-Bessel expansion coefficients through Eqs. (17), (18) and (19), that is,

| (23) |

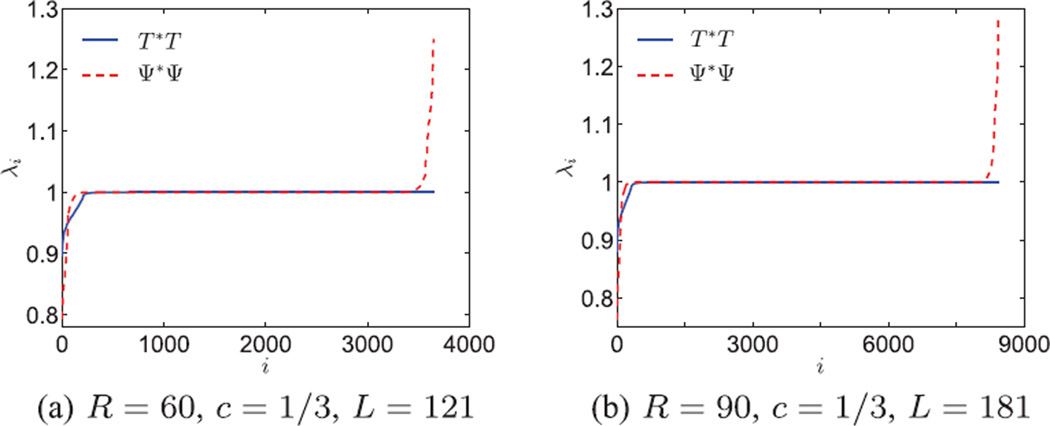

Ideally we would like T* to be a unitary transformation, that is T*T = I, so that the transformation from the images to the coefficients preserves the noise statistics. Numerically, we observe that the majority of the eigenvalues of T*T are 1 and the smallest eigenvalues are also close to 1 (see blue solid line in Fig. 3). The transformation T* is close to unitary because it is a numerical approximation of an expansion in an orthogonal basis (Fourier-Bessel), and the sampling criterion prevents aliasing. In Fig. 3, the eigenvalues of Ψ*Ψ are also plotted for comparison. It can be observed that T*T has fewer eigenvalues that deviate from 1. Although the Fourier-Bessel functions are orthogonal as continuous functions, their discrete sampled versions are not necessarily orthogonal, hence Ψ*Ψ deviates from the identity matrix. The fact that T*T is closer to the identity than Ψ*Ψ implies that the numerical evaluation of the expansion coefficient vector a as T*I is more accurate than estimating it as Ψ*I.

We compare the numerical accuracy explicitly with an example. We choose a signal f whose expansion coefficients, for c = 0.5 and R = 30, are ak,q = 1 for k = 1 and q = 5, and ak,q = 0 otherwise. The evaluation method from [9] is applied here in Fourier space. It first evaluates discrete samples of ℱ(f) and the Fourier-Bessel basis on a Cartesian grid of size 2R × 2R, and then projects the discrete samples onto the basis. The root mean squared error (RMSE) is 7.2 × 10⁻⁵ and the maximum absolute error is 4.0 × 10⁻⁴. Using the numerical evaluation in Eq. (19), we get that RMSE = 1.2 × 10⁻¹⁶ and the maximum absolute error is 2.7 × 10⁻¹⁵.

Fig. 3.

Eigenvalues of T*T and Ψ*Ψ, where T* and Ψ* are the truncated Fourier-Bessel transforms using numerical integration and least squares respectively. These are also the spectra of the population covariance matrices of transformed white noise images. Most eigenvalues are close to 1, indicating that the truncated Fourier-Bessel transform is almost unitary. Thus white noise remains approximately white.

Computing the polar Fourier transform of an image of size L × L on a polar grid with nξ × nθ points in Eq. (17) is implemented efficiently using NUFFT [19], [20], [21], [22], whose computational complexity is O(L² log L + nξnθ). Since nθ = 16cR = O(L) and nξ = 4cR = O(L), nξ × nθ = O(L²) and the complexity of the discrete polar Fourier transform is O(L² log L). The complexity of the 1D FFTs in Eq. (18) is O(nξnθ log nθ), because there are nξ concentric circles with nθ samples on each circle. Both nξ and nθ are of O(L), so the total complexity of the 1D FFTs is also O(L² log L). Evaluating Eq. (19) (the quadrature rule for the radial integral in Eq. (16)) for all k and q requires a total of O(L³) operations using a direct method, because there are O(L²) basis functions to integrate, and each function is integrated using O(L) quadrature points. However, this complexity can be reduced to O(L² log L) using a fast Bessel transform [23], [24]. In summary, the computational complexity of computing the Fourier-Bessel expansion coefficients of an image of size L × L is O(L³) operations, or O(L² log L) using a “fast” transform.

III. Steerable Principal Components

Given a dataset of n images , we denote by fi the underlying bandlimited function that corresponds to the i’th image Ii. Under the action of the group O(2), the function fi is transformed to , where α ∈ [0, 2π) is the counter-clockwise rotation angle and β denotes reflection and takes values in {+, −}. More specifically, and . The images and are obtained by sampling and respectively.

The Fourier transform of fi commutes with the action of the group O(2), namely, , and . The transformation of the images under rotation and reflection can be represented by the transformation of their Fourier-Bessel expansion coefficients in Eq. (3). Under counter-clockwise rotation by an angle α, is given by

| (24) |

Therefore a planar rotation introduces a phase shift in the expansion coefficients. Under rotation and reflection,

| (25) |

namely, the expansion coefficient changes to .
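The phase-shift property behind the term "steerable" can be checked on a single circle of samples. The sketch below assumes that a counter-clockwise rotation by α maps g(θ) to g(θ − α), so that the coefficient of the angular mode e^{ıkθ} is multiplied by e^{−ıkα}. The sign convention and the made-up coefficients are assumptions for illustration, and reflections are not covered.

```python
import numpy as np

n_theta = 64
theta = 2 * np.pi * np.arange(n_theta) / n_theta
rng = np.random.default_rng(0)

# Made-up coefficients for a few angular frequencies (one fixed radius).
coef = {k: rng.normal() + 1j * rng.normal() for k in (0, 1, 3)}

def synthesize(coef, theta):
    """Evaluate sum_k a_k * exp(i*k*theta) at the given angles."""
    return sum(a * np.exp(1j * k * theta) for k, a in coef.items())

# Rotate by a whole number of angular samples so the result stays on the grid.
shift = 5
alpha = 2 * np.pi * shift / n_theta

rotated = np.roll(synthesize(coef, theta), shift)            # samples of g(theta - alpha)
steered = {k: a * np.exp(-1j * k * alpha) for k, a in coef.items()}
from_coefficients = synthesize(steered, theta)               # same samples via phase shifts

max_difference = np.max(np.abs(rotated - from_coefficients))
```

Rotating the samples and phase-shifting the coefficients agree to rounding error, which is why no resampling of the images is needed to account for in-plane rotations.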

If we augment the collection of bandlimited functions by all possible rotations and reflections, the Fourier transform of the sample mean of the augmented collection, denoted fmean, becomes,

| (26) |

Using the properties in Eqs. (24) and (25), we have

| (27) |

As expected, the sample mean is radially symmetric, because is only a function of ξ but not of θ.

The rotationally invariant covariance kernel 𝒞((ξ, θ), (ξ′, θ′)) built from Fourier transformed functions with all their possible in-plane rotations and reflections is defined as

| (28) |

From Eq. (27) it follows that if we express ℱ(fi) and ℱ(fmean) in terms of the Fourier-Bessel basis and the associated expansion coefficients, subtracting the sample mean is equivalent to subtracting from the coefficients , while keeping other coefficients unchanged. Therefore, we first update the zero angular frequency coefficients by , and then

| (29) |

where

| (30) |

δk,k′ comes from the integral over α∈ [0, 2π). The covariance matrix in Eq. (30) is positive semi-definite and block diagonal because the non-zero entries of C correspond only to k = k′. Since the images are well approximated by the subspace spanned by a finite number of Fourier-Bessel basis functions (see Eq. (15)), C(k,q),(k′,q′) are close to zero when (k, q) or (k′, q′) do not satisfy the sampling criterion in Eq. (7). Therefore, we have a finite matrix representation C of 𝒞. Moreover, it suffices to consider k ≥ 0, because C(k,q),(k,q′) = C(−k,q),(−k,q′). Thus, the covariance matrix in Eq. (30) can be written as the direct sum , where C(k) is by itself a sample covariance matrix of size pk × pk, given by,

| (31) |
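The Kronecker delta that makes the covariance block diagonal can be verified numerically. The sketch below assumes that a rotation by α multiplies a coefficient of angular frequency k by e^{−ıkα}, and replaces the integral over α by an average over equispaced angles. The function name and the sample values are illustrative, and the average over reflections is left out.

```python
import cmath
import math

def rotation_averaged_product(a_k, k, a_kp, kp, n_alpha=64):
    """Average over n_alpha equispaced rotations alpha of
    (a_k * e^{-i k alpha}) * conj(a_kp * e^{-i kp alpha}).
    The discrete average matches the integral as long as |k - kp| < n_alpha."""
    total = 0j
    for m in range(n_alpha):
        alpha = 2 * math.pi * m / n_alpha
        rotated_k = a_k * cmath.exp(-1j * k * alpha)
        rotated_kp = a_kp * cmath.exp(-1j * kp * alpha)
        total += rotated_k * rotated_kp.conjugate()
    return total / n_alpha

a3, a5 = 1 + 2j, 0.5 - 1j                                # made-up coefficients
cross_term = rotation_averaged_product(a3, 3, a5, 5)     # different angular frequencies
same_term = rotation_averaged_product(a3, 3, a3, 3)      # same angular frequency
```

The cross term averages to zero while the same-frequency term keeps its value |a3|² = 5, so only entries with k = k′ survive.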

Let us denote by A(k) the matrix of expansion coefficients, obtained by putting the coefficients for all q and all i into a matrix, where the columns are indexed by the image number i and the rows are indexed by the radial index q. The coefficient matrix A(k) for k ≠ 0 is of size pk × n and the covariance matrix for k ≠ 0 is,

| (32) |

where A* is the conjugate transpose . The case k = 0 is special because the expansion coefficients satisfy , and so A(0) is a matrix of size p0 × n and

| (33) |

We compute the eigenvalues and eigenvectors of the covariance matrices C(k). Because 𝒞 and C are related through Eq. (29) and C is block diagonal as in Eq. (30), 𝒞((ξ, θ), (ξ′, θ′)) is well approximated by , where Ψ(k) contains Fourier-Bessel functions with angular frequency k. Equation (29) reveals that the eigenfunctions of 𝒞, which are the steerable principal components, can be expressed as linear combinations of the Fourier-Bessel functions with the coefficients given by the eigenvectors of the matrix C,

| (34) |

Therefore the radial parts of the steerable principal components

| (35) |

are linear combinations of the Bessel functions within the same angular frequency. The associated expansion coefficients for Ii are

| (36) |

The computational complexity for forming the matrix C(k) is O(npk²). The complexity for eigendecomposition of C(k) is O(pk³), since the size of the covariance matrix is pk × pk. Using the upper and lower bounds for pk in Eq. (13) and assuming c = O(1) and R = O(L), we get Σk pk² = O(L³) and Σk pk³ = O(L⁴). Therefore, the complexity for forming the covariance matrix C is O(nL³) and the complexity of its full eigendecomposition is O(L⁴). Equations (32) and (33) show that instead of constructing the covariance matrices C(k) to compute the principal components, we can perform singular value decomposition (SVD) on the coefficient matrix A(k) directly and take the left singular vectors as the principal components. The computational complexity for full rank SVD on A(k) is O(npk²) and the total complexity of SVD of all coefficient matrices is O(nL³).
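The equivalence between the two routes can be illustrated on synthetic data. The sketch below uses a random complex matrix as a stand-in for A(k) and takes the covariance to be (1/n)AA*. This normalization is an assumption, and any further detail of Eqs. (32) and (33), such as a real-part operation, is not reproduced here.

```python
import numpy as np

rng = np.random.default_rng(0)
p_k, n = 6, 200                                   # small sizes for illustration
A = rng.normal(size=(p_k, n)) + 1j * rng.normal(size=(p_k, n))

# Route 1: form the p_k x p_k covariance matrix and diagonalize it.
C = (A @ A.conj().T) / n
evals, evecs = np.linalg.eigh(C)                  # ascending order
evals, evecs = evals[::-1], evecs[:, ::-1]        # reorder to descending

# Route 2: SVD of the coefficient matrix itself (singular values come sorted).
U, s, _ = np.linalg.svd(A, full_matrices=False)

# The eigenvalues equal the squared singular values divided by n ...
eigenvalue_gap = np.max(np.abs(evals - s**2 / n))
# ... and matching vectors agree up to a unit-modulus phase factor.
overlap = np.abs(np.sum(evecs.conj() * U, axis=0))
```

Both routes cost O(n p_k²) for a p_k × n matrix; the SVD avoids squaring the condition number, which is one common reason to prefer it.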

IV. Algorithm and Computational Complexity

The new algorithm introduced in this letter is termed fast Fourier-Bessel steerable PCA (FFBsPCA). The algorithm is composed of two steps. In the first step, Fourier-Bessel expansion coefficients are computed according to Algorithm 1. The input to the algorithm includes an image dataset, the band limit c, and the compact support radius R. The second step (Algorithm 2) takes the Fourier-Bessel expansion coefficients from Algorithm 1 as input and computes the steerable PCA radial functions and the expansion coefficients of the images in the new steerable basis. Algorithm 2 is the same as the corresponding part of the algorithm in [9].

Algorithm 1.

Fast Fourier-Bessel Expansion

| Step | Operation |
|---|---|
| Require | n images I1, …, In sampled on a Cartesian grid of size L × L with compact support radius R and band limit c. |
| 1 | (Precomputation) Select (k, q)’s that satisfy the sampling criterion of Eq. (7). Fix nξ = ⌈4cR⌉ and nθ = ⌈16cR⌉. |
| 2 | (Precomputation) Find nξ Gaussian quadrature points ξj and weights w(ξj) on the interval [0, c] and evaluate the radial basis functions at ξj, j = 1, …, nξ, for all selected (k, q)’s. |
| 3 | Compute F(Ii) (Eq. (17)) on a polar grid of size nξ × nθ by NUFFT for each i = 1, …, n. |
| 4 | For each F(Ii), compute the expansion coefficients using Eqs. (18) and (19). |
| 5 | return the expansion coefficients of all images for all selected (k, q)’s. |

The analysis of the computational complexity of FFBsPCA is as follows. The precomputation that generates all radial basis functions requires O(L³) operations because there are O(L²) basis functions, each of which is sampled over O(L) points. Computing the Fourier-Bessel expansion coefficients in Eq. (19) for all images takes O(nL³) operations (or O(nL² log L) with a fast Bessel transform) as discussed in Section II-B.

The complexity of constructing the covariance matrix C and computing its full eigendecomposition is O(nL³ + L⁴) as described in Section III. Another method for computing the principal components is by SVD of the coefficient matrices.

Algorithm 2.

Steerable PCA

| Step | Operation |
|---|---|
| Require | Fourier-Bessel expansion coefficients for n images and the maximum angular frequency kmax. |
| 1 | Compute the coefficient vector of the mean image. Then subtract it from the zero angular frequency coefficients of each image. |
| 2 | for k = 0, 1, …, kmax do |
| 3 | Construct the coefficient matrix A(k). |
| 4 | Compute the covariance matrix C(k), its eigenvalues, and its eigenvectors; or perform SVD of A(k) and take the left singular vectors. |
| 5 | Compute the radial eigenvectors fk,l(ξj) for j = 1, …, nξ using Eq. (35). |
| 6 | Compute the expansion coefficients of the images in the new steerable basis using Eq. (36). |
| 7 | end for |
| 8 | return the eigenvalues, the radial eigenvectors fk,l, and the expansion coefficients of images i = 1, …, n, for all (k, l). |

Full rank SVD on all coefficient matrices requires O(nL³) floating point operations (see Section III).

To generate the new steerable basis, we take linear combinations of the Bessel functions as in line 5 of Algorithm 2, which takes O(L⁴) operations. Computing the steerable PCA expansion coefficients for i = 1, …, n (line 6 in Algorithm 2) requires O(nL³) operations by taking linear combinations of the Fourier-Bessel expansion coefficients as in Eq. (36). Therefore the total computational complexity of FFBsPCA is O(nL³ + L⁴).

The complexity of FBsPCA introduced in [9] is O(nL⁴). Thus, FFBsPCA is faster than FBsPCA. For PCA, when the number of images is smaller than the number of pixels in the compact support disk, we form XᵀX and compute its eigendecomposition and the complexity is O(n²L² + n³). However, as the number of images grows, the complexity of PCA switches to O(nL⁴ + L⁶) since it becomes more efficient to compute the eigendecomposition of XXᵀ. Therefore the computational complexity of traditional PCA, without taking into account all rotations and reflections, is O(min{n²L² + n³, nL⁴ + L⁶}). When n > O(L), FFBsPCA is more efficient than the traditional PCA.
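To get a feel for these bounds, the following back-of-the-envelope sketch tabulates the leading-order operation counts of the three methods. All constant factors are dropped, which is an assumption: actual running times depend on constants, memory traffic and implementation, so the numbers only indicate trends. The function names are illustrative.

```python
def pca_ops(n: int, L: int) -> int:
    """Traditional PCA: the cheaper of the two routes quoted in the text."""
    return min(n**2 * L**2 + n**3, n * L**4 + L**6)

def fbspca_ops(n: int, L: int) -> int:
    """FBsPCA: O(n L^4), constants dropped."""
    return n * L**4

def ffbspca_ops(n: int, L: int) -> int:
    """FFBsPCA: O(n L^3 + L^4), constants dropped."""
    return n * L**3 + L**4

L = 300
for n in (100, 1_000, 10_000, 100_000):
    print(f"n={n:>7}  PCA={pca_ops(n, L):.2e}  "
          f"FBsPCA={fbspca_ops(n, L):.2e}  FFBsPCA={ffbspca_ops(n, L):.2e}")
```

With these nominal counts, traditional PCA is cheapest for very small n, while FFBsPCA has the lowest count once n is comparable to or larger than L.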

FFBsPCA is easily adapted for parallel computation. The computation of Fourier-Bessel expansion coefficients in Algorithm 1 can run on multiple workers in parallel, where each worker is allocated with a subset of the images and Fourier-Bessel radial basis functions. In addition, in Algorithm 2, the radial eigenfunctions and the steerable PCA expansion coefficients can also be efficiently computed in parallel for each angular index k.

V. Numerical Experiments

We compare the running times of FFBsPCA, FBsPCA and traditional PCA, where the latter is computed without the images’ in-plane rotations and reflections. The algorithms are implemented in MATLAB on a machine with 60 cores, running at 2.3 GHz, with total RAM of 1.5TB.

We first simulated n = 24,000 images with different radii of compact support R, while the band limit is fixed at c = 1/2. For small R, since FFBsPCA performs polar Fourier transformation, it appears slightly slower than FBsPCA. However, when R increases, FFBsPCA is computationally more efficient (see Table I). We next fixed the size of the images while using R = 150 and c = 1/2, and varied the number of images n. Table II shows that the running time of FBsPCA and FFBsPCA grows linearly with n.

TABLE I.

Running Times (in seconds) as a Function of R for n = 2.4 × 10⁴, c = 1/2, and L = 2R

| R | PCA | FBsPCA | FFBsPCA |
|---|---|---|---|
| 30 | 8 | 7 | 51 |
| 60 | 214 | 50 | 87 |
| 90 | 1,636 | 168 | 148 |
| 120 | 1,640 | 413 | 234 |
| 150 | 1,808 | 757 | 371 |
| 180 | 1,988 | 1,437 | 657 |
| 210 | 2,106 | 2,274 | 695 |
| 240 | 2,188 | 3,827 | 892 |

TABLE II.

Running Times (in Minutes) as a Function of n for Image Size 300 × 300 Pixels (L = 300), With R = 150 and c = 1/2

| n (×10³) | PCA | FBsPCA | FFBsPCA |
|---|---|---|---|
| 1 | 0.05 | 1.2 | 1.1 |
| 2 | 0.1 | 2.1 | 1.3 |
| 4 | 0.3 | 3.5 | 1.8 |
| 8 | 1.3 | 4.3 | 2.4 |
| 16 | 9.8 | 8.7 | 4.6 |
| 32 | 59.1 | 17.9 | 8.0 |
| 64 | 424.7 | 35.7 | 14.4 |
| 128 | 653.7 | 74.2 | 30.6 |
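One simple way to check the scaling with n is to fit a straight line to the Table II timings on a log-log scale, where a slope near 1 indicates linear growth. The sketch below does this for FBsPCA and FFBsPCA. Restricting the fit to n ≥ 16,000 is our choice, made on the assumption that fixed overheads dominate the timings for small n.

```python
import numpy as np

# Running times in minutes copied from Table II (n in thousands).
n_thousands = np.array([1, 2, 4, 8, 16, 32, 64, 128], dtype=float)
times = {
    "FBsPCA": np.array([1.2, 2.1, 3.5, 4.3, 8.7, 17.9, 35.7, 74.2]),
    "FFBsPCA": np.array([1.1, 1.3, 1.8, 2.4, 4.6, 8.0, 14.4, 30.6]),
}


def loglog_slope(n, t):
    """Least-squares slope of log(t) against log(n)."""
    slope, _intercept = np.polyfit(np.log(n), np.log(t), 1)
    return slope


large = n_thousands >= 16
slopes = {
    name: loglog_slope(n_thousands[large], t[large]) for name, t in times.items()
}
for name, slope in slopes.items():
    print(f"{name}: log-log slope = {slope:.2f}")
```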

To show that our new algorithm can handle large datasets efficiently, we simulated a large dataset with 10⁵ images of size 300 × 300 pixels. The images consist entirely of Gaussian noise with mean 0 and variance 1. We assume that the compact support in the image domain is R = 150 and the band limit in the Fourier domain is c = 1/2. In Table III, the total running time is divided into three parts: precomputation, Fourier-Bessel expansion (Algorithm 1), and steerable PCA (Algorithm 2). The Fourier-Bessel expansion took about 24 minutes, of which 91% was spent on mapping images to a polar Fourier grid, where we used the software package [22] downloaded from https://www-user.tu-chemnitz.de/potts/nfft/potts/nfft/. Numerical evaluation of the angular integration by 1D FFT and of the radial integration by a direct method took 6.4% and 2.6% of the time, respectively. Steerable PCA took 42 seconds.

TABLE III.

Timing for FFBsPCA on a Large Dataset With n = 10⁵ Images. Each Image is of Size 300 × 300 Pixels, R = 150 and c = 1/2. We Computed the Full Eigendecomposition in Algorithm 2

| Steps | Time (sec) |
|---|---|
| Precomputation | 7 |
| NUFFT and Fourier-Bessel Expansion | 1,438 |
| Steerable PCA | 42 |
| Total | 1,487 |

In our third experiment, we simulated n = 10⁵ clean projection images from a reconstructed volume of a human mitochondrial large ribosomal subunit, downloaded from the electron microscopy data bank [32] (EMDB-2762). The original volume in the data bank is of size 320 × 320 × 320 voxels. We preprocessed the volume such that its center of mass is at the origin and cropped out a volume of size 240 × 240 × 240 voxels that contains the particle. Each projection image is of size 240 × 240 pixels. We simulated both the vanishing behavior of the CTF at low frequencies and the blurring effect due to the Gaussian envelope of the CTF. This was done by convolving the images with the inverse Fourier transform of

| (37) |

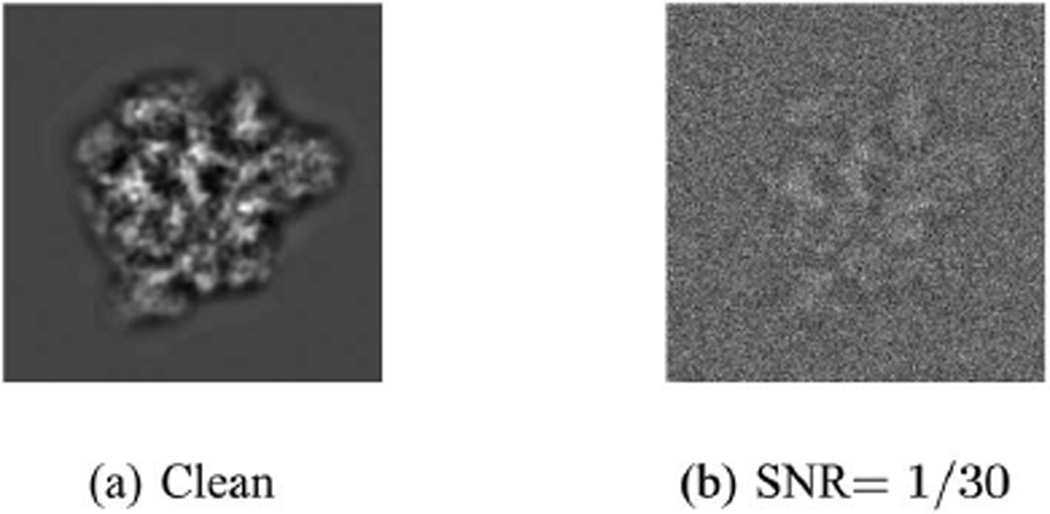

where f is the frequency, λ is the wavelength of the electron beam, z is the defocus, and a is the phase of the CTF introduced by the microscope. This stems from the analytic form of the CTF given by sin(πλzf² + a) exp(−Bf²). For the simulations we chose λ = 0.0197 Å, z = 2.5 µm, a = 0.1 rad, and B = 100 Å². Our clean images (see Fig. 4a) are the projection images filtered by the filter in Eq. (37), and they were then corrupted by additive white Gaussian noise at SNR = 1/30, corresponding to a noise variance of σ² = 9 (see Fig. 4b).
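The relation between SNR and noise variance can be made explicit with a small sketch. It assumes the common convention SNR = (signal variance) / (noise variance), under which a noise variance of 9 at SNR = 1/30 corresponds to a signal variance of 0.3. The text does not state over which pixels the signal variance was measured, so the whole-array variance used here is an assumption, as are the function name and the placeholder data.

```python
import numpy as np


def add_noise_at_snr(clean, snr, rng):
    """Add white Gaussian noise so that var(clean) / var(noise) equals snr."""
    noise_var = clean.var() / snr
    noise = rng.normal(0.0, np.sqrt(noise_var), size=clean.shape)
    return clean + noise, noise_var


rng = np.random.default_rng(0)
# Placeholder "clean" stack with variance 0.3 (not real projection images).
clean = rng.standard_normal((50, 64, 64))
clean *= np.sqrt(0.3) / clean.std()

noisy, noise_var = add_noise_at_snr(clean, snr=1 / 30, rng=rng)
print(f"noise variance = {noise_var:.3f}")
```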

Fig. 4.

Simulated projection images of the human mitochondrial large ribosomal subunit. Image size is 240 × 240 pixels.

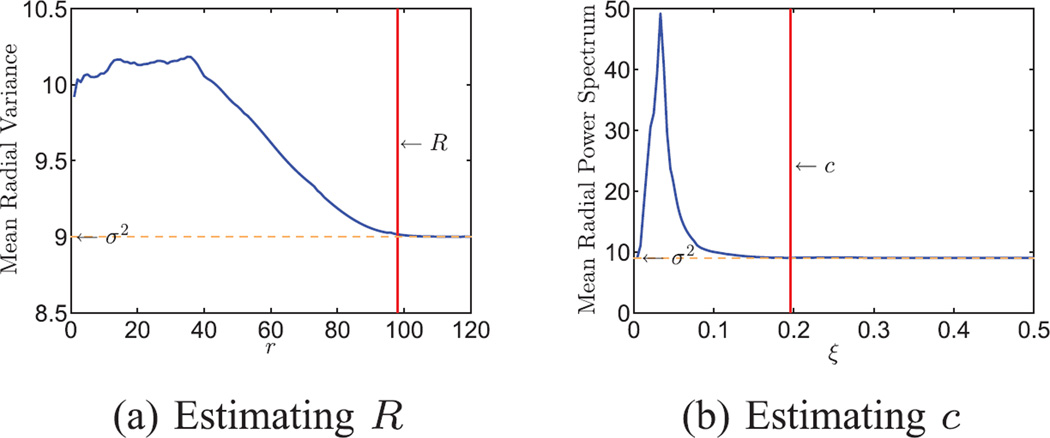

We estimated the radius of compact support of the particle in the real domain and the band limit in the Fourier domain from the noisy images in the following way. We first subtracted the mean image of the dataset from each image. Then we computed the 2D variance map of the dataset and averaged it in the angular direction to get the mean radial variance (see Fig. 5a). At large r, the mean radial variance levels off at 9, which corresponds to the noise variance. We subtracted the noise variance from the estimated mean radial variance and computed the cumulative variance by integrating the mean radial variance over r with a Jacobian weight r dr. The fraction of the cumulative variance reaches 99.9% at r = 98, and therefore R was chosen to be 98. In the Fourier domain, we computed the angular average of the mean 2D power spectrum. The curve in Fig. 5b also levels off at the noise variance when ξ is large. We used the same method as before to compute the cumulative radial power spectrum. The fraction reaches 99.9% at ξ = 0.196, and therefore the band limit was chosen to be c = 0.196.
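The real-domain part of this procedure can be sketched as follows. This is a minimal illustration rather than the authors' code: the ring binning by rounded integer radius, the image center at pixel (L // 2, L // 2), the unit step dr = 1, and the function name are all assumptions, and the demonstration uses a noise-free toy dataset supported on a disk of radius 10.

```python
import numpy as np


def estimate_support_radius(images, noise_var, fraction=0.999):
    """Return the smallest integer radius holding `fraction` of the signal variance.

    images: array of shape (n, L, L); noise_var: estimated noise variance.
    """
    L = images.shape[1]
    centered = images - images.mean(axis=0)       # subtract the mean image
    var_map = np.mean(centered ** 2, axis=0)      # 2D variance map

    # Average the variance map over rings of integer radius (angular average).
    y, x = np.indices((L, L))
    ring = np.rint(np.hypot(x - L // 2, y - L // 2)).astype(int)
    max_r = L // 2
    keep = ring <= max_r
    sums = np.bincount(ring[keep], weights=var_map[keep], minlength=max_r + 1)
    counts = np.bincount(ring[keep], minlength=max_r + 1)
    radial_var = sums / counts

    # Remove the noise floor, then integrate with the Jacobian weight r dr (dr = 1).
    radii = np.arange(max_r + 1)
    cumulative = np.cumsum((radial_var - noise_var) * radii)
    cumulative /= cumulative[-1]
    return int(np.argmax(cumulative >= fraction)), radial_var


# Toy data: random amplitudes times the indicator of a disk of radius 10.
rng = np.random.default_rng(0)
L, true_radius = 32, 10
y, x = np.indices((L, L))
disk = np.hypot(x - L // 2, y - L // 2) <= true_radius
images = rng.standard_normal((200, 1, 1)) * disk

R_est, radial_var = estimate_support_radius(images, noise_var=0.0)
print("estimated radius:", R_est)
```

With noisy data the subtraction leaves fluctuations around zero at large r, so the 99.9% crossing is less sharp than in this toy example. The same routine applied to the mean 2D power spectrum would give the band limit.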

Fig. 5.

Estimating R and c from n = 10⁵ simulated noisy projection images of a human mitochondrial large ribosomal subunit. Each image is of size 240 × 240 pixels. (a) Mean radial variance of the images. The curve levels off at about σ² = 9 when r ≥ 98. The radius of compact support is chosen as R = 98. (b) Mean radial power spectrum. The curve levels off at σ² = 9 when ξ ≥ 0.196. The band limit is chosen as c = 0.196.

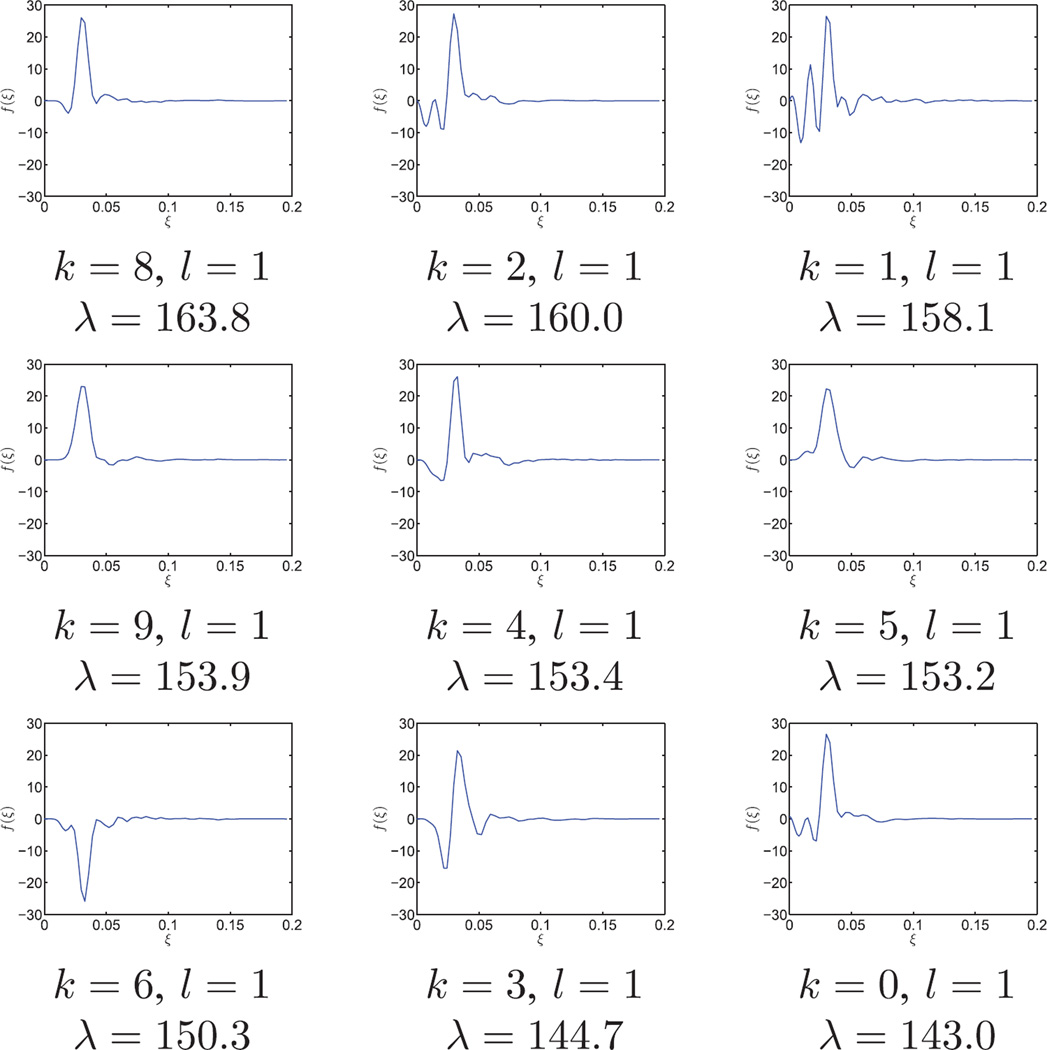

The radial functions of the top nine principal components are shown in Fig. 6. Each radial function is indexed by k and l, where k determines the angular Fourier mode and l is the order of the radial function within the same k. Taking the tensor product of the radial functions and their corresponding angular Fourier modes gives the two dimensional principal components in Fourier domain. It took about 9 minutes in total to get the steerable PCA radial components and the associated expansion coefficients. In particular, Fourier-Bessel expansion coefficients were computed in 9 minutes and the steerable PCA took 12 seconds.

Fig. 6.

FFBsPCA principal radial functions in the Fourier domain. The dataset contains n = 10⁵ simulated human mitochondrial large ribosomal subunit projection images corrupted by additive white Gaussian noise with SNR = 1/30. Image size is 240 × 240 pixels, R = 98, c = 0.196. Each radial function is labeled with angular index k, radial order l, and eigenvalue λ.

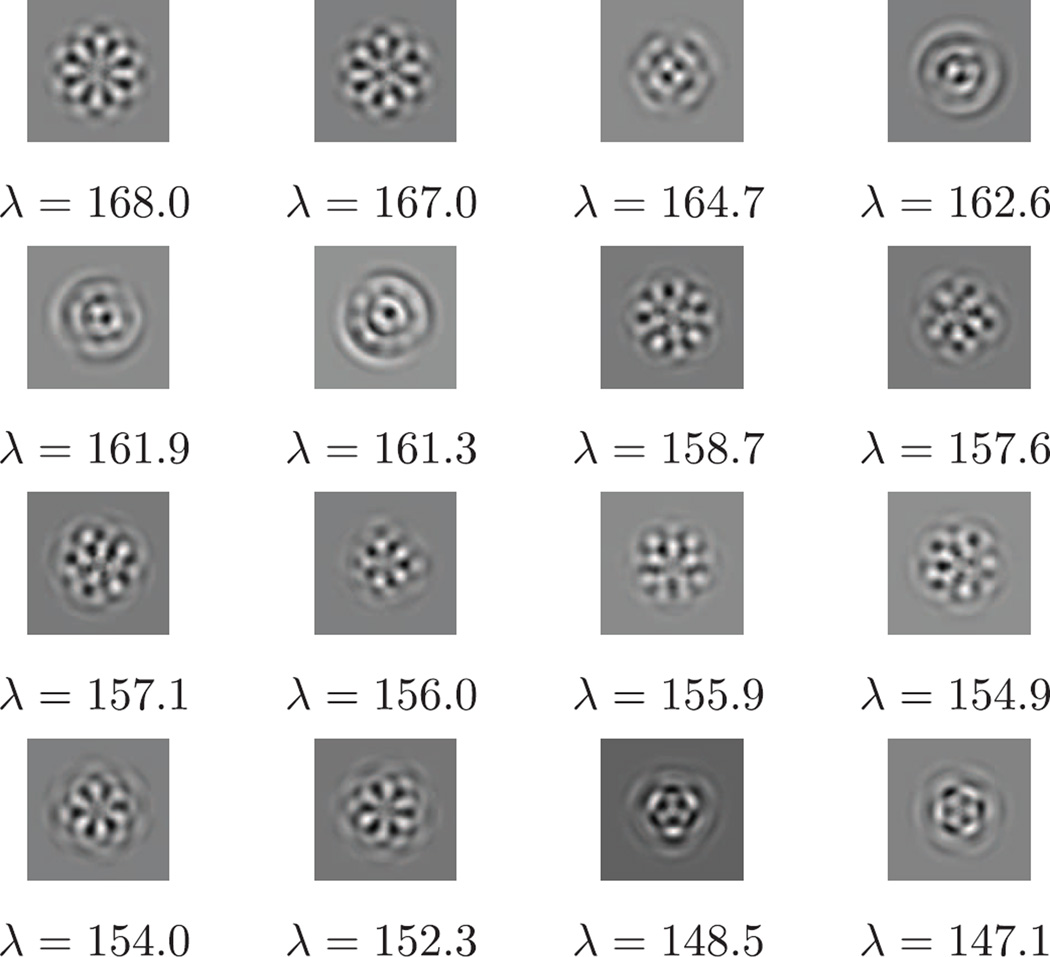

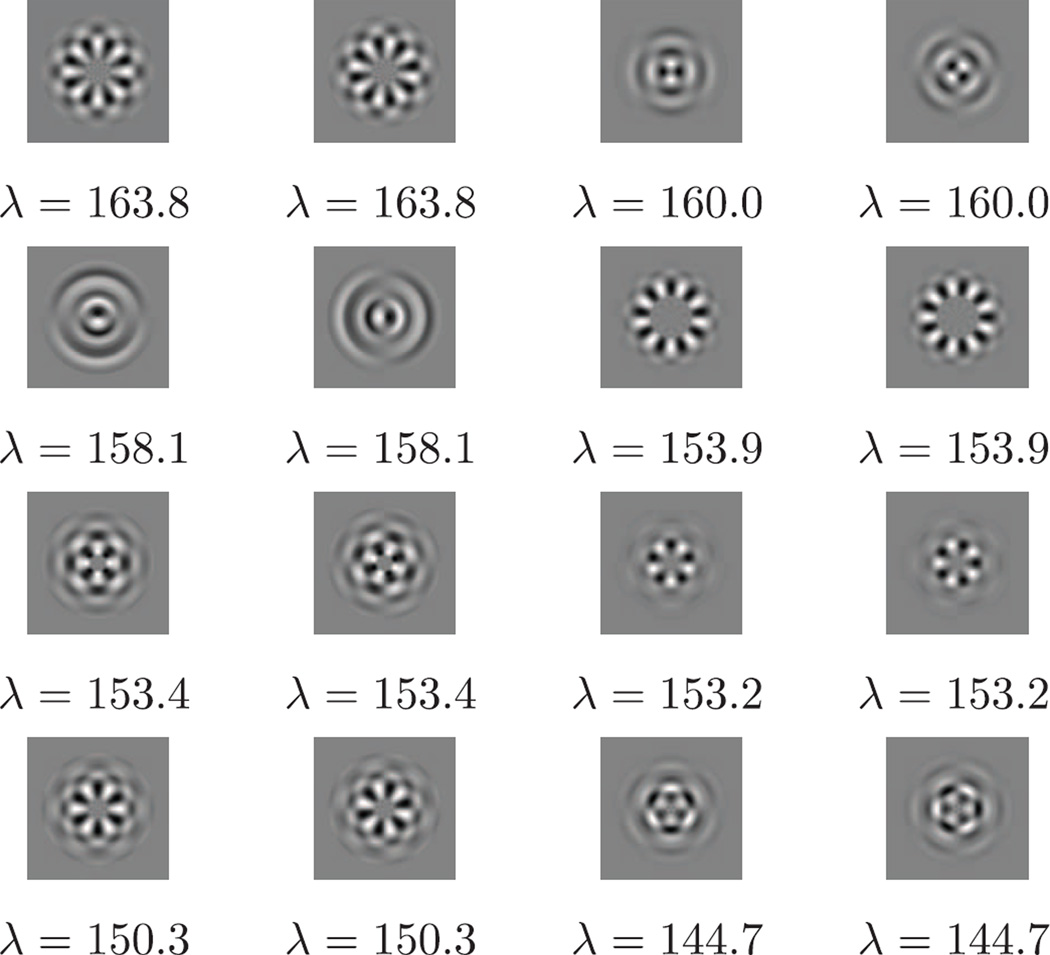

We computed the traditional PCA and FBsPCA on the same dataset in the real image domain (see Fig. 8 for PCA components), which took 60 minutes and 16 minutes, respectively. In order to compare the principal components computed by FFBsPCA with those computed by traditional PCA, we take the inverse Fourier transform of the FFBsPCA components. We do not compute the inverse polar Fourier transform directly, since such a transform is ill-conditioned. Instead, since the FFBsPCA components are linear combinations of the Fourier-Bessel functions as in Eq. (34), we evaluate the steerable principal components on a Cartesian grid in real space using the linear combinations of the functions given by Eq. (5). Those principal components are shown in Fig. 7. Some of the top sixteen principal components computed from traditional PCA and FFBsPCA look very similar, for example, the first three and the last four principal components (see Fig. 7 and Fig. 8). Because the gap between the eigenvalues of the traditional PCA is very small for the components in the middle two rows of Fig. 8, those components become degenerate and therefore look different from the corresponding components in Fig. 7.

Fig. 8.

Traditional PCA principal components in real image domain for the same dataset used in Figures 6 and 7.

Fig. 7.

FFBsPCA principal components (eigenimages in real domain) corresponding to Figure 6.

In our simulation, each noisy projection image I is obtained by contaminating the clean image I_c with additive white Gaussian noise of variance σ² = 9. Given the noise level, we would like to automatically select the appropriate principal components to compress and denoise the noisy images. Since the transformation T* is nearly unitary, the coefficient matrix for each angular index k can be modeled approximately as the sum of the coefficient matrix for the clean images and ε^(k), where ε^(k) is white Gaussian noise with variance σ². In the case when there is no signal, that is, when the clean coefficient matrix is zero, all eigenvalues of the covariance matrix C^(k) from Eqs. (32) and (33) converge to σ² as n goes to infinity, while p_k is fixed. When the clean coefficient matrix is nonzero, components with eigenvalues larger than σ² correspond to the underlying clean signal. In the non-asymptotic regime of a finite number of images, the eigenvalues of the sample covariance matrix from white Gaussian noise spread around σ². The empirical density of the eigenvalues can be approximated by the Marčenko-Pastur distribution with parameter γ_k, the ratio of the dimension p_k to the effective number of samples (defined separately for k = 0 and for k > 0), and the eigenvalues of C^(k) are supported on [λ₋^(k), λ₊^(k)], with λ±^(k) = σ²(1 ± √γ_k)². The principal components corresponding to eigenvalues larger than λ₊^(k) correspond to signal information beyond the noise level. Therefore, with the estimated noise variance σ̂², we denote by λ_i^(k) the eigenvalues of the covariance matrix C^(k), and select the components with eigenvalues

λ_i^(k) > σ̂²(1 + √γ_k)².   (38)

Various ways of selecting principal components from noisy data have been proposed. We refer to [33] for an automatic procedure for estimating the noise variance and the number of components beyond the noise level. For the simulated ribosomal subunit projection images, there are 966 steerable principal radial components above the threshold in Eq. (38), whereas considerably fewer principal components (391) were selected with traditional PCA.
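The selection rule can be sketched in a few lines of Python. The threshold used is the upper edge of the Marčenko-Pastur law, σ̂²(1 + √γ)², which is the standard form and is assumed here to be what Eq. (38) expresses. The parameter γ is passed in as an argument because its definition for each k is not restated here, and the eigenvalues below are toy values, not results from the experiment.

```python
import numpy as np


def select_components(eigenvalues, noise_var, gamma):
    """Return indices of eigenvalues above the Marchenko-Pastur upper edge."""
    threshold = noise_var * (1.0 + np.sqrt(gamma)) ** 2
    eigenvalues = np.asarray(eigenvalues, dtype=float)
    return np.flatnonzero(eigenvalues > threshold), threshold


# Toy eigenvalues for one angular index (made up for illustration).
toy_eigenvalues = [30.0, 12.0, 10.0, 9.0, 8.5]
selected, threshold = select_components(toy_eigenvalues, noise_var=9.0, gamma=0.01)
print("threshold:", threshold)
print("selected indices:", selected)
```

In this toy case the eigenvalue 10.0 exceeds the noise variance of 9 but falls below the finite-sample threshold, so it is treated as noise.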

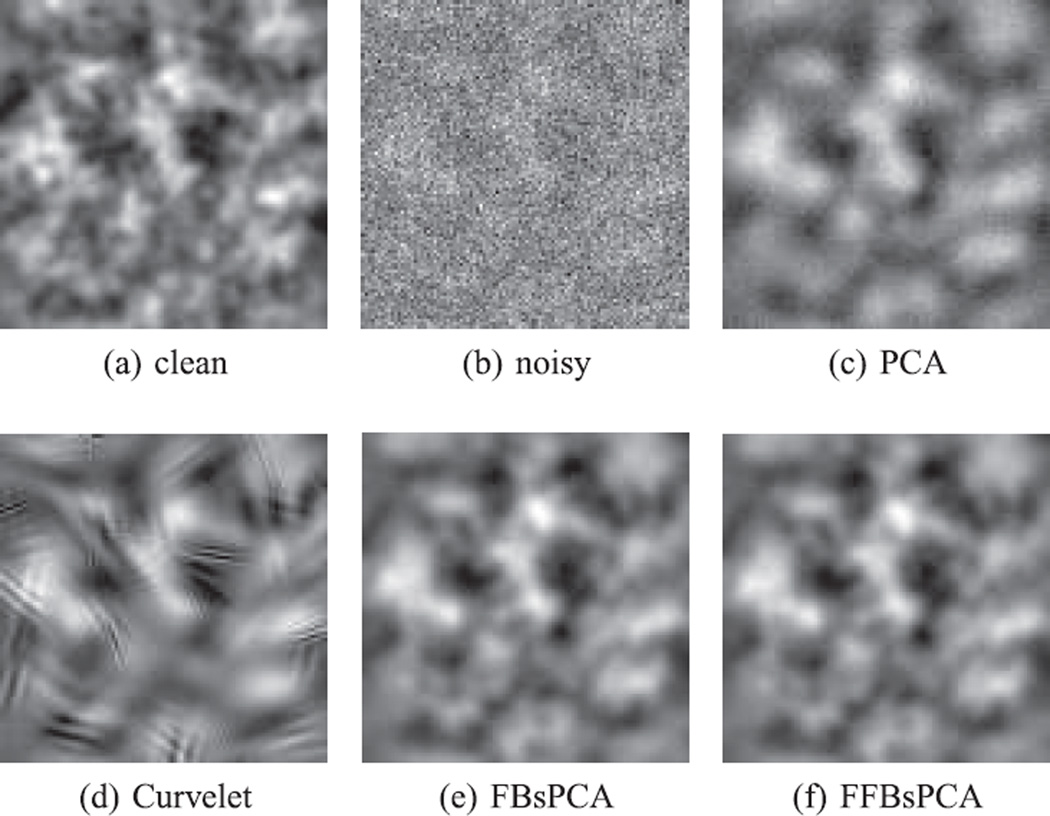

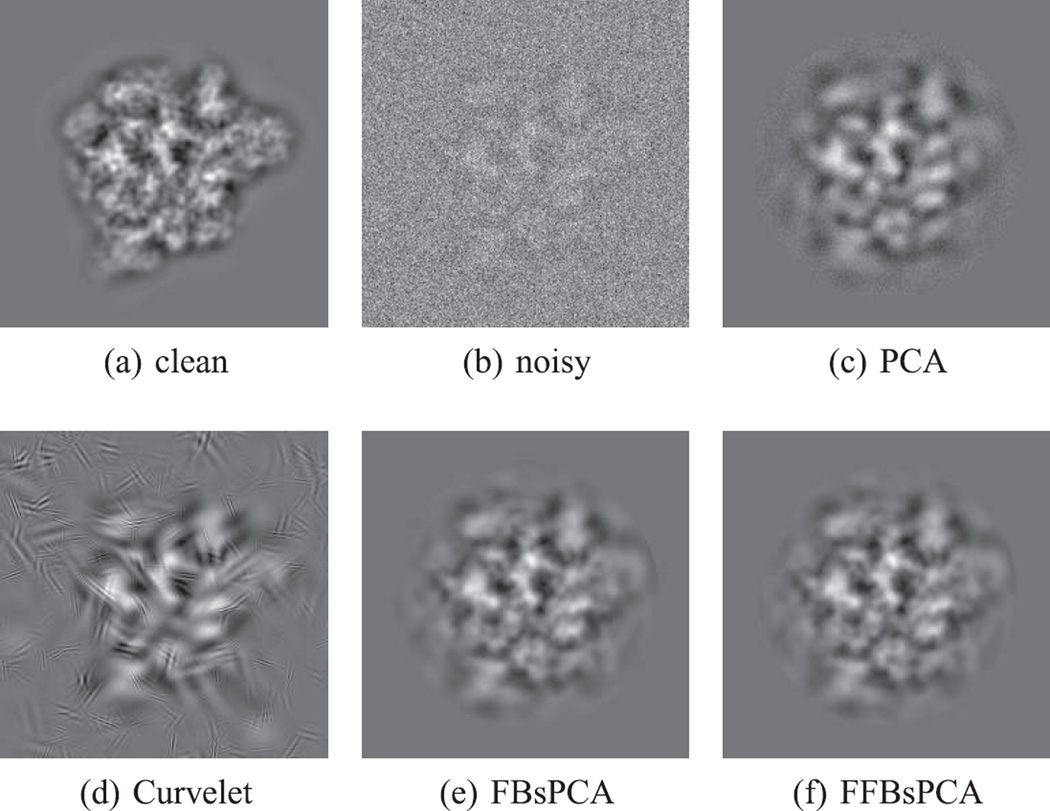

Moreover, we filter the expansion coefficients to get better denoising. To first order approximation, when n ≫ p_k, the noise simply shifts all eigenvalues upward by σ², and this calls for soft thresholding of the sample covariance eigenvalues: (λ − σ²)₊. To correct for the finite sample effect, we can apply more sophisticated shrinkage to the eigenvalues, such as the methods proposed in [34], [35]. Specifically, we applied the shrinkage method in [34] to the coefficients computed by FFBsPCA, FBsPCA, and PCA. Because we were able to use more principal components with FFBsPCA, we recovered finer details of the clean projection images (compare Fig. 10c and Fig. 10f).
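The first-order correction is easy to state in code. The sketch below implements the soft threshold (λ − σ²)₊ and, as an illustrative assumption only, the Wiener-type weights (λ − σ²)₊ / λ that such a threshold suggests for scaling coefficients. It does not reproduce the shrinkage method of [34], and the eigenvalues are made-up toy values.

```python
import numpy as np


def soft_threshold(eigenvalues, noise_var):
    """Soft-threshold sample eigenvalues: (lambda - sigma^2)_+."""
    return np.maximum(np.asarray(eigenvalues, dtype=float) - noise_var, 0.0)


def linear_filter_weights(eigenvalues, noise_var):
    """Wiener-type weights (lambda - sigma^2)_+ / lambda (illustrative assumption)."""
    lam = np.asarray(eigenvalues, dtype=float)
    return soft_threshold(lam, noise_var) / lam


toy_eigenvalues = [12.0, 9.0, 5.0]   # made-up values, not from the experiment
shrunk = soft_threshold(toy_eigenvalues, noise_var=9.0)
weights = linear_filter_weights(toy_eigenvalues, noise_var=9.0)
print(shrunk)
print(weights)
```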

Fig. 10.

Enlarged view of 100 × 100 pixels box at the center of the images in Figure 9.

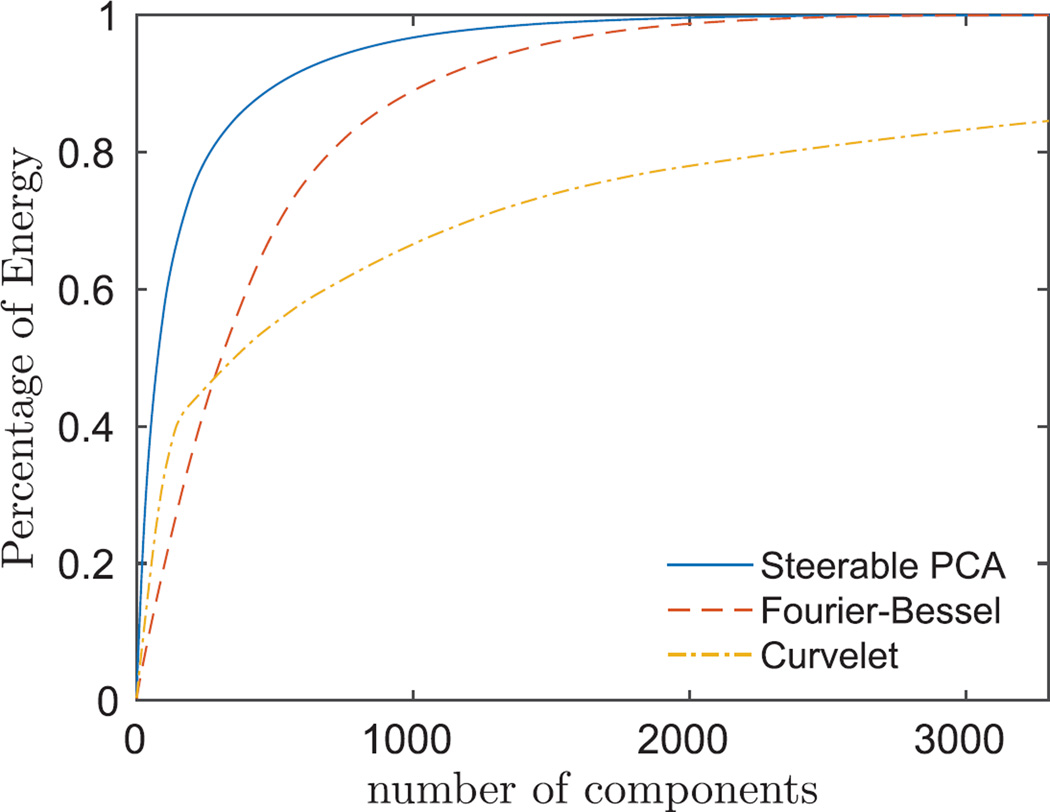

In addition to using data-adaptive bases, we also used a non-isotropic directional multiscale transform, i.e., Curvelet transform [36] with complex block thresholding and cycle spinning, to denoise the images. An example of a denoised image using PCA, Curvelet, FBsPCA, and FFBsPCA is shown in Fig. 9. The steerable PCA basis captures the variance of the clean dataset with fewer components than non-adaptive bases, such as Fourier-Bessel basis or Curvelets (see Fig. 11).

Fig. 9.

Denoising simulated projection images. (a) clean projection image, (b) noisy projection image with SNR = 1/30, (c) denoised projection image using traditional PCA, (d) denoised projection image using Curvelet transform, complex block thresholding and cycle spinning, (e) denoised image using FBsPCA, and (f) denoised image using FFBsPCA.

Fig. 11.

Cumulative variance of FFBsPCA, Fourier-Bessel and Curvelet expansion coefficients of simulated clean ribosome projection images as in Fig. 4a.

We computed the mean squared error (MSE) and peak SNR (PSNR) to quantify the denoising effects (see Tab. IV and Tab. V). Compared with traditional PCA, FFBsPCA reduced the MSE by more than 25% and increased the PSNR by at least 1.3 dB. When the images are of low SNR, Curvelets are unable to outperform data-adaptive bases, such as PCA, FBsPCA and FFBsPCA (see Tab. IV and Tab. V). This experiment shows that FFBsPCA is an efficient and effective procedure for denoising large image datasets.
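For reference, both error measures restricted to a disk of radius R can be computed as in the sketch below. The peak value entering the PSNR is not specified in the text, so taking it to be the dynamic range of the clean image inside the disk is an assumption, as are the function names and the image center at pixel (L // 2, L // 2).

```python
import numpy as np


def disk_mask(L, R):
    """Boolean mask of pixels within distance R of the image center."""
    y, x = np.indices((L, L))
    return np.hypot(x - L // 2, y - L // 2) <= R


def mse_psnr(clean, denoised, R):
    """MSE and PSNR (in dB) over the pixels inside the disk of radius R."""
    mask = disk_mask(clean.shape[0], R)
    err = clean[mask] - denoised[mask]
    mse = np.mean(err ** 2)
    peak = clean[mask].max() - clean[mask].min()   # assumed peak convention
    psnr = 10.0 * np.log10(peak ** 2 / mse)
    return mse, psnr


# Toy example: a single bright pixel and a constant error of 0.1.
clean = np.zeros((21, 21))
clean[10, 10] = 1.0
denoised = clean + 0.1
mse, psnr = mse_psnr(clean, denoised, R=8)
print(f"MSE = {mse:.4f}, PSNR = {psnr:.2f} dB")
```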

TABLE IV.

MSE of Denoised Images Using PCA, Curvelets, FBsPCA and FFBsPCA, All Computed Using Pixels Within R = 98

| MSE (10⁻⁵) | Curvelet | PCA | FBsPCA | FFBsPCA |
|---|---|---|---|---|
| Image 1 | 1.38 | 1.10 | 0.77 | 0.77 |
| Image 2 | 1.63 | 1.29 | 0.95 | 0.96 |
| Image 3 | 1.58 | 1.17 | 0.85 | 0.85 |

TABLE V.

PSNR of Denoised Images Using PCA, Curvelets, FBsPCA and FFBsPCA, All Computed Using Pixels Within R = 98

| PSNR (dB) | Curvelet | PCA | FBsPCA | FFBsPCA |
|---|---|---|---|---|
| Image 1 | 18.10 | 19.06 | 20.62 | 20.63 |
| Image 2 | 17.90 | 18.93 | 20.26 | 20.23 |
| Image 3 | 18.68 | 19.99 | 21.35 | 21.35 |

Finally, we show that steerable PCA denoising is robust to small shifts. We simulated clean data with random shifts in the ±x and ±y directions with maximum shifts equal to 0 (centered images), 5, 10, 15, and 20 pixels. The clean images are corrupted with additive white Gaussian noise of variance 9. As shown in Tab. VI, the denoising performance using FFBsPCA (measured in PSNR) is almost unaffected. The denoising results for centered images in Tab. V and Tab. VI are slightly different because we used different support sizes to evaluate PSNRs.
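A shifted dataset of this kind can be generated along the following lines. The text does not say how the shifts were drawn or applied, so the uniformly distributed integer shifts, the circular wrap-around of `np.roll`, and the function name are assumptions made for this sketch.

```python
import numpy as np


def random_shift(images, max_shift, rng):
    """Shift each image by independent integer offsets in [-max_shift, max_shift].

    images: array of shape (n, L, L). Shifts wrap around circularly.
    """
    shifts = rng.integers(-max_shift, max_shift + 1, size=(len(images), 2))
    shifted = np.empty_like(images)
    for i, (dy, dx) in enumerate(shifts):
        shifted[i] = np.roll(images[i], (int(dy), int(dx)), axis=(0, 1))
    return shifted, shifts


rng = np.random.default_rng(0)
images = rng.standard_normal((5, 16, 16))        # placeholder images
for max_shift in (0, 5, 10, 15, 20):
    shifted, shifts = random_shift(images, max_shift, rng)
    print(max_shift, np.abs(shifts).max())
```

For a particle that is well inside the image frame, the circular wrap-around only moves background pixels across the border.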

TABLE VI.

FFBsPCA Denoising of Images With Maximum Shifts of 0, 5, 10, 15, and 20 Pixels. PSNRs Are Computed With Pixels Within R = 110. The Estimated Compact Support R Increases With Maximum Shift

| Max shift (pixels) | R (pixels) | Image 1 PSNR (dB) | Image 2 PSNR (dB) | Image 3 PSNR (dB) |
|---|---|---|---|---|
| 0 | 98 | 21.61 | 21.16 | 22.34 |
| 5 | 99 | 21.53 | 21.26 | 22.32 |
| 10 | 102 | 21.42 | 21.13 | 22.11 |
| 15 | 107 | 21.59 | 21.31 | 22.18 |
| 20 | 110 | 21.31 | 21.30 | 22.07 |

VI. Conclusion

In this letter we presented a fast Fourier-Bessel steerable PCA method that reduces the computational complexity with respect to the size of the images so that it can handle larger images. The complexity of the new algorithm is O(nL³ + L⁴), compared with O(nL⁴) for the steerable PCA introduced in [9]. The key improvement comes from mapping the images to a polar Fourier grid using NUFFT and evaluating the Fourier-Bessel expansion coefficients by angular 1D FFT and accurate radial integration.

This work has been mostly motivated by its application to cryo-EM single particle reconstruction. Besides compression and denoising of the experimental images required for 2D class averaging [11] and common-lines based 3D ab-initio modeling, FFBsPCA can also be applied in conjunction with Kam’s approach [37] that requires the covariance matrix of the 2D images [38]. The method developed here can also be extended to perform fast principal component analysis of a set of 3D volumes and their rotations. For this purpose, the Fourier-Bessel basis is replaced with the spherical-Bessel basis, and the expansion coefficients can be evaluated by performing the angular integration using a fast spherical harmonics transform [39] followed by radial integration.

Our numerical experiments show that an adaptive basis is necessary for denoising images with very low SNR. Steerable PCA is able to recover more signal components than PCA and achieves better denoising results. It is definitely possible to improve the denoising obtained by just using steerable PCA. For example, we can have more sophisticated dictionary denoising schemes, in which part of the dictionary is made of the steerable principal components and another part of the dictionary is made of wavelets. As these methods require the computation of steerable PCA, computing steerable PCA fast would be useful also for more advanced denoising schemes.

Finally, we remark that the Fourier-Bessel basis can be replaced in our framework with other suitable bases, for example, the 2D prolate spheroidal wave functions (PSWF) on a disk [40]. The 2D prolates also have a separation of variables form which makes them convenient for steerable PCA. A possible advantage of using 2D prolates is that they are optimal in terms of the size of their support.

Acknowledgments

The authors would like to thank Leslie Greengard, Michael O’Neil, and Alex Townsend for valuable discussions.

This work was supported by the NIGMS under award number R01GM090200, in part by the Simons Foundation under award number LTR DTD 06-05-2012, in part by the Moore Foundation DDD Investigator Award, and in part by the Israel Science Foundation under Grant 578/14. The associate editor coordinating the review of this manuscript and approving it for publication was Prof. Alessandro Foi.

Biographies

Zhizhen Zhao received the B.A. and M.Sc. degrees in physics from Trinity College, Cambridge University, Cambridge, U.K., and the Ph.D. degree in physics from Princeton University, Princeton, NJ, USA, in 2008 and 2013, respectively. Since September 2013, she has been with the Courant Institute of Mathematical Sciences, New York University, New York, NY, USA. She is currently a Courant Instructor with NYU. Her research interests include applied and computational harmonic analysis, signal processing, data analysis, and applications in structural biology and atmospheric and oceanic sciences.

Yoel Shkolnisky received the B.Sc. degree in mathematics and computer science, and the M.Sc. and Ph.D. degrees in computer science from Tel-Aviv University, Tel-Aviv, Israel, in 1996, 2001, and 2005, respectively. From July 2005 to July 2008, he was a Gibbs Assistant Professor in applied mathematics with the Department of Mathematics, Yale University, New Haven, CT, USA. Since October 2009, he has been with the Department of Applied Mathematics, School of Mathematical Sciences, Tel-Aviv University. His research interests include computational harmonic analysis, scientific computing, and data analysis.

Amit Singer received the B.Sc. degree in physics and mathematics and the Ph.D. degree in applied mathematics from Tel-Aviv University, Tel-Aviv, Israel, in 1997 and 2005, respectively. He is a Professor of Mathematics and a Member of the Executive Committee of the Program in Applied and Computational Mathematics (PACM), Princeton University, Princeton, NJ, USA. He joined Princeton University as an Assistant Professor in 2008. From 2005 to 2008, he was a Gibbs Assistant Professor in Applied Mathematics with the Department of Mathematics, Yale University, New Haven, CT, USA. His research in applied mathematics focuses on theoretical and computational aspects of data science, and on developing computational methods for structural biology. He served in the Israeli Defense Forces from 1997 to 2003. He was the recipient of the Moore Investigator in Data-Driven Discovery (2014), the Simons Investigator Award (2012), the Presidential Early Career Award for Scientists and Engineers (2010), the Alfred P. Sloan Research Fellowship (2010), and the Haim Nessyahu Prize for Best PhD in Mathematics in Israel (2007).

Footnotes

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Contributor Information

Zhizhen Zhao, Email: jzhao@cims.nyu.edu, Courant Institute of Mathematical Sciences, New York University, New York, NY 10012 USA.

Yoel Shkolnisky, Department of Applied Mathematics, School of Mathematical Sciences, Tel Aviv University, Tel Aviv 69978, Israel.

Amit Singer, Department of Mathematics and Program in Applied and Computational Mathematics, Princeton University, Princeton, NJ 08544 USA.

REFERENCES

- 1. van Heel M, Frank J. Use of multivariate statistics in analysing the images of biological macromolecules. Ultramicroscopy. 1981;6(2):187–194. doi: 10.1016/0304-3991(81)90059-0.

- 2. Frank J. Three-Dimensional Electron Microscopy of Macromolecular Assemblies: Visualization of Biological Molecules in Their Native State. London, U.K: Oxford Univ. Press; 2006.

- 3. Buades A, Coll B, Morel J-M. A non-local algorithm for image denoising. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recog. (CVPR) 2005;2:60–65.

- 4. Donoho DL. De-noising by soft-thresholding. IEEE Trans. Inf. Theory. 1995 May;41(3):613–627.

- 5. Hilai R, Rubinstein J. Recognition of rotated images by invariant Karhunen-Loève expansion. J. Opt. Soc. Amer. A. 1994;11(5):1610–1618.

- 6. Perona P. Deformable kernels for early vision. IEEE Trans. Pattern Anal. Mach. Intell. 1995 May;17(5):488–499.

- 7. Uenohara M, Kanade T. Optimal approximation of uniformly rotated images: Relationship between Karhunen-Loève expansion and discrete cosine transform. IEEE Trans. Image Process. 1998 Jan;7(1):116–119. doi: 10.1109/83.650856.

- 8. Ponce C, Singer A. Computing steerable principal components of a large set of images and their rotations. IEEE Trans. Image Process. 2011 Nov;20(11):3051–3062. doi: 10.1109/TIP.2011.2147323.

- 9. Zhao Z, Singer A. Fourier-Bessel rotational invariant eigenimages. J. Opt. Soc. Amer. A. 2013;30(5):871–877. doi: 10.1364/JOSAA.30.000871.

- 10. Vonesch C, Stauber F, Unser M. Design of steerable filters for the detection of micro-particles. Proc. 10th IEEE Int. Symp. Biomed. Imag. (ISBI’13)/Nano Macro. 2013:934–937.

- 11. Zhao Z, Singer A. Rotationally invariant image representation for viewing direction classification in cryo-EM. J. Struct. Biol. 2014;186(1):153–166. doi: 10.1016/j.jsb.2014.03.003.

- 12. Lenz R. Group-theoretical model of feature extraction. J. Opt. Soc. Amer. A. 1989;6:827–834.

- 13. Lenz R. Group invariant pattern recognition. Pattern Recognit. 1990;23:199–217.

- 14. Lenz R. Steerable filters and invariant recognition in spacetime. Proc. Int. Conf. Acoust. Speech Signal Process. 1998. pp. 2737–2740.

- 15. Lenz R, Bui TH, Hernández-Andrés J. Group theoretical structure of spectral spaces. J. Math. Imag. Vis. 2005;23:297–313.

- 16. Lenz R. Investigation of receptive fields using representations of the dihedral groups. J. Vis. Commun. Image Represent. 1995;6(3):209–277.

- 17. Jogan M, Zagar E, Leonardis A. Karhunen-Loève expansion of a set of rotated templates. IEEE Trans. Image Process. 2003 Jul;12(7):817–825. doi: 10.1109/TIP.2003.813141.

- 18. Klug A, Crowther RA. Three-dimensional image reconstruction from the viewpoint of information theory. Nature. 1972;238:435–440.

- 19. Dutt A, Rokhlin V. Fast Fourier transforms for nonequispaced data. SIAM J. Sci. Comput. 1993;14(6):1368–1393.

- 20. Fessler JA, Sutton BP. Nonuniform fast Fourier transforms using min-max interpolation. IEEE Trans. Signal Process. 2003 Feb;51(2):560–574.

- 21. Greengard L, Lee J. Accelerating the nonuniform fast Fourier transform. SIAM Rev. 2004;46(3):443–454.

- 22. Fenn M, Kunis S, Potts D. On the computation of the polar FFT. Appl. Comput. Harmon. Anal. 2007;22:257–263.

- 23. O’Neil M, Woolfe F, Rokhlin V. An algorithm for the rapid evaluation of special function transforms. Appl. Comput. Harmon. Anal. 2010;28:203–226.

- 24. Townsend A. A fast analysis-based discrete Hankel transform using asymptotic expansions. 2015. arxiv.org/abs/1501.01652.

- 25. Watson GN. Theory of Bessel Functions. Cambridge, U.K: Cambridge Univ. Press; 1944.

- 26. Abramowitz M, Stegun IA. Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. New York, NY, USA: Dover; 1964.

- 27. Olver FWJ. A further method for the evaluation of zeros of Bessel functions and some new asymptotic expansions for zeros of functions of large order. Math. Proc. Cambridge Philosoph. Soc. 1951;47:699–712.

- 28. Elbert Á. Some recent results on the zeros of Bessel functions and orthogonal polynomials. J. Comput. Appl. Math. 2001;133(1–2):65–83.

- 29. Breen S. Uniform upper and lower bounds on the zeros of Bessel functions of the first kind. J. Math. Anal. Appl. 1995;196:1–17.

- 30. Press WH, Flannery BP, Teukolsky SA, Vetterling WT. Numerical Recipes in FORTRAN 77: The Art of Scientific Computing. Cambridge, U.K: Cambridge Univ. Press; 1986.

- 31. Rattey PA, Lindgren AG. Sampling the 2-D radon transform. IEEE Trans. Acoust. Speech Signal Process. 1981 Oct;ASSP-29(5):994–1002.

- 32. Brown A, et al. Structure of the large ribosomal subunit from human mitochondria. Science. 2014;346(6210):718–722. doi: 10.1126/science.1258026.

- 33. Kritchman S, Nadler B. Determining the number of components in a factor model from limited noisy data. Chemometr. Intell. Lab. 2008;94:19–32.

- 34. Singer A, Wu H-T. Two-dimensional tomography from noisy projections taken at unknown random directions. SIAM J. Imag. Sci. 2013;6(1):136–175. doi: 10.1137/090764657.

- 35. Donoho DL, Gavish M, Johnstone IM. Optimal shrinkage of eigenvalues in the spiked covariance model. 2014. arxiv.org/abs/1311.0851. doi: 10.1214/17-AOS1601.

- 36. Candes EJ, Demanet L, Donoho DL, Ying L. Fast discrete curvelet transforms. SIAM Multiscale Model. Simul. 2005;5(3):861–899.

- 37. Kam Z. The reconstruction of structure from electron micrographs of randomly oriented particles. J. Theor. Biol. 1980;82:15–39. doi: 10.1016/0022-5193(80)90088-0.

- 38. Bhamre T, Zhang T, Singer A. Orthogonal matrix retrieval in cryo-electron microscopy. Proc. 12th IEEE Int. Symp. Biomed. Imag. (ISBI’15) 2015:1048–1052. doi: 10.1109/ISBI.2015.7164051.

- 39. Rokhlin V, Tygert M. Fast algorithms for spherical harmonic expansions. SIAM J. Sci. Comput. 2006;27(6):1903–1928.

- 40. Slepian D. Prolate spheroidal wave functions, Fourier analysis, and uncertainty—IV: Extensions to many dimensions, generalized prolate spheroidal wave functions. Bell Syst. Tech. J. 1964;43:3009–3057.