Abstract

Introduction

Opioid overdose is a leading cause of death in the USA. Internet-based teaching can improve medical knowledge among trainees, but there are limited data to show the effect of Internet-based teaching on clinical competence in medical training, including management of opioid poisoning.

Methods

We used an ecological design to assess the effect of an Internet-based teaching module on the management of a simulated opioid-poisoned patient. We enrolled two consecutive classes of post-graduate year-1 residents from a single emergency medicine program. The first group (RA) was instructed to read a toxicology textbook chapter and the second group (IT) took a brief Internet training module. All participants subsequently managed a simulated opioid-poisoned patient. The participants’ performance was evaluated with two types of checklists (simple and time-weighted), along with global assessment scores.

Results

Internet-trained participants performed better on both checklist scales. The difference between mean simple checklist scores by the IT and RA groups was 0.23 (95 % CI, 0.016–0.44). The difference between mean time-weighted checklist scores was 0.27 (95 % CI, 0.048–0.49). When measured by global assessment, there was no statistically significant difference between RA and IT participants.

Conclusion

These data suggest that the Internet module taught basic principles of management of the opioid-poisoned patient. In this scenario, global assessment and checklist assessment may not measure the same proficiencies. These encouraging results are not sufficient to show that this Internet tool improves clinical performance. We should assess the impact of the Internet module on performance in a true clinical environment.

Keywords: Opioids, Simulation, Medical education, Toxicology

Introduction

Internet Training in Medical Education

Drug overdose has become the leading cause of death from injury in the USA [1]. More than half of these deaths are from opioids, leading prominent experts to conclude that we are in the midst of an opioid epidemic [2]. Medical toxicologists have an ideal skillset to teach identification and management of drug overdose. However, medical toxicologists are not available to teach bedside clinical skills to all trainees [3]. An asynchronous Internet-based program is an ideal modality to allow medical toxicology educators to reach learners. Internet-based education has been used to teach ambulatory medicine and surgery curricula [4, 5]. Internet-based teaching can improve medical knowledge among resident physicians, and these knowledge gains can be readily assessed through standardized testing. However, acquisition and advancement of clinical skills are comparatively difficult to evaluate. Moreover, there are limited data to show the effect of Internet-based teaching on clinical skills in medical training, including medical toxicology [6, 7].

Evaluation of Clinical Skill

Clinical skills are difficult to evaluate in actual patient care settings because patients and clinical conditions cannot be standardized. Patient presentations and characteristics vary significantly, even with identical chief complaints. Further, in the clinical environment, the trainee works as part of a team, making it difficult for the evaluator to isolate the performance of a single trainee. A medical trainee with a clinical deficiency can compensate by collaborating with colleagues who have more advanced clinical skills. This may result in optimal care of the patient, but it does not facilitate evaluation of the clinical skills of a single trainee. Finally, in the real clinical environment, the teaching physician/evaluator is responsible for different clinical duties that compete for time and attention. The supervising physician’s ability to evaluate the medical trainee’s clinical knowledge and skills is limited by the length, frequency, and depth of supervisor-trainee interaction.

Medical simulation is an ideal assessment tool for clinical competence, allowing standardization of scenarios and evaluation of trainees in isolation. Moreover, medical simulation poses no risk of harm to patients. Simulation has been used as an assessment tool to measure the performance of medical trainees in pediatric resuscitation, perioperative emergencies, and adult medical resuscitations [8–10].

The goal of this study was to train emergency medicine residents using an Internet-based teaching tool and to evaluate their performance in a simulated opioid poisoning scenario using checklist and global assessment tools.

Methods

We used an ecological design to assess the effect of an Internet-based teaching module on the management of a simulated opioid-poisoned patient. The Johns Hopkins Medicine Institutional Review Board approved this study. We enrolled two consecutive classes of post-graduate year-1 (PGY-1) residents from a single emergency medicine residency program. Each class was enrolled during its first week of residency, before starting clinical training, so the two classes completed all study procedures approximately 1 year apart. We assigned each residency class to a different training condition. One class (IT) received Internet-based training before the simulation scenario. The comparator class (RA) was assigned a reading in a toxicology textbook but did not receive the Internet-based training. Both groups of trainees were surveyed about their prior medical school experience in emergency medicine, medical toxicology, and medical simulation. The RA group was assigned to read a textbook chapter on opioid poisoning; the reading was assigned 7 days before the simulation, and trainees were allowed to complete it on their own time during that period.

The IT group completed a brief Internet-based training module on the management of toxicologic coma 1 to 7 days before participating in the medical simulation; the module was assigned 7 days before the simulation, and trainees completed it on their own time during that period. The Internet module, titled “Approach to the Toxicologic Coma,” consisted of a multiple-choice pretest, a case-based curriculum, and a multiple-choice post-test. The module addressed the initial management of the poisoned patient, including assessment of airway, breathing, circulation, bedside glucose, and physical examination to identify a toxicologic syndrome. The module post-test was previously validated for assessment of medical knowledge in the approach to the toxicology patient [11].

All participants (RA and IT) individually completed an identical simulation scenario using Laerdal SimMan Classic®. The case scenario was presented to the participants as an unknown exposure. The simulation case, adapted from a medical simulation textbook, involved a patient with opioid-induced CNS and respiratory depression (Fig. 1) [12].

Fig. 1.

The simulation case

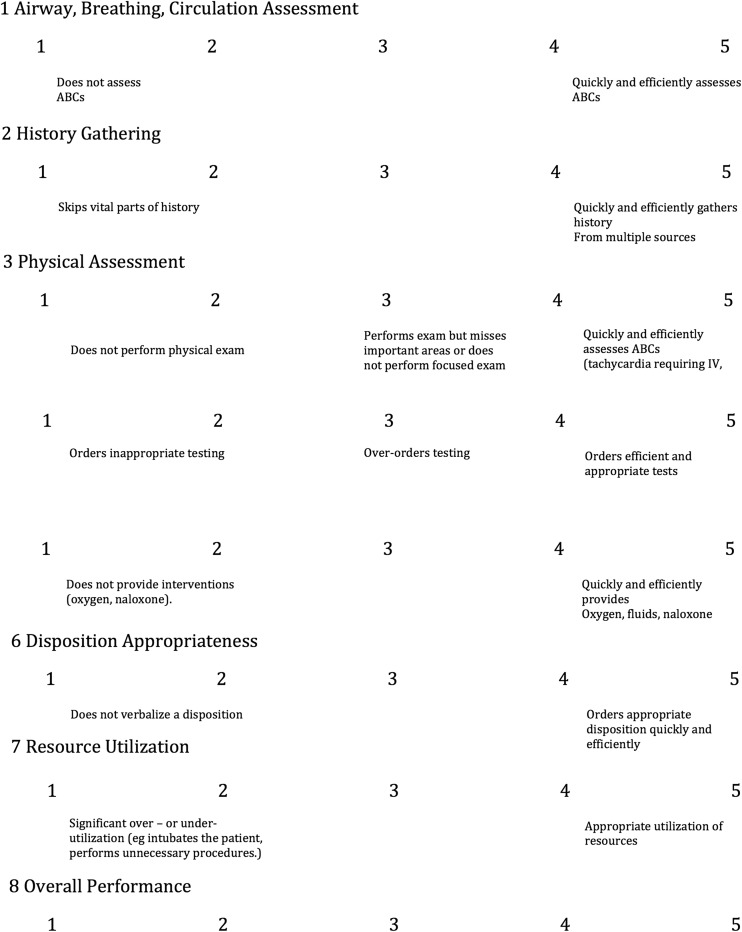

Each participant was video recorded, and each recording was evaluated by a reviewer who was unfamiliar with the participants and blinded to the training condition. Performance was scored with three assessment tools: a simple checklist, which awarded a point for each selected critical action the participant completed (Fig. 2); a time-weighted version of the same checklist (Fig. 2), which awarded a point for a critical action only if it was completed within 3 min; and a global scoring tool (Fig. 3), which recorded the reviewer’s general impression of the participant’s performance in several domains.
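The difference between the two checklist scores can be illustrated with a minimal sketch. It assumes that the reviewer records, for each item, the elapsed seconds at which the participant first performed it (or None if never), that items are equally weighted, and that a score is the fraction of items earned, which matches the 0–1 scale of Tables 2 and 3. The item keys follow the rows of those tables; the full checklist in Fig. 2 is not reproduced here, and treating exactly 180 s as "within 3 min" is also an assumption.

```python
from typing import Dict, Optional

# Hypothetical item keys, taken from the rows of Tables 2 and 3.
ITEMS = ["glucose", "naloxone", "pupils", "respiratory_rate", "skin"]
TIME_LIMIT_S = 180  # assumed inclusive 3-minute window for the time-weighted checklist


def simple_score(times: Dict[str, Optional[float]]) -> float:
    """Fraction of checklist items performed at any point in the scenario.

    `times` maps each item to the elapsed seconds at which it was first
    observed, or None if the participant never performed it.
    """
    done = sum(1 for item in ITEMS if times.get(item) is not None)
    return done / len(ITEMS)


def time_weighted_score(times: Dict[str, Optional[float]],
                        limit: float = TIME_LIMIT_S) -> float:
    """Fraction of checklist items performed within `limit` seconds."""
    done = sum(1 for item in ITEMS
               if times.get(item) is not None and times[item] <= limit)
    return done / len(ITEMS)


# A made-up participant: naloxone given after 3 min, skin never examined.
example = {"glucose": 95.0, "naloxone": 240.0, "pupils": 60.0,
           "respiratory_rate": 30.0, "skin": None}
print(simple_score(example))         # 0.8
print(time_weighted_score(example))  # 0.6
```

Under these assumptions a late action still counts on the simple checklist but not on the time-weighted one, which is why the time-weighted scores in Table 3 can only be equal to or lower than the corresponding simple scores in Table 2.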

Fig. 2.

Evaluation checklist

Fig. 3.

Global assessment scale

The checklists were derived from simulation literature, objective structured clinical examination scoring scales, and medical toxicology literature, and then refined for this scenario based on the performance of skilled trainees in a validation study [13]. The global scoring tool was adapted to this scenario from a published tool [14]. Scores for the two groups were compared using unpaired t tests.
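The Methods state only that scores were compared with unpaired t tests; they do not say whether the pooled-variance (Student) or unequal-variance (Welch) form was used. The sketch below assumes the pooled-variance form and uses only the Python standard library, approximating the t distribution numerically (Simpson's rule for the CDF, bisection for the critical value). Individual participant scores are not reported, so the example inputs are hypothetical. With SciPy available, `scipy.stats.ttest_ind(a, b, equal_var=True)` returns the same t statistic and two-sided p-value.

```python
import math
from statistics import mean, variance


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with `df` degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def t_cdf(x: float, df: int, steps: int = 2000) -> float:
    """CDF via Simpson's rule on [0, |x|] plus symmetry about zero.

    Intended for moderate |x| and df, as in a two-group comparison of ~20 people.
    """
    a = abs(x)
    h = a / steps
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return 0.5 + area if x >= 0 else 0.5 - area


def t_quantile(p: float, df: int) -> float:
    """Inverse CDF for 0.5 < p < 1, found by bisection on [0, 50]."""
    lo, hi = 0.0, 50.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def unpaired_t_test(a, b, conf=0.95):
    """Pooled-variance t test of mean(b) - mean(a), with a confidence interval."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    pooled = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    se = math.sqrt(pooled * (1 / n1 + 1 / n2))
    diff = mean(b) - mean(a)
    t = diff / se
    p = min(1.0, max(0.0, 2 * (1 - t_cdf(abs(t), df))))  # two-sided
    crit = t_quantile(1 - (1 - conf) / 2, df)
    return diff, (diff - crit * se, diff + crit * se), t, p


# Hypothetical per-participant checklist scores (not study data).
ra = [0.4, 0.6, 0.4, 0.2, 0.6]
it = [0.8, 0.6, 1.0, 0.8, 0.6]
diff, ci, t, p = unpaired_t_test(ra, it)
print(f"IT - RA = {diff:.2f}, 95% CI ({ci[0]:.2f}, {ci[1]:.2f}), t = {t:.2f}, p = {p:.3f}")
```

Passing the RA scores first makes the reported difference IT minus RA, the direction used in the Results.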

Results

All members of each residency class agreed to participate in the study.

The IT group (n = 12) had one more eligible participant than the RA group (n = 11) because the RA class included one new resident who was not a PGY-1 and was therefore excluded from the study. Both groups were similar regarding their medical school experience with emergency medicine, medical simulation, and medical toxicology (Table 1).

Table 1.

Baseline characteristics of reading assignment and Internet-trained participants

| | Female | Med school simulated patient experience (yes) | Med school tox rotation experience (yes) | Med school EM rotation experience (no. of 4-week rotations) |
|---|---|---|---|---|
| Reading assignment (n = 11) | 0.45 (0.15–0.76) | 0.54 (0.24–0.85) | 0.18 (−0.06–0.42) | 2.32 (1.89–2.74) |
| Internet trained (n = 12) | 0.33 (0.05–0.61) | 0.42 (0.14–0.70) | 0 (–) | 2.13 (1.80–2.36) |

Values are proportions (yes/no items) or means (EM rotations), with 95 % CI in parentheses.
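The intervals in Table 1 appear consistent with a simple normal-approximation interval, mean ± 1.96 × (sample SD / √n), applied to 0/1 data. This is our reconstruction, not a method stated in the text, and the counts used below (5 of 11 and 4 of 12 female participants; 2 of 11 with a toxicology rotation) are inferred from the reported proportions. Because this interval is not truncated to [0, 1], it explains bounds such as −0.06 here and values above 1 in Tables 2 and 3.

```python
import math
from statistics import mean, stdev


def normal_approx_ci(values, z=1.96):
    """Mean +/- z * (sample SD / sqrt(n)).

    For 0/1 data the mean is a proportion; the interval is not clipped
    to [0, 1], so bounds can fall below 0 or above 1.
    """
    m = mean(values)
    half = z * stdev(values) / math.sqrt(len(values))
    return m, m - half, m + half


def binary(k, n):
    """k ones and n - k zeros, e.g. k residents answering 'yes' out of n."""
    return [1] * k + [0] * (n - k)


# Female participants, counts inferred from Table 1.
for label, k, n in [("RA", 5, 11), ("IT", 4, 12)]:
    m, lo, hi = normal_approx_ci(binary(k, n))
    print(f"{label}: {m:.2f} ({lo:.2f}-{hi:.2f})")
# RA: 0.45 (0.15-0.76)
# IT: 0.33 (0.05-0.61)
```

An interval designed for proportions, such as the Wilson score interval, would stay within [0, 1] and is generally better behaved for samples this small.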

All IT participants completed the Internet-based curriculum. The IT participants required a median of 22.5 min to complete the Internet-based curriculum. The mean pretest score during the Internet training was 67.61 % (95 % confidence interval (CI), 60.28–72.94) and the mean post-test score was 99.08 % (95 % CI, 97.26–100). We did not measure the adherence of the RA group with the assigned reading, and there was no post-test administered to the RA group. Both IT and RA groups performed the simulated patient scenario.

Our simulation results are summarized in Tables 2, 3, and 4. When measured by the simple checklist, the IT participants performed better than the RA participants (Table 2). The difference between mean scores was 0.23 (95 % CI, 0.016–0.44). When measured by the time-weighted checklist, the IT participants also performed better than the RA participants (Table 3). The difference between the mean scores was 0.27 (95 % CI, 0.048–0.49). For every checklist item, the point estimate for the IT residents was higher than that for the RA residents. When measured by global assessment, there was no statistically significant difference between the IT and RA participants (Table 4).

Table 2.

Results of simple checklist scoring

| | Reading assignment (n = 11) | Internet-trained residents (n = 12) |
|---|---|---|
| Simple checklist | | |
| Glucose assessment | 0.36 (0.065–0.66) | 0.42 (0.13–0.71) |
| Naloxone administration | 0.81 (0.58–1.06) | 0.92 (0.75–1.08) |
| Pupil assessment | 0.63 (0.34–0.93) | 0.75 (0.49–1.01) |
| Respiratory rate assessment | 0.55 (0.24–0.85) | 0.83 (0.61–1.05) |
| Skin assessment | 0.10 (−0.09–0.27) | 0.67 (0.39–0.95) |
| Overall simple checklist mean score | 0.49 (0.44–0.55) | 0.72 (0.65–0.79) |
| Difference (IT − RA) | 0.23 (95 % CI, 0.016–0.44) | |

Values are means on a 0–1 scale (95 % CI). p < 0.05 for the difference.

Table 3.

Results of time-weighted checklist scoring

| | Reading assignment (n = 11) | Internet-trained residents (n = 12) |
|---|---|---|
| Time-weighted checklist | | |
| Glucose assessment | 0.36 (0.07–0.66) | 0.42 (0.12–0.71) |
| Naloxone administration | 0.55 (0.24–0.85) | 0.58 (0.29–0.87) |
| Pupil assessment | 0.45 (0.14–0.76) | 0.75 (0.49–1.01) |
| Respiratory rate assessment | 0.45 (0.15–0.76) | 0.83 (0.61–1.05) |
| Skin assessment | 0.09 (−0.09–0.27) | 0.67 (0.39–0.95) |
| Overall time-weighted checklist mean score | 0.38 (0.33–0.44) | 0.65 (0.57–0.73) |
| Difference (IT − RA) | 0.27 (95 % CI, 0.048–0.49) | |

Values are means on a 0–1 scale (95 % CI). p < 0.05 for the difference.

Table 4.

Global assessment

| | Reading assignment (n = 11) | Internet-trained residents (n = 12) |
|---|---|---|
| Global assessment (1–5) | | |
| Airway, breathing | 3.18 (2.47–3.89) | 3.42 (2.53–4.30) |
| History gathering | 2.72 (2.1–3.35) | 3.00 (2.52–3.48) |
| Physical assessment | 2.82 (2.48–3.15) | 2.92 (2.54–3.29) |
| Diagnostic testing | 2.45 (1.88–3.03) | 2.18 (1.47–2.89) |
| Therapeutic interventions | 2.64 (2.24–3.04) | 3.27 (2.7–3.84) |
| Disposition determination | 3.09 (2.38–3.80) | 2.58 (1.92–3.24) |
| Resource utilization | 2.82 (2.21–3.43) | 2.92 (2.30–3.53) |
| General performance | 2.73 (2.35–3.10) | 2.83 (2.20–3.46) |
| Overall score | 2.81 (2.76–2.86) | 2.89 (2.81–2.97) |
| Difference (IT − RA) | 0.08 | |

Values are mean scores on a 1–5 scale (95 % CI). p > 0.05 for the difference.
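The overall rows in Tables 2–4 match the unweighted mean of each group's item-level point estimates. The sketch below, which assumes equal item weights (our inference from the arithmetic, not a stated method), recomputes the overall scores and the IT − RA differences from the values printed in the tables. It reproduces 0.49 vs 0.72 and 0.38 vs 0.65 for the checklists, and 2.81 vs 2.89 on the global scale, a positive IT − RA difference of about 0.08.

```python
from statistics import mean

# Item-level point estimates copied from Tables 2-4 as (RA items, IT items).
TABLES = {
    "simple checklist": (
        [0.36, 0.81, 0.63, 0.55, 0.10],
        [0.42, 0.92, 0.75, 0.83, 0.67],
    ),
    "time-weighted checklist": (
        [0.36, 0.55, 0.45, 0.45, 0.09],
        [0.42, 0.58, 0.75, 0.83, 0.67],
    ),
    "global assessment": (
        [3.18, 2.72, 2.82, 2.45, 2.64, 3.09, 2.82, 2.73],
        [3.42, 3.00, 2.92, 2.18, 3.27, 2.58, 2.92, 2.83],
    ),
}


def overall_scores(ra_items, it_items):
    """Unweighted mean of item estimates per group and the IT - RA difference."""
    ra, it = mean(ra_items), mean(it_items)
    return ra, it, it - ra


for name, (ra_items, it_items) in TABLES.items():
    ra, it, diff = overall_scores(ra_items, it_items)
    print(f"{name}: RA {ra:.2f}, IT {it:.2f}, IT - RA {diff:+.2f}")
```

Averaging point estimates recovers the overall means but not their confidence intervals, which depend on the individual participant scores.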

Discussion

We used a simulated opioid-poisoned patient to measure the performance of emergency medicine trainees and to compare participants who received brief Internet training with those who were assigned reading only. The RA group was intended to serve as an “active control.” We chose a reading assignment because we felt it represents a typical “standard of care” way to prepare trainees for a medical simulation session. We considered assigning this group to “no training,” but felt that leaving the comparator group completely untrained might bias results in favor of the Internet-trained group.

The participants were 1 week into their residency and had not yet begun clinical rotations, so their level of training was equivalent to that of a medical school graduate. Overall, the IT participants scored better than the RA participants on both the simple and time-weighted checklists; that is, the IT participants were more likely to carry out the actions measured by the checklists. The point estimate for IT was greater than that for RA on every item of both checklists, but the skin assessment (in both checklists) was the only item for which the 95 % CIs of the IT and RA groups did not overlap. These data suggest that the brief Internet module may have taught the participants some basic principles of management of the opioid-poisoned patient.
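The overlap check described above can be made explicit with the item-level intervals from Table 2. The sketch below treats each 95 % CI as a closed interval. Note that non-overlap of two separate 95 % CIs is a stricter criterion than a significant difference at p < 0.05: two intervals can overlap while the difference between groups is still significant, so overlap alone does not rule out item-level differences.

```python
# 95 % CIs for the simple-checklist items, copied from Table 2 as
# (RA (low, high), IT (low, high)).
SIMPLE_CIS = {
    "Glucose assessment": ((0.065, 0.66), (0.13, 0.71)),
    "Naloxone administration": ((0.58, 1.06), (0.75, 1.08)),
    "Pupil assessment": ((0.34, 0.93), (0.49, 1.01)),
    "Respiratory rate assessment": ((0.24, 0.85), (0.61, 1.05)),
    "Skin assessment": ((-0.09, 0.27), (0.39, 0.95)),
}


def intervals_overlap(a, b):
    """True if closed intervals a = (low, high) and b = (low, high) share any point."""
    return a[0] <= b[1] and b[0] <= a[1]


non_overlapping = [item for item, (ra, it) in SIMPLE_CIS.items()
                   if not intervals_overlap(ra, it)]
print(non_overlapping)  # ['Skin assessment']
```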

The IT group did not differ significantly from the RA group on global assessment. The global assessment scores of the two groups were similar: 2.81 (95 % CI, 2.76–2.86) for RA versus 2.89 (95 % CI, 2.81–2.97) for IT. This finding may reflect a limitation of the global assessment instrument in detecting differences in resuscitation performance in this instance. Alternatively, it may indicate that global assessment and checklist assessment do not measure the same proficiencies in this clinical scenario. Checklists may be better measures of performance in emergency situations with specific key critical actions (such as naloxone administration), whereas global performance ratings may be superior in settings such as patient interviews [15]. Global performance scales may also be more difficult for raters to apply than simple binary checklists [16], and they may be more subjective than checklists, because the scale gives the rater less specific guidance.

There are several limitations to this study. First, participants were not randomized individually. Each participant was assigned a training condition based on the year they began residency, so all members of the same residency class received the identical training condition. It is therefore possible that the difference in checklist scores reflects different levels of baseline clinical knowledge and skill in the two classes rather than the effect of the Internet training module. Moreover, because we used an ecological design, the second group (IT) completed the module 1 year later, as the opioid epidemic continued to grow in scope and prominence in the news media, and its members may have been more familiar with opioid poisoning than the earlier (RA) group. Despite these limitations, we believe that the IT and RA groups are comparable, because they had similar medical school experience with simulation, emergency medicine, and clinical toxicology (Table 1).

A second limitation is that we do not know how many of the RA participants completed their assigned preparation, reading a textbook chapter, whereas every member of the IT group completed the Internet training module. It is possible, perhaps likely, that many of the RA participants did not read the chapter; in other words, many of them may have been effectively “untrained” compared with the IT participants, who had 100 % adherence with the training. However, difficulty in assuring and verifying adherence is a real-world limitation of assigned reading, whereas Internet learning makes tracking adherence relatively easy, and tracking completion is a typical feature of Internet training modules.

Additionally, although the simulation case was presented as an unknown, the participants likely knew that they were encountering a toxicology case because the faculty member leading the session is a clinical toxicologist. However, this potential bias would be present for all participants, not just those in one group or the other.

Another limitation is the use of a single reviewer. Multiple reviewers would have been ideal to reduce subjectivity in the trainees’ evaluation and to provide summary scores that better reflect each trainee’s performance. However, because the reviewer was blinded to the training condition and unfamiliar with the residents, we believe the risk of bias from subjectivity was reduced. Finally, we do not know whether the difference in resuscitation skill measured by the checklists will translate to real-world resuscitations. In the clinical environment, trainees work in teams of EM physicians (residents and attending physicians), not in isolation.

Our results are encouraging, but this study is not sufficient to show that this Internet tool improves clinical performance. Future research should evaluate the effectiveness of the Internet module in a trial that randomizes individual participants. We should also investigate the effect of the Internet tool on the management of patients in the clinical environment. A short Internet-based training that could improve actual clinical performance would be an exciting and useful tool for teaching our trainees to treat opioid poisoning effectively during the current opioid epidemic in the USA.

Compliance with Ethical Standards

The Johns Hopkins Medicine Institutional Review Board approved this study.

Conflicts of Interest

None

Sources of Funding

None

References

- 1. McCarthy M. Drug overdose has become leading cause of death from injury in US. BMJ. 2015;350:h3328. doi: 10.1136/bmj.h3328.

- 2. Rudd RA, Aleshire N, Zibbell JE, Gladden RM. Increases in drug and opioid overdose deaths—United States, 2000-2014. MMWR Morb Mortal Wkly Rep. 2016;64:1378–1382. doi: 10.15585/mmwr.mm6450a3.

- 3. White SR, Baker B, Baum CR, Harvey A, Korte R, Avery AN, et al. 2007 survey of medical toxicology practice. J Med Toxicol. 2010;6:281–285. doi: 10.1007/s13181-010-0044-3.

- 4. Gold JP, Begg WB, Fullerton D, Mathisen D, Olinger G, Orringer M, et al. Successful implementation of a novel internet hybrid surgery curriculum: the early phase outcome of thoracic surgery prerequisite curriculum e-learning project. Ann Surg. 2004;240:499–507. doi: 10.1097/01.sla.0000137139.63446.35.

- 5. Sisson SD, Rastegar DA, Hughes MT, Bertram AK, Yeh HC. Learner feedback and educational outcomes with an internet-based ambulatory curriculum: a qualitative and quantitative analysis. BMC Med Educ. 2012;12:55. doi: 10.1186/1472-6920-12-55.

- 6. Stolbach A, Lemkin E, Sisson S, Heverling H, Hayes B. Internet-based teaching can improve medical knowledge, but there are limited data to show the effect of internet-based teaching on clinical skills [abstract only]. Clin Toxicol. 2012;50:580–720.

- 7. Kulier R, Gülmezoglu AM, Zamora J, et al. Effectiveness of a clinically integrated e-learning course in evidence-based medicine for reproductive health training: a randomized trial. JAMA. 2012;308:2218–2225. doi: 10.1001/jama.2012.33640.

- 8. Schmutz J, Manser T, Keil J, Heimberg E, Hoffmann F. Structured performance assessment in three pediatric emergency scenarios: a validation study. J Pediatr. 2015;166:1498–504.e1. doi: 10.1016/j.jpeds.2015.03.015.

- 9. McEvoy MD, Smalley JC, Nietert PJ, et al. Validation of a detailed scoring checklist for use during advanced cardiac life support certification. Simul Healthc. 2012;7:222–235. doi: 10.1097/SIH.0b013e3182590b07.

- 10. McEvoy MD, Hand WR, Furse CM, Field LC, Clark CA, Moitra VK, et al. Validity and reliability assessment of detailed scoring checklists for use during perioperative emergency simulation training. Simul Healthc. 2014;9:295–303. doi: 10.1097/SIH.0000000000000048.

- 11. Stolbach A, Hayes B, Heverling H, Sisson S, Lemkin E. Successful short and long-term educational outcomes in residents using internet toxidromes curriculum [abstract only]. Clin Toxicol. 2012;50:627–628.

- 12. Omron R, Heverling H, Stolbach A. Toxicologic emergencies. In: Thoureen TL, Scott SB, editors. Emergency medicine simulation workbook. New York: Wiley-Blackwell; 2013. pp. 203–225.

- 13. Vasquez V, Cordeiro M, Heverling H, Stolbach A. Derivation of a checklist score for evaluation of trainee performance in a toxicological medical simulation scenario [abstract only]. Clin Toxicol. 2014;52:719.

- 14. Kim J, Neilipovitz D, Cardinal P, Chiu M. A comparison of global rating scale and checklist scores in the validation of an evaluation tool to assess performance in the resuscitation of critically ill patients during simulated emergencies (abbreviated as “CRM simulator study IB”). Simul Healthc. 2009;4:6–16. doi: 10.1097/SIH.0b013e3181880472.

- 15. Hodges B, Regehr G, McNaughton N, Tiberius R, Hanson M. OSCE checklists do not capture increasing levels of expertise. Acad Med. 1999;74:1129–1134. doi: 10.1097/00001888-199910000-00017.

- 16. Morgan PJ, Cleave-Hogg D, Guest CB. A comparison of global ratings and checklist scores from an undergraduate assessment using an anesthesia simulator. Acad Med. 2001;76:1053–1055. doi: 10.1097/00001888-200110000-00016.