Abstract

Many accounts of decision making and reinforcement learning posit the existence of two distinct systems that control choice: a fast, automatic system and a slow, deliberative system. Recent research formalizes this distinction by mapping these systems to “model-free” and “model-based” strategies in reinforcement learning. Model-free strategies are computationally cheap, but sometimes inaccurate, because action values can be accessed by inspecting a look-up table constructed through trial-and-error. In contrast, model-based strategies compute action values through planning in a causal model of the environment, which is more accurate but also more cognitively demanding. It is assumed that this trade-off between accuracy and computational demand plays an important role in the arbitration between the two strategies, but we show that the hallmark task for dissociating model-free and model-based strategies, as well as several related variants, does not embody such a trade-off. We describe five factors that reduce the effectiveness of the model-based strategy on these tasks by reducing its accuracy in estimating reward outcomes and decreasing the importance of its choices. Based on these observations, we describe a version of the task that formally and empirically obtains an accuracy-demand trade-off between model-free and model-based strategies. Moreover, we show that human participants spontaneously increase their reliance on model-based control on this task, compared to the original paradigm. Our novel task and our computational analyses may prove important in subsequent empirical investigations of how humans balance accuracy and demand.

Author Summary

When you make a choice about what groceries to get for dinner, you can rely on two different strategies. You can make your choice by relying on habit, simply buying the items you need to make a meal that is second nature to you. However, you can also plan your actions in a more deliberative way, realizing that the friend who will join you is a vegetarian, and therefore you should not make the burgers that have become a staple in your cooking. These two strategies differ in how computationally demanding and accurate they are. While the habitual strategy is less computationally demanding (costs less effort and time), the deliberative strategy is more accurate. Scientists have been able to study the distinction between these strategies using a task that allows them to measure how much people rely on habit and planning strategies. Interestingly, we have discovered that in this task, the deliberative strategy does not increase performance accuracy, and hence does not induce a trade-off between accuracy and demand. We describe why this happens, and improve the task so that it embodies an accuracy-demand trade-off, providing evidence for theories of cost-based arbitration between cognitive strategies.

Introduction

Theoretical accounts of decision making emphasize a distinction between two systems competing for control of behavior [1–6]: one that is fast and automatic, and one that is slow and deliberative. These systems occupy different points along a trade-off between accuracy and computational demand (henceforth demand), making each one suitable for particular task demands. This raises the problem of arbitration: how does the brain adaptively determine which system to use at any given time? Answering this question depends on models and experimental tasks that embody the accuracy-demand trade-off at the heart of dual-system models.

Recent research formalizes the dual-system architecture in the framework of reinforcement learning [7, 8], a computational approach to value-guided decision-making that we describe in further detail below. The application of reinforcement learning methods to dual-process models of decision-making sparked an explosion of empirical and theoretical developments over the past decade because it offers a computationally precise characterization of the distinction between “automatic” and “controlled” processes for the task of value-guided decision-making. Current research assumes that experimental methods grounded in reinforcement learning also capture a trade-off between accuracy (the proportion of value-maximizing actions) and computational demand (the minimization of computational effort and related costs), but this assumption remains largely untested (cf. [9]).

Currently, the dominant method that aims to dissociate mechanisms of behavioral control within the reinforcement learning framework is the “two-step task” introduced by Daw, Gershman, Seymour, Dayan, and Dolan [8] (Fig 1A), which we describe in detail in the next section. This task has proven to be a useful and popular tool to characterize the neural [8, 10–18], behavioral [19–31] and clinical [32–35] implications of dual-process models within the reinforcement learning framework. However, in this paper we argue that the two-step task does not induce a trade-off between accuracy and demand: Our simulations show that the “deliberative” strategy does not increase performance accuracy on the task. These simulations mirror a recent report by Akam, Costa, and Dayan [9], who also show that the two-step task does not embody a trade-off between model-based control and reward. Here, we expand on that result by showing that it holds across an exhaustive range of reinforcement learning parameters. Furthermore, we show that the same shortcoming is present in other, more recent variants of the task that have been reported. We then identify five factors that collectively restrict the accuracy benefits posited to arise from model-based control. Finally, we describe a novel task that induces the accuracy-demand trade-off, while retaining the ability to dissociate formally between distinct processes of behavioral control.

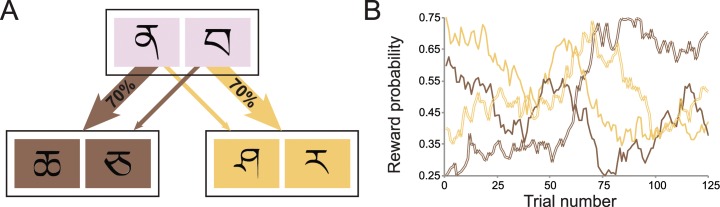

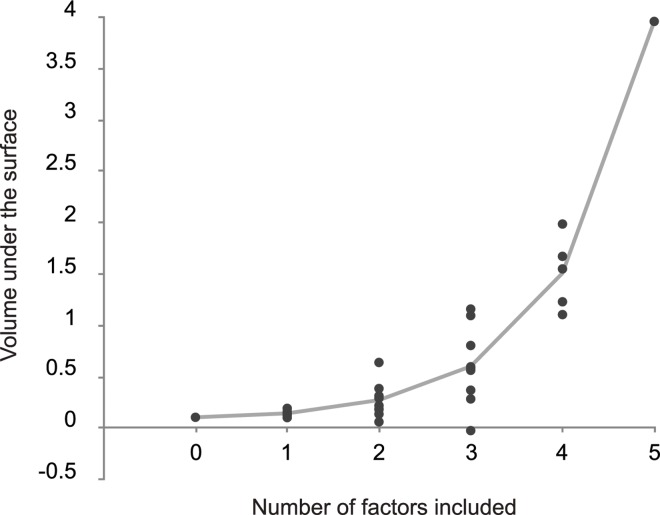

Fig 1. Design of the Daw two-step task.

(A) State transition structure of the original two-step paradigm. Each first-stage choice has a high probability of transitioning to one of two states and a low probability of transitioning to the other. Each second-stage choice is associated with a probability of obtaining a binary reward. (B) To encourage learning, the second-stage reward probabilities change slowly over the course of the experiment.

Dual-process models in the reinforcement learning setting

The fundamental problem in reinforcement learning is estimation of state-action values (cumulative future reward), which an agent then uses to choose actions. In the dual-system theory, the fast and automatic system corresponds to a “model-free” reinforcement learning strategy, which estimates state-action values from trial-and-error learning [36, 37]. In essence, this strategy is an elaborated version of Thorndike’s Law of Effect: actions that previously led to reward are more likely to be taken in the future. The strategy is “model-free” because it has no representation of the environment’s causal structure (i.e., the transition function between states and the reward function in each state). Instead, it incrementally constructs a look-up table or function approximation from which values can be quickly computed. However, this strategy can lead to errors if the environment changes, because the entire value function must be incrementally updated to accommodate changes. In addition, the strategy can produce sub-optimal credit assignment [8], a property we explore below. These forms of brittleness illustrate how model-free learning gives rise to “habits”—fast but inflexible response tendencies stamped in by repetition.

The slow and deliberative system corresponds to a “model-based” learning strategy that possesses operating characteristics complementary to the model-free strategy. This strategy learns an explicit causal model of the environment, which it uses to construct plans (e.g., by dynamic programming or tree search). In contrast to the habitual nature of the model-free strategy, the capacity to plan enables the model-based strategy to flexibly pursue goals. While more computationally expensive (hence slower and more effortful) than the model-free approach, it has the potential to be more accurate, because changes in the environment can be immediately incorporated into the model. The availability of a causal model also allows the model-based strategy to solve the credit-assignment problem optimally.

The dual-system framework sketched above can account for important findings in the reinforcement learning literature, such as insensitivity to outcome devaluation following overtraining of an action-reward contingency [7, 38]. Furthermore, the framework has spurred a wealth of new research on the neural [8, 10–13, 39, 40] and behavioral implications of competition and cooperation between reinforcement learning strategies [19–25, 29, 41, 42].

How might the brain arbitrate between model-free and model-based strategies? Since the model-based strategy attains more accurate performance through effortful computation, people can (up to a point) increase reward by engaging this system. However, in time-critical decision making settings, the model-based strategy may be too slow to be useful. Furthermore, if cognitive effort enters into the reward function [43–45], then it may be rational to prefer the model-free strategy in situations where the additional cognitive effort of model-based planning does not appreciably increase reward. It has been hypothesized that this trade-off between accuracy and demand plays a pivotal role in the arbitration between the two strategies [38, 46–50], but so far direct evidence for arbitration has been sparse [51].

Methods

The Daw two-step task

Here, we will first describe in detail the design of the Daw two-step task, and the reinforcement-learning model of this task [8]. Next, we will show through computational simulations that model-based planning on this task does not yield increased performance accuracy. Finally, we will discuss several factors that contribute to this shortcoming in the current approach in this two-step task, and related paradigms.

Experimental design

In the two-step task, participants make a series of choices between two stimuli, which lead probabilistically to one of two second-stage states (Fig 1A). These second-stage states require a choice between stimuli that offer different probabilities of obtaining monetary reward. To encourage learning, the reward probabilities of these second-stage choices change slowly and independently throughout the task (Fig 1B), according to a Gaussian random walk (mean = 0, σ = 0.025) with reflecting boundaries at 0.25 and 0.75. Crucially, each first-stage option leads more frequently (70%) to one of the second-stage states (a “common” transition), whereas it leads to the other state in a minority of the choices (a “rare” transition).
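The drifting reward probabilities described above can be sketched in a few lines of Python. This is a minimal illustration rather than the original task code: the function names, the uniform starting value, and the default number of trials are assumptions made here, while the step size (σ = 0.025) and the reflecting boundaries (0.25 and 0.75) come from the text.

```python
import random

def reflect(p, lo=0.25, hi=0.75):
    """Fold a value back into [lo, hi] by reflecting it off the boundaries."""
    while p < lo or p > hi:
        if p < lo:
            p = 2 * lo - p
        if p > hi:
            p = 2 * hi - p
    return p

def drifting_probabilities(n_trials=200, sd=0.025, lo=0.25, hi=0.75, rng=None):
    """One reward-probability series following a reflecting Gaussian random walk."""
    rng = rng or random.Random()
    p = rng.uniform(lo, hi)  # assumption: uniform starting point within the bounds
    series = []
    for _ in range(n_trials):
        series.append(p)
        # Zero-mean Gaussian step, then reflection at the boundaries
        p = reflect(p + rng.gauss(0.0, sd), lo, hi)
    return series

# Four independent series, one per second-stage action
rewards = [drifting_probabilities(rng=random.Random(seed)) for seed in range(4)]
```

Reflection (rather than clipping) keeps the walk from sticking to a boundary, which is one common reading of "reflecting boundaries".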

These low-probability transitions allow for a behavioral dissociation between habitual and goal-directed choice. Since the model-free strategy is insensitive to the structure of the task, it will simply increase the likelihood of performing an action if it previously led to reward, regardless of whether this reward was obtained after a common or rare transition (Fig 2A). Choice dictated by the model-based strategy, on the other hand, reflects an interaction between the transition type and reward on the previous trial (Fig 2B). This strategy will decrease the tendency of repeating a first-stage action after a reward and a rare transition, since the alternative first-stage action is more likely to lead to the previously rewarded second-stage state. Empirically, behavioral performance on this task reflects a mixture of these two strategies (Fig 2C). That is, the stay probability shows a main effect of reward, increasing when the previous trial was rewarded, but also shows the model-based crossover interaction between the previous transition and reward.
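The stay-probability analysis described here can be illustrated with a short Python sketch. The trial format (a tuple of first-stage action, transition type, and reward) and the function name are hypothetical choices made for this example, not the format used in the original studies.

```python
from collections import defaultdict

def stay_probabilities(trials):
    """Probability of repeating the first-stage action, split by the previous
    trial's transition type and reward.

    trials: ordered list of (first_stage_action, transition, reward) tuples,
    where transition is 'common' or 'rare' and reward is 0 or 1.
    """
    counts = defaultdict(lambda: [0, 0])  # (transition, reward) -> [stays, total]
    for prev, cur in zip(trials, trials[1:]):
        key = (prev[1], prev[2])
        counts[key][0] += int(cur[0] == prev[0])  # did the agent repeat its choice?
        counts[key][1] += 1
    return {key: stays / total for key, (stays, total) in counts.items()}

# Toy data: four trials give three (previous, current) pairs
example = [("aA", "common", 1), ("aA", "rare", 1),
           ("aB", "common", 0), ("aB", "common", 0)]
print(stay_probabilities(example))
```

A purely model-free agent would show a difference only between rewarded and unrewarded cells, whereas a purely model-based agent would show the crossover between transition type and reward.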

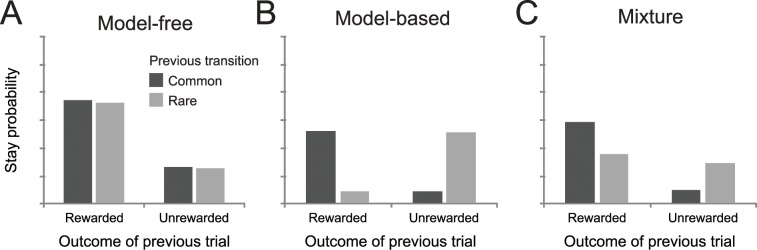

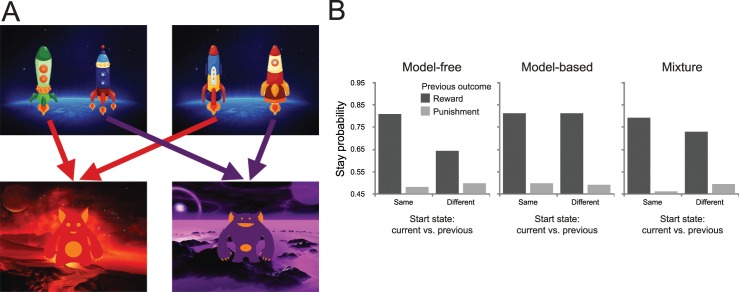

Fig 2. Probability of repeating the first-stage choice for three agents.

(A) For model-free agents, the probability of repeating the previous choice is dependent only on whether a reward was obtained, and not on transition structure. (B) Model-based behavior is reflected in an interaction between previous transition and outcome, increasing the probability of transitioning to the state where reward was obtained. (C) Behavioral performance on this task reflects features of both model-based and model-free decision making, the main effect of previous reward and its interaction with the previous transition.

Computational model

Behavior on the Daw two-step task can be modeled using an established dual-system reinforcement-learning model [7, 8, 40]. The task consists of three states across two stages (first stage: sA; second stage: sB and sC), all with two available actions (aA and aB). The model consists of model-based and model-free strategies that both learn a function Q(s, a) mapping each state-action pair to its expected discounted future return. On trial t, the first-stage state (always sA) is denoted by s1,t, the second-stage state by s2,t (sB or sC), the first- and second-stage actions by a1,t and a2,t, and the first- and second-stage rewards by r1,t (always zero, since reward is delivered only at the second stage) and r2,t.

Model-free strategy. The model-free agent uses the SARSA(λ) temporal difference learning algorithm [52], which updates the value for each state-action pair (s, a) at stage i and trial t according to:

$$Q_{MF}(s, a) \leftarrow Q_{MF}(s, a) + \alpha \, \delta_{i,t} \, e_{i,t}(s, a),$$

where

$$\delta_{i,t} = r_{i,t} + Q_{MF}(s_{i+1,t}, a_{i+1,t}) - Q_{MF}(s_{i,t}, a_{i,t})$$

is the reward prediction error, α is the learning rate parameter (which determines to what degree new information is incorporated), and ei,t(s,a) is an eligibility trace set equal to 0 at the beginning of each trial and updated according to

$$e_{i,t}(s_{i,t}, a_{i,t}) = e_{i-1,t}(s_{i,t}, a_{i,t}) + 1$$

before the Q-value update. The eligibilities of all state-action pairs are then decayed by λ after the update.

We now describe how these learning rules apply specifically to the two-step task. The reward prediction error differs between the two stages of the task. Since r1,t is always zero, the reward prediction error at the first stage is driven by the value of the selected second-stage action QMF(s2,t,a2,t):

$$\delta_{1,t} = Q_{MF}(s_{2,t}, a_{2,t}) - Q_{MF}(s_{1,t}, a_{1,t}).$$

Since there is no third stage, the second-stage prediction error is driven by the reward r2,t:

$$\delta_{2,t} = r_{2,t} - Q_{MF}(s_{2,t}, a_{2,t}).$$

Both the first- and second-stage values are updated at the second stage, with the first-stage values receiving a prediction error down-weighted by the eligibility trace decay, λ. Thus, when λ = 0, only the values of the current state get updated.
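As an illustration of how these update rules play out within a single trial, the following Python sketch applies both prediction errors in sequence. It is a minimal reading of the description above, not the authors' code: the dictionary representation of Q and the function name are assumptions, and the eligibility trace is folded into the factor `lam` applied to the second-stage prediction error.

```python
def sarsa_lambda_trial(q, s2, a1, a2, reward, alpha=0.5, lam=0.5):
    """Apply the two within-trial model-free updates in place.

    q maps (state, action) -> value; the first-stage state is always 'sA'.
    Returns the first- and second-stage reward prediction errors.
    """
    s1 = "sA"
    # Stage 1: no reward, so the error is driven by the chosen second-stage value
    delta1 = q[(s2, a2)] - q[(s1, a1)]
    q[(s1, a1)] += alpha * delta1          # eligibility of (s1, a1) is 1 here
    # Stage 2: no further state, so the error is driven by the reward alone
    delta2 = reward - q[(s2, a2)]
    q[(s2, a2)] += alpha * delta2          # eligibility of (s2, a2) is 1
    q[(s1, a1)] += alpha * lam * delta2    # first-stage trace has decayed to lam
    return delta1, delta2

# Example: all values start at zero and the trial is rewarded
q = {(s, a): 0.0 for s in ("sA", "sB", "sC") for a in ("aA", "aB")}
sarsa_lambda_trial(q, s2="sB", a1="aA", a2="aB", reward=1)
# q[("sB", "aB")] is now 0.5 and q[("sA", "aA")] is now 0.25
```

Setting `lam=0` in this sketch leaves the first-stage value untouched by the reward, which matches the statement that only the values of the current state are updated in that case.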

Model-based strategy. The model-based algorithm works by learning a transition function that maps the first-stage state-action pairs to a probability distribution over the subsequent states, and then combining this function with the second-level model-free values (i.e., the immediate reward predictions) to compute cumulative state-action values by iterative expectation. In other words, the agent first decides which first-stage action leads to which second-stage state, and then learns the reward values for the second-stage actions.

At the second stage, the learning of the immediate rewards is equivalent to the model-free learning, since those Q-values are simply an estimate of the immediate reward r2,t. As we showed above, the SARSA learning rule reduces to a delta-rule for predicting the immediate reward. This means that the two approaches coincide at the second stage, and so we set QMB = QMF at this level.

The model-based values are defined in terms of Bellman’s equation [37], which specifies the expected values of each first-stage action using the transition structure P (assumed to be fully known to the agent):

$$Q_{MB}(s_A, a_j) = P(s_B \mid s_A, a_j) \max_{a \in \{a_A, a_B\}} Q_{MF}(s_B, a) + P(s_C \mid s_A, a_j) \max_{a \in \{a_A, a_B\}} Q_{MF}(s_C, a),$$

where we have assumed these are recomputed at each trial from the current estimates of the transition probabilities and second-stage reward values.
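The model-based computation amounts to a one-step expectation over second-stage states, which the following Python sketch makes concrete. The transition table, including which action commonly leads to which state, and the example values are assumptions chosen for illustration; only the 70% common-transition probability comes from the task description.

```python
def model_based_values(q_mf, transitions, actions=("aA", "aB")):
    """First-stage model-based values: the expected best second-stage value.

    q_mf maps (state, action) -> second-stage value.
    transitions maps first-stage action -> {second-stage state: probability}.
    """
    q_mb = {}
    for a1, probs in transitions.items():
        q_mb[a1] = sum(
            p * max(q_mf[(state, a)] for a in actions)
            for state, p in probs.items()
        )
    return q_mb

# Assumed mapping: aA commonly leads to sB, aB commonly leads to sC
transitions = {"aA": {"sB": 0.7, "sC": 0.3},
               "aB": {"sB": 0.3, "sC": 0.7}}
q_mf = {("sB", "aA"): 0.6, ("sB", "aB"): 0.4,
        ("sC", "aA"): 0.3, ("sC", "aB"): 0.5}
q_mb = model_based_values(q_mf, transitions)
# q_mb["aA"] is approximately 0.7 * 0.6 + 0.3 * 0.5 = 0.57
# q_mb["aB"] is approximately 0.3 * 0.6 + 0.7 * 0.5 = 0.53
```

Because the values are recomputed from the current second-stage estimates on every trial, a change in a second-stage value affects the first-stage preference immediately, without any further first-stage experience.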

Decision rule. To connect the values to choices, the Q-values are mixed according to a weighting parameter w:

$$Q_{net}(s_A, a_j) = w \, Q_{MB}(s_A, a_j) + (1 - w) \, Q_{MF}(s_A, a_j).$$

Again, at the second stage the decision is made using only the model-free values. We used the softmax rule to translate these Q-values to actions. This rule computes the probability for an action, reflecting the combination of the model-based and model-free action values weighted by an inverse temperature parameter. At both stages, the probability of choosing action a on trial t is computed as

$$P(a_{i,t} = a \mid s_{i,t}) = \frac{\exp\left(\beta \, Q_{net}(s_{i,t}, a)\right)}{\sum_{a'} \exp\left(\beta \, Q_{net}(s_{i,t}, a')\right)},$$

where the inverse temperature β determines the randomness of the choice. Specifically, when β → ∞ the probability of the action with the highest expected value tends to 1, whereas for β → 0 the probabilities over actions become uniform.
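The decision rule can be sketched as two small Python functions: one that mixes the model-based and model-free values with the weight w, and one that applies the softmax. The function names and example values are illustrative assumptions; subtracting the maximum before exponentiating is a standard numerical safeguard and does not change the resulting probabilities.

```python
import math

def mix_values(q_mb, q_mf, w):
    """Weighted combination of model-based and model-free first-stage values."""
    return {a: w * q_mb[a] + (1 - w) * q_mf[a] for a in q_mb}

def softmax_probs(q_net, beta):
    """Choice probabilities under a softmax with inverse temperature beta."""
    top = max(q_net.values())
    exps = {a: math.exp(beta * (v - top)) for a, v in q_net.items()}
    total = sum(exps.values())
    return {a: e / total for a, e in exps.items()}

# Example values (assumed): the two systems disagree about the better action
q_net = mix_values({"aA": 0.8, "aB": 0.2}, {"aA": 0.4, "aB": 0.6}, w=0.5)
probs = softmax_probs(q_net, beta=5.0)

# beta = 0 gives uniform choice; a large beta approaches deterministic choice
uniform = softmax_probs(q_net, beta=0.0)
greedy = softmax_probs(q_net, beta=1000.0)
```

With w = 1 the mixed values equal the model-based values, and with w = 0 they equal the model-free values, so w indexes the relative reliance on planning.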

Simulation of the accuracy-demand trade-off

In order to test whether the Daw two-step task embodies a trade-off between goal-directed behavior and reward, we estimated the relationship between control (model based vs. model free) and reward by Monte Carlo simulation.

For each simulation, we generated a new set of four series of independently drifting reward probabilities across 201 trials according to a Gaussian random walk (mean = 0, σ = 0.025, the same parameters used by Daw and colleagues [8]) with reflecting boundaries at 0.25 and 0.75 (also used by Daw and colleagues). Then we simulated performance on the task for 11 different values of the weighting parameter w, ranging from 0 to 1, the inverse temperature, ranging from 0 to 10, and the learning rate, ranging from 0 to 1. For each of these, we recorded the reward rate obtained. Next, we ran a linear regression for each combination of inverse temperature and learning rate, predicting the reward rate from the size of the weighting parameter. Note that the data points in each linear regression were generated using the same set of drifting rewards, ensuring that any effect was due to the changes in the weighting parameter and not to random variation across the reward distributions themselves. The eligibility trace parameter was fixed at a value that corresponded approximately with previous reports of this task, λ = 0.5, but we found qualitatively identical results across all simulations when we fixed λ at 0 or 1 (see Supporting Information). We repeated this process 1000 times, computing a surface of regression coefficients across a range of reinforcement learning parameters, and then averaged across these surfaces. The results of this analysis can be seen in the surface plot in Fig 3A, where we have plotted the average standardized linear effect of the weighting parameter as a function of the learning rate and inverse temperature (the median fit is indicated by the red circle on the surface in Fig 3A).
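The simulation procedure can be sketched compactly in Python for a single combination of learning rate and inverse temperature. This is a simplified illustration under stated assumptions, not the authors' simulation code: it reports a raw (unstandardized) regression slope, fixes the transition mapping so that action 0 commonly leads to state 0, starts the walks at uniform random values, and uses far fewer repetitions than the 1000 described above. All function names are hypothetical.

```python
import math
import random

ACTIONS = (0, 1)
COMMON = 0.7  # probability of the common transition

def random_walks(n_trials, sd, lo, hi, rng):
    """Four reflecting Gaussian random walks, indexed [trial][state][action]."""
    p = [[rng.uniform(lo, hi) for _ in ACTIONS] for _ in range(2)]
    out = []
    for _ in range(n_trials):
        out.append([row[:] for row in p])
        for s in range(2):
            for a in ACTIONS:
                x = p[s][a] + rng.gauss(0.0, sd)
                while x < lo or x > hi:
                    x = 2 * lo - x if x < lo else 2 * hi - x
                p[s][a] = x
    return out

def choose(values, beta, rng):
    """Softmax choice between two actions."""
    p0 = 1.0 / (1.0 + math.exp(-beta * (values[0] - values[1])))
    return 0 if rng.random() < p0 else 1

def reward_rate(w, walks, alpha, beta, lam, rng):
    """Simulate one dual-system agent and return its average reward."""
    q1 = [0.0, 0.0]                 # model-free first-stage values
    q2 = [[0.0, 0.0], [0.0, 0.0]]   # second-stage values, shared by both systems
    total = 0
    for probs in walks:
        q_mb = [COMMON * max(q2[a]) + (1 - COMMON) * max(q2[1 - a]) for a in ACTIONS]
        q_net = [w * q_mb[a] + (1 - w) * q1[a] for a in ACTIONS]
        a1 = choose(q_net, beta, rng)
        s2 = a1 if rng.random() < COMMON else 1 - a1
        a2 = choose(q2[s2], beta, rng)
        r = 1 if rng.random() < probs[s2][a2] else 0
        delta1 = q2[s2][a2] - q1[a1]
        q1[a1] += alpha * delta1
        delta2 = r - q2[s2][a2]
        q2[s2][a2] += alpha * delta2
        q1[a1] += alpha * lam * delta2
        total += r
    return total / len(walks)

def slope(xs, ys):
    """Ordinary least-squares slope of ys on xs."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sum((x - mx) ** 2 for x in xs)

def average_slope(n_sims=100, alpha=0.5, beta=5.0, lam=0.5, seed=0):
    """Average slope of reward rate on w; each regression shares one set of walks."""
    rng = random.Random(seed)
    ws = [i / 10 for i in range(11)]
    slopes = []
    for _ in range(n_sims):
        walks = random_walks(201, 0.025, 0.25, 0.75, rng)
        rates = [reward_rate(w, walks, alpha, beta, lam, rng) for w in ws]
        slopes.append(slope(ws, rates))
    return sum(slopes) / n_sims

print(average_slope())
```

Repeating `average_slope` over a grid of `alpha` and `beta` values would give a raw-slope analogue of the coefficient surface described above.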

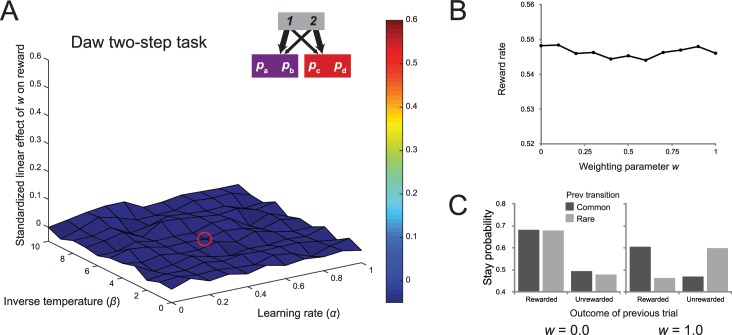

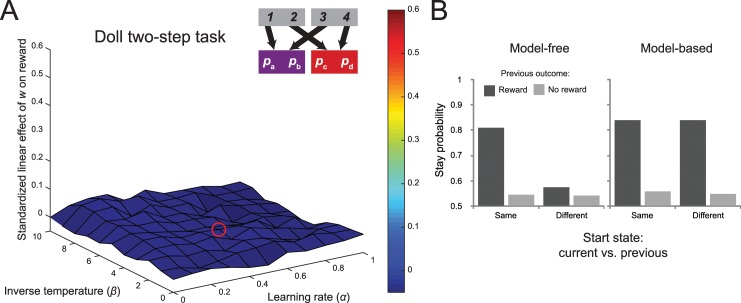

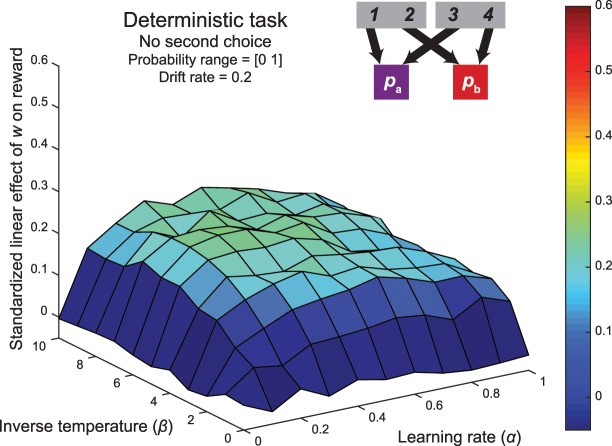

Fig 3. Results of simulation of accuracy-demand trade-off in the Daw two-step task.

(A) Surface plot of the standardized linear effect of the weighting parameter on reward rate in the original version of the two-step task. Each point reflects the average of 1000 simulations of a dual-system reinforcement-learning model of behavior of this task with different sets of drifting reward probabilities, as a function of the learning rate and inverse temperature of the agents. The red circle shows the median fit. Importantly, across the entire range of parameters, the task does not embody a trade-off between habit and reward. (B) An example of the average relationship between the weighting parameter and reward rate with inverse temperature = 5.0 and α = 0.5 (mirroring the median fits reported by Daw and colleagues [8]) across 1000 simulations. (C) The probabilities of repeating the first-stage action as a function of the previous reward and transition for a purely model-free agent and purely model-based agent.

The striking feature of this surface map is that the regression coefficients are uniformly close to zero, indicating that none of the parameterizations yielded a linear relationship between model-based control and reward rate. Fig 3B provides a more fine-grained picture of this relationship for a specific parameterization that follows the median fits reported by Daw and colleagues [8]. Note that even though there is no significant relationship between reward and model-based control, this does not undermine the usefulness of the task for measuring the relative balance of model-based and model-free strategies (see Fig 3C). What we can conclude is that this balance does not embody a trade-off between accuracy and demand.

Simulations of related tasks

Since its conception, the design of the Daw two-step task has been used in many similar sequential decision making tasks. Given the surprising absence of the accuracy-demand trade-off in the original task, it is important to investigate whether related versions of this paradigm are subject to the same shortcoming.

Dezfouli and Balleine two-step task

In one of these variants, developed by Dezfouli and Balleine [25, 26], participants also navigate from one first-level stage to two second-level stages, utilizing the same common and rare transition structure as in the Daw two-step task, but the reward probabilities are implemented in a different fashion. Rather than drifting gradually, choices at the second stage have either a high probability (0.7) or a low probability (0.2) of winning, and on every trial, with a small probability (0.2), the reward probability of each second-level action changes randomly to either the high or the low value. This task dissociates model-based and model-free control in a manner similar to the Daw two-step task. We performed the same analysis as reported in the previous section for this task, and the results were strikingly similar (see Fig 4). As before, across the entire range of reinforcement learning parameters, the task did not exhibit a trade-off between demand and accuracy, as evidenced by the uniformly flat regression coefficients (the median fit is represented by the red circle on the surface of Fig 4).
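The reward schedule of this variant can be sketched as a simple switching process. The following Python example is one possible reading of the description, in which a change event redraws the probability at random from the high and low values (so it may land on the same value again); that reading, the random starting value, and the function name are assumptions made for illustration.

```python
import random

def switching_probabilities(n_trials, high=0.7, low=0.2, p_change=0.2, rng=None):
    """Reward probability of one second-stage action across trials."""
    rng = rng or random.Random()
    p = rng.choice((high, low))  # assumption: random starting value
    series = []
    for _ in range(n_trials):
        series.append(p)
        # With a small probability, redraw the reward probability at random
        if rng.random() < p_change:
            p = rng.choice((high, low))
    return series

series = switching_probabilities(201, rng=random.Random(0))
```

Unlike the Gaussian random walk, this schedule takes only two values, so the difference between any two options is either 0 or 0.5 on a given trial.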

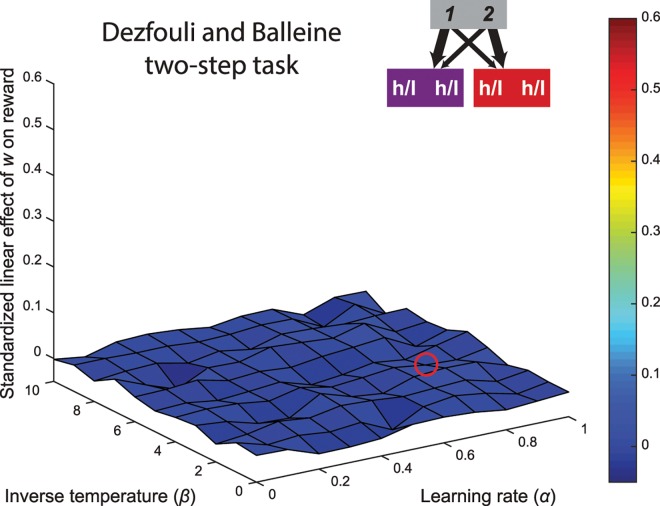

Fig 4. Surface plot of the linear relationship between the weighting parameter and reward rate in the Dezfouli and Balleine version of the two-step task.

The red circle shows the median fit. Similar to the Daw variant, this task does not capture a trade-off between accuracy and demand across all tested parameterizations.

Doll two-step task

A second variant, reported by Doll and colleagues [15], uses two first-stage states, but the choices at these states transition deterministically to one of the two second-stage states (Fig 5A). At the second stage the choices have a chance of producing reward, with probabilities slowly changing over the course of the experiment according to the same Gaussian random walk (mean = 0, σ = 0.025) as in the original task, with reflecting bounds at 0.25 and 0.75.

Fig 5. Results of simulation of the Doll two-step task.

(A) Surface plot of the linear relationship between the weighting parameter and reward rate in the Doll version of the two-step task. The red circle shows the median fit. Similar to the Daw variant, this task does not capture a trade-off between accuracy and demand across all tested parameterizations, except for a slightly elevated region of parameter space with high inverse temperature and low learning rate. (B) Behavioral predictions in this task. The model-free system learns separate values for each action in each state, so outcomes only affect choices in the same start state. Our simulation of model-free behavior revealed elevated likelihood of staying after a reward from the other state, since this means there is a current high-probability option that the model-free system has been learning about after transitioning there from both start states. The model-based system (on the right) treats start states as equivalent, since they both afford the same transitions, so choices are not affected by whether the previous start state was the same or different.

The dissociation between habit and planning in this task follows a different logic. Here, it is assumed that only model-based learners use the implicit equivalence between the two first-stage states, and can generalize knowledge across them. Therefore, for a model-based learner, outcomes at the second level should equally affect first-stage preferences on the next trial, regardless of whether this trial starts with the same state as the previous trial or a different one. For model-free agents, however, rewards that are received following one start state should not affect subsequent choices from the other start state. According to Doll and colleagues [15], this results in a clear dissociation in staying behavior between these two strategies (Fig 5B): The model-based learner shows increased likelihood to stay with the choice made on the previous trial when this led to a reward, regardless of whether the start state is the same as or different from the start state on the previous trial. (Note that here the term ‘staying’ is used to refer to taking the action that leads to the same previous second-stage state, and not to describe a repetition of the same first-stage action.) The model-free learner, on the other hand, is argued to only show increased likelihood to repeat a choice after a reward when the current start state is the same as that on the previous trial. Behavioral performance on this task is consistent with these predictions and, as in the original two-step task, seems to reflect a mixture of model-based and model-free strategies [15].

However, our simulations revealed that the behavioral profile for the model-free learner also showed a slightly elevated likelihood to stay with the previous choice after a reward if the start state was different (Fig 5B). At first glance, this may seem surprising because the model-free system does not have access to the second-stage action values. Note, though, that this system builds up first-stage action values based on the previous reward history. If one particular action has a high chance of producing reward for an extended period of trials, then the model-free system will learn to choose actions in order to transition to the relevant state, resulting in increased stay behavior. It is important to note that in this task the effect is small, and only becomes reliable with large sample sizes (we simulated 1000 reinforcement-learning agents). Regardless, this observation makes the interpretation of raw stay probabilities less clean, since an elevated likelihood to repeat the previous action after a reward with a different start state is not simply attributable to a contribution of the model-based system. This places extra importance on fitting computational models to behavior in this task, since these incorporate reward histories over the entire experiment, which are omitted when analyzing raw choice behavior on single trials. (This point becomes crucial in subsequent experiments described below.)

In order to assess whether the Doll two-step task embodied a trade-off between accuracy and demand, we again estimated the relationship between the weighting parameter and reward rate. This analysis (depicted in Fig 5A) suggested that this relationship was largely similar to the results reported above, and very close to zero for large portions of the parameter range. However, in comparison with the Daw and Dezfouli versions, the Doll task showed increased sensitivity in a region of parameter space with relatively high inverse temperatures (low randomness) and small learning rates. It is important to note that even in this elevated part of the coefficient surface the hypothetical effect is small, and that the participants’ parameter fits did not fall in this parameter range (the median fit is indicated by the red circle on the surface in Fig 5A).

Factors contributing to the absence of the trade-off

Despite the substantial differences between these variants of the two-step task, we found that none of them encompasses a motivational trade-off between planning and reward. This observation naturally raises a question: Why does planning not produce an increased reward rate in this task? What characteristics of the paradigm distort the accuracy-demand trade-off?

We investigate five potential explanations. These are not mutually exclusive; rather, they may have a cumulative effect, and they may also interact with each other. First, we show that the sets of drifting reward probabilities that are most often employed are marked by relatively low distinguishability. Second, we show that the rate of change in this paradigm is slow and does not require fast online (model-based) flexibility. Third, we show that the rare transitions in the Daw two-step task diminish the reward-maximizing effect of a model-based choice. Fourth, we show that the presence of the choice at the second stage decreases the importance of the choice at the first stage, which is the only phase where the model-based system has an influence. Fifth, we show that the stochastic reward observations in this task do not carry enough information about the value of the associated stimuli. We use simulations of performance on novel tasks to demonstrate these five points and, as a result, develop a novel paradigm that embodies an accuracy-demand trade-off.

1. Distinguishability of second-stage probabilities

In the two-step task, the difference between model-based and model-free strategies only carries consequences for the first stage, since the second-stage values are identical for both strategies. Therefore, the finding that model-based control is not associated with an increased reward rate suggests that the first-stage choices the agent makes do not carry importance, for example, because the reward outcomes at the second stage are too similar. In the original version of the two-step task, the reward probabilities have a lower bound of 0.25 and an upper bound of 0.75. This feature results in a distribution of differences between reward probabilities that is heavily skewed toward small differences (Fig 6A). The mean value of this distribution approaches 1/6 (1/3 of the range of 0.5), suggesting that most choices in this task only carry modest consequences, because the associated values have relatively low distinguishability.
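The value of 1/6 can be checked with a short calculation, under the simplifying assumption (made here for illustration) that at any given trial two reward probabilities X and Y behave like independent draws from a uniform distribution on an interval of width R. The absolute difference D = |X − Y| then has a triangular density that is highest at zero:

$$f_D(d) = \frac{2(R - d)}{R^2}, \qquad 0 \le d \le R,$$

so that its mean is

$$\mathbb{E}[D] = \int_0^R d \, \frac{2(R - d)}{R^2} \, \mathrm{d}d = \frac{2}{R^2}\left(\frac{R^3}{2} - \frac{R^3}{3}\right) = \frac{R}{3}.$$

With bounds of 0.25 and 0.75, R = 0.5 and the mean difference is 1/6. Under the same assumption, widening the bounds to 0 and 1 gives R = 1 and a mean difference of 1/3.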

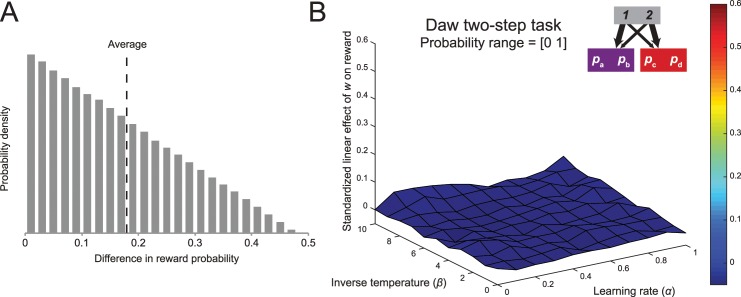

Fig 6. The influence of the range of reward probabilities.

(A) Distribution of differences in reward probabilities between the actions of each trial. (B) Increasing the range of probabilities increases the average linear effect between model-based control and reward for a parameter space associated with high inverse temperatures and relatively low learning rate. Average parameter fits in the original report do not lie within this region of increased sensitivity to the accuracy-demand trade-off.

One straightforward way to increase the differences between the second-stage options is to maximize the range of reward probabilities by setting the lower bound to 0 and the upper bound to 1 (e.g., [20]). This shifts the mean difference in reward probability between options to 1/3, doubling the consequences of each choice in terms of reward maximization. As can be seen in Fig 6B, this change in the paradigm slightly increases the degree to which behavior in this task reflects an accuracy-demand trade-off (Simulation 1). The regression coefficients of the relationship between reward and w are slightly elevated compared to those of the Daw two-step task (compare with Fig 3A), especially in the part of parameter space with high inverse temperature and low learning rate, suggesting that low distinguishability between the options is a factor contributing to the absence of the trade-off. However, the increase in the effect is fairly small, and the region of parameter space in which the largest increase is observed does not correspond with the average parameter fits reported in the literature.

2. Increased drift rate

It is also possible that the changes over time in the second-stage reward probabilities, depicted in Fig 1B, contribute to the absence of the accuracy-demand trade-off in the Daw two-step task. For example, these changes might be too slow, such that model-free learning can adapt to these values at a rate that is proportional to the rate of change over time [53]. Another possibility is that these changes happen too fast, such that the model-based system never accurately reflects the values of the second-stage actions.

In order to explore the effect of the drift rate (i.e., the standard deviation of the Gaussian noise that determines the random walks of the reward distributions) on the accuracy-demand trade-off, we performed simulations of the generative reinforcement learning model with inverse temperature parameter β = 5 and learning rate parameter α = 0.5, mirroring the median fits reported by Daw and colleagues [8]. For each simulation, we generated a new set of four series of independently drifting reward probabilities across 201 trials according to Gaussian random walks. We simulated performance on the task for 11 values of the weighting parameter, ranging from 0 to 1, and ran a linear regression predicting the reward rate from the size of the weighting parameter. Again, because the data points in each linear regression were generated using the same set of drifting reward probabilities, any effect was due to the changes in the weighting parameter. This analysis was performed for 7 different drift rate values, ranging from 0 to 0.5, using the narrow range of reward probabilities used in the original report [8] and the broader range described above. The slopes we report are averages across a large number of iterations (10,000), since we are estimating very subtle effects, especially in the narrow range condition.
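The procedure above can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not the simulation code used for the reported results: the hybrid agent is a simplified version of the model described earlier, the eligibility trace `lam = 0.5` and the zero-initialized values are assumptions, and all function names are hypothetical.

```python
import math
import random

def reflect(x, lo, hi):
    """Fold x back into [lo, hi] (reflecting bounds)."""
    while x < lo or x > hi:
        if x < lo:
            x = 2 * lo - x
        if x > hi:
            x = 2 * hi - x
    return x

def drifting_probs(n_trials, sd, lo, hi, rng):
    """Four independent Gaussian random walks, one per second-stage action."""
    p = [rng.uniform(lo, hi) for _ in range(4)]
    series = []
    for _ in range(n_trials):
        series.append(list(p))
        p = [reflect(v + rng.gauss(0.0, sd), lo, hi) for v in p]
    return series

def softmax_choice(q0, q1, beta, rng):
    """Two-option softmax; returns 0 or 1."""
    p0 = 1.0 / (1.0 + math.exp(-beta * (q0 - q1)))
    return 0 if rng.random() < p0 else 1

def reward_rate(w, probs, alpha, beta, lam, rng, p_common=0.7):
    """Average reward of a hybrid agent on a Daw-style task (simplified)."""
    q_mf = [0.0, 0.0]                 # model-free first-stage values
    q2 = [[0.0, 0.0], [0.0, 0.0]]     # second-stage values, shared by both systems
    total = 0.0
    for p in probs:
        # Model-based values: expected best second-stage value under the transition model
        q_mb = [p_common * max(q2[a]) + (1 - p_common) * max(q2[1 - a]) for a in (0, 1)]
        q_net = [w * q_mb[a] + (1 - w) * q_mf[a] for a in (0, 1)]
        a1 = softmax_choice(q_net[0], q_net[1], beta, rng)
        s = a1 if rng.random() < p_common else 1 - a1   # common vs. rare transition
        a2 = softmax_choice(q2[s][0], q2[s][1], beta, rng)
        r = 1.0 if rng.random() < p[2 * s + a2] else 0.0
        delta1 = q2[s][a2] - q_mf[a1]
        delta2 = r - q2[s][a2]
        q_mf[a1] += alpha * delta1 + alpha * lam * delta2
        q2[s][a2] += alpha * delta2
        total += r
    return total / len(probs)

def slope(xs, ys):
    """Ordinary least-squares slope of ys on xs."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def mean_slope(sd, lo, hi, n_iter=200, n_trials=201,
               alpha=0.5, beta=5.0, lam=0.5, seed=0):
    """Average regression slope of reward rate on w across iterations."""
    rng = random.Random(seed)
    ws = [i / 10 for i in range(11)]  # 11 weights from 0 to 1
    slopes = []
    for _ in range(n_iter):
        probs = drifting_probs(n_trials, sd, lo, hi, rng)  # same walks for every w
        rates = [reward_rate(w, probs, alpha, beta, lam, rng) for w in ws]
        slopes.append(slope(ws, rates))
    return sum(slopes) / len(slopes)
```

For example, `mean_slope(0.025, 0.25, 0.75)` approximates the narrow-range condition and `mean_slope(0.2, 0.0, 1.0)` the broad-range condition with a higher drift rate. The default of 200 iterations keeps the sketch fast; estimates at that setting will be noisier than averages over 10,000 iterations.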

The results, depicted in Fig 7A, suggest that the drift rate of the Gaussian random walk affects the strength of the accuracy-demand trade-off in the task. Specifically, for the broader range of reward probabilities, the strongest relationship was observed for a drift rate of 0.2, and for the range of the original report the maximum effect occurred with a drift rate of 0.1. Consistent with the section above, both the strength of the relationship between reward and planning and the effect of the drift rate on this relationship were stronger for the task with the broader reward probability range. Note, however, that for both probability ranges large drift rates negatively impact the relationship, presumably because for these values there is no learnable stability in the terminal state reward probabilities. We confirmed that this general pattern between the accuracy-demand trade-off, the drift rate, and the range of the reward probabilities occurs not only at the parameterization that most closely matched the estimates of the original report, but also across a broader parameter space (see Supporting Information). Fig 7B depicts the surface map of average regression coefficients for the effect between model-based control and reward rate for a task with the wider reward probability range and a drift rate of 0.2 (Simulation 2). These changes to the task substantially increase the strength of the accuracy-demand relationship, especially for agents with a high inverse temperature. However, as before, the increase in the strength of this relationship primarily occurred in regions of parameter space that do not correspond with the fits reported in studies that employ the Daw two-step task [8, 41], and the effect size was very weak.

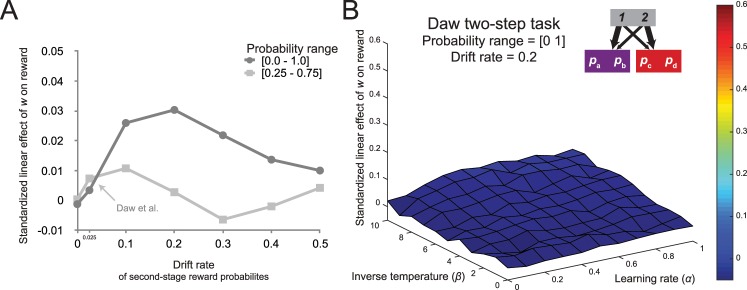

Fig 7. The influence of the drift rate.

(A) The effect of the size of the drift rate on the relationship between model-based control and reward, for two-step tasks with a narrow and a broad reward probability range. (B) Increasing the range of probabilities and the drift substantially increases the average linear effect between model-based control and reward when the inverse temperature is high.

These analyses show that the rate of change of the reward probabilities in the original Daw two-step task is too slow to promote model-based planning. The relationship between reward and model-based control becomes stronger when the drift rate of the Gaussian random walk governing the reward probabilities is moderately increased, and this effect is especially pronounced when these probabilities are more dissociable. However, even though these two factors contribute substantially to the absence of the accuracy-demand trade-off in the Daw two-step task, we found that a task that adjusted for their shortcomings only obtained a modest trade-off between reward and goal-directed control.

3. Deterministic transition structure

Because the Daw two-step task employs rare transitions, model-based choices at the first stage do not always lead to the state that the goal-directed system selected. This feature of the task might lead to a weakening of the relationship between model-based control and reward rate. The task structure employed by Doll and colleagues [15], discussed in the previous section (Fig 5A), avoids this issue by implementing deterministic transitions, such that model-based choices always lead to the desired second-stage state. Indeed, the simulation analysis for this task revealed that a certain range of the simulated parameter space showed an increased relationship between model-based control and reward rate. However, for this analysis the set of drifting rewards still suffered from the factors discussed above: relatively low distinguishability of the second-stage options and a suboptimal drift rate.

To assess the influence of the deterministic task structure, we simulated performance on the Doll version of the two-step task with sets of reward probabilities with the wider range and increased drift rate (a bounded Gaussian random walk with μ = 0, σ = 0.2 on a range from 0 to 1 with reflecting bounds). The resulting surface map (Simulation 3a; Fig 8A) showed that, consistent with our predictions, across the entire parameter space the relationship between control and reward was stronger than in the Daw two-step task with improved sets of reward probabilities in the previous section.

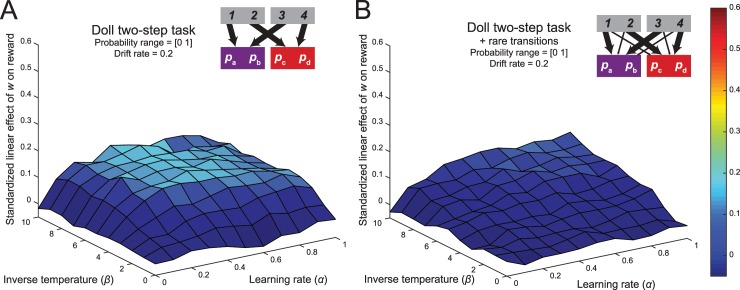

Fig 8. The influence of a deterministic task structure.

(A) Because of the deterministic transitions, model-based choices in the Doll two-step task always result in the desired state outcome. Combined with increased distinguishability and increased drift rate in the reward probabilities, this task results in a substantial increase in the relationship between planning and reward. (B) When this task structure is adapted to include stochastic transitions, the relationship between planning and reward is significantly reduced, indicating an important contribution of the rare transitions in diminishing the accuracy-demand trade-off in the original paradigm.

Even though this result is consistent with the assumption that model-based choices in the Daw two-step task lead to the desired state less often than in the deterministic version of the two-step task, it is equally possible that the second task shows an increased accuracy-demand trade-off because it introduces the possibility of generalization across actions, and not because of the elimination of the rare transitions. To disentangle these two possibilities, we simulated reinforcement-learning performance on a hybrid task with two starting states but with rare transitions (Simulation 3b; Fig 8B). The regression coefficients in the resulting surface map were substantially lower than in the deterministic variant of the task, and were comparable to those of the Daw two-step task with a broader probability range and a higher drift rate. This indicates a critical role for the rare transitions in diminishing the accuracy-demand trade-off.

4. One choice in second stage

As noted above, model-based and model-free strategies make divergent choices only at the first stage of the multi-step paradigms we have considered so far; at the second stage, both strategies perform a biased selection weighted towards the reward-maximizing option. Thus, the advantage of model-based control over model-free control is approximately bounded by the difference between the maximum value of all actions available in one second-stage state and the maximum value of all actions available in the other second-stage state. Intuitively, as the number of actions available within each second-stage state grows, this difference will shrink, because both second-stage states will likely contain some action close to the maximum possible reward value (i.e., a reward probability of 1). Conversely, the difference between the maximum value actions available in each second-stage state will be greatest when only a single action is available in each state. This design should favor the largest possible difference in the rate of return between model-based and model-free strategies.

To quantify this, we generated 10,000 sets of reward probabilities in this task (according to a Gaussian random walk with reflecting bounds at 0 and 1 and σ = 0.2). The average difference between any two reward probabilities within a state was equal to 0.33, whereas the average difference between the maximal reward probabilities of the two states was 0.27.
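These two averages can be approximated with a short Monte Carlo check. The sketch below assumes, for simplicity, that at any given trial the four reward probabilities are independent and uniformly distributed on [0, 1], which is the long-run distribution of a reflecting random walk on that interval; it does not reproduce the 10,000 generated sets themselves, and the function name is hypothetical. Under this assumption the analytic values are 1/3 for the within-state difference and 4/15 (about 0.27) for the difference between the two states' maxima.

```python
import random

def mean_differences(n_samples=200_000, seed=1):
    """Monte Carlo estimate of two mean absolute differences.

    within:  |p1 - p2| for two actions in the same second-stage state
    between: |max(state A) - max(state B)| across the two states
    Assumes independent uniform reward probabilities on [0, 1].
    """
    rng = random.Random(seed)
    within = 0.0
    between = 0.0
    for _ in range(n_samples):
        a1, a2, b1, b2 = (rng.random() for _ in range(4))
        within += abs(a1 - a2)
        between += abs(max(a1, a2) - max(b1, b2))
    return within / n_samples, between / n_samples

if __name__ == "__main__":
    w, b = mean_differences()
    print(f"within-state: {w:.3f}, between maxima: {b:.3f}")
```

The gap between the two estimates illustrates why a second-stage choice dilutes the model-based advantage: taking the maximum within each state pulls both states toward high values and shrinks the difference that the first-stage choice can exploit.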

Since the model-based system only contributes to the first-stage decision, we simulated performance of the reinforcement-learning model in a deterministic two-step task in which the second-stage states do not contain a choice between two actions. In this task, the average difference in reward probabilities that the model-based system uses to make a choice at the first stage is 33%, an increase in comparison to the task that implements a binary choice at the second stage states.

To assess whether this change to the task resulted in a stronger accuracy-demand trade-off, we simulated performance on this task and estimated the strength of the relationship between the weighting parameter and reward rate, across the same range of reinforcement-learning parameters (Simulation 4; Fig 9). This analysis revealed that the elimination of the choices at the second stage indeed strengthened the relationship between w and reward in comparison to the deterministic task with second-stage choice (Fig 8A), because the larger difference between ‘maximal’ reward probabilities between the two second-stage states increased the importance of the model-based contribution to the first-stage choice.

Fig 9. The influence of reducing the number of second-stage actions.

Because of the deterministic transitions, model-based choices in the Doll two-step task always result in the desired state outcome. Combined with increased distinguishability and increased drift rate in the reward probabilities, this task results in a substantial increase in the relationship between planning and reward.

5. Informativeness of an observation

In order to determine the value of an action in the two-step task, the stochastic nature of the task requires participants to sample the same action repeatedly and integrate their observations. In other words, since each outcome is either a win or a loss, the information contained in one observation is fairly limited. Here, we will test whether the high amount of ambiguity associated with each observation contributes to the absence of the accuracy-demand trade-off in the two-step task. One way to increase the informativeness of an outcome observation is to replace the drifting reward probabilities at the second stage with drifting scalar rewards, so that the payoff of each action is exactly identical to its value [42]. This elimination of uncertainty increases the information obtained from each outcome observation, and thus may lead to a strengthened relationship between model-based control and reward.

In order to test whether replacing the reward probabilities of the second-stage actions with reward payoffs would improve the information obtained from each observation, we performed a series of simulations for two simple reinforcement learning tasks (Fig 10A). Both tasks involved a repeated decision between two options, but the nature of the reward for these two options was different. For one task, these actions were associated with a probability of a reward, which independently drifted across the session according to a Gaussian random walk (μ = 0, σ = 0.2) on a range from 0 to 1 with reflecting bounds. For the other task, the same series of drifting probabilities were treated as series of drifting rewards. Specifically, this meant that if an action afforded a 74% probability for a reward in the first task, the same action in the second task would lead to a payoff of 0.74 points. As before, we performed reinforcement learning simulations (λ = 0.5) on these two tasks across a range of inverse temperatures and learning rates. Because we used the same sets of drifting values as probabilities and payoffs (i.e., their expected values are the same), any difference in performance between the two tasks is a function of the increased amount of information available in the payoff condition.

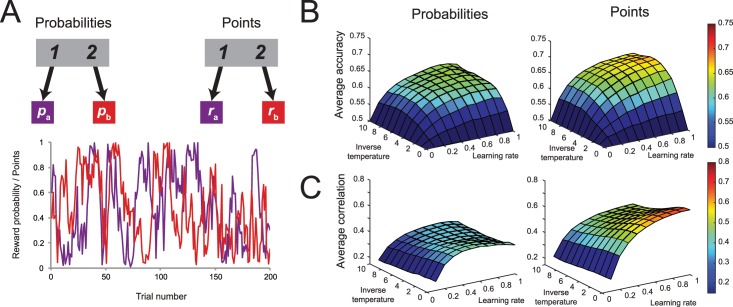

Fig 10. The influence of the type of reward distribution (points vs probabilities) on choice accuracy.

(A) We ran simulations of RL agents on two different two-armed bandit tasks. For one, the reward distributions indicate the reward probability associated with each action. The other task does not include binomial noise, but instead the actions pay off rewards that are directly proportional to their value in the reward distribution. (B) Agents show greater accuracy in choosing the highest-value action on the task where the two-armed bandit pays off points instead of affording a probability to win a reward, especially when both the inverse temperature and learning rate were high. (C) The Q-values of each action show stronger correlations with their objective reward value in the task where the two-armed bandit paid off points instead of affording a probability to win a reward.

We first compared the model’s performance on these two tasks by computing the accuracy of its choices, i.e., how often it selected the action with the highest reward probability or reward payoff. Fig 10B displays the average accuracy for each task across the range of inverse temperatures and learning rates. For both tasks, the model showed increased accuracy for higher learning rates and inverse temperatures. That is, agents with less randomness in choice and greater incorporation of new information were more likely to pick the option with the objectively higher chance to win or reward payoff. Consistent with our prediction, this effect was larger in the task with reward payoffs compared to the task with reward probabilities across virtually the entire simulated parameter space. This suggests that the observation of reward outcome in the payoff condition was more informative, leading to overall better performance in the simple two-alternative choice task.
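The accuracy comparison described above can be illustrated with a small simulation. This is a sketch under assumptions rather than the analysis code: the session length (200 trials), the initial Q-values of 0.5, and all function names are hypothetical choices, and the agent is a plain delta-rule learner with a softmax choice rule.

```python
import math
import random

def reflect(x, lo=0.0, hi=1.0):
    """Fold x back into [lo, hi] (reflecting bounds)."""
    while x < lo or x > hi:
        if x < lo:
            x = 2 * lo - x
        if x > hi:
            x = 2 * hi - x
    return x

def drifting_values(n_trials, sd, rng):
    """Two independent Gaussian random walks on [0, 1]."""
    v = [rng.random(), rng.random()]
    out = []
    for _ in range(n_trials):
        out.append(tuple(v))
        v = [reflect(x + rng.gauss(0.0, sd)) for x in v]
    return out

def accuracy(values, payoff, alpha, beta, rng):
    """Proportion of trials on which the agent picks the higher-valued arm."""
    q = [0.5, 0.5]  # assumed neutral starting values
    hits = 0
    for v in values:
        p0 = 1.0 / (1.0 + math.exp(-beta * (q[0] - q[1])))
        a = 0 if rng.random() < p0 else 1
        if payoff:
            r = v[a]                                   # scalar payoff equals the value
        else:
            r = 1.0 if rng.random() < v[a] else 0.0    # win/loss with probability v[a]
        q[a] += alpha * (r - q[a])
        hits += v[a] >= v[1 - a]
    return hits / len(values)

def compare(alpha, beta, n_sessions=200, n_trials=200, sd=0.2, seed=0):
    """Mean accuracy in the probability and payoff conditions on identical walks."""
    rng = random.Random(seed)
    acc_prob = acc_pay = 0.0
    for _ in range(n_sessions):
        values = drifting_values(n_trials, sd, rng)  # shared by both conditions
        acc_prob += accuracy(values, False, alpha, beta, rng)
        acc_pay += accuracy(values, True, alpha, beta, rng)
    return acc_prob / n_sessions, acc_pay / n_sessions
```

Calling `compare(alpha, beta)` over a grid of learning rates and inverse temperatures would yield two accuracy surfaces analogous to those in Fig 10B. Reusing the same drifting values in both conditions mirrors the design choice in the text: only the informativeness of each outcome differs.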

As a second metric of the information contained in each outcome observation, we computed the correlation between the model’s action values and the actual payoffs in the simulations reported above. We expected that the increased precision in outcome observations in the payoff condition would lead to a tighter coupling between the Q-values of the model and the objective values than in the probability condition. Fig 10C depicts the results of this analysis. In both tasks, the average correlation was strongest for high learning rates, since these agents incorporated new information most fully, so that their action values always reflected the latest outcomes. Second, the correlation was stronger when the inverse temperature was low, presumably because agents with high randomness in choice sample from both options. Most importantly, the correlations between action values and objective values were higher in the reward payoff condition than in the reward probability condition across the entire range of tested reinforcement learning parameters. This observation provides convergent evidence that increased resolution in the outcome observations is associated with enhanced performance in reinforcement-learning tasks.

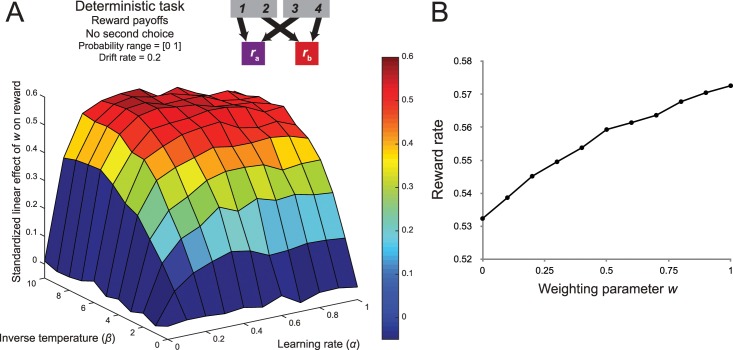

Next, we assessed whether this increased performance in the payoff condition would result in a stronger accuracy-demand trade-off in the deterministic two-step task. We reasoned that if the agent obtained a more accurate estimation of the second-stage action values, then the model-based system would be better positioned to maximize reward. To test this prediction, we again estimated the strength of the relationship between the weighting parameter and reward rate, across the range of reinforcement-learning parameters (Simulation 5; Fig 11). The surface map revealed a marked increase when compared to that of the task with reward probabilities. Across the entire range of inverse temperatures and learning rates, the regression coefficients of the relationship between control and reward were substantially higher in comparison to the deterministic task with reward probabilities (Fig 9). This final analysis revealed that the reward outcomes in the Daw two-step task do not carry enough information about the action’s value, leading to a decrease in accuracy for the model-based system.

Fig 11. The influence of removing binomial noise from the reward distributions at the second stage.

(A) The surface plot of the relationship between model-based control and reward in the novel two-step task with reward payoffs at the second stage. The inclusion of this fifth factor substantially increased the accuracy-demand trade-off in the two-step paradigm. (B) An example of the average relationship between the weighting parameter and reward rate with inverse temperature = 10 and α = 0.4.

Comparison with Akam and colleagues

In a recent study, Akam and colleagues [9] reported that the original version of the two-step task does not embody a trade-off between control and reward. They simulated performance on the task for a pure model-free and model-based agent, with independently optimized parameters to maximize reward rate, and found no difference between them, consistent with the results of our simulations. Furthermore, they proposed a new task that establishes a trade-off between control and reward. This task is similar to the original Daw version of the task, except for three changes: the second-stage choice is eliminated, the rare transition probability is reduced (to 20%), and the reward probabilities in the second-stage states alternate between blocks with reward probabilities of 0.8/0.2 and blocks with probabilities of 0.2/0.8 [9]. Using reinforcement learning parameters optimized independently for model-free and model-based agents, they show that reward rate is higher for the model-based agent.

This approach (i.e., a comparison of optimal parameter settings under model-free versus model-based control) provides an important existence proof of the potential benefits of model-based control. However, their way of quantifying the accuracy-demand trade-off differs significantly from the current approach. In order to get a more comprehensive overview of the accuracy-demand trade-off in the Akam two-step task, we again estimated the strength of the relationship between the weighting parameter and reward rate, across the same range of reinforcement learning parameters (Fig 12). This surface of regression coefficients shows remarkable differences compared to our novel paradigm (presented in Fig 11). Most importantly, high regression coefficients are restricted to a selective region of parameter space with low learning rate and high inverse temperature. The strength of this relationship drops in the rest of the parameter space.

Fig 12. Surface plot of the relationship between model-based control and reward in the Akam and colleagues [9] version of two-step task with alternating blocks of reward probabilities at the second-stage states.

This feature of the task means that an increase in model-based control, keeping all other RL parameters fixed, is not likely to yield significantly increased total reward, because reinforcement learning parameters tend to vary widely across individuals [54]. However, it has been shown that people adapt RL parameters such as the learning rate and choice randomness to maximize reward in the environment [55], which alleviates this concern. A second, distinct advantage of the task we introduce here involves the possibility that humans may identify and exploit higher-level regularities in the structure of reward. Specifically, in the Akam task participants may learn to predict the alternating blocks of reward probabilities, complicating the interpretation of behavior. In contrast, in the task we introduce it is impossible to perfectly anticipate changes in our randomly changing reward distributions.

Despite these concerns, both tasks achieve an accuracy-demand trade-off, and in this respect represent a substantial improvement over the Daw two-step task. Future empirical work should compare the empirical correlations between reward and model-based control for our task and the Akam two-step task, so as to gain fuller comprehension of their respective merits.

Summary

We have identified several key factors that reduce the accuracy-demand trade-off in the Daw two-step task. We found that the sets of drifting reward probabilities that are most often employed in this task are marked by low distinguishability and a rate of change that is too slow to benefit from flexible online adaptation. We also showed that the rare transitions in the original task and the presence of multiple choices in the second-stage states diminished the effect of model-based decisions on reward rate. Finally, we showed that the stochastic reward observations in this task do not carry sufficient information about the value of the associated stimuli. In addition to identifying these factors, we have provided improvements to the paradigm targeting each shortcoming.

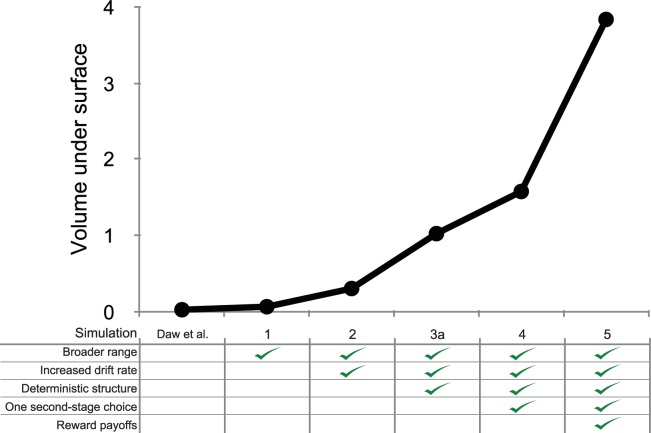

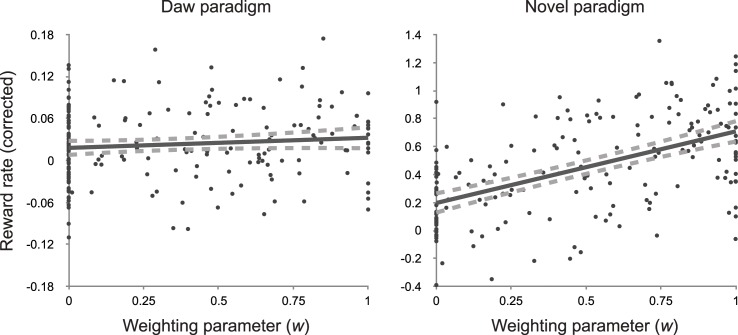

Fig 13 shows the progression in the average strength of the relationship between reward and control across these changes in the task structure, operationalized as the volume under the surface of each simulation. It reveals a progressive contribution of each change to the task, suggesting that implementing a broader range, increased drift rate, deterministic task structure, one second-stage choice, and reward payoffs all have identifiable contributions to the strength of the relationship between model-based control and reward.

Fig 13. Comparison of trade-off between model-based control and reward across different paradigms.

We calculated the volume under the surface of coefficients of the linear relationship between the weighting parameter and the reward rate for each of the paradigms in the section above. Across these simulations, we progressively included elements that strengthened the relationship, as summarized in this figure.

Here, we have presented a progression of five factors that enhance the accuracy-demand trade-off in the two-step task. Which of these factors contributed most to the increase in this strength? Fig 13 represents the increase in this strength as additional factors are layered into the paradigm, and from this figure one might conclude that the conversion from reward probabilities to reward payoffs carried the strongest contribution. So far, however, we have confounded the contribution of each factor with the order in which they were introduced. It is possible that the reward payoffs contributed a substantial amount of strength to the trade-off simply because it was the final factor introduced.

In order to test the effect of factor order in our analyses, we computed the surface of regression coefficients for all 32 possible combinations of our binary factors (2^5), using the same procedure as described above (omitting the cases where β = 0 or α = 0). Next, we computed the volume under the surface as an approximation of the average strength of the relationship between model-based control and reward for each of these simulations. Fig 14 depicts the volume under the surface of each simulation as a function of the number of factors that were included in the design. These results indicate that the primary cause for the strength in the final paradigm was the inclusion of all five factors, and not necessarily the contribution of one of them. To see this, compare the score of the final task with 5 included factors to the scores of the tasks with 4 factors. The strength of the effect in the final task was 6.9 standard deviations removed from the tasks with 4 factors, and 5.7 standard deviations from the 4-factor task with the strongest average accuracy-demand trade-off.
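The bookkeeping behind this factorial analysis can be sketched as follows. The factor labels and function names are hypothetical, and the `sd_distance` helper reflects one possible reading of the comparison (distance from the mean of a reference group in units of its sample standard deviation); the text does not specify exactly how the standard-deviation distances were computed.

```python
from itertools import product
from statistics import mean, stdev

# Hypothetical labels for the five binary design factors discussed in the text
FACTORS = (
    "broad_range",
    "higher_drift",
    "deterministic_transitions",
    "single_second_stage_action",
    "reward_payoffs",
)

def all_designs():
    """Enumerate all 2^5 = 32 on/off combinations of the five factors."""
    return [dict(zip(FACTORS, bits))
            for bits in product((False, True), repeat=len(FACTORS))]

def group_by_count(designs):
    """Group designs by the number of factors they include (0 through 5)."""
    groups = {}
    for design in designs:
        groups.setdefault(sum(design.values()), []).append(design)
    return groups

def sd_distance(value, reference):
    """Distance of value from the mean of reference, in sample-SD units (assumed metric)."""
    return (value - mean(reference)) / stdev(reference)

if __name__ == "__main__":
    groups = group_by_count(all_designs())
    for k in sorted(groups):
        print(k, "factors:", len(groups[k]), "designs")
```

Given a volume-under-the-surface score for each design, `sd_distance(score_of_final_task, scores_of_4_factor_tasks)` would express the final task's score relative to the five 4-factor tasks.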

Fig 14. Volume under the surface for all 32 tasks generated by the 5 binary factors discussed in this paper.

Each dot represents the volume under the surface of linear regression coefficients for one task, and is plotted as a function of the number of ‘beneficial’ factors that are included in each task’s design. The gray line represents the average increase in the strength of the relationship between model-based control and reward.

The converse is also true: all factors had a similar and small individual effect on the original Daw paradigm. To see this, compare the score of the original task with 0 factors to the scores of all tasks with 1 factor. The strength of the effect in the original task was only 1.3 standard deviations removed from the tasks with 1 factor, and even slightly better than the 1-factor task with the smallest effect. Most importantly, even if each individual factor did not substantially increase the total effect compared to the original paradigm, their joint inclusion increased the strength of the relationship between model-based control and reward rate by a factor of approximately 230.

At least in theory, we have developed a paradigm that embodies an accuracy-demand trade-off between model-based control and reward rate. Next, we attempt to validate this paradigm by having human participants perform either a novel version of the two-step task with the improved features described above, or the original version of the two-step task as described by Daw and colleagues [8]. We predicted that measures of model-based planning in the novel, but not in the original, paradigm would show a positive correlation with the reward rate.

In addition, the comparison between these two paradigms allows us to test whether human participants spontaneously modulate the balance between model-free and model-based control depending on whether a novel task favors model-based control. So far, we have discussed the accuracy-demand trade-off uniquely as it is instantiated in the two-step task. However, if the novel paradigm embodies an empirical accuracy-demand trade-off, then the results of this study allow us to test whether the brain also computes a cost-benefit trade-off between the two systems. We predicted that average model-based control would be elevated in the novel paradigm, since planning was incentivized in this task [56].

Experimental methods

Participants

Four hundred and six participants (range: 18–70 years of age; mean: 33 years of age; 195 female) were recruited on Amazon Mechanical Turk to participate in the experiment. Participants gave informed consent, and the Harvard Committee on the Use of Human Subjects approved the study.

Materials and procedure

One hundred and ninety-nine participants completed 125 trials of the novel two-step reinforcement-learning task. The structure of the task was based on the procedure developed in the previous section. The remaining two hundred and seven participants completed 125 trials of the two-step task with the original Daw structure [8]. We embedded both tasks in a cover story in order to make them more engaging for participants [31].

Novel paradigm. Every trial in the novel two-step task consisted of two stages (Fig 15A). Each trial would start randomly in one of two possible first-stage states. In both, a pair of ‘spaceships’ appeared side by side on a blue earth-like planet background. Participants were told they had to choose between these two spaceships to fly to one of two different planets. The choice between the left- and right-hand spaceship had to be made using the “F” or “J” button keys within 2000ms. After a choice was made, the selected spaceship was highlighted for the remainder of the response period. The positions of the spaceships were randomly selected on each trial. Depending on the choice of spaceship, the participants would then deterministically transition to one of two second-stage states, a purple or a red planet. The spaceship selected in the first stage was displayed at the top of the screen on this planet. On each planet, participants found an alien that ‘mines’ from a ‘space mine’. These mines act as the second-stage bandits. Participants were told that sometimes the aliens were in a good part of the mine and they paid off a certain number of points or ‘space treasure’, whereas at other times the aliens were mining in a bad spot, and this yielded negative points or ‘antimatter’. The payoffs of these mines slowly changed over the course of the experiment. Even though there was only one choice available at the second-stage planets, participants were instructed that they were to press the space bar within 2000ms in order to receive the reward. One of these reward distributions was initialized randomly within a range of -4 points to -1 points, and the other within a range of +1 to +5 points. Then, they varied according to a Gaussian random walk (σ = 2) with reflecting bounds at -4 and +5 for the remainder of the experiment. A new set of randomly drifting reward distributions was generated for each participant. At the end of the experiment, participants were given 1¢ for every two points they earned.

Fig 15. Design of the novel two-step task.

(A) State transition structure of the paradigm. At the first stage, participants choose between a pair of spaceships, drawn from one of two possible pairs. Each choice deterministically leads to a second-stage state that was associated with a reward payoff that changed slowly according to a Gaussian random walk over the duration of the experiment. Note that the choices in the two different first-stage states are essentially equivalent. (B) Predicted behavior from the generative reinforcement-learning model of this task (using median parameter estimates, and w = 0.5 for the agent with a mixture of strategies). Note that in this task the model does not produce qualitatively different behavior for the different systems as reported in Fig 5. Instead, the differences in behavior are subtler, and therefore differences in strategy arbitration are better captured using model-fitting techniques.

The most important feature of the task is that the spaceships in the two first-stage states were essentially equivalent. For each pair, one spaceship always led to the red planet and alien, whereas the other always led to the purple planet and alien. Because of this equivalence, we were able to dissociate model-based and model-free contributions to choice behavior, since only the model-based system generalizes across the equivalent start state options by computing each action’s value as its expected future reward. Therefore, model-based and model-free strategies make qualitatively different predictions about how second-stage rewards influence first-stage choices on subsequent trials. Specifically, for a pure model-based learner, each outcome at the second stage should affect first-stage preferences on the next trial, regardless of whether this trial starts with the same or the other pair of spaceships. In contrast, under a pure model-free strategy a reward obtained after one pair of spaceships should not affect choices between the other pair.

Daw paradigm. The Daw two-step task used the same buttons, timing, visual appearance, and counterbalancing procedures as the novel paradigm, but the structure of the task matched that of the design in the original report (discussed in detail above). At the start of each trial, participants chose between a pair of spaceships. Depending on the choice of spaceship, the participants would then transition to one of two second-stage states, a purple or a red planet. Each spaceship traveled more frequently to one planet than to the other (70% versus 30%), and these transition probabilities were opposite for the two spaceships. On each planet, participants chose between a pair of aliens that mine from a space mine. Participants were told that sometimes the aliens were in a good part of the mine and they were more likely to deliver a piece of space treasure, whereas at other times the aliens were mining in a bad spot, and they were less likely to deliver space treasure. The payoffs of these mines slowly changed over the course of the experiment. One pair of aliens was initialized with probabilities of 0.25 and 0.75, and the other pair with probabilities of 0.4 and 0.6, after which they changed according to a Gaussian random walk (σ = 0.025) with reflecting bounds at 0.25 and 0.75 for the remainder of the experiment. A new set of randomly drifting reward distributions was generated for each participant. To equate average pay-off between conditions, participants were given 1¢ for every point they earned.

As explained in detail above, model-based and model-free strategies make qualitatively different predictions about how second-stage rewards influence first-stage choices on subsequent trials. Specifically, choice under a pure model-free strategy should not be affected by the type of transition (common vs. rare) observed on the previous trial (see Fig 2A), whereas pure model-based learners should base their choice on both the type of transition and whether a reward was observed on the previous trial (see Fig 2B).

Before completing the full task, participants were extensively trained on different aspects of the task. Participants who completed the novel paradigm first learned about the value of space treasure and antimatter, and the change in payoffs from both space mines by sampling rewards from two different aliens. Next, they learned about the deterministic transitions between spaceships and planets during a phase in which they were instructed to travel to one planet until accurate performance was reached. Participants who completed the Daw paradigm sampled from aliens with different reward probabilities, and were extensively instructed on the transition structure. Finally, both groups of participants practiced the full task for 25 trials. There was no response deadline for any of the sections of the training phase. The colors of the planets and aliens in this phase were different from those in the experimental phase.

Reinforcement learning model and behavioral predictions

We used our reinforcement learning model of the novel task to produce behavioral predictions for a pure model-free decision maker, a pure model-based decision maker, and an agent with a mixture of model-free and model-based control. This model was largely the same as before, with the exception of how the transition structures were learned.

Recall that participants who completed the novel paradigm performed a practice phase in which they were taught a set of deterministic transitions between the four spaceships and two different planets. Next, they were told that in the experimental phase, the rules and spaceships were the same as in the practice phase, but that there would be new planets. Therefore, we assumed that participants initially assigned equal probability to each spaceship traveling to either of the two planets, until they observed one transition for a first-stage state. After this observation, the model immediately infers the veridical transition structure for that first-stage state.

The participants who completed the Daw version of the two-step task learned about the transition structure through instruction and direct experience in a practice phase with two different planets. They were also told that the rules and spaceships would be the same, but that the planets would be new. Therefore, we assumed that participants initially assumed equal probability of transitioning between the spaceships and the planets. Next, we characterized transition learning by assuming that participants chose between three possible transition structures as a function of how many transitions they observed between the states and actions: a flat structure with equal probabilities between all states and actions, or two symmetric transition structures with opposite transition probabilities of 70% and 30% between the two spaceships and planets.
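One way to picture the choice among the three candidate transition structures is a simple counting rule. The sketch below is an assumption about how such a rule could work, not the fitted model's actual implementation: the function name is hypothetical, and the tie-breaking behavior (stay with the flat structure until the counts favor one mapping) is assumed.

```python
def infer_transition_structure(counts):
    """Pick one of three candidate structures from observed transition counts.

    counts[ship][planet] is the number of observed transitions
    (2 spaceships x 2 planets). Returns P[ship][planet].
    """
    # Evidence that ship 0 mostly reaches planet 0 and ship 1 mostly reaches planet 1
    consistent = counts[0][0] + counts[1][1]
    # Evidence for the opposite mapping
    opposite = counts[0][1] + counts[1][0]
    if consistent > opposite:
        return [[0.7, 0.3], [0.3, 0.7]]
    if opposite > consistent:
        return [[0.3, 0.7], [0.7, 0.3]]
    # Assumed default: flat structure while the evidence is balanced
    return [[0.5, 0.5], [0.5, 0.5]]
```

With no observations the rule returns the flat structure, which matches the assumed initial belief of equal transition probabilities.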

As we have argued above, in our novel paradigm the differences in the probability of repeating the previous first-stage choice do not show a major qualitative difference between a purely model-based and a purely model-free strategy, when plotted as a function of whether the previous start state is the same as or different from the current start state and whether a reward was obtained on the previous trial (Fig 15B). In fact, both a model-free and a mixture agent show an interaction between the two factors, start-state similarity and previous reward, with the likelihood of staying being higher when the current start state is the same as the start state on the previous trial than when it is different, but still significantly greater than chance. For the model-free agent, this reflects the presence of a highly rewarding action that the model-free learner learns to approach (for a detailed analysis, see [9]). This erosion of the qualitative predictions afforded by a stay/switch analysis is enhanced in these simulations compared to the original Doll investigation (Fig 5), presumably because reward observations in the current task carry more consequential information for behavior.

The lack of qualitative differences in single-trial staying behavior between the model-free and mixture strategies places special importance on model-fitting to quantify the balance between habit and control. Not only does model-fitting incorporate an influence of all previous trials on choice, but it also provides a numerical value for the relative weighting of model-based and model-free strategies (the w parameter).

In order to demonstrate that standard model-fitting procedures are sufficient to robustly estimate w on a per-participant basis, we generated data from 200 agents with randomly selected reinforcement learning parameters and then estimated these parameters using the model-fitting procedure described below. This method, described in more detail in the Supporting Information, yielded substantial correlations between the true and estimated parameters (including w, r = 0.68), validating our approach (S1 Text).

An alternative way to correct for the influence of reward in the previous trials is by predicting ‘staying’ behavior through a multilevel logistic regression analysis that accounts for this influence with a predictor that incorporates information about the outcome of the previous choice [9, 10]. The Supporting Information describes this method in detail; in brief, it produced qualitatively similar results to the model-fitting procedure (S2 Text).

Model fitting

In order to estimate each participant’s weighting parameter, we fitted one of two reinforcement learning models to each participant’s data, depending on which task they completed. This model was equivalent to the models described above, with the exception of the input into the softmax decision rule:

where the indicator variable rep(a) is defined as 1 if a is a first-stage action and is the same one as was chosen on the previous trial, and 0 otherwise. Multiplied with the ‘stickiness’ parameter π, this captures the degree to which participants show perseveration (π > 0) or switching (π < 0) at the first stage. The indicator variable resp(a) is defined as 1 if a is a first-stage action selecting the same response key as the key that was pressed on the previous trial, and 0 otherwise. Multiplied with the ‘response stickiness’ parameter ρ, this captures the degree to which participants repeated (ρ > 0) or alternated (ρ < 0) key presses at the first stage. We introduced this parameter since the spaceships’ positions were not fixed, hence participants could show perseveration in spaceship choices, button presses, or both.
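The softmax expression itself does not appear in the text here. As a rough sketch, assuming the rule adds both stickiness terms to the action value and scales the sum by the inverse temperature β, that is, P(a) ∝ exp(β[Q(a) + π·rep(a) + ρ·resp(a)]), first-stage choice probabilities could be computed as follows. The function and argument names are hypothetical, and whether β multiplies the stickiness terms is an assumption.

```python
import math

def softmax_with_stickiness(q_net, rep, resp, beta, pi, rho):
    """Choice probabilities over first-stage actions.

    q_net : action values fed into the decision rule (hypothetical name)
    rep   : 1 if the action repeats the previous first-stage choice, else 0
    resp  : 1 if the action uses the previously pressed key, else 0
    """
    logits = [beta * (q + pi * r + rho * k) for q, r, k in zip(q_net, rep, resp)]
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Equal values, but the first action repeats the previous spaceship choice
# while the second reuses the previous key (spaceship positions were not fixed).
probs = softmax_with_stickiness([0.5, 0.5], rep=[1, 0], resp=[0, 1],
                                beta=3.0, pi=0.2, rho=0.05)
```

Because the two indicators can point at different actions on the same trial, the two parameters can be estimated separately, which is the motivation given above for including both.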

We used maximum a posteriori estimation with empirical priors, implemented using the mfit toolbox [54], to fit the free parameters in the computational models to the observed data for each participant separately. Based on prior work [54], we used weak priors for the inverse temperature, β ~ Gamma(4.82, 0.88), and the stickiness parameters, π, ρ ~ 𝒩(0.15, 1.42), and flat priors for all other parameters. To avoid local optima in the estimation solution, we ran the optimization 25 times for each participant with randomly selected initializations for each parameter.
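The structure of this objective can be sketched in plain Python. This is not the mfit toolbox, which is a MATLAB package; every function name here is hypothetical. The sketch also assumes that the Gamma prior is parameterized by shape and scale, that the stickiness prior is a normal distribution, and that its second value is a standard deviation.

```python
import math
import random

def log_gamma_pdf(x, shape, scale):
    """Log density of Gamma(shape, scale); the scale parameterization is assumed."""
    if x <= 0:
        return -math.inf
    return ((shape - 1) * math.log(x) - x / scale
            - math.lgamma(shape) - shape * math.log(scale))

def log_normal_pdf(x, mean, sd):
    """Log density of a normal distribution; sd is assumed to be a standard deviation."""
    return -0.5 * math.log(2 * math.pi) - math.log(sd) - 0.5 * ((x - mean) / sd) ** 2

def neg_log_posterior(params, neg_log_lik):
    """params: dict with 'beta', 'pi', 'rho' plus any flat-prior parameters."""
    log_prior = (log_gamma_pdf(params["beta"], 4.82, 0.88)
                 + log_normal_pdf(params["pi"], 0.15, 1.42)
                 + log_normal_pdf(params["rho"], 0.15, 1.42))
    # Flat priors contribute only a constant, so they are omitted
    return neg_log_lik(params) - log_prior

def fit_with_restarts(objective, sample_init, local_search, n_starts=25, seed=0):
    """Run a local optimizer from several random starts and keep the best result."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_starts):
        candidate = local_search(objective, sample_init(rng))
        if best is None or objective(candidate) < objective(best):
            best = candidate
    return best
```

Here `local_search` stands in for whatever optimizer is used, and `n_starts=25` mirrors the 25 random initializations per participant.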

Correlation analysis. In order to assess the relationship between model-based control and reward in our novel paradigm, we computed the Pearson correlation coefficient between the estimated weighting parameter and reward rate obtained in the task. However, since we generated new sets of drifting rewards for each participant, baseline differences in average reward might weaken this correlation. Therefore, we calculated the difference between actual reward and average chance performance for each participant, and used this as the measure of reward obtained to correlate with the weighting parameter. For both tasks, chance performance was computed as the average value across the reward distributions.
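The chance correction and correlation described here amount to a short computation. The sketch below uses hypothetical function names and assumes each participant's reward distributions are available as per-option lists of trial-wise expected rewards.

```python
import math

def chance_corrected_reward(reward_rate, reward_distributions):
    """Subtract chance performance (mean across the reward distributions)."""
    values = [v for option in reward_distributions for v in option]
    chance = sum(values) / len(values)
    return reward_rate - chance

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

The correlation of interest is then `pearson_r(w_estimates, corrected_rewards)`, with one entry per participant in each list.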

Exclusion criteria

Participants were excluded from analysis if they timed out on more than 20% of all trials (more than 25), and we excluded all trials on which participants timed out (average 2.7%). After applying these criteria, data from 381 participants were submitted to the model-fitting procedure.

Results

Behavioral performance

For the participants who completed the Daw task, we found that a reward on the previous trial increased the probability of staying with the previous trial’s choice [t(196) = 7.70, p < 0.001; Fig 16A], but that this effect interacted with the type of transition on the previous trial [t(196) = 5.38, p < 0.001]. This result replicates the basic finding on the original two-step task, confirming that participants used both model-based and model-free strategies.

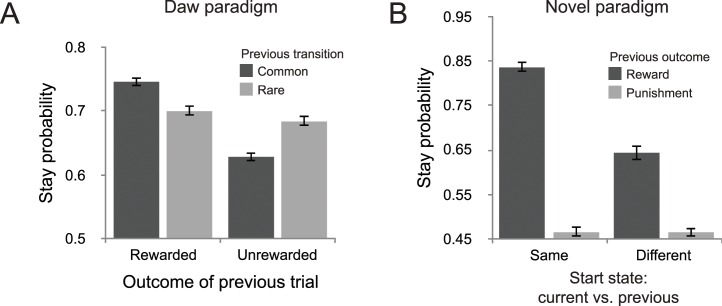

Fig 16. Behavioral performance on the two-step tasks.

(A) Behavioral performance on the Daw task showed both a main effect of previous outcome and an interaction between previous outcome and transition type, suggesting that behavior reflected both model-based and model-free strategies. (B) Behavioral performance on the novel paradigm showed a significant difference in stay behavior between the same and different start-state conditions after a reward, suggesting that behavior was not fully model-based. Error bars indicate within-subject SEM.
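Stay probabilities of the kind plotted in panel A can be computed from one participant's trial sequence roughly as follows. The data layout (a list of dicts with `choice`, `rewarded`, and `common` keys) and the function name are hypothetical, and the sketch assumes consecutive list entries are consecutive trials.

```python
from collections import defaultdict

def stay_probabilities(trials):
    """Probability of repeating the first-stage choice, split by the
    previous trial's outcome and transition type.

    trials: list of dicts with 'choice', 'rewarded' (bool), 'common' (bool).
    Returns {(rewarded, common): stay probability}.
    """
    counts = defaultdict(lambda: [0, 0])  # condition -> [stays, total]
    for prev, cur in zip(trials, trials[1:]):
        key = (prev["rewarded"], prev["common"])
        counts[key][0] += int(cur["choice"] == prev["choice"])
        counts[key][1] += 1
    return {key: stays / total for key, (stays, total) in counts.items()}
```

For the novel paradigm, the same structure applies with the transition-type flag replaced by whether the current start state matches the previous one.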

For the participants who completed the new paradigm, we found that a positive reward on the previous trial significantly increased staying behavior above chance for both same and different current start states (p < 0.001 for both effects), but this effect was larger for the same compared to the different start-state condition [t(183) = 9.64, p < 0.001; Fig 16B]. This pattern of behavior suggests that the participants did not employ a pure model-based strategy (compare with Fig 15B). However, as described above, these raw stay probabilities do not allow us to assess the relative contributions of model-based and model-free strategies to control: both a purely model-free agent and an agent with a mixture of model-based and model-free strategies are predicted to show an increased stay probability after a win in a different start state, since a reward is indicative of a history of recently rewarded trials.

Model fits

The reinforcement learning models described above incorporate the (decayed) experience on all previous trials into choice and are therefore better able to dissociate the contributions of the two strategies. Each model consists of a model-free system that updates action values using temporal-difference learning and a model-based system that learns the transition model of the task and uses it to compute action values online. The weighting parameter w determines the relative contributions of model-based and model-free control. The stickiness parameters π and ρ capture perseveration at the stimulus-choice level and the response level, respectively.
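The three ingredients named here can be illustrated with a simplified sketch. It is not the full fitted model: the function names are hypothetical, the temporal-difference step omits the eligibility trace λ, and the mixture assumes the common convention in which w = 1 is purely model-based and w = 0 purely model-free.

```python
def model_free_update(q, reward, alpha):
    """One temporal-difference step toward the observed reward (no eligibility trace)."""
    return q + alpha * (reward - q)

def model_based_values(transitions, second_stage_values):
    """Expected future reward of each first-stage action under the transition model.

    transitions[a][s]      : probability that action a leads to second-stage state s
    second_stage_values[s] : value of the best available action in state s
    """
    return [sum(p * v for p, v in zip(row, second_stage_values))
            for row in transitions]

def net_values(q_mb, q_mf, w):
    """Weighted mixture of model-based and model-free first-stage values."""
    return [w * mb + (1 - w) * mf for mb, mf in zip(q_mb, q_mf)]

# Example with the 70%/30% transition structure of the Daw paradigm
q_mb = model_based_values([[0.7, 0.3], [0.3, 0.7]], [1.0, 0.0])
q_net = net_values(q_mb, [0.2, 0.4], w=0.5)
```

In this example the model-based values favor the first action while the model-free values favor the second, so the preferred action depends on w.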

We first investigated whether the inclusion of either stickiness parameter (π and ρ) was justified by comparing both the Bayesian Information Criterion (BIC) and Akaike Information Criterion (AIC) for models that included none, one, or both parameters, for both tasks separately (see S1 Table). For the Daw task, we found that both goodness-of-fit measures favored a model that included both stickiness parameters. For the novel task, the BIC favored a model with response stickiness but not stimulus stickiness included, whereas the AIC favored a model that included both stickiness parameters. We decided to favor the more parsimonious model without stimulus stickiness, and parameter fits from this model are reported below, but the results did not qualitatively change when the stimulus stickiness parameter was included.
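The two criteria can disagree because BIC penalizes each extra parameter by ln(n) while AIC penalizes it by 2. The sketch below uses the standard definitions; the log-likelihoods, parameter counts, and number of observations are made-up values chosen only to show such a disagreement and are not taken from the study.

```python
import math

def aic(log_lik, n_params):
    """Akaike Information Criterion (lower is better)."""
    return 2 * n_params - 2 * log_lik

def bic(log_lik, n_params, n_obs):
    """Bayesian Information Criterion (lower is better)."""
    return n_params * math.log(n_obs) - 2 * log_lik

# Hypothetical fits: the larger model gains 1.5 log-likelihood units
# for one additional parameter.
n_obs = 250
small = {"log_lik": -150.0, "k": 5}
large = {"log_lik": -148.5, "k": 6}

aic_prefers_large = aic(large["log_lik"], large["k"]) < aic(small["log_lik"], small["k"])
bic_prefers_small = (bic(small["log_lik"], small["k"], n_obs)
                     < bic(large["log_lik"], large["k"], n_obs))
```

With these numbers the AIC favors the larger model and the BIC favors the smaller one, the same kind of split reported for the novel task.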

Second, we used model comparison with both goodness-of-fit measures to analyze whether the hybrid model including the w parameter fit the data better than either a pure model-based or model-free model (see S2 Table). For the Daw task, we found that the AIC favored the hybrid model, but that the BIC favored the pure model-free model. However, there have been many reports in the literature that justify the inclusion of the weighting parameter for this task [8], and so we adopt the hybrid model for consistency with prior work. (Note also that it would be impossible to assess the relationship between model-based control and reward without using the hybrid model). For the new task, we found that both BIC and AIC favored the hybrid model compared to the pure model-based and model-free models. This suggests that human performance in the new paradigm is characterized by a mixture of model-based and model-free strategies.

In summary, the model fits presented below used all six free parameters for the participants who completed the Daw paradigm, but omitted the stimulus stickiness parameter for the participants who completed the novel paradigm. These parameter estimates and their quartiles are depicted in Table 1.

Table 1. Best-fitting parameter estimates shown as median plus quartiles across participants.

| Paradigm | Statistic | β | α | λ | π | ρ | w |
|---|---|---|---|---|---|---|---|
| Daw | 25th percentile | 2.35 | 0.11 | 0.25 | 0.03 | -0.03 | 0.00 |
| | Median | 3.35 | 0.34 | 0.65 | 0.21 | 0.05 | 0.27 |
| | 75th percentile | 3.88 | 0.57 | 1.00 | 0.40 | 0.19 | 0.66 |
| Novel | 25th percentile | 0.51 | 0.01 | 0.07 | | -0.29 | 0.04 |
| | Median | 0.72 | 0.67 | 0.62 | | -0.06 | 0.48 |
| | 75th percentile | 3.31 | 1.00 | 1.00 | | 0.14 | 0.85 |