The megamap is a quasi-continuous attractor network built on the experimental fact that each place cell has multiple, irregularly spaced place fields in a large environment. This flexibility allows the megamap to seamlessly cover much larger environments than standard rigid continuous attractor models. The megamap has additional inherent properties, such as robustness to degraded inputs and ease of storing nonspatial information, that render it suitable as a basic building block for modeling hippocampal activity.

Keywords: continuous attractor, Poisson, activity bump, combinatorial mode, CA3

Abstract

The problem of how the hippocampus encodes both spatial and nonspatial information at the cellular network level remains largely unresolved. Spatial memory is widely modeled through the theoretical framework of attractor networks, but standard computational models can only represent spaces that are much smaller than the natural habitat of an animal. We propose that hippocampal networks are built on a basic unit called a “megamap,” or a cognitive attractor map in which place cells are flexibly recombined to represent a large space. Its inherent flexibility gives the megamap a huge representational capacity and enables the hippocampus to simultaneously represent multiple learned memories and naturally carry nonspatial information at no additional cost. On the other hand, the megamap is dynamically stable, because the underlying network of place cells robustly encodes any location in a large environment given a weak or incomplete input signal from the upstream entorhinal cortex. Our results suggest a general computational strategy by which a hippocampal network enjoys the stability of attractor dynamics without sacrificing the flexibility needed to represent a complex, changing world.

NEW & NOTEWORTHY

The megamap is a quasi-continuous attractor network built on the experimental fact that each place cell has multiple, irregularly spaced place fields in a large environment. This flexibility allows the megamap to seamlessly cover much larger environments than standard rigid continuous attractor models. The megamap has additional inherent properties, such as robustness to degraded inputs and ease of storing nonspatial information, that render it suitable as a basic building block for modeling hippocampal activity.

an attractor network is a widely used theoretical framework for constructing neural models of spatial, declarative, and episodic memory. Attractor dynamics arise naturally in models of recurrent neural networks with Hebbian-type associative synaptic plasticity (Cerasti and Treves 2013; Kali and Dayan 2000; Knierim and Zhang 2012). The resulting attractor networks exhibit emergent properties desirable for memory storage, such as full recalls from partial cues and robustness against noise and damage (Hopfield 1982; Marr 1971; McNaughton and Nadel 1990), and they have become increasingly useful for neurophysiology by offering a practical framework for experimental design and data interpretation (Peyrache et al. 2015; Stella et al. 2012; Yoon et al. 2013).

The hippocampus, especially the CA3 area with its prominent recurrent collaterals and proposed role in associative memory (Johnston and Amaral 1998; Nakazawa et al. 2004), has long been modeled as a discrete attractor network representing nonspatial information and as a continuous attractor network representing space (Knierim and Zhang 2012; Leutgeb and Leutgeb 2007; Rolls 2007). The latter class of models has largely been built on the seminal theory commonly referred to as the multichart model (Samsonovich and McNaughton 1997; Tsodyks 1999). The fundamental idea is that a given spatial environment is represented by a cognitive map (chart) in which each place cell is located at its single place field center (Fig. 1A). The strength of recurrent connections then decays exponentially with distance between cells on the chart, consistent with Hebbian plasticity. The chart has become a useful convention by which one may visualize place cell activity as a localized activity bump on the chart centered at the animal's current location. Moreover, the model offers a plausible explanation for experimental data, such as the relative stability of place cell activity in the CA3 compared with that in the CA1 (Lee et al. 2004; Vazdarjanova and Guzowski 2004). Numerous studies have used the chart as a building block, modeling additional biological mechanisms and multiple charts to capture various phenomena, e.g., global remapping (Samsonovich and McNaughton 1997), partial remapping (Stringer et al. 2004), rate remapping (Rennó-Costa et al. 2014; Solstad et al. 2014), and incorporation of nonspatial information (Rolls et al. 2002). Other studies have shown how the chart may self-organize (Cerasti and Treves 2013; Kali and Dayan 2000; Stringer et al. 2002) and have generalized the attractor to model context dependence (Doboli et al. 2000).

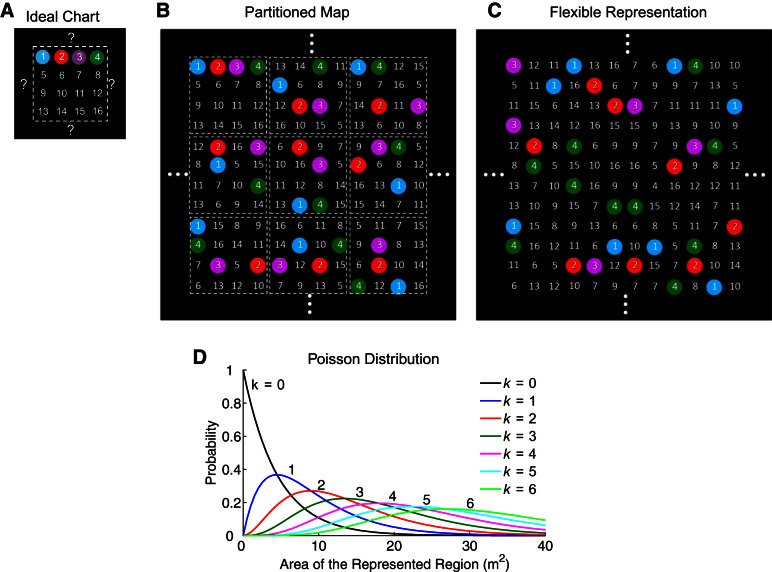

Fig. 1.

Basic models of place cell representations. In this schematic, 16 place cells represent a large uniform space, where cell numbers are placed at respective place field centers, and the fields of cells 1–4 are additionally indicated by color. A: models of single-peaked attractor networks assume each cell represents at most one location. This rigid representation limits the environmental size, because it is unclear how the network would represent new locations after all cells have been used. B: one possible remedy is to partition the region, treating each subregion as a distinct environment in which each cell has at most one place field. The resulting representation is piecewise rigid with artificial boundaries between subregions. C: place cells are flexibly recombined for the flexible representation on which the megamap is built. A single cognitive map without artificial boundaries or size limitations represents the large space. D: we construct a benchmark model for the flexible representation by assuming the number of place fields (k) for a given cell in the represented region follows the Poisson distribution (Eq. 1). The average field density is given by λ = −ln(0.8) m−2 ≈ 0.22 m−2.

The ideal chart is single peaked, i.e., each place cell exhibits a single place field within a single environment. This simplification, which is common to most attractor models, is justifiable in a small, standard environment (∼1 m2), in which a majority of place cells exhibit no more than one place field. The chart does not extend naturally to large environments. Since each cell appears only once on the chart, it can only represent a small area before all cells are used (McNaughton et al. 2006). Assuming ∼20% of cells in the CA3 have place fields within a 1-m2 environment, the entire CA3 could represent at most 5 m2, a much smaller area than the foraging range of a rat (Davis 1953; Innes and Skipworth 1983; Lambert et al. 2008; Taylor 1978).

One possibility is that place fields scale with the environmental size (Muller et al. 1987; O'Keefe and Burgess 1996). A scaled representation would certainly increase the capacity of any cognitive map, but it would also coarsen the map's spatial resolution. Another possibility is that the animal partitions the environment, treating each subregion as a distinct environment represented by a distinct chart (Fig. 1B). This simple model removes size limitations by imposing artificial boundaries on a seemingly continuous space, and it is unclear how such a partitioned cognitive map might self-organize in a novel environment. Furthermore, while the boundaries between charts may be difficult to detect (Samsonovich 1998), they have not been apparent in recent experiments conducted in large environments (Fenton et al. 2008; Park et al. 2011; Rich et al. 2014). Rather, these experimental data seem to indicate that the animal represents a larger than standard environment through a single cognitive map in which many place cells exhibit multiple, irregularly spaced place fields. Thus an attractor network based on a single-peaked code is appropriate for modeling head-direction cells (Peyrache et al. 2015; Redish et al. 1996; Seelig and Jayaraman 2015; Skaggs et al. 1995; Zhang 1996), grid cells within a module (McNaughton et al. 2006; Yoon et al. 2013), and even place cells representing a small environment (McNaughton et al. 2006; Samsonovich and McNaughton 1997; Tsodyks 1999); but it becomes unnatural for place cells representing a large environment.

We offer a more natural theoretical framework for an attractor network representing a large space. We propose that hippocampal networks are built on a megamap, or a single, quasi-continuous attractor map in which place cells with structured recurrent connections are flexibly recombined to represent a large space (Fig. 1C). The megamap's underlying place code, which we refer to as the flexible representation, is based directly on experimental data (Fenton et al. 2008; Park et al. 2011; Rich et al. 2014). However, the megamap is the first model to explicitly examine the structure and properties of an attractor network built on this flexible representation. Our focus in this study is to present the megamap as a basic unit of a cognitive map by elucidating the structure of the optimal recurrent connections, demonstrating the novel manner in which an activity bump on the megamap may be visualized, and comparing the properties of the megamap with those of the alternative single-peaked chart. The model also reveals two emergent properties of the multipeaked attractor network: its adaptation to environmental changes by recombining learned memories, and its incorporation of nonspatial information at no additional cost. We close by discussing experimental predictions and implications of the megamap.

MATERIALS AND METHODS

Poisson distribution of place fields on the megamap.

The megamap represents a large space through flexible recombinations of place cells. In other words, each cell in the megamap has multiple, irregularly spaced place fields, and there is no spatial correlation among place field pairs. We construct a benchmark model by setting place fields according to the Poisson distribution, for which the probability that a given place cell has k place fields in a region of area A is given by

| (1) |

The single parameter, λ, is the average number of place fields per unit area for a single cell. By using Eq. 1 with k = 0, λ = −ln[P(0)]/A, where P(0) is the proportion of silent cells in a region of area A. Unless otherwise specified, we set λ = −ln(0.8)/(1 m2) ≈ 0.22 m−2. This estimate is derived from the observation that roughly 80% of CA3 place cells are silent in standard recording enclosures (A ∼ 1 m2) (Alme et al. 2014; Vazdarjanova and Guzowski 2004). For simplicity, we assume λ is constant for all cells, rather than variable (Rich et al. 2014). The place fields of each cell are centered at random locations throughout the environment.

Flexible representation of a large space.

We first consider the implications of a flexible, multipeaked place code without modeling an underlying dynamical system. Rather, we initially consider a flexible representation in which each place cell exhibits Gaussian place fields distributed according to the Poisson distribution.

In this context the representational capacity refers to the number of locations uniquely encoded on the cognitive map. For the single-peaked and flexible representations, we estimate the representational capacity by computing the number of unique subsets of place cells that may be co-active in an activity bump. We compute the analogous measure of the representational capacity for grid cells as done by Fiete et al. (2008). Consider a population of N grid cells divided evenly among M modules. Unique subsets of co-active grid cells within a module appear to encode distinct phases of the animal's location with respect to the period (spacing) of the module. Since there is a rigid spatial relation among phases within a module (Yoon et al. 2013), a single module can uniquely encode N/M phases, analogous to the single-peaked place code. The entire population may encode the animal's actual location through a unique set of phases over all modules, bounding the representational capacity by Cgrid ≤ (N/M)M. [One may compute the capacity in terms of distance uniquely represented by determining the uncertainty of a downstream neural population in reading the location encoded by place cells or the phase encoded by grid cells (Fiete et al. 2008). Assuming a similar uncertainty for either population, the qualitative results would be unchanged.]

In this report the spatial resolution refers to the least mean squared error between the animal's location [x = (x1, x2)] and the decoded location [x̂ = (x̂1, x̂2)] given any scheme for decoding the stochastic spike vector s, whose elements are the number of spikes for each place cell in the time window T. The spatial resolution is determined analytically by computing the Fisher information (Kay 1993). Regardless of the place field distribution, element (i, j) of the 2 × 2 Fisher information matrix carried by N place cells is given by

| (2) |

where the average firing rate of cell n with place field centers {cnm}m=1Mn and peak firing rate a is given by

| (3) |

Neglecting the rare overlapping place fields of individual cells [which create slight variations in F(x) over the region], only one term of the summation in Eq. 3 is nonzero. This permits Eq. 2 to be simplified to a single summation over all place fields of all cells. Assuming x is at least a place field width from any boundary, in the limit of a large population,

where A is the area of the region, Nflds is the number of place fields for the entire population, and ρ = Nflds/A is the density of all place fields in the population. Therefore,

Finally, the expected value of the squared error is bounded below by

| (4) |

by the Cramér-Rao lower bound, assuming x̂ is an unbiased estimator (E[x̂] = x).

An upstream neuronal population can theoretically decode the stochastic spike vector s to obtain this optimal accuracy (Eq. 4) by computing the maximum likelihood estimate (MLE), which has biologically plausible implementations (Jazayeri and Movshon 2006; Pouget et al. 1998). The MLE is given by

| (5) |

assuming the place cell spike trains are independent Poisson processes such that

is the probability that cell n has sn spikes in the time window T given the animal's location x.

We numerically test the agreement between the analytical spatial resolution (Eq. 4) and the mean squared error in the MLE (Eq. 5) for the standard, scaled, and flexible place cell representations (see Fig. 4). For the standard and scaled representations, each of N place cells has a single place field, where the place field centers are distributed uniformly throughout the region. The place field width is held constant for the standard representation, while the place field width (as controlled by σ in Eq. 3) for the scaled representation increases with the environmental area such that the number of co-active cells at any location is constant. For the flexible representation, which is used to generate the megamap, place fields of each cell follow the Poisson distribution (Eq. 1).

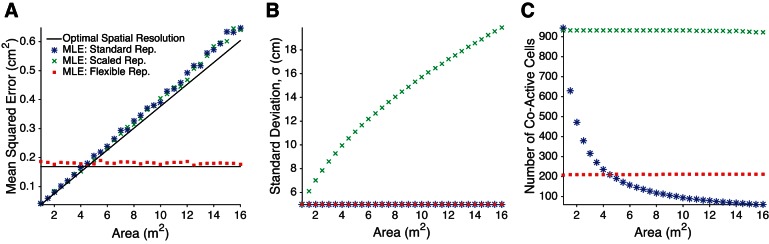

Fig. 4.

Spatial resolution, as quantified by the least mean squared error (LMSE) between the animal's position and the position decoded from the stochastic spikes of place cells with idealized Gaussian place fields (Eq. 3). A: the spatial resolution grows linearly with the area of the represented region if each place cell has exactly one place field, whether the place field size is constant (Standard Rep.) or grows with the environmental size (Scaled Rep.). In contrast, the LMSE is constant for any area given the Poisson distribution of place fields used for the megamap (Flexible Rep.). The apparent advantage of the single-peaked codes over the flexible representation when A < 1/λ is an artifact, since many cells in the flexible representation are silent in these small regions. The maximum likelihood estimates (MLEs; Eq. 5) approximate the LMSE (black; Eq. 4), as expected since the MLEs are unbiased estimators. (The mean error along either dimension is negligible: −0.0080 ± 0.0076 cm for the single-peaked place code and 0.0006 ± 0.0063 cm for the megamap.) B: the standard deviation of the Gaussian place fields (Eq. 3) grows with the environmental area only for the scaled representation. C: the number of co-active place cells at any single position is constant for the scaled and flexible representations but decreases for the standard representation, since the number of cells (N) is constant. For all simulations presented, N = 22,500, T = 250 ms, λ = −ln(0.8) m−2, and a = 15 Hz (see materials and methods for more details).

We place the animal at 50 random locations (not necessarily locations on which place fields are centered) at least 20 cm from any boundary of the region. At each location we compute the MLE for each of 50 stochastic spike vectors, s. We solve Eq. 5 by finding the maximizer over the vertices of a square grid of length 10 cm and pixel size 0.05 × 0.05 cm2 centered at the animal's true location. We also perform a coarse exhaustive search with a pixel size of 4 × 4 cm2 over the entire region to catch outliers. We then plot the mean squared error between the MLE and the animal's location, averaged over all 2,500 iterates. This process is repeated over regions varying in size with T = 250 ms, N = 22,500, a = 15 Hz, and σ = 5 cm.

Dynamical system of the megamap.

We examine how an associative network of place cells may contribute to the formation and stability of the activity bump on the megamap by simulating a standard firing rate model (Li and Dayan 1999; Wilson and Cowan 1972) consisting of a network of N place cells with recurrent excitation, global feedback inhibition, and external input. The state vector, u ∈ ℝN×1, loosely represents the depolarization of each place cell and is governed by

| (6) |

where τ = 10 ms and 1 ∈ ℝN×1 denotes a vector of all ones.

The recurrent excitation, Wf, provides the internal network drive. The weight matrix, W ∈ ℝN×N, describes the strength of connections among place cells. The activity vector, f ∈ ℝN×1, represents the firing rate of place cells and depends on the state through the threshold linear gain function,

| (7) |

where [·]+ = max(·, 0) and fpeak = 15 Hz. We use a threshold linear gain function because of its simplicity for analysis, but similar qualitative results would be obtained using a sigmoid gain function.

All interneurons are modeled as a single inhibitory unit providing global feedback inhibition so that only the external input and recurrent hippocampal input provide a spatial signal. Future versions of the model may incorporate a weak spatial signal carried by hippocampal theta cells (Kubie et al. 1990). The activity of the inhibitory unit, fI, depends on the total network activity through the threshold linear gain function,

The inhibitory threshold is set to 90% of the total desired activity (Eq. 9). Explicitly, θ = 0.9·1Tf̄(x), which is constant for all x. The inhibitory activity is scaled by wI to provide global inhibition to all place cells, controlling the overall network activity.

The external input, I ∈ ℝN×1, carries sensory information about the animal's location or self-motion. We model the collective effect of the various external inputs into the hippocampus as a Gaussian shaped pattern that peaks at the animal's location on the megamap, and we focus our attention on the inherent dynamics of the attractor network. The external input into cell n when the animal is at location x is modeled by

| (8) |

so that the input peaks at the place field centers of cell n, {cnm}m=1Mn. The parameter σu is set such that the external input and the network state have the same width.

We integrate the dynamical system using the staggered Euler scheme (Hines 1984) with a time step of 0.1 ms. We consider the state to be an equilibrium state if its relative change over 50 ms is less than 10−6. The activity of an ensemble of hippocampal place cells can be decoded to predict the animal's perceived location (Brown et al. 1998; Davidson et al. 2009; Wilson and McNaughton 1993; Zhang et al. 1998). We decode the network activity through a process called maximum likelihood Euclidean distance decoding (Cerasti and Treves 2013; Rolls et al. 1997). We find the location, x̂, at which the desired activity, f̄(x̂) (Eq. 9), best approximates the network activity, f(t). Explicitly, the location encoded by the activity bump satisfies

We compute x̂ by first finding x̂1, the minimizer over all vertices in the region on which a place field is centered. Since the activity bump may encode locations between place field centers, we then refine the search about x̂1. The final decoded location is the minimizer over the vertices of a square grid of width d with pixel size 0.1 × 0.1 cm2 centered at x̂1, where d is the distance between neighboring place field centers along either dimension.

Construction of the ideal megamap.

We construct a benchmark model to determine whether a network with a flexible representation can exhibit stable, Gaussian-like place fields. Unless otherwise specified, the megamap represents a 3 × 3-m2 region and comprises 11,240 place cells (9,731 active cells and 1,509 silent cells).

We first set the desired place field centers. The number of place fields for each cell n (Mn) is set according to the Poisson distribution (Eq. 1) with density λ = −ln(0.8) m−2 ≈ 0.22 m−2. Each place field center {cnm}m=1Mn is then assigned to a random vertex of a rectangular grid over the entire region such that exactly one field is centered at each vertex, resulting in a place field center each 4 cm2.

When the external input is spatially tuned, the depolarization of a place cell with a single place field should ideally decay as a Gaussian with the distance between the place field center and the animal's location. Under the simplification that multiple place fields of a cell sum linearly, the desired activity of a given cell n when the animal is at location x is given by

| (9) |

where the state and activity tuning curves are respectively given by

The gain function, g(u), is defined in Eq. 7. The parameter u0 = 0.2 permits subthreshold depolarization, and the parameter σu = 5.94 cm is set such that ftune best approximates a Gaussian tuning curve with a standard deviation of 5 cm, as expected for place cells in the dorsal hippocampus (Muller 1996).

We set the inhibitory weight (wI) and recurrent weights among place cells (W) such that the desired activity vectors (Eq. 9) correspond to fixed points of the dynamical system (Eq. 6) when the external input is given by Eq. 8 with Ipeak = 0.3. For the resting state of a place cell to be −u0, the inhibitory weight is given by the explicit expression,

Since the summation of the ideal network activity (1Tf̄) is constant throughout the region (within a place field width of a boundary), wI is independent of the animal's location.

The optimal recurrent weights onto a given cell j are initialized to zero and set iteratively through the delta rule,

| (10) |

where xi are all vertices, or locations at which a place field is centered, within the set learning region. Unless otherwise specified, the learning region is any location at least 20 cm from any boundary of the environment. The projected activity vector is given by

The resulting weights may be considered optimal since f̄(xi) corresponds to a fixed point of Eq. 6 if f̄(xi) = fproj(xi), and the delta rule minimizes the least squared error between the projected and desired activity vectors for each cell j. The learning rate (s) is sufficiently small such that fproj(xi) → f̄(xi) smoothly. No sparsity structure is enforced except the exclusion of self-connections. A predefined sparsity structure could be incorporated by holding f̄k = 0 when setting Wjk if cell k does not connect to cell j. The results would be unchanged on average, but all-to-all connections are used for this study to avoid an additional source of noise. The weights are held constant after the learning phase.

We compare this benchmark model to a second megamap constructed through a learning rule adapted from a basic Hebbian rule widely used in attractor models of place cells (Kali and Dayan 2000; Rolls et al. 2002; Samsonovich and McNaughton 1997; Solstad et al. 2014; Stringer et al. 2004). The recurrent weight between any two cells j and k is given by

| (11) |

for some tuning function, wtune. When wtune is a Gaussian function, W approximates the weights given uniform Hebbian learning (Cerasti and Treves 2013; Kali and Dayan 2000). We set wtune = wsingle, the optimal weights for the single-peaked place code when Ipeak = 0.3. To compute wsingle, we iteratively apply Eq. 10 over a circular region with a radius of 40 cm represented by a network of single-peaked place cells. The resulting vector of optimal weights onto the cell representing the region's center provides a discrete set of data points for the monotonically decreasing function, wsingle(d), where d denotes the distance between the place field centers of the pre- and postsynaptic cells. For d ≤ 12 cm, we set wsingle(d) as the polynomial of degree 3 that is the least squares approximation to the data points with d ≤ 12 cm. We define wsingle(d) = 0 for d > 12 cm.

The fundamental difference between the optimal weights generated by Eq. 10 and the Hebbian weights generated by Eq. 11 is that wsingle is the average spatial profile for the optimal weights and a lower bound for the Hebbian weights. This creates differences in the attractor dynamics as the megamaps become very large, but the two megamaps behave similarly in small to moderately large environments (up to at least 9 m2).

Gradual extension of the megamap.

We gradually extend the megamap to model the animal continually learning its local surroundings in two settings. First, we model the animal moving from one end to the other of a track 2,000 × 0.7 m2. We begin by setting place fields over the entire track according to the Poisson distribution. To construct the optimal megamap, Mopt, we initialize the weight matrix by Eq. 10 with the learning region set to all vertices within the initial 100 cm of the track that are at least 15 cm from any boundary of the track. For each subsequent iteration, we update the weight matrix by Eq. 10, shifting the learning region to the right by 50 cm. To construct the Hebbian megamap, Msum, the weights are updated at each iteration to incorporate all new place field pairs in the learning region into the summation of Eq. 11.

We test the megamap by computing the equilibrium state when the external input (Eq. 8) has amplitude Ipeak = 0.3 and the animal is placed at an initially learned location or at a recently learned location. Ideally, the equilibrium state approximates the ideal activity (Eq. 9). The megamap has lost all its inherent spatial stability if the equilibrium state on the megamap is indistinguishable from that of a feedforward model for which the recurrent excitation has lost all spatial tuning, leaving only the spatial signal of the external input. For the most direct comparison of the two models, we compute the equilibrium state of the feedforward model (û) from that of the megamap (u) by replacing the recurrent excitation of the latter with a global shift over all place cells. Explicitly,

| (12) |

where f and fI are the equilibrium activity of the place cell network and of the inhibitory unit of Mopt when the animal is placed at the beginning of the track (x = xinit) after learning the entire track. The corresponding equilibrium state of the megamap satisfies

| (13) |

Second, we iteratively extend a square environment to compare the two-dimensional megamap to the megamap representing the linear track (which may be considered as approximately one-dimensional). At each iteration n, we add 1-m-wide strips to the bottom and right edges of the square. We do this by sequentially adding (2n − 1) subregions of size 1 × 1 m2 to extend the megamap from (n − 1)2 m2 to n2 m2. To add each 1-m2 novel subregion to Mopt, we use Eq. 10 with the learning region set to all vertices that are either 1) in the novel subregion and at least 15 cm from the boundaries of the learned environment or 2) in the learned environment no more than 15 cm from the novel subregion. This protocol avoids artificial boundaries between subregions. We construct Msum by using Eq. 11.

Emergent properties in large environments.

We examine the response of both small and large megamaps to conflicting external inputs. For the simulations presented in Figs. 10 and 11, the small megamap represents a 3 × 3-m2 region and is used throughout much of the article. The large megamap is constructed by gradually learning a track 500 × 0.7 m2, as presented in Fig. 8 (Mopt). Since the learning region excludes the top and bottom 15 cm, the large megamap represents ∼200 m2. Note that even the small megamap represents an area much larger than the standard environments typically modeled (∼1 m2). The megamaps are driven by the conflicting external input,

| (14) |

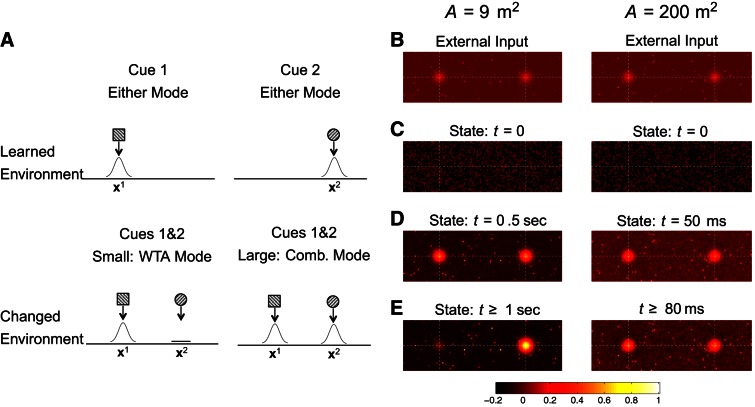

Fig. 10.

Coactivation of embedded activity bumps. A megamap representing a sufficiently large environment can adapt to a changed environment by simultaneously representing multiple learned memories never before encountered together. A: this schematic illustrates a cue conflict situation in which a learned environment is changed such that cues that were originally in different locations are now present at the same location, inducing a competition between 2 embedded (learned) activity bumps. Top, the 2 activity bumps illustrated, which encode 2 locations through distinct subsets of active cells, were embedded into the megamap when the animal learned the original environment. Bottom, in a small environment, the rigid attractor dynamics of the megamap cause the place cell activity to converge to a single learned memory (WTA mode). In a large environment, the place cell activity stably encodes both cues through 2 co-active activity bumps (combinatorial mode). B–E: for the simulation, the megamap representing 9 m2 operates in the WTA mode (left), and the megamap representing 200 m2 operates in the combinatorial mode (right). A 220 × 70-cm2 subregion of each environment is shown. The input or state of each place cell is plotted redundantly at all its place field centers from the original learned environment. B: the conflicting external input (Eq. 14 with Ipeak1 = Ipeak2 = 0.15) signals for 2 locations on the megamap. C: the initial state is random. D: 2 activity bumps initially appear on both megamaps. E: only 1 activity bump persists in time for the small megamap, but the equilibrium state of the large megamap has 2 activity bumps encoding both cue sets. See Fig. 11, A and B, for more details.

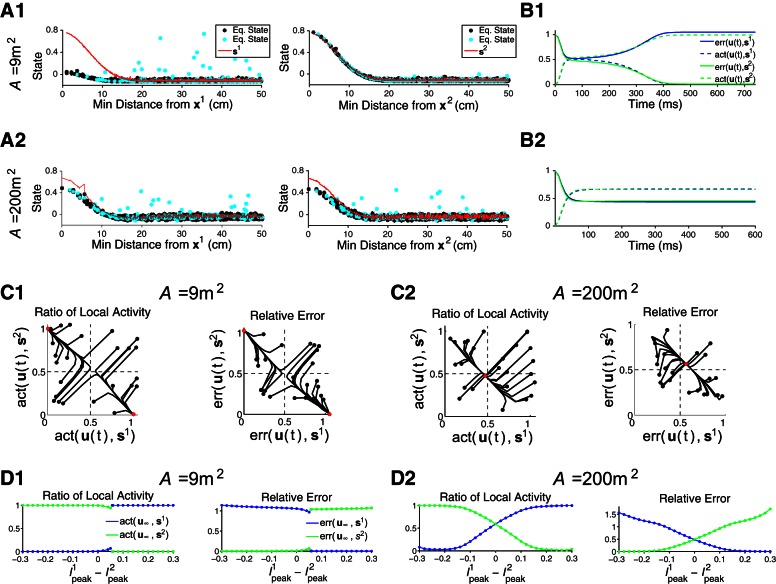

Fig. 11.

Dynamics of the operational modes. Conflicting external input signaling for 2 locations (x1 and x2) reveals functional differences between a megamap representing 9 m2, which operates in the WTA mode, and a megamap representing 200 m2, which operates in the combinatorial mode (Fig. 10A). The external input is given by Eq. 14, where Ipeak1 = Ipeak2 = 0.15 for A–C. A: the small megamap ignores the signal for x1 and fully represents x2 (A1), whereas the large megamap represents both locations (A2). The equilibrium state (Eq. state; data points; also shown in Fig. 10E) is plotted as a function of the minimal distance between the given cell's place field centers and x1 (left) or x2 (right). Red curves show si, the equilibrium state given the single-peaked external input, I(xi; Ipeaki). Only cells with place fields near x2 are active for the small megamap, where the states of cells with place field centers within 50 cm of both x1 and x2 are highlighted in cyan. B: although 2 activity bumps emerge from the random initial state of each megamap, the co-active bumps are stable only in sufficiently large environments. We quantify the network's representation of each location in 2 ways: err[u(t), si], the relative 2-norm error between the state vector u(t) and si (solid); and act[u(t), si], the ratio of the corresponding activity (Eq. 7) summed over all cells with a place field center within 10 cm of xi (dashed). C: the small megamap shows hysteresis (C1), but the large megamap combines the representation of each location regardless of the initial state (C2). Black curves show the evolution of the activity ratio (left) and relative error (right) from their initial values (black dots) to their equilibrium values (red dots). D: the small megamap pattern separates, its equilibrium state (u∞) sharply transitioning from s2 to s1 as the external input gradually shifts from I(x2; 0.3) to I(x1; 0.3) (D1). The large megamap gradually transitions between the 2 embedded activity bumps, amplifying the difference in the input signals (D2). In both cases, u(0) = s2.

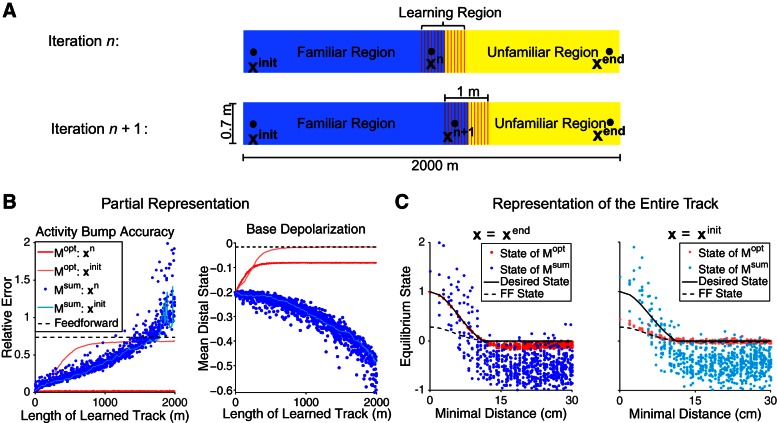

Fig. 8.

Gradual extension of the megamap representing a linear track. The optimal megamap (Mopt; constructed via Eq. 10) continuously unfolds as the animal explores novel terrains, stably representing newly acquired locations at the cost of previously learned representations. In contrast, a megamap constructed through a widely used Hebbian learning rule (Msum; constructed via Eq. 11) attempts to uniformly represent the entire track. A: at each iteration the animal learns a local subregion (shaded red) consisting of familiar (blue) and unfamiliar (yellow) locations. B, left: as the learned portion of the track grows, Mopt continually learns the representation of newly encountered locations (dark red), but its representation of previously learned locations gradually degrades (light red). For the latter case, the place cell activity becomes tightly bound to the external input, converging to the activity of a feedforward model for which the internal network drive has no spatial tuning (dashed black; Eq. 12). In contrast, the representation by Msum degrades uniformly throughout the learned track (blue). The sharp increase in error for some locations near the end of the track is due to activity bumps that drift away from the animal's location. Each data point shows the relative error between the desired activity (Eq. 9) and the equilibrium activity when the animal is tested at a newly acquired location (x = xn) or at an initially learned location (x = xinit) after iteration n of the track extension. Right, distal cells, which have no place field within 40 cm of the animal, receive no external input and are inactive. For Mopt, the recurrent excitation causes their equilibrium state (distal state) to approach a subthreshold value. C: after learning the entire track, the animal is tested at a recently learned location (xend) or at an initially learned location (xinit). Mopt accurately represents xend (dark red) despite the 2,000 m of track the animal has previously learned. However, the animal has effectively forgotten the beginning of the track, because the corresponding equilibrium state (light red; Eq. 13) approximates that of the feedforward model (dashed black; Eq. 12). Msum uniformly represents the entire track through a noisy activity bump (blue), which may drift away from the animal's location.

where I is given by Eq. 8. The two locations are well-separated and recently learned. For the small megamap, x1 = [1 m, 1.5 m] and x2 = [2.2 m, 1.5 m]. For the large megamap, x1 = [498 m, 0.35 m] and x2 = [499.2 m, 0.35 m].

For the simulations presented in Fig. 12, the megamaps are constructed by gradually extending a square environment, as presented in Fig. 9. A proportion (p1) of elements n of the input vector are randomly chosen to represent x1 via In(x1; 0.3), whereas the remaining elements are set to In(x2; 0.3). For each of 50 trials for each value of p1, x1 and x2 are randomly set to be at least 60 cm apart and 25 cm from an outer boundary of the environment. For the bottom two rows of plots, the means over the 50 trials are computed by considering only place cells that are active in the respective desired activity bump (Eq. 9). The proportion of active cells is the fraction of these cells with a nonzero firing rate in the equilibrium activity bump. The relative error of the activity of these cells compared with their desired activity at the respective location is also shown. When p1 = 0.5, trials are split into those for which the local error in the activity bump over x1 is less than that over x2, and vice versa. The initial state is random for all trials.

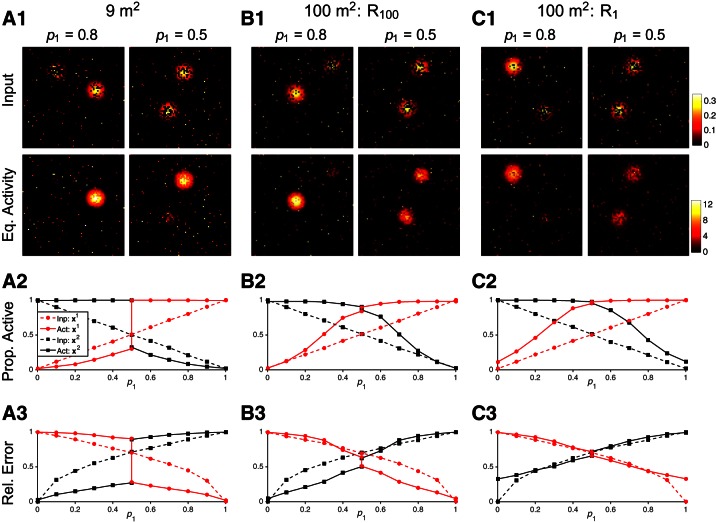

Fig. 12.

Pattern completion and pattern separation in large square environments. For each trial, a proportion (p1) of place cells are driven by the external input I(x1; 0.3) and the remaining cells are driven by I(x2; 0.3) (Eq. 8). Given these competing, incomplete inputs, the megamap performs pattern completion over at least 1 of the 2 locations regardless of the operational mode. A: the megamap representing 9 m2 operates in the WTA mode. A1: when 80% of place cells receive input for x1, a single equilibrium activity (Eq. activity) bump fully represents x1 (left). When exactly half of the place cells receive input for each location, the megamap still fully represents one location (right). A2: when p1 > 0.5, pattern completion is apparent in that all place cells representing x1 are active in the equilibrium activity bump (solid red), even though only a fraction of these cells receive input (dashed red). The activity bump over x2 is suppressed, because only a fraction of place cells receiving input for x2 are active (black). The opposite is true when p1 < 0.5. When p1 = 0.5, the activity bump represents either x1 or x2, but never both. A3: when p1 > 0.5, the error in place cell activity encoding x1 (solid red) is less than the error in the inputs (dashed red), a characteristic of pattern completion. When p1 < 0.5, the error in place cell activity is greater than the error in the inputs, a characteristic of pattern separation. B and C: the megamap representing 100 m2 operates in the combinatorial mode. B: when x1 and x2 are both in the last subregion learned (R100), the megamap performs pattern completion over both locations when 0.2 ≤ p1 ≤ 0.8, because more place cells representing each location are active than receive input. Both locations are strongly represented through 2 co-active equilibrium activity bumps when 0.4 ≤ p1 ≤ 0.6, because the error in place cell activity is less than the error in the inputs at both locations. Pattern separation is not as apparent as in the WTA mode since the weaker activity bump closely follows the external input. C: when x1 and x2 are both in the first subregion learned (R1), the representation of any location is weaker. Although the megamap still performs pattern completion at all locations, the error in place cell activity closely follows the error in the input. Prop. active, proportion of active cells; Rel. error, relative error.

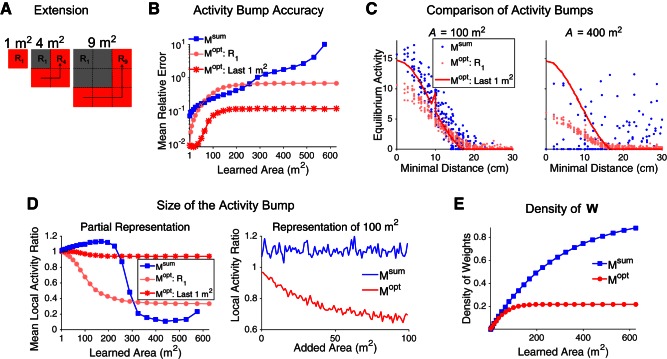

Fig. 9.

Gradual extension of the megamap representing a square environment. A: at each iteration n, the megamap grows from (n − 1)2 m2 to n2 m2 by sequential addition of (2n − 1) subregions of size 1 × 1 m2, from the bottom left to the top right, by Eq. 10 (Mopt) or Eq. 11 (Msum). B: after each iteration n, the equilibrium activity bump is compared with the desired activity (Eq. 9) when the animal is placed at 50 random locations taken throughout the environment for Msum (blue) or within the last subregion learned (Rn2; dark red) or first subregion learned (R1, light red) for Mopt. As the megamap grows, Mopt accurately learns new subregions (dark red) at the cost of previously learned locations (light red). Msum becomes increasingly inaccurate until reaching a breakdown point (∼300 m2) beyond which it can no longer represent any location (blue). C, left: after learning 100 m2, both Msum and Mopt encode any location in the environment through the equilibrium activity bump. Right, after learning 400 m2, Msum no longer has an equilibrium activity bump anywhere in the environment. The amplitude of the equilibrium activity bump of Mopt depends on how recently the location was learned. D: for Mopt, the animal gradually forgets locations previously learned as the megamap grows. Mopt always forms a strong equilibrium activity bump at newly acquired locations (red), but the equilibrium activity bump representing R1 decreases in size as the learned area increases (left; light red). For example, after learning 100 m2, the size of the equilibrium activity bump of Mopt representing any location decreases as a function of the area added to the megamap since learning that location (right). On the other hand, Msum uniformly represents the entire learned region (right; blue). When the learned area becomes sufficiently large, Msum has no equilibrium activity bump, resulting in very little activity near the animal's location (left; blue). The local activity ratio is the ratio of net activity of the equilibrium and desired (Eq. 9) activity bumps, where the activity is summed over all cells with a place field within 10 cm of the animal. E: the density of recurrent connections increases according to Eq. 19 for Msum but approaches 0.25 for Mopt. For all plots, equilibrium activity bumps are computed by setting the input by Eq. 8 with Ipeak = 0.3.

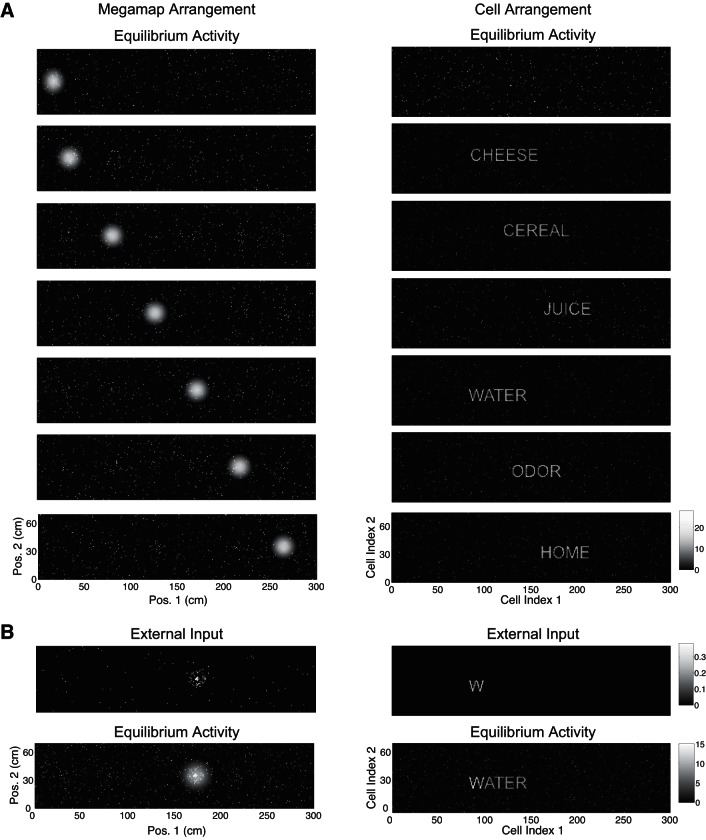

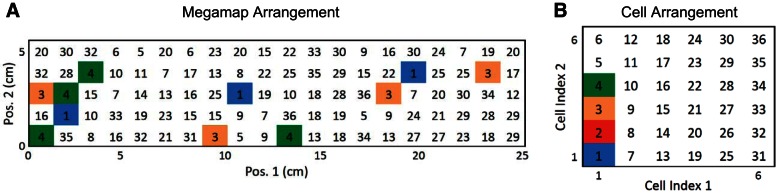

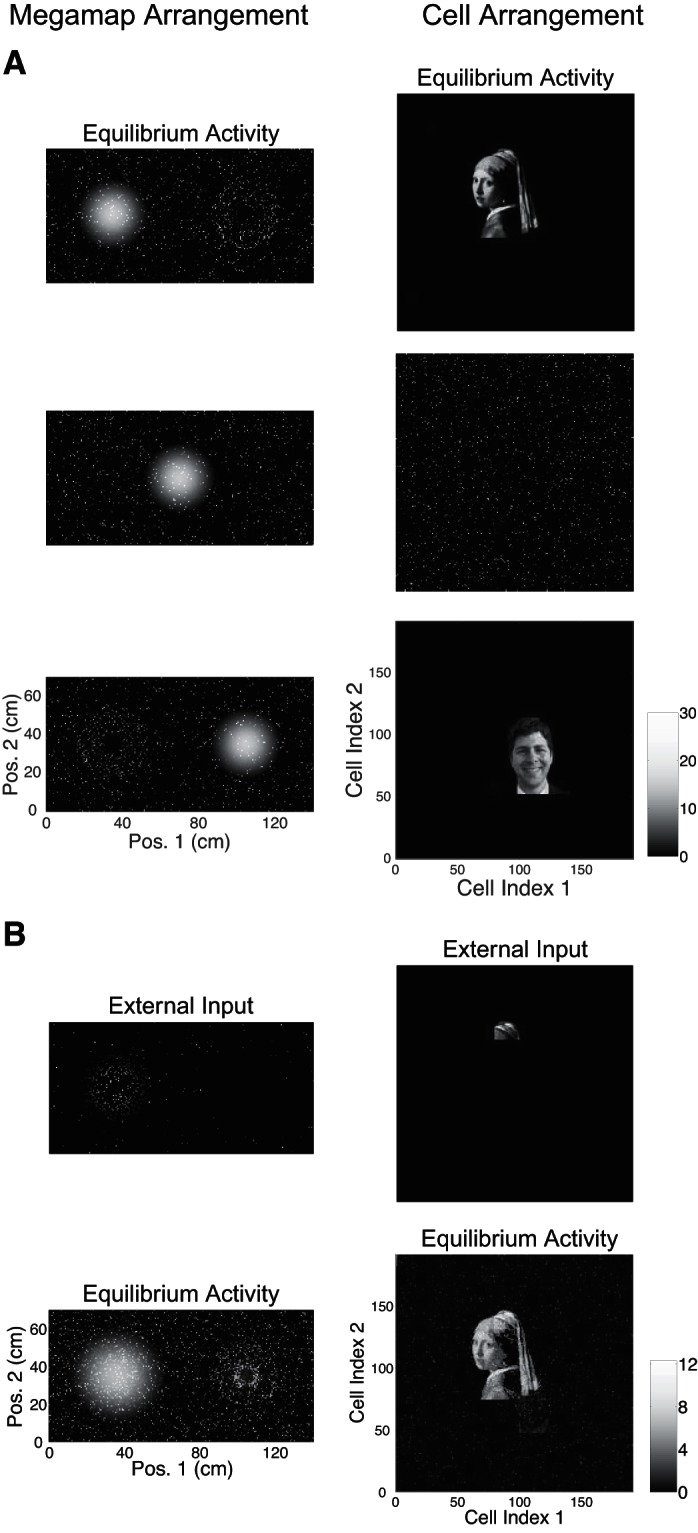

Incorporation of nonspatial information.

When the animal is learning a new environment, hypothetical cells representing a salient nonspatial cue should be active when the animal is near the cue. This subset of active cells would then be incorporated into the megamap through associative learning, forming place fields at the corresponding location of the cue. We construct the megamap to model the effective result of this associative learning. We then demonstrate that the megamap can sustain a continuous representation of space while simultaneously exhibiting activity patterns representing nonspatial cues at the corresponding discrete set of locations.

We initialize the megamap by setting place field centers over the entire region according to the Poisson distribution. For the cell arrangement, each place cell is assigned to a pixel within a rectangular grid of dimension a1 × a2, where a1a2 = N, the number of cells in the population (including cells with no place fields). To prepare each image (nonspatial pattern) to be embedded, we convert the original image to black and white, crop it to dimension a1 × a2, and truncate it so the number of nonzero pixels is no greater than the number of co-active cells in the desired activity bump (Eq. 9).

We then sequentially embed each image into the megamap at the given location x by reassigning proximal place fields, or fields whose center lies within 20 cm of x, to cells representing the image. This is done in such a manner that cells corresponding to a brighter pixel in the image have a place field closer to x, and the megamap retains the Poisson distribution of place fields near x. Explicitly, let Nimg be the number of cells with a nonzero value in the image representation. For n = 1, 2, …, Nimg, we reassign all proximal place fields of the cell with the nth highest desired activity at x (Eq. 9) to the cell with the nth brightest pixel in the image. For any remaining cell with a nonzero desired activity at x, we reassign its proximal place fields to a random cell whose value in the image representation is zero. This results in the appearance of scattered noise around the nonspatial activity pattern given the cell arrangement. After setting the place fields to embed each image, we simulate the animal learning the region by setting the recurrent weights according to Eq. 10.

We present two examples of the dual interpretation. For example 1, a1 = 300, a2 = 75, N = 22,500, and λ ≈ 0.44 m−2. A place field is centered each 1 cm2 within the 300 × 70-cm2 region. For example 2, a1 = 191, a2 = 191, N = 36,481, and λ ≈ 0.56 m−2. A place field is centered each 0.49 cm2 within the 140 × 70-cm2 region. To generate the movies, we simulate the animal moving from left to right across the region with a velocity of 10 cm/s and set the external input by Eq. 8 with Ipeak = 0.3. Every 0.1 cm, we visualize the spatial and nonspatial information carried by the network by plotting the network activity vector according to the megamap arrangement and cell arrangement, respectively. To generate the figures, we compute the equilibrium activity when the animal is at the given location, setting the external input by Eq. 8 with Ipeak = 0.3.

Distinguishing features of the partitioned attractor map and the megamap.

We contrast the partitioned attractor map to the megamap for a square environment and for a long linear track (Fig. 16). For the square 15 × 15-m2 environment, one place field is centered each 4 cm2 for both the megamap and the partitioned map. Place fields are set according to the Poisson distribution (Eq. 1) with λ = −ln(0.8) m−2. We model an ensemble of N = 104/(4λ) = 11,204 place cells to obtain a pixel size of 4 cm2. To construct each 150 × 150-cm2 chart of the partitioned map, (1502/4) = 5,625 of the 11,204 place cells are randomly selected to have a single place field. All place fields are centered on a random grid vertex.

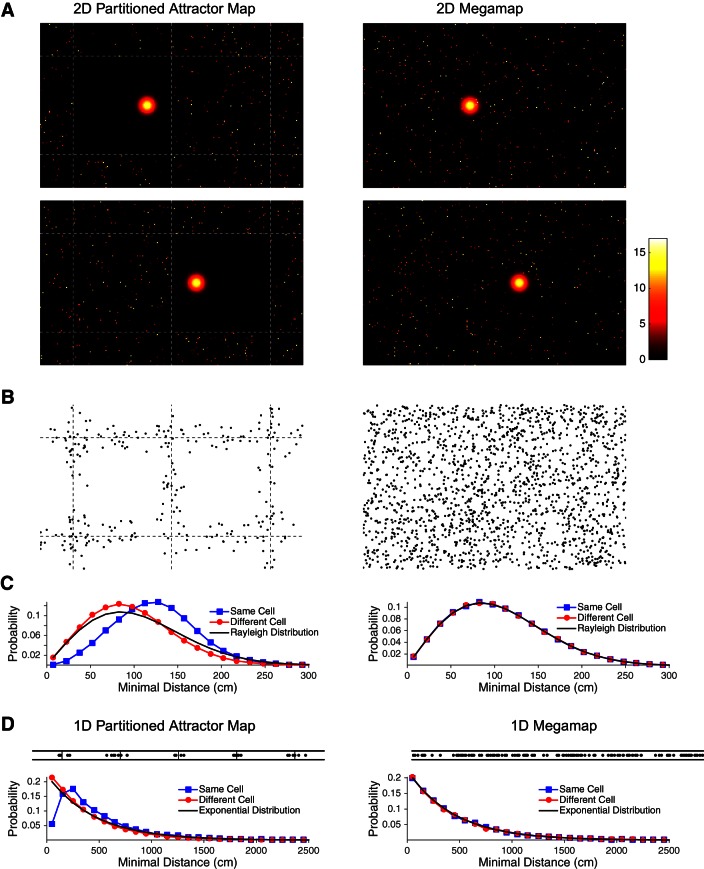

Fig. 16.

Distinguishing features of the partitioned attractor map (Fig. 1B) and the megamap (Fig. 1C). A–C: the ensemble represents a 15 × 15-m2 region (the central 16-m2 subregion is shown in A and B). Each place cell has at most 1 place field in each 1.5 × 1.5-m2 chart of the partitioned region (left), and place fields follow the Poisson distribution over the megamap (right). A: the models differ in the scattered activity surrounding the localized activity bump, where the desired activity (Eq. 9, in Hz) of each place cell is plotted redundantly at all of its place field centers for both models. A place cell participating in the activity bump is excluded from having an additional place field in the same chart of the partitioned attractor map, creating a single clean chart analogous to the active chart predicted when the single-peaked attractor model is applied to multiple environments (Samsonovich and McNaughton 1997; Samsonovich 1998). A neighboring chart becomes clean when the animal moves 60 cm to the right (bottom). In contrast, activity is scattered throughout the megamap, since a place cell representing the animal's location may have additional place fields anywhere in the region. B: place fields that are close to their nearest neighbors cluster near the artificial boundaries between subregions of the partitioned attractor map, but they are distributed evenly throughout the megamap. Each data point indicates the center of a place field that is within 30 cm of a second place field of the same cell. C: the Poisson distribution of place fields over the megamap implies that the distance to nearest neighbor follows the Rayleigh distribution (black curve; Eq. 15), whether the nearest neighbor is taken from place fields of the same cell (blue) or a single random second cell (red). The 2 distributions differ for the partitioned attractor map because of its exclusion principle. D: the ensemble represents a 100-m × 30-cm linear track, which may be approximated as a 1-dimensional environment. Top, similar to B, place fields within 50 cm of their nearest neighbor from the same cell cluster near the artificial boundaries of the partitioned attractor map but are evenly distributed throughout the megamap. Bottom, similar to C, the distribution of distances to nearest neighbor for the partitioned attractor differ whether considering the same cell or a different cell, but the distances follow the exponential distribution (Eq. 16) over the 1-dimensional megamap.

For the 100-m × 30-cm linear track, one place field is centered each 0.5 cm for both the megamap and the partitioned map. Approximating the track as a one-dimensional environment, the Poisson distribution (Eq. 1) becomes

where λ1 = λ(w + d) given the track width (w = 0.3 m) and place field diameter (d = 0.2 m). We model an ensemble of N = 102/(0.5 λ1) = 1,793 place cells to obtain a pixel size of 0.5 cm. To construct the partitioned track, (200/0.5) = 400 of the 1,793 place cells are randomly selected to represent each 200-cm segment of the linear track. All place fields are centered 15 cm from the top and bottom of the track.

The Poisson distribution implies that, in a two-dimensional environment, the distance from the center of a given place field to that of the closest place field of any single place cell (whether it is the same cell or a different cell) with place field density λ follows the Rayleigh distribution, because its probability density function is given by

| (15) |

To see this, note that the probability that this distance to nearest neighbor is between x and x+δ is given by

or the probability that the cell has no place fields in a circle of radius x and has at least one place field in a circle of radius x+δ. Equation 15 follows from computing limδ→0(1/δ)P(x, x+δ). On a one-dimensional track, the distance to nearest neighbor instead obeys the exponential distribution,

| (16) |

We compare these probability distributions to distributions obtained numerically for the partitioned attractor map and megamap. For a given place field, we compute either the minimal distance to a second place field of the same cell or the minimal distance to a place field of a single random second cell. This is done for all place fields at least 250 cm from all edges of the two-dimensional region or 250 cm from the beginning and end of the track to avoid biasing the data for larger distances.

RESULTS

Flexible representation of a large space.

We begin by examining the ideal place cell activity in a large space without yet considering its stability or how it may be generated by plausible neural mechanisms. In particular, we describe the flexible representation on which the megamap is built and compare its capacity and spatial resolution to those of the single-peaked representation on which the standard attractor chart is built.

The flexible representation assumes that a place cell may exhibit multiple, irregularly spaced place fields within a large environment, and there is no spatial correlation among place fields of different cells. We use the homogeneous Poisson distribution of place fields (Eq. 1; Fig. 1D) as a benchmark model since it maximizes the flexibility of the megamap and provides a reasonable approximation to experimental data (Fig. 2, Table 1; see discussion). Accordingly, the most likely number of place fields for a cell in a region of area A is given by the integer part of A/λ, where λ denotes the cell's place field density. Other plausible place field distributions, such as the exponential distribution (Maurer et al. 2006) or the Poisson-gamma distribution (Rich et al. 2014), would not affect the qualitative results of this study.

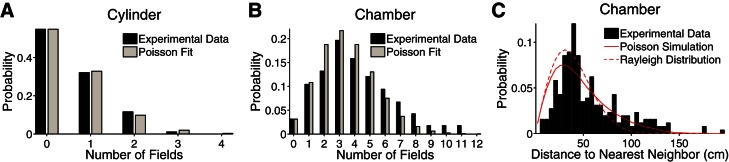

Fig. 2.

Poisson fit to experimental data from a moderately large open-field environment (Fenton et al. 2008). The data was recorded from place cells in the CA1 as a rat explored a cylinder with a 68-cm diameter and a chamber whose floor had dimensions 150 × 140 cm2. Place cells had multiple, irregularly spaced place fields and showed global remapping between environments. We fit the experimental data with the Poisson distribution (Eq. 1), using the respective areas for the cylinder and chamber floor and the place field density, λ = 1.65 m−2. This parameter implies that ∼50% of CA1 place cells would be silent in a 0.4-m2 region, which is consistent with the 30–50% range found experimentally (Guzowski et al. 1999; Vazdarjanova and Guzowski 2004; Wilson and McNaughton 1993). A and B: the Poisson distribution captures the general trend of the data. Since the number of silent cells was not measured in the experiment, we extrapolated the probability that a cell has no fields from the experimental data using the Poisson distribution with λ = 1.65 m−2. C: the model also predicts the distance from one place field to the closest place field of the same cell, which is approximated by the Rayleigh distribution (dashed red curve; Eq. 15). To take into account the close boundaries of the chamber, which lower the probability of small distances, the solid red curve was generated from numerical simulations in which the place fields of 150,000 cells were distributed throughout the chamber floor according to the Poisson distribution. Additional statistics are given in Table 1.

Table 1.

Poisson fit to experimental data from a moderately large open-field environment

| Apparatus | Source | Pact(1) | Fields per Cell |

|---|---|---|---|

| Cylinder | Experimental data | 0.72 | 1.3 ± 0.03 |

| Poisson fit | 0.731 | 1.326 | |

| Relative error | 0.02 | 0.02 | |

| Chamber floor | Experimental data | 0.11 | 3.4 ± 0.11 |

| Poisson fit | 0.112 | 3.569 | |

| Relative error | 0.02 | 0.05 |

Data are Poisson fits to experimental data from a moderately large open-field environment (Fenton et al. 2008). In addition to the statistical agreement shown in Fig. 2, the Poisson distribution [Eq. 1 with λ = 1.65m−2 and A = 0.36 m2 (cylinder) or 2.1 m2 (chamber floor)] predicts the probability that an active cell (cell with at least one place field in the given apparatus) has exactly one place field within the cylinder or chamber floor [Pact(1)]. It also predicts the average number of place fields per active cell in both enclosures.

The flexible representation of the megamap is ideal for uniquely representing a large environment because place cells are used independently of one another, making it a combinatorial code. We estimate an ensemble's representational capacity by assuming a downstream neural network can distinguish between two activity patterns if they differ in their respective subset of co-active cells. Since the spatial relation among cells on the standard attractor chart is rigid, a chart with N place cells can have only N unique activity patterns. If place cells flexibly recombine, however, a population of N place cells supports

| (17) |

unique activity patterns by Stirling's approximation, where n is the number of co-active cells in each activity pattern, p = n/N, c1 = p−p(1 − p)−(1 − p), and c2 = 2πp(1 − p). This crude estimate implies that 10,000 cells with a flexible representation can have 6 × 10241 activity patterns, assuming 1% of cells are co-active in each activity pattern. Hence, this relatively small population can theoretically encode the entire surface of the Earth, since assigning a unique activity pattern to each square millimeter requires only ∼1019 patterns. Of course, obtaining this representational capacity depends on the hippocampal ability to orthogonalize its representations of new locations, likely mediated by a pattern separation process in the dentate gyrus (Aimone et al. 2011; Cerasti and Treves 2010; Leutgeb et al. 2007).

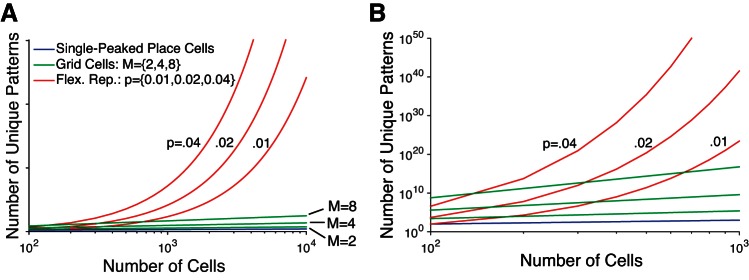

The flexible representation shares this large representational capacity with any combinatorial code for space, such as the memoryless Bayesian position estimation (Davidson et al. 2009). The upstream grid cell network also has a capacity far exceeding the single-peaked place code (Fiete et al. 2008; Mathis et al. 2012). In particular, a population of N grid cells divided among M modules has the representational capacity (computed analogously, see materials and methods),

| (18) |

Whereas Cgrid is exponential in the number of modules (M), Cflex is approximately exponential in the number of cells (N). This implies that Cflex ≫ Cgrid (Fig. 3), since N ≫ M ≈ 4 − 5 (Stensola et al. 2012). Although grid cells can represent the naturalistic environment of a rat, the hippocampus may require a larger representational capacity since place cells flexibly recombine to uniquely represent different environments (Alme et al. 2014), whereas grid cells within a module retain a rigid spacing, orientation, and spatial relation among phases in any environment (Yoon et al. 2013). Additionally, place cells must incorporate the nonspatial stimuli involved in episodic memories (Fortin et al. 2002).

Fig. 3.

Representational capacity. The flexible representation on which the megamap is built alleviates the inherent size limitations of the single-peaked code on which the standard attractor chart is built and even improves on the large representational capacity of the upstream grid cell network. A: the single-peaked place code enforces a rigid spatial relation among place fields within a single environment, constraining a population of N place cells to support at most N unique activity patterns (blue). The representational capacity of the grid cell population is similarly linear in the number of cells, although it is exponential in the number of modules, M (green; Eq. 18). The representational capacity of the flexible representation approaches an exponential growth in the number of cells (red; Eq. 17), where p denotes the proportion of co-active cells in an activity bump. B: replication of A for N ≤ 1,000.

The flexible representation of the megamap also retains a fine spatial resolution, as quantified by the least possible mean squared error between the animal's location and the location determined from the stochastic spikes of place cells (Fig. 4). Regardless of the distribution of place fields, the spatial resolution is inversely proportional to the place field density across the population, ρ (Eq. 4). Consequently, the megamap has the potential to represent arbitrarily large regions with the same spatial resolution observed in small standard enclosures since the density is constant (ρ = Nλ), but the spatial resolution of the single-peaked place code grows linearly with the environmental area, A, since ρ = N/A. Since the least mean squared error (Eq. 4) does not depend on the place field width, σ, the single-peaked place code becomes coarser in larger environments even if the ensemble has a scaled representation in which the place field size scales with the environmental area (Muller et al. 1987; O'Keefe and Burgess 1996).

Ideal megamap.

The representational capacity of the flexible representation would be more than sufficient if hippocampal place cells were a mere readout of the spatial signal carried by its afferents. Indeed, feedforward models have demonstrated how place fields may form from grid cells in the medial entorhinal cortex carrying self-motion information (Azizi et al. 2014; Fuhs and Touretzky 2006; Lyttle et al. 2013; McNaughton et al. 2006; Monaco and Abbott 2011; Savelli and Knierim 2010); boundary vector cells in the subiculum, parasubiculum, and medial entorhinal cortex carrying information relating to the boundaries of the environment (Burgess et al. 2000; Hartley et al. 2000; Lever et al. 2009); and granule cells in the dentate gyrus providing a strong, sparse signal (Cerasti and Treves 2010). However, place cells in the CA3 belong to a network with strong recurrent collaterals (Johnston and Amaral 1998) that likely processes its external inputs during memory retrieval, as evidenced by phenomena such as pattern completion in the CA3 (Harris et al. 2003; Lee et al. 2004; Neunuebel and Knierim 2014; Vazdarjanova and Guzowski 2004).

We now shift our focus to how an associative network may contribute to the formation and robustness of place cell activity. We adopt a standard firing rate model (Kali and Dayan 2000; Wilson and Cowan 1972) consisting of a network of place cells with recurrent excitation, global feedback inhibition, and external input (Eq. 6). For simplicity, we assume the external input carries an idealistic spatial signal (Eq. 8). The model could be generalized for more realistic external inputs, including input from the lateral entorhinal cortex (Deshmukh and Knierim 2011) or from any source mentioned above.

We construct the ideal megamap by arranging place cells on the megamap according to the flexible representation (Fig. 1C) and setting the strength of recurrent connections optimally to obtain the desired activity (Eq. 9) when the external input is spatially tuned (Eq. 8 with Ipeak = 0.3; see materials and methods). The resulting weights are correlated with the overlap of place fields (Fig. 5, C–E), consistent with associative plasticity. The overall network structure is a natural extension from a small environment, in which a place cell is connected to its single group of neighbors on the chart. In a large environment, a place cell is connected to its multiple sets of neighbors on the megamap (Fig. 5C). Within environments of less than ∼9 m2, the weights are approximated by the summation of Gaussian-like tuning curves (Eq. 11), analogous to the classic multichart model representing multiple environments. However, key differences emerge in larger environments (see Gradual extension of the megamap).

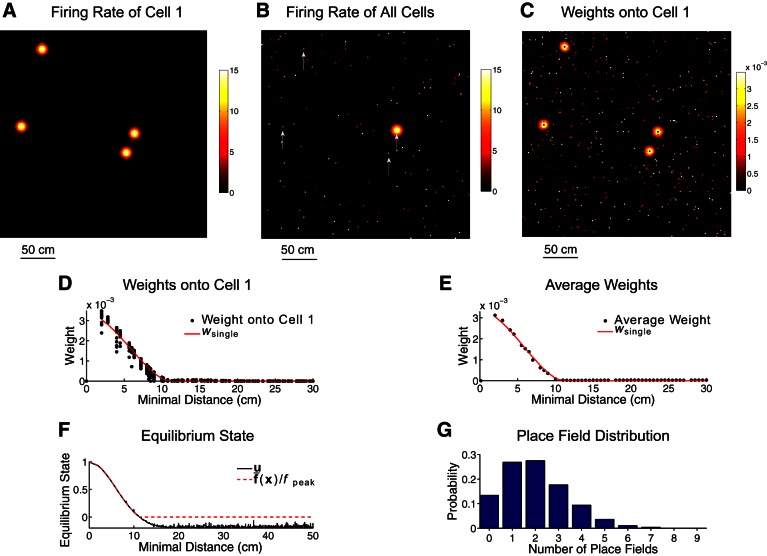

Fig. 5.

Structure of the ideal megamap. The external input is given by Eq. 8 with Ipeak = 0.3. A: any cell in the megamap may have multiple, irregularly spaced place fields, as illustrated by the firing rate (Hz) of cell 1 as the animal moves throughout the 9-m2 region. B: the megamap encodes space through a localized activity bump centered at the animal's location. The activity bump is visualized by plotting the equilibrium firing rate of each place cell redundantly at all of its place field centers. Each cell appears multiple times in the megamap, as illustrated in Fig. 1C. Scattered noise can be seen throughout the megamap, since cells like cell 1 (whose firing rate is indicated by arrows) have place fields near the animal and elsewhere in the region. C: recurrent weights onto cell 1 are visualized by plotting the weight from each presynaptic place cell redundantly at all of its place field centers. Cell 1 is driven by its 4 groups of neighbors on the megamap. D and E: the spatial profile of the recurrent weights is approximated by wsingle, the spatial profile were each cell to have a single place field (see materials and methods). The weight profile for cell 1 (D) is revealed by plotting the weight from each place cell onto cell 1 as a function of the minimal distance between the place field centers of the 2 cells. In E, this weight profile is averaged over all cells in the megamap. F: the network state vector is visualized by plotting the equilibrium state of each cell as a function of the minimal distance between its place field centers and the animal's location, x. The corresponding activity bump (B) approximates the desired activity (Eq. 9), providing a strong, stable signal for the animal's location. The subthreshold fluctuations in the state are due to the multipeaked structure of the recurrent weights. G: for the population of 11,204 place cells, 9,731 cells have at least one place field in the 9-m2 region with 2.3 ± 1.3 fields per cell.

The activity of an ensemble of place cells within a small environment is typically visualized by plotting the firing rate of each place cell at its single location on the chart (Fig. 1A), revealing a localized activity bump centered at the encoded location (Samsonovich and McNaughton 1997). In the case that a cell has multiple place fields, it is often plotted on the chart at the center of its largest place field (Cerasti and Treves 2013). A new convention is needed for the megamap since a cell may have many place fields of similar size. We visualize activity on the megamap by plotting the firing rate of each cell redundantly at all of its place field centers (Fig. 1C).

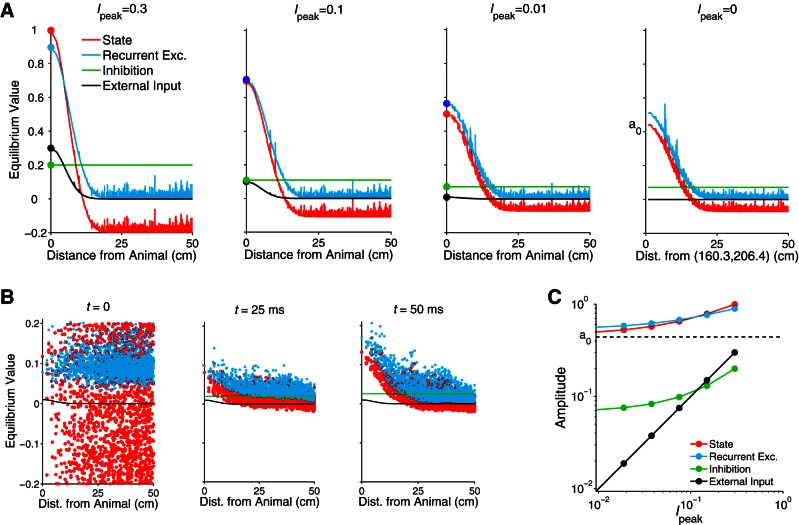

The network activity converges from any initial state to the desired activity (Eq. 9) when Ipeak = 0.3 (Fig. 5F). Although a given place cell now appears multiple times on the megamap, the ensemble of place cells encodes a location through a single localized activity bump, exactly as shown on the single-peaked chart (Fig. 5B). The difference is that scattered activity appears throughout the megamap since the firing rate of a given place cell is plotted redundantly (Fig. 5, A and B). With Ipeak = 0.3, the external input is about one-third of the strength of the recurrent excitation at the equilibrium state (Fig. 6A) and binds the activity bump to any location within the continuous two-dimensional space.

Fig. 6.

Relative influence of internal network drive and external input on place cell activity of the megamap. A: the equilibrium state maintains its spatial tuning as the external input weakens due to the increasingly dominant network drive via structured recurrent excitation among place cells in the megamap. According to Eq. 6, the equilibrium state (red) is the sum of the recurrent excitation (blue), global feedback inhibition (green), and external input (Eq. 8) with amplitude Ipeak (black). The equilibrium state of each cell roughly decays with the minimal distance between the cell's place field centers and the location encoded by the activity bump. This location is the animal's location when Ipeak ≥ 0.01 and depends on the random initial state otherwise. B: weak external input (Ipeak = 0.01 for this example) drives cells with place fields near the animal, providing an early spatial bias. The recurrent excitation initially has no spatial tuning since the initial state is random, but it gradually amplifies the spatial bias to create a localized activity bump centered at the animal's location. The equilibrium state is reached by 500 ms. Colors are as defined in A. The initial feedback inhibition [wIfI(0) ≈ 3] is not shown. C: the processing power of the network is revealed by the nonlinear decay in the amplitude of the equilibrium state as the input amplitude decays linearly. The state amplitude is bounded below by a0 (dashed line), which is indicated in A, far right. The amplitude of each term refers to the respective value for the cell with a place field at the animal's location, as indicated by the marked data points in A.

Megamap as an attractor.

A network of neurons is called an attractor network if its state always converges in time to a stable, low-dimensional manifold (attractor) when the external input is either absent or fixed. Rigorously, an attractor, A, is defined as a minimal closed set in the state space of a dynamical system such that any trajectory that starts in A stays in A, and A attracts all trajectories that start in an open set containing A (Amit 1989; Ermentrout and Terman 2010; Ivancevic and Ivancevic 2007; Strogatz 1994). The attractor is continuous if there is an infinitesimally small difference between attractor states, as might be expected of an attractor representing a continuous spatial environment.

Place cells on the megamap can be characterized as an attractor network since their activity converges from any initial state to a localized activity bump of approximately the same size and shape in the absence of external input (Fig. 6A, far right). Whereas the state space for N place cells is N-dimensional, the attractor of the megamap is contained in a two-dimensional space since any attractor state (stable equilibrium activity bump) is characterized by the location it encodes in the two-dimensional spatial environment. Strictly speaking, the megamap supports a discrete set of point attractors rather than the ideal continuous attractor. In the absence of external input, the activity bump drifts over the megamap until remaining fixed at one of a discrete number of preferred locations (Fig. 7A, far left).

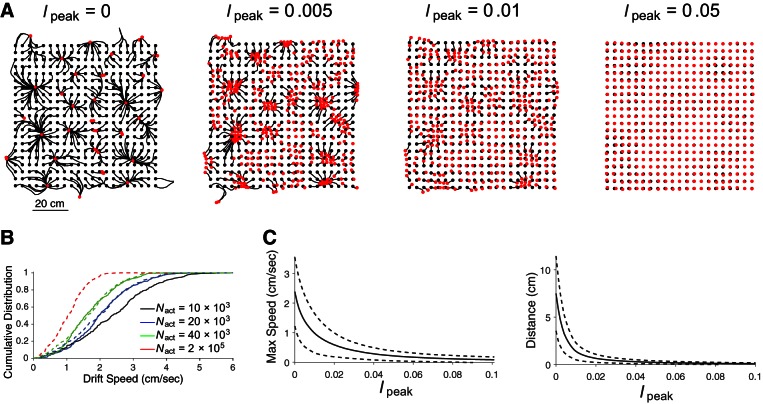

Fig. 7.

Binding of the activity bump to space. Relatively weak external input biases the activity bump for any location in the learned space, effectively rendering the megamap a quasi-continuous attractor. A: for each of 400 trials, the initial state is set to the equilibrium state given external input with an amplitude of 0.3 centered at the animal's location (black dot). When the external input amplitude is then reduced to the value indicated for Ipeak, the preexisting activity bump persists but slowly drifts (black curve) until remaining fixed at the location encoded by the equilibrium activity bump (red dot). In the absence of external input (far left), the activity bump drifts to one of a discrete set of point attractors. As Ipeak increases, the activity bump travels a shorter distance along a similar trajectory. B: increasing the number of place cells in the megamap, which increases the density of the population's place fields, slows the drift of the activity bump. The maximum drift speed when Ipeak = 0 (solid curves) appears to follow the cumulative distribution function (CDF), F(Nact) = F0(N0/Nact)0.27 (dashed curves), where Nact is the number of place cells with at least one place field, and F0 is the CDF for a network with Nact = N0 = 9,731.This empirical power law implies that if the attractor network were to include all place cells in the CA3 (Nact = 2 × 105), then the probability that the drift speed exceeds 2 cm/s would be 0.005 (dashed red curve). The CDF for each value of Nact was constructed using the maximum drift speed for each of the 400 trials with Ipeak = 0. The exact network sizes are Nact = 9,731 (black), Nact = 19,465 (blue), and Nact = 38,756 (green). These networks correspond to one place field each 4 cm2 (black), 2 cm2 (blue), and 1 cm2 (green). The drift speed was measured as the distance traveled each second. C: increasing the input magnitude decreases both the speed of the drift and the distance traveled from the animal's location. Both measures were averaged over the 400 trials for each value of Ipeak. Dashed curves show 1 standard deviation. Since the drift from any location becomes negligible given only a weak external input (Ipeak ≥ 0.05), the megamap encodes any location within the continuous space through a stable equilibrium activity bump. For A and C, Nact = 9,731.

The emergence of a discrete attractor is common in network models intended to support a continuous attractor. The recurrent weights among a continuous attractor network must be perfectly shift invariant, so introducing any random noise or heterogeneities to these weights reduces the continuum of attractor states to a discrete set of point attractors (Renart et al. 2003; Zhang 1996). This implies that the problem of drift is also expected for the classic multichart model, because the components of the recurrent weights needed for one chart appear as random noise from the view of another chart (Samsonovich and McNaughton 1997). Drifting to point attractors has also been shown explicitly in a two-dimensional attractor map obtained by learning (Cerasti and Treves 2013). The timescale of the drift (seconds) is about two orders of magnitude greater than the timescale of the intrinsic attractor dynamics (tens of milliseconds), or the timescale for a random activity pattern to collapse into a bump of stereotyped shape (Wu et al. 2008).

The megamap may be considered as a quasi-continuous attractor map for the following reasons. First, as mentioned above, the megamap approximates a continuous attractor at the timescale of intrinsic attractor dynamics. The slow drift of the activity bump becomes even slower for larger networks (Fig. 7B) (Kali and Dayan 2000; Renart et al. 2003; Zhang 1996). In addition, the drift could potentially be alleviated by introducing other dynamical processes at slower timescales, such as homeostatic synaptic scaling (Renart et al. 2003), enhancing the firing of active cells (Stringer et al. 2002), and including individual neurons with inherent persistent activity (Egorov et al. 2002; Jochems and Yoshida 2013; Kulkarni et al. 2011; Winograd et al. 2008; Yoshida and Hasselmo 2009). Finally, the drift occurs when there is no external input, whereas the hippocampus is unlikely to ever be devoid of external input. Rather, it is reasonable to expect that the overall effect of the rich array of multimodal hippocampal inputs about both the environment and self-motion vary uniquely throughout a naturalistic environment. External input anchors the activity bump to any location on the megamap, even when the external input is very weak, noisy, or incomplete.

Role of the external input.

The megamap can account for how the CA3 may robustly retrieve representations of large spaces. When an animal is asked to retrieve a memory of a familiar environment, hippocampal activity is likely driven by both a strong recurrent network input and an external input carrying various types of sensory information about the environment. Given any initial state, the structured recurrent network drive largely maintains the equilibrium activity bump on the megamap as the external input weakens. A relatively weak external input provides a spatial bias, binding the activity bump to the animal's location (Fig. 6). The network responds similarly to a noisy external input due to its global inhibition (data not shown). See Pattern separation and pattern completion for examples of the network's response to an incomplete input.

We next examine the cognitive map generated by the set of all stable equilibrium activity bumps when the external input has the form of Eq. 8 with a fixed Ipeak ≥ 0. The cognitive map (megamap) converges from a discrete to a continuous map as the external input strengthens, binding the activity bump to space (Fig. 7, A and C). When Ipeak = 0.3, the continuity of the stable megamap is largely inherited from the continuity of its strong external input. The processing power of the hippocampal network is revealed in that relatively weak location-specific input is sufficient to anchor the activity bump to any location within the continuous learned region. For example, the activity bump drifts only 0.27 ± 0.20 cm when Ipeak = 0.05 (Fig. 7A). Although the external input has just 8% of the amplitude of the equilibrium recurrent excitation (Fig. 6C), it provides a sufficient bias for the animal's location to overcome the drift over the megamap and bind the activity bump to any location in the large environment.

In summary, the megamap consists of a continuum of point attractors, each corresponding to a different external input centered at a given location in the environment. The megamap is a continuous cognitive map with attractor dynamics in the sense that it can denoise or pattern complete a corrupted input at any location within the large, continuous environment. Although these properties are expected of any standard attractor network representing small environments, the megamap theory explains how this stability may be extended naturally to large environments.

Gradual extension of the megamap.

Since the megamap does not require the animal to partition a large environment, it is natural to gradually extend the megamap as the animal explores novel subregions. We simulate an animal iteratively learning a track 2,000 × 0.7 m2, as illustrated in Fig. 8A. We gradually extend the megamap to incorporate a contiguous novel subregion by setting new place field centers according to the Poisson distribution and updating the recurrent weights to model the animal learning its local surroundings (the surrounding 0.7-m2 subregion). Since the linear track may be considered as approximately one-dimensional, we also iteratively enlarge a square environment to test the megamap's representation of large two-dimensional spaces (Fig. 9A). The megamap model in its current form is insensitive to the shape of the environment, so the results for the linear track and square environment are qualitatively the same. The key difference between the two simulations is that place fields are set to be slightly larger for the square environment (σu = 8.97 cm rather than 5.94 cm, leading to a place field radius of 17 cm rather than 11 cm). Both simulations are consistent with the experimental observation that when a rat initially explores a novel subregion, new place fields tend to appear immediately and grow more robust in time (Rich et al. 2014; Wilson and McNaughton 1993).

At each iteration, we extend the megamap Mopt by updating the weights according to Eq. 10. Mopt continuously unfolds as the animal explores novel terrains. The simulated animal accurately learns new regions regardless of how much information is stored in the recurrent weights (Figs. 8, B and C, and 9, B and C, dark red). However, locations learned in the distant past and never reinforced are gradually forgotten as the corresponding recurrent hippocampal input loses its spatial tuning (Figs. 8, B and C, and 9, B and C, light red). Existing connections are pruned at each iteration so that the megamap accurately represents the local subregion, resulting in a gradual decrease in the size of a learned activity bump as more area is added (Fig. 9D). As shown for the linear track, the equilibrium activity bump representing xinit converges to that of a feedforward model for which the internal network drive has no spatial tuning (Eq. 12). The corresponding activity bump is largely controlled by the external input and thus is not robust to a degraded input. The robustness through recurrent excitation would be fully restored were the animal to revisit these locations.

In our simulations, the megamap accurately represents ∼350 m of the track (140 m2 excluding the 15 cm boundaries of the learning region) and 100 m2 of the square environment before the relative error in the equilibrium activity bump at any location reaches 0.35. The large subregion accurately represented by Mopt continuously shifts along with the animal as it learns the environment. Hence, this relatively small network of ∼11,500 place cells can accurately represent an environment comparable in size to the foraging range of a rat (Davis 1953; Innes and Skipworth 1983; Lambert et al. 2008; Taylor 1978), regardless of past experience.

We contrast these results with those found given Msum, a megamap constructed through the summation of Gaussian-like tuning curves (Eq. 11). This simple model is commonly used to construct attractor networks representing multiple environments (Rolls et al. 2002; Samsonovich and McNaughton 1997; Solstad et al. 2014; Stringer et al. 2004) and approximates basic Hebbian learning in small environments (Cerasti and Treves 2013; Kali and Dayan 2000). Whereas Msum and Mopt behave similarly in relatively small environments, differences emerge as the memory load increases. Regardless of the animal's location, the noise in the equilibrium activity of Msum grows as the learned subregion grows, eventually reaching a breakdown point at which the network can no longer remember old places or learn new places. This breakdown occurs because the network uniformly represents the entire track, attempting to maintain the memory of places learned in the distant past and never reinforced (Figs. 8 and 9; blue).