Abstract

Background/Aims

We developed a new endoscopic biopsy training simulator and determined its efficacy for improving the endoscopic biopsy skills of beginners.

Methods

This biopsy simulator, which presents seven biopsy sites, was constructed from readily available materials. We enrolled 40 participants: 14 residents, 11 first-year clinical fellows, 10 second-year clinical fellows, and five staff members. We recorded the simulation completion time for all participants and then assessed the simulator with a questionnaire scored on a 7-point Likert scale.

Results

The mean completion times over the five trials were 417.7±138.8, 145.2±31.5, 112.7±21.9, and 90.5±20.0 seconds for the residents, first-year clinical fellows, second-year clinical fellows, and staff members, respectively. Less experienced endoscopists rated the simulator as more useful for improving their biopsy technique (6.8±0.4 in the resident group and 5.7±1.0 in the first-year clinical fellow group). The staff group rated the realism of endoscopic handling in the simulator at 6.4±0.5.

Conclusions

This new, easy-to-manufacture endoscopic biopsy simulator is useful for biopsy training for beginner endoscopists and shows good efficacy and realism.

Keywords: Endoscopy, Training, Simulator, Biopsy, Education

INTRODUCTION

Esophagogastroduodenoscopy (EGD) is an excellent screening and treatment modality for various diseases. As endoscopic examinations become more frequent, adverse events are also becoming more common; reported rates are 0.95% for screening colonoscopy, 1.07% for EGD, and as high as 15% for selected therapeutic endoscopic procedures.1–3 However, there is no standard methodology for training endoscopists, and endoscopy is usually learned through supervised hands-on training with patients in the clinical setting.4

Traditional endoscopic training poses potential risks to patients and depends on their willingness to participate.5 Various endoscopic simulators are therefore used in clinical training,6,7 but they have limitations. Training beginners in endoscopy can be thought of as involving two areas: training in the entire examination and training in each individual procedural step, such as insertion, scope manipulation, biopsy, and polypectomy. Widely used teaching systems, such as virtual or computerized simulators, usually cover the entire procedure, from scope insertion into the esophagus to biopsy of the lesion. However, these simulators are extremely expensive and train only some techniques.8–10 To train individual steps and avoid this high cost, various low-cost simulators have been developed for beginners.11,12 Although biopsy technique is fundamental to correct diagnosis and to reducing procedure time, especially for beginners, few simulators are dedicated to improving biopsy skill.

Therefore, we developed an easy-to-manufacture simulator from common, relatively inexpensive materials. We investigated the efficacy and realism of this endoscopic biopsy training simulator in trainees with little or no upper endoscopy experience and compared their results with those of more experienced endoscopists and experts.

MATERIALS AND METHODS

1. Participants

Between March 2014 and December 2014, 40 participants working at Asan Medical Center, Seoul, Korea, were enrolled in this study. Of these, 14 were residents in the Department of Internal Medicine with no experience of EGD or colonoscopy; 11 were first-year clinical fellows in the Department of Gastroenterology who had performed a median of 711 EGDs (interquartile range [IQR], 158.0 to 820.0) and 81 colonoscopies (IQR, 52.0 to 95.0); 10 were second-year clinical fellows in the Department of Gastroenterology who had performed a median of 1,563 EGDs (IQR, 1,478.3 to 1,963.3) and 951 colonoscopies (IQR, 890.3 to 1,004.8); and five were staff members of the Department of Gastroenterology who had performed a median of 20,339 EGDs (IQR, 9,963.0 to 26,068.5) and 1,838 colonoscopies (IQR, 1,533.5 to 4,989.5). All residents attended a lecture on endoscopic technique for trainee endoscopists at our center. All participants were taught how to handle the biopsy simulator before testing. This study was approved by the Institutional Review Board of Asan Medical Center.

2. Construction of the biopsy simulator

This simulator was designed to allow adequate maneuverability of the endoscope within the lumen of a polyvinylchloride (PVC) hose (3.15-cm outer diameter, 40-cm length) and to recreate seven biopsy sites using the male halves of nickel snap fasteners (7-mm diameter). The interior of the simulator and its schematic are shown in Fig. 1. The main box was a polypropylene food container (210×140×80 mm) with a lid, to which a PVC hose was connected for passage of the scope shaft. To minimize friction between the scope shaft and the PVC hose during simulation, a silk sheet was placed inside the hose. One snap fastener was attached at each of the seven biopsy sites, and a PVC bottle cap was placed at the bottom of the box to receive the removed snap fasteners. The overall view and magnified views of each part are shown in Fig. 2. Table 1 lists all materials used to construct the biopsy simulator; most can be obtained without difficulty.

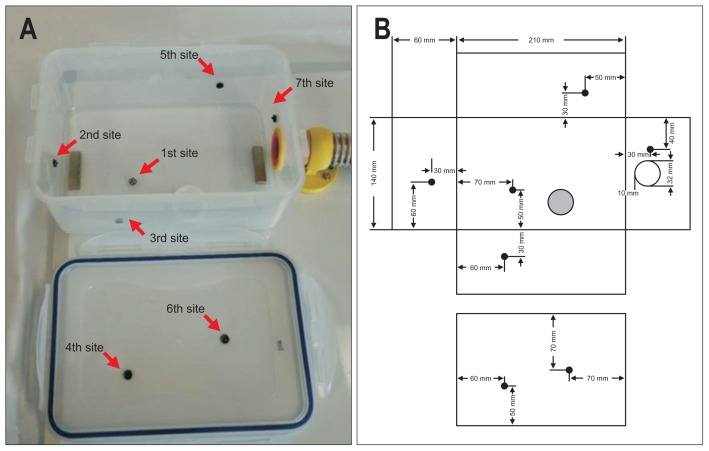

Fig. 1.

View of the interior of the container without its lid and a schematic diagram. (A) The photograph shows the interior of the food container without its lid. (B) The design schematic. The numbers are in order of biopsy progression and indicate the following: the greater curvature (GC)/anterior wall (AW) of the lower body (LB) (1st site, arrow), the AW of the antrum (2nd site, arrow), the AW of the LB (3rd site, arrow), the lesser curvature (LC)/posterior wall (PW) of the LB (4th site, arrow), the PW of the mid-body (MB) (5th site, arrow), the LC of the MB (6th site, arrow), and the cardia (7th site, arrow). The corresponding locations of the biopsy sites (●), polyvinylchloride (PVC) bottle cap (gray spot), and the hole for the scope shaft (○) are shown.

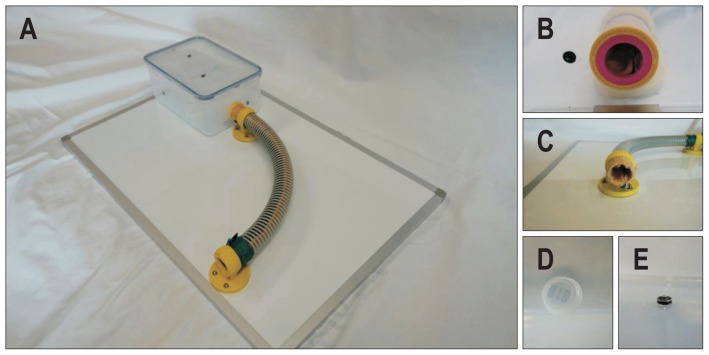

Fig. 2.

View of the completed biopsy simulator and magnified views of its components. (A) View of the completed biopsy simulator. (B) Interior view of the hole in the container wall for the passage of the scope shaft. (C) View of the distal end of the polyvinylchloride (PVC) hose with the container removed. Also visible is the silk sheet fastened by the bracket and hose. (D) View of the PVC bottle cap. (E) View of the small round magnetic foam tape with a snap fastener in situ in one of the seven biopsy sites.

Table 1.

List of Materials Required to Assemble the Biopsy Simulator

| Material |
|---|
| Polypropylene food container (210×140×80 mm) |
| Male halves of 7 nickel snap fasteners (7-mm diameter) |
| Polyvinylchloride hose (3.15-cm outer diameter and 40-cm length) |
| Magnetic foam tape (1.7-mm thickness) |
| Two pipe brackets (3.2-cm diameter) |
| Six steel M4 hex bolts and nuts of 2-cm length to fasten the pipe bracket to the white board |
| Magnetic white board (60×40 cm) |
| Silk sheet inside the hose to minimize friction between the scope shaft and the PVC hose wall |
| Polyvinylchloride bottle cap of appropriate size |
| Four neodymium magnets (4×1×0.3 cm) to fasten the container to the white board |

3. Biopsy simulator training methods

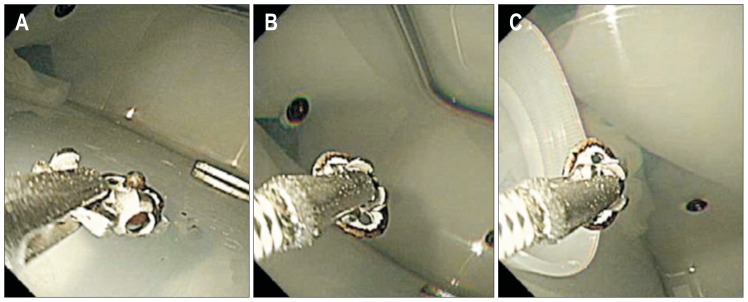

We recorded each participant's total completion time from the start to the end of the training session, as well as the time taken at each of the seven biopsy sites, which were designed as follows: the greater curvature (GC)/anterior wall (AW) of the lower body (LB) (first site), the AW of the antrum (second site), the AW of the LB (third site), the lesser curvature (LC)/posterior wall (PW) of the LB (fourth site), the PW of the mid-body (MB) (fifth site), the LC of the MB (sixth site), and the cardia (seventh site) (Fig. 1). The training method was as follows: (1) the scope was inserted into the box through the PVC hose; (2) the endoscopist, assisted by other participants, grasped and detached the snap fastener at each biopsy site with biopsy forceps and moved it to the PVC bottle cap for collection (Fig. 3).

Fig. 3.

Typical sequence of the maneuver for picking up the snap fastener with the biopsy forceps and putting it into the polyvinylchloride (PVC) bottle cap. (A) Approach to the snap fastener. (B) Grasping and detaching the snap fastener. (C) Moving the snap fastener to the PVC bottle cap.

The timer was started when the scope was inserted into the PVC hose (start time) and stopped when the last snap fastener was placed in the PVC bottle cap (end time). Each participant repeated the simulation until they had successfully completed the entire simulation (removal of all seven snap fasteners) five times. The target time was defined relative to the mean first-trial completion time of the staff group (122 seconds): 183 seconds (150%) for the resident group and 146 seconds (120%) for the fellow groups. The entire procedure was video recorded, and the recordings were reviewed to determine the total completion time and the completion time at each of the seven biopsy sites.
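The target-time rule above is simple arithmetic on the staff group's mean first-trial time. The Python sketch below reproduces it; the function name is hypothetical, and rounding to the nearest whole second is an assumption, since the text does not state a rounding rule (120% of 122 seconds is 146.4 seconds, reported as 146).

```python
STAFF_FIRST_TRIAL_MEAN_S = 122.0  # staff group, mean first-trial completion time (Table 2)


def target_time(reference_s: float, multiplier: float) -> int:
    """Target completion time = multiplier x reference time.

    Rounding to the nearest whole second is an assumption, not a rule
    stated in the study.
    """
    return round(reference_s * multiplier)


resident_target_s = target_time(STAFF_FIRST_TRIAL_MEAN_S, 1.50)  # 150% of 122 s -> 183 s
fellow_target_s = target_time(STAFF_FIRST_TRIAL_MEAN_S, 1.20)    # 120% of 122 s -> 146 s
print(resident_target_s, fellow_target_s)
```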

4. Questionnaire on simulator realism and usefulness in training

After completing the simulation, all participants answered a self-administered questionnaire rating the difficulty of each biopsy site on a 7-point Likert scale (1 to 7), where 1 indicates easy and 7 indicates difficult. Participants also answered 10 questions used in a previous study:12 (1) endoscopic handling in the simulator is realistic (this question was not answered by our resident group); (2) the simulator is easy to handle; (3) use of the simulator is suitable for endoscopic training; (4) working with the simulator improves my skills; (5–7) the following skills (introduction and positioning of the biopsy forceps, handling of the biopsy forceps, interaction with the assistant) can be trained with the simulator; (8) the simulator training improves concentration during biopsy procedure; (9) training with the simulator reduces the risk for patients; and (10) I recommend that the simulator be used in biopsy forceps training (this question was not answered by our resident group). Responses to these questions were scored on a 7-point Likert scale (1 to 7), where 1 indicates poor and 7 indicates excellent. In addition, the experienced endoscopists (i.e., all participants except the residents) graded the realism of each of the seven biopsy sites on a 7-point Likert scale (1 to 7), where 1 indicates poor and 7 indicates excellent.

5. Outcome measures

The primary outcome measure was the time required to complete the entire procedure on the newly developed biopsy simulator. We hypothesized that first-trial completion times would be longer in less experienced groups but would decline rapidly with repetition in these groups, especially in the resident group. The secondary outcome measures were whether (1) the simulator realistically represents a real stomach and (2) the simulator is helpful for, and would be recommended for, teaching endoscopic biopsy to trainees.

6. Statistical analysis

Continuous variables are reported as the median with IQR and/or as mean±standard deviation (SD). Categorical variables are expressed as relative frequencies. Depending on the distribution, the two-sample t-test or the Wilcoxon signed-rank test was used to compare continuous variables, and the chi-square test or Fisher exact test (when expected counts were small) was used to compare categorical variables. Correlations between difficulty level and duration were analyzed using Spearman rank correlation and linear regression. All p-values were two-sided, and p-values <0.05 were considered statistically significant. All statistical analyses were performed using SPSS version 18.0 (SPSS Inc., Chicago, IL, USA).
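The descriptive summaries described above (mean±SD and median with IQR) can be sketched with Python's standard-library `statistics` module. The helper names are hypothetical, and the percentile definition is an assumption: `method="exclusive"` places the p-th percentile at rank p(n + 1), which we believe is close to SPSS's default weighted-average definition, but percentile definitions can give different IQRs in small samples.

```python
import statistics
from typing import Sequence, Tuple


def mean_sd(values: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation (n - 1 denominator)."""
    return statistics.mean(values), statistics.stdev(values)


def median_iqr(values: Sequence[float]) -> Tuple[float, float, float]:
    """Median with the 25th and 75th percentiles.

    method="exclusive" puts the p-th percentile at rank p*(n + 1) and
    interpolates linearly between neighbouring order statistics.
    """
    q1, median, q3 = statistics.quantiles(values, n=4, method="exclusive")
    return median, q1, q3


def fmt_mean_sd(values: Sequence[float], digits: int = 1) -> str:
    """Format as 'mean±SD', the style used in the tables."""
    m, sd = mean_sd(values)
    return f"{m:.{digits}f}±{sd:.{digits}f}"
```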

RESULTS

1. Biopsy simulator outcomes

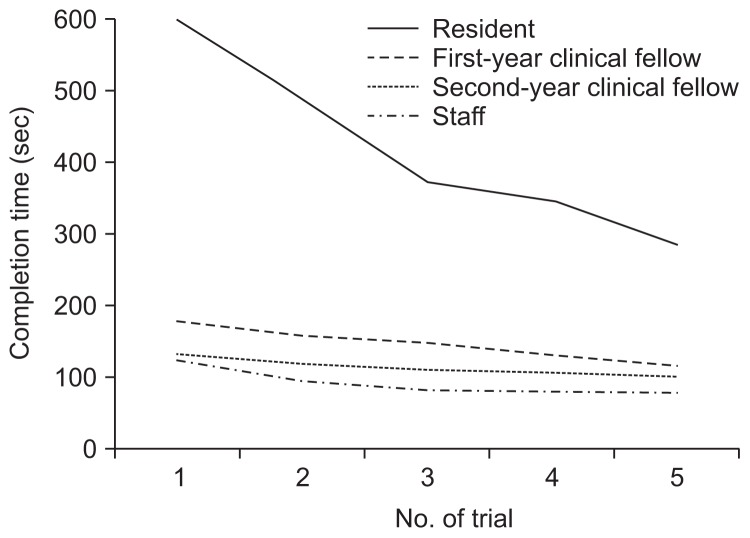

The mean±SD times (seconds) required to complete the first and fifth trials were 598.2±248.2 and 284.4±63.2 in the resident group, 177.1±43.8 and 115.1±21.2 in the first-year clinical fellow group, 131.2±27.8 and 100.3±16.8 in the second-year clinical fellow group, and 122.0±60.3 and 77.4±14.1 in the staff group. The mean completion time over the five trials was significantly longer in the resident group than in the other groups and did not differ significantly between the second-year clinical fellow group and the staff group (Table 2). Completion time decreased with each successive trial, most sharply in the resident group (Fig. 4). The mean±SD number of training sessions needed to achieve the target time was 9.0±2.4 in the resident group, 3.5±1.6 in the first-year clinical fellow group, 1.5±1.3 in the second-year clinical fellow group, and 1.2±0.4 in the staff group.
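The text does not define exactly how the number of sessions to reach the target time was counted. The sketch below assumes it is the 1-based number of the first session completed at or under the target; the function name and the example times are hypothetical and are not study data.

```python
from typing import Optional, Sequence


def sessions_to_target(times_s: Sequence[float], target_s: float) -> Optional[int]:
    """Return the 1-based number of the first session completed within target_s.

    "Within" is taken as time <= target (an assumption). Returns None if the
    target was never reached in the recorded sessions.
    """
    for session_number, time_s in enumerate(times_s, start=1):
        if time_s <= target_s:
            return session_number
    return None


# Hypothetical completion times (seconds) for one trainee; not study data.
example_times = [612.0, 455.0, 390.0, 301.0, 250.0, 228.0, 205.0, 190.0, 181.0]
print(sessions_to_target(example_times, 183))
```

Counting from the first session, rather than from the first of the five successful completions, is itself an assumption; a different convention would shift every group's mean by the same rule.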

Table 2.

Time Required to Complete the Biopsy Simulator according to the Experience Level of the Endoscopist

| | Resident (n=14) | First-year clinical fellow (n=11) | Second-year clinical fellow (n=10) | Staff (n=5) |
|---|---|---|---|---|
| Duration of 1st trial, sec | 598.2±248.2* | 177.1±43.8† | 131.2±27.8‡ | 122.0±60.3 |
| Duration of 2nd trial, sec | 487.6±244.1§ | 156.9±38.7∥ | 117.9±27.4¶ | 92.8±17.3 |
| Duration of 3rd trial, sec | 372.6±122.3# | 147.0±32.5** | 109.4±27.6†† | 81.2±10.2 |
| Duration of 4th trial, sec | 345.6±100.5‡‡ | 130.0±33.3§§ | 104.7±20.3∥∥ | 79.2±16.9 |
| Duration of 5th trial, sec | 284.4±63.2¶¶ | 115.1±21.2## | 100.3±16.8*** | 77.4±14.1 |
| Mean duration of 5 trials, sec | 417.7±138.8††† | 145.2±31.5‡‡‡ | 112.7±21.9§§§ | 90.5±20.0 |

Data are presented as means±SD.

*p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

†p=0.024 vs second-year clinical fellow group and p>0.05 vs staff group.

‡p>0.05 vs staff group.

§p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

∥p=0.020 vs second-year clinical fellow group and p=0.003 vs staff group.

¶p>0.05 vs staff group.

#p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

**p=0.020 vs second-year clinical fellow group and p=0.001 vs staff group.

††p=0.005 vs staff group.

‡‡p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

§§p>0.05 vs second-year clinical fellow group and p=0.005 vs staff group.

∥∥p=0.040 vs staff group.

¶¶p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

##p>0.05 vs second-year clinical fellow group and p=0.005 vs staff group.

***p=0.028 vs staff group.

†††p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

‡‡‡p=0.010 vs second-year clinical fellow group and p=0.013 vs staff group.

§§§p>0.05 vs staff group.

Fig. 4.

Completion times for each trial for all the groups.

2. Participant opinions of the biopsy simulator

Table 3 shows the mean scores of users' opinions of the biopsy simulator. The staff group rated endoscopic handling in the simulator as quite realistic and its use in endoscopic training as reasonable (6.4±0.5 and 7.0±0.0, respectively). Less experienced endoscopists considered the simulator more useful for improving their biopsy technique (6.8±0.4 in the resident group and 5.7±1.0 in the first-year clinical fellow group) than did experienced endoscopists (3.0±1.1 in the second-year clinical fellow group and 3.2±3.0 in the staff group). Nonetheless, the more experienced endoscopists recommended this simulator for biopsy training (6.1±1.3 in the second-year clinical fellow group and 7.0±0.0 in the staff group).

Table 3.

Mean Scores of the Answers to the Questions about the Biopsy Simulator on a 7-Point Likert Scale, according to the Experience Level of the Endoscopist

| | Resident (n=14) | First-year clinical fellow (n=11) | Second-year clinical fellow (n=10) | Staff (n=5) |
|---|---|---|---|---|
| Endoscopic handling in the simulator is realistic | NA | 4.5±0.9* | 4.4±0.8† | 6.4±0.5 |
| The simulator is easy to handle | 5.9±0.9‡ | 5.6±1.3‡ | 5.5±1.2‡ | 6.6±0.9 |
| Use of the simulator is reasonable for endoscopic training | 6.6±0.6§ | 5.5±1.3∥ | 6.0±0.8¶ | 7.0±0.0 |
| Working with the simulator improves my skills | 6.8±0.4# | 5.7±1.0** | 3.0±1.1†† | 3.2±3.0 |
| The following skills can be trained with the simulator | | | | |
| Introduction and positioning of the biopsy forceps | 6.4±1.2‡‡ | 5.5±1.3‡‡ | 5.6±1.2‡‡ | 6.4±0.5 |
| Handling of the biopsy forceps | 6.6±0.5§§ | 5.4±1.7§§ | 5.7±1.2§§ | 6.4±0.9 |
| Interaction with the assistant | 6.9±0.4∥∥ | 5.9±1.3¶¶ | 5.5±1.8## | 5.8±1.3 |
| The simulator training improves concentration during biopsy procedure | 6.5±0.8*** | 5.3±1.4††† | 4.4±1.8‡‡‡ | 5.8±2.7 |
| Training with the simulator reduces the risk for patients | 6.2±0.9§§§ | 4.9±1.3§§§ | 5.1±2.1§§§ | 4.4±2.7 |
| I recommend that the simulator be used in biopsy forceps training | NA | 5.9±0.8∥∥∥ | 6.1±1.3¶¶¶ | 7.0±0.0 |

Data are presented as means±SD.

NA, not available.

*p>0.05 vs second-year clinical fellow group and p=0.001 vs staff group.

†p=0.001 vs staff group.

‡p>0.05 between all groups.

§p=0.005 vs first-year clinical fellow group, p>0.05 vs second-year clinical fellow group, and p>0.05 vs staff group.

∥p>0.05 vs second-year clinical fellow group and p=0.002 vs staff group.

¶p=0.028 vs staff group.

#p=0.009 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p=0.019 vs staff group.

**p<0.001 vs second-year clinical fellow group and p>0.05 vs staff group.

††p>0.05 vs staff group.

‡‡p>0.05 between all groups.

§§p>0.05 between all groups.

∥∥p=0.029 vs first-year clinical fellow group, p=0.013 vs second-year clinical fellow group, and p>0.05 vs staff group.

¶¶p>0.05 vs second-year clinical fellow group and p>0.05 vs staff group.

##p>0.05 vs staff group.

***p=0.021 vs first-year clinical fellow group, p=0.001 vs second-year clinical fellow group, and p>0.05 vs staff group.

†††p>0.05 vs second-year clinical fellow group and p>0.05 vs staff group.

‡‡‡p>0.05 vs staff group.

§§§p>0.05 between all groups.

∥∥∥p>0.05 vs second-year clinical fellow group and p=0.019 vs staff group.

¶¶¶p>0.05 vs staff group.

3. Simulator outcomes at each biopsy site

Table 4 shows the duration and difficulty score of each biopsy site for each group. In all groups, the mean±SD biopsy duration (seconds) was longest at the site representing the cardia (seventh site) (92.0±38.5 in the resident group, 34.8±11.6 in the first-year clinical fellow group, 23.1±6.0 in the second-year clinical fellow group, and 17.0±2.1 in the staff group), and the duration at the AW of the antrum (second site) was shortest in most groups (38.1±14.3, 14.1±3.0, 11.9±2.0, and 10.1±1.6, respectively) (Table 4). On the Likert scale, the cardia (seventh site) was rated among the two most difficult sites in every group (5.9±1.3, 6.5±0.7, 6.5±0.7, and 6.0±1.2, respectively; ranked first by both fellow groups and second, after the LB-GC/AW site, by the resident and staff groups), and the AW of the antrum (second site) was rated the easiest site (1.1±0.3, 1.6±0.8, 1.5±1.3, and 1.0±0.0, respectively) (Table 4). Spearman rank-order correlation analysis showed a significant relationship between biopsy duration and difficulty level (Spearman rho=0.848, p<0.001).
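The rank correlation reported above can be illustrated with a minimal pure-Python sketch of Spearman's rho, computed as the Pearson correlation of ranks with tied values given their average rank. This is not the study's SPSS analysis, the function names are hypothetical, and the reported rho of 0.848 is not reproduced here because the underlying paired observations are not given in the text.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # mean of 1-based ranks start+1..end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho, computed as the Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be paired samples of length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    ss_x = sum((a - mean_x) ** 2 for a in rx)
    ss_y = sum((b - mean_y) ** 2 for b in ry)
    # Raises ZeroDivisionError if either variable is constant.
    return cov / (ss_x * ss_y) ** 0.5
```

Paired lists of biopsy durations and difficulty scores would be passed as `x` and `y`. Averaging tied ranks matters here because 7-point Likert scores contain many ties.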

Table 4.

Durations and Difficulty Scores of the Biopsy Simulator Categorized according to the Site of the Biopsy and the Experience Level of the Endoscopist

| | Resident (n=14) | First-year clinical fellow (n=11) | Second-year clinical fellow (n=10) | Staff (n=5) |
|---|---|---|---|---|
| 1st site (LB-GC/AW) | | | | |
| Mean duration of 5 trials, sec | 64.8±13.0* | 21.1±5.2† | 15.5±2.8‡ | 15.2±5.3 |
| Rank of mean duration | 2 | 3 | 3 | 3 |
| Mean scores for level of difficulty | 6.5±0.8 | 5.8±1.5 | 5.8±1.0 | 6.6±0.5 |
| Rank of scores for the level of difficulty | 1 | 2 | 2 | 1 |
| 2nd site (antrum-AW) | | | | |
| Mean duration of 5 trials, sec | 38.1±14.3§ | 14.1±3.0∥ | 11.9±2.0¶ | 10.1±1.6 |
| Rank of mean duration | 7 | 7 | 7 | 6 |
| Mean scores for level of difficulty | 1.1±0.3 | 1.6±0.8 | 1.5±1.3 | 1.0±0.0 |
| Rank of scores for the level of difficulty | 7 | 7 | 7 | 7 |
| 3rd site (LB-AW) | | | | |
| Mean duration of 5 trials, sec | 53.2±24.6# | 16.1±4.1** | 12.8±2.6†† | 9.5±2.2 |
| Rank of mean duration | 4 | 6 | 6 | 7 |
| Mean scores for level of difficulty | 3.1±1.5 | 2.4±1.7 | 2.3±1.2 | 2.8±1.3 |
| Rank of scores for the level of difficulty | 6 | 6 | 6 | 6 |
| 4th site (LB-LC/PW) | | | | |
| Mean duration of 5 trials, sec | 51.0±17.6‡‡ | 17.6±3.8§§ | 14.4±2.7∥∥ | 12.0±2.7 |
| Rank of mean duration | 5 | 5 | 5 | 4 |
| Mean scores for level of difficulty | 3.1±0.9 | 3.5±1.0 | 3.0±0.8 | 3.0±0.7 |
| Rank of scores for the level of difficulty | 5 | 5 | 5 | 5 |
| 5th site (MB-PW) | | | | |
| Mean duration of 5 trials, sec | 70.0±45.8¶¶ | 22.4±8.3## | 20.4±6.1*** | 16.5±8.9 |
| Rank of mean duration | 3 | 2 | 2 | 2 |
| Mean scores for level of difficulty | 4.8±1.1 | 4.5±1.1 | 4.4±1.3 | 4.2±0.8 |
| Rank of scores for the level of difficulty | 3 | 3 | 4 | 4 |
| 6th site (MB-LC) | | | | |
| Mean duration of 5 trials, sec | 48.5±18.3††† | 19.2±7.6‡‡‡ | 14.6±2.8§§§ | 10.3±0.6 |
| Rank of mean duration | 6 | 4 | 4 | 5 |
| Mean scores for level of difficulty | 3.6±0.9 | 3.9±1.2 | 4.5±1.4 | 4.4±1.5 |
| Rank of scores for the level of difficulty | 4 | 4 | 3 | 3 |
| 7th site (cardia) | | | | |
| Mean duration of 5 trials, sec | 92.0±38.5∥∥∥ | 34.8±11.6¶¶¶ | 23.1±6.0### | 17.0±2.1 |
| Rank of mean duration | 1 | 1 | 1 | 1 |
| Mean scores for level of difficulty | 5.9±1.3 | 6.5±0.7 | 6.5±0.7 | 6.0±1.2 |
| Rank of scores for the level of difficulty | 2 | 1 | 1 | 2 |

Data are presented as means±SD. No significant differences were found between groups for the mean scores of the difficulty level.

LB, lower body; GC, greater curvature; AW, anterior wall; LC, lesser curvature; PW, posterior wall; MB, mid-body.

*p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

†p=0.006 vs second-year clinical fellow group and p>0.05 vs staff group.

‡p>0.05 vs staff group.

§p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

∥p=0.043 vs second-year clinical fellow group and p=0.009 vs staff group.

¶p>0.05 vs staff group.

#p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

**p=0.029 vs second-year clinical fellow group and p=0.002 vs staff group.

††p=0.028 vs staff group.

‡‡p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

§§p=0.036 vs second-year clinical fellow group and p=0.019 vs staff group.

∥∥p>0.05 vs staff group.

¶¶p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

##p>0.05 vs second-year clinical fellow group and p>0.05 vs staff group.

***p>0.05 vs staff group.

†††p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

‡‡‡p>0.05 vs second-year clinical fellow group and p=0.003 vs staff group.

§§§p=0.001 vs staff group.

∥∥∥p<0.001 vs first-year clinical fellow group, p<0.001 vs second-year clinical fellow group, and p<0.001 vs staff group.

¶¶¶p=0.005 vs second-year clinical fellow group and p<0.001 vs staff group.

###p=0.008 vs staff group.

4. Participant opinions on the realism of the biopsy simulator

The experienced endoscopists (i.e., excluding the residents) rated the realism of each biopsy site (Table 5). In all groups, realism was rated higher at the cardia site (seventh site) than at the other sites (6.7±0.5, 6.6±0.7, and 6.8±0.4 in the first-year clinical fellow group, second-year clinical fellow group, and staff group, respectively). The first-year clinical fellow group rated the LB-LC/PW site (fourth site) lowest (5.3±1.3), whereas the second-year clinical fellow group rated the MB-PW site (fifth site) lowest (3.5±1.4). The staff group gave the lowest realism scores to the LB-GC/AW (first site) and LB-LC/PW (fourth site) sites (4.8±1.3 and 4.8±1.5, respectively). On the Wilcoxon signed-rank test, the ratings of the first-year clinical fellow group differed significantly from those of the staff group (p=0.028), whereas the ratings of the second-year clinical fellow group did not (p=0.089).
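The signed-rank comparison in the preceding paragraph can be checked with a short Python sketch. It assumes, because the text does not say, that the test was applied to the seven paired per-site mean scores in Table 5 and that SPSS-style asymptotic p-values were used (normal approximation with a correction for tied differences and no continuity correction). Under those assumptions the sketch gives two-sided p-values of about 0.028 and 0.089, consistent with the reported values; this supports, but does not prove, that reading of the analysis. Function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def wilcoxon_signed_rank(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Paired Wilcoxon signed-rank test: returns (W, z, two-sided p).

    Large-sample normal approximation, tie-corrected variance,
    no continuity correction (assumed to mirror SPSS's asymptotic output).
    """
    if len(x) != len(y):
        raise ValueError("x and y must be paired")
    # Round to suppress floating-point noise so that equal differences tie exactly.
    diffs = [round(a - b, 9) for a, b in zip(x, y)]
    diffs = [d for d in diffs if d != 0]  # zero differences are discarded
    n = len(diffs)
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    tie_sizes: Dict[float, int] = {}
    for d in diffs:
        tie_sizes[abs(d)] = tie_sizes.get(abs(d), 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_sizes.values()) / 48
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term
    z = (w - mean_w) / math.sqrt(var_w)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))  # = 2 * (1 - Phi(|z|))
    return w, z, p_two_sided


# Per-site mean realism scores from Table 5 (sites 1-7).
first_year = [5.6, 5.6, 5.6, 5.3, 5.6, 5.9, 6.7]
second_year = [4.9, 4.8, 4.1, 4.7, 3.5, 5.5, 6.6]
staff = [4.8, 5.2, 5.0, 4.8, 5.4, 5.4, 6.8]

for label, group in (("first-year vs staff", first_year), ("second-year vs staff", second_year)):
    w, z, p = wilcoxon_signed_rank(group, staff)
    print(f"{label}: W={w}, z={z:.2f}, p={p:.3f}")
```

With only seven pairs, an exact permutation p-value would differ from these asymptotic values; the normal approximation is used here only because it appears to match the reported figures.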

Table 5.

Score of the Level of Realism of the Biopsy Simulator for Each Biopsy Site Categorized according to the Experience Level of the Endoscopist

| | First-year clinical fellow (n=11) | Second-year clinical fellow (n=10) | Staff (n=5) |
|---|---|---|---|
| 1st site (LB-GC/AW) | | | |
| Mean level of realism score | 5.6±1.9 | 4.9±1.7 | 4.8±1.3 |
| 2nd site (antrum-AW) | | | |
| Mean level of realism score | 5.6±1.0 | 4.8±1.4 | 5.2±2.7 |
| 3rd site (LB-AW) | | | |
| Mean level of realism score | 5.6±1.6 | 4.1±1.5 | 5.0±2.0 |
| 4th site (LB-LC/PW) | | | |
| Mean level of realism score | 5.3±1.3 | 4.7±2.0 | 4.8±1.5 |
| 5th site (MB-PW) | | | |
| Mean level of realism score | 5.6±1.0 | 3.5±1.4 | 5.4±1.7 |
| 6th site (MB-LC) | | | |
| Mean level of realism score | 5.9±0.8 | 5.5±0.7 | 5.4±1.8 |
| 7th site (cardia) | | | |
| Mean level of realism score | 6.7±0.5 | 6.6±0.7 | 6.8±0.4 |

Data are presented as means±SD. No significant differences were found between groups regarding the mean scores of the level of realism except for the first-year clinical fellow group vs the second-year clinical fellow group for the 5th biopsy site (MB-PW) (p=0.001).

LB, lower body; GC, greater curvature; AW, anterior wall; LC, lesser curvature; PW, posterior wall; MB, mid-body.

DISCUSSION

Our analyses demonstrate the efficacy and realism of a newly designed biopsy simulator that can be made from readily obtainable materials. Completion time differed according to the participants' experience with endoscopic examination: less experienced groups needed more time to complete the simulation than more experienced groups. In addition, completion time became shorter as participants performed more training sessions, and the effect of repeated training was greatest in the resident group, who had no endoscopic experience. After the simulator training, experts rated the simulator as highly realistic relative to a real stomach and felt that it would be a useful tool for endoscopy training. Thus, the value of our new simulator is that it is easy to make, is considered realistic, and can help beginners practice the endoscopic biopsy technique.

We hypothesize that four functions are performed simultaneously during endoscopic examination: cognitive, technical, methodological, and communicative. The cognitive function is involved when endoscopists detect lesions, make diagnoses, and plan further management. The technical function governs fine control of the endoscope and the handling of endoscopic accessories. The methodological function concerns knowing the sequence and methods of a routine examination from the oral cavity to the second portion of the duodenum. Finally, the communicative function is needed to communicate with assistants and to understand the patient's status. Because these four functions are performed simultaneously in a real endoscopic examination, endoscopy training should aim to harmonize them correctly. However, a process for combining training steps that each practice only one or two functions has yet to be perfected, because of obstacles noted by Cohen and Thompson13 in a previous review.

In the present training system, we focused on the technical function, specifically endoscopic biopsy. Completion time was longer in less experienced participants than in experienced participants (mean±SD completion times over the five trials of 417.7±138.8, 145.2±31.5, 112.7±21.9, and 90.5±20.0 seconds in the resident group, first-year clinical fellow group, second-year clinical fellow group, and staff group, respectively), and completion time fell sharply from the first to the fifth trial in less experienced participants (from 598.2±248.2 to 284.4±63.2, 177.1±43.8 to 115.1±21.2, 131.2±27.8 to 100.3±16.8, and 122.0±60.3 to 77.4±14.1 seconds in the same groups, respectively). Thus, our simulator reflects differences in endoscopic control according to experience and shows the effect of repetitive training, especially in beginners.

Regarding the questionnaire results, the experts felt that the simulator was relatively realistic and could be valuable as a training tool for endoscopic examination. After using the simulator, the less experienced groups (the resident and first-year clinical fellow groups) felt that working with it could improve their skills. Together, these results indicate that this biopsy simulator is a relatively realistic representation of the stomach and can help less experienced endoscopists improve their endoscopic biopsy skills.

Previous attempts to develop endoscopy simulators that incorporate all four functions with adequate realism have not produced satisfactory results.14–18 To overcome these limitations, a recent study12 took a different approach, focusing on individual components such as endoscope maneuverability and alignment with and intubation of the papilla, together with ease of manufacture and high educational value. In another study, the simulator emphasized basic maneuvers such as retroflexion, tip flexion, torque, polypectomy, navigation, and loop reduction.19 The main purpose of these previous reports12,19 was similar to that of our study, and we expect such efforts to lead to endoscopic educational systems built on the disassembly of the procedure into individual steps and the subsequent union of those steps. In the disassembly phase, relatively simple simulators can be made to train each step, such as insertion, biopsy, injection, clipping, and coagulation. In the union phase, the results of training in each step should be integrated using a well-designed system. Our study focused on the endoscopic biopsy technique, one of the core endoscopic skills.

In addition, we wanted to create an easy-to-manufacture, low-cost simulator that many trainees could use for repeated training. To keep biopsy training simple while giving trainees experience at different sites, we positioned the seven biopsy sites to correspond to locations in the real stomach. Biopsy was easier at the sites representing the antrum and LB than at those representing the mid- and upper body, similar to real endoscopic practice; the easiest site was the AW of the antrum and the hardest was the cardia, as in the real situation. Thus, this simulator can be used for biopsy training at various sites with varying levels of difficulty. The experienced endoscopists rated the realism of the cardia as high but that of the LB sites as slightly lower. This may be because the simulator cannot reproduce the morphological changes caused by the angle of the stomach in the antrum and LB area; a more complex version of the simulator is therefore required for these sites.

This simulator is a useful tool for beginners who wish to acquire fine endoscope control and improve their biopsy skills before examining real patients in the clinical setting, and it is relatively inexpensive to build. In addition, because operating the simulator requires control of the endoscope, it is likely to improve the technical function, one of the four functions of a simulator. However, the simulator does not fully reproduce the shape of the human stomach, and it cannot be used to train skills other than the biopsy technique. Therefore, additional simulators are needed to train the other skills required in real endoscopic procedures. Furthermore, a standardized educational protocol that integrates the simulators for each endoscopic skill should be developed in the near future.

In conclusion, our data show that this newly designed endoscopic biopsy simulator is a useful, effective, and realistic tool for improving the biopsy technique. Furthermore, although this simulator cannot be used to train multiple endoscopic techniques, it is easy to manufacture from inexpensive materials, can be used by a large number of trainees, and can raise trainees' skill levels to a certain degree. Therefore, this simulator can be very useful for training beginner endoscopists before they work with actual patients, especially in centers where endoscopists must be trained rapidly.

Footnotes

CONFLICTS OF INTEREST

No potential conflict of interest relevant to this article was reported.

REFERENCES

- 1. Leffler DA, Kheraj R, Garud S, et al. The incidence and cost of unexpected hospital use after scheduled outpatient endoscopy. Arch Intern Med. 2010;170:1752–1757. doi: 10.1001/archinternmed.2010.373.

- 2. Freeman ML, Nelson DB, Sherman S, et al. Complications of endoscopic biliary sphincterotomy. N Engl J Med. 1996;335:909–918. doi: 10.1056/NEJM199609263351301.

- 3. Mallery JS, Baron TH, Dominitz JA, et al. American Society for Gastrointestinal Endoscopy: complications of ERCP. Gastrointest Endosc. 2003;57:633–638. doi: 10.1053/ge.2003.v57.amge030576633.

- 4. Bisschops R, Wilmer A, Tack J. A survey on gastroenterology training in Europe. Gut. 2002;50:724–729. doi: 10.1136/gut.50.5.724.

- 5. Bini EJ, Firoozi B, Choung RJ, Ali EM, Osman M, Weinshel EH. Systematic evaluation of complications related to endoscopy in a training setting: a prospective 30-day outcomes study. Gastrointest Endosc. 2003;57:8–16. doi: 10.1067/mge.2003.15.

- 6. Ziv A, Wolpe PR, Small SD, Glick S. Simulation-based medical education: an ethical imperative. Acad Med. 2003;78:783–788. doi: 10.1097/00001888-200308000-00006.

- 7. Sedlack RE, Kolars JC. Computer simulator training enhances the competency of gastroenterology fellows at colonoscopy: results of a pilot study. Am J Gastroenterol. 2004;99:33–37. doi: 10.1111/j.1572-0241.2004.04007.x.

- 8. Verdaasdonk EG, Stassen LP, Monteny LJ, Dankelman J. Validation of a new basic virtual reality simulator for training of basic endoscopic skills: the SIMENDO. Surg Endosc. 2006;20:511–518. doi: 10.1007/s00464-005-0230-6.

- 9. Haycock A, Koch AD, Familiari P, et al. Training and transfer of colonoscopy skills: a multinational, randomized, blinded, controlled trial of simulator versus bedside training. Gastrointest Endosc. 2010;71:298–307. doi: 10.1016/j.gie.2009.07.017.

- 10. Haycock AV, Bassett P, Bladen J, Thomas-Gibson S. Validation of the second-generation Olympus colonoscopy simulator for skills assessment. Endoscopy. 2009;41:952–958. doi: 10.1055/s-0029-1215193.

- 11. Thompson CC, Jirapinyo P, Kumar N, et al. Development and initial validation of an endoscopic part-task training box. Endoscopy. 2014;46:735–744. doi: 10.1055/s-0034-1365463.

- 12. Schneider AR, Schepp W. Do it yourself: building an ERCP training system within 30 minutes (with videos). Gastrointest Endosc. 2014;79:828–832. doi: 10.1016/j.gie.2014.01.006.

- 13. Cohen J, Thompson CC. The next generation of endoscopic simulation. Am J Gastroenterol. 2013;108:1036–1039. doi: 10.1038/ajg.2012.390.

- 14. Classen M, Ruppin H. Practical endoscopy training using a new gastrointestinal phantom. Endoscopy. 1974;6:127–131. doi: 10.1055/s-0028-1098609.

- 15. Nelson DB, Bosco JJ, Curtis WD, et al. Technology status evaluation report: endoscopy simulators. May 1999. Gastrointest Endosc. 2000;51:790–792. doi: 10.1053/ge.2000.v51.age516790.

- 16. Hochberger J, Matthes K, Maiss J, Koebnick C, Hahn EG, Cohen J. Training with the compactEASIE biologic endoscopy simulator significantly improves hemostatic technical skill of gastroenterology fellows: a randomized controlled comparison with clinical endoscopy training alone. Gastrointest Endosc. 2005;61:204–215. doi: 10.1016/S0016-5107(04)02471-X.

- 17. Sedlack RE, Baron TH, Downing SM, Schwartz AJ. Validation of a colonoscopy simulation model for skills assessment. Am J Gastroenterol. 2007;102:64–74. doi: 10.1111/j.1572-0241.2006.00942.x.

- 18. Camus M, Marteau P, Pocard M, et al. Validation of a live animal model for training in endoscopic hemostasis of upper gastrointestinal bleeding ulcers. Endoscopy. 2013;45:451–457. doi: 10.1055/s-0032-1326483.

- 19. Sedlack RE. The Mayo Colonoscopy Skills Assessment Tool: validation of a unique instrument to assess colonoscopy skills in trainees. Gastrointest Endosc. 2010;72:1125–1133.e3. doi: 10.1016/j.gie.2010.09.001.