Significance

The neural basis of prediction is poorly understood. Here, utilizing the probabilistic song sequences of the Bengalese finch, we recorded neural activity following the termination of auditory playback of an individual’s produced song sequences. We demonstrate that induced neural activity is predictive of the timing and identity of individual syllables. Consistent with the intuition that more uncertain sequences should give rise to weaker predictions, we find that the uncertainty of upcoming syllables modulates the strength of neural predictions. The utility of such statistical predictions for a variety of neural computations suggests that similar properties of neural dynamics may be a general feature of sensory and motor circuits.

Keywords: sequences, prediction, uncertainty, electrophysiology, birdsong

Abstract

Predicting future events is a critical computation for both perception and behavior. Despite the essential nature of this computation, there are few studies demonstrating neural activity that predicts specific events in learned, probabilistic sequences. Here, we test the hypotheses that the dynamics of internally generated neural activity are predictive of future events and are structured by the learned temporal–sequential statistics of those events. We recorded neural activity in Bengalese finch sensory-motor area HVC in response to playback of sequences from individuals’ songs, and examined the neural activity that continued after stimulus offset. We found that the strength of response to a syllable in the sequence depended on the delay at which that syllable was played, with a maximal response when the delay matched the intersyllable gap normally present for that specific syllable during song production. Furthermore, poststimulus neural activity induced by sequence playback resembled the neural response to the next syllable in the sequence when that syllable was predictable, but not when the next syllable was uncertain. Our results demonstrate that the dynamics of internally generated HVC neural activity are predictive of the learned temporal–sequential structure of produced song and that the strength of this prediction is modulated by uncertainty.

Most complex behaviors, such as vocalizations of humans and birds, unfold over time as coordinated sequences (1–4). Furthermore, the sensory world exhibits sequential structure, and so perception also reflects processing a series of sequential events (5–8). The statistical regularities of naturally occurring sequences potentially enable prediction of upcoming sensory/behavioral events based on previous experience. Indeed, statistical prediction is central to many theories of nervous system function, including reinforcement learning (9, 10), optimal sensory-motor control (2, 11), decision making (5, 8, 12, 13), and efficient coding of sensory information (14–17). Optimal statistical predictions reflect not only the expected value (i.e., mean) of upcoming events but also the uncertainty (i.e., entropy) of those values (18, 19). The ability to form statistical predictions of sequential events suggests that neural circuits underlying perception and production of those sequences generate activity that reflects these predictions (4, 8–10, 12, 16, 17, 20–25). In contrast to sequential neuronal activation during production or replay of deterministic sequences (3, 24, 26–28), experimental demonstrations of circuits exhibiting predictive activity shaped by the specific, long-term experienced statistical structure of upcoming events are rare. Here, we use the Bengalese finch (Bf) as a model system to test the hypothesis that the dynamics of internally generated neural activity are predictive of specific events and are shaped by the long-term, learned statistics of the timing and sequencing of those events.

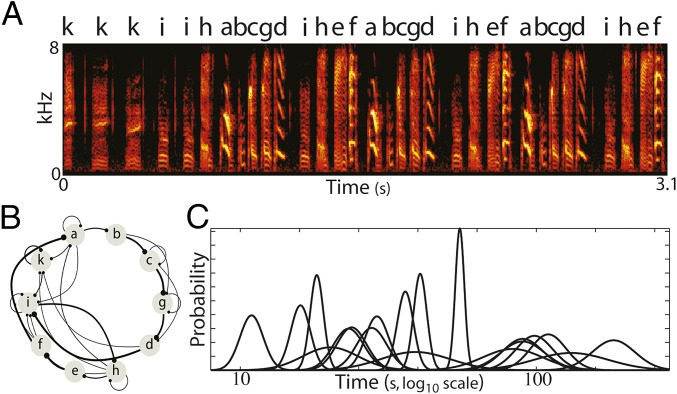

Songbirds provide an excellent model for investigating neural processing involved in perception and production of complex sequential behavior. Birdsong is a learned behavior in which each bird produces a unique sequence of categorical acoustic elements, termed syllables. Fig. 1A displays an example song segment from one Bf, represented here as a spectrogram (color intensity indicates energy at a given frequency over time). The sequencing of this bird’s syllables, derived from multiple renditions of song, is summarized in Fig. 1B, which displays a transition diagram showing the (forward) conditional probability of transitioning from any given syllable to any other syllable. For the Bf, sequencing of syllables can be variable; multiple syllables may, in principle, transition to a given syllable (convergence points, e.g., “g” in Fig. 1B), and a particular syllable may, in principle, transition to multiple syllables (divergence points, e.g., “h” in Fig. 1B). Despite the variability in sequence production, Bf sequencing is not random, as the probabilistic structure of syllable transitions is reproducible across months (29). In addition to variability in sequences, silent intervals between syllables associated with a given transition, intersyllable gaps (ISGs), also exhibit reproducible stochastic structure that can differ across different transitions and may be linked to underlying sequence generation mechanisms (30, 31). Fig. 1C displays Gaussian fits to all gap distributions derived from songs for this bird. As is typical for Bfs, the gap distributions exhibited a range of mean values. Thus, song is a learned behavior with diverse statistics associated with both timing and sequencing (temporal–sequential statistics) of syllables.
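As an illustration of how a forward transition diagram such as Fig. 1B can be tabulated, the following minimal sketch estimates the conditional probability of each next syllable given the current syllable. The input format (one string per song, one letter per syllable), the function name `forward_transition_probs`, and the toy songs are assumptions made for this example and are not taken from the study's data or analysis code.

```python
from collections import Counter, defaultdict


def forward_transition_probs(songs):
    """Estimate P(next syllable | current syllable) from labeled songs.

    `songs` is a list of strings with one letter per syllable
    (a hypothetical labeling format used only for this sketch).
    """
    counts = defaultdict(Counter)
    for song in songs:
        # Count every adjacent (current, next) syllable pair.
        for cur, nxt in zip(song, song[1:]):
            counts[cur][nxt] += 1
    probs = {}
    for cur, nxt_counts in counts.items():
        total = sum(nxt_counts.values())
        probs[cur] = {nxt: n / total for nxt, n in nxt_counts.items()}
    return probs


# Toy songs (not real data): "c" is a divergence point, "a" and "b" are not.
songs = ["abcd", "abce", "abcd", "abcd"]
probs = forward_transition_probs(songs)
for cur in sorted(probs):
    print(cur, probs[cur])
```

In this toy example, "c" transitions to "d" on three of four renditions and to "e" on one, so it plays the role of a divergence point, whereas "a" and "b" each have a single possible successor.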

Fig. 1.

Temporal–sequential structure of birdsong. (A) Spectrogram (power at frequency vs. time) of a Bf song segment, with letter labels assigned to unique syllables. Adult Bf song typically consists of 5–12 unique syllables produced in probabilistic sequences. (B) Forward transition diagram of song syntax, compiled from many renditions of the song in A. Each node corresponds to a unique syllable from the bird’s repertoire. Edges between nodes denote transitions between syllables obtained from a large corpus of songs (dots denote transition destination). The width of each edge corresponds to the observed probability of transitioning from a syllable to any other syllable. (C) Intersyllable gap (ISG) probability distributions for all transitions in this bird. Each distribution is presented as the Gaussian fit to the log10 ISG data.

The brain regions responsible for perception, acquisition, and production of song are well studied. Sensory-motor nucleus HVC (acronym used as proper name) transmits much of the descending motor control for the temporal structure of learned song (3, 32–35) and contributes to syllable sequencing (32, 34, 36). Interestingly, HVC neurons also respond more strongly to playback of the bird’s own song (BOS) than to manipulated versions of BOS, such as songs with reverse syllable ordering (37–39). Indeed, previous work in Bfs has shown that HVC auditory responses exhibit long-time, probability-dependent integration that is shaped by the statistics of produced song sequences (40). We designed a series of experiments to examine whether neural activity in sensory-motor nucleus HVC persists after the termination of playback of syllable sequences, whether this activity is predictive of the timing and sequencing of produced syllable transitions, and whether the strength of predictions is modulated by the uncertainty of upcoming syllables.

Results

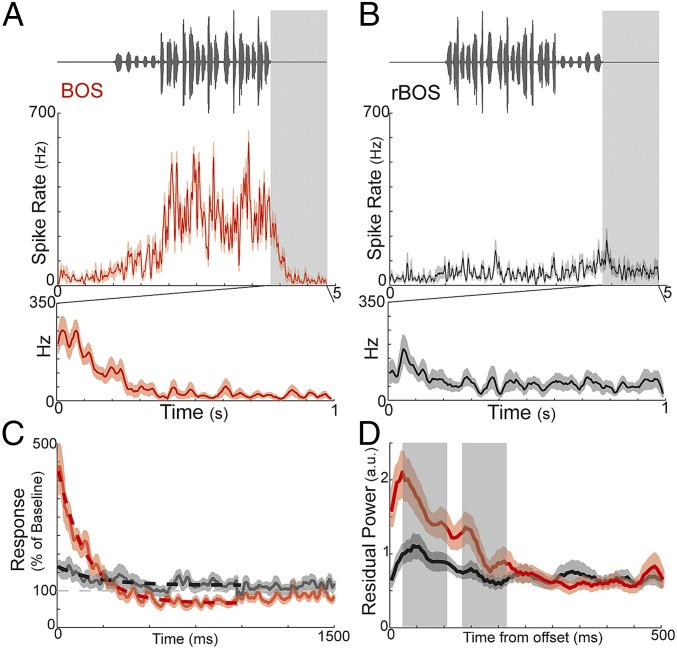

We played back auditory stimuli to 12 sedated adult Bfs while recording from HVC (Materials and Methods). We first characterized activity elicited by playback of BOS and reversed versions of BOS. Fig. 2 A and B show example oscillograms of a BOS stimulus (BOS segment is excised from a longer bout of singing) and the temporally reversed BOS stimulus (rBOS), a control stimulus with the same spectral and amplitude content as BOS. The evoked auditory responses at one site to BOS and rBOS are displayed below the corresponding stimuli (mean ± SEM, n = 25 trials). The mean evoked spike rate relative to the mean baseline activity (evoked/baseline) was significantly greater for BOS-evoked auditory responses than for rBOS-evoked auditory responses (across n = 83 sites from 12 birds: 5.15 ± 0.62 vs. 1.1 ± 0.53, mean ± SEM, ***P ≤ 10−10, paired t test on log10 data; across n = 12 birds: 4.45 ± 0.59 vs. 1.46 ± 0.16, mean ± SEM, ***P = 0, Wilcoxon signed-rank test on log10 data). Thus, as reported previously, Bf HVC auditory responses to BOS were much larger than auditory responses to rBOS (35, 41, 42).

Fig. 2.

Poststimulus activity in HVC induced by BOS playback. Oscillograms (Top) of BOS (A) and rBOS (B), with evoked HVC auditory responses at one site (Middle: mean ± SEM, n = 25 trials) and induced poststimulus activity demarcated with gray boxes expanded at Bottom. HVC auditory responses evoked by BOS were larger than those evoked by rBOS, and the corresponding induced poststimulus activity was also larger. (C) Average poststimulus activity across HVC sites, expressed as percent above baseline, induced by auditory playback of BOS (red) and rBOS (black) stimuli (mean ± SEM; n = 83 sites from 12 birds). Dashed red and black lines are best-fitting exponential decay. Gray dashed line corresponds to baseline activity. (D) Average power (5–25 Hz) of residuals from exponential fits of poststimulus activity across HVC sites induced by auditory playback of BOS (red) and rBOS (black) stimuli (mean ± SEM; n = 83 sites from 12 birds). Gray boxes demarcate average duration of syllables, separated by median gap. BOS-induced residuals were significantly greater than rBOS-induced residuals over the first 250 ms of poststimulus activity (across n = 83 sites from 12 birds; BOS, 1.50 ± 0.14, vs. rBOS, 0.9 ± 0.09; mean ± SEM; P ≤ 10−4, paired t test; across n = 12 birds; BOS, 1.40 ± 0.19, vs. rBOS, 0.86 ± 0.12; mean ± SEM; P ≤ 10−3, Wilcoxon signed-rank test).

If internally generated activity in HVC is predictive of future syllables, then this activity should persist after the termination of the BOS stimulus. Indeed, playback of BOS induced poststimulus activity that persisted for several hundred milliseconds after stimulus offset. Examples of poststimulus activity are displayed at the Bottom of Fig. 2 A and B for BOS and rBOS stimuli, where we magnify the poststimulus activity from directly above (corresponding time periods demarcated in gray). BOS-induced poststimulus activity decayed roughly exponentially after stimulus offset, whereas rBOS did not induce similarly temporally extended poststimulus activity. Across all sites, BOS-induced poststimulus activity decayed to baseline levels after ∼300 ms (exponential fit: R2 = 0.22, P < 10−4, n = 83 sites, Materials and Methods) and dipped slightly below baseline for ∼700 ms before returning to approximate baseline levels (Fig. 2C). In contrast, rBOS poststimulus activity hovered around baseline and did not exhibit a systematic dependence on time (exponential fit: R2 = 0.008, P > 0.4, n = 83 sites). Qualitatively, the poststimulus activity following BOS playback exhibited an initial (0–30 ms following BOS offset; Fig. 2D) increase in residual power (low-pass–filtered residuals from best-fit exponential at each site; Materials and Methods) followed by more subtle fluctuations at the average times at which syllables would have occurred in produced song (gray shaded regions, Fig. 2D). Poststimulus activity that could exhibit nonmonotonic decay following BOS playback was also apparent in both multiunit and single-unit activity recorded at single sites (e.g., Fig. 4A, red trace, and Fig. S1). The presence of such poststimulus activity following BOS playback raises the possibility that ongoing HVC activity carries a prediction about upcoming events in song. 
Here, we tested whether such predictions are present for the timing and identity of syllables that would normally follow the segments of BOS that were played back to birds.

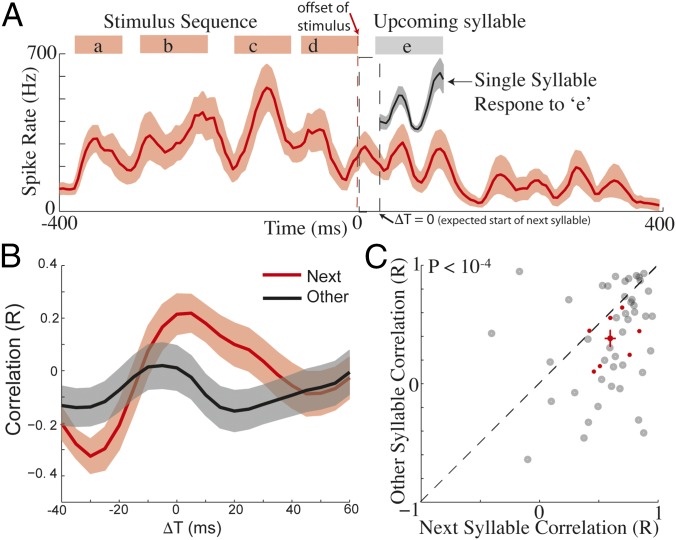

Fig. 4.

Poststimulus activity is predictive of upcoming syllables for low uncertainty transitions. (A) Poststimulus activity induced by playback of a BOS sequence was similar to the auditory response to the next syllable in the sequence. The spike rate evoked by playback of the sequence “abcd” (syllable timing in stimulus demarcated by red boxes), as well as the induced poststimulus activity (stimulus offset is indicated with red dashed line). The single-syllable response to “e” is presented above the period when it would have occurred (indicated by gray box). ΔT = 0 is the average expected time of next syllables. Data are presented as mean ± SEM across trials. (B) Time course of correlation coefficients comparing poststimulus activity to evoked single-syllable responses for the next syllable in the sequence (red) and other syllables in the bird’s repertoire (black) (mean ± SEM; n = 44 single-syllable comparisons from 18 sites in seven birds). (C) Scatter plot contrasting maximum correlation coefficients comparing poststimulus activity to evoked single-syllable responses for next syllables and other syllables. Black circles are individual data points, and red cross is mean ± SEM for the 44 unique syllable transitions; red points are within-bird averages for seven birds with one to four recording sites, and four to nine unique syllable transitions per bird.

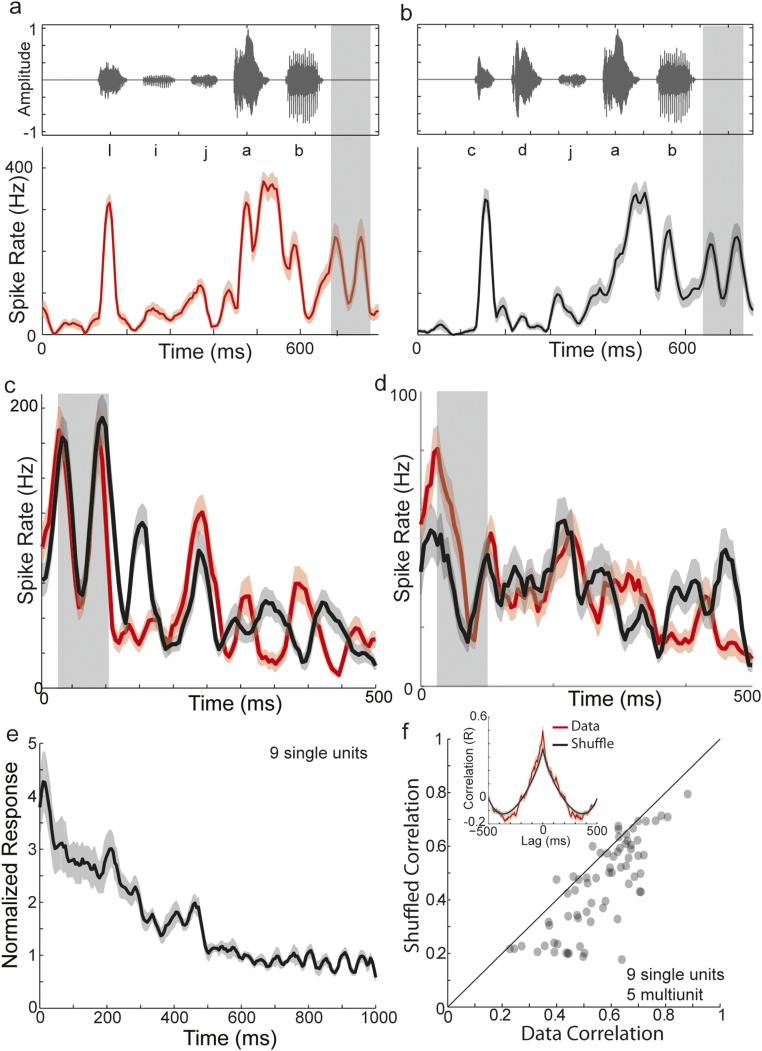

Fig. S1.

Dynamics of poststimulus activity at single units induced by short sequences of syllables. To further characterize the dynamics of poststimulus activity, we played back naturally produced sequences of syllables (four to five syllables) that ended in the same syllable. A and B show an example of two such auditory sequences (amplitude waveforms, Top) and the evoked auditory response recorded from a single unit in HVC (mean ± SE; n = 60 trials). Following the offset of the stimulus, a biphasic modulation of poststimulus activity is observed at approximately the time of the expected upcoming syllable (gray box). The poststimulus activity for these two sequences is overlaid in C, whereas D overlays single-unit poststimulus activity from a different bird in response to an analogous stimulus pair. Qualitatively, poststimulus activity evoked by individual sequences could exhibit large fluctuations on the timescales of 50–100 ms, as evidenced by the values of the means (dark colors) relative to the SEs (lighter colors). More importantly, the poststimulus activity evoked by different sequences could also exhibit temporal fluctuations that appeared similar, especially within the first 100–250 ms. In agreement with the results for BOS, we observed that single-unit poststimulus neural activity evoked by short sequences decayed roughly exponentially back to baseline (E; n = 9 single-unit sites in three birds). To measure the consistency of the poststimulus activity dynamics evoked by different sequences at the same site, we performed a cross-covariance analysis. At each site (nine single units and five multiunits in three birds), we measured the correlation coefficient between the poststimulus activity evoked by two sequences at different lags relative to one another. The red curve of the Inset of F shows the average correlation coefficients at different lags across all pairwise comparisons of sequences across all sites (mean ± SE; n = 69 pairwise comparisons).
To gauge the significance of these correlations, we randomly shuffled (100 times) the short-term structure (“signal”) while retaining the long-term structure (“carrier”) of poststimulus activity for each sequence, and performed the same cross-covariance analysis (black curve; mean ± SE; n = 69). Specifically, we calculated the best-fitting exponential (carrier) to the mean (average across trials at a site) poststimulus activity for each sequence and determined the residuals (signal). We then randomly permuted the indices (timing) of the residuals for both sequences, added these randomized “signals” to the best-fit exponentials, and then calculated the cross-covariance. Both curves in F exhibit a prominent positive peak at zero lag with symmetric negative lobes, reflecting the exponentially decaying carrier. However, the peak values at zero lag were greater for the data relative to the shuffled control. Therefore, we measured the correlation coefficient around zero lag (±10 ms) for all pairwise comparisons for both real poststimulus activity as well as shuffle controls (average of values across different permutations for a pair) (F). The vast majority of points lie below the diagonal (dashed gray line), indicating the real correlations were greater than the shuffled correlations (real median = 0.59; shuffled median = 0.45; P < 10−10, Wilcoxon signed-rank test; n = 69). Together, these results indicate that the dynamics of poststimulus activity are consistently timed across playback of different sequences ending in the same last syllable, and that these features are present at single-unit sites.
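The carrier/signal shuffle described in this legend can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the exponential is fitted here by least squares on log-transformed activity (which requires strictly positive values), whereas the actual fitting procedure is specified in Materials and Methods; the function names and the toy trace are hypothetical.

```python
import math
import random


def fit_exponential(t, y):
    """Fit y ~ a * exp(slope * t) by least squares on log(y).

    Assumption for this sketch: all y values are strictly positive.
    """
    n = len(t)
    logs = [math.log(v) for v in y]
    mean_t = sum(t) / n
    mean_log = sum(logs) / n
    slope = (sum((ti - mean_t) * (li - mean_log) for ti, li in zip(t, logs))
             / sum((ti - mean_t) ** 2 for ti in t))
    a = math.exp(mean_log - slope * mean_t)
    return a, slope


def shuffle_signal(t, y, rng):
    """Keep the fitted exponential carrier; permute the timing of residuals."""
    a, slope = fit_exponential(t, y)
    carrier = [a * math.exp(slope * ti) for ti in t]
    residuals = [yi - ci for yi, ci in zip(y, carrier)]
    rng.shuffle(residuals)  # randomize the timing of the "signal" only
    return [ci + ri for ci, ri in zip(carrier, residuals)]


# Toy poststimulus trace (not real data): decaying carrier plus a fluctuation.
t = list(range(0, 100, 10))  # ms after stimulus offset
y = [10.0 * math.exp(-ti / 50.0) + 0.5 * math.sin(ti / 5.0) for ti in t]
shuffled = shuffle_signal(t, y, random.Random(0))
```

Because only the residual indices are permuted, each shuffled trace keeps the same slow decay and the same set of residual values as the original, so any drop in correlation between shuffled traces reflects the loss of consistent fine timing rather than a change in overall response magnitude.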

Temporal Tuning for Syllable Transitions Reflects the Expected Timing of Produced Intersyllable Gaps.

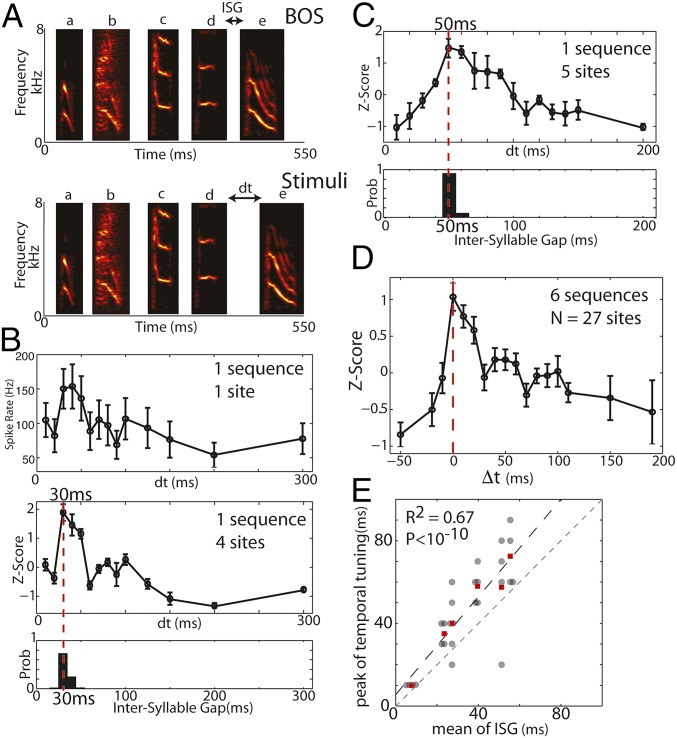

If the dynamics of HVC neural activity are structured by the temporal–sequential statistics of produced song, then auditory responses to sequences of syllables should be tuned to the specific timing of those sequences during song production. However, the degree to which the timing between syllables presented during auditory playback modulates HVC auditory responses is poorly understood. A previous study reported responses of a single HVC neuron that decayed monotonically with increasing time between syllables (39). Such a monotonically decreasing response might be explained simply if the response to delayed syllables in the sequence were superimposed on gradually decaying activity elicited by the preceding sequence. In contrast, we hypothesized that persistent HVC activity contains a more specific prediction of the timing at which subsequent syllables of song normally occur. To test for such a prediction, we played back segments of the BOS (three to five syllables) and varied the time between the last two syllables across a range that contained durations that were both shorter and longer than the mean ISG for that transition. For example, if a bird produced sequence “abcde” (Fig. 3A, Top), we played back the sequence “abcde,” and measured responses to “e” as a function of the gap between “d” and “e.” We asked whether responses to the delayed syllable depended nonmonotonically on the delay at which the syllable was played, and whether there was any relationship between the delay at which the strongest responses to the terminal syllable occurred and the duration of the ISG that normally preceded that syllable in song.

Fig. 3.

Temporal tuning of HVC auditory responses for syllable transitions reflects the expected timing of produced intersyllable gaps (ISGs). (A) Illustration of stimulus used to determine temporal tuning. To investigate whether the temporal tuning of HVC auditory responses to a syllable is matched to the statistics of song production, we presented sequences of syllables and varied the time between the last two syllables (Bottom) across values that were both shorter and longer than the average of naturally produced ISGs (Top). (B, Top) Average spike rate in response to the last syllable as a function of the temporal offset from the previous syllable (dt) at one site (mean ± SEM; n = 20). (Middle) Average responses across sites (z scores; mean ± SEM; n = 4 sites). (Bottom) The probability distribution of ISGs for this transition produced by the bird during singing. The temporal tuning exhibited a peak (time of peak demarcated by dashed red line) at 30 ms that was well aligned to the mean of the produced ISGs for that transition (32 ms). (C) Another example showing the response as a function of dt (Top: z scores; mean ± SEM; n = 5 sites) for a sequence of syllables with a longer produced ISG (Bottom). Here, the mean produced gap duration was 53 ms, and the temporal tuning exhibited a peak at 50 ms (dashed red line). (D) Mean z scores as a function of the difference between the presented ISG and the mean produced ISG for six transitions at 27 sites from three birds [Δt = dt-mean(ISG)]. Black curve: mean ± SEM, n = 27 sites. Vertical dashed red line demarcates Δt = 0. (E) Location of peaks in temporal tuning curves for individual sites and transitions as a function of the mean of the produced ISG distributions.
There was a strong correlation between the locations of the peaks of temporal tuning curves and the means of produced ISGs for the corresponding transitions (black dots: individual sites, small horizontal shift added to overlapping points; dashed black line is best linear fit, R2 = 0.67, P < 10−10, six transitions played at n = 27 sites; red squares: mean across sites for a transition, n = 6 transitions from three birds; R2 = 0.93, P < 0.002; dashed gray line is unity).

Fig. 3B illustrates temporal tuning for one transition. The top panel shows the response at one site to the last syllable as a function of the temporal offset (dt) from the previous syllable. The middle panel shows the average response across four sites. The probability distribution of produced ISGs for this transition is presented in the bottom panel. For this transition, we found that the peak in temporal tuning occurred at an interval of 30 ms, which was very close to the mean of the produced ISG distribution (32 ms). Fig. 3C presents results from a different bird for a transition where the mean of the produced ISGs (Bottom) was much longer. The peak in temporal tuning was again well aligned to the peak in the produced ISG distribution (Bottom).

Across all 27 sites in three birds at which a total of six transitions were tested, peak temporal tuning was well aligned to the mean duration of produced gaps. Fig. 3D summarizes temporal tuning for all sites as a function of time between the last two syllables relative to the mean produced ISG for associated transitions (Δt). The average temporal tuning function (n = 27 sites) exhibited a peak near Δt = 0, indicating that peak responses occur near the mean of produced ISG distributions. Indeed, across all sites, there was a strong positive correlation between the peak of the temporal tuning curve and the mean of the produced ISG distribution (Fig. 3E, black, n = 27 sites at which six transitions were played back; R2 = 0.67, P < 10−10; red, responses averaged across sites for n = 6 transitions from three birds; R2 = 0.93, P < 0.002). However, peak tuning was delayed by a small but significant amount (12 ± 3 ms) relative to the produced mean ISG for a transition (P < 10−3, t test, N = 27). As there is no physical energy in the stimulus during gaps to drive neural activity, the observed temporal tuning must result from dynamics of internally generated neural activity induced by preceding sequences. These results are consistent with the hypothesis that HVC dynamics are predictive for the expected timing of upcoming syllables.
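The quantities used in this comparison can be made concrete with a short sketch: locating the peak of a temporal tuning curve, expressing it relative to the mean produced ISG (Δt = dt − mean ISG), and computing R2 between peak locations and mean ISGs. The function names and all response values below are hypothetical; only the example peak and mean ISG values of 30 vs. 32 ms and 50 vs. 53 ms come from Fig. 3 B and C, and the third pair is invented so that the regression has more than two points.

```python
def peak_delay(delays_ms, responses):
    """Return the presented gap (ms) that evoked the largest mean response."""
    best = max(range(len(responses)), key=lambda i: responses[i])
    return delays_ms[best]


def r_squared(x, y):
    """Squared Pearson correlation, i.e., R2 of a simple linear fit of y on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


# Toy tuning curve (z scores are invented) peaking at the 30-ms gap.
delays_ms = [10, 20, 30, 40, 50, 60]
responses = [0.1, 0.6, 1.4, 0.9, 0.3, 0.0]
mean_isg_ms = 32.0  # mean produced ISG for the Fig. 3B example

peak = peak_delay(delays_ms, responses)
delta_t = peak - mean_isg_ms  # negative values mean the peak precedes the mean ISG

# Peak location vs. mean produced ISG; the last pair is hypothetical.
mean_isgs = [32.0, 53.0, 40.0]
peaks = [30.0, 50.0, 45.0]
print(peak, delta_t, r_squared(mean_isgs, peaks))
```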

Poststimulus Neural Activity Is Predictive of Upcoming Syllable Identity for Low-Uncertainty Transitions.

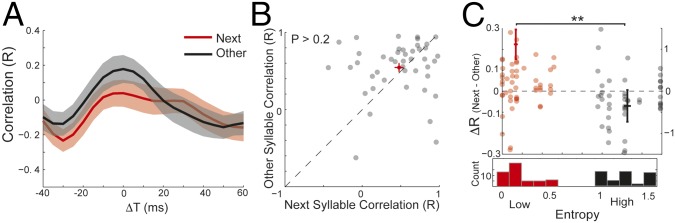

The observation of temporal tuning shaped by the timing of upcoming syllables suggests that HVC activity might also predict the identity of upcoming syllables. We tested the specificity of sequence-induced poststimulus activity for the next syllable in a sequence. Bf song contains transitions where the upcoming syllable is highly certain, and transitions where the upcoming syllable is uncertain. We quantified syllable transition uncertainty as conditional entropy: lower entropy corresponds to lower uncertainty in the identity of upcoming syllables. Transitions exhibited a bimodal distribution of entropies, which was perfectly separable (i.e., completely nonoverlapping, area under receiver operating characteristic curve = 1) at 0.75 bits; we therefore split the data into sequences ending in “low entropy” (<0.75 bits) or “high entropy” (>0.75 bits) transitions. We hypothesized that poststimulus activity induced by sequences ending in low-entropy transitions would be predictive of upcoming syllables. To test this hypothesis, we played back short sequences of syllables (three to six syllables) from BOS, as well as individual syllables in isolation (in total, 88 sequences were played back, 44 low entropy and 44 high entropy) at 18 multiunit sites in seven birds, and responses to identical stimuli were averaged across multiple sites within a bird. For the example in Fig. 4A, syllable “e” always followed the sequence “abcd” during song production, and so the transition from “d–e” was low entropy. Fig. 4A illustrates the average auditory response evoked by playback of sequence “abcd,” and the induced poststimulus activity. The average single-syllable response to “e” is presented above the period when it would have occurred had the sequence “abcde” been presented. 
Poststimulus activity induced by “abcd” was qualitatively similar to the response evoked by syllable “e,” raising the possibility that HVC activity following termination of the sequence “abcd” anticipates, or “predicts,” the specific temporal structure of activity elicited by the next syllable in song (in this case, “e”).
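For concreteness, the conditional entropy of a transition can be computed from the forward transition probabilities as H = −Σ p log2 p, summed over the possible next syllables. The sketch below applies this formula and the 0.75-bit split described above; the function names and the example probabilities are hypothetical, and assigning a value of exactly 0.75 bits to the high-entropy group is an arbitrary choice made here because the text does not specify that case.

```python
import math


def transition_entropy(next_probs):
    """Entropy (bits) of the next-syllable distribution for one transition."""
    return sum(-p * math.log2(p) for p in next_probs.values() if p > 0)


def entropy_group(entropy_bits, threshold=0.75):
    """Label a transition using the 0.75-bit split described in the text.

    Treating exactly 0.75 bits as "high" is an assumption of this sketch.
    """
    return "low" if entropy_bits < threshold else "high"


# Hypothetical next-syllable probabilities for three transitions.
deterministic = {"e": 1.0}          # one possible next syllable
mostly_certain = {"e": 0.9, "f": 0.1}
divergent = {"e": 0.5, "f": 0.5}    # two equally likely next syllables

for name, dist in [("deterministic", deterministic),
                   ("mostly_certain", mostly_certain),
                   ("divergent", divergent)]:
    h = transition_entropy(dist)
    print(name, round(h, 3), entropy_group(h))
```

A transition with a single possible successor has zero entropy, whereas two equally likely successors give exactly 1 bit, which falls on the high-entropy side of the split.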

We quantified similarity between poststimulus activity and single-syllable response waveforms evoked by the next syllable(s) in the sequence using a sliding covariance analysis (Materials and Methods). This measures the similarity between single-syllable responses and poststimulus activity of the same duration. To determine the time course of these correlations, we carried out this analysis over a range of temporal lags spanning the expected onset of the next syllable(s) (Materials and Methods). To determine selectivity of poststimulus activity for the identity of the next syllable(s), we compared next-syllable correlations with correlations between poststimulus activity and responses to other syllables in the bird’s repertoire (i.e., those that did not follow the presented sequences during song production). For a given sequence, if there were multiple “next” syllables, each contributed a data point, whereas the correlations for all “other” syllables were averaged together.
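One way to picture this analysis is as a Pearson correlation between the single-syllable response and an equal-length window of poststimulus activity, recomputed as the window slides across lags. The sketch below is an assumption-laden illustration rather than the procedure in Materials and Methods: the function names, the choice to return 0 for windows with zero variance, and the toy traces are all hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either input has zero variance.

    Subtracting each window's own mean removes the local mean activity.
    """
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def sliding_correlation(poststim, template):
    """Correlate `template` with every equal-length window of `poststim`."""
    width = len(template)
    return [pearson(poststim[lag:lag + width], template)
            for lag in range(len(poststim) - width + 1)]


# Toy traces (not real data): the template is embedded at lag 3.
template = [1.0, 3.0, 2.0]                              # single-syllable response
poststim = [0.0, 0.0, 0.0, 1.0, 3.0, 2.0, 0.0, 0.0]     # poststimulus activity

corr = sliding_correlation(poststim, template)
best_lag = max(range(len(corr)), key=lambda i: corr[i])
print(best_lag, corr[best_lag])
```

In the toy example the correlation peaks at the lag where the template was embedded; in the analysis described above, the corresponding time course is expressed relative to the expected onset of the next syllable.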

Across all low-entropy transitions, poststimulus activity was more similar to responses for next syllable(s) than for other syllables. Fig. 4B presents the average correlation (relative to the mean expected timing, ΔT = 0) comparing poststimulus activity to evoked single-syllable responses for the next syllable(s) in the sequence and other syllables. Correlations for next syllables (red) were greater than the correlations for other syllables (black). Relative to the expected timing (ΔT = 0), the peak of the average correlation for next syllables occurred with a delay of 7 ms, and the median of peaks for individual correlations was delayed by 9 ms (Fig. 4B, N = 44). These delays were similar to the delay observed for temporal tuning (12 ms; Fig. 3). The maximum correlation coefficients for next syllables (extracted between −20 and 40 ms after the offset of the stimulus sequence) were significantly greater than for other syllables (Fig. 4C, P < 10−4, Wilcoxon signed-rank test; n = 44 syllables). These results indicate that the dynamics of poststimulus neural activity in HVC are predictive of upcoming syllable identity.

Uncertainty of Produced Sequences Modulates the Degree to Which Poststimulus Activity Is Predictive of Upcoming Syllables.

We next used the diversity of transition uncertainties found in Bf song sequences to examine whether predictive strength of HVC poststimulus activity is modulated by the uncertainty of upcoming syllables. We hypothesized that poststimulus activity induced by sequences ending in uncertain transitions would be less predictive of upcoming syllables than for sequences ending in more certain transitions. Fig. 5A presents for high-entropy transitions (>0.75 bits) average correlations between poststimulus activity and evoked single-syllable responses for the next syllable(s) in the sequence (red) and the other syllables (black). Here, maximum correlation coefficients were statistically indistinguishable between next and other syllables (Fig. 5B, P > 0.3, Wilcoxon signed-rank test, n = 44 syllables; red cross is mean ± SEM). Thus, for high-entropy (i.e., uncertain) transitions, unlike the case for low-entropy transitions, we did not detect selectivity of poststimulus activity for upcoming syllable identity.

Fig. 5.

Uncertainty of produced sequences modulates the degree to which poststimulus activity is predictive of upcoming syllables. (A) Time course of correlation coefficients comparing poststimulus activity to evoked single-syllable responses for the next syllable in the sequence (red) and other syllables in the bird’s repertoire (black) (mean ± SEM; n = 44). ΔT = 0 is the average expected time of next syllables. (B) In contrast to results for low-entropy transitions, for high-entropy transitions we found that maximum correlation coefficients were statistically indistinguishable between next and other syllables (P > 0.3, Wilcoxon signed-rank test, n = 44 syllables; black circles are individual syllables, and red is mean ± SEM). (C) Transition uncertainty modulates the degree to which poststimulus activity is predictive of upcoming syllables. Difference in correlation coefficients (ΔR) between next and other syllables was significantly larger for low-entropy transitions than for high-entropy transitions (**P = 0.006, Wilcoxon signed-rank test; n = 44 syllables for both).

To directly examine how predictive strength differed between low- and high-entropy sequences, we quantified predictive strength as the difference between maximum next-syllable correlation coefficients and maximum other-syllable correlation coefficients (ΔR). The histogram at Bottom of Fig. 5C plots transition entropies for all tested sequences. The entropies formed a bimodal distribution, with a large margin between the low-entropy (red) and high-entropy (black) modes. A paired comparison of predictive strength for low- and high-entropy transitions indicated that predictive strength was larger for low-entropy transitions (0.224 ± 0.071; mean ± SEM; thick red line) than for high-entropy transitions (−0.070 ± 0.076; mean ± SEM; thick black line) (Fig. 5C, numerical values on left ordinate, **P = 0.006, Wilcoxon signed-rank test, n = 44 sequences for both low and high entropy). Furthermore, average predictive strength was greater for low-entropy transitions than for high-entropy transitions in five out of seven individual birds. These results were further confirmed by regressing ΔR against transition entropy for individual transitions (R = −0.28, P = 0.006, n = 88; Fig. 5C, colored dots, numerical values indicated on right ordinate). There were no significant differences in responses to the last syllable between the high- and low-entropy sequences (Fig. S2). These results demonstrate that transition entropy modulates the predictive strength of poststimulus activity in HVC; low-entropy transitions induced poststimulus activity that was more similar to the single-syllable responses for next syllables than other syllables, whereas high-entropy transitions did not. Correspondingly, predictive strength (ΔR) was significantly greater for low-entropy than for high-entropy sequences.
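The predictive-strength measure can be written out as a small worked example: ΔR is the maximum of the next-syllable correlation time course minus the maximum of the other-syllable time course, where the other-syllable time course is the average across all other syllables. The function name and the correlation values below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def predictive_strength(next_corr, other_corrs):
    """Delta R: max next-syllable correlation minus max of the averaged
    other-syllable correlation time course."""
    n_other = len(other_corrs)
    # Average the "other" syllables' time courses point by point.
    other_mean = [sum(vals) / n_other for vals in zip(*other_corrs)]
    return max(next_corr) - max(other_mean)


# Toy correlation time courses (three lags each; not real data).
next_corr = [0.0, 0.5, 0.25]
other_corrs = [
    [0.0, 0.25, 0.0],
    [0.5, 0.25, 0.0],
]

delta_r = predictive_strength(next_corr, other_corrs)
print(delta_r)
```

A positive ΔR indicates that poststimulus activity resembled the response to the next syllable more than responses to other syllables, and a value near zero indicates no such selectivity.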

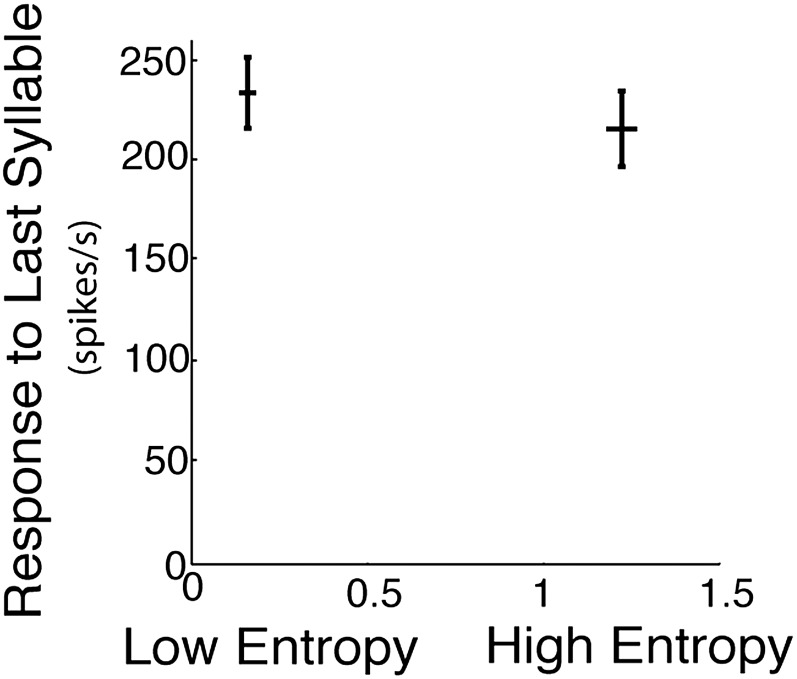

Fig. S2.

Responses to the last syllable in the low- and high-entropy sequences were not significantly different. We tested whether the responses to the last syllables in the low- and high-entropy sequences were different. The data in this figure are presented as mean ± SE. Low entropy: 230 ± 21; high entropy: 214 ± 23; P > 0.4, paired t test; n = 44 for each. Therefore, it is unlikely that the differences in predictive strength observed in Fig. 5C result from differences in responses to last syllables. Indeed, our calculation of local covariance explicitly removes the local mean activity in the examined poststimulus activity.

Discussion

An individual’s ability to form statistical predictions of sequential events implies that neural circuits must be able to leverage information about past experience to predict future events (1–4, 8–10, 12, 16, 17, 20–25). We used playback of birdsong to investigate whether and how activity in sensorimotor nucleus HVC encodes information about song sequence statistics to predict upcoming sensorimotor events. We found that song playback induces ongoing activity in HVC predictive of the timing and identity of upcoming syllables, and that the strength of this predictive activity depends on the certainty with which upcoming syllables occur.

A previous study examining the spectrotemporal selectivity of HVC auditory responses reported one neuron for which responses to terminal syllables decreased monotonically as the delay between syllables was progressively increased (39). However, that study used stimuli with timing between syllables that only exceeded the produced ISGs and therefore was not designed to sample the range of timing delays required to detect a correspondence between temporal tuning and produced gap durations. In contrast, we found that responses to playback of syllable sequences did not decay monotonically as a function of delay, but rather were greatest when those sequences were presented with ISGs that matched those normally produced during singing. Thus, in HVC, the peak of temporal tuning is correlated with the expected value of the produced ISG distributions for specific transitions. Because there is no physical energy in the stimulus during the time between syllables, this temporal tuning for specific syllable transitions must arise from dynamics of internally generated neural activity, and is consistent with the hypothesis that the neural dynamics in HVC are predictive of the temporal statistics of song.

We also tested whether poststimulus activity induced by playback of syllable sequences resembled single-syllable responses evoked by the next syllables in the sequence. Previous studies in the zebra finch characterized “temporal combination-selective” cells, in which the response of a neuron to playback of two normally ordered syllables is supralinear relative to responses to the syllables in isolation, or in abnormal order (37, 39). Similarly, in the Bf, HVC responses exhibit long-time, probability-dependent sequence integration (42). These forms of temporal integration suggest that neural activity following offset of a particular sequence can persist until the presentation of the next syllable in the sequence. Moreover, enhanced responses to normally ordered syllables suggest that such neural activity includes some prediction of the identity of upcoming syllables. Indeed, playback of normally sequenced elements of the BOS can evoke patterns of activity that resemble those present during singing (43). Here, we found that, for low-uncertainty transitions, poststimulus activity following a sequence of syllables resembles the temporal structure of activity that would normally be elicited by playback of the next syllable in the sequence. Hence, when the next syllable is predictable with greater certainty, neural responses are predictive of that syllable. Moreover, in agreement with the intuition that more uncertain transitions should give rise to weaker predictions about upcoming syllables, poststimulus activity induced by low-entropy transitions was more selective for upcoming syllables than poststimulus activity induced by high-entropy transitions.

Together, these results demonstrate that the dynamics of internally generated neural activity are predictive of the identity of upcoming syllables, and that the uncertainty of a given transition modulates the strength of this prediction. This predictive activity may, in part, contribute to long-time probability-dependent sequence integration (42), by providing a “trace” of the preceding sequence and a prediction of the upcoming syllable. Specifically, incoming sensory signals from a syllable may be integrated with “reverberating” activity evoked by the preceding sequence. Interestingly, distorted auditory feedback during singing has a larger effect on sequence production and HVC neural activity at more entropic transitions (35). This could reflect differences in the strength of “prediction” for upcoming syllables in ongoing activity, which might promote the corresponding, highest probability transitions. Given the strong sensory-motor correspondences in HVC, the entropy-dependent, predictive poststimulus activity demonstrated here could be a basis for biasing upcoming transitions and for the differential effects of distorted auditory feedback on higher vs. lower entropy transitions.

Although our results derive from recordings in HVC, the mechanisms that underlie generation of predictive activity are likely distributed across multiple nuclei. The network mechanisms underlying syllable sequencing include contributions from local microcircuits in HVC (31–36, 44), reefferent activity through extended brain circuits (e.g., HVC → RA → … → HVC) (45), and activation due to sensory feedback (29, 35, 46). Our observation that the dynamics of poststimulus activity were slowed by ∼10 ms relative to the expected timing of song [for both temporal tuning (Fig. 3 D and E) and peak predictive strength (Fig. 4B)] also suggests that the song generation network is in a different state during singing, perhaps resulting from different neuromodulatory tone (47), or from additional excitatory inputs that are not activated during playback experiments (32–35, 45).

Our findings have similarities to observations in other systems in which neural circuitry encodes information about the statistics of experience. For example, in the visual system of mice, spatiotemporal patterns of spontaneous neural activity resemble those evoked by visual stimuli for a period immediately following stimulus presentation (48). Similarly, spontaneous activity in the experienced ferret visual system can exhibit greater similarities to activity evoked by natural movies than in naïve ferrets, suggesting that spontaneous activity reflects prior natural sensory experience (49). Moreover, the hippocampus in many species has been implicated in both the encoding and recall of memory episodes, and spontaneous hippocampal neural dynamics have been associated with sequence prediction (23, 24, 26, 28). These observations and others indicate that prior experiences can shape predictive neural activity, as we have observed here. Our results additionally indicate that such predictive neural activity reflects the strength of statistical regularities of specific events in an individual’s long-term experiences. We have provided converging lines of evidence indicating that playback of sequences of syllables induces internally generated neural activity, the dynamics of which are predictive of the learned temporal–sequential statistics of specific sensory-motor events from long-term exposure. Our results derive from a sensorimotor circuit specialized for perception and production of song. Hence, it remains to be determined whether there is similar encoding of stimulus specific predictions in other systems. However, the utility of statistical predictions for a variety of neural computations and the ability of Hebbian learning to engrain these statistics in neural circuits (50) suggest that similar properties of neural dynamics may be a general feature of sensory and motor circuits.

Materials and Methods

Twelve male Bfs (age, >110 d) were used in this study. During experiments, birds were housed individually in sound-attenuating chambers (Acoustic Systems), and food and water were provided ad libitum. Acute electrophysiology procedures, as previously described (42), were performed in accordance with established animal care protocols approved by the University of California, San Francisco, Institutional Animal Care and Use Committee. Birds used in this study also contributed data to other studies (42, 46, 50). See SI Materials and Methods for further details.

SI Materials and Methods

Animals.

Twelve adult male Bengalese finches (age, >110 d) were used in this study. During the experiments, birds were housed individually in sound-attenuating chambers (Acoustic Systems), and food and water were provided ad libitum. The 14:10 light/dark photocycles were maintained during development and throughout all experiments. Birds were raised with a single tutor. All procedures were performed in accordance with established animal care protocols approved by the University of California, San Francisco, Institutional Animal Care and Use Committee. The birds used in this study have also contributed data to other studies (42, 46, 50).

Electrophysiology.

Several days before the first experiment was conducted on a bird, a small preparatory surgical procedure was performed. Briefly, birds were anesthetized with Equithesin, a patch of scalp was excised, and the top layer of skull was removed over the caudal part of the midsagittal sinus, where it branches, as well as 0.2 mm rostral, 1.9 mm lateral of the branch point. A metallic stereotaxic pin was secured to the skull with epoxy, and a grounding wire was inserted under the dura. For neural recordings, birds were placed in a large sound-attenuating chamber (Acoustic Systems) and stereotaxically fixed via the previously implanted pin. For the first penetration into a hemisphere, the second layer of skull was removed, the dura was retracted, and polydimethylsiloxane (Sigma) was applied to the craniotomy. Using the caudal branch point of the midsagittal sinus as a landmark, high-impedance (>5-MΩ) single-channel sharp tungsten electrodes were inserted at stereotaxic coordinates for HVC (see coordinates above). At the end of a recording session, craniotomies were cleaned and bone wax was used to cover the brain. For subsequent penetrations into a previously exposed hemisphere, bone wax was removed and dura was retracted.

During electrophysiological recordings, birds were sedated by titrating various concentrations of isoflurane in O2 using a nonrebreathing anesthesia machine (VetEquip). Typical isoflurane concentrations were between 0.0125% and 0.7%, and were adjusted to maintain a constant level of sedation. Sedation was defined as a state in which the bird's eyes were closed and neural activity exhibited high rates of spontaneous bursts and maintained responsiveness to auditory stimuli. Within this range, both bursting baseline activity and highly selective BOS responses were similar to those previously found in the sleeping bird or in urethane-anesthetized birds. In one experiment, urethane was used instead of isoflurane. The results of this experiment were qualitatively similar to those of nonurethane experiments and were pooled with the rest of the data. Recordings were performed in sedated, head-fixed birds to achieve stable neuronal recordings in a controlled environment for the long durations required of the experimental paradigm. This preparation allowed for multiple experiments to be conducted in the same bird over the course of several weeks. Throughout the experiment, the state of the bird was gauged by visually monitoring the eyes and respiration rate using an IR camera. Body temperature was thermostatically regulated (39 °C) through a heating blanket and custom equipment. Sites within HVC were at least 100 μm apart and were identified based on stereotaxic coordinates, baseline neural activity, and auditory response properties. Experiments were controlled and neural data were collected using in-house software (Krank; B. D. Wright and D. Schleef, University of California, San Francisco). Electrophysiological data were amplified with an AM Systems amplifier (1,000×), filtered (300–10,000 Hz), and digitized at 32,000 Hz.

Spike Sorting and Calculation of Instantaneous Firing Rates.

Single units were identified as events exceeding 6 SDs from the mean and/or were spike sorted using in-house software based on a Bayesian inference algorithm, described previously (35). For single units, <1% of interspike intervals were <1 ms. Multiunit neural data were thresholded to detect spikes more than 3 SDs away from the mean. Both single-unit and multiunit spike times were binned into 5-ms compartments and then smoothed using a truncated Gaussian kernel with an SD of 2.5 ms and a total width of 5 ms.
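The multiunit thresholding and binning steps can be sketched as follows. This is a simplified illustration, not the in-house software used in the study: the function names are hypothetical, a spike is taken to be the first sample of each supra-threshold excursion (an assumption), and the Gaussian smoothing step is omitted.

```python
import statistics


def detect_spikes(trace, fs_hz, n_sd=3.0):
    """Return times (s) at which |trace - mean| first exceeds n_sd standard deviations."""
    mean = statistics.fmean(trace)
    sd = statistics.pstdev(trace)
    above = [abs(v - mean) > n_sd * sd for v in trace]
    # Keep only the first sample of each supra-threshold excursion (assumption).
    return [i / fs_hz for i in range(len(trace))
            if above[i] and (i == 0 or not above[i - 1])]


def bin_spikes(spike_times, duration_s, bin_s=0.005):
    """Count spikes in consecutive bins and convert the counts to rates (spikes/s)."""
    n_bins = int(round(duration_s / bin_s))
    counts = [0] * n_bins
    for t in spike_times:
        idx = int(t / bin_s)
        if 0 <= idx < n_bins:
            counts[idx] += 1
    return [c / bin_s for c in counts]


# Synthetic 10-ms trace sampled at 32 kHz with two deflections (not real data).
trace = [0.0] * 320
trace[40] = trace[41] = 10.0   # one positive excursion spanning two samples
trace[200] = -10.0             # one negative excursion
spike_times = detect_spikes(trace, fs_hz=32000)
rates = bin_spikes(spike_times, duration_s=0.01)
print(spike_times, rates)
```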

Playback of Auditory Stimuli.

Stimuli were bandpass filtered between 300 and 8,000 Hz and normalized such that BOS playback through a speaker placed 90 cm from the head had an average sound pressure level of 80 dB at the head (A scale). Each stimulus was preceded and followed by 0.5–1 s of silence, and a cosine-modulated ramp was used to transition from silence to sound. The power spectrum varied less than 5 dB across 300–8,000 Hz for white-noise stimuli. All stimuli were presented pseudorandomly. Each stimulus was presented between 10 and 50 times per recording site. Different recording sites from the same bird could be collected in the same or different recording sessions.

Song Collection and Analysis.

All methods for behavioral data collection and analyses have been described previously (42). Briefly, we collected and analyzed the songs of Bengalese finches recorded in sound-attenuating chambers (Acoustic Systems) and hand labeled the syllables from 15 to 50 bouts of recorded song using custom-written software in Matlab. We calculated several features of the temporal–sequential structure of song: the intersyllable gaps (ISGs), forward transition probabilities, and corresponding entropies.

ISGs.

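The ISG between syllable $s_i$ transitioning to syllable $s_j$ [ISG($s_i$, $s_j$)] is the time between the acoustic offset of $s_i$ ($T_{\mathrm{off}}$) and the acoustic onset of $s_j$ ($T_{\mathrm{on}}$):

$$\mathrm{ISG}(s_i, s_j) = T_{\mathrm{on}}(s_j) - T_{\mathrm{off}}(s_i) \qquad [\mathrm{S1}]$$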
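A minimal sketch of the ISG computation, assuming hand-labeled syllables are available as parallel lists of labels, onset times, and offset times (a hypothetical data layout; the function name is also illustrative):

```python
def intersyllable_gaps(labels, onsets, offsets):
    """Map each (s_i, s_j) transition to its list of gaps: onset of s_j minus offset of s_i."""
    gaps = {}
    for k in range(len(labels) - 1):
        pair = (labels[k], labels[k + 1])
        gaps.setdefault(pair, []).append(onsets[k + 1] - offsets[k])
    return gaps


# Hypothetical three-syllable bout; times are in seconds.
labels = ["a", "b", "c"]
onsets = [0.00, 0.15, 0.30]
offsets = [0.10, 0.25, 0.40]
gaps = intersyllable_gaps(labels, onsets, offsets)
print(gaps)
```

Collecting the gaps per transition, rather than per syllable, keeps the ISG distribution specific to each syllable pair.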

Forward transition probability.

The forward transition probability is the conditional probability of transitioning from syllable $s_i$ at time $t$ to syllable $s_j$ at time $t + 1$:

$$P(s_j \mid s_i) = P\big(s(t+1) = s_j \mid s(t) = s_i\big) \qquad [\mathrm{S2}]$$

Note that we have previously called the forward transition probability the divergence probability (42).

Conditional entropy.

The conditional entropy for the transition from syllable $s_i$ is the entropy of the forward transition probabilities, conditioned on $s_i$:

$$H(s_i) = -\sum_j P(s_j \mid s_i)\,\log P(s_j \mid s_i) \qquad [\mathrm{S3}]$$
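The forward transition probabilities and conditional entropies defined above can be estimated from a labeled syllable sequence roughly as follows. This is a sketch under stated assumptions: the function names are hypothetical, probabilities are estimated as simple relative frequencies, and the logarithm is taken in base 2 (entropy in bits), which is an assumption of this example.

```python
import math
from collections import Counter, defaultdict


def forward_transition_probabilities(labels):
    """Estimate P(s_j | s_i) as relative frequencies of observed transitions."""
    counts = defaultdict(Counter)
    for cur, nxt in zip(labels, labels[1:]):
        counts[cur][nxt] += 1
    return {cur: {nxt: n / sum(c.values()) for nxt, n in c.items()}
            for cur, c in counts.items()}


def conditional_entropy(probs_from_si):
    """Entropy (bits, by assumption) of the transition distribution out of one syllable."""
    return -sum(p * math.log2(p) for p in probs_from_si.values() if p > 0)


# Hypothetical label sequence: 'a' branches to 'b' or 'c'; 'b' and 'c' return to 'a'.
labels = list("abacabac")
probs = forward_transition_probabilities(labels)
entropy_a = conditional_entropy(probs["a"])
entropy_b = conditional_entropy(probs["b"])
print(probs["a"], entropy_a, entropy_b)
```

In this toy sequence, the transition out of "a" is maximally uncertain between two outcomes, whereas the transition out of "b" is deterministic.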

Stimulus Creation.

As noted above, Bengalese finch song consists of many acoustically distinct syllables separated by periods of silence, called ISGs. Over several bouts of singing, a bird will produce hundreds of examples of each of these syllables, and each example, although identifiable as a particular syllable, will have subtle acoustic differences. Our goal was to examine how the timing between syllables modulated auditory responses to individual syllables, and to examine the similarity of poststimulus activity induced by BOS sequence playback relative to the auditory responses to single syllables. To remove potential effects of different acoustics associated with the same syllable in different contexts, the stimuli in this study, other than the BOS stimuli, consisted of one representative exemplar of each syllable selected from among those naturally produced by the bird. We selected exemplar syllables as described previously (42).

Characterization of Poststimulus Activity Induced by Playback of BOS and rBOS.

To compare poststimulus activity (PSA) induced by BOS playback to PSA induced by rBOS playback, we first fit an exponentially decaying function to a smoothed version (50-ms box car kernel) of the first 1 s of PSA for each stimulus and site:

$$\mathrm{PSA}(t) = A\,e^{-t/\tau} + B \qquad [\mathrm{S4}]$$

where B corresponds to the baseline level of activity at asymptote, A is the initial amplitude of the PSA, less the asymptotic baseline, and τ is the decay time constant. The parameters of this model were found by minimizing the RMS difference between the model output and the data using the nlinfit function in Matlab. The amplitude of PSA was computed as A + B from the above fits for each site and stimulus.
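The exponential-decay fit described above can be illustrated with a dependency-free sketch. Note that this example swaps in a different technique from the one used in the study: instead of a general nonlinear least-squares routine such as Matlab's nlinfit, it scans a grid of candidate time constants and, for each one, solves for the amplitude and baseline in closed form by linear regression. The function name, the grid, and the synthetic data are assumptions.

```python
import math


def fit_exponential_decay(t, y, taus):
    """Fit y ~ A * exp(-t / tau) + B by scanning tau and solving A, B in closed form.

    Returns (A, B, tau, rms). The grid search stands in for a general
    nonlinear least-squares routine; it is not the procedure of the study.
    """
    n = len(t)
    best = None
    for tau in taus:
        x = [math.exp(-ti / tau) for ti in t]
        mx = sum(x) / n
        my = sum(y) / n
        sxx = sum((xi - mx) ** 2 for xi in x)
        sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
        a = sxy / sxx      # amplitude above the asymptotic baseline
        b = my - a * mx    # asymptotic baseline
        rms = math.sqrt(sum((a * xi + b - yi) ** 2 for xi, yi in zip(x, y)) / n)
        if best is None or rms < best[3]:
            best = (a, b, tau, rms)
    return best


# Synthetic, noiseless PSA over 1 s in 5-ms steps (not real data).
t = [i * 0.005 for i in range(200)]
y = [40.0 * math.exp(-ti / 0.2) + 10.0 for ti in t]
candidate_taus = [0.01 * k for k in range(1, 101)]  # 10 ms to 1 s
a_hat, b_hat, tau_hat, rms = fit_exponential_decay(t, y, candidate_taus)
psa_amplitude = a_hat + b_hat  # amplitude of PSA, computed as A + B
print(a_hat, b_hat, tau_hat, psa_amplitude)
```

The resolution of the recovered time constant is limited by the grid spacing; a finer grid or a subsequent nonlinear refinement would be needed for precise estimates on noisy data.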

Calculation of Local Correlation Coefficients Comparing Single-Syllable Responses to Sequence-Induced Poststimulus Activity.

For each of the syllables and sequences that went into the analysis presented in Figs. 4 and 5, we averaged responses to identical auditory stimuli across multiple sites within a bird. We defined the responses to single syllables as the response to the syllable presented in isolation, beginning at acoustic onset and extending 15 ms after sound offset (to account for response delays). We compared the temporal similarity of sequence-induced poststimulus activity and single-syllable evoked responses [SSR] using a local covariance method. Starting from the onset of the poststimulus activity, we extracted segments of the same duration as the single-syllable response (T) being compared. The correlation coefficient between the single-syllable response [SSR] and the segment of poststimulus activity [PSA(t:t + T)] was calculated as follows:

$$R(t) = \frac{\mathrm{cov}\big(\mathrm{SSR},\ \mathrm{PSA}(t:t+T)\big)}{\sqrt{\sigma_{\mathrm{SSR}}\,\sigma_{\mathrm{PSA}(t:t+T)}}} \qquad [\mathrm{S5}]$$

where σ denotes the variance of the associated signal. By successively shifting the extracted segment of poststimulus activity by one time bin, we investigated how the temporal structure of poststimulus activity covaried with the single-syllable responses, while controlling for both segment length and differences in the time-varying local mean activity of PSA.
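The sliding-window comparison described above can be sketched as follows: for each shift, the segment of poststimulus activity with the same length as the single-syllable response is correlated with that response, with each segment's own mean removed. The function names and the synthetic traces are assumptions for illustration only.

```python
import math


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences; NaN if either is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return float("nan")
    return cov / math.sqrt(vx * vy)


def local_correlation(single_syllable_response, psa):
    """Correlate the single-syllable response with every same-length PSA segment."""
    T = len(single_syllable_response)
    return [pearson_r(single_syllable_response, psa[t:t + T])
            for t in range(len(psa) - T + 1)]


# Synthetic traces: a scaled, offset copy of the template is embedded at shift 3.
template = [1.0, 4.0, 2.0, 0.5]
psa = [3.0, 3.0, 3.5] + [2.0 * v + 10.0 for v in template] + [3.0, 2.0]
r = local_correlation(template, psa)
best_shift = max(range(len(r)), key=lambda i: r[i])
print(best_shift, r[best_shift])
```

Because the mean of each extracted segment is subtracted before correlating, a segment that matches the template in shape yields a high coefficient regardless of its overall firing-rate level or gain, as with the embedded copy in this example.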

Acknowledgments

This work was supported by the Howard Hughes Medical Institute, National Institutes of Health Grant DC006636 (to M.S.B.), National Science Foundation Grant IOS0951348 (to M.S.B.), and a National Science Foundation predoctoral award (to K.E.B.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1606725113/-/DCSupplemental.

References

- 1. Lashley KS. The problem of serial order in behavior. In: Jeffress LA, editor. Cerebral Mechanisms in Behavior. Wiley; New York: 1951. pp. 112–147.

- 2. Todorov E, Jordan MI. Optimal feedback control as a theory of motor coordination. Nat Neurosci. 2002;5(11):1226–1235. doi: 10.1038/nn963.

- 3. Hahnloser RHR, Kozhevnikov AA, Fee MS. An ultra-sparse code underlies the generation of neural sequences in a songbird. Nature. 2002;419(6902):65–70. doi: 10.1038/nature00974.

- 4. Bouchard KE, Chang EF. Control of spoken vowel acoustics and the influence of phonetic context in human speech sensorimotor cortex. J Neurosci. 2014;34(38):12662–12677. doi: 10.1523/JNEUROSCI.1219-14.2014.

- 5. Bogacz R, Brown E, Moehlis J, Holmes P, Cohen JD. The physics of optimal decision making: A formal analysis of models of performance in two-alternative forced-choice tasks. Psychol Rev. 2006;113(4):700–765. doi: 10.1037/0033-295X.113.4.700.

- 6. Peña M, Bonatti LL, Nespor M, Mehler J. Signal-driven computations in speech processing. Science. 2002;298(5593):604–607. doi: 10.1126/science.1072901.

- 7. Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274(5294):1926–1928. doi: 10.1126/science.274.5294.1926.

- 8. Yang T, Shadlen MN. Probabilistic reasoning by neurons. Nature. 2007;447(7148):1075–1080. doi: 10.1038/nature05852.

- 9. Hollerman JR, Schultz W. Dopamine neurons report an error in the temporal prediction of reward during learning. Nat Neurosci. 1998;1(4):304–309. doi: 10.1038/1124.

- 10. Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299(5614):1898–1902. doi: 10.1126/science.1077349.

- 11. Wiener N. Cybernetics. The MIT Press; Cambridge, MA: 1961.

- 12. Sugrue LP, Corrado GS, Newsome WT. Matching behavior and the representation of value in the parietal cortex. Science. 2004;304(5678):1782–1787. doi: 10.1126/science.1094765.

- 13. Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- 14. Barlow HB. Possible principles underlying the transformation of sensory messages. In: Rosenblith WA, editor. Sensory Communication. MIT Press; Cambridge, MA: 1961. pp. 217–234.

- 15. Olshausen BA, Field DJ. Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature. 1996;381(6583):607–609. doi: 10.1038/381607a0.

- 16. Hosoya T, Baccus SA, Meister M. Dynamic predictive coding by the retina. Nature. 2005;436(7047):71–77. doi: 10.1038/nature03689.

- 17. Rao RP, Ballard DH. Predictive coding in the visual cortex: A functional interpretation of some extra-classical receptive-field effects. Nat Neurosci. 1999;2(1):79–87. doi: 10.1038/4580.

- 18. Knill DC, Pouget A. The Bayesian brain: The role of uncertainty in neural coding and computation. Trends Neurosci. 2004;27(12):712–719. doi: 10.1016/j.tins.2004.10.007.

- 19. Bach DR, Dolan RJ. Knowing how much you don’t know: A neural organization of uncertainty estimates. Nat Rev Neurosci. 2012;13(8):572–586. doi: 10.1038/nrn3289.

- 20. DeLong KA, Urbach TP, Kutas M. Probabilistic word pre-activation during language comprehension inferred from electrical brain activity. Nat Neurosci. 2005;8(8):1117–1121. doi: 10.1038/nn1504.

- 21. Diba K, Buzsáki G. Forward and reverse hippocampal place-cell sequences during ripples. Nat Neurosci. 2007;10(10):1241–1242. doi: 10.1038/nn1961.

- 22. Leaver AM, Van Lare J, Zielinski B, Halpern AR, Rauschecker JP. Brain activation during anticipation of sound sequences. J Neurosci. 2009;29(8):2477–2485. doi: 10.1523/JNEUROSCI.4921-08.2009.

- 23. Lisman J, Redish AD. Prediction, sequences and the hippocampus. Philos Trans R Soc Lond B Biol Sci. 2009;364(1521):1193–1201. doi: 10.1098/rstb.2008.0316.

- 24. Pastalkova E, Itskov V, Amarasingham A, Buzsáki G. Internally generated cell assembly sequences in the rat hippocampus. Science. 2008;321(5894):1322–1327. doi: 10.1126/science.1159775.

- 25. Zhou X, de Villers-Sidani E, Panizzutti R, Merzenich MM. Successive-signal biasing for a learned sound sequence. Proc Natl Acad Sci USA. 2010;107(33):14839–14844. doi: 10.1073/pnas.1009433107.

- 26. Davidson TJ, Kloosterman F, Wilson MA. Hippocampal replay of extended experience. Neuron. 2009;63(4):497–507. doi: 10.1016/j.neuron.2009.07.027.

- 27. Ikegaya Y, et al. Synfire chains and cortical songs: Temporal modules of cortical activity. Science. 2004;304(5670):559–564. doi: 10.1126/science.1093173.

- 28. Karlsson MP, Frank LM. Awake replay of remote experiences in the hippocampus. Nat Neurosci. 2009;12(7):913–918. doi: 10.1038/nn.2344.

- 29. Warren TL, Charlesworth JD, Tumer EC, Brainard MS. Variable sequencing is actively maintained in a well learned motor skill. J Neurosci. 2012;32(44):15414–15425. doi: 10.1523/JNEUROSCI.1254-12.2012.

- 30. Glaze CM, Troyer TW. Temporal structure in zebra finch song: Implications for motor coding. J Neurosci. 2006;26(3):991–1005. doi: 10.1523/JNEUROSCI.3387-05.2006.

- 31. Jin DZ. Generating variable birdsong syllable sequences with branching chain networks in avian premotor nucleus HVC. Phys Rev E Stat Nonlin Soft Matter Phys. 2009;80(5 Pt 1):051902. doi: 10.1103/PhysRevE.80.051902.

- 32. Fee MS, Kozhevnikov AA, Hahnloser RHR. Neural mechanisms of vocal sequence generation in the songbird. Ann N Y Acad Sci. 2004;1016:153–170. doi: 10.1196/annals.1298.022.

- 33. Long MA, Jin DZ, Fee MS. Support for a synaptic chain model of neuronal sequence generation. Nature. 2010;468(7322):394–399. doi: 10.1038/nature09514.

- 34. McCasland JS. Neuronal control of bird song production. J Neurosci. 1987;7(1):23–39. doi: 10.1523/JNEUROSCI.07-01-00023.1987.

- 35. Sakata JT, Brainard MS. Online contributions of auditory feedback to neural activity in avian song control circuitry. J Neurosci. 2008;28(44):11378–11390. doi: 10.1523/JNEUROSCI.3254-08.2008.

- 36. Long MA, Fee MS. Using temperature to analyse temporal dynamics in the songbird motor pathway. Nature. 2008;456(7219):189–194. doi: 10.1038/nature07448.

- 37. Lewicki MS, Arthur BJ. Hierarchical organization of auditory temporal context sensitivity. J Neurosci. 1996;16(21):6987–6998. doi: 10.1523/JNEUROSCI.16-21-06987.1996.

- 38. Lewicki MS, Konishi M. Mechanisms underlying the sensitivity of songbird forebrain neurons to temporal order. Proc Natl Acad Sci USA. 1995;92(12):5582–5586. doi: 10.1073/pnas.92.12.5582.

- 39. Margoliash D, Fortune ES. Temporal and harmonic combination-sensitive neurons in the zebra finch’s HVc. J Neurosci. 1992;12(11):4309–4326. doi: 10.1523/JNEUROSCI.12-11-04309.1992.

- 40. Bouchard KE, Mesgarani N, Johnson K, Chang EF. Functional organization of human sensorimotor cortex for speech articulation. Nature. 2013;495(7441):327–332. doi: 10.1038/nature11911.

- 41. Nakamura KZ, Okanoya K. Neural correlates of song complexity in Bengalese finch high vocal center. Neuroreport. 2004;15(8):1359–1363. doi: 10.1097/01.wnr.0000125782.35268.d6.

- 42. Bouchard KE, Brainard MS. Neural encoding and integration of learned probabilistic sequences in avian sensory-motor circuitry. J Neurosci. 2013;33(45):17710–17723. doi: 10.1523/JNEUROSCI.2181-13.2013.

- 43. Dave AS, Margoliash D. Song replay during sleep and computational rules for sensorimotor vocal learning. Science. 2000;290(5492):812–816. doi: 10.1126/science.290.5492.812.

- 44. Vu ET, Mazurek ME, Kuo YC. Identification of a forebrain motor programming network for the learned song of zebra finches. J Neurosci. 1994;14(11 Pt 2):6924–6934. doi: 10.1523/JNEUROSCI.14-11-06924.1994.

- 45. Ashmore RC, Wild JM, Schmidt MF. Brainstem and forebrain contributions to the generation of learned motor behaviors for song. J Neurosci. 2005;25(37):8543–8554. doi: 10.1523/JNEUROSCI.1668-05.2005.

- 46. Wittenbach J, Bouchard KE, Brainard MS, Jin DZ. 2015. An adapting auditory-motor feedback loop can contribute to generating vocal repetition. arXiv:1501:527.

- 47. Dave AS, Yu AC, Margoliash D. Behavioral state modulation of auditory activity in a vocal motor system. Science. 1998;282(5397):2250–2254. doi: 10.1126/science.282.5397.2250.

- 48. Han F, Caporale N, Dan Y. Reverberation of recent visual experience in spontaneous cortical waves. Neuron. 2008;60(2):321–327. doi: 10.1016/j.neuron.2008.08.026.

- 49. Berkes P, Orbán G, Lengyel M, Fiser J. Spontaneous cortical activity reveals hallmarks of an optimal internal model of the environment. Science. 2011;331(6013):83–87. doi: 10.1126/science.1195870.

- 50. Bouchard KE, Ganguli S, Brainard MS. Role of the site of synaptic competition and the balance of learning forces for Hebbian encoding of probabilistic Markov sequences. Front Comput Neurosci. 2015;9:92. doi: 10.3389/fncom.2015.00092.