A time traveler from 1915 arriving in 1965 would have been astonished by the scientific theories and engineering technologies invented during that half century. One can only speculate, but it seems likely that few of the major advances that emerged during those 50 years were even remotely foreseeable in 1915: Life scientists discovered DNA, the genetic code, transcription, and examples of its regulation, yielding, among other insights, the central dogma of biology. Astronomers and astrophysicists found other galaxies and the signatures of the big bang. Groundbreaking inventions included the transistor, photolithography, and the printed circuit, as well as microwave and satellite communications and the practices of building computers, writing software, and storing data. Atomic scientists developed NMR and nuclear power. The theory of information appeared, as well as the formulation of finite state machines, universal computers, and a theory of formal grammars. Physicists extended the classical models with the theories of relativity, quantum mechanics, and quantum fields, while launching the standard model of elementary particles and conceiving the earliest versions of string theory.

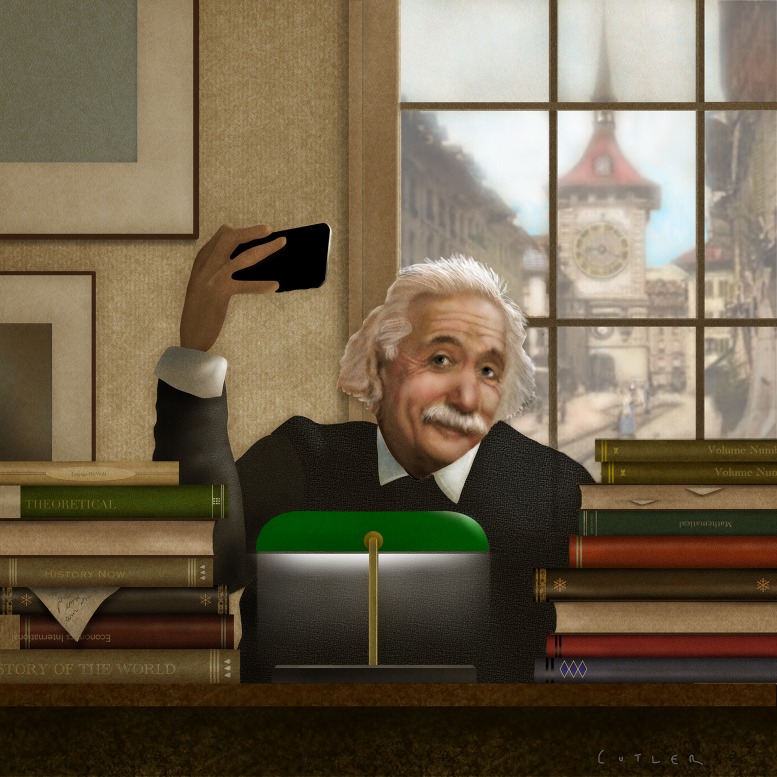

These days, scientists spend much of their time taking “professional selfies”—effectively spending more time announcing ideas than formulating them. Image courtesy of Dave Cutler.

Some of these advances emerged from academia and some from the great industrial research laboratories where pure thinking was valued along with better products. Would a visitor from 1965, having traveled the 50 years to 2015, be equally dazzled?

Maybe not. The pace of technological development might well have surprised most futurists, but the trajectory was at least partly foreseeable. This is not to deny that our time traveler would find the Internet, new medical imaging devices, advances in molecular biology and gene editing, the verification of gravitational waves, and other inventions and discoveries remarkable, nor to deny that these developments often required leaps of imagination, deep mathematical analyses, and hard-earned technical know-how. Nevertheless, the advances are mostly incremental, and largely focused on newer and faster ways to gather and store information, communicate, or be entertained.

Here there is a paradox: Today, there are many more scientists, and much more money is spent on research, yet the pace of fundamental innovation, the kinds of theories and engineering practices that will feed the pipeline of future progress, appears, to some observers, including us, to be slowing [B. Jones (1, 2), R. McMillan (3), P. Krugman (4), P. Thiel (bigthink.com/videos/peter-thiel-what-happened-to-innovation-2), E. Brynjolfsson (5), R. Gordon (6), T. Cowen (7), and R. Ness (8)]. Why might that be the case?

One argument is that “theoretical models” may not even exist for some branches of science, at least not in the traditional mathematical sense, because the systems under study are inherently too complex. In the last few centuries, breakthroughs in physics, chemistry, and engineering were magically captured by elegant mathematical models. However, that paradigm has not carried over to the study of biology, medicine, climate, cognition, or perception, to name a few examples. The usual explanation in areas such as brain science is that such systems are somehow “unsimplifiable,” not amenable to abstraction. Instead, the argument continues, the challenges ahead are more about computation, simulation, and “big data”-style empiricism, and less about mechanisms and unifying theories.

But many natural phenomena seem mysterious and hopelessly complex before being truly understood. And besides, premature claims that theory has run its course appear throughout the history of modern science, including Pierre-Simon Laplace’s “scientific determinism,” Albert Michelson’s (poorly timed) declaration of 1894 that it was likely that “most of the grand underlying principles” had been “firmly established,” and, more recently, John Horgan’s The End of Science (9) and Tyler Cowen’s The Great Stagnation (7). By the historical evidence, it would be a mistake, possibly even self-fulfilling, to conclude that the best works of theoretical science are behind us.

Others have argued that, soon enough, scientific ideas won’t have to come from scientists: After the “singularity” (also known as “strong artificial intelligence,” which Ray Kurzweil and others foresee within a few decades), discovery will be the business of machines, which are better equipped to handle complex high-dimensional data. But there is no more evidence for this argument now than there was for the outsized claims for artificial intelligence in the 1960s. After all, the “knowledge representation” and “knowledge engineering” of the 1960s are not so dissimilar to today’s “data science.” But neither the old-style artificial intelligence, which was deterministic and logic-based, nor the statistical and machine-learning approaches of today have anything to say about the discovery, much less the formulation, of mechanisms, which, after all, is not the same as detecting patterns, even in unlimited data. Besides, data science itself has many of its roots in the 1960s, as recently noted by David Donoho (courses.csail.mit.edu/18.337/2015/docs/50YearsDataScience.pdf).

Another explanation is that, in many domains, for instance, biology, it is only over the past several decades that the experimental and observational data that are necessary for deep understanding of complex systems, including formulating unifying principles and validating explanatory models, have come into existence. This enterprise has itself required major insights and fundamental advances in computer engineering, biotechnology, image acquisition, and information processing, and could set the stage for theoretical breakthroughs in the coming years. This “enabling technology” scenario is plausible enough. However, its realization may be at odds with recent changes in the practice of doing science, due, in part, and paradoxically, to other new technologies such as the Internet.

Cultural Shift

What has certainly changed, even drastically, is the day-to-day behavior of scientists, partly driven by new technology that affects everyone and partly driven by an alteration in the system of rewards and incentives.

Start with technology: As often noted, the advances in computing, wireless communication, data storage, and the availability of the Internet have had profound behavioral consequences. One outcome that might be quickly apparent to our time traveler would be the new mode of activity, “being online,” and how popular it is. There is already widespread concern that most of us, but especially young people, are perpetually distracted by “messaging.” Less discussed is the possible effect on creativity: Finding organized explanations for the world around us, and solutions for our existential problems, is hard work and requires intense and sustained concentration. Constant external stimulation may inhibit deep thinking. In fact, is it even possible to think creatively while online? Perhaps “thinking out of the box” has become rare because the Internet is itself a box.

It may not be a coincidence, then, that two of the most profound developments in mathematics in the current century (Grigori Perelman’s proof of the Poincaré conjecture and Yitang Zhang’s contributions to the twin-prime conjecture) were the work of iconoclasts with an instinct for solitude and, by all accounts, no particular interest in being “connected.” Sustained focus is getting harder. In the past, getting distracted required more effort. Writer Philip Roth predicts a negligible audience for novels (“maybe more people than now read Latin poetry, but somewhere in that range”) as they become too demanding of sustained attention in our new culture (10).

Another change from 1965, related to these same technologies, is in the way we communicate, or, more to the point, how much we communicate. Easy travel, many more meetings, relentless emails, and, in general, a low threshold for interaction have created a veritable epidemic of communication. Evolution relies on genetic drift and the creation of a diverse gene pool. Are ideas so different? Is there a risk of cognitive inbreeding? Communication is necessary, but, if there is too much communication, it starts to look like everyone is working in pretty much the same direction. A current example is the mass migration to “deep learning” in machine intelligence.

In fact, maybe it has become too easy to collaborate. Great ideas rarely come from teams. There is, of course, a role for “big science” (the Apollo program, the Human Genome Project, CERN’s Large Hadron Collider), but teamwork cannot supplant individual ideas. The great physicist Richard Feynman remarked that “Science is the belief in the ignorance of experts.” In a 2014 letter to The Guardian newspaper (11), 30 scientists, concerned about today’s scientific culture, noted that it was the work of mavericks like Feynman that defined 20th century science. Science of the past 50 years seems to be more defined by big projects than by big ideas.

Daily Grind

But maybe the biggest change affecting scientists is their role as employees, and what they are paid for doing—in effect, the job description. In industry, there are few jobs for pure research and, despite initiatives at companies like Microsoft and Google, still no modern version of Bell Labs. At the top research universities, scientists are hired, paid, and promoted primarily based on their degree of exposure, often measured by the sheer size of the vita listing all publications, conferences attended or organized, talks given, proposals submitted or funded, and so forth.

The response of the scientific community to the changing performance metrics has been entirely rational: We spend much of our time taking “professional selfies.” In fact, many of us spend more time announcing ideas than formulating them. Being busy needs to be visible, and deep thinking is not. Academia has largely become a small-idea factory. Rewarded for publishing more frequently, we search for “minimum publishable units.” Not surprisingly, many papers turn out to be early “progress reports,” quickly superseded. At the same time, there is hugely increased pressure to secure outside funding, converting most of our best scientists into government contractors. As Roberta Ness [author of The Creativity Crisis (8)] points out, the incentives for exploring truly novel ideas have practically disappeared. All this favors incremental advances, and young scientists contend that being original is just too risky.

In academia, the two most important sources of feedback scientists receive about their performance are the written evaluations following the submission of papers for publication and proposals for research funding. Unfortunately, in both cases, the peer review process rarely supports pursuing paths that sharply diverge from the mainstream direction, or even from researchers’ own previously published work. Moreover, in the biomedical sciences and other areas, institutional support is often limited, and, consequently, young researchers are obliged to play small roles in multiple projects to piece together their own salary. In fact, there are now many impediments to exploring new ideas and formulating ambitious long-term goals [see Alberts et al. (12)].

We entered academia in the 1970s when jobs were plentiful and the research environment was less hectic, due to sharply lower expectations about external support and publication rates. There was ample time for reflection and quality control. Sadly, our younger colleagues are less privileged and lack this key resource.

Less Is More

Albert Einstein remarked that “an academic career, in which a person is forced to produce scientific writings in great amounts, creates a danger of intellectual superficiality” (13); the physicist Peter Higgs felt that he could not replicate his discovery of 1964 in today’s academic climate (14); and the neurophysiologist David Hubel observed that the climate that nurtured his remarkable 25-year collaboration with Torsten Wiesel, which began in the late 1950s and revealed the basic properties of the visual cortex, had all but disappeared by the early 1980s, replaced by intense competition for grants and pressure to publish (www.nobelprize.org/nobel_prizes/medicine/laureates/1981/hubel-bio.html and ref. 15). Looking back on the collaboration, he noted that “it was possible to take more long-shots without becoming panic stricken if things didn’t work out brilliantly in the first few months” (16).

Maybe this is why data mining has largely replaced traditional hypothesis-driven science. We are awash in small discoveries, most of which are essentially detections of “statistically significant” patterns in big data. Usually, there is no unifying model or theory that generates predictions, testable or not. That would take too much time and thought. Even the elite scientific journals seem too favorable to observations of patterns in new data, even if irreproducible, possibly explained by chance, or utterly lacking any supporting theory. Except in a few areas, such as string theory and climate studies, there are few incentives to search for unifying principles, let alone large-scale models.

If our traveler from the 1960s had caught a few episodes of Star Trek, then he could hardly be surprised by handheld communication devices, speech recognition, or big data (“Computer, search your memory banks...”). What will the theories and technologies of 2065 look like? If we are not careful, if we do not sufficiently value explanatory science and individual creativity, they will look pretty much like they do today. But if we do, many wonders may lie ahead—not only the elusive unified field theory for physics but perhaps also a new type of “theory” for cognition and consciousness, or maybe theories matching the scope and elegance of natural selection for other great challenges of biology, including the mechanisms of regulation and dysregulation (e.g., cancer and aging) at the cellular level and the prediction of structure and function at the molecular level. There is no lack of frontiers.

Alberts et al. (12) recommend fundamental changes in the way universities and government agencies fund biomedical research, some intended to reduce hypercompetition and encourage risk-taking and original thinking. Such things move very slowly, if at all. In that 2014 letter to The Guardian, Braben et al. (11) suggest that small changes could keep science healthy. We agree, and suggest one: Change the criteria for measuring performance. In essence, go back in time. Discard numerical performance metrics, which many believe have negative impacts on scientific inquiry [see, for example, “The mismeasurement of science” by Peter Lawrence (17)]. Suppose, instead, every hiring and promotion decision were mainly based on reviewing a small number of publications chosen by the candidate. The rational reaction would be to spend more time on each project, be less inclined to join large teams in small roles, and spend less time taking professional selfies. Perhaps we can then return to a culture of great ideas and great discoveries.

Footnotes

Any opinions, findings, conclusions, or recommendations expressed in this work are those of the authors and do not necessarily reflect the views of the National Academy of Sciences.

References

1. Jones B. 2005. The burden of knowledge and the ‘death of the renaissance man’: Is innovation getting harder? Working Paper 11360 (Natl Bur Econ Res, Cambridge, MA). Available at www.nber.org/papers/w11360.

2. Jones B. Age and great invention. Rev Econ Stat. 2010;92(1):1–14.

3. McMillan R. May 26, 2015. Innovation shows signs of slowing. Wall Street Journal. Available at www.wsj.com/articles/innovation-shows-signs-of-slowing-1432656076.

4. Krugman P. May 25, 2015. The big meh. NY Times, Section A, p 19.

5. Brynjolfsson E. The productivity paradox of information technology. Commun ACM. 1993;36(12):66–77.

6. Gordon R. 2014. The demise of U.S. economic growth: Restatement, rebuttal, and reflections. Working Paper 19895 (Natl Bur Econ Res, Cambridge, MA). Available at www.nber.org/papers/w19895.

7. Cowen T. The Great Stagnation: How America Ate All the Low-Hanging Fruit of Modern History, Got Sick, and Will (Eventually) Feel Better. Dutton; New York: 2011.

8. Ness R. The Creativity Crisis. Oxford Univ Press; Oxford: 2015.

9. Horgan J. The End of Science: Facing the Limits of Knowledge in the Twilight of the Scientific Age. Addison-Wesley; Boston: 1996.

10. Brown T. October 30, 2009. Philip Roth unbound: Interview transcript. Daily Beast. Available at www.thedailybeast.com/articles/2009/10/30/philip-roth-unbound-interview-transcript.html.

11. Braben D, et al. March 18, 2014. We need more scientific mavericks. Guardian. Available at https://www.theguardian.com/science/2014/mar/18/we-need-more-scientific-mavericks.

12. Alberts B, Kirschner MW, Tilghman S, Varmus H. Rescuing US biomedical research from its systemic flaws. Proc Natl Acad Sci USA. 2014;111(16):5773–5777. doi: 10.1073/pnas.1404402111.

13. Isaacson W. Einstein: His Life and Universe. 1st Ed. Simon and Schuster; New York: 2008. p. 79.

14. Aitkenhead D. December 6, 2013. Peter Higgs: I wouldn’t be productive enough for today’s academic system. Guardian. Available at https://www.theguardian.com/science/2013/dec/06/peter-higgs-boson-academic-system.

15. Hubel D, Wiesel T. Brain and Visual Perception: The Story of a 25-Year Collaboration. Oxford Univ Press; Oxford: 2005.

16. Hubel DH. The way biomedical research is organized has dramatically changed over the past half-century: Are the changes for the better? Neuron. 2009;64(2):161–163. doi: 10.1016/j.neuron.2009.09.022.

17. Lawrence PA. The mismeasurement of science. Curr Biol. 2007;17(15):R583–R585. doi: 10.1016/j.cub.2007.06.014.